Abstract

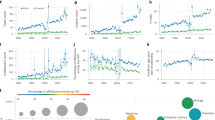

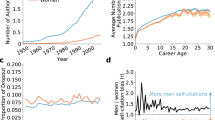

Numerous studies across different research fields have shown that both male and female referees consistently give higher scores to work done by men than to identical work done by women1,2,3. In addition, women are under-represented in prestigious publications and authorship positions4,5 and women receive ~10% fewer citations6,7. In astronomy, similar biases have been measured in conference participation8,9 and success rates for telescope proposals10,11. Even though the number of doctorate degrees awarded to women is constantly increasing, women still tend to be under-represented in faculty positions12. Spurred by these findings, we measure the role of gender in the number of citations that papers receive in astronomy. To account for the fact that the properties of papers written by men and women differ intrinsically, we use a random forest algorithm to control for the non-gender-specific properties of these papers. Here we show that papers authored by women receive 10.4 ± 0.9% fewer citations than would be expected if the papers with the same non-gender-specific properties were written by men.

This is a preview of subscription content, access via your institution

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

$29.99 / 30 days

cancel any time

Subscribe to this journal

Receive 12 digital issues and online access to articles

$119.00 per year

only $9.92 per issue

Buy this article

- Purchase on Springer Link

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

References

Budden, A. E. et al. Double-blind review favours increased representation of female authors. Trends Ecol. Evol. 23, 4–6 (2008).

Moss-Racusin, C., Dovidio, J., Brescoll, V., Graham, M. & Handelsman, J. Science faculty’s subtle gender biases favor male students. Proc. Natl Acad. Sci. USA 109, 16,474–16,479 (2012).

Wennerås, C. & Wold, A. Nepotism and sexism in peer-review. Nature 387, 341–343 (1997).

Conley, D. & Stadmark, J. Gender matters: a call to commission more women writers. Nature 488, 590–590 (2012).

West, J. D., Jacquet, J., King, M. M., Correll, S. J. & Bergstrom, C. T. The role of gender in scholarly authorship. PLoS ONE 8, e66212 (2013).

Ghiasi, G., Larivière, V. & Sugimoto, C. R. On the compliance of women engineers with a gendered scientific system. PLoS ONE 10, e0145931 (2015).

Larivière, V., Ni, C., Gingras, Y., Cronin, B. & Sugimoto, C. R. Bibliometrics: global gender disparities in science. Nature 504, 211–213 (2013).

Davenport, J. R. A. et al. Studying gender in conference talks — data from the 223rd Meeting of the American Astronomical Society. Preprint at http://arXiv.org/abs/1403.3091 (2014).

Pritchard, J. et al. Asking gender questions: results from a survey of gender and question asking among UK astronomers at NAM2014. Astron. Geophys. 55, 8–12 (2014).

Patat, F. Gender systematics in telescope time allocation at ESO. The Messenger 165, 2–9 (2016).

Reid, I. N. Gender-correlated systematics in HST proposal selection. Publ. Astron. Soc. Pacif. 126, 923–934 (2014).

Women, Minorities, and Persons with Disabilities in Science and Engineering (National Science Foundation , 2015).

Breiman, L. Random forests. Machine Learning 45, 5–32 (2001).

Hong, L. & Page, S. E. Groups of diverse problem solvers can outperform groups of high-ability problem solvers. Proc. Natl Acad. Sci. USA 101, 16,385–16,389 (2004).

Medin, D. L. & Lee, C. D. Diversity makes better science. APS Observer (27 April 2012); www.psychologicalscience.org/observer/diversity-makes-better-science#.WQB3psYo-aE

Page, S. E. The Difference: How the Power of Diversity Creates Better Groups, Firms, Schools, and Societies (Princeton Univ. Press, 2007).

Ivie, R., White, S., Garrett, A. & Anderson, G. Women Among Physics and Astronomy Faculty (American Institute of Physics Statistical Research Center, 2013).

Pedregosa, F. et al. Machine learning in Python. J. Machine Learning Res. 12, 2825–2830 (2011).

Mathematica, Version 11.1 http://support.wolfram.com/kb/472 (Wolfram Research, 2017).

Breiman, L., Friedman J., Stone, C. J. & Olshen, R. A. Classification and Regression Trees (Chapman & Hall/CRC, 1984).

Hunter, J. D. Matplotlib: a 2D graphics environment. Comput. Sci. Eng. 9, 90–95 (2007).

Acknowledgements

We thank J. Woo for giving detailed comments on the manuscript. We acknowledge the stimulating comments given to us by M. Urry, R. Schubert, R. Marino, B. Trakhtenbrot, I. Moise and E. Pournaras. We thank A. Bluck for proofreading the manuscript. We acknowledge support from the Swiss National Science Foundation. This research made use of the National Aeronautics and Space Administation’s Astrophysics Data System, the arXiv.org preprint server and the Python plotting library Matplotlib21.

Author information

Authors and Affiliations

Contributions

N.C. initiated the project and carried out the data analysis. S.T. created the name-matching algorithm and prepared the sample. S.B. created the algorithm that matched the authors with their geographical location. N.C. and S.T. wrote the paper. All authors discussed the results and commented on the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Supplementary information

Supplementary Information

Supplementary Figures 1–3 and Supplementary Table 1. (PDF 234 kb)

Rights and permissions

About this article

Cite this article

Caplar, N., Tacchella, S. & Birrer, S. Quantitative evaluation of gender bias in astronomical publications from citation counts. Nat Astron 1, 0141 (2017). https://doi.org/10.1038/s41550-017-0141

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41550-017-0141

This article is cited by

-

Heavy-tailed neuronal connectivity arises from Hebbian self-organization

Nature Physics (2024)

-

Contrasting Views of Autism Spectrum Traits in Adults, Especially in Self-Reports vs. Informant-Reports for Women High in Autism Spectrum Traits

Journal of Autism and Developmental Disorders (2024)

-

Clinical Super-Resolution Computed Tomography of Bone Microstructure: Application in Musculoskeletal and Dental Imaging

Annals of Biomedical Engineering (2024)

-

The Neuro-inspired LA: A Novel Neuroscience Approach to Implementing a Learning Assistant Program for Biomedical Engineering Undergraduate Students

Biomedical Engineering Education (2024)

-

BME Master's Programs: Who Are They for and What Can They Offer?

Biomedical Engineering Education (2024)