Abstract

Schizophrenia and related disorders have heterogeneous outcomes. Individualized prediction of long-term outcomes may help to improve treatment decisions. Using extensive baseline data of 523 patients with a psychotic disorder and variable illness duration, we predicted symptomatic and global outcomes at 3-year and 6-year follow-ups. We classified outcomes as (1) symptomatic: in remission or not in remission, and (2) global outcome, using the Global Assessment of Functioning (GAF) scale, divided into good (GAF ≥ 65) and poor (GAF < 65). Aiming for a robust and interpretable prediction model, we employed a linear support vector machine and recursive feature elimination within a nested cross-validation design to obtain a lean set of predictors. Generalization to out-of-study samples was estimated using leave-one-site-out cross-validation. Prediction accuracies were above chance and ranged from 62.2% to 64.7% for symptomatic outcome and from 63.5% to 67.6% for global outcome. Leave-one-site-out cross-validation demonstrated the robustness of our models, with a minor drop in predictive accuracies of 2.3% on average. Important predictors included GAF scores, psychotic symptoms, quality of life, antipsychotics use, psychosocial needs, and depressive symptoms. These robust, albeit modestly accurate, long-term prognostic predictions based on lean predictor sets indicate the potential of machine learning models to complement clinical judgment and decision-making. Future model development may benefit from studies scoping patients’ and clinicians’ needs in prognostication.

Introduction

Schizophrenia is a heterogeneous illness and its long-term outcomes are highly variable1,2,3. Attempts to provide prognostic markers for long-term outcomes, such as Rumke’s “praecox feeling”, have appeared throughout medical history4, but despite an abundance of outcome predictors at group-level, such as sociodemographic characteristics, clinical markers, and neurocognitive markers5,6, at a patient-level, no valid prediction model for long-term outcome of schizophrenia is available to clinicians at present7. An additional challenge is that “outcome” entails symptomatic, social, functional, and personal dimensions, which are only partly interrelated8,9, and may have differing significance for individual patients10,11. These matters complicate clinical decision-making, for example when considering an early switch to clozapine12, antipsychotic dose reduction or discontinuation strategies13, allocations of sheltered housing14, or occupational support15. From a public health perspective, reliable long-term outcome prediction and the resulting treatment stratification are important, as demands usually outweigh the capacity of mental health institutions, even in countries with high mental healthcare expenses16.

Machine learning potentially presents a way to develop models reliably predicting individual outcomes for multifactorial and heterogeneous illnesses such as schizophrenia17,18,19,20,21. In clinical research, machine learning, or pattern recognition, refers to an algorithm that is able to learn from a large multivariate dataset to make an adequate prediction for a patient, for example concerning the future clinical outcome. Modern prospective multicenter studies facilitate the development of prediction models based on machine learning. They provide well-established outcome measures and large numbers of potential predictors (i.e. “features”), in study samples large enough to cover the heterogeneity of the target population19. A landmark study by Koutsouleris et al. recently demonstrated the potential of machine learning for individual outcome prediction in psychosis18. Pre-treatment data from a multicenter clinical trial were used to predict global outcomes after 4 and 52 weeks of treatment in first-episode psychosis. Predictive accuracy was found significantly above chance, at 73.8–75.0%. With an average drop of 2.8%, accuracy was retained when the models were tested on geographic sites left out of the model training procedure, suggestive of its validity in other samples. Unemployment, lower education, functional deficits, and unmet psychosocial needs were found most valuable in predicting 4- and 52-week outcomes.

Here, we extend the use of data-driven model development based on patient reportable data, to long-term (3 and 6 years) symptomatic and global outcomes of patients with schizophrenia-spectrum disorders. To this end, we include a heterogeneous population of schizophrenia-spectrum patients, with variable illness duration and baseline clinical status from the Genetic Risk and Outcome in Psychosis (GROUP) cohort study22. We explore the use of a wide range of baseline markers of genetic and environmental risk and measures of past and baseline clinical state, to predict 3- and 6-year symptomatic and global outcomes. We use data-driven selection to arrive at a model containing predictors from a limited number of measures, aiming at clinical applicability. We assess its generalizability by using leave-one-site-out (LOSO) cross-validation, testing our models on geographic study-sites left out of model development. Additionally, we investigate the use of the features that have been found to predict 4- and 52-week outcomes of first-episode psychosis18, for 3- and 6-year outcomes in the GROUP sample.

Results

Sample characteristics

We included 523 patients with a schizophrenia spectrum disorder who had outcome assessments three (T3) and six (T6) years after baseline. Demographic and clinical baseline characteristics of the study sample and comparisons to patients excluded because of missing follow-up assessments are listed in Table 1. Patients with unfavorable baseline characteristics were more likely to be lost to follow-up. At baseline, T3 and T6, 49%, 37%, and 41% of patients were in symptomatic remission (according to the consensus definition by Andreasen et al. (2005)) respectively; 31%, 44%, and 36% had good global functioning status (Global Assessment of Functioning (GAF) scale ≥ 65) at respective measurements. For symptomatic outcome, 65% and 64% of patients were stable at T3 and T6 relative to baseline, and 68% and 68% for global outcome (Supplementary Fig. 2).

Selection of modalities based on unimodal models

We included demographic information, illness-related variables, Positive and Negative Syndrome Scale (PANSS; present state clinician-rated symptomatology), and either Camberwell Assessment scale of Need Short Appraisal Schedule (CANSAS; clinician-rated and self-reported need of care) or Community Assessment of Psychic Experiences (CAPE; self-reported lifetime psychotic experiences) data for multimodal modeling. Notably, this set is especially rich in indicators of clinical course until inclusion in GROUP (i.e. includes GAF, features from PANSS, and CAPE where applicable). The choice of these modalities was based on unimodal modeling performance for the following modalities: (1) demographic variables; (2) illness-related variables; (3) PANSS; (4) substance use characteristics; (5) neurocognitive task scores; (6) social cognitive task scores; (7) Premorbid Adjustment Scale items; (8) CANSAS; (9) CAPE; (10) extrapyramidal symptoms; (11) genetic features, and familial loading of psychotic disorder, bipolar disorder, and drug abuse; (12) environmental variables of urbanicity and living situation (see the “Methods” section; Supplementary Tables 2 and 4). As mentioned earlier, we additionally trained models using a prespecified set of features that had performed best in predicting 4- and 52-week outcome of first-episode psychosis in the EUFEST study (22 and 24 features, respectively, see part C in Table 2, see the “Methods” section; Supplementary Table 1)18. A summary of the number of features and sample size for unimodal and multimodal models per outcome, and of good versus poor outcome distributions, is provided in Supplementary Tables 2 and 3.

Performance of prediction models: Internal validation

Using a repeated nested cross-validation design (see the “Methods” section, Creation of models (A)) including recursive feature elimination, support vector machine models were trained to predict individual patients’ outcomes based on their baseline data from four modalities. The outcome could be predicted with similar cross-validated balanced accuracies (BACs), regardless of the period, outcome, and the fourth modality included (i.e. CANSAS or CAPE), ranging from 62.2% to 67.6% (Table 2). Model performance was well above that of benchmark models containing a single feature (GAF), and using threshold values for good/poor global functioning above or below GAF 65 did not result in higher model performance (Supplementary Note 4, Supplementary Table 15).
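As a reading aid for the BAC figures reported here, the following minimal Python sketch computes balanced accuracy under the standard definition, the mean of sensitivity and specificity. The label coding (+1 for poor outcome, −1 for good outcome) follows the Methods; the function name and the toy labels are illustrative assumptions and are not taken from the study code, which was written in R.

```python
def balanced_accuracy(y_true, y_pred, positive=1, negative=-1):
    """Mean of sensitivity and specificity for a binary outcome.

    Labels follow the coding in the Methods (+1 poor, -1 good).
    """
    pairs = list(zip(y_true, y_pred))
    n_pos = sum(1 for t, _ in pairs if t == positive)
    n_neg = sum(1 for t, _ in pairs if t == negative)
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both outcome classes must be present")
    true_pos = sum(1 for t, p in pairs if t == positive and p == positive)
    true_neg = sum(1 for t, p in pairs if t == negative and p == negative)
    sensitivity = true_pos / n_pos
    specificity = true_neg / n_neg
    return (sensitivity + specificity) / 2


# Toy example (hypothetical labels): 6 poor and 4 good outcomes.
y_true = [1, 1, 1, 1, 1, 1, -1, -1, -1, -1]
always_poor = [1] * 10  # a classifier that ignores the features
print(balanced_accuracy(y_true, always_poor))
```

A classifier that always predicts the larger class reaches 60% raw accuracy on these toy labels but only 50% balanced accuracy, which is why BAC is the more informative metric when outcome groups differ in size.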

The 10% most influential features for symptomatic as well as global outcome based on weight and frequency of selection included items from all four modalities in the model: PANSS items, illness-related, demographic features, and either CANSAS or CAPE items (Supplementary Tables 5–12). As illustrated in Fig. 1, generally, the more often a feature is selected, the higher its average weight is (see Supplementary Figs. 3 and 4 for overviews of selection frequency over weight for all models).

Frequency of inclusion of a feature against its (average) weight in the model; shown for prediction of global assessment of functioning (GAF) outcome at T3, containing Positive and Negative Syndrome Scale—general, negative, and positive subscale (PANSS—Gen, Neg, and Pos), Demographic, Illness-related and lifetime psychotic experiences (CAPE) related features. A positive weight reflects that scoring higher on this feature contributes to being classified as ‘poor outcome’. For features with negative weights the opposite holds.

Worse GAF symptoms and GAF disabilities, worse scores on specific items in the positive and negative subdomains of the PANSS (i.e. judgment and insight, hallucinatory behavior, flat affect, unusual thought content, motor retardation), worse score on (health-related) quality of life and the use of antipsychotics were associated with multiple poor outcome endpoints. This was supplemented by a lower number of no needs and met needs, together with housing needs and unmet psychotic disorder needs in models including CANSAS items. In models including the CAPE, items of importance from the CAPE mostly included those from the depression subscale (i.e. guilty and tense feelings, suicidal thoughts, and lack of activity) (Table 3).

The following features were predictive of at least one-fourth of poor outcome endpoints, albeit selected in fewer models than those summed in the previous paragraph: higher age, schizophrenia diagnosis, and a higher level of various present state symptoms in PANSS subdomains of positive, negative, disorganization symptoms and emotional distress (i.e. delusions, suspiciousness/persecution, grandiosity, stereotyped thinking, lack of spontaneity, difficulty in abstract thinking, emotional withdrawal, depressive symptoms, and tension) (Table 3).

Comparison of frequently misclassified patients to those mostly correctly predicted showed the following: patients with a good outcome who were incorrectly classified as having poor outcome (21–37% over the models) showed less favorable baseline characteristics (e.g. higher PANSS, lower GAF scores, more chronicity, lower parent education, and more schizophrenia diagnoses) when compared to the correctly classified group of patients. Conversely, patients with poor outcome who were mostly incorrectly classified as having good outcome (3–14%) showed favorable baseline characteristics, such as lower PANSS and higher GAF scores, when compared to the mostly correctly classified group of patients (see Supplementary Table 13 for a detailed overview of significant comparisons).

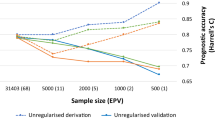

Generalization of the prediction models: LOSO validation

The generalizability of the models was evaluated by consecutively leaving out patients from one of the four geographic sites and testing the model trained on the three remaining sites in these patients (see the “Methods” section, Creation of models (B)). These LOSO validated models had slightly (−2.3% on average) lower accuracies than models trained on the full dataset (Table 2). The range of the average prediction accuracies for symptomatic outcome at T3 and T6 was 59.9–63.8% (Table 2); site-specific BACs ranged from 53.0% to 69.7% (Table 4). For global outcome the range was 61.2–64.8% (Table 2); BACs of the different sites ranged from 53.0% to 68.9% (Table 4). The difference between T3 and T6 prediction accuracy was small (mean BACs were 63.4% and 61.9%, respectively). There was, again, a small difference between CANSAS-based and CAPE-based models (mean BACs were 62.8% and 62.4%, respectively). LOSO models validated on the Utrecht site and single-site models trained on the Utrecht site tended to perform below average, in line with the differential patient profile (higher baseline symptom severity, lower GAF scores, and higher needs) found at this site relative to the other geographic sites and its smaller sample size (Supplementary Note 4.4; Supplementary Table 14).
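The hold-out logic of LOSO validation can be sketched in a few lines of Python. This is an illustration of the splitting scheme only, not the study's implementation; the function name and the site labels "A" to "D" are hypothetical placeholders for the four geographic sites.

```python
def leave_one_site_out(sites):
    """Yield (held_out_site, train_idx, test_idx) once per distinct site.

    `sites` holds one site label per patient, in dataset order.
    """
    for held_out in sorted(set(sites)):
        train_idx = [i for i, s in enumerate(sites) if s != held_out]
        test_idx = [i for i, s in enumerate(sites) if s == held_out]
        yield held_out, train_idx, test_idx


# Hypothetical site labels for six patients.
sites = ["A", "B", "A", "C", "D", "B"]
for held_out, train_idx, test_idx in leave_one_site_out(sites):
    # A model would be trained on train_idx and scored on test_idx here.
    print(held_out, train_idx, test_idx)
```

Each patient appears in exactly one test fold, and no patient from the held-out site contributes to training, which is what makes the per-site accuracies an estimate of performance at an unseen site.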

External validation of EUFEST predictors

Predicting long-term outcome based on the top 10% most predictive features for the short-term outcome (EUFEST study (see the “Methods” section, Creation of models (C))), resulted in accuracies of 59.0–62.7% for symptomatic outcome. For global outcomes, we obtained accuracies of 56.5–66.4% (Table 2).

Discussion

Using a rigorous machine learning approach, we developed individualized models to predict 3- and 6-year symptomatic and global outcomes of patients with schizophrenia-spectrum disorders based on patient-reportable data. The multicenter sample included 523 schizophrenia-spectrum patients with variable illness duration, mainly with established illness. Notably, baseline clinical status was variable, and outcome status remained poor at follow-up in a large share of patients. The data-driven nature of this study allowed us to explore the predictive value of a wide range of measures for the long-term outcome of psychosis. In keeping with clinical applicability, our aim was to arrive at lean models. We report nested-cross-validated balanced accuracies ranging from 62.2% to 67.6%. Suggestive of generalization of model performance to out-of-study samples, leave-site-out cross-validation showed minor drops in accuracy, with balanced accuracies ranging from 59.9% to 64.8%. Models trained in our sample for long-term outcome prediction, utilizing short-term outcome predictors for first-episode psychosis18, yielded comparable balanced accuracies up to 66.4%.

To the best of our knowledge, no prognostic models for the long-term global and symptomatic outcomes of psychosis are presently available23. Our results indicate that while state-of-the-art methods may result in robust (generalizable) performance estimates, predictions are modestly accurate, similar to recent experimental prognostic models for depression based on machine learning predicting long-term clinical outcomes based on patient reportable data17,24. The models did not reach the LOSO cross-validated accuracy of 71% in the study on the one-year outcome of first-episode psychosis18, presumably due to the uncertainty introduced by time, care-as-usual setting, and the heterogeneity of baseline clinical status and illness duration within our target population.

Through a modality-wise learning strategy, a combination of baseline sociodemographic features and clinician-rated symptoms, complemented by self-rated lifetime psychotic experiences (CAPE items) or psychosocial needs (CANSAS items) was selected in the models. Interestingly, in unimodal models, these state-based and context-based modalities outperformed trait-based modalities, including genetic and cognitive task scores. This finding may be partly explained by the relatively large share of patients with a stable clinical state at inclusion and follow-up. We further argue that the performance of trait-based measures may improve if the interaction between genetics and environmental exposures in psychosis outcomes is taken into account25.

Features offering a clinician’s integration of the clinical picture and those with a broad underlying construct (e.g. GAF; insight; schizophrenia diagnosis; quality of life; summed no need/met need items; depression) proved to be the most important predictors. These resemble features found to be predictive of one-year outcome in first-episode psychosis: psychosocial needs, global functioning deficits, and affective symptoms (specific quality of life, CAPE, and PANSS items)18.

Comparing the aforementioned study with our work, we also note differences, suggesting that short-term and long-term clinical management of psychosis may call for different approaches. We found higher, not lower, symptom severity to predict poor long-term outcome18. In particular, lack of insight appears predictive of poor long-term outcomes across all the models. This may be mediated through poor adherence and eventual service disengagement26. Furthermore, we note that the most important social need in our models (i.e. housing) is different from those (company, daytime activities) predicting short-term outcomes. This could be explained by the lower level of social functioning found in our study cohort, compared to first-episode patients27, suggesting that in a model suited for a functionally heterogeneous population, the entire range of social needs within the CANSAS instrument may have its relevance. In interpreting the influence of features on the predictions, it should be noted that some frequently selected features show large variation in weight. This variation could have its origin in the heterogeneity of the disorder and should be the subject of future research.

Within our models, misclassification especially occurs in patients with unfavorable clinical baseline status combined with good outcomes. This may reflect variation in baseline clinical context and acute state at the time of inclusion (i.e. admission due to relapse vs. outpatient treatment) of these patients and/or availability of therapeutic or supportive resources. We note that the higher baseline symptom severity, lower GAF, and higher psychosocial needs found in one geographic site that underperformed in the LOSO validation relative to the others may support this possibility. To enhance model performance, these contextual factors may be taken into account in future models.

Our models on long-term outcomes of psychosis perform with reasonable accuracies, but at present are not suitable as a stand-alone tool to stratify treatment. Regardless, the machine learning model trained here and a clinician would represent rather different takes on reality. The model sees the patient through the lens of a number of constructs, such as “insight”, whereas a clinician’s judgment is more globally constructed and starts from the moment the clinician meets the patient in the waiting room28,29. Models with modest accuracy may be of use, depending on the level of uncertainty that parties involved in clinical decision-making are willing to accept from a model30,31, a level which to our knowledge is unknown for long-term outcomes of psychosis. In addition, the preferable way of interaction between model and clinician remains to be addressed32. We suggest clinicians may inform their decision-making both with the prediction itself and with important features in it, for example, high core social needs, affective symptoms, or low quality of life. Apart from clinical practice, modestly accurate model predictions may serve intervention research by offering stratified randomization.

Future prediction tool development should be informed by end-user (i.e. patients and clinicians) needs concerning scope, predictive capacities, and potential clinical consequences. We need to learn how they weigh benefit and harm due to treatment choices against a given outcome probability30. Furthermore, the significance of any predicted outcome might differ per patient, per stage of illness, and per intervention10. Hence, presenting an array of outcome dimensions with accessible features might best fuel the clinician–patient dialog on intervention33. Moreover, clinical guidance on when and how to use prognostic tools might prove essential for future dissemination of prognostic models based on machine learning in psychiatry.

We note the following limitations. Although we present the largest machine learning study to date on outcome in psychosis, based on patient-reportable data, the sample size may not be sufficient to account for the substantial heterogeneity of the out-of-study population with a schizophrenia-spectrum disorder34. It should be noted that the drop in performance was small (on average 2.3%), when the models were applied to patients from geographic sites not part of the training sample, suggesting transportability to samples with a comparable profile. Although we implemented a comprehensive validation procedure, we cannot rule out some overfitting not accounted for35, including that resulting from information leakage because modality selection, imputation, and scaling were performed outside the nested cross-validation pipeline. Apart from this, our approach of taking the four best performing data modalities from unimodal modeling runs together does not necessarily yield the best performing combination in a multimodal model. Instead, models may benefit from a combination of modalities containing a wider range of information, as has been suggested by studies combining patient reportable and imaging data36,37. We suggest future research may address what is a clinically parsimonious set of modalities, that is, an optimum between accuracy and investment to obtain data. Furthermore, we believe that imputation and scaling outside the cross-validation setup has not led to over-optimistic estimates of generalizability, because of the very low number of imputations (<0.5%) and the fact that most of our features’ scales are fixed, thus independent of our dataset.

The GROUP study sample is known to represent a relatively well functioning subset of a population of schizophrenia-spectrum patients in need of specialist care. Generalization to other samples further might be hindered by the exclusion of the most severely affected patients, either due to study drop-out, exclusion of patients with extensive missing data, or incompetence or unwillingness to give study consent38. The nature of the sample included may also explain the association of antipsychotics use with worse outcomes, as antipsychotics use at baseline is likely to be confounded by history or expectation of more severe illness course. The observational sample obtained may however be more representative for clinical practice than those stemming from clinical trials. To improve model reliability, the use of multi-center samples dedicated to prognostic model development or models informed by national registry data, as has been done to predict transition to psychosis from high-risk mental states and suicidal behavior, may be needed39,40,41.

Regarding outcomes, prediction of longitudinal patterns, or adverse events, such as readmission to a psychiatric hospital may also add clinical relevance, especially for long-term outcome42. We used baseline data for outcome prediction, whereas in clinical practice, decisions are typically based on longitudinal, rather than single, examinations. Longitudinally informed models are expected to result in better prediction accuracies. Furthermore, we propose that contextual information, such as baseline clinical context (e.g. acute inpatient or outpatient status, treatment status), or supportive resource status (e.g. family support) may further enhance model performance. The addition of biomarker modalities, including imaging data and genetic data derived from genome-wide association studies, possibly in interaction with environmental exposures, holds the same promise41. However, all additions come at the expense of time investment, model interpretability, and the requirement of larger training datasets20.

In conclusion, we demonstrate the feasibility of a machine-learning approach to long-term outcome prediction in a heterogeneous target population of schizophrenia-spectrum patients, based on a lean set of patient reportable features, overlapping with those predictive of short-term outcome of first-episode psychosis. Future models may benefit from considering patients’ and clinicians’ needs, the appropriate nature of the training sample (i.e. sample similarity to the population of interest as well as richness in (contextual) features), and implementation of advancements in machine learning methodology. Individual outcome prediction based on machine learning may inform the treatment stratification needed both from a patient and a public health perspective.

Methods

Participants and data selection

In the GROUP prospective longitudinal cohort study, in- and out-patients with a psychotic disorder presenting consecutively at selected representative mental health services in representative geographical areas in the Netherlands and Belgium from January 8, 2004 until February 6, 2008 were recruited. Inclusion criteria were: (1) psychotic disorder diagnosis according to the Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition APA43; (2) age 16–50 years (extremes included); (3) Dutch language proficiency; (4) ability to provide informed consent. Extensive genetic, cognitive, environmental, and outcome data were collected at baseline (T0), and 3-year (T3) and 6-year (T6) follow-up. The full GROUP sample at baseline included 1119 patients with variable illness duration, including recent-onset psychosis22. Here, we used data of 523 participants for whom outcome assessments at both T3 and T6 were available, with a schizophrenia spectrum disorder (i.e. schizophrenia, schizophreniform disorder, schizoaffective disorder, delusional disorder, brief psychotic disorder, psychotic disorder: not otherwise specified), assessed with the Comprehensive Assessment of Symptoms and History or the Schedules for Clinical Assessment for Neuropsychiatry (see Supplementary Fig. 1 for a selection process flow-chart)44,45. We assessed selection bias by comparing our sample on demographic and clinical characteristics to GROUP patients not included in this study.

The study protocol was approved by the Medical Ethical Review Board of the University Medical Centre Utrecht and by local review boards of participating institutes. Participants provided written informed consent. Database release 5.0 was used in all analyses.

Long-term outcomes and baseline predictors

We selected two long-term outcome measures in a classification approach to outcome prediction: symptomatic remission and global functioning, measured at both T3 and T6. Symptomatic outcome was selected as it traditionally is a mainstay of clinical care. We followed the consensus definition of symptomatic remission by Andreasen et al., operationalized as a mild score (3 or less, implying no functional disturbance related to symptoms) on selected items of the PANSS (i.e. delusions, conceptual disorganization, hallucinatory behavior, mannerisms and posturing, blunted affect, social withdrawal, lack of spontaneity, and unusual thought content), maintained for at least 6 months46,47. For global outcome we followed Koutsouleris et al. in operationalizing global outcome with a dichotomization of the Global Assessment of Functioning (GAF) scale, considering a score of <65 a poor global outcome and a score of ≥65 a good global outcome18,43, as GAF scores between 61 and 70 have been proposed as a threshold between at-risk mental states and illness and are widely used as markers of recovery as part of more complex criteria48,49. GAF was constructed as a mean composite score of the GAF symptoms and GAF disabilities subscales assessed in the GROUP project, and was normally distributed in our sample. To investigate the possibility that a threshold other than GAF 65 would better represent a border between “good” and “poor” outcomes, we tested other cut values in the GAF 50–68 range post-hoc (Supplementary Note 4.2).
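The two outcome definitions above can be written out as a short Python sketch. The dictionary keys, function names, and the boolean flag for the 6-month criterion are illustrative assumptions; the thresholds (item score of 3 or less, GAF composite of 65 or more) and the mean-composite construction are taken from the text.

```python
# PANSS items entering the remission criterion, as listed in the text.
# The key names are illustrative, not the variable names of the GROUP database.
REMISSION_ITEMS = (
    "delusions",
    "conceptual_disorganization",
    "hallucinatory_behavior",
    "mannerisms_posturing",
    "blunted_affect",
    "social_withdrawal",
    "lack_of_spontaneity",
    "unusual_thought_content",
)


def symptomatic_remission(panss, maintained_6_months):
    """True if all selected items score 3 or less and this held for 6 months.

    `maintained_6_months` is passed in as a flag (an assumption of this
    sketch), because the text does not describe how duration was derived.
    """
    severity_ok = all(panss[item] <= 3 for item in REMISSION_ITEMS)
    return severity_ok and maintained_6_months


def global_outcome(gaf_symptoms, gaf_disabilities, cutoff=65):
    """Dichotomize the mean of the two GAF subscales at the cutoff."""
    gaf = (gaf_symptoms + gaf_disabilities) / 2
    return "good" if gaf >= cutoff else "poor"


# Hypothetical patient: all selected items mild, but one subscale below 65.
patient_panss = {item: 2 for item in REMISSION_ITEMS}
print(symptomatic_remission(patient_panss, maintained_6_months=True))
print(global_outcome(gaf_symptoms=64, gaf_disabilities=65))
```

The example patient is in symptomatic remission yet has a poor global outcome (composite 64.5), illustrating that the two outcome dimensions can diverge for the same person.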

All clinical variables assessed at baseline within the GROUP project which permitted a sample size >250 patients were considered for inclusion as a predictor, barring the models which contained a prespecified set of features based on the best-performing features in the EUFEST study by Koutsouleris et al. (see Supplementary Table 1)18. We clustered available candidate baseline predictors in modalities according to information type: (1) demographic variables, including age, sex, education, socioeconomic status, living situation, and employment; (2) illness-related variables of diagnosis, comorbidities, illness course, duration of untreated psychosis, quality of life, and medication use; (3) clinician-rated, present state symptoms as measured by the PANSS47; (4) substance use characteristics (i.e. illicit drug use, alcohol use, and smoking) indicated by urine analysis and the Composite International Diagnostic Interview50; (5) neurocognitive task scores of IQ, memory, processing speed/attention, and executive functioning, assessed with the Wechsler Adult Intelligence Scale-Third Edition short form, Word Learning Task, Continuous Performance Task-HQ, and Response Set-shifting Task, respectively; (6) social cognitive task scores of theory of mind, affect recognition, and facial recognition, assessed with the Hinting Task, Degraded Affect Recognition Task, and Benton Facial Recognition Test, respectively (for psychometric instrument references for cognitive testing, see Supplementary Note 1.5); (7) Premorbid Adjustment Scale items51, comprising social and cognitive functioning in childhood and adolescence; (8) need of care items, measured with the CANSAS52,53; (9) self-rated lifetime psychotic experiences, consisting of Community Assessment of Psychic Experiences questionnaire (CAPE) items54; (10) extrapyramidal symptoms, comprising akathisia, dyskinesia, and Parkinsonian symptoms; (11) genetic features (i.e. polygenic risk score for schizophrenia55, and familial loading of psychotic disorder, bipolar disorder, and drug abuse, measures that comprise the absence or presence of affected relatives of the patient56); (12) environmental variables of urbanicity and living situation (for global content of, and features within, the modalities, see Supplementary Note 1 and Supplementary Table 1). Within each modality, missing data for every feature and subject with <20% missing values was imputed and scaled; features and subjects with ≥20% missing values were excluded (also see Supplementary Note 2).
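The 20% missingness rule can be illustrated with a minimal Python sketch. It covers only the exclusion step: the imputation and scaling methods are described in Supplementary Note 2 and are not reproduced here. The order of exclusion (features first, then subjects), the function name, and the toy data are assumptions of this sketch, not details confirmed by the text.

```python
def drop_sparse(rows, threshold=0.20):
    """Exclude features, then subjects, with >= threshold missing values.

    `rows` is a list of dicts (one per subject) mapping feature name to a
    value or None. Filtering features before subjects is an assumption.
    """
    n_subjects = len(rows)
    kept_features = [
        f for f in rows[0]
        if sum(r[f] is None for r in rows) / n_subjects < threshold
    ]
    if not kept_features:
        return [], []
    kept_rows = []
    for r in rows:
        n_missing = sum(r[f] is None for f in kept_features)
        if n_missing / len(kept_features) < threshold:
            kept_rows.append({f: r[f] for f in kept_features})
    return kept_rows, kept_features


# Toy modality (hypothetical): 10 subjects, 6 features.
rows = [{f"f{j}": float(i + j) for j in range(6)} for i in range(10)]
for i in (1, 2, 3):
    rows[i]["f5"] = None      # f5 is missing in 30% of subjects
rows[0]["f0"] = None          # subject 0 misses 2 of the 5 remaining features
rows[0]["f1"] = None
kept_rows, kept_features = drop_sparse(rows)
print(len(kept_rows), kept_features)
```

In this toy example, feature f5 and subject 0 are excluded, while subjects whose only missing value was in f5 are retained because the feature is removed before subject-level missingness is assessed.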

Creation of individual prediction models: machine learning strategy

We trained a linear support vector machine (SVM)57, to find the optimal separating hyperplane dividing patients into the two outcome classes (Fig. 2). For a given training dataset, each patient is represented by a labeled datapoint in an m-dimensional feature space. The position of the data point is determined by the score on the m baseline predictors (input features) and its binary label is the outcome (−1: good outcome; +1: poor outcome). SVM returns feature weights, reflecting the relative influence of predictors on outcome prediction. We used weighting by outcome class to account for unbalance between outcome group sizes and blind the algorithm to base rate distribution, to avoid model bias towards the largest outcome group. Internal validation was performed with three-layer, 10-fold nested cross-validation, where the inner cross-validation layer optimized the cost parameter, representing a penalty imposed on cases violating the margin of the decision boundary of the model. The middle layer selected the smallest predictor set with performance within 10% of the best performing set by recursive feature elimination (RFE). The outer layer provided performance estimates, reflecting the accuracy of the ensemble of k models taken together. This validation procedure was repeated 50 times to reduce dependency on the choice of train-test partitions. We employed the e1071 library (version 1.6.8) for SVM in R (version 3.4.0); and the caret package (version 6.0.76) for RFE58,59.
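The selection rule of the middle layer (smallest predictor set within 10% of the best-performing set) can be sketched as follows. Whether the 10% tolerance was applied relative to the best performance, as assumed here, or as an absolute difference is not stated in the text; the function name and the performance values are hypothetical.

```python
def smallest_within_tolerance(performance_by_size, tolerance=0.10):
    """Return the smallest feature-set size whose performance is within
    `tolerance` (relative, an assumption) of the best-performing size.

    `performance_by_size` maps number of features -> cross-validated score.
    """
    best = max(performance_by_size.values())
    eligible = [
        size for size, perf in performance_by_size.items()
        if (best - perf) / best <= tolerance
    ]
    return min(eligible)


# Hypothetical RFE profile: balanced accuracy per subset size.
profile = {40: 0.66, 20: 0.65, 10: 0.61, 5: 0.58}
print(smallest_within_tolerance(profile))
```

With these illustrative values, the 10-feature set is chosen: it loses about 7.6% relative to the best set, whereas the 5-feature set loses about 12% and falls outside the tolerance. The rule trades a small amount of accuracy for a leaner model.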

Fig. 2: Machine learning pipeline. D = modality; M = model; training sets in dark blue, test/validation sets in yellow. a Data selection (see Supplementary Fig. 1 for details) and preprocessing, including scaling and imputation. b Unimodal models, to identify the most informative modalities. c Multimodal models consisting of 2–4 modalities, including recursive feature elimination (RFE). d External validation of multimodal models using leave-one-site-out (LOSO) validation, where one of the four geographic sites is held out of model training and used for external validation. Support vector machine (SVM) setup, of which RFE is a part: (i) in the inner layer, a CV loop is used to find the optimal value for the cost hyperparameter C from 38 points equidistant on a log2 scale, starting at 0.0001 and ending at 37.07. C sets a penalty for violating the margin of the hyperplane; (ii) the middle layer employs a CV loop for RFE, a feature selection algorithm. It starts by including all available features in the model and iteratively eliminates the least informative features until the stopping criterion is met. The smallest set of features with performance within 10% of the best-performing set is selected; (iii) in the outer layer, a CV loop is used to define feature weights in the training set (9/10 of the data) and test the accuracy of the model in the validation set (1/10 of the data). Repetition of this procedure yields 10 models, and the whole procedure is repeated 50 times to reduce dependency on the choice of train-test partitions. The final prediction for a patient is an ensemble constituting an average of 50 repetitions.
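The cost grid and the feature-set selection rule in the caption can be sketched in a few lines of Python. The step of 0.5 on the log2 scale is not stated in the text; it is derived here from the reported endpoints (0.0001 and 37.07) and the 38 grid points. Reading "within 10%" as a relative tolerance on the best performance is likewise an assumption, and the function names are hypothetical.

```python
def cost_grid(start=0.0001, n_points=38, log2_step=0.5):
    """Candidate values for C, equidistant on a log2 scale.

    log2_step=0.5 is inferred from the reported endpoints, not quoted.
    """
    return [start * 2 ** (log2_step * k) for k in range(n_points)]


def smallest_within_tolerance(perf_by_size, tol=0.10):
    """Smallest feature-set size whose performance is within `tol`
    (relative, an assumption) of the best-performing set."""
    best = max(perf_by_size.values())
    eligible = [size for size, perf in perf_by_size.items()
                if perf >= best * (1 - tol)]
    return min(eligible)


grid = cost_grid()  # grid[0] is 0.0001; grid[-1] is approximately 37.07

# Hypothetical cross-validated accuracies per number of retained features.
chosen = smallest_within_tolerance({40: 0.66, 20: 0.65, 10: 0.61, 5: 0.58})
```

In the hypothetical example, the 10-feature set is chosen: it is the smallest set whose accuracy (0.61) stays above 90% of the best accuracy (0.66).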

See Supplementary Note 3 for a detailed description of the machine learning pipeline, and Supplementary Note 4.3 for a comparison with an alternative nonlinear learning design (random forest classifier + IsoMap dimensionality reduction, implemented in Neuropredict60), which yielded comparable performance in the study sample (Supplementary Table 16).

Creation of individual prediction models: training and validation design

We employed a data-driven, modality-wise learning strategy with the aim of automatically identifying a concise set of features from a limited number of clinical instruments. We entered the best-performing modalities from preliminary unimodal modeling runs (Fig. 2b; Supplementary Table 2) together into the SVM to train a multimodal prediction model (Fig. 2c).

To align with best practice in prognostic model development19, our study included three components: model development, model validation, and comparison to existing models. (A) Data-driven model development, including internal validation using repeated 10-fold nested cross-validation (Fig. 2a–c). Single-feature models containing only baseline GAF as a predictor were additionally trained to benchmark model performance (Supplementary Note 4.1). (B) A test of generalization to out-of-study samples with leave-one-site-out (LOSO) validation (Fig. 2d). Each of the four geographical sites (Amsterdam, Utrecht, Groningen, and Maastricht) of the GROUP study was held out once, and the prediction model was trained on patients from the remaining three sites. This model was then tested on the held-out site, to yield prediction accuracy in a site geographically distinct from the sites the model was trained on. To estimate predictive power in unseen data, the average prediction accuracy from the four LOSO runs was calculated. We assessed differences between geographic sites on the measures included in the models and ran single-site models post hoc, to offer possible explanations for performance differences between LOSO runs (Supplementary Note 4.4). (C) Applicability of 4-week and 52-week outcome predictors in first-episode psychosis for 3- and 6-year outcome prediction in a heterogeneous sample. We selected GROUP predictors matching the top 10% of 4- and 52-week global outcome predictors from the European First Episode Schizophrenia Trial (EUFEST; Supplementary Table 1)18, and trained the SVM to test their capability of predicting long-term outcomes within the GROUP sample.
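The LOSO scheme in component (B) amounts to one train-test split per site. The Python sketch below generates these splits from a list of site labels and averages per-site accuracies; the function names and the toy site assignment are hypothetical, and model fitting is deliberately left out.

```python
def leave_one_site_out(sites):
    """Yield (held_out_site, train_indices, test_indices), one per site."""
    for held_out in sorted(set(sites)):
        train = [i for i, s in enumerate(sites) if s != held_out]
        test = [i for i, s in enumerate(sites) if s == held_out]
        yield held_out, train, test


def mean_loso_accuracy(acc_by_site):
    """Unweighted average of the per-site accuracies."""
    return sum(acc_by_site.values()) / len(acc_by_site)


# Toy site assignment for six hypothetical patients.
sites = ["Amsterdam", "Utrecht", "Groningen", "Maastricht", "Amsterdam", "Utrecht"]
splits = list(leave_one_site_out(sites))  # four splits, one per site
```

Each patient appears in exactly one test set, and no patient from the held-out site contributes to training the model that is tested on that site.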

We assessed model performance by calculating sensitivity, specificity, balanced accuracy (BAC: the average of sensitivity and specificity), positive predictive value, and negative predictive value. To give an overview of the features that are important for predicting long-term outcomes, we listed the features with the highest selection chance per model (top 10%) that were selected in >1 model. Since the entire cross-validated RFE procedure was repeated 50 times, we were able to calculate the percentage of repeats in which each patient was misclassified or correctly classified. To explore ways to enhance future model performance, we compared the profile of patients misclassified in ≥90% of repeats with that of patients correctly classified in ≥90% of repeats on sociodemographic and clinical characteristics, separately for the poor and the good outcome groups. We followed the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) statement61.
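These performance measures follow directly from the confusion matrix. The Python sketch below treats poor outcome (+1) as the positive class, in line with the label coding described above; that choice, the function names, and the toy labels are assumptions for illustration.

```python
def _ratio(num, den):
    """Safe division: NaN when the denominator is zero."""
    return num / den if den else float("nan")


def classification_metrics(y_true, y_pred, positive=1):
    """Sensitivity, specificity, BAC, PPV, and NPV for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    sens, spec = _ratio(tp, tp + fn), _ratio(tn, tn + fp)
    return {
        "sensitivity": sens,
        "specificity": spec,
        "bac": (sens + spec) / 2,  # balanced accuracy
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
    }


def misclassification_rate(true_label, predictions_over_repeats):
    """Fraction of repeats in which one patient was misclassified."""
    wrong = sum(p != true_label for p in predictions_over_repeats)
    return wrong / len(predictions_over_repeats)


# Toy example: 4 poor-outcome and 6 good-outcome patients.
y_true = [1, 1, 1, 1, -1, -1, -1, -1, -1, -1]
y_pred = [1, 1, 1, -1, -1, -1, -1, -1, 1, 1]
metrics = classification_metrics(y_true, y_pred)
```

Because BAC averages sensitivity and specificity, it is not inflated by unequal outcome group sizes, unlike raw accuracy.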

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to them containing information that could compromise research participant privacy or consent.

Code availability

The code used for analyzing the data is publicly available at: https://github.com/patterns-in-psychiatry/GROUP_outcome.

References

Morgan, C. et al. Reappraising the long-term course and outcome of psychotic disorders: the AESOP-10 study. Psychol. Med. 44, 2713–2726 (2014).

Volavka, J. & Vevera, J. Very long-term outcome of schizophrenia. Int. J. Clin. Pract. 72, e13094 (2018).

Lally, J. et al. Remission and recovery from first-episode psychosis in adults: systematic review and meta-analysis of long-term outcome studies. Br. J. Psychiatry 211, 350–358 (2017).

Parnas, J. A disappearing heritage: the clinical core of schizophrenia. Schizophr. Bull. 37, 1121–1130 (2011).

Lambert, M., Karow, A., Leucht, S., Schimmelmann, B. G. & Naber, D. Remission in schizophrenia: validity, frequency, predictors, and patients’ perspective 5 years later. Dialogues Clin. Neurosci. 12, 393–407 (2010).

Diaz-Caneja, C. M. et al. Predictors of outcome in early-onset psychosis: a systematic review. NPJ Schizophr. 1, 14005 (2015).

Millan, M. J. et al. Altering the course of schizophrenia: progress and perspectives. Nat. Rev. Drug Discov. 15, 485–515 (2016).

Strauss, J. S. & Carpenter, W. T. Jr. The prediction of outcome in schizophrenia: I. Characteristics of outcome. Arch. Gen. Psychiatry 27, 739–746 (1972).

Van Eck, R. M., Burger, T. J., Vellinga, A., Schirmbeck, F. & de Haan, L. The relationship between clinical and personal recovery in patients with schizophrenia spectrum disorders: a systematic review and meta-analysis. Schizophr. Bull. 44, 631–642 (2018).

Leamy, M., Bird, V., Le Boutillier, C., Williams, J. & Slade, M. Conceptual framework for personal recovery in mental health: systematic review and narrative synthesis. Br. J. Psychiatry 199, 445–452 (2011).

Wood, L. & Alsawy, S. Recovery in psychosis from a service user perspective: a systematic review and thematic synthesis of current qualitative evidence. Community Ment. Health J. 54, 793–804 (2018).

Leucht, S. et al. The optimization of treatment and management of schizophrenia in Europe (OPTiMiSE) trial: rationale for its methodology and a review of the effectiveness of switching antipsychotics. Schizophr. Bull. 41, 549–558 (2015).

Wunderink, L., Nieboer, R. M., Wiersma, D., Sytema, S. & Nienhuis, F. J. Recovery in remitted first-episode psychosis at 7 years of follow-up of an early dose reduction/discontinuation or maintenance treatment strategy: long-term follow-up of a 2-year randomized clinical trial. JAMA Psychiatry 70, 913–920 (2013).

Drake, R. E. et al. Housing instability and homelessness among rural schizophrenic patients. Am. J. Psychiatry 148, 330–336 (1991).

Killackey, E. et al. Individual placement and support for vocational recovery in first-episode psychosis: randomised controlled trial. Br. J. Psychiatry 214, 76–82 (2019).

van Os, J., Guloksuz, S., Vijn, T. W., Hafkenscheid, A. & Delespaul, P. The evidence-based group-level symptom-reduction model as the organizing principle for mental health care: time for change? World Psychiatry 18, 88–96 (2019).

Kessler, R. C. et al. Testing a machine-learning algorithm to predict the persistence and severity of major depressive disorder from baseline self-reports. Mol. Psychiatry 21, 1366–1371 (2016).

Koutsouleris, N. et al. Multisite prediction of 4-week and 52-week treatment outcomes in patients with first-episode psychosis: a machine learning approach. Lancet Psychiatry 3, 935–946 (2016).

Dwyer, D. B., Falkai, P. & Koutsouleris, N. Machine learning approaches for clinical psychology and psychiatry. Annu. Rev. Clin. Psychol. 14, 91–118 (2018).

Janssen, R. J., Mourao-Miranda, J. & Schnack, H. G. Making individual prognoses in psychiatry using neuroimaging and machine learning. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 3, 798–808 (2018).

Huys, Q. J., Maia, T. V. & Frank, M. J. Computational psychiatry as a bridge from neuroscience to clinical applications. Nat. Neurosci. 19, 404–413 (2016).

Korver, N. et al. Genetic Risk and Outcome of Psychosis (GROUP), a multi-site longitudinal cohort study focused on gene-environment interaction: objectives, sample characteristics, recruitment and assessment methods. Int. J. Methods Psychiatr. Res. 21, 205–221 (2012).

Shatte, A. B. R., Hutchinson, D. M. & Teague, S. J. Machine learning in mental health: a scoping review of methods and applications. Psychol. Med. 49, 1426–1448 (2019).

Dinga, R. et al. Predicting the naturalistic course of depression from a wide range of clinical, psychological, and biological data: a machine learning approach. Transl. Psychiatry 8, 241 (2018).

Guloksuz, S. et al. Examining the independent and joint effects of molecular genetic liability and environmental exposures in schizophrenia: results from the EUGEI study. World Psychiatry 18, 173–182 (2019).

Drake, R. J. Insight into illness: impact on diagnosis and outcome of nonaffective psychosis. Curr. Psychiatry Rep. 10, 210–216 (2008).

Landolt, K. et al. Unmet needs in patients with first-episode schizophrenia: a longitudinal perspective. Psychol. Med. 42, 1461–1473 (2012).

Mol, A. The Body Multiple: Ontology in Medical Practice (Duke University Press, 2002).

van Westen, M., Rietveld, E. & Denys, D. Effective deep brain stimulation for obsessive-compulsive disorder requires clinical expertise. Front. Psychol. 10, 2294 (2019).

Vickers, A. J., Van Calster, B. & Steyerberg, E. W. Net benefit approaches to the evaluation of prediction models, molecular markers, and diagnostic tests. BMJ 352, i6 (2016).

Chekroud, A. M. et al. Cross-trial prediction of treatment outcome in depression: a machine learning approach. Lancet Psychiatry 3, 243–250 (2016).

Starke, G., De Clercq, E., Borgwardt, S. & Elger, B. S. Computing schizophrenia: ethical challenges for machine learning in psychiatry. Psychol. Med. 1–7 (2020).

Fusar-Poli, P. & Van Os, J. Lost in transition: setting the psychosis threshold in prodromal research. Acta Psychiatr. Scand. 127, 248–252 (2013).

Schnack, H. G. Improving individual predictions: machine learning approaches for detecting and attacking heterogeneity in schizophrenia (and other psychiatric diseases). Schizophr. Res. https://doi.org/10.1016/j.schres.2017.10.023 (2017).

Varoquaux, G. et al. Assessing and tuning brain decoders: cross-validation, caveats, and guidelines. Neuroimage 145, 166–179 (2017).

Koutsouleris, N. et al. Prediction models of functional outcomes for individuals in the clinical high-risk state for psychosis or with recent-onset depression: a multimodal, multisite machine learning analysis. JAMA Psychiatry 75, 1156–1172 (2018).

de Wit, S. et al. Individual prediction of long-term outcome in adolescents at ultra-high risk for psychosis: applying machine learning techniques to brain imaging data. Hum. Brain Mapp. 38, 704–714 (2017).

Ruissen, A. M., Widdershoven, G. A., Meynen, G., Abma, T. A. & van Balkom, A. J. A systematic review of the literature about competence and poor insight. Acta Psychiatr. Scand. 125, 103–113 (2012).

Schnack, H. G. & Kahn, R. S. Detecting neuroimaging biomarkers for psychiatric disorders: sample size matters. Front. Psychiatry 7, 50 (2016).

Kessler, R. C. et al. Predicting suicides after outpatient mental health visits in the Army Study to Assess Risk and Resilience in Servicemembers (Army STARRS). Mol. Psychiatry 22, 544–551 (2017).

Koutsouleris, N. et al. Prediction models of functional outcomes for individuals in the clinical high-risk state for psychosis or with recent-onset depression: a multimodal, multisite machine learning analysis. JAMA Psychiatry 75, 1156–1172 (2018).

Sullivan, S. et al. Models to predict relapse in psychosis: a systematic review. PLoS ONE 12, e0183998 (2017).

American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, (4th ed., text revision). Washington, DC (2000).

Andreasen, N. C., Flaum, M. & Arndt, S. The Comprehensive Assessment of Symptoms and History (CASH). An instrument for assessing diagnosis and psychopathology. Arch. Gen. Psychiatry 49, 615–623 (1992).

Wing, J. K. et al. SCAN. Schedules for clinical assessment in neuropsychiatry. Arch. Gen. Psychiatry 47, 589–593 (1990).

Andreasen, N. C. et al. Remission in schizophrenia: proposed criteria and rationale for consensus. Am. J. Psychiatry 162, 441–449 (2005).

Kay, S. R., Fiszbein, A. & Opler, L. A. The Positive and Negative Syndrome Scale (PANSS) for schizophrenia. Schizophr. Bull. 13, 261–276 (1987).

Jaaskelainen, E. et al. A systematic review and meta-analysis of recovery in schizophrenia. Schizophr. Bull. 39, 1296–1306 (2013).

Scott, J. et al. Clinical staging in psychiatry: a cross-cutting model of diagnosis with heuristic and practical value. Br. J. Psychiatry 202, 243–245 (2013).

World Health Organization (WHO). Composite International Diagnostic Interview (CIDI), version 1.0 (Geneva, 1990).

Cannon-Spoor, H. E., Potkin, S. G. & Wyatt, R. J. Measurement of premorbid adjustment in chronic schizophrenia. Schizophr. Bull. 8, 470–484 (1982).

Phelan, M. et al. The Camberwell Assessment of Need: the validity and reliability of an instrument to assess the needs of people with severe mental illness. Br. J. Psychiatry 167, 589–595 (1995).

Andresen, R., Caputi, P. & Oades, L. G. Interrater reliability of the Camberwell Assessment of Need Short Appraisal Schedule. Aust. N. Z. J. Psychiatry 34, 856–861 (2000).

Stefanis, N. C. et al. Evidence that three dimensions of psychosis have a distribution in the general population. Psychol. Med. 32, 347–358 (2002).

McLaughlin, R. L. et al. Genetic correlation between amyotrophic lateral sclerosis and schizophrenia. Nat. Commun. 8, 14774 (2017).

Derks, E. M., Verweij, K. H., Kahn, R. S. & Cahn, W. C. The calculation of familial loading in schizophrenia. Schizophr. Res. 111, 198–199 (2009).

Vapnik, V. N. An overview of statistical learning theory. IEEE Trans. Neural Netw. 10, 988–999 (1999).

Chang, C.-C. & Lin, C.-J. LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2, 27 (2011).

Kuhn, M. Building Predictive Models in R Using the caret Package. J. Stat. Softw. 28, 1–26 (2008).

Raamana, P. R. Neuropredict: Easy Machine Learning and Standardized Predictive Analysis of Biomarkers https://doi.org/10.5281/ZENODO.1058993 (2017).

Moons, K. G. et al. Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD): explanation and elaboration. Ann. Intern. Med. 162, W1–W73 (2015).

Acknowledgements

We are grateful for the generosity of time and effort by the patients, their families and healthy subjects. Furthermore, we would like to thank all research personnel involved in the GROUP project, in particular: Joyce van Baaren, Erwin Veermans, Ger Driessen, Truda Driesen, Erna van’t Hag. The infrastructure for the GROUP study is funded through the Geestkracht program of the Dutch Health Research Council (Zon-Mw, grant number 10-000-1001), and matching funds from participating pharmaceutical companies (Lundbeck, AstraZeneca, Eli Lilly, Janssen Cilag) and universities and mental health care organizations (Amsterdam: Academic Psychiatric Centre of the Academic Medical Center and the mental health institutions: GGZ Ingeest, Arkin, Dijk en Duin, GGZ Rivierduinen, Erasmus Medical Centre, GGZ Noord Holland Noord. Groningen: University Medical Center Groningen and the mental health institutions: Lentis, GGZ Friesland, GGZ Drenthe, Dimence, Mediant, GGNet Warnsveld, Yulius Dordrecht and Parnassia psycho-medical center The Hague. Maastricht: Maastricht University Medical Centre and the mental health institutions: GGzE, GGZ Breburg, GGZ Oost-Brabant, Vincent van Gogh voor Geestelijke Gezondheid, Mondriaan, Virenze riagg, Zuyderland GGZ, MET ggz, Universitair Centrum Sint-Jozef Kortenberg, CAPRI University of Antwerp, PC Ziekeren Sint-Truiden, PZ Sancta Maria Sint-Truiden, GGZ Overpelt, OPZ Rekem. Utrecht: University Medical Center Utrecht and the mental health institutions Altrecht, GGZ Centraal and Delta.)

Author information

Contributions

J.N. and T.J.B. are considered co-first authors. H.G.S. and W.C. are considered co-last authors. The GROUP investigators designed the GROUP study and wrote the protocol. J.N., T.J.B., and H.G.S. drafted the manuscript. J.N., R.J.J., S.M.K., and D.P.J.O. carried out the analyses. J.N., T.J.B., R.J.J., M.S.K., H.S., and W.C. interpreted the data. T.J.B., W.C., H.G.S., M.B.K., F.S. and L.H. critically revised the manuscript. H.S. and W.C. supervised the project. All authors contributed to and have approved the final manuscript. All authors have agreed both to be personally accountable for the author’s own contributions and to ensure that questions related to the accuracy or integrity of any part of the work, even ones in which the author was not personally involved, are appropriately investigated, resolved, and the resolution documented in the literature.

Ethics declarations

Competing interests

L.H. has received research funding from Eli Lilly and honoraria for educational programs from Eli Lilly, Janssen-Cilag, BMS, and AstraZeneca. R.K. is or has been a member of DSMB for Janssen, Otsuka, Sunovion, and Roche. W.C. is or has been an unrestricted research grant holder with, or has received financial compensation as an independent symposium speaker or as a consultant from, Eli Lilly, BMS, Lundbeck, Sanofi-Aventis, Janssen-Cilag, AstraZeneca and Schering-Plough. I.M.-G. is an unrestricted research grant holder with Janssen-Cilag and has received financial compensation as an independent symposium speaker from Eli Lilly, BMS, Lundbeck, and Janssen-Cilag. All other authors report no potential competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Nijs, J., Burger, T.J., Janssen, R.J. et al. Individualized prediction of three- and six-year outcomes of psychosis in a longitudinal multicenter study: a machine learning approach. npj Schizophr 7, 34 (2021). https://doi.org/10.1038/s41537-021-00162-3

DOI: https://doi.org/10.1038/s41537-021-00162-3
