Abstract

Deficits in social functioning are especially severe amongst schizophrenia individuals with the prevalent comorbidity of social anxiety disorder (SZ&SAD). Yet, the mechanisms underlying the recognition of facial expression of emotions—a hallmark of social cognition—are practically unexplored in SZ&SAD. Here, we aim to reveal the visual representations SZ&SAD (n = 16) and controls (n = 14) rely on for facial expression recognition. We ran a total of 30,000 trials of a facial expression categorization task with Bubbles, a data-driven technique. Results showed that SZ&SAD’s ability to categorize facial expression was impared compared to controls. More severe negative symptoms (flat affect, apathy, reduced social drive) was associated with more impaired emotion recognition ability, and with more biases in attributing neutral affect to faces. Higher social anxiety symptoms, on the other hand, was found to enhance the reaction speed to neutral and angry faces. Most importantly, Bubbles showed that these abnormalities could be explained by inefficient visual representations of emotions: compared to controls, SZ&SAD subjects relied less on fine facial cues (high spatial frequencies) and more on coarse facial cues (low spatial frequencies). SZ&SAD participants also never relied on the eye regions (only on the mouth) to categorize facial expressions. We discuss how possible interactions between early (low sensitivity to coarse information) and late stages of the visual system (overreliance on these coarse features) might disrupt SZ&SAD’s recognition of facial expressions. Our findings offer perceptual mechanisms through which comorbid SZ&SAD impairs crucial aspects of social cognition, as well as functional psychopathology.

Similar content being viewed by others

Introduction

Deficits in social cognition, notably in the recognition of facial expression of emotions, are one of the hallmark impairments in individuals with schizophrenia (SZ)1. Social cognition deficits are significantly related to a person’s level of functioning2, and emotion perception in particular is strongly and consistently associated with various domains of functioning, such as community functioning, social behaviors, social skills, and social problem solving3.

SZ is also associated with multiple comorbid disorders, including anxiety disorders and more specifically social anxiety disorder (SAD)4,5. Several studies have demonstrated that not only does SAD worsen the prognosis of SZ, but also impedes the overall social recovery of the individual6. Furthermore, individuals suffering from comorbid schizophrenia and social anxiety (SZ&SAD) have a higher rate of suicide attempts, a high prevalence of alcohol/substance abuse disorders, and worse quality of life compared to those with SZ without social anxiety7. Thus, considering the serious consequences of comorbid SZ&SAD, better understanding the mechanisms underlying their emotion recognition deficits is important.

To our knowledge, only two studies have examined emotion recognition and confusion patterns in SZ&SAD8,9, and they have led to contradictory results. The current study’s main objective is to resolve this conflict and to shed light on the perceptual mechanisms responsible for the categorization of facial expression of emotions in SZ&SAD. To do so, we used Bubbles10, a data-driven method, which enabled us to reveal the specific visual representations (i.e., the precise facial features and spatial frequency information) that individuals with SZ&SAD rely on to decode facial expression of emotions11,12,13. This also allowed us to measure SZ&SAD’s emotion recognition ability with robust psychophysical metrics, to describe their emotion confusion patterns, and to reveal any association between these facial-affect processing and their psychiatric symptoms. Given the absence of studies on the visual representations in individuals with SZ&SAD and even in SAD, the following paragraphs will focus on reviewing findings relevant to the categorization of facial expression of emotions in individuals with SZ.

In individuals on the SZ spectrum, the presence of facial expression of emotion categorization deficits is now well-established. In an attempt to explain these impairments, clinical and cognitive psychologists have recently proposed that the emotion perception abnormalities of SZ may emerge from over-attributing specific emotions to affective faces, specifically negative emotions such as anger, fear, or disgust14,15,16,17,18. Likewise, psychologists have speculated that socially anxious individuals should also show a bias for negative emotions because of their fear of being judged in a negative way by their peers19,20. Empirical studies in both clinical and non-clinical populations have revealed some associations between a bias for negative emotions and social anxiety traits11,21,22,23,24,25,26. As far as we know, the joint effect of SZ&SAD on categorization biases has only been explored in one study9. The authors observed that the presence of social anxiety in SZ resulted in patients having more difficulty recognizing neutral faces, confusing them with faces expressing other emotions, compared to those with SZ and no social anxiety.

Prior work using behavioral and brain imaging methods with SZ individuals has revealed low-level perceptual abnormalities that may be linked to these facial expression categorization impairments and biases. Indeed, individuals with SZ tend to have reduced contrast sensitivity to low spatial frequency (LSF; coarse visual information) gratings27,28,29 and reduced sensitivity to LSF-filtered faces30,31,32. These studies as well as others33,34 have promoted the idea of a magnocellular deficit in SZ35. Using similar methods, researchers have also proposed that deficiencies at a higher-level of the visual system could explain poor facial-affect processing in SZ. It is thought, for example, that top-down modulation processes such as the “coarse-to-fine” object/face-recognition process36,37 could be linked to the emotion processing impairments of SZ patients38,39. Arguing for deficits on such top-down processes, recent brain imaging studies39 have proposed that individuals with SZ may compensate for their poor processing of LSF (coarse) information with a visual strategy relying more on high spatial frequency (HSF, fine) information. Quite surprisingly, however, even though these past studies have speculated on the nature of the high-level internal representations SZ rely on in various face-recognition tasks, only two studies have looked directly at such visual representations in SZ12,13. These two studies found atypical visual representations in SZ (e.g., less reliance on the eye region; see also eye-tracking studies40,41,42,43), but no evidence for a bias toward HSF in SZ. Again, however, no one has ever explored the effect of clinically diagnosed social anxiety, nor the joint effect of SZ and clinical social anxiety on visual representations.

The objectives of the present study were thus the following: (1) to measure the general ability for facial expression recognition in individuals with SZ&SAD; (2) to examine categorization biases in this population and to explore their links with psychiatric symptoms; and (3) to reveal the visual memory representations underlying the facial expression of four basic emotions in SZ&SAD (joy, fear, anger, neutrality). To be clear, the focus of this study was not to demonstrate that SZ&SAD has a different etiology or pathophysiology than SZ. Rather, this study’s main objective was to examine social cognition in this particular comorbidity because, as we pointed out above, it is relatively frequent, it significantly worsens SZ prognosis and it has received little attention in the SZ literature.

Results

The Bubbles procedure is illustrated in Fig. 1. On each trial, a face expressing an emotion was randomly selected among a set of 40, and randomly sampled using Gaussian apertures (bubbles) in the 2D image plane and in five spatial frequency bands (for more details, see “Methods” section and ref. 10). The quantity of visual information (i.e., the number of bubbles) was adjusted throughout the task by the QUEST algorithm44 so that each subject maintained an accuracy of 75%. This quantity of visual information was our measure of face-emotion categorization performance.

a An original face image was decomposed into five spatial frequency bands using a Laplacian transform. b Small Gaussian apertures (i.e., the bubbles) were then placed at random locations for each spatial frequency band separately. c Finally, the information revealed by the bubbles for each SF band was fused across the five frequency bands to produce one experimental stimulus (bottom image). The authors have obtained consent for publication of the face image depicted in this figure.

Emotion categorization performance

We first tested the hypothesis that individuals with comorbid SZ&SAD have impaired recognition of facial expression of emotions. One SZ&SAD participant was excluded from further analysis because of his poor performance (i.e., number of bubbles threshold >\({\bar{X}}\) ± 3σ; NSZ&SAD = 15). No control participants were excluded based on this criteria. The resulting total sample’s (i.e., SZ&SAD and controls) average number of bubbles to maintain an accuracy of 75% ranged from a low of 46 (i.e., best performance) to a high of 231 (poorest performance).

SZ&SAD performed worse—they required more bubbles—than neurotypicals to categorize facial expression of emotions (MSZ&SAD = 130.3471, MCTL = 90.3380; STDEVSZ&SAD = 50.8760, STDEVCTL = 34.3402, F(26, 1) = 5.75, p = 0.0239, Cohen’s d = 0.91) and were slower than controls (Fgroup_effect(104, 1) = 18.8, p < 0.0001, Cohen’s d = 0.829; Femotion_effect(104, 3) = 4.37, p = 0.006; Finteraction(104, 3) = 0.22, p > 0.3).

Association between emotion categorization performance and clinical psychopathology

We then tested if this impairment in emotion recognition ability is associated with SZ&SAD’s clinical psychopathology. The more negative symptoms (blunted affect, apathy, and reduced social drive as assessed by the BPRS, see “Methods” section) a person with SZ&SAD displayed, the worse this person was at categorizing facial expression of emotions (r(14) = 0.5768, p = 0.0244). There was no association between reaction time and BPRS symptoms (all ps > 0.05). Social anxiety symptoms (as assessed by the total score of the Brief Social Phobia Scale (BSPS), see Method section) were not significantly linked significantly to categorization performance (r(14) = −0.42, p = 0.14); they were linked, however, to faster reaction times for both anger and neutral faces in participants with SZ&SAD (ranger(14) = −0.63, p = 0.01; rneutral = −0.52, p = 0.04). More specific (post-hoc) tests were conducted on the association between the categorization speed of neutral and angry facial expressions and dimensions of social anxiety symptoms. Only the association between the avoidance dimension and the categorization speed of neutral faces reached statistical significance after Bonferroni-correction (rneutral(14) = −0.64, p = 0.0109, Bonferroni-corrected critical p = 0.0166).

Association between emotion confusions and clinical psychopathology

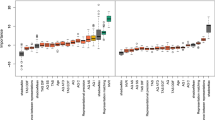

Next, we explored the associations between specific emotion confusions (e.g., angry face labelled as fearful) and clinical psychopathology. The confusion matrix we derived for the SZ&SAD group (shown in Fig. 2a, second row) first revealed three significant frequent confusions during the categorization of facial expression of emotions: (1) neutral faces were often miscategorized as “fearful”, (2) angry faces were often miscategorized as “neutral”, and (3) fearful faces were often miscategorized as “angry” (ProbabilityObserved = 13.14 %, χ2 = 112.0896; ProbabilityObserved = 13.14 %, χ2 = 112.0896; ProbabilityObserved = 10.64 %, χ2 = 24.9799, ProbabilityExpected = 8.3 %, i.e., \(\frac{{\left( {1 - {\rm{accuracyExpected}}} \right)}}{{\left( {n{\rm{Class}} - 1} \right)}}\), all ps < 0.003, the Bonferroni-corrected critical p). There were no significant local contrasts between confusions of SZ&SAD and controls.

The correlation between individual biases in the decoding of specific facial expressions and the four BPRS dimensions described earlier is shown in Fig. 2c, d. Congruent with our previous result, SZ&SAD individuals with more severe negative symptoms were more prone to miscategorize angry and fearful faces as “neutral” (r(14)angerAsneutral = 0.7823; r(14)fearAsneutral = 0.7521, ps < 0.003, Fig. 2c). We also found a negative correlation between negative symptoms and accurate anger categorization (r(14) = −0.7594, p < 0.003; Fig. 2c). Moreover, the higher an individual with SZ&SAD scored on the affect dimension (presence of depression, anxiety and guilt), the more this individual confused angry with joyful faces (r(14) = 0.7756, p < 0.003; Fig. 2d).

Visual representations for accurate emotion categorization

Finally, we revealed the visual memory representations underlying the facial expressions of emotions in SZ&SAD using Bubbles. The classification images (CIs) of control participants show that they used the mouth and, to a lesser extent, the eyes (mostly the left eye) to categorize facial expression of emotions (Fig. 3a; see also ref. 45). SZ&SAD participants, however, only relied on the mouth region to categorize facial expressions.

Regions attaining statistical significance (p < 0.05, two-tailed) in the classification images obtained during the categorization of facial expression of emotions in SZ&SAD (green, n = 16) and controls (red, n = 14). a Classification images (CI) were pooled across participants from the same subject group, spatial frequency scales and emotions. They show the overall use of facial features during this task for SZ&SAD and controls. b CI were pooled across participants from the same subject group and emotions. They show the overall use of facial features at different spatial frequencies during emotion recognition for SZ&SAD and controls. c–f CI were pooled across participants from the same subject group. They show the use of features at different spatial frequencies for c fear, d anger, e happy and f neutral faces for SZ&SAD and controls. All facial features used above statistical threshold for each emotion are also displayed on the left (SZ&SAD) and right side (controls) to help interpretation. The authors have obtained consent for publication of the face images depicted in this figure.

We also examined the use of facial features at different spatial frequencies across emotions (see Fig. 3b). Individuals with SZ&SAD (green) represented emotion cues from the mouth at spatial frequencies (SF) between 10.6 and 42.6 cycles per face (cpf). For controls (red), their visual representation of eye emotion cues contained only high SF (>21.3 cpf), while the mouth region contained only lower spatial frequencies (20.3–5.3 cpf).

We also examined the use of different spatial frequencies across emotions and facial features. This revealed that SZ&SAD relied overall less on high spatial frequency face-emotion information compared to controls (42.6–85.3 cpf; MSZ&SAD = 0.0741, MCONTROL = 0.1414, t(27) = −3.228, p = 0.0033, Cohen’s d = −1.29). In contrast, SZ&SAD used low SF face-emotion information more than controls (2.6–5.3 cpf; MSZ&SAD = 0.2369, MCONTROL = 0.0722, t(27) = 2.4518, p = 0.0210, Cohen’s d = 0.9443; mid-range SFs showed no significant contrasts).

We finally examined the use of facial features at different spatial frequencies for all emotions (see Fig. 3c–f). Individuals with SZ&SAD mostly represented emotion cues from the mouth at spatial frequencies between 10.6 and 42.6 cpf. For controls, their visual representation of eye emotion cues contained only high SFs (>21.3 cpf), while the mouth region contained only lower spatial frequencies (20.3–5.3 cpf). More specifically, CIs for fear recognition showed that individuals with SZ&SAD did not rely on the typical bilateral eye regions in high SF content, whereas controls did (e.g., ref. 45,46). Similarly, CIs for anger recognition showed that SZ&SAD patients did not rely on eye cues in high SFs; they rather used a region between both eyes in mid-high SF (i.e., frown lines) to do so.

Discussion

A better understanding of the mechanisms underlying emotion recognition in individuals with comorbid SZ&SAD is important considering both the prevalence and serious consequences of this additional diagnostic in SZ. Here, we used Bubbles, a data-driven psychophysical approach, and revealed the specific visual representations supporting facial expression of emotion recognition in SZ&SAD. We found, first, that SZ&SAD individuals have impaired recognition of facial expressions: They require more visual information and are slower than controls to recognize emotions at a 75% correct rate. This confirms and extends the evidence for emotion processing impairments in SZ patients1 to SZ&SAD patients. Second, we showed that more severe negative symptoms (blunted affect, apathy, and reduced social drive) were associated with poorer recognition of emotions expressed facially. This also aligns with previous findings on the effects of negative symptoms in SZ32,41,47,48,49,50 and generalizes them to SZ individuals with SAD. Then, we discovered that more severe negative symptoms specifically impact emotion perception through a bias for neutral emotions. Interestingly, affect symptoms (anxiety, depression, guilt, and somatic concern) were associated with a qualitatively different confusion pattern, i.e., with higher confusions of angry faces. Considering that impaired emotion perception is especially present in individuals with SZ suffering from flat affect41,47, our finding of face representation biases toward neutral affect suggest that the visual representations of emotions might not only be central to perception, but also to the expression of facial-affect in these individuals.

Another finding linking clinical psychopathology to emotion processing in individuals with SZ&SAD was that higher social anxiety (specifically avoidance) symptoms actually enhanced the speed at which they react to facial expressions of anger and neutral. This finding supports the idea of an important role of emotion valence in SZ&SAD’s emotion processing mechanisms. Indeed, socially phobic individuals have been described to have an abnormal—but not necessarily impaired—processing of negative emotions from social stimuli26. Faster processing of emotions of negative valence in these individuals is generally thought to arise from an early hypervigilance to threatening social stimuli26,51. While it is tempting to dissociate the respective effect of social anxiety (hypervigilance to negative emotions) and negative symptoms (poor representations biased toward neutral affect) in SZ&SAD, additional experimental studies will be needed to shed light on their respective effect on facial-affect processing in SZ.

Most importantly, we showed that these emotion recognition impairments in SZ&SAD’s could be explained by their inefficient visual representations of facial expression of emotions. Compared to typical controls, SZ&SAD relied less on fine facial cues (high SF) and more on coarse facial cues (LPSs). Several studies with SZ individuals have found disruption in the early stage (magnocellular) process of the vihual system, with, for example, impaired contrast sensitivity for LSF gratings (coarse information27,29,52). It has been proposed, as a consequence of this low-level processing, that SZ individuals should rely on high SFs more than controls for resolving visual tasks39. We clearly demonstrated that this is not the case. Instead, our results are in agreement with two other studies that examined the relative importance of coarse and fine information in the classification of objects53 and faces54, and found a bias for coarse information. However, we disagree with the conclusion reached by the researchers according to which this LSF bias refutates the magnocellular pathway deficits hypothesis of SZ. We rather believe that the impaired recognition of facial expression of emotions in SZ results from an interaction between lower- and higher-level processing mechanisms. In other words, we propose that it is the joint effect of (1) their poor sensitivity to low spatial frequencies in early stages of the visual system and (2) the LSF bias of their higher-level (facial-affect) visual representations that produce these impairments.

Another noteworthy finding is that SZ&SAD individuals never represented the eye regions (only on the mouth area) in their visual representations of facial expression of emotions. Lee et al.12 and Clark et al.13 used the Bubbles technique to evaluate the visual representations of SZ participants during facial emotion recognition tasks, and found, much like we did in SZ&SAD participants, that SZ participants relied less on the eye regions than control participants. Most eye-tracking results have also corroborated these Bubbles findings by showing that SZ individuals tend to gaze less frequently at the eyes of faces40,41,42,43 than controls (but see refs. 38,55,56). However, the SZ participants of Lee et al.12 did use the eye regions in mid-to-high SFs to categorize both fear and happy faces. Likewise, the SZ participants of Clark et al.13 utilized the eye regions to categorize angry faces across spatial frequencies. These findings indicate a somewhat impaired representation of eye cues in SZ. In contrast, our SZ&SAD group did not rely on the eye region at all for any of the emotions or at any SF scale tested in these previous studies. Such extreme impaired eye representation is also at odds with what was found, using a similar method, in (non-clinical) social anxiety participants11. Our results appear to be more in line with findings from eye-tracking studies in clinically diagnosed socially anxious individuals57. That being said, our lack of SZ-only and SAD-only (clinically diagnosed social anxiety) control groups precludes the dissociation between main effects of SZ and SAD and their interaction on facial expression representations. Future studies with these control groups will be needed to draw more definitive conclusions. Nonetheless, we found significant impairments in both social cognition and core visual feature extraction mechanisms in the SZ&SAD population. These deficits stress the need for more empirical work on this population, and for a systematic assessment of SAD comorbidity in samples of SZ individuals.

Interestingly, the underutilization of the eye regions in our SZ&SAD participants can be explained by a systematic confusion of the emotion cues in this area. Indeed, additional CI analysis on the facial information SZ&SAD relied on when they confused a face (i.e., fearful) with another emotion (i.e., anger) revealed that their confusion of these emotions emerged from their misinterpretation of cues from the eyes (see Supplementary Fig. 1). This mechanism might explain why anger and fear tend to be confused in individuals with SZ15,17,18,32,58 as well as in the present study. This confusion analysis also showed that emotion cues systematically misinterpreted by SZ&SAD were mainly present for negative valence emotions, which supports the idea of abnormal representations of emotions with negative valence in SZ&SAD.

Another weakness of this study is the sample size. Although it compares favorably with most Bubbles or reverse-correlation studies (which tend to have few subjects but many trials per subject), it is relatively small for a psychiatric-disorder study. We believe this work is still valuable because it is among the first to describe impairments in the recognition of facial expression of emotions in individuals with comorbid SZ&SAD. Assuredly, additional studies will be needed to draw more definitive conclusions. A systematic assessment of SAD in SZ samples might help to better understand the representations of facial expression of emotions in this population, if only by helping researchers recruit individuals with comorbid SZ&SAD. Finally, results might be tinted by our participants being mainly first-episode patients. We do not believe, however, that this can account for the effects shown here because impairments in facial expression recognition typically get worse after the first episode59,60. If anything, this suggests that our study might underestimate SZ&SAD’s effect on social cognition.

Finally, our finding that the visual representations of typical and SZ&SAD individuals differ may lead to a new kind of perceptual intervention for SZ&SAD individuals. Indeed, some of us recently devised a training procedure capable of inducing the use of specific facial information in typical participants; and showed that the induction of the visual representation of the best face recognizers in individuals with intermediate face-recognition abilities led to an enhancement of their face-recognition abilities46,61,62. We hope that by inducing the visual representations of typical individuals (i.e., eye emotion cues) in SZ&SAD with similar interventions will reduce their impairment to recognize facial expression of emotions and, in turn, help their social functioning.

Methods

Participants and procedure

A total of 30 participants were recruited for this study. The first group consisted of 16 individuals diagnosed with both a SZ spectrum disorder (SZ, n = 9; schizo-affective disorder, n = 7) and comorbid SAD. The second group was composed of 14 normal controls (see Table 1 for sociodemographic and clinical information). Clinical participants were referred and diagnosed by the attending psychiatrist (according to the DSM-5 criteria), by means of the first-episode psychosis clinic of the Institut Universitaire en Santé Mentale de Montréal (IUSMM), the Centre Hospitalier de l’Université de Montréal (CHUM) and the Douglas institute Centre for Personalized Psychological Intervention for Psychosis. At the time of recruitment, most patients (64.7%) had experienced a single psychotic episode. All clinical participants were medicated with atypical antipsychotics (mean dose in chlorpromazine equivalent was 299.9 mg/day), and were considered stabilized by the attending psychiatrist. The normal control group was composed of neurotypical individuals, i.e., individuals who did not have any psychological or neurological disorder. This study was approved by the Ethics and Research Committee of the IUSMM, CHUM, Douglas Institute and the ethics committee of the Université de Montréal, and informed (written) consent was obtained from all participants.

All participants were administered a sociodemographic survey, and performed the Bubbles Facial Emotion Recognition Task (henceforth called Bubbles experiment). In addition, clinical participants were evaluated with the following instruments: (1) Structured Clinical Interview for DSM-5 (SCID63), which allowed to confirm patient diagnosis according to the DSM-5 criteria; (2) Brief Psychiatric Rating Scale–Expanded Version (BPRS64,65, which consists of a 24-item semi-structured interview that assesses the severity of psychiatric symptoms and which was delivered by trained-to-gold-standard graduate students. The following dimensions were used for analyses: negative symptoms, positive symptoms, affect, and activity; (3) BSPS66, which evaluates the severity of social anxiety and includes 18 self-report items answered on a five-point Likert scale (from 0 to 4). The scale has adequate psychometric properties66. The following subscales were generated: fear, avoidance, and physiological activation.

Bubbles experiment

Forty grayscale face stimuli (256 × 256 pixels; ~5.72 × 5.72 deg of visual angle) from the STOIC database ([five females & five males] × four facial expressions12,13,45,67) were used as base faces. The emotions conveyed by these stimuli (joy, fear, anger, and neutrality) are expressed by trained actors and were selected to be highly recognizable.

To map which parts of expressive faces at different spatial scales were used by individuals with SZ&SAD and controls, we used the Bubbles method and an emotion categorization task (joy, fear, anger, neutrality). This sampling procedure is illustrated in Fig. 1 (for further details, see ref. 10). Briefly, faces were drawn randomly from the 40 exemplars as well as their mirror-flipped images, and randomly sampled using Gaussian apertures (bubbles) in the 2D image plane and in five spatial frequency bands. Spatial filtering was achieved with Laplacian pyramid transforms68. The quantity of visual information (i.e., the number of bubbles) was adjusted throughout the task by the QUEST algorithm44 so that each subject maintained an accuracy of 75%. This quantity of visual information was our measure of face-emotion categorization performance (see data analysis section). These stimuli were shown at the center of the screen and remained there until the subject pressed a key corresponding to one of the four possible emotions. Every participant completed 10 blocks of 100 trials each.

Association between emotion categorization performance and clinical psychopathology

To associate emotion recognition processes to clinical psychopathology, individual psychophysical metrics within the SZ&SAD cohort (e.g., individual facial expression recognition performance thresholds) were Spearman-correlated with individual clinical scores (e.g., social anxiety trait score, as measured by the BSPS). Individual psychophysical performance thresholds for the emotion categorization task69,70 were computed from the best-fitted Gaussian cumulative distribution function (i.e., accuracy as a function of number of bubbles apertures across trials), and compared between SZ&SAD and controls with two-tailed unpaired t tests. Individual average reaction times for correct responses were computed for the four emotion categories, discarding outlier trials (trials with Z > 2.5, Z < −2.5 or <200 ms were rejected). Corrections for family-wise error rate (FWER) were applied within each group of post-hoc tests. Here, for example, Bonferroni corrections were applied when specifying the main effect between reaction times and social anxiety, dividing the critical p value by the number of subscales of social anxiety symptoms, i.e., 0.05/3 (0.0166).

Confusions: association with clinical psychopathology

To reveal if SZ&SAD’s clinical psychopathology was associated with specific confusion patterns between emotions, a 4 × 4 (categorized emotion × presented emotion) confusion matrix was first derived for each individual and averaged across participants. Specifically, we computed the probability that each observer classified a given signal (e.g., an angry face) as a specific emotion (e.g., neutral), for each possible categorization and signal type. To know whether and which specific confusion patterns were associated with clinical psychopathology, we Spearman-correlated the between-individual variation in psychiatric score of interest (BRPS & BSPS scores) with every categorization x facial-affect probability pair (e.g., an angry face confused as a neutral one). We controlled for FWER using Bonferroni corrections: we divided the critical p value by 16—0.05/16 (0.0037)—the number of statistical tests against the null hypothesis (e.g., no relationship between negative symptoms and emotion confusions).

Bubbles CIs

Our main goal was to reveal the visual memory representations of individuals with SZ&SAD when categorizing the facial expression of four basic emotions. Considering its use to explore complex memory representations, reverse-correlation techniques prescribes a high number of trial repetition per participant. In this study, thus, robust within-participants measures (1000 trials per individual, for a total of >30 000 trials) was preferred over a large sample size71,72. To reveal these visual representations, we performed multiple linear regressions on the location of the bubbles and z-scored response accuracies across trials, for each subject, spatial frequency band and emotion. Each of these regressions produces a plane of regression coefficients that we call a Classification Image (CI); it reveals parts or features of faces—in their specific spatial frequency band—that are systematically associated with a participant’s accurate emotion categorization. CIs were then summed across participants of each group per emotion and frequency band, smoothed with a Gaussian kernel of 15 pixels of standard deviation, and transformed into Z scores. Cluster tests73 (search region = 65,536 pixels; arbitrary z-score threshold = 2.7; cluster size statistical threshold = 277 pixels; p < 0.05, two-tailed) were used to assess the statistical significance of the resulting group CIs. The Cluster test corrects for FWER while taking into account the spatial correlation that is inherent to the Bubbles method. To specifically test whether SZ&SAD relied more on high SF, we contrasted (unpaired t test), between SZ&SAD and controls, the individual average z-scores contained in the face area for each of the five frequency bands.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The MATLAB code that supports the findings of this study are available from the corresponding author upon reasonable request.

References

Green, M. F., Horan, W. P. & Lee, J. Social cognition in schizophrenia. Nat. Rev. Neurosci. 16, 620–631 (2015).

Couture, S. M., Penn, D. L. & Roberts, D. L. The functional significance of social cognition in schizophrenia: a review. Schizophr. Bull. 32 Suppl 1, S44–S63 (2006).

Irani, F., Seligman, S., Kamath, V., Kohler, C. & Gur, R. C. A meta-analysis of emotion perception and functional outcomes in schizophrenia. Schizophr. Res. 137, 203–211 (2012).

Achim, A. M. et al. How prevalent are anxiety disorders in schizophrenia? A meta-analysis and critical review on a significant association. Schizophr. Bull. 37, 811–821 (2011).

Braga, R. J., Reynolds, G. P. & Siris, S. G. Anxiety comorbidity in schizophrenia. Psychiatry Res. 210, 1–7 (2013).

Lysaker, P. H., Yanos, P. T., Outcalt, J. & Roe, D. Association of stigma, self-esteem, and symptoms with concurrent and prospective assessment of social anxiety in schizophrenia. Clin. Schizophr. Relat. Psychoses 4, 41–48 (2010).

Pallanti, S., Quercioli, L. & Hollander, E. Social anxiety in outpatients with schizophrenia: a relevant cause of disability. Am. J. Psychiatry 161, 53–58 (2004).

Achim, A. M. et al. Impact of social anxiety on social cognition and functioning in patients with recent-onset schizophrenia spectrum disorders. Schizophr. Res. 145, 75–81 (2013).

Lecomte, T., Théroux, L., Paquin, K., Potvin, S. & Achim, A. Can social anxiety impact facial emotion recognition in schizophrenia? J. Nerv. Ment. Dis. 207, 140–144 (2019).

Gosselin, F. & Schyns, P. G. Bubbles: a technique to reveal the use of information in recognition tasks. Vis. Res. 41, 2261–2271 (2001).

Langner, O., Becker, E. S. & Rinck, M. Social anxiety and anger identification: bubbles reveal differential use of facial information with low spatial frequencies. Psychol. Sci. 20, 666–670 (2009).

Lee, J., Gosselin, F., Wynn, J. K. & Green, M. F. How do schizophrenia patients use visual information to decode facial emotion? Schizophr. Bull. 37, 1001–1008 (2011).

Clark, C. M., Gosselin, F. & Goghari, V. M. Aberrant patterns of visual facial information usage in schizophrenia. J. Abnorm. Psychol. 122, 513–519 (2013).

Kohler, C. G. et al. Facial emotion recognition in schizophrenia: intensity effects and error pattern. Am. J. Psychiatry 160, 1768–1774 (2003).

Huang, J. et al. Perceptual bias of patients with schizophrenia in morphed facial expression. Psychiatry Res. 185, 60–65 (2011).

Bedwell, J. S. et al. The magnocellular visual pathway and facial emotion misattribution errors in schizophrenia. Prog. Neuropsychopharmacol. Biol. Psychiatry 44, 88–93 (2013).

Premkumar, P. et al. Misattribution bias of threat-related facial expressions is related to a longer duration of illness and poor executive function in schizophrenia and schizoaffective disorder. Eur. Psychiatry 23, 14–19 (2008).

Pinkham, A. E., Brensinger, C., Kohler, C., Gur, R. E. & Gur, R. C. Actively paranoid patients with schizophrenia over attribute anger to neutral faces. Schizophr. Res. 125, 174–178 (2011).

Rapee, R. M. & Heimberg, R. G. A cognitive-behavioral model of anxiety in social phobia. Behav. Res. Ther. 35, 741–756 (1997).

Spokas, M. E., Rodebaugh, T. L. & Heimberg, R. G. Cognitive biases in social phobia. Psychiatry 6, 204–210 (2007).

Montagne, B. et al. Reduced sensitivity in the recognition of anger and disgust in social anxiety disorder. Cogn. Neuropsychiatry 11, 389–401 (2006).

de Jong, P. J. & Martens, S. Detection of emotional expressions in rapidly changing facial displays in high- and low-socially anxious women. Behav. Res Ther. 45, 1285–1294 (2007).

Hirsch, C. R. & Clark, D. M. Information-processing bias in social phobia. Clin. Psychol. Rev. 24, 799–825 (2004).

Philippot, P. & Douilliez, C. Social phobics do not misinterpret facial expression of emotion. Behav. Res. Ther. 43, 639–652 (2005).

Heuer, K., Lange, W.-G., Isaac, L., Rinck, M. & Becker, E. S. Morphed emotional faces: emotion detection and misinterpretation in social anxiety. J. Behav. Ther. Exp. Psychiatry 41, 418–425 (2010).

Machado-de-Sousa, J. P. et al. Facial affect processing in social anxiety: tasks and stimuli. J. Neurosci. Methods 193, 1–6 (2010).

Butler, P. D. & Javitt, D. C. Early-stage visual processing deficits in schizophrenia. Curr. Opin. Psychiatry 18, 151–157 (2005).

Obayashi, C. et al. Decreased spatial frequency sensitivities for processing faces in male patients with chronic schizophrenia. Clin. Neurophysiol. 120, 1525–1533 (2009).

Brenner, C. A. et al. Steady state responses: electrophysiological assessment of sensory function in schizophrenia. Schizophr. Bull. 35, 1065–1077 (2009).

Kim, D.-W., Shim, M., Song, M. J., Im, C.-H. & Lee, S.-H. Early visual processing deficits in patients with schizophrenia during spatial frequency-dependent facial affect processing. Schizophr. Res. 161, 314–321 (2015).

Butler, P. D. et al. Sensory contributions to impaired emotion processing in schizophrenia. Schizophr. Bull. 35, 1095–1107 (2009).

Norton, D., McBain, R., Holt, D. J., Ongur, D. & Chen, Y. Association of impaired facial affect recognition with basic facial and visual processing deficits in schizophrenia. Biol. Psychiatry 65, 1094–1098 (2009).

Li, C.-S.R. Impaired detection of visual motion in schizophrenia patients. Prog. Neuropsychopharmacol. Biol. Psychiatry 26, 929–934 (2002).

Notredame, C.-E., Pins, D., Deneve, S. & Jardri, R. What visual illusions teach us about schizophrenia. Front. Integr. Neurosci. 8, 63 (2014).

Livingstone, M. S. & Hubel, D. H. Psychophysical evidence for separate channels for the perception of form, color, movement, and depth. J. Neurosci. 7, 3416–3468 (1987).

Bar, M. et al. Top-down facilitation of visual recognition. Proc. Natl Acad. Sci. USA 103, 449–454 (2006).

Caplette L., Wicker B. & Gosselin F. Atypical time course of object recognition in autism spectrum disorder. Sci. Rep. 6, https://doi.org/10.1038/srep35494 (2016).

Tso, I. F., Carp, J., Taylor, S. F. & Deldin, P. J. Role of visual integration in gaze perception and emotional intelligence in schizophrenia. Schizophr. Bull. 40, 617–625 (2014).

Calderone, D. J. et al. Contributions of low and high spatial frequency processing to impaired object recognition circuitry in schizophrenia. Cereb. Cortex 23, 1849–1858 (2013).

Bradley, E. R. et al. Oxytocin increases eye gaze in schizophrenia. Schizophr. Res. 212, 177–185 (2019).

Streit, M., Wolwer, W. & Gaebel, W. Facial-affect recognition and visual scanning behaviour in the course of schizophrenia. Schizophr. Res. 24, 311–317 (1997).

Loughland, C. M., Williams, L. M. & Gordon, E. Visual scanpaths to positive and negative facial emotions in an outpatient schizophrenia sample. Schizophr. Res. 55, 159–170 (2002).

Toh, W. L., Rossell, S. L. & Castle, D. J. Current visual scanpath research: a review of investigations into the psychotic, anxiety, and mood disorders. Compr. Psychiatry 52, 567–579 (2011).

Watson, A. B. & Pelli, D. G. QUEST: a Bayesian adaptive psychometric method. Percept. Psychophys. 33, 113–120 (1983).

Blais, C., Roy, C., Fiset, D., Arguin, M. & Gosselin, F. The eyes are not the window to basic emotions. Neuropsychologia 50, 2830–2838 (2012).

Adolphs, R. et al. A mechanism for impaired fear recognition after amygdala damage. Nature 433, 68–72 (2005).

Gur, R. E. et al. Flat affect in schizophrenia: relation to emotion processing and neurocognitive measures. Schizophrenia Bull. 32, 279–287 (2006).

Kohler, C. G., Bilker, W., Hagendoorn, M., Gur, R. E. & Gur, R. C. Emotion recognition deficit in schizophrenia: association with symptomatology and cognition. Biol. Psychiatry 48, 127–136 (2000).

Leszczyńska, A. Facial emotion perception and schizophrenia symptoms. Psychiatr. Pol. 49, 1159–1168 (2015).

Daros, A. R., Ruocco, A. C., Reilly, J. L., Harris, M. S. H. & Sweeney, J. A. Facial emotion recognition in first-episode schizophrenia and bipolar disorder with psychosis. Schizophr. Res. 153, 32–37 (2014).

Aronoff, J., Barclay, A. M. & Stevenson, L. A. The recognition of threatening facial stimuli. J. Personal. Soc. Psychol. 54, 647–655 (1988).

Martinez, A. et al. Magnocellular pathway impairment in schizophrenia: evidence from functional magnetic resonance imaging. J. Neurosci. 28, 7492–7500 (2008).

Laprevote, V., Oliva, A., Thomas, P. & Boucart, M. 373 – Face processing deficit in schizophrenia: Seeing faces in a blurred world? Schizophrenia Res. 98, 187 (2008).

Laprevote, V. et al. Low spatial frequency bias in schizophrenia is not face specific: when the integration of coarse and fine information fails. Front. Psychol. 4, 248 (2013).

Delerue, C., Laprévote, V., Verfaillie, K. & Boucart, M. Gaze control during face exploration in schizophrenia. Neurosci. Lett. 482, 245–249 (2010).

Tso, I. F., Mui, M. L., Taylor, S. F. & Deldin, P. J. Eye-contact perception in schizophrenia: relationship with symptoms and socioemotional functioning. J. Abnorm. Psychol. 121, 616–627 (2012).

Claudino R. G. E., de Lima L. K. S., de Assis E. D. B. & Torro N. Facial expressions and eye tracking in individuals with social anxiety disorder: a systematic review. Psicol: Reflex Crít. 32, https://doi.org/10.1186/s41155-019-0121-8 (2019).

Habel, U. et al. Neural correlates of emotion recognition in schizophrenia. Schizophr. Res. 122, 113–123 (2010).

Kucharska-Pietura, K., David, A. S., Masiak, M. & Phillips, M. L. Perception of facial and vocal affect by people with schizophrenia in early and late stages of illness. Br. J. Psychiatry 187, 523–528 (2005).

Leung, J. S.-Y., Lee, T. M. C. & Lee, C.-C. Facial emotion recognition in Chinese with schizophrenia at early and chronic stages of illness. Psychiatry Res. 190, 172–176 (2011).

Faghel-Soubeyrand, S., Dupuis-Roy, N. & Gosselin, F. Inducing the use of right eye enhances face-sex categorization performance. J. Exp. Psychol. Gen. 148, 1834–1841 (2019).

DeGutis, J., Cohan, S. & Nakayama, K. Holistic face training enhances face processing in developmental prosopagnosia. Brain 137, 1781–1798 (2014).

First, M. B., Spitzer, R. L., Gibbon, M. & Williams, J. B. W. The structured clinical interview for DSM-III-R personality disorders (SCID-II). Part I: description. J. Personal. Disord. 9, 83–91 (1995).

Lukoff, D., Liberman, R. P. & Nuechterlein, K. H. Symptom monitoring in the rehabilitation of schizophrenic patients. Schizophr. Bull. 12, 578–602 (1986).

Ventura J., Green M. F., Shaner A. & Liberman R. P. Training and quality assurance with the Brief Psychiatric Rating Scale: “the drift busters”. Int. J. Methods Psychiatr. Res. https://psycnet.apa.org/record/1994-27973-001 (1993).

Davidson, J. R. et al. The Brief Social Phobia Scale: a psychometric evaluation. Psychol. Med. 27, 161–166 (1997).

Blais, C., Fiset, D., Roy, C., Saumure Régimbald, C. & Gosselin, F. Eye fixation patterns for categorizing static and dynamic facial expressions. Emotion 17, 1107–1119 (2017).

Burt, P. & Adelson, E. The Laplacian pyramid as a compact image code. IEEE Trans. Commun. 31, 532–540 (1983).

Tardif, J. et al. Use of face information varies systematically from developmental prosopagnosics to super-recognizers. Psychol. Sci. 30, 300–308 (2019).

Royer, J. et al. Greater reliance on the eye region predicts better face recognition ability. Cognition 181, 12–20 (2018).

Rutishauser, U. et al. Single-neuron correlates of atypical face processing in autism. Neuron 80, 887–899 (2013).

Ince, R. A. A. et al. The deceptively simple N170 reflects network information processing mechanisms involving visual feature coding and transfer across hemispheres. Cereb. Cortex 26, 4123–4135 (2016).

Chauvin, A., Worsley, K. J., Schyns, P. G., Arguin, M. & Gosselin, F. Accurate statistical tests for smooth classification images. J. Vis. 5, 1 (2005).

Acknowledgements

NSERC Discovery grant (04777-2014) to F.G.; NSERC and FRQNT graduate scholarships to S.F.-S. We thank Antoine Pennou for his help in data recording.

Author information

Authors and Affiliations

Contributions

F.G., T.L., and S.F.-S. designed the experiment. F.G and S.F.-S. programmed the experimental procedure. S.F.-S. supervised data recording for patients and controls, wrote the analysis scripts, created the figures and wrote the first draft of the manuscript. M.L., S.P., A.A.-B., M.V., M.A.B., and T.L. supervised patients’ recruitment. All authors provided substantial input in the interpretation of the results and in writing and reviewing the manuscript. All authors approved the final version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Faghel-Soubeyrand, S., Lecomte, T., Bravo, M.A. et al. Abnormal visual representations associated with confusion of perceived facial expression in schizophrenia with social anxiety disorder. npj Schizophr 6, 28 (2020). https://doi.org/10.1038/s41537-020-00116-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41537-020-00116-1

This article is cited by

-

The Eyes Have It: Psychotherapy in the Era of Masks

Journal of Clinical Psychology in Medical Settings (2022)