Abstract

In the literature, there are substantial machine learning attempts to classify schizophrenia based on alterations in resting-state (RS) brain patterns using functional magnetic resonance imaging (fMRI). Most earlier studies modelled patients undergoing treatment, entailing confounding with drug effects on brain activity, and making them less applicable to real-world diagnosis at the point of first medical contact. Further, most studies with classification accuracies >80% are based on small sample datasets, which may be insufficient to capture the heterogeneity of schizophrenia, limiting generalization to unseen cases. In this study, we used RS fMRI data collected from a cohort of antipsychotic drug treatment-naive patients meeting DSM IV criteria for schizophrenia (N = 81) as well as age- and sex-matched healthy controls (N = 93). We present an ensemble model -- EMPaSchiz (read as ‘Emphasis’; standing for ‘Ensemble algorithm with Multiple Parcellations for Schizophrenia prediction’) that stacks predictions from several ‘single-source’ models, each based on features of regional activity and functional connectivity, over a range of different a priori parcellation schemes. EMPaSchiz yielded a classification accuracy of 87% (vs. chance accuracy of 53%), which out-performs earlier machine learning models built for diagnosing schizophrenia using RS fMRI measures modelled on large samples (N > 100). To our knowledge, EMPaSchiz is first to be reported that has been trained and validated exclusively on data from drug-naive patients diagnosed with schizophrenia. The method relies on a single modality of MRI acquisition and can be readily scaled-up without needing to rebuild parcellation maps from incoming training images.

Similar content being viewed by others

Introduction

Despite decades of research, there are no precise and reliable etiopathophysiological markers for major psychiatric conditions.1 Impeding factors range from inherent challenges in studying complex genetic disorders2 to weakly established neural bases for cognition, experience and behaviour.3,4 However, a part of the problem is a mismatch between current diagnostic standards for psychiatric illnesses and observations emerging from basic systems and behavioural neuroscience research.5 Recognized biological heterogeneity, also adds to the difficulty of identifying reliable biological markers associated with these conditions.6 Treatments for psychiatric disorders have emerged largely as a result of serendipitous observations7 with an unfortunate range of side-effects8 and this may be why mortality and prevalence rates associated with psychiatric illnesses have not decreased in past years,9 as compared to other medical conditions such as certain types of cancer10 or heart diseases.11

In particular, the underlying pathophysiology of schizophrenia, a severe and debilitating psychotic illness, still remains elusive, with few established consistent findings.12 Currently objectively measurable diagnostic tests for schizophrenia13 are lacking, and the reliability of diagnoses based on observable signs and symptoms leaves room for improvement.5 Further, there is marked heterogeneity within clinical manifestations of ‘schizophrenia’ as well as considerable overlap with other psychiatric diagnoses, leading many to question the validity of a singular disease entity.14

In this context, applying machine learning techniques to MRI data has the potential to provide an objective and evidence-based approach for identification and management of schizophrenia.15,16 Machine-learned MRI models have the potential to identify biological markers and delineate symptom clusters. Recently, an increasing number of studies have attempted to classify schizophrenia (vs. healthy controls) based on functional alterations in resting-state brain patterns (Table 1, see supplementary materials for more description of these studies).

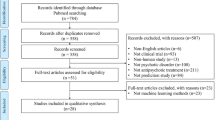

Most earlier studies assessed patients already undergoing treatment, which means their fMRI scans were confounded with antipsychotic drug effects17 – hence, those scans did not correspond to the point of first medical contact, and so may not lead to optimal diagnostic models. Further, diagnostic models obtained from larger datasets (more than 100 subjects) have classification accuracies well below 80% (Fig. 1). Many have observed this phenomenon: “smaller-N studies reach higher prediction accuracy of schizophrenia with neuroimaging data”.18 Even with higher cross-validated accuracy, the smaller samples likely do not capture the heterogeneity of the disease, which suggests that these models will not generalize well to unseen cases.

Negative correlation between sample size and cross-validated (CV) accuracy for predicting schizophrenia using resting-state brain patterns (studies cited in Table 1)

Many of these studies first parcellate the whole brain resting-state information into spatial regions that are considered homogeneous. However, with the increasing number of parcellation methods and atlases now available, the choice of which parcellation to use seems rather arbitrary. These methods can vary widely in principle and can be based on (a) pre-defined ontology of brain structures such as post-mortem cytoarchitecture,19,20 sulco-gyral anatomy,21,22 anatomical connectivity using diffusion imaging23,24 or (b) data-driven modelling of the functional features in the BOLD signal from resting-state25 or task-based fMRI26,27 or even meta-analyses28,29 using analytical techniques such as hierarchical clustering30 or independent components analysis.31 The quality of the brain network obtained and the downstream predictive model may be largely influenced by the selection of the atlas or parcellation used.32,33 Brain segmentations based on these parcellation schemes not only provide a way to reduce the dimensionality of fMRI data but can also provide an elegant way to incorporate prior neurobiological knowledge to ‘refine’ the features. However, to date, there has been no investigation on whether combined learning from multiple predefined parcellation schemes can provide better performance for diagnostic prediction of schizophrenia.

In this study, we eliminated the potential confound of antipsychotic treatment by using resting state fMRI data collected from a cohort of antipsychotic-naive schizophrenia patients (N = 81) as well as age- and gender-matched healthy controls (N = 93). The aim of our study was to improve accuracy for diagnostic prediction, compared to results reported in the literature, by designing a feature creation and learning pipeline that incorporates prior knowledge of neuroanatomy and neurophysiology. Our overall model involves stacking predictions from several single-source models, each based on the specific set of features related to regional fMRI activity and functional connectivity, and a specific a priori parcellation scheme. We demonstrate that our ensemble model yields a classification accuracy of 87% (vs. 53% chance), which is better than any standard single-source model considered in the study. To the best of our knowledge, (1) the performance of our model, based on 174 subjects, outscores earlier machine learning models built for diagnosing schizophrenia using resting-state fMRI measures that have been learned from datasets of N > 100 subjects; and (2) this is the only such classification model that has been built and validated exclusively on never-treated schizophrenia cases.

Our method relies on a single modality of data acquisition for neuroimaging and is easily scalable as it uses a set of pre-defined atlases—i.e., it does not rely on data-driven brain parcellation methods, such as group-independent component analysis.

Results

We show below that (a) our EMPaSchiz ensemble learner, which learns a combination of learned classifiers, each trained on its own neuroimaging feature extractions and brain parcellation schemes, produces a classifier that can predict schizophrenia more accurately than any of the individual predictors (that used just a single feature/parcellation combination). (b) Within this ensemble prediction framework, even a very small fraction of features (as low as top 0.5% selected via univariate tests) can still provide high prediction accuracy (>80%). (c) This learning framework can also produce models that can distinguish clinically symptomatic versus non-symptomatic patients, with moderate accuracy.

Table 2 presents the 5 × 10-fold cross-validation prediction performance of the various learners in EMPaSchiz. Majority class baseline accuracy for schizophrenia prediction (declaring every subject to be control) was 53.4% (93 controls of 174 total subjects). These accuracy values are plotted in Fig. 2. Stacked models with neuroimaging features that are regional—viz., ALFF, fALFF and ReHO—had accuracies in the range of 74 to 76%, while the ones based on functional connectivity—viz., FC-Correlation, FC-partial correlation, FC-precision—showed better performance with 79 to 84% accuracy. The final ensemble model EMPaSchiz (stacked-multi) showed the best performance with accuracy of 87%, sensitivity of 80%, specificity of 93% and precision of 92%, each with standard errors of 1–2%. This accuracy of stacked-multi was significantly better than second best stacked model (stacked-FC-precision at 84%, t-test, p = 0.03).

Comparison of 5×10-fold cross-validation prediction accuracies for stacked learners in EMPaSchiz model. The comprehensive ensemble of EMPaSchiz “stacked-multi” is shown in red. Stacked-multi shows the best performance and performs significantly better than all other stacked models (all p < 0.05). The dotted line is the majority class baseline predictor. SEM standard error of mean

Figure 3 shows a comparative profile of accuracies for various SSM predictors along with EMPaSchiz stacked models. (Supplementary material provides results in tabular format as well as plots of comparisons limited to specific feature types. It also provides results for various ensemble learners that were stacked parcellation-wise.) Prediction accuracies for SSM ranged from 52% (FC-precision with harvard_sub_25) to 83% (FC-precision with basc_multiscale_444) and averaged overall at 73%. In general, basc_multiscale atlases showed better performance than the others. For instance, accuracies of EMPaSchiz stacked models were comparable to basc_multiscale_197 models for FC-correlation at 82% and for FC-partial correlation at 79%.

We examined the effect of feature selection using top-r percentage of total features based on a univariate test, of r percentile of the highest F-value scores, for r = 0.5%, 1%, 2%, 5%, 10%, 20%, 30%, as well as “all regional features +30% connectivity features” (we chose this combination as, for any given parcellation, the number of regional features was much less than that of connectivity features), and all features (no feature selection). Note that each “setting” is applied to all 84 SSMs. Figure 4 shows the comparative profile of model performances with varying levels of r for top-r percentage of features, along with original EMPaSchiz (stacked-multi) model where feature reduction was done using PCA. (Supplementary materials provide results in tabular format as well as additional plots of comparisons of feature selection methods for SSM and MSM models.) Using all features (r = 100%, i.e., no selection/reduction) showed accuracy of 85% (which was slightly poorer than PCA reduced features at 87% but was not a statistically significant difference) and accuracy declined only slightly when r was reduced gradually to as low as 0.5. It is noteworthy that with only 0.5% of top features, our ensemble prediction framework still showed a high prediction accuracy of 82%.

Patients with schizophrenia in our sample showed a range of psychopathological symptom severity, as measured using the clinical scales SANS for negative symptoms (integer values from 0 to 110) and SAPS for positive symptoms (integer values from 8 to 55). We used the first and last quartile of these scales to categorize the 20 least, and the 20 most, severely symptomatic patients. We then used our ensemble prediction framework in leave-one-out cross-validation setup to predict the high-symptomatic patients against non/low-symptomatic ones (majority class baseline accuracy of 50%). We used leave-one-out cross-validation (rather than 10-fold) to deal with low number of subjects (N = 40) that were available for this analysis. Prediction accuracy for stacked-multi model was 73.2% for SANS and 61.9% for SAPS of schizophrenia psychopathology.

To identify some of the key pathological alterations in our schizophrenia sample, we estimated the reliability of a feature’s importance for diagnostic prediction, similar to the approach used by an earlier neuroimaging study34 – sorting the features by their respective mean logistic regression weight divided by its standard error for each feature in a particular learned SSM generated during 50 folds of cross-validation. (This was performed with raw ROI data, without any PCA transformations.) Fig. 5 (respectively Fig. 6) highlight some of the top-most ( > 98 or 99th percentile) reliable features using representative atlases for regional resting state measures (respective connectivity).35 However, given the complexity of our ensemble model (which recall is based on 84 SSM), these depictions should be considered just representative in nature, and cannot be claimed as the ‘only’ important features in the model.

Key pathological alterations in schizophrenia suggested by top-most reliable features—elevated (red) and suppressed (blue) changes in regional activity. Panels show top 98th percentile of top regional features. a Higher ALFF in right caudate and right superior temporal pole (aal). b Higher ALFF in lateral aspect of left superior temporal gyrus and horizontal ramus of the right lateral sulcus, and lower ALFF in left posterior-dorsal cingulate gyrus (destrieux). c Higher fALFF in left putamen, right caudate and lower fALFF in right anterior cingulum (aal). d Higher ReHo in left superior temporal pole, right inferior temporal gyrus, and lower ReHo in left inferior parietal lobule and right superior temporal gyrus (basc_multiscale_197)

Key pathological alterations in schizophrenia suggested by top-most reliable features—network edges show elevated (red) and suppressed (blue) changes in functional connectivity. Panels show top 99th percentile of top functional connectivity features using dosenbach and msdl atlases. a Decreased functional connectivity between regions—left ventral frontal cortex and left lateral cerebellum, left occipital and left angular gyrus, left middle insula and right fusiform gyrus, and lastly left post parietal cortex with three nodes namely right frontal gyrus, left parietal, left precentral gyrus. Increased interhemispheric functional connectivity between left superior frontal gyrus and the right anterior insula. b Decreased functional connectivity between regions—striatum and posterior occipital lobe, right intraparietal sulcus and right frontal pole, ventral anterior cingulate cortex and medial default mode network, left temporo-parietal junction and right parietal cortex, right superior temporal sulcus and Broca’s area. Increased functional connectivity between regions—right anterior insula and striatum, right insula and left auditory cortex, and left anterior intraparietal sulcus and posterior occipital lobe

The pattern of functional connectivity changes (Fig. 6) indicates robust hypo-connectivity between the frontoparietal network (such as post parietal) and the sensorimotor network (such as frontal, parietal, precentral gyrus) with widespread hypo-connectivity in language (e.g.: Broca), attention (e.g.: frontal pole, parietal) and default mode network (e.g.: angular, fusiform gyrus). On other hand, the auditory network as well as the anterior insula, which is implicated in high-level cognitive control, attentional processes and saliency,36 show hyper-connectivity. Similarly, the overall picture (Fig. 5) shows increased regional low frequency activity in the superior temporal gyrus and basal ganglia structures - caudate, putamen, and reduced regional activity in cingulum.

Discussion

This study aimed to build a machine learned classifier for diagnosing schizophrenia that depends on a single neuroimaging modality of acquisition - resting state fMRI. Resting state fMRI is a popular imaging method and possibly better than task-based fMRI, since the latter depends on experimental parameters that require standardization. Further, resting state fMRI is not limited by participants’ attention or cognitive ability to perform a task and hence is applicable to patients with more pronounced disabilities.37

Several recent studies have built diagnostic models using data from patients receiving antipsychotic drug treatment (see Table 1). However, antipsychotics are known to affect brain activity and function,38,39 and a recent study cautions against the practice of interpreting brain changes in a medicated state, noting it might not be related to the pure pathology of schizophrenia.17 We developed the model presented in this study on a sample of never-treated schizophrenia patients, to make our results directly apply to realistic clinical scenarios of diagnosis at first clinical presentation. Further work will be necessary to examine how this may generalize to medicated patients, as well as other confounds, such as multi-site batch effects, remains to be examined.40 It is notable, however, that non-medicated patients are an important group for analysis and represent, perhaps, the most difficult sample for recruitment. In this way our study provides a very important sample to demonstrate the value of our approach.

With respect to diagnostic accuracy of schizophrenia, Schnack and others have observed that smaller sample studies may reach high prediction accuracy at the cost of lower generalizability to external samples -- an effect attributed to clinical heterogeneity, physiological variation, sampling noise and errors in diagnosis.18 In our outline of recent literature on machine learning studies with resting-state fMRI (see the Introduction section), we also observed this relation (see Fig. 1). Nevertheless, our ensemble model outscores earlier models built for diagnosing schizophrenia using resting state fMRI measures, even though it was learned from a large sample. We believe this may be because our feature creation process incorporates prior rich neurobiological knowledge with simultaneous use of regional and connectivity measures that are jointly extracted over various biologically-informed brain atlas schemes. We demonstrate that if we employ standard machine learning pipelines (called SSM here) on this dataset of untreated patients, we obtain a level of performance ( < 80% accuracy) that is similar to the results reported widely in earlier studies with comparable sample sizes. Hence, these drug-naive cases are unlikely to be ‘easier’ to model than standard treated cases. Our results provide encouraging progress toward deploying automated or semi-automated diagnostic systems based on neuroimaging and predictive models in psychiatric clinics. However, the performance of our model is favoured by the fact that the entire sample in this study comes from a single site, meaning it does not need to deal with the challenges of cross-site generalizability and site-specific effects. Future clinical studies with larger cohorts, preferably from multiple clinical sites, would be necessary to justify clinical deployment.

Our EMPaSchiz model used brain parcellations that were based on prior knowledge of anatomy / cytoarchitecture or statistical maps extracted from correlation structure in fMRI data collected and analysed in earlier studies. Hence, these maps might not perfectly adapt to signals in the individual subject images – which might not be an issue for data-driven parcellation or clustering techniques. Our study neither explored that option, nor compared model performance empirically, with features obtained with these two alternative methodologies. However, use of pre-existing parcellations reduces chances of overfitting, and possibly increases the robustness of the resulting model. Note also that these a priori ROIs incorporate nicely biological knowledge of fMRI data into the feature creation process, which can help interpretation of results, and provide an effective way to reduce dimensionality. Our model may be readily scaled-up with relatively little computation, as it does not need to build parcellation maps from incoming training images.

It is often challenging to provide a biological interpretation of complex machine learning models, as the goal of the learning process is to find a model that maximizes prediction performance, which may require (possibly non-linear) combinations of thousands of features. In this study, we produced an effective classifier by seeking the coefficients for the features that collectively optimize the predictive accuracy. In general, such coefficients need not correspond to the inherent correlation of each individual feature with the outcome. This is especially true in our approach of using multiple parcellations of the brain, as this means the “features” will overlap to a large degree. This can be seen as potential limitation for the interpretation of our model. We provide only a snapshot of some representative changes in patient’s brain, showing only the most reliable resting state features; features that, alone, may be neither necessary nor sufficient to obtain the prediction performance of the reported ensemble model. However, several of these brain networks and regions were observed to be altered consistently in schizophrenia.41,42,43

Functional connectivity aberrations observed in our study are consistent with the dysconnectivity hypothesis of schizophrenia.44 This theoretical framework describes schizophrenia as a dysconnection syndrome linking aberrations at the level of synapse with the abnormalities in the long-range connectivity of several brain networks.45 A vital component of the dysconnectivity hypothesis is proposed aberrant connectivity between prefrontal cortex and other brain regions, which is posited to give rise to key symptoms such as delusions and hallucinations.46 A systematic review of fMRI studies on functional connectivity supports reduction in brain region connectivity in subjects with schizophrenia, especially reductions involving prefrontal cortex,47 in agreement with our observations. Our findings of concurrent hyper-connectivity among some regions is also consistent with earlier reports of increased functional connectivity in schizophrenia.48 Another core postulate of the dysconnectivity hypothesis is that modulation of synaptic efficacy with resultant fronto-temporo-parietal aberrations leads to hallucinations / delusions in schizophrenia.49 The hypothesized synaptic efficacy aberrations may be linked to NMDA receptor abnormalities.49 In this context it is of interest that effects on temporoparietal-prefrontal circuitry through transcranial Direct Current Stimulation (possibly via NMDA-dependent mechanisms50) has been shown to ameliorate severity of auditory hallucinations,51,52 possibly through “correction” of functional dysconnectivity.53 It is likely that further systematic application of machine learning techniques to analysis of brain connectivity may be useful for developing prognostic markers for schizophrenia that might predict differential responses to clinical interventions.

A general conceptual limitation of machine learning studies in psychiatry is that the diagnostic labels might themselves be ill defined. Amidst an ever-expanding volume of research data, inconsistencies in neurobiological findings fuel doubts about the validity of the currently defined disease construct of schizophrenia. This might be an issue inherent in psychiatric practice, which contributes to low reliability of diagnosis with nosology such as the DSM criteria. The work reported here may indicate a useful step towards more biological informed diagnoses, as it involves developing algorithms to predict current psychiatric diagnoses based on objective neurobiological features. This approach could also provide us with a framework for evaluating the validity of clinical diagnoses. Lastly, our empirical results show that multi-parcellation ensemble learning models may effectively learn models for early diagnosis of schizophrenia; we anticipate that this approach may work for other psychoses, and for prediction of treatment responses.

Methods

Subjects

This study examined 92 patients attending the clinical services of the National Institute of Mental Health & Neurosciences (NIMHANS, India), who fulfilled DSM-IV criteria for schizophrenia and were never treated with any psychotropic medications including antipsychotics. The diagnosis of schizophrenia was established using the Mini International Neuropsychiatric Interview (MINI) Plus,54 which was confirmed by another psychiatrist through an independent clinical interview. The details related to illness onset and antipsychotic-naive status were carefully ascertained by reliable information obtained from at least one additional adult relative. The Scale for Assessment of Positive Symptoms (SAPS) and Scale for Assessment of Negative Symptoms (SANS) were used to measure psychotic symptoms.55 Clinical assessments and MRI were performed on the day before starting antipsychotics.

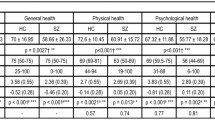

Controls were recruited from among the consenting healthy volunteers from the same locale to match for age and sex. We used 102 age- and sex-matched healthy volunteers, who were screened to rule out any psychiatric diagnosis using the MINI as well as a comprehensive mental status examination. For both cases and controls, we recruited only right-handed subjects to avoid the potential confounds of differential handedness. None of the study subjects had contraindications to MRI or medical illness that could significantly influence CNS function or structure, such as seizure disorder, cerebral palsy, or history suggestive of delayed developmental milestones. There was no history suggestive of DSM-IV psychoactive substance dependence or of head injury associated with loss of consciousness longer than 10 min. No subjects had abnormal movements as assessed by the Abnormal Involuntary Movements Scale. Pregnant or postpartum females were not included. The supplementary material provides a table with details of demographic and clinical profile of 174 subjects who qualified to be included in the study. (See details on excessive head movement in the ‘Image pre-processing’ section)

The catchment area for the subject recruitment involved the southern states of India. We obtained informed written consent after providing a complete description of the study to all the subjects. The NIMHANS ethics committee reviewed and approved the original research protocol. The Research Ethics Board at University of Alberta, Edmonton approved the secondary analysis of archived data.

Image acquisition

Magnetic Resonance Imaging (MRI) was done in a 3.0 Tesla scanner (Magnetom Skyra, Siemens). Resting State Functional MRI: BOLD (Blood Oxygen Level Dependent) sensitive echo-planar imaging was obtained using a 32-channel coil for a duration of 5 minutes 14 s, yielding 153 dynamic scans. The scan parameters were: TR = 2000ms; TE = 30ms; flip angle = 78°; Slice thickness = 3 mm; Slice order: Descending; Slice number = 37; Gap = 25%; Matrix = 64 × 64 × 64 mm3, FOV = 192 × 192, voxel size = 3.0 mm isotropic. Subjects were asked to keep their eyes open during the scan. For intra-subject co-registration, structural MRI: T1-weighted three-dimensional high-resolution MRI was performed (TR = 8.1 msec, TE = 3.7ms, nutation angle = 8°, FOV = 256 mm, slice thickness = 1 mm without inter-slice gap, NEX = 1, matrix = 256 × 256) yielding 165 sagittal slices.

Image pre-processing

We performed pre-processing and feature extraction using MATLAB (The MathWorks, Inc) toolboxes including Statistical parametric mapping (SPM8, http://www.fil.ion.ucl.ac.uk/spm), Data Processing Assistant for Resting-State fMRI (DPARSF)56 as well as Python toolboxes including the nilearn package57 based on scikit-learn, a Python machine learning library.58 We checked acquired images visually for artefacts such as incomplete brain coverage or ghosting; then re-orientated the origin to the anterior commissure in structural MRI and fMRI images. The first ten volumes of each functional time-series were discarded as they were before the time required for the scanner field to reach steady magnetization, and for the participants to adapt to scanning noise. Images were then pre-processed with slice-timing correction, image realignment to correct for motion, and intensity normalization. Since head movement may lead to group-related differences,59,60,61 we excluded images for 11 patients and 9 controls from the study based on excessive head movement (translational > 2.0 mm and/or rotational > 2°).62 This yielded a total of 174 subjects: 93 controls and 81 patients. Functional images were co-registered with the structural image and then normalized to MNI space resampled to 3×3×3 mm3. Nuisance regression was performed to remove noise in the signal induced by head motion using 24 regressors derived from the parameters estimated during motion realignment, scanner drift using a linear term, as well as global fMRI signals from white matter and cerebrospinal fluid segments using SPM’s new segment method.63 Normalized images were smoothed, detrended and band-pass filtered as appropriate—depending on the feature to be extracted, see details below.

Feature extraction

To obtain neurobiologically relevant features, we projected each resting brain information into 14 different parcellations, each based on a specific a priori defined atlas or set of regions of interest (ROIs). Our goal here was to jointly learn from this entire set of neuroimaging features extracted through several brain parcellation schemes to obtain an accurate model; n.b., we are neither trying to compare nor evaluate the influence of any single feature type or ROI definition on prediction accuracy. Our goal is to produce a predictive model whose validation is only its predictive accuracy.

We used the following 14 pre-defined brain parcellation schemes:

-

yeo: intrinsic functional connectivity of cerebral cortex25

-

smith20, smith70: functional networks during activation and rest (at two different resolutions)26

-

harvard_cort_25, harvard_sub_25: Harvard-Oxford cortical and subcortical parcellation (http://www.cma.mgh.harvard.edu/fsl_atlas.html)

-

msdl: multi-subject dictionary learning for functional parcellation64

-

aal: macroscopic anatomical parcellation of single-subject brain65

-

basc_multiscale_122, basc_multiscale_197, basc_multiscale_325 and basc_multiscale_444: multi-level bootstrap analysis of stable clusters in resting-state fMRI, at four different resolutions66

-

destrieux: sulcal depth-based anatomical parcellation of the cerebral cortex67

-

dosenbach: multivariate pattern analysis of functional connectivity28

-

power: graph measures of functional brain organization68

For each of these 14 parcellation schemes, we extracted 3 regional-based and 3 connectivity-based resting brain fMRI features. For regional features, we used:

-

ALFF: amplitude of frequency fluctuations

-

fALFF: fractional ALFF

-

ReHo: regional homogeneity

We smoothed each functional image using a 4 mm FWHM gaussian kernel (except for extraction of ReHo - to avoid overestimation of spatial homogeneity) and band-pass-filtered fMRI time-courses at 0.01–0.08 Hz to capture slow fluctuations that are believed to reflect spontaneous brain activity.69,70 ALFF was calculated as total power within the frequency range between 0.01 and 0.08 Hz to estimate the strength of low frequency oscillations.71 fALFF was calculated as power within the low-frequency range (0.01–0.08 Hz) divided by the total power in the entire detectable frequency range.69 Lastly, ReHo was calculated using Kendall’s coefficient of concordance,72 as a measure of the similarity between the time series of a given voxel and its nearest neighbours.73

We calculated each of these features at the voxel level using the DPARSF toolbox, standardized and then averaged over an ROI. For each ROI, we ran a nuisance regression across the features to remove the effects of confounding variables that are generally recommended and commonly reported in neuroimaging research—age, sex, and total intracranial volume.74 In addition, we also used average framewise displacement to (at least partially) counter systematic yet spurious correlations in functional connectivity that may arise from subject motion.59

We also computed connectivity features with each of the 14 parcellations, by extracting average time series per ROI and then estimating functional connectivity matrices between each pair of regions using one of three statistical measures

-

Pearson correlation

-

partial correlation

-

precision

In each case, the feature vectors were the flattened lower triangular part of these symmetric matrices.

We chose to study the above features as earlier literature established their relevance to schizophrenia pathology. Abnormalities in low-frequency oscillations70,75 and regional homogeneity of blood-oxygen-level-dependent signals76,77 have been well documented in schizophrenia. Further, patients diagnosed with schizophrenia have exhibited changes in functional brain connectivity, as revealed through distant correlations.77,78 In addition to simple Pearson correlation, we described the connectivity structure using partial correlation, which measures the interactions between two ROIs. We use a sparse precision matrix—i.e., the sparse inverse of the covariance matrix—which reveals the brain regions that appear conditionally independent given all other brain regions.79

So, in total, our approach ‘Ensemble algorithm with Multiple Parcellations for Schizophrenia prediction’, abbreviated as: EMPaSchiz (read as ‘Emphasis’) – modelled 84 sources of data (14 parcellation schemes×(3 + 3) feature types) per subject; these descriptions ranged in size from 17 to 98,346 values. We used appropriate masker classes57 to summarize brain signals from non-overlapping clusters (e.g.: basc_multiscale) or overlapping networks (e.g., smith) or spheres centred at seeds with fixed small radius (e.g.: power). Table 3 presents the total number of features per data source. (The supplementary material presents visualizations of a few representative parcellations, overlaid over an MRI slice.)

Prediction and evaluation framework

EMPaSchiz produced a classifier from our multi-source data, in two levels. For the first level, EMPaSchiz trained 84 different L2-regularized logistic regression classifiers, using the ‘liblinear’ solver80 – one for each individual data source to predict the diagnosis; we consider each to be a single-source model (SSM). For the second level, EMPaSchiz then trained a single L2-regularized logistic regression model to take the prediction probabilities computed by each SSM, to predict the schizophrenia-vs-normal label; hence, this is a multi-source model (MSM). Figures 7 and 8 show schematic representations of our prediction and evaluation framework. These computations were performed using the scikit-learn package36 and mlxtend extensions.81

Figure 7a shows performance of learned EMPaSchiz-Performance model. Given a resting state fMRI time series for a subject, the EMPaSchiz-Performance first extracts 6 different feature types (F1 to F6; coded here with different fill colours) over each of 14 brain parcellation schemes (P1 to P14; coded here with border colour) to obtain 84 feature sets (FS1,1 to FS6,14). Each is given to a “single-source model” (SSM), which is a learned logistic regression (LR) classifier of the PCA-projection of that data with learned parameter θi,j (i.e, θ1,1 to θ6,14 each correspond to a specific feature set) trained to predict schizophrenia. This produces a vector of the resulting 84 prediction probability values (P1,1 to P6,14)—one from each LR—which is given to a final trained LR classifier with learned parameter θ*,*. The final prediction probability P*,* is used to predict whether the given subject is “schizophrenia” or “normal”. We also considered 6 other multi-source models, with learned parameters θ1,* to θ6,*—one for each feature type.

Figure 7b, c shows the process for learning the EMPaSchiz-Performance model. The EMPaSchiz-Learner first learns 84 different single-source models SSMi,j: For the ith feature type (i = 1..6) and the jth parcellation (j = 1..14), EMPaSchiz-Learner computes the (i,j)-feature set for the resting state fMRI time series for each of the K labelled subjects in training set, to obtain the feature sets FS*i,j = { FSk i,j } over k = 1..K. It then trains a regularized logistic regression (LR) model θi,j to predict schizophrenia, from each feature set FS * i,j, where the regularization strength C is obtained using internal CV. For example, θ3,12 is learned by fitting LR on FS*3,12 (which corresponds to the 3rd feature type: ReHo with the 12th parcellation: destrieux). After learning all the 84 SSM parameters {θi,j} in this manner, EMPaSchiz-Learner as shown in Fig. 7c, then runs each of these 84 resulting SSMs on each of the K training instances; this produces a new training set P = {P k i,j }, where P ki,j is the probability produced by running the (i,j)-th SSM predictor, with learned parameter θi,j, on the k-th instance. It then learns the multi-source model (MSM) by training the regularized logistic regression (LR) on the set P to predict schizophrenia. This produces the parameter θ*,*. Similarly, six other MSMs θ1,* to θ6,* are learned by training LR with each set P *1,j = {P k 1,j } over k = 1..K, j = 1..14 to P * 6,j = {P k 6,j } over k = 1..K, j = 1..14.

In more detail: EMPaSchiz first used singular value decomposition of each data source to project it to a lower dimensional space. We extracted principal components from the training instances, then projected each instance onto the eigenvectors (PCA). We used all the components—i.e., set the number of principal components to the smaller of the number of original features or the number of instances. Note these components captured all the variance, but reduced the dimensionality by a huge factor, for most datasets, as the final number of features for each data source was at most the number of instances in training set (~157 subjects in our 10-fold cross-validation). For the few data sources that had fewer features than training instances (e.g., yeo-regional has 17 features), this transformation would not change the number of features, but changed the data to a new basis. The motivation for this procedure was to have a uniform pipeline of PCA transformations for all data sources, irrespective of the varying number of features.

Schematic representation for evaluation of the model with cross-validation. We use 5×10-fold cross-validation to evaluate each learner (the original EMPaSchiz-Learner, and its six variants). Here, we first divide the entire dataset of 174 subjects into 10 sets; we then use 9/10 of them to train the EMPaSchiz model (see Fig. 7b, c); we then run that model on the remaining 1/10 of the data (see Fig. 7a). We then compute accuracy as the number of correctly labelled instances, over all 10 folds, and use this as an estimate of the score of the learned EMPaSchiz -Performance system. We run this entire process five times—over five different partitionings and compute the overall accuracy of predictions over these 50 train-test splits. Trained models are depicted in green lines and predictions are depicted in red lines

For SSM, EMPaSchiz set the C parameter (inverse of regularization strength) by internal 10-fold cross-validation on the training split (5 shuffled iterations). We call the MSM that combined predictions from all 14 x 6 = 84 SSMs, ‘stacked-multi’. We also considered six other versions of MSM, each combining SSMs for a specific feature type (14 each): stacked-ALFF, stacked-fALFF, stacked-ReHo, stacked-FC-correlation, stacked-FC-partial correlation, stacked-FC-precision.

The EMPaSchiz model was evaluated in five shuffled iterations of a 10-fold balanced cross-validation approach (90% training set, 10% test set; for a total of 50 train-test splits). We evaluated the model’s generalization performance on the test set (in outer cross-validation), computing:

-

accuracy (Overall, how often is the classifier correct?)

-

sensitivity (When the actual label is ‘patient’, how often is the prediction correct?)

-

specificity (When the actual label is ‘control’, how often is the prediction correct?)

-

precision (When the predicted label is ‘patient’, how often is the prediction correct?)

For each variant, we report the mean and standard errors for these metrics over all 50 train-test splits. To compare MSM models, we used parametric statistical tests (two sided t-test) on the accuracy, using the SciPy package.82

We also performed two additional analyses. First, we explored the effect of feature selection with respect to SSM, using the top-r percentage of the total set of features, based on univariate testing (F-value score) on the model performance. For example, when r = 20%, the EMPaSchiz-Learner would use only 20% of the original features, in each of its 84 SSMs. Note this is instead of using PCA. (So, for the regional features of the ‘aal’ parcellation, instead of using all 116 features, it only considered the top 0.2 × 116 = 23 features, etc.) While computing the cross-validation scores, we ran the feature selection process ‘in fold’ using the ‘pipeline’ class of scikit-learn58 to avoid obtaining optimistically biased estimates. Second, we examined our ensemble prediction framework to distinguish the least symptomatic schizophrenia patients vs. the most symptomatic patients (based on SAPS and SANS); evaluated using leave-one out cross-validation.

DATA AVAILABILITY

The datasets generated during and/or analysed during the current study as well as relevant computer codes that were used to process the data and to generate the results are available from corresponding authors on a reasonable request.

References

Thomas, R. I. The NIMH Research Domain Criteria (RDoC) Project: precision medicine for psychiatry. Am. J. Psychiatry 171, 395–397 (2014).

Gejman, P. V., Sanders, A. R. & Kendler, K. S. Genetics of schizophrenia: new findings and challenges. Annu. Rev. Genom. Hum. Genet. 12, 121–144 (2011).

Sass, L. A. & Parnas, J. Schizophrenia, consciousness, and the self. Schizophr. Bull. 29, 427–444 (2003).

Kapur, S. Psychosis as a state of aberrant salience: a framework linking biology, phenomenology, and pharmacology in schizophrenia. Am. J. Psychiatry 160, 13–23 (2003).

Cuthbert, B. N. & Insel, T. R. Toward the future of psychiatric diagnosis: the seven pillars of RDoC. BMC Med. 11, 126 (2013).

Hyman, S. E. Can neuroscience be integrated into the DSM-V? Nat. Rev. Neurosci. 8, 725–732 (2007).

Pieper, A. A. & Baraban, J. M. Moving beyond serendipity to mechanism-driven psychiatric therapeutics. Neurotherapeutics 14, 533–536 (2017).

Goldberg, J. F. & Ernst, C. L. Core concepts involving adverse psychotropic drug effects: assessment, implications, and management. Psychiatr. Clin. North Am. 39, 375–389 (2016).

Kessler, R. C. et al. Prevalence and treatment of mental disorders, 1990 to 2003. N. Engl. J. Med. 352, 2515–2523 (2005).

Hunger, S. P. et al. Improved survival for children and adolescents with acute lymphoblastic leukemia between 1990 and 2005: a report from the children’s oncology group. J. Clin. Oncol. 30, 1663–1669 (2012).

National Heart, Lung, and Blood Institute. NHLBI Fact Book, Fiscal Year (NHLBI, Bethesda, MD, 2011).

Kahn, R. S. et al. Schizophrenia. Nat. Rev. Dis. Prim. 1, 15067 (2015).

Jablensky, A. The diagnostic concept of schizophrenia: its history, evolution, and future prospects. Dialog. Clin. Neurosci. 12, 271–287 (2010).

Kendell, R. & Jablensky, A. Distinguishing between the validity and utility of psychiatric diagnoses. Am. J. Psychiatry 160, 4–12 (2003).

Huys, Q. J. M., Maia, T. V. & Frank, M. J. Computational psychiatry as a bridge from neuroscience to clinical applications. Nat. Neurosci. 19, 404–413 (2016).

Orrù, G., Pettersson-Yeo, W., Marquand, A. F., Sartori, G. & Mechelli, A. Using support vector machine to identify imaging biomarkers of neurological and psychiatric disease: a critical review. Neurosci. Biobehav. Rev. 36, 1140–1152 (2012).

Lesh, T. A. et al. A multimodal analysis of antipsychotic effects on brain structure and function in first-episode schizophrenia. JAMA Psychiatry 72, 226–234 (2015).

Schnack, H. G. & Kahn, R. S. Detecting neuroimaging biomarkers for psychiatric disorders: sample size matters. Front. Psychiatry 7, 50 (2016).

Zilles, K. & Amunts, K. Receptor mapping: architecture of the human cerebral cortex. Curr. Opin. Neurol. 22, 331–339 (2009).

Eickhoff, S. B., Rottschy, C., Kujovic, M., Palomero-Gallagher, N. & Zilles, K. Organizational principles of human visual cortex revealed by receptor mapping. Cereb. Cortex 18, 2637–2645 (2008).

Talairach, J. & Tournoux, P. Co-Planar Stereotaxic Atlas of the Human Brain: 3-Dimensional Proportional System: An Approach to Cerebral Imaging (G. Thieme, New York, 1988).

Desikan, R. S. et al. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 31, 968–980 (2006).

Damoiseaux, J. S. & Greicius, M. D. Greater than the sum of its parts: a review of studies combining structural connectivity and resting-state functional connectivity. Brain Struct. Funct. 213, 525–533 (2009).

Roca, P. et al. Inter-subject connectivity-based parcellation of a patch of cerebral cortex. Med Image Comput. Comput. Assist Interv. 13, 347–354 (2010).

Yeo, B. T. et al. The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J. Neurophysiol. 106, 1125–1165 (2011).

Smith, S. M. et al. Correspondence of the brain’s functional architecture during activation and rest. Proc. Natl Acad. Sci. USA 106, 13040–13045 (2009).

Lashkari, D. et al. Search for patterns of functional specificity in the brain: a nonparametric hierarchical Bayesian model for group fMRI data. Neuroimage 59, 1348–1368 (2012).

Dosenbach, N. U. et al. Prediction of individual brain maturity using fMRI. Science 329, 1358–1361 (2010).

Eickhoff, S. B. et al. Co-activation patterns distinguish cortical modules, their connectivity and functional differentiation. Neuroimage 57, 938–949 (2011).

Cordes, D., Haughton, V., Carew, J. D., Arfanakis, K. & Maravilla, K. Hierarchical clustering to measure connectivity in fMRI resting-state data. Magn. Reson. Imaging 20, 305–317 (2002).

McKeown, M. J. et al. Analysis of fMRI data by blind separation into independent spatial components. Hum. Brain. Mapp. 6, 160–188 (1998).

Yao, Z., Hu, B., Xie, Y., Moore, P. & Zheng, J. A review of structural and functional brain networks: small world and atlas. Brain Inform. 2, 45–52 (2015).

Thirion, B., Varoquaux, G., Dohmatob, E. & Poline, J.-B. Which fMRI clustering gives good brain parcellations? Front. Neurosci. 8, 167 (2014).

Cabral, C. et al. Classifying schizophrenia using multimodal multivariate pattern recognition analysis: evaluating the impact of individual clinical profiles on the neurodiagnostic performance. Schizophr. Bull. 42(Suppl 1), S110–S117 (2016).

Xia, M., Wang, J. & He, Y. BrainNet viewer: a network visualization tool for human brain connectomics. PLoS ONE 8, e68910 (2013).

Menon, V. & Uddin, L. Q. Saliency, switching, attention and control: a network model of insula function. Brain. Struct. Funct. 214, 655–667 (2010).

Takamura, T. & Hanakawa, T. Clinical utility of resting-state functional connectivity magnetic resonance imaging for mood and cognitive disorders. J. Neural Transm. 124, 821–839 (2017).

van Amelsvoort, T. & Hernaus, D. Effect of pharmacological interventions on the fronto-cingulo-parietal cognitive control network in psychiatric disorders: a transdiagnostic systematic review of fMRI studies. Front. Psychiatry 7, 82 (2016).

Hu, M. L. et al. A review of the functional and anatomical default mode network in schizophrenia. Neurosci. Bull. 33, 73–84 (2017).

Vega Romero, R., Brown, M. & Greiner, R. The challenge of applying machine learning techniques to diagnose schizophrenia using multi-site fMRI data. MSc Thesis, University of Alberta (2017).

Alderson-Day, B., McCarthy-Jones, S. & Fernyhough, C. Hearing voices in the resting brain: A review of intrinsic functional connectivity research on auditory verbal hallucinations. Neurosci. Biobehav. Rev. 55, 78–87 (2015).

Curcic-Blake, B. et al. Interaction of language, auditory and memory brain networks in auditory verbal hallucinations. Prog. Neurobiol. 148, 1–20 (2017).

Alderson-Day, B. et al. Auditory hallucinations and the brain’s resting-state networks: findings and methodological observations. Schizophr. Bull. 42, 1110–1123 (2016).

Damaraju, E. et al. Dynamic functional connectivity analysis reveals transient states of dysconnectivity in schizophrenia. NeuroImage 5, 298–308 (2014).

Yu, Q. et al. Brain connectivity networks in schizophrenia underlying resting state functional magnetic resonance imaging. Curr. Top. Med. Chem. 12, 2415–2425 (2012).

Zhou, Y., Fan, L., Qiu, C. & Jiang, T. Prefrontal cortex and the dysconnectivity hypothesis of schizophrenia. Neurosci. Bull. 31, 207–219 (2015).

Pettersson-Yeo, W., Allen, P., Benetti, S., McGuire, P. & Mechelli, A. Dysconnectivity in schizophrenia: where are we now? Neurosci. Biobehav. Rev. 35, 1110–1124 (2011).

Fornito, A. & Bullmore, E. T. Reconciling abnormalities of brain network structure and function in schizophrenia. Curr. Opin. Neurobiol. 30, 44–50 (2015).

Friston, K., Brown, H. R., Siemerkus, J. & Stephan, K. E. The dysconnection hypothesis. Schizophr. Res. 176, 83–94 (2016). (2016).

Monte-Silva, K. et al. Induction of late LTP-like plasticity in the human motor cortex by repeated non-invasive brain stimulation. Brain Stimul. 6, 424–432 (2013).

Moseley, P., Alderson-Day, B., Ellison, A., Jardri, R. & Fernyhough, C. Non-invasive brain stimulation and auditory verbal hallucinations: new techniques and future directions. Front. Neurosci. 9, 515 (2015).

Bose, A. et al. Efficacy of fronto-temporal transcranial direct current stimulation for refractory auditory verbal hallucinations in schizophrenia: a randomized, double-blind, sham-controlled study. Schizophr. Res. 195, 475–480 (2018).

Mondino, M. et al. Effects of fronto-temporal transcranial direct current stimulation on auditory verbal hallucinations and resting-state functional connectivity of the left temporo-parietal junction in patients with schizophrenia. Schizophr. Bull. 42, 318–326 (2016).

Sheehan, D. V. et al. The Mini-International Neuropsychiatric Interview (M.I.N.I.): the development and validation of a structured diagnostic psychiatric interview for DSM-IV and ICD-10. J. Clin. Psychiatry 59(Suppl 20), 22–33 (1998). quiz34-57.

Andreasen, N. C., Arndt, S., Miller, D., Flaum, M. & Nopoulos, P. Correlational studies of the scale for the assessment of negative symptoms and the scale for the assessment of positive symptoms: an overview and update. Psychopathology 28, 7–17 (1995).

Chao-Gan, Y. & Yu-Feng, Z. DPARSF: a MATLAB toolbox for “Pipeline” data analysis of resting-state fMRI. Front. Syst. Neurosci. 4, 13 (2010).

Abraham, A. et al. Machine learning for neuroimaging with scikit-learn. Front. Neuroinform. 8, 14 (2014).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Power, J. D., Barnes, K. A., Snyder, A. Z., Schlaggar, B. L. & Petersen, S. E. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage 59, 2142–2154 (2012).

Satterthwaite, T. D. et al. Impact of in-scanner head motion on multiple measures of functional connectivity: relevance for studies of neurodevelopment in youth. Neuroimage 60, 623–632 (2012).

Van Dijk, K. R., Sabuncu, M. R. & Buckner, R. L. The influence of head motion on intrinsic functional connectivity MRI. Neuroimage 59, 431–438 (2012).

Chang, X. et al. Distinct inter-hemispheric dysconnectivity in schizophrenia patients with and without auditory verbal hallucinations. Sci. Rep. 5, 11218 (2015).

Friston, K. J. et al. Statistical parametric maps in functional imaging: a general linear approach. Hum. Brain Mapp. 2, 189–210 (1994).

Varoquaux, G., Gramfort, A., Pedregosa, F., Michel, V. & Thirion, B. Multi-subject dictionary learning to segment an atlas of brain spontaneous activity. Inf. Process. Med. Imaging 22, 562–573 (2011).

Tzourio-Mazoyer, N. et al. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage 15, 273–289 (2002).

Bellec, P., Rosa-Neto, P., Lyttelton, O. C., Benali, H. & Evans, A. C. Multi-level bootstrap analysis of stable clusters in resting-state fMRI. Neuroimage 51, 1126–1139 (2010).

Destrieux, C., Fischl, B., Dale, A. & Halgren, E. A sulcal depth-based anatomical parcellation of the cerebral cortex. Neuroimage 47, S151 (2009).

Power, J. D. et al. Functional network organization of the human brain. Neuron 72, 665–678 (2011).

Zou, Q.-H. et al. An improved approach to detection of amplitude of low-frequency fluctuation (ALFF) for resting-state fMRI: fractional ALFF. J. Neurosci. Methods 172, 137–141 (2008).

Hoptman, M. J. et al. Amplitude of low-frequency oscillations in schizophrenia: a resting state fMRI study. Schizophr. Res. 117, 13–20 (2010).

Zang, Y. F. et al. Altered baseline brain activity in children with ADHD revealed by resting-state functional MRI. Brain Dev. 29, 83–91 (2007).

Kendall, M. G. Rank Correlation Methods (Griffin, Oxford, 1948).

Zang, Y., Jiang, T., Lu, Y., He, Y. & Tian, L. Regional homogeneity approach to fMRI data analysis. Neuroimage 22, 394–400 (2004).

Crowley, S. et al. Considering total intracranial volume and other nuisance variables in brain voxel based morphometry in idiopathic PD. Brain Imaging Behav. 12, 1–12 (2018).

Turner, J. et al. A multi-site resting state fMRI study on the amplitude of low frequency fluctuations in schizophrenia. Front. Neurosci. 7, 137 (2013).

Liu, H. et al. Decreased regional homogeneity in schizophrenia: a resting state functional magnetic resonance imaging study. Neuroreport 17, 19–22 (2006).

Chen, J. et al. Comparative study of regional homogeneity in schizophrenia and major depressive disorder. Am. J. Med. Genet. 162B, 36–43 (2013).

Lynall, M.-E. et al. Functional connectivity and brain networks in schizophrenia. J. Neurosci. 30, 9477–9487 (2010).

Das, A. et al. Interpretation of the precision matrix and its application in estimating sparse brain connectivity during sleep spindles from human electrocorticography recordings. Neural Comput. 29, 603–642 (2017).

Fan, R.-E., Chang, K.-W., Hsieh, C.-J., Wang, X.-R. & Lin, C.-J. LIBLINEAR: A library for large linear classification. J. Mach. Learn. Res. 9, 1871–1874 (2008).

Raschka, S. MLxtend: Providing machine learning and data science utilities and extensions to Python’s scientific computing stack. J. Open Source Softw. 3, 24 https://doi.org/10.21105/joss.00638 (2018). http://joss.theoj.org/papers/10.21105/joss.00638.

Jones, E., Oliphant, T. & Peterson, P. SciPy: Open source scientific tools for Python (2001). http://www.scipy.org/.

Shen, H., Wang, L., Liu, Y. & Hu, D. Discriminative analysis of resting-state functional connectivity patterns of schizophrenia using low dimensional embedding of fMRI. Neuroimage 49, 3110–3121 (2010).

Fan, Y. et al. Discriminant analysis of functional connectivity patterns on Grassmann manifold. Neuroimage 56, 2058–2067 (2011).

Yu, Y., Shen, H., Zeng, L.-L., Ma, Q. & Hu, D. Convergent and divergent functional connectivity patterns in schizophrenia and depression. PLoS ONE 8, e68250–e68250 (2013).

Anderson, A. & Cohen, M. S. Decreased small-world functional network connectivity and clustering across resting state networks in schizophrenia: an fMRI classification tutorial. Front. Hum. Neurosci. 7, 520–520 (2013).

Arbabshirani, M., Kiehl, K., Pearlson, G. & Calhoun, V. Classification of schizophrenia patients based on resting-state functional network connectivity. Front. Neurosci. 7, 133–133 (2013).

Yu, Y. et al. Functional connectivity-based signatures of schizophrenia revealed by multiclass pattern analysis of resting-state fMRI from schizophrenic patients and their healthy siblings. Biomed. Eng. Online 12, 10–10 (2013).

Guo, S., Kendrick, K. M., Yu, R., Wang, H.-L. S. & Feng, J. Key functional circuitry altered in schizophrenia involves parietal regions associated with sense of self. Hum. Brain. Mapp. 35, 123–139 (2014).

Brodersen, K. H. et al. Dissecting psychiatric spectrum disorders by generative embedding. NeuroImage. Clin. 4, 98–111 (2014).

Anticevic, A. et al. Characterizing thalamo-cortical disturbances in schizophrenia and bipolar illness. Cereb. Cortex 24, 3116–3130 (2014).

Watanabe, T., Kessler, D., Scott, C., Angstadt, M. & Sripada, C. Disease prediction based on functional connectomes using a scalable and spatially-informed support vector machine. Neuroimage 96, 183–202 (2014).

Chyzhyk, D., Grana, M., Ongur, D. & Shinn, A. K. Discrimination of schizophrenia auditory hallucinators by machine learning of resting-state functional MRI. Int. J. Neural Syst. 25, 1550007 (2015).

Cheng, W. et al. Voxel-based, brain-wide association study of aberrant functional connectivity in schizophrenia implicates thalamocortical circuitry. NPJ Schizophr. 1, 15016–15016 (2015).

Peters, H. et al. More consistently altered connectivity patterns for cerebellum and medial temporal lobes than for amygdala and striatum in schizophrenia. Front. Hum. Neurosci. 10, 55–55 (2016).

Mikolas, P. et al. Connectivity of the anterior insula differentiates participants with first-episode schizophrenia spectrum disorders from controls: a machine-learning study. Psychol. Med. 46, 2695–2704 (2016).

Yang, H., He, H. & Zhong, J. Multimodal MRI characterisation of schizophrenia: a discriminative analysis. Lancet 388(Suppl), S36–S36 (2016).

Iwabuchi, S. J. & Palaniyappan, L. Abnormalities in the effective connectivity of visuothalamic circuitry in schizophrenia. Psychol. Med. 47, 1–11 (2017).

Lottman, K. K. et al. Risperidone effects on brain dynamic connectivity—a prospective resting-state fMRI study in schizophrenia. Front. Psychiatry 8, 14–14 (2017).

Guo, W. et al. Family-based case-control study of homotopic connectivity in first-episode, drug-naive schizophrenia at rest. Sci. Rep. 7, 43312–43312 (2017).

Acknowledgements

This study is supported by IBM Alberta Centre for Advanced Studies and MITACS (IT09558) funds to S.V.K.; Wellcome Trust/DBT India Alliance (500236/Z/11/Z) and DST (DST/SJF/LSA-02/2014-15) research grants to G.V.; Alberta Machine Intelligence Institute and NSERC grants to R.G. V.S. is supported by the ICMR.

Author information

Authors and Affiliations

Contributions

G.V., J.C.N., V.S. collected the clinical and neuroimaging data. Clinical symptom ratings were done by V.S., J.C.N. under the supervision of G.V. Data were cleaned and processed by R.A. and S.V.K. S.V.K. designed and implemented the machine learning models, with supervision of R.G., A.J.G., M.R.G.B. and S.M.D. S.V.K. managed the literature search and wrote the first draft of manuscript along with R.G. All authors revised and optimized further versions of the manuscript. All the authors have contributed to and have approved the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kalmady, S.V., Greiner, R., Agrawal, R. et al. Towards artificial intelligence in mental health by improving schizophrenia prediction with multiple brain parcellation ensemble-learning. npj Schizophr 5, 2 (2019). https://doi.org/10.1038/s41537-018-0070-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41537-018-0070-8

This article is cited by

-

Sampling inequalities affect generalization of neuroimaging-based diagnostic classifiers in psychiatry

BMC Medicine (2023)

-

Reinforcement learning deficits exhibited by postnatal PCP-treated rats enable deep neural network classification

Neuropsychopharmacology (2023)

-

Optimized adaptive neuro-fuzzy inference system based on hybrid grey wolf-bat algorithm for schizophrenia recognition from EEG signals

Cognitive Neurodynamics (2023)

-

Dissecting Psychiatric Heterogeneity and Comorbidity with Core Region-Based Machine Learning

Neuroscience Bulletin (2023)