Abstract

It is known that advantage distillation (that is, information reconciliation using two-way communication) improves noise tolerances for quantum key distribution (QKD) setups. Two-way communication is hence also of interest in the device-independent case, where noise tolerance bounds for one-way error correction are currently too low to be experimentally feasible. Existing security proofs for the device-independent repetition-code protocol (the most prominent form of advantage distillation) rely on fidelity-related security conditions, but previous bounds on the fidelity were not tight. We improve on those results by developing an algorithm that returns arbitrarily tight lower bounds on the fidelity. Our results give insight into how strong the fidelity-related security conditions are, and could also be used to compute some lower bounds on one-way protocol keyrates. Finally, we conjecture a necessary security condition for the protocol studied in this work, which naturally complements the existing sufficient conditions.

Introduction

The ultimate goal of key distribution protocols is to generate secure keys between two parties, Alice and Bob. To this end, device-independent quantum key distribution (DIQKD) schemes aim to provide information-theoretically secure keys by taking advantage of non-local correlations, which can be verified via Bell inequalities1,2,3,4. Critically, Bell violations rely only on the measurement statistics, \(\Pr \left(ab| xy\right)\), where a(b) is Alice’s (Bob’s) measurement outcome and x(y) is Alice’s (Bob’s) measurement setting5. By basing security on Bell inequalities, DIQKD protocols do not require any knowledge of the bipartite state that Alice and Bob share, nor of the measurements both parties conduct, apart from the assumption that they act on separate Hilbert spaces1,5. (To guarantee security, it is still important to ensure that information about the device outputs themselves is not simply leaked to the adversary. Also, if the devices are reused, they must not access any registers retaining memory of ‘private data’, in order to avoid the memory attack of6.) We consider all Hilbert spaces to be finite-dimensional.

Although DIQKD allows for the creation of secret keys under very weak assumptions, there is a trade-off when it comes to noise tolerances1,7. Standard DIQKD protocols which apply one-way error-correction steps have fairly low noise robustness, and are therefore not currently experimentally feasible1,3. To improve noise tolerances, one may implement techniques such as noisy preprocessing8, basing the protocol on asymmetric CHSH inequalities9,10, or applying advantage distillation11. In this work we focus solely on advantage distillation, which refers to using two-way communication for information reconciliation, in place of one-way error-correction.

In device-dependent QKD7,12,13,14,15,16,17, as well as classical key distillation scenarios18,19, advantage distillation can perform better than standard one-way protocols in terms of noise tolerance. As for DIQKD, it has been shown that advantage distillation leads to an improvement of noise tolerance as well11, but the results obtained in that work may not be optimal. Specifically, a sufficient condition was derived there for the security of advantage distillation against collective attacks, based on the fidelity between an appropriate pair of conditional states (see Theorem 1 below). However, the approach used in11 to bound this fidelity is suboptimal, and hence the results were not tight.

Our main contribution in this work is to derive an algorithm based on semidefinite programs (SDPs) that yields arbitrarily tight lower bounds on the relevant fidelity quantity considered in11. We apply this algorithm to several DIQKD scenarios studied in that work, and compare the resulting bounds. Surprisingly, while we find improved noise tolerance for some scenarios, we do not have such improvements for the scenario that gave the best noise tolerances in11, which relied on a more specialized security argument. An important consequence of this finding is that it serves as strong evidence that the general sufficient condition described in11 is in fact not necessary in the DIQKD setting, in stark contrast to the device-dependent QKD protocols in14,15, where it is both necessary and sufficient (when focusing on the repetition-code advantage distillation protocol; see below). In light of this fact, we describe an analogous condition that we conjecture to be necessary, and discuss possible directions for further progress.

We consider the following set-up for two parties, Alice and Bob2,11.

- Measurement Settings: Alice (Bob) has \({M}_{A}\) (\({M}_{B}\)) possible measurement inputs and chooses \(x\in {{{\mathcal{X}}}}=\{0,\ldots ,{M}_{A}-1\}\,\,\left(y\in {{{\mathcal{Y}}}}=\{0,\ldots ,{M}_{B}-1\}\right)\). The measurements are denoted as \({A}_{x}\) (\({B}_{y}\)) for Alice (Bob).

- Measurement Outcomes: Alice (Bob) has two possible measurement outcomes and measures \(a\in {{{\mathcal{A}}}}=\{0,1\}\,\,\left(b\in {{{\mathcal{B}}}}=\{0,1\}\right)\).

The only key-generating measurements are A0 and B0. We consider Eve to be restricted to collective attacks20, where it is assumed that the measurements Alice and Bob may conduct, as well as the single-round tripartite state, ρABE, that Alice, Bob, and an adversary, Eve, share are independent and identical for each round. Since we are working in the device-independent setting, we consider Alice and Bob’s measurements to be otherwise uncharacterized. For ease of applying the results from11, we assume that they perform a symmetrization step, in which Alice and Bob publicly communicate a uniformly random bit and XOR it with their raw outputs (see11 for details on when this step can be omitted). We use ϵ to denote the quantum bit error rate (QBER), i.e. the probability of obtaining different outcomes from measurements A0, B0.

We consider the repetition-code protocol for advantage distillation13,14,15,18,19, which proceeds as follows. After gathering the raw output strings from their devices, Alice and Bob split the key-generating rounds into blocks of size n each, and from each block they will attempt to generate a single highly-correlated bit. For each block, Alice generates a secret, uniformly random bit, C, and adds it to her n bits, A0. She then sends this ‘encoded’ bitstring, \({{{\bf{M}}}}={{{{\bf{A}}}}}_{0}\oplus ({C},\ldots ,{C})\), as a message to Bob via an authenticated channel. He then tries to decode the message by adding his own bitstring, B0, to it. He accepts the block of n bits if and only if \({{{\bf{M}}}}\oplus {{{{\bf{B}}}}}_{0}=({C}^{\prime},\ldots ,{C}^{\prime})\). If accepted, he indicates this to Alice by sending her a bit D = 1 via an authenticated channel. Otherwise, he sends D = 0. Considering only the accepted blocks, this process has therefore reduced each block of n bitpairs to a single highly correlated bitpair, \((C,{C}^{\prime})\). Alice and Bob then apply a one-way error correction procedure (from Alice to Bob) on the resulting bitpairs over asymptotically many rounds, followed by privacy amplification to produce a final secret key. This procedure can achieve a positive asymptotic keyrate if the bitpairs in the accepted blocks satisfy some conditions we shall now describe.
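The blockwise step described above can be sketched on classical bits. This is a minimal illustration that assumes independent rounds with QBER `eps` and ignores Eve entirely; the function names are hypothetical and not taken from the cited works.

```python
import math
import random


def binary_entropy(p):
    """Binary entropy h2(p) in bits, with h2(0) = h2(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def accepted_block_stats(eps, n):
    """Acceptance probability and residual error rate for block size n.

    Bob accepts iff A0 xor B0 is all-zeros (then C' = C) or all-ones
    (then C' = C xor 1), assuming i.i.d. rounds with QBER eps.
    """
    p_all_equal = (1 - eps) ** n
    p_all_differ = eps ** n
    p_accept = p_all_equal + p_all_differ
    return p_accept, p_all_differ / p_accept


def simulate_block(eps, n, rng):
    """Run one block on classical bits; returns (accepted, C, C')."""
    a0 = [rng.randint(0, 1) for _ in range(n)]
    # Each of Bob's bits differs from Alice's with probability eps.
    b0 = [a ^ (rng.random() < eps) for a in a0]
    c = rng.randint(0, 1)
    message = [a ^ c for a in a0]                     # M = A0 xor (C, ..., C)
    decoded = [m ^ b for m, b in zip(message, b0)]    # M xor B0
    accepted = len(set(decoded)) == 1                 # all entries equal
    return accepted, c, decoded[0]
```

For example, `accepted_block_stats(0.05, 5)` returns the acceptance probability and the error rate of the distilled pair; feeding the latter to `binary_entropy` gives the value of H(C∣C′; D = 1) in this simplified classical model, since C and C′ are uniform in the accepted blocks.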

The protocol can be used to distill a secret key if21

\({\rm{H}}(C| EM;D=1)-{\rm{H}}(C| {C}^{\prime};D=1) > 0,\qquad (1)\)

where E is Eve’s side-information across one block of n rounds and H is the von Neumann entropy3,21. The second entropy term, \({{{\rm{H}}}}(C| {C}^{\prime};D=1)\), can easily be determined via the QBER1,11. In11, Eve’s conditional entropy H(C∣EM; D = 1) was lower-bounded using inequalities that are not necessarily tight, leading to the following result based on the (root-)fidelity \({{{\rm{F}}}}(\rho ,\sigma ):=\parallel \sqrt{\rho }\sqrt{\sigma }{\parallel }_{1}\):

Theorem 1

A secret key can be generated if

\({\rm{F}}{({\rho }_{E| 00},{\rho }_{E| 11})}^{2} > \frac{\epsilon }{1-\epsilon },\qquad (2)\)

where \({\rho }_{E| {a}_{0}{b}_{0}}\) denotes Eve’s conditional state (in a single round) after Alice and Bob use inputs A0 and B0 and obtain outcomes a0 and b0.
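As a small worked example, the fidelity condition F(ρE∣00, ρE∣11)² > ϵ/(1 − ϵ) can be checked numerically once a certified lower bound on the fidelity is available. The helper names below are hypothetical; rearranging the inequality gives the equivalent form ϵ < F²/(1 + F²).

```python
def theorem1_condition(fidelity_lower_bound, qber):
    """True if F^2 > eps / (1 - eps) for a fidelity lower bound F and QBER eps."""
    return fidelity_lower_bound ** 2 > qber / (1.0 - qber)


def qber_threshold(fidelity_lower_bound):
    """Supremum of QBER values satisfying F^2 > eps / (1 - eps).

    Solving the inequality for eps gives eps < F^2 / (1 + F^2).
    """
    f_squared = fidelity_lower_bound ** 2
    return f_squared / (1.0 + f_squared)
```

With a trivial bound F = 1 the threshold is ϵ < 1/2; any fidelity bound below 1 lowers it accordingly. In the noise models studied here the fidelity bound itself depends on the noise, so this check would be evaluated point by point along the noise parameter.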

Our goal will be to find a general method to certify the condition in Theorem 1. For later use, we also note that in the case where both parties have binary inputs and outputs, an alternative condition was derived11 based on the trace distance \(d(\rho ,\sigma ):=\frac{1}{2}\parallel \rho -\sigma {\parallel }_{1}\):

Theorem 2

If \({{{\mathcal{X}}}}={{{\mathcal{Y}}}}=\{0,1\}\) and all measurements have binary outcomes, a secret key can be generated if

Results

To find optimal bounds for Theorem 1, we need to minimize the fidelity for a given observed distribution. We show that this can be written as an SDP in Section “SDP Formulation of Minimum Fidelity Condition”, and use this to calculate noise tolerances for a range of repetition-code advantage distillation setups in Section “Results From SDP”. We conclude the Results section with a conjecture for a necessary condition that naturally complements the sufficient condition in11.

SDP formulation of minimum fidelity condition

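To see if Eve can minimize the fidelity such that (2) does not hold, we must first solve the following constrained optimization over all possible ρABE and possible measurements by Alice and Bob:

\(\mathop{\inf }\limits_{{\rho }_{ABE},\{{A}_{x}\},\{{B}_{y}\}}\ {\rm{F}}({\rho }_{E| 00},{\rho }_{E| 11})\quad {\rm{s.t.}}\quad \Pr {(ab| xy)}_{\rho }={\bf{p}},\qquad (4)\)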

where Pr(ab∣xy)ρ denotes the combined outcome probability distribution that would be obtained from ρABE (and some measurements), and p represents the measurement distribution Alice and Bob actually observe. We observe that after Alice and Bob perform the key-generating measurements, the resulting tripartite state is of the form

\(\mathop{\sum }\limits_{a,b\in \{0,1\}}\Pr (ab)\,\left|ab\right\rangle \left\langle ab\right|\otimes {\rho }_{E| ab},\qquad (5)\)

where for brevity we use Pr(ab) to denote the probability of getting outcomes (a, b) when the key-generating measurements are performed, i.e. Pr(ab∣00)ρ.

To turn this optimization into an SDP, we first note that for any pair (ρE∣00, ρE∣11), there exists a measurement Eve can perform that leaves the fidelity invariant22. Also, since this measurement is on Eve’s system only, performing it does not change the value Pr(ab∣xy)ρ in the constraint either. Therefore, given any feasible ρABE and measurements in the optimization, we can produce another feasible state and measurements with the same objective value, but with Eve having performed the measurement from22 that leaves the fidelity between the original (ρE∣00, ρE∣11) states invariant.

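After performing this measurement, the state (5) becomes

\(\mathop{\sum }\limits_{a,b\in \{0,1\}}\Pr (ab)\,\left|ab\right\rangle \left\langle ab\right|\otimes \mathop{\sum }\limits_{i}\Pr (i| ab)\,\left|i\right\rangle \left\langle i\right|,\qquad (6)\)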

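where the index i represents the possible outcomes for Eve’s measurement (we do not limit the number of such outcomes for now). For these states, the fidelity can be written (assuming the distribution is symmetrized) as

\({\rm{F}}({\rho }_{E| 00},{\rho }_{E| 11})=\mathop{\sum }\limits_{i}\frac{\Pr (i)}{\Pr (00)}\sqrt{\Pr (00| i)\Pr (11| i)}.\qquad (7)\)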

As for the constraints, we note that this measurement by Eve commutes with Alice and Bob’s measurements, hence we can write Pr(ab∣xy)ρ = ∑iPr(i)pi, where pi denotes the Alice-Bob distribution conditioned on Eve getting outcome i. Note that pi is always a distribution realizable by quantum states and measurements, since conditioning on Eve getting outcome i produces a valid quantum state on Alice and Bob’s systems.

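The solution to (4) is therefore equal to the output of the optimization problem

\(\mathop{\inf }\limits_{\Pr (i),\,{{\bf{p}}}_{i}}\ \mathop{\sum }\limits_{i}\frac{\Pr (i)}{\Pr (00)}\sqrt{\Pr (00| i)\Pr (11| i)}\quad {\rm{s.t.}}\quad \mathop{\sum }\limits_{i}\Pr (i)\,{{\bf{p}}}_{i}={\bf{p}},\quad {{\bf{p}}}_{i}\in {{\mathcal{Q}}}_{{\mathcal{X}},{\mathcal{Y}}},\quad \Pr (i)\in {\mathcal{P}}({\mathcal{I}}),\qquad (8)\)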

where \({{{{\mathcal{Q}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\) represents the set of quantum realizable distributions, and \({{{\mathcal{P}}}}({{{\mathcal{I}}}})\) is the set of probability distributions Pr(i) on Eve’s (now classical) side-information. We note that the constraints can be relaxed to a convergent hierarchy of SDP conditions, following the approach in23,24,25, but the objective function is not affine. To address this, we show in Section “Approximating The Fidelity With Polytope Hyperplanes” that since the objective function is a convex sum of bounded concave functions, it can be approximated arbitrarily well using upper envelopes of convex polytopes. This will allow us to lower-bound this optimization using an SDP hierarchy, and do so without any knowledge about the dimension of Eve’s system other than the assumption that all Hilbert spaces are finite-dimensional. Critically, SDPs have the important property that they yield certified lower bounds on the minimum value (via the dual value), so we can be certain that we truly have a lower bound on the optimization (4). Our approach is based on the SDP reduction in26; however, we give a more detailed description and convergence analysis for the situation where the optimization involves concave functions of more than one variable (which is required in our work but not necessarily in26).

We remark that in this work, we focus on the situation where the constraints in (8) involve the full list of output probabilities. However, our analysis generalizes straightforwardly to situations where the constraints only consist of one or more linear combinations of these probabilities (though it is still necessary to have an estimate of the Pr(00) term in the denominator of the objective function), which can slightly simplify the corresponding DIQKD protocols.

Results from SDP

With the exception of the 2-input scenario for both parties (where Theorem 2 can be applied instead of Theorem 1), previous bounds for the fidelity were calculated via the Fuchs–van de Graaf inequalities11,27. To compare this against our method, we consider the depolarizing noise model28, i.e. where the observed statistics have the form

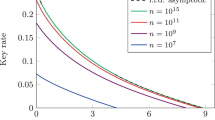

where Prtarget refers to some target distribution in the absence of noise. We consider three possible choices for the target distribution, and show our results for these scenarios in Fig. 1:

- (a) Both Alice and Bob have four possible measurement settings. The target distribution is generated by:

  - \(\left|{\phi }^{+}\right\rangle =\left(\left|00\right\rangle +\left|11\right\rangle \right)/\sqrt{2}\)
  - \({A}_{0}=Z,{A}_{1}=\left(X+Z\right)/\sqrt{2},{A}_{2}=X\), \({A}_{3}=\left(X-Z\right)/\sqrt{2}\)
  - \({B}_{0}=Z,{B}_{1}=\left(X+Z\right)/\sqrt{2},{B}_{2}=X\), \({B}_{3}=\left(X-Z\right)/\sqrt{2}\)

- (b) Alice has two measurement settings, whereas Bob has three. The target distribution is generated by:

  - \(\left|{\phi }^{+}\right\rangle =\left(\left|00\right\rangle +\left|11\right\rangle \right)/\sqrt{2}\)
  - \({A}_{0}=Z,{A}_{1}=X\)
  - \({B}_{0}=Z,{B}_{1}=\left(X+Z\right)/\sqrt{2}\), \({B}_{2}=\left(X-Z\right)/\sqrt{2}\)

- (c) Both Alice and Bob have two possible measurement settings. The target distribution is generated by:

  - \(\left|{\phi }^{+}\right\rangle =\left(\left|00\right\rangle +\left|11\right\rangle \right)/\sqrt{2}\)
  - \({A}_{0}=Z,{A}_{1}=X\)
  - \({B}_{0}=\left(X+Z\right)/\sqrt{2},{B}_{1}=\left(X-Z\right)/\sqrt{2}\)

Fidelity bounds as a function of depolarizing noise in several scenarios (described in main text), regarding the repetition-code protocol defined in our Introduction. The blue and black solid lines respectively represent the fidelity bounds derived via the previous approach (based on the Fuchs–van de Graaf inequality) and our new algorithm. It can be seen that the latter yields substantially better bounds. The dashed lines show the value of \({\sqrt{\epsilon/(1-{\epsilon)}}}\), so the points where they intersect the solid lines give the threshold values for which advantage distillation is possible according to the condition \({{{\rm{F}}}}{({\rho }_{E| 00},{\rho }_{E| 11})}^{2}\, > \,\frac{\epsilon }{(1-\epsilon )}\). For our approach, these thresholds are \({q}\approx {8.3 \%}, {q} \approx {7.0 \%}\), and \({q} \approx 6.7 \%\) (from top to bottom in the scenarios shown here).

Case (a) is meant to include the Mayers-Yao self-test29 and measurements that maximize the CHSH value. Alternatively, it can be viewed as having both parties perform all four of the measurements from (c). The results of11 were able to prove security of this advantage distillation protocol up to q ≈ 6.8% in this case. We manage to improve the noise tolerance to q ≈ 8.3%, which represents an increase of 1.5 percentage points.

As for case (b), the measurements A0, A1, B1, B2 maximize the CHSH value, and the key-generating measurement B0 is chosen such that the QBER is zero in Prtarget. Again, our approach allows us to improve the noise tolerance threshold from q ≈ 6.0% to q ≈ 7.0%.
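The properties claimed for case (b) can be checked numerically. The sketch below builds the target distribution from \(\left|{\phi }^{+}\right\rangle\) and the listed observables, then applies a noise model that is an assumption of this example: the parameterization Pr = (1 − 2q) Prtarget + q/2, under which q coincides with the QBER whenever the target QBER is zero. All function names are hypothetical.

```python
import numpy as np

Z = np.array([[1.0, 0.0], [0.0, -1.0]])
X = np.array([[0.0, 1.0], [1.0, 0.0]])
PHI_PLUS = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)

# Observables of case (b): A0 = Z, A1 = X; B0 = Z, B1 = (X+Z)/sqrt2, B2 = (X-Z)/sqrt2.
A = [Z, X]
B = [Z, (X + Z) / np.sqrt(2), (X - Z) / np.sqrt(2)]


def target_distribution(a, b, x, y):
    """Pr_target(ab|xy); outcome 0 (1) is the +1 (-1) eigenvalue."""
    proj_a = (np.eye(2) + (-1) ** a * A[x]) / 2
    proj_b = (np.eye(2) + (-1) ** b * B[y]) / 2
    return float(PHI_PLUS @ np.kron(proj_a, proj_b) @ PHI_PLUS)


def noisy_distribution(a, b, x, y, q):
    """ASSUMED depolarizing model: (1 - 2q) * target + q / 2."""
    return (1 - 2 * q) * target_distribution(a, b, x, y) + q / 2


def correlator(x, y, q=0.0):
    """Pr(a = b | xy) - Pr(a != b | xy)."""
    return sum((-1) ** (a ^ b) * noisy_distribution(a, b, x, y, q)
               for a in (0, 1) for b in (0, 1))


def chsh(q=0.0):
    """CHSH value from A0, A1, B1, B2, with the sign choice that is maximal here."""
    return (correlator(0, 1, q) - correlator(0, 2, q)
            + correlator(1, 1, q) + correlator(1, 2, q))


def qber(q=0.0):
    """Probability that the key-generating measurements A0, B0 disagree."""
    return noisy_distribution(0, 1, 0, 0, q) + noisy_distribution(1, 0, 0, 0, q)
```

Under the assumed model, `chsh(q)` scales as (1 − 2q) · 2√2 while `qber(q)` equals q, so a single parameter controls both the Bell violation and the error rate.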

The final case is a simple CHSH-maximizing setup, where both parties only have two measurements (this is similar to (b), but without the QBER-minimizing measurement for Bob). If we apply Theorem 1 for this case, our approach improves the threshold from q ≈ 3.6% to q ≈ 6.7%. Also, if we instead optimize the measurements for robustness against depolarizing noise, this threshold can be increased to q ≈ 7.6% (these optimized measurements correspond to measurements in the x-z plane at angles \({\theta }_{{A}_{0}}=0\), \({\theta }_{{A}_{1}}\approx 4.50\), \({\theta }_{{B}_{0}}\approx 3.61\), and \({\theta }_{{B}_{1}}\approx 5.39\) from the z-axis).

However, it is important to note that in case (c), Theorem 2 could be applied instead to yield a noise tolerance bound of q ≈ 7.7%, or q ≈ 9.1% with optimized measurements11. These values are higher than those obtained above by applying our approach to case (c) with Theorem 1. This gives strong evidence that the sufficient condition in Theorem 1 is not a necessary one, because our approach should yield threshold values close to the optimal ones that could be obtained based only on that sufficient condition. (In principle, there may still be a gap between the true fidelity values and the bounds we computed; however, we consider this somewhat unlikely; see Section “Creating An SDP Algorithm That Minimizes The Fidelity”.) Furthermore, we remark that it also suggests that the states ρE∣00, ρE∣11 that minimize the fidelity in this scenario cannot be pure. This is because in11, the critical inequalities used in the proof of Theorem 1 are all saturated (in the large-n limit, at least) if those states are pure, indicating that the resulting sufficient condition should be basically ‘tight’ (i.e. a necessary condition) in this case. Since our results indicate that the sufficient condition is not a necessary one, this implies that the relevant states ρE∣00, ρE∣11 cannot be pure.

Given the above observations, we now conjecture what might be a necessary condition for security of the repetition-code protocol, to serve as a counterpart to Theorem 1.

Conjectured necessary condition for secret key distillation

While our result in this section can be stated in terms of the fidelity, we note that the reasoning holds for any distinguishability measure g(ρ, σ) that has the following properties:

The first line states that it is symmetric, the second that it is multiplicative across tensor products, and the last two lines correspond to the Fuchs–van de Graaf inequality in the case of the fidelity. An example of another distinguishability measure that satisfies these properties is the pretty-good fidelity30,31,32, defined as \({{{{\rm{F}}}}}_{{{{\rm{pg}}}}}(\rho ,\sigma ):={{\mathrm{Tr}}}\,\left[\sqrt{\rho }\sqrt{\sigma }\right]\). To keep our result more general, we shall first present it in terms of any such measure g, and discuss at the end of this section which choices yield better bounds. (In fact, we will only need (10)–(12) for this section. We list (13) as well because it can be used when studying sufficient conditions; see Section “Proof of Proposition 3”.)

For any such g, we shall show that under a particular assumption, the condition

is necessary for (1) to hold. Specifically, the assumption is that given some state and measurements compatible with the observed statistics, Eve can produce some other state and measurements (with the same measurement-outcome probability distribution) that have the same value of g(ρE∣00, ρE∣11), but with ρE∣01 = ρE∣10. This assumption seems reasonable because ρE∣01 = ρE∣10 describes a situation where Eve is unable to distinguish the cases where Alice and Bob’s outcomes are 01 versus 10, i.e. in some sense this appears to be a ‘suboptimal’ attack by Eve. (It might seem that this could be trivially satisfied by having Eve erase her side-information conditioned on Alice and Bob obtaining different outcomes. However, for Eve to do so, she would need to know when Alice and Bob obtain different outcomes, and it is not clear that this is always possible for the states in Eve’s optimal attacks in DIQKD scenarios. This property does hold for the QKD protocols studied in14,15, and they use it as part of the proof that their condition is also necessary).

To prove that (14) is in fact necessary (given the stated assumption), we show that if it is not satisfied, then regardless of the choice of block size in the repetition-code protocol, in each block Eve can always produce a classical bit C'' such that

which in turn implies (as noted in 14, using the results for binary symmetric channels in 18) that the repetition-code protocol as described above (i.e. with only one-way error correction from Alice to Bob after the initial blockwise ‘distillation’ procedure) cannot achieve positive asymptotic keyrate. To prove that Eve can indeed do this, we first derive an ‘intermediate’ implication (we give the proof in Section “Proof of Proposition 3”, based on the arguments in11,14,15):

Proposition 1

Let g be a function satisfying (10)–(12). If Eve’s side-information satisfies ρE∣01 = ρE∣10 and

then for all n, Eve can use the information available to her (i.e. E, M, and D = 1) to construct a classical binary-valued guess C″ for the bit C that satisfies

We now observe that the following relations hold (the first by Fano’s inequality, the second by a straightforward calculation using the fact that the bits C,C' have uniform marginal distributions in the accepted blocks):

where h2 is the binary entropy function. With this we find that Eve can indeed produce a bit C'' such that (15) holds, thereby concluding the proof.

If it could be shown that ρE∣01 = ρE∣10 is in fact always possible to achieve for Eve without compromising g(ρE∣00, ρE∣11) or the post-measurement probability distribution, then (14) would genuinely be a necessary condition for security. It could then be used to find upper bounds for noise tolerance of the repetition-code protocol.

Regarding specific choices of the measure g, note that the pretty-good fidelity and the fidelity are related by F(ρ, σ) ≥ Fpg(ρ, σ) ≥ F(ρ, σ)2 31, with the first inequality being saturated for commuting states and the second inequality for pure states. In particular, the F(ρ, σ) ≥ Fpg(ρ, σ) side of the inequality implies that when aiming to find upper bounds on noise tolerance of this protocol using the condition (14), it is always better to consider pretty-good fidelity rather than fidelity (since all states satisfying the condition (14) with g = Fpg also must satisfy it with g = F, but not vice versa). We leave for future work the question of whether there are other more useful choices for the measure g.
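The ordering F(ρ, σ) ≥ Fpg(ρ, σ) ≥ F(ρ, σ)² can be illustrated numerically on small density matrices. This is a minimal sketch with hypothetical helper names; it uses the definitions of the root-fidelity and pretty-good fidelity given in the text and samples random full-rank states purely for illustration.

```python
import numpy as np


def psd_sqrt(rho):
    """Square root of a positive semidefinite Hermitian matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(rho)
    vals = np.clip(vals, 0.0, None)  # remove tiny negative eigenvalues from round-off
    return (vecs * np.sqrt(vals)) @ vecs.conj().T


def root_fidelity(rho, sigma):
    """F(rho, sigma) = || sqrt(rho) sqrt(sigma) ||_1 (sum of singular values)."""
    product = psd_sqrt(rho) @ psd_sqrt(sigma)
    return float(np.linalg.svd(product, compute_uv=False).sum())


def pretty_good_fidelity(rho, sigma):
    """F_pg(rho, sigma) = Tr[ sqrt(rho) sqrt(sigma) ]."""
    return float(np.trace(psd_sqrt(rho) @ psd_sqrt(sigma)).real)


def random_state(dim, rng):
    """Random full-rank density matrix G G^dagger / Tr[G G^dagger]."""
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real
```

For commuting states, such as two diagonal matrices, the two functions return the same value, consistent with the first inequality being saturated in that case.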

Discussion

We discuss several consequences of our findings in this section. With regard to noise tolerances, our results show that by calculating bounds directly via the fidelity, a significant improvement can be achieved over the results based on Theorem 1 in11. This is especially important for the 3-input scenario for Bob, as the previous advantage distillation bound falls well short of the bound for standard one-way error correction (even when only accounting for the QBER and CHSH value)1,9,10. As advantage distillation improves the key rate for device-dependent QKD7,12,13,14,15,16,17, it is expected to behave analogously for the device-independent setting. Our bound of q ≈ 7.0% lies within 0.15 percentage points of the noise threshold for one-way protocols using the CHSH inequality1 and within 0.40 percentage points of those using asymmetric CHSH inequalities9,10, thereby substantially reducing this gap; however, it still does not yield an overall improvement. Again, this suggests that the sufficient condition (2) is in fact not necessary.

This brings us to the question of finding a condition that is both necessary and sufficient for security (of the repetition-code protocol) in the DIQKD setting. Note that while one could view the results of14,15 as stating that condition (2) is both necessary and sufficient in the device-dependent QKD scenarios studied there, there are some subtleties to consider. Namely, that condition could be rewritten in several ways that are equivalent in those QKD scenarios, but not necessarily in DIQKD. For instance, in those QKD scenarios the states ρE∣ab are all pure, which means that \({{{\rm{F}}}}{({\rho }_{E| 00},{\rho }_{E| 11})}^{2}\) could be rewritten as \(1-d{({\rho }_{E| 00},{\rho }_{E| 11})}^{2}\); however, this equivalence may not hold in the DIQKD setting, where those states might not be pure in general. Hence if we think in terms of trying to extend the necessary and sufficient condition in14,15 to DIQKD, we would first need to address the question of finding the ‘right’ way to formulate that condition.

Indeed, our findings raise the question of whether attempting to determine security via F(ρE∣00, ρE∣11) is the right approach at all, because of the following informal argument. First, assuming that the fidelity bounds we obtained were essentially tight, our results indicate that there are scenarios where condition (2) is violated but the repetition-code protocol is still secure (via Theorem 2 instead), implying that it is not a necessary condition. However, if it is not necessary, it is not immediately clear how one might improve upon it. In particular, it seems unlikely that our conjectured necessary condition (14) could also be sufficient—after all, for the device-dependent QKD protocols studied in14,15, it is condition (2) rather than (14) that is both sufficient and necessary. Since a Fuchs–van de Graaf inequality was used to incorporate the fidelity into the security condition and this inequality is most likely the reason that (2) is not necessary, finding a new, completely device-independent approach might necessitate using different inequalities.

A speculative, but interesting, alternative approach could be to instead consider the (non-logarithmic) quantum Chernoff bound33,34,

This is because this measure yields asymptotically tight bounds on the distinguishability of the states ρ⊗n and σ⊗n34, which we might be able to use. However, there is still some work that needs to be done before noise tolerances can be calculated via this method. For example, in contrast to the fidelity, it is still not known whether there exists a measurement that preserves this distinguishability measure (this is the main reason we could construct an SDP for bounding the fidelity). Moreover, a security condition for the repetition-code protocol will in all likelihood require a measure of distinguishability between states that are not of the form ρ⊗n and σ⊗n, albeit with some similarities (see Section “Proof of Proposition 3”). Hence one would need to investigate which aspects of the proof in34 could be generalized to such states as well. We discuss this further in Section “Proof of Proposition 3”.
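For orientation, the non-logarithmic quantum Chernoff quantity is commonly written as the minimum over s in [0, 1] of Tr[ρ^s σ^(1−s)]. The sketch below evaluates it by a simple grid search; it assumes this standard form and full-rank states, uses hypothetical function names, and returns an upper approximation of the true minimum because only grid points are tested.

```python
import numpy as np


def psd_power(rho, s):
    """rho ** s for a positive semidefinite Hermitian matrix (full rank assumed)."""
    vals, vecs = np.linalg.eigh(rho)
    vals = np.clip(vals, 0.0, None)
    return (vecs * vals ** s) @ vecs.conj().T


def chernoff_quantity(rho, sigma, grid_size=201):
    """Grid approximation of min over s in [0, 1] of Tr[rho^s sigma^(1-s)]."""
    best = np.inf
    for s in np.linspace(0.0, 1.0, grid_size):
        value = np.trace(psd_power(rho, s) @ psd_power(sigma, 1.0 - s)).real
        best = min(best, value)
    return float(best)
```

Since s = 1/2 is one of the grid points, the returned value never exceeds Tr[√ρ√σ], which is the pretty-good fidelity discussed earlier.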

Similar to1, one could conduct a qubit analysis in the hopes that this approach produces fidelity bounds that are ‘strong enough’. We show in35, however, that this is not the case. Moreover, we prove for maximal CHSH violation, i.e. \(S=2\sqrt{2}\), that F(ρE∣00, ρE∣11) = 1 must hold for qubit strategies. As can be seen in35, this will no longer generally be the case in higher dimensions.

In principle, by combining the bounds we computed here with the security proof in11, one could compute lower bounds on the keyrates under the I.I.D. assumption (both in the asymptotic limit and for finite sample sizes, by using the finite version of the quantum asymptotic equipartition property). However, some numerical estimates we performed indicate that the resulting values are very low, even in the asymptotic case. Informally, this is likely because the proof in11 bounds the von Neumann entropy in terms of fidelity with an inequality that is suboptimal in this context, as previously discussed. However, in the case of device-dependent QKD, the keyrates of this protocol are more reasonable, so it is possible that with better proof techniques, the same may hold for DIQKD.

We conclude by mentioning that it should be possible to use our algorithm to obtain keyrate lower bounds for one-way communication protocols as well. This is because the keyrate in such protocols is given by21

and the main challenge in computing this value is finding lower bounds on H(A0∣E) (again, the H(A0∣B0) term is straightforward to handle, e.g. by estimating the QBER). Taking A0 to be symmetrized as previously mentioned, we can apply the following inequality36:

where the states \({\rho }_{E| {a}_{0}}\) refer to conditioning on the outcome A0 only. Hence H(A0∣E) can be bounded in terms of F(ρE∣0, ρE∣1). It should be straightforward to adapt our algorithm to bound such a fidelity expression as well, in which case our approach would also be useful to compute keyrates for non-advantage distillation setups. We aim to investigate this in future work.

Methods

Fidelity for post-measurement tripartite states

Proposition 2

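The fidelity, F(ρE∣00, ρE∣11), of a state of the form (6) is given by

\({\rm{F}}({\rho }_{E| 00},{\rho }_{E| 11})=\mathop{\sum }\limits_{i}\frac{\Pr (i)}{\Pr (00)}\sqrt{\Pr (00| i)\Pr (11| i)}.\)

Proof

It is easily verified that:

\({\rho }_{E| 00}=\mathop{\sum }\limits_{i}\Pr (i| 00)\,\left|i\right\rangle \left\langle i\right|,\qquad {\rho }_{E| 11}=\mathop{\sum }\limits_{i}\Pr (i| 11)\,\left|i\right\rangle \left\langle i\right|.\)

We note that Pr(00) = Pr(11) due to the symmetrization step. Since these states are diagonal in the same basis, we can directly compute the fidelity:

\({\rm{F}}({\rho }_{E| 00},{\rho }_{E| 11})=\mathop{\sum }\limits_{i}\sqrt{\Pr (i| 00)\Pr (i| 11)}=\mathop{\sum }\limits_{i}\sqrt{\frac{\Pr (i)\Pr (00| i)}{\Pr (00)}\cdot \frac{\Pr (i)\Pr (11| i)}{\Pr (11)}}=\mathop{\sum }\limits_{i}\frac{\Pr (i)}{\Pr (00)}\sqrt{\Pr (00| i)\Pr (11| i)},\)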

as claimed.
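The identity behind this proposition can be checked numerically for classical side-information. The sketch below, with hypothetical names, computes the fidelity of the two diagonal conditional states directly from Eve's posterior distributions Pr(i∣00) and Pr(i∣11), and compares it with the weighted form Σi Pr(i) √(Pr(00∣i) Pr(11∣i)) divided by √(Pr(00) Pr(11)), which reduces to division by Pr(00) when the distribution is symmetrized.

```python
import math


def classical_fidelity(p, q):
    """Root fidelity of two commuting (diagonal) states: sum_i sqrt(p_i q_i)."""
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


def fidelity_two_ways(pr_i, pr00_given_i, pr11_given_i):
    """Return (direct, weighted) evaluations of F(rho_E|00, rho_E|11).

    pr_i[i]          -- Pr(i), Eve's outcome distribution
    pr00_given_i[i]  -- Pr(00 | i) for the key-generating measurements
    pr11_given_i[i]  -- Pr(11 | i) for the key-generating measurements
    """
    pr00 = sum(w * a for w, a in zip(pr_i, pr00_given_i))
    pr11 = sum(w * b for w, b in zip(pr_i, pr11_given_i))
    # Bayes' rule: Pr(i | ab) = Pr(i) Pr(ab | i) / Pr(ab).
    post00 = [w * a / pr00 for w, a in zip(pr_i, pr00_given_i)]
    post11 = [w * b / pr11 for w, b in zip(pr_i, pr11_given_i)]
    direct = classical_fidelity(post00, post11)
    weighted = sum(w * math.sqrt(a * b)
                   for w, a, b in zip(pr_i, pr00_given_i, pr11_given_i))
    return direct, weighted / math.sqrt(pr00 * pr11)
```

Calling `fidelity_two_ways([0.5, 0.3, 0.2], [0.4, 0.1, 0.45], [0.3, 0.35, 0.2])` returns two values that agree up to floating-point error, for any valid choice of inputs.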

Approximating the fidelity with polytope hyperplanes

Our goal in this section will be to find a lower bound on \(\sqrt{\Pr (00| i)\Pr (11| i)}\) that can be written as a pointwise minimum of affine functions (of pi). Note that this function depends only on Pr(00∣i) and Pr(11∣i). (We need to consider both Pr(00∣i) and Pr(11∣i) without assuming they are equal, as we cannot assume the distributions conditioned on i are still symmetrized. This is because Eve’s measurement takes place after the symmetrization step, and does not have to respect the symmetry.) Hence for the purposes of this section, we shall focus on 2-dimensional vectors x, with the implicit understanding that

\({\bf{x}}=({x}_{1},{x}_{2})=\left(\Pr (00| i),\Pr (11| i)\right).\)

An affine function of such a vector x straightforwardly defines a corresponding affine function of pi, by considering the latter to depend only on the terms Pr(00∣i) and Pr(11∣i).

We begin the construction by defining an appropriate lattice of points:

Definition 1

For each \(n\in {\mathbb{N}}\), let \({{{{\mathcal{L}}}}}_{n}\) denote a uniformly spaced grid of \(({2}^{n}+1)\times ({2}^{n}+1)\) points in \({[0,1]}^{2}\), i.e.

\({{\mathcal{L}}}_{n}:=\left\{\left(\frac{j}{{2}^{n}},\frac{k}{{2}^{n}}\right):j,k\in \{0,1,\ldots ,{2}^{n}\}\right\}.\)

For a concave function \(f:{[0,1]}^{2}\to {\mathbb{R}}\), we define the nth-order lattice of f to be the set of \({({2}^{n}+1)}^{2}\) ordered triples (x1, x2, f(x)) such that \(({x}_{1},{x}_{2})\in {{{{\mathcal{L}}}}}_{n}\).

(The above definition naturally generalizes to higher-dimensional arrays.)

Proposition 3

Let \({{{{\mathscr{P}}}}}_{n}\) denote the convex hull of the union of the nth order lattice of \(f({{{\bf{x}}}}):= \sqrt{{x}_{1}{x}_{2}}\) and the nth order lattice of g(x) ≔ 0. Then \({{{{\mathscr{P}}}}}_{n}\) is a convex polytope that lies on or beneath the graph of the function \(f({{{\bf{x}}}})=\sqrt{{x}_{1}{x}_{2}}\).

Proof

Step 1 : Show that the \(2\cdot {({2}^{n}+1)}^{2}\) lattice points lie on or beneath the graph of f(x).

The lattice points of f(x) automatically lie on the function graph as desired. Also, since f(x) is always non-negative, the lattice points of g(x) also lie on or beneath the function graph.

Step 2 : Show that the convex hull of the union of both lattices lies on or beneath the graph of f(x).

All points in the convex hull are a convex sum of the lattice points. As f(x) is a concave function, such convex sums must lie on or beneath the graph of f(x) as well.

We can use \({{{{\mathscr{P}}}}}_{n}\) to construct the desired lower bound on f(x), as follows. As we shall formally prove in Proposition 7, this process is basically constructing the upper envelope of \({{{{\mathscr{P}}}}}_{n}\) (i.e. the function whose graph is the ‘upper surface’ of \({{{{\mathscr{P}}}}}_{n}\); this is formally defined by (37) below).

We first transform \({{{{\mathscr{P}}}}}_{n}\) to its facet representation37, i.e. the description of the polytope by its facet-defining half-spaces. This gives us a set of inequalities described by parameters \({\{({a}_{j},{b}_{j},{c}_{j},{d}_{j})\}}_{j}\), such that \(({x}_{1},{x}_{2},{x}_{3})\in {{{{\mathscr{P}}}}}_{n}\) if and only if

\({a}_{j}{x}_{1}+{b}_{j}{x}_{2}+{c}_{j}{x}_{3}\le {d}_{j}\quad \text{for all }j.\qquad (31)\)

To only retrieve the facets that will be used to approximate f(x), i.e. the facets that describe the upper envelope of the polytope we constructed, keep only the facets for which cj > 0. Geometrically, this corresponds to facets such that the normal vector (directed outwards from the polytope) has a vertical component that points upwards.

In our case, this means we remove the facets

\(-{x}_{3}\le 0,\qquad {x}_{1}\le 1,\qquad {x}_{2}\le 1,\)

which correspond to a lower horizontal facet and two vertical facets, respectively. (For more general concave f, there would be more vertical facets to remove, but the subsequent analysis still holds as it is based only on the fact that we keep exactly the facets with cj > 0.) Let \({{{\mathcal{S}}}}\) denote the set of indices of the remaining facets. For each \(j\in {{{\mathcal{S}}}}\), we define a corresponding affine function,

\({h}_{j}({{{\bf{x}}}}):= \frac{{d}_{j}-{a}_{j}{x}_{1}-{b}_{j}{x}_{2}}{{c}_{j}},\)

and use these to define a function (denoted fn) that is meant to bound f(x):

\({f}_{n}({{{\bf{x}}}}):= \mathop{\min }\limits_{j\in {{{\mathcal{S}}}}}{h}_{j}({{{\bf{x}}}}).\qquad (36)\)

We verify that the above procedure indeed produces the upper envelope of \({{{{\mathscr{P}}}}}_{n}\):

Proposition 4

For all \(n\in {\mathbb{N}}\) and \({{{\bf{x}}}}\in {\left[0,1\right]}^{2}\), fn as defined above satisfies

\({f}_{n}({{{\bf{x}}}})=\max \left\{{x}_{3}:({x}_{1},{x}_{2},{x}_{3})\in {{{{\mathscr{P}}}}}_{n}\right\}.\qquad (37)\)

Proof

We first remark that it is indeed valid to write the expression (37) as a maximum rather than a supremum, because by construction of \({{{{\mathscr{P}}}}}_{n}\), the feasible set in (37) is non-empty (for \({{{\bf{x}}}}\in {\left[0,1\right]}^{2}\)) and compact.

To prove the desired equality, we start by considering a fixed \({{{\bf{x}}}}\in {\left[0,1\right]}^{2}\), and arguing that the expression (37) is in fact equal to

\(\max \left\{{x}_{3}:{a}_{j}{x}_{1}+{b}_{j}{x}_{2}+{c}_{j}{x}_{3}\le {d}_{j}\ \text{for all }j\text{ with }{c}_{j} \,>\, 0\right\},\qquad (38)\)

where the values aj, bj, cj, dj are from the facet inequalities (31). This is because \({{{{\mathscr{P}}}}}_{n}\) is exactly the set of points which satisfy the facet inequalities (31) for all j, so the only difference between (37) and (38) is that the latter maximization has omitted the facet inequalities such that cj≤0. Removing these inequalities does not change the maximum value, by the following argument. The inequalities with cj = 0 are independent of x3, so either they are satisfied for all x3 or for no x3; however, as previously noted the feasible set of (37) is non-empty (for \({{{\bf{x}}}}\in {\left[0,1\right]}^{2}\)), so the former must be the case. This implies that removing them does not change the maximum value. As for the inequalities with cj < 0, notice that they are lower bounds on x3, which means that removing them also does not change the maximum value (as long as the original maximization (37) is feasible, which it indeed is as noted previously). Thus the expressions (37) and (38) are equal for any \({{{\bf{x}}}}\in {\left[0,1\right]}^{2}\).

It remains to show that the original definition (36) of fn is equal to (38) (when treating the latter as a function of x on the same domain). To do so, we show that they have the same subgraph. The subgraph of (36) is

\(\begin{array}{rcl}\left\{({{{\bf{x}}}},{x}_{3}):{x}_{3}\le \mathop{\min }\limits_{j\in {{{\mathcal{S}}}}}{h}_{j}({{{\bf{x}}}})\right\}&=&\left\{({{{\bf{x}}}},{x}_{3}):{x}_{3}\le {h}_{j}({{{\bf{x}}}})\ \forall j\in {{{\mathcal{S}}}}\right\}\\ &=&\left\{({{{\bf{x}}}},{x}_{3}):{a}_{j}{x}_{1}+{b}_{j}{x}_{2}+{c}_{j}{x}_{3}\le {d}_{j}\ \forall j\in {{{\mathcal{S}}}}\right\},\end{array}\)

using the fact that cj > 0 for all \(j\in {{{\mathcal{S}}}}\). (To be precise, in those expressions x should be restricted to the function domain, but the argument at this step holds regardless of whether we take the domain to be \({\left[0,1\right]}^{2}\) or \({{\mathbb{R}}}^{2}\).) The last line is the subgraph of (38), so indeed the functions are equal.
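
The construction of fn can be sketched numerically as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: a brute-force search over triples of lattice points stands in for a dedicated vertex-to-facet conversion routine, facets are stored as tuples (a, b, c, d) meaning a·x1 + b·x2 + c·x3 ≤ d (a sign convention assumed here), and every facet is rescaled so that c = 1. Only the lattice points of f are scanned, since the lattice points of g lie on or below them and therefore cannot touch a facet with c > 0 anywhere that f is positive.

```python
import itertools
import math

def f(x1, x2):
    return math.sqrt(x1 * x2)

def lattice(n):
    m = 2 ** n
    return [(j / m, k / m) for j in range(m + 1) for k in range(m + 1)]

def upper_facets(n, tol=1e-9):
    """Brute-force the facets (a, b, c, d) with c > 0 of the polytope P_n."""
    pts = [(x1, x2, f(x1, x2)) for (x1, x2) in lattice(n)]
    facets = set()
    for p, q, r in itertools.combinations(pts, 3):
        u = (q[0] - p[0], q[1] - p[1], q[2] - p[2])
        v = (r[0] - p[0], r[1] - p[1], r[2] - p[2])
        # Normal vector of the plane through p, q, r (cross product u x v).
        a = u[1] * v[2] - u[2] * v[1]
        b = u[2] * v[0] - u[0] * v[2]
        c = u[0] * v[1] - u[1] * v[0]
        if abs(c) < tol:
            continue  # degenerate triple or vertical plane: not an upper facet
        a, b = a / c, b / c  # rescale so that c = 1 (normal points upwards)
        d = a * p[0] + b * p[1] + p[2]
        # Keep the plane only if every lattice point lies on or below it.
        if all(a * s[0] + b * s[1] + s[2] <= d + tol for s in pts):
            facets.add((round(a, 9), round(b, 9), 1.0, round(d, 9)))
    return sorted(facets)

def f_n(facets, x1, x2):
    """Pointwise minimum of h_j(x) = (d - a*x1 - b*x2) / c over the kept facets."""
    return min((d - a * x1 - b * x2) / c for (a, b, c, d) in facets)

facets = upper_facets(2)
# Largest gap between f and its lower bound f_2 on a finer test grid.
gap = max(f(x1, x2) - f_n(facets, x1, x2) for (x1, x2) in lattice(5))
print(len(facets), gap)
```

The brute-force search scales poorly with n and is meant only to make the definitions tangible; a proper convex-hull routine would be the natural choice for anything beyond small lattices.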

With the formula (37), we can prove some intuitive properties of fn, which will be useful in subsequent arguments:

Proposition 5

...

with fn(x) = f(x) whenever \({{{\bf{x}}}}\in {{{{\mathcal{L}}}}}_{n}\).

Proof

We showed in Proposition 3 that \({{{{\mathscr{P}}}}}_{n}\) lies on or below the graph of f. Hence (42) follows immediately from the formula (37).

As for the equality condition, we note that for all \({{{\bf{x}}}}\in {{{{\mathcal{L}}}}}_{n}\) we have that (x, f(x)) lies in \({{{{\mathscr{P}}}}}_{n}\) (by construction). Hence (37) implies fn(x) ≥ f(x), which implies they must be equal since the reverse inequality (42) holds in general.

Proposition 6

For all \(n\in {\mathbb{N}}\) and \({{{\bf{x}}}}\in {\left[0,1\right]}^{2}\), \({f}_{n}({{{\bf{x}}}})\le {f}_{n+1}({{{\bf{x}}}})\).

Proof

Increasing n by one does not remove any lattice points; it only adds points by halving the intervals in both directions. Thus we have \({{{{\mathscr{P}}}}}_{n}\subseteq {{{{\mathscr{P}}}}}_{n+1}\), which yields the desired inequality via (37).
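
The nesting of the grids used in this argument is easy to confirm with exact rational arithmetic. The following is a small sketch (the helper name `lattice` is an assumption made for illustration):

```python
from fractions import Fraction

def lattice(n):
    """Grid L_n as a set of exact rational points in [0, 1]^2."""
    m = 2 ** n
    return {(Fraction(j, m), Fraction(k, m))
            for j in range(m + 1) for k in range(m + 1)}

for n in range(1, 4):
    coarse, fine = lattice(n), lattice(n + 1)
    # Every point of L_n reappears in L_{n+1}; refinement only adds points.
    assert coarse <= fine
    print(n, len(coarse), len(fine))
```

Since the vertices generating the polytope for n are a subset of those for n + 1, the convex hulls are nested in the same way.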

We now show the sequence fn indeed converges uniformly to f, so it yields arbitrarily tight bounds. The main intuition is that fn forms a monotone sequence of uniformly continuous functions that have the same value as f on an increasingly fine grid.

Proposition 7

As n → ∞, fn converges uniformly to f.

Proof

We note that three conditions must hold:

The first statement just says that one can create an arbitrarily fine grid \({{{{\mathcal{L}}}}}_{n}\). The other two statements follow from the fact that continuous functions on compact sets are automatically uniformly continuous.

To show uniform convergence, we would need to prove that

\(\forall \epsilon \,>\, 0,\ \exists n\in {\mathbb{N}}\ \text{such that}\ \forall {n}^{\prime}\,\ge \,n,\ \forall {{{\bf{x}}}}\in {\left[0,1\right]}^{2}:\quad | f({{{\bf{x}}}})-{f}_{{n}^{\prime}}({{{\bf{x}}}})| \,<\, \epsilon .\)

To prove this, consider any ϵ > 0, and choose n by the following procedure: set ϵ1 < ϵ/2 and ϵ2 < ϵ/2, and take some corresponding δ1, δ2 according to (45)–(46). Then set \(\delta \, < \,\min ({\delta }_{1},{\delta }_{2})\), and take a corresponding \(n\in {\mathbb{N}}\) according to (44). This choice of n has the desired property: for all \({n}^{\prime}\,\ge \,n\) and for all \({{{\bf{x}}}}\in {\left[0,1\right]}^{2}\), (44) ensures that there exists some \({{{\bf{y}}}}\in {{{{\mathcal{L}}}}}_{n}\) satisfying ∣x − y∣ < δ, hence

where the first line follows from Propositions 5 and 6, while the third line follows from Proposition 5.
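
To build intuition for the uniform convergence, the following sketch looks at a one-dimensional analogue rather than the two-dimensional construction itself: for the concave function sqrt(x) on [0, 1], the upper envelope of the hull of the dyadic grid points is simply the piecewise-linear interpolant. The function names are illustrative assumptions. The largest gap sits in the first grid cell, where the slope of the square root is unbounded, yet it still shrinks to zero as the grid is refined.

```python
import math

def interpolant(n, x):
    """Piecewise-linear interpolation of sqrt on the grid {k / 2**n}."""
    m = 2 ** n
    k = min(int(x * m), m - 1)      # index of the grid cell containing x
    left, right = k / m, (k + 1) / m
    t = (x - left) * m              # position of x inside the cell, in [0, 1]
    return (1 - t) * math.sqrt(left) + t * math.sqrt(right)

def sup_gap(n, refine=6):
    """Largest gap sqrt(x) - interpolant(n, x) on a grid 2**refine times finer."""
    m = 2 ** (n + refine)
    return max(math.sqrt(k / m) - interpolant(n, k / m) for k in range(m + 1))

gaps = [sup_gap(n) for n in range(1, 7)]
print(gaps)  # a decreasing sequence
```

In this analogue the gap at grid spacing h is attained at x = h/4 and equals sqrt(h)/4, so halving the spacing reduces the worst-case error by a factor of sqrt(2).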

Creating an SDP algorithm that minimizes the fidelity

Without loss of generality, we ignore the factor 1/Pr(00) in the fidelity expression, as it is a positive constant (for a given distribution Pr(ab∣xy)). We consider the functions f and fn defined in the previous section, but as previously discussed, we now view them as functions of pi (though with dependence only on the Pr(00∣i), Pr(11∣i) terms), i.e. so we have \(f({{{{\bf{p}}}}}^{i})=\sqrt{\Pr (00| i)\Pr (11| i)}\) and analogously for fn. Since fn is a lower bound on f, our optimization problem (after dropping the 1/Pr(00) factor) is clearly lower bounded by the following:

\(\begin{array}{ll}\mathop{\inf }\limits_{\Pr (i),\,{{{{\bf{p}}}}}^{i}}&\sum\limits_{i}\Pr (i)\,{f}_{n}({{{{\bf{p}}}}}^{i})\\ \text{s.t.}&\sum\limits_{i}\Pr (i)\,{{{{\bf{p}}}}}^{i}={{{\bf{p}}}},\\ &{{{{\bf{p}}}}}^{i}\in {{{{\mathcal{Q}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\ \text{for all }i,\\ &\Pr (i)\ \text{is a probability distribution}.\end{array}\qquad (52)\)

We can show that this lower bound converges uniformly to the original problem (8) as n → ∞, using our previous results about convergence of fn:

Proposition 8

As n → ∞, the optimal value of (52) converges uniformly to that of (8) (rescaled by the constant factor of 1/Pr(00)).

Proof

We denote the solutions to the original and approximate optimization problems by f⋆(p) and \({f}_{n}^{\star }({{{\bf{p}}}})\), respectively. For all \({{{\bf{p}}}}\in {{{{\mathcal{Q}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\), for all \(n\in {\mathbb{N}}\), and for all ϵ1 > 0, there exists a probability distribution Pr(i) and a set of quantum realizable probability distributions pi that satisfy the optimization constraints, such that

As fn(pi) converges uniformly to f(pi), for all ϵ2 > 0, there exists an n such that for all \({n}^{\prime}\,\ge \,n\) and all \({{{{\bf{p}}}}}^{i}\in {{{{\mathcal{Q}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\),

\(| f({{{{\bf{p}}}}}^{i})-{f}_{{n}^{\prime}}({{{{\bf{p}}}}}^{i})| \,<\, {\epsilon }_{2}.\)

If one chooses ϵ1 + ϵ2 < ϵ, then

as desired.

We now describe how to bound (52) using SDPs.

Proposition 9

(52) can be solved via an SDP hierarchy.

Proof

To reduce the sum to a bounded number of terms, we adopt the approach from24,25. Specifically, consider any feasible point of the optimization (52), i.e. some feasible values for pi and Pr(i). Following the previous section, let \({{{\mathcal{S}}}}\) denote the indices of the affine functions used to define fn.

Partition the summation domain of i into subsets \({\{{{{{\mathcal{R}}}}}_{j}\}}_{j\in {{{\mathcal{S}}}}}\), such that \(i\in {{{{\mathcal{R}}}}}_{j}\) implies the minimum in the definition (36) of fn(pi) is attained by the index j. In other words, \(i\in {{{{\mathcal{R}}}}}_{j}\) implies fn(pi) = hj(pi). (Geometrically speaking, we are partitioning the terms based on which facet of \({{{{\mathscr{P}}}}}_{n}\) they lie on.) Let us define

\({\tilde{{{{\rm{P}}}}}}_{j}:= \sum\limits_{i\in {{{{\mathcal{R}}}}}_{j}}\Pr (i),\)

\({\tilde{{{{\bf{p}}}}}}^{j}:= \frac{{\sum }_{i\in {{{{\mathcal{R}}}}}_{j}}\Pr (i)\,{{{{\bf{p}}}}}^{i}}{{\tilde{{{{\rm{P}}}}}}_{j}}.\qquad (59)\)

Note that the choice of partition may not be unique, e.g. if the feasible point being considered has a pi term where the minimum in the definition (36) is attained by more than one \(j\in {{{\mathcal{S}}}}\). However, this nonuniqueness is not a problem; any partition with the specified property suffices. Also, some \({{{{\mathcal{R}}}}}_{j}\) may be empty, but this is not a problem either; one should simply select an arbitrary distribution \({\tilde{{{{\bf{p}}}}}}^{j}\in {{{{\mathcal{Q}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\) instead of using (59) (since the denominator is zero if \({{{{\mathcal{R}}}}}_{j}\) is empty).

Then we can rewrite

\(\begin{array}{rcl}\sum\limits_{i}\Pr (i)\,{f}_{n}({{{{\bf{p}}}}}^{i})&=&\sum\limits_{j\in {{{\mathcal{S}}}}}\sum\limits_{i\in {{{{\mathcal{R}}}}}_{j}}\Pr (i)\,{h}_{j}({{{{\bf{p}}}}}^{i})\\ &=&\sum\limits_{j\in {{{\mathcal{S}}}}}{\tilde{{{{\rm{P}}}}}}_{j}\sum\limits_{i\in {{{{\mathcal{R}}}}}_{j}}\frac{\Pr (i)}{{\tilde{{{{\rm{P}}}}}}_{j}}\,{h}_{j}({{{{\bf{p}}}}}^{i})\\ &=&\sum\limits_{j\in {{{\mathcal{S}}}}}{\tilde{{{{\rm{P}}}}}}_{j}\,{h}_{j}({\tilde{{{{\bf{p}}}}}}^{j}),\qquad (62)\end{array}\)

where in the third line we used the fact that hj is affine and \({\{\Pr (i)/{\tilde{{{{\rm{P}}}}}}_{j}\}}_{i}\) forms a normalized probability distribution over \(i\in {{{{\mathcal{R}}}}}_{j}\).
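
The merging step can be checked numerically on a toy instance. In the sketch below, all names and numbers are hypothetical: three tangent planes of sqrt(x1·x2) stand in for the affine functions hj, and random two-dimensional points stand in for the relevant entries of the distributions. The terms are grouped by the index attaining the minimum, each group is replaced by its weighted average, and the objective value is unchanged.

```python
import random

# Hypothetical affine upper bounds h_j(x) = alpha*x1 + beta*x2 + gamma on
# sqrt(x1*x2): tangent planes at (1, 1), (1, 4) and (4, 1).
H = [(0.5, 0.5, 0.0), (1.0, 0.25, 0.0), (0.25, 1.0, 0.0)]

def h(j, x):
    alpha, beta, gamma = H[j]
    return alpha * x[0] + beta * x[1] + gamma

def merge(weights, points):
    """Group terms by the index attaining min_j h_j, then average each group."""
    groups = {}
    for w, x in zip(weights, points):
        j = min(range(len(H)), key=lambda idx: h(idx, x))
        groups.setdefault(j, []).append((w, x))
    merged = {}
    for j, terms in groups.items():
        total = sum(w for w, _ in terms)                      # merged weight
        mean = tuple(sum(w * x[k] for w, x in terms) / total  # merged point
                     for k in range(2))
        merged[j] = (total, mean)
    return merged

random.seed(0)
raw = [random.random() for _ in range(50)]
weights = [r / sum(raw) for r in raw]
points = [(random.random(), random.random()) for _ in range(50)]

original = sum(w * min(h(j, x) for j in range(len(H)))
               for w, x in zip(weights, points))
merged = merge(weights, points)
reduced = sum(P * h(j, x) for j, (P, x) in merged.items())
print(original, reduced)  # the two values agree up to rounding
```

Fifty terms collapse to at most three, one per affine function, which is the reason the reformulated objective has a known finite number of terms.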

Observe that \({\tilde{{{{\bf{p}}}}}}^{j}\in {{{{\mathcal{Q}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\) (by convexity of \({{{{\mathcal{Q}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\)), and that \({\tilde{{{{\rm{P}}}}}}_{j}\) is a valid probability distribution (over \(j\in {{{\mathcal{S}}}}\)) since the sets \({{{{\mathcal{R}}}}}_{j}\) partition the sum over i. Together with the expression (62), this implies that if we replace the original objective function with

\(\sum\limits_{i\in {{{\mathcal{S}}}}}\Pr (i)\,{h}_{i}({{{{\bf{p}}}}}^{i}),\qquad (63)\)

the value of the optimization will not increase, since every feasible point of the original optimization yields another feasible point with the same objective value but in the form (63). In other words, by rewriting the objective function in the form (63), we have essentially taken pi and Pr(i) to be the \({\tilde{{{{\bf{p}}}}}}^{j}\) and \({\tilde{{{{\rm{P}}}}}}_{j}\) we constructed above. Furthermore, since we constructed fn via (36),

\({f}_{n}({{{{\bf{p}}}}}^{i})\le {h}_{i}({{{{\bf{p}}}}}^{i})\)

holds for all \({{{{\bf{p}}}}}^{i}\in {{{{\mathcal{Q}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\). Thus (63) is a natural upper bound on our previous objective function in (52), so replacing the latter with the former will not decrease the optimal value either. In summary, we can replace the objective function with (63) without changing the optimal value, which is useful because it is the sum of a (known) finite number of terms; also, we no longer need to address the minimization in the definition of fn.

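However, since hi(pi)Pr(i) is a product of affine functions of the optimization variables, (63) is still not an affine function. To deal with this, we consider subnormalized probability distributions, i.e. the terms in the distribution sum up to a value in [0, 1] instead of having to sum to 1 (here we mean summing over (a, b) for each choice of (x, y); also, we impose that all (x, y) have the same normalization factor). In our case, we scale the probability distributions pi by the scaling factor Pr(i), i.e. we define new variables \({\breve{{{{\bf{p}}}}}}^{i}=\Pr (i){{{{\bf{p}}}}}^{i}\). To verify that the objective function is affine in these new variables, we show that each term in the summation is affine. Writing \({h}_{i}({{{{\bf{p}}}}}^{i})\) in the form \({a}_{i}+{{{{\bf{a}}}}}_{i}\cdot {{{{\bf{p}}}}}^{i}\) for some scalar \({a}_{i}\) and vector \({{{{\bf{a}}}}}_{i}\), we have

\(\begin{array}{rcl}{h}_{i}({{{{\bf{p}}}}}^{i})\Pr (i)&=&\left({a}_{i}+{{{{\bf{a}}}}}_{i}\cdot {{{{\bf{p}}}}}^{i}\right)\Pr (i)\\ &=&{a}_{i}\Pr (i)+{{{{\bf{a}}}}}_{i}\cdot {\breve{{{{\bf{p}}}}}}^{i}\\ &=&{a}_{i}\sum\limits_{ab}\Pr (ab| 00i)\Pr (i)+{{{{\bf{a}}}}}_{i}\cdot {\breve{{{{\bf{p}}}}}}^{i}\qquad (67)\\ &=&\left({a}_{i}{{{\bf{z}}}}+{{{{\bf{a}}}}}_{i}\right)\cdot {\breve{{{{\bf{p}}}}}}^{i},\qquad (68)\end{array}\)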

where z is a vector, which contains only zeroes and ones, that specifies the terms summed over in (67) (this is possible since each Pr(ab∣00i)Pr(i) term is equal to an element of \({\breve{{{{\bf{p}}}}}}^{i}\); also, note that the choice to use the input pair xy = 00 at that step is arbitrary and any other pair would suffice). This is indeed affine (in fact linear) in \({\breve{{{{\bf{p}}}}}}^{i}\). As for the constraints, observe that the first constraint is linear in \({\breve{{{{\bf{p}}}}}}^{i}\). Also, as long as p is normalized, we can replace the second and third constraints by a single constraint \({\breve{{{{\bf{p}}}}}}^{i}\in {\breve{{{{\mathcal{Q}}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\), where \({\breve{{{{\mathcal{Q}}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\) denotes subnormalized distributions compatible with quantum theory (and with a common normalization factor for all input pairs (x, y)). This is because when p is normalized, the first constraint implicitly imposes a normalization condition on the variables \({\breve{{{{\bf{p}}}}}}^{i}\) that subsumes the original third constraint.
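
The change of variables can be verified on a small example. The sketch below assumes a scenario with binary inputs and outputs, orders the entries of each distribution by the label (a, b, x, y), and uses made-up values for the weight Pr(i) and for the scalar and vector parts of the affine function; none of these choices comes from the paper. The random distribution is normalized but not checked for quantum realizability, which is irrelevant for this purely algebraic identity.

```python
import itertools
import random

KEYS = list(itertools.product((0, 1), repeat=4))  # entries labelled (a, b, x, y)

def random_behaviour(rng):
    """A normalised conditional distribution Pr(ab|xy), as a vector over KEYS."""
    p = {}
    for x, y in itertools.product((0, 1), repeat=2):
        raw = [rng.random() for _ in range(4)]
        for (a, b), r in zip(itertools.product((0, 1), repeat=2), raw):
            p[(a, b, x, y)] = r / sum(raw)
    return [p[key] for key in KEYS]

def dot(u, v):
    return sum(s * t for s, t in zip(u, v))

rng = random.Random(1)
p_i = random_behaviour(rng)
prob_i = 0.3                                 # hypothetical weight Pr(i)
offset = 0.2                                 # hypothetical scalar part of h_i
coeff = [rng.uniform(-1, 1) for _ in KEYS]   # hypothetical vector part of h_i
# z selects the entries with input pair (x, y) = (0, 0).
z = [1.0 if (x, y) == (0, 0) else 0.0 for (a, b, x, y) in KEYS]

breve_p = [prob_i * t for t in p_i]          # subnormalised variable
lhs = prob_i * (offset + dot(coeff, p_i))    # Pr(i) * h_i(p^i)
rhs = dot([offset * s + c for s, c in zip(z, coeff)], breve_p)
print(lhs, rhs)  # equal up to rounding
```

The key point is that z · p̆ recovers the weight Pr(i), so the constant term of the affine function can be absorbed into a linear function of the subnormalized variable.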

In summary, the optimal value of (52) is the same as

\(\begin{array}{ll}\mathop{\inf }\limits_{{\breve{{{{\bf{p}}}}}}^{i}}&\sum\limits_{i\in {{{\mathcal{S}}}}}\left({a}_{i}{{{\bf{z}}}}+{{{{\bf{a}}}}}_{i}\right)\cdot {\breve{{{{\bf{p}}}}}}^{i}\\ \text{s.t.}&\sum\limits_{i\in {{{\mathcal{S}}}}}{\breve{{{{\bf{p}}}}}}^{i}={{{\bf{p}}}},\\ &{\breve{{{{\bf{p}}}}}}^{i}\in {\breve{{{{\mathcal{Q}}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\ \text{for all }i\in {{{\mathcal{S}}}},\end{array}\qquad (69)\)

where \({a}_{i}\), \({{{{\bf{a}}}}}_{i}\) and z are the values described above regarding (68). Finally, we use the fact that there exists an SDP hierarchy for the verification of subnormalized quantum probability distributions \({\breve{{{{\mathcal{Q}}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\)23,24,25. Hence, the entire constrained optimization can be lower-bounded by using this hierarchy of SDP relaxations to impose the \({\breve{{{{\mathcal{Q}}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\) constraint, yielding a sequence of increasingly tight lower bounds on the optimization. Note that each level of the hierarchy yields a certified lower bound, i.e. our results are never an over-estimate of the true minimum of the optimization.

We close this section with some implementation remarks. For Fig. 1a, we used a 4 × 4 lattice to construct the bound fn, and NPA level 2. For Fig. 1b, c, we used an 8 × 8 lattice and NPA level 3 (for the latter case, we found that NPA levels 2 and 4 also gave basically the same results). The SDP runtimes were modest in all cases, ranging from a few seconds to under 15 minutes per data point on the graphs, depending on the size of the scenario.

In principle, there are two ways in which our bounds might not be tight: first, we have replaced f with fn; second, the SDP hierarchy of23 may not have converged to a sufficiently tight bound. We consider the latter to be less of an issue, because this hierarchy typically performs well in situations with few inputs and outputs (for instance in Fig. 1c, which was the main example supporting our reasoning that Theorem 1 may not be a necessary condition). As for the former, we performed some checks by noting that every feasible point of the optimization we solve (namely, (69) with the constraint \({\breve{{{{\bf{p}}}}}}^{i}\in {\breve{{{{\mathcal{Q}}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\) relaxed to the SDP hierarchy) gives us a feasible point of the original optimization (8) (albeit with the constraint \({{{{\bf{p}}}}}^{i}\in {{{{\mathcal{Q}}}}}_{{{{\mathcal{X}}}},{{{\mathcal{Y}}}}}\) relaxed to the SDP hierarchy). We found that for points near the thresholds shown in Fig. 1b, c, the corresponding feasible values in that original optimization were within 0.0003 of the lower bounds we obtained, indicating that the bounds are almost tight. (For Fig. 1a we found a bigger gap of about 0.03 using a 6 × 6 lattice to find feasible points, but this is also not too large).

Note that by applying Carathéodory’s theorem for convex hulls, we can argue the minimum value in the optimization (52) can always be attained by a distribution Pr(i) with at most d + 2 nonzero terms, where d is the dimension of p. This eventually implies that the minimum value in our final optimization (69) can be attained with at most d + 2 of the subnormalized distributions \({\breve{{{{\bf{p}}}}}}^{i}\) being nonzero. In practice, d + 2 is often smaller than the number of affine bounds hi (i.e. \(| {{{\mathcal{S}}}}|\)). Hence if the optimization (69) is too large to solve directly, an alternative approach in principle is to run it for every subset of \({{{\mathcal{S}}}}\) with size d + 2, then take the smallest of the resulting values. This reduces the size of each individual optimization, but comes at the cost of having to run many more of them.
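
The trade-off described here can be quantified with a one-line count. The helper below is a hypothetical illustration, and the example numbers are made up rather than taken from the scenarios in the paper.

```python
from math import comb

def subproblem_count(num_affine, d):
    """Number of subsets of the affine bounds with size d + 2.

    If d + 2 is at least the number of affine bounds, the full problem
    is already small enough and a single optimization suffices.
    """
    size = d + 2
    return 1 if size >= num_affine else comb(num_affine, size)

# Example with made-up sizes: 40 affine bounds and d = 8.
print(subproblem_count(40, 8))
```

Each subproblem involves only d + 2 subnormalized distributions, but the number of subproblems grows combinatorially, so this route is attractive only when memory rather than total runtime is the bottleneck.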

As another point regarding efficiency, note that our construction of fn involves a transformation to the facet representation of \({{{{\mathscr{P}}}}}_{n}\). While this can be quickly implemented when x is 2-dimensional, the transformation may be computationally demanding in high dimensions37. It would be interesting to know whether there are more efficiently computable alternatives.

A natural attempt would be to partition the domain of f into triangles (more generally, simplices) and construct an affine lower bound in each triangle, yielding a piecewise affine lower bound on f. One benefit of this approach is that it may be usable (though not necessarily straightforward) in some cases when f is not concave, whereas our current construction of fn relies heavily on concavity of f. However, it runs into the subtle issue that having fn be a pointwise minimum of affine functions is a stronger condition than simply requiring it to be piecewise affine; in particular, our analysis used the structure in the former. (Note that it is not useful to simply take the pointwise minimum of the affine bounds constructed this way — there can be very large gaps between f and the resulting bound).

Still, it may be possible to adapt our analysis to this case. To sketch a rough outline, we would again aim to partition the sum in (52) into finitely many subsets, but this time by which of the triangles each pi lies in. Transforming to a new feasible point as in (59) (here we would need to use the convexity of the triangles to argue that each \({\tilde{{{{\bf{p}}}}}}^{j}\) still lies within its defining triangle), we should be able to perform a similar analysis to reduce the objective to the form (63), but with the summation index ranging over the triangles in the domain instead. However, to proceed further we would need to constrain each \({\tilde{{{{\bf{p}}}}}}^{j}\) to remain within the corresponding triangle, appearing as additional constraints in (52). Since these constraints can be imposed as affine constraints, the final result should still be solvable using the SDP hierarchy.

Finally, we note that in26, the authors do not convert their optimization to the form (69), but rather to a dual form via a somewhat different argument. A similar argument is possible in principle here (see35), but we choose to present our result in the form (69) since it seems most straightforward for implementation. We thank the authors of26 for clarifications on these different approaches.

Proof of Proposition 3

Proof

After Alice and Bob conduct n key-generating measurements, the resulting classical-classical-quantum tripartite state is of the form

Considering that Alice and Bob only take accepted blocks into account, i.e. D = 1, and Alice sends the message M = m, it is simple to construct the bipartite state ρCE∣M=m∧D=1, which denotes the state that describes both the value of the bit C and Eve’s corresponding side-information. As D = 1 implies that Alice’s and Bob’s measurement devices either output m or \(\overline{{{{\bf{m}}}}}={{{\bf{m}}}}\oplus {{{\bf{1}}}}\), the resulting state is given by

where

denotes Eve’s conditioned side-information11. Moreover, after symmetrization, we get \(\Pr \left({{{\bf{m}}}}{{{\bf{m}}}}\right)=\Pr \left(\overline{{{{\bf{m}}}}}\overline{{{{\bf{m}}}}}\right)=\frac{{\left(1-\epsilon \right)}^{n}}{{2}^{n}}\) and \(\Pr \left({{{\bf{m}}}}\overline{{{{\bf{m}}}}}\right)=\Pr \left(\overline{{{{\bf{m}}}}}{{{\bf{m}}}}\right)=\frac{{\epsilon }^{n}}{{2}^{n}}\), which further simplifies (72) and (73).
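
The block probabilities quoted above translate directly into the probability that Alice's and Bob's blocks are complementary once a block is accepted. The following sketch assumes, as in the text, symmetrized i.i.d. rounds with bit error rate ϵ; the function names are chosen here for illustration.

```python
def block_probabilities(eps, n):
    """Return (Pr(m m), Pr(m m-bar)) for a fixed n-bit string m."""
    same = (1 - eps) ** n / 2 ** n     # equals Pr(m-bar m-bar) as well
    flipped = eps ** n / 2 ** n        # equals Pr(m-bar m) as well
    return same, flipped

def error_given_accept(eps, n):
    """Probability that the two blocks are complementary, given acceptance."""
    same, flipped = block_probabilities(eps, n)
    # Accepted outcomes: two 'same' cases and two 'flipped' cases.
    return 2 * flipped / (2 * same + 2 * flipped)

for n in (1, 2, 5, 10):
    print(n, error_given_accept(0.1, n))
```

The result simplifies to ϵ^n / (ϵ^n + (1 − ϵ)^n), which decays exponentially in the block length n whenever ϵ < 1/2.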

Eve’s ability to correctly guess C therefore depends on the distinguishability of ω0 and ω1. As C, and consequently ωi, is distributed uniformly, we may use the operational interpretation of the trace distance to derive Eve’s optimal guessing probability. The optimal probability of guessing it incorrectly is thus given by

\(\frac{1}{2}\left(1-d({\omega }_{0},{\omega }_{1})\right).\qquad (74)\)

We first consider Bob’s guess. As \(C\,\ne \,{C}^{\prime}\) only if Alice measures m and Bob measures \(\overline{{{{\bf{m}}}}}\) or vice versa,

\(\Pr [C\,\ne \,{C}^{\prime}| {{{\bf{M}}}}={{{\bf{m}}}}\wedge D=1]=\frac{2\Pr \left({{{\bf{m}}}}\overline{{{{\bf{m}}}}}\right)}{2\Pr \left({{{\bf{m}}}}{{{\bf{m}}}}\right)+2\Pr \left({{{\bf{m}}}}\overline{{{{\bf{m}}}}}\right)}=\frac{{\epsilon }^{n}}{{\epsilon }^{n}+{\left(1-\epsilon \right)}^{n}}.\)

We now consider Eve’s guess. By using the reverse triangle inequality of the 1-norm, we can get a lower bound on d(ω0, ω1) in terms of δn:

and substituting this into (74) yields

where to get the second line we used the hypothesis ρE∣01 = ρE∣10 (which implies \({\rho }_{{{{\bf{E}}}}| {{{\bf{m}}}}\overline{{{{\bf{m}}}}}}={\rho }_{{{{\bf{E}}}}| \overline{{{{\bf{m}}}}}{{{\bf{m}}}}}\)). The ‘Fuchs–van de Graaf-type’ inequality (12) then implies that

Moreover, note that we have \(g({\rho }_{{{{\bf{E}}}}| {{{\bf{m}}}}{{{\bf{m}}}}},{\rho }_{{{{\bf{E}}}}| \overline{{{{\bf{m}}}}}\overline{{{{\bf{m}}}}}})=g{({\rho }_{E| 00},{\rho }_{E| 11})}^{n}\) (by applying the I.I.D. assumption together with the multiplicative property (11), followed by the symmetry property (10)). Therefore a sufficient condition for (17) to hold is

We conclude the proof by showing that for all \(n\in {\mathbb{N}}\), (16) is equivalent to (79). Note that the inequality (12) (together with the fact that d(ρ, σ) ≤ 1) implies that g(ρ, σ) is always non-negative. Hence for all \(n\in {\mathbb{N}}\) the inequality (16) is equivalent to

This inequality can be rewritten as

The previous inequality is equivalent to

and dividing both sides by 2 gives (79).

We note that the current gap between the sufficient and necessary conditions can be viewed as arising from the ‘Fuchs–van de Graaf-type’ inequalities (12) and (13). This is because the proof of the sufficient condition (Theorem 1) requires lower bounds on H(C∣EM; D = 1), whereas the conjectured necessary condition needs upper bounds. As noted in the supplemental material for11, the analysis we performed above also serves as an alternative approach for proving Theorem 1 (the main proof in11 instead used the inequality from36 to lower-bound H(C∣EM; D = 1)). The main idea is that H(C∣EM; D = 1) can be lower bounded by the min-entropy, which simply equals \(-\log ((1+d\left({\omega }_{0},{\omega }_{1}\right))/2)\), i.e. the negative logarithm of Eve’s optimal guessing probability. By performing an analysis similar to the above but using the inequality (13) instead of (12), we end up (after some asymptotic analysis) with a sufficient condition for (1) to hold, which turns out to be exactly the same as Theorem 1 (except that since the only properties of g required for this argument are (10)–(13), it would hold with any g satisfying those properties in place of the fidelity F). Note that no assumption is needed on d(ρE∣01, ρE∣10) for this direction of the proof. From this perspective, it appears that the main contribution to the gap is the difference between the bounds (12) and (13), since other steps of the proof have comparatively small effects asymptotically.

However, regarding possible choices of distinguishability measure g in this generalized version of Theorem 1, note that replacing the fidelity in the theorem statement with the pretty-good fidelity yields a worse result, due to the inequality F(ρ, σ) ≥ Fpg(ρ, σ). (The opposite was true for the necessary condition, Proposition 3). The question remains of whether there are choices for the measure g that yield better bounds for the sufficient condition.

Finally, we remark that the above analysis essentially centers around distinguishing ρE∣mm and \({\rho }_{{{{\bf{E}}}}| \overline{{{{\bf{m}}}}}\overline{{{{\bf{m}}}}}}\). Returning to our discussion of the quantum Chernoff bound, we observe that unless M = 0 or M = 1, these states are not of the form ρ⊗n and σ⊗n studied in the quantum Chernoff bound, though there are some structural similarities. (If M were restrained to M = 0 or M = 1, one may consider only M = 0, as M = 1 can be thought of as a relabeling of measurement outcomes). Since M is not restricted to these cases in general, we would need to study whether these other structurally similar states could still be analyzed using the proof techniques for the quantum Chernoff bound.

As another perspective, note that the quantum Chernoff bound in fact satisfies almost all the properties (10)–(13)31. However, instead of the equality (11), it only satisfies the inequality \(g(\rho \otimes {\rho }^{\prime},\sigma \otimes {\sigma }^{\prime})\,\ge \,g(\rho ,\sigma )g({\rho }^{\prime},{\sigma }^{\prime})\). Looking through the proofs described above for the necessary versus sufficient conditions, this means that only the proof of the latter generalizes directly if we choose g = Q. Unfortunately, Theorem 1 with the fidelity simply replaced by the quantum Chernoff bound is a worse result, because the quantities are related by F(ρ, σ) ≥ Q(ρ, σ)33 (similar to the previous situation for pretty-good fidelity). Hence an argument that simply follows the proof structure sketched above with g = Q would not yield a better result than the original Theorem 1 statement based on fidelity; to get better results using the quantum Chernoff bound, one would need a different proof structure.

Data availability

The datasets produced in this work are available from the authors upon reasonable request.

Code availability

The MATLAB code for this paper can be found at the following URL: https://github.com/Thomas0501/Fidelity-Optimization

References

Pironio, S. et al. Device-independent quantum key distribution secure against collective attacks. New J. Phys. 11, 045021 (2009).

Scarani, V. The device-independent outlook on quantum physics (lecture notes on the power of Bell’s theorem). Preprint at https://arxiv.org/abs/1303.3081 (2013).

Arnon-Friedman, R., Dupuis, F., Fawzi, O., Renner, R. & Vidick, T. Practical device-independent quantum cryptography via entropy accumulation. Nat. Commun. 9, 459 (2018).

Bennett, C. H. & Brassard, G. Quantum cryptography: Public key distribution and coin tossing. Theor. Comput. Sci. 560, 7–11 (2014).

Brunner, N., Cavalcanti, D., Pironio, S., Scarani, V. & Wehner, S. Bell nonlocality. Rev. Mod. Phys. 86, 419–478 (2014).

Barrett, J., Colbeck, R. & Kent, A. Memory attacks on device-independent quantum cryptography. Phys. Rev. Lett. 110, 010503 (2013).

Gottesman, D. & Lo, H.-K. Proof of security of quantum key distribution with two-way classical communications. IEEE Trans. Inf. Theory 49, 457–475 (2003).

Ho, M. et al. Noisy preprocessing facilitates a photonic realization of device-independent quantum key distribution. Phys. Rev. Lett. 124, 230502 (2020).

Woodhead, E., Acín, A. & Pironio, S. Device-independent quantum key distribution based on asymmetric CHSH inequalities. Quantum 5, 443 (2021).

Sekatski, P. et al. Device-independent quantum key distribution from generalized CHSH inequalities. Quantum 5, 444 (2021).

Tan, E. Y.-Z., Lim, C. C.-W. & Renner, R. Advantage distillation for device-independent quantum key distribution. Phys. Rev. Lett. 124, 020502 (2020).

Chau, H. F. Practical scheme to share a secret key through a quantum channel with a 27.6% bit error rate. Phys. Rev. A 66, 060302 (2002).

Renner, R. Security of quantum key distribution. Int. J. Quantum Inf. 06, 1–127 (2008).

Bae, J. & Acín, A. Key distillation from quantum channels using two-way communication protocols. Phys. Rev. A 75, 012334 (2007).

Acín, A. et al. Secrecy properties of quantum channels. Phys. Rev. A 73, 012327 (2006).

Watanabe, S., Matsumoto, R., Uyematsu, T. & Kawano, Y. Key rate of quantum key distribution with hashed two-way classical communication. Phys. Rev. A 76, 032312 (2007).

Khatri, S. & Lütkenhaus, N. Numerical evidence for bound secrecy from two-way postprocessing in quantum key distribution. Phys. Rev. A 95, 042320 (2017).

Maurer, U. M. Secret key agreement by public discussion from common information. IEEE Trans. Inf. Theory 39, 733–742 (1993).

Wolf, S., Information-Theoretically and Computationally Secure Key Agreement in Cryptography. PhD thesis, ETH Zurich, 1999.

Scarani, V. et al. The security of practical quantum key distribution. Rev. Mod. Phys. 81, 1301–1350 (2009).

Devetak, I. & Winter, A. Distillation of secret key and entanglement from quantum states. Proc. Math. Phys. Eng. Sci. 461, 207–235 (2005).

Fuchs, C. A. & Caves, C. M. Mathematical techniques for quantum communication theory. Open Syst. Inf. Dyn. 3, 345–356 (1995).

Navascués, M., Pironio, S. & Acín, A. A convergent hierarchy of semidefinite programs characterizing the set of quantum correlations. New J. Phys. 10, 073013 (2008).

Bancal, J.-D., Sheridan, L. & Scarani, V. More randomness from the same data. New J. Phys. 16, 033011 (2014).

Nieto-Silleras, O., Pironio, S. & Silman, J. Using complete measurement statistics for optimal device-independent randomness evaluation. New J. Phys. 16, 013035 (2014).

Himbeeck, T. V. & Pironio, S. Correlations and randomness generation based on energy constraints. Preprint at https://arxiv.org/abs/1905.09117 (2019).

Fuchs, C. & van de Graaf, J. Cryptographic distinguishability measures for quantum-mechanical states. IEEE Trans. Inf. Theory 45, 1216–1227 (1999).

Nielsen M. A. & Chuang I. L. Quantum Computation and Quantum Information: 10th Anniversary Edition. Cambridge University Press, 2010.

Mayers, D. & Yao, A. Self testing quantum apparatus. Quantum Info. Comput. 4, 273–286 (2004).

Luo, S. & Zhang, Q. Informational distance on quantum-state space. Phys. Rev. A 69, 032106 (2004).

Audenaert, K. M. R. Comparisons between quantum state distinguishability measures. Quantum Info. Comput. 14, 31–38 (2014).

Iten, R., Renes, J. M. & Sutter, D. Pretty good measures in quantum information theory. IEEE Trans. Inf. Theory 63, 1270–1279 (2017).

Audenaert, K. M. R. et al. Discriminating states: the quantum chernoff bound. Phys. Rev. Lett. 98, 160501 (2007).

Nussbaum, M. & Szkoła, A. The Chernoff lower bound for symmetric quantum hypothesis testing. Ann. Stat. 37, 1040–1057 (2009).

Hahn T. Fidelity Bounds for Device-Independent Advantage Distillation, Master’s thesis, ETH Zurich, 2021.

Roga, W., Fannes, M. & Życzkowski, K. Universal Bounds for the Holevo Quantity, Coherent Information, and the Jensen-Shannon Divergence. Phys. Rev. Lett. 105, 040505 (2010).

Toth, C. D., O’Rourke, J., & Goodman, J. E (Eds.). Handbook of Discrete and Computational Geometry (3rd ed.). Chapman and Hall/CRC, 2017, ch. 15, pp. 383–414. https://doi.org/10.1201/9781315119601.

Johnston, N. QETLAB: A MATLAB toolbox for quantum entanglement, version 0.9. http://qetlab.com, (2016).

Grant, M. & Boyd, S. CVX: Matlab Software for Disciplined Convex Programming, version 2.1. http://cvxr.com/cvx, (2014).

Grant, M. & Boyd, S. Graph implementations for nonsmooth convex programs, in Recent Advances in Learning and Control (V. Blondel, S. Boyd, and H. Kimura, eds.), Lecture Notes in Control and Information Sciences, pp. 95–110, Springer-Verlag Berlin Heidelberg, 2008. https://web.stanford.edu/~boyd/papers/graph_dcp.html.

Acknowledgements

We thank Thomas van Himbeeck for very helpful details regarding the SDP reduction in26, and Renato Renner for feedback on this work. We also thank Raban Iten, Joseph M. Renes, and Marco Tomamichel for useful discussions regarding the pretty-good fidelity and quantum Chernoff bound. This project was funded by the Swiss National Science Foundation via the National Center for Competence in Research for Quantum Science and Technology (QSIT), the Air Force Office of Scientific Research (AFOSR) via grant FA9550-19-1-0202, and the QuantERA project eDICT. Part of this work was done while Thomas Hahn worked at the Weizmann Institute of Science under Rotem Arnon-Friedman. During his stay, he was supported by a research grant from the Marshall and Arlene Bennett Family Research Program. The computations were performed with the NPAHierarchy function in QETLAB38, using the CVX package39,40.

Author information

Contributions

Both authors contributed to the theoretical analysis and algorithm development. T. H. implemented the algorithm and generated the corresponding data.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hahn, T.A., Tan, E.YZ. Fidelity bounds for device-independent advantage distillation. npj Quantum Inf 8, 145 (2022). https://doi.org/10.1038/s41534-022-00635-y
