Abstract

The method of classical shadows proposed by Huang, Kueng, and Preskill heralds remarkable opportunities for quantum estimation with limited measurements. Yet its relationship to established quantum tomographic approaches, particularly those based on likelihood models, remains unclear. In this article, we investigate classical shadows through the lens of Bayesian mean estimation (BME). In direct tests on numerical data, BME is found to attain significantly lower error on average, but classical shadows prove remarkably more accurate in specific situations—such as high-fidelity ground truth states—which are improbable in a fully uniform Hilbert space. We then introduce an observable-oriented pseudo-likelihood that successfully emulates the dimension-independence and state-specific optimality of classical shadows, but within a Bayesian framework that ensures only physical states. Our research reveals how classical shadows effect important departures from conventional thinking in quantum state estimation, as well as the utility of Bayesian methods for uncovering and formalizing statistical assumptions.


Introduction

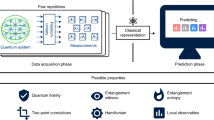

Measurement and characterization of quantum systems comprise a long-standing problem in quantum information science1. However, the exponential scaling of Hilbert space dimension with the number of qubits makes full characterization extremely challenging, inspiring a plethora of approaches designed to estimate properties of quantum states with as few measurements as possible, such as compressed sensing2,3, adaptive tomography4,5,6, matrix product state formulations7, permutationally invariant tomography8,9, and neural networks10,11,12. Very recently, a groundbreaking approach known as classical shadows was proposed and analyzed13. Building on and simplifying ideas from “shadow tomography”14, the classical shadow was shown to provide accurate predictions of observables with a fixed number of measurements, including simulated examples for quantum systems in excess of 100 qubits13. Astonishingly simple, the classical shadow is formed by collecting the results of random measurements on a repeatedly prepared input state, and inverting them through an appropriate virtual quantum channel.

However, several features of the classical shadow remain enigmatic, including its highly nonphysical nature, optimality with respect to alternative cost functions, and relationship to more conventional likelihood-based tomographic techniques. One such method, Bayesian mean estimation (BME)15, provides a conceptually straightforward path to estimate a quantum state given measured data, making use of prior knowledge, and providing meaningful error bars for any experimental conditions. BME appears particularly well-suited for contextualizing classical shadows, since it returns a principled estimate under any number of measurements (even zero), and is optimal in terms of minimizing average-squared error16.

In this work, we directly compare the estimates of classical shadows and BME for identical simulated datasets. For particular observables with relatively improbable values from the perspective of BME, shadow is found to reach the ground truth with significantly fewer measurements. However, after properly reformulating the problem under test for consistency with the Bayesian prior, the situation reverses, with BME returning estimates possessing lower error on average. In the latter portion of our investigation, we seek to construct a BME model emulating the key features of the classical shadow, but with positive semidefinite states as support. While complicated by the shadow’s nonphysical nature, we ultimately propose an observable-oriented pseudo-likelihood that rates quantum states by their observable values with respect to those of shadow. Our pseudo-likelihood successfully mimics the dimension independence of shadow, with the advantage of delivering entirely physical estimates for any number of measurements.

Results

Problem formulation

For our analysis, we invoke the setup of the original classical shadow proposal13. Consider a D-dimensional Hilbert space occupied by a ground truth quantum state ρg that can be repeatedly prepared. On each preparation m, ρg is subjected to a randomly chosen D × D unitary Um and one measurement is performed in the computational basis, leaving result \(\left|{b}_{m}\right\rangle\). Defining \(\left|{\psi }_{m}\right\rangle ={U}_{m}^{\dagger }\left|{b}_{m}\right\rangle\), the classical snapshot associated with measurement m follows as \({{\mathcal{M}}}^{-1}(\left|{\psi }_{m}\right\rangle \left\langle {\psi }_{m}\right|)\), where \({\mathcal{M}}(\cdot )\) is the quantum channel defined by averaging over all possible unitaries and outcomes.

We assume the Um are drawn from the set of D × D Haar-random unitaries, in which case \({{\mathcal{M}}}^{-1}(\left|{\psi }_{m}\right\rangle \left\langle {\psi }_{m}\right|)=(D+1)\left|{\psi }_{m}\right\rangle \left\langle {\psi }_{m}\right|-{I}_{D}\), with ID the D × D identity matrix13. (This channel holds for the more restricted class of random Cliffords as well17,18.) Averaging over M measurements yields the shadow estimator

\[{\rho }_{s}=\frac{1}{M}\mathop{\sum }\limits_{m=1}^{M}\left[(D+1)\left|{\psi }_{m}\right\rangle \left\langle {\psi }_{m}\right|-{I}_{D}\right].\tag{1}\]

(In what follows, the phrases “classical shadow,” “shadow estimator,” and simply “shadow” refer interchangeably to this estimator, as well as the procedure more generally.) In this form, the simplicity of ρs is evident: it is merely a scaled and recentered average of all observed outcomes. Interestingly, though, ρs is in general not positive semidefinite; for M < D, ρs possesses at least D − M eigenvalues equal to −1. Accordingly, in the targeted regime for classical shadows of M ≪ D, ρs is highly nonphysical. Understanding the impact of the shadow estimator’s negativity is a central goal of the present study. Finally, defining λ as the expectation of the observable Λ (\(\lambda ={\rm{Tr}}\rho {{\Lambda }}\)), the shadow estimate thereof follows as

\[{\lambda }^{(s)}={\rm{Tr}}\,{\rho }_{s}{{\Lambda }},\tag{2}\]

to be compared to the ground truth \({\lambda }^{(g)}={\rm{Tr}}\,{\rho }_{g}{{\Lambda }}\).
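
The construction of the shadow estimator can be sketched numerically. The following is a minimal sketch, assuming NumPy and a small dimension D. The helper names (`haar_unitary`, `sample_outcomes`, `shadow_estimator`) are illustrative and do not come from ref. 13, and the Haar unitaries are drawn with the standard QR-plus-phase-correction recipe, which is an assumption about implementation rather than a description of the original simulations.

```python
import numpy as np

def haar_unitary(dim, rng):
    """Draw a Haar-random unitary via QR of a complex Gaussian matrix."""
    z = (rng.standard_normal((dim, dim))
         + 1j * rng.standard_normal((dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))  # fix column phases so the measure is Haar

def sample_outcomes(rho_g, num_meas, rng):
    """Simulate M preparations: random U, computational-basis outcome b,
    and store |psi_m> = U^dagger |b_m> as row m of the returned array."""
    dim = rho_g.shape[0]
    psis = np.empty((num_meas, dim), dtype=complex)
    for m in range(num_meas):
        u = haar_unitary(dim, rng)
        # Born probabilities <b| U rho U^dagger |b>
        probs = np.real(np.einsum('bi,ij,bj->b', u, rho_g, u.conj()))
        probs = np.clip(probs, 0.0, None)
        b = rng.choice(dim, p=probs / probs.sum())
        psis[m] = u[b, :].conj()  # column b of U^dagger
    return psis

def shadow_estimator(psis):
    """rho_s = (1/M) sum_m [(D+1)|psi_m><psi_m| - I_D]."""
    num_meas, dim = psis.shape
    avg = psis.T @ psis.conj() / num_meas  # (1/M) sum_m |psi_m><psi_m|
    return (dim + 1) * avg - np.eye(dim)

def shadow_expectation(rho_s, observable):
    """lambda^(s) = Tr(rho_s Lambda)."""
    return float(np.real(np.trace(rho_s @ observable)))
```

In this sketch `shadow_estimator` always returns a Hermitian, unit-trace matrix, and for M < D the rank deficiency of the averaged projectors leaves at least D − M eigenvalues equal to −1, which is the nonphysicality discussed in the text.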

As an aside, we note that ref. 13 employed an additional statistical technique, “median of means,” to reduce the impact of outliers: the M outcomes are partitioned into K subsets, the mean is computed within each subset, and the median of these K means is taken as the estimate λ(s). In the interests of simplicity and ease of comparison, we focus on K = 1 in Eq. (1). We expect the benefits of selecting K > 1 would prove similar in both the shadow and Bayesian cases19, but work on this is beyond the scope of the present investigation.
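
For readers unfamiliar with median of means, a minimal sketch follows, assuming NumPy. The function names are hypothetical. Each single-measurement value is taken to be the expectation of Λ on one snapshot, \((D+1)\left\langle {\psi }_{m}\right|{{\Lambda }}\left|{\psi }_{m}\right\rangle -{\rm{Tr}}\,{{\Lambda }}\), which follows from the Haar-random inverse channel stated above.

```python
import numpy as np

def snapshot_values(psis, observable):
    """Per-measurement estimates Tr[Lambda M^{-1}(|psi_m><psi_m|)].

    psis: array of shape (M, D), row m holding |psi_m>.
    """
    dim = psis.shape[1]
    quad = np.real(np.einsum('mi,ij,mj->m', psis.conj(), observable, psis))
    return (dim + 1) * quad - np.real(np.trace(observable))

def median_of_means(values, num_groups):
    """Split the values into num_groups consecutive subsets, average each,
    and return the median of the subset means.

    num_groups must not exceed len(values); num_groups = 1 gives the plain mean.
    """
    groups = np.array_split(np.asarray(values, dtype=float), num_groups)
    return float(np.median([g.mean() for g in groups]))
```

With `num_groups=1` the function reduces to the ordinary average, which is the K = 1 case used throughout this article.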

In the Bayesian paradigm, the same set of measurement outcomes \({\mathcal{D}}=\{\left|{\psi }_{1}\right\rangle ,\left|{\psi }_{2}\right\rangle ,...,\left|{\psi }_{M}\right\rangle \}\) is related to a possible density matrix ρ(x) via a likelihood consisting of the product of probabilities set by Born’s rule:

\[{L}_{{\mathcal{D}}}({\bf{x}})=\mathop{\prod }\limits_{m=1}^{M}\left\langle {\psi }_{m}\right|\rho ({\bf{x}})\left|{\psi }_{m}\right\rangle ,\tag{3}\]

that is, \({L}_{{\mathcal{D}}}({\bf{x}})\propto \Pr ({\mathcal{D}}| \rho )\)—the probability of receiving the dataset \({\mathcal{D}}\) given quantum state ρ. Some prior distribution π0(x) is also assumed, defined for parameters x such that ρ(x) is always physical: trace-1, Hermitian, and positive semidefinite. Then the posterior describing the distribution of x given the observed data \({\mathcal{D}}\) ensues from Bayes’ rule:

\[\pi ({\bf{x}})=\frac{1}{{\mathcal{Z}}}{L}_{{\mathcal{D}}}({\bf{x}}){\pi }_{0}({\bf{x}}),\qquad {\mathcal{Z}}=\int {\mathrm{d}}{\bf{x}}\ {L}_{{\mathcal{D}}}({\bf{x}}){\pi }_{0}({\bf{x}}).\tag{4}\]

Note that the randomness of the chosen unitaries Um does not enter the Bayesian model; only the outcomes \(\left|{\psi }_{m}\right\rangle\) play a role. The selection of unitary Um is independent of the (unknown) density matrix, i.e., Pr(Um = U∣ρ) = Pr(Um = U); thus any probabilities would cancel out through the normalization factor \({\mathcal{Z}}\) in Eq. (4). Intuitively, in the Bayesian view, the experimenter knows the unitaries exactly post-experiment, regardless of how they were chosen, so imposing uncertainty on them in the estimation process proves superfluous. Consequently, while the uncertainty of BME depends strongly on the variety of measurements chosen, the theory does not, a conspicuous departure from shadow where the distribution of Um enters directly through the inverted quantum channel \({{\mathcal{M}}}^{-1}(\cdot )\).

Formally, the posterior distribution in Eq. (4) completes the Bayesian model. From this, one can estimate any function of ρ(x), including uncertainties, since the full probability distribution π(x) is available. The ability to quantify uncertainty in a single, consistent framework is an extremely attractive feature of Bayesian methods in general. In the present work, however, the goal is to compare Bayesian tomography with the classical shadow—which is itself a point estimator—so we focus on BME specifically, which for some observable Λ returns the point estimator defined as

\[\begin{aligned}{\lambda }^{(B)}&=\int {\mathrm{d}}{\bf{x}}\ \pi ({\bf{x}})\,{\rm{Tr}}\,\rho ({\bf{x}}){{\Lambda }}\\ &={\rm{Tr}}\left[\left(\int {\mathrm{d}}{\bf{x}}\ \pi ({\bf{x}})\rho ({\bf{x}})\right){{\Lambda }}\right]\\ &={\rm{Tr}}\,{\rho }_{B}{{\Lambda }},\end{aligned}\tag{5}\]

where the last two lines follow, respectively, from the linearity of the trace operation and defining the Bayesian mean ρB = ∫dx π(x)ρ(x). This convenient simplification, in which the Bayesian mean of a quantity is simply its value at ρB, holds for linear functions of ρ, which includes all quantum observables and which we focus on in this article. In addition, selection of the mean as our particular Bayesian point estimator for comparison is motivated by the fact that it minimizes the mean-squared error (MSE) averaged over all possible states and outcomes. Specifically, λ(B) defined above is the function of \({\mathcal{D}}\) such that

\[{\lambda }^{(B)}=\mathop{\arg \min }\limits_{\hat{\lambda }}\int {\mathrm{d}}{\bf{x}}\int {\mathrm{d}}{\mathcal{D}}\ \pi ({\bf{x}},{\mathcal{D}}){\left|\hat{\lambda }({\mathcal{D}})-{\rm{Tr}}\,\rho ({\bf{x}}){{\Lambda }}\right|}^{2},\tag{6}\]

with \(\pi ({\bf{x}},{\mathcal{D}})\) the joint distribution over data and parameters16. This optimality is nonasymptotic, holding for any number or collection of unitaries {U1, U2, ..., UM}. Considering the widely different expressions for ρs [Eq. (1)] and ρB [Eq. (5)], we found it remarkable just how well ρs performed in ref. 13 in light of BME’s optimality in Eq. (6); it was this feature which initially inspired us to develop a thorough comparison between shadow and BME. So while focusing on the Bayesian mean point estimator admittedly underutilizes the full depth of Bayesian methods, it provides a direct path for side-by-side comparison with classical shadows, along with a quantitative optimality condition.

In general, comparing the performance of estimators derived from classical (frequentist) statistics—like ρs—with those from Bayesian methods proves tricky business, since they view uncertainty in functionally different ways. Therefore, we adopt a pragmatic view which aligns with the interests of experimentalists: perform experiments, compute the associated shadow and BME estimators, and calculate their error with respect to actual values. While the final step is not always possible in practice, it is in numerical simulation, where the ground truth ρg is known exactly. Doing so enables us to illuminate the advantages and disadvantages of both approaches on equal footing. We employ the approach described in the “Methods” section for obtaining simulated datasets \({\mathcal{D}}\).

Picture 1: fixed ground truth

As our first benchmark, we compare the performance of ρs and ρB in estimating three rank-1 observables, of which fidelities and entanglement witnesses form an important and experimentally relevant subset. Specifically, we consider ground truth \({\rho }_{g}=\left|0\right\rangle \left\langle 0\right|\) and observables \({{{\Lambda }}}_{n}=\left|{\phi }_{n}\right\rangle \left\langle {\phi }_{n}\right|\) (n = 0, 1, 2) where

These possess ground truth values equally spaced within the physically allowed range for trace-1, rank-1 observables: \({\lambda }_{0}^{(g)}=1\), \({\lambda }_{1}^{(g)}=\frac{1}{2}\), and \({\lambda }_{2}^{(g)}=0\). The shadow estimator is readily obtained from Eq. (1), so we compute ρs for all M ∈ {1, 2, ..., 1000}, where M defines the set containing the first M measurements: \({\mathcal{D}}=\{\left|{\psi }_{m}\right\rangle ;\ m=1,2,...,M\}\).

On the other hand, ρB requires evaluation of the high-dimensional integral ∫dx π(x)ρ(x). To that end, we summon Markov chain Monte Carlo (MCMC) methods, several of which have been explored in the context of quantum state estimation, including Metropolis–Hastings15,20, Hamiltonian Monte Carlo21, sequential Monte Carlo (SMC)22, and slice sampling23,24; we select the preconditioned Crank–Nicolson (pCN) algorithm25 applied to quantum state estimation in ref. 26. Finally, because of our assumed pure state ground truth, we take as prior all pure states \(\rho =\left|\psi \right\rangle \left\langle \psi \right|\) uniformly distributed on the complex D-dimensional unit hypersphere. Numerically, the parameters x reduce to a D-dimensional complex column vector, so we have \({\pi }_{0}({\bf{x}})\propto \exp \left(-\frac{1}{2}{{\bf{x}}}^{\dagger }{\bf{x}}\right)\), \(\rho ({\bf{x}})=\frac{{\bf{x}}{{\bf{x}}}^{\dagger }}{| {\bf{x}}{| }^{2}}\), and \({\mathrm{d}}{\bf{x}}=\mathop{\prod }\nolimits_{l = 1}^{D}{\mathrm{d}}({\rm{Re}}{x}_{l}){\mathrm{d}}({\rm{Im}}{x}_{l})\) with xl denoting a single component of x.
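
To make the sampling step concrete, here is a minimal sketch of a pCN chain for the pure-state model just described, assuming NumPy. It is a bare-bones illustration only: the function names and the step size `beta` are hypothetical, there is no burn-in, thinning, or convergence check, and it should not be read as the implementation of refs. 25 or 26. The pCN proposal \({\bf{x}}^{\prime} =\sqrt{1-{\beta }^{2}}\,{\bf{x}}+\beta {\boldsymbol{\xi }}\), with ξ drawn from the Gaussian prior, leaves the prior invariant, so the acceptance probability involves only the likelihood ratio.

```python
import numpy as np

def complex_normal(dim, rng):
    """Draw x with Re and Im parts ~ N(0, 1), i.e. pi_0 ∝ exp(-x†x / 2)."""
    return rng.standard_normal(dim) + 1j * rng.standard_normal(dim)

def log_likelihood(x, psis):
    """log prod_m <psi_m|rho(x)|psi_m> with rho(x) = x x† / |x|^2."""
    overlaps = np.abs(psis.conj() @ x) ** 2 / np.vdot(x, x).real
    return float(np.sum(np.log(overlaps)))

def pcn_bayesian_mean(psis, dim, num_steps, beta, rng):
    """Run a pCN chain and return (running estimate of rho_B, acceptance rate)."""
    x = complex_normal(dim, rng)
    logl = log_likelihood(x, psis)
    rho_sum = np.zeros((dim, dim), dtype=complex)
    accepted = 0
    for _ in range(num_steps):
        # Prior-preserving proposal
        proposal = np.sqrt(1.0 - beta ** 2) * x + beta * complex_normal(dim, rng)
        logl_new = log_likelihood(proposal, psis)
        # Accept with probability min(1, L(proposal) / L(x))
        if np.log(rng.random()) < logl_new - logl:
            x, logl = proposal, logl_new
            accepted += 1
        rho_sum += np.outer(x, x.conj()) / np.vdot(x, x).real
    return rho_sum / num_steps, accepted / num_steps
```

Because every sample ρ(x) is a normalized projector, the averaged matrix is positive semidefinite with unit trace regardless of how many measurements are supplied, including none.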

The use of pure states is not central to the BME formalism whatsoever, but does permit us to simulate in higher dimensions than otherwise possible. With pure states only, our parameterization entails 2D real numbers, compared to \(2{D}^{2}+D\) for mixed states. As an example, for D = 256, the pure state prior, and likelihood of Eq. (3), each MCMC chain takes ~10 min to converge on our desktop computer, which for the 400 settings involved in Fig. 1b amounts to ~2.5 days. Based on previous studies26, the mixed state version would therefore have been completely unfeasible at this dimension with our computational resources, likely taking weeks (or more) to complete. (Incorporating some of the methods suggested in ref. 26 in further research, such as embedding within SMC samplers and parallelization, should permit the extension to significantly larger D and mixed states.) With pure states, then, we can focus more directly on dimensional scaling and the statistics from many trials.

For each trial, we perform BME for eight collections of measurements M ∈ {1, 50, 100, 200, 400, 600, 800, 1000}. We keep \(R={2}^{10}\) samples from each chain of length RT, where we select the thinning factor T empirically to obtain convergence. (See “Methods” for more details on the MCMC chains, including convergence metrics and marginal distributions.) Figure 1 plots the estimates for all 50 trials obtained by both shadow and BME for D = 32 (Fig. 1a) and D = 256 (Fig. 1b). A thinning value of \(T={2}^{9}\) (\(T={2}^{12}\)) is used for D = 32 (D = 256). Each column corresponds to a particular expectation value λn; the bottom row shows the MSE with respect to the ground truth averaged over all trials, defined as \({\left\langle | {\lambda }_{n}^{(\cdot )}-{\lambda }_{n}^{(g)}{| }^{2}\right\rangle }_{{\rm{trials}}}\) with ⋅ = s for the shadow and ⋅ = B for BME. The classical shadows show wide variation for low M, including highly nonphysical estimates (\({\lambda }_{n}^{(s)}>1\) or < 0), but they converge to ground truth values rapidly, with nearly identical rates for all observables and dimensions. This is confirmed quantitatively in the MSE curves that attain values of \(\sim {10}^{-3}\) by M = 1000 for all cases.

The behavior proves vastly different for BME. While physical estimates are always returned, the number of measurements needed to reach the ground truth varies strongly both with observable λn and with dimension D. Intriguingly, shadow shows significantly lower MSE for λ0 and λ1, with the gap widening as D increases. At first glance, this presents a paradox: Eq. (6) implies that \({\lambda }_{n}^{(B)}\) should possess the lowest possible MSE for any n and M, and yet \({\lambda }_{n}^{(s)}\) convincingly surpasses it in these cases. Yet this dilemma can be resolved by studying the prior π0(x). When the Bayesian model assigns equal a priori weights to all possible states (a sensible choice for an uninformative prior), this by implication makes observable values such as \({\lambda }_{0}^{(g)}=1\) highly unlikely, since only one state in the domain attains this. On the other hand, expectations for any rank-1 projector Λ on the order of \(\lambda \sim \frac{1}{D}\) are to be expected initially since \(\int {\mathrm{d}}{\bf{x}}\ {\pi }_{0}({\bf{x}}){\rm{Tr}}\,\rho ({\bf{x}}){{\Lambda }}=\frac{1}{D}\). This manifests itself in Fig. 1 in BME’s much lower MSE for λ2, whose ground truth value \({\lambda }_{2}^{(g)}=0\) is much more probable. Thus, by running 50 repeated trials with the same ground truth \({\rho }_{g}=\left|0\right\rangle \left\langle 0\right|\), the situation over which we average does not accurately reflect the uninformative prior; the conditions for BME optimality are not met.
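
The claim that a uniform pure-state prior concentrates rank-1 expectations near 1/D can be checked with a quick Monte Carlo sketch, assuming NumPy. The function name and the threshold argument are illustrative choices. For Haar-random pure states the overlap with a fixed projector follows a Beta(1, D − 1) distribution, so the probability of exceeding a value t is \({(1-t)}^{D-1}\), which is about \(5\times {10}^{-10}\) for t = 0.5 and D = 32.

```python
import numpy as np

def prior_overlap_statistics(dim, num_samples, threshold, rng):
    """Sample pure states uniformly and return
    (mean of <phi|rho|phi>, fraction of samples with overlap > threshold)
    for the fixed projector |phi> = |0>."""
    x = (rng.standard_normal((num_samples, dim))
         + 1j * rng.standard_normal((num_samples, dim)))
    states = x / np.linalg.norm(x, axis=1, keepdims=True)
    overlaps = np.abs(states[:, 0]) ** 2  # |<0|psi>|^2
    return float(overlaps.mean()), float(np.mean(overlaps > threshold))
```

The sample mean should sit close to 1/D, while essentially no prior draws land anywhere near λ = 1, which is why a ground truth with \({\lambda }_{0}^{(g)}=1\) is so improbable under this prior.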

Picture 2: random ground truth

To accurately reflect uninformative prior knowledge, we therefore must prepare random ground truth states in our simulations. To do so, we leverage the equivalence between (i) randomly prepared input states with a fixed observable—the situation of interest—and (ii) random selection of an observable for a fixed input. Consider the expectation of observable Λ, where the quantum state is rotated by some random unitary U:

\[{\rm{Tr}}\,\left(U\rho {U}^{\dagger }\right){{\Lambda }}={\rm{Tr}}\,\rho \left({U}^{\dagger }{{\Lambda }}U\right).\tag{8}\]

Thus, one can emulate the effect of a randomized state by randomly rotating the observable and evaluating it on a fixed state. Practically speaking, we are free to employ the same simulated datasets and estimators ρs and ρB above, but select at random a different projector \({{\Lambda }}=\left|\phi \right\rangle \left\langle \phi \right|\) for each trial. This is equivalent to performing all trials with a random ground truth, but a fixed observable. We call this randomized evaluation “Picture 2” to distinguish it from the fixed ground truth case above (Picture 1).
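
The equivalence between the two pictures is just the cyclic property of the trace, and it can be confirmed numerically. The sketch below assumes NumPy; `haar_unitary` and `both_pictures` are hypothetical helper names, and the random projector is generated from a normalized complex Gaussian vector.

```python
import numpy as np

def haar_unitary(dim, rng):
    """Haar-random unitary via QR of a complex Gaussian matrix."""
    z = (rng.standard_normal((dim, dim))
         + 1j * rng.standard_normal((dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))

def both_pictures(dim, rng):
    """Return (Tr[(U rho U†) Lambda], Tr[rho (U† Lambda U)]) for
    rho = |0><0|, a random rank-1 projector Lambda, and a random U."""
    rho = np.zeros((dim, dim), dtype=complex)
    rho[0, 0] = 1.0
    phi = rng.standard_normal(dim) + 1j * rng.standard_normal(dim)
    phi /= np.linalg.norm(phi)
    lam = np.outer(phi, phi.conj())
    u = haar_unitary(dim, rng)
    rotated_state = np.trace(u @ rho @ u.conj().T @ lam).real
    rotated_observable = np.trace(rho @ u.conj().T @ lam @ u).real
    return float(rotated_state), float(rotated_observable)
```

The two returned numbers agree to floating-point precision, so rotating the observable for a fixed dataset is interchangeable with rotating the ground truth.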

Results appear in Fig. 2 for (a) D = 32 and (b) D = 256. The first column plots the ground truth value λ(g) for each trial, the next three columns plot the shadow and BME estimates for increasing numbers of measurements, and the final column presents the MSE with respect to the ground truth. Now BME returns much more accurate estimates than shadow on average, and the paradox regarding Bayesian optimality is solved: the Bayesian mean gives the lowest MSE as long as the prior accurately reflects the true uncertainty of the system under test. Accordingly, this BME study clarifies an underlying assumption in selecting observables in Picture 1: being able to “guess” an observable with such high overlap to the ground truth suggests that one is not really operating under the neutrality implied by a uniform prior; an informative prior would more accurately reflect the situation.

This observation brings to light an interesting question of motivation in a given quantum experiment. In the sense of ensuring that any estimate is adequately justified by the data, the idea of “baking in” a prior favoring some subset of quantum states is undesirable. And yet, in many situations the researcher does have strong beliefs—or at least hopefulness—about the state being prepared, and wants to verify this by computing an observable, such as fidelity, where it is desired that λ(g) ~ 1. In this case, one wishes to validate such high values quickly with few measurements, but likely does not care so much about how well the procedure can estimate the ground truth when it is low (e.g., when \({\lambda }^{(g)} \sim \frac{1}{D}\)), since this situation suggests a poorly prepared state anyway. Accordingly, the felt cost is stronger when error is higher for situations with \({\lambda }^{(g)}\gg \frac{1}{D}\) than when \({\lambda }^{(g)} \sim \frac{1}{D}\), which is not captured by the standard MSE, as expressed in Eq. (6). And as shown in our tests here, it is precisely these improbable situations wherein shadow excels over BME. Thus, our simulations reveal one surprising reason classical shadows are so powerful: they perform well within those subspaces of the entire Hilbert space that are of interest to a high-fidelity system.

Emulating classical shadows with BME

The dimension independence and rapid convergence of classical shadows for cases of interest indicate the value of a Bayesian version with similar features, both to gain further insight into shadow itself and to improve thereon by ensuring only physically acceptable states. A simple approach for custom Bayesian models, gaining traction in “probably approximately correct” (PAC) learning27, proposes use of a pseudo-likelihood that rates a prospective state’s suitability through a cost function, instead of a full likelihood based on a physical model. In quantum state tomography in particular, quadratic costs of the form \(\parallel \rho -\tilde{\rho }{\parallel }_{F}^{2}\) have been explored20,26, where \(\tilde{\rho }\) signifies some point estimator and \(\parallel A{\parallel }_{F}=\sqrt{{\rm{Tr}}{A}^{\dagger }A}\) the Frobenius norm. Therefore, to obtain a physical state with properties similar to ρs, we first suggest the pseudo-likelihood

\[{L}_{{\mathcal{D}}}({\bf{x}})=\exp \left(-\frac{K}{2}\parallel \rho ({\bf{x}})-{\rho }_{s}{\parallel }_{F}^{2}\right).\tag{9}\]

The constant K establishes the relative weight of prior and likelihood. Previously, we suggested K = M for reasonable uncertainty quantification26; here we consider K = MD to impart dimension independence. (Incidentally, we have found no significant modifications to the results below when testing with K ≫ MD.)

Figure 3a, b shows the BME results obtained for D = 32 and D = 256, respectively, where we again return to Picture 1 with fixed ground truth for all trials. For the tests here, thinning of \(T={2}^{8}\) (\(T={2}^{10}\)) is used for the D = 32 (D = 256) MCMC chains. Compared to the shadow results of Fig. 1, the BME predictions still do not reach ground truth values for λ0 and λ1 efficiently. This proves intriguing, since \(\parallel \rho -{\rho }_{s}{\parallel }_{F}^{2}\) with \(\rho =\left|\psi \right\rangle \left\langle \psi \right|\) is minimized precisely by states for which \(\left\langle \psi | {\rho }_{s}| \psi \right\rangle\) is large. So if \({\lambda }_{0}^{(s)}=\left\langle g| {\rho }_{s}| g\right\rangle \sim 1\) (cf. Fig. 1), it is odd that predictions using a BME value maximizing \(\left\langle \psi | {\rho }_{s}| \psi \right\rangle\) look so different for D = 256. The origin of this discrepancy, however, lies in ρs’s nonphysicality.

Plotting the average overlap between shadow and Bayesian samples (\({\rm{Tr}}\,{\rho }_{B}{\rho }_{s}\)) in Fig. 3c, d, we find that ρB overlaps with ρs more strongly than the ground truth \({\rho }_{g}=\left|g\right\rangle \left\langle g\right|\). Because ρs is not positive semidefinite, \({\rm{Tr}}\,{\rho }_{B}{\rho }_{s}>1\) for all cases examined. Thus, the BME procedure succeeds in finding states with strong overlap to the shadow, but the closest physical state to ρs is not the ground truth, even though \(\left\langle g| {\rho }_{s}| g\right\rangle \sim 1\). Intuitively, this nonphysicality helps explain why observables with highly improbable values from the Bayesian view are estimated so much more efficiently with shadow. For a parameterization over physical states and rank-1 observable Λ, only a single state in the Hilbert space attains λ = 1, and since this represents the maximum value possible for any valid quantum state, it can only be approached from below. On the other hand, a continuum of shadow estimators ρs permit λ = 1, for ρs is constrained only by Hermiticity and unit trace—not positive semidefiniteness. Therefore, the estimate λ(s) can err on either the high or low side (cf. Fig. 1), pulling the shadow more rapidly to the ground truth in these extreme cases.
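
The gap between the ground truth and the closest pure state can be illustrated directly. For a pure state \(\rho =\left|\psi \right\rangle \left\langle \psi \right|\), expanding the norm gives \(\parallel \rho -{\rho }_{s}{\parallel }_{F}^{2}=1-2\left\langle \psi | {\rho }_{s}| \psi \right\rangle +{\rm{Tr}}\,{\rho }_{s}^{2}\), so the minimizer is the eigenvector of ρs with the largest eigenvalue. The sketch below, assuming NumPy and hypothetical helper names, builds ρs for the ground truth \(\left|0\right\rangle\) and compares that top eigenvalue with \(\left\langle g| {\rho }_{s}| g\right\rangle\). It illustrates the geometry only and is not the MCMC procedure used for Fig. 3.

```python
import numpy as np

def haar_unitary(dim, rng):
    """Haar-random unitary via QR of a complex Gaussian matrix."""
    z = (rng.standard_normal((dim, dim))
         + 1j * rng.standard_normal((dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))

def shadow_from_ground_state(dim, num_meas, rng):
    """Shadow estimator rho_s for ground truth |0><0| and M Haar measurements."""
    avg = np.zeros((dim, dim), dtype=complex)
    for _ in range(num_meas):
        u = haar_unitary(dim, rng)
        probs = np.abs(u[:, 0]) ** 2          # Pr(b) = |<b|U|0>|^2
        b = rng.choice(dim, p=probs / probs.sum())
        psi = u[b, :].conj()                  # |psi> = U†|b>
        avg += np.outer(psi, psi.conj())
    return (dim + 1) * avg / num_meas - np.eye(dim)

def closest_pure_state_overlap(rho_s):
    """Return (max over pure states of <psi|rho_s|psi>, <0|rho_s|0>)."""
    evals = np.linalg.eigvalsh(rho_s)         # ascending order
    return float(evals[-1]), float(rho_s[0, 0].real)
```

By the variational principle the first returned value can never be smaller than the second; whenever it exceeds 1, the pure state nearest to ρs has an overlap with ρs that no physical comparison with the ground truth could match.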

This discloses the second central finding of our investigation: the nonphysicality of ρs is not a deficiency, but rather critical to obtaining dimension independence. Thus, the key features of the shadow are not necessarily translated onto physical projections like the BME model here or, for that matter, alternative projected-least-squares approaches28,29. (As an additional check, we performed the algorithm of ref. 28 to determine the closest physical density matrix to ρs, finding results very similar to those in Fig. 3. This indicates that our projection conclusions are not an artifact of the pure state prior, but hold for general mixed states as well.) While strange from the standpoint of the conventional wisdom of maximum likelihood and BME, nonphysical states are actually beneficial for classical shadows.

Deriving a positive semidefinite model emulating classical shadows remains an intriguing question, however, to eliminate unphysical estimates while retaining the favorable scaling features. With projecting directly onto ρs proving unfruitful to this end, we note that, indeed, ρs was never intended to serve as an accurate substitute for the true ρg; instead, it facilitates estimates of observables13. Accordingly, we propose the “observable-oriented pseudo-likelihood”

\[{L}_{{\mathcal{D}}}({\bf{x}})=\exp \left[-\frac{K}{2}\mathop{\sum }\limits_{n}{\left({\rm{Tr}}\,\rho ({\bf{x}}){{{\Lambda }}}_{n}-{\lambda }_{n}^{(s)}\right)}^{2}\right],\tag{10}\]

where we insert the estimates \({\lambda }_{n}^{(s)}\) of N observables from ρs. This formalism ensures only physical values are returned [through the prior π0(x)], and rates the fitness of proposed states through their overlap with respect to shadow’s predictions of observables only. For dimension independence, we again set K = MD and perform BME for all simulated datasets and N = 3 above, thinning to \(T={2}^{10}\) (\(T={2}^{13}\)) for D = 32 (D = 256).

The results follow in Fig. 4. Now BME shows very similar behavior to shadow: the MSE with respect to the ground truth matches shadow results from Fig. 1 closely, though BME still outperforms for λ2. Yet unlike shadow, BME here always gives physically permissible estimates (\({\lambda }_{n}^{(B)}\in [0,1]\)). This pseudo-likelihood therefore attains the goal of a BME model commensurate with classical shadows.

Fig. 4: a Results for D = 32. b Results for D = 256. The MSE values for shadow from Fig. 1 are reproduced for comparison.

Yet it is important to emphasize that this approach depends heavily on the quality of the classical shadow. It refines estimates from the shadow with its positive semidefinite requirement, but it does not do markedly better at estimating the ground truth state—at least for arbitrary observables. As an example, we repeat the inference procedure for an observable-oriented pseudo-likelihood based solely on Λ1, i.e.,

\[{L}_{{\mathcal{D}}}({\bf{x}})=\exp \left[-\frac{K}{2}{\left({\rm{Tr}}\,\rho ({\bf{x}}){{{\Lambda }}}_{1}-{\lambda }_{1}^{(s)}\right)}^{2}\right],\tag{11}\]

which has ground truth value \({\lambda }_{1}^{(g)}=\frac{1}{2}\). Results for the D = 32 case appear in Fig. 5, where we plot the Bayesian estimates for all three observables even though the pseudo-likelihood is based on λ1 only. The estimate \({\lambda }_{1}^{(B)}\) closely matches shadow as designed, and \({\lambda }_{2}^{(B)}\) agrees with the ground truth well, because its value is highly probable for a uniform prior. But \({\lambda }_{0}^{(B)}\to \sim \frac{1}{4}\), far from \({\lambda }_{0}^{(g)}=1\).

Fig. 5: The shadow MSE values from Fig. 1 are reprinted for clarity.

When using the pseudo-likelihood above, all quantum states with identical overlap to Λ1 are equally probable, of which the ground truth ρg represents just one possibility. The estimate of λ0 given only λ1 information reflects the inherent uncertainty within this specification. So to summarize, our observable-oriented pseudo-likelihood builds physicality into shadow, yet it can only (in general) accurately predict the N observables injected into it: to infer quantities beyond these N can prove unreliable.

Highly informative prior

In the above attempts to mimic classical shadows with BME, we focused on pseudo-likelihoods that explicitly leaned on shadow, either through ρs itself [Eq. (9)] or through a set of shadow-estimated observables [Eqs. (10) and (11)]. However, rather than modifying the likelihood (which is based on the physical considerations of Born’s rule), a conceptually more satisfying approach would be to modify the prior π0(x) such that any assumptions within classical shadows can be fully incorporated in the BME process without explicitly invoking the unphysical state ρs or quantities derived from it.

Unfortunately, while such a shadow-mimetic prior may certainly exist, we have been unable to find one. This objective is complicated by the fact that, even though classical shadows excel over BME with a uniform prior in estimating observables in particular regions of the state space, these regions are not readily defined a priori. These high-performance subspaces change depending on the particular observable of interest, despite the fact that calculation of ρs [Eq. (1)] is independent of the observable to be estimated from it later. Therefore, we suggest one should not view the shadow as biasing a prior distribution; rather, it is working off of an entirely different cost function than MSE [Eq. (6)], namely one which assigns variable weights according to the ground truth value of the observable. Research into the nature of this cost optimization would be a fascinating subject for future work.

In the meantime, however, we briefly explore through example how highly informative prior distributions in BME can reproduce some features of classical shadows, albeit only qualitatively. Suppose that one has strong beliefs that the quantum system under test consists of an ideal state \(\left|0\right\rangle\), which then experiences noisy evolution modeled as a simple depolarizing channel, so that the final quantum state can be expressed as

\[\rho (a)=(1-a)\left|0\right\rangle \left\langle 0\right|+\frac{a}{D}{I}_{D},\tag{12}\]

where a ∈ [0, 1] defines the strength of the noise process. Significantly, this assumption has eliminated the Hilbert space dimension dependence of the number of unknowns: regardless of D, only a single parameter a must be inferred. As a natural choice for simulation, we select the uniform prior π0(a) = 1[0, 1](a) and consider the full likelihood of Eq. (3), which reduces to

\[{L}_{{\mathcal{D}}}(a)=\mathop{\prod }\limits_{m=1}^{M}\left[(1-a){\left|\left\langle 0| {\psi }_{m}\right\rangle \right|}^{2}+\frac{a}{D}\right].\tag{13}\]

Utilizing this simplified model, we perform MCMC integration to obtain \({\rho }_{B}=\frac{1}{{\mathcal{Z}}}\int {\mathrm{d}}a\ {L}_{{\mathcal{D}}}(a){\pi }_{0}(a)\rho(a)\) for all previously examined cases under Picture 1; the approach is identical to previous MCMC runs, except (i) thinning was not used to reach convergence in this one-parameter model, and (ii) the proposal distribution for uniform random variables in ref. 30 was applied to maintain the pCN condition. Our findings appear in Fig. 6. Similar to the results with the observable-oriented pseudo-likelihood in Fig. 4, the BME estimates converge to the ground truth rapidly in the number of measurements, remain in the physically allowable range [0, 1], and show no dependence on dimension D.
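
Because the model has a single parameter, its posterior can also be evaluated on a grid, which gives a quick way to see the dimension independence. The sketch below assumes NumPy and assumes the depolarizing form \(\rho (a)=(1-a)\left|0\right\rangle \left\langle 0\right|+\frac{a}{D}{I}_{D}\) with a uniform prior on [0, 1]. It replaces the MCMC integration described above with a simple Riemann sum, and the function names are hypothetical.

```python
import numpy as np

def depolarizing_posterior(overlaps, dim, num_grid=2001):
    """Grid posterior over the noise strength a.

    overlaps: array of |<0|psi_m>|^2 for the M recorded outcomes.
    Returns (grid of a values, normalized posterior weights, posterior mean of a).
    """
    overlaps = np.asarray(overlaps, dtype=float)
    a = np.linspace(0.0, 1.0, num_grid)
    # Per-outcome Born probability <psi_m|rho(a)|psi_m> = (1-a) p_m + a/D
    terms = (1.0 - a)[:, None] * overlaps[None, :] + (a / dim)[:, None]
    with np.errstate(divide='ignore'):
        logl = np.sum(np.log(terms), axis=1)
    weights = np.exp(logl - logl.max())   # uniform prior: posterior ∝ likelihood
    weights /= weights.sum()
    return a, weights, float(weights @ a)

def fidelity_estimate(a_mean, dim):
    """<0|rho_B|0>; rho(a) is linear in a, so rho_B = rho(posterior mean of a)."""
    return (1.0 - a_mean) + a_mean / dim
```

Only the M scalar overlaps enter the computation, so the cost of inference in this sketch does not grow with D, and the resulting fidelity estimate always lies in the physical range.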

Fig. 6: a Results for D = 32. b Results for D = 256. The shadow MSE values from Fig. 1 are plotted again for comparison.

These results are obtained without any reference to or reliance on the classical shadow ρs. Nonetheless, we emphasize that, despite the extremely high accuracy, these findings build on strong assumptions about the form of the quantum state [Eq. (12)], which are not present in ρs. This example highlights, however, that certain features of ρs—such as dimension independence—follow automatically from traditional BME as well, under the conditions of a sufficiently informative prior. Of course, whether or not such a simple prior is justified in a particular case depends entirely on one’s trust in the quantum system and the goals of the experiment; the salient point is that BME can incorporate these naturally into its formalism.

Discussion

Our numerical investigations here have elucidated two fascinating features of classical shadows:

1. Classical shadows perform extremely well at predicting “unlikely” observables, i.e., those which obtain high values only on a restricted subset of states within the complete Hilbert space.

2. The nonphysicality of classical shadows is critical to their dimension independence and accuracy under few measurements.

These findings do not contradict the optimality of Bayesian methods expressed in Eq. (6): BME with a full likelihood minimizes MSE for any number and collection of measurements, provided the prior distribution accurately reflects the true knowledge involved. The predictive power of ρs, then, derives from the fact that the situations in which it is much more accurate than BME are often of particular interest in practice, such as verification of a high-fidelity or highly entangled quantum state. Desiring to extend these features in the Bayesian context, we proposed an observable-oriented pseudo-likelihood that attains shadow’s dimension independence and state-specialized accuracy, with the advantage of guaranteed physicality.

Nonetheless, in all these explorations there remains one prominent sense in which classical shadows unquestionably eclipse BME: computational efficiency. The shadow estimator ρs is formed directly from measurements for any dimension D; yet computing ρB requires tedious MCMC methods, with the number of parameters increasing linearly (quadratically) with D for a pure (mixed) uniform prior. Here, we considered up to D = 256, a far cry from the \(D={2}^{120}\) example in ref. 13. Moving forward, it would therefore seem profitable to explore simplified Bayesian models that maintain a fixed parameter dimensionality even as the Hilbert space grows exponentially, but that are perhaps not as restrictive as the example in Eq. (12). For example, if one could specify a prior and likelihood on an observable λ only, to the effect of \(\pi (\lambda )\propto {L}_{{\mathcal{D}}}(\lambda ){\pi }_{0}(\lambda )\), the inference procedure would not be limited directly by exponentially large Hilbert spaces. In this way, Bayesian methods could be extendable to the types of quantum systems sought for practically useful quantum computation.

Overall, our analyses have revealed the value of BME as a tool for shedding light on estimation procedures that formally have no connection to the Bayesian paradigm. The numerical simulations here reveal the complementary strengths of classical shadow and Bayesian tomographic approaches in the efficient estimation of quantum properties. And so we expect valuable opportunities for both methods, as quantum information processing resources continue to mature in size and complexity.

Methods

Data simulation approach

The method of classical shadows introduced in ref. 13 involves application of a Haar-random (or effectively Haar-random) unitary U followed by measurement in the computational basis. We exploit the fact that our target state is pure to substantially reduce the complexity of simulating this procedure. In particular, our simulation method requires the generation of only size-D random vectors rather than D × D random unitaries.

Without loss of generality, we work in a rotated basis such that the first basis state coincides with the ground truth: \({\rho }_{g}=\left|0\right\rangle \left\langle 0\right|\). Then the probability of observing outcome j depends only on \(| \left\langle j| U| 0\right\rangle {| }^{2}=| {U}_{j0}{| }^{2}=| {({U}^{\dagger })}_{0j}{| }^{2}\). That is, the distribution of outcomes depends only on the first row of U†. Now, when U is Haar-random, each individual row and column of U† is a uniformly distributed unit-length vector u. Furthermore, given any component \({u}_{j}\), the remaining components are a uniformly distributed vector of length \(\sqrt{1-| {u}_{j}{| }^{2}}\). A uniformly random vector u, corresponding to the first row of U†, may be obtained by generating D complex normal random values and normalizing them to yield a unit-length vector. An outcome n ∈ {0, 1, …, D − 1} is then chosen with probability \(| {u}_{n}{| }^{2}\). This selects the nth column of U†. Since this column (whichever it is) is uniformly distributed, its remaining elements are uniformly distributed with length \(\sqrt{1-| {u}_{n}{| }^{2}}\). The explicit procedure is as follows:

1. Posit a measurement unitary \({U}_{m}^{\dagger }=[{\tilde{\varphi }}_{0}\cdots {\tilde{\varphi }}_{D-1}]\), where each \({\tilde{\varphi }}_{n}\) is a column vector corresponding to one of the D possible output states.

2. Generate D complex normal samples \({w}_{n}\mathop{ \sim }\limits^{{\rm{i.i.d.}}}{\mathcal{N}}(0,1)+i{\mathcal{N}}(0,1)\) and normalize

$${u}_{n}=\frac{{w}_{n}}{\sqrt{\mathop{\sum }\limits_{{n}^{\prime}=0}^{D-1}| {w}_{{n}^{\prime}}{| }^{2}}}.$$(14)

These define projections of the unitary’s basis states on the ground truth: \({u}_{n}=\left\langle 0| {\tilde{\varphi }}_{n}\right\rangle\), or in other words, the elements in the first row of \({U}_{m}^{\dagger }\).

3. Select an integer n ∈ {0, 1, ..., D − 1} at random with probability \(| {u}_{n}{| }^{2}\). This implies that the state \({\tilde{\varphi }}_{n}\) is detected.

4. Generate D − 1 complex normal samples \({v}_{j}\mathop{ \sim }\limits^{{\rm{i.i.d.}}}{\mathcal{N}}(0,1)+i{\mathcal{N}}(0,1)\) (j = 1, 2, ..., D − 1). These set the remaining coefficients of the detected state \({\tilde{\varphi }}_{n}\).

5. Finally, take

$$\left|{\psi }_{m}\right\rangle ={u}_{n}\left|0\right\rangle +\sqrt{\frac{1-| {u}_{n}{| }^{2}}{\mathop{\sum }\limits_{{j}^{\prime}=1}^{D-1}| {v}_{{j}^{\prime}}{| }^{2}}}\mathop{\sum }\limits_{j=1}^{D-1}{v}_{j}\left|j\right\rangle$$(15)

as the measured state.
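The five steps above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' code: the function names `complex_normal` and `simulate_measurement`, the use of `numpy.random.Generator`, and the choice to return the outcome index alongside the state are all assumptions made here for the example.

```python
import numpy as np

def complex_normal(rng, size):
    """Draw i.i.d. samples of the form N(0,1) + i N(0,1)."""
    return rng.standard_normal(size) + 1j * rng.standard_normal(size)

def simulate_measurement(D, rng):
    """Simulate one random-basis measurement of the ground truth |0><0|.

    Returns (n, psi): the selected outcome index and the measured state
    as a length-D complex vector in the rotated basis.
    """
    # Step 2: first row of U^dagger, a uniformly random unit vector, Eq. (14).
    w = complex_normal(rng, D)
    u = w / np.linalg.norm(w)

    # Step 3: choose outcome n with probability |u_n|^2.
    p = np.abs(u) ** 2
    n = rng.choice(D, p=p / p.sum())  # renormalize to absorb rounding error

    # Step 4: D - 1 further samples set the remaining coefficients.
    v = complex_normal(rng, D - 1)

    # Step 5: assemble the measured state, Eq. (15).
    psi = np.empty(D, dtype=complex)
    psi[0] = u[n]
    psi[1:] = np.sqrt((1.0 - p[n]) / np.sum(np.abs(v) ** 2)) * v
    return n, psi

if __name__ == "__main__":
    rng = np.random.default_rng(0)  # seed chosen arbitrarily for the example
    n, psi = simulate_measurement(32, rng)
    print(n, np.linalg.norm(psi))   # the norm should be 1 up to rounding
```

Only two vectors of size D are drawn per measurement, which is the saving over generating a full D × D unitary that the text describes.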

Utilizing this method, we performed 50 independent trials with 1000 measurements each, for Hilbert space dimensions D = 32 and D = 256, giving a total of 100 datasets which are used in all subsequent tests. The two values of D were selected specifically to clarify how classical shadows and BME differ in their scaling with dimension.

MCMC convergence metrics

For each MCMC chain of length RT, we retained a fixed number of \(R={2}^{10}\) sample density matrices ρr = ρ(xr) (or ρr = ρ(ar) for the parameterization in Eq. (12)) and increased the thinning factor T until convergence was confirmed. In practice, we did this by successively doubling T, monitoring the mean of the overlap with the ground truth \(\frac{1}{R}\mathop{\sum }\nolimits_{r = 1}^{R}\left\langle g| {\rho }_{r}| g\right\rangle\), and selecting a T value beyond which no discernible differences in this mean were observed. For each test value of T, we reran the entire chain with a randomly chosen initial condition, which provided a safeguard against the possibility of getting stuck in a local mode. We found the final T value separately for each qualitative regime (likelihood/prior/dimension). This method was simple and allowed us to focus on the quantities of interest (Bayesian means of observables) when gauging convergence. Here, we provide details on the MCMC chains and their convergence properties, in order to show that this approach is consistent with findings from other standard convergence metrics.
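The doubling strategy for the thinning factor can be illustrated with a toy example. The sketch below is hypothetical throughout: `toy_chain` is an AR(1) process standing in for a real sampler, `choose_thinning` and its numerical tolerance `tol` are assumptions introduced here (the text describes judging "no discernible differences" rather than a fixed threshold), and the monitored quantity is simply the mean of the retained samples.

```python
import numpy as np

def toy_chain(R, T, rng, phi=0.9):
    """Hypothetical stand-in for one MCMC run: an AR(1) chain of length R*T,
    started from a random initial condition, keeping every T-th sample."""
    x = rng.standard_normal()
    scale = np.sqrt(1.0 - phi ** 2)  # keeps the stationary variance at 1
    kept = np.empty(R)
    for r in range(R):
        for _ in range(T):
            x = phi * x + scale * rng.standard_normal()
        kept[r] = x
    return kept

def choose_thinning(run_chain, R, tol, T_start=1, T_max=1024):
    """Double T until the monitored mean changes by less than tol.

    run_chain(R, T) must rerun the whole chain and return R retained values
    of the monitored quantity. Returns (T, mean, converged).
    """
    T = T_start
    prev = float(np.mean(run_chain(R, T)))
    while T < T_max:
        T *= 2
        cur = float(np.mean(run_chain(R, T)))  # fresh chain for each T
        if abs(cur - prev) < tol:
            return T, cur, True
        prev = cur
    return T, prev, False

if __name__ == "__main__":
    rng = np.random.default_rng(0)  # arbitrary seed for the example
    print(choose_thinning(lambda R, T: toy_chain(R, T, rng), R=64, tol=0.2))
```

Rerunning the chain from a new random start at every value of T mirrors the safeguard against local modes mentioned above, at the cost of discarding earlier runs.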

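All conclusions in this paper depend on estimates of observable values \({{{\Lambda }}}_{n}=\left|{\phi }_{n}\right\rangle \left\langle {\phi }_{n}\right|\), and not the underlying quantum state, so we concentrate on λ0, λ1, and λ2 only [see state definitions in Eq. (7)]. Considering the density matrix MCMC samples {ρ1, ..., ρR}, we look at (i) the trace plot λn[r] (“trace” in MCMC parlance, not matrix trace \({\rm{Tr}}\)), (ii) cumulative mean μn[r], and (iii) sample autocorrelation function (ACF) cn[l], defined as

$$\begin{array}{rcl}{\lambda }_{n}[r]&=&\left\langle {\phi }_{n}| {\rho }_{r}| {\phi }_{n}\right\rangle \\ {\mu }_{n}[r]&=&\frac{1}{r}\mathop{\sum }\limits_{{r}^{\prime}=1}^{r}{\lambda }_{n}[{r}^{\prime}]\\ {c}_{n}[l]&=&C\mathop{\sum }\limits_{r=1}^{R-l}\left({\lambda }_{n}[r]-{\mu }_{n}[R]\right)\left({\lambda }_{n}[r+l]-{\mu }_{n}[R]\right),\end{array}$$(16)

where n ∈ {0, 1, 2}, r ∈ {1, 2, ..., R}, \(l\in \{0,1,...,{l}_{\max }\}\), and C is a normalization constant such that cn[0] = 1. Of these expressions, the raw trace λn[r] and autocorrelation cn[l] probe the independence of the samples in the thinned chain, while the cumulative mean μn[r] is linked to the Bayesian mean estimator, which follows directly from it according to

$${\lambda }_{n}^{(B)}=\left\langle {\phi }_{n}| {\rho }_{B}| {\phi }_{n}\right\rangle \approx \frac{1}{R}\mathop{\sum }\limits_{r=1}^{R}{\lambda }_{n}[r]={\mu }_{n}[R].$$(17)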
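As a rough illustration of the cumulative mean and sample autocorrelation described above, the following sketch computes both for a one-dimensional trace of observable values. The function names are assumptions made for this example, and the ACF is centered on the full-chain mean and normalized so that its lag-0 value is 1, which is one common convention and may differ in detail from the one used for the figures.

```python
import numpy as np

def cumulative_mean(lam):
    """Running mean mu[r] = (lam[1] + ... + lam[r]) / r for r = 1..R."""
    lam = np.asarray(lam, dtype=float)
    return np.cumsum(lam) / np.arange(1, lam.size + 1)

def sample_acf(lam, l_max):
    """Sample autocorrelation for lags 0..l_max, normalized so acf[0] == 1."""
    lam = np.asarray(lam, dtype=float)
    centered = lam - lam.mean()  # subtract the full-chain mean
    R = lam.size
    c = np.array([np.dot(centered[:R - l], centered[l:])
                  for l in range(l_max + 1)])
    return c / c[0]

if __name__ == "__main__":
    rng = np.random.default_rng(0)       # arbitrary seed
    trace = rng.uniform(size=1024)       # placeholder for lambda_n[r]
    mu = cumulative_mean(trace)
    acf = sample_acf(trace, l_max=100)
    print(mu[-1], acf[:3])               # mu[-1] is the final mean estimate
```

For nearly independent samples the ACF should fall close to zero after lag 0, while the last entry of the cumulative mean is the value that serves as the Bayesian mean estimate of the observable.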

A total of 3600 separate MCMC chains were required for the BME results in this paper, one chain for each examined combination of likelihood \({L}_{{\mathcal{D}}}\), prior distribution π0, dimension D, number of measurements M, and simulated experimental trial. In lieu of examining all 3600 chains, we exploit the fact that they can be classified into nine qualitative regimes, distinguished by \({L}_{{\mathcal{D}}}\), π0, and D. Figures 7 and 8 plot convergence metrics for one example from each regime, after applying the appropriate thinning value T mentioned in the “Results” section above; for all cases, \({l}_{\max }=100\). For each example, we utilize the most extreme case of M = 1000 measurements, because this has the most strongly peaked likelihood and therefore requires the longest chains to converge (since the pCN proposal keeps the prior invariant, the acceptance probability varies more strongly as the likelihood becomes more peaked). Thus, a value of T that attains convergence for M = 1000 will realize convergence for the M < 1000 cases as well.

Overall, the metrics for all nine examples are consistent with converged chains: the trace plots do not reveal long-term trends indicative of correlated points, and the ACFs drop off rapidly with (thinned) lag l. Some features perhaps indicate larger values of T would be preferable, such as the slight modulation in the λ0 trace of Fig. 8b or the slower drop in ACF in Fig. 8c. Yet such refinements would not be expected to impact the main results, for it is only the final value of the cumulative mean μn[R] that is utilized in comparing to classical shadows. The cumulative means are extremely steady, converging well before r = R for all examples, thus confirming the reliability of the \({\lambda }_{n}^{(B)}\) values reported in Figs. 1–6.

Marginal distributions

For additional insight into the joint posterior behavior of (λ0, λ1, λ2)—including potential multimodality and correlations—we examine pairwise and single marginal distributions of a subset of the MCMC chains above. We focus on the D = 256 case of Fig. 7a and the D = 32 case in Fig. 8b, as these show the highest uncertainties. Utilizing kernel density estimation in MATLAB31, and discarding a burn-in of ten samples (to remove the nonstationary initial points evident in the trace plots), we obtain the probability densities in Fig. 9; the two-dimensional contour plots show the pairwise marginals, with the corresponding single marginals appearing to the top and side. All observables follow unimodal distributions. No strong correlations between λ2 and any other observables are evident from these plots. λ0 and λ1 show positive correlations in Fig. 9a, but minimal correlations in Fig. 9b, which can be explained intuitively via the differences in likelihoods. Case (a) corresponds to use of a full likelihood [Eq. (3)] so that the state estimate is gradually moving from \({\rho }_{B}=\frac{1}{D}{I}_{D}\) (the prior mean, valid for M ≈ 0) to \({\rho }_{B}=\left|0\right\rangle \left\langle 0\right|\) (the value as M → ∞). Thus, for samples closer to the limit of \({\rho }_{B}=\left|0\right\rangle \left\langle 0\right|\), the values of both \({\lambda }_{0}^{(B)}\) and \({\lambda }_{1}^{(B)}\) are higher—and vice versa for samples closer to \({\rho }_{B}=\frac{1}{D}{I}_{D}\). On the other hand, the case in Fig. 9b corresponds to a pseudo-likelihood based on λ1 only [Eq. (11)]. Rather than trending toward the ground truth \(\left|0\right\rangle \left\langle 0\right|\), the estimate approaches a continuum of states satisfying \({\lambda }_{1} \sim \frac{1}{2}\), but with no constraint on λ0, thereby eliminating strong correlations between the two observables.
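A single marginal of this kind can be approximated with a small Gaussian kernel density estimator. The sketch below is written in Python rather than MATLAB and is not the routine used for Fig. 9: the function names, the normal-reference bandwidth rule, and the synthetic placeholder trace are all assumptions made for illustration. Only the burn-in of ten samples is taken from the text.

```python
import numpy as np

def gaussian_kde_1d(samples, grid, bandwidth=None):
    """Evaluate a Gaussian kernel density estimate on the points in grid."""
    x = np.asarray(samples, dtype=float)
    grid = np.asarray(grid, dtype=float)
    if bandwidth is None:
        # Normal-reference rule of thumb (an assumption for this sketch).
        bandwidth = 1.06 * x.std(ddof=1) * x.size ** (-1.0 / 5.0)
    z = (grid[:, None] - x[None, :]) / bandwidth
    kernel_sum = np.exp(-0.5 * z ** 2).sum(axis=1)
    return kernel_sum / (x.size * bandwidth * np.sqrt(2.0 * np.pi))

def posterior_marginal(trace, grid, burn_in=10):
    """Drop the first burn_in samples, then estimate the marginal density."""
    return gaussian_kde_1d(np.asarray(trace)[burn_in:], grid)

if __name__ == "__main__":
    rng = np.random.default_rng(0)                  # arbitrary seed
    lam0 = rng.normal(0.5, 0.05, size=1024)         # placeholder trace
    lam1 = 0.5 * lam0 + rng.normal(0.25, 0.02, size=1024)
    grid = np.linspace(0.0, 1.0, 2001)
    density = posterior_marginal(lam0, grid)
    print(grid[np.argmax(density)])                 # location of the mode
    print(np.corrcoef(lam0[10:], lam1[10:])[0, 1])  # pairwise correlation
```

A unimodal marginal shows up as a single peak in `density`, and the sample correlation coefficient gives a quick numerical check on the pairwise trends visible in contour plots.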

The fact that the marginals are unimodal, as opposed to multimodal, is not required for any of the theoretical or numerical conclusions in this paper: the results and optimality conditions rely on the Bayesian mean, irrespective of the underlying distribution. However, the unimodality does indicate our pCN technique26 is well-suited to sampling in this case. It is well known that random walk MCMC methods, of which pCN is an example25, struggle to reach stationarity under highly multimodal probability densities; in such cases, hybrid Monte Carlo32 or population-based methods, such as SMC33, are known to mix much more efficiently. Thus, while pCN would have still applied to multimodal observables, the required values of T—and hence computational time—would have likely increased significantly.

Data availability

Data used in this study are available from the corresponding author on request.

Code availability

Analysis code used in this study is available from the corresponding author on request.

References

James, D. F. V., Kwiat, P. G., Munro, W. J. & White, A. G. Measurement of qubits. Phys. Rev. A 64, 052312 (2001).

Gross, D., Liu, Y.-K., Flammia, S. T., Becker, S. & Eisert, J. Quantum state tomography via compressed sensing. Phys. Rev. Lett. 105, 150401 (2010).

Flammia, S. T., Gross, D., Liu, Y.-K. & Eisert, J. Quantum tomography via compressed sensing: error bounds, sample complexity and efficient estimators. New J. Phys. 14, 095022 (2012).

Huszár, F. & Houlsby, N. M. T. Adaptive Bayesian quantum tomography. Phys. Rev. A 85, 052120 (2012).

Kravtsov, K. S., Straupe, S. S., Radchenko, I. V., Houlsby, N. M. T., Huszár, F. & Kulik, S. P. Experimental adaptive Bayesian tomography. Phys. Rev. A 87, 062122 (2013).

Granade, C., Ferrie, C. & Flammia, S. T. Practical adaptive quantum tomography. New J. Phys. 19, 113017 (2017).

Cramer, M. et al. Efficient quantum state tomography. Nat. Commun. 1, 149 (2010).

Tóth, G., Wieczorek, W., Gross, D., Krischek, R., Schwemmer, C. & Weinfurter, H. Permutationally invariant quantum tomography. Phys. Rev. Lett. 105, 250403 (2010).

Schwemmer, C. et al. Experimental comparison of efficient tomography schemes for a six-qubit state. Phys. Rev. Lett. 113, 040503 (2014).

Torlai, G., Mazzola, G., Carrasquilla, J., Troyer, M., Melko, R. & Carleo, G. Neural-network quantum state tomography. Nat. Phys. 14, 447–450 (2018).

Carrasquilla, J., Torlai, G., Melko, R. G. & Aolita, L. Reconstructing quantum states with generative models. Nat. Mach. Intell. 1, 155–161 (2019).

Lohani, S., Kirby, B. T., Brodsky, M., Danaci, O. & Glasser, R. T. Machine learning assisted quantum state estimation. Mach. Learn. Sci. Technol. 1, 035007 (2020).

Huang, H.-Y., Kueng, R. & Preskill, J. Predicting many properties of a quantum system from very few measurements. Nat. Phys. 16, 1050–1057 (2020).

Aaronson, S. Shadow tomography of quantum states. In Proceedings of the 50th Annual ACM SIGACT Symposium on Theory of Computing, STOC 2018, 325–338 (ACM, 2018).

Blume-Kohout, R. Optimal, reliable estimation of quantum states. New J. Phys. 12, 043034 (2010).

Robert, C. P. & Casella, G. Monte Carlo Statistical Methods (Springer, 1999).

Webb, Z. The Clifford group forms a unitary 3-design. Quantum Inf. Comput. 16, 1379–1400 (2016).

Zhu, H. Multiqubit Clifford groups are unitary 3-designs. Phys. Rev. A 96, 062336 (2017).

Orenstein, P. Robust mean estimation with the Bayesian median of means. Preprint at https://arxiv.org/abs/1906.01204 (2019).

Mai, T. T. & Alquier, P. Pseudo-Bayesian quantum tomography with rank-adaptation. J. Stat. Plan. Inference 184, 62–76 (2017).

Seah, Y.-L., Shang, J., Ng, H. K., Nott, D. J. & Englert, B.-G. Monte Carlo sampling from the quantum state space. II. New J. Phys. 17, 043018 (2015).

Granade, C., Combes, J. & Cory, D. G. Practical Bayesian tomography. New J. Phys. 18, 033024 (2016).

Williams, B. P. & Lougovski, P. Quantum state estimation when qubits are lost: a no-data-left-behind approach. New J. Phys. 19, 043003 (2017).

Lu, H.-H. et al. A controlled-NOT gate for frequency-bin qubits. npj Quantum Inf. 5, 24 (2019).

Cotter, S. L., Roberts, G. O., Stuart, A. M. & White, D. MCMC methods for functions: modifying old algorithms to make them faster. Statist. Sci. 28, 424–446 (2013).

Lukens, J. M., Law, K. J. H., Jasra, A. & Lougovski, P. A practical and efficient approach for Bayesian quantum state estimation. New J. Phys. 22, 063038 (2020).

Guedj, B. A primer on PAC-Bayesian learning. Preprint at https://arxiv.org/abs/1901.05353 (2019).

Smolin, J. A., Gambetta, J. M. & Smith, G. Efficient method for computing the maximum-likelihood quantum state from measurements with additive Gaussian noise. Phys. Rev. Lett. 108, 070502 (2012).

Guţă, M., Kahn, J., Kueng, R. & Tropp, J. A. Fast state tomography with optimal error bounds. J. Phys. A Math. Theor. 53, 204001 (2020).

Vollmer, S. J. Dimension-independent MCMC sampling for inverse problems with non-Gaussian priors. SIAM/ASA J. Uncertain. Quantif. 3, 535–561 (2015).

MathWorks. “ksdensity” www.mathworks.com/help/stats/ksdensity.html (2021).

Duane, S., Kennedy, A., Pendleton, B. J. & Roweth, D. Hybrid Monte Carlo. Phys. Lett. B 195, 216–222 (1987).

Del Moral, P., Doucet, A. & Jasra, A. Sequential Monte Carlo samplers. J. R. Stat. Soc. Ser. B 68, 411–436 (2006).

Acknowledgements

This work was funded by the US Department of Energy, Office of Advanced Scientific Computing Research, through the Quantum Algorithm Teams and Early Career Research Programs. This work was performed in part at Oak Ridge National Laboratory, operated by UT-Battelle for the US Department of Energy under contract no. DE-AC05-00OR22725.

Author information

Contributions

J.M.L. performed the numerical simulations and led writing of the paper. K.J.H.L. provided guidance on Bayesian methods. R.S.B. developed the overall research question and supervised the project. All authors furnished ideas, analyzed the results, and contributed to the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lukens, J.M., Law, K.J.H. & Bennink, R.S. A Bayesian analysis of classical shadows. npj Quantum Inf 7, 113 (2021). https://doi.org/10.1038/s41534-021-00447-6

