Abstract

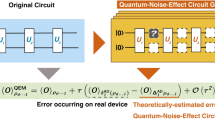

Noise in quantum hardware remains the biggest roadblock for the implementation of quantum computers. To fight the noise in the practical application of near-term quantum computers, instead of relying on quantum error correction, which requires a large qubit overhead, we turn to quantum error mitigation, which makes use of extra measurements. Error extrapolation is an error mitigation technique that has been successfully implemented experimentally. Numerical simulations and heuristic arguments have indicated that exponential curves are effective for extrapolation in the large circuit limit with an expected circuit error count around unity. In this Article, we extend this to multi-exponential error extrapolation and provide a more rigorous proof of its effectiveness under Pauli noise. This is further validated by our numerical simulations, which show orders-of-magnitude improvements in estimation accuracy over single-exponential extrapolation. Moreover, by exploiting features of the individual techniques, we develop methods to combine error extrapolation with two other error mitigation techniques: quasi-probability and symmetry verification. As shown in our simulations, our combined method can achieve a low estimation bias with a sampling cost several times smaller than that of quasi-probability, without needing to adjust the hardware error rate as required in canonical error extrapolation.


Introduction

While fault-tolerant quantum computers promise huge speed-ups over classical computers in areas like chemistry simulation, optimisation and decryption, their implementation remains a long-term goal due to the large qubit overhead required for quantum error correction. With the recent rapid advance of quantum computer hardware in terms of both qubit quantity and quality, culminating in the “quantum supremacy” experiment1, one must wonder whether it is possible to perform classically intractable computations on such Noisy Intermediate-Scale Quantum (NISQ) hardware without quantum error correction2. Resource estimation has been performed for one of the most promising applications on NISQ hardware, the Fermi–Hubbard model simulation3,4. It revealed that even with an optimistic local gate error rate of \(10^{-4}\), the large number of gates needed for a classically intractable calculation leads to an expected circuit error count of the order of unity. To obtain any meaningful results under such an expected circuit error count, it is essential to employ error mitigation techniques. These rely on making extra measurements (as opposed to employing extra qubits in the case of quantum error correction) to estimate the noise-free expectation values from the noisy measurement results. Three of the most well-studied error mitigation techniques are symmetry verification5,6, quasi-probability and error extrapolation7,8,9.

Previously, all of these error mitigation techniques have been discussed separately. They make use of different information about the hardware and the computational problem to perform different sets of extra circuit runs. Symmetry verification makes use of the symmetry of the simulated system and performs circuit runs with additional measurements. Quasi-probability makes use of the error models of the circuit components and performs circuit runs with different additional gates inserted into the circuit. Error extrapolation makes use of the ability to tune the noise strength via physical control of the hardware and performs additional circuit runs at different noise levels. Consequently, the three error mitigation techniques are equipped to combat different types of noise with different additional sampling costs (the number of additional circuit runs required). Hence, it is natural to ask how these techniques might complement each other. For NISQ applications, it may be essential to understand and develop ways for these error mitigation techniques to work in unison. The goal is to achieve better performance than the individual techniques, in terms of lower bias in the estimates of the noise-free expectation values and/or lower sampling costs. Thus, one key focus of our Article is on methods for combining these error mitigation techniques and on gauging their performance under different scenarios through analytical arguments and numerical simulations.

To achieve efficient combinations of these mitigation techniques, we need to exploit certain features of the constituent techniques. As we will show later, quasi-probability can be used for error transformation instead of error removal. In addition, the circuit runs that fail symmetry verification can actually be utilised instead of being discarded. It is also essential to understand the mechanism behind error extrapolation, especially in the NISQ limit, in which the number of errors in the circuit follows a Poisson distribution. Endo et al.9 made heuristic arguments and numerical validations for error extrapolation using exponential decay curves in this NISQ limit. However, their approach cannot be applied to certain situations arising in practice, for example when the data points have an increasing trend. Our Article takes this further and provides a more rigorous argument showing that single-exponential error extrapolation is just a special case of the more general multi-exponential error extrapolation framework, with which we can achieve a much lower estimation bias.

Results

Symmetry verification

Suppose we want to perform a state preparation and we know that the correct state must obey a certain symmetry S. That is, we expect our end state to be an eigenstate of S with the correct eigenvalue s (or with an eigenvalue within a set {s}). In such a case, we can measure S on our output state and discard the circuit runs that produce states violating the symmetry. This was first proposed and studied by McArdle et al.5 and Bonet-Monroig et al.6. Discarding erroneous circuit runs results in an effective density matrix ρs. This is the original density matrix ρ projected into the S = s subspace via the projection operator Πs:

\[ {\rho }_{s}=\frac{{\Pi }_{s}\rho {\Pi }_{s}}{{\rm{Tr}}({\Pi }_{s}\rho {\Pi }_{s})}=\frac{{\Pi }_{s}\rho {\Pi }_{s}}{{\rm{Tr}}({\Pi }_{s}\rho )} \]

Here, we have used ΠsΠs = Πs.

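Now let us suppose we want to measure an observable O that commutes with the symmetry S. The two can then be measured in the same run, and we discard the measurement results of the runs that fail the symmetry verification. The symmetry-verified expectation value of the observable O is then

\[ {\langle O\rangle }_{s}={\rm{Tr}}(O{\rho }_{s})=\frac{{\rm{Tr}}(O{\Pi }_{s}\rho {\Pi }_{s})}{{\rm{Tr}}({\Pi }_{s}\rho )}=\frac{{\rm{Tr}}(O{\Pi }_{s}\rho )}{{\rm{Tr}}({\Pi }_{s}\rho )}=\frac{\langle O{\Pi }_{s}\rangle }{\langle {\Pi }_{s}\rangle } \]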

in which we have used [S, O] = 0 ⇒ [Πs, O] = 0. Note that a measurement of Πs yields 1 if the symmetry verification is passed and 0 otherwise. Hence \(\left\langle {{{\Pi }}}_{s}\right\rangle ={\rm{Tr}}({{{\Pi }}}_{s}\rho )\) is just the fraction of circuit runs that fulfil the symmetry condition. We will use Pd to denote the fraction of circuit runs that fail the symmetry verification, which gives

\[ {P}_{d}=1-{\rm{Tr}}({\Pi }_{s}\rho ) \]

Recall that ρs is the effective density matrix of the non-discarded runs, which, as mentioned, make up a fraction \({\rm{Tr}}({{{\Pi }}}_{s}\rho )\) of the total number of runs. Therefore, to obtain statistics from ρs, we need a factor of

\[ {C}_{s}=\frac{1}{{\rm{Tr}}({\Pi }_{s}\rho )}=\frac{1}{1-{P}_{d}} \]

more circuit runs than when sampling directly from ρ.
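As a numerical illustration of the post-selection formulas above, the following NumPy sketch computes three quantities for a two-qubit toy example: the symmetry-verified expectation value, the discarded fraction Pd and the sampling cost factor. The state, the symmetry S = Z⊗Z, the observable Y⊗Y and the single-qubit X noise are illustrative assumptions. The helper names (`projector`, `symmetry_verify`) are hypothetical.

```python
import numpy as np

# Pauli matrices.
I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def projector(S, s):
    """Projector onto the eigenvalue-s subspace of a Pauli symmetry S (S @ S = I, s = +1 or -1)."""
    return (np.eye(S.shape[0], dtype=complex) + s * S) / 2


def symmetry_verify(rho, O, S, s):
    """Return (<O>_s, P_d, C_s) for post-selection on the S = s subspace."""
    P = projector(S, s)
    pass_fraction = np.trace(P @ rho).real          # Tr(Pi_s rho)
    o_verified = np.trace(O @ P @ rho).real / pass_fraction
    return o_verified, 1 - pass_fraction, 1 / pass_fraction


# Toy example: symmetry S = Z⊗Z with s = +1, observable O = Y⊗Y (commutes with S).
S = np.kron(Z, Z)
O = np.kron(Y, Y)
phi_plus = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)   # ideal state, S = +1
rho_ideal = np.outer(phi_plus, phi_plus.conj())

# Hypothetical noise: an X error on the first qubit with probability p (anticommutes with S).
p = 0.2
X1 = np.kron(X, I2)
rho_noisy = (1 - p) * rho_ideal + p * X1 @ rho_ideal @ X1.conj().T

raw = np.trace(O @ rho_noisy).real                   # ≈ -0.6
o_s, p_d, c_s = symmetry_verify(rho_noisy, O, S, +1)  # ≈ -1.0, 0.2, 1.25
print(raw, o_s, p_d, c_s)
```

Here the raw noisy value is about −0.6 and the verified value recovers the ideal −1. A fifth of the runs are discarded, so the cost factor is 1/0.8 = 1.25.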

In the method discussed above, OΠs is usually obtained by measuring O and S in the same run. However, sometimes this cannot be done, for example when non-demolition measurements are unavailable. In such a case, we need to decompose OΠs into its Pauli basis6 and reconstruct it via post-processing, as discussed in Supplementary Note 1. In this Article, we will mainly focus on direct symmetry verification instead of post-processing verification. Apart from the discussions of costs, however, most of the arguments are valid for both methods.

Now let us move on to see which errors are detectable by symmetry verification. We want to produce the state \(\left|{\psi }_{f}\right\rangle \), which is known to fall within the S = s symmetry subspace:

\[ {\Pi }_{s}\left|{\psi }_{f}\right\rangle =\left|{\psi }_{f}\right\rangle \]

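To produce this state, we usually start with a state \(\left|{\psi }_{0}\right\rangle \) that obeys the same symmetry. We then use a circuit U consisting of components that conserve the symmetry:

\[ \left|{\psi }_{f}\right\rangle =U\left|{\psi }_{0}\right\rangle ,\qquad {\Pi }_{s}\left|{\psi }_{0}\right\rangle =\left|{\psi }_{0}\right\rangle ,\qquad [U,S]=0 \]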

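Suppose that some error E occurs in between the symmetry-preserving components of the circuit and that it satisfies

\[ E{\Pi }_{s}={\Pi }_{s^{\prime} }E \]

in which \(s,s^{\prime} \) are some eigenvalues of the symmetry operator S. We then have

\[ {\Pi }_{s}E{\Pi }_{s}={\Pi }_{s}{\Pi }_{s^{\prime} }E=\left\{\begin{array}{ll}E{\Pi }_{s} & s^{\prime} =s\\ 0 & s^{\prime} \ne s\end{array}\right. \]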

ΠsEΠs = EΠs means that E is a transformation within the S = s subspace, hence E is undetectable by the symmetry verification using S. ΠsEΠs = 0 means that E contains no components that map states in the S = s subspace back into the same subspace, hence E is (completely) detectable by the symmetry verification using S. A general error will be a combination of detectable and undetectable error components.

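In this Article, we will focus on Pauli errors and Pauli symmetries, for which Eq. (4) reduces to

\[ [E,S]=0\Rightarrow {\Pi }_{s}E{\Pi }_{s}=E{\Pi }_{s}\ \ (\text{undetectable}),\qquad \{E,S\}=0\Rightarrow {\Pi }_{s}E{\Pi }_{s}=0\ \ (\text{detectable}) \]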
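For Pauli errors and Pauli symmetries, detectability therefore reduces to a commutation check between Pauli strings. The following is a minimal sketch. The helper names are hypothetical, Pauli strings are written as plain text such as `'XZI'`, and phases are ignored.

```python
def anticommute(a: str, b: str) -> bool:
    """Return True if two Pauli strings such as 'XZI' and 'ZZZ' anticommute.

    Single-qubit Paulis anticommute exactly when both are non-identity and different;
    the strings anticommute when this happens on an odd number of qubits.
    """
    if len(a) != len(b):
        raise ValueError("Pauli strings must act on the same number of qubits")
    clashes = sum(1 for p, q in zip(a, b) if p != "I" and q != "I" and p != q)
    return clashes % 2 == 1


def is_detectable(error: str, symmetry: str) -> bool:
    """A Pauli error is caught by a Pauli symmetry check exactly when they anticommute."""
    return anticommute(error, symmetry)


# Illustrative four-qubit parity check S = ZZZZ.
for error in ["XIII", "YZII", "XXII", "ZIII"]:
    print(error, is_detectable(error, "ZZZZ"))
```

A single X or Y flips the parity and is caught. A pair of X errors, or any Z error, commutes with the check and passes undetected.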

Quasi-probability

To describe the quasi-probability method, we will make use of the Pauli transfer matrix (PTM) formalism10. Using \({\mathbb{G}}\) to denote the set of Pauli operators, any density operator can be written in vector form by decomposing it into the Pauli basis \(G\in {\mathbb{G}}\)

where we have defined the inner product as

Note that we need to add a normalisation factor \(\frac{1}{\sqrt{{2}^{N}}}\) when we use the Pauli operators as a basis, with N being the number of qubits.

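The quasi-probability method was first introduced by Temme et al.8, and its implementation details were later studied by Endo et al.9. Let us suppose we are trying to perform the operation \({\mathcal{U}}\), but in practice we can only implement its noisy version, in which \({\mathcal{U}}\) is followed by a noise channel \({\mathcal{E}}\):

\[ {{\mathcal{U}}}_{\epsilon }={\mathcal{E}}{\mathcal{U}} \]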

In addition to \({{\mathcal{U}}}_{\epsilon }\), we can also implement a set of basis operations \(\{{{\mathcal{B}}}_{n}\}\). We can decompose the ideal operation \({\mathcal{U}}\) that we want to implement into the set of gates \(\{{{\mathcal{B}}}_{n}{{\mathcal{U}}}_{\epsilon }\}\) that we can implement, using real coefficients qn that may be negative:

\[ {\mathcal{U}}=\sum _{n}{q}_{n}{{\mathcal{B}}}_{n}{{\mathcal{U}}}_{\epsilon } \]

In this way, we are essentially trying to simulate the behaviour of the inverse noise channel \({{\mathcal{E}}}^{-1}\) using the set of basis operations \(\{{{\mathcal{B}}}_{n}\}\), which can undo the noise \({\mathcal{E}}\).

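If a state \(\left.\left|\rho \right\rangle \right\rangle \) passes through the circuit \({\mathcal{U}}\) and we measure O, then the expectation value we want to obtain can be written as

\[ \langle \langle O|{\mathcal{U}}|\rho \rangle \rangle =\sum _{n}{q}_{n}\langle \langle O|{{\mathcal{B}}}_{n}{{\mathcal{U}}}_{\epsilon }|\rho \rangle \rangle =Q\sum _{n}\frac{|{q}_{n}|}{Q}{s}_{n}\langle \langle O|{{\mathcal{B}}}_{n}{{\mathcal{U}}}_{\epsilon }|\rho \rangle \rangle \]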

in which \(Q={\sum }_{n}\left|{q}_{n}\right|\) and sn = sgn(qn). This is implemented by sampling from the set of basis operations \(\{{{\mathcal{B}}}_{n}\}\) with the probability distribution \(\{\frac{\left|{q}_{n}\right|}{Q}\}\). We will weight each measurement outcome by the sign factor sn and rescale the final expectation value by the factor Q.
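The sampling procedure can be sketched for a single noisy operation. For illustration only, this assumes a bit-flip channel \({\mathcal{E}}(\rho )=(1-p)\rho +pX\rho X\) after an ideal identity gate acting on |0⟩, with O = Z. For this channel \({{\mathcal{E}}}^{-1}=((1-p){\mathcal{I}}-p{\mathcal{X}})/(1-2p)\), which uses the basis operations {I, X}. The function name and the ±1 single-shot measurement model are assumptions of the sketch.

```python
import numpy as np


def quasi_probability_estimate(q, branch_expectations, shots, rng):
    """Estimate sum_n q_n * x_n by sampling branch n with probability |q_n| / Q.

    q: quasi-probabilities of the basis operations B_n (may be negative).
    branch_expectations: x_n = <<O| B_n U_eps |rho>> for each branch, for a +/-1-valued O.
    Returns (exact weighted sum, sampled estimate, Q).
    """
    q = np.asarray(q, dtype=float)
    x = np.asarray(branch_expectations, dtype=float)
    Q = np.abs(q).sum()
    probs = np.abs(q) / Q
    signs = np.sign(q)
    exact = Q * np.sum(probs * signs * x)               # equals sum_n q_n x_n
    branch = rng.choice(len(q), size=shots, p=probs)    # basis operation drawn for each shot
    p_plus = (1 + x[branch]) / 2                        # probability of measuring +1
    outcome = np.where(rng.random(shots) < p_plus, 1.0, -1.0)
    sampled = Q * np.mean(signs[branch] * outcome)      # sign-weighted, rescaled by Q
    return exact, sampled, Q


# Bit-flip noise with probability p; ideal <Z> on |0> is 1.
p = 0.1
q = [(1 - p) / (1 - 2 * p), -p / (1 - 2 * p)]   # weights of I and X in the inverse channel
x = [1 - 2 * p, -(1 - 2 * p)]                   # noisy <Z> without / with the extra X gate
exact, sampled, Q = quasi_probability_estimate(q, x, shots=200_000, rng=np.random.default_rng(7))
```

The exact weighted sum recovers the ideal ⟨Z⟩ = 1 with Q = 1/(1 − 2p) = 1.25. The sampled estimate fluctuates around that value, with a variance inflated by roughly Q² compared with direct sampling.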

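Now suppose we break down our computation into many components \({\mathcal{U}}=\mathop{\prod }\nolimits_{m = 1}^{M}{{\mathcal{U}}}_{m}\), with noise associated with each component. The expectation value that we want to measure is then

\[ \langle O\rangle =\langle \langle O|\prod _{m=1}^{M}{{\mathcal{U}}}_{m}|\rho \rangle \rangle \]

but in reality, we can only implement the noisy version

\[ \langle \langle O|\prod _{m=1}^{M}{{\mathcal{E}}}_{m}{{\mathcal{U}}}_{m}|\rho \rangle \rangle \]

Each noise element can be removed by simulating its inverse channel using the set of basis operations \(\{{{\mathcal{B}}}_{n}\}\)

\[ {{\mathcal{E}}}_{m}^{-1}=\sum _{n}{q}_{mn}{{\mathcal{B}}}_{n}={R}_{m}\sum _{n}\frac{|{q}_{mn}|}{{R}_{m}}{s}_{mn}{{\mathcal{B}}}_{n} \]

with \({R}_{m}={\sum }_{n}\left|{q}_{mn}\right|\) and smn = sgn(qmn).

Hence, we can get back the noiseless expectation value using

\[ \langle O\rangle =\sum _{\vec{n}}{q}_{\vec{n}}\langle \langle O|\prod _{m=1}^{M}{{\mathcal{B}}}_{{n}_{m}}{{\mathcal{E}}}_{m}{{\mathcal{U}}}_{m}|\rho \rangle \rangle =Q\sum _{\vec{n}}\frac{|{q}_{\vec{n}}|}{Q}{s}_{\vec{n}}\langle \langle O|\prod _{m=1}^{M}{{\mathcal{B}}}_{{n}_{m}}{{\mathcal{E}}}_{m}{{\mathcal{U}}}_{m}|\rho \rangle \rangle \]

in which we have used \(\vec{n}\) to denote the set of indices {n1, n2, ... , nM}. We have also defined \({q}_{\vec{n}}=\mathop{\prod }\nolimits_{m = 1}^{M}{q}_{m{n}_{m}}\) and \({s}_{\vec{n}}=\mathop{\prod }\nolimits_{m = 1}^{M}{s}_{m{n}_{m}}=\,{\text{sgn}}\,({q}_{\vec{n}})\), as well as

\[ Q=\sum _{\vec{n}}|{q}_{\vec{n}}|=\prod _{m=1}^{M}{R}_{m} \]

To implement this, we sample the set of basis operations \(\{{{\mathcal{B}}}_{{n}_{1}},{{\mathcal{B}}}_{{n}_{2}},\ldots \ ,{{\mathcal{B}}}_{{n}_{M}}\}\) with probability \(\frac{|{q}_{\vec{n}}|}{Q}\). We then weight each measurement outcome by a sign factor \({s}_{\vec{n}}=\,{\text{sgn}}\,({q}_{\vec{n}})\), so that the outcome we get is that of an effective observable OQ. The error-free expectation value can then be obtained via

\[ \langle O\rangle =Q\langle {O}_{Q}\rangle \]

Hence, to estimate \(\left\langle O\right\rangle \) by sampling OQ, we need CQ times more samples than when sampling O directly, where the sampling cost factor CQ is

\[ {C}_{Q}={Q}^{2} \]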

In this Article, we will mainly focus on Pauli error channels, which can be inverted using quasi-probability by employing Pauli gates as the basis operations.

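Using \({\mathcal{G}}\) to denote the super-operator that applies the Pauli operator G,

\[ {\mathcal{G}}(\rho )=G\rho {G}^{\dagger } \]

and \({\mathcal{I}}\) to denote the identity super-operator, any Pauli channel can be written in the form

\[ {{\mathcal{G}}}_{{p}_{\epsilon }}=(1-{p}_{\epsilon }){\mathcal{I}}+{p}_{\epsilon }\sum _{G\in {\mathbb{G}}-I}{\alpha }_{G}{\mathcal{G}} \]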

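with \({\sum }_{G}{\alpha }_{G}=1\), where the sum runs over the non-identity Pauli operators. We use pϵ to denote the total probability of all the non-identity components. This channel can be approximately inverted using the quasi-probability channel \({{\mathcal{G}}}_{-{p}_{\epsilon }}\), obtained by replacing pϵ with −pϵ, since

\[ {{\mathcal{G}}}_{-{p}_{\epsilon }}{{\mathcal{G}}}_{{p}_{\epsilon }}={\mathcal{I}}+O({p}_{\epsilon }^{2}) \]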

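Hence, to first order, the cost of inverting \({{\mathcal{G}}}_{{p}_{\epsilon }}\) is the cost of implementing \({{\mathcal{G}}}_{-{p}_{\epsilon }}\), which, using Eq. (8), is

\[ C={\left((1+{p}_{\epsilon })+{p}_{\epsilon }\sum _{G}{\alpha }_{G}\right)}^{2}={\left(1+2{p}_{\epsilon }\right)}^{2} \]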
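The first-order character of this inversion can be checked numerically with diagonal single-qubit PTMs. In that representation, each Pauli channel is fully described by its action on I, X, Y and Z. The error distribution αG and the helper names are illustrative assumptions. For comparison, `np.linalg.inv` gives the exact inverse obtained by inverting the PTM.

```python
import numpy as np

PAULIS = "IXYZ"


def eta(a: str, b: str) -> int:
    """Commutation sign of two single-qubit Paulis: +1 if they commute, -1 otherwise."""
    return 1 if a == "I" or b == "I" or a == b else -1


def pauli_channel_ptm(weights: dict) -> np.ndarray:
    """Diagonal PTM of rho -> sum_E weights[E] E rho E (weights may be negative)."""
    return np.diag([sum(w * eta(e, g) for e, w in weights.items()) for g in PAULIS])


def g_channel(p_eps: float, alpha: dict) -> dict:
    """Weights of (1 - p_eps) I + p_eps * sum_G alpha_G G; alpha runs over non-identity G."""
    weights = {"I": 1 - p_eps}
    weights.update({g: p_eps * a for g, a in alpha.items()})
    return weights


alpha = {"X": 0.5, "Y": 0.3, "Z": 0.2}     # illustrative error distribution, sums to 1
p = 0.01
noise = pauli_channel_ptm(g_channel(p, alpha))
approx_inverse = pauli_channel_ptm(g_channel(-p, alpha))
residual = approx_inverse @ noise - np.eye(4)           # second order in p
exact_inverse = np.linalg.inv(noise)                     # exact inverse via PTM inversion

Q = sum(abs(w) for w in g_channel(-p, alpha).values())   # 1 + 2p
cost = Q ** 2                                            # first-order cost (1 + 2p)^2
```

The residual entries are of order p² (at most 4p² here), whereas the exact PTM inverse composes with the noise to the identity.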

Here, we have only discussed approximately inverting a Pauli channel because the exact inverse channel can be hard to express in a compact analytical form. However, it can be obtained numerically by first obtaining the PTM of the noise channel and then performing matrix inversion.

Instead of removing the error channel completely, quasi-probability can also be used to transform the form of an error channel. In the case of Pauli channels, suppose we want to transform a channel of the form in Eq. (9) to

we can approximately achieve this transformation up to first order in qϵ and pϵ by applying the quasi-probability channel

This will incur the implementation cost

In the limit of small pϵ and qϵ, we have

In the last step we have used \({\sum }_{G}\left({p}_{\epsilon }{\alpha }_{G}-{q}_{\epsilon }{\beta }_{G}\right)={p}_{\epsilon }-{q}_{\epsilon }\) from ∑GαG = ∑GβG = 1.

If we are suppressing all error components evenly, or if we are simply removing certain error components, we have \({p}_{\epsilon }{\alpha }_{G}\ge {q}_{\epsilon }{\beta }_{G}\quad \forall G\in {\mathbb{G}}-I\). In this case, the cost of implementing the transformation using quasi-probability is simply

\[ C={\left(1+2({p}_{\epsilon }-{q}_{\epsilon })\right)}^{2} \]
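The following is a small sketch of this cost. It assumes the transformation channel \({\mathcal{I}}+{\sum }_{G}({q}_{\epsilon }{\beta }_{G}-{p}_{\epsilon }{\alpha }_{G})({\mathcal{G}}-{\mathcal{I}})\), which has identity weight 1 + pϵ − qϵ. This is a first-order construction assumed for the sketch, and its form may differ from the channel used in the Article. The second example shows that reshaping an error without reducing it violates pϵαG ≥ qϵβG and still costs more than 1. The helper names are hypothetical.

```python
def transformation_quasi_probs(p_eps, alpha, q_eps, beta):
    """First-order quasi-probability channel turning G_{p_eps} (weights alpha) into
    the Pauli channel with total error q_eps and weights beta.

    Assumed form: I + sum_G (q_eps*beta_G - p_eps*alpha_G) (G - I), correct to first order.
    Returns (identity coefficient, {G: coefficient}).
    """
    paulis = set(alpha) | set(beta)
    coeffs = {g: q_eps * beta.get(g, 0.0) - p_eps * alpha.get(g, 0.0) for g in paulis}
    identity = 1 - sum(coeffs.values())   # 1 + p_eps - q_eps, since alpha and beta sum to 1
    return identity, coeffs


def transformation_cost(p_eps, alpha, q_eps, beta):
    """Sampling cost factor Q^2 of the quasi-probability channel above."""
    identity, coeffs = transformation_quasi_probs(p_eps, alpha, q_eps, beta)
    Q = abs(identity) + sum(abs(c) for c in coeffs.values())
    return Q ** 2


uniform = {"X": 1 / 3, "Y": 1 / 3, "Z": 1 / 3}
# Even suppression (p*alpha_G >= q*beta_G for every G): cost is (1 + 2(p - q))^2.
even = transformation_cost(0.03, uniform, 0.01, uniform)
# Turning an X error into a Z error of the same strength: p = q, yet the cost exceeds 1.
reshape = transformation_cost(0.01, {"X": 1.0}, 0.01, {"Z": 1.0})
```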

Group errors

Here, we introduce a special kind of error channel, the group error channel. It enables us to make more analytical predictions about the error mitigation techniques discussed so far, and it will also help our understanding of error extrapolation later.

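The group error \({{\mathcal{J}}}_{p,{\mathbb{E}}}\) of the group \({\mathbb{E}}\) is defined to be

\[ {{\mathcal{J}}}_{p,{\mathbb{E}}}=(1-p){\mathcal{I}}+\frac{p}{|{\mathbb{E}}|}\sum _{E\in {\mathbb{E}}}{\mathcal{E}} \]

where, in this section, \({\mathcal{E}}(\rho )=E\rho {E}^{\dagger }\) denotes the super-operator that applies E.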

By groups we mean the subgroups of the Pauli group with a composition rule that ignores all the irrelevant phase factors. For the case of p = 1, we will call \({{\mathcal{J}}}_{1,{\mathbb{E}}}\) the pure group errors.

Many physically interesting noise models like depolarising channels, dephasing channels, Pauli-twirled swap errors and dipole–dipole errors are all group errors.

Now let us consider the effect of applying a set of Pauli symmetry checks \({\mathbb{S}}\) to the group error in Eq. (12). Using Eq. (5), \({\mathbb{S}}\) can detect and remove the components in \({{\mathcal{J}}}_{p,{\mathbb{E}}}\) that anti-commute with any element \(S\in {\mathbb{S}}\). We look at the action of \({\mathbb{S}}\) on the subset of qubits affected by \({{\mathcal{J}}}_{p,{\mathbb{E}}}\), and denote the set of these restricted operators as \({{\mathbb{S}}}_{\rm{sub}}\). The commutation relationship between \({\mathbb{S}}\) and \({\mathbb{E}}\) is equivalent to that between \({{\mathbb{S}}}_{\rm{sub}}\) and \({\mathbb{E}}\). We denote their generators as \({\widetilde{{\mathbb{S}}}}_{\rm{sub}}\) and \(\widetilde{{\mathbb{E}}}\). Note that \({{\mathbb{S}}}_{\rm{sub}}\) here is not a group; by \({\widetilde{{\mathbb{S}}}}_{\rm{sub}}\) we simply mean the set of independent elements in \({{\mathbb{S}}}_{\rm{sub}}\). For Pauli generators, we can choose \(\widetilde{{\mathbb{E}}}\) such that, for every \({\widetilde{S}}_{\rm{sub}}\in {\widetilde{{\mathbb{S}}}}_{\rm{sub}}\), at most one element in \(\widetilde{{\mathbb{E}}}\) anti-commutes with it. We will denote the elements in \(\widetilde{{\mathbb{E}}}\) that commute with all elements in \({\widetilde{{\mathbb{S}}}}_{\rm{sub}}\) as \(\widetilde{{\mathbb{Q}}}\)

and it will generate the remaining error components in \({\mathbb{E}}\) that are not detectable, which we denote as \({\mathbb{Q}}\). Hence the detectable error components are just \({\mathbb{E}}-{\mathbb{Q}}\)

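Going back to our error channel in Eq. (12), the probability that the error gets detected is just the total probability of the detectable error components

\[ {p}_{d}=\sum _{E\in {\mathbb{E}}-{\mathbb{Q}}}\frac{p}{|{\mathbb{E}}|}=\left(1-\frac{|{\mathbb{Q}}|}{|{\mathbb{E}}|}\right)p \]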

Removing the detected errors in \({{\mathcal{J}}}_{p,{\mathbb{E}}}\) and renormalising the error channel by the factor 1 − pd gives the effective channel after verification, which is just another group error channel

with

An example of removing detectable errors for depolarising channels will be worked out later in Section “Numerical simulation for multi-exponential extrapolation”.

For a given general Pauli channel, we have only discussed its approximate inverse channel in Section “Quasi-probability”. This is because its exact inverse channel can be hard to express in a compact analytical form. However, for any group channel, we can easily write down the explicit form of its inverse channel.

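As shown in Supplementary Note 2, it is easy to verify that the inverse of a group channel \({{\mathcal{J}}}_{p,{\mathbb{E}}}\) is just

\[ {{\mathcal{J}}}_{p,{\mathbb{E}}}^{-1}=(1+\alpha ){\mathcal{I}}-\alpha {{\mathcal{J}}}_{1,{\mathbb{E}}} \]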

with \(\alpha =\frac{p}{1-p}\).
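The inverse formula can be checked with diagonal PTMs. The sketch assumes the convention in which every element of \({\mathbb{E}}\), including the identity, appears in \({{\mathcal{J}}}_{p,{\mathbb{E}}}\) with weight p/|𝔼|. Under that convention, the PTM of the pure group error \({{\mathcal{J}}}_{1,{\mathbb{E}}}\) is 1 on Paulis that commute with all of \({\mathbb{E}}\) and 0 otherwise. The two example groups and the helper names are illustrative.

```python
import itertools

import numpy as np


def anticommute(a: str, b: str) -> bool:
    clashes = sum(1 for p, q in zip(a, b) if p != "I" and q != "I" and p != q)
    return clashes % 2 == 1


def pure_group_ptm(group, paulis):
    """Diagonal PTM of J_{1,E}: for each Pauli G, the average of eta(E, G) over E in the group."""
    return np.array([np.mean([-1.0 if anticommute(e, g) else 1.0 for e in group]) for g in paulis])


def group_channel_ptm(p, group, paulis):
    """Diagonal PTM of J_{p,E} = (1 - p) I + p J_{1,E}."""
    return (1 - p) + p * pure_group_ptm(group, paulis)


def group_channel_inverse_ptm(p, group, paulis):
    """Diagonal PTM of (1 + alpha) I - alpha J_{1,E}, with alpha = p / (1 - p)."""
    alpha = p / (1 - p)
    return (1 + alpha) - alpha * pure_group_ptm(group, paulis)


def inverse_cost(p, group_size):
    """Q^2 for the inverse: identity weight 1 + alpha - alpha/|E|, every other element -alpha/|E|."""
    alpha = p / (1 - p)
    Q = (1 + alpha - alpha / group_size) + (group_size - 1) * alpha / group_size
    return Q ** 2


paulis_2q = ["".join(t) for t in itertools.product("IXYZ", repeat=2)]
dephasing_group = ["II", "ZI", "IZ", "ZZ"]     # generated by Z1 and Z2
depolarising_group = paulis_2q                  # full two-qubit Pauli group (phases ignored)

# Diagonal PTMs compose by elementwise multiplication.
for grp in (dephasing_group, depolarising_group):
    product = group_channel_inverse_ptm(0.05, grp, paulis_2q) * group_channel_ptm(0.05, grp, paulis_2q)
    print(np.allclose(product, 1.0))
```

The product is the identity PTM for both groups, and `inverse_cost` reproduces \({(1+2\alpha (1-1/|{\mathbb{E}}|))}^{2}\).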

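Using Eqs. (15) and (8), the cost of using quasi-probability to invert \({{\mathcal{J}}}_{p,{\mathbb{E}}}\) is thus

\[ C={\left(1+2\alpha \left(1-\frac{1}{|{\mathbb{E}}|}\right)\right)}^{2} \]

which is the same as Eq. (10) with

\[ {p}_{\epsilon }=\alpha \left(1-\frac{1}{|{\mathbb{E}}|}\right) \]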

As shown in Eq. (14), for a given group error \({{\mathcal{J}}}_{p,{\mathbb{E}}}\), the resultant error channel after symmetry verification is another group channel \({{\mathcal{J}}}_{r,{\mathbb{Q}}}\) where \({\mathbb{Q}}\) is a subgroup of \({\mathbb{E}}\) and \(r=\frac{\left|{\mathbb{Q}}\right|}{\left|{\mathbb{E}}\right|}p\). The remaining errors can then be completely removed by implementing \({{\mathcal{J}}}_{r,{\mathbb{Q}}}^{-1}\) using quasi-probability.

Similarly, if we implement the quasi-probability inverse channel \({{\mathcal{J}}}_{r,{\mathbb{Q}}}^{-1}\) first and then perform symmetry verification, we can still completely remove the group error \({{\mathcal{J}}}_{p,{\mathbb{E}}}\). The gates we need to implement in the inverse channel \({{\mathcal{J}}}_{r,{\mathbb{Q}}}^{-1}\) will not be detected and thus will not be affected by the symmetry verification. As shown in Supplementary Note 3, the resultant error channel after applying \({{\mathcal{J}}}_{r,{\mathbb{Q}}}^{-1}\) is

This is a channel that only contains the error components that are detectable by the symmetry verification with the error rate pd.

As discussed in Section “Quasi-probability” and explicitly shown in Supplementary Note 3, we can implement additional quasi-probability operations to further reduce the error rate of the resultant channel to q ≤ pd. The resultant detectable error channel will be

NISQ limit

The number of possible error locations in the circuit, which is usually the number of gates in the circuit, will be denoted as M. These error locations might experience different noise with different error rates. From here on, instead of building our discussion around local gate error rates, we will see that a different quantity is more natural in the context of NISQ error mitigation. This is the expected number of errors occurring in each circuit run, which we call the mean circuit error count and denote as μ. To achieve quantum advantage using a NISQ machine, we would generally expect the circuit to be large enough to be classically intractable, while the mean circuit error count should not be too far beyond unity.

This is also called the Poisson limit since, by Le Cam’s theorem, the number of errors occurring in each circuit run follows a Poisson distribution. That is, the probability that l errors occur is

\[ {P}_{l}=\frac{{e}^{-\mu }{\mu }^{l}}{l!} \]

If we assume that every local error channel in the circuit can be approximated as the composition of an undetectable error channel and a detectable error channel, then symmetry verification has no effect on the undetectable error channels and we can focus only on the detectable error channels. Alternatively, as we have seen in Section “Group errors”, we can use quasi-probability to remove all the local undetectable errors in the circuit, leaving us with only detectable error channels. The expected number of detectable errors occurring in each circuit run is denoted as μd. Taking the NISQ limit and using Eq. (20), the probability that l detectable errors occur in the circuit is

\[ \frac{{e}^{-{\mu }_{d}}{\mu }_{d}^{l}}{l!} \]

Using Eq. (5), an odd number of detectable errors anti-commutes with the symmetry and gets detected, while an even number of detectable errors commutes with the symmetry and passes the verification. Therefore, the total probability that the errors in the circuit are detected by the verification of one Pauli symmetry is

\[ {P}_{d}=\sum _{l\ {\rm{odd}}}\frac{{e}^{-{\mu }_{d}}{\mu }_{d}^{l}}{l!}={e}^{-{\mu }_{d}}\sinh {\mu }_{d}=\frac{1-{e}^{-2{\mu }_{d}}}{2} \]

Note that this is upper-bounded by \(\frac{1}{2}\), i.e. at most we can catch errors in half of the circuit runs.

Hence, using Eq. (2), the cost of implementing symmetry verification for one Pauli symmetry is

\[ {C}_{s}=\frac{1}{1-{P}_{d}}=\frac{2}{1+{e}^{-2{\mu }_{d}}} \]

which is upper-bounded by 2.
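The closed forms for the detection probability and the verification cost can be checked directly against the Poisson series. The following is a minimal sketch in plain Python; the helper names are hypothetical.

```python
import math


def detection_probability_series(mu_d: float, max_l: int = 100) -> float:
    """Sum of Poisson(l; mu_d) over odd l, built term by term to avoid huge factorials."""
    term = math.exp(-mu_d)   # P_0
    total = 0.0
    for l in range(1, max_l + 1):
        term *= mu_d / l     # P_l = P_{l-1} * mu_d / l
        if l % 2 == 1:
            total += term
    return total


def detection_probability(mu_d: float) -> float:
    """Closed form (1 - exp(-2 mu_d)) / 2, never larger than 1/2."""
    return (1 - math.exp(-2 * mu_d)) / 2


def verification_cost(mu_d: float) -> float:
    """Sampling cost factor 1 / (1 - P_d) = 2 / (1 + exp(-2 mu_d)), never larger than 2."""
    return 1 / (1 - detection_probability(mu_d))


for mu in (0.5, 1.0, 2.0):
    print(mu, detection_probability_series(mu), detection_probability(mu), verification_cost(mu))
```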

After symmetry verification, the fraction of circuit runs that still have errors inside is

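In Eq. (11), we focused on applying quasi-probability to only one error channel. Assume there are M such channels in the circuit, each with error probability pϵ, that we want to transform into new error channels with error probability qϵ ≤ pϵ. Using Eq. (11) and taking the NISQ limit (Eq. (20)), the sampling cost factor of this transformation, when \({p}_{\epsilon }{\alpha }_{G}\ge {q}_{\epsilon }{\beta }_{G}\) for all non-identity G, is

\[ {C}_{Q}={\left(1+2({p}_{\epsilon }-{q}_{\epsilon })\right)}^{2M}\approx {e}^{4M({p}_{\epsilon }-{q}_{\epsilon })} \]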

At qϵ = 0, we obtain the sampling cost of removing all the noise using quasi-probability

\[ {C}_{Q}={\left(1+2{p}_{\epsilon }\right)}^{2M}\approx {e}^{4M{p}_{\epsilon }} \]

Remember that we are focusing on Pauli errors and that pϵ is defined to be the total probability of all non-identity components. This is not equivalent to the error probability p, because some definitions of the error probability include identity components, such as the group errors discussed in Section “Group errors”. Similar to the definition of pϵ, we denote the expected number of non-identity errors in each circuit run as μϵ. In the above cases, we have μϵ = Mpϵ, and similarly we can define νϵ = Mqϵ. When different noise locations experience different noise, we can apply Le Cam’s theorem with negative probabilities and focus on the zero-occurrence case. This generalises Eq. (25) to

\[ {C}_{Q}={e}^{4({\mu }_{\epsilon }-{\nu }_{\epsilon })} \]

i.e. the sampling cost of quasi-probability transformation grows exponentially with the reduction in the error rate μϵ − νϵ.
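To see the exponential growth numerically, the sketch below compares the product of per-channel costs with its large-M limit. It assumes the case pϵαG ≥ qϵβG at every location. In that case each channel contributes a factor 1 + 2(pϵ − qϵ) to Q, and the total cost is Q². The values of M and the error rates are illustrative, and the function names are hypothetical.

```python
import math


def qp_cost_exact(M: int, p_eps: float, q_eps: float = 0.0) -> float:
    """C_Q = Q^2 with Q = (1 + 2(p_eps - q_eps))^M, assuming every error component is
    suppressed (p_eps*alpha_G >= q_eps*beta_G) at each of the M locations."""
    return (1 + 2 * (p_eps - q_eps)) ** (2 * M)


def qp_cost_nisq(mu_eps: float, nu_eps: float = 0.0) -> float:
    """Large-M (Poisson-limit) form exp(4 (mu_eps - nu_eps))."""
    return math.exp(4 * (mu_eps - nu_eps))


M = 10_000
exact_removal = qp_cost_exact(M, 1e-4)             # full removal, mu_eps = 1
nisq_removal = qp_cost_nisq(M * 1e-4)              # e^4, about 54.6
exact_halving = qp_cost_exact(M, 1e-4, 5e-5)       # halve the error rate, mu - nu = 0.5
nisq_halving = qp_cost_nisq(M * 1e-4, M * 5e-5)    # e^2
```

Doubling the error reduction μϵ − νϵ squares the cost, which is the exponential scaling stated above.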

Multi-exponential error extrapolation

The idea of amplifying the hardware error rate and performing extrapolation using the original result and the noise-amplified result was first introduced by Li et al.7 and Temme et al.8, and was later successfully realised experimentally using superconducting qubits11. Endo et al.9 provided heuristic arguments on why the exponential decay curve should be used for error extrapolation in the large circuit limit, whose improved performance over linear extrapolation was validated via numerical simulations in refs. 9,12.

Let \({\mathbb{L}}\) denote the set of locations where errors have occurred. When l errors have occurred in the circuit, our observable O is transformed into a noisy observable \({O}_{\left|{\mathbb{L}}\right| = l}\). Recall that in the NISQ limit, the probability that l errors happen (denoted as Pl) follows the Poisson distribution in Eq. (21). Therefore, the expectation value of the observable O at the mean circuit error count μ is

\[ \langle {O}_{\mu }\rangle =\sum _{l=0}^{\infty }{P}_{l}\langle {O}_{|{\mathbb{L}}|=l}\rangle =\sum _{l=0}^{\infty }\frac{{e}^{-\mu }{\mu }^{l}}{l!}\langle {O}_{|{\mathbb{L}}|=l}\rangle \]

Hence, how \(\left\langle {O}_{\mu }\right\rangle \) changes with μ is entirely determined by how \(\left\langle {O}_{\left|{\mathbb{L}}\right| = l}\right\rangle \) changes with l. Suppose we fit an nth-degree polynomial in μ to \(\left\langle {O}_{\mu }\right\rangle \), for example when performing linear extrapolation7,8. Via the expressions for the moments of the Poisson distribution, we are then essentially assuming that \(\left\langle {O}_{\left|{\mathbb{L}}\right| = l}\right\rangle \) is an nth-degree polynomial in l.

At l = 0, we have the error-free result \(\left\langle O\right\rangle \):

\[ \langle {O}_{|{\mathbb{L}}|=0}\rangle =\langle O\rangle \]

At large error numbers l, in the case of stochastic errors, the circuit moves closer to a random circuit. Hence, for a non-identity Pauli observable O, we expect

\[ \mathop{\lim }\limits_{l\to \infty }\langle {O}_{|{\mathbb{L}}|=l}\rangle =0 \]

A generic polynomial in l will not satisfy the above boundary conditions. Hence, to align with them, we can instead assume an exponential decay of \(\left\langle {O}_{\left|{\mathbb{L}}\right| = l}\right\rangle \) as l increases

\[ \langle {O}_{|{\mathbb{L}}|=l}\rangle ={(1-\gamma )}^{l}\langle O\rangle \]

in which γ is the observable decay rate, satisfying 0 ≤ γ ≤ 1. Substituting this into Eq. (28) leads to an exponential function of μ

\[ \langle {O}_{\mu }\rangle ={e}^{-\gamma \mu }\langle O\rangle \]

which is just the extrapolation curve employed in ref. 9.
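The step from a per-error decay to an exponential in μ is a property of the Poisson weights, and a short numerical check makes it concrete. The decay rate, the ideal value and the helper name are illustrative assumptions. The same helper also shows that a linear dependence on l stays linear in μ, which is the assumption behind linear extrapolation.

```python
import math


def poisson_mixture(o_given_l, mu: float, max_l: int = 200) -> float:
    """<O_mu> = sum_l Poisson(l; mu) * <O_{|L|=l}>, truncated at max_l."""
    weight = math.exp(-mu)            # P_0
    total = weight * o_given_l(0)
    for l in range(1, max_l + 1):
        weight *= mu / l              # P_l from P_{l-1}
        total += weight * o_given_l(l)
    return total


O_IDEAL, GAMMA = 0.7, 0.3             # illustrative values


def geometric_decay(l: int) -> float:
    """<O_{|L|=l}> = (1 - gamma)^l <O>."""
    return O_IDEAL * (1 - GAMMA) ** l


for mu in (0.5, 1.0, 2.0):
    # The Poisson mixture matches <O> exp(-gamma mu).
    print(mu, poisson_mixture(geometric_decay, mu), O_IDEAL * math.exp(-GAMMA * mu))
```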

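Using Eq. (29), if we probe at the error rates μ and λμ (with λ ≠ 1), we can perform two-point exponential extrapolation. This gives the error-mitigated estimate of \(\left\langle O\right\rangle \), denoted as \(\left\langle {O}_{0}\right\rangle \), as

\[ \langle {O}_{0}\rangle =\langle {O}_{\mu }\rangle {\left(\frac{\langle {O}_{\mu }\rangle }{\langle {O}_{\lambda \mu }\rangle }\right)}^{\frac{1}{\lambda -1}} \]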
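The following is a sketch of the two-point estimator above. The form ⟨Oμ⟩(⟨Oμ⟩/⟨Oλμ⟩)^{1/(λ−1)} avoids fractional powers of negative numbers, so observables with negative expectation values are handled. The function name and the synthetic inputs are hypothetical.

```python
import math


def two_point_exponential_extrapolation(o_mu: float, o_lambda_mu: float, lam: float) -> float:
    """Estimate <O_0> from <O_mu> and <O_{lambda*mu}>, assuming <O_mu> = <O> exp(-gamma*mu)."""
    if lam == 1:
        raise ValueError("the two error rates must differ (lambda != 1)")
    if o_mu * o_lambda_mu <= 0:
        raise ValueError("a single exponential needs two non-zero values of the same sign")
    # o_mu / o_lambda_mu = exp(gamma * mu * (lambda - 1)), so the power below undoes exp(-gamma*mu).
    return o_mu * (o_mu / o_lambda_mu) ** (1 / (lam - 1))


# Synthetic single-exponential data: <O> = -0.4, gamma = 0.25, probed at mu = 1 and 2.
A, gamma = -0.4, 0.25
estimate = two_point_exponential_extrapolation(A * math.exp(-gamma * 1.0), A * math.exp(-gamma * 2.0), lam=2.0)
```

When the data really follow a single exponential, the estimator is exact. Any bias comes from additional exponential components, which motivates the multi-exponential analysis below.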

As discussed in Supplementary Note 7B, the sampling cost factor of performing such an extrapolation is

Now let us try to gain deeper insight into the reason behind the exponential decay of \(\left\langle {O}_{\left|{\mathbb{L}}\right| = l}\right\rangle \). If a Pauli error G occurs at the end of a circuit and we are trying to measure a Pauli observable O, then the expectation value is just

\[ {\rm{Tr}}(G\rho {G}^{\dagger }O)={\rm{Tr}}(\rho {G}^{\dagger }OG)=\eta (G,O){\rm{Tr}}(\rho O) \]

where η(G, O) is the commutation sign between G and O:

\[ \eta (G,O)=\left\{\begin{array}{ll}+1 & [G,O]=0\\ -1 & \{G,O\}=0\end{array}\right. \]

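If a pure group error \({{\mathcal{J}}}_{1,{\mathbb{E}}}\) occurs at the end of the circuit, then the resultant expectation value is

\[ {\rm{Tr}}({{\mathcal{J}}}_{1,{\mathbb{E}}}(\rho )O)=\frac{1}{|{\mathbb{E}}|}\sum _{E\in {\mathbb{E}}}\eta (E,O){\rm{Tr}}(\rho O) \]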

We use the fact that the commutation signs of elements in a Pauli subgroup compose in the same way as the elements themselves:

\[ \eta ({E}_{1}{E}_{2},O)=\eta ({E}_{1},O)\,\eta ({E}_{2},O) \]

With this, we can rewrite the above formula in terms of the generators of \({\mathbb{E}}\)

in which

Hence, if a pure group error \({{\mathcal{J}}}_{1,{\mathbb{E}}}\) occurs right before measuring a Pauli observable O, then the resultant expectation value \({\rm{Tr}}({{\mathcal{J}}}_{1,{\mathbb{E}}}(\rho )O)\) will only remain unchanged if O commutes with all elements in \({\mathbb{E}}\), otherwise the information about O is “erased” by the group error and the expectation value will be 0.
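The erasure rule can be verified by brute force on small Pauli groups. This sketch multiplies Pauli strings with phases dropped, matching the phase-free composition rule used for groups in this Article. It then compares the group average of η(E, O) with the generator-based product. All helper names are hypothetical.

```python
def multiply(a: str, b: str) -> str:
    """Product of two Pauli strings with phases ignored."""
    out = []
    for p, q in zip(a, b):
        if p == "I":
            out.append(q)
        elif q == "I":
            out.append(p)
        elif p == q:
            out.append("I")
        else:
            out.append(({"X", "Y", "Z"} - {p, q}).pop())
    return "".join(out)


def generate_group(generators):
    """All phase-free products of the generators (Pauli groups modulo phase are abelian)."""
    group = {"I" * len(generators[0])}
    for g in generators:
        group |= {multiply(g, e) for e in group}
    return group


def anticommute(a: str, b: str) -> bool:
    clashes = sum(1 for p, q in zip(a, b) if p != "I" and q != "I" and p != q)
    return clashes % 2 == 1


def erasure_factor(group, observable: str) -> float:
    """(1/|E|) sum_E eta(E, O): 1 if O commutes with the whole group, otherwise 0."""
    return sum(-1 if anticommute(e, observable) else 1 for e in group) / len(group)


def erasure_factor_from_generators(generators, observable: str) -> float:
    """Same factor via the generators: product over generators of (1 + eta) / 2."""
    factor = 1.0
    for g in generators:
        factor *= 0.0 if anticommute(g, observable) else 1.0
    return factor


bell_group = generate_group(["XX", "ZZ"])   # {II, XX, ZZ, YY}
for O in ["YY", "XI", "ZZ"]:
    print(O, erasure_factor(bell_group, O), erasure_factor_from_generators(["XX", "ZZ"], O))
```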

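If we decompose the gates in a unitary circuit \(U=\mathop{\prod }\nolimits_{m = M}^{1}{V}_{m}\) into their Pauli components, \({V}_{m}={\sum }_{{j}_{m}}{\alpha }_{m{j}_{m}}{G}_{m{j}_{m}}\), then we have

\[ U=\sum _{\vec{j}}{\alpha }_{\vec{j}}{G}_{\vec{j}} \]

where

\[ {\alpha }_{\vec{j}}=\prod _{m=1}^{M}{\alpha }_{m{j}_{m}},\qquad {G}_{\vec{j}}=\prod _{m=M}^{1}{G}_{m{j}_{m}} \]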

i.e. the circuit U can be viewed as the superposition of many Pauli circuits \({G}_{\vec{j}}\).

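The expectation value of O after applying the circuit U to ρ is

\[ {\rm{Tr}}(U\rho {U}^{\dagger }O)=\sum _{\vec{i},\vec{j}}{\alpha }_{\vec{i}}^{* }{\alpha }_{\vec{j}}{\rm{Tr}}\left(\rho {G}_{\vec{i}}^{\dagger }O{G}_{\vec{j}}\right) \]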

which is a linear sum of the measurement results for the set of effective Pauli observables \({G}_{\vec{i}}^{\dagger }O{G}_{\vec{j}}\) for different \(\vec{i}\) and \(\vec{j}\). Similar to Eq. (31), the information about \({G}_{\vec{i}}^{\dagger }O{G}_{\vec{j}}\) will either be “erased” or perfectly preserved if a group error occurs in the circuit. Assuming the average fraction of group error locations in our circuit that can erase the information about \({G}_{\vec{i}}^{\dagger }O{G}_{\vec{j}}\) is \({\gamma }_{\vec{i},\vec{j}}\), then as proven in Supplementary Note 4, in the limit of large M and non-vanishing \(1-{\gamma }_{\vec{i},\vec{j}}\), the expectation value given l errors occurred can be approximated to be

which is just a multi-exponential decay curve.

Consider the case in which many error locations in the circuit are affected by the same type of noise. In practice, there are also usually many repetitions of the circuit structures along the circuit and across the qubits. We can therefore expect many \({\gamma }_{\vec{i},\vec{j}}\) of different \(\vec{i}\) and \(\vec{j}\) to be very similar. Hence, by grouping the terms with similar \({\gamma }_{\vec{i},\vec{j}}\) together, Eq. (33) becomes

\[ \langle {O}_{|{\mathbb{L}}|=l}\rangle \approx \sum _{k=1}^{K}{A}_{k}{(1-{\gamma }_{k})}^{l} \]

where Ak is the sum of \({2}\,{\mathrm{Re}} \left\{ {\alpha }_{\vec{i}}^{* }{\alpha }_{\vec{j}}{\mathrm{Tr}}\left(\rho {G}_{\vec{i}}^{\dagger }O{G}_{\vec{j}}\right)\right\}\) for some subset of \(\vec{i}\) and \(\vec{j}\). Note that Ak are independent of l and we have \(\mathop{\sum }\nolimits_{k = 1}^{K}{A}_{k}=\left\langle O\right\rangle \).

So far we have only considered group error channels. However, as shown in Supplementary Note 5, by approximating general Pauli channels as the composition of pure group channels, we can prove that the decay of the expectation value under general Pauli noise can also be approximated by a sum of exponentials like that in Eq. (34).

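Equation (34) can be translated into a multi-exponential decay of \(\left\langle {O}_{\mu }\right\rangle \) over the mean circuit error count μ using Eq. (28)

\[ \langle {O}_{\mu }\rangle =\sum _{l=0}^{\infty }\frac{{e}^{-\mu }{\mu }^{l}}{l!}\sum _{k=1}^{K}{A}_{k}{(1-{\gamma }_{k})}^{l} \]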

This can be rewritten as

\[ \langle {O}_{\mu }\rangle =\sum _{k=1}^{K}{A}_{k}{e}^{-{\gamma }_{k}\mu } \]

Comparing with Eq. (34), we see that \(\left\langle {O}_{\mu }\right\rangle \) and \(\left\langle {O}_{\left|{\mathbb{L}}\right| = l}\right\rangle \) have the same shape up to leading order in γk, with the mean circuit error count μ in place of the circuit error count l.

The simplest shape that we can fit to \(\left\langle {O}_{\mu }\right\rangle \) is a single exponential decay curve (K = 1), which is the one used in exponential extrapolation. A natural extension is to probe \(\left\langle {O}_{\mu }\right\rangle \) at more than two different error rates and fit the results using a sum of exponentials (K > 1). The estimate of the error-free observable \(\left\langle O\right\rangle \) can then be obtained by substituting μ = 0 into the fitted curve.

From Eq. (36), we see that the kth exponential component can only survive up to the mean circuit error count \(\mu \sim \frac{1}{{\gamma }_{k}}\), thus we can only obtain information about this component by probing at the mean circuit error count \(\mu \lesssim \frac{1}{{\gamma }_{k}}\). Since 0 ≤ γk ≤ 1, we have \(\frac{1}{{\gamma }_{k}}\ge 1\) for all k, i.e. we should be able to retrieve all exponential components if we can probe enough points within μ ≲ 1. In practice, there is a minimum mean circuit error count that we can achieve, which we denote as μ*. To have an accurate multi-exponential fitting, it is essential that for all components with non-negligible Ak, we have \({\mu }^{* }\lesssim \frac{1}{{\gamma }_{k}}\), i.e. none of the critical exponential components has died away at the minimal error rate that we can probe.
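As one concrete way to fit a sum of two exponentials, the sketch below uses Prony's method, a classical linear-prediction technique for equally spaced samples. It is swapped in for illustration only and is not necessarily the fitting procedure used for the results in this Article. With four equally spaced error rates, such as μ = 0.5, 1, 1.5, 2, and K = 2, the four parameters are determined exactly. The synthetic amplitudes and decay rates are assumptions.

```python
import numpy as np


def prony_two_exponential_extrapolation(mus, values, mu_target=0.0):
    """Fit <O_mu> = A_1 exp(-gamma_1 mu) + A_2 exp(-gamma_2 mu) exactly through four
    equally spaced points with Prony's method, then evaluate the fit at mu_target."""
    mus = np.asarray(mus, dtype=float)
    y = np.asarray(values, dtype=float)
    if mus.shape != (4,) or y.shape != (4,):
        raise ValueError("this sketch expects exactly four (mu, <O_mu>) points")
    step = mus[1] - mus[0]
    if not np.allclose(np.diff(mus), step):
        raise ValueError("Prony's method needs equally spaced error rates")
    # Linear prediction y[n+2] = c1*y[n+1] + c0*y[n], written out for n = 0 and n = 1.
    c1, c0 = np.linalg.solve(np.array([[y[1], y[0]], [y[2], y[1]]]), y[2:4])
    # Roots of z^2 - c1 z - c0 are z_k = exp(-gamma_k * step).
    z = np.roots([1.0, -c1, -c0]).astype(complex)
    # Amplitudes B_k at the first probed point: y[n] = sum_k B_k z_k**n.
    B = np.linalg.solve(np.array([np.ones(2), z]), y[:2].astype(complex))
    t = (mu_target - mus[0]) / step    # target position in units of the step, relative to mus[0]
    return float(np.real(np.sum(B * z ** t)))


# Synthetic data (illustrative values, not the Article's): <O> = 0.6 + 0.3 = 0.9.
mus = [0.5, 1.0, 1.5, 2.0]
values = [0.6 * np.exp(-0.2 * m) + 0.3 * np.exp(-1.5 * m) for m in mus]
dual = prony_two_exponential_extrapolation(mus, values)
single = values[0] ** 2 / values[1]    # two-point single-exponential estimate with lambda = 2
```

With these data, the dual-exponential estimate reproduces ⟨O⟩ = 0.9 up to rounding. The two-point single-exponential estimate from μ = 0.5 and 1 is off by about 0.06. Prony's method breaks down (through a singular linear system) if the data contain only one exponential component. With noisy data, a least-squares fit over more points would be preferable.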

Numerical simulation for multi-exponential extrapolation

In this section, we apply multi-exponential extrapolation to the Fermi–Hubbard model simulation circuit outlined in ref. 3. This circuit consists of local two-qubit components corresponding to different interaction terms and to fermionic swaps, and it can be used for both eigenstate preparation and time-evolution simulation. We assume there are M of these two-qubit components and that they all suffer from two-qubit depolarising noise with error probability p. This is just a group channel of the two-qubit Pauli group (without the phase factors). Using Eq. (17), we have

Since we usually know the number of fermions in the system, we can verify the fermion number parity symmetry of the output state, which is simply

\[ {S}_{\sigma }=\prod _{i}{Z}_{i} \]

in the Jordan–Wigner qubit encoding. All the local two-qubit components in the circuit conserve the symmetry Sσ. Hence when we start in a state with the right fermion number, the output state should also have the correct fermion number, enabling us to perform symmetry verification.

By checking Sσ = ∏iZi, we can detect all error components in the local two-qubit depolarising channels that contain exactly one X or Y, since they anti-commute with Sσ. We will remove the other error components in the local two-qubit depolarising channels using quasi-probability. Following Section “Group errors”, the removed components are those that can be generated from the set \(\widetilde{{\mathbb{Q}}}=\{{Z}_{1},{Z}_{2},{X}_{1}{X}_{2}\}\). Thus we have \(\left|{\mathbb{Q}}\right|={2}^{3}=8\), and using Eq. (13) with |𝔼| = 16 we also have

\[ {p}_{d}=\left(1-\frac{8}{16}\right)p=\frac{p}{2} \]
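The counting in this paragraph can be reproduced by enumerating the 16 two-qubit Pauli operators. Restricted to the two qubits of a local gate, Sσ acts as Z⊗Z. The sketch assumes the group-channel convention in which each of the |𝔼| = 16 elements carries weight p/|𝔼|. The helper names are hypothetical.

```python
import itertools


def anticommute(a: str, b: str) -> bool:
    clashes = sum(1 for p, q in zip(a, b) if p != "I" and q != "I" and p != q)
    return clashes % 2 == 1


# Two-qubit Paulis, phases ignored; S_sigma restricted to the two qubits is "ZZ".
two_qubit_paulis = ["".join(t) for t in itertools.product("IXYZ", repeat=2)]
undetectable = [e for e in two_qubit_paulis if not anticommute(e, "ZZ")]   # the group Q
detectable = [e for e in two_qubit_paulis if anticommute(e, "ZZ")]


def detected_error_probability(p: float, group_size: int, undetectable_size: int) -> float:
    """p_d = (1 - |Q|/|E|) p, assuming each group element has weight p/|E|."""
    return (1 - undetectable_size / group_size) * p


p_d = detected_error_probability(0.01, len(two_qubit_paulis), len(undetectable))   # p / 2
```

The eight undetectable elements are exactly the products of Z1, Z2 and X1X2, and each of the eight detectable ones contains a single X or Y.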

The resultant noise channel after the application of quasi-probability is given by Eq. (19), which is just a uniform distribution of the two-qubit Pauli errors that are detectable by Sσ. We will call it the detectable noise.
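The split into detectable and removed components can be checked by brute force. The sketch below, with hypothetical helper names, writes each two-qubit Pauli (phases dropped) as a pair of x-bit and z-bit tuples, so that multiplication becomes bitwise XOR. It confirms that the group generated by {Z1, Z2, X1X2} has 2^3 = 8 elements, none of which anticommutes with Z1Z2, and that the remaining 8 Paulis are exactly the detectable ones. If, as an assumption, the depolarising channel spreads its error probability p uniformly over all 16 Paulis including the identity, half of that weight falls on detectable errors.

```python
from itertools import product

# Single-qubit Pauli from its (x bit, z bit); phases are ignored throughout.
NAMES = {(0, 0): "I", (1, 0): "X", (1, 1): "Y", (0, 1): "Z"}


def name(pauli):
    x, z = pauli
    return "".join(NAMES[(xi, zi)] for xi, zi in zip(x, z))


def multiply(a, b):
    """Product of two Paulis up to phase: XOR of the x bits and of the z bits."""
    return (tuple(i ^ j for i, j in zip(a[0], b[0])),
            tuple(i ^ j for i, j in zip(a[1], b[1])))


def generated_group(generators):
    """Close a set of Paulis under multiplication (breadth-first)."""
    identity = ((0, 0), (0, 0))
    group, frontier = {identity}, {identity}
    while frontier:
        new = {multiply(g, h) for g in frontier for h in generators} - group
        group |= new
        frontier = new
    return group


def anticommutes_with_parity(pauli):
    """P anticommutes with Z1 Z2 iff it has an odd number of X/Y factors."""
    return sum(pauli[0]) % 2 == 1


all_paulis = {(x, z) for x in product((0, 1), repeat=2) for z in product((0, 1), repeat=2)}
Z1, Z2, X1X2 = ((0, 0), (1, 0)), ((0, 0), (0, 1)), ((1, 1), (0, 0))
removed = generated_group([Z1, Z2, X1X2])
detectable = {p for p in all_paulis if anticommutes_with_parity(p)}

print(len(removed), sorted(name(p) for p in removed))
print(len(detectable), sorted(name(p) for p in detectable))
print(len(detectable) / len(all_paulis))  # detectable share of a uniform 16-Pauli channel
```

Under the stated uniform-weight assumption, the detectable share of 1/2 is consistent with the relation μd = μ/2 used for the QH simulations.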

In this section and later in Section “Numerical simulation for a combination of error mitigation techniques”, we perform numerical simulations using the circuit for the 2 × 2 half-filled Fermi–Hubbard model, which consists of 8 qubits and 144 two-qubit gates. The two-qubit gates corresponding to interaction terms are parametrised, with the parameters setting the strength of the interaction. We present results for one set of randomly chosen gate parameters (additional results for another set of random parameters are given in Supplementary Note 9). We also consider two different error scenarios: depolarising errors and detectable errors. The former is a group channel, while the latter is a more general Pauli channel. The measurements we perform are of the Pauli components of the Hamiltonian, from which we can reconstruct the energy of the output state. The simulations are performed using the Mathematica interface13 of the high-performance quantum computation simulation package QuEST14.

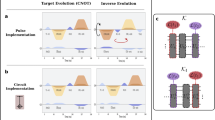

In Fig. 1, we plot the noisy expectation values \(\left\langle {O}_{\mu }\right\rangle \) for each Pauli observable at the mean circuit error counts μ = 0.5, 1, 1.5, 2. Performing multi-exponential extrapolation on them, we find that every observable can be fitted using a sum of at most two exponentials, even though our circuits should have very few symmetries since they are generated from a set of random parameters. We can now compare, for all of the noisy observables in Fig. 1, the absolute bias in the estimate of the noiseless expectation value (μ = 0) obtained using dual-exponential extrapolation against that obtained using conventional single-exponential extrapolation. The absolute biases of single- and dual-exponential extrapolation are denoted ϵ1 and ϵ2, respectively, and different colours in the plots correspond to different estimation bias ratios \(\frac{{\epsilon }_{1}}{{\epsilon }_{2}}\).

Plots showing the noisy expectation values of different Pauli observables under (a) depolarising noise and (b) detectable noise, obtained at the four mean circuit error counts μ = 0.5, 1, 1.5, 2 (cross markers). The single- and dual-exponential extrapolation curves fitted to the data points are represented by the solid and dashed lines, respectively. The circular markers lie at μ = 0 and denote the true noiseless expectation values. Different colours represent different ratios between the estimation biases of single- and dual-exponential extrapolation (e.g. orange means that, for the given observable, the estimation bias of single-exponential extrapolation is between \({10}^{3}\) and \({10}^{4}\) times larger than that of dual-exponential extrapolation).

For 32 out of the 34 observables we plotted, dual-exponential extrapolation achieves a smaller estimation bias than single-exponential extrapolation (\(\frac{{\epsilon }_{1}}{{\epsilon }_{2}}\,>\,1\)). Of the two cases in which dual-exponential extrapolation is outperformed (the green curves in Fig. 1), one is a case in which single- and dual-exponential extrapolation both achieve very small estimation biases of similar order. In the other case, the larger ϵ2 relative to ϵ1 is mainly due to the small magnitude of the true expectation value, which leads to large uncertainties in the fitting parameters. It is also because we use only the bare minimum of 4 data points to fit a dual-exponential curve with 4 free parameters, so the problem may be alleviated simply by probing at more error rates to obtain more data points. On the other hand, there are also a few cases in which ϵ1 is exceptionally large (e.g. certain orange and red curves in Fig. 1a, b). These are usually observables whose decay curves have extrema and/or cross the x-axis, so that no single-exponential curve can fit them well. We zoom into one such observable in Fig. 2. For these observables, dual-exponential extrapolation still performs extremely well and achieves \({\epsilon }_{2} \sim {10}^{-5}\), up to of order \({10}^{4}\) times lower than ϵ1 (\(\frac{{\epsilon }_{1}}{{\epsilon }_{2}} \sim 1{0}^{4}\)).

Here we have plotted the noisy expectation values at the four mean circuit error counts μ = 0.5, 1, 1.5, 2 (cross markers). The single- and dual-exponential extrapolation curves fitted to the data points are represented by the solid and dashed lines, respectively. The circular markers lie at μ = 0 and denote the true noiseless expectation values.

We now exclude the few observables above with exceptionally large ϵ1 or ϵ2 and average the remaining ϵ1 and ϵ2 to obtain a more representative comparison of dual- and single-exponential extrapolation. This is shown in Table 1, from which we see that using dual-exponential instead of single-exponential extrapolation reduces the estimation bias by a factor of tens to a hundred for both noise models. Note that in Fig. 1 the true (noiseless) expectation values, marked by filled circles, appear to the eye never to deviate from the dual-exponential (dashed) lines. In fact there are minute discrepancies, as specified in Table 1, but the extrapolation is remarkably successful.
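The text does not state which fitting routine was used. As one possibility, when the four probed points are equally spaced (as μ = 0.5, 1, 1.5, 2 are), a dual-exponential curve A1e^{−γ1μ} + A2e^{−γ2μ} can be solved exactly with Prony's method: the samples obey a two-term linear recurrence whose characteristic roots are z_k = e^{−γ_k h}, with h the spacing. The function name `dual_exponential_extrapolate` is hypothetical and the data in the usage lines are synthetic, not taken from Fig. 1.

```python
import numpy as np


def dual_exponential_extrapolate(mu, y):
    """Zero-noise estimate from four equally spaced points, assuming
    y(mu) = A1 * exp(-g1 * mu) + A2 * exp(-g2 * mu)  (Prony's method).

    Returns (estimate at mu = 0, amplitudes A_k, decay rates g_k).
    """
    mu = np.asarray(mu, dtype=float)
    y = np.asarray(y, dtype=float)
    if mu.shape != (4,) or y.shape != (4,):
        raise ValueError("need exactly four data points")
    h = mu[1] - mu[0]
    if not np.allclose(np.diff(mu), h):
        raise ValueError("error counts must be equally spaced")
    # Two-term linear recurrence obeyed by the samples: y[j+2] = c1*y[j+1] + c0*y[j].
    c1, c0 = np.linalg.solve([[y[1], y[0]], [y[2], y[1]]], [y[2], y[3]])
    # Its characteristic roots are z_k = exp(-g_k * h); complex roots mean oscillation.
    z = np.roots([1.0, -c1, -c0]).astype(complex)
    # Amplitudes referred to the first point: y[j] = B1 * z1**j + B2 * z2**j.
    B = np.linalg.solve([[1.0, 1.0], [z[0], z[1]]], y[:2].astype(complex))
    g = -np.log(z) / h
    # Shift back to mu = 0: A_k = B_k * exp(g_k * mu[0]); the estimate is A1 + A2.
    A = B * np.exp(g * mu[0])
    return float(np.real(A.sum())), A, g


# Synthetic observable with true noiseless value 0.7 - 0.3 = 0.4.
mu = np.array([0.5, 1.0, 1.5, 2.0])
y = 0.7 * np.exp(-0.6 * mu) - 0.3 * np.exp(-1.8 * mu)
estimate, amplitudes, rates = dual_exponential_extrapolate(mu, y)
print(estimate, np.sort(rates.real))
```

An exact four-point solve is sensitive to shot noise and fails when the two roots coincide, so with real data a least-squares fit over more probed error rates is likely to be more robust.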

Combination of error mitigation techniques

We have shown that extrapolating using a multi-exponential curve can be very effective assuming Pauli noise. Besides the shape of the extrapolation curve, the other key component of error extrapolation is the way the noise strength is tuned. Previously, noise has been boosted by increasing the gate pulse duration8, by applying additional gates that cancel each other12 or by simulating the noise using random gate insertion7. There are various practical challenges associated with these noise-boosting techniques; furthermore, as discussed at the end of Section “Multi-exponential error extrapolation”, data points at boosted error rates may not contain enough information for effective extrapolation. As shown in Section “Quasi-probability”, we can instead use quasi-probability to reduce the error rate and obtain a set of data points with reduced noise strength, which can then be used for extrapolation. We should expect a smaller estimation bias when using data points with reduced rather than boosted noise strength, but the sampling cost will also increase due to the use of quasi-probability. For the special case of two-point extrapolation using a single-exponential curve, with the two data points at the unmitigated error rate μ and the quasi-probability-suppressed error rate ν, the total sampling cost, as shown in Supplementary Note 7C, is

with μ = λν. We will call this special case quasi-probability with exponential extrapolation.
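For the two-point case, a single-exponential model ⟨O_x⟩ = ⟨O⟩e^{−γx} pinned down by ⟨O_μ⟩ and ⟨O_ν⟩ with μ = λν gives ln|⟨O⟩| = (λ ln|⟨O_ν⟩| − ln|⟨O_μ⟩|)/(λ − 1), independently of the unknown γ. Below is a minimal sketch of that estimator, assuming both data points are nonzero and share the sign of ⟨O⟩. The function name is hypothetical, and the sampling cost from Supplementary Note 7C is not modelled.

```python
import math


def two_point_exponential_extrapolate(o_mu, o_nu, lam):
    """Zero-noise estimate from <O_mu> and <O_nu>, where mu = lam * nu and lam > 1.

    Assumes <O_x> = <O> * exp(-gamma * x) with unknown gamma, so
    ln|<O>| = (lam * ln|<O_nu>| - ln|<O_mu>|) / (lam - 1).
    """
    if lam <= 1:
        raise ValueError("need mu > nu, i.e. lam > 1")
    if o_mu == 0 or o_nu == 0 or (o_mu > 0) != (o_nu > 0):
        raise ValueError("a single exponential needs nonzero data of one sign")
    log_abs = (lam * math.log(abs(o_nu)) - math.log(abs(o_mu))) / (lam - 1)
    return math.copysign(math.exp(log_abs), o_nu)


# Synthetic check: <O> = 0.8, gamma = 0.55, nu = 0.5, lam = 2 (so mu = 1).
o_nu = 0.8 * math.exp(-0.55 * 0.5)
o_mu = 0.8 * math.exp(-0.55 * 1.0)
print(two_point_exponential_extrapolate(o_mu, o_nu, lam=2))
```

For λ = 2, the case used later in the simulations, the estimate reduces to ⟨O_ν⟩²/⟨O_μ⟩.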

As discussed in Section “Multi-exponential error extrapolation”, the number of exponential components in the multi-exponential extrapolation can be reduced with an increased degree of symmetry in the circuit and/or if the error channels are group channels. Hence, besides using quasi-probability for error suppression, we can also use quasi-probability to transform the error channels into group errors and/or errors of similar form for easier curve fitting in the extrapolation process.

Moving on, we may wish to combine symmetry verification and quasi-probability. We can first apply quasi-probability to transform all the error channels in the circuit, with total mean error count μ, into detectable error channels with a total mean error count μd. We can then apply symmetry verification, noting that the additional quasi-probability operations may contain gates that take us from one symmetry space to another, in which case we need to adjust our symmetry verification criterion accordingly. As discussed in Section “NISQ limit”, an even number of local detectable errors can still escape the symmetry test and lead to circuit errors. We can further suppress them by applying additional quasi-probability operations, as shown in Supplementary Note 6. Alternatively, we can try to remove these remaining errors by applying error extrapolation, as we will see below.

After using quasi-probability to transform all local errors into local detectable errors with mean circuit error count μd, performing symmetry verification splits the circuit runs into two partitions: runs in which an even number of detectable errors occur, which pass the symmetry test, and runs in which an odd number occur, which fail it. Consequently, the noisy observable expectation value (Eq. (35)) can be written as the weighted sum over these two partitions

\[\left\langle {O}_{{\mu }_{d}}\right\rangle ={e}^{-{\mu }_{d}}\cosh ({\mu }_{d})\left\langle {O}_{c,{\mu }_{d}}\right\rangle +{e}^{-{\mu }_{d}}\sinh ({\mu }_{d})\left\langle {O}_{s,{\mu }_{d}}\right\rangle ,\]

in which \({e}^{-{\mu }_{d}}\cosh ({\mu }_{d})\) and \({e}^{-{\mu }_{d}}\sinh ({\mu }_{d})\) are the probabilities of an even and an odd number of errors occurring in the circuit, respectively, and \(\left\langle {O}_{c,{\mu }_{d}}\right\rangle \), \(\left\langle {O}_{s,{\mu }_{d}}\right\rangle \) are the corresponding expectation values in these two cases, with

For simplicity, we consider the case in which the decay of the expectation value \(\left\langle {O}_{{\mu }_{d}}\right\rangle \) with increasing mean circuit error count μd follows a single-exponential curve (K = 1), for which we have

which gives

Note that we have used the fact that \(\left\langle {O}_{c,{\mu }_{d}}\right\rangle \) and \(\left\langle O\right\rangle \) have the same sign since 1 − γ > 0 and μd > 0. Here, with the help of symmetry verification and quasi-probability, we can obtain an estimate of the error-free expectation value \(\left\langle O\right\rangle \) by combining the expectation values of the passed and failed runs at a single error rate μd, instead of combining the expectation values of runs at different error rates as in conventional error extrapolation. We have also assumed that we know the mean detectable circuit error count μd, which needs to be known before we can apply the quasi-probability step anyway. We call the method employed in Eq. (43) hyperbolic extrapolation.
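One way to see where a closed form for the K = 1 case can come from is sketched below. It rests on an assumption made here for illustration: detectable errors occur as a Poisson process with mean μd, and each error rescales the expectation value by 1 − γ on average, which reproduces \(\left\langle {O}_{{\mu }_{d}}\right\rangle ={e}^{-\gamma {\mu }_{d}}\left\langle O\right\rangle \). The result is a reconstruction under that assumption and may differ in form from Eqs. (41)–(43).

\[
\begin{aligned}
\left\langle O_{\mu_d}\right\rangle &= \sum_{n=0}^{\infty}\frac{e^{-\mu_d}\mu_d^{n}}{n!}(1-\gamma)^{n}\left\langle O\right\rangle = e^{-\gamma\mu_d}\left\langle O\right\rangle,\\
e^{-\mu_d}\cosh(\mu_d)\left\langle O_{c,\mu_d}\right\rangle &= \sum_{n\ \mathrm{even}}\frac{e^{-\mu_d}\mu_d^{n}}{n!}(1-\gamma)^{n}\left\langle O\right\rangle = e^{-\mu_d}\cosh\big((1-\gamma)\mu_d\big)\left\langle O\right\rangle,\\
e^{-\mu_d}\sinh(\mu_d)\left\langle O_{s,\mu_d}\right\rangle &= \sum_{n\ \mathrm{odd}}\frac{e^{-\mu_d}\mu_d^{n}}{n!}(1-\gamma)^{n}\left\langle O\right\rangle = e^{-\mu_d}\sinh\big((1-\gamma)\mu_d\big)\left\langle O\right\rangle.
\end{aligned}
\]

Applying \(\cosh^{2}x-\sinh^{2}x=1\) to the last two lines eliminates the unknown γ:

\[
\left\langle O\right\rangle^{2}=\cosh^{2}(\mu_d)\left\langle O_{c,\mu_d}\right\rangle^{2}-\sinh^{2}(\mu_d)\left\langle O_{s,\mu_d}\right\rangle^{2},
\]

with \(\left\langle O\right\rangle \) taking the sign of \(\left\langle O_{c,\mu_d}\right\rangle \) because cosh is positive. Under this model the right-hand side can never be negative, so a negative value flags data that a single exponential does not describe.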

As derived in Supplementary Note 7D, the sampling cost factor of hyperbolic extrapolation is

To combine all three error mitigation techniques, we first use quasi-probability to remove the error components that are undetectable by symmetry verification. Applying symmetry verification then splits the circuit runs into two sets, runs with an even number of errors and runs with an odd number of errors, giving two separate expectation values. Using our understanding of the decay of the expectation value from our study of error extrapolation, we can then simply combine these two erroneous expectation values to obtain the error-free expectation value. We call the full process quasi-probability with hyperbolic extrapolation, and the corresponding total sampling cost factor can be obtained using Eqs. (27) and (44)

We note that this is always smaller than the cost of pure quasi-probability, \({C}_{Q,0}={e}^{4{\mu }_{\epsilon }}\), and that its cost saving over pure quasi-probability grows as γ increases.

Numerical simulation for a combination of error mitigation techniques

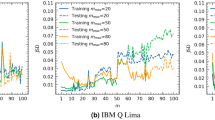

In this section, we compare the performance of quasi-probability with exponential extrapolation (QE) and quasi-probability with hyperbolic extrapolation (QH), discussed in Section “Combination of error mitigation techniques”. As in Section “Numerical simulation for multi-exponential extrapolation”, we perform Fermi–Hubbard model simulations with local two-qubit depolarising noise and mean circuit error count μ. The symmetry we use in QH is the fermion number parity symmetry, which means that the mean detectable circuit error count after the quasi-probability step in QH is \({\mu }_{d}=\frac{\mu }{2}\), following Eq. (38). For the quasi-probability in QE, we keep the same strength as in QH, so that both have the same resultant circuit error rate: \(\nu =\frac{\mu }{2}\). Note that even though the channels resulting from the partial quasi-probability in QE and QH give the same mean circuit error count \(\nu =\frac{\mu }{2}={\mu }_{d}\), the resultant noise in QE is still depolarising, whereas in QH it is locally detectable. In this section, we assume the quasi-probability process is performed perfectly. Recall that, for simplicity, we have only explicitly derived QH under the assumption that the observable follows a single-exponential decay; for a fair comparison, the QE method in this section therefore also employs only single-exponential extrapolation. However, as we will see, QH still achieves robust performance even for observables that follow a dual-exponential decay, which violates this assumption.

As shown in Fig. 1, for our example circuits, some observables can be fitted well using single-exponential decay curves, while the others can only be fitted well using dual-exponential decay curves. We call these two types single-exponential observables and dual-exponential observables, respectively. In Fig. 3, we plot the absolute estimation bias ϵest of QE and QH for the single-exponential and dual-exponential observables. First, the estimation biases for the dual-exponential observables are almost one order of magnitude higher than those for the single-exponential observables. This should not come as a surprise, since both single-exponential extrapolation and hyperbolic extrapolation are derived under the assumption of single-exponential observables. At the mean circuit error count μ = 2, we use markers to label, for each observable, which of the two methods achieves the lower estimation bias. The number of single-exponential observables with a lower estimation bias under QE is comparable to that under QH. On the other hand, almost all dual-exponential observables achieve a lower estimation bias using QH.

Plots showing (a) observables following single-exponential decay and (b) observables following dual-exponential decay. Within each plot, different colours represent different observables. The solid lines denote QH, while the dashed lines denote QE. At the mean circuit error count μ = 2, markers denote, for each observable, the method with the lower estimation bias: circular markers denote a lower estimation bias with QH, while triangular markers denote a lower estimation bias with QE.

In Table 2, we further calculate the average estimation bias of the single-exponential, dual-exponential and all observables separately at the mean circuit error counts μ = 1 and 2, which reconfirms the observations above. The estimation bias of QE is lower than that of QH for single-exponential observables, whereas QH achieves a lower estimation bias for dual-exponential observables. In other words, the performance of QH is more robust to whether or not the observable is single-exponential. Averaged over all observables, the estimation bias of QH is always lower than that of QE, and can be four times smaller at μ = 2. The all-observable averages are more indicative of the practical performance of the mitigation techniques, since in experiments we do not know beforehand whether a given observable should be fitted with a single exponential.

Looking back at the hyperbolic extrapolation formula, Eq. (43), QH offers a further layer of robustness over QE when applied to multi-exponential observables. If the decay of the observable is far from a single exponential, the quantity inside the square root of Eq. (43) may become negative. This tells us that we need to probe at more error rates and perform multi-exponential extrapolation instead, and prevents us from performing a bad extrapolation with a very large bias. In the simulation, we indeed identified a few observables on which we could not perform QH. QE can still be performed for these observables, but it leads to huge biases in the estimates. These observables have been excluded from our comparison between QE and QH.
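The recombination step and its square-root check can be sketched as follows. The sketch assumes the closed form ⟨O⟩² = cosh²(μd)⟨O_{c,μd}⟩² − sinh²(μd)⟨O_{s,μd}⟩², with ⟨O⟩ taking the sign of the passed-run average, which is what a single-exponential decay driven by Poisson-distributed detectable errors gives; it may differ in form from Eq. (43). The function names are hypothetical, and `simulate_pass_fail` is a toy noise model for generating synthetic pass and fail averages, not the paper's simulator.

```python
import math


def hyperbolic_extrapolate(o_pass, o_fail, mu_d):
    """Estimate the noiseless <O> from the passed-run and failed-run averages at
    mean detectable circuit error count mu_d, assuming single-exponential decay.

    Returns None if the radicand is negative, i.e. the data cannot come from a
    single exponential and more error rates should be probed instead.
    """
    radicand = (math.cosh(mu_d) * o_pass) ** 2 - (math.sinh(mu_d) * o_fail) ** 2
    if radicand < 0:
        return None
    # <O> shares the sign of the passed-run average, since cosh(.) > 0.
    return math.copysign(math.sqrt(radicand), o_pass)


def simulate_pass_fail(o_true, gamma, mu_d):
    """Toy model: Poisson-distributed detectable errors, each rescaling <O> by
    (1 - gamma), split into even (pass) and odd (fail) error counts."""
    o_pass = o_true * math.cosh((1 - gamma) * mu_d) / math.cosh(mu_d)
    o_fail = o_true * math.sinh((1 - gamma) * mu_d) / math.sinh(mu_d)
    return o_pass, o_fail


o_pass, o_fail = simulate_pass_fail(0.8, gamma=0.55, mu_d=1.0)
print(hyperbolic_extrapolate(o_pass, o_fail, mu_d=1.0))  # approximately 0.8
print(hyperbolic_extrapolate(0.1, 0.9, mu_d=1.0))        # None
```

Returning `None` rather than a clipped value mirrors the point made above: a negative radicand is a signal to switch to multi-exponential extrapolation, not a number to be patched.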

Using Eqs. (45), (37) and (38), the sampling cost factor of performing QH in our example circuit is

where γ is the decay rate of the observable expectation values under noise.

Using Eqs. (39), (37) and \(\nu ={\mu }_{d}=\frac{\mu }{2}\Rightarrow \lambda =2\), the sampling cost factor of performing QE is

For comparison, we also write down the sampling cost factor for removing all the errors using quasi-probability, given by Eq. (26)

The comparison between \({C}_{Q,0}\), \({C}_{QE}(\gamma )\) and \({C}_{QH}(\gamma )\) at different γ is plotted in Fig. 4. \({C}_{QH}(\gamma )\) is always lower than \({C}_{Q,0}\) across all μ and γ, i.e. applying QH instead of pure quasi-probability always saves sampling cost, as proven in Section “Combination of error mitigation techniques”. On the other hand, at γ = 1, \({C}_{QE}\) is larger than \({C}_{QH}\) for all μ, and larger than \({C}_{Q,0}\) for μ < 2.4. As γ decreases, \({C}_{QH}(\gamma )\) increases while \({C}_{QE}(\gamma )\) decreases. The two methods thus naturally complement each other: QH is more suitable for error mitigation at large γ, while QE is more suitable at small γ. At γ = 0, \({C}_{QE}\) becomes lower than both \({C}_{QH}\) and \({C}_{Q,0}\) for μ > 1.8.

In each plot of Fig. 1, the average fitted γ of the single-exponential observables lies within the range 0.5–0.6. We therefore focus on the γ = 0.5 plot in Fig. 4 to get an indication of the practical sampling costs of the different mitigation techniques.

At μ = 1, the sampling cost factor of quasi-probability is 43. QE requires a higher sampling cost, so there is no point in performing QE, since pure quasi-probability can in theory remove all the noise perfectly at a lower cost. Compared to quasi-probability, QH reduces the cost by more than 70% while still achieving the small estimation bias \({\overline{\epsilon }}_{QH} \sim 3\times 1{0}^{-3}\) shown in Table 2. For quasi-probability to have a clear advantage over QH, we must sample enough times that the shot noise of pure quasi-probability is smaller than the estimation bias of QH (more rigorous arguments in Supplementary Note 8), which requires \({N}^{* } \sim \frac{{C}_{Q,0}}{{\overline{\epsilon }}_{QH}^{2}}\approx 4.3\times 1{0}^{6}\) samples for each observable. Therefore, in practice, QH could be preferred over pure quasi-probability, as it is challenging to take more than N* samples for each observable within reasonable runtime constraints3.

At μ = 2, the sampling cost factor of quasi-probability rises to 1800, which is hardly practical. QE can halve this sampling cost while achieving an estimation bias of around \(4\times {10}^{-2}\) (Table 2), and QH can reduce it by almost 90% while achieving an estimation bias of around \(1\times {10}^{-2}\) (Table 2). Following arguments similar to the μ = 1 case, both would therefore be preferred over pure quasi-probability in practice. QH also outperforms QE in both sampling cost and estimation bias at μ = 2, and would thus be preferred over QE. The cost of QE only falls below that of QH at μ = 3.9, at which point neither sampling cost is likely to be practical.
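The quoted cost factors of pure quasi-probability can be reproduced from \({C}_{Q,0}={e}^{4{\mu }_{\epsilon }}\) if one assumes, as our own inference rather than something stated in this section, that με = 15μ/16, i.e. that the depolarising channel spreads its weight uniformly over all 16 two-qubit Paulis and the identity share needs no cancelling. The sketch also evaluates the rough break-even sample count N* ~ C_{Q,0}/ε̄²_QH; with the rounded bias 3 × 10^−3 it gives a few million samples, the same order as the figure quoted for μ = 1. Function names are hypothetical, and the QE and QH cost formulas are not reproduced here.

```python
import math


def pure_qp_cost(mu):
    """Sampling cost factor C_{Q,0} = exp(4 * mu_eps) of removing all noise by quasi-probability.

    Assumption: mu_eps = 15 * mu / 16, i.e. the identity component (1/16 of the
    depolarising weight) is not an error that needs cancelling.
    """
    mu_eps = 15 * mu / 16
    return math.exp(4 * mu_eps)


def break_even_samples(cost, bias):
    """Rough N* ~ C / bias**2: samples per observable needed before the shot noise of
    quasi-probability falls below a competing method's estimation bias."""
    return cost / bias ** 2


for mu in (1, 2):
    print(mu, round(pure_qp_cost(mu)))
print(f"{break_even_samples(pure_qp_cost(1), 3e-3):.1e}")
```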

Discussion

In this article, we have recapped and studied the mechanism and performance of three of the most well-known error mitigation techniques, symmetry verification, quasi-probability and error extrapolation, under Pauli noise. By introducing the concepts of group errors and NISQ limits, we proved that the change in the expectation value of a Pauli observable with increasing Pauli noise strength can be approximated by a multi-exponential decay, enabling us to extend exponential error extrapolation to multi-exponential extrapolation. We then performed eight-qubit numerical simulations of Fermi–Hubbard circuits under two different Pauli noise models, finding that the decay of all their Pauli expectation values can be fitted using single- or dual-exponential curves, in line with our proof of multi-exponential decay. Using the same circuits, we performed dual-exponential extrapolation by probing at four different error rates, the minimum number of data points required, and obtained a low estimation bias of \(\lesssim {10}^{-4}\) for almost all of the 34 observables, the exception being one fringe case. In our simulations, the estimation bias of dual-exponential extrapolation is on average ~100 times lower than that of single-exponential extrapolation, with the maximum factor of bias reduction reaching \(\sim {10}^{4}\).

We then proceeded to combine different error mitigation techniques in the context of well-characterised local Pauli noise. Instead of using quasi-probability to remove all the noise completely, we can use it to suppress the noise strength and then perform error extrapolation; we call this quasi-probability with exponential extrapolation (QE). Alternatively, we can use quasi-probability to remove the local undetectable noise and then perform symmetry verification. Instead of discarding all the circuit runs that fail the symmetry test, we developed a way to recombine the expectation values of the “failed” and “passed” runs to obtain an estimate of the noiseless observable. We call the full combined method quasi-probability with hyperbolic extrapolation (QH). Despite the name “extrapolation”, neither QE nor QH requires the hardware error rate to be adjusted. By performing eight-qubit Fermi–Hubbard model simulations under local depolarising noise and using the fermion number parity symmetry, we found that QH outperforms QE in both estimation bias and sampling cost in almost all cases. Compared to pure quasi-probability, QH achieves factor-of-4 and factor-of-9 sampling cost savings at the mean circuit error counts μ = 1 and μ = 2, respectively, while maintaining a low estimation bias of \({10}^{-3}\)–\({10}^{-2}\). Hence, QH would outperform pure quasi-probability in our examples unless an impractically large number of samples (millions or more) were taken per observable.

QH is derived under the assumption that the observables decay along single-exponential curves with increasing noise. Our simulations show that QH can be robust against violation of this assumption when applied to dual-exponential observables. However, such robustness may not persist as the number of exponential components increases further. A multi-exponential version of QH could be obtained by probing at more error rates and fitting Eq. (41) to the data. Alternatively, instead of probing at more error rates, we can try to verify more symmetries, obtaining a set of expectation values corresponding to different verification syndromes for a multi-exponential version of the hyperbolic fit. One example is to use the separate fermion number parity symmetries of each spin subspace, which leads to expectation values corresponding to the four possible verification syndromes. However, how to recombine these expectation values in the case of multiple symmetries, and how to use quasi-probability to transform the local error channels into forms suitable for such a recombination, is not a simple extension of the single-symmetry case considered here.

In our derivation, the number of exponential components in the expectation value decay curve in Eq. (34) is expected to scale exponentially with the number of gates. However, in our simulations, we fitted at most two exponential components for each of the observable decay curves. More analysis is needed to bridge the gap between the expected and the actual number of exponential components required, possibly based on the symmetry of the circuit. This will help us understand how the number of exponential components scales with the system size, enabling us to gauge the performance and the costs of scaling up the multi-exponential extrapolation method. It might be useful to draw ideas from non-Clifford randomized benchmarking15,16,17, in which multi-exponential decay is also employed for the fitting of the fidelity curves. When applying multi-exponential extrapolation in practice, we might want to develop Bayesian methods to determine whether we need to probe at more error rates, which error rates to probe, and whether to change the number of exponential components of our fitted curve based on the existing data. This has been done in the context of randomized benchmarking18 and it would be interesting to see its performance in the context of multi-exponential extrapolation.

One combination of error mitigation techniques that we have not explored here is pairing symmetry verification with error extrapolation without using quasi-probability. The naive version of such a combination is discussed in ref. 3. To make use of the results in this Article, one possible way is to approximate all the local error channels as the compositions of detectable and undetectable error channels, so that we can deal with them separately using hyperbolic extrapolation and exponential extrapolation. It would be very interesting to see the implementation details of such a method and how it compares to pure error extrapolation.

We have only considered Pauli noise in this Article, thus it will also be interesting to see whether our arguments can be extended to other error channels like amplitude damping or coherent errors. In practice, we can transform any error channels into Pauli channels using Pauli twirling19,20 and then apply our methods. Note that we can even perform further twirling like Clifford twirling to transform the error channels into group channels, which can be better mitigated as we have observed. Ways to transform a given error channel into a group channel can be an interesting area of investigation.
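As an illustration of the twirling step, Pauli twirling a channel with Kraus operators K_j yields the Pauli channel with error probabilities p_P = Σ_j |Tr(P K_j)|²/d², where d is the Hilbert-space dimension. The sketch applies this to single-qubit amplitude damping with damping probability g, an example channel chosen here only for illustration. The function name is hypothetical, and the sketch computes the fully twirled channel analytically rather than implementing the randomised gate sampling used in practice (refs. 19, 20).

```python
import numpy as np

PAULIS = {
    "I": np.eye(2, dtype=complex),
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}


def pauli_twirl(kraus_ops):
    """Pauli error probabilities of the Pauli-twirled single-qubit channel."""
    d = 2
    return {
        label: sum(abs(np.trace(P @ K)) ** 2 for K in kraus_ops) / d ** 2
        for label, P in PAULIS.items()
    }


g = 0.1  # example damping probability
amp_damp = [
    np.array([[1, 0], [0, np.sqrt(1 - g)]], dtype=complex),
    np.array([[0, np.sqrt(g)], [0, 0]], dtype=complex),
]
probs = pauli_twirl(amp_damp)
print(probs)
```

For amplitude damping this gives p_X = p_Y = g/4 and p_Z = ((1 − √(1 − g))/2)², a Pauli channel to which the methods above can then be applied.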

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The code used in the current study is available from the corresponding author upon reasonable request.

References

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510 (2019).

Preskill, J. Quantum Computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Cai, Z. Resource estimation for quantum variational simulations of the Hubbard model. Phys. Rev. Appl. 14, 014059 (2020).

Cade, C., Mineh, L., Montanaro, A. & Stanisic, S. Strategies for solving the Fermi-Hubbard model on near-term quantum computers. Phys. Rev. B 102, 235122 (2020).

McArdle, S., Yuan, X. & Benjamin, S. Error-mitigated digital quantum simulation. Phys. Rev. Lett. 122, 180501 (2019).

Bonet-Monroig, X., Sagastizabal, R., Singh, M. & O’Brien, T. E. Low-cost error mitigation by symmetry verification. Phys. Rev. A 98, 062339 (2018).

Li, Y. & Benjamin, S. C. Efficient variational quantum simulator incorporating active error minimization. Phys. Rev. X 7, 021050 (2017).

Temme, K., Bravyi, S. & Gambetta, J. M. Error mitigation for short-depth quantum circuits. Phys. Rev. Lett. 119, 180509 (2017).

Endo, S., Benjamin, S. C. & Li, Y. Practical quantum error mitigation for near-future applications. Phys. Rev. X 8, 031027 (2018).

Greenbaum, D. Introduction to quantum gate set tomography. Preprint at arXiv http://arxiv.org/abs/1509.02921 (2015).

Kandala, A. et al. Error mitigation extends the computational reach of a noisy quantum processor. Nature 567, 491–495 (2019).

Giurgica-Tiron, T., Hindy, Y., LaRose, R., Mari, A. & Zeng, W. J. Digital zero noise extrapolation for quantum error mitigation. In Proc 2020 IEEE International Conference on Quantum Computing and Engineering (QCE), 306–316 (2020).

Jones, T. & Benjamin, S. C. QuESTlink—Mathematica embiggened by a hardware-optimised quantum emulator. Quantum Sci. Technol. 5, 034012 (2020).

Jones, T., Brown, A., Bush, I. & Benjamin, S. C. QuEST and high performance simulation of quantum computers. Sci. Rep. 9, 1–11 (2019).

Cross, A. W., Magesan, E., Bishop, L. S., Smolin, J. A. & Gambetta, J. M. Scalable randomised benchmarking of non-Clifford gates. npj Quantum Inf. 2, 1–5 (2016).

Helsen, J., Xue, X., Vandersypen, L. M. K. & Wehner, S. A new class of efficient randomized benchmarking protocols. npj Quantum Inf. 5, 1–9 (2019).

Flammia, S. T. & Wallman, J. J. Efficient estimation of Pauli channels. ACM Trans. Quantum Comput. 1, 3:1–3:32 (2020).

Granade, C. et al. QInfer: statistical inference software for quantum applications. Quantum 1, 5 (2017).

Wallman, J. J. & Emerson, J. Noise tailoring for scalable quantum computation via randomized compiling. Phys. Rev. A 94, 052325 (2016).

Cai, Z. & Benjamin, S. C. Constructing smaller Pauli twirling sets for arbitrary error channels. Sci. Rep. 9, 1–11 (2019).

Acknowledgements

The author would like to thank Ying Li and Simon Benjamin for reading through the manuscript and providing valuable insights. The author is supported by the Junior Research Fellowship from St John’s College, Oxford and acknowledges support from Quantum Motion Technologies Ltd and the QCS Hub (EP/T001062/1).

Author information

Contributions

Z.C. is the sole author of the article.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cai, Z. Multi-exponential error extrapolation and combining error mitigation techniques for NISQ applications. npj Quantum Inf 7, 80 (2021). https://doi.org/10.1038/s41534-021-00404-3

DOI: https://doi.org/10.1038/s41534-021-00404-3
