Abstract

Analog quantum simulation is expected to be a significant application of near-term quantum devices. Verification of these devices without comparison to known simulation results will be an important task as the system size grows beyond the regime that can be simulated classically. We introduce a set of experimentally motivated verification protocols for analog quantum simulators, discussing their sensitivity to a variety of error sources and their scalability to larger system sizes. We demonstrate these protocols experimentally using a two-qubit trapped-ion analog quantum simulator and numerically using models of up to five qubits.

Introduction

Quantum simulation has long been proposed as a primary application of quantum information processing1. In particular, analog quantum simulation, in which the Hamiltonian evolution of a particular quantum system is directly implemented in an experimental device, is projected to be an important application of near-term quantum devices2, with the goal of providing solutions to problems that are infeasible for any classical computer in existence. Because the obtained solutions to these problems cannot always be checked against known results, a key requirement for these devices will be the ability to verify that the desired interactions are being carried out faithfully3,4,5. If a trusted analog quantum simulator is available, then one can certify the behavior of an untrusted analog quantum simulator6. But in the absence of a trusted device, provable verification is essentially intractable for systems of interest that are too large to simulate classically3. Therefore, in the near-term, we see a need to develop pragmatic techniques to verify these devices and thus increase confidence in the results obtained.

Many experimental platforms have been used to perform analog quantum simulations of varying types, including devices based on neutral atoms7,8,9, trapped ions10,11,12, photons13, and superconducting circuits14. In such works, validation of simulation results is typically performed by comparison to results calculated analytically or numerically in the regime where such calculation is possible. In addition, a technique for self-verification has been proposed and demonstrated12 which measures the variance of the energy to confirm that the system has reached an eigenstate of the Hamiltonian. However, this technique does not verify whether the desired Hamiltonian has been implemented faithfully.

One method which has been proposed for analog simulation verification is to run the dynamics forward and backward for equal amounts of time3, commonly known as a Loschmidt echo15,16, which ideally returns the system to its initial state. Such a method is not able to provide confidence that the parameters of the simulation are correct, nor can it detect some common sources of experimental error such as slow environmental fluctuations or crosstalk between various regions of the physical device. However, it is naturally scalable and is straightforward to implement experimentally, provided that a time-reversed version of the analog simulation can be implemented. An extension of this method similar to randomized benchmarking has also been proposed17, although this suffers from the same shortcomings just mentioned.

Another natural candidate for verification of analog simulations is to build multiple devices capable of running the same simulation and to compare the results across devices, which is a technique that has been demonstrated for both gate-based devices18 and analog simulators19. This technique has the obvious difficulty of requiring access to additional hardware, in addition to the fact that it may be difficult to perform the same analog simulation across multiple types of experimental platforms.

Experimentalists building analog quantum simulators are in need of practical proposals for validating the performance of these devices. Ideally such a protocol can be executed on a single device, can provide confidence that the target Hamiltonian is correctly implemented, and can be scaled to large systems. In this work, we aim to address these goals by introducing a set of experimentally practical approaches to the task of validating the performance of analog quantum simulators.

Results

Overview of verification protocols

The task of analog quantum simulation involves configuring a quantum system in some initial state, allowing it to evolve according to some target Hamiltonian for a particular time duration, and then analyzing one or more observables of interest. A verification protocol for this process should provide some measure of how faithfully the device implements the target Hamiltonian.

We claim that a useful protocol for verification of analog quantum simulators should have the following attributes:

Independent of numerical calculations of the system dynamics

We should not need to rely on comparison of the analog simulation results to numerically-calculated dynamics of the full system, since simulations of interest will be performed in regimes where numerical calculation is infeasible.

Efficient to measure

Verification protocols should leave the system in or near a basis state, rather than in some arbitrary state. The final state can then be characterized with only a small number of measurements, which avoids more intensive procedures such as full state tomography and reduces the experimental overhead.

Sensitive to many experimental error sources

The main objective of a verification protocol is to measure experimental imperfections. If a protocol is not sensitive to some potential sources of experimental error in the simulation, it cannot give us maximal confidence in the results.

Applicable to near-term analog quantum simulators

Unlike many benchmarking protocols for digital, gate-based quantum computers, we are not seeking a protocol which can give fine-grained information about the fidelity of a particular operation, but rather an approach which can give us coarse-grained information about the reliability of a noisy simulation.

Scalable to large systems

Many interesting near-term analog quantum simulations will likely be performed in regimes where the system size is relatively large (many tens or hundreds of qubits). A useful verification protocol for such devices should be efficiently scalable to these system sizes, given reasonable assumptions.

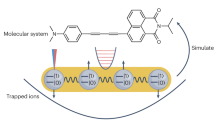

In this work, we propose a set of three verification protocols for analog quantum simulators which exhibit many of these attributes. These are illustrated in Fig. 1. The overarching strategy for each protocol, inspired by the Loschmidt echo procedure, involves asking the simulator to evolve a system from some known initial state through a closed loop in state space, eventually returning to its initial state. By using a basis state as the initial (and final) state, we can efficiently measure the success of this procedure. A number of strategies exist to construct such a closed loop, with varying pros and cons. We use a few of these strategies to construct the proposed verification protocols. These protocols are summarized in Table 1, including some types of experimental noise to which each protocol is sensitive, the hardware requirements for implementing each protocol, and the scalability constraints of each protocol.

Various protocols yield information about the accuracy of a quantum simulator by propagating a state along a closed loop and verifying to what degree the system returns to its original state, labeled here as \(\left|0\right\rangle\). The state \(\left|\psi \right\rangle\) denotes the state of the system after applying the dynamics of Hamiltonian \(H\) for a time \(\tau\), whereas the state \(\left|\phi \right\rangle\) denotes an arbitrary state. (a) Time-reversal analog verification: Running an analog simulation forward in time, followed by the same analog simulation backward in time. (b) Multi-basis analog verification: Running an analog simulation forward in time, rotating the state, performing the backward simulation by an analog version in the rotated basis, and finally rotating the state back. (c) Randomized analog verification: Running a random sequence of subsets of the Hamiltonian terms (denoted as \(H_{\rm rand}\)), followed by an inversion sequence of subsets of the Hamiltonian terms which has been calculated to return the system approximately to a basis state.

First, we propose a time-reversal analog verification protocol, in which the simulation is run both forward and backward in time. As illustrated in Fig. 1a, this approach simply performs a Loschmidt echo to reverse the time dynamics of the simulation and then verifies that the system has returned to its initial state. However, because the system traverses the same path in state space in the forward and backward directions, it is insensitive to many types of experimental errors, including systematic errors such as miscalibrations in the Hamiltonian parameters or crosstalk between sites.

To increase the susceptibility to systematic errors, we propose a multi-basis analog verification protocol, as shown in Fig. 1b. This is a variant of the time-reversal protocol in which a global rotation is performed on the system after the completion of the forward evolution, and the backward evolution is then performed in the rotated basis. Because this requires a physical implementation of the analog simulation in an additional basis, it will provide sensitivity to any systematic errors that differ between the two bases. For example, errors due to some types of shot-to-shot noise may be enhanced and not cancel out as in the previous protocol.

However, we note that the previous two protocols may still be insensitive to many types of errors, such as miscalibration or the presence of unwanted constant interaction terms. To address this, we introduce a randomized analog verification protocol, which consists of running randomized analog sequences of subsets of the target Hamiltonian terms, as depicted in Fig. 1c. In particular, we choose a set of unitary operators consisting of short, discrete time steps of each of the terms of the Hamiltonian to be simulated, which may be in either the forward or backward direction. We randomly generate long sequences of interactions, each consisting of a subset of these unitary operators, which evolves the system to some arbitrary state. We then use a Markov chain Monte Carlo search technique to approximately compile an inversion sequence using the same set of unitary operators, such that after the completion of the sequence, the system is measured to be in a basis state with high probability. This scheme is an adaptation of traditional gate-based randomized benchmarking techniques20,21 for use in characterizing an analog quantum simulator. A key difference is that for a general set of Hamiltonian terms, finding a non-trivial exact inversion of a random sequence is difficult, which is why we instead find an approximate inversion sequence. In principle, this approximation is a limitation on the precision with which this protocol can be used to verify device performance. However, in practice, the search technique can be used to produce inversion sequences that return a large percentage (e.g., 99% or more) of the population to a particular basis state, which is enough for the protocol to be useful on noisy near-term devices, since even the most accurate analog quantum simulations typically have fidelities that decay far below this level22.

Each verification protocol can then be executed for varying lengths of time, and the measurement results will provide the success probability of each protocol as a function of time. For a system that implements the target Hamiltonian perfectly, one expects this probability to remain constant, with a small offset from unity due to state preparation and measurement errors, as well as the approximation error for the inversion sequence in the randomized protocol. But if the system dynamics are not perfect, one expects the success probability to decrease as a function of time.
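As a minimal sketch of how such a decay curve might be assembled from raw data, the following Python snippet estimates the success probability and a binomial standard error at each evolution time. The function names and the measurement records are hypothetical and serve only as an illustration. They are not data from this work.

```python
import math

def success_probability(outcomes, target):
    """Fraction of shots that returned the target bitstring, with binomial standard error."""
    shots = len(outcomes)
    hits = sum(1 for outcome in outcomes if outcome == target)
    p = hits / shots
    stderr = math.sqrt(p * (1.0 - p) / shots)
    return p, stderr

def decay_curve(records, target):
    """Return a list of (tau, p, stderr) tuples sorted by evolution time tau."""
    return [(tau, *success_probability(shots, target))
            for tau, shots in sorted(records.items())]

# Hypothetical measurement records: evolution time -> list of measured bitstrings.
records = {
    0.0: ["00"] * 98 + ["01"] * 2,
    1.0: ["00"] * 90 + ["10"] * 10,
    2.0: ["00"] * 75 + ["11"] * 25,
}

curve = decay_curve(records, target="00")
for tau, p, err in curve:
    print(f"tau={tau:.1f}  p={p:.2f} +/- {err:.3f}")
```

In this toy data set, the offset from unity at the shortest time plays the role of the state preparation and measurement error, and the drop at longer times plays the role of imperfect dynamics.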

For standard randomized benchmarking protocols, the shape of the decay curve provides additional information about the errors, for example, allowing one to distinguish whether the dominant error source affecting the dynamics is Markovian or non-Markovian. For typical incoherent noise, one expects this to be an exponential decay, but for noise that is non-Markovian23,24 or low-frequency25, the decay curve may be non-exponential.

However, in general, we make no strong claim about the shape of the decay curves resulting from the analog verification protocols. In particular, randomized benchmarking requires that the gate set form an \(\epsilon\)-approximate 2-design, which is true not only of the Clifford group but also of any universal gate set, given that the randomly generated sequences are long enough26. In contrast, the time-evolution operator generated by a fixed Hamiltonian cannot approach a 2-design without adding a disorder term17, which means that we cannot directly apply randomized benchmarking theory for the time-reversal or multi-basis analog verification protocols. And even the randomized analog verification protocol, which is conceptually more similar to randomized benchmarking, does not require that the Hamiltonian terms actually generate a universal gate set or that the generated sequences are long enough to approximate a unitary 2-design.

Nonetheless, the decay curves still contain potentially useful information about the reliability of the analog quantum simulator. The protocols could be used as a tool to assist in calibrating an analog simulation by attempting to minimize the decay. Also, since each protocol has different sensitivities to errors, comparing decay curves from the various protocols may give clues to an experimentalist about the types of errors that are present.

In this work, we treat noise sources in an analog quantum simulation as modifications of the target Hamiltonian. Physically, these could be caused by variations in quantities such as laser intensity, microwave intensity, magnetic fields, or other terms which could create undesired interactions with the system. We can then represent the full Hamiltonian implemented by the system as

$$\tilde{H}(t)=H+\delta H(t),$$

where \(H\) is the target Hamiltonian to be simulated, which we assume is time-independent, and \(\delta H(t)\), whose terms have time-dependent strengths \(\lambda_k(t)\), represents any unwanted time-dependence and other miscalibrations present in the physical system. We assume that each \(\lambda_k(t)\) varies on some characteristic timescale \(t_k\). For example, if \(\lambda_k(t)\) is a stationary Gaussian process, then \(t_k\) may be the decay time of the autocorrelation function \(R(t)=\left\langle {\lambda }_{k}(0){\lambda }_{k}(t)\right\rangle\). We note that there are several distinct regimes:
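As a hedged illustration of this noise model, one explicit form consistent with the description is a sum of fixed operators with fluctuating strengths. The operator labels \(V_k\), the variances \(\sigma_k^2\), and the exponential shape of the autocorrelation are assumptions introduced here for concreteness. They are not notation taken from this work.

$$\delta H(t)=\sum_{k}{\lambda }_{k}(t)\,{V}_{k},\qquad \left\langle {\lambda }_{k}(0){\lambda }_{k}(t)\right\rangle ={\sigma }_{k}^{2}\,{e}^{-| t| /{t}_{k}}.$$

Under this assumed form, a term with \(t_k\to \infty\) reduces to a constant offset \(\lambda_k V_k\), whereas a term with very small \(t_k\) approaches white noise.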

Miscalibrations

\(t_k \gg N\tau\), where \(N\) is the number of repetitions performed in a quantum simulation experiment, and \(\tau\) is the total runtime of each repetition. This regime corresponds to miscalibrations, unwanted interactions, and other noise that varies on a very slow timescale.

Slow noise

\(N\tau > t_k > \tau\). This corresponds to noise that causes fluctuations from one run of the experiment to the next, but is roughly constant over the course of a single experiment, i.e., shot-to-shot noise.

Fast noise

\(t_k \ll \tau\). This is the type of fluctuation that is most commonly referred to as "noise", i.e., fluctuations in parameters that are much faster than the timescale of a single experiment.

We design verification protocols to detect different subsets of these noise types: the time-reversal analog verification protocol for detecting fast noise, the multi-basis analog verification protocol for additionally detecting some types of slow noise, and finally the randomized analog verification protocol for detecting miscalibrations and other unwanted interactions. These protocols are described and demonstrated in the remainder of this work.
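The three regimes can be expressed as a small classification helper. This is only a sketch: the function name, the regime labels, and in particular the factor `margin` used to stand in for "much greater than" and "much less than" are assumptions, since the text does not fix a numerical threshold.

```python
def classify_noise(t_k, n_reps, tau, margin=10.0):
    """Classify a noise term by its correlation time t_k.

    n_reps is the number of repetitions N, tau the runtime of one repetition.
    margin is an assumed factor that quantifies '>>' and '<<'.
    """
    total = n_reps * tau
    if t_k >= margin * total:
        return "miscalibration"   # t_k >> N * tau
    if t_k <= tau / margin:
        return "fast noise"       # t_k << tau
    if tau < t_k < total:
        return "slow noise"       # N * tau > t_k > tau (shot-to-shot noise)
    return "borderline"           # between regimes for the chosen margin

# Example: 100 repetitions of a 1 ms experiment.
for t_k in (1e3, 1e-2, 1e-6):
    print(t_k, classify_noise(t_k, n_reps=100, tau=1e-3))
```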

Time-reversal verification protocol

The time-reversal analog verification protocol consists of the following steps, repeated for various values of τ, which should range over the characteristic time scale of the simulation to be tested:

Step 1. Initialize the system state to an arbitrarily-chosen basis state \(\left|i\right\rangle\).

Step 2. Apply the analog simulation for time \(\tau\), that is, apply the unitary operator \(e^{-iH\tau}\), which ideally takes the system to the state \(\left|\psi \right\rangle\). (We use the convention \(\hbar = 1\) here and throughout this work.)

Step 3. Apply the analog simulation with reversed time dynamics for time \(\tau\), that is, apply the operator \(e^{+iH\tau}\), which ideally takes the system to the state \(\left|i\right\rangle\).

Step 4. Measure the final state in the computational basis. Record the probability that the final state is measured to be \(\left|i\right\rangle\).

After repeating these steps for various values of τ, a decay curve can be plotted which indicates the success probability of finding the system in the desired state as a function of simulation time.

We first note that this protocol does not provide validation of the values of any time-independent Hamiltonian parameters, because if \(\tilde{H}\) is time-independent, \({e}^{i\tilde{H}\tau }{e}^{-i\tilde{H}\tau }={\mathbb{1}}\) regardless of whether \(\tilde{H}\) is actually the desired Hamiltonian. It does, however, provide sensitivity to fast, incoherent noise that affects the system on a timescale shorter than the simulation time, and it also will detect imperfections in the implementation of the time-reversal itself.
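The insensitivity to time-independent errors can be checked numerically on a toy model. The sketch below assumes a single qubit with target Hamiltonian \(H=\sigma_x\) and a miscalibrated implementation \(\tilde{H}=1.1\,\sigma_x+0.2\,\sigma_z\). These values and the helper names are illustrative choices and are not taken from the experiments in this work.

```python
import math

def evolve(hx, hy, hz, t):
    """Closed-form single-qubit unitary exp(-i t (hx X + hy Y + hz Z))."""
    norm = math.sqrt(hx * hx + hy * hy + hz * hz)
    c, s = math.cos(norm * t), math.sin(norm * t)
    nx, ny, nz = hx / norm, hy / norm, hz / norm
    return ((c - 1j * s * nz, (-1j * nx - ny) * s),
            ((-1j * nx + ny) * s, c + 1j * s * nz))

def matmul(a, b):
    """Product of two 2x2 matrices stored as nested tuples."""
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2))
                       for j in range(2)) for i in range(2))

tau = 1.5
target = (1.0, 0.0, 0.0)   # ideal Hamiltonian H = X (assumed toy model)
actual = (1.1, 0.0, 0.2)   # constant miscalibration plus an unwanted Z term

forward = evolve(*actual, tau)     # exp(-i H~ tau)
backward = evolve(*actual, -tau)   # exp(+i H~ tau)
echo_probability = abs(matmul(backward, forward)[0][0]) ** 2

# Overlap between the state actually prepared and the ideal state exp(-i H tau)|0>.
ideal = evolve(*target, tau)
overlap = abs(sum(ideal[r][0].conjugate() * forward[r][0] for r in range(2))) ** 2

print(f"echo return probability: {echo_probability:.6f}")
print(f"overlap with ideal forward state: {overlap:.4f}")
```

The echo returns to the initial state essentially perfectly even though the forward evolution differs noticeably from the ideal one, which is the blind spot described above.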

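More formally, the forward time-evolution operator from time 0 to \(\tau\) can be written explicitly in terms of a Dyson series as

$$U(0,\tau )={\mathcal{T}}\exp \left[-i\int_{0}^{\tau }\left(H+\delta H(t)\right)dt\right],$$

where \({\mathcal{T}}\) is the time-ordering operator. The reverse time-evolution operator from time \(\tau\) to \(2\tau\) is then

$${U}_{{\rm{rev}}}(\tau ,2\tau )={\mathcal{T}}\exp \left[+i\int_{\tau }^{2\tau }\left(H+\delta H(t)\right)dt\right].$$

It is apparent that if the noise terms in the Hamiltonian are constant between times 0 and \(2\tau\), i.e., if \(\delta H(t)=\delta H\), then we have

$${U}_{{\rm{rev}}}(\tau ,2\tau )\,U(0,\tau )={e}^{+i(H+\delta H)\tau }{e}^{-i(H+\delta H)\tau }={\mathbb{1}},$$

and thus applying the forward and reverse time-evolution operators will return the system to its initial state.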

However, this is not true in general if the noise terms have time-dependence. We can illustrate this by making a simplifying assumption that the noise is piecewise constant between times 0 and \(2\tau\) as

$$\delta H(t)=\left\{\begin{array}{ll}\delta {H}_{1},&0\le t<\tau ,\\ \delta {H}_{2},&\tau \le t\le 2\tau ,\end{array}\right.$$

where \(\delta H_1\) and \(\delta H_2\) are non-commuting in general. We then perform a first-order Baker–Campbell–Hausdorff approximation, which shows that

$${e}^{+i(H+\delta {H}_{2})\tau }{e}^{-i(H+\delta {H}_{1})\tau }\approx {e}^{i(\delta {H}_{2}-\delta {H}_{1})\tau }.$$

In the general case where \(\delta H_1\ne \delta H_2\), this quantity will not be equal to the identity. A similar argument also holds if the noise terms vary on faster timescales. That is, if \(\delta H(t)\) contains one or more noise terms such that \(\lambda_k(t)\) has a correlation time \(t_k\ll \tau\), then the product of the forward and reverse time-evolution operators will not be equal to the identity in general, and the system will not return to its initial state.
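A small numerical sketch of the piecewise-constant case, again for an assumed single-qubit toy model with \(H=\sigma_x\) and noise terms proportional to \(\sigma_z\) (all parameter values and helper names are illustrative), compares the return probability when the noise is the same in both halves of the echo with the case where it changes at time \(\tau\).

```python
import math

def evolve(hx, hy, hz, t):
    """Closed-form single-qubit unitary exp(-i t (hx X + hy Y + hz Z))."""
    norm = math.sqrt(hx * hx + hy * hy + hz * hz)
    c, s = math.cos(norm * t), math.sin(norm * t)
    nx, ny, nz = hx / norm, hy / norm, hz / norm
    return ((c - 1j * s * nz, (-1j * nx - ny) * s),
            ((-1j * nx + ny) * s, c + 1j * s * nz))

def matmul(a, b):
    """Product of two 2x2 matrices stored as nested tuples."""
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2))
                       for j in range(2)) for i in range(2))

def echo_return_probability(eps_forward, eps_backward, tau=1.0):
    """Return probability to |0> for H = X with noise eps * Z in each half of the echo."""
    forward = evolve(1.0, 0.0, eps_forward, tau)      # exp(-i (H + dH1) tau)
    backward = evolve(1.0, 0.0, eps_backward, -tau)   # exp(+i (H + dH2) tau)
    return abs(matmul(backward, forward)[0][0]) ** 2

p_equal = echo_return_probability(0.3, 0.3)      # dH1 == dH2: noise cancels
p_unequal = echo_return_probability(0.3, -0.3)   # dH1 != dH2: echo is imperfect

print(f"same noise in both halves:      {p_equal:.6f}")
print(f"noise changes between halves:   {p_unequal:.6f}")
```

In this particular example the noise does not commute with \(H\), so the first-order approximation alone would underestimate the loss; the exact product is what the code evaluates.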

The time-reversal analog verification protocol requires only that the analog quantum simulator is capable of implementing the time-reversed dynamics of the desired simulation, that is, the signs of each of the Hamiltonian terms can be negated. Because there are no numerical calculations required, the protocol is independent of the size of the system, and its scalability has no inherent limitations, outside of any physical limitations involved in implementing the analog simulation itself in both directions.

Multi-basis analog verification protocol

The multi-basis analog verification protocol consists of the following steps, repeated for various values of τ, which should range over the characteristic time scale of the simulation to be tested:

Step 1. Initialize the system state to an arbitrarily-chosen basis state \(\left|i\right\rangle\).

Step 2. Apply the analog simulation for time \(\tau\), that is, apply the unitary operator \(e^{-iH\tau}\), which ideally takes the system to the state \(\left|\psi \right\rangle\).

Step 3. Apply a basis transformation \(R\) to the system to take it to the state \(R\left|\psi \right\rangle\), with \(R\) chosen such that both \(R\) and the rotated inverse Hamiltonian

$$H^{\prime} =RH{R}^{\dagger }$$

are implementable. For example, if the target Hamiltonian is

one could choose

if and only if the analog quantum simulator can physically implement the interactions R, H, and

Step 4. Apply the analog simulation in the rotated basis and with reversed time dynamics for time τ, that is, apply the operator \({e}^{+iH^{\prime} \tau }\), which ideally takes the system to the state \(R\left|i\right\rangle\).

Step 5. Apply the inverse of the rotation performed in Step 3, that is, apply a global \(-\pi /2\) rotation \({R}^{\dagger }\) to the system, which ideally takes the system back to the initial state \(\left|i\right\rangle\).

Step 6. Measure the final state in the computational basis. Record the probability that the final state is measured to be \(\left|i\right\rangle\).

After repeating these steps for various values of τ, a decay curve can be plotted which indicates the success probability of finding the system in the desired state as a function of simulation time.

We note that this protocol will detect errors such as miscalibrations or slow fluctuations if the strength of these errors differs in the two bases. Specifically, if \(\tilde{H}\) and \(\tilde{H}^{\prime}\) are the implementations in the two bases which contain noise terms \(\delta H(t)\) and \(\delta H^{\prime} (t)\), respectively, then the forward and reverse time-evolution operators can be written as

$$U(0,\tau )={\mathcal{T}}\exp \left[-i\int_{0}^{\tau }\left(H+\delta H(t)\right)dt\right],\qquad {U}_{{\rm{rev}}}^{\prime}(\tau ,2\tau )={\mathcal{T}}\exp \left[+i\int_{\tau }^{2\tau }\left(H^{\prime} +\delta H^{\prime} (t)\right)dt\right].$$

Then, even in the simplest case where we have time-independent noise terms \(\delta H(t)=\delta H\) and \(\delta H^{\prime} (t)=\delta H^{\prime}\), we see that applying the forward and reverse time-evolution operators and the appropriate basis-change operators \(R\) and \({R}^{\dagger }\) gives

$${R}^{\dagger }{e}^{+i(H^{\prime} +\delta H^{\prime} )\tau }R\,{e}^{-i(H+\delta H)\tau }={e}^{+i(H+\delta H^{\prime\prime} )\tau }{e}^{-i(H+\delta H)\tau },$$

where we have defined

$$\delta H^{\prime\prime} ={R}^{\dagger }\,\delta H^{\prime} \,R$$

as the rotation of \(\delta H^{\prime}\) into the original basis, and where we use the fact from Eq. (9) that \({R}^{\dagger }H^{\prime} R=H\). We assume here for simplicity that \(R\) and \({R}^{\dagger }\) are implemented ideally.

We observe again that the resulting quantity is not equal to the identity in the general case where \(\delta H\ne \delta H^{\prime\prime}\), as well as in the cases where \(\delta H\) and \(\delta H^{\prime\prime}\) are non-commuting with each other or with \(H\). So we can conclude that in the case that the noise terms \(\delta H(t)\) and \(\delta H^{\prime} (t)\) vary independently of each other, even if their correlation times are much longer than the timescale of a single experiment, the system will not return to its initial state when these time-evolution operators are applied.
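The following sketch illustrates this argument on an assumed single-qubit toy model: the target Hamiltonian is \(H=g\,\sigma_x\), the basis change is \(R={e}^{-i(\pi /4){\sigma }_{z}}\) (so that \(RH{R}^{\dagger }=g\,\sigma_y\)), and the two implementations carry different constant amplitude miscalibrations \(\delta\) and \(\delta ^{\prime}\). The model, parameter values, and helper names are illustrative assumptions.

```python
import math

def evolve(hx, hy, hz, t):
    """Closed-form single-qubit unitary exp(-i t (hx X + hy Y + hz Z))."""
    norm = math.sqrt(hx * hx + hy * hy + hz * hz)
    c, s = math.cos(norm * t), math.sin(norm * t)
    nx, ny, nz = hx / norm, hy / norm, hz / norm
    return ((c - 1j * s * nz, (-1j * nx - ny) * s),
            ((-1j * nx + ny) * s, c + 1j * s * nz))

def matmul(a, b):
    """Product of two 2x2 matrices stored as nested tuples."""
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2))
                       for j in range(2)) for i in range(2))

g, tau = 1.0, 2.0
delta, delta_rot = 0.10, -0.05      # constant miscalibrations in the two bases

forward = evolve(g + delta, 0.0, 0.0, tau)             # exp(-i (g + delta) X tau)
R = evolve(0.0, 0.0, 1.0, math.pi / 4)                 # maps X -> Y under R . R^dagger
R_dag = evolve(0.0, 0.0, 1.0, -math.pi / 4)
backward_rot = evolve(0.0, g + delta_rot, 0.0, -tau)   # exp(+i (g + delta') Y tau)

# Time-reversal protocol: the same miscalibrated implementation is run backward.
backward_same = evolve(g + delta, 0.0, 0.0, -tau)
p_time_reversal = abs(matmul(backward_same, forward)[0][0]) ** 2

# Multi-basis protocol: R^dagger * backward_rot * R * forward applied to |0>.
total = matmul(R_dag, matmul(backward_rot, matmul(R, forward)))
p_multi_basis = abs(total[0][0]) ** 2

print(f"time-reversal return probability: {p_time_reversal:.6f}")
print(f"multi-basis return probability:   {p_multi_basis:.6f}")
```

For this commuting toy case the multi-basis return probability is \({\cos }^{2}[(\delta ^{\prime} -\delta )\tau ]\), so the protocol registers the miscalibration only to the extent that it differs between the two bases.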

The multi-basis analog verification protocol requires that the analog quantum simulator implements the desired Hamiltonian in at least two separate bases. For example, a trapped-ion quantum simulator may implement a nearest-neighbor coupling term using both a \(\sigma_x\sigma_x\) Mølmer–Sørensen interaction27 and a \(\sigma_z\sigma_z\) geometric phase gate interaction28, which are equivalent up to a basis change. Likewise, a simulator based on superconducting qubits could implement entangling interactions in multiple bases, for example, bSWAP interactions using different phases of the microwave drive29. (Alternatively, if the device cannot implement the analog simulation in a different basis, but does implement a full universal gate set for quantum computation, the Hamiltonian may be implemented in a digital manner in an alternate basis via Trotterization.)

In addition to the multi-basis requirement, the device must also have the ability to perform single-qubit rotations in order to make the necessary basis change. But there are no numerical calculations required in advance, and thus the protocol itself is independent of the size of the system and has no inherent scalability limitations, outside of any limitations in performing the actual analog simulation in the two necessary bases.

Randomized analog verification protocol

It turns out that the previous two protocols cannot detect all types of errors. Most notably, neither protocol verifies that the simulation actually implements the target Hamiltonian H. Errors due to parameter miscalibration or the presence of unwanted constant interaction terms would not be detectable using these schemes.

To address this, we introduce a third protocol, which consists of running randomized analog sequences of subsets of the target Hamiltonian terms. In particular, we choose a set of unitary operators consisting of short, discrete time steps of each of the terms of the Hamiltonian to be simulated. We randomly generate long sequences of interactions, each consisting of a subset of these unitary operators, which evolves the system to some arbitrary state. We then use a stochastic search technique to approximately compile the inverse of these sequences using the same set of unitary operators, which produces another sequence of interactions. When this second sequence is appended to the original sequence, the system returns to the initial state (or another basis state) with high probability.

This protocol is inspired by randomized benchmarking (RB) protocols, which are often used for characterization of gate-based devices20,21,30,31,32,33,34. Most commonly, RB involves generating many random sequences of Clifford gates and appending to each sequence an inversion Clifford. Ideally, in the absence of errors, the execution of each sequence should return all of the population to a well-known basis state. Measuring the actual population of the desired basis state after the execution of each sequence allows one to calculate a metric related to the average gate fidelity of the device, which can be used to compare the performance of a wide variety of physical devices.

We note that traditional RB has limited scalability due to the complexity of implementing multi-qubit Clifford gates, and has been demonstrated only for up to three qubits35; however, RB-like protocols have been demonstrated on larger systems36,37.

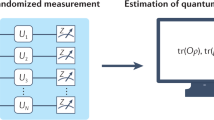

Figure 2 contains an illustration comparing the randomized analog verification protocol with the traditional Clifford-based RB protocol. We note that this protocol significantly differs from a recently-proposed technique for benchmarking analog devices17 in that we construct the approximate inversion sequence independently of the initial randomly-generated sequence, which in general prevents miscalibrations and constant errors from canceling out during the inversion step. We also implement the protocol using subsets of the Hamiltonian terms, which lends itself to scalability.

Both protocols involve generating a sequence that starts and ends in a known basis state, which is denoted \(\left|0\right\rangle\) in this figure for simplicity, and proceed by simply making a series of random choices. For traditional RB, the inversion Clifford is calculated deterministically based on the preceding sequence of random Cliffords. For randomized analog verification, the inversion sequence is compiled approximately via a stochastic search procedure.

We write the target Hamiltonian as a sum of terms

$$H=\sum_{i}{H}_{i},$$

where we assume that the simulator can enable both the forward and time-reversed version of each \(H_i\) independently of the others. We note that this protocol, in addition to being sensitive to implementation errors in the time-reversal, will also be affected by experimental errors in the enabling or disabling of the individual Hamiltonian terms.

We then repeat the following steps for various values of τ, which is the time scale on which the sequence will operate and should range over the characteristic time scale of the simulation to be tested:

Step 1. Randomly choose an initial basis state \(\left|i\right\rangle\).

Step 2. Generate n random subsets (e.g., n = 100) of the terms of the target Hamiltonian, and define

as the sum of the terms in subset k. To increase the randomness of the resulting path, choose also the direction (forward or time-reversed) of each subset at random. Apply each of the resulting unitary time-evolution operators, i.e.,

for k = 1 to n, to the initial state \(\left|i\right\rangle\), which evolves the system to an intermediate state \(\left|\phi \right\rangle\).

Step 3. Calculate another sequence of these random unitaries that will approximately invert the process and act on \(\left|\phi \right\rangle\) to produce a basis state \(\left|f\right\rangle\) within some target fidelity, e.g., 0.99. Apply the sequence, which ideally will take the system to the final state \(\left|f\right\rangle\) with probability of at least the desired target fidelity.

Step 4. Measure the final state in the computational basis. Record the probability that the final state is measured to be \(\left|f\right\rangle\).

After repeating these steps for various values of τ, the resulting decay curve indicates the success probability of finding the system in the desired state after executing the randomized sequences as a function of effective simulation time.
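The random-layer generation in Step 2 can be sketched as follows. Each layer is a non-empty subset of Hamiltonian term indices together with a direction (+1 for forward, -1 for time-reversed). The function name and the sampling rule (a uniformly random subset size, then a uniformly random subset of that size) are assumptions made for illustration; the text does not specify the distribution over subsets.

```python
import random

def random_layers(num_terms, n_layers, rng):
    """Return n_layers random (subset, direction) pairs over num_terms Hamiltonian terms."""
    layers = []
    for _ in range(n_layers):
        size = rng.randint(1, num_terms)                       # non-empty subset
        subset = tuple(sorted(rng.sample(range(num_terms), size)))
        direction = rng.choice((+1, -1))                       # forward or time-reversed
        layers.append((subset, direction))
    return layers

rng = random.Random(2021)            # fixed seed so the sequence is reproducible
layers = random_layers(num_terms=4, n_layers=100, rng=rng)
for subset, direction in layers[:3]:
    print(subset, "forward" if direction > 0 else "reversed")
```

Each pair would then be mapped to the time-evolution operator of the summed terms in the subset, applied in the chosen direction for one short time step.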

Calculating an appropriate inversion layer, using only small time steps of the Hamiltonian terms as building blocks, is the most computationally intensive part of this protocol. We cannot directly reverse the random sequence generated, since this would simply be a time-reversal, and errors such as miscalibrations or shot-to-shot noise would cancel out. Instead, we generate a new sequence by explicitly calculating the product of the random sequence of unitaries and then building a sequence which inverts it.

Since compiling an exact inversion layer (outside of simply reversing the random sequence) is likely infeasible, we allow the inversion layer to only approximately invert the original sequence, such that we return nearly all of the population to a basis state. We note that the approximate nature still allows us to assess the quality of the simulation with the targeted precision using a single measurement basis.

To construct the inversion layer, we use the STOQ protocol for approximate compilation38, which is a stochastic Markov chain Monte Carlo (MCMC) search technique using a Metropolis-like algorithm. This is a randomized approach to compiling an arbitrary unitary into a sequence of "gates" drawn from a finite set of allowed unitaries, similar to the approach used in a proposed technique for quantum-assisted quantum compiling39.

Specifically, since the set of allowed unitaries here consists of all possible random subsets of the Hamiltonian terms, we have the following procedure for approximately compiling the inversion layer (illustrated in Fig. 3):

1. Generate \(n\) randomized layers, each of which determines a unitary operation \(U_k\), as defined in Eq. (20).

2. Calculate the state after applying all \(n\) of the randomized layers to the initial state as

$$\left|\phi \right\rangle ={U}_{n}{U}_{n-1}\cdots {U}_{2}{U}_{1}\left|i\right\rangle .\tag{21}$$

3. Build up a new sequence of layers, which will become the inversion layer, by incrementally adding a randomized layer or removing a layer from the beginning or end of the sequence (such that we only have to perform one multiplication per proposed step). Let the product of these layers be \({U}_{{\rm{inv}}}\).

4. For each proposed addition or removal, look at the basis state of \({U}_{{\rm{inv}}}\left|\phi \right\rangle\) with the largest population fraction to see if it has increased or decreased from the prior state. If it has increased, the system is closer to a basis state, and therefore accept the proposed addition or removal. If it has decreased, usually reject it, but sometimes accept it, based on the value of the MCMC annealing parameter \(\beta\).

5. Continue until the largest basis state population reaches some desired threshold (e.g., 0.99), which determines the population fraction in the final basis state after executing the compiled sequence.

In order to increase the distinction between this compiled inversion sequence and the original randomly-generated sequence (which seems desirable in order to avoid potentially canceling out any systematic errors), we initialize the MCMC search algorithm with a large value of the annealing parameter β, which increases the randomness in the early part of the compiled sequence. Over time, we linearly decrease the value of β until the process finally converges toward a basis state.

Notice that because this procedure simply takes us approximately to some basis state (not necessarily the initial state), a true inversion sequence would require a final local rotation of the appropriate qubits to take the system back to the initial state. However, since the intention is simply to measure the resulting state, this final rotation is unnecessary – we can just measure the state and compare the result to the expected final basis state, rather than comparing to the initial basis state.

Because this process is randomized, it is not guaranteed to converge40. To account for this, in the implementation used for this work, we launch many tens of MCMC search processes in parallel, which in practice typically allows the search to succeed in reasonable time. For example, in the five-qubit numerical simulation described later in this section, when the original sequence has ~100 random layers, one of the MCMC processes will typically converge to the desired accuracy of 98% within a few thousand steps.
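The search described above can be sketched for a two-qubit toy case. This is a minimal illustration and not the STOQ implementation: each layer enables a single term (either σxσx or the combined σy field) rather than a random subset, proposals only append or remove the most recent layer, the acceptance rule exp(Δ/β) is an assumed Metropolis form in which β acts like a temperature, and all function names are hypothetical.

```python
import math
import random

# Two-qubit state vector: 4 complex amplitudes, basis index = 2*q1 + q2.

def apply_xx(state, theta):
    """Apply exp(-i*theta*XX); XX maps basis index i to i ^ 3."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * state[i] - 1j * s * state[i ^ 3] for i in range(4)]

def apply_y_field(state, theta):
    """Apply exp(-i*theta*(Y1 + Y2)): the same real rotation on each qubit."""
    c, s = math.cos(theta), math.sin(theta)
    for mask in (2, 1):
        new = list(state)
        for i in range(4):
            if not i & mask:
                a, b = state[i], state[i | mask]
                new[i], new[i | mask] = c * a - s * b, s * a + c * b
        state = new
    return state

TERMS = {"xx": apply_xx, "y": apply_y_field}

def random_layer(rng):
    """One randomized layer: a term and a signed angle (sign = time direction)."""
    return rng.choice(sorted(TERMS)), rng.uniform(-math.pi, math.pi)

def apply_layer(state, layer):
    term, theta = layer
    return TERMS[term](state, theta)

def max_population(state):
    return max(abs(a) ** 2 for a in state)

def compile_inversion(phi, rng, threshold=0.99, max_steps=5000, beta0=0.2):
    """Metropolis-like search for layers that bring phi close to a basis state."""
    state, seq = list(phi), []
    best_seq, best_pop = [], max_population(state)
    for step in range(max_steps):
        if best_pop >= threshold:
            break
        beta = beta0 * (1.0 - step / max_steps)  # linearly decreasing randomness
        if seq and rng.random() < 0.5:
            term, theta = seq[-1]                # propose removing the last layer
            new_state = apply_layer(state, (term, -theta))
            new_seq = seq[:-1]
        else:
            layer = random_layer(rng)            # propose appending a new layer
            new_state = apply_layer(state, layer)
            new_seq = seq + [layer]
        delta = max_population(new_state) - max_population(state)
        # Accept improvements; accept setbacks with a beta-dependent probability.
        if delta >= 0 or rng.random() < math.exp(delta / max(beta, 1e-12)):
            state, seq = new_state, new_seq
            if max_population(state) > best_pop:
                best_seq, best_pop = list(seq), max_population(state)
    return best_seq, best_pop

rng = random.Random(0)
phi = [0j, 1 + 0j, 0j, 0j]                       # start in a basis state
for _ in range(20):                              # steps 1-2: random forward layers
    phi = apply_layer(phi, random_layer(rng))
inverse_layers, population = compile_inversion(phi, rng)
print(len(inverse_layers), round(population, 3))
```

The function returns the best sequence found even if the threshold is never reached, which mirrors the point that convergence is not guaranteed. Running several searches with different seeds and keeping the first that succeeds would correspond to the parallel launches described above.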

The scalability of the randomized analog verification protocol is limited by the approximate compilation of the inversion layer. Performing this compilation requires many explicit multiplications of unitary operators acting on the full Hilbert space of the system being simulated, and thus has at least the same complexity as actually simulating the dynamics of the system. Unless a reliable quantum computer is available39, this must be done on a classical computer, and so it is likely infeasible to apply this protocol directly to systems with more than tens of qubits.

To apply this protocol to large-scale simulations, we can break the full system into subsystems33,41 to reduce the exponential scaling to polynomial scaling. Specifically, if the Hamiltonian is k-local, we can decompose the system into subsystems of size s ≥ 2k (see Fig. 4), and then run this protocol on every subsystem. This will test every interaction term, as well as potential errors such as crosstalk that may occur between any two distant interaction terms in the system. The number of such subsystems grows only polynomially with degree s, not exponentially. Since this is equivalent to testing each subsystem of size s independently, the downside of this approach is the loss of sensitivity to errors that may occur only for subsystems of size larger than s; however, in many systems, it is likely reasonable to assume that such errors are small. Additional work will be needed to understand exactly what claims one can make about the performance of the large-scale analog simulation by characterizing the subsystems in this way.

Fig. 4: Here the Hamiltonian is k-local with k = 2. Colored dashed outlines show possible subsets of size s = 4, each of which is formed from two (possibly distant) pairs of connected neighbors. The total number of ways to choose such subsets from this lattice is 3540. Running the randomized analog verification protocol on all such subsets will test for errors associated with each interaction term in the Hamiltonian, as well as errors that may be caused by unwanted interaction (e.g., crosstalk) between any two pairs of sites in the system.
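To make the polynomial scaling concrete, the following sketch enumerates four-site subsystems built from two disjoint nearest-neighbor pairs. The open-boundary square lattice and the counting convention (distinct site sets from disjoint edges) are assumptions chosen for illustration and need not match the lattice or the count in the figure.

```python
from itertools import combinations

def square_lattice_edges(rows, cols):
    """Nearest-neighbor edges of an open-boundary rows x cols lattice."""
    edges = []
    for r in range(rows):
        for c in range(cols):
            site = r * cols + c
            if c + 1 < cols:
                edges.append((site, site + 1))       # horizontal neighbor
            if r + 1 < rows:
                edges.append((site, site + cols))    # vertical neighbor
    return edges

def four_site_subsystems(edges):
    """Distinct 4-site subsets formed from two edges that share no site."""
    subsets = set()
    for a, b in combinations(edges, 2):
        sites = frozenset(a) | frozenset(b)
        if len(sites) == 4:
            subsets.add(sites)
    return subsets

for size in (3, 4, 5):
    edges = square_lattice_edges(size, size)
    n_sub = len(four_site_subsystems(edges))
    # The subsystem count is bounded by the number of edge pairs, which grows
    # polynomially in the number of sites, while the Hilbert-space dimension
    # grows exponentially.
    print(size * size, "sites:", len(edges), "edges,",
          n_sub, "subsystems, full dimension", 2 ** (size * size))
```

Each subsystem has a Hilbert space of dimension 16 regardless of the lattice size, so the classical compilation cost per subsystem stays fixed while only the number of subsystems grows.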

Experimental demonstration with trapped ions

To demonstrate the feasibility of implementing these verification protocols experimentally, we choose a simple two-site Ising model with transverse field

and we choose J = 2π × 139 Hz and b = 2π × 227 Hz. We implement this model in a trapped-ion analog quantum simulator containing two 40Ca+ ions. We use the electronic S1/2 ground orbital and D5/2 metastable excited orbital as the qubit states, and we drive transitions between these states using a 729 nm laser42. In particular, we choose \(\left|g\right\rangle =\left|{S}_{1/2},{m}_{j}=-1/2\right\rangle\) and \(\left|e\right\rangle =\left|{D}_{5/2},{m}_{j}=-1/2\right\rangle\) as the states of the two-level system.

We prepare the system in the state \(\left|eg\right\rangle\) or \(\left|ge\right\rangle\) by optically pumping the ions to the state \(\left|gg\right\rangle\), using a π-pulse with a laser beam localized to a single ion to prepare the state \(\left|eg\right\rangle\), and then optionally a π-pulse with a laser beam addressing both ions to prepare the state \(\left|ge\right\rangle\).

We then implement the Ising model by combining three tones in a laser beam that addresses both ions equally. In particular, we realize the transverse field interaction via a laser tone with Rabi frequency ΩC that is resonant with the qubit transition. This creates the desired \((b/2)({\sigma }_{y}^{(1)}+{\sigma }_{y}^{(2)})\) interaction with b = ΩC. In addition, we implement the site-site coupling using a Mølmer–Sørensen interaction27 mediated by the axial stretch vibrational mode with ωax ≈ 2π × 1.514 MHz, where we apply two laser tones detuned from the qubit transition frequency by ±(ωax + δMS), with δMS = 2π × 80 kHz, and where each tone has Rabi frequency ΩMS. This creates an effective \((J/2){\sigma }_{x}^{(1)}{\sigma }_{x}^{(2)}\) interaction with \(J={\eta }_{{\rm{ax}}}^{2}{{{\Omega }}}_{{\rm{MS}}}^{2}/{\delta }_{{\rm{MS}}}\), where ηax ≈ 0.08 is the Lamb–Dicke parameter indicating the coupling of the laser beam to the axial mode of the ion crystal, and we tune ΩMS to produce the desired value of the coupling strength J.
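As a rough worked example of the tuning step, the relation J = ηax² ΩMS² / δMS can be inverted for the sideband Rabi frequency. The sketch below uses only the values quoted in the text and treats the relation as exact, which ignores any corrections (such as Stark shifts) that a real calibration would include. The function names are hypothetical.

```python
import math

TWO_PI = 2.0 * math.pi

def ms_coupling(eta, rabi, detuning):
    """Effective coupling J = eta^2 * Omega_MS^2 / delta_MS (angular frequencies)."""
    return eta ** 2 * rabi ** 2 / detuning

def rabi_for_coupling(eta, coupling, detuning):
    """Invert the relation above for the Rabi frequency of each sideband tone."""
    return math.sqrt(coupling * detuning) / eta

eta_ax = 0.08                 # Lamb-Dicke parameter from the text
delta_ms = TWO_PI * 80e3      # Molmer-Sorensen detuning, rad/s
j_target = TWO_PI * 139.0     # target coupling strength, rad/s

omega_ms = rabi_for_coupling(eta_ax, j_target, delta_ms)
print(round(omega_ms / TWO_PI / 1e3, 1), "kHz")   # about 41.7 kHz
```

Under these assumptions each sideband tone would need a Rabi frequency of roughly 2π × 42 kHz.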

In addition to designing the analog simulation itself, we must also implement the time-reversed and rotated versions of the simulation to carry out the desired verification protocols. For the time-reversal analog verification protocol, we take H to −H by shifting the phase of the resonant tone by π, which takes b to −b in the transverse field interaction, and by changing the Mølmer–Sørensen detuning from δMS to −δMS (with a small correction to account for a change in AC Stark shift), which takes J to −J in the effective \({\sigma }_{x}^{(1)}{\sigma }_{x}^{(2)}\) interaction.

For the multi-basis analog verification protocol, we choose the basis rotation

which is a global π/2 rotation around the z-axis. We implement R physically via a sequence of single-qubit carrier rotations, using the fact that

We then must implement RHR†, which is the Hamiltonian in the rotated basis. For the transverse field term, we note that

which we implement by simply shifting the phase of the resonant tone by π/2 as compared to the phase used to implement \({\sigma }_{y}^{(1)}+{\sigma }_{y}^{(2)}\). For the coupling term, we note that

which we implement by shifting the phase of the blue-sideband Mølmer–Sørensen tone by π with respect to the red-sideband tone43.

Finally, for the randomized analog verification protocol, we write the target Hamiltonian from Eq. (22) as

where H1 and H2 are defined as

We then generate 200 random sequences of subsets of these Hamiltonian terms in either the forward or time-reversed direction, such that each step of each sequence is selected from the set

and each sequence consists of 10 ≤ n ≤ 50 steps of length 8 μs ≤ tstep ≤ 290 μs. For each sequence, we then compile an approximate inversion sequence consisting of steps from the same set Hsteps. Each sequence has a randomly-chosen initial state from the set \(\{\left|ge\right\rangle ,\left|eg\right\rangle \}\), and each full sequence ideally leaves the system in some basis state with at least 98% probability. The terms in the set Hsteps are implemented experimentally by enabling or disabling the corresponding laser tones and by time-reversing the analog simulation as necessary.
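The sequence generation can be sketched as follows. The step labels are placeholders standing in for the elements of Hsteps, and uniform sampling of the step count and step duration is an assumption, since the text gives only the ranges.

```python
import random

# Hypothetical labels standing in for the elements of the set H_steps:
# forward (+) or time-reversed (-) subsets of the terms H1 and H2.
STEP_LABELS = ["+H1", "-H1", "+H2", "-H2", "+H1+H2", "-H1-H2"]
INITIAL_STATES = ["ge", "eg"]

def random_sequence(rng, n_range=(10, 50), t_range_us=(8.0, 290.0)):
    """Draw one random sequence: an initial state plus (label, duration) steps."""
    n_steps = rng.randint(*n_range)               # inclusive on both ends
    steps = [(rng.choice(STEP_LABELS), rng.uniform(*t_range_us))
             for _ in range(n_steps)]
    return {"initial_state": rng.choice(INITIAL_STATES), "steps": steps}

rng = random.Random(2021)
sequences = [random_sequence(rng) for _ in range(200)]
print(len(sequences), "sequences; first has", len(sequences[0]["steps"]), "steps")
```

Each generated sequence would then be passed to the approximate inversion compiler before being run on the device.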

To test the behavior of each of these protocols, we execute the time-reversal and multi-basis analog verification protocols for varying simulation times and execute all 200 of the randomized analog verification sequences. The results of these experimental runs are shown in Fig. 5. To produce these results, we executed each protocol under three different sets of experimentally-motivated noise conditions:

1. No injected noise: We execute each of the verification protocols after calibrating the individual interactions to approximately match the desired dynamics.

2. Slow noise injected: We introduce shot-to-shot fluctuations by intentionally varying the intensity of each of the three tones in the laser beam using parameters drawn from a Gaussian distribution with relative standard deviation of 3 dB. The parameter variations in the original basis are drawn independently from those in the rotated basis, which emulates the case where the system has independent noise sources in the two bases.

3. Parameter miscalibration: We intentionally miscalibrate the Mølmer–Sørensen detuning to δMS = 2π × 60 kHz. Because J is inversely proportional to δMS, this increases the coupling strength J by one third (to 4/3 of its calibrated value).
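The size of the injected miscalibration follows directly from the relation \(J={\eta }_{{\rm{ax}}}^{2}{{{\Omega }}}_{{\rm{MS}}}^{2}/{\delta }_{{\rm{MS}}}\) quoted earlier, assuming that ΩMS and ηax are held fixed while only the detuning changes (the primed symbols are introduced here for the miscalibrated values):

```latex
\frac{J'}{J} = \frac{\delta_{\rm MS}}{\delta'_{\rm MS}}
             = \frac{2\pi \times 80\ {\rm kHz}}{2\pi \times 60\ {\rm kHz}}
             = \frac{4}{3},
\qquad
J' \approx \frac{4}{3} \times 2\pi \times 139\ {\rm Hz} \approx 2\pi \times 185\ {\rm Hz}.
```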

Fig. 5: Results are for the two-site Ising model from Eq. (22), with J = 2π × 139 Hz and b = 2π × 227 Hz. Each plot shows the experimentally-measured population in the expected final state after running each of the verification protocols under the specified type of injected noise. Data points represent raw experimental results and include experimental errors due to state preparation, measurement, and imperfect control. For the time-reversal and multi-basis analog verification protocols, each data point represents the distribution of measured results over 200 independent runs. For the randomized analog verification protocol, each data point represents the distribution of measured results of ten different randomly generated sequences, with each sequence executed 100 times. Error bars indicate standard error of the mean.

To provide more insight into the results of these protocols, in Fig. 6 we plot the actual population dynamics of the analog simulation in the absence of injected noise. We observe that the implemented simulation diverges significantly from the ideal simulation after only a few milliseconds, primarily due to miscalibration and dephasing noise. We intentionally allow this divergence as a test case for the various verification protocols, since it is caused by errors that may be typical in experiments. The miscalibration here is due to laser intensities and/or frequencies that have not been optimized to produce the desired dynamics, and the dephasing noise is likely caused by the presence of global magnetic field fluctuations which cause the state to decohere when leaving the subspace \(\{\left|ge\right\rangle ,\left|eg\right\rangle \}\), which is a decoherence-free subspace with respect to the global magnetic field.

Fig. 6: Each data point is the average of 100 independent runs. Error bars indicate standard error of the mean. The dotted curves represent the ideal dynamics of a perfectly-calibrated analog simulation (J = 2π × 139 Hz, b = 2π × 227 Hz) in the absence of noise. The solid curves represent the theoretical dynamics of a miscalibrated analog simulation (J = 2π × 250 Hz, b = 2π × 102 Hz) with a dephasing rate of γϕ = 2π × 38 Hz, where these parameters are chosen empirically as a reasonable approximation of the observed experimental data points. The dashed curve is the fidelity of the state evolved according to the miscalibrated dynamics with the state evolved according to the ideal dynamics, calculated using Eq. (31).

Also plotted in Fig. 6 is a curve showing the fidelity between an ideal evolution of the system state and an approximation of the system state obtained experimentally. For the ideal Hamiltonian H, defined in Eq. (22), we use the target values (J = 2π × 139 Hz, b = 2π × 227 Hz) and perform unitary evolution under the Schrödinger equation to obtain the dynamics of the ideal state \(\rho (t)=\left|\psi (t)\right\rangle \left\langle \psi (t)\right|\), where \(\left|\psi (t)\right\rangle ={e}^{-iHt}\left|\psi (0)\right\rangle\). For the experimentally-miscalibrated Hamiltonian \(\tilde{H}\), we use parameters that approximately match the observed measurements (J = 2π × 250 Hz, b = 2π × 102 Hz) with an appropriate dephasing rate (γϕ = 2π × 38 Hz). We then perform non-unitary evolution under the Lindblad master equation, using the Lindblad operator \(L=\sqrt{{\gamma }_{\phi }/2}\ {\sigma }_{z}\) as the dephasing mechanism, to obtain the approximate dynamics of the experimentally-obtained state \(\tilde{\rho }(t)\). The approximate fidelity between the ideal state and the experimentally-obtained state is then

The fidelity curve plotted in Fig. 6 is this approximate fidelity function \(\tilde{F}(t)\), and we observe that it decays to 50% in ~7 ms.
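For reference, the non-unitary evolution described above can be written in the standard Lindblad form. The expression below takes ħ = 1 and assumes one dephasing operator per ion, with the index j introduced here for illustration. The second line is the usual fidelity between a pure state and a mixed state, given as the standard definition and under the assumption that it is the quantity intended by the approximate fidelity.

```latex
\frac{d\tilde{\rho}}{dt} = -i\,[\tilde{H},\tilde{\rho}]
  + \sum_{j=1}^{2}\left( L_j\,\tilde{\rho}\,L_j^{\dagger}
  - \tfrac{1}{2}\left\{ L_j^{\dagger}L_j,\ \tilde{\rho}\right\} \right),
\qquad L_j = \sqrt{\gamma_{\phi}/2}\;\sigma_z^{(j)},
\\[6pt]
F\big(\rho(t),\tilde{\rho}(t)\big)
  = \left\langle \psi(t)\right|\tilde{\rho}(t)\left|\psi(t)\right\rangle .
```

Because \(\sigma_z^2\) is the identity, each dissipator reduces to \((\gamma_{\phi}/2)(\sigma_z^{(j)}\tilde{\rho}\,\sigma_z^{(j)}-\tilde{\rho})\), so single-qubit coherences decay at rate γϕ while populations in the σz basis are unaffected by the dephasing term.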

Despite this fast decay of the fidelity, we note that in the absence of additional injected noise, both the time-reversal and multi-basis analog verification protocols in Fig. 5(a) show decay times on the order of tens of milliseconds. Because these protocols are sensitive to fast, incoherent noise, we deduce that the majority of the errors present in the experiment are slower than the timescale of each experiment and are therefore canceled out by these protocols.

Conversely, we consider the results of the randomized analog verification protocol with no injected noise in Fig. 5(a). The success probability decays in ~3 ms, which is slightly faster than the fidelity decay observed in Fig. 6. This suggests that the randomized protocol at least detects the experimental miscalibrations or coherent errors that cause the actual simulation dynamics to differ from the ideal dynamics. That is, the randomized analog verification protocol helps to identify imperfections in the simulation with respect to the target Hamiltonian, which is something that the other protocols are unable to do. In addition, the faster decay of the randomized analog verification results as compared to the approximate fidelity curve in Fig. 6 indicates that there are additional sources of experimental error that are not captured by the population dynamics alone. For example, the experimental procedure involves rapidly enabling and disabling the various interaction terms, which may itself introduce imperfections that cause the success probability to decay more rapidly. Indeed, comparing the randomized analog verification protocol results with no injected noise in Fig. 5(a) against those with injected noise in Fig. 5(b) and (c) indicates that the experimental errors in the simulation dwarf the errors caused by the injected noise.

Finally, we note that a number of the experimental data series in Fig. 5 show hints of oscillatory behavior, and that in general the shape of each decay curve is non-exponential. This is evidence supporting the claim that these protocols do not fully twirl coherent errors into incoherent errors, and thus do not produce a fully depolarizing channel that would produce an exponential decay in these results.

Numerical demonstration under simulated noise conditions

To further test the sensitivity of each protocol to various types of noise, we numerically simulated the dynamics of the verification protocols using the five-site Heisenberg model

Nominally, we fix all parameter values as \({b}^{(i)}={J}_{x}^{(i,j)}={J}_{y}^{(i,j)}={J}_{z}^{(i,j)}=2\pi \, \times \, 1\ {\rm{kHz}}\), but we vary each of these parameters during the simulation according to several different types of potential experimental noise. We simulated the dynamics of each protocol under conditions with several classes of noise sources present individually:

1. Fast incoherent noise: The b and J terms in the Hamiltonian have fast noise, modeled as an Ornstein–Uhlenbeck process with a correlation time on the order of τ/n, which is approximately the duration of one step of the randomized analog verification protocol.

2. Slow parameter fluctuations: The b and J terms in the Hamiltonian have slow noise (modeled as a constant miscalibration that varies from run to run with a Gaussian distribution) that has a typical timescale longer than τ, but shorter than the time between individual experiments.

3. Parameter miscalibration: Each of the b and J terms in the Hamiltonian is miscalibrated from the desired value.

4. Idle crosstalk: Each of the interaction terms in the Hamiltonian, when disabled, still drives the interaction with some fraction of the intended strength. For example, during steps of the randomized analog verification protocol in which the \({\sigma }_{y}^{(1)}{\sigma }_{y}^{(2)}\) interaction is intended to be turned off, we still include a fraction of that term in the Hamiltonian being simulated.
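The four noise classes can be sketched as simple parameter generators. This is an illustrative sketch, not the simulation code used for the results: noise is expressed as a relative deviation of a nominal parameter, the Ornstein–Uhlenbeck trace uses the exact discrete-time update for a stationary process, and all function names are hypothetical.

```python
import math
import random

def ou_trace(rng, n_steps, dt, tau, rsd):
    """Fast noise: relative deviations from an Ornstein-Uhlenbeck process
    with correlation time tau and stationary standard deviation rsd."""
    decay = math.exp(-dt / tau)
    kick = rsd * math.sqrt(1.0 - decay ** 2)
    x = rng.gauss(0.0, rsd)                  # start in the stationary distribution
    trace = []
    for _ in range(n_steps):
        trace.append(x)
        x = decay * x + kick * rng.gauss(0.0, 1.0)
    return trace

def run_offset(rng, rsd):
    """Slow noise or miscalibration: one Gaussian relative offset, held
    constant for a whole run (slow noise redraws it for every run)."""
    return rng.gauss(0.0, rsd)

def noisy_parameter(nominal, relative_deviation):
    """Apply a relative deviation to a nominal b or J value."""
    return nominal * (1.0 + relative_deviation)

def step_strength(nominal, enabled, crosstalk_fraction):
    """Idle crosstalk: a disabled term still acts with a fraction of its strength."""
    return nominal if enabled else crosstalk_fraction * nominal

rng = random.Random(0)
j_nominal = 2.0 * math.pi * 1e3              # 2*pi x 1 kHz, as in the text
fast = ou_trace(rng, n_steps=5, dt=1.0, tau=1.0, rsd=0.30)
print([round(noisy_parameter(j_nominal, d)) for d in fast])
print(round(noisy_parameter(j_nominal, run_offset(rng, 0.15))))
print(step_strength(j_nominal, enabled=False, crosstalk_fraction=0.10))
```

Setting dt equal to tau corresponds to a correlation time of about one protocol step, which is the regime described for the fast incoherent noise.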

The numerical simulation results in Fig. 7 demonstrate that certain types of noise, such as fast incoherent noise, can be detected by any of the proposed verification protocols. We see that the multi-basis analog verification protocol is also sensitive to certain slow parameter fluctuations, whereas the randomized analog verification protocol is additionally sensitive to errors such as parameter miscalibration and crosstalk among the interaction terms in the system. Such error sources may cancel out in the forward and backward directions when using more systematic protocols44,45, but when using a randomized protocol they are highly unlikely to cancel due to the randomized nature of the sequence and its dependence on the exact parameters of the Hamiltonian. In particular, we see in Fig. 7d that the actual fidelity of the analog simulation is most severely impacted by the parameter miscalibration and crosstalk errors, and only the randomized analog verification protocol is able to detect the presence of these errors.

Fig. 7: Five-qubit numerical simulation results showing the sensitivities of each of the three analog verification protocols to four different types of experimental error sources: fast incoherent noise (~τ/n) in Hamiltonian parameters with 30% relative standard deviation (RSD), slow fluctuations (≫τ) in Hamiltonian parameters with 15% RSD, constant miscalibration of Hamiltonian parameters with 10% RSD, and constant idle crosstalk affecting all sites with 10% RSD. The target Hamiltonian is the five-qubit Heisenberg model from Eq. (32), with \({b}^{(i)}={J}_{x}^{(i,j)}={J}_{y}^{(i,j)}={J}_{z}^{(i,j)}=2\pi \, \times \, 1\ {\rm{kHz}}\). The "effective simulation time" is the average time for which each term of the Hamiltonian is enabled. Error bars indicate standard error of the mean. (a) Time-reversal analog verification results. Each data point represents the distribution of results over 50 runs. (b) Multi-basis analog verification results. Each data point represents the distribution of results over 50 runs. (c) Randomized analog verification results. Each data point represents the distribution of 10 different randomly generated sequences with n = 150 steps, with each sequence simulated 20 times. (d) Actual fidelity of the analog simulation under each type of noise.

To gain further insight into the behavior of the randomized analog verification protocol, we also simulated the dynamics under various types of noise using a pair of two-qubit Hamiltonians. First, we use a one-dimensional Ising model with transverse field

which is identical to Eq. (22), the Hamiltonian used for the experiment. For the purposes of the randomized analog verification protocol, we treat \(b({\sigma }_{y}^{(1)}+{\sigma }_{y}^{(2)})\) as a single term, as was also done in the experiment.

Second, we use a one-dimensional Heisenberg model with transverse field terms along each axis

which is a simplified version of the five-qubit Hamiltonian in Eq. (32) used for the earlier simulations.

Figure 8 contains the numerical simulation results of applying the randomized analog verification protocol to these two Hamiltonians under various types of noise, where we have chosen b = Jx = Jy = Jz = 2π × 20 kHz such that the effective simulation times are much longer than the timescale of the system dynamics.

Fig. 8: Two-qubit numerical simulations showing randomized analog verification results for two Hamiltonians under four different types of experimental error sources: fast incoherent noise (~τ/n) in Hamiltonian parameters, slow fluctuations (≫τ) in Hamiltonian parameters, constant miscalibration of Hamiltonian parameters, and constant idle crosstalk affecting all sites. The "effective simulation time" is the average time for which each term of the Hamiltonian is enabled. Each data point represents the distribution of ten different randomly generated sequences, with each sequence simulated 15 times. Error bars indicate standard error of the mean. Dashed line added at y = 0.25 on each plot as a visual aid. (a) The target Hamiltonian is the one-dimensional Ising model from Eq. (33) under fast incoherent noise with 12% relative standard deviation (RSD), slow parameter fluctuations with 6% RSD, parameter miscalibration with 3% RSD, and idle crosstalk with 3% RSD. (b) The target Hamiltonian is the one-dimensional Heisenberg model from Eq. (34) under fast incoherent noise with 4% RSD, slow parameter fluctuations with 2% RSD, parameter miscalibration with 1% RSD, and idle crosstalk with 1% RSD. For both (a) and (b), we choose b = Jx = Jy = Jz = 2π × 20 kHz. Note that larger relative errors are used in (a) to compensate for the smaller number of Hamiltonian terms in this simulation, such that the decay times of the plots in (a) and (b) are similar.

We note that the shape of the decay differs significantly between the two Hamiltonians. In particular, we observe that each of the decay curves for the Heisenberg model in Fig. 8b appears to be nearly exponential in shape and decays to ~0.25, which is the expected result for a fully mixed two-qubit state. This is not the case for some of the decay curves for the Ising model in Fig. 8a.

As discussed previously, randomized benchmarking protocols produce exponential decay curves in cases where the noise is fully depolarized by the randomized circuits. We note that the "native gate set" obtained from the Heisenberg model in Eq. (34) is a universal set of quantum gates, which forms an approximate 2-design in the limit of long sequence length. Here we are in fact operating in the limit of "long sequence length", since the dynamics occur at 20 kHz and the protocol is being performed for an effective simulation time of a few milliseconds. So the nearly-exponential shape of the decay curves in Fig. 8b is a good indication that the various noise sources are indeed being depolarized under these conditions.
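The link between depolarization and an exponential decay toward 0.25 can be made explicit with a short textbook derivation. It assumes that every step acts as the same depolarizing channel with parameter λ on a system of dimension d, and that state preparation and measurement are perfect; the symbols λ, m, and d are introduced here for illustration.

```latex
\Lambda(\rho) = \lambda\,\rho + (1-\lambda)\,\frac{I}{d}
\quad\Longrightarrow\quad
P_{\rm success}(m) = \lambda^{m} + \left(1-\lambda^{m}\right)\frac{1}{d}
                   = \left(1-\frac{1}{d}\right)\lambda^{m} + \frac{1}{d}.
```

For two qubits d = 4, so the success probability after m steps decays exponentially from 1 toward 1/4, the value for a fully mixed state.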

In contrast, the behavior of the decay curves in Fig. 8a, which do not decay to 0.25, can be explained by the fact that the interactions do not fully explore the state space of the system. We also observe non-monotonic behavior of these decay curves in the presence of correlated errors such as miscalibration or crosstalk, which suggests that such errors are not being fully depolarized. Such non-monotonic behavior is also observed in the experimental data in Fig. 5.

Discussion

The set of verification protocols for analog quantum simulators introduced in this work is experimentally motivated, and we have demonstrated the utility of these protocols both experimentally and numerically. Most notably, we observe that the randomized analog verification protocol is superior in terms of the types of experimental errors to which it is sensitive, but that its scalability to large system sizes requires additional assumptions, such as the ability to verify subsets of the system independently, due to the classical resources required to perform the approximate inverse compilation during the generation of the randomized sequences. We also observe that the randomized analog verification protocol produces results similar to those from traditional randomized benchmarking protocols in cases where the Hamiltonian terms form a universal "native gate set" and where the simulation time is long in comparison to the system dynamics.

It is worth noting that implementing the time-reversed Hamiltonian in the analog quantum simulation device, which is required for all of the discussed verification protocols, is not necessarily trivial for general Hamiltonians that may be simulated. It turns out that the slow Mølmer–Sørensen interaction used to implement the Ising model with trapped ions is easily time-reversible, as demonstrated in the experimental results, which allowed us to demonstrate each of the protocols here without much additional effort. It is likely that many interactions of interest on other physical platforms, such as neutral atoms or superconducting qubits, may have similarly simple physical mechanisms for time-reversing the dynamics.

Ideally, verification protocols are useful not only for verifying the correct behavior of a system, but also for helping to diagnose and fix errors. In particular, an experimentalist may wish to identify not only the existence of errors in the system, but also the types and locations of these errors. Simply running the dynamics of the full simulation and checking the results may not provide the information necessary to diagnose these details. However, the protocols described in this work provide additional tools for the experimentalist to help characterize the errors in the system. For example, running each of the protocols and comparing the relative decay curves could help to provide insight into whether the system suffers from fast incoherent noise, slow parameter fluctuations, parameter miscalibration, and/or crosstalk errors. In addition, because each of the protocols can be run on arbitrary subsets of the full system, running each on many different subsets will help to isolate the problematic physical interactions.

One may also consider whether such protocols could have application in the validation of gate-based quantum computers. At present, and until error-corrected devices become a reality, quantum computers are realized by carefully tuning the underlying analog interactions to implement quantum gates with the highest fidelity possible. Because the underlying interactions are analog, these analog verification protocols could be adapted for use in verifying the behavior of gate-based devices as well. The randomized analog verification protocol may be practically scalable to larger numbers of qubits than traditional RB because it directly uses the native interactions of the device, rather than requiring compilation of arbitrary Clifford gates into native gates. Most scalable variants of RB, such as direct RB36, require that the native gate set be a generator of the Clifford group, or at least that the native gate set is universal. Randomized analog verification imposes no restriction on the types of interactions present in the Hamiltonian, since it does not rely on properties of the gate set to efficiently return the state to the measurement basis. Of course, this is also a limitation of the randomized analog verification protocol, since one cannot make strong claims on the shape or meaning of the resulting decay curve without limitations on the gate set.

An important feature of the randomized analog verification protocol is the efficiency of executing the experiments on the physical device. Because the protocol requires measurement only in a single basis, the number of measurements required is not only significantly smaller than for full tomography, but also significantly smaller than for sampling-based techniques such as cross-entropy benchmarking46. This is enhanced by the fact that the protocol measures the system when it is near a measurement basis state, which minimizes the effect of quantum projection noise47,48 and therefore reduces the number of measurements required in order to achieve a desired level of accuracy in the fidelity estimate. To check that the system is in a particular basis state, only 100 repetitions would be required to verify a fidelity of 99% to within 1% error. But for an arbitrary state, the projection noise scales as the inverse square root of the number of measurements, which would require on the order of 10,000 measurements to achieve a similar level of precision.
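The measurement counts quoted above can be checked with the binomial standard error, which is the usual model for quantum projection noise when estimating a single outcome probability. The helper names below are hypothetical.

```python
import math

def projection_noise(p, shots):
    """Standard error of an estimated outcome probability p after `shots`
    independent projective measurements (binomial statistics)."""
    return math.sqrt(p * (1.0 - p) / shots)

def shots_for_precision(p, target_error):
    """Approximate number of shots needed to reach a given standard error."""
    return round(p * (1.0 - p) / target_error ** 2)

# Near a basis state the variance p(1 - p) is small, so few shots suffice.
print(round(projection_noise(0.99, 100), 4))     # about 0.01
# For an evenly split outcome the variance is maximal.
print(round(projection_noise(0.5, 100), 4))      # 0.05
print(round(projection_noise(0.5, 10_000), 4))   # 0.005
print(shots_for_precision(0.5, 0.01))            # about 2500
```

With p = 0.99, 100 shots already give a standard error of about 1%, whereas an evenly split outcome needs thousands of shots for comparable precision, in line with the order-of-magnitude estimate in the text.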

This work has introduced three experimentally-motivated verification protocols for validation of analog quantum simulators and has demonstrated the feasibility of these protocols both numerically and experimentally. Taken together, these techniques allow for pragmatic evaluation of an analog quantum simulation device in a way that builds confidence that the device is not only operating consistently, but that it is also operating faithfully according to the desired target Hamiltonian. The decay curves resulting from these protocols may then provide some insight into the type and strength of errors encountered. Such techniques can also be applied to subsets of a larger system to allow an experimentalist to characterize and diagnose the behavior in a scalable way. Future work should pursue a more detailed analysis of the information that these protocols can provide about the types of noise or errors present in the system, the feasibility of applying the randomized analog verification protocol to larger systems, and the application of similar techniques to gate-based quantum computing devices. In addition, alternative protocols should be explored that combine the ideas in these protocols with existing techniques from the randomized benchmarking literature, with the goal of producing a practical protocol which depolarizes errors more fully and about which stronger theoretical claims can be made with regard to noise sensitivity and the expected shape of the decay curve.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Feynman, R. P. Simulating physics with computers. Int. J. Theor. Phys. 21, 467–488 (1982).

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Cirac, J. I. & Zoller, P. Goals and opportunities in quantum simulation. Nat. Phys. 8, 264–266 (2012).

Hauke, P., Cucchietti, F. M., Tagliacozzo, L., Deutsch, I. & Lewenstein, M. Can one trust quantum simulators? Rep. Prog. Phys. 75, 082401 (2012).

Johnson, T. H., Clark, S. R. & Jaksch, D. What is a quantum simulator? EPJ Quantum Technol. 1, 10 (2014).

Wiebe, N., Granade, C., Ferrie, C. & Cory, D. G. Hamiltonian learning and certification using quantum resources. Phys. Rev. Lett. 112, 190501 (2014).

Bloch, I., Dalibard, J. & Nascimbène, S. Quantum simulations with ultracold quantum gases. Nat. Phys. 8, 267–276 (2012).

Bernien, H. et al. Probing many-body dynamics on a 51-atom quantum simulator. Nature 551, 579–584 (2017).

Gross, C. & Bloch, I. Quantum simulations with ultracold atoms in optical lattices. Science 357, 995–1001 (2017).

Blatt, R. & Roos, C. F. Quantum simulations with trapped ions. Nat. Phys. 8, 277–284 (2012).

Gorman, D. J. et al. Engineering vibrationally assisted energy transfer in a trapped-ion quantum simulator. Phys. Rev. X 8, 011038 (2018).

Kokail, C. et al. Self-verifying variational quantum simulation of lattice models. Nature 569, 355–360 (2019).

Aspuru-Guzik, A. & Walther, P. Photonic quantum simulators. Nat. Phys. 8, 285–291 (2012).

Houck, A. A., Türeci, H. E. & Koch, J. On-chip quantum simulation with superconducting circuits. Nat. Phys. 8, 292–299 (2012).

Jalabert, R. A. & Pastawski, H. M. Environment-independent decoherence rate in classically chaotic systems. Phys. Rev. Lett. 86, 2490–2493 (2001).

Gorin, T., Prosen, T., Seligman, T. H. & Žnidarič, M. Dynamics of Loschmidt echoes and fidelity decay. Phys. Rep. 435, 33–156 (2006).

Derbyshire, E., Malo, J. Y., Daley, A. J., Kashefi, E. & Wallden, P. Randomized benchmarking in the analogue setting. Quantum Sci. Technol. 5, 034001 (2020).

Greganti, C. et al. Cross-verification of independent quantum devices. Preprint at https://arxiv.org/abs/1905.09790 (2019).

Elben, A. et al. Cross-platform verification of intermediate scale quantum devices. Phys. Rev. Lett. 124, 010504 (2020).

Emerson, J., Alicki, R. & Życzkowski, K. Scalable noise estimation with random unitary operators. J. Opt. B: Quantum Semiclass. Opt. 7, S347–S352 (2005).

Knill, E. et al. Randomized benchmarking of quantum gates. Phys. Rev. A 77, 012307 (2008).

Lysne, N. K., Kuper, K. W., Poggi, P. M., Deutsch, I. H. & Jessen, P. S. Small, highly accurate quantum processor for intermediate-depth quantum simulations. Phys. Rev. Lett. 124, 230501 (2020).

Epstein, J. M., Cross, A. W., Magesan, E. & Gambetta, J. M. Investigating the limits of randomized benchmarking protocols. Phys. Rev. A 89, 062321 (2014).

Wallman, J. J. Randomized benchmarking with gate-dependent noise. Quantum 2, 47 (2018).

Fogarty, M. A. et al. Nonexponential fidelity decay in randomized benchmarking with low-frequency noise. Phys. Rev. A 92, 022326 (2015).

Harrow, A. W. & Low, R. A. Random quantum circuits are approximate 2-designs. Commun. Math. Phys. 291, 257–302 (2009).

Sørensen, A. & Mølmer, K. Quantum computation with ions in thermal motion. Phys. Rev. Lett. 82, 1971–1974 (1999).

Duan, L.-M., Cirac, J. I. & Zoller, P. Geometric manipulation of trapped ions for quantum computation. Science 292, 1695–1697 (2001).

Poletto, S. et al. Entanglement of two superconducting qubits in a waveguide cavity via monochromatic two-photon excitation. Phys. Rev. Lett. 109, 240505 (2012).

Magesan, E., Gambetta, J. M. & Emerson, J. Scalable and robust randomized benchmarking of quantum processes. Phys. Rev. Lett. 106, 180504 (2011).

Magesan, E. et al. Efficient measurement of quantum gate error by interleaved randomized benchmarking. Phys. Rev. Lett. 109, 080505 (2012).

Magesan, E., Gambetta, J. M. & Emerson, J. Characterizing quantum gates via randomized benchmarking. Phys. Rev. A 85, 042311 (2012).

Gambetta, J. M. et al. Characterization of addressability by simultaneous randomized benchmarking. Phys. Rev. Lett. 109, 240504 (2012).

Gaebler, J. P. et al. Randomized benchmarking of multiqubit gates. Phys. Rev. Lett. 108, 260503 (2012).

McKay, D. C., Sheldon, S., Smolin, J. A., Chow, J. M. & Gambetta, J. M. Three-qubit randomized benchmarking. Phys. Rev. Lett. 122, 200502 (2019).

Proctor, T. J. et al. Direct randomized benchmarking for multiqubit devices. Phys. Rev. Lett. 123, 030503 (2019).

Erhard, A. et al. Characterizing large-scale quantum computers via cycle benchmarking. Nat. Commun. 10, 5347 (2019).

Shaffer, R. Stochastic search for approximate compilation of unitaries. Preprint at https://arxiv.org/abs/2101.04474 (2021).

Khatri, S. et al. Quantum-assisted quantum compiling. Quantum 3, 140 (2019).

Schkufza, E., Sharma, R. & Aiken, A. Stochastic superoptimization. SIGARCH Comput. Archit. News 41, 305–316 (2013).

Govia, L. C., Ribeill, G. J., Ristè, D., Ware, M. & Krovi, H. Bootstrapping quantum process tomography via a perturbative ansatz. Nat. Commun. 11, 1084 (2020).

Häffner, H., Roos, C. F. & Blatt, R. Quantum computing with trapped ions. Phys. Rep. 469, 155–203 (2008).

Lee, P. J. et al. Phase control of trapped ion quantum gates. J. Opt. B: Quantum Semiclass. Opt. 7, S371–S383 (2005).

Ball, H., Stace, T. M., Flammia, S. T. & Biercuk, M. J. Effect of noise correlations on randomized benchmarking. Phys. Rev. A 93, 022303 (2016).

Edmunds, C. L. et al. Dynamically corrected gates suppressing spatiotemporal error correlations as measured by randomized benchmarking. Phys. Rev. Research 2, 013156 (2020).

Boixo, S. et al. Characterizing quantum supremacy in near-term devices. Nat. Phys. 14, 595–600 (2018).

Itano, W. M. et al. Quantum projection noise: population fluctuations in two-level systems. Phys. Rev. A 47, 3554–3570 (1993).

Monz, T. Quantum information processing beyond ten ion-qubits. Ph.D. Thesis, University of Innsbruck (2011).

Acknowledgements

We thank Clemens Matthiesen, Sara Mouradian, Mohan Sarovar, and Birgitta Whaley for feedback on early drafts of this manuscript, and we thank Sumanta Khan for work on the experimental apparatus. R.S. acknowledges government support under contract FA9550-11-C-0028, awarded by the Department of Defense, Air Force Office of Scientific Research, National Defense Science and Engineering Graduate (NDSEG) Fellowship, 32 CFR 168a. Work by E.M. and development of the experimental system were supported by the Army Research Office under Grant Number W911NF-18-1-0170. J.B. acknowledges support via the NSF Graduate Research Fellowships Program. Work by W.C. and H.H. was sponsored by the U.S. Department of Energy, Office of Science, Office of Basic Energy Sciences under Award Number DE-SC0019376. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Army Research Office or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation herein.

Author information

Contributions

R.S. developed the verification protocols, performed the numerical simulations and experimental data analysis, and wrote the manuscript. R.S., E.M., and H.H. developed the main ideas and contributed to the interpretation of the results. R.S., J.B., and W.C. contributed to the design and execution of the experiments. All authors contributed to discussions of the results and provided revisions to the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shaffer, R., Megidish, E., Broz, J. et al. Practical verification protocols for analog quantum simulators. npj Quantum Inf 7, 46 (2021). https://doi.org/10.1038/s41534-021-00380-8

DOI: https://doi.org/10.1038/s41534-021-00380-8
