Abstract

Accurate control of quantum systems requires precise measurement of the parameters that govern the dynamics, including control fields and interactions with the environment. Because these parameters drift in time, experiments interleave protocols that perform parameter estimation with protocols that measure the dynamics of interest. Here we specialize to a system made of qubits where the dynamics correspond to a quantum computation. We propose setting aside some qubits, which we call spectator qubits, to be measured periodically during the computation and act as probes of the changing experimental and environmental parameters. By using control strategies that minimize the sensitivity of the qubits involved in the computation, we can acquire sufficient information from the spectator qubits to update our estimates of the parameters and improve our control. As a result, we can extend the duration of experiments in which the dynamics of the data qubits remain highly reliable. In particular, we simulate how spectator qubits can keep the error level of operations on data qubits below a \(10^{-4}\) threshold in two scenarios involving coherent errors: a classical magnetic field gradient dynamically decoupled with sequences of two or four π-pulses, and laser beam instability detected via crosstalk with neighboring atoms in an ion trap.


Introduction

One of the key challenges in constructing a quantum computer is keeping the error rate below an acceptable threshold, which will remain a requirement even for future fault-tolerant quantum computation.1,2,3,4,5,6,7 The optimal control strategy for each quantum gate depends on the parameters that characterize the underlying error channel \({\mathcal{E}}\). There has been increasing interest in tailoring control strategies to the error channel, for example through variability-aware qubit allocation and movement,8 optimal quantum control using randomized benchmarking,9 robust phase estimation (RPE),10 noise-adaptive compilation,11 and quantum error-correcting codes designed for biased noise.12,13,14,15

Although an initial calibration may be sufficient for simpler devices, a fully functional quantum computer will have to assess changes in the error parameters in real time. Many error-reduction techniques have been proposed for errors that vary slowly in time, such as composite pulses,16,17,18,19,20,21,22,23,24,25,26,27,28 optimal control,29,30,31,32 dynamical decoupling,33,34,35,36,37,38 and dynamically corrected gates.39,40,41 In this work, we analyze the use of a subset of qubits, called spectator qubits, to perform real-time recalibration.

Spectator qubits probe the sources of error directly and do not need to interact with the data qubits, which distinguishes them from the ancilla qubits used for syndrome extraction7,42 in quantum error correction. As long as the error channel of the spectator qubits is correlated with the error channel of the data qubits, it is possible to estimate \({\mathcal{E}}\) by measuring the spectators. Although sensor networks,43 machine learning techniques,44,45 and even spectator qubits46 have been proposed to keep track of error parameters that vary in space or time, these techniques are often unsuitable for real-time calibration because extracting useful information about the error parameters from the experimental data takes too long. Here we describe the complete feedback loop between the information extracted from the spectator qubits and the recalibration of the control strategy on the data qubits, estimating how this information can improve the control protocol. Once feedback is taken into account, information must be acquired from the spectator qubits fast enough that the rate of errors in the data qubits does not exceed the rate at which the parameters are being estimated. Such feedback schemes could, in principle, deal with general classes of errors, but in this work we limit our discussion to coherent errors, which are particularly damaging.

We illustrate the difficulty of using feedback against coherent errors with a simple example. Consider constant overrotations around the x-axis characterized by the error parameter θ. If the error rate equals the rate of acquisition of information, the estimate of θ after N overrotations will have an imprecision proportional to \(N^{-1/2}\). For this reason, any attempt to correct the accumulated error with the inverse unitary will leave a residual error that still grows as \(O(N^{1/2})\):

\[ U^{\dagger}(N\hat{\theta})\,U(N\theta)=U\big(N(\theta-\hat{\theta})\big),\qquad N\,|\theta-\hat{\theta}|\sim N\cdot N^{-1/2}=N^{1/2}, \tag{1} \]

where \(\hat{\theta}\) denotes the estimate of θ.

This kind of difficulty is common to coherent errors in general, but it can be contained with quantum control techniques that slow down the accumulation of errors in the data qubits. Other ways of balancing the growth of errors against a sufficiently fast acquisition of information include using different species of qubits for data and spectators, and using different measurement rates to reach the Heisenberg limit. Here we focus on the first strategy, making the data qubits less sensitive to errors via control, and leave the other methods to future work.
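The \(O(N^{1/2})\) growth in the overrotation example can be reproduced with a short Monte Carlo sketch. It assumes, for illustration, one measurement per overrotation with unit Fisher information, so that the estimate \(\hat{\theta}\) is Gaussian with standard deviation \(1/\sqrt{N}\), and that correcting with the inverse unitary leaves a residual rotation angle \(N(\theta-\hat{\theta})\). The function name `residual_rms` is hypothetical.

```python
import math
import random


def residual_rms(n_overrotations: int, theta: float = 0.01,
                 trials: int = 20000, seed: int = 1) -> float:
    """RMS residual angle N*(theta - theta_hat) after inverse-unitary correction.

    Toy assumption: theta_hat ~ Normal(theta, 1/N), i.e. one measurement per
    overrotation and unit Fisher information per measurement.
    """
    rng = random.Random(seed)
    sigma = 1.0 / math.sqrt(n_overrotations)  # Cramér–Rao-limited imprecision
    total = 0.0
    for _ in range(trials):
        theta_hat = rng.gauss(theta, sigma)
        residual = n_overrotations * (theta - theta_hat)  # leftover rotation angle
        total += residual * residual
    return math.sqrt(total / trials)


for n in (100, 400, 1600):
    # The estimate improves as 1/sqrt(N), yet the residual grows like sqrt(N).
    print(n, round(residual_rms(n), 2), round(math.sqrt(n), 2))
```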

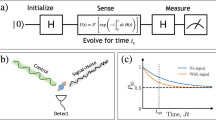

In this work, we propose that real-time calibration with spectator qubits can, in principle, improve the fidelity of any system undergoing coherent errors, as long as: (1) the information available to the spectator qubits is sufficient to keep track of the rate of change of the error parameters and (2) we have a quantum control method capable of sufficiently suppressing the speed with which the coherent errors accumulate in the data. The general setup that we consider is illustrated in Fig. 1, where spectator qubits embedded in the same architecture as the data qubits are measured periodically to determine the error profile. The errors might change in space and time, but as long as they are correlated between neighboring qubits, we can extrapolate an error field that contains information about the error profile in the data qubits. A classical apparatus then uses the information about this profile and the results of the spectator measurements to decide the best control strategy. Here we present the theoretical framework for studying multipartite systems composed of spectator qubits and data qubits in the presence of coherent errors, propose some applications, and present analytical and numerical simulations of the performance of the spectator qubits.

Results

Theoretical limits

The purpose of spectator qubits is to obtain information about an error parameter whose value slowly drifts. This problem can be approximated by a setting where the error parameter is assumed to be fixed within a series of time slots. At the end of each time slot, the parameter changes according to a probability distribution.

In this work, we will assume that the drift is sufficiently slow that this error parameter does not change significantly during a measurement. In this limit, the duration of the measurement process does not affect the result of the measurement and we can assume it to be instantaneous. However, the number N of measurements that can be performed in the period of time where the error parameters remain fixed is still limited.

The precision with which we can learn about the drift from this series of N measurements is limited by the Cramér–Rao bound.47 Calling our imprecision δϑ, the Cramér–Rao bound has the form:

\[ \delta\vartheta \geq \frac{1}{\sqrt{N f_{\theta}}}, \tag{2} \]

where fθ is the Fisher information about the error parameter θ acquired between consecutive measurements. The error parameter θ could, in principle, represent any kind of information about the error, but for coherent errors it will often be an overrotation angle. In that context, if the system can be represented by a pure-state density matrix ρ, the Fisher information takes the specific form:47

\[ f_{\theta}=1-\langle\hat{{\bf{n}}}\cdot{\boldsymbol{\sigma}}\rangle^{2}, \tag{3} \]

where we are expressing the unitary error operator in terms of the rotation axis \(\hat{{\bf{n}}}\) as \(U={e}^{-i(\hat{{\bf{n}}}\cdot {\boldsymbol{\sigma }})\theta /2}\) and the expectation value is taken for the initial state of the system.
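A quick numerical check of the pure-state case, assuming that the Fisher information for this rotation is the standard quantum Fisher information \(1-\langle\hat{{\bf{n}}}\cdot{\boldsymbol{\sigma}}\rangle^{2}\) evaluated in the initial state. The sketch compares that closed form with a finite-difference evaluation of \(4(\langle\partial_{\theta}\psi|\partial_{\theta}\psi\rangle-|\langle\psi|\partial_{\theta}\psi\rangle|^{2})\) and then evaluates the Cramér–Rao floor \(1/\sqrt{Nf_{\theta}}\). All function names are illustrative.

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)


def n_dot_sigma(n):
    return n[0] * SX + n[1] * SY + n[2] * SZ


def rotation(n, theta):
    """U = exp(-i (n.sigma) theta / 2) for a unit vector n."""
    return np.cos(theta / 2) * np.eye(2) - 1j * np.sin(theta / 2) * n_dot_sigma(n)


def fisher_closed_form(n, psi):
    """Assumed pure-state result: f = 1 - <n.sigma>^2 in the initial state."""
    expval = np.vdot(psi, n_dot_sigma(n) @ psi).real
    return 1.0 - expval**2


def fisher_finite_difference(n, psi, theta=0.3, h=1e-5):
    """Pure-state QFI 4(<d psi|d psi> - |<psi|d psi>|^2) by central differences."""
    psi_t = rotation(n, theta) @ psi
    dpsi = (rotation(n, theta + h) @ psi - rotation(n, theta - h) @ psi) / (2 * h)
    return 4 * (np.vdot(dpsi, dpsi).real - abs(np.vdot(psi_t, dpsi)) ** 2)


def cramer_rao_floor(n_measurements, fisher):
    """Smallest standard deviation allowed by the Cramér–Rao bound."""
    return 1.0 / np.sqrt(n_measurements * fisher)


n_hat = np.array([1.0, 0.0, 0.0])           # overrotation about x
psi0 = np.array([1.0, 0.0], dtype=complex)  # |0>, for which <X> = 0
f = fisher_closed_form(n_hat, psi0)
print(f, fisher_finite_difference(n_hat, psi0), cramer_rao_floor(100, f))
```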

Although the imprecision decreases as \(1/\sqrt{N}\), other resources can improve our estimate faster than that. Increasing the number L of overrotations between consecutive measurements reduces the imprecision as 1∕L (the so-called Heisenberg scaling), a precision that can also be achieved by using entangled qubits.48,49 These schemes increase the achievable precision by increasing the Fisher information acquired between measurements. However, the scaling with the number of measurements remains \(1/\sqrt{N}\).
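The Heisenberg scaling can be seen in the same single-qubit setting. Applying \(U^{L}\) multiplies the generator by L, so the Fisher information per measurement becomes \(L^{2}\) times larger and the imprecision falls as \(1/(L\sqrt{N})\), while the \(1/\sqrt{N}\) dependence on the number of measurements is untouched. A minimal sketch, assuming an x-axis rotation and the probe state |0⟩ (the function name is hypothetical):

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)


def fisher_after_repetitions(L, theta=0.2, h=1e-6):
    """Pure-state QFI of |psi(theta)> = U(theta)^L |0>, with U = exp(-i X theta/2)."""
    psi0 = np.array([1.0, 0.0], dtype=complex)

    def state(t):
        # U(t)^L is a single rotation by L*t about x
        u = np.cos(L * t / 2) * np.eye(2) - 1j * np.sin(L * t / 2) * SX
        return u @ psi0

    psi = state(theta)
    dpsi = (state(theta + h) - state(theta - h)) / (2 * h)
    return 4 * (np.vdot(dpsi, dpsi).real - abs(np.vdot(psi, dpsi)) ** 2)


N = 100
for L in (1, 2, 4, 8):
    f = fisher_after_repetitions(L)
    print(L, round(f, 6), 1 / np.sqrt(N * f))  # imprecision ~ 1/(L sqrt(N))
```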

Calling the error parameter during the kth time slot θk, the optimal correction against the coherent error U(θk) would be simply to apply its Hermitian conjugate U†(θk). Our actual strategy might not be as good as this ideal one, so this description gives an upper limit for the possible recovery. Moreover, if we only have access to an estimate \({\hat{\theta }}_{k}\) of θk, our strategy will be limited by the precision with which we can obtain this estimate. Although our measurement scheme may not saturate the Cramér–Rao bound, the bound nevertheless provides an upper limit on the achievable precision. An example where the Cramér–Rao bound is not saturated occurs when we apply RPE, a procedure that prescribes doubling the number of gates before each measurement,50 resulting in a precision that achieves the Heisenberg scaling10 if there are no time constraints. With time constraints, this scaling does not always hold, as discussed in Section V of the Supplementary Information. Given this risk of underperformance, and because the robustness of RPE to time-dependent errors is not well understood,51 in this work we use only a limited number of gate repetitions before each measurement and concentrate our analysis on the \(1/\sqrt{N}\) scaling that comes from varying the number of measurements N.

Regardless of how we obtain the estimate \({\hat{\theta }}_{k}\), once we have a reliable value for it, the best possible evolution after the optimal correction strategy is represented by the effective unitary V(ϕ):

where ϕ is an effective error parameter that depends essentially on the difference between θk and \({\hat{\theta }}_{k}\). Using this, we can estimate the best possible process fidelity for a given ϕ:

which is linearly related to the average fidelity.52 Here, ϕ = 0 corresponds to perfect knowledge of the error parameter, allowing a perfect evolution of the system. In addition, because the fidelity for a coherent error should be a smooth function of the angle, we can expand it as a power series around ϕ = 0:

\[ F(\phi)=\sum_{n=0}^{\infty}\frac{F^{(n)}(0)}{n!}\,\phi^{n}, \tag{6} \]

where we are using the following notation for the nth derivative of the fidelity:

\[ F^{(n)}(\phi_{0})=\left.\frac{{\rm{d}}^{n}F}{{\rm{d}}\phi^{n}}\right|_{\phi=\phi_{0}}. \tag{7} \]

By our choice of ϕ, the point ϕ = 0 corresponds to perfect knowledge of the error, where the fidelity takes its maximum value. Therefore, \(F(\phi=0)=1\), \(F^{(1)}(\phi=0)=0\), and \(F^{(2)}(\phi=0)<0\). Expanding the expression up to second order and representing the remaining terms as a Lagrange remainder, we find:

\[ F(\phi)=1+\frac{F^{(2)}(0)}{2}\,\phi^{2}+\frac{F^{(3)}(\xi)}{6}\,\phi^{3},\qquad \xi \text{ between } 0 \text{ and } \phi. \tag{8} \]

Suppose ϕn is the effective error parameter if we do not update our estimates using the spectator qubits, whereas ϕs is the effective error parameter if we use spectator qubits to acquire information. The feedback loop will be successful if the fidelity obtained using spectator qubits is, on average, superior to the fidelity that we obtain without using them. Therefore, we want to satisfy:

\[ \langle F(\phi_{s})\rangle > \langle F(\phi_{n})\rangle, \tag{9} \]

which, using Eq. (8), is equivalent to:

\[ \frac{F^{(2)}(0)}{2}\langle\phi_{s}^{2}\rangle+\frac{1}{6}\langle F^{(3)}(\xi_{s})\,\phi_{s}^{3}\rangle > \frac{F^{(2)}(0)}{2}\langle\phi_{n}^{2}\rangle+\frac{1}{6}\langle F^{(3)}(\xi_{n})\,\phi_{n}^{3}\rangle. \tag{10} \]

Although it is possible to satisfy the necessary condition above even in situations where our spectators have not helped to decrease the effective error parameters, usually we will want to further impose that:

a condition that is ultimately limited by the Cramér–Rao bound. However, while we try to meet this condition, we should also make sure that the errors do not increase excessively. This can be translated into a second set of conditions that are not necessary, but are sufficient to satisfy inequality (10) when we impose (11). These conditions simply state that the higher-order terms of the expansion must be negligible compared with the second-order terms that give rise to condition (11):

Because (12) and (13) are sufficient but not necessary conditions, we do not need to respect them to obtain satisfactory results with spectator qubits. However, if the feedback loop with the spectators does not improve the fidelity of the system even though condition (11) is met, then changing the scheme so that (12) and (13) are satisfied will suffice to make the strategy work.
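As a worked instance of the expansion in Eq. (8), assume for illustration a single overrotation \(V(\phi)=e^{i\phi X}\) and the process fidelity \(|\mathrm{Tr}\,V|^{2}/4\), the usual definition for a single-qubit unitary. Then \(\mathrm{Tr}\,V=2\cos\phi\), and

```latex
\begin{aligned}
F(\phi) &= \cos^{2}\phi = \tfrac{1}{2}\,(1+\cos 2\phi),\\
F^{(1)}(\phi) &= -\sin 2\phi, \qquad F^{(2)}(\phi) = -2\cos 2\phi, \qquad F^{(3)}(\phi) = 4\sin 2\phi,\\
F(\phi) &= 1-\phi^{2}+\frac{4\sin(2\xi)}{6}\,\phi^{3}, \qquad \xi \text{ between } 0 \text{ and } \phi .
\end{aligned}
```

Since \(|\sin 2\xi|\le 2|\phi|\), the third-order term is bounded by \(\tfrac{4}{3}\phi^{4}\) and is negligible next to the second-order term \(\phi^{2}\) whenever \(|\phi|\ll 1\), in the spirit of conditions (12) and (13).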

This kind of analysis, which relies on a Taylor expansion around ϕ = 0, is not adequate for metrics that are not differentiable at ϕ = 0, such as the diamond norm, which is defined as:

For this reason, we opted to use the process fidelity as defined in Eq. (5) in our analyses. In any case, as there is a one-to-one correspondence between the diamond norm and the process fidelity for coherent errors, an improvement in one metric translates into an improvement in the other one as well.
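For a single overrotation \(V(\phi)=e^{i\phi X}\) both metrics have closed forms, which makes the kink visible. The sketch below assumes the process fidelity \(|\mathrm{Tr}\,V|^{2}/4\) (here \(\cos^{2}\phi\)) and uses the known expression for the diamond distance between a unitary channel and the identity, \(2\sqrt{1-\nu^{2}}\), where ν is the distance from the origin to the convex hull of the eigenvalues of V. For the eigenvalues \(e^{\pm i\phi}\) this gives \(2|\sin\phi|\), which has a corner at ϕ = 0, and it equals \(2\sqrt{1-F}\), illustrating the one-to-one correspondence. Helper names are hypothetical.

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)


def overrotation(phi):
    """V(phi) = exp(i phi X)."""
    return np.cos(phi) * np.eye(2) + 1j * np.sin(phi) * SX


def process_fidelity(v):
    return abs(np.trace(v)) ** 2 / 4


def segment_distance_to_origin(a, b):
    """Distance from 0 to the segment [a, b] in the complex plane."""
    d = b - a
    if abs(d) == 0:
        return abs(a)
    t = np.clip(-(np.conj(d) * a).real / abs(d) ** 2, 0.0, 1.0)
    return abs(a + t * d)


def diamond_distance_to_identity(v):
    """2 sqrt(1 - nu^2), nu = distance from 0 to the hull of V's two eigenvalues."""
    e1, e2 = np.linalg.eigvals(v)
    nu = segment_distance_to_origin(e1, e2)
    return 2 * np.sqrt(max(0.0, 1 - nu**2))


for phi in (-1e-3, 0.0, 1e-3):
    v = overrotation(phi)
    # fidelity is quadratic near 0; the diamond distance is linear in |phi|
    print(phi, process_fidelity(v), diamond_distance_to_identity(v))
```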

Finally, let us consider a simple example in which forcing conditions (12) and (13) to hold also makes the complete feedback loop work. Suppose a data qubit and a spectator qubit simultaneously suffer the same overrotation, \(e^{i\phi X}\). In this situation, the necessary condition (9) takes the following exact form after N measurements and overrotations:

If a measurement is performed after each overrotation, the best achievable imprecision in the estimate of ϕ is \(1/(2\sqrt{N})\), according to the Cramér–Rao bound and Eq. (3). A simple way of satisfying (15) is to restrict the arguments of the cosines to the interval [−π∕2, π∕2] and then impose condition (10). However, ϕs will only be smaller than ϕn if we perform enough measurements to obtain a clear estimate of ϕn, that is, if \(1/(2\sqrt{N})\,<\,| {\phi }_{n}|\). By this point, however, the increase in N may have brought the angles out of the [−π∕2, π∕2] range, in which case the necessary condition (15) may no longer be satisfied simply by reducing the value of ϕs. In particular, this translates into a violation of the sufficient condition (12). Instead, we have (see Section I of the Supplementary Information for details of the derivation):

whose right-hand side approaches \({\phi }_{s} \sim 1/\sqrt{2N}\) as N grows, which causes a violation of the condition.

Because this is a sufficient condition, its violation at this point can be seen as merely incidental to the failure of the scheme. Nevertheless, satisfying the sufficient conditions is all that is needed to turn an ineffective strategy into a successful one. In our case, if quantum control strategies are available that slow down the evolution of the data qubit to a fraction κ < 1 of the rate of change of the spectator, so that the data qubit sees an effective error parameter κϕ, we obtain a condition that is easier to satisfy:

As long as \(F^{(3)}(\phi)\) is continuous near the origin, a feature expected for coherent errors, the right-hand side of the inequality should go to zero as κ → 0. This means we can always find a sufficiently small value of κ for which the sufficient conditions are satisfied. Such a suppression κ of the errors in the data qubit, which can be achieved via control techniques, is equivalent to making the spectator qubit more sensitive to errors. The latter could also be achieved by using different species of qubits for data and spectators. Although this is a promising direction for future research, in this work we focus on examples where control techniques are responsible for the suppression.
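The role of κ can be illustrated with a deliberately simple toy model, which is an assumption for illustration and not the exact conditions derived above. Without recalibration the data qubit accumulates the angle κNϕ; with spectators it accumulates κN(ϕ − \(\hat{\phi}\)), where \(\hat{\phi}\) is Gaussian with the Cramér–Rao-limited standard deviation \(1/(2\sqrt{N})\). For a zero-mean Gaussian angle x with variance σ², \(\langle\cos^{2}x\rangle=(1+e^{-2\sigma^{2}})/2\). Function names and parameter values are hypothetical.

```python
import math
import random


def fidelity_without_spectators(kappa, phi, n):
    """Toy model: the data qubit accumulates kappa*N*phi with no recalibration."""
    return math.cos(kappa * n * phi) ** 2


def fidelity_with_spectators(kappa, n):
    """<cos^2 x> for x ~ Normal(0, sigma^2), sigma = kappa*N/(2 sqrt(N)).

    Uses <cos 2x> = exp(-2 sigma^2) for a zero-mean Gaussian.
    """
    sigma2 = (kappa * math.sqrt(n) / 2) ** 2
    return 0.5 * (1 + math.exp(-2 * sigma2))


def monte_carlo_with_spectators(kappa, phi, n, trials=100000, seed=7):
    rng = random.Random(seed)
    sd = 1 / (2 * math.sqrt(n))  # Cramér–Rao-limited imprecision on phi
    acc = 0.0
    for _ in range(trials):
        phi_hat = rng.gauss(phi, sd)
        acc += math.cos(kappa * n * (phi - phi_hat)) ** 2
    return acc / trials


phi, n = 0.05, 1000  # |phi| > 1/(2 sqrt(N)): spectators shrink the rms residual
for kappa in (1.0, 0.1, 0.01):
    print(kappa, fidelity_without_spectators(kappa, phi, n),
          fidelity_with_spectators(kappa, n))
```

In this toy model, for κ = 1 the recalibrated fidelity saturates at 1/2 and can lose to the uncorrected one even though the rms residual is smaller; for sufficiently small κ both fidelities stay in the quadratic regime, where the smaller residual wins.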

Moreover, if the actual error parameter θ is small, we can alternatively suppress ϕs by making the data qubit perceive a quadratic error in θ, whereas the error in the spectator remains linear. In other words, an effective error proportional to \(\sim ({\theta }^{2}-{\hat{\theta }}^{2})\) will be smaller than an effective error proportional to \(\sim (\theta -\hat{\theta })\).
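Factoring makes this suppression explicit: \(\theta^{2}-\hat{\theta}^{2}=(\theta-\hat{\theta})(\theta+\hat{\theta})\), so the quadratic error equals the linear one multiplied by \(\theta+\hat{\theta}\approx 2\theta\). With illustrative values \(\theta=10^{-2}\) and \(\hat{\theta}=0.9\times10^{-2}\) (not taken from the simulations):

```latex
\theta-\hat{\theta}=10^{-3},\qquad
\theta^{2}-\hat{\theta}^{2}=(10^{-3})\,(1.9\times10^{-2})=1.9\times10^{-5}.
```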

In the simple example above, we can make a feedback scheme work by increasing the relative sensitivity of the spectator to the noise. We will see in the results below that this kind of suppression is also useful in more realistic scenarios. It is particularly convenient that the quadratic suppression of errors is common in many quantum control techniques.25,36,38,40

Application to magnetic field noise

A qubit precessing around an axis in the Bloch sphere due to some external coherent error source will behave in a manner that is analogous to a spin-1/2 subjected to an external classical magnetic field. Calling this external classical field B, the error will be described by the unitary \(U(t)={e}^{-it{\bf{B}}\cdot {\boldsymbol{\sigma }}}\), where we are incorporating any constants into the magnitude of B.

If we know the direction of the classical field B, we can achieve perfect dynamical decoupling by applying π-pulses in a direction \(\hat{{\bf{n}}}\) that is perpendicular to B.33 If we do not keep track of the direction of B, protection against first-order errors can still be obtained via repetitions of an XYXY sequence of π-pulses,33,53 also known as XY-438,54 or modified CPMG.55 However, if we acquire information about the direction of B and rotate the X and Y pulses to a new plane \({x}^{\prime}{y}^{\prime}\) that is perpendicular to B, this new tailored \({\rm{X}}^{\prime}{\rm{Y}}^{\prime}\)-4 sequence will not only perfectly cancel the errors caused by a static B, but will also be robust against small changes in the direction of the classical field.
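The following sketch checks these statements for instantaneous π-pulses separated by free evolution \(e^{-i\tau{\bf{B}}\cdot{\boldsymbol{\sigma}}}\) under a static field, using \(1-|\mathrm{Tr}\,U|^{2}/4\) as the infidelity of the whole sequence. The field magnitude, tilt angle, pulse ordering, and all function names are illustrative assumptions, not the simulation parameters used for Fig. 3.

```python
import numpy as np

PAULI = (
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
)


def dot_sigma(v):
    """v . sigma for a real 3-vector v."""
    return v[0] * PAULI[0] + v[1] * PAULI[1] + v[2] * PAULI[2]


def free_evolution(b, tau):
    """exp(-i tau B.sigma) for a static, nonzero field vector B."""
    norm = np.linalg.norm(b)
    return np.cos(tau * norm) * np.eye(2) - 1j * np.sin(tau * norm) * dot_sigma(b / norm)


def pi_pulse(axis):
    """Instantaneous pi rotation about `axis`: -i (n.sigma) with n normalized."""
    return -1j * dot_sigma(axis / np.linalg.norm(axis))


def sequence(b, tau, axes):
    """Free evolution for tau, then a pi-pulse, for each pulse axis in order."""
    u = np.eye(2, dtype=complex)
    for axis in axes:
        u = pi_pulse(axis) @ free_evolution(b, tau) @ u
    return u


def infidelity(u):
    """1 - |Tr U|^2 / 4, ignoring the global phase."""
    return 1 - abs(np.trace(u)) ** 2 / 4


tau, alpha = 1.0, 0.3
b = 0.01 * np.array([np.sin(alpha), 0.0, np.cos(alpha)])  # field tilted from z
x, y = np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.0])
x_p = np.array([np.cos(alpha), 0.0, -np.sin(alpha)])     # x' perpendicular to B
y_p = np.cross(b / np.linalg.norm(b), x_p)               # y' perpendicular to B and x'

print(infidelity(sequence(b, tau, [y, y])))                # pulses perpendicular to B
print(infidelity(sequence(b, tau, [x, x])))                # pulse axis not perpendicular to B
print(infidelity(sequence(b, tau, [x, y, x, y])))          # standard XY-4
print(infidelity(sequence(b, tau, [x_p, y_p, x_p, y_p])))  # tailored X'Y'-4
```

Each π-pulse about an axis perpendicular to B maps \({\bf{B}}\cdot{\boldsymbol{\sigma}}\) to its negative, which is why the first and last sequences cancel the static field exactly, while standard XY-4 only cancels it to first order in τ|B|.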

By placing spectator qubits around the data qubit, as depicted in Fig. 2a, we can detect drifts in the direction of a magnetic field. We measure the components of \({\bf{B}}={B}_{x}\hat{{\bf{x}}}+{B}_{y}\hat{{\bf{y}}}+{B}_{z}\hat{{\bf{z}}}\) by suppressing the undesirable parts of the qubit evolution56 via dynamical decoupling, a process that can also be extended to the spectroscopy of non-unitary errors57 and to intermediate situations that involve both kinds of errors.54 To achieve this, we measure one component at a time, applying π-pulses along the direction that we want to measure, and preparing and measuring the spectator qubit in two distinct bases that are not eigenvectors of the applied pulses. Meanwhile, the data qubit must undergo a dynamical decoupling sequence that suppresses the linear terms of all the components of the magnetic field.
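A minimal single-spectator sketch of the component-by-component measurement, assuming ideal instantaneous π-pulses, noiseless expectation values in place of finite-shot estimates, and a field small enough that \(4\tau B_{z}\) stays within (−π, π]. Preparing |+⟩ and reading out in the X and Y bases is one illustrative choice of "two distinct bases that are not eigenvectors of the pulses"; the function names are hypothetical.

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)


def free_evolution(b, tau):
    """exp(-i tau B.sigma) for a static, nonzero field B = (Bx, By, Bz)."""
    norm = np.linalg.norm(b)
    h = (b[0] * SX + b[1] * SY + b[2] * SZ) / norm
    return np.cos(tau * norm) * np.eye(2) - 1j * np.sin(tau * norm) * h


def estimate_bz(b, tau):
    """Estimate Bz on one spectator from a pair of pi-pulses about z.

    The z-pulses flip Bx and By but not Bz, so to first order in tau|B| the
    block acts as a z-rotation whose Bloch-sphere angle is 4 tau Bz. The
    spectator starts in |+> (not an eigenstate of the pulses) and is read out
    in the X and Y bases; ideal expectation values stand in for finite shots.
    """
    pulse = -1j * SZ
    f = free_evolution(b, tau)
    u = pulse @ f @ pulse @ f
    psi = u @ (np.array([1, 1], dtype=complex) / np.sqrt(2))
    sx = np.vdot(psi, SX @ psi).real
    sy = np.vdot(psi, SY @ psi).real
    return np.arctan2(sy, sx) / (4 * tau)


b = np.array([0.7e-3, -0.4e-3, 0.5e-3])
print(estimate_bz(b, tau=1.0), b[2])
```

The transverse components only enter at second order in τ|B|, which is the sense in which the pulses "suppress the undesirable parts of the qubit evolution".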

Fig. 2. In both panels, we assume an equal distance x0 between the spectators (red) and the data qubit (black). In a, a classical field is assumed to vary linearly with position, so the field at the data qubit (Bd) can be estimated as the average of the fields at the equidistant spectators (B1 and B2). In b, a laser beam has its ideal Gaussian profile (dashed) changed into an actual beam (solid), which is characterized by the error parameters δ and ε.

In Fig. 3, we compare the process fidelity (5) for the case where we maintain the initial calibration with the case where the spectator qubits are used for recalibration. Spectator qubits keep 1 − 〈F〉 below a \(10^{-4}\) threshold after the non-recalibrated system has crossed it. Although some codes have thresholds of the order of 1%,5,58,59,60 a stricter threshold would allow fault tolerance using fewer resources. We consider dynamical decoupling via sequences of pairs of pulses perpendicular to the direction of the magnetic field, and also the tailored \({\rm{X}}^{\prime}{\rm{Y}}^{\prime}\)-4 sequence. In both cases, the error with spectator qubits stabilizes at a level that remains indefinitely below the threshold.

Fig. 3. Average process fidelity per sequence of four π-pulses spaced by a period τ, calculated numerically and averaged over 1000 runs (dashed), analytically (solid, for the case without spectator qubits), and semi-analytically (solid, for the case with spectator qubits), when we apply (a) just pulses perpendicular to the direction of the field; (b) a tailored XY-4 sequence where the xy-plane is chosen to be perpendicular to the magnetic field. Insets show the long-term behavior of the fidelity, where the spectators stay indefinitely below the threshold. We assume the π-pulses to be instantaneous.

Application to laser beam instability

In ion-trap quantum computers, the laser beams used to drive gates, cool ions, and detect states can suffer from common calibration issues such as beam pointing instability and intensity fluctuations.61 Moreover, they can cause crosstalk, that is, rotations on neighboring qubits that occur when the laser beam overlaps with more qubits than the one being addressed. In principle, the amplitude and pointing instability can be probed by measuring the neighbors,43 although in practice a series of such measurements can affect other qubits in the chain, creating an additional source of errors that we discuss further in the next section. If we assume the system allows non-disruptive measurements of single qubits, two spectators closely surrounding a data qubit provide a possible way of assessing laser beam miscalibrations, as depicted in Fig. 2b.

Variations in the amplitude of the laser beam change the Rabi frequency Ω by a factor (1 − ε). Moreover, small errors in the direction of the laser beam cause underrotations. Assuming a Gaussian beam profile, as illustrated in Fig. 2b, a small pointing displacement δ results in a quadratic change in the amplitude Ω of the laser beam at the data qubit:

At a distance ±x0 from the center of the Gaussian, the spectator qubits sense a change in amplitude that is linearly proportional to the pointing displacement δ:

where \(c={e}^{{x}_{0}^{2}}\). This allows the spectator qubits to be sensitive enough to estimate δ before this pointing error grows too much in the data qubit. For ε, the problem of having linear errors both in the data and in the spectator qubits can be overcome by applying composite pulse sequences such as SK1.26 This kind of sequence reduces the effect of the error in the data qubit to a higher order, while preserving the linear effect on the spectator qubits.
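A minimal sketch of why this works, assuming the beam profile is \((1-\varepsilon)e^{-(x-\delta)^{2}}\) in units where the spectators sit at \(x=\pm x_{0}\) (consistent with the normalization \(c=e^{x_{0}^{2}}\) above, although the exact convention of the original simulations is an assumption here). The normalized spectator amplitudes are \((1-\varepsilon)e^{\pm 2x_{0}\delta-\delta^{2}}\), so their ratio \(e^{4x_{0}\delta}\) isolates δ and their product \((1-\varepsilon)^{2}e^{-2\delta^{2}}\) then gives ε. The function names are hypothetical.

```python
import math


def normalized_amplitudes(delta, eps, x0):
    """Omega_actual/Omega_ideal for the data qubit (x=0) and spectators (x=+-x0).

    Assumed profile: (1 - eps) * exp(-(x - delta)^2). Spectator values are
    multiplied by c = exp(x0^2) so that they equal 1 for a perfect beam.
    """
    c = math.exp(x0**2)
    data = (1 - eps) * math.exp(-delta**2)
    s_plus = c * (1 - eps) * math.exp(-(x0 - delta) ** 2)
    s_minus = c * (1 - eps) * math.exp(-(-x0 - delta) ** 2)
    return data, s_plus, s_minus


def estimate_from_spectators(s_plus, s_minus, x0):
    """Invert the two spectator readings for (delta, eps)."""
    delta = math.log(s_plus / s_minus) / (4 * x0)
    eps = 1 - math.sqrt(s_plus * s_minus) * math.exp(delta**2)
    return delta, eps


x0 = 1.0  # illustrative spacing in units of the assumed Gaussian width
for delta in (1e-3, 2e-3):
    data, sp, sm = normalized_amplitudes(delta, 0.0, x0)
    # data deviation ~ delta^2 (quadratic); spectator deviation ~ 2*x0*delta (linear)
    print(delta, 1 - data, sp - 1)

print(estimate_from_spectators(*normalized_amplitudes(3e-3, 1e-2, x0)[1:], x0))
```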

The process fidelity for the laser instability with and without recalibration by spectator qubits is shown in Fig. 4. The improvement in fidelity means that we acquire information fast enough to recalibrate the system before the errors become too large. If both spectator and data qubits were subjected to an error linearly proportional to ε, the recalibration would not keep the errors under the same threshold for the same parameter values, as can be seen in Fig. 5. It is therefore crucial to choose a measurement strategy that balances the rate of acquisition of information against the rate at which the errors increase. We define \(\tau_{\rm{nospec}}\) as the time when \(\langle F_{\rm{nospec}}\rangle\) crosses the \(10^{-4}\) threshold and \(\tau_{\rm{spec}}\) as the time when \(\langle F_{\rm{spec}}\rangle\) crosses it. In Fig. 6, we show which combinations of random walk parameters and numbers M of measurements per spectator cycle still provide an effective recalibration mechanism. Fig. 6 also functions as a control landscape: a map of the update frequency that is adequate for a given order of magnitude of the error. Using the order of magnitude of the error estimated in the initial calibration, we can find an adequate update frequency for the control method by looking for the blue regions below the solid black line.
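The effect of the SK1 sequence mentioned above can be checked in a few lines. The sketch assumes a standard SK1 construction: the target rotation \(R_{0}(\theta)\) about x followed by \(R_{\phi_{1}}(2\pi)\) and \(R_{-\phi_{1}}(2\pi)\) with \(\phi_{1}=\arccos[-\theta/(4\pi)]\), where \(R_{\phi}(\alpha)\) rotates by α about the axis (cos φ, sin φ, 0) and every rotation angle is scaled by the same amplitude factor \(1-\varepsilon\). It shows the bare pulse infidelity growing as ε² and the SK1 infidelity growing roughly as ε⁴, which is what keeps the ε error linear in the spectators but higher order in the data qubit. Function names are hypothetical.

```python
import math
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)


def rot(phase, angle):
    """Rotation by `angle` about the equatorial axis (cos phase, sin phase, 0)."""
    axis = math.cos(phase) * SX + math.sin(phase) * SY
    return math.cos(angle / 2) * np.eye(2) - 1j * math.sin(angle / 2) * axis


def infidelity(u, target):
    return 1 - abs(np.trace(target.conj().T @ u)) ** 2 / 4


def bare_pulse(theta, eps):
    return rot(0.0, theta * (1 - eps))


def sk1_pulse(theta, eps):
    phi1 = math.acos(-theta / (4 * math.pi))
    scale = 1 - eps  # the same amplitude error affects every pulse
    return (rot(-phi1, 2 * math.pi * scale)
            @ rot(phi1, 2 * math.pi * scale)
            @ rot(0.0, theta * scale))


theta = math.pi  # sigma_x gate
target = rot(0.0, theta)
for eps in (1e-2, 5e-3):
    print(eps, infidelity(bare_pulse(theta, eps), target),
          infidelity(sk1_pulse(theta, eps), target))
```

The two 2π pulses are identities (up to sign) when ε = 0, and their first-order errors sum to a rotation about x that cancels the target's first-order error exactly when \(\cos\phi_{1}=-\theta/(4\pi)\).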

Fig. 4. Evolution in time of the average process fidelity per gate for fluctuating parameters (a) δ and (b) ε. Discontinuities in the average over 1000 numerical runs (dashed) and in the approximate analytical solutions (solid) mark the points where there is a recalibration. Insets show analytical solutions over longer time scales, including the point where the recalibrated systems cross the threshold.

Fig. 5. Numerical simulation averaged over 1000 runs for the same situation as in Fig. 4b, but with SK1 turned off, so that the effect of the error parameter ε is linear in both the spectator and data qubits. Under these circumstances, it is never possible to keep the average error under the \(10^{-4}\) threshold: either (a) we take too long to use the information from the spectator qubits and the correlation is already lost, or (b) we update before sufficient Fisher information is available, causing further miscalibration of the system.

Fig. 6. Heat maps, in base-10 logarithm, of (a, b) the ratio of 1 − 〈F〉 with and without spectator qubits at fixed time N = 4000 and (c, d) the ratio between the points where the \(10^{-4}\) threshold is crossed with and without spectator qubits. These control landscapes show the effect of spectator qubits for different numbers of measurements per cycle (M) and step sizes. Initial values of the error parameters and ion distance are the same as in Fig. 4, and the values of Δε, Δδ, and M that correspond to those of Fig. 4 are marked by the white circle. Blue regions represent settings where the spectators improve either (a, b) the fidelity or (c, d) the time it stays below the \(10^{-4}\) threshold. Regions above the black curve represent situations where (a, b) the system with spectators crosses the \(10^{-4}\) threshold for the process fidelity or (c, d) the \(10^{-4}\) threshold is crossed before there has been time to complete the first spectator cycle. Spectator qubits perform worse (red areas) when very few measurements are performed before updating (left edge of the graphs), or when the rate of change is so small that not recalibrating is a better strategy, as at the bottom of a and b. Discontinuities along the x-axis in a and b correspond to situations where the end of a spectator cycle occurs at N = 4000 and are analogous to the discontinuities seen in Fig. 4.

Discussion

We have shown that spectator qubits can recalibrate an error reduction scheme for coherent errors with a precision limited only by the available Fisher information and by our ability to slow down the rate at which the error in the data qubit changes with time. In this work, spectator and data qubits were equally sensitive to noise, and we had to use quantum control methods to slow down error accumulation on the data qubits. One benefit of this strategy is that we can dynamically allocate spectator qubits46 and use every physical qubit in the system as either a spectator or a data qubit. However, we can sacrifice this advantage to construct (or choose) spectator qubits that are more sensitive to a particular type of noise than our data qubits. Such a strategy eliminates the need for the quantum control methods used to slow down errors on the data qubits. One example is to use multi-species ions in ion-trap architectures.62 We can use Zeeman qubits (first-order magnetic field sensitive) as our spectator qubits and hyperfine qubits (first-order magnetic field insensitive) as our data qubits for dealing with magnetic field noise.

For the spectator qubits used to calibrate dynamical decoupling (Fig. 3), the fidelity remains under the threshold for an indefinite period. We believe this is possible because the error in this setting depends mainly on the angle between the classical field and the pulses, a parameter whose value cannot grow indefinitely.

For laser beam instability, the insets in Fig. 4 show that even with spectator qubits the average gate fidelity eventually crosses the threshold, only later than without them. We believe this happens because the error parameters become very large, as their random walk is unbounded. This contrasts with the magnetic field parameters, whose random walk was bounded. When the error parameters become very large (ε, δ > 1), the data qubit (quadratic error) becomes more sensitive to the error than the spectator qubits (linear error). One possible remedy is an external classical controller that restricts the maximum variance of the fluctuating error parameters and prevents the threshold from being crossed.

It is worth noting for the laser beam instability case that although we have simulated the fidelity of a single gate (σx) due to miscalibration, it is straightforward to extend our approach to an arbitrary computation. We can do this by interleaving cycles composed of gates that we want to calibrate on data qubits and spectator qubit measurements between gates of the algorithm.

When attempting to put the beam instability feedback loop into practice, it may become important to take into account the additional errors caused by measuring neighboring qubits. However, in this setting, where we attempt to improve the process fidelity per gate, the penalty for the measurement errors does not accumulate; instead, it affects the performance of each gate individually. If a measurement error is modeled by an incoherent process that occurs with probability p, it acts as an incoherent channel after the gate that multiplies the probability of no error occurring by a factor 1 − p. This background error reduces the overall gate fidelity, so keeping gates below the threshold error requires an even better calibration. In effect, it lowers the threshold that we have to reach by a fixed amount, but the overall analysis of the problem remains the same. When the measurement error occurs during an SK1 sequence, the interaction between the series of gates and the incoherent channel will be more complicated, but the overall effect will still be that an incoherent error spoils the state of the system with probability p, lowering the effective threshold.
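To first order, and assuming the measurement-induced error is an incoherent channel with no overlap with the ideal gate, the composite process fidelity is \((1-p)F_{\mathrm{gate}}\), so the requirement on the calibrated gate tightens by p:

```latex
1-(1-p)\,F_{\mathrm{gate}} \approx (1-F_{\mathrm{gate}}) + p < 10^{-4}
\quad\Longrightarrow\quad
1-F_{\mathrm{gate}} < 10^{-4}-p .
```

For example, an illustrative \(p=2\times10^{-5}\) would leave a budget of \(8\times10^{-5}\) for the coherent miscalibration error.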

The possibilities of applications of spectator qubits are not limited to the two coherent errors that we simulated above. Protection against magnetic fields, e.g., besides being relevant to ion traps and nuclear spin qubits, could be extended to detection and dynamical decoupling of a classical external electric field E for qubits that are instead sensitive to electric fields, such as antimony nuclei.63

In future full-fledged quantum computing systems, spectator qubits will keep error rates below the threshold for fault tolerance for longer times than systems without spectator recalibration. This will allow for longer quantum computations. For near- and medium-term applications, however, enhancements would be required to reduce the prohibitive number of measurements needed to obtain a reliable estimate of the change in calibration. It would be particularly desirable to implement small corrections in the calibration after fewer measurements, possibly assuming some prior knowledge of how the calibration changes or a specific biased drift of the error parameters. These could be combined with other avenues for improvement, such as using Bayesian learning protocols45,46 to make more accurate predictions of the future evolution of error parameters or to implement adaptive measurements,64,65 and using entangled states,66 many-body Hamiltonians,65 quantum codes,67 or optimal control68 to maximize the information available.

Methods

Error model

In our numerical, analytical, and semi-analytical simulations for Figs 3–6, the error parameters θ are assumed to start at a fixed value θ0 and fluctuate in time according to a random walk with unbiased Gaussian steps of average size Δθ, so that the probability of it having a value θN after N steps will be:

where the random variable Θn gives the value of the error parameter after n steps and \({\mathcal{N}}(\mu ,{\sigma }^{2};x)\) is the normal distribution with mean μ and variance σ2:

\[ {\mathcal{N}}(\mu,\sigma^{2};x)=\frac{1}{\sqrt{2\pi\sigma^{2}}}\exp\!\left[-\frac{(x-\mu)^{2}}{2\sigma^{2}}\right]. \tag{21} \]

Suppose that, given an actual value of the error parameter θ and an estimate ϑ, we know the expression of the process fidelity per gate, F(θ, ϑ). Then, if the parameter drifts in time but our estimate is not updated, we can use the probability distribution of the random walk to find the average fidelity per gate after N steps when spectators are not recalibrating the system, Fnospec:

This expression can be analytically calculated for all the applications above (see Supplementary Information Section III).
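To make this averaging concrete, the sketch below assumes a toy fidelity model that is not one of the paper's expressions: a coherent rotation error of angle θ − ϑ about a fixed axis, whose process fidelity is cos²((θ − ϑ)/2). For this hypothetical model, using E[cos X] = cos(m)e^{−s²/2} for X ~ N(m, s²), the average over the random walk has the closed form (1 + cos(θ0 − ϑ)e^{−NΔθ²/2})/2, and a Monte Carlo average reproduces it.

```python
import numpy as np

def toy_fidelity(theta, estimate):
    """Hypothetical F(theta, estimate): fidelity of a rotation error theta - estimate."""
    return np.cos((theta - estimate) / 2) ** 2

def f_nospec_closed_form(theta0, estimate, dtheta, n_steps):
    """Average of toy_fidelity over Theta_N ~ N(theta0, n_steps * dtheta**2)."""
    var = n_steps * dtheta**2
    return 0.5 * (1 + np.cos(theta0 - estimate) * np.exp(-var / 2))

def f_nospec_monte_carlo(theta0, estimate, dtheta, n_steps, n_samples, rng):
    """Same average, estimated by sampling Theta_N directly."""
    theta_n = rng.normal(theta0, dtheta * np.sqrt(n_steps), size=n_samples)
    return toy_fidelity(theta_n, estimate).mean()

rng = np.random.default_rng(seed=2)
theta0, estimate, dtheta = 0.02, 0.02, 1e-3   # exact initial calibration (illustrative)
for n in (0, 100, 1000, 10000):
    exact = f_nospec_closed_form(theta0, estimate, dtheta, n)
    mc = f_nospec_monte_carlo(theta0, estimate, dtheta, n, 200_000, rng)
    print(f"N={n:6d}  1-F closed form {1 - exact:.3e}  Monte Carlo {1 - mc:.3e}")
```

Because the estimate is never updated, the infidelity grows roughly linearly in N for small NΔθ², which is the behavior spectator recalibration is meant to interrupt.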

If spectator qubits are present, the estimate ϑ is updated after every cycle of M measurements. After the kth cycle of spectator measurements, the next estimate is given by an estimator \({\theta }_{kM}^{\,\text{(est)}\,}\), the average of the error parameter sampled over the previous M steps of the random walk:

where we assume that the parameters change slowly enough that θ has a well-defined value during each measurement. Under this assumption, the variance of the Gaussian in Eq. (20) can always be rescaled so that the number of steps of the random walk matches the number of measurements.
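The estimator can also be sampled directly. This sketch assumes that the kth cycle averages the M walk values Θ_{s+1}, …, Θ_{s+M} with s = (k − 1)M; that indexing is our choice, and the paper's exact index range is not reproduced here. Counting how often each step enters the average gives, for this indexing, mean θ0 and variance Δθ²[s + (M + 1)(2M + 1)/(6M)] (our derivation), which the sampled estimator reproduces.

```python
import numpy as np

def estimator_samples(theta0, dtheta, M, k, n_walks, rng):
    """Average of Theta_{s+1}, ..., Theta_{s+M}, s = (k - 1) * M, for n_walks walks."""
    s = (k - 1) * M
    steps = rng.normal(0.0, dtheta, size=(n_walks, s + M))
    paths = theta0 + np.cumsum(steps, axis=1)      # column j holds Theta_{j+1}
    return paths[:, s:s + M].mean(axis=1)

def estimator_variance(dtheta, M, k):
    """Variance of the estimator for the same (assumed) indexing."""
    s = (k - 1) * M
    return dtheta**2 * (s + (M + 1) * (2 * M + 1) / (6 * M))

rng = np.random.default_rng(seed=3)
theta0, dtheta, M, k = 0.02, 1e-3, 50, 3           # illustrative values
est = estimator_samples(theta0, dtheta, M, k, n_walks=5000, rng=rng)
print(est.mean(), est.var(), estimator_variance(dtheta, M, k))
```

The variance has two parts: s steps that every sample in the window shares, plus a within-window term that grows only as about M/3, which is why averaging over a cycle reduces, but cannot remove, the effect of drift.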

The probability distribution of the estimator is Gaussian, since the estimator is an average of the jointly Gaussian random variables ΘkM+n:

This distribution is therefore fully characterized by its first two cumulants, which follow from Eq. (23) (see Supplementary Information Section II): the mean μk:

and the variance \({\sigma }_{k}^{2}\):

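Finally, the measured value \(\bar{\theta }\) may differ from the estimator. Assuming the Cramér–Rao bound (2) is saturated, it is distributed about the estimator with a variance equal to the inverse of the Fisher information fθ times the number of measurements M:

\[P(\bar{\theta })={\mathcal{N}}\left({\theta }_{kM}^{\,\text{(est)}\,},\frac{1}{M{f}_{\theta }};\bar{\theta }\right).\]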
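As an illustration of where the 1/(M fθ) scaling comes from, consider a hypothetical binary probe (our assumption, not one of the spectator schemes in this paper) that returns +1 with probability p(θ) = (1 + sin θ)/2. Its Fisher information per shot, f = p′²/[p(1 − p)], equals 1 for every θ with cos θ ≠ 0, so the estimator arcsin(2p̂ − 1) built from M shots should have a variance close to 1/M.

```python
import numpy as np

def fisher_information(theta):
    """Per-shot Fisher information of the hypothetical probe p = (1 + sin(theta)) / 2."""
    p = (1 + np.sin(theta)) / 2
    dp = np.cos(theta) / 2
    return dp**2 / (p * (1 - p))

def estimate_theta(theta, M, n_trials, rng):
    """n_trials independent estimates of theta, each from M binary measurements."""
    p = (1 + np.sin(theta)) / 2
    counts = rng.binomial(M, p, size=n_trials)
    return np.arcsin(2 * counts / M - 1)

rng = np.random.default_rng(seed=4)
theta, M = 0.3, 1000                      # illustrative values
estimates = estimate_theta(theta, M, n_trials=20000, rng=rng)
print(estimates.var(), 1 / (M * fisher_information(theta)))
```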

Given these probability distributions for θkM, \({\theta }_{kM}^{\,\text{(est)}\,}\), and \(\bar{\theta }\), the average fidelity N steps after the kth spectator cycle, which we call Fspec, can be calculated from the average fidelity for a fixed calibration given in Eq. (22):

Assuming that the error parameters are small, we solved the triple integrals analytically for ε and δ (see Supplementary Information Section IV) and numerically for the magnetic-field case.
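A Monte Carlo sketch of the whole recalibration loop follows. It is not the paper's triple-integral evaluation of Fspec. It assumes a hypothetical fidelity cos²((θ − ϑ)/2), an exact calibration at the start, and an estimate that is replaced at the end of each cycle by the average of that cycle's M walk values plus Gaussian measurement noise of variance 1/(M fθ). It then compares the time-averaged infidelity with that of a calibration that is never updated. All parameter values are illustrative.

```python
import numpy as np

def simulate(theta0, dtheta, M, n_cycles, fisher, n_walks, rng):
    """Return (infidelity without spectators, infidelity with spectators), each averaged
    over time steps and walks, for the toy fidelity cos^2(error / 2)."""
    n_steps = M * n_cycles
    steps = rng.normal(0.0, dtheta, size=(n_walks, n_steps))
    theta = theta0 + np.cumsum(steps, axis=1)            # Theta_1 ... Theta_{n_steps}

    # Without spectators, the estimate stays at theta0 for the whole run.
    infid_nospec = np.sin((theta - theta0) / 2) ** 2

    # With spectators, cycle c >= 1 uses the noisy average measured during cycle c - 1.
    estimate = np.full_like(theta, theta0)
    averages = theta.reshape(n_walks, n_cycles, M).mean(axis=2)
    noise = rng.normal(0.0, np.sqrt(1.0 / (M * fisher)), size=averages.shape)
    estimate[:, M:] = np.repeat(averages[:, :-1] + noise[:, :-1], M, axis=1)
    infid_spec = np.sin((theta - estimate) / 2) ** 2

    return infid_nospec.mean(), infid_spec.mean()

rng = np.random.default_rng(seed=5)
nospec, spec = simulate(theta0=0.0, dtheta=5e-3, M=200, n_cycles=25,
                        fisher=1.0, n_walks=100, rng=rng)
print(f"time-averaged 1-F without spectators: {nospec:.2e}, with spectators: {spec:.2e}")
```

The choice of M trades off two error sources in this sketch: a larger M shrinks the measurement noise 1/(M fθ) but lets the parameter drift further before the next update.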

Magnetic field noise

In the simulation of the dynamical decoupling of a magnetic field, we assumed the field gradient to be linear, so that measurements on two spectators suffice to determine the field at the data qubit. We chose the \(\hat{{\bf{z}}}\) axis to coincide with the initial direction of the magnetic field. Using τ to denote the time spacing between instantaneous π-pulses, we chose the initial value B1 of the magnetic field at one of the spectators to satisfy \(\tau {{\bf{B}}}_{1}=2\cdot 1{0}^{-3}\ \hat{{\bf{z}}}\) when the data qubit undergoes a two-pulse sequence and \(\tau {{\bf{B}}}_{1}=3.8\cdot 1{0}^{-2}\ \hat{{\bf{z}}}\) when it undergoes the tailored XY-4 sequence. The second spectator initially experiences half of this field (B2 = B1∕2).
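Because the gradient is linear, the field at the data qubit follows from the two spectator readings by linear interpolation (or extrapolation). The sketch below uses hypothetical positions (spectators at x = 0 and x = 2, data qubit at x = 1); the geometry is not specified here and is only an assumption for illustration.

```python
import numpy as np

def field_at(x, x1, B1, x2, B2):
    """Field at position x for a field varying linearly in space, given its values
    B1, B2 (3-vectors) at two spectator positions x1 != x2."""
    B1 = np.asarray(B1, dtype=float)
    B2 = np.asarray(B2, dtype=float)
    return B1 + (B2 - B1) * (x - x1) / (x2 - x1)

tau = 1.0                                    # pulse spacing (arbitrary units)
B1 = np.array([0.0, 0.0, 2e-3]) / tau        # tau * B1 = 2e-3 z (two-pulse case)
B2 = B1 / 2                                  # second spectator sees half the field
# Hypothetical geometry: spectators at x = 0 and x = 2, data qubit midway at x = 1.
B_data = field_at(1.0, 0.0, B1, 2.0, B2)
print(B_data)                                # equals 0.75 * B1
```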

Each component of the magnetic field was assumed to perform an independent random walk with a different step size: we chose SDs ΔBx∕B1,x = 3%, ΔBy∕B1,y = 2%, and ΔBz∕B1,z = 1% for the three random walks. These components are then measured separately and sequentially in the spectator qubits, by preparing and measuring each spectator in the eigenbases of two distinct Pauli operators perpendicular to the component of B being measured. The spectators need not be re-initialized after each measurement, since a measurement in a given Pauli basis leaves the spectator in an eigenstate of that Pauli operator. The other components of B are decoupled by applying a sequence of π-pulses to the spectators between measurements, so that we can approximate our estimates of Bx, By, and Bz by:

where \(\left\langle \psi \right|{U}^{\dagger }(n\tau ){\sigma }_{i}U(n\tau )\left|\psi \right\rangle\) is the average of measurements of σi on a system prepared in the state \(\left|\psi \right\rangle\) and left to evolve for a time nτ. The number n of π-pulses before each measurement was 20 for spectators supporting the two-pulse sequence and 4 for spectators whose information was used to tailor an XY-4 sequence. After M = 700 measurement cycles, we use the new estimate of the direction of B to update our dynamical decoupling control parameters. For the two-pulse sequence, we choose the pulse axis perpendicular to B. For the tailored XY-4 sequence, we estimate the plane normal to B, find two perpendicular pulse axes in that plane, and use them in the XY-4 sequence. We repeat this process throughout the computation.
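A minimal numerical check of why the pulse axes are re-chosen: assuming H = (B·σ)/2 (ħ = 1, gyromagnetic ratio absorbed into B), instantaneous π-pulses, and each pulse preceded by a free evolution of duration τ, a sequence whose pulse axes are perpendicular to a static B refocuses it exactly, because each such pulse maps B·σ to −B·σ. An axis chosen for the initial field direction no longer does so once B has tilted. The helper names, the tilt, the field magnitude, and the axis construction are hypothetical choices, not necessarily those used in the paper.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
PAULI = (np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex))

def rotation(axis, angle):
    """exp(-i * angle/2 * n.sigma) for the unit vector n along axis."""
    n = np.asarray(axis, dtype=float)
    n = n / np.linalg.norm(n)
    n_sigma = sum(c * s for c, s in zip(n, PAULI))
    return np.cos(angle / 2) * I2 - 1j * np.sin(angle / 2) * n_sigma

def perpendicular_axes(B_est):
    """Two orthonormal pulse axes spanning the plane normal to the estimated field."""
    b = np.asarray(B_est, dtype=float)
    b = b / np.linalg.norm(b)
    helper = np.array([1.0, 0.0, 0.0]) if abs(b[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    n1 = np.cross(b, helper)
    n1 /= np.linalg.norm(n1)
    return n1, np.cross(b, n1)

def sequence_unitary(B, tau, pulse_axes):
    """Repeat (free evolution for tau, then an instantaneous pi-pulse) for each axis."""
    B = np.asarray(B, dtype=float)
    free = rotation(B, tau * np.linalg.norm(B))      # exp(-i tau (B . sigma) / 2)
    U = I2
    for axis in pulse_axes:
        U = rotation(axis, np.pi) @ free @ U
    return U

def fidelity_to_identity(U):
    """|Tr U|^2 / 4: process fidelity with the identity, ignoring global phase."""
    return abs(np.trace(U)) ** 2 / 4

tau = 1.0
B_true = 0.5 * np.array([np.sin(0.3), 0.0, np.cos(0.3)])   # field tilted away from z
n1, n2 = perpendicular_axes(B_true)                        # axes from an updated estimate
stale = [1.0, 0.0, 0.0]                                    # axis chosen for B along z
print("two-pulse, tailored:", fidelity_to_identity(sequence_unitary(B_true, tau, [n1, n1])))
print("two-pulse, stale:   ", fidelity_to_identity(sequence_unitary(B_true, tau, [stale, stale])))
print("XY-4, tailored:     ", fidelity_to_identity(sequence_unitary(B_true, tau, [n1, n2, n1, n2])))
```

In practice the axes would come from the spectators' noisy estimate of B rather than from B_true, so the tailored sequences would refocus the field only up to the estimation error.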

Laser beam instability

For spectator qubits used to reduce the underrotation caused by pointing instability of the laser beam, we simulate a series of σx gates applied to the data qubit and assume the presence of either a fluctuating parameter δ or a fluctuating parameter ε. The parameter δ starts the random walk at δ0 = 0.02 and proceeds with Gaussian steps of SD Δδ∕δ0 = 5%, whereas ε starts at ε0 = 0.002 and proceeds with steps of SD Δε∕ε0 = 35%. We assume an initial calibration that yields estimates with \(\bar{\delta }/{\delta }_{0}=99 \%\) and \(\bar{\varepsilon }/{\varepsilon }_{0}=75 \%\).

The δ errors naturally affect the spectators more strongly than the data qubit: as Eqs. (18) and (19) show, the spectators respond linearly to δ, whereas the data qubit responds quadratically. After four σx gates, we measure the spectator qubits, which we assume to be at a distance \({x}_{0}={(\mathrm{ln}12)}^{1/2}\) from the data qubit (\({x}_{0}^{2}\) is measured in units of twice the variance). To maximize the Fisher information for uncorrelated probes, the two spectator qubits are prepared and measured in the eigenbasis of σz. The averages \({\langle {\sigma }_{z}\rangle }_{1}\) and \({\langle {\sigma }_{z}\rangle }_{2}\) of the M measurement results in spectator qubits 1 and 2 are then used to estimate δ according to:

which follows from Eq. (19). Since only δ² affects the data qubit, the sign of our estimate of δ is irrelevant. After M = 400 repeated measurements, our estimate \(\bar{\delta }\) is precise enough that, for subsequent gates, we use our classical control setup to adjust the Rabi frequency to \(\Omega /(1-{\bar{\delta }}^{2})\), compensating for the pointing instability.
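Assuming, consistently with the correction \(\Omega /(1-{\bar{\delta }}^{2})\), that pointing instability reduces the data qubit's Rabi frequency by a factor (1 − δ²), the sketch below compares the per-gate infidelity of a σx (π) rotation with and without the rescaled drive, using the initial values quoted above. The rotation-error infidelity sin²(Δ/2) and the helper names are illustrative assumptions.

```python
import numpy as np

def rotation_infidelity(angle_error):
    """Process infidelity of a rotation that misses its target angle by angle_error."""
    return np.sin(angle_error / 2) ** 2

def delta_angle_error(theta, delta, delta_est=None):
    """Angle error of a theta rotation when the Rabi frequency is reduced by (1 - delta**2),
    optionally compensated by driving at Omega / (1 - delta_est**2)."""
    scale = 1 - delta**2
    if delta_est is not None:
        scale /= 1 - delta_est**2
    return theta * (scale - 1)

delta0 = 0.02
delta_est = 0.99 * delta0                    # initial estimate, delta_bar / delta0 = 99%
uncompensated = rotation_infidelity(delta_angle_error(np.pi, delta0))
compensated = rotation_infidelity(delta_angle_error(np.pi, delta0, delta_est))
print(f"1-F uncompensated: {uncompensated:.2e}, compensated: {compensated:.2e}")
```

Because the residual angle error is proportional to \({\bar{\delta }}^{2}-{\delta }^{2}\), the compensation remains useful only while δ̄ keeps tracking the drifting δ, which is what the spectator measurements provide.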

As the effect of ε is linear for all qubits, we apply an SK1 composite pulse sequence18,19 to slow down the error accumulation in the data qubit. Measurements on the spectator qubits, assumed to be at a distance \({x}_{0}={(\mathrm{ln}1.8)}^{1/2}\) from the data qubit (where \({x}_{0}^{2}\) is measured in units of twice the variance), are performed after each regular σx gate is applied, but before the second and third pulses of the SK1 sequence. The value of ε is then estimated from the measurement results \({\langle {\sigma }_{z}\rangle }_{1}\) and \({\langle {\sigma }_{z}\rangle }_{2}\):

where \(\tilde{\varepsilon }\) is the previous estimate of ε. After M = 1000 measurements, we update the Rabi frequency to \(\Omega /(1-\bar{\varepsilon })\) to compensate for the errors.
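The effect of the SK1 sequence (refs 18, 19) can be sketched numerically. Assuming that ε enters as a fractional under-rotation θ(1 − ε) of every pulse, as the correction Ω/(1 − ε̄) implies, the sketch compares a bare σx gate with its SK1 version, and then with SK1 after a Rabi-frequency update. The phases ±φ with cos φ = −θ/(4π) are chosen so that the first-order amplitude error cancels for this parametrization; published conventions differ in sign and pulse ordering, and the values of ε and ε̄ are illustrative.

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)

def pulse(theta, phi, eps):
    """Equatorial rotation by theta * (1 - eps) about cos(phi) x + sin(phi) y."""
    angle = theta * (1 - eps)
    axis = np.cos(phi) * SX + np.sin(phi) * SY
    return np.cos(angle / 2) * np.eye(2) - 1j * np.sin(angle / 2) * axis

def sk1(theta, eps):
    """Target pulse, then 2*pi pulses at phases -phi and +phi (time runs right to left)."""
    phi = np.arccos(-theta / (4 * np.pi))
    return pulse(2 * np.pi, phi, eps) @ pulse(2 * np.pi, -phi, eps) @ pulse(theta, 0.0, eps)

def infidelity(U, V):
    """1 - |Tr(V^dagger U)|^2 / 4 for 2x2 unitaries."""
    return 1 - abs(np.trace(V.conj().T @ U)) ** 2 / 4

target = pulse(np.pi, 0.0, 0.0)              # ideal sigma_x gate, up to global phase
eps, eps_est = 0.01, 0.0075                  # illustrative error and estimate
bare_infid = infidelity(pulse(np.pi, 0.0, eps), target)
sk1_infid = infidelity(sk1(np.pi, eps), target)
# Driving at Omega / (1 - eps_est) leaves a residual fractional error:
eps_residual = 1 - (1 - eps) / (1 - eps_est)
recal_infid = infidelity(sk1(np.pi, eps_residual), target)
print(f"bare {bare_infid:.2e}  SK1 {sk1_infid:.2e}  SK1 + recalibration {recal_infid:.2e}")
```

SK1 suppresses the error at first order but not completely, so infidelity still accumulates slowly; the periodic Rabi-frequency update then shrinks the error that SK1 has to suppress.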

Data availability

The data that supports the findings of this study is available from the corresponding authors upon reasonable request.

Code availability

The code that supports the findings of this study is available from the corresponding authors upon reasonable request.

References

Aharonov, D. & Ben-Or, M. Fault-tolerant quantum computation with constant error. In Proc. 29th Annual ACM Symposium on Theory of Computing, STOC ’97, 176–188 (ACM, New York, NY, USA, 1997).

Kitaev, A. Y. Quantum computations: algorithms and error correction. Russ. Math. Surv. 52, 1191–1249 (1997).

Knill, E., Laflamme, R. & Zurek, W. Resilient quantum computation. Science 279, 342–345 (1998).

Gottesman, D. Theory of fault-tolerant quantum computation. Phys. Rev. A 57, 127–137 (1998).

Knill, E. Quantum computing with realistically noisy devices. Nature 434, 39–44 (2005).

Aliferis, P. & Cross, A. W. Subsystem fault tolerance with the Bacon-Shor code. Phys. Rev. Lett. 98, 220502 (2007).

Terhal, B. M. Quantum error correction for quantum memories. Rev. Mod. Phys. 87, 307–346 (2015).

Tannu, S. S. & Qureshi, M. K. A case for variability-aware policies for NISQ-era quantum computers. In Proc. Twenty-Fourth International Conference on Architectural Support for Programming Languages and Operating Systems, 987–999 (2019).

Kelly, J. et al. Optimal quantum control using randomized benchmarking. Phys. Rev. Lett. 112, 240504 (2014).

Kimmel, S., Low, G. H. & Yoder, T. J. Robust calibration of a universal single-qubit gate set via robust phase estimation. Phys. Rev. A 92, 062315 (2015).

Murali, P., Baker, J. M., Abhari, A. J., Chong, F. T. & Martonosi, M. Noise-adaptive compiler mappings for noisy intermediate-scale quantum computers. In Proc. Twenty-Fourth International Conference on Architectural Support for Programming Languages and Operating Systems, 1015–1029 (2019).

Napp, J. & Preskill, J. Optimal Bacon-Shor codes. Quantum Info. Comput. 13, 490–510 (2013).

Tuckett, D. K., Bartlett, S. D. & Flammia, S. T. Ultrahigh error threshold for surface codes with biased noise. Phys. Rev. Lett. 120, 050505 (2018).

Tuckett, D. K., Darmawan, A. S., Chubb, C. T., Bravyi, S., Bartlett, S. D. & Flammia, S. T. Tailoring surface codes for highly biased noise. Phys. Rev. X 9, 041031 (2019).

Li, M., Miller, D., Newman, M., Wu, Y. & Brown, K. R. 2D compass codes. Phys. Rev. X 9, 021041 (2019).

Levitt, M. H. Composite pulses. Prog. Nucl. Mag. Res. Spec. 18, 61–122 (1986).

Wimperis, S. Broadband, narrowband, and passband composite pulses for use in advanced NMR experiments. J. Magn. Reson. 109, 221–231 (1994).

Brown, K. R., Harrow, A. W. & Chuang, I. L. Arbitrarily accurate composite pulse sequences. Phys. Rev. A 70, 052318 (2004).

Brown, K. R., Harrow, A. W. & Chuang, I. L. Phys. Rev. A 72, 039005 (2005).

Torosov, B. T. & Vitanov, N. V. Smooth composite pulses for high-fidelity quantum information processing. Phys. Rev. A 83, 053420 (2011).

Vitanov, N. V. Arbitrarily accurate narrowband composite pulse sequences. Phys. Rev. A 84, 065404 (2011).

Bando, M., Ichikawa, T., Kondo, Y. & Nakahara, M. Concatenated composite pulses compensating simultaneous systematic errors. J. Phys. Soc. Jpn 82, 014004 (2013).

Genov, G. T., Schraft, D., Halfmann, T. & Vitanov, N. V. Correction of arbitrary field errors in population inversion of quantum systems by universal composite pulses. Phys. Rev. Lett. 113, 043001 (2014).

Low, G. H., Yoder, T. J. & Chuang, I. L. Optimal arbitrarily accurate composite pulse sequences. Phys. Rev. A 89, 022341 (2014).

Kabytayev, C. et al. Robustness of composite pulses to time-dependent control noise. Phys. Rev. A 90, 012316 (2014).

Merrill, T. J. & Brown, K. R. Progress in compensating pulse sequences for quantum computation. Adv. Chem. Phys. 154, 241–294 (2014).

Low, G. H., Yoder, T. J. & Chuang, I. L. The methodology of resonant equiangular composite quantum gates. Phys. Rev. X 6, 041067 (2016).

Cohen, I., Rotem, A. & Retzker, A. Refocusing two-qubit-gate noise for trapped ions by composite pulses. Phys. Rev. A 93, 032340 (2016).

Montangero, S., Calarco, T. & Fazio, R. Robust optimal quantum gates for Josephson charge qubits. Phys. Rev. Lett. 99, 170501 (2007).

Grace, M. et al. Fidelity of optimally controlled quantum gates with randomly coupled multiparticle environments. J. Mod. Opt. 54, 2339–2349 (2007).

Schulte-Herbrüggen, T., Spörl, A., Khaneja, N. & Glaser, S. Optimal control for generating quantum gates in open dissipative systems. J. Phys. B 44, 154013 (2011).

Machnes, S., Assémat, E., Tannor, D. & Wilhelm, F. K. Tunable, flexible, and efficient optimization of control pulses for practical qubits. Phys. Rev. Lett. 120, 150401 (2018).

Viola, L., Knill, E. & Lloyd, S. Dynamical decoupling of open quantum systems. Phys. Rev. Lett. 82, 2417 (1999).

Viola, L. & Knill, E. Robust dynamical decoupling of quantum systems with bounded controls. Phys. Rev. Lett. 90, 037901 (2003).

Ryan, C. A., Hodges, J. S. & Cory, D. G. Robust decoupling techniques to extend quantum coherence in diamond. Phys. Rev. Lett. 105, 200402 (2010).

Souza, A. M., Álvarez, G. A. & Suter, D. Robust dynamical decoupling. Phil. Trans. R. Soc. A 370, 4748 (2012).

Quiroz, G. & Lidar, D. A. Optimized dynamical decoupling via genetic algorithms. Phys. Rev. A 88, 052306 (2013).

Pokharel, B., Anand, N., Fortman, B. & Lidar, D. A. Demonstration of fidelity improvement using dynamical decoupling with superconducting qubits. Phys. Rev. Lett. 121, 220502 (2018).

Khodjasteh, K. & Viola, L. Dynamically error-corrected gates for universal quantum computation. Phys. Rev. Lett. 102, 080501 (2009).

Khodjasteh, K. & Viola, L. Dynamical quantum error correction of unitary operations with bounded controls. Phys. Rev. A 80, 032314 (2009).

Green, T., Uys, H. & Biercuk, M. J. High-order noise filtering in nontrivial quantum logic gates. Phys. Rev. Lett. 109, 020501 (2012).

Shor, P. W. Scheme for reducing decoherence in quantum computer memory. Phys. Rev. A 52, R2493–R2496 (1995).

Qian, K. et al. Heisenberg-scaling measurement protocol for analytic functions with quantum sensor networks. Phys. Rev. A 100, 042304 (2019).

Mavadia, S., Frey, V., Sastrawan, J., Dona, S. & Biercuk, M. J. Prediction and real-time compensation of qubit decoherence via machine learning. Nat. Commun. 8, 14106 (2017).

Gupta, R. S. & Biercuk, M. J. Machine learning for predictive estimation of qubit dynamics subject to dephasing. Phys. Rev. Appl. 9, 064042 (2018).

Gupta, R. S., Milne, A. R., Edmunds, C. L., Hempel, C. & Biercuk, M. J. Adaptive scheduling of noise characterization in quantum computers. Preprint at https://arxiv.org/abs/1904.07225 (2019).

Braunstein, S. L. & Caves, C. M. Statistical distance and the geometry of quantum states. Phys. Rev. Lett. 72, 3439–3443 (1994).

Wineland, D. J., Bollinger, J. J., Itano, W. M., Moore, F. L. & Heinzen, D. J. Spin squeezing and reduced quantum noise in spectroscopy. Phys. Rev. A 46, R6797–R6800 (1992).

Bollinger, J. J., Itano, W. M., Wineland, D. J. & Heinzen, D. J. Optimal frequency measurements with maximally correlated states. Phys. Rev. A 54, R4649–R4652 (1996).

Rudinger, K., Kimmel, S., Lobser, D. & Maunz, P. Experimental demonstration of a cheap and accurate phase estimation. Phys. Rev. Lett. 118, 190502 (2017).

Meier, A. M., Burkhardt, K. A., McMahon, B. J. & Herold, C. D. Testing the robustness of robust phase estimation. Phys. Rev. A 100, 052106 (2019).

Pedersen, L. H., Møller, N. M. & Mølmer, K. Fidelity of quantum operations. Phys. Lett. A 367, 47–51 (2007).

Gullion, T., Baker, D. B. & Conradi, M. S. New, compensated Carr-Purcell sequences. J. Magn. Reson. 89, 479–484 (1990).

Hernández-Gómez, S., Poggiali, F., Cappellaro, P. & Fabbri, N. Noise spectroscopy of a quantum-classical environment with a diamond qubit. Phys. Rev. B 98, 214307 (2018).

Maudsley, A. A. Modified Carr–Purcell–Meiboom–Gill sequence for NMR Fourier imaging applications. J. Magn. Reson. 69, 488 (1986).

Sekatski, P., Skotiniotis, M. & Dür, W. Dynamical decoupling leads to improved scaling in noisy quantum metrology. New J. Phys. 18, 073034 (2016).

Bylander, J. et al. Noise spectroscopy through dynamical decoupling with a superconducting flux qubit. Nat. Phys. 7, 565 (2011).

Raussendorf, R. & Harrington, J. Fault-tolerant quantum computation with high threshold in two dimensions. Phys. Rev. Lett. 98, 190504 (2007).

Raussendorf, R., Harrington, J. & Goyal, K. Topological fault-tolerance in cluster state quantum computation. New J. Phys. 9, 199 (2007).

Fowler, A. G., Stephens, A. M. & Groszkowski, P. High-threshold universal quantum computation on the surface code. Phys. Rev. A 80, 052312 (2009).

Häffner, H., Roos, C. F. & Blatt, R. Quantum computing with trapped ions. Phys. Rep. 469, 155 (2008).

Brown, N. C. & Brown, K. R. Leakage mitigation for quantum error correction using a mixed qubit scheme. Phys. Rev. A 100, 032325 (2019).

Asaad, S. et al. Coherent electrical control of a single high-spin nucleus in silicon. Preprint at https://arxiv.org/abs/1906.01086 (2019).

Granade, C. E., Ferrie, C., Wiebe, N. & Cory, D. G. Robust online Hamiltonian learning. New J. Phys. 14, 103013 (2012).

Demkowicz-Dobrzański, R., Czajkowski, J. & Sekatski, P. Adaptive quantum metrology under general Markovian noise. Phys. Rev. X 7, 041009 (2017).

Eldredge, Z., Foss-Feig, M., Gross, J. A., Rolston, S. L. & Gorshkov, A. V. Optimal and secure measurement protocols for quantum sensor networks. Phys. Rev. A 97, 042337 (2018).

Zhou, S., Zhang, M., Preskill, J. & Jiang, L. Achieving the Heisenberg limit in quantum metrology using quantum error correction. Nat. Commun. 9, 78 (2018).

Liu, J. & Yuan, H. Quantum parameter estimation with optimal control. Phys. Rev. A 96, 012117 (2017).

Acknowledgements

We thank Natalie Brown, Dripto Debroy, Shilin Huang, Pak Hong Leung, Iman Marvian, Michael Newman, Gregory Quiroz, and Lorenza Viola for helpful discussions. Numerical simulations were performed on the Duke Compute Cluster (DCC). This work was supported by the ARO MURI grant W911NF-18-1-0218.

Author information

Contributions

K.R.B. conceived the idea of this study and directed the project. S.M. and L.A.C. modeled the problem and obtained the simulated results. S.M., L.A.C. and K.R.B. analyzed the data and prepared the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Majumder, S., Andreta de Castro, L. & Brown, K.R. Real-time calibration with spectator qubits. npj Quantum Inf 6, 19 (2020). https://doi.org/10.1038/s41534-020-0251-y
