Abstract

The gradient descent method is central to numerical optimization and is the key ingredient in many machine learning algorithms. It promises to find a local minimum of a function by iteratively moving along the direction of steepest descent. Since the required computational resources can be prohibitive for high-dimensional problems, it is desirable to investigate quantum versions of gradient descent, such as the one recently proposed by Rebentrost et al.1. Here, we develop this protocol and implement it on a quantum processor with limited resources. A prototypical experiment on a four-qubit nuclear magnetic resonance quantum processor demonstrates the iterative optimization process. Experimentally, the final point converged to the local minimum with a fidelity >94%, quantified via full-state tomography. Moreover, our method can be applied to a multidimensional scaling problem, showing the potential to outperform its classical counterparts. Considering the ongoing efforts in quantum information and data science, our work may provide a faster approach to solving high-dimensional optimization problems and a subroutine for future practical quantum computers.


Introduction

A basic problem in optimization is the minimization or maximization of a polynomial subject to some constraints. Because such problems are in general non-convex and NP-hard, they cannot in general be solved exactly with efficient resource consumption2. Homogeneous polynomial optimization, an important special case for approximation algorithms, has wide applications, for example in signal processing, magnetic resonance imaging3, data training4, approximation theory5, and materials science6. These scientific and technological problems are especially demanding in the present era of big data7. The gradient algorithm, one of the most fundamental approaches to non-convex optimization problems, lies at the heart of many machine learning methods, such as regression, support vector machines, and deep neural networks8,9,10,11,12. However, for large data sets, the gradient algorithm consumes tremendous resources and often pushes current computational capabilities to their limits.

Quantum computing promises ultrafast computational capabilities by processing information via the laws of quantum mechanics13,14,15. Exploiting their intrinsic advantages in executing certain matrix operations, quantum algorithms have been proposed to enhance data analysis techniques under some circumstances. For example, phase estimation, quantum principal component analysis, and the solver for linear systems of equations can provide quantum advantages if the state preparation and readout procedures can be realized efficiently13,16. For optimization with gradients, the central issue of this article, several works have developed quantum versions1,17,18,19,20,21.

The prototypical gradient algorithm optimizes, i.e., maximizes or minimizes, a cost function iteratively. Let the cost function be a map \(f:{{\Bbb{R}}}^{N}\to {\Bbb{R}}\). Starting from an initial guess \({{\bf{x}}}^{(0)}\in {{\Bbb{R}}}^{N}\), the algorithm moves the current point along the (negative) gradient direction,

\[{{\bf{x}}}^{(t+1)}={{\bf{x}}}^{(t)}-\eta \nabla f({{\bf{x}}}^{(t)}),\tag{1}\]

where \({\bf{x}}={({x}_{1},\ldots ,{x}_{N})}^{T}\in {{\Bbb{R}}}^{N}\) and η is the learning rate. As a first experimental endeavor in this field, and in view of its wide academic and industrial applications, we investigate order-2p homogeneous polynomial optimization under the spherical constraint \({\sum }_{i}{x}_{i}^{2}=1\), whose cost function is

\[f({\bf{x}})=\frac{1}{2}\sum _{{i}_{1},\ldots ,{i}_{2p}=1}^{N}{a}_{{i}_{1}\ldots {i}_{2p}}\,{x}_{{i}_{1}}{x}_{{i}_{2}}\cdots {x}_{{i}_{2p}}.\tag{2}\]

The coefficients \({a}_{{i}_{1}\ldots {i}_{2p}}\in {\Bbb{R}}\) can be reshaped into an \({N}^{p}\times {N}^{p}\) matrix A, and f(x) can be rewritten as \(f({\bf{x}})=\frac{1}{2}({{\bf{x}}}^{T}\otimes \cdots \otimes {{\bf{x}}}^{T}){\bf{A}}({\bf{x}}\otimes \cdots \otimes {\bf{x}})\). Moreover, A is a linear combination of tensor products of N × N unitary matrices \({{\bf{A}}}_{i}^{\alpha }\), \({\bf{A}}=\mathop{\sum }\nolimits_{\alpha = 1}^{K}{{\bf{A}}}_{1}^{\alpha }\otimes \cdots \otimes {{\bf{A}}}_{p}^{\alpha }\), where K is the number of decomposition terms required to specify A and p is half the order of the cost function. Therefore, following previous work1, the gradient at x can be mapped to a matrix summation (for symmetric \({{\bf{A}}}_{i}^{\alpha }\)),

\[\nabla f({\bf{x}})=\sum _{\alpha =1}^{K}\sum _{j=1}^{p}\Big(\prod _{i=1,\,i\ne j}^{p}{{\bf{x}}}^{T}{{\bf{A}}}_{i}^{\alpha }{\bf{x}}\Big){{\bf{A}}}_{j}^{\alpha }{\bf{x}}.\tag{3}\]

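With the amplitude encoding method, which encodes x as \(\left|{\bf{x}}\right\rangle =\mathop{\sum }\nolimits_{i = 0}^{N-1}{x}_{i}\left|i\right\rangle\), the iterative equation Eq. (1) is interpreted as an evolution of \(\left|{\bf{x}}\right\rangle\) under an operation D,

\[\left|{{\bf{x}}}^{(t+1)}\right\rangle \propto \left|{{\bf{x}}}^{(t)}\right\rangle -\eta D\left|{{\bf{x}}}^{(t)}\right\rangle ,\qquad D=\sum _{\alpha =1}^{K}\sum _{j=1}^{p}\Big(\prod _{i=1,\,i\ne j}^{p}\left\langle {{\bf{x}}}^{(t)}\right|{{\bf{A}}}_{i}^{\alpha }\left|{{\bf{x}}}^{(t)}\right\rangle \Big){{\bf{A}}}_{j}^{\alpha },\tag{4}\]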

where D is a parameter-dependent gradient operator, which in general is non-unitary. Two methods to decompose A are given in Supplementary Note I(D), and the superscripts (t) are omitted in the remainder.

In this work, we develop the gradient algorithm and propose an experimental protocol to perform the gradient descent iterations, with a prototypical experiment demonstrating polynomial optimization on a quantum simulator. The gradient algorithm in ref. 1 relies on phase estimation, which requires a circuit depth that is substantial for currently available hardware, with logarithmic error and polynomial gate scaling. Hence, that algorithm is difficult to implement on a quantum platform with current techniques. Instead of phase estimation, our method uses a linear combination of unitaries to realize the gradient descent iterations. It provides a gate-based circuit comprising only standard quantum gates, which is experiment-friendly and implementable with current quantum techniques. Our protocol needs only two copies of the quantum state \(\left|{\bf{x}}\right\rangle\) to produce the next state at each iterative step, whereas the previous algorithm1 requires a number of copies that grows linearly with the order of the objective function. The product Kp of the number of decomposition terms K and p (half the order of the cost function) is an important indicator of the efficiency of our protocol. The protocol is especially beneficial when A has an explicit decomposition with comparably small Kp and each \({{\bf{A}}}_{i}^{\alpha }\) is a Pauli product matrix, in which case the gradient can be calculated with circuits of depth \({\mathcal{O}}(Kp\times \mathrm{log}\,(N))\). The requirement of only two copies per iteration also makes the experiment simpler. Therefore, given the high degree of control offered by nuclear magnetic resonance (NMR) techniques22,23, we conduct this homogeneous polynomial optimization on a four-qubit system encoded in a molecule of crotonic acid and iteratively obtain, with high fidelity, a quantum state in the vicinity of the local minimum. Finally, we introduce multidimensional scaling (MDS) problems as a potential application of this protocol.

Results

Experimental protocol

To facilitate implementing the gradient operation, D (shown in Eq. (4)) is rewritten as

where \({{\bf{A}}}_{m}\equiv {{\bf{A}}}_{j}^{\alpha }\) for m = p(α − 1) + j − 1. In addition, \({b}_{j}^{\alpha }=\left\langle {\bf{x}}\right|{{\bf{A}}}_{j}^{\alpha }\left|{\bf{x}}\right\rangle\) and \({M}^{\alpha }=\mathop{\prod }\nolimits_{i = 1}^{p}{b}_{i}^{\alpha }\).

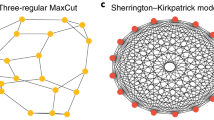

As shown in Fig. 1, implementing the quantum gradient algorithm involves two circuits. The parameters cm (m = 0, …, Kp − 1) are obtained with the parameter circuit in Fig. 1a. This circuit evolves the system

where T1 is the integer satisfying \({2}^{{T}_{1}}=Kp\). When the ancillary system d is in the state \(\left|m\right\rangle\), with m = p(α − 1) + j − 1, \({b}_{j}^{\alpha }\) is obtained by measuring the ancillary system s in the σx basis. Thus Mα (α = 1, …, K), as well as cm, can be calculated once m has traversed [0, Kp − 1].
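Reading out \({b}_{j}^{\alpha }\) from \(\langle {\sigma }_{x}\rangle\) on register s is the standard Hadamard test. The NumPy sketch below illustrates it for one fixed index m, i.e., it assumes register d has already selected a single \({{\bf{A}}}_{j}^{\alpha }\); it computes exact expectation values rather than sampling measurement shots, and the function name `hadamard_test_sx` is our own, not the authors'.

```python
import numpy as np


def hadamard_test_sx(x, U):
    """<sigma_x> on an ancilla after |+>|x> --controlled-U-->; equals Re<x|U|x>."""
    x = np.asarray(x, dtype=complex)
    x = x / np.linalg.norm(x)
    n = len(x)
    plus = np.array([1.0, 1.0]) / np.sqrt(2.0)
    state = np.kron(plus, x)                      # ancilla is the leading qubit
    cu = np.block([[np.eye(n), np.zeros((n, n))],
                   [np.zeros((n, n)), U]])        # apply U only when the ancilla is |1>
    state = cu @ state
    sx_on_ancilla = np.kron(np.array([[0.0, 1.0], [1.0, 0.0]]), np.eye(n))
    return float(np.real(np.vdot(state, sx_on_ancilla @ state)))
```

For a real \(\left|{\bf{x}}\right\rangle\) and a real symmetric Pauli product, \({\rm{Re}}\left\langle {\bf{x}}\right|{\bf{A}}\left|{\bf{x}}\right\rangle\) equals \(\left\langle {\bf{x}}\right|{\bf{A}}\left|{\bf{x}}\right\rangle\), so the σx readout gives \({b}_{j}^{\alpha }\) directly.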

\(\left|{\bf{x}}\right\rangle\) denotes the input state of the work system, and the ancillary systems are T1 + 1 qubits in the \(\left|0\right\rangle {\left|0\right\rangle }^{\otimes {T}_{1}}\) state, where \({T}_{1}={\mathrm{log}\,}_{2}(Kp)\). Squares represent unitary operations and circles represent the state of the controlling qubit. a Parameter circuit: \({b}_{j}^{\alpha }\) is obtained from 〈σx〉 on register s when register d is in \(\left|m=p(\alpha -1)+j-1\right\rangle\). b Iteration circuit, comprising three steps: initialization, D application, and combination.

The iteration circuit, shown in Fig. 1b, generates the iterative state with D. The first ancillary system, s, realizes the linear combination of the two terms in Eq. (4), and the second, d, implements D as a linear combination of operators. In our protocol, the first step is to absorb the minus signs of the cm into the unitary operators Am so that all cm are positive. After the minus signs in Eq. (4) have been handled in this way, the iterative equation is rewritten as

where \(\left|{\bf{x}}^{\prime} \right\rangle\) is the state for the next step. The entire iteration circuit producing \(\left|{\bf{x}}^{\prime} \right\rangle\) can be implemented via the following four steps:

Step 1: the amplitude-encoded state \(\left|{\bf{x}}\right\rangle\) must be prepared efficiently on the work register. For the first iteration, easily prepared states, such as tensor product states, can serve as initial states. For subsequent iterations, the output of the previous iteration provides the required input, so the preparation time can be neglected in this case. To prepare a particular \(\mathrm{log}\,(N)\)-qubit input \(\left|{\bf{x}}\right\rangle ={\sum }_{k}{c}_{k}\left|k\right\rangle\), we employ the amplitude encoding method of refs 24,25: if the ck and the partial sums of \(| {c}_{k}{| }^{2}\) can be computed efficiently by a classical algorithm, this state can be constructed in \({\mathcal{O}}[{\mathrm{poly}}(\mathrm{log}\,(N))]\) steps. Alternatively, one can resort to quantum random access memory (qRAM)26,27,28 or to the Hamiltonian simulation method29. qRAM is an efficient route to state preparation, with complexity \({\mathcal{O}}(\mathrm{log}\,(N))\) once the quantum memory cells have been established.
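One common way to realize the amplitude encoding of Step 1 for real amplitudes is a binary tree of \({R}_{y}\) rotations in the spirit of the Grover–Rudolph construction; whether refs 24,25 use exactly this form is our assumption. In a circuit, the angles of level l would be applied to qubit l as rotations uniformly controlled by the preceding qubits. The sketch computes all angles classically, which costs O(N); the poly(log N) scaling quoted above requires the angles (equivalently, the partial sums) to be efficiently computable. The function names are hypothetical.

```python
import numpy as np


def rotation_tree(c):
    """R_y angles, level by level, mapping |0...0> to sum_k c_k |k> for a real unit vector c."""
    c = np.asarray(c, dtype=float)
    n = len(c).bit_length() - 1
    if 2 ** n != len(c):
        raise ValueError("length must be a power of two")
    levels = []
    for level in range(n):
        block = len(c) >> level          # amplitudes covered by one tree node at this level
        half = block // 2
        angles = []
        for start in range(0, len(c), block):
            left, right = c[start:start + half], c[start + half:start + block]
            if level == n - 1:
                # Leaf level: atan2 of the two amplitudes keeps their signs.
                angles.append(2.0 * np.arctan2(right[0], left[0]))
            else:
                # Inner levels: split the norm of the block between its two halves.
                angles.append(2.0 * np.arctan2(np.linalg.norm(right), np.linalg.norm(left)))
        levels.append(angles)
    return levels


def apply_tree(levels):
    """Classically evaluate the amplitudes produced by the rotation tree."""
    amps = np.array([1.0])
    for angles in levels:
        angles = np.asarray(angles)
        new = np.empty(2 * len(amps))
        new[0::2] = amps * np.cos(angles / 2.0)   # child whose next bit is 0
        new[1::2] = amps * np.sin(angles / 2.0)   # child whose next bit is 1
        amps = new
    return amps
```

For the two-dimensional inputs of the experiment below, the tree reduces to a single angle \(2\,{\rm{atan2}}({x}_{2},{x}_{1})\), i.e., one \({R}_{y}\) rotation on the work qubit.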

For the entire system, with the ancillary registers s and d brought into a specific superposition state by V0 and controlled-V, the system is driven into

with β = 1 + ∑m cm and unitary matrices V and V0

Note that the entries ci must be rescaled to \(\sqrt{{c}_{i}/(\beta -1)}\) to satisfy the unitarity condition, while all other elements {V0,1, V0,2, ⋯, VKp−1,Kp−1} are arbitrary as long as V is unitary.

Step 2: to apply the gradient operation D to the work system, we employ the technique of linear combination of unitaries30,31,32,33,34,35,36. The tensor decompositions of A, namely A0, A1, …, AKp−1, are applied to the work system conditioned on register d being in \(\left|0\right\rangle\), \(\left|1\right\rangle\), …, \(\left|Kp-1\right\rangle\), respectively. In this way, once registers s and d are disentangled, the work system experiences an effective operation that is a linear combination of the Ai (i = 0, …, Kp − 1). However, because these operations are controlled only by d, A0 is applied to the work system in both the \({\left|0\right\rangle }_{s}{\left|0\right\rangle }_{d}\) and \({\left|1\right\rangle }_{s}{\left|0\right\rangle }_{d}\) subspaces. An additional \({{\bf{A}}}_{0}^{\dagger }\) is therefore required for compensation, and the final state is

Step 3: the combination step merges the information in the different subspaces of the ancillary system and generates the desired D on the work space. Controlled-W and W0, the inverse operations of V and V0, are applied in this step, which produces

The last two terms are orthogonal to the first and can be regarded as garbage terms. When the ancillary system is found in the state \({\left|0\right\rangle }_{s}{\left|0\right\rangle }_{d}^{\otimes {T}_{1}}\), the work system holds the iterative state \(\left|{\bf{x}}^{\prime} \right\rangle\) of Eq. (7).

Finally, the resulting state \(\left|{\bf{x}}^{\prime} \right\rangle\) is either taken as the answer, if it satisfies the preset convergence condition, or used as the next input to both the parameter and iteration circuits. More details on the protocol can be found in Supplementary Note I(A) and (B).
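Steps 1–3 can be checked with a small state-vector simulation. The sketch below rests on assumptions of our own: V0 is taken as a real rotation sending \(\left|0\right\rangle\) to \((\left|0\right\rangle +\sqrt{\beta -1}\left|1\right\rangle )/\sqrt{\beta }\); V is a Householder reflection whose first column is \((\sqrt{{c}_{m}/(\beta -1)})_{m}\) (any unitary with that first column would do, as noted in Step 1); negative weights are absorbed into the Am; and registers s, d and the work system are stored as one (2, Kp, N) array. Function names are hypothetical. It mirrors Step 2 by controlling each Am on d only and then undoing the extra A0 in the s = 0 branch.

```python
import numpy as np


def householder_with_first_column(v):
    """Real orthogonal (and self-inverse) matrix whose first column is the unit vector v."""
    v = np.asarray(v, dtype=float)
    e0 = np.zeros_like(v)
    e0[0] = 1.0
    w = e0 - v
    if np.allclose(w, 0.0):
        return np.eye(len(v))
    return np.eye(len(v)) - 2.0 * np.outer(w, w) / (w @ w)


def lcu_iteration(x, unitaries, weights):
    """Return (x_out, p_success), x_out proportional to (I + sum_m w_m A_m)|x>.

    Assumes at least one nonzero weight.
    """
    x = np.asarray(x, dtype=complex)
    x = x / np.linalg.norm(x)
    weights = np.asarray(weights, dtype=float)
    # Absorb negative signs into the unitaries so that every c_m >= 0.
    A = [-U if w < 0 else U for w, U in zip(weights, unitaries)]
    c = np.abs(weights)
    kp, n = len(A), len(x)
    beta = 1.0 + c.sum()

    # V0 on s: |0> -> (|0> + sqrt(beta - 1)|1>) / sqrt(beta)
    V0 = np.array([[1.0, -np.sqrt(beta - 1.0)],
                   [np.sqrt(beta - 1.0), 1.0]]) / np.sqrt(beta)
    # V on d, controlled by s = 1: |0> -> sum_m sqrt(c_m / (beta - 1)) |m>
    V = householder_with_first_column(np.sqrt(c / (beta - 1.0)))

    state = np.zeros((2, kp, n), dtype=complex)    # axes: register s, register d, work
    state[0, 0] = x
    # Step 1: V0 on s, then controlled-V on d.
    state = np.einsum("ab,bmn->amn", V0, state)
    state[1] = V @ state[1]
    # Step 2: A_m controlled only by d = m, then undo the extra A_0 in the s = 0 branch.
    for m in range(kp):
        state[:, m] = state[:, m] @ A[m].T
    state[0, 0] = A[0].conj().T @ state[0, 0]
    # Step 3: controlled-W (W = V^dagger = V^T) and W0 = V0^dagger = V0^T.
    state[1] = V.T @ state[1]
    state = np.einsum("ab,bmn->amn", V0.T, state)

    # Postselect |0>_s |0...0>_d.
    out = state[0, 0]
    p_success = float(np.vdot(out, out).real)
    return out / np.sqrt(p_success), p_success
```

For a gradient step, one would pass wm = −η times the coefficient of Am in D, so that the postselected output is proportional to \(\left|{\bf{x}}\right\rangle -\eta D\left|{\bf{x}}\right\rangle\); the returned probability is \(\parallel \left|{\bf{x}}\right\rangle +\sum _{m}{w}_{m}{{\bf{A}}}_{m}\left|{\bf{x}}\right\rangle {\parallel }^{2}/{\beta }^{2}\).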

Algorithm complexity concerns the computing resources required to process a quantum information task. In particular, the gate complexity counts the basic quantum operations required as a function of the input size, and the memory complexity quantifies the space (memory) required.

Kp is an important indicator to characterize the complexity of the protocol, where K is the number of decomposition terms required to specify A and p is half the order of the cost function. It determines both the size of the ancillary system and the depth of both parameter and iteration circuits.

Excluding state preparation, both the parameter and the iteration circuits require \({\mathcal{O}}({T}_{1}+\mathrm{log}\,(N))\) qubits, where T1 is the integer satisfying \({2}^{{T}_{1}}=Kp\). Two copies of the iterative state are needed for each iteration, so for r iterations the total memory consumption is \({\mathcal{O}}({2}^{r}\mathrm{log}\,(N)+2{T}_{1})\).

Given the encoded input states, the circuits require \({\mathcal{O}}(Kp)\) conditional Am operations. The gate complexity below further assumes that each Am is a Pauli product. Since a \(\mathrm{log}\,(Kp)\)-qubit-controlled gate can be implemented with \({\mathcal{O}}(\mathrm{log}\,{(Kp)}^{2}\times \mathrm{log}\,(N))\) basic quantum gates (see Supplementary Note I(C)37), both circuits require \({\mathcal{O}}(Kp\times \mathrm{log}\,{(Kp)}^{2}\times \mathrm{log}\,(N)\times r)\) basic quantum gates for r iterations.
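The qubit and copy bookkeeping above can be tabulated with a few lines. This hypothetical helper counts only what the text states: one qubit for register s, T1 qubits for register d, and log2 N work qubits per circuit, plus two copies of the current iterate per iteration, hence 2^r copies of the initial state after r iterations. Rounding T1 up when Kp is not a power of two is our assumption, and state-preparation overheads are excluded.

```python
import math


def resource_estimate(K, p, N, r):
    """Qubits per circuit and copies of the initial state for r iterations."""
    kp = K * p
    t1 = math.ceil(math.log2(kp))          # 2**T1 = Kp when Kp is a power of two
    n_work = math.ceil(math.log2(N))       # amplitude encoding of an N-dimensional x
    return {
        "T1": t1,
        "qubits_per_circuit": 1 + t1 + n_work,   # register s + register d + work system
        "copies_of_initial_state": 2 ** r,       # two copies of each iterate per step
    }
```

For the experiment described below (K = 2, p = 2, N = 2), this gives T1 = 2 and four qubits per circuit, matching the four-qubit processor; four iterations would consume 16 copies of the initial state.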

For the state preparation step, the amplitude encoding method consumes \({\mathcal{O}}({\mathrm{log}}\,(N))\) additional qubits and \({\mathcal{O}}({\mathrm{poly}}\,{\mathrm{log}}\,(N))\) steps. If qRAM is adopted for state preparation, the spatial cost is not just \({\mathcal{O}}(\mathrm{log}\,(N))\) qubits: \({\mathcal{O}}(N)\) qutrits are also needed to establish the quantum memory cells.

The protocol relies on a tensor decomposition of A, which is hard in general, especially as Kp grows; it is theoretically efficient only when an explicit decomposition of A with limited Kp is available. Even so, the protocol offers a practical benefit: experiments are comparatively easier because only two copies are required per iteration.

Success probability: for the parameter circuit, the probability of obtaining a required \({b}_{j}^{\alpha }\) depends on the size T1 of the second ancillary register and scales as 1/Kp. For the iteration circuit, the ancillary registers end in \({\left|0\right\rangle }_{s}{\left|0\right\rangle }_{d}^{\otimes {T}_{1}}\), and hence the output is the iterative state, with probability \({P}_{s}=\parallel \left|{{\bf{x}}}^{(t)}\right\rangle \pm D\left|{{\bf{x}}}^{(t)}\right\rangle {\parallel }^{2}/{(\mathop{\sum }\nolimits_{m = 0}^{Kp-1}{c}_{m}+1)}^{2}\).

Apparatus

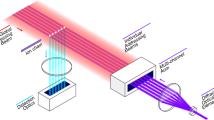

All experiments were carried out on a Bruker DRX 400 MHz NMR spectrometer at room temperature. As shown in Fig. 2a, the four-qubit system is a liquid sample of 13C-labeled crotonic acid dissolved in d-chloroform. The four carbon-13 (13C) nuclear spins serve as the four qubits: C1 as register s, C2 and C3 as register d, and C4 as the work system. The free evolution of this four-qubit system is governed by the internal Hamiltonian,

where νj is the resonance frequency of the jth spin and Jjk is the J-coupling strength between spins j and k. The values of all parameters are given with the experimental Hamiltonian in Supplementary Note II(A). To control the evolution of the system, transverse radio-frequency (r.f.) pulses are applied as the control field,

By tuning the r.f. field parameters in Eq. (14), namely the intensity ω1, phase ϕ, frequency ωr.f., and duration, universal four-qubit quantum gates can in principle be realized together with the internal Hamiltonian of Eq. (13)38,39.

a Molecular structure of the four-qubit sample, crotonic acid. b Quantum circuit for one iteration of the gradient descent algorithm. \(\left|{\bf{x}}\right\rangle\) denotes the initial state of the work system, and the ancillary system comprises T1 + 1 qubits in the \(\left|0\right\rangle {\left|0\right\rangle }^{\otimes {T}_{1}}\) state, where T1 = 2.

Experimental implementation

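A bivariate quartic polynomial, Eq. (15), serves as the cost function and is minimized iteratively with our experimental protocol. The problem reads

\[\mathop{\min }\limits_{{\bf{x}}}f({\bf{x}})=\frac{1}{2}{({\bf{x}}\otimes {\bf{x}})}^{T}{\bf{A}}({\bf{x}}\otimes {\bf{x}})=-2{x}_{1}{x}_{2}^{3}\tag{15}\]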

with ∥x∥ = 1, where \({\bf{x}}={({x}_{1},{x}_{2})}^{T}\) is a two-dimensional real vector. Although the normalization constraint leaves only one independent variable, the amount of information to be processed grows rapidly with the size of the problem. The coefficient matrix A can also be written as a sum of tensor products, A = −σI ⊗ σx + σx ⊗ σz, where σi (i = I, x, y, z) denote the identity and Pauli matrices.
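To make this cost function concrete, the noiseless iteration can be reproduced classically. The sketch below is our illustration, not the authors' code: it stores the decomposition of A as K = 2 terms with p = 2 factors (placing −σI as the first factor of the first term is an arbitrary choice), builds \(D=\sum _{\alpha }\sum _{j}(\prod _{i\ne j}{b}_{i}^{\alpha }){{\bf{A}}}_{j}^{\alpha }\), and applies \(\left|{\bf{x}}\right\rangle \to \left|{\bf{x}}\right\rangle -\eta D\left|{\bf{x}}\right\rangle\) followed by normalization. The learning rate η = 0.1 is our own choice, not the experimental value.

```python
import numpy as np

I2 = np.eye(2)
SX = np.array([[0.0, 1.0], [1.0, 0.0]])
SZ = np.array([[1.0, 0.0], [0.0, -1.0]])

# A = -sigma_I (x) sigma_x + sigma_x (x) sigma_z as K = 2 terms with p = 2 factors each.
TERMS = [[-I2, SX], [SX, SZ]]


def cost(x, terms=TERMS):
    """f(x) = 1/2 sum_alpha prod_j <x|A_j^alpha|x>."""
    return 0.5 * sum(np.prod([x @ a @ x for a in term]) for term in terms)


def grad_operator(x, terms=TERMS):
    """D(x) = sum_alpha sum_j (prod_{i != j} b_i^alpha) A_j^alpha, so D(x) x = grad f."""
    D = np.zeros((len(x), len(x)))
    for term in terms:
        b = [x @ a @ x for a in term]
        for j, a_j in enumerate(term):
            D += np.prod([b[i] for i in range(len(term)) if i != j]) * a_j
    return D


def descend(x0, eta=0.1, steps=100):
    """Normalized iteration |x> -> |x> - eta D|x>, mimicking amplitude encoding."""
    x = np.asarray(x0, dtype=float)
    x = x / np.linalg.norm(x)
    history = [x]
    for _ in range(steps):
        x = x - eta * grad_operator(x) @ x
        x = x / np.linalg.norm(x)
        history.append(x)
    return np.array(history)
```

Starting from either initial guess used in the experiment, (−0.38, 0.92) or (0.86, 0.50), the iterates approach (1/2, √3/2) ≈ (0.50, 0.86), where f = −3√3/8.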

With amplitude encoding (\({\bf{x}}\to \left|{\bf{x}}\right\rangle\)), updating to \(\left|{\bf{x}}^{\prime} \right\rangle\) in the experiment involves both acquiring parameters and carrying out iterations. The iteration circuit, from \({\left|0\right\rangle }_{s}{\left|00\right\rangle }_{d}\left|{\bf{x}}\right\rangle\) to the output \({\left|0\right\rangle }_{s}{\left|00\right\rangle }_{d}\left|{\bf{x}}^{\prime} \right\rangle\), proceeds sequentially through \(\left|{\psi }_{1}\right\rangle\), \(\left|{\psi }_{2}\right\rangle\), and \(\left|{\psi }_{3}\right\rangle\), followed by measurement. As for the parameters, because the \({{\bf{A}}}_{j}^{\alpha }\) are Hermitian, the cm can be obtained from measurements of \({{\bf{A}}}_{j}^{\alpha }\) on \(\left|{\bf{x}}\right\rangle \left\langle {\bf{x}}\right|\) instead of from the parameter circuit. Given the accuracy requirements and the limitations of the molecular sample, we adopt this substitution and concentrate on the iteration part, with T1 = 2 and Kp = 4.

Two sets of experiments, s1 and s2, were conducted with different initial guesses, \({{\bf{x}}}_{0}^{{s}_{1}}\) = (−0.38, 0.92) and \({{\bf{x}}}_{0}^{{s}_{2}}\) = (0.86, 0.50). The iteration circuit was realized as follows:

Initialization: at room temperature, the four-qubit quantum system is in thermal equilibrium. This thermal state can be driven to a pseudo-pure state (PPS) with the spatial averaging method40. Then \({\left|0\right\rangle }_{s}{\left|00\right\rangle }_{d}\left|{\bf{x}}\right\rangle\) was prepared from this PPS by a single-qubit rotation on C4, where x is either an initial guess, \({{\bf{x}}}_{0}^{{s}_{1}}\) or \({{\bf{x}}}_{0}^{{s}_{2}}\), or the output of the previous iteration. PPS preparation and individual qubit control are mature techniques in NMR quantum control; details are given in Supplementary Note II(B).

Iteration circuit: the circuit consists of three steps, so we pack our control pulses into three groups, shown in Fig. 2b: (1) a single-qubit rotation V0 combined with a controlled-V gate realizes the transformation to \(\left|{\psi }_{1}\right\rangle\); (2) conditional operations of the decompositions of A produce \(\left|{\psi }_{2}\right\rangle\); (3) W0 and controlled-W achieve the disentanglement to \(\left|{\psi }_{3}\right\rangle\). Note that the parameters cm in the local operations, such as V and W, are obtained by measuring the iterative state \(\left|{\bf{x}}\right\rangle\). Gradient ascent pulse engineering (GRAPE) was used to generate the three packages of optimized pulses implementing these operations, with simulated fidelities all above 99.9% and durations of 20, 30, and 20 ms, respectively41. In the experiment, we thus obtained ρ1, ρ2, and ρ3, correspondingly.

Measurement: since only the state in the \({\left|0\right\rangle }_{s}{\left|00\right\rangle }_{d}\) subspace is needed to obtain the output \(\left|{\bf{x}}^{\prime} \right\rangle\), full tomography was performed in this subspace. All readout pulses are 0.9 ms long, with 99.8% simulated fidelity. Experimental errors lead to mixed states, whereas the two-dimensional vector \(\left|{\bf{x}}^{\prime} \right\rangle\) should be a pure real state. Hence, a purification step was added after the measurement to find the closest pure state, using the maximum likelihood method42. As a result, the outputs \(\left|{\bf{x}}^{\prime} \right\rangle\), namely \(\left|{\phi }_{i}^{{s}_{1}}\right\rangle\) and \(\left|{\phi }_{i}^{{s}_{2}}\right\rangle\) (i = 1, …, 4), are the pure states closest to our experimental density matrices for the two cases s1 and s2.

Depending on a preset threshold, the output \(\left|{\bf{x}}^{\prime} \right\rangle\) is either used as the updated input \(\left|{\bf{x}}\right\rangle\) for the next iteration circuit or taken as the final result, in which case the iteration terminates.

The results are shown in Fig. 3. For both cases s1 and s2, starting from the initial points \({{\bf{x}}}_{0}^{{s}_{1}}\) and \({{\bf{x}}}_{0}^{{s}_{2}}\), the iterates show a clear trend of convergence toward xopt = (0.50, 0.86) within four iterations. \(\left|{\phi }_{i}^{{s}_{1}}\right\rangle\) and \(\left|{\phi }_{i}^{{s}_{2}}\right\rangle\) (i = 1, …, 4), the outputs of the iteration circuit at the ith iteration, are plotted in panels (a) and (b). For comparison, theoretical simulations are also shown, each taking as input the output of the previous experimental iteration.

a, b The outputs \(\left|x^{\prime} \right\rangle\) in the iteration process, expressed in their orthogonal basis, i.e., \(\left|{\phi }_{i}^{{s}_{1}}\right\rangle\) and \(\left|{\phi }_{i}^{{s}_{2}}\right\rangle\) (i = 1, …, 4). Green triangles are theoretical simulation results and red squares are experimentally measured outputs; both begin from the same initial point. The moving directions are indicated by green or red dashed arrows. c One-dimensional depiction. Beginning from two initial points, s1 (red) and s2 (blue), the cost function decreases with each iteration until the iterates slip into the neighborhood of the local minimum. The dashed arrows show the moving direction at each iteration, and the zoom-in shows them gradually converging to the optimal point where x1 (or \(\cos \theta\)) = 0.5. d Overlaps between the iterative states and the target versus the number of iterations.

In addition, substituting x1 = \(\cos (\theta )\) and \({x}_{2}=\sin (\theta )\) rewrites the cost function as \(f({\bf{x}})=-2{\sin }^{3}\theta \cos \theta\), reducing the problem to a one-dimensional unconstrained optimization whose stationary points include θ = 0 and π/3. Among them, θ = 0 is unstable, while π/3 is the stable local minimum. To show these results explicitly, both the iteration outputs and the values of the cost function are plotted in Fig. 3c. Here the initial guesses are \(\cos (\theta )=-0.38\) (s1, red) and \(\cos (\theta )=0.86\) (s2, blue). As the number of iterations grows, the value of the cost function decreases until the iterate slips into the neighborhood of the local minimum.
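A short worked derivation confirms the stationary points quoted above. Since \(\left|{\bf{x}}\right\rangle\) and \(-\left|{\bf{x}}\right\rangle\) encode the same state, θ can be restricted to [0, π):

```latex
\begin{aligned}
f(\theta)   &= -2\sin^{3}\theta\,\cos\theta,\\
f'(\theta)  &= 2\sin^{4}\theta - 6\sin^{2}\theta\cos^{2}\theta
             = 2\sin^{2}\theta\,\bigl(\sin^{2}\theta - 3\cos^{2}\theta\bigr),\\
f''(\theta) &= 20\sin^{3}\theta\cos\theta - 12\sin\theta\cos^{3}\theta .
\end{aligned}
```

Setting f′(θ) = 0 gives sin θ = 0 (θ = 0) or tan²θ = 3 (θ = π/3 or 2π/3). At θ = π/3, f″ = 3√3 > 0 and f = −3√3/8 ≈ −0.65, the minimum at (1/2, √3/2); at θ = 2π/3, f″ = −3√3 < 0, a maximum. Near θ = 0, f ≈ −2θ³, so θ = 0 is an inflection point rather than an extremum, consistent with its instability.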

As a further check of convergence, Fig. 3d shows the overlap between the optimal state and the output state after each iteration, \(| \langle {\phi }_{\mathrm{opt}}| {\phi }_{i}^{\,{j}\,}\rangle |\) (i = 0, 1, …, 4; j = s1, s2), versus the number of iterations. The overlap converges to 1 whether the initial guess is \({{\bf{x}}}_{0}^{{s}_{1}}\) or \({{\bf{x}}}_{0}^{{s}_{2}}\). Numerical simulations with other initial seeds, and an investigation of the unstable point, are reported in Supplementary Note III.

Furthermore, to check the performance of the experimentally implemented circuits, four-qubit tomography was performed at two points, after PPS preparation and after the iteration circuit, yielding the four-qubit states ρpps and ρ3. The PPS has a fidelity of ~99.01%, and the states ρ3 have an average fidelity of 94%. Details are given in the experimental part, Supplementary Note II(C) and (D).

Application

As a further application, we consider MDS, a technique that provides a visual representation of the pattern of proximities in a dataset. It is a common method of statistical analysis in sociology, quantitative psychology, marketing, and other fields. We apply our method to obtain a quantum version of an algorithm for fitting the simplest MDS model, the one used in most applications.

Consider a matrix A = (δij) that is nonnegative and symmetric with zero diagonal. The numbers δij are the data collected in a classical MDS problem, where δij is the dissimilarity between objects i and j. Representing the n objects as n points (ignoring the objects' sizes), we require the distance between points i and j to approximate the dissimilarity of objects i and j. The goal is to find n points in m dimensions, denoted x1, x2, …, xn, that form a configuration with coordinates in an n × m matrix X.

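When m = 3, the problem reduces to a molecular conformation problem43, which plays an important role in chemistry and biology. Let dij(X) denote the Euclidean distance between the points xi and xj. It follows that

\[{d}_{ij}({\bf{X}})=\parallel {{\bf{x}}}_{i}-{{\bf{x}}}_{j}\parallel =\sqrt{\sum _{s=1}^{m}{({x}_{is}-{x}_{js})}^{2}}.\]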

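We minimize the loss function (raw stress), defined as

\[\sigma ({\bf{X}})=\sum _{i<j}{w}_{ij}{\left({\delta }_{ij}-{d}_{ij}({\bf{X}})\right)}^{2},\]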

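where W = (wij) is a symmetric weight matrix that can be used to encode various additional information. The purpose of this algorithm is to find the configuration that best visualizes the data. We now map it to a quantum version. First, the loss function is rewritten as44

\[\sigma ({\bf{X}})=\sum _{i<j}{w}_{ij}{\delta }_{ij}^{2}-2g({\bf{X}})+{h}^{2}({\bf{X}}),\]

where

\[g({\bf{X}})=\sum _{i<j}{w}_{ij}{\delta }_{ij}{d}_{ij}({\bf{X}})\]

and

\[{h}^{2}({\bf{X}})=\sum _{i<j}{w}_{ij}{d}_{ij}^{2}({\bf{X}}).\]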

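Since the first term is constant, we only need to minimize \(f^{\prime} ({\bf{X}})=-2g({\bf{X}})+{h}^{2}({\bf{X}})\). Both g(X) and h2(X) can be further expressed as traces of matrix products. We have \(g({\bf{X}})={\rm{Tr}}({{\bf{X}}}^{T}B({\bf{X}}){\bf{X}})\) with B(X) = 1/2∑i∑jwijAijkij(X), where

\[{k}_{ij}({\bf{X}})=\left\{\begin{array}{ll}{\delta }_{ij}/{d}_{ij}({\bf{X}}), & {d}_{ij}({\bf{X}})\ne 0,\\ 0, & {d}_{ij}({\bf{X}})=0,\end{array}\right.\qquad {A}_{ij}=({{\bf{e}}}_{i}-{{\bf{e}}}_{j}){({{\bf{e}}}_{i}-{{\bf{e}}}_{j})}^{T},\]

with ei the ith unit vector.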

Similarly, \(h{({\bf{X}})}^{2}={\rm{Tr}}({{\bf{X}}}^{T}C({\bf{X}}){\bf{X}})\) with C(X) = 1/2∑i∑jwijAij. Then, we have

\[f^{\prime} ({\bf{X}})={\rm{Tr}}({{\bf{X}}}^{T}D({\bf{X}}){\bf{X}}),\]

where D(X) = C(X) − 2B(X). Note that X here is an n × m matrix. To represent X as quantum states, we split it into its m column vectors Xv. We can then apply our quantum gradient algorithm to minimize the objective function

\[f^{\prime} ({\bf{X}})=\sum _{v=1}^{m}{{\bf{X}}}_{v}^{T}D({\bf{X}}){{\bf{X}}}_{v}.\]

In this special case, the function order is p = 1, and D(X) is a symmetric matrix that may admit an efficient decomposition. In that case the approach could potentially yield an exponential speedup over the classical algorithm for MDS problems.
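The trace rewriting of the stress can be checked numerically. The sketch below assumes the standard SMACOF conventions of de Leeuw: \({k}_{ij}={\delta }_{ij}/{d}_{ij}\) for \({d}_{ij}\ne 0\) and 0 otherwise, \({A}_{ij}=({{\bf{e}}}_{i}-{{\bf{e}}}_{j}){({{\bf{e}}}_{i}-{{\bf{e}}}_{j})}^{T}\), raw stress summed over i < j, and symmetric δ and W with zero diagonals. The helper names are hypothetical, and the code checks only the classical identity \(\sigma ({\bf{X}})={\sum }_{i<j}{w}_{ij}{\delta }_{ij}^{2}+{\sum }_{v}{{\bf{X}}}_{v}^{T}D({\bf{X}}){{\bf{X}}}_{v}\); it does not implement the quantum algorithm.

```python
import numpy as np


def pairwise_dist(X):
    """Euclidean distances d_ij(X) between the rows of the n x m configuration X."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def raw_stress(X, delta, W):
    """sigma(X) = sum_{i<j} w_ij (delta_ij - d_ij(X))^2."""
    d = pairwise_dist(X)
    iu = np.triu_indices(len(X), k=1)
    return float((W[iu] * (delta[iu] - d[iu]) ** 2).sum())


def b_and_c(X, delta, W):
    """B(X) = 1/2 sum_ij w_ij k_ij(X) A_ij and C = 1/2 sum_ij w_ij A_ij."""
    n = len(X)
    d = pairwise_dist(X)
    B = np.zeros((n, n))
    C = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            e = np.zeros(n)
            e[i], e[j] = 1.0, -1.0
            A_ij = np.outer(e, e)                      # (e_i - e_j)(e_i - e_j)^T
            k_ij = delta[i, j] / d[i, j] if d[i, j] > 0 else 0.0
            B += 0.5 * W[i, j] * k_ij * A_ij
            C += 0.5 * W[i, j] * A_ij
    return B, C


def stress_via_columns(X, delta, W):
    """Constant term plus the sum over columns X_v of X_v^T D(X) X_v, with D = C - 2B."""
    B, C = b_and_c(X, delta, W)
    D = C - 2.0 * B
    iu = np.triu_indices(len(X), k=1)
    const = float((W[iu] * delta[iu] ** 2).sum())
    return const + sum(X[:, v] @ D @ X[:, v] for v in range(X.shape[1]))
```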

This protocol also has potential applications in quantum control technology. For example, the cost function can reduce to a quadratic optimization problem of the form \(f(x)=\left\langle {\bf{x}}\right|{\bf{A}}\left|{\bf{x}}\right\rangle\). If the coefficient matrix A is restricted to a density matrix, the objective function is the overlap between A and \(\left|{\bf{x}}\right\rangle \left\langle {\bf{x}}\right|\). Maximizing f(x) thus produces a state \(\left|{\bf{x}}\right\rangle\) as close as possible to the density matrix A, which can serve as a quantum method for preparing specific states.
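Maximizing \(\left\langle {\bf{x}}\right|{\bf{A}}\left|{\bf{x}}\right\rangle\) over unit vectors is solved by the eigenvector of A with the largest eigenvalue, and normalized gradient ascent on this f amounts to power iteration on I + ηA. The classical sketch below assumes a real density matrix; the helper name `maximize_overlap`, the step size, and the example state are ours.

```python
import numpy as np


def maximize_overlap(rho, x0, eta=1.0, steps=500):
    """Normalized gradient ascent on f(x) = <x|rho|x> (factor 2 absorbed into eta)."""
    x = np.asarray(x0, dtype=float)
    x = x / np.linalg.norm(x)
    for _ in range(steps):
        x = x + eta * (rho @ x)        # ascent step along the gradient direction
        x = x / np.linalg.norm(x)      # keep x a normalized state
    return x, float(x @ rho @ x)
```

For example, with \(\rho =0.5\left|a\right\rangle \left\langle a\right|+0.3\left|b\right\rangle \left\langle b\right|+0.2\left|c\right\rangle \left\langle c\right|\), a = (1, 1, 0)/√2, b = (1, −1, 0)/√2, c = (0, 0, 1), and the start (1, 0, 0), the iterate converges to a and f approaches 0.5.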

Discussion

In this article, we have proposed an experiment-friendly protocol to implement the gradient algorithm. The protocol yields a quantum circuit composed only of standard quantum gates and can therefore, in principle, be realized with current technology. The experimental implementation required only two copies of quantum states for the parameter and iteration circuits. Moreover, if A admits an explicit decomposition into Pauli product matrices, circuits of depth \({\mathcal{O}}(Kp)\times {\mathrm{log}}\,(N)\) (\({\mathcal{O}}(Kp\times {\mathrm{log}}\,{(Kp)}^{2}\times {\mathrm{log}}\,(N))\) basic quantum gates) with \({\mathcal{O}}({T}_{1}+\mathrm{log}\,(N))\) qubits suffice to calculate the gradient.

With a four-qubit NMR quantum system, we demonstrated the optimization of a homogeneous polynomial and iteratively reached the vicinity of the local minimum. The iterations were carried out with the iteration circuit in Fig. 2, while the parameters cm were measured on the iterative states instead of being obtained with the parameter circuit. Starting from either \({{\bf{x}}}_{0}^{{s}_{1}}\) or \({{\bf{x}}}_{0}^{{s}_{2}}\), the demonstration shows that this shallow circuit is feasible on near-future quantum devices. Owing to their advanced control techniques22,45, spin systems serve as a first testbed for demonstrating the effectiveness of a growing number of protocols. In addition, MDS problems are introduced as a potential application of this experimental protocol.

Polynomials subject to constraints are basic models in optimization, and the gradient algorithm is considered one of the most fundamental methods for solving the resulting non-convex problems. Our protocol, which implements the gradient algorithm using quantum mechanics, applies to homogeneous polynomial optimization with spherical constraints. When the coefficient matrix has a simple and explicit decomposition, the protocol could compute the gradient with a number of operations polylogarithmic in the problem size, a speedup with potential uses in near-future quantum machine learning. Our approach could be particularly useful for high-dimensional optimization problems, and the gate-based circuit makes it readily transferable to other systems, such as superconducting circuits and trapped-ion quantum systems, so that it may serve as a subroutine for future practical large-scale quantum computers.

Data availability

All data for the figure and table are available on request. All other data about experiments are available upon reasonable request.

Code availability

Code used to generate the quantum circuits and implement the experiment is available upon reasonable request.

References

Rebentrost, P., Schuld, M., Wossnig, L., Petruccione, F. & Lloyd, S. Quantum gradient descent and Newton’s method for constrained polynomial optimization. New J. Phys. 21(7), 073023 (2019).

Chesi, G. LMI techniques for optimization over polynomials in control: a survey. IEEE Trans. Autom. Control 55, 2500–2510 (2010).

Ghosh, A. et al. A polynomial based approach to extract the maxima of an antipodally symmetric spherical function and its application to extract fiber directions from the orientation distribution function in diffusion MRI. In 11th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), 237–248 (New York, 2008). hal-00340600.

Blondel, M., Ishihata, M., Fujino, A. & Ueda, N. Polynomial networks and factorization machines: new insights and efficient training algorithms. Preprint at https://arxiv.org/pdf/1607.08810.pdf (2016).

Kofidis, E. & Regalia, P. A. On the best rank-1 approximation of higher-order supersymmetric tensors. SIAM J. Matrix Anal. Appl. 23, 863–884 (2002).

So, A. M.-C., Ye, Y. & Zhang, J. A unified theorem on SDP rank reduction. Math. Oper. Res. 33, 910–920 (2008).

He, S., Li, Z. & Zhang, S. Approximation algorithms for homogeneous polynomial optimization with quadratic constraints. Math. Program. 125, 353–383 (2010).

Géron, A. Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems (O’Reilly Media, 2019).

Zhang, J. Gradient descent based optimization algorithms for deep learning models training. Preprint at https://arxiv.org/abs/1903.03614 (2019).

Manogaran, G. & Lopez, D. Health data analytics using scalable logistic regression with stochastic gradient descent. Int. J. Adv. Intell. Paradig. 10, 118–132 (2018).

Wang, Z. et al. Insensitive stochastic gradient twin support vector machines for large scale problems. Inf. Sci. 462, 114–131 (2018).

Du, S. S., Lee, J. D., Li, H., Wang, L. & Zhai, X. Gradient descent finds global minima of deep neural networks. In Proceedings of the 36th International Conference on Machine Learning, 1675–1685 (PMLR, 2019).

Nielsen, M. A. & Chuang, I. Quantum Computation and Quantum Information (American Association of Physics Teachers, 2002).

Shor, P. W. Algorithms for quantum computation: discrete logarithms and factoring. In Proceedings 35th Annual Symposium on Foundations of Computer Science, 124–134 (IEEE, Santa Fe, NM, 1994).

Grover, L. K. A fast quantum mechanical algorithm for database search. In Proceedings of the Twenty-eighth Annual ACM Symposium on Theory of Computing, 212–219 (ACM, 1996).

Harrow, A. W., Hassidim, A. & Lloyd, S. Quantum algorithm for linear systems of equations. Phys. Rev. Lett. 103, 150502 (2009).

Jordan, S. P. Fast quantum algorithm for numerical gradient estimation. Phys. Rev. Lett. 95, 050501 (2005).

Gilyén, A., Arunachalam, S. & Wiebe, N. Optimizing quantum optimization algorithms via faster quantum gradient computation. In Proceedings of the Thirtieth Annual ACM-SIAM Symposium on Discrete Algorithms, 1425–1444 (SIAM, 2019).

Li, J., Yang, X., Peng, X. & Sun, C.-P. Hybrid quantum-classical approach to quantum optimal control. Phys. Rev. Lett. 118, 150503 (2017).

Schuld, M., Bergholm, V., Gogolin, C., Izaac, J. & Killoran, N. Evaluating analytic gradients on quantum hardware. Phys. Rev. A 99, 032331 (2019).

Kerenidis, I. & Prakash, A. Quantum gradient descent for linear systems and least squares. Phys. Rev. A 101, 022316 (2020).

Vandersypen, L. M. K. & Chuang, I. L. NMR techniques for quantum control and computation. Rev. Mod. Phys. 76, 1037 (2005).

Ladd, T. D. et al. Quantum computers. Nature 464, 45–53 (2010).

Grover, L. & Rudolph, T. Creating superpositions that correspond to efficiently integrable probability distributions. Preprint at https://arxiv.org/abs/quant-ph/0208112 (2002).

Soklakov, A. N. & Schack, R. Efficient state preparation for a register of quantum bits. Phys. Rev. A 73, 012307 (2006).

Giovannetti, V., Lloyd, S. & Maccone, L. Quantum random access memory. Phys. Rev. Lett. 100, 160501 (2008).

Giovannetti, V., Lloyd, S. & Maccone, L. Architectures for a quantum random access memory. Phys. Rev. A 78, 052310 (2008).

Arunachalam, S., Gheorghiu, V., Jochym-O’Connor, T., Mosca, M. & Srinivasan, P. V. On the robustness of bucket brigade quantum RAM. New J. Phys. 17, 123010 (2015).

Wossnig, L., Zhao, Z. & Prakash, A. Quantum linear system algorithm for dense matrices. Phys. Rev. Lett. 120, 050502 (2018).

Long, G.-L. General quantum interference principle and duality computer. Commun. Theor. Phys. 45, 825 (2006).

Long, G. L. Mathematical theory of the duality computer in the density matrix formalism. Preprint at https://arxiv.org/abs/quant-ph/0605087 (2006).

Childs, A. M. & Wiebe, N. Hamiltonian simulation using linear combinations of unitary operations. Quantum Inf. Comput. 12, 901–924 (2012).

Wei, S.-J., Ruan, D. & Long, G.-L. Duality quantum algorithm efficiently simulates open quantum systems. Sci. Rep. 6, 30727 (2016).

Wei, S.-J. & Long, G.-L. Duality quantum computer and the efficient quantum simulations. Quantum Inf. Process. 15, 1189–1212 (2016).

Wen, J. W. et al. One-step method for preparing the experimental pure state in nuclear magnetic resonance. Sci. China Phys. Mech. Astron. 63, 230321 (2020).

Xin, T., Hao, L., Hou, S.-Y., Feng, G.-R. & Long, G.-L. Preparation of pseudo-pure states for NMR quantum computing with one ancillary qubit. Sci. China Phys. Mech. Astron. 62, 960312 (2019).

Xin, T., Wei, S.-J., Pedernales, J. S., Solano, E. & Long, G.-L. Quantum simulation of quantum channels in nuclear magnetic resonance. Phys. Rev. A 96, 062303 (2017).

Lu, D. et al. Enhancing quantum control by bootstrapping a quantum processor of 12 qubits. npj Quantum Inf. 3, 1–7 (2017).

Li, K. et al. Measuring holographic entanglement entropy on a quantum simulator. npj Quantum Inf. 5, 1–6 (2019).

Cory, D. G., Fahmy, A. F. & Havel, T. F. Ensemble quantum computing by NMR spectroscopy. Proc. Natl Acad. Sci. USA 94, 1634–1639 (1997).

Khaneja, N., Reiss, T., Kehlet, C., Schulte-Herbrüggen, T. & Glaser, S. J. Optimal control of coupled spin dynamics: design of NMR pulse sequences by gradient ascent algorithms. J. Magn. Reson. 172, 296–305 (2005).

Rehacek, J., Hradil, Z. & Jezek, M. Iterative algorithm for reconstruction of entangled states. Phys. Rev. A 63, 040303 (2001).

Glunt, W., Hayden, T. & Raydan, M. Molecular conformations from distance matrices. J. Comput. Chem. 14, 114–120 (1993).

De Leeuw, J. Convergence of the majorization method for multidimensional scaling. J. Classif. 5, 163–180 (1988).

Long, G., Feng, G. & Sprenger, P. Overcoming synthesizer phase noise in quantum sensing. Quantum Eng. 1, e27 (2019).

Acknowledgements

This research was supported by the National Basic Research Program of China. K.L. acknowledges the National Natural Science Foundation of China under Grant No. 11905111. S.W., F.Z., P.G., Z.Z., T.X., and G.L. acknowledge the National Natural Science Foundation of China under Grants No. 11974205 and No. 11774197. This work was also supported by the National Key Research and Development Program of China (2017YFA0303700), the Key Research and Development Program of Guangdong Province (2018B030325002), and the Beijing Advanced Innovation Center for Future Chip (ICFC). S.W. is also supported by the China Postdoctoral Science Foundation (2020M670172). T.X. is also supported by the National Natural Science Foundation of China (Grants No. 11905099 and No. U1801661) and the Guangdong Basic and Applied Basic Research Foundation (Grant No. 2019A1515011383). X.W. was partially supported by the National Key Research and Development Program of China (Grant No. 2018YFA0306703).

Author information

Contributions

G.L. initiated the project. S.W. formulated the theory. K.L., P.G., and Z.Z. performed the calculation. K.L., F.Z., and T.X. designed and carried out the experiments. All work was carried out under the supervision of P.R., X.W., and G.L. All authors contributed to writing the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, K., Wei, S., Gao, P. et al. Optimizing a polynomial function on a quantum processor. npj Quantum Inf 7, 16 (2021). https://doi.org/10.1038/s41534-020-00351-5

DOI: https://doi.org/10.1038/s41534-020-00351-5

This article is cited by

-

Euclidean time method in generalized eigenvalue equation

Quantum Information Processing (2024)

-

Near-term quantum computing techniques: Variational quantum algorithms, error mitigation, circuit compilation, benchmarking and classical simulation

Science China Physics, Mechanics & Astronomy (2023)

-

Quantum gradient descent algorithms for nonequilibrium steady states and linear algebraic systems

Science China Physics, Mechanics & Astronomy (2022)

-

Quantum algorithms for the generalized eigenvalue problem

Quantum Information Processing (2022)

-

Controlled Dense Coding Using Generalized GHZ-type State in a Noisy Network

International Journal of Theoretical Physics (2022)