Abstract

Randomized benchmarking is a technique for estimating the average fidelity of a set of quantum gates. However, if this gateset is not the multi-qubit Clifford group, robustly extracting the average fidelity is difficult. Here, we propose a new method based on representation theory that has little experimental overhead and robustly extracts the average fidelity for a broad class of gatesets. We apply our method to a multi-qubit gateset that includes the T-gate, and propose a new interleaved benchmarking protocol that extracts the average fidelity of a two-qubit Clifford gate using only single-qubit Clifford gates as reference.

Similar content being viewed by others

Introduction

Randomized benchmarking1,2,3,4,5,6,7 is arguably the most prominent experimental technique for assessing the quality of quantum operations in experimental quantum computing devices.4,8,9,10,11,12,13 Key to the wide adoption of randomized benchmarking are its scalability with respect to the number of qubits and its insensitivity to errors in state preparation and measurement. It has also recently been shown to be insensitive to variations in the error associated to different implemented gates.14,15,16

The randomized benchmarking protocol is defined with respect to a gateset G, a discrete collection of quantum gates. Usually, this gateset is a group, such as the Clifford group.2 The goal of randomized benchmarking is to estimate the average fidelity17 of this gateset.

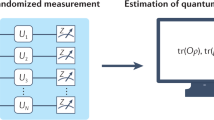

Randomized benchmarking is performed by randomly sampling a sequence of gates of a fixed length m from the gateset G. This sequence is applied to an initial state ρ, followed by a global inversion gate such that in the absence of noise the system is returned to the starting state. Then the overlap between the output state and the initial state is estimated by measuring a two-component POVM {Q, 1 − Q}. This is repeated for many sequences of the same length m and the outputs are averaged, yielding a single average survival probability pm. Repeating this procedure for various sequence lengths m yields a list of probabilities {pm}m.

Usually G is chosen to be the Clifford group. It can then be shown (under the assumption of gate-independent CPTP noise)2 that the data {pm}m can be fitted to a single exponential decay of the form

where A, B depend on state preparation and measurement, and the quality parameter f only depends on how well the gates in the gateset G are implemented. This parameter f can then be straightforwardly related to the average fidelity Favg.2 The fitting relation Eq. (1) holds intuitively because averaging over all elements of the Clifford group effectively depolarizes the noise affecting the input state ρ. This effective depolarizing noise then accretes exponentially with sequence length m.

However it is possible, and desirable, to perform randomized benchmarking on gatesets that are not the Clifford group, and a wide array of proposals for randomized benchmarking using non-Clifford gatesets appear in the literature.18,19,20,21,22,23,24 The most prominent use case is benchmarking a gateset G that includes the vital T-gate18,19,22 which, together with the Clifford group, forms a universal set of gates for quantum computing.17 Another use case is simultaneous randomized benchmarking,23 which extracts information about crosstalk and unwanted coupling between neighboring qubits by performing randomized benchmarking on the gateset consisting of single qubit Clifford gates on all qubits. In these cases, and in other examples of randomized benchmarking with non-Clifford gatesets,20,22,23 the fitting relation Eq. (1) does not hold and must instead be generalized to

where RG is an index set that only depends on the chosen gateset, the fλ are general ‘quality parameters’ that only depend on the gates being implemented and the Aλ prefactors depend only on SPAM (when the noise affecting the gates is trace preserving there will be a λ ∈ RG -corresponding to the trivial subrepresentation- such that fλ = 1, yielding the constant offset seen in Eq. (1)). The above holds because averaging over sequences of elements of these non-Clifford groups averaging does not fully depolarize the noise. Rather the system state space will split into several ‘sectors’ labeled by λ, with a different depolarization rate, set by fλ, affecting each sector. The interpretation of the parameters fλ varies depending on the gateset G. In the case of simultaneous randomized benchmarking23 they can be interpreted as a measure of crosstalk and unwanted coupling between neighboring qubits. For other gatesets an interpretation is not always available. However, as was pointed out for specific gatesets in ref. 18,19,20,22 and for general finite groups in ref., 21 the parameters fλ can always be jointly related (see Eq. (5)) to the average fidelity Favg of the gateset G. This means that in theory randomized benchmarking can extract the average fidelity of a gateset even when it is not the Clifford group.

However in practice the multi-parameter fitting problem given by Eq. (2) is difficult to perform, with poor confidence intervals around the parameters fλ unless impractically large amounts of data are gathered. More fundamentally it is, even in the limit of infinite data, impossible to associate the estimates from the fitting procedure to the correct decay channel in Eq. (2) and thus to the correct fλ, making it impossible to reliably reconstruct the average fidelity of the gateset.

In the current literature on non-Clifford randomized benchmarking, with the notable exception of ref., 22 this issue is sidestepped by performing randomized benchmarking several times using different input states ρλ that are carefully tuned to maximize one of the prefactors Aλ while minimizing the others. This is unsatisfactory for several reasons: (1) the accuracy of the fit now depends on the preparation of ρλ, undoing one of the main advantages of randomized benchmarking over other methods such as direct fidelity estimation,25 and (2) it is, for more general gatesets, not always clear how to find such a maximizing state ρλ. These problems aren’t necessarily prohibitive for small numbers of qubits and/or exponential decays (see for instance26) but they do limit the practical applicability of current non-Clifford randomized benchmarking protocols on many qubits and more generally restrict which groups can practically be benchmarked.

Here, we propose an adaptation of the randomized benchmarking procedure, which we call character randomized benchmarking, which solves the above problems and allows reliable and efficient extraction of average fidelities for gatesets that are not the Clifford group. We begin by discussing the general method, before applying it to specific examples. Finally, we discuss using character randomized benchmarking in practice and argue the new method does not impose significant experimental overhead. Previous adaptations of randomized benchmarking, as discussed in8,27,28 and in particular22 (where the idea of projecting out exponential decays was first proposed for a single qubit protocol), can be regarded as special cases of our method.

Results

In this section, we present the main result of this paper: the character randomized benchmarking protocol, which leverages techniques from character theory29 to isolate the exponential decay channels in Eq. (2). One can then fit these exponential decays one at a time, obtaining the quality parameters fλ. We emphasize that the data generated by character randomized benchmarking can always be fitted to a single exponential, even if the gateset being benchmarked is not the Clifford group. Moreover, our method retains its validity in the presence of leakage, which also causes deviations from single exponential behavior for standard randomized benchmarking14 (even when the gateset is the Clifford group).

For the rest of the paper, we will use the Pauli Transfer Matrix (PTM) representation of quantum channels (This representation is also sometimes called the Liouville representation or affine representation of quantum channels30,31). Key to this representation is the realization that the set of normalized non-identity Pauli matrices σq on q qubits, together with the normalized identity σ0 := 2−q/21 forms an orthonormal basis (with respect to the trace inner product) of the Hilbert space of Hermitian matrices of dimension 2q. Density matrices ρ and POVM elements Q can then be seen as vectors and co-vectors expressed in the basis \(\{ \sigma _0\} \cup {\boldsymbol{\sigma }}_{\mathbf{q}}\), denoted |ρ〉〉 and 〈〈Q| respectively. Quantum channels \({\cal{E}}\)32 are then matrices (we will denote a channel and its PTM representation by the same letter) and we have \({\cal{E}}|\rho \rangle \rangle = |{\cal{E}}(\rho )\rangle \rangle\). Composition of channels \({\cal{E}},{\cal{F}}\)corresponds to multiplication of their PTM representations, that is \(|{\cal{E}} \circ {\cal{F}}(\rho )\rangle \rangle = {\cal{E}}{\cal{F}}|\rho \rangle \rangle\). Moreover, we can write expectation values as bra-ket inner products, i.e. \(\langle \langle Q|{\cal{E}}|\rho \rangle \rangle = {\mathrm{Tr}}(Q{\cal{E}}(\rho ))\). The action of a unitary G on a matrix ρ is denoted \({\cal{G}}\), i.e. \({\cal{G}}|\rho \rangle \rangle = |G\rho G^\dagger \rangle \rangle\) and we denote its noisy implementation by \(\tilde {\cal{G}}\). For a more expansive review of the PTM representation, see Section I.2 in the Supplementary Methods.

We will, for ease of presentation, also assume gate-independent noise. This means we assume the existence of a CPTP map \({\cal{E}}\) such that \(\tilde {\cal{G}} = {\cal{E}}{\cal{G}}\) for all G ∈ G. We however emphasize that our protocol remains functional even in the presence of gate-dependent noise. We provide a formal proof of this, generalizing the modern treatment of standard randomized benchmarking with gate-dependent noise,14 in the Methods section.

Standard randomized benchmarking

Let’s first briefly recall the ideas behind standard randomized benchmarking. Subject to the assumption of gate-independent noise, the average survival probability pm of the standard randomized benchmarking procedure over a gateset G (with input state ρ and measurement POVM {Q, 1 − Q}) with sequence length m can be written as: ref. 2

where \({\Bbb E}_{G \in {\mathrm{G}}}\) denotes the uniform average over G. The key insight to randomized benchmarking is that \({\cal{G}}\) is a representation (for a review of representation theory see section I.1 in the Supplementary Methods) of G ∈ G. This representation will not be irreducible but will rather decompose into irreducible subrepresentations, that is \({\cal{G}} = \oplus _{\lambda \in R_{\mathrm{G}}}\phi _\lambda (G)\) where RG is an index set and ϕλ are irreducible representations of G which we will assume to all be mutually inequivalent. Using Schur’s lemma, a fundamental result in representation theory, we can write Eq. (3) as

where \({\cal{P}}_\lambda\) is the orthogonal projector onto the support of ϕλ (note that this is a superoperator) and \(f_\lambda : = {\mathrm{Tr}}({\cal{P}}_\lambda {\cal{E}})/{\mathrm{Tr}}({\cal{P}}_\lambda )\) is the quality parameter associated to the representation ϕλ (note that the trace is taken over superoperators). This reproduces Eq. (2). A formal proof of Eq. (4) can be found in the Supplementary Methods and in ref. 21 The average fidelity of the gateset G can then be related to the parameters fλ as

Note again that RG includes the trivial subrepresentation carried by |1〉〉, so when \({\cal{E}}\) is a CPTP map there is a λ ∈ RG for which fλ = 1. See Lemma’s 4 and 5 in the Supplementary Methods for a proof of Eq. (5)

Character randomized benchmarking

Now we present our new method called character randomized benchmarking. For this we make use of concepts from the character theory of representations.29 Associated to any representation \(\hat \phi\) of a group \({\hat{\mathrm G}}\) is a character function \(\chi _{\hat \phi }:{\hat{\mathrm G}} \to {\Bbb R}\), from the group to the real numbers (Generally the character function is a map to the complex numbers, but in our case it is enough to only consider real representations). Associated to this character function is the following projection formula:29

where \({\cal{P}}_{\hat \phi }\) is the projector onto the support of all subrepresentations of \(\hat {\cal{G}}\) equivalent to \(\hat \phi\) and \(|\hat \phi |\) is the dimension of the representation \(\hat \phi\). We will leverage this formula to adapt the randomized benchmarking procedure in a way that singles out a particular exponential decay \(f_\lambda ^m\) in Eq. (2).

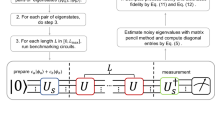

We begin by choosing a group G. We will call this group the ‘benchmarking group’ going forward and it is for this group/gateset that we will estimate the average fidelity. In general we will have that \({\cal{G}} = \oplus _{\lambda \in R_{\mathrm{G}}}\phi _\lambda (G)\) where RG is an index set and ϕλ are irreducible representations of G which we will assume to all be mutually inequivalent (It is straightforward to extend character randomized benchmarking to also cover the presence of equivalent irreducible subrepresentation. However do not make this extension explicit here in the interest of simplicity). Now fix a λ′ ∈ RG. fλ′ is the quality parameter associated to a specific subrepresentation ϕλ′ of \({\cal{G}}\). Next consider a group \({\hat{\mathrm G}} \subset {\mathrm{G}}\) such that the PTM representation \(\hat {\cal{G}}\) has a subrepresentation \(\hat \phi\), with character function \(\chi _{\hat \phi }\), that has support inside the representation ϕλ′ of G, i.e. \({\cal{P}}_{\hat \phi } \subset {\cal{P}}_{\lambda \prime }\) where \({\cal{P}}_{\lambda \prime }\) is again the projector onto the support of ϕλ′. We will call this group \({\hat{\mathrm G}}\) the character group. Note that such a pair \({\hat{\mathrm G}},\hat \phi\) always exists; we can always choose \({\hat{\mathrm G}} = {\mathrm{G}}\) and \(\hat \phi = \phi _{\lambda \prime }\). However other natural choices often exist, as we shall see when discussing examples of character randomized benchmarking. The idea behind the character randomized benchmarking protocol, described in Fig. 1, is now to effectively construct Eq. (6) by introducing the application of an extra gate \(\hat G\) drawn at random from the character group \({\hat{\mathrm G}}\) into the standard randomized benchmarking protocol. In practice this gate will not be actively applied but must be compiled into the gate sequence following it, thus not resulting in extra noise (this holds even in the case of gate-dependent noise, see Methods).

The character randomized benchmarking protocol. Note the inclusion of the gate \(\hat G\) and the average over the character function \(\chi _{\hat \phi }\), which form the key ideas behind character randomized benchmarking. Note also that this extra gate \(\hat G\) is compiled into the sequence of gates (G1, …, Gm) and thus does not result in extra noise

This extra gate \(\hat G \in {\hat{\mathrm G}}\) is not included when computing the global inverse \(G_{{\mathrm{inv}}} = (G_1 \ldots G_m)^\dagger\). The average over the elements of \({\hat{\mathrm G}}\) is also weighted by the character function \(\chi _{\hat \phi }\) associated to the representation \(\hat \phi\) of \({\hat{\mathrm G}}\). Similar to eq. (3) we can rewrite the uniform average over all \(\vec G \in {\mathrm{G}}^{ \times m}\) and \(\hat G \in {\hat{\mathrm G}}\) as

Using the character projection formula (Eq. (6)), the linearity of quantum mechanics, and the standard randomized benchmarking representation theory formula (Eq. (4)) we can write this as

since we have chosen \({\hat{\mathrm G}}\) and \(\hat \phi\) such that \({\cal{P}}_{\hat \phi } \subset {\cal{P}}_{\lambda \prime }\). This means the character randomized benchmarking protocol isolates the exponential decay associated to the quality parameter fλ′ independent of state preparation and measurement. We can now extract fλ′ by fitting the data-points \(k_m^{\lambda^{\prime} }\) to a single exponential of the form \(Af_{\lambda \prime }^m\). Note that this remains true even if \({\cal{E}}\) is not trace-preserving, i.e. the implemented gates experience leakage. Repeating this procedure for all λ′ ∈ RG (choosing representations \(\hat \phi\) of \({\hat{\mathrm G}}\) such that \({\cal{P}}_{\hat \phi } \subset {\cal{P}}_{\lambda \prime }\)) we can reliably estimate all quality parameters fλ associated with randomized benchmarking over the group G. Once we have estimated all these parameters we can use Eq. (5) to obtain the average fidelity of the gateset G.

Discussion

We will now discuss several examples of randomized benchmarking experiments where the character randomized benchmarking approach is beneficial. The first example, benchmarking T-gates, is taken from the literature18 while the second one, performing interleaved benchmarking on a 2-qubit gate using only single qubit gates a reference, is a new protocol. We have also implemented this last protocol to characterize a CPHASE gate between spin qubits in Si/SiGe quantum dots, see ref. 33

Benchmarking T-gates

The most common universal gateset considered in the literature is the Clifford + T gateset.17 The average fidelity of the Clifford gates can be extracted using standard randomized benchmarking over the Clifford group, but to extract the average fidelity of the T gate a different approach is needed. Moreover one would like to characterize this gate in the context of larger circuits, meaning that we must find a family of multi-qubit groups that contains the T gate. One choice is to perform randomized benchmarking over the group Tq generated by the CNOT gate between all pairs of qubits (in both directions), Pauli X on all qubits and T gates on all qubits (another choice would be to use dihedral randomized benchmarking22 but this is limited to single qubit systems, or to use the interleaved approach proposed in ref. 24). This group is an example of a CNOT-dihedral group and its use for randomized benchmarking was investigated in.18 There it was derived that the PTM representation of the group Tq decomposes into 3 irreducible subrepresentations ϕ1, ϕ2, ϕ3 with associated quality parameters f1, f2, f3 and projectors

where σ0 is the normalized identity, σq is the set of normalized Pauli matrices and \({\cal{Z}}_q\) is the subset of the normalized Pauli matrices composed only of tensor products of Z and 1. Noting that f1 = 1 if the implemented gates \(\tilde {\cal{G}}\) are CPTP we must estimate f2 and f3 in order to estimate the average fidelity of Tq. Using standard randomized benchmarking this would thus lead to a two-decay, four-parameter fitting problem, but using character randomized benchmarking we can fit f2 and f3 separately. Let’s say we want to estimate f2, associated to ϕ2, using character randomized benchmarking. In order to perform character randomized benchmarking we must first choose a character group \({\hat{\mathrm G}}\). A good choice for \({\hat{\mathrm G}}\) is in this case the Pauli group Pq. Note that Pq ⊂ Tq since T4 = Z the Pauli Z matrix.

Having chosen \({\hat{\mathrm G}} = {\mathrm{P}}_q\) we must also choose an irreducible subrepresentation \(\hat \phi\) of the PTM representation of the Pauli group Pq such that \({\cal{P}}_{\hat \phi }{\cal{P}}_2 = {\cal{P}}_{\hat \phi }\). As explained in detail in section V.I in the Supplementary Methods the PTM representation of the Pauli group has 2q irreducible inequivalent subrepresentations of dimension one. These representations ϕσ are each associated to an element \(\sigma \in \{ \sigma _0\} \cup {\boldsymbol{\sigma }}_{\mathbf{q}}\) of the Pauli basis. Concretely we have that the projector onto the support of ϕσ is given by \({\cal{P}}_\sigma = |\sigma \rangle \rangle \langle \langle \sigma |\). This means that, to satisfy \({\cal{P}}_{\hat \phi }{\cal{P}}_2 = {\cal{P}}_{\hat \phi }\) we have to choose \(\hat \phi = \phi _\sigma\) with \(\sigma \in {\cal{Z}}_q\). One could for example choose σ proportional to Z⊗q. The character associated to the representation ϕσ is χσ(P) = (−1)〈P,σ〉 where 〈P, σ〉 = 1 if and only if P and σ anti-commute and zero otherwise (we provided a proof of this fact in section V.1 of the Supplementary Methods). Hence the character randomized benchmarking experiment with benchmarking group Tq, character group Pq and subrepresentation \(\hat \phi = \phi _\sigma\) produces data that can be described by

allowing us to reliably extract the parameter f2. We can perform a similar experiment to extract f3, but we must instead choose \(\sigma \in {\boldsymbol{\sigma }}_q\backslash {\cal{Z}}\). A good choice would for instance be σ proportional to X⊗q.

Having extracted f2 and f3 we can then use Eq. (5) to obtain the average fidelity of the gateset Tq as:18

Finally we would like to note that in order to get good signal one must choose ρ and Q appropriately. The correct choice is suggested by Eq. (7). For instance, if when estimating f2 as above we choose σ proportional to Z⊗q we must then choose \(Q = \frac{1}{2}(1 + Z^{ \otimes 2})\) and \(\rho = \frac{1}{d}(1 + Z^{ \otimes 2})\). This corresponds to the even parity eigenspace (in the computational basis).

2-for-1 interleaved benchmarking

The next example is a new protocol, which we call 2-for-1 interleaved randomized benchmarking. It is a way to perform interleaved randomized benchmarking34 of a 2-qubit Clifford gate C using only single qubit Clifford gates as reference gates. The advantages of this are (1) lower experimental requirements and (2) a higher reference gate fidelity relative to the interleaved gate fidelity allows for a tighter estimate of the average fidelity of the interleaved gate (assuming single qubit gates have higher fidelity than two qubit gates). This latter point is related to an oft overlooked drawback of interleaved randomized benchmarking, namely that it does not yield a direct estimate of the average fidelity F(C) of the interleaved gate C but only gives upper and lower bounds on this fidelity. These upper and lower bounds moreover depend34,35 on the fidelity of the reference gates and can be quite loose if the fidelity of the reference gates is low. To illustrate the advantages of this protocol we have performed a simulation comparing it to standard interleaved randomized benchmarking (details can be found in section V.2 in the Supplementary Methods). Following recent single qubit randomized benchmarking and Bell state tomography results in spin qubits in Si/SiGe quantum dots36,37,38 we assumed single qubit gates to have a fidelity of \(F_{{\mathrm{avg}}}^{(1)} = 0.987\) and two-qubit gates to have a fidelity of Favg(C) = 0.898. Using standard interleaved randomized benchmarking34 we can guarantee (using the optimal bounds of ref. 35) that the fidelity of the interleaved gate is lower bounded by \(F_{{\mathrm{avg}}}^{{\mathrm{int}}} \approx 0.62\) while using 2-for-1 interleaved randomized benchmarking we can guarantee that the fidelity of interleaved gate is lower bounded by Favg(C) ≈ 0.79, a significant improvement that is moreover obtained by a protocol requiring less experimental resources. On top of this the 2-for-1 randomized benchmarking protocol provides strictly more information than simply the average fidelity, we can also extract a measure of correlation between the two qubits, as per.23 In another paper33 we have used this protocol to characterize a CPHASE gate between spin qubits in Si/SiGe quantum dots.

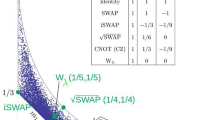

An interleaved benchmarking experiment consists of two stages, a reference experiment and an interleaved experiment. The reference experiment for 2-for-1 interleaved randomized benchmarking consists of character randomized benchmarking using 2 copies of the single-qubit Clifford group \({\mathrm{G}} = {\mathrm{C}}_1^{ \otimes 2}\) as the benchmarking group (this is also the group considered in simultaneous randomized benchmarking23). The PTM representation of \({\mathrm{C}}_1^{ \otimes 2}\) decomposes into four irreducible subrepresentations and thus the fitting problem of a randomized benchmarking experiment over this group involves 4 quality parameters fw indexed by w = (w1, w2) ∈ {0, 1}×2. The projectors onto the associated irreducible representations ϕw are

where σw is the set of normalized 2-qubit Pauli matrices that have non-identity Pauli matrices at the i’th tensor factor if and only if wi = 1. To perform character randomized benchmarking we choose as character group \({\hat{\mathrm G}} = {\mathrm{P}}_2\) the 2-qubit Pauli group. For each w ∈ {0, 1}×2 we can isolate the parameter fw by correctly choosing a subrepresentation ϕσ of the PTM representation of P2. Recalling that \({\cal{P}}_\sigma = |\sigma \rangle \rangle \langle \langle \sigma |\) we can choose \(\hat \phi = \phi _\sigma\) for \(\sigma = (Z_1^w \otimes Z_2^w)/2\) to isolate the parameter fw for w = (w1, w2) ∈ {0, 1}×2. We give the character functions associated to these representation in section V.2 of the Supplementary Methods. Once we have obtained all quality parameters fw we can compute the average reference fidelity Fref using Eq. (5).

The interleaved experiment similarly consists of a character randomized benchmarking experiment using \({\mathrm{G}} = {\mathrm{C}}_1^{ \otimes 2}\) but for every sequence \(\vec G = (G_1, \ldots ,G_m)\) we apply the sequence (G1, C, G2, …, C, Gm) instead, where C is a 2-qubit interleaving gate (from the 2-qubit Clifford group). Note that we must then also invert this sequence (with C) to the identity.34 Similarly choosing \({\hat{\mathrm G}} = {\mathrm{P}}_2\) we can again isolate the parameters fw and from these compute the ‘interleaved fidelity’ Fint. Using the method detailed in ref. 35 we can then calculate upper and lower bounds on the average fidelity Favg(C) of the gate C from the reference fidelity Fref and the interleaved fidelity Fint. Note that it is not trivial that the interleaved experiment yields data that can be described by a single exponential decay, we will discuss this in greater detail in the methods section.

Finally we would like to note that the character benchmarking protocol can be used in many more scenarios than the ones outlined here. Character randomized benchmarking is versatile enough that when we want to perform randomized benchmarking we can consider first what group is formed by the native gates in our device and then use character benchmarking to extract gate fidelities from this group directly, as opposed to carefully compiling the Clifford group out of the native gates which would be required for standard randomized benchmarking. This advantage is especially pronounced when the native two-qubit gates are not part of the Clifford group, which is the case for e.g. the \(\sqrt {{\mathrm{SWAP}}}\) gate.39,40

Methods

In this section will discuss three things: (1) The statistical behavior and scalability of character randomized benchmarking, (2) the robustness of character randomized benchmarking against gate-dependent noise, and (3) the behavior of interleaved character randomized benchmarking, and in particular 2-for-1 interleaved benchmarking.

First we will consider whether the character randomized benchmarking protocol is efficiently scalable with respect to the number of qubits (like standard randomized benchmarking) and whether the character randomized benchmarking protocol remains practical when only a finite amount of data can be gathered (this last point is a sizable line of research for standard randomized benchmarking6,28,30,41).

Scalability of character randomized benchmarking

The resource cost (the number of experimental runs that must be performed to obtain an estimate of the average fidelity) of character randomized benchmarking can be split into two contributions: (1) The number of quality parameters fλ associated that must be estimated (this is essentially set by |RG|, the number of irreducible subrepresentations of the PTM representation of the benchmarking group G), and (2) the cost of estimating a single average \(k_m^{\lambda \prime }\) for a fixed λ′ ∈ RG and sequence length m.

The first contribution implies that for scalable character randomized benchmarking with (a uniform family of) groups Gq (w.r.t. the number of qubits q) the number of quality parameters (set by |RG|) must grow polynomially with q. This means that not all families of benchmarking groups are can be characterized by character randomized benchmarking in a scalable manner.

The second contribution, as can be seen in Fig. 1, further splits up into three components: (2a) the magnitude of \(|\hat \phi |\), (2b) the number of random sequences \(\vec G\) needed to estimate \(k_m^{\lambda \prime }\) (given access to \(k_m^{\lambda \prime }(\vec G)\)) and (2c) the number of samples needed to estimate \(k_m^{\lambda \prime }(\vec G)\) for a fixed sequence. We will now argue that the resource cost of all three components are essentially set by the magnitude of \(|\hat \phi |\). Thus if \(|\hat \phi |\) grows polynomially with the number of qubits then the entire resource cost does so as well. Hence a sufficient condition for scalable character randomized benchmarking is that one chooses a family of benchmarking groups where |RG| grows polynomially in q and character groups such that for the relevant subrepresentations \(|\hat \phi |\) the dimension grows polynomially in q.

We begin by arguing (2c):The character-weighted average over the group \({\hat{\mathrm G}}\) for a single sequence \(\vec G\): \(k_m^{\lambda \prime }(\vec G)\), involves an average over \(|{\hat{\mathrm G}}|\) elements (which will generally scale exponentially in q), but can be efficiently estimated by not estimating each character-weighted expectation value \(k_m^{\lambda \prime }(\vec G,\hat G)\) individually but rather estimate \(k_m^{\lambda \prime }(\vec G)\) directly by the following procedure

-

1.

Sample \(\hat G \in {\hat{\mathrm G}}\) uniformly at random

-

2.

Prepare the state \({\cal{G}}_{{\mathrm{inv}}}{\cal{G}}_m \cdots {\cal{G}}_1\hat {\cal{G}}|\rho \rangle \rangle\) and measure it once obtaining a result \(b(\hat G) \in \{ 0,1\}\)

-

3.

Compute \(x(\hat G): = \chi _{\hat \phi }(\hat G)|\hat \phi |b(\hat G) \in \{ 0,\chi _{\hat \phi }(\hat G)|\hat \phi |\}\)

-

4.

Repeat sufficiently many times and compute the empirical average of \(x(\hat G)\)

Through the above procedure we are directly sampling from a bounded probability distribution with mean \(k_m^{\lambda \prime }(\vec G)\) that takes values in the interval \([ - \chi _{\hat \phi }^ \ast ,\chi _{\hat \phi }^ \ast ]\) where \(\chi _{\hat \phi }^ \ast\) is the largest absolute value of the character function \(\chi _{\hat \phi }\). Since the maximal absolute value of the character function is bounded by the dimension of the associated representation,29 this procedure will be efficient as long as \(|\hat \phi |\) is not too big.

For the examples given in the discussion section (with the character group being the Pauli group) the maximal character value is 1. Using standard statistical techniques42 we can give e.g. a 99% confidence interval of size 0.02 around \(k_m^{\lambda \prime }(\vec G)\) by repeating the above procedure 1769 times, which is within an order of magnitude of current experimental practice for confidence intervals around regular expectation values and moreover independent of the number of qubits q. See section VI in the Supplementary Methods for more details on this.

We now consider (2b): From the considerations above we know that \(k_m^{\lambda \prime }(\vec G)\) is the mean of a set of random variables and thus itself a random variable, taking possible values in the interval \([ - \chi _{\hat \phi }^ \ast ,\chi _{\hat \phi }^ \ast ]\). Hence by the same reasoning as above we see that \(k_m^{\lambda \prime }\), as the mean of a distribution (induced by the uniform distribution of sequences \(\vec G\)) confided to the interval \([ - \chi _{\hat \phi }^ \ast ,\chi _{\hat \phi }^ \ast ]\) can be estimated using an amount of resources polynomially bounded in \(|\hat \phi |\). We would like to note however that this estimate is probably overly pessimistic in light of recent results for standard randomized benchmarking on the Clifford group28,30 where it was shown that the average \(k_m^{\lambda \prime }\) over sequences \(\vec G \in {\mathrm{G}}^{ \times m}\) can be estimated with high precision and high confidence using only a few hundred sequences. These results depend on the representation theoretic structure of the Clifford group but we suspect that it is possible to generalize these results at least partially to other families of benchmarking groups. Moreover any such result can be straightforwardly adapted to also hold for character randomized benchmarking. Actually making such estimates for other families groups is however an open problem, both for standard and character randomized benchmarking.

To summarize, the scalability of character randomized benchmarking depends on the properties of the families of benchmarking and character groups chosen. One should choose the benchmarking groups such that the number of exponential decays does not grow too rapidly with the number of qubits, and one should choose the character group such that the dimension of the representation being projected on does not grow too rapidly with the number of qubits.

Gate-dependent noise

Thus far we have developed the theory of character randomized benchmarking under the assumption of gate-independent noise. This is is not a very realistic assumption. Here we will generalize our framework to include gate-dependent noise. In particular we will deal with the so called ‘non-Markovian’ noise model. This noise model is formally specified by the existence of a function \({\mathrm{\Phi }}:{\mathrm{G}} \to {\cal{S}}_{2^q}\) which assigns to each element G of the group G a quantum channel \({\mathrm{\Phi }}(G) = {\cal{E}}_G\). Note that this model is not the most general, it does not take into account the possibility of time dependent effects or memory effects during the experiment. It is however much more general and realistic than the gate-independent noise model. In this section we will prove two things:

-

1.

A character randomized benchmarking experiment always yields data that can be fitted to a single exponential decay up to a small and exponentially decreasing corrective term.

-

2.

The decay rates yielded by a character randomized benchmarking experiment can be related to the average fidelity (to the identity) of the noise in between gates, averaged over all gates.

Both of these statements, and their proofs, are straightforward generalizations of the work of Wallman14 which dealt with standard randomized benchmarking. We will see that his conclusion, that randomized benchmarking measures the average fidelity of noise in between quantum gates up to a small correction, generalizes to the character benchmarking case. We begin with a technical theorem, which generalizes [14, Theorem 2] to twirls over arbitrary groups (with multiplicity-free PTM representations).

Theorem 1

Let G be a group such that its PTM representation \({\cal{G}} = \oplus _{\lambda \in R_{\mathrm{G}}}\phi _\lambda (G)\) is multiplicity-free. Denote for all λ by fλ the largest eigenvalue of the operator \({\Bbb E}_{G \in {\mathrm{G}}}(\tilde {\cal{G}} \otimes \phi _\lambda (G))\) where \(\tilde {\cal{G}}\) is the CPTP implementation of G ∈ G. There exist Hermicity-preserving linear superoperators \({\cal{L}},{\cal{R}}\) such that

where \({\cal{D}}_{\mathrm{G}}\) is defined as

with \({\cal{P}}_\lambda\) the projector onto the representation ϕλ for all λ ∈ RG.

Proof. Using the definition of \({\cal{G}}\) and \({\cal{D}}_{\mathrm{G}}\) we can rewrite Eq. (11) as

This means that, without loss of generality, we can take \({\cal{L}}\) to be of the form

Similarly we can take \({\cal{R}}\) to be

This means Eqs. (11) and (12) decompose into independent pairs of equations for each λ:

Next we use the vectorization operator \({\mathrm{vec}}:{\mathrm{M}}_{2^{2q}} \to {\Bbb R}^{2^{4q}}\) mapping the PTM representations of superoperators to vectors of length \({\Bbb R}^{2^{4q}}\). This operator has the property that for all \(A,B,C \in {\mathrm{M}}_{2^{2q}}\) we have

where CT is the transpose of C. Applying this to the equations Eqs. (18) and (19) and noting that \({\cal{G}}^\dagger = {\cal{G}}^T\) since \({\cal{G}}\) is a real matrix we get the eigenvalue problems equivalent to Eqs. (18) and (19),

Since we have defined fλ to be the largest eigenvalue of \({\Bbb E}_{G \in {\mathrm{G}}}(\tilde {\cal{G}} \otimes \phi _\lambda (G))\) (and equivalently of \({\Bbb E}_{G \in {\mathrm{G}}}(\tilde {\cal{G}} \otimes \phi _\lambda (G))^T\)) we can choose \({\mathrm{vec}}({\cal{L}})\) and \({\mathrm{vec}}({\cal{R}})\) to be the left and right eigenvectors respectively of \({\Bbb E}_{G \in {\mathrm{G}}}(\tilde {\cal{G}} \otimes \phi _\lambda (G))\) associated to fλ. Inverting the vectorization we obtain solutions to the equations Eqs. (18) and (19) and hence also Eqs. (11) and (12). To see that this solution also satisfies Eq. (13) we note first that \({\Bbb E}_{G \in {\mathrm{G}}}({\cal{G}}{\cal{R}}_\lambda {\cal{L}}_\lambda {\cal{G}}^\dagger )\) is proportional to Pλ for any \({\cal{R}}_\lambda ,{\cal{L}}_\lambda\) satisfying Eqs. (16) and (17) (by Schur’s lemma). Since the eigenvectors of \({\Bbb E}_{G \in {\mathrm{G}}}(\tilde {\cal{G}} \otimes \phi _\lambda (G))\) are only defined up to a constant we can for every λ choose proportionality constants such that \({\Bbb E}_{G \in {\mathrm{G}}}({\cal{G}}{\cal{R}}_\lambda {\cal{L}}_\lambda {\cal{G}}^\dagger ) = f_\lambda P_\lambda\) and thus that Eq. (13) is satisfied.

Next we prove that if we perform a character randomized benchmarking experiment with benchmarking group G, character group \(\hat G\) and subrepresentations \(\hat \phi \subset \phi _{\lambda^{\prime} }\) for some λ′ ∈ RG, the observed data can always be fitted (up to an exponentially small correction) to a single exponential decay. The decay rate of fλ′ associated to this experiment will be the largest eigenvalue of the operator \({\Bbb E}_{G \in {\mathrm{G}}}(\tilde {\cal{G}} \otimes \phi _{\lambda^{\prime} }(G))\) mentioned in the theorem above. Later we will give an operational interpretation of this number. We begin by defining, for all G ∈ G a superoperator ΔG which captures the ‘gate-dependence’ of the noise implementation of \({\cal{G}}\),

where \({\cal{R}},{\cal{L}}\) are defined as in Theorem 1. Using this expansion we have the following theorem, which generalizes [14, Theorem 4] to character randomized benchmarking over arbitrary finite groups with multiplicity-free PTM representation.

Theorem 2

Let G be a group such that its PTM representation \({\cal{G}} = \oplus _{\lambda \in R_{\mathrm{G}}}\phi _\lambda (G)\) is multiplicity-free. Consider the outcome of a character randomized benchmarking experiment with benchmarking group G, character group \(\hat G\), subrepresentations \(\hat \phi \subset \phi _{\lambda \prime }\) for some λ′ ∈ RG, and set of sequence lengths \({\Bbb M}\). That is, consider the real number

for some input state ρ and output POVM {Q, 1 − Q} and \(m \in {\Bbb M}\). This probability can be fitted to an exponential of the form

where A is a fitting parameter, fλ is the largest eigenvalue of the operator \({\Bbb E}_{G \in {\mathrm{G}}}(\tilde {\cal{G}} \otimes \phi _\lambda (G))\) and \(\varepsilon _m \le \delta _1\delta _2^m\) with

where \(\left\| \cdot \right\|_\diamondsuit\) is the diamond norm on superoperators.43

Proof. We begin by expanding \(\widetilde {{\cal{G}}_{1}\widehat {\cal{G}}} = {\cal{L}}{\cal{G}}_{1}\hat {\cal{G}}{\cal{R}} + {\mathrm{\Delta }}_{G_{1}\hat {G}}\). This gives us

We now analyze the first term in Eq. (28). Using the character projection formula, the fact that \({\cal{G}}_1 = ({\cal{G}}_{inv}{\cal{G}}_m \ldots {\cal{G}}_2)^\dagger\) and Eq. (11) from Theorem 1 we get

where we used that \({\cal{D}}_{\mathrm{G}}\) commutes with \({\cal{G}}\) for all G ∈ G and the fact that \({\cal{D}}_{\mathrm{G}}{\cal{P}}_{\hat \phi } = f_{\lambda^{\prime}}{\cal{P}}_{\hat \phi }\). Next we consider the second term in Eq. (28). For this we first need to prove a technical statement. We make the following calculation for all j ≥ 2 and \(\hat G \in {\hat{\mathrm G}}\):

where we used the definition of \({\mathrm{\Delta }}_{G_{j - 1}}\), the fact that \(G_{j - 1} = (G_m \ldots G_{j + 1})^\dagger G_{{\mathrm{inv}}}(G_1 \ldots G_{j - 1})^\dagger\) and Eqs. (12) and (13). We can apply this calculation to the second term of Eq. (28) to get

Hence we can write

with

We can upper bound εm by

Setting

we complete the proof.

In14 it was shown that δ2 is small for realistic gate-dependent noise. This implies that for large enough m the outcome of a character randomized benchmarking experiment can be described by a single exponential decay (up to a small, exponentially decreasing factor). The rate of decay fλ′ can be related to the largest eigenvalue of the operator \({\Bbb E}_{G \in {\mathrm{G}}}(\tilde {\cal{G}} \otimes \phi _{\lambda^{\prime} }(G))\). We can interpret this rate of decay following Wallman14 by setting w.l.o.g. \(\tilde {\cal{G}} = {\cal{L}}_G{\cal{G}}{\cal{R}}\) where \({\cal{R}}\) is defined as in Theorem 1 and is invertible (we can always render \({\cal{R}}\) invertible by an arbitrary small perturbation). Now consider from \(\tilde {\cal{G}} = {\cal{L}}_G{\cal{G}}{\cal{R}}\) and the invertibility of \({\cal{R}}\):

and moreover from Eq. (12):

From this we can consider the average fidelity of noise between gates (the map \(({\cal{R}}{\cal{L}}_G)\) averaged over all gates:

Hence can interpret the quality parameters given by character randomized benchmarking as characterizing the average noise in between gates, extending the conclusion reached in14 for standard randomized benchmarking to character randomized benchmarking. In ref. 16 an alternative interpretation of the decay rate of randomized benchmarking in the presence of gate dependent noise is given in terms of Fourier transforms of matrix valued group functions. One could recast the above analysis for character randomized benchmarking in this language as well but we do not pursue this further here.

Interleaved character randomized benchmarking

In the main text we proposed 2-for-1 interleaved randomized benchmarking, a form of character interleaved randomized benchmarking. More generally we can consider performing interleaved character randomized benchmarking with a benchmarking group G, a character group \({\hat{\mathrm G}}\), and an interleaving gate C. However it is not obvious that the interleaved character randomized benchmarking procedure (for arbitrary G and C) always yields data that can be fitted to a single exponential such that the average fidelity can be extracted. Here we will justify this behavior subject to an assumption on the relation between the interleaving gate C and the benchmarking group G which we expect to be quite general. This relation is phrased in terms of what we call the ‘mixing matrix’ of the group G and gate C. This matrix, which we denote by M, has entries

for \(\lambda ,\lambda \prime \in R_{\mathrm{G}}^\prime = R_{\mathrm{G}}\backslash \{ {\mathrm{id}}\}\) with ϕid the trivial subrepresentation of the PTM representation of G carried by |1〉〉 and where \({\cal{P}}_\lambda\) is the projector onto the subrepresentation ϕλ of \({\cal{G}}\). Note that this matrix is defined completely by C and the PTM representation of G. Note also that this matrix has only non-negative entries, that is \(M_{\lambda ,\hat \lambda } \ge 0\quad \forall \lambda ,\hat \lambda\).

In the following lemma we will assume that the mixing matrix M is not only non-negative but also irreducible in the Perron-Frobenius sense.44 Formally this means that there exists an integer L such that AL has only strictly positive entries. This assumption will allow us to invoke the powerful Perron-Frobenius theorem44 to prove in Theorem 3 that interleaved character randomized benchmarking works as advertised. Below Theorem 3 we will also explicitly verify the irreducibility condition for 2-for-1 interleaved benchmarking with the CPHASE gate. We note that the assumption of irreducibility of M can be easily relaxed to M being a direct sum of irreducible matrices with the proof of Theorem 3 basically unchanged. It is an open question if it can be relaxed further to encompass all non-negative mixing matrices.

Theorem 3

Consider the outcome \(k_{\lambda^{\prime} }^m\) of an interleaved character randomized benchmarking experiment benchmarking group G, character group \(\hat G\), subrepresentations \(\hat \phi \subset \phi _{\lambda^{\prime} }\) for some λ′ ∈ RG, interleaving gate C, and set of sequence lengths \({\Bbb M}\) and assume the existence of quantum channels \({\cal{E}}_C,{\cal{E}}\) s.t. \(\tilde {\cal{C}} = {\cal{C}}{\cal{E}}_C\) and \(\tilde {\cal{G}} = {\cal{E}}{\cal{G}}\) for all G ∈ G. Now consider the matrix \(M({\cal{E}}_C{\cal{E}})\) as a function of the composed channel \({\cal{E}}_C{\cal{E}}\) with entries

for \(\lambda ,\lambda^{\prime} \in R_{\mathrm{G}}^{\prime} = R_{\mathrm{G}}\backslash \{ {\mathrm{id}}\}\) where \({\cal{P}}_\lambda\) is again the projector onto the subrepresentation ϕλ of \({\cal{G}}\). If for \({\cal{E}} = {\cal{E}}_C = {\cal{I}}\) (the identity map) the matrix \(M({\cal{I}}) = M\) (the mixing matrix defined above) is irreducible (in the sense of Perron-Frobenius), then there exist parameters A, fλ′ s.t.

with \(\delta _1 = O(1 - F_{{\mathrm{avg}}}({\cal{E}}_C{\cal{E}}))\) and \(\delta _2 = \gamma + O([1 - F_{{\mathrm{avg}}}({\cal{E}}_C{\cal{E}})]^2)\) where γ is the second largest eigenvalue (in absolute value) of M. Moreover we have that (noting that fid = 1 as the map \({\cal{E}}_C{\cal{E}}\) is CPTP):

Proof. Consider the definition of \(k_{\lambda \prime }^m\):

where \(G_{{\mathrm{inv}}} = G_1^\dagger C^\dagger \cdots G_m^\dagger C^\dagger\) and \({\cal{E}}_{{\mathrm{inv}}}\) is the noise associated to the inverse gate (which we assume to be constant). Using the character projection formula and Schur’s lemma we can write this as

Note now that in general \({\cal{C}}\) and \({\cal{P}}_{\lambda _m}\) do not commute. This means that we can not repeat the reasoning of Lemma 3 but must instead write (using Schur’s lemma again):

Here we recognize the definition of the matrix element \(M_{\lambda _{m - 1},\lambda _m}({\cal{E}}_C{\cal{E}})\). Moreover we can apply the above expansion to Gm−2,Gm−3 and so forth writing the result in terms of powers of the matrix \(M({\cal{E}}_C{\cal{E}})\). After some reordering we get

where we have again absorbed the noise associated with the inverse Ginv into the measurement POVM element Q. Now recognizing that by construction \({\cal{P}}_{\hat \phi } \subset {\cal{P}}_{\lambda \prime }\) we can write \(k_m^{\lambda \prime }\) as

where eλ′ is the λ′th standard basis row vector of length \(R_{\mathrm{G}}^\prime\) and \(v = v({\cal{E}}_C{\cal{E}})\) is a row vector of length \(R_{_{\mathrm{G}}}^\prime\) with entries \([v]_\lambda = \frac{{{\mathrm{Tr}}(P_{\lambda _m}{\cal{E}}_C{\cal{E}})}}{{{\mathrm{Tr}}({\cal{P}}_{\lambda _m})}}\). This looks somewhat like an exponential decay but not quite. Ideally we would like that Mm has one dominant eigenvalue and moreover that the vector v has high overlap with the corresponding eigenvector. This would guarantee that \(k_m^{\lambda \prime }\) is close to a single exponential. The rest of the proof will argue that this is indeed the case. Now we use the assumption of the irreducibility of the mixing matrix \(M = M({\cal{I}})\). Subject to this assumption, the Perron-Frobenius theorem44 states that the matrix M has a non-degenerate eigenvalue \(\gamma _{{\mathrm{max}}}(M({\cal{I}}))\) that is strictly larger in absolute value than all other eigenvalues of \(M({\cal{I}})\) and moreover satisfies the inequality

It is easy to see from the definition of \(M_{\lambda ,\hat \lambda }\) that

for all \(\lambda \in R_{\mathrm{G}}^\prime\). This means the largest eigenvalue of \(M({\cal{I}})\) is exactly 1. Moreover, as one can easily deduce by direct calculation, the associated right-eigenvector is the vector vR = (1, 1, …, 1). Note that this vector is precisely \(v({\cal{E}}_C{\cal{E}})\) (as defined in Eq. (62)) for \({\cal{E}}_C{\cal{E}} = {\cal{I}}\). Similarly the left-eigenvector of \(M = M({\cal{I}})\) is given by (in terms of its components) \(v_\lambda ^L = {\mathrm{Tr}}({\cal{P}}_\lambda )\). This allows us to calculate that \(k_m^{\lambda \prime } = \langle \langle Q|{\cal{P}}_{\hat \phi }|\rho \rangle \rangle\) if \({\cal{E}}_C{\cal{E}} = {\cal{I}}\), which is as expected.

Now we will consider the map \({\cal{E}}_C{\cal{E}}\) as a perturbation of \({\cal{I}}\) with the perturbation parameter

with \({\cal{P}}_{{\mathrm{tot}}} = \mathop {\sum}\nolimits_{\lambda \in R_{\mathrm{G}}^\prime } {{\cal{P}}_\lambda }\). We can write the quantum channel \({\cal{E}}_C{\cal{E}}\) as \({\cal{E}}_C{\cal{E}} = {\cal{I}} - \alpha {\cal{F}}\) where \({\cal{F}}\) is some superoperator (not CP, but by construction trace-annihilating). Since \(M({\cal{E}}_C{\cal{E}})\) is linear in its argument we can write \(M({\cal{E}}_C{\cal{E}}) = M({\cal{I}}) - \alpha M({\cal{F}})\). From standard matrix perturbation theory [ref. 45, Section 5.1] we can approximately calculate the largest eigenvalue of \(M({\cal{E}}_C{\cal{E}})\) as

We can now calculate the prefactor \(\frac{{v^LM({\cal{F}})v^{R^T}}}{{v^Lv^{R^T}}}\) as

where we used the definition of α in the last line. This means that \(\gamma _{{\mathrm{max}}}(M({\cal{E}}_C{\cal{E}})) = 1 - \alpha\) up to O(α2)corrections. One could in principle calculate the prefactor of the correction term, but we will not pursue this here. Now we know that the matrix \(M({\cal{E}}_C{\cal{E}})^{m - 1}\) in Eq. (62) will be dominated by a factor (1 − α + O(α2))m−1. However it could still be that the vector \(v({\cal{E}}_C{\cal{E}})\) in Eq. (62) has small overlap with the right-eigenvector \(v^R({\cal{E}}_C{\cal{E}})\) of \(M({\cal{E}}_C{\cal{E}})\) associated to the largest eigenvalue \(\gamma _{{\mathrm{max}}}(M({\cal{E}}_C{\cal{E}}))\). We can again use a perturbation argument to see that this overlap will be big. Again from standard perturbation theory [ref. 45, Section 5.1] we have

Moreover, by definition of \(v^R({\cal{I}})\) and \(v({\cal{E}}_C{\cal{E}})\) we have that \(v^Rv({\cal{E}}_C{\cal{E}})^T = 1 - \alpha\). By the triangle inequality we thus have

One can again fill in the constant factors here if one desires a more precise statement. Finally we note from Lemma 4 that

This means that in the relevant limit of high fidelity, α will be small, justifying our perturbative analysis. Defining γ to be the second largest (in absolute value) eigenvalue of \(M({\cal{E}}_C{\cal{E}})\), which by the same argument as above will be the second largest eigenvalue of \(M({\cal{I}})\) up to O(α2) corrections, we get

with \(\delta _1 = O(1 - F_{{\mathrm{avg}}}({\cal{E}}_C{\cal{E}}))\) and \(\delta _2 = |\gamma | + O((1 - F_{{\mathrm{avg}}}({\cal{E}}_C{\cal{E}}))^2)\). Moreover, we have from Eqs. (68) and (76) that

which immediately implies

proving the lemma.

It is instructive to calculate the mixing matrix for a relevant example. We will calculate M for C the CPHASE gate and \({\mathrm{G}} = {\mathrm{C}}_1^{ \otimes 2}\) two copies of the single qubit Clifford gates. Recall from the main text that the PTM representation of \({\mathrm{C}}_1^{ \otimes 2}\) has three non-trivial subrepresentations. From their definitions in Eq. (10) and the action of the CPHASE gate on the two qubit Pauli operators it is straightforward to see that the mixing matrix is of the form

Calculating M2 one can see that M is indeed irreducible. Moreover M has eigenvalues 1, 1/3 and −1/9. This means that for 2-for-1 interleaved benchmarking the interleaved experiment produces data that deviates from a single exponential no more than (1/3)m (for sufficiently high fidelity) which will be negligible for even for fairly small m. This means that for 2-for-1 interleaved benchmarking the assumption that the interleaved experiment produces data described by a single exponential is good. We will see this confirmed numerically in the simulated experiment presented in Supplementary Fig. 2. Finally, we note that a similar result was achieved using different methods in ref. 46,47

Data availability

The data and analysis used to generate Supplementary Fig. 2 will be available online at https://doi.org/10.5281/zenodo.2549368. No other supporting data was generated or analyzed for this work.

References

Dankert, C., Cleve, R., Emerson, J. & Livine, E. R. Exact and approximate unitary 2-designs: Constructions and applications. Phys. Rev. A 80, 012304 (2006).

Magesan, E., Gambetta, J. M. & Emerson, J. Characterizing quantum gates via randomized benchmarking. Phys. Rev. A 85, 042311 (2012).

Emerson, J., Alicki, R. & Życzkowski, K. Scalable noise estimation with random unitary operators. J. Opt. B 7, S347 (2005).

Chow, J. M. et al. Randomized benchmarking and process tomography for gate errors in a solid-state qubit. Phys. Rev. Lett. 102, 090502 (2009).

Gaebler, J. P. et al. Randomized benchmarking of multiqubit gates. Phys. Rev. Lett. 108, 260503 (2012).

Granade, C., Ferrie, C. & Cory, D. G. Accelerated randomized benchmarking. New J. Phys. 17, 013042 (2014).

Epstein, J. M., Cross, A. W., Magesan, E. & Gambetta, J. M. Investigating the limits of randomized benchmarking protocols. Phys. Rev. A 89, 062321 (2014).

Knill, E. et al. Randomized benchmarking of quantum gates. Phys. Rev. A 77, 012307 (2008).

Asaad, S. et al. Independent, extensible control of same-frequency superconducting qubits by selective broadcasting. npj Quantum Inf. 2, 16029 (2016).

Barends, R. et al. Superconducting quantum circuits at the surface code threshold for fault tolerance. Nature 508, 500–503 (2014).

DiCarlo, L. et al. Demonstration of two-qubit algorithms with a superconducting quantum processor. Nature 460, 240 (2009).

O’Malley, P. et al. Qubit metrology of ultralow phase noise using randomized benchmarking. Phys. Rev. Applied 3, 044009 (2015).

Sheldon, S. et al. Characterizing errors on qubit operations via iterative randomized benchmarking. Phys. Rev. A 93, 012301 (2016).

Wallman, J. J. Randomized benchmarking with gate-dependent noise. Quantum 2, 47 (2018).

Proctor, T., Rudinger, K., Young, K., Sarovar, M. & Blume-Kohout, R. What randomized benchmarking actually measures. Phys. Rev. Lett. 119, 130502 (2017).

Merkel, S. T., Pritchett, E. J. & Fong, B. H. Randomized benchmarking as convolution: Fourier analysis of gate dependent errors. arXiv preprint arXiv:1804.05951 (2018).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information: 10th Anniversary Edition. 10th edn (Cambridge University Press, New York, NY, USA, 2011).

Cross, A. W., Magesan, E., Bishop, L. S., Smolin, J. A. & Gambetta, J. M. Scalable randomised benchmarking of non-clifford gates. npj Quantum Information 2, 16012 (2016).

Brown, W. G. & Eastin, B. Randomized benchmarking with restricted gate sets. arXiv preprint arXiv:1801.04042 (2018).

Hashagen, A., Flammia, S., Gross, D. & Wallman, J. Real randomized benchmarking. arXiv preprint arXiv:1801.06121 (2018).

França, D. S. & Hashagen, A.-L. Approximate randomized benchmarking for finite groups. arXiv preprint arXiv:1803.03621 (2018).

Dugas, A. C., Wallman, J. J. & Emerson, J. Characterizing Universal Gate Sets via Dihedral Benchmarking. arXiv preprint arXiv:1508.06312.

Gambetta, J. M. et al. Characterization of addressability by simultaneous randomized benchmarking. Phys. Rev. Lett. 109, 240504 (2012).

Harper, R. & Flammia, S. T. Estimating the fidelity of t gates using standard interleaved randomized benchmarking. Quantum Sci.Technol. 2, 015008 (2017).

Flammia, S. T. & Liu, Y.-K. Direct fidelity estimation from few Pauli measurements. Phys. Rev. Lett. 106, 230501 (2011).

Harper, R. & Flammia, S. T. Fault-tolerant logical gates in the ibm quantum experience. Phys. Rev. Lett. 122, 080504 (2019).

Muhonen, J. T. et al. Quantifying the quantum gate fidelity of single-atom spin qubits in silicon by randomized benchmarking. J. Phys. Condens. Matter 27, 154205 (2015).

Helsen, J., Wallman, J. J., Flammia, S. T. & Wehner, S. Multi-qubit randomized benchmarking using few samples. arXiv preprint arXiv:1701.04299 (2017).

Fulton, W. & Harris, J. Representation Theory: A First Course. Readings in Mathematics (Springer-Verlag, New York, 2004).

Wallman, J. J. & Flammia, S. T. Randomized benchmarking with confidence. New J. Phys. 16, 103032 (2014).

Wolf, M. Quantum channels operations: Guided tour. Lecture Notes (2012). http://www-m5.ma.tum.de/foswiki/pub/M5/Allgemeines///MichaelWolf/QChannelLecture.pdf.

Chuang, I. L. & Nielsen, M. A. Prescription for experimental determination of the dynamics of a quantum black box. J. Mod. Opt. 44, 2455 (1997).

Xue, X. et al. Benchmarking gate fidelities in a si/sige two-qubit device. arXiv preprint arXiv:1811.04002 (2018).

Magesan, E. et al. Efficient measurement of quantum gate error by interleaved randomized benchmarking. Phys. Rev. Lett. 109, 080505 (2012).

Dugas, A. C., Wallman, J. J. & Emerson, J. Efficiently characterizing the total error in quantum circuits. arXiv preprint arXiv:1610.05296 (2016).

Watson, T. et al. A programmable two-qubit quantum processor in silicon. Nature 555, 633 (2018).

Zajac, D. M. et al. Resonantly driven cnot gate for electron spins. Science 359, 439–442 (2018).

Huang, W. et al. Fidelity benchmarks for two-qubit gates in silicon. arXiv preprint arXiv:1805.05027 (2018).

Kalra, R., Laucht, A., Hill, C. D. & Morello, A. Robust two-qubit gates for donors in silicon controlled by hyperfine interactions. Physical Review X 4, 021044 (2014).

Li, R. et al. A crossbar network for silicon quantum dot qubits. Science advances 4, eaar3960 (2018).

Hincks, I., Wallman, J. J., Ferrie, C., Granade, C. & Cory, D. G. Bayesian inference for randomized benchmarking protocols. arXiv preprint arXiv:1802.00401 (2018).

Hoeffding, W. Probability inequalities for sums of bounded random variables. Journ. Am. Stat. Assoc. 58, 13–30 (1963).

Watrous, J. Notes on super-operator norms induced by schatten norms. arXiv preprint arXiv:0411077 (2004).

MacCluer, C. R. The many proofs and applications of Perron’s theorem. Siam Review 42, 487–498 (2000).

Sakurai, J. J. et al. Modern quantum mechanics, vol. 261 (Pearson, 2014).

Erhard, A. et al. Characterizing large-scale quantum computers via cycle benchmarking. arXiv preprint arXiv:1902.08543 (2019).

Wallman, J. J. & Emerson, J. Determining the capacity of any quantum computer to perform a quantum computation. In Preparation (2018).

Acknowledgements

The authors would like to thank Thomas F. Watson, Jérémy Ribeiro and Bas Dirkse for enlightening discussions. While preparing a new version of this manuscript the authors became aware of similar, independent work by Wallman & Emerson. J.H. and S.W. are funded by STW Netherlands, NWO VIDI, an ERC Starting Grant and by the NWO Zwaartekracht QSC grant. X.X. and L.M.K.V. are funded by the Army Research Office (ARO) under Grant Number W911NF-17-1-0274.

Author information

Authors and Affiliations

Contributions

J.H., X.X., L.M.K.V. and S.W. conceived of the theoretical framework, detailed analysis was done by J.H. with input from X.X., L.M.K.V., and S.W., J.H. wrote the manuscript with input from X.X., L.M.K.V., and S.W., SW supervised the project.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Helsen, J., Xue, X., Vandersypen, L.M.K. et al. A new class of efficient randomized benchmarking protocols. npj Quantum Inf 5, 71 (2019). https://doi.org/10.1038/s41534-019-0182-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41534-019-0182-7

This article is cited by

-

Probabilistic error cancellation with sparse Pauli–Lindblad models on noisy quantum processors

Nature Physics (2023)

-

Near-term quantum computing techniques: Variational quantum algorithms, error mitigation, circuit compilation, benchmarking and classical simulation

Science China Physics, Mechanics & Astronomy (2023)

-

Error rate reduction of single-qubit gates via noise-aware decomposition into native gates

Scientific Reports (2022)

-

Partial randomized benchmarking

Scientific Reports (2022)

-

Direct state measurements under state-preparation-and-measurement errors

Quantum Information Processing (2021)