Abstract

We present a density-matrix simulation of the quantum memory and computing performance of the distance-3 logical qubit Surface-17, following a recently proposed quantum circuit and using experimental error parameters for transmon qubits in a planar circuit QED architecture. We use this simulation to optimize components of the QEC scheme (e.g., trading off stabilizer measurement infidelity for reduced cycle time) and to investigate the benefits of feedback harnessing the fundamental asymmetry of relaxation-dominated error in the constituent transmons. A lower-order approximate calculation extends these predictions to the distance-5 Surface-49. These results clearly indicate error rates below the fault-tolerance threshold of the surface code, and the potential for Surface-17 to perform beyond the break-even point of quantum memory. However, Surface-49 is required to surpass the break-even point of computation at state-of-the-art qubit relaxation times and readout speeds.


Introduction

Recent experimental demonstrations of small quantum simulations1,2,3 and quantum error correction (QEC)4,5,6,7 position superconducting circuits for targeting quantum supremacy8 and quantum fault tolerance,9 two outstanding challenges for all quantum information processing platforms. On the theoretical side, much modeling of QEC codes has been done to determine fault-tolerance threshold rates in various models10,11,12 with different error decoders.13,14,15 However, the need for computational efficiency has constrained many previous studies to oversimplified noise models, such as depolarizing and bit-flip noise channels. This discrepancy between theoretical descriptions and experimental reality compromises the ability to predict the performance of near-term QEC implementations, and offers limited guidance to the experimentalist through the maze of parameter choices and trade-offs. In the planar circuit quantum electrodynamics (cQED)16 architecture, the major contributions to error are transmon qubit relaxation, dephasing from flux noise, resonator photons left over from measurement, and leakage from the computational space, none of which are well-approximated by depolarizing or bit-flip channels. Simulations with more complex error models are now essential to accurately pinpoint the leading contributions to the logical error rate in the small-distance surface codes10, 13, 17 currently pursued by several groups worldwide.

In this paper, we perform a density-matrix simulation of the distance-3 surface code named Surface-17, using the concrete quantum circuit recently proposed in ref. 18 and the measured performance of current experimental multi-transmon cQED platforms.19,20,21,22 For this purpose, we have developed an open-source density-matrix simulation package named quantumsim (https://github.com/brianzi/quantumsim). We use quantumsim to extract the logical error rate per QEC cycle, \({\epsilon _{\rm{L}}}\). This metric allows us to optimize and trade off between QEC cycle parameters, assess the merits of feedback control, predict gains from future improvements in physical qubit performance, and quantify decoder performance. We compare an algorithmic decoder using minimum-weight perfect matching (MWPM) with homemade weight calculation to a simple look-up table (LT) decoder, and weigh both against an upper bound (UB) for decoder performance obtainable from the density-matrix simulation. Finally, we make a low-order approximation to extend our predictions to the distance-5 Surface-49. The combination of results for Surface-17 and Surface-49 allows us to make statements about code scaling and to predict the code size and physical qubit performance required to achieve break-even points for memory and computational performance.

Results

Error rates for Surface-17 under current experimental conditions

To quantify the performance of the logical qubit, we first define a test experiment to simulate. Inspired by the recent experimental demonstration of distance-3 and distance-5 repetition codes,4 we first focus on the performance of the logical qubit as a quantum memory. Specifically, we quantify the ability to hold a logical \(\left| 0 \right\rangle \) state, by initializing this state, holding it for k ∈ {1, …, 20} cycles, performing error correction, and determining a final logical state (see Fig. 6 for details). The logical fidelity \({{\cal F}_{\rm{L}}}\)[k] is then given by the probability to match the initial state. We observe identical results when using \(\left| 1 \right\rangle \) or \(\left| \pm \right\rangle = \frac{1}{{\sqrt 2 }}\left( {\left| 0 \right\rangle \pm \left| 1 \right\rangle } \right)\) in place of \(\left| 0 \right\rangle \).

We base our error model for the physical qubits on current typical experimental performance for transmons in planar cQED, using parameters from the literature and in-house results (e.g., gate-set tomography measurements). These are summarized in Table 1, and further detailed in Supplemental Material. We focus on the QEC cycle proposed in ref. 18 which pipelines the execution of X-type and Z-type stabilizer measurements. Each stabilizer measurement consists of three parts: a coherent step (duration τ c = 2τ g,1Q + 4τ g,2Q), measurement (τ m), and photon depletion from readout resonators (τ d), making the QEC cycle time τ cycle = τ c + τ m + τ d.
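The timing budget above can be sketched in a few lines. The single-qubit and two-qubit gate durations used here (20 ns and 40 ns) are placeholder assumptions, chosen only so that the coherent step comes out at 200 ns, the value implied by the cycle times quoted later in the text (800 ns with 300 ns measurement and depletion, 400 ns with 100 ns each). The function names are hypothetical.

```python
def coherent_step_ns(tau_g1q_ns, tau_g2q_ns):
    """Coherent step: two single-qubit gates plus four two-qubit gates."""
    return 2 * tau_g1q_ns + 4 * tau_g2q_ns


def cycle_time_ns(tau_c_ns, tau_m_ns, tau_d_ns):
    """QEC cycle time: coherent step + measurement + photon depletion."""
    return tau_c_ns + tau_m_ns + tau_d_ns


# Placeholder gate durations (assumptions, not taken from the text).
TAU_G1Q_NS = 20
TAU_G2Q_NS = 40

tau_c = coherent_step_ns(TAU_G1Q_NS, TAU_G2Q_NS)
for tau_m in (300, 260, 200, 100):
    # The text keeps tau_m equal to tau_d when exploring the trade-off.
    print(tau_m, cycle_time_ns(tau_c, tau_m, tau_m))
```

With these placeholders, measurement times of 300, 260, 200 and 100 ns correspond to cycle times of 800, 720, 600 and 400 ns.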

Simulating this concrete quantum circuit with the listed parameters using quantumsim, we predict \({{\cal F}_{\rm{L}}}\)[k] of Surface-17 (Fig. 1). We show \({{\cal F}_{\rm{L}}}\)[k] for both a homemade MWPM decoder (green, described in Supplemental Material), and an implementation of the LT decoder of ref. 13 (blue, described in Supplemental Material). To isolate decoder performance, we can compare the achieved fidelity to a UB extractable from the density-matrix simulation (red, described in Methods). To assess the benefit of QEC, we also compare to a single decohering transmon, whose fidelity is calculated by averaging over the six cardinal points of the Bloch sphere:
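Assuming each transmon undergoes amplitude damping at rate 1/T 1 and pure dephasing at rate 1/T ϕ (a standard model, adopted here as an assumption because the displayed expression is not present in this text), the six-point average takes the form below, which is presumably what is referred to as Eq. (1). The two poles of the Bloch sphere contribute the first term (the ground state is unaffected, the excited state decays with T 1), and the four equatorial states contribute the second:

\[{{\cal F}_{{\rm{phys}}}}(t) = \frac{1}{6}\left( {1 + {e^{ - t/{T_1}}}} \right) + \frac{1}{3}\left( {1 + {e^{ - t\left( {1/2{T_1} + 1/{T_\phi }} \right)}}} \right)\]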

Logical fidelity \({{\cal F}_{\rm{L}}}\)[k] of Surface-17 with current experimental parameters (Table 1 and Supplemental Material), simulated with quantumsim as described in Fig. 6. The results from a MWPM decoder (green) and an implementation of the LT decoder of ref. 13 (blue) are compared to the decoder upper bound (red). The labeled error rate is obtained from the best fit to Eq. (2) (also plotted). A further comparison is given to majority voting (purple, dashed), which ignores the outcome of individual stabilizer measurements, and to the fidelity \({{\cal F}_{{\rm{phys}}}}\) of a single transmon (black) [Eq. (1)]. Error bars (2 s.d.) are obtained by bootstrapping

The observation of \({{\cal F}_{\rm{L}}}\)[k] > \({{\cal F}_{{\rm{phys}}}}\)(kτ cycle) for large k would constitute a demonstration of QEC beyond the quantum memory break-even point.7 Equivalently, one can extract a logical error rate \({\epsilon _{\rm{L}}}\) from a best fit to \({{\cal F}_{\rm{L}}}\)[k] (as derived in Methods as the probability of an odd number of errors occurring),
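The fit function itself is not displayed in this text. A form consistent with the Methods derivation (probability of an odd number of logical errors in k rounds, shifted in k by a free offset) would be the following, presumably Eq. (2):

\[{{\cal F}_{\rm{L}}}[k] = \frac{1}{2}\left[ {1 + {{\left( {1 - 2{\epsilon _{\rm{L}}}} \right)}^{k - {k_0}}}} \right]\]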

Here, k 0 and \({\epsilon _{\rm{L}}}\) are the parameters to be fit. We compare \({\epsilon _{\rm{L}}}\) to the physical error rate
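The expression for the physical error rate is likewise not displayed. Taking the short-time slope of the six-point-averaged single-transmon fidelity under amplitude damping and pure dephasing (an assumption about the omitted formula, presumably Eq. (3)), the error accumulated over one cycle is

\[{\epsilon _{{\rm{phys}}}} = \frac{{{\tau _{{\rm{cycle}}}}}}{{3{T_1}}} + \frac{{{\tau _{{\rm{cycle}}}}}}{{3{T_\phi }}}\]

For T 1 = 30 μs, T ϕ = 2T 1 and τ cycle = 800 ns, this evaluates to 1.33%, which agrees with the value quoted in the text.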

We observe \({\epsilon _{\rm{L}}}\) = 1.44%c for the LT decoder, \({\epsilon _{\rm{L}}}\) = 1.07%c for the MWPM decoder, and \({\epsilon _{\rm{L}}}\) = 0.68%c at the decoder UB (%c = % per cycle). The latter two fall below \({\epsilon _{{\rm{phys}}}}\) = 1.33%c. Defining the decoder efficiency \({\eta _{\rm{d}}} = \epsilon _{\rm{L}}^{\left( {{\rm{UB}}} \right)}{\rm{/}}{\epsilon _{\rm{L}}}\), we find \(\eta _{\rm{d}}^{\left( {{\rm{LT}}} \right)}\) = 0.47, and \(\eta _{\rm{d}}^{\left( {{\rm{MWPM}}} \right)}\) = 0.64.
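The quoted efficiencies follow directly from the three error rates, as the short sketch below shows. The physical error rate helper assumes the form τ cycle/(3T 1) + τ cycle/(3T ϕ) with T 1 = 30 μs, T ϕ = 60 μs and τ cycle = 800 ns; both the formula and these inputs are inferred from other passages of the text rather than read from Table 1. Function names are hypothetical.

```python
def eps_phys_percent(t1_us, tphi_us, tau_cycle_us):
    """Assumed physical error per cycle, in percent."""
    return 100.0 * (tau_cycle_us / (3 * t1_us) + tau_cycle_us / (3 * tphi_us))


def decoder_efficiency(eps_ub, eps_decoder):
    """eta_d = eps_L(UB) / eps_L(decoder)."""
    return eps_ub / eps_decoder


# Logical error rates quoted in the text, in percent per cycle.
EPS_L = {"LT": 1.44, "MWPM": 1.07, "UB": 0.68}

eps_phys = eps_phys_percent(30.0, 60.0, 0.8)
for name in ("LT", "MWPM"):
    eta = decoder_efficiency(EPS_L["UB"], EPS_L[name])
    below = EPS_L[name] < eps_phys
    print(f"{name}: eta_d = {eta:.2f}, below eps_phys: {below}")
```

Under these assumptions the LT decoder sits above the physical error rate while the MWPM decoder and the UB sit below it, as stated.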

We can also compare the multi-cycle error correction to majority voting, in which the state declaration is based solely on the output of the final data qubit measurements (ancilla measurements are ignored). Majority voting corrects any single data qubit error (over the entire experiment), and thus exhibits a quadratic decay for small k (a distance-d code with majority voting alone should exhibit a (d + 1)/2-order decay). A decoder should also be able to correct (at least) a single error, and thus should produce the same behavior at low k, delaying the onset of exponential decay in \({{\cal F}_{\rm{L}}}\)[k]. In fact, a good test for the performance of a MWPM decoder is to ensure it can outperform the majority vote at short timescales, as a suboptimal configuration will prevent this (as seen for the LT decoder).

With the baseline for current performance established, we next investigate \({\epsilon _{\rm{L}}}\) improvements that may be achieved by two means. First, we consider modifications to the QEC cycle at fixed physical performance. Afterwards, we consider the effect of improving physical qubit T 1 and T ϕ .

Optimization of logical error rates with current experimental conditions

Error sources in current cQED setups derive primarily from transmon decoherence, as opposed to gate and measurement errors produced by control electronics. Thus, a path to reducing \({\epsilon _{\rm{L}}}\) may be to decrease τ cycle. Currently, the cycle is dominated by τ m + τ d. At fixed readout power, reducing τ m and τ d will reduce τ cycle at the cost of increased readout infidelity \({\epsilon _{{\rm{RO}}}}\) (described in Methods). We explore this trade-off in Fig. 2, using a linear-dispersive readout model,23 keeping τ m = τ d and assuming no leftover photons. Because of the latter, \(\epsilon _{\rm{L}}^{\left( {{\rm{MWPM}}} \right)}\) reduces from 1.07%c (Fig. 1) to 0.62%c at τ m = 300 ns. The minimum \(\epsilon _{\rm{L}}^{\left( {{\rm{MWPM}}} \right)}\) = 0.55%c is achieved at around τ m = 260 ns. This is perhaps counterintuitive, as \({\epsilon _{{\rm{phys}}}}\) reduces only 0.13%c while \({\epsilon _{{\rm{RO}}}}\) increases 0.5%. However, it reflects the different sensitivity of the code to different types of errors. Indeed, \(\epsilon _{\rm{L}}^{\left( {{\rm{MWPM}}} \right)}\) is smaller for τ m = 200 ns than for τ m = 300 ns, even though \({\epsilon _{{\rm{RO}}}}\) increases to 5%. It is interesting to note that the optimal τ m for quantum memory, which minimizes logical error per unit time, rather than per cycle, is τ m = 280 ns (Fig. 2 inset). This shows that different cycle parameters might be optimal for computation and memory applications.

Optimization of the logical error rate (per cycle) of Surface-17 as a function of measurement-and-depletion time.19 Changes in the underlying physical error rates are shown as well. Decreasing the measurement time increases the readout infidelity (solid black curve with dots) while decreasing the single-qubit decay from T 1 and T 2 (black dashed curve) for all qubits. The logical error rate with an MWPM decoder (green curve) is minimized when these error rates are appropriately balanced. The logical error rate is calculated from the best fit of Eq. (2). Error bars (2 s.d.) are obtained by bootstrapping (N = 10,000 runs). Inset: Logical error rate per unit time, instead of per cycle

Next, we consider the possibility to reduce \({\epsilon _{\rm{L}}}\) using feedback control. Since T 1 only affects qubits in the excited state, the error rate of ancillas in Surface-17 is roughly two times higher when in the excited state. The unmodified syndrome extraction circuit flips the ancilla if the corresponding stabilizer value is −1, and since ancillas are not reset between cycles, they will spend significant amounts of time in the excited state. Thus, we consider using feedback to hold each ancilla in the ground state as much as possible. We do not consider feedback on data qubits, as the highly entangled logical states are equally susceptible to T 1.

The feedback scheme (Inset of Fig. 3) consists of replacing the R y (π/2) gate at the end of the coherent step with a R y (−π/2) gate for some of the ancillas, depending on a classical control bit p for each ancilla. This bit p represents an estimate of the stabilizer value, and the ancilla is held in the ground state whenever this estimate is correct (i.e., in the absence of errors). Figure 3 shows the effect of this feedback on the logical fidelity, both for the MWPM decoder and the decoder UB. We observe \({\epsilon _{\rm{L}}}\) improve by only 0.05%c in both cases. Future experiments might opt not to pursue these small gains in view of the technical challenges added by feedback control.

Logical fidelity of Surface-17 with (solid) and without (dashed) an additional feedback scheme. The performance of a MWPM decoder (green) is compared to the decoder upper bound (red). Curves are fits of Eq. (2) to the data, and error bars (2 s.d.) are given by bootstrapping, with each point averaged over 10,000 runs. Inset: Method for implementing the feedback scheme. For each ancilla qubit A j , we store a parity bit p j , which decides the sign of the R y (π/2) rotation at the end of each coherent step. The time A j spends in the ground state is maximized when p j is updated each cycle t by XORing with the measurement result from cycle t − 1, after the rotation of cycle t has been performed
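The bookkeeping of the parity bit can be illustrated with a toy classical model. It assumes, as a simplification not taken from the source, that in the absence of errors the ancilla outcome of cycle t equals the previous outcome XOR the stabilizer bit XOR p (flipping the sign of the final rotation flips the outcome). The update of p uses the measurement result from cycle t − 1 and is applied after the rotation of cycle t, as described in the caption. The function name `run_ancilla` is hypothetical.

```python
def run_ancilla(stabilizer_bits, feedback):
    """Return the error-free ancilla measurement record, one bit per cycle.

    stabilizer_bits: ideal stabilizer value per cycle (1 means eigenvalue -1).
    feedback: if True, apply the parity-bit rule; if False, p stays 0.
    """
    record = []
    previous = 0  # measurement result of cycle t - 1 (ancilla starts in |0>)
    p = 0         # classical control bit selecting the rotation sign
    for s in stabilizer_bits:
        # Toy assumption: outcome = previous outcome XOR stabilizer XOR p.
        outcome = previous ^ s ^ (p if feedback else 0)
        record.append(outcome)
        if feedback:
            # Update p after this cycle's rotation, using the result of t - 1.
            p ^= previous
        previous = outcome
    return record


cycles = [1] * 10  # stabilizer value -1 in every cycle
print(run_ancilla(cycles, feedback=False))
print(run_ancilla(cycles, feedback=True))
```

In this toy model the unmodified circuit leaves the ancilla excited in every other cycle when the stabilizer value is −1, whereas with the parity bit it is excited only in the first cycle, before the estimate has converged.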

Projected improvement with advances in quantum hardware

We now estimate the performance increase that may result from improving the transmon relaxation and dephasing times via materials and filtering improvements. To model this, we return to τ cycle = 800 ns, and adjust T 1 values with both T ϕ = 2T 1 (common in experiment) and T ϕ = ∞ (all white-noise dephasing eliminated). We retain the same rates for coherent errors, readout infidelity, and photon-induced dephasing as in Fig. 1. Figure 4 shows the extracted \({\epsilon _{\rm{L}}}\) and \({\epsilon _{{\rm{phys}}}}\) over the T 1 range covered. For the MWPM decoder (UB) and T ϕ = 2T 1, the memory figure of merit γ m = \({\epsilon _{{\rm{phys}}}}\)/\({\epsilon _{\rm{L}}}\) increases from 1.3 (2) at T 1 = 30 μs to 2 (5) at 100 μs. Completely eliminating white-noise dephasing will increase γ m by 10% with MWPM and 30% at the UB.

T 1 dependence of the Surface-17 logical error rate (MWPM and UB) and the physical error rate. We either fix T ϕ = 2T 1 (solid) or T ϕ = ∞ (dashed). Logical error rates are extracted from a best fit of Eq. (2) to \({{\cal F}_{\rm{L}}}\)[k] over k = 1, …, 20 QEC cycles, averaged over N = 50,000 runs. Error bars (2 s.d.) are calculated by bootstrapping

A key question for any QEC code is how \({\epsilon _{\rm{L}}}\) scales with code distance d. Computing power limitations preclude similar density-matrix simulations of the d = 5 surface code Surface-49. However, we can approximate the error rate by summing up all lowest-order error chains (as calculated for the MWPM decoder), and deciding individually whether or not these would be corrected by a MWPM decoder (See Supplemental Material for details). Figure 5 shows the lowest-order approximation to the logical error rates of Surface-17 and Surface-49 over a range of T 1 = T ϕ /2. Comparing the Surface-17 lowest-order approximation to the quantumsim result shows good agreement and validates the approximation. We observe a lower \({\epsilon _{\rm{L}}}\) for Surface-49 than for Surface-17, indicating quantum fault tolerance over the T 1 range covered. The fault-tolerance figure of merit defined in ref. 9, \({{\it{\Lambda }}_{\rm{t}}} = \epsilon _{\rm{L}}^{\left( {17} \right)}{\rm{/}}\epsilon _{\rm{L}}^{\left( {49} \right)}\), increases from 2 to 4 as T 1 grows from 30 to 100 μs.

Analytic approximation of \({\epsilon _{\rm{L}}}\) for Surface-17 (green) and Surface-49 (orange) using a MWPM decoder. Details of the calculation of points and error bars are given in Supplemental Material. All plots assume T ϕ = 2T 1, and τ cycle = 800 ns (crosses) or 400 ns (dots). Numerical results for Surface-17 with τ cycle = 800 ns are also plotted for comparison (green, dashed). The physical-qubit computation metric is given as the error incurred by a single qubit over the resting time of a single-qubit gate (black, dashed)

As a rough metric of computational performance, we propose comparing \({\epsilon _{\rm{L}}}\) (per cycle) to the error accrued by a physical qubit idling over τ g,1Q. We define a metric for computation performance, \({\gamma _{\rm{c}}} = \left( {{\epsilon _{{\rm{phys}}}}{\tau _{{\rm{g,1Q}}}}} \right){\rm{/}}\left( {{\epsilon _{\rm{L}}}{\tau _{{\rm{cycle}}}}} \right)\), and take γ c = 1 as a computational break-even point. Clearly, using the QEC cycle parameters of Table 1 and even with T 1 improvements, neither Surface-17 nor Surface-49 can break even computationally. However, including the readout acceleration recently demonstrated in ref. 22, which allows τ m = τ d = 100 ns and τ cycle = 400 ns, Surface-49 can cross γ c = 1 by T 1 = 40 μs. In view of first reports of T 1 up to 80 μs emerging for planar transmons,24, 25 this important milestone may be within grasp.
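The two figures of merit can be evaluated as follows. The error rates are the Surface-17 values quoted earlier (in percent per cycle); the single-qubit gate time of 20 ns is a placeholder assumption, since Table 1 is not reproduced here. Function names are hypothetical.

```python
def gamma_m(eps_phys, eps_l):
    """Memory figure of merit: physical over logical error rate per cycle."""
    return eps_phys / eps_l


def gamma_c(eps_phys, eps_l, tau_g1q_ns, tau_cycle_ns):
    """Computation figure of merit.

    Rescales the per-cycle physical error to the duration of one
    single-qubit gate before comparing it with the logical error per cycle.
    """
    return (eps_phys * tau_g1q_ns) / (eps_l * tau_cycle_ns)


EPS_PHYS = 1.33      # percent per cycle, quoted in the text
EPS_L_MWPM = 1.07    # percent per cycle, quoted in the text
EPS_L_UB = 0.68      # percent per cycle, quoted in the text
TAU_G1Q_NS = 20      # placeholder assumption
TAU_CYCLE_NS = 800

print("gamma_m (UB):", gamma_m(EPS_PHYS, EPS_L_UB))
print("gamma_c (MWPM):", gamma_c(EPS_PHYS, EPS_L_MWPM, TAU_G1Q_NS, TAU_CYCLE_NS))
```

The factor τ g,1Q/τ cycle is what separates the two break-even points: a logical qubit that beats a physical qubit as a memory can still be far from competitive per gate time.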

Discussion

Computational figure of merit

We note that our metric of computational power is not rigorous, due to the different gate sets available to physical and logical qubits. Logical qubits can execute multiple logical X and Z gates within one QEC cycle, but require a few cycles for two-qubit and Hadamard gates (using the proposals of refs. 12, 17), and state distillation over many cycles to perform non-Clifford gates. As such, this metric is merely a rough benchmark for computational competitiveness of the QEC code. However, given the amount by which all distance-3 logical error rates lie above this metric, we find it unlikely that these codes will outperform a physical qubit by any fair comparison in the near future.

Decoder performance

A practical question facing QEC is how best to balance the trade-off between decoder complexity and performance. Past proposals for surface-code computation via lattice surgery17 require the decoder to provide an up-to-date estimate of the Pauli error on physical qubits during each logical T gate. Because tracking Pauli errors through a non-Clifford gate is inefficient, however implemented, equivalent requirements will hold for any QEC code.26 A decoder is thus required to process ancilla measurements from one cycle within the next (on average). This presents a considerable challenge for transmon-cQED implementations, as τ cycle < 1 μs. This short time makes the use of computationally intensive decoding schemes difficult, even if they provide lower \({\epsilon _{\rm{L}}}\).

The leading strategy for decoding the surface code is MWPM using the blossom algorithm of Edmonds.10, 14, 27 Although this algorithm is challenging to implement, it scales linearly in code distance.27 The algorithm requires a set of weights (representing the probability that two given error signals are connected by a chain of errors) as input. An important practical question (See Supplemental Material) is whether these weights can be calculated on the fly, or must be precalculated and stored. On-the-fly weight calculation is more flexible. For example, it can take into account the difference in error rates between an ancilla measured in the ground and in the excited state. The main weakness of MWPM is the inability to explicitly detect Y errors. In fact, in Supplemental Material we show that MWPM is nearly perfect in the absence of Y errors. The decoder efficiency η d may significantly increase by extending MWPM to account for correlations between detected X and Z errors originating from Y errors.28, 29

If computational limitations preclude a MWPM decoder from keeping up with τ cycle, the LT decoder may provide a straightforward solution for Surface-17. However, at current physical performance, the η d reduction will make Surface-17 barely miss memory break-even (Fig. 1). Furthermore, memory requirements make LT decoding already impractical for Surface-49. Evidently, real-time algorithmic decoding by MWPM or improved variants is an important research direction already at low code distance.

Other observations

The simulation results allow some further observations. Although we have focused on superconducting qubits, we surmise that the following statements are fairly general.

We observe that small quasi-static qubit errors are suppressed by the repeated measurement. In our simulations, the 1/f flux noise producing 0.01 radians of phase error per flux pulse on a qubit has a diamond norm approximately equal to T 1 noise, but a trace distance 100 times smaller. As the flux noise increases \({\epsilon _{\rm{L}}}\) by only 0.01%c, it appears \({\epsilon _{\rm{L}}}\) is dependent on the trace distance rather than the diamond norm of the underlying noise components. Quasi-static qubit errors can then be easily suppressed, but will also easily poison an experiment if unchecked.

We further observe that above a certain value, ancilla and measurement errors have a diminished effect on \({\epsilon _{\rm{L}}}\). In our error model, the leading sources of error for a distance d code are chains of (d − 1)/2 data qubit errors plus either a single ancilla qubit error or readout error, which together present the same syndrome as a chain of (d + 1)/2 data qubit errors. An optimal decoder decides which of these chains is more likely, at which point the less-likely chain will be wrongly corrected, completing a logical error. This implies that if readout infidelity \(\left( {{\epsilon _{{\rm{RO}}}}} \right)\) or the ancilla error rate \(\left( {{\epsilon _{{\rm{anc}}}}} \right)\) is below the data qubit \(\left( {{\epsilon _{{\rm{phys}}}}} \right)\) error rate, \({\epsilon _{\rm{L}}} \propto \left( {{\epsilon _{{\rm{anc}}}} + {\epsilon _{{\rm{RO}}}}} \right)\epsilon _{{\rm{phys}}}^{\left( {d - 1} \right)/2}\). However, if \({\epsilon _{{\rm{RO}}}}\) \(\left( {{\epsilon _{{\rm{anc}}}}} \right) > {\epsilon _{{\rm{phys}}}}\), \({\epsilon _{\rm{L}}}\) becomes independent of \({\epsilon _{{\rm{RO}}}}\) \(\left( {{\epsilon _{{\rm{anc}}}}} \right)\), to lowest order. This can be seen in Fig. 2, where the error rate is almost constant as \({\epsilon _{{\rm{RO}}}}\) exponentially increases. This approximation breaks down with large enough \({\epsilon _{{\rm{anc}}}}\) and \({\epsilon _{{\rm{RO}}}}\), but presents a counter-intuitive point for experimental design: \({\epsilon _{\rm{L}}}\) becomes less sensitive to measurement and ancilla errors as these errors get worse.

A final, interesting point for future surface-code computation is shown in Fig. 2: the optimal cycle parameters for logical error rates per cycle and per unit time are not the same. This implies that logical qubits functioning as a quantum memory should be treated differently to those being used for computation. This idea can be extended further: at any point in time, a large quantum computer performing a computation will have a set S m of memory qubits which are storing part of a large entangled state, whilst a set S c of computation qubits containing the rest of the state undergo operations. To minimize the probability of a logical error occurring on qubits within both S c and S m , the cycle time of the qubits in S c can be reduced to minimize the rest time of qubits in S m . As a simple example, consider a single computational qubit q c and a single memory qubit q m sharing entanglement. Operating all qubits at τ cycle = 720 ns to minimize \({\epsilon _{\rm{L}}}\) would lead to a 1.09% error rate for the two qubits combined. However, shortening the τ cycle of q c reduces the time over which q m decays. If q c operates at τ cycle = 600 ns, the average error per computational cycle drops to 1.06%, as q m completes only 5 cycles for every 6 on q c. Although this is only a meager improvement, one can imagine that when many more qubits are resting than performing computation, the relative gain will be quite significant.
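The arithmetic behind this two-qubit example can be sketched as below. The model is simply that, per computational cycle, the memory qubit completes a fraction τ c/τ m of one of its own cycles. The per-cycle error rates are placeholders back-solved from the quoted totals (0.545%c at 720 ns and 0.606%c at 600 ns); they are assumptions for illustration and are not read from Fig. 2. The function name is hypothetical.

```python
def error_per_computational_cycle(eps_c, tau_c_ns, eps_m, tau_m_ns):
    """Combined error of one computational and one memory qubit.

    eps_c, eps_m: logical error per cycle of q_c and q_m (percent).
    tau_c_ns, tau_m_ns: their respective QEC cycle times.
    """
    memory_cycles_per_computational_cycle = tau_c_ns / tau_m_ns
    return eps_c + eps_m * memory_cycles_per_computational_cycle


# Placeholder error rates (assumptions back-solved from the quoted totals).
EPS_AT_720_NS = 0.545
EPS_AT_600_NS = 0.606

same_cycle = error_per_computational_cycle(EPS_AT_720_NS, 720, EPS_AT_720_NS, 720)
mixed_cycle = error_per_computational_cycle(EPS_AT_600_NS, 600, EPS_AT_720_NS, 720)
print(round(same_cycle, 2), round(mixed_cycle, 2))
```

The gain comes entirely from the factor 600/720 = 5/6 applied to the memory qubit, which outweighs the slightly higher per-cycle error of the faster computational qubit.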

Effects not taken into account

Although we have attempted to be thorough in the detailing of the circuit, we have neglected certain effects. We have used a simple model for C-Z gate errors as we lack data from experimental tomography (e.g., one obtained from two-qubit gate-set tomography30). Most importantly, we have neglected leakage, where a transmon is excited out of the two lowest energy states, i.e., out of the computational subspace. Previous experiments have reduced the leakage probability per C-Z gate to ~0.3%,31 and per single-qubit gate to ~0.001%.32 Schemes have also been developed to reduce the accumulation of leakage.33 Extending quantumsim to include and investigate leakage is a next target. However, the representation of the additional quantum state can increase the simulation effort significantly [by a factor of \((9/4)^{10}\) ≈ 3000]. To still achieve this goal, some further approximations or modifications to the simulation will be necessary. Future simulations will also investigate the effect of spread in qubit parameters, both in space (i.e., variation of physical error rates between qubits) and time (e.g., T 1 fluctuations), and cross-talk effects such as residual couplings between nearest and next-nearest neighbor transmons, qubit cross-driving, and qubit dephasing by measurement pulses targeting other qubits.
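The origin of the quoted overhead can be made explicit with a short count, assuming the overhead is measured by the number of density-matrix entries: a density matrix over n three-level systems has 3^n × 3^n entries, against 2^n × 2^n for two-level systems. The function name is hypothetical.

```python
def leakage_overhead(n_transmons):
    """Ratio of density-matrix entries: qutrits (9**n) versus qubits (4**n)."""
    return 9 ** n_transmons / 4 ** n_transmons


# Ten transmons held in memory at once (nine data qubits plus one ancilla).
print(leakage_overhead(10))
```

For ten transmons this gives roughly 3.3 × 10^3, consistent with the order of magnitude quoted above.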

Methods

Simulated experimental procedure

Surface-17 basics

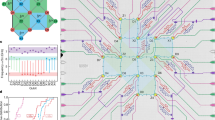

A QEC code can be defined by listing the data qubits and the stabilizer measurements that are repeatedly performed upon them.34 In this way, Surface-17 is defined by a 3 × 3 grid of data qubits {D 0, … D 8}. In order to stabilize a single logical qubit, 9 − 1 = 8 commuting measurements are performed. The stabilizers are the weight-two and weight-four X-type and Z-type parity operators X 2 X 1, Z 3 Z 0, X 4 X 3 X 1 X 0, Z 5 Z 4 Z 2 Z 1, Z 7 Z 6 Z 4 Z 3, X 8 X 7 X 5 X 4, Z 8 Z 5, and X 7 X 6, where X j (Z j ) denotes the X (Z) Pauli operator acting on data qubit D j . Their measurement is realized indirectly using nearest-neighbor interactions between data and ancilla qubits arranged in a square lattice, followed by ancilla measurements [Fig. 6a]. This leads to a total of 17 physical qubits when a separate ancilla is used for each individual measurement. We follow the circuit realization of this code described in ref. 18, for which we give a schematic description in Fig. 6b (See Supplemental Material for a full circuit diagram).
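As a quick consistency check on the stabilizer list above, the sketch below encodes each operator by the set of data-qubit indices it acts on and verifies that every X-type stabilizer commutes with every Z-type stabilizer (two Pauli strings of opposite type commute when their supports overlap on an even number of qubits). It also computes which Z-type stabilizers flip under a given X error. Variable and function names are hypothetical.

```python
# Supports of the eight stabilizers listed in the text (data-qubit indices).
X_STABILIZERS = [{1, 2}, {0, 1, 3, 4}, {4, 5, 7, 8}, {6, 7}]
Z_STABILIZERS = [{0, 3}, {1, 2, 4, 5}, {3, 4, 6, 7}, {5, 8}]


def commute(x_support, z_support):
    """An X-string and a Z-string commute iff they overlap on an even number of qubits."""
    return len(x_support & z_support) % 2 == 0


def all_commute():
    return all(commute(x, z) for x in X_STABILIZERS for z in Z_STABILIZERS)


def z_syndrome(x_error_support):
    """Bit j is 1 when Z-type stabilizer j anticommutes with the X error."""
    return tuple(len(z & x_error_support) % 2 for z in Z_STABILIZERS)


print(all_commute())
for qubit in range(9):
    print(qubit, z_syndrome({qubit}))
```

Every single-qubit X error produces a non-trivial Z syndrome. Errors on D 1 and D 2 give the same syndrome, which is harmless because they differ only by the stabilizer X 2 X 1.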

Schematic overview of the simulated experiment. a 17 qubits are arranged in a surface code layout (legend top-right). The red data qubits are initialized in the ground state \(\left| 0 \right\rangle \), and projected into an eigenstate of the measured X-(blue) and Z-(green) type stabilizer operators. b A section of the quantum circuit depicting the four-bit parity measurement implemented by the A3 ancilla qubit (+/− refer to R y (±π/2) single-qubit rotations). The ancilla qubit (green line, middle) is entangled with the four data qubits (red lines) to measure Z 1 Z 2 Z 4 Z 5. Ancillas are not reset between cycles. Instead, the implementation relies on the quantum non-demolition nature of measurements. The stabilizer is then the product of the ancilla measurement results of successive cycles. This circuit is performed for all ancillas and repeated k times before a final measurement of all (data and ancilla) qubits. c All syndrome measurements of the k cycles are processed by the decoder. d After each cycle, the decoder updates its internal state to represent the most likely set of errors that occurred. e After the final measurement, the decoder uses the readout from the data qubits, along with previous syndrome measurements, to declare a final logical state. To this end, the decoder processes the Z-stabilizers obtained directly from the data qubits, finalizing its prediction of most likely errors. The logical parity is then determined as the product of all data qubit parities \(\left( {\mathop {\prod}\nolimits_{j = 0}^8 {D_j}} \right)\) once the declared errors are corrected. The logical fidelity \({{\cal F}_{\rm{L}}}\) is the probability that this declaration is the same as the initial state \(\left( {\left| 0 \right\rangle } \right)\)

In an experimental realization of this circuit, qubits will regularly accumulate errors. Multiple errors that occur within a short period of time (e.g., one cycle) form error ‘chains’ that spread across the surface. Errors on single qubits, or correlated errors within a small subregion of Surface-17, fail to commute with the stabilizer measurements, creating error signals that allow diagnosis and correction of the error via a decoder. However, errors that spread across more than half the surface in a short enough period of time are misdiagnosed, causing an error on the logical qubit when wrongly corrected.10 The rate at which these logical errors arise is the main focus of this paper.

Protocol for measurement of logical error rates

As the performance measure of Surface-17, we study the fidelity of the logical qubit as a quantum memory. We describe our protocol with an example ‘run’ in Fig. 6. We initialize all qubits in \(\left| 0 \right\rangle \) and perform k = 1, 2, …, 20 QEC cycles [Fig. 6b]. Although this initial state is not a stabilizer eigenstate, the first QEC cycle projects the system into one of the 16 overlapping eigenstates within the +1 eigenspace for Z stabilizers, which form the logical \(\left| 0 \right\rangle \) state.10 This implies that, in the absence of errors, the first measurement of the Z stabilizers will be +1, whilst that of the X stabilizers will be random. In the following cycles, ancilla measurements of each run [Fig. 6c] are processed using a classical decoding algorithm. The decoder computes a Pauli update after each QEC cycle [Fig. 6d]. This is a best estimate of the Pauli operators that must be applied to the data qubits to transform the logical qubit back to the logical \(\left| 0 \right\rangle \) state. The run ends with a final measurement of all data qubits in the computational basis. From this 9-bit outcome, a logical measurement result is declared [Fig. 6e]. First, the four Z-type parities are calculated from the 9 data-qubit measurement outcomes and presented to the decoder as a final set of parity measurements. This ensures that the final computed Pauli update will transform the measurement results into a set that measures +1 for all Z stabilizers. This results in one of 32 final measurements, from which the value of a logical Z operator can be calculated to give the measurement result (any choice of logical operator gives the same result). The logical fidelity \({{\cal F}_{\rm{L}}}\)[k] after k QEC cycles is defined as the probability of this declared result matching the initial +1 state.
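The counting in this protocol can be reproduced by brute force. The sketch below enumerates all 9-bit data-qubit outcomes, computes the four Z-type parities from the stabilizer supports given earlier, and uses the parity of all nine bits as the logical Z value (one valid choice, following the caption of Fig. 6). Names are hypothetical, and no decoder is modeled.

```python
from itertools import product

# Supports of the four Z-type stabilizers (data-qubit indices).
Z_STABILIZERS = [(0, 3), (1, 2, 4, 5), (3, 4, 6, 7), (5, 8)]


def z_parities(bits):
    """Four Z-type parities computed from a 9-bit data-qubit outcome."""
    return tuple(sum(bits[q] for q in support) % 2 for support in Z_STABILIZERS)


def logical_parity(bits):
    """Logical Z value taken as the parity of all nine data-qubit outcomes."""
    return sum(bits) % 2


# Outcomes for which all Z stabilizers measure +1 (parity bit 0).
codespace = [b for b in product((0, 1), repeat=9) if not any(z_parities(b))]
# Those that are declared as logical 0.
logical_zero = [b for b in codespace if logical_parity(b) == 0]

print(len(codespace), len(logical_zero))
```

The enumeration finds 32 outcomes compatible with all Z stabilizers being +1, of which 16 have even logical parity; these are the 16 bit strings whose superposition forms the logical zero state.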

At long times and with low error rates, surface codes have a constant logical error rate \({\epsilon _{\rm{L}}}\). The fidelity \({{\cal F}_{\rm{L}}}\)[k] is obtained by counting the probability of an odd number of errors having occurred in total (as two σ x errors cancel)20 (We thank Barbara Terhal for providing this derivation):
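The displayed sum is not present in this text. A form consistent with the description (one minus the probability of an odd number l of errors in k rounds, each round failing independently with probability \({\epsilon _{\rm{L}}}\)) would be the following, presumably Eq. (4):

\[{{\cal F}_{\rm{L}}}[k] = 1 - \sum\limits_{l\;{\rm{odd}}} {\left( {\begin{array}{*{20}{c}} k \\ l \end{array}} \right)\epsilon _{\rm{L}}^l{{\left( {1 - {\epsilon _{\rm{L}}}} \right)}^{k - l}}} \]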

Here, the combinatorial factor counts the number of combinations of l errors in k rounds, given an \({\epsilon _{\rm{L}}}\) chance of error per round. This can be simplified to
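The simplified expression is also missing here. Using the binomial identity \({\left( {1 - 2{\epsilon _{\rm{L}}}} \right)^k} = {P_{{\rm{even}}}} - {P_{{\rm{odd}}}}\) together with \({P_{{\rm{even}}}} + {P_{{\rm{odd}}}} = 1\), the odd-count sum reduces to the closed form below, presumably Eq. (5):

\[{{\cal F}_{\rm{L}}}[k] = \frac{1}{2}\left[ {1 + {{\left( {1 - 2{\epsilon _{\rm{L}}}} \right)}^k}} \right]\]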

However, at small k, the decay is dominated by the majority vote, for which \({\epsilon _{\rm{L}}}\) ∝ \({\left( {k{\epsilon _{{\rm{phys}}}}} \right)^{(d + 1)/2}}\). For example, for all the Surface-17 decay curves, we observe a quadratic error rate at small k, as opposed to the linear slope predicted by Eq. (5). In order to correct for this, we shift the above equation in k by a free parameter k 0, resulting in Eq. (2). This function fits well to data with k ≥ 3 in all plots, and thus allows accurate determination of \({\epsilon _{\rm{L}}}\).
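The derivation and the fit can both be checked numerically. The sketch below compares the odd-error-count sum with the closed form ½[1 + (1 − 2ε L)^k], and then recovers ε L and k 0 from synthetic data by a straight-line fit of ln(2F − 1) against k. The linearized least-squares fit is a stand-in chosen for simplicity; it is an assumption and not necessarily the fitting procedure used for the figures. All function names are hypothetical.

```python
import math


def fidelity_sum(k, eps):
    """1 minus the probability of an odd number of errors in k rounds."""
    odd = sum(math.comb(k, l) * eps**l * (1 - eps) ** (k - l)
              for l in range(1, k + 1, 2))
    return 1 - odd


def fidelity_closed(k, eps, k0=0.0):
    """Closed form, optionally shifted in k by the free parameter k0."""
    return 0.5 * (1 + (1 - 2 * eps) ** (k - k0))


def fit_shifted_decay(ks, fidelities):
    """Fit ln(2F - 1) = (k - k0) * ln(1 - 2*eps); requires every F > 0.5."""
    ys = [math.log(2 * f - 1) for f in fidelities]
    n = len(ks)
    k_mean = sum(ks) / n
    y_mean = sum(ys) / n
    slope = (sum((k - k_mean) * (y - y_mean) for k, y in zip(ks, ys))
             / sum((k - k_mean) ** 2 for k in ks))
    intercept = y_mean - slope * k_mean
    eps = (1 - math.exp(slope)) / 2
    k0 = -intercept / slope
    return eps, k0


# Synthetic, noise-free data with illustrative parameters (not simulation output).
ks = list(range(3, 21))
data = [fidelity_closed(k, 0.0107, k0=0.8) for k in ks]
print(fit_shifted_decay(ks, data))
```

On noisy data a weighted or nonlinear fit would be more appropriate, since taking the logarithm distorts the error bars at large k.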

The quantumsim simulation package

Quantumsim performs calculations on density matrices utilizing a graphics processing unit in a standard desktop computer. Ancillas are measured at the end of each cycle, and thus not entangled with the rest of the system. As such, it is possible to obtain the effect of the QEC cycle on the system without explicitly representing the density matrix of all 17 qubits simultaneously. The simulation is set up as follows: the density matrix of the nine data qubits is allocated in memory with all qubits initialized to \(\left| 0 \right\rangle \). One-qubit and two-qubit gates are applied to the density matrix as completely positive, trace preserving maps represented by Pauli transfer matrices. When a gate involving an ancilla qubit must be performed, the density matrix of the system is dynamically enlarged to include that one ancilla.
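To illustrate what applying a Pauli transfer matrix means, the sketch below builds the single-qubit PTM of an idling step, assuming amplitude damping with time constant T 1 combined with pure dephasing with time constant T ϕ, and applies it to the six cardinal states. This is a one-qubit toy example written with plain lists, not quantumsim code, and all names are hypothetical. As a check, the six-point average fidelity is compared with the closed form (1 + e^(−t/T1))/6 + (1 + e^(−t(1/2T1 + 1/Tϕ)))/3 that follows from the same assumed noise model.

```python
import math


def idle_ptm(t, t1, tphi):
    """PTM in the (I, X, Y, Z) basis for idling a time t (assumed noise model)."""
    gamma = 1 - math.exp(-t / t1)                        # decay probability
    xy = math.exp(-t / (2 * t1)) * math.exp(-t / tphi)   # coherence shrinkage
    return [
        [1, 0, 0, 0],
        [0, xy, 0, 0],
        [0, 0, xy, 0],
        [gamma, 0, 0, 1 - gamma],  # relaxation pulls <Z> toward +1
    ]


def apply_ptm(ptm, vec):
    """Apply a PTM to a state written as (1, <X>, <Y>, <Z>)."""
    return [sum(row[j] * vec[j] for j in range(4)) for row in ptm]


# The six cardinal states of the Bloch sphere as Pauli-expectation vectors.
CARDINAL_STATES = [
    (1, 0, 0, 1), (1, 0, 0, -1),
    (1, 1, 0, 0), (1, -1, 0, 0),
    (1, 0, 1, 0), (1, 0, -1, 0),
]


def six_point_average(t, t1, tphi):
    """Average fidelity Tr(rho_in rho_out) over the six cardinal states."""
    ptm = idle_ptm(t, t1, tphi)
    total = 0.0
    for state in CARDINAL_STATES:
        out = apply_ptm(ptm, state)
        total += 0.5 * sum(a * b for a, b in zip(state, out))
    return total / 6


print(six_point_average(0.8, 30.0, 60.0))
```

Because a PTM acts linearly on the vector of Pauli expectation values, composing gates and idling steps amounts to multiplying real matrices, which is what makes this representation convenient for noisy circuits.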

Qubit measurements are simulated as projective and following the Born rule, with projection probabilities given by the squared overlap of the input state with the measurement basis states. In order to capture empirical measurement errors, we implement a black-box measurement model (see Error Models) by sandwiching the measurement between idling processes. The measurement projects the system to a product state of the ancilla and the projected sub-block of the density matrix. We can therefore remove the ancilla from the density matrix and only store its state right after projection, and continue the calculation with the partial density matrix of the other qubits. Making use of the specific arrangement of the interactions between ancillas and data qubits in Surface-17, it is possible to apply all operations to the density matrix in such an order (shown in Supplemental Material) that the total size of the density matrix never exceeds \(2^{10} \times 2^{10}\) (nine data qubits plus one ancilla), which allows relatively fast simulation. We emphasize that with the choice of error model in this work, this approach gives the same result as a full simulation on a 17-qubit density matrix. Only the introduction of residual entangling interactions between data and ancilla qubits (which we do not consider in this work) would make the latter necessary. On our hardware (see Supplemental Material), simulating one QEC cycle of Surface-17 with quantumsim takes 25 ms.
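The enlarge, project, and remove pattern described above can be sketched with plain NumPy. This is not the quantumsim API: all function names are hypothetical, the ancilla is assumed to be the last tensor factor, and the parity-check unitary stands in for the net effect of ideal CNOTs from each data qubit onto the ancilla.

```python
import numpy as np


def add_ancilla(rho):
    """Enlarge rho by one ancilla in |0> (ancilla is the last tensor factor)."""
    zero = np.array([[1.0, 0.0], [0.0, 0.0]])
    return np.kron(rho, zero)


def parity_check_unitary(n_data):
    """Flip the ancilla iff the data basis state has odd Z parity."""
    x, ident = np.array([[0.0, 1.0], [1.0, 0.0]]), np.eye(2)
    dim = 2**n_data
    u = np.zeros((2 * dim, 2 * dim))
    for b in range(dim):
        proj = np.zeros((dim, dim))
        proj[b, b] = 1.0
        u += np.kron(proj, x if bin(b).count("1") % 2 else ident)
    return u


def measure_and_remove_ancilla(rho, rng):
    """Project the last qubit in the computational basis and drop it.

    Returns the outcome (0 or 1) and the normalized density matrix
    of the remaining qubits.
    """
    dim = rho.shape[0] // 2
    r = rho.reshape(dim, 2, dim, 2)  # indices: data, ancilla, data', ancilla'
    blocks = [r[:, a, :, a] for a in (0, 1)]  # unnormalized projected sub-blocks
    probs = np.array([np.trace(b).real for b in blocks])  # Born rule
    outcome = rng.choice(2, p=probs / probs.sum())
    return outcome, blocks[outcome] / probs[outcome]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    plus0 = np.full((2, 2), 0.5)                       # |+><+|
    data = np.kron(np.diag([1.0, 0.0]), plus0)          # |0>|+>: undefined Z parity
    u = parity_check_unitary(2)
    big = u @ add_ancilla(data) @ u.conj().T            # 3-qubit density matrix
    outcome, data_after = measure_and_remove_ancilla(big, rng)
    print(outcome, np.round(data_after.real, 3))
```

Because the ancilla is in a product state with the data qubits after projection, only the data-qubit block needs to be kept, which is what bounds the memory requirement.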

We highlight an important advantage of doing density-matrix calculations with quantumsim. We do not perform projective measurements of the data qubits. Instead, after each cycle, we extract the diagonal of the data-qubit density matrix, which represents the probability distribution if a final measurement were performed. We leave the density matrix undisturbed and continue simulation up to k = 20. This is a very useful property of the density-matrix approach, because having a probability distribution of all final readout events greatly reduces sampling noise.

Our measurement model includes a declaration error probability (see Error Models), where the projected state of the ancilla after measurement is not the state reported to the decoder. Before decoding, we thus apply errors to the outcomes of the ancilla projections, and smear the probability distribution of the data qubit measurement. To then determine the fidelity averaged over this probability distribution, we present all 16 possible final Z-type parities to the decoder. This results in 16 different final Pauli updates, allowing us to determine correctness of the decoder for all 512 possible measurement outcomes. These are then averaged over the simulated probability distribution. This produces good results after about \(10^4\) simulated runs.

A second highlight of quantumsim is the possibility to quantify the sub-optimality of the decoder. The fidelity of the logical qubit obtained in these numerical simulations is a combination of the error rates of the physical qubits and the approximations made by the decoder. Full density-matrix simulations make it possible to disentangle these two contributions. Namely, the fidelity is obtained by assigning correctness to each of the 512 possible readouts according to 16 outputs of the decoder, and summing the corresponding probabilities accordingly. If the probabilities are known, it is easy to determine the 16 results that a decoder should output in order to maximize fidelity (i.e., the output of the best-possible decoder). This allows placing a decoder UB \({\cal F}_{\rm{L}}^{{\rm{max}}}\) on logical fidelity as limited by the physical qubits independent of the decoder. Conversely, it also allows quantifying sub-optimality in the decoder used. In fact, we can make the following reverse statement: if our measurement model did not include a declaration error, then we could use the simulation to find the final density matrix of the system conditioned on a syndrome measurement. From this, the simulation could output exactly the 16 results that give \({\cal F}_{\rm{L}}^{{\rm{max}}}\), so that quantumsim could thus be used as a maximum-likelihood decoder. In this situation, \({\cal F}_{\rm{L}}^{{\rm{max}}}\) would not only be an UB, but indeed the performance of the best-possible decoder. However, as we add the declaration errors after simulation, we can only refer to \({\cal F}_{\rm{L}}^{{\rm{max}}}\) as the decoder UB.
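The bookkeeping behind the decoder upper bound can be sketched as follows. The labeling of outcomes by a final-parity index and a raw logical value is assumed to be supplied by the caller; the function name, argument layout and toy numbers are all hypothetical and do not come from quantumsim.

```python
import numpy as np


def fidelity_and_upper_bound(probs, syndrome, logical, decoder_flip):
    """Return (decoder fidelity, decoder upper bound) for a logical |0> memory.

    probs[o]        : simulated probability of final readout o
    syndrome[o]     : index of the final Z-parity class of readout o
    logical[o]      : raw logical Z value of readout o (0 for +1, 1 for -1)
    decoder_flip[s] : 1 if the decoder's final Pauli update flips the
                      logical value for parity class s, else 0
    """
    probs = np.asarray(probs, dtype=float)
    syndrome = np.asarray(syndrome)
    logical = np.asarray(logical)
    decoder_flip = np.asarray(decoder_flip)
    n_syn = len(decoder_flip)
    # weight[s, v]: total probability with parity class s and raw logical value v
    weight = np.zeros((n_syn, 2))
    np.add.at(weight, (syndrome, logical), probs)
    # The declared result is correct when raw value XOR flip equals 0
    f_decoder = weight[np.arange(n_syn), decoder_flip].sum()
    # The best possible choice per parity class keeps the heavier branch
    f_max = weight.max(axis=1).sum()
    return f_decoder, f_max


if __name__ == "__main__":
    # Toy example with 2 parity classes and 4 readouts (illustrative numbers)
    print(fidelity_and_upper_bound(
        probs=[0.4, 0.1, 0.3, 0.2],
        syndrome=[0, 0, 1, 1],
        logical=[0, 1, 0, 1],
        decoder_flip=[0, 1],
    ))
```

For Surface-17 the same function would be called with 512 readouts and 16 parity classes. The gap between the two returned numbers is the sub-optimality attributed to the decoder.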

Error models

We now describe the error model used in the simulations. Our motivation for the development of this error model is to provide a limited number of free parameters to study, whilst remaining as close to known experimental data as possible. As such, we have taken well-established theoretical models as a base, and used experimental tomography to provide fixed parameters for observed noise beyond these models. The parameters of the error model are provided in Supplemental Material.

Idling qubits

While idling for a time τ, a transmon in \(\left| 1 \right\rangle \) can relax to \(\left| 0 \right\rangle \). Furthermore, a transmon in superposition can acquire random quantum phase shifts between \(\left| 0 \right\rangle \) and \(\left| 1 \right\rangle \) due to 1/f noise sources (e.g., flux noise) and broadband ones (e.g., photon shot noise35 and quasiparticle tunneling36). These combined effects can be parametrized by probabilities \(p_1 = \exp(-\tau/T_1)\) for relaxation, and \(p_\phi = \exp(-\tau/T_\phi)\) for pure dephasing. The combined effects of relaxation and pure dephasing lead to decay of the off-diagonal elements of the qubit density matrix. We model dephasing from broadband sources in this way, taking for \(T_\phi\) the value extracted from the decay time \(T_2\) of standard echo experiments:

\[\frac{1}{T_2} = \frac{1}{T_\phi} + \frac{1}{2T_1}.\]
We model 1/f sources differently, as discussed below.
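A minimal single-qubit sketch of the idling process is given below. It assumes the standard relation \(1/T_2 = 1/T_\phi + 1/(2T_1)\) and applies the resulting decay factors directly to the density-matrix elements; the function names and the times in the example are illustrative and are not the parameters used in the simulations.

```python
import numpy as np


def t_phi_from_echo(t1, t2_echo):
    """Pure-dephasing time from T1 and the echo T2 (standard relation, assumed)."""
    return 1.0 / (1.0 / t2_echo - 1.0 / (2.0 * t1))


def idle(rho, tau, t1, t_phi):
    """Relaxation and pure dephasing of one qubit during an idle time tau."""
    p1 = np.exp(-tau / t1)        # survival factor of the |1> population
    p_phi = np.exp(-tau / t_phi)  # pure-dephasing factor
    out = np.array(rho, dtype=complex)
    out[0, 0] = rho[0, 0] + (1.0 - p1) * rho[1, 1]  # population relaxing to |0>
    out[1, 1] = p1 * rho[1, 1]
    coherence = np.sqrt(p1) * p_phi                  # equals exp(-tau / T2)
    out[0, 1] = coherence * rho[0, 1]
    out[1, 0] = coherence * rho[1, 0]
    return out


if __name__ == "__main__":
    t1, t2 = 30.0, 40.0                # microseconds, illustrative only
    t_phi = t_phi_from_echo(t1, t2)
    plus = np.full((2, 2), 0.5)        # |+><+|
    print(t_phi, idle(plus, tau=1.0, t1=t1, t_phi=t_phi))
```

The off-diagonal elements decay with \(\sqrt {p_1}\,p_\phi\), so relaxation alone already limits the coherence time to \(2T_1\).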

Dephasing from photon noise

The dominant broadband dephasing source is the shot noise due to photons in the readout resonator. This dephasing is present whenever the coupled qubit is brought into superposition before the readout resonator has returned to the vacuum state following the last measurement. This leads to an additional, time-dependent pure dephasing (rates given in Supplemental Material).

One-qubit Y rotations

We model y-axis rotations as instantaneous rotations sandwiched by idling periods of duration \(\tau_{\rm g,1Q}/2\). The errors in the instantaneous gates are modeled from process matrices measured by gate-set tomography30, 37 in a recent experiment.20 In this experiment, the GST analysis of single-qubit gates also showed that the errors can mostly be attributed to Markovian noise. For simplicity, we thus model these errors as Markovian.

Dephasing of flux-pulsed qubits

During the coherent step, transmons are repeatedly moved in frequency away from their sweetspot using flux pulses, either to implement a C-Z gate or to avoid one. Away from the sweetspot, transmons become first-order sensitive to flux noise, which causes an additional random phase shift. As this noise typically has a 1/f power spectrum, the largest contribution comes from low-frequency components that are essentially static for a single run, but fluctuating between different runs. In our simulation, we approximate the effect of this noise through ensemble averaging, with quasi-static phase error added to a transmon whenever it is flux pulsed. Gaussian phase errors with the variance (calculated in Supplemental Material) are drawn independently for each qubit and for each run.
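The effect of ensemble averaging over quasi-static Gaussian phase errors can be illustrated with a short Monte Carlo sketch. The standard deviation used here is an arbitrary illustrative value, not the one calculated in Supplemental Material, and the function name is hypothetical.

```python
import numpy as np


def averaged_coherence(sigma, n_runs, seed=0):
    """Average the phase factor exp(i*phi) over runs with Gaussian phi.

    Each run draws one static phase error phi ~ N(0, sigma^2), mimicking
    low-frequency flux noise that is constant within a run but fluctuates
    between runs.
    """
    rng = np.random.default_rng(seed)
    phases = rng.normal(0.0, sigma, size=n_runs)
    return np.mean(np.exp(1j * phases))


if __name__ == "__main__":
    sigma = 0.3  # radians, illustrative
    estimate = averaged_coherence(sigma, n_runs=200_000)
    print(abs(estimate), np.exp(-sigma**2 / 2.0))
```

Within a single run the qubit sees a coherent rotation, and only the average over runs shows dephasing, with the coherence reduced by the factor \(\exp(-\sigma^2/2)\) for phase variance \(\sigma^2\).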

C-Z gate error

The C-Z gate is achieved by flux pulsing a transmon into the \(\left| {11} \right\rangle \leftrightarrow \left| {02} \right\rangle \) avoided crossing with another, where the 2 denotes the second-excited state of the fluxed transmon. Holding the transmons here for \(\tau_{\rm g,2Q}\) causes the probability amplitudes of \(\left| {01} \right\rangle \) and \(\left| {11} \right\rangle \) to acquire phases.38 Careful tuning allows the phase \(\phi_{01}\) acquired by \(\left| {01} \right\rangle \) (the single-qubit phase \(\phi_{\rm 1Q}\)) to be an even multiple of 2π, and the phase \(\phi_{11}\) acquired by \(\left| {11} \right\rangle \) to be π extra. This extra phase acquired by \(\left| {11} \right\rangle \) is the two-qubit phase \(\phi_{\rm 2Q}\). Single-qubit and two-qubit phases are affected by flux noise because the qubit is first-order sensitive during the gate. Previously, we discussed the single-qubit phase error. In Supplemental Material, we calculate the corresponding two-qubit phase error \(\delta\phi_{\rm 2Q}\). Our full (but simplistic) model of the C-Z gate consists of an instantaneous C-Z gate with single-qubit phase error \(\delta\phi_{\rm 1Q}\) and two-qubit phase error \(\delta\phi_{\rm 2Q} = \delta\phi_{\rm 1Q}/2\), sandwiched by idling intervals of duration \(\tau_{\rm g,2Q}/2\).
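One possible way to write the instantaneous part of this C-Z model as a diagonal unitary is sketched below. The basis ordering (second label is the fluxed transmon), the sign conventions, and the function name are assumptions made for illustration; the idling intervals before and after the gate are not included.

```python
import numpy as np


def noisy_cz(delta_phi_1q):
    """Instantaneous C-Z with quasi-static phase errors (illustrative convention).

    Basis order |00>, |01>, |10>, |11>, with the second label the fluxed
    transmon. |01> picks up the single-qubit phase error, and |11> picks up
    the single-qubit error plus pi plus the two-qubit phase error, taken
    here as half the single-qubit error as stated in the text.
    """
    delta_phi_2q = delta_phi_1q / 2.0
    phases = np.array([
        0.0,
        delta_phi_1q,
        0.0,
        np.pi + delta_phi_1q + delta_phi_2q,
    ])
    return np.diag(np.exp(1j * phases))


if __name__ == "__main__":
    print(np.round(noisy_cz(0.0).real, 3))   # ideal C-Z
    print(np.round(noisy_cz(0.05), 3))       # small illustrative phase error
```

With zero phase error the matrix reduces to the ideal C-Z, and for any error it remains unitary, so all decoherence in this sketch would have to come from averaging the phase error over runs and from the surrounding idling intervals.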

Measurement

We model qubit measurement with a black-box description using parameters obtained from experiment. This description consists of the eight probabilities for transitions from an input state \(\left| i \right\rangle \in \left\{ {\left| 0 \right\rangle ,\left| 1 \right\rangle } \right\}\) into pairs \(\left( {m,\left| o \right\rangle } \right)\) of measurement outcome \(m \in \left\{ { + 1, - 1} \right\}\) and final state \(\left| o \right\rangle \in \left\{ {\left| 0 \right\rangle ,\left| 1 \right\rangle } \right\}\). By final state we mean the qubit state following the photon-depletion period. Input superposition states in the computational basis are first projected to \(\left| 0 \right\rangle \) or \(\left| 1 \right\rangle \) following the Born rule. The probability tree (the butterfly) is then used to obtain an output pair \(\left( {m,\left| o \right\rangle } \right)\). These experimental parameters can be described by a six-parameter model (described in detail in Supplemental Material), consisting of periods of enhanced noise before and after a point at which the qubit is perfectly projected, and two probabilities \(\epsilon _{{\rm{RO}}}^{\left| i \right\rangle }\) for wrongly declaring the result of this projective measurement. In Supplemental Material, a scheme for measuring these butterfly parameters and mapping them to the six-parameter model is described. In experiment, we find that the readout errors \(\epsilon _{{\rm{RO}}}^{\left| i \right\rangle }\) are almost independent of the qubit state \(\left| i \right\rangle \), and so we describe them with a single readout error parameter \({\epsilon _{{\rm{RO}}}}\) in this work.
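The black-box description can be sketched as a lookup table of eight probabilities sampled after a Born-rule projection. The numbers in the table below are placeholders chosen only so that each row sums to one; they are not the experimental butterfly parameters, and the function and variable names are hypothetical.

```python
import numpy as np

# Possible (measurement outcome m, final state o) pairs
PAIRS = [(+1, 0), (+1, 1), (-1, 0), (-1, 1)]

# Hypothetical butterfly table: row = projected input state |i>,
# column = probability of the corresponding entry of PAIRS.
BUTTERFLY = np.array([
    [0.985, 0.005, 0.005, 0.005],  # input |0>
    [0.010, 0.005, 0.035, 0.950],  # input |1>
])


def measure(rho, rng, table=BUTTERFLY):
    """Sample a pair (m, o) for a single-qubit density matrix rho.

    The input is first projected to |0> or |1> following the Born rule,
    then the butterfly table for that input state is sampled.
    """
    p_one = float(np.real(rho[1, 1]))
    i = int(rng.random() < p_one)       # Born-rule projection
    j = rng.choice(len(PAIRS), p=table[i])
    return PAIRS[j]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    plus = np.full((2, 2), 0.5)
    print([measure(plus, rng) for _ in range(5)])
```

Outcomes where m disagrees with the projected input correspond to declaration errors, and outcomes where o differs from the projected input correspond to state changes during the measurement and depletion periods.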

Data availability

The data that support the plots within this paper and other findings of this study are available from the corresponding author (L.DiCarlo@tudelft.nl) upon request.

Code availability

The computer code that was used to generate these results is available from the corresponding author (L.DiCarlo@tudelft.nl) upon request.

References

O’Malley, P. J. J. et al. Scalable quantum simulation of molecular energies. Phys. Rev. X 6, 031007 (2016).

Barends, R. et al. Digital quantum simulation of fermionic models with a superconducting circuit. Nat. Commun. 6, 7654 (2015).

Langford, N. K. et al. Experimentally simulating the dynamics of quantum light and matter at ultrastrong coupling. Preprint at http://arxiv.org/abs/1610.10065 (2016).

Kelly, J. et al. State preservation by repetitive error detection in a superconducting quantum circuit. Nature 519, 66–69 (2015).

Ristè, D. et al. Detecting bit-flip errors in a logical qubit using stabilizer measurements. Nat. Commun. 6, 6983 (2015).

Córcoles, A. D. et al. Demonstration of a quantum error detection code using a square lattice of four superconducting qubits. Nat. Commun. 6, 6979 (2015).

Ofek, N. et al. Extending the lifetime of a quantum bit with error correction in superconducting circuits. Nature 536, 441 (2016).

Boixo, S. et al. Characterizing quantum supremacy in near-term devices. Preprint at https://arxiv.org/abs/1608.00263 (2016).

Martinis, J. M. Qubit metrology for building a fault-tolerant quantum computer. npj Quantum Inf. 1, 15005 (2015).

Fowler, A. G., Mariantoni, M., Martinis, J. M. & Cleland, A. N. Surface codes: towards practical large-scale quantum computation. Phys. Rev. A 86, 032324 (2012).

Landahl, A. J., Anderson, J. T. & Rice, P. R. Fault-tolerant quantum computing with color codes. Preprint at http://arxiv.org/abs/1108.5738 (2011).

Yoder, T. J. & Kim, I. H. The surface code with a twist. Preprint at http://arxiv.org/abs/1612.04795 (2016).

Tomita, Y. & Svore, K. M. Low-distance surface codes under realistic quantum noise. Phys. Rev. A 90, 062320 (2014).

Fowler, A. G., Stephens, A. M. & Groszkowski, P. High-threshold universal quantum computation on the surface code. Phys. Rev. A 80, 052312 (2009).

Heim, B., Svore, K. M. & Hastings, M. B. Optimal circuit-level decoding for surface codes. Preprint at http://arxiv.org/abs/1609.06373 (2016).

Blais, A., Huang, R.-S., Wallraff, A., Girvin, S. M. & Schoelkopf, R. J. Cavity quantum electrodynamics for superconducting electrical circuits: An architecture for quantum computation. Phys. Rev. A 69, 062320 (2004).

Horsman, C., Fowler, A. G., Devitt, S. & Meter, R. V. Surface code quantum computing by lattice surgery. New J. Phys. 14, 123011 (2012).

Versluis, R. et al. Scalable quantum circuit and control for a superconducting surface code. Preprint at http://arxiv.org/abs/1612.08208 (2016).

Bultink, C. C. et al. Active resonator reset in the nonlinear dispersive regime of circuit QED. Phys. Rev. Appl. 6, 034008 (2016).

Rol, M. A. et al. Restless tuneup of high-fidelity qubit gates. Phys. Rev. Appl. 7, 041001 (2017).

Asaad, S. et al. Independent, extensible control of same-frequency superconducting qubits by selective broadcasting. npj Quantum Inf. 2, 16029 (2016).

Walter, T. et al. Realizing Rapid, High-Fidelity, Single-Shot Dispersive Readout of Superconducting Qubits. Preprint at http://arxiv.org/abs/1701.06933 (2017).

Frisk Kockum, A., Tornberg, L. & Johansson, G. Undoing measurement-induced dephasing in circuit QED. Phys. Rev. A 85, 052318 (2012).

Paik, H. et al. Experimental demonstration of a resonator-induced phase gate in a multiqubit circuit-qed system. Phys. Rev. Lett. 117, 250502 (2016).

Gustavsson, S. et al. Suppressing relaxation in superconducting qubits by quasiparticle pumping. Science 354, 1573–1577 (2016).

Terhal, B. M. Quantum error correction for quantum memories. Rev. Mod. Phys. 87, 307–346 (2015).

Fowler, A. G., Sank, D., Kelly, J., Barends, R. & Martinis, J. M. Scalable extraction of error models from the output of error detection circuits. Preprint at https://arxiv.org/abs/1405.1454 (2014).

Delfosse, N. & Tillich, J. P. A decoding algorithm for CSS codes using the X/Z correlations. In 2014 IEEE International Symposium on Information Theory, 1071–1075 (2014).

Fowler, A. G. Optimal complexity correction of correlated errors in the surface code. Preprint at https://arxiv.org/abs/1310.0863 (2013).

Blume-Kohout, R. et al. Robust, self-consistent, closed-form tomography of quantum logic gates on a trapped ion qubit. Preprint at https://arxiv.org/abs/1310.4492 (2013).

Barends, R. et al. Superconducting quantum circuits at the surface code threshold for fault tolerance. Nature 508, 500 (2014).

Chen, Z. et al. Multi-photon sideband transitions in an ultrastrongly-coupled circuit quantum electrodynamics system. Preprint at https://arxiv.org/abs/1602.01584 (2016).

Fowler, A. G. Coping with qubit leakage in topological codes. Phys. Rev. A 88, 042308 (2013).

Gottesman, D. Stabilizer Codes and Quantum Error Correction. Ph.D. thesis Caltech, (1997).

Sears, A. P. et al. Photon shot noise dephasing in the strong-dispersive limit of circuit QED. Phys. Rev. B 86, 180504 (2012).

Ristè, D. et al. Millisecond charge-parity fluctuations and induced decoherence in a superconducting transmon qubit. Nat. Commun. 4, 1913 (2013).

Blume-Kohout, R. et al. Demonstration of qubit operations below a rigorous fault tolerance threshold with gate set tomography. Nat. Commun. 8, 14485 (2017).

DiCarlo, L. et al. Demonstration of two-qubit algorithms with a superconducting quantum processor. Nature 460, 240 (2009).

Acknowledgements

We thank C. C. Bultink, M. A. Rol, B. Criger, X. Fu, S. Poletto, R. Versluis, P. Baireuther, D. DiVincenzo, B. Terhal, and C.W.J. Beenakker for useful discussions. This research is supported by the Foundation for Fundamental Research on Matter (FOM), the Netherlands Organization for Scientific Research (NWO/OCW), an ERC Synergy Grant, and by the Office of the Director of National Intelligence (ODNI), Intelligence Advanced Research Projects Activity (IARPA), via the U.S. Army Research Office grant W911NF-16-1-0071. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the ODNI, IARPA, or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright annotation thereon.

Author information

Contributions

T.E.O. wrote the decoder algorithm, and calculated the analytic extension to Surface-49. B.T. wrote the quantumsim program used for all density matrix simulations, and calculated simulation parameters from the underlying model. L.D. provided the underlying model parameters, and calculated simulation parameters from the underlying model. All authors contributed equally to the writing of the paper.

Ethics declarations

Competing interests

The authors declare that they have no competing financial interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

O’Brien, T.E., Tarasinski, B. & DiCarlo, L. Density-matrix simulation of small surface codes under current and projected experimental noise. npj Quantum Inf 3, 39 (2017). https://doi.org/10.1038/s41534-017-0039-x

DOI: https://doi.org/10.1038/s41534-017-0039-x
