Abstract

Conventional vision-based systems, such as cameras, have demonstrated their enormous versatility in sensing human activities and developing interactive environments. However, these systems have long been criticized for incurring privacy, power, and latency issues due to their underlying structure of pixel-wise analog signal acquisition, computation, and communication. In this research, we overcome these limitations by introducing in-sensor analog computation through the distribution of interconnected photodetectors in space, having a weighted responsivity, to create what we call a computational photodetector. Computational photodetectors can be used to extract mid-level vision features as a single continuous analog signal measured via a two-pin connection. We develop computational photodetectors using thin and flexible low-noise organic photodiode arrays coupled with a self-powered wireless system to demonstrate a set of designs that capture position, orientation, direction, speed, and identification information, in a range of applications from explicit interactions on everyday surfaces to implicit activity detection.

Introduction

The realization of ubiquitous interactive environments that enable human activity sensing in smart homes, hospitals, and other privacy-sensitive environments1,2, or asset tracking in an unmanned retail store3, requires autonomous optical sensing technologies that are more sustainable, scalable, conformal, and privacy-preserving than current camera-based approaches3,4,5,6,7 and von Neumann architectures. New materials and device architectures are needed for such pervasive sensing technologies, as Mark Weiser put it, to disappear and weave into the fabric of everyday life8.

Recent work on light-sensing surfaces has opened a new design space that integrates sensing and computation into the everyday objects we interact with, enabling a myriad of applications from gestural input to activity recognition without physical contact9,10,11,12. Flexible photodetectors based on emerging semiconductors13, such as organic photodiodes (OPDs), are desirable for light-sensing surfaces because they can be manufactured over large areas14, patterned into arbitrary shapes15,16,17, and made with conformal form factors18,19,20,21,22,23,24,25. Despite this progress, for light-sensing surfaces to be scalable, they need to maintain low power consumption and latency regardless of the total area and the number of photosensitive elements on their surface. It is also important that they operate under a wide range of lighting conditions and preserve privacy.

Some of these challenges can be addressed by in-sensor computation, i.e., by processing the sensory data in the analog domain prior to analog-digital conversion (ADC) and digital computation26. Low-level data aggregation or feature extraction performed in the analog domain can compress the high-dimensional raw data acquired from a large array of sensors into lower dimensions and reduce their inherent redundancies27,28. Because they do not digitize and output raw sensor signals, such designs also reduce the unwanted capture of private information. By inextricably integrating the sensing and computing components as ‘computational sensors’, we can more easily achieve low-latency, low-power, and privacy-preserving sensory systems.

In this paper, we present computational photodetectors and demonstrate applications in ubiquitous human activity sensing. Herein, a computational photodetector achieves in-sensor analog computation by combining the weighted output from a heterogeneous array of photodiodes distributed in space into a two-pin readout for time-resolved differential measurement (Fig. 1a), from which mid-level vision features that encode position, orientation, direction, speed, and identification information can be extracted. A key advantage of this approach is that the power consumption and latency for acquiring and processing the signal from a computational photodetector are invariant to the pattern design, size, shape, number of photodiodes in the array, or their distribution in space, supporting large-scale sensing applications. Also, since no images are captured or stored, and the temporal evolution of the signal encodes the arbitrary distribution in space of a heterogeneous array of photodiodes, as well as the particular motion it detects, computational photodetectors are expected to be resilient to eavesdropping attacks and capable of protecting user privacy by keeping sensitive data and computation at the hardware level. We demonstrate computational photodetectors using thin and flexible low-noise OPDs that operate even in dimly lit environments (≪1 lux and down to tens of nanowatt cm−2 in the visible spectral range). In addition to thin and flexible form factors, the potential of achieving low-noise silicon photodiode-like performance14, and the possibility to further tailor their properties through synthetic chemistry, make OPDs attractive for implementing light-sensing surfaces based on computational photodetectors.

a Computational photodetector with a pattern design produces a motion-incited output photocurrent, which corresponds to the convolution between the signed heterogeneous responsivity distribution and the incident optical power along the time axis. b The equivalent circuit of a typical computational photodetector without or with one photodiode blocked, and the corresponding current output (mean and standard deviation) versus the number of photodiodes connected in parallel with a single photodiode blocked at any given time. c Design space of a computational photodetector, which connects the target information, to relevant design parameters, and finally the pattern design. In this work, we demonstrate and evaluate the linear, crossing, and grid patterns as representatives.

Results

Principles of operation

A computational photodetector is an array of photodiodes connected in parallel with a two-pin differential readout. Photodiodes are distributed on the surfaces of objects in space at locations ri with a signed spectral responsivity Rλ,i that accounts for the polarities in which devices are connected with respect to others. Photodiodes connected with opposite polarities, as shown in Fig. 1b, produce photocurrents flowing in different directions and thus of opposite signs. The spectral responsivity Rλ of the computational photodetector is characterized by a 1 × M vector of the form:

\[\mathbf{R}_{\lambda}=\left(R_{\lambda,1}\ \ R_{\lambda,2}\ \ \cdots\ \ R_{\lambda,M}\right)\]

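Similarly, a vector Φλ(t) of the time-dependent spectral optical power Φλ,i(t) received by an individual photodiode of area Ai at location ri can be defined as:

\[\mathbf{\Phi}_{\lambda}(t)=\left(\Phi_{\lambda,1}(t)\ \ \Phi_{\lambda,2}(t)\ \ \cdots\ \ \Phi_{\lambda,M}(t)\right)\]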

The output current Iout of the computational photodetector follows Kirchhoff’s current law, and can be described as the sum of the element-wise (Hadamard) products of Rλ and Φλ(t), integrated over the relevant spectral range:

\[I_{\mathrm{out}}(t)=\int\sum_{i=1}^{M}R_{\lambda,i}\,\Phi_{\lambda,i}(t)\,\mathrm{d}\lambda\]

Therefore, a computational photodetector achieves a weighted linear combination task within the analog domain. Knowledge of Rλ and the spatial location of each photodiode is needed to decode the information from Iout. Hence, a computational photodetector does not produce an ‘image’ of the optical power distribution in space. Since data acquisition and computation happen at the same time, they do not scale with the complexity of the pattern design, resulting in overall complexity of O(1). In comparison, conventional computing hierarchies that acquire and process the output from each individual array element involve: (1) digitizing analog signals, which takes M operations; (2) pairwise integer multiplication, which takes M operations; and (3) summation of M integers, which takes M − 1 operations, resulting in overall complexity of O(M).
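The weighted linear combination described above can be illustrated with a minimal Python sketch. It assumes a single wavelength band (so the spectral integral reduces to a plain sum) and uses made-up responsivity and optical power values in arbitrary units; the function names `output_current` and `block` are illustrative and not part of the original work.

```python
def output_current(responsivity, optical_power):
    """Weighted sum I_out = sum_i R_i * Phi_i for a single wavelength band."""
    if len(responsivity) != len(optical_power):
        raise ValueError("one optical power value is needed per photodiode")
    return sum(r * p for r, p in zip(responsivity, optical_power))


def block(optical_power, index):
    """Return a copy of the illumination with one photodiode fully shadowed."""
    shadowed = list(optical_power)
    shadowed[index] = 0.0
    return shadowed


# Hypothetical balanced array: four identical photodiodes, alternating polarity.
R = [1.0, -1.0, 1.0, -1.0]      # signed responsivity (arbitrary units)
uniform = [5.0, 5.0, 5.0, 5.0]  # same optical power on every photodiode

# Under uniform illumination the opposite polarities cancel.
i_uniform = output_current(R, uniform)

# Blocking one photodiode at a time leaves a residual whose sign depends on
# the polarity of the blocked device (in this convention, the opposite sign).
i_blocked = [output_current(R, block(uniform, k)) for k in range(len(R))]

print(i_uniform)   # 0.0
print(i_blocked)   # [-5.0, 5.0, -5.0, 5.0]
```

In this sketch the readout is a single number regardless of how many photodiodes the array contains, which is the property the complexity argument relies on; in hardware the summation is performed by the parallel connection itself rather than by a loop.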

Design of computational photodetectors

Flexible photodiodes, which allow the shape, size, distribution, and interconnection of individual photodiodes to be tuned in a scalable way, are ideal for the implementation of computational photodetectors. Their designs depend upon the type(s) of sensing of interest. The design space is very broad as shown in Fig. 1c. Here we present a brief discussion of the following parameters:

Interconnection and sensor output

We use photodiodes connected in parallel with the same absolute spectral responsivity, i.e., ∣Rλ,i∣ = ∣Rλ,j∣. The example in Fig. 1b consists of an even number of identical devices (M = 2n with n an integer) with pairwise opposite polarities, i.e., Rλ,i = −Rλ,j. In such a case, Iout(t0) = 0 if the whole array is in the dark or under uniform illumination, and non-zero otherwise, e.g., Iout = Ii(t) if a photodiode at ri is blocked, and Iout = −Ii+1(t) if another photodiode at ri+1 is blocked. Hence, the sign of the output signal in these types of arrays can be used as a binary code (e.g., \(\left(1\ {-}1\ 1\ {-}1\right)\)). The measurements in Fig. 1b show that the combined photocurrent output is unaffected by the number of connected devices. We can also use an unbalanced design with a direct-current (DC) bias, e.g., Iout(t0) = I0, to capture the uniform ambient light illumination.

Device shape

There are two main design strategies of device shapes—continuous and discrete. A continuous design uses a large, irregular, and asymmetric shape to generate a distinct ‘analog’ signal profile from object motion. A discrete design uses small area photodiodes that generate a ‘digitized’ signal as described in the previous section. The shape of the photodiodes is not important since their size is assumed to be significantly smaller than the object size—but large enough to produce a photocurrent above the sensor noise. Although the continuous approach can be more aesthetically appealing, the discrete approach is more practical and robust from fabrication and signal processing perspectives.

Device size

Instead of having a uniform device area, photodiodes can also modulate their photocurrent output by controlling their sizes Ai, where Ai = ηiAj such that ∣Ii(t)∣ = ηi∣Ij(t)∣, ηi > 0. This allows the combination of sign and magnitude to extend beyond binary encoding.

Spatial layout

Patterns in 1D (e.g., a straight line, a curve, or a circle), 2D (e.g., a matrix, or a checkerboard), or 3D (e.g., faces of a 3D object or an origami structure) can be used to extract spatial information of motion, position, and orientation.

Interspace

The sensing application determines the interspace: di = ∣ri+1 − ri∣. To sense the position or speed of an object, di should be greater than the size of the shadow projected by the object onto the photodetector plane, hobj, such that the object position is registered to a single photodiode. If di ≤ hobj, the ‘entry’ or ‘exit’ can still be detected as the object begins to cover or uncover multiple devices.

In the remainder of this section, we present linear, crossing, and grid patterns implemented with flexible OPDs as representatives of the design space that covers a full spectrum of motion, position, and orientation sensing. We discuss the design strategy for each pattern based on simulations, and evaluate their effectiveness against factors such as sensing distance, ambient light, object size, etc., in realistic settings.

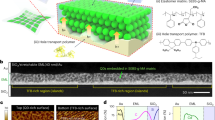

Linear pattern

Figure 2a shows the architecture of the flexible OPDs, which have a spectral responsivity in the red to near-infrared spectral region (Fig. 2b) and low dark current values under reverse bias (Fig. 2c), allowing them to operate under dim illumination conditions. A linear pattern of two OPDs connected in parallel and opposite polarity with a user blocking each OPD is shown in Fig. 2d. Figure 2f shows the sensor output in response to sequentially occluding each OPD in a different order or direction. Figure 2f also shows that these gestures could be resolved with a high signal-to-noise ratio at irradiance values down to 2.8 nW cm−2, where only a thermal camera could be used to capture the interaction, as shown in Fig. 2e. The noise in the dark and under homogeneous illumination was found to have a median value of 150 fA, as shown in Fig. 2g. Hence, these OPDs operate under illumination conditions that the human eye perceives as near-darkness, opening the possibility of achieving computational photodetectors that operate under a wide dynamic range of illumination conditions that are relevant for human activity.

a Schematics of a single organic photodiode (OPD) used in a computational photodetector. b The spectral responsivity of the OPD. c The current-voltage (I–V) characteristics of the OPD in the dark and at 270 lux under fluorescent room lights. d Photographs of a computational photodetector—a pair of OPDs connected in parallel and opposite polarity, under ~100 nW cm−2 optical power of red LED with 635 nm peak emission and close proximity interactions with each OPD. e A thermal image of the interaction under ~3 nW cm−2 optical power of the same 635 nm LED; conditions that are too dark for a regular camera sensor to capture. f Distinctive signals from finger swiping in down-up and up-down directions are well above the noise floor. g Distribution of root-mean-squared noise current values measured during the experiments in f.

Next, we extended the linear pattern to four OPDs in a 1D sequence to study in detail the linear motion of an object (Fig. 3a). Mathematically, this pattern can be compared to a series of line-detecting kernels, which ‘convolves’ with the image of a passing object. For instance, if the object can be represented as \(\left(0\ 1\ 0\right)\) and the normalized signed output of the linear OPD pattern as \(\left(1\ {-}1\ 1\ {-}1\right)\), we can acquire the sequence of spikes {1, −1, 1, −1} as the 1D convolution result and detect the following motion information:

- Direction can be detected by the sequence of spikes, e.g., {−1, 1, −1, 1} and {1, −1, 1, −1} corresponding to motion in opposite directions.

- Speed v and acceleration Δv can be detected by dividing the photodiode interspace d by the duration t between neighbouring spikes, v = d t−1, and taking the first difference of consecutive speeds, Δv = vi+1 − vi.

- Identification of the sensors can be achieved by having distinctive patterns of 1 and −1. An equal number of OPDs in opposite polarity is connected in asymmetric sequences to distinguish one direction from another.
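The convolution analogy and the speed calculation in the list above can be sketched in a few lines of Python. This is an idealized illustration, not the authors' processing code: the object is the \(\left(0\ 1\ 0\right)\) profile from the text, the spike times are made-up values, and the function names are hypothetical.

```python
def spike_sequence(pattern, obj=(0, 1, 0)):
    """Full 1D convolution of the object profile with the signed OPD pattern,
    keeping only the non-zero samples (the spikes)."""
    length = len(pattern) + len(obj) - 1
    conv = []
    for i in range(length):
        total = 0
        for k, weight in enumerate(obj):
            if 0 <= i - k < len(pattern):
                total += weight * pattern[i - k]
        conv.append(total)
    return [c for c in conv if c != 0]


def speeds(spike_times, interspace):
    """v = d / t for each pair of neighbouring spikes."""
    return [interspace / (t1 - t0) for t0, t1 in zip(spike_times, spike_times[1:])]


def accelerations(v):
    """First differences of consecutive speeds, dv = v[i+1] - v[i]."""
    return [b - a for a, b in zip(v, v[1:])]


pattern = (1, -1, 1, -1)
forward = spike_sequence(pattern)         # object travels along the pattern
backward = spike_sequence(pattern[::-1])  # same object, opposite direction

# Hypothetical spike times (s) for a 4 cm interspace, as in the prototype.
v = speeds([0.0, 0.5, 1.0, 1.5], interspace=0.04)
dv = accelerations(v)

print(forward)   # [1, -1, 1, -1]
print(backward)  # [-1, 1, -1, 1]
```

Reversing the pattern is equivalent to reversing the direction of travel, which is why the two directions yield spike sequences of opposite order.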

a Design and prototype of a computational photodetector with a linear pattern. b Simulation of responses from a linear pattern with alternating-polarity photodiodes and an interspace of 4 cm when an object hovers over with varying object sizes or sensing distances. c Experiment setup of technical evaluation using a linear actuator and laser-cut wooden bars with variable widths for light blocking. d Sensor output in various conditions of ambient light, sensing distance, object size, and object speed. e Speed detection result for the above conditions.

We simulate the signal output versus device interspace and sensing distance to facilitate the computational photodetector design as shown in Fig. 3b.

Based on the simulations, a linear-pattern computational photodetector using OPDs with a device area of 4 × 4 mm and an interspace of 4 cm was fabricated (Fig. 3a). To evaluate its capability in detecting the linear motion of an object, we develop a test rig based on a linear actuator (Fig. 3c) and precisely control the test conditions, such as motion speed, sensing distance, object size, and ambient light illumination.

We apply noise filtering to the sensor output to remove the power-line noise and the direct-current (DC) component. Figure 3d shows the filtered waveform of sensor signal upon two motion events in opposite directions. The distinctive spikes of {−1, 1, −1, 1} and {1, −1, 1, −1} can be seen at 75 lux. The effect of sensing distance and object size aligns well with our simulations. We segment the signal based on its magnitude and spike–spike interval, apply peak detection to the segment, and derive the speed of the object motion. As shown in Fig. 3e, our sensor can accurately detect the motion speed under various conditions with the highest error of −0.053 m s−1 and a standard deviation of 0.09 m s−1 at 20 mm sensing distance.

Crossing pattern

A crossing pattern can be viewed as a 2D matrix of OPDs designed to invoke distinctive signal sequences in response to a larger object approaching or departing in different 2D directions, blocking or unblocking multiple OPDs (Fig. 4a). We can draw an analogy to an edge detection kernel. For example, an 8 × 8 asymmetric matrix

can generate a sequence of {1, −1, −1, −1} when a large object enters from the left and sequentially blocks the OPDs of each column, and {−1, 1, 1, 1} when it exits to the right, and so forth for all 8 unique sequences corresponding to 4 motion directions (top-bottom, bottom-top, left-right, right-left) × 2 moments (entry and exit). Therefore, we can acquire the following motion information:

- 2D direction can be determined by the sequence of spikes, which belongs to a set of 4 unique sequences, {original, reverse-order, inverse-polarity, reverse-order and inverse-polarity}, corresponding to 4 motion events along one of the two axes of the sensor. The minimum length of a sequence n is 4. To translate the sequence to the pattern, we use 2n OPDs distributed in a 2n × 2n matrix with one device per row and per column. OPDs of opposite polarity are used for every two columns or rows (i.e., a ‘1’ followed by an immediate ‘−1’ and vice versa) to ‘reset’ the output for the next spike.

- 2D speed and identification can be detected at the moment of entry or exit for both axes, based on the eight distinctive sequences of length n.

a Design of a computational photodetector with a crossing pattern. b A prototype with an interspace of 1 cm and overall size of 8 × 8 cm. c Simulation of the computational photodetector responding to an object of 10 and 20 cm width moving in the horizontal and vertical direction at a height of 0.25 and 0.5 mm. d Sensor output from all the motion events with varying object speed, under 250 lux illumination. Under 500 and 750 lux illumination, the signal has a similar envelope with slightly larger magnitudes. The object size is fixed at 16 cm and the sensing range is 0.25 cm for all conditions. e Speed detection result for the various conditions of ambient light and object speed.

We simulate the output of the 8 × 8 asymmetric matrix in the example in response to the 2D motion of a large object (Fig. 4c). Based on these simulations, we develop a crossing-pattern computational photodetector using OPDs with a column or row interspace of 1 cm (Fig. 4b).

Figure 4d, e shows that using a characterization method similar to the one used for the linear arrays, a computational photodetector with a crossing pattern can accurately detect motion and speed in 4 directions and under various conditions, with the highest error of 0.06 m s−1 and standard deviation of 0.10 m s−1 at the exit right event for an object moving at 0.6 m s−1 under 750 lux ambient light illumination.

Grid pattern

A grid pattern is a distribution of OPDs with distinct photocurrent values that can be mapped to their position or orientation (Fig. 5a). For instance, a grid pattern designed as

can detect any of its 5 OPDs being occluded from ambient light based on the steady-state signal output. The grid pattern allows detection of:

- 2D position from the one-to-one mapping of the sensor output and the corresponding OPD. To account for different ambient light conditions, an unbalanced design that naturally exhibits a DC bias in the unblocked state is used to calibrate the output signal and remove the factor of ambient light illumination. The given example has a DC bias of 1.

- 3D orientation when OPDs are mounted on an object facing different directions. A simple example would be two OPDs of opposite polarity (i.e., (−1, 1)) on the top and bottom sides of an object: large magnitudes indicate either the top or bottom side is placed facing down, while small magnitudes (close to zero) indicate the object is placed sideways. The same setup can also be used for shake or impact detection from high-frequency components of the signal due to rapid orientation changes.

a Design of a computational photodetector with a grid pattern. b A prototype with an increasing device area and alternating polarity from left to right. c Sensor output and object position detection result based on various conditions of ambient light. d Sensor output and finger position detection result based on various conditions of ambient light. The same thresholds from the object position detection are used without modification.

To implement a grid design, the photocurrent was controlled by using OPDs with different areas. Assuming that objects can only block a single OPD at a time, we develop a grid-pattern computational photodetector using OPDs with areas of 12, 24, and 48 mm2 (Fig. 5b).

To extract the object position, we use the unblocked-state signal under ambient light to calibrate the signal and apply a series of thresholds to the calibrated signal. Figure 5c shows the raw and calibrated signals measured for different object positions. An empirically chosen threshold of τ0 = 7 and the derived set of threshold values {±τ0, ±2τ0, ±4τ0} clearly separate the calibrated signal levels and achieve 100% object position detection accuracy.

We also collected data from two researchers (1 male, 1 female) using their index fingers to block each position under the same set of conditions (Fig. 5d). While the magnitude of the signals generated by a finger blocking each OPD is on average 12.3% weaker than that from completely opaque objects, the same set of threshold values used before achieves 100% detection accuracy.

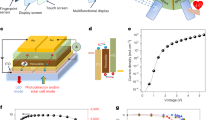

System architecture and applications

Computational photodetectors enable a myriad of ubiquitous sensing applications on everyday surfaces, by capturing relative disposition or motion information between surfaces, objects, and humans. To demonstrate these applications, we develop a self-powered wireless system (Fig. 6a, b) to acquire and communicate the sensor signal. The computational photodetector operates in photovoltaic mode (i.e., at V = 0 V) and is entirely self-powered, with the rest of the system powered by a photovoltaic cell. Unlike other self-powered optical sensing systems (e.g., refs. 10,29), a key advantage enabled by a computational photodetector is that it operates with constant latency and power consumption independent of the number of OPDs or pattern design. To capture the motion of everyday objects and support real-time interactions, we fix the measurement frequency (100 Hz) and communication interval (160 ms) for all applications. Our sensing platform relies only on harvested energy from ambient light. As shown in Fig. 6c, the system operates at 96.6 μW, which is 44% less power than that supplied by a 14.5 cm2 C-Si photovoltaic cell at 250 lux.

a Schematics of the self-powered wireless sensing system compatible with various computational photodetector designs. b The wireless sensing circuit and a rechargeable battery can hide behind a solar cell. c Power consumption and harvesting characteristics: 100 Hertz sensor measurement frequency and 160 millisecond wireless communication interval for all applications can be powered solely by the solar cell under 250 lux. d Music player: a linear pattern can sense the finger swipe to switch track or adjust the volume. e Board game and buttons: a grid pattern can sense the location of a chess piece or a finger touch. f Braille: a combination of linear and grid patterns can be used to encode braille alphabets from finger motion. g Package monitoring: a grid pattern deployed on a package can sense its orientation and monitor its handling. h Inventory management: a crossing pattern deployed on a shelf rack can sense the motion direction of an item.

Computational photodetectors support interactions on everyday surfaces by creating an expressive palette for finger touch, hover inputs, and object detection. A computational photodetector with a linear pattern can be used as a music player interface (Fig. 6d). With an L-shaped pattern, horizontally (1, −1, 1, −1) and vertically (1, −1, −1), the sensor can detect four finger-swiping gestures for volume control and track selection. A computational photodetector with a grid pattern (e.g., the unbalanced 5-device example) can be used for interactive board games or touch-sensing buttons (Fig. 6e). The sensor can track object location on the table, support interactive board games such as Monopoly, or enable button input such as ordering food at a restaurant table. Finally, combining the linear and the grid pattern, a computational photodetector can be designed in a braille-like pattern for textual input (Fig. 6f). Following the arrangement of the six-dot modern braille alphabet, the computational photodetector uses up to six OPDs to form a letter, i.e., (4, 2, 1) from top to bottom on the left, and (−4, −2, −1) on the right. As the user swipes a finger from left to right, each letter will generate a positive signal followed by a negative one, with unique magnitudes. For instance, the letter ‘T’ has an arrangement of (2, 1 ∣ −4, −2), and generates a sequence of {3, −6}. In this way, we can uniquely represent each letter in a form compatible with the tactile representations for visually impaired users.
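The braille encoding can be checked with a short Python sketch. It assumes the standard braille dot numbering (dots 1 to 3 down the left column, dots 4 to 6 down the right column) and the weights quoted above; the names `swipe_signal` and `LETTERS` are illustrative, and only a handful of letters are listed.

```python
from itertools import combinations

LEFT_WEIGHTS = {1: 4, 2: 2, 3: 1}      # dots 1-3, left column, top to bottom
RIGHT_WEIGHTS = {4: -4, 5: -2, 6: -1}  # dots 4-6, right column, top to bottom


def swipe_signal(dots):
    """Idealized (left, right) spike pair for a left-to-right finger swipe."""
    left = sum(LEFT_WEIGHTS[d] for d in dots if d in LEFT_WEIGHTS)
    right = sum(RIGHT_WEIGHTS[d] for d in dots if d in RIGHT_WEIGHTS)
    return (left, right)


# A few letters in standard braille dot notation.
LETTERS = {"a": {1}, "b": {1, 2}, "c": {1, 4}, "l": {1, 2, 3}, "t": {2, 3, 4, 5}}

signals = {letter: swipe_signal(dots) for letter, dots in LETTERS.items()}
print(signals["t"])  # (3, -6)

# Every one of the 64 possible dot combinations maps to a distinct pair,
# because each column is a 3-bit binary code.
all_cells = [set(c) for k in range(7) for c in combinations(range(1, 7), k)]
distinct_pairs = {swipe_signal(cell) for cell in all_cells}
print(len(distinct_pairs))  # 64
```

Letters that occupy only one column (such as ‘a’ or ‘l’ here) give a zero for the other column in this idealized model, so a practical decoder would presumably need to handle a missing second spike.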

Computational photodetectors also enable implicit detection of human activities in various (e.g., industrial) settings. A computational photodetector with a grid pattern can provide a cost-effective and self-sustainable solution for package handling monitoring in the delivery process (Fig. 6g). A 2-device (1, −1) pattern placed on the top and bottom sides of a parcel can sense the static (e.g., top-side-down) or dynamic (e.g., shaken or dropped) orientation of the package. A computational photodetector with a crossing pattern (e.g., the 8 × 8 example) can keep track of the items on a shelf (Fig. 6h). Placed near the edge of a rack, the sensor picks up motions of an item being slid in, out, left, and right for inventory management, without explicit scanning or logging of human workers.

Discussion

We presented an approach to computational photodetectors that achieves in-sensor computation in functional patterns and demonstrated a variety of pattern designs for human activity sensing. A computational photodetector operating in photovoltaic mode is a self-powered sensor and only draws constant power from the wireless sensing system fueled by a single fixed-size solar panel. As it compresses the higher-dimensional signal space to a single-value output in the analog domain, a computational photodetector by design extracts specific information related to motion, position, and orientation without capturing or storing digital images, which reduces the probability of capturing unwanted information and is more privacy-preserving than sensing approaches based on von Neumann architectures. When implemented with flexible OPDs, computational photodetectors can function under a very wide dynamic range of illumination conditions that are relevant for human activity, and offer compelling form factors: thin, flexible or bendable, and aesthetically pleasing. While these proof-of-principle demonstrations validate the general properties of computational photodetectors and suggest wide applicability for gestural input and activity recognition, fully exploiting the potential of OPDs for implementing computational photodetectors will require further optimization of their properties, from introducing amplification for the detection of faint light and tailoring their field-of-view, spectral bandwidth, and responsivity, to investigating approaches to make some of these properties programmable. Further studies will also be needed to develop cost models for large-scale manufacturing and to assess the overall reliability of OPDs tailored for computational photodetectors within the context of their potential applications. Additional usability evaluations are needed to address confounding factors in real-world deployment, such as complex object shapes, unintended interactions, and user variability. However, we believe that the general approach described in this paper provides a solid platform to tackle these real-world challenges.

Methods

Fabrication of computational photodetectors

We fabricate the OPD devices and interconnections on flexible polyethylene terephthalate (PET) substrates. Three different shadow masks are designed in Adobe Illustrator and cut out for patterning.

Indium tin oxide (ITO)-coated PET substrates are etched with dilute hydrochloric acid using the first mask to pattern the bottom electrode. In the final OPD devices, ITO acts as the hole-collecting electrode. The photoactive and charge transport layers are then grown on top of the etched ITO regions via physical vapor deposition at \(10^{-7}\) mbar, using the second mask. The photoactive layers comprise the molecular semiconductors indium(III) phthalocyanine chloride (InClPc) and fullerene (C60), both of which are commercially available. This active layer combination has been previously used to fabricate ITO-free semitransparent solar cells on flexible PET substrates30. Lastly, the top electrode and array interconnects are created by physical vapor deposition of silver at \(10^{-7}\) mbar, using the third mask.

To inhibit device degradation and prevent abrasive damage to the devices, the completed array is protected with a commercially available UV-curable resin as an encapsulant. The curing time is optimized to ensure that the cured encapsulant does not impact the flexibility of the substrate.

Organic photodiode characterization

We measure the spectral responsivity and irradiance-dependent steady-state current vs. voltage (I–V) characteristic of the organic photodiodes using an electrometer (Keithley 6517A) averaged over a set period of time, as shown in Fig. 2b, c. The OPD displays high responsivity in the red to near-infrared region, a region to which human eyes are less sensitive. The I–V curve is measured under the illumination of a 635 nm red light-emitting diode (LED), which is typically used in a UL 924 compliant exit light in the US. We reduce the optical power of the light source until it is barely visible to human eyes (<10 nW cm−2) and measure the photocurrent of the OPD device. As a reference, the optical power of the exit light at 0.5 lux and 0.1 lux is 180 and 43 nW cm−2, respectively. We record the sensor output while manually occluding each of the devices from the illumination (Fig. 2e).

Sensor identification

For linear and crossing patterns, sensor identification can be achieved by a distinctive arrangement of the sensor patterns. A linear pattern with 2n OPDs (n ≥ 1) can generate \(N=\frac{1}{2}\binom{2n}{n}\) combinations if n is odd, or \(N=\frac{1}{2}\binom{2n}{n}-\frac{1}{2}\binom{n}{n/2}\) combinations if n is even. For instance, N = 126 for 2n = 10 OPDs (n = 5), and N = 92252 for 2n = 20 OPDs (n = 10).

A crossing pattern with 2n OPDs can generate \(N=2^{n-2}-2^{(n-2)/2}\) such sequences if n is even, or \(N=2^{n-2}-2^{(n-3)/2}\) sequences if n is odd. For instance, N = 240 for n = 10, and N = 261632 for n = 20. Then, by picking one sequence for one axis and one for the other, the crossing pattern can have \(\frac{N!}{{2}^{N/2}(N/2)!}\) different combinations for N/2 unique IDs.
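The counts quoted in this section can be reproduced with a short Python sketch. For the linear pattern it reads the formula with 2n as the total number of OPDs, which is the reading that yields 126 and 92252 for 10 and 20 devices, and it cross-checks the closed form against a brute-force enumeration of balanced, asymmetric ±1 sequences counted up to reversal. The crossing-pattern function simply evaluates the stated expression; the function names are illustrative.

```python
from itertools import product
from math import comb


def linear_ids(num_opds):
    """Closed-form count for a linear pattern with num_opds = 2n devices."""
    n, remainder = divmod(num_opds, 2)
    if remainder or n < 1:
        raise ValueError("a balanced linear pattern needs an even, positive number of OPDs")
    balanced = comb(2 * n, n)                          # n devices of each polarity
    symmetric = comb(n, n // 2) if n % 2 == 0 else 0   # palindromic sequences
    return (balanced - symmetric) // 2                 # a sequence and its reverse share one ID


def linear_ids_brute_force(num_opds):
    """Enumerate balanced, asymmetric sequences and merge each with its reverse."""
    seen = set()
    for seq in product((1, -1), repeat=num_opds):
        if sum(seq) != 0 or seq == seq[::-1]:
            continue
        seen.add(min(seq, seq[::-1]))
    return len(seen)


def crossing_sequences(n):
    """Evaluate the stated crossing-pattern expression for sequence length n."""
    exponent = (n - 2) // 2 if n % 2 == 0 else (n - 3) // 2
    return 2 ** (n - 2) - 2 ** exponent


print(linear_ids(10), linear_ids(20))                  # 126 92252
print(linear_ids_brute_force(10))                      # 126
print(crossing_sequences(10), crossing_sequences(20))  # 240 261632
```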

Sensor evaluation and signal processing

To evaluate the motion sensing capability of a computational photodetector with a linear or crossing pattern, we use laser-cut wooden bars with variable widths fixed onto the moving rack of the test rig (Fig. 3c) to represent an object in motion. A digital oscilloscope (Analog Discovery 2; ref. 31) is used to acquire the signal from the sensor. For the linear pattern, we vary one of the test conditions at a time, including motion speed (0.25–1 m s−1), sensing distance (0.5–4 cm), object size (1–8 cm), and ambient light illumination (75–750 lux). For the crossing pattern, we fix the object size at 16 cm and the sensing range at 0.25 cm, and vary either the motion speed (0.3–0.6 m s−1) or the ambient light illumination (250–750 lux).

To evaluate the object position sensing capability of a computational photodetector with a grid pattern, we measure the output in the unblocked state and the blocked state for each device under a 2 × 2 cm laser-cut wooden square. We vary the ambient light condition (250–750 lux).

To process the signal, we use a notch filter centered at 60 Hz with a quality factor Q = 30 to remove the alternating current (AC) power-line noise, followed by a DC blocker32 with R = 0.995 to remove the DC component. For object motion detection, we segment the signal of a motion event based on a minimum spike magnitude (10 mV) and a maximum spike-to-spike interval of 200 ms. To capture the speed of the object motion, we apply peak (local maximum) detection to the magnitude of the signal with a minimum amplitude of 15 mV, a minimum peak-to-peak interval of 20 ms, and a minimum prominence of 10 mV. The speed of an object traveling over two OPDs is then calculated by dividing the spacing between the two OPDs by the peak-to-peak travel time.
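The processing chain can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the code used in the study: the sampling rate and the OPD spacing are hypothetical parameters, the notch is realized as a standard second-order (biquad) section because the text does not specify the filter design, and the peak detector is simplified to a height and minimum-interval test that omits the prominence criterion.

```python
import math


def notch_coefficients(f0_hz, q, fs_hz):
    """Second-order notch (audio-EQ-cookbook form), normalized so a[0] = 1.

    The biquad form is an assumption; the text only gives f0 and Q.
    """
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (1.0 / a0, -2.0 * math.cos(w0) / a0, 1.0 / a0)
    a = (1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a


def biquad(x, b, a):
    """Direct-form I filtering of the sequence x with coefficients b, a."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def dc_blocker(x, r=0.995):
    """One-pole DC blocker: y[n] = x[n] - x[n-1] + r * y[n-1]."""
    y = []
    x_prev = y_prev = 0.0
    for xn in x:
        yn = xn - x_prev + r * y_prev
        x_prev, y_prev = xn, yn
        y.append(yn)
    return y


def find_peaks_simple(x, fs_hz, min_height, min_interval_s):
    """Indices of local maxima of |x| above min_height.

    Peaks closer than min_interval_s to a taller kept peak are dropped.
    Simplified: no prominence test is applied.
    """
    mag = [abs(v) for v in x]
    candidates = [
        i for i in range(1, len(mag) - 1)
        if mag[i] >= min_height and mag[i - 1] < mag[i] >= mag[i + 1]
    ]
    min_dist = min_interval_s * fs_hz
    kept = []
    for i in sorted(candidates, key=lambda k: mag[k], reverse=True):
        if all(abs(i - k) >= min_dist for k in kept):
            kept.append(i)
    return sorted(kept)


def speed_m_per_s(peak_a, peak_b, fs_hz, spacing_m):
    """Speed = OPD spacing divided by the peak-to-peak travel time."""
    return spacing_m / ((peak_b - peak_a) / fs_hz)
```

A typical call order would be `biquad(signal, *notch_coefficients(60.0, 30.0, fs))`, then `dc_blocker(...)`, then `find_peaks_simple(..., min_height=0.015, min_interval_s=0.02)` with the signal in volts, and finally `speed_m_per_s` on two consecutive peak indices. Event segmentation by spike magnitude and spike-to-spike interval is not covered by this sketch.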

System design

As Fig. 6a shows, we use a low-power microcontroller (Nordic nRF52832 with an ARM Cortex-M4 CPU) that supports a 12-bit differential ADC and Bluetooth Low Energy (BLE) communication to measure the output of a computational photodetector and transmit it wirelessly to a laptop (MacBook Pro, 2.3 GHz Intel Core i5 processor, 8 GB memory) for processing. We measure the power consumption of the system at different signal acquisition and communication frequencies (Fig. 6c). To reduce maintenance effort and battery waste, we use an mc-Si PV cell (IXYS SM141K09L) to harvest power from ambient light. A low-power boost charger (TI bq25505) is used for power management, and the surplus energy is stored in a thin 3.7 V, 105 mAh LiPo battery as a backup power source. We measure the harvested energy at the output of the boost charger under indoor ambient light at 250–750 lux.
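To give a sense of scale for the backup battery, the sketch below converts its nominal rating (3.7 V, 105 mAh) into stored energy and estimates how long it could cover a given net power deficit. The load and harvested power values are hypothetical inputs that the reader supplies, not measurements from this work, and the estimate ignores converter losses, self-discharge, and the usable depth of discharge.

```python
import math


def battery_energy_joules(voltage_v=3.7, capacity_mah=105.0):
    """Nominal stored energy: V * Ah * 3600 s/h."""
    return voltage_v * (capacity_mah / 1000.0) * 3600.0


def backup_runtime_hours(avg_load_w, harvested_w=0.0,
                         voltage_v=3.7, capacity_mah=105.0):
    """Idealized runtime on the battery for a given net power deficit.

    avg_load_w and harvested_w are hypothetical inputs, in watts.
    Returns infinity when harvesting covers the load.
    """
    net_w = avg_load_w - harvested_w
    if net_w <= 0.0:
        return math.inf
    return battery_energy_joules(voltage_v, capacity_mah) / net_w / 3600.0
```

The nominal rating corresponds to about 0.39 Wh, or roughly 1.4 kJ. As an illustration only, a hypothetical average deficit of 1 mW would be covered for about 388 hours under these idealized assumptions.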

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The code used in this study is available from the corresponding author upon reasonable request.

References

Harper, R. Inside the Smart Home. (Springer Science & Business Media, 2006).

Giannetsos, T., Dimitriou, T. & Prasad, N. R. People-centric sensing in assistive healthcare: privacy challenges and directions. Secur. Commun. Netw. 4, 1295–1307 (2011).

Wingfield, N. Inside Amazon Go, a Store of the Future. https://www.nytimes.com/2018/01/21/technology/inside-amazon-go-a-store-of-the-future.html (2018).

Poppe, R. A survey on vision-based human action recognition. Image Vis. Comput. 28, 976–990 (2010).

Beddiar, D. R., Nini, B., Sabokrou, M. & Hadid, A. Vision-based human activity recognition: a survey. Multimed. Tools. Appl. 79, 30509–30555 (2020).

Rautaray, S. S. & Agrawal, A. Vision based hand gesture recognition for human computer interaction: a survey. Artif. Intell. Rev. 43, 1–54 (2015).

Wu, Y. & Huang, T. S. In International Gesture Workshop, 103–115 (Springer, 1999).

Weiser, M. The computer for the 21st century. Sci. Am. 265, 94–105 (1991).

Ma, D. et al. SolarGest: Ubiquitous and Battery-free Gesture Recognition using Solar Cells. In The 25th Annual International Conference on Mobile Computing and Networking, MobiCom ’19, 1–15 (Association for Computing Machinery, 2019).

Zhang, D. et al. OptoSense: Towards ubiquitous self-powered ambient light sensing surfaces. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 4, 103:1–103:27 (2020).

Meena, Y. K. et al. PV-Tiles: Towards Closely-Coupled Photovoltaic and Digital Materials for Useful, Beautiful and Sustainable Interactive Surfaces. In Proc. 2020 CHI Conference on Human Factors in Computing Systems, 1–12 (Association for Computing Machinery, 2020).

Raju, D. K. et al. PV-Pix: Slum Community Co-design of Self-Powered Deformable Smart Messaging Materials. In Proc. 2021 CHI Conference on Human Factors in Computing Systems, 1–14 (Association for Computing Machinery, 2021).

García de Arquer, F. P., Armin, A., Meredith, P. & Sargent, E. H. Solution-processed semiconductors for next-generation photodetectors. Nat. Rev. Mater. 2, 1–17 (2017).

Fuentes-Hernandez, C. et al. Large-area low-noise flexible organic photodiodes for detecting faint visible light. Science 370, 698–701 (2020).

Brunetti, F. et al. Printed solar cells and energy storage devices on paper substrates. Adv. Funct. Mater. 29, 1806798 (2019).

Vak, D. et al. 3D printer based slot-die coater as a lab-to-fab translation tool for solution-processed solar cells. Adv. Energy Mater. 5, 1401539 (2015).

Eggenhuisen, T. M. et al. High efficiency, fully inkjet printed organic solar cells with freedom of design. J. Mater. Chem. A 3, 7255–7262 (2015).

Someya, T., Bauer, S. & Kaltenbrunner, M. Imperceptible organic electronics. MRS Bull. 42, 124–130 (2017).

Wang, C.-Y., Fuentes-Hernandez, C., Chou, W.-F. & Kippelen, B. Top-gate organic field-effect transistors fabricated on paper with high operational stability. Org. Electron. 41, 340–344 (2017).

Leonat, L. et al. 4% efficient polymer solar cells on paper substrates. J. Phys. Chem. C 118, 16813–16817 (2014).

Tobjörk, D. & Österbacka, R. Paper electronics. Adv. Mater. 23, 1935–1961 (2011).

Jia, X., Fuentes-Hernandez, C., Wang, C.-Y., Park, Y. & Kippelen, B. Stable organic thin-film transistors. Sci. Adv. 4, eaao1705 (2018).

Zhou, Y. et al. All-plastic solar cells with a high photovoltaic dynamic range. J. Mater. Chem. A 2, 3492 (2014).

Xu, J. et al. Highly stretchable polymer semiconductor films through the nanoconfinement effect. Science 355, 59–64 (2017).

Oh, J. Y. et al. Intrinsically stretchable and healable semiconducting polymer for organic transistors. Nature 539, 411 (2016).

Zhou, F. & Chai, Y. Near-sensor and in-sensor computing. Nat. Electron. 3, 664–671 (2020).

Kagawa, K. et al. Pulse-domain digital image processing for vision chips employing low-voltage operation in deep-submicrometer technologies. IEEE J. Sel. Top. Quantum Electron. 10, 816–828 (2004).

Hsu, T.-H. et al. A 0.5V Real-Time Computational CMOS Image Sensor with Programmable Kernel for Always-On Feature Extraction. In 2019 IEEE Asian Solid-State Circuits Conference (A-SSCC), 33–34 (IEEE, 2019).

Li, Y., Li, T., Patel, R. A., Yang, X.-D. & Zhou, X. Self-Powered Gesture Recognition with Ambient Light. In The 31st Annual ACM Symposium on User Interface Software and Technology - UIST ’18, 595–608 (ACM Press, 2018).

Peng, Y., Zhang, L., Cheng, N. & Andrew, T. L. ITO-free transparent organic solar cell with distributed Bragg reflector for solar harvesting windows. Energies 10, 707 (2017).

Digilent. Analog Discovery 2 Specifications. https://reference.digilentinc.com/reference/instrumentation/analog-discovery-2/specifications (2017).

Smith, J. O. Introduction to digital filters: with audio applications, vol. 2 (Julius Smith, 2007).

Acknowledgements

We acknowledge Bernard Kippelen for providing lab space, prototyping, and characterization tools; Victor Rodriguez Toro, Yi-Chien Chang, and Felipe A. Larrain for technical support in device fabrication; and Charles Ramey and Bashima Islam for fruitful discussions. This research was partially supported by the Georgia Tech CRNCH (Center for Research into Novel Computing Hierarchies) Ph.D. Fellowship.

Author information

Contributions

D.Z. and C.F. conceived the idea and planned the research. R.V. fabricated the devices. D.Z. and C.F. performed device characterization experiments. D.Z., Y.Z., J.P., Y.H.Z., N.A., A.M., and T.C. developed the hardware. D.Z., Y.Z., Y.L., Y.W., Y.H.Z., and Y.D. developed the software. D.Z., Y.Z., Y.L., and J.P. designed and executed the sensing experiments. D.Z. and C.F. performed signal analysis. D.Z., C.F., R.V., Y.Z., J.P., Y.W., S.S., T.S., T.A., and G.D.A. contributed to the applications. T.A. and G.D.A. supervised the overall research. All authors contributed to the manuscript writing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, D., Fuentes-Hernandez, C., Vijayan, R. et al. Flexible computational photodetectors for self-powered activity sensing. npj Flex Electron 6, 7 (2022). https://doi.org/10.1038/s41528-022-00137-z

DOI: https://doi.org/10.1038/s41528-022-00137-z
