Abstract

With advances in instrumentation at next-generation synchrotron light sources, methodologies for small-angle X-ray scattering (SAXS)/wide-angle X-ray diffraction (WAXD) experiments have evolved dramatically. Such experiments have developed into dynamic, multiscale in situ characterizations, making prolonged exposure time and radiation-induced damage serious concerns. However, reducing exposure time or dose may yield noisier images with a lower signal-to-noise ratio, requiring powerful denoising mechanisms for physical information retrieval. Here, we tackle the problem from an algorithmic perspective by proposing a small yet effective machine-learning model for experimental SAXS/WAXD image denoising, allowing more margin for exposure time or dose reduction. Compared with classic models developed for natural image scenarios, our model provides a bespoke denoising solution, demonstrating superior performance on highly textured SAXS/WAXD images. The model is versatile and can be applied to denoising in other synchrotron imaging experiments where data volume and image complexity are concerns.

Introduction

Small-angle X-ray scattering (SAXS) and wide-angle X-ray diffraction (WAXD) are widely used techniques at synchrotron and X-ray free electron laser facilities. By providing structural information including the phase, orientation, and shape of crystalline elements, as well as functional information such as local strain and stress states, SAXS/WAXD methods are well suited to studying the structural complexity of functional materials and its connection to functional properties1,2,3,4,5,6. Owing to rapid advances in instrumentation and methodology, the paradigm of SAXS/WAXD has shifted from static 2D characterization towards high-throughput, multi-dimensional tomography experiments, enabling simultaneous multiscale inspection of the structural and mechanical dynamics of heterogeneous materials such as alloys, semiconductors, batteries, bone, and cuticle7,8,9. For instance, the newly emerging six-dimensional SAXS tomography and other tensor tomography techniques open up new opportunities to image fiber or mineral networks within biological materials such as bone and tooth10,11,12,13,14,15. However, the final reconstruction usually requires over a million SAXS/WAXD patterns, which lengthens data acquisition and exposes samples to an excessive radiation dose, causing permanent structural damage and thus degrading the subsequent reconstruction quality. In addition, beamlines at next-generation advanced light sources aim to reach micro- to femtosecond-timescale SAXS/WAXD characterization capabilities to study the structural dynamics of materials16, but trade-offs between acquisition speed and detection precision still have to be made at present.

Numerous efforts have been made to increase the acquisition efficiency of SAXS/WAXD experiments. From the instrumentation end, the application of fly-scan techniques17,18 and cryogenic sample environment19 can dramatically increase acquisition speed and reduce radiation damage. Besides, the signal-to-noise ratio (SNR) of the acquired SAXS/WAXD images can be improved to an extent by improving the vacuum level in the X-ray beam path20. However, the acquisition efficiency enhancement from instrumentation developments has limits. Another promising approach is to utilize algorithmic advancements, that is, image denoising techniques on SAXS/WAXD data. The aim is to take advantage of these denoising algorithms to improve the SNR, allowing more room for reduced radiation dose and acquisition time.

In the past decade, machine learning has brought a revolutionary improvement in image denoising21,22,23, thanks to progressive developments in convolutional neural networks (CNNs). Zhang et al. proposed DnCNN24 for natural image denoising, which outperformed BM3D25 (the gold standard among conventional algorithms) in terms of peak signal-to-noise ratio (PSNR). Successful CNN denoising models have since mushroomed, such as FFDNet26, CBDNet27, RIDNet28, and PMRID29, the last of which was adopted by several smartphone manufacturers in 2019 to boost their phones’ photography capability in poor lighting conditions. Despite these accomplishments, such CNN models only process natural images with a limited dynamic range of pixel magnitudes. In SAXS/WAXD patterns, however, extremely high-intensity diffraction spots and weakly scattering, near-noise-level signals usually coexist and tend to be equally important, leading to a much higher dynamic range than in natural images. Moreover, unlike natural images, whose useful information is distributed across the entire image area, the denoising requirement of SAXS/WAXD images is focused on signals of interest. Currently, encouraging progress on synchrotron X-ray image denoising has mostly been achieved on the scale of the entire image30,31,32,33,34,35,36,37,38,39, without considering the inherent characteristics of synchrotron X-ray images. Rather than the entire image area, the algorithm should attend to the scattering and diffraction streaks, spots, arcs, and rings that matter for the experimental purpose, where preprocessing is usually required to mask unfavorable signals (direct beam, strong spots, etc.) that may severely disturb the denoising process. Finally, contemporary CNN models often use massive network architectures that do not cater well to online data acquisition and processing, where lightweight and efficient architectures would be more suitable. These observations leave the research community in need of bespoke network architectures, combined with proper image preprocessing techniques, devised specifically for SAXS/WAXD images.

Here, to solve the SAXS/WAXD image denoising problem, we propose SEDCNN (Small Encoder–Decoder Convolutional Neural Networks). SEDCNN is a small, lightweight yet efficient network built on encoder–decoder-based CNN architectures. By incorporating SAXS/WAXD-oriented image preprocessing techniques such as masking and resizing, we envision SEDCNN as a machine-learning model specifically optimized for highly textured SAXS/WAXD images. From an algorithmic perspective, our model effectively improves the SNR, allowing more margin for reducing exposure time and radiation-induced damage without adding complexity to the instrumentation. During training and prediction on experimental SAXS/WAXD data, our SEDCNN model showed superior performance and robustness compared with other classic models such as REDCNN and PMRID. The model takes data volume and image complexity into account, providing a versatile denoising solution for other synchrotron experiments as well.

Results

Overview of SEDCNN network architecture

Building on PMRID’s architecture, we designed SEDCNN based on experimental SAXS/WAXD data. We represent network layers as Stages. For instance, SEDCNN4 contains four Stages. Figure 1 illustrates the overall SEDCNN4 architecture consisting of multiple Stages. Figure 2 shows the breakdown of each block’s structure within these Stages, with the SAXS and WAXD parts shown separately.

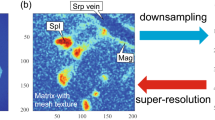

Denoising on experimental SAXS/WAXD data

In practical applications, images acquired from detectors in SAXS/WAXD experiments usually contain more complex patterns. The positions and intensities of the scattering signal from the non-crystalline component and of the various hkl diffraction peaks from different crystalline phases in the examined material may be mixed up. In addition, features of the direct beam, beam stop, and detector gap can also interrupt the SAXS/WAXD signal.

Therefore, it is unlikely to find universal metrics to evaluate denoising performance. However, since physically each hkl diffraction ring is independent from each other, we can study performance by inspecting the rings separately. For the purpose of algorithm validation, here we differentiate between SAXS and WAXD patterns mainly from the simplicity of their signals instead of actual physical difference between those two methods.

For SAXS images, we tend to investigate the network’s capability of denoising single ring prioritizing physical information. In this work, the main task is focusing on the third order diffraction rings from the collagen fibers in the bone sample which happens to appear in the SAXS region. Whereas for WAXD images, we would like to perform more global denoising on multiple diffraction rings with conventional metrics considered, such as PSNR, structural similarity (SSIM), and root mean squared error (RMSE).

Due to the simplicity and small data volume of SAXS images, following the idea of REDCNN we implemented a block-based training scheme and designed SEDCNN2 (SAXS), SEDCNN4 (SAXS). Using classic metrics (PSNR, SSIM and RMSE) we compared the performance of SEDCNN with REDCNN and PMRID. SEDCNN4 (SAXS) performs the best in terms of these more conventional metrics. However, considering the drawbacks of each metric we need to inspect the performance using other metrics better tailored to SAXS/WAXD datasets. Therefore, we applied radial integration over 360° and formulated metrics such as orientation difference for SAXS-specific performance evaluation, in order to better reveal the physical details hidden in low-SNR SAXS images; for WAXD data, besides PSNR, SSIM, and RMSE we also evaluated the performance by calculating line profile and Q-value integration, which is calculated along lines or over regions of interest.

We introduce our performance metrics for SAXS data, which comprise mean, standard deviation, amplitude, and orientation (direct angle) differences represented as Δμ, Δσ, ΔA, and Δα, respectively. Detailed definitions on these metrics can be found in “Methods”. We obtained the performance shown in the following table (Table 1), where the “1 s SAXS” column refers to the reference (clean) dataset with an exposure time of 1 s. The “0.3 s SAXS” column refers to the original noisy data with an exposure time of 0.3 s. We highlight the best-performing results in red, with the second place marked in blue.

To better visualize the effectiveness of denoising using our SEDCNN model, we randomly selected one image pair from the testing dataset and compared the radial integration of the original image \(P_{0.3}^i\) (0.3 s exposure time), the denoised (optimized) image \(P_{0.3opt}^i\) and the reference image \(P_1^i\) (1 s exposure time), see the upper row of Fig. 3. The integration was performed using pyFAI40,41. We calculated Δα, Δμ, Δσ, and ΔA for each image, along which a side-by-side comparison between the noisy and the clean experimental diffraction patterns with 0.3 s and 1 s exposure times, respectively, are shown in the lower row of Fig. 3. We can see that the peaks of radial integration have successfully been recovered, and the diffraction patterns are more visible compared to the original; regarding the resemblance of both radial integration and diffraction patterns to the reference image, the optimized image exhibits significant improvement over the original noisy image.

Visually, the optimized result shows the recovered peak shape compared to raw data, and quantitatively the orientation (Direct Angle), Delta Angle (orientation difference), Delta Mean, Delta Variance, Delta Intensity of the optimized are all closer to the reference 1s-exposure time data. Lower row: SAXS images corresponding to 0.3 s, SEDCNN4-optimized 0.3 s and 1 s exposure time. The diffraction patterns of the optimized are visually more obvious than the raw data, closer to the reference data.

For SAXS images, since the training process using our self-defined image evaluation standard took a long time, we let each network carry out 500 epochs and saved the network parameters every 20 epochs, resulting in 25 networks throughout the training process. Finally, the saved 25 networks were tested using the testing dataset. In Fig. 4, we plot the optimal results of each network, showing that SEDCNN2 and SEDCNN4 yield the strongest robustness when evaluated by the more universal β metric, which is defined in “Methods”.

Performance of networks under optimal hyperparameter configurations using various metrics, including a RMSE, b Betas, c PSNR, d Delta Direct Angle, e SSIM, f Delta Mean, g Delta Amplitude, h Delta Standard Deviation. Two variants of SEDCNN, PMRID, and REDCNN were compared. Units: RMSE, PSNR, SSIM, Betas are in arbitrary units; Delta Amplitude is in image intensity values; Delta Direct Angle, Delta Mean, Delta Standard Deviation are in degrees. The number of epochs was set to 500.

For WAXD images, it is naturally more challenging for the network to reach decent results. Compared with SAXS images, the diffraction patterns of WAXD are more “complex”, comprising more diffraction peaks with greater intensity differences and wider distributions. Metallic or mineral components inside the sample, or random disturbances in the detector electronics such as dead pixels and cosmic rays, can also cause bursts of intensity at certain spots, which fortunately can be removed by proper thresholding to improve the overall image quality. We calculated the line integration and the Q-value integration using ALBULA and Fit2D42, respectively, for the original noisy WAXD images with 0.3 s exposure time, the denoised images, and the reference images with 1 s exposure time (Fig. 5). From the line profile we see that the peaks have been recovered, and from the Q-value integration we see that the shape and distribution of the peaks are much closer to the reference than those of the noisy data. The improvement is also visible numerically in the PSNR, SSIM, and RMSE metrics.

Line profile was calculated along the black and white/zebra line segment shown in WAXD images. Q-value integration was performed within the red and blue zone shown in WAXD images. The intensity has been normalized, and the peak location and shape were preserved. Note how the network successfully recovered the peak shape from the noisy data.

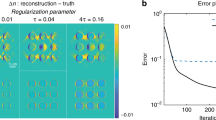

Fine-tuning hyperparameters to reach optimal performance (SAXS)

Keeping the overall network architecture intact by using our previously designed Stages as the building blocks of our network, we thoroughly investigated the impact of hyperparameters on network performance, such as learning rate, number of Stages, depth-wise separable convolution and noise learning, batch size, feature map stacking mode, and batch training scheme. Through the hyperparameter study, we would finally be able to demonstrate the performance of our SEDCNN4 model. To better evaluate image variations, we compared RMSE, orientation difference \(\overline {\Delta \alpha }\), and the more universal metric β.

For Stage numbers equaling 2, 4, and 6 (no depth-wise separable convolution and residual learning applied), we altered only the learning rate and found that all three networks perform best at a learning rate of \(10^{-3}\) (Fig. 6). See Supplementary Information (Supplementary Fig. 4) for results on PSNR, SSIM, and Delta Amplitude.

To study the performance of SEDCNN2, SEDCNN4, and SEDCNN6, we set the learning rates to be their respective optimal values and kept other hyperparameters unchanged. SEDCNN4 was found to be the best performer with SEDCNN2 being a close runner-up. Considering the trade-offs between performance and computational cost, we further modified the design of SEDCNN2 and SEDCNN4 by hyperparameters tuning.

The block-based training scheme oversamples the input image with overlapping areas, thus increasing the volume of training data. This scheme helps improve the stability of the network at the cost of increased training time. To improve computational efficiency, we replaced conventional convolutions with depth-wise separable convolutions, thereby reducing both the number of convolutional operations and the number of network parameters. This approach has already been adopted by lightweight networks such as MobileNet and has been shown to be equivalent to conventional convolutions43,44. However, despite the decrease in training time, the amount of VRAM access with depth-wise separable convolution nearly doubled, and the performance of SEDCNN2 and SEDCNN4 also dropped (such a drop often occurs with depth-wise separable convolutions because of the reduced number of parameters).
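The parameter saving from depth-wise separable convolution can be illustrated with a short count. The sketch below is not taken from the SEDCNN code; the layer size (32 input and output channels, 3 × 3 kernel) is a hypothetical choice, and biases are ignored.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depth-wise k x k convolution followed by a 1 x 1 point-wise convolution."""
    depthwise = c_in * k * k   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 32 -> 32 channels with a 3 x 3 kernel.
standard = conv_params(32, 32, 3)
separable = separable_params(32, 32, 3)
ratio = separable / standard
print(standard, separable, round(ratio, 3))
```

For this hypothetical layer the separable form needs roughly one seventh of the weights. Each separable convolution is, however, executed as two consecutive layers, so an additional intermediate feature map has to be held in memory.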

To make up for the performance loss, we changed the objective of the network to learning the difference between the input and output images, following the idea of residual networks. The new output is then the sum of the input image and the network output; we name this scheme, which reuses the input information, ‘noise learning’. With noise learning, the performance improved once again (Fig. 7).
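A minimal sketch of the noise-learning forward pass is given below. It only illustrates the "input plus predicted correction" structure; `toy_body` is a hypothetical stand-in for the trained layers and is not part of SEDCNN.

```python
from typing import Callable, List

Image = List[List[float]]


def noise_learning_forward(x: Image, body: Callable[[Image], Image]) -> Image:
    """Return x + body(x): the body predicts a correction, not the clean image."""
    correction = body(x)
    return [[xi + ci for xi, ci in zip(x_row, c_row)]
            for x_row, c_row in zip(x, correction)]


def toy_body(x: Image) -> Image:
    """Hypothetical stand-in for trained layers: pulls each pixel halfway to the image mean."""
    flat = [v for row in x for v in row]
    mean = sum(flat) / len(flat)
    return [[0.5 * (mean - v) for v in row] for row in x]


noisy = [[1.0, 3.0], [5.0, 7.0]]
denoised = noise_learning_forward(noisy, toy_body)
print(denoised)
```

Because the input is added back, the layers only need to model the (typically small) correction, which is the usual motivation for residual formulations.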

Serial optimizations of a SEDCNN2 and b SEDCNN4. Similar to Fig. 6, RMSE, Betas, and Delta Direct Angle were illustrated. Noise learning approach made up for the performance loss incurred by Sep. Conv. Units for RMSE and Betas: arbitrary units, and for Delta Direct Angle: degrees.

The network performance and VRAM usage are also highly dependent on the batch size. For example, SEDCNN2 with batch sizes of 4 and 8 yields nearly identical performance at the 500th epoch. This could be owing to our block-based training scheme, in which 220 subimages of 55 × 55 pixels each are generated per image. That is, with a batch size of 4, 880 subimages are input for training at once, which is considered sufficient to avoid overfitting.
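The block-based scheme can be sketched as a sliding-window extraction. The stride that yields 220 blocks per image is not stated here, so the toy example below uses a hypothetical 8 × 8 image with 4 × 4 blocks and a stride of 2; only the final arithmetic uses numbers from the text.

```python
from typing import List

Image = List[List[float]]


def extract_blocks(image: Image, size: int, stride: int) -> List[Image]:
    """Cut overlapping size x size blocks from a 2D image, stepping by `stride` pixels."""
    rows, cols = len(image), len(image[0])
    blocks = []
    for top in range(0, rows - size + 1, stride):
        for left in range(0, cols - size + 1, stride):
            blocks.append([row[left:left + size] for row in image[top:top + size]])
    return blocks


# Hypothetical 8 x 8 image, 4 x 4 blocks, stride 2 -> 3 positions per axis.
image = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
blocks = extract_blocks(image, size=4, stride=2)
print(len(blocks))

# Arithmetic from the text: 220 blocks per image and a batch size of 4.
blocks_per_batch = 220 * 4
print(blocks_per_batch)
```

A stride smaller than the block size produces the overlapping areas mentioned above, which is what multiplies the number of training samples obtained from each image.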

We applied depth-wise separable convolution and noise learning on SEDCNN4 and found that the block-based training scheme yields the best performance. For comparison, keeping the batch size and other hyperparameters intact, we used full-size images as input, which we call ‘direct training’. We found that the performance of direct training is inferior to that of block-based training.

SEDCNN on WAXD dataset

We have already visually presented the effect of denoising on WAXD dataset. For quantitative results, we compared the performance of PMRID and SEDCNN (WAXD) under varying batch size configurations with metrics including VRAM usage, time consumption, and PSNR, RMSE, SSIM, which is summarized in Table 2.

Similar to how we evaluated performance on SAXS data, we present our results on WAXD testing dataset in Fig. 8. We depicted the line profile and the Q-value integration in Fig. 9, which also combines the results already shown in Fig. 5.

Combining the results shown in Table 2 and Fig. 8, we learn that PMRID suffers from high VRAM usage (30.2 GB), while the two SEDCNN4 variants (batch size equaling 12 and 16) achieve comparable performance without exploiting too much VRAM (only around 10 GB). From Fig. 9, we can see the peak within the pink dashed square corresponding to SEDCNN (batch size equaling 12) is more noticeably recovered than those corresponding to SEDCNN (batch size equaling 16) as well as PMRID. From the Q-value integration, we can clearly see the underlying noise present on intensity peaks has been effectively suppressed. We were also aware that although the amount of VRAM usage decreased with batch size chosen to be 12, the capability of successfully recovering useful physical information would also diminish, where a batch size equal to 16 would be a better choice.

We built SEDCNN with PyTorch45 and deployed the network on a host computer with an Intel 10980XE CPU and two NVIDIA RTX 3090 GPUs to accelerate training. For SAXS data, training for 500 epochs took 5.5 h (SEDCNN2) and 10 h (SEDCNN4), and the prediction time per image for the two SEDCNN variants was 0.2 s and 0.5 s, respectively. For WAXD data, training for 500 epochs took 1 h 5 min (SEDCNN4), and the prediction time per image was 1.2 s.

SEDCNN on the bamboo dataset

In addition to the mouse radial bone dataset, we also collected an experimental bamboo dataset using a different synchrotron beamline and detector. As in Fig. 9, we calculated the line profile (ALBULA) and Q-value integration (Fit2D39) as well as Gaussian fitting. These results can be found in Results on WAXD Bamboo Dataset in Supplementary Information (Supplementary Fig. 8 and Supplementary Table 2).

Discussion

Instead of implementing larger and deeper networks for SAXS/WAXD image denoising, we contend that lighter, shallower networks with simpler architectures are more useful. In terms of data volume, the number of available training images for SAXS/WAXD data is considerably smaller than that of open datasets for natural images, making deeper networks with myriad parameters non-ideal. Based on this observation, the SEDCNN model is designed to be shallow, lightweight, yet efficient. From image acquisition to preprocessing, and from network architecture design to performance evaluation, SEDCNN prioritizes adaptability to SAXS/WAXD data. For example, in the preprocessing phase, we applied resizing and masking techniques to select regions of interest (ROIs). That is, for both SAXS and WAXD data we resized the original images to keep only the image areas containing useful physical information, which also reduces the size of the input images; we also masked the dazzling central scattering pattern, which should be ignored in our texture analysis, to prevent it from interfering with the physical information residing in the outer diffraction rings. Moreover, since only partial areas of SAXS/WAXD images are of interest, we regard metrics such as PSNR, SSIM, and RMSE, which account for pixel intensity differences over the whole image, as secondary, and introduce metrics that better reflect the geometrical properties of local ROIs such as symmetrical diffraction rings.

In contrast to SAXS images, WAXD images tend to exhibit multiple diffraction rings. These rings are considered independent pixel-wise, making it feasible to perform our established denoising strategies on a specified ring. However, the operation of excluding disturbances from irrelevant rings is considered superfluous, especially when there exist indistinguishable rings (low-SNR rings). Therefore, for WAXD images we treat the multiple diffraction rings altogether, instead of testing them individually. Thereby, we can see decent denoising results through the line profile and the Q-value integration over a weakly-scattered area.

The two architectural designs stem not only from the image characteristics themselves, but also from trade-offs between network design and computational resource usage. Although depth-wise separable convolution helps reduce the number of network parameters and the computational cost, doubling the number of convolutions nearly doubles VRAM usage, which poses extra challenges for computing hardware. On the other hand, although block-based training increases the dataset volume, the feature map size changes only by the subtraction (or addition) of 4 pixels rather than by halving; the feature maps therefore remain large, which prevents us from expanding the number of channels or the depth of the network because of VRAM usage concerns. Admittedly, this design is specific to SAXS data, with a limited number of training images and simpler image characteristics. For some SAXS data the ROI is only 128 × 128 pixels, and network stability could hardly be achieved without such a training strategy. For WAXD data, however, the image characteristics are much more complicated than for SAXS, making this training strategy unsuitable. To match the performance of PMRID on WAXD images, a batch size of 16 was required, at which VRAM usage exceeded 30 GB. At present, the maximum VRAM available on non-professional GPUs is 24 GB on the RTX 3090, which means at least two GPUs are required to fulfill our needs. We therefore adjusted the network design accordingly, reducing VRAM usage to 13.7 GB and completing training and testing on a single RTX 3090; we further decreased the batch size to 12, achieving an even lower VRAM usage of only 10.8 GB. Although the performance was slightly worse than with a batch size of 16, effective denoising can still be observed from the Q-value integration with only a single RTX 3090. Besides the reduced VRAM usage of SEDCNN (WAXD), the training time is also 22% less than that of PMRID, with all other metrics improved.

We believe such machine learning-based algorithmic approaches will continue to benefit scientific discoveries at advanced light sources46,47. Specifically, in this work, we found that obtaining high-quality output images through machine learning models significantly facilitates the ROI-finding process and preserves data fidelity. During typical SAXS/WAXD experiments, because beamtime is limited, coarse scanning or pre-experiments are usually conducted on the entire sample with lower resolution and reduced exposure time, and subsequent finer scanning is performed on ROIs with higher resolution. With machine learning-enabled denoising techniques, the SNR of coarse scanning can be improved, which benefits ROI finding for the finer scanning process. Also, certain information that would have been discarded through pre-experiments can be retained. In addition, multiple or repetitive experiments are needed to achieve highly reliable results. For example, to circumvent the interference caused by individual differences when analyzing micro- to nano-scale structural diversity between normal and osteoporotic bones, repetitive exposures that result in nearly millions of images are often required. With the model proposed in this work, however, only a small number of paired images (0.3 s and 1 s) need to be acquired; for subsequent experiments, only 0.3 s images are needed (as the testing dataset), saving tremendous exposure time with more reliable results while allowing more samples to be characterized within limited beamtime.

Methods

Validity of utilizing neural network models

The validity of using neural network models for SAXS/WAXD denoising can be demonstrated as follows. We collected paired SAXS/WAXD data of mouse radius corresponding to high and low noise levels. After denoising and plotting the radial integration, which exhibits Gaussian peaks, we studied the symmetry of the peaks and compared their orientations with those from the reference images to evaluate the denoising performance. Suppose the input noisy images are \(X \in \mathbb{R}^{M \times M}\) and the low-noise reference images are \(Y \in \mathbb{R}^{M \times M}\); then there exists a mapping \(Y = F(X)\), where \(F\) performs the denoising task. We thus define a family of denoising functions \(f\) and search for the \(f\) that best suits the denoising requirements of the given images, taking it as \(F\). The search can be expressed as \(F = \mathop{\arg\min}\limits_{f} \left( Y - f(X) \right)^2\). Such a dynamic model adjustment problem can naturally be solved by neural network models, and we have shown that our SEDCNN4 model effectively fulfills this need.
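The search over denoising functions can be made concrete with a toy example. The sketch below replaces the neural network with a hypothetical finite family of 1D moving-average filters and picks the member with the smallest squared error against the reference; it illustrates the arg-min formulation only and is not the training procedure used for SEDCNN.

```python
from typing import Callable, List, Sequence

Signal = List[float]


def moving_average(half_width: int) -> Callable[[Signal], Signal]:
    """Build a 1D smoothing filter; half_width=0 leaves the signal unchanged."""
    def apply(x: Signal) -> Signal:
        out = []
        for i in range(len(x)):
            window = x[max(0, i - half_width): i + half_width + 1]
            out.append(sum(window) / len(window))
        return out
    return apply


def squared_error(y: Signal, y_hat: Signal) -> float:
    """Sum of squared differences between reference and estimate."""
    return sum((a - b) ** 2 for a, b in zip(y, y_hat))


def best_denoiser(x: Signal, y: Signal,
                  candidates: Sequence[Callable[[Signal], Signal]]) -> int:
    """Index of the candidate f that minimizes the squared error between y and f(x)."""
    errors = [squared_error(y, f(x)) for f in candidates]
    return errors.index(min(errors))


# Toy pair: a flat reference and a noisy copy of it.
reference = [2.0, 2.0, 2.0, 2.0, 2.0]
noisy = [1.0, 3.0, 1.0, 3.0, 1.0]
candidates = [moving_average(h) for h in (0, 1, 2)]
best = best_denoiser(noisy, reference, candidates)
print(best)
```

A neural network performs the same kind of search over a continuous, parameterized family, typically by gradient descent on the loss rather than by enumeration.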

Experimental SAXS/WAXD data collection

The SAXS/WAXD mouse radial bone data were collected at BL10U1, the Time-resolved Ultra Small-Angle X-ray Scattering (USAXS) beamline at the Shanghai Synchrotron Radiation Facility (SSRF). The energy of the incident X-ray was 10 keV with a beam size of 10 × 10 μm². The detector used for SAXS was an Eiger 4M with a pixel size of 75 × 75 μm², and that for WAXD was a PILATUS 1M with a pixel size of 172 × 172 μm². The sample-to-detector distance was calibrated as 120 mm (WAXD) and 3100 mm (SAXS) using the standard sample silver behenate (AgBH). During the experiment, a total of 529 SAXS/WAXD images of mouse radial bone with exposure times of 0.3 s and 1 s were collected, including 468 images with an exposure time of 1 s containing clean signals. These 468 images and their corresponding images with an exposure time of 0.3 s were therefore incorporated into a dataset. We put 80% of the data into the training dataset and 20% into the testing/prediction set. The azimuth angle difference of the major peaks of this mouse radial bone data ranges from 88 to 100 degrees, all around 90 degrees. We present the results in the radial integration diagram.
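The 80%/20% split of the 468 image pairs can be sketched as follows. The random seed and the rounding direction are assumptions made for this illustration; the exact split used in the experiments is not specified here.

```python
import random

N_PAIRS = 468          # paired 0.3 s / 1 s images, from the text
TRAIN_FRACTION = 0.8   # from the text

indices = list(range(N_PAIRS))
random.Random(0).shuffle(indices)        # fixed seed: an assumption, for reproducibility

n_train = int(N_PAIRS * TRAIN_FRACTION)  # rounding down is an assumption
train_ids, test_ids = indices[:n_train], indices[n_train:]
print(len(train_ids), len(test_ids))
```

Splitting by pair index keeps each 0.3 s image together with its 1 s reference, so no reference image from the testing set leaks into training.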

The WAXD bamboo dataset was collected at the Beijing Synchrotron Radiation Facility (BSRF) 3W1A beamline to demonstrate our model’s robustness. The detector used for data collection was an iRay-NDT1717HS with a pixel size of 140 × 140 μm². In total, 56 pairs of noisy and reference images were collected with 1 s and 10 s exposure time, respectively. After inspecting the original data, we found that the SAXS/WAXD patterns usually appeared in three different orientations (A, B, and C), as shown in Supplementary Fig. 7. To unify the angular discrepancy in orientation, we combined the images corresponding to orientations A and B with two-thirds of the images corresponding to orientation C and shuffled them to form the training set. The remaining one-third of the images corresponding to orientation C were used as the testing set.

All animal studies were carried out using guidelines issued by the Biomedical Ethics Committee, Institute of High Energy Physics, Chinese Academy of Sciences, China.

Preprocessing: center covering, resizing, and intensity normalization

The SAXS and WAXD images acquired by the rectangular detectors are composed of 2069 × 2166 and 981 × 1043 pixels, respectively. Unlike common 8-bit images, which have a maximum intensity of only 255, the maximum intensity here can easily reach ten thousand, for instance, owing to the high-flux X-rays from the synchrotron. Therefore, during SAXS image acquisition, a beam stop is often used to cover the central area of the detector to protect it from saturated photon counts. Combined with subsequent masking and resizing operations, image regions with exceptionally high pixel intensity can be effectively excluded, and their interference with the ROI can be removed.

For SAXS images, considering the saturated central area would not help the processing of surrounding diffraction arcs, we completely covered the central dazzling area with a larger mask to exclude its undesirable impact on data processing, such as intensity normalization. Otherwise, useful information pertaining to diffraction arcs would be normalized to near zero which subsequently could be filtered out as noise by the network. We used masks to cover the center so that the maximum intensities are ~5–10 times larger than those of the regions containing useful information such as in diffraction arcs. To reduce training time, we also resized the images to keep only useful diffraction information.
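The effect of the central mask on intensity normalization can be seen from a small worked example. The pixel counts below are hypothetical, and division by the image maximum is assumed as the normalization; only the 5–10 times ratio comes from the text.

```python
def normalize_by_max(value: float, max_intensity: float) -> float:
    """Scale a pixel value into [0, 1] by dividing by the image maximum (assumed normalization)."""
    return value / max_intensity


# Hypothetical counts: a diffraction-arc pixel and the saturated center.
arc = 50.0
saturated_center = 10000.0

# Without a mask the arc is pushed to near zero.
unmasked = normalize_by_max(arc, saturated_center)

# With the mask, the maximum is ~5-10x the useful signal; 8x is used here.
masked = normalize_by_max(arc, 8 * arc)
print(unmasked, masked)
```

In this illustration the arc signal is 25 times larger after masking, which keeps it well separated from values that a network could treat as background noise.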

For WAXD images, as diffraction angle increases the intensity of scattering signal drops, leaving diffraction signals stronger than their scattering counterparts with higher associated SNR. Although the SNR of WAXD images is generally greater than that of SAXS images, interpreting physical information residing at those WAXD rings with low SNR is still demanding due to insufficient exposure time, thus requiring effective machine-learning methods for information recovery. During preprocessing on WAXD images, masks were used to suppress anomalous scattering signals resulting from metallic or mineral constituents within the sample, as well as from detector’s electronics.

In summary, we preprocess SAXS/WAXD images as follows. First, we cover the original images with masks. Second, using the centers of diffraction rings as the centers of resized areas, for SAXS images, we cut the areas containing useful diffraction information into square images of size 576 × 576, and 768 × 960 for WAXD images. Finally, we perform normalization of intensities of the resized images for following training and testing phases. More detailed information can be found in ‘Preprocessing SAXS/WAXD images’ section in Supplementary Information.
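The three preprocessing steps can be sketched as a small pipeline. This is an illustration under stated assumptions rather than the code used in this work: a circular mask, zero as the fill value, and min-max normalization are all assumed, and the function names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]


def mask_center(image: Image, center: Tuple[int, int], radius: float, fill: float = 0.0) -> Image:
    """Replace pixels within `radius` of `center` (row, col) by `fill`."""
    cr, cc = center
    return [[fill if (r - cr) ** 2 + (c - cc) ** 2 <= radius ** 2 else v
             for c, v in enumerate(row)]
            for r, row in enumerate(image)]


def crop_around(image: Image, center: Tuple[int, int], height: int, width: int) -> Image:
    """Cut a height x width window centered on `center`; the window must fit inside the image."""
    top = center[0] - height // 2
    left = center[1] - width // 2
    if top < 0 or left < 0 or top + height > len(image) or left + width > len(image[0]):
        raise ValueError("crop window exceeds image bounds")
    return [row[left:left + width] for row in image[top:top + height]]


def normalize(image: Image) -> Image:
    """Min-max scale intensities to [0, 1] (an assumed normalization)."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0
    return [[(v - lo) / span for v in row] for row in image]


def preprocess(image: Image, center: Tuple[int, int], mask_radius: float,
               height: int, width: int) -> Image:
    """Mask the center, crop around the ring center, then normalize."""
    masked = mask_center(image, center, mask_radius)
    cropped = crop_around(masked, center, height, width)
    return normalize(cropped)


# Toy 9 x 9 frame: a bright center (10000 counts) on a weak ramp background.
frame = [[float(r + c) for c in range(9)] for r in range(9)]
frame[4][4] = 10000.0
out = preprocess(frame, center=(4, 4), mask_radius=1, height=6, width=6)
print(len(out), len(out[0]), max(max(row) for row in out))
```

For the real data the crop sizes would be 576 × 576 for SAXS and 768 × 960 for WAXD; which of the two WAXD numbers is the height is not stated here, so the argument order would need to be checked against the data.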

Customized metrics for performance evaluation

Given a reference image, metrics such as RMSE, PSNR and SSIM are frequently used to evaluate the pixel differences between an image and its reference. These commonly used metrics can be regarded as mappings that reduce the image differences before and after denoising to single values. Although these metrics remain usable for SAXS/WAXD images, they are not the most suitable, since only pixel differences within the ROI are of interest for such images. To better reveal pixel differences within the ROI, we calculated the radial integration of the diffraction rings and defined customized metrics based on the calculated integration.

That is, to precisely portray the physical information of interest in SAXS/WAXD images, we used radial integration to quantify physics-related information such as the orientation of collagen fibers and the symmetry of diffraction arcs. Rather than judging image quality by visual inspection alone, we used MSELoss, a variant of MSE, as the loss function in the training phase. MSELoss is widely used in natural image processing to measure pixel-level differences between images. Since radial integrations are essentially manipulations of pixel-wise intensities, the validity of using MSELoss for our model should be straightforward.
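For reference, the pixel-wise quantity that a mean-squared-error loss measures can be written in a few lines of NumPy. The helper name `mse_loss` is hypothetical; it is meant to mirror what PyTorch's `torch.nn.MSELoss` computes with its default `reduction="mean"`, and is not taken from the authors' training code.

```python
import numpy as np

def mse_loss(denoised, reference):
    """Mean of squared pixel-wise differences between two images."""
    denoised = np.asarray(denoised, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    if denoised.shape != reference.shape:
        raise ValueError("images must have the same shape")
    return float(np.mean((denoised - reference) ** 2))

# Every pixel differs by 1, so the loss is 1.
loss = mse_loss([[0, 0], [0, 0]], [[1, 1], [1, 1]])
```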

Before analyzing SAXS/WAXD data of mineralized organisms, we converted the data from 2D to 1D by summing all pixels within a specified region. To determine the orientation of the observed region of interest, we chose the intensity distribution over bearing angle (hereafter called radial integration) rather than the radial intensity distribution. We formulated a pipeline to calculate the radial integration.

Let \(P_{0.3}^i\) denote the SAXS/WAXD image with 0.3 s exposure time and \(P_{0.3opt}^i\) the denoised image. The reference image which corresponds to 1 s exposure time is denoted as \(P_1^i\). The superscript i indicates the ith image. After conducting a 360-degree radial integration of an image, the mean μ1 and μ2, standard deviation σ1 and σ2, and amplitude A1 and A2 of the two Gaussian peaks corresponding to the two diffraction arcs are obtained.

In theory, under Friedel’s law the two Gaussian peaks are identical and symmetrical about the image center. To observe this, we performed radial integration on a low-SNR image and fitted the integration results with Gaussians described by mean, standard deviation and amplitude.
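The two-peak description can be made concrete with a simple model function. The sketch below is an assumption-laden illustration: the name `double_gaussian`, the non-periodic form (no wrap-around at 0°/360°), and the parameter values are all hypothetical. A model of this kind could be handed to a least-squares fitter such as `scipy.optimize.curve_fit` to extract μ, σ and A from a measured profile.

```python
import numpy as np

def double_gaussian(chi, a1, mu1, sigma1, a2, mu2, sigma2):
    """Sum of two Gaussian peaks over the bearing angle chi (degrees)."""
    chi = np.asarray(chi, dtype=np.float64)
    peak1 = a1 * np.exp(-0.5 * ((chi - mu1) / sigma1) ** 2)
    peak2 = a2 * np.exp(-0.5 * ((chi - mu2) / sigma2) ** 2)
    return peak1 + peak2

# Idealized profile: identical peaks whose means are 180 degrees apart.
chi = np.arange(0.0, 360.0, 1.0)
ideal = double_gaussian(chi, 100.0, 90.0, 8.0, 100.0, 270.0, 8.0)

# In this ideal case the first half of the profile repeats in the second half.
symmetric = bool(np.allclose(ideal[:180], ideal[180:]))
```

For noisy data the fitted parameters of the two peaks will generally differ, which is what the difference metrics on μ, σ and A are designed to capture.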

To calculate the radial integration, we specified a ring area that encloses the diffraction arcs (the area between the double blue rings in Fig. 10) and split the whole ring into n sections (the orange sector area in Fig. 10 corresponds to one section). We then summed all pixels inside each sector area to obtain the radial integration of that section. This process can be formalized as follows.

Let C denote the specified ring area, which is divided into n sections; each section corresponds to an angle χ, \(\chi = 0, 1, 2, \ldots, n\), such that the section corresponding to χ can be denoted as Cχ. Let Iχ denote the total intensity of the section Cχ, obtained by summing all pixels inside that section. We define the set consisting of Iχ as the distribution of radial integration, that is,
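A minimal NumPy sketch of this sector-wise summation is given below. It is an illustration rather than the authors' pipeline: the function name `sector_sums`, the zero-based section index (0 to n − 1), and the angle convention (measured from the positive column axis of the array) are assumptions.

```python
import numpy as np

def sector_sums(image, center, r_inner, r_outer, n_sections):
    """Sum pixel intensities in n angular sections of a ring.

    Returns an array of length n_sections holding the summed intensity
    of each section of the ring r_inner <= r < r_outer.
    """
    img = np.asarray(image, dtype=np.float64)
    rows, cols = np.indices(img.shape)
    dy = rows - center[0]
    dx = cols - center[1]
    radius = np.hypot(dx, dy)
    angle = np.degrees(np.arctan2(dy, dx)) % 360.0  # angle in [0, 360]

    in_ring = (radius >= r_inner) & (radius < r_outer)
    section = np.floor(angle[in_ring] / (360.0 / n_sections)).astype(int)
    section = np.minimum(section, n_sections - 1)  # guard against rounding at 360
    return np.bincount(section, weights=img[in_ring], minlength=n_sections)

# Two bright pixels on opposite sides of the center, as for a pair of arcs.
demo = np.zeros((101, 101))
demo[48, 75] = 100.0
demo[52, 25] = 100.0
profile = sector_sums(demo, center=(50, 50), r_inner=20, r_outer=30, n_sections=36)
```

With 36 sections of 10° each, the two pixels fall into sections that are 18 sections (180°) apart.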

To get rid of the disturbance of background intensities, we also applied ring areas both inside (the ring area between the blue and green rings in Fig. 10) and outside (the ring area between the blue and red rings in Fig. 10) the diffraction arcs. We used the same mechanism to calculate radial integration of these areas. We averaged the integration of inner and outer ring areas to be background intensity and subtracted it from its corresponding central ring integration, which can be expressed as
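The background correction described here amounts to simple array arithmetic on three angular profiles. The sketch below follows the wording literally (average of inner and outer ring profiles, subtracted from the central one). The name `subtract_background` is hypothetical, and the sketch assumes the three rings contribute comparable pixel counts per section.

```python
import numpy as np

def subtract_background(central, inner, outer):
    """Subtract the mean of the inner and outer ring profiles from the central one."""
    central = np.asarray(central, dtype=np.float64)
    inner = np.asarray(inner, dtype=np.float64)
    outer = np.asarray(outer, dtype=np.float64)
    if not (central.shape == inner.shape == outer.shape):
        raise ValueError("profiles must have the same number of sections")
    background = 0.5 * (inner + outer)
    return central - background

# Toy profiles with four sections: two peaks on a flat background of about 10.
corrected = subtract_background([10, 50, 10, 50], [8, 8, 8, 8], [12, 12, 12, 12])
```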

As for mean (μ), we define the mean difference as follows, where \(\mu _1^i(1s)\) and \(\mu _1^i(0.3s\_opt)\) denote the means of the first arcs of the ith reference image and the denoised image, respectively; and \(\mu _2^i(1s)\), \(\mu _2^i(0.3s\_opt)\) denote the means of the second arcs of the ith reference image and the denoised image, respectively.

Then we denote the orientation of the ith SAXS/WAXD image as αi using \(\mu _1^i\) and \(\mu _2^i\).

For the testing dataset, let us denote the orientation of images corresponding to 0.3 s and 1 s exposure times (\(P_{0.3}^i\) and \(P_1^i\)) as \(\alpha _{0.3}^i\) and \(\alpha _1^i\) for the ith image, and \(\Delta \alpha ^i = \left| {\alpha _1^i - \alpha _{0.3}^i} \right|\) as the difference of the orientations. We then calculated the averaged orientation difference \(\overline {\Delta \alpha _{raw}}\) between original noisy images and reference images.
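As a hedged illustration, the averaged orientation difference can be computed as below. The sketch assumes that the average is an arithmetic mean over the test images and that orientations are plain numbers in degrees with no wrap-around handling; the function name and the sample values are hypothetical.

```python
def mean_orientation_difference(alpha_ref, alpha_test):
    """Average of |alpha_ref[i] - alpha_test[i]| over all images."""
    if len(alpha_ref) != len(alpha_test) or len(alpha_ref) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    diffs = [abs(a_ref - a_test) for a_ref, a_test in zip(alpha_ref, alpha_test)]
    return sum(diffs) / len(diffs)

# Hypothetical orientations (degrees) of three reference and three noisy images.
delta_alpha_raw = mean_orientation_difference([10.0, 20.0, 30.0], [12.0, 19.0, 33.0])
```

The same function applies unchanged when the second argument holds the orientations of the denoised images instead of the noisy ones.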

Then we calculated the orientation difference between denoised images and reference images.

As for standard deviation (σ), similar to the definition for the mean (μ), we define the differences of standard deviation as

Likewise, the differences of amplitudes can be expressed as

So far, we have used both the metrics that evaluate natural image quality and the metrics that reflect the similarities between the diffraction peaks (\(\overline {\Delta \mu }\), \(\overline {\Delta \sigma }\), \(\overline {\Delta A}\) and most importantly, \(\overline {\Delta \alpha }\)). However, we still lack a universal mechanism that jointly considers these metrics. Therefore, we performed correlation analysis on these metrics and determined the correlation coefficients between \(\overline {\Delta \alpha }\) and all other metrics. Consequently, we are able to assign appropriate weights to the respective metrics in order to create a more versatile performance metric. See Fig. 11 for correlations of the metrics.

The orientation difference \(\overline {\Delta \alpha }\) is inversely correlated with PSNR and SSIM, and shows stronger correlations with RMSE and \(\overline {\Delta \mu }\) than with \(\overline {\Delta A}\) and \(\overline {\Delta \sigma }\). Because radial integration is computed directly from pixel intensities, recovering those intensities closes the intensity gap with the references, so a decrease of RMSE would correspond to a decrease of \(\overline {\Delta \alpha }\).

To formulate β using various weights, we define \(\beta _{\overline {\Delta \mu } }\) as follows to reflect the efficacy of intensity recovery in terms of averaged mean difference,

where \(\overline {\Delta \mu _{0.3opt}}\), \(\overline {\Delta \mu _{0.3}}\) denote the averaged mean difference of denoised images and original images, respectively; and \(\overline {\Delta \mu _1}\), \(\overline {\Delta \mu _{0.3}}\) denote the averaged mean difference of references and original images, respectively.

We are then able to formulate intensity recovery levels with distinct weights on the indexes \(\beta _{\overline {\Delta \alpha } }\), \(\beta _{\overline {\Delta \mu } }\), \(\beta _{\overline {\Delta A} }\), and \(\beta _{\overline {\Delta \sigma } }\) as follows, following the correlation results.

Such a weighted index provides us with a universal solution to jointly study the various metrics designed specifically for SAXS/WAXD images.

Strategy on data acquisition time reduction

When designing algorithms for beamline experiments, we have to take user experience into account. As mentioned before, SAXS/WAXD experiments typically require a large number of exposures for mapping (\(10^5\)), with each point exposed for 1 s. For the purpose of algorithm validation, we performed 1-s and 2-s exposures every four points to form \(2.5 \times 10^4\) pairs of training images to complete the network training and testing. The remaining \(7.5 \times 10^4\) images with 1-s exposure time were tested, which guarantees no impact on users if the results are suboptimal; otherwise, this approach can also provide users with higher-SNR images. For ultimate user experience improvement, these algorithms should be intuitively integrated into the experimental control and data acquisition software framework48.

In terms of reducing acquisition time, suppose the original \(10^5\) images requiring 1-s exposure time are instead exposed for 0.3 s, with every fourth point also exposed for 2 s. In this way, \(2.5 \times 10^4\) pairs of images are generated for network training and testing, and the other \(7.5 \times 10^4\) images with 0.3-s exposure time are fed into the network as testing data for the denoising task, which was shown to increase the SNR while also reducing exposure time.

To quantify how much time can be saved during data acquisition, suppose the normal exposure time for an image is t seconds, so that the total time consumption is Nt seconds when the total number of exposed points is N. To obtain higher-SNR images, one would increase the image acquisition time to Nβt seconds. Let α represent the ratio between the number of scanned points requiring high-SNR images and the total number of scanned points; then Nα points are acquired at higher SNR and N points at lower SNR. For higher-SNR images the exposure time per image is βt seconds with β > 1, and for lower-SNR images it is γt seconds with γ < 1. Following this convention, the total time consumption can be expressed as \(N\alpha \beta t + N\gamma t\). The exposure time is thus reduced to \(\frac{{N\alpha \beta t + N\gamma t}}{{N\beta t}}\) of the original, which can be simplified as

\[\frac{{N\alpha \beta t + N\gamma t}}{{N\beta t}} = \alpha + \frac{\gamma }{\beta }. \qquad (11)\]

Taking the 1-s exposure time as the baseline, our data acquisition configuration gives γ = 0.3, α = 0.25, and β = 2. Substituting these values into Eq. (11) shows that only 40% of the original exposure time is required to collect all the data needed for training and testing. Furthermore, since α, γ < 1 while β > 1, as higher-SNR images are pursued during normal experimental operations (larger β), the required fraction of exposure time asymptotically approaches α, which is 0.25. In practice, α can also be lower than 0.25, making the exposure time reduction even more significant.
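The arithmetic can be checked with a short script. The function below evaluates the ratio \(\frac{N\alpha \beta t + N\gamma t}{N\beta t}\) directly. The function name and the placeholder values of N and t are illustrative assumptions; both cancel out of the ratio.

```python
def exposure_time_ratio(alpha, beta, gamma, n_points=100_000, t=1.0):
    """Fraction of the all-high-SNR exposure time needed by the mixed scheme."""
    if beta <= 0 or n_points <= 0 or t <= 0:
        raise ValueError("beta, n_points and t must be positive")
    mixed = n_points * alpha * beta * t + n_points * gamma * t
    all_high_snr = n_points * beta * t
    return mixed / all_high_snr

# Configuration stated in the text: alpha = 0.25, beta = 2, gamma = 0.3.
ratio = exposure_time_ratio(alpha=0.25, beta=2.0, gamma=0.3)

# For a very large beta the ratio tends toward alpha.
ratio_large_beta = exposure_time_ratio(alpha=0.25, beta=1e9, gamma=0.3)
```

With the stated configuration the ratio evaluates to about 0.4, matching the 40% quoted in the text, and it approaches 0.25 as β grows.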

Data availability

We have made the data publicly available. The mouse radial bones dataset is available at https://znas.cn/AppH5/share/?nid=LIYDIMJQGEYDESRSJY2TS&code=vArdAslX30dm2kXm2j2vkA9Ej8x0aRqHqm3AUXaVUfqzYcB3TzIeQGDzuOCm1ehza8de&mode=file&display=list. The bamboo dataset (saved in npy_img) with the trained models is available at https://znas.cn/AppH5/share/?nid=LIYDIMJQGEYDESRSJY2TS&code=vArdAslX30dm2kXm2j2vkA9GFfvac5B2ghelYpD8Z7Voco8cm1oX1EZyllFITlVSIkE&mode=file&display=list. Please note that after uploading to Network-Attached Storage (NAS) platform, the data will no longer be downloadable after 30 days. If you are having trouble downloading the data, please contact dongz@ihep.ac.cn for updated download links.

Code availability

The code implementing the SEDCNN network design that can be used to replicate the results presented in this work is available at https://github.com/zzZhou8/SEDCNN-for-SAXS-and-WAXD/.

References

Hura, G. et al. Robust, high-throughput solution structural analyses by small angle X-ray scattering (SAXS). Nat. Methods 6, 606–612 (2009).

Stanić, V. et al. Local structure of human hair spatially resolved by sub-micron X-ray beam. Sci. Rep. 5, 17347 (2015).

Wang, M. et al. SAXS and WAXD study of periodical structure for polyacrylonitrile fiber during coagulation. Polym. Adv. Technol. 26, 136–141 (2014).

Pauw, B. R. Everything SAXS: small-angle scattering pattern collection and correction. J. Phys. Condens. Matter 25, 383201 (2013).

Sui, T. et al. Multiple-length-scale deformation analysis in a thermoplastic polyurethane. Nat. Commun. 6, 6583 (2015).

Jeffries, C. M. et al. Small-angle X-ray and neutron scattering. Nat. Rev. Methods Prim. 1, 70 (2021).

Rungswang, W. et al. Time-resolved SAXS/WAXD under tensile deformation: role of segmental ethylene–propylene copolymers in impact-resistant polypropylene copolymers. ACS Appl. Polym. Mater. 3, 6394–6406 (2021).

Hémonnot, C. Y. J. & Köster, S. Imaging of biological materials and cells by X-ray scattering and diffraction. ACS Nano 11, 8542–8559 (2017).

Qian, J. et al. Insights into the enhanced reversibility of graphite anode upon fast charging through Li reservoir. ACS Nano 16, 20197–20205 (2022).

Schaff, F. et al. Six-dimensional real and reciprocal space small-angle X-ray scattering tomography. Nature 527, 353–356 (2015).

Liebi, M. et al. Nanostructure surveys of macroscopic specimens by small-angle scattering tensor tomography. Nature 527, 349–352 (2015).

Jud, C. et al. X-ray dark-field tomography reveals tooth cracks. Sci. Rep. 11, 14017 (2021).

Georgiadis, M. et al. Nanostructure-specific X-ray tomography reveals myelin levels, integrity and axon orientations in mouse and human nervous tissue. Nat. Commun. 12, 2941 (2021).

Mürer, F. K. et al. Quantifying the hydroxyapatite orientation near the ossification front in a piglet femoral condyle using X-ray diffraction tensor tomography. Sci. Rep. 11, 2144 (2021).

Fratzl, P. Extra dimension for bone analysis. Nature 527, 308–309 (2015).

Kurihara, H. et al. Elongation induced β- to α-crystalline transformation and microvoid formation in isotactic polypropylene as revealed by time-resolved WAXS/SAXS. Polym. J. 51, 199–209 (2019).

Yang, L., Liu, J., Chodankar, S., Antonelli, S. & DiFabio, J. Scanning structural mapping at the life science X-ray scattering beamline. J. Synchrotron Radiat. 29, 540–548 (2022).

Ilavsky, J. et al. Development of combined microstructure and structure characterization facility for in situ and operando studies at the advanced photon source. J. Appl. Crystallogr. 51, 867–882 (2018).

Sarafimov, B. et al. OMNY—a tomography nano crYo stage. Rev. Sci. Instrum. 89, 043706 (2018).

Ye, D. et al. Preferred crystallographic orientation of cellulose in plant primary cell walls. Nat. Commun. 11, 4720 (2020).

Thiyagalingam, J. et al. Scientific machine learning benchmarks. Nat. Rev. Phys. 4, 413–420 (2022).

Zhang, K. et al. Learning deep CNN denoiser prior for image restoration. in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2808–2817 (IEEE, 2017).

Tian, C. et al. Image denoising using deep CNN with batch renormalization. Neural Netw. 121, 461–473 (2020).

Zhang, K. et al. Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 26, 3142–3155 (2016).

Dabov, K. et al. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 16, 2080–2095 (2007).

Zhang, K. et al. FFDNet: toward a fast and flexible solution for CNN-based image denoising. IEEE Trans. Image Process. 27, 4608–4622 (2018).

Guo, S. et al. Toward convolutional blind denoising of real photographs. in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 1712–1722 (IEEE, 2019).

Anwar, S. & Barnes, N. Real image denoising with feature attention. in 2019 IEEE/CVF International Conference on Computer Vision (ICCV) 3155–3164 (IEEE, 2019).

Wang, Y. et al. Practical deep raw image denoising on mobile devices. in Computer Vision – ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28 (Springer International Publishing, 2020).

Hendriksen, A. A. et al. Deep denoising for multi-dimensional synchrotron X-ray tomography without high-quality reference data. Sci. Rep. 11, 11895 (2021).

Bai, T. et al. Deep interactive denoiser (DID) for X-ray computed tomography. IEEE Trans. Med. Imaging 40, 2965–2975 (2021).

Yang, X. et al. Low-dose X-ray tomography through a deep convolutional neural network. Sci. Rep. 8, 2575 (2018).

Chen, H. et al. Low-dose CT with a residual encoder-decoder convolutional neural network. IEEE Trans. Med. Imaging 36, 2524–2535 (2017).

Shan, H. et al. 3-D convolutional encoder-decoder network for low-dose CT via transfer learning from a 2-D trained network. IEEE Trans. Med. Imaging 37, 1522–1534 (2018).

Niu, Y. et al. Geometrical-based generative adversarial network to enhance digital rock image quality. Phys. Rev. Appl. 15, 064033 (2021).

Bizhani, M., Ardakani, O. H. & Little, E. Reconstructing high fidelity digital rock images using deep convolutional neural networks. Sci. Rep. 12, 4264 (2022).

Yang, Q. et al. Low-dose CT image denoising using a generative adversarial network with Wasserstein distance and perceptual loss. IEEE Trans. Med. Imaging 37, 1348–1357 (2018).

Lee, S. Y. et al. Denoising low-intensity diffraction signals using k-space deep learning: applications to phase recovery. Phys. Rev. Res. 3, 043066 (2021).

Cha, E. et al. Low-dose sparse-view HAADF-STEM-EDX tomography of nanocrystals using unsupervised deep learning. ACS Nano 16, 10314–10326 (2022).

Kieffer, J. & Karkoulis, D. PyFAI, a versatile library for azimuthal regrouping. J. Phys. Conf. Ser. 425, 202012 (2013).

Ashiotis, G. et al. The fast azimuthal integration Python library: pyFAI. J. Appl. Crystallogr. 48, 510–519 (2015).

Hammersley, A. FIT2D: a multi-purpose data reduction, analysis and visualization program. J. Appl. Crystallogr. 49, 646–652 (2016).

Guo, J. et al. Network decoupling: from regular to depthwise separable convolutions. British Machine Vision Conference (BMVC) (2018).

Chollet, F. Xception: deep learning with depthwise separable convolutions. in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 1800–1807 (IEEE, 2017).

Paszke, A. et al. Automatic differentiation in PyTorch. NIPS 2017 Workshop on Autodiff (2017).

Dong, Y. et al. Exascale image processing for next-generation beamlines in advanced light sources. Nat. Rev. Phys. 4, 427–428 (2022).

Li, J. et al. Machine-and-data intelligence for synchrotron science. Nat. Rev. Phys. 3, 766–768 (2021).

Liu, Y. et al. Mamba: a systematic software solution for beamline experiments at HEPS. J. Synchrotron Radiat. 29, 664–669 (2022).

Acknowledgements

This work was funded by the National Science Foundation for Young Scientists of China (Grant No. 12005253), the Strategic Priority Research Program of the Chinese Academy of Sciences (XDB 37000000), and the Innovation Program of the Institute of High Energy Physics, CAS (No. E25455U210). All authors gratefully acknowledge the support from the BL10U1 and BL19U2 beamline at Shanghai Synchrotron Radiation Facility (SSRF), the I22 beamline at Diamond Light Source, and the 1W2A and 3W1A beamline at Beijing Synchrotron Radiation Facility (BSRF) for generously offering beamtime to acquire experimental data.

Author information

Contributions

Y.D. and Y.Z. conceived and supervised the whole project. Z.Z. and Z.D. collected the experimental data. Z.Z. developed and tested all the algorithms described in the paper. Z.Z., C.L., and Y.Z. wrote the manuscript with inputs from all co-authors. Z.Z. and C.L. are considered the co-first author. X.B., C.Z., and Y.H. helped to provide constructive inputs to the project. Y.D., Y.Z., J.Z., W.H., Z.D., and L.Z. helped with project design and manuscript refinement. All authors have given approval to the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, Z., Li, C., Bi, X. et al. A machine learning model for textured X-ray scattering and diffraction image denoising. npj Comput Mater 9, 58 (2023). https://doi.org/10.1038/s41524-023-01011-w

DOI: https://doi.org/10.1038/s41524-023-01011-w