Abstract

Novel X-ray methods are transforming the study of the functional dynamics of biomolecules. Key to this revolution is detection of often subtle conformational changes from diffraction data. Diffraction data contain patterns of bright spots known as reflections. To compute the electron density of a molecule, the intensity of each reflection must be estimated, and redundant observations reduced to consensus intensities. Systematic effects, however, lead to the measurement of equivalent reflections on different scales, corrupting observation of changes in electron density. Here, we present a modern Bayesian solution to this problem, which uses deep learning and variational inference to simultaneously rescale and merge reflection observations. We successfully apply this method to monochromatic and polychromatic single-crystal diffraction data, as well as serial femtosecond crystallography data. We find that this approach is applicable to the analysis of many types of diffraction experiments, while accurately and sensitively detecting subtle dynamics and anomalous scattering.

Similar content being viewed by others

Introduction

X-ray crystallography has revolutionized our understanding of the molecular basis of life by providing atomic-resolution experimental access to the structure and dynamics of macromolecules and their assemblies. In an X-ray diffraction experiment, the electrons of a molecular crystal scatter X-rays, yielding patterns of constructive interference recorded on an X-ray detector. The resulting images contain discrete spots, known as reflections, with intensities proportional to the squared amplitudes of the Fourier components (structure factors) of the crystal’s electron density. Each structure factor reports on the electron density at a specific spatial frequency and direction, indexed by triplets of integers termed Miller indices. Estimates of the amplitudes and phases of these structure factors allow one to reconstruct the 3D electron density in the crystal by Fourier synthesis.

Based on these principles, advances in X-ray diffraction now permit direct visualization of macromolecules in action1 using short X-ray pulses generated at synchrotrons2,3 and X-ray Free-Electron Lasers (XFELs)4,5. The full realization of the promise of these methods hinges on the ability to separate signals in X-ray diffraction that result from subtle structural changes from a multitude of systematic errors that can be specific to a crystal, X-ray source, detector, or sample environment6. Even under well-controlled experimental conditions, redundant reflections are expressed on the X-ray detector with different scales (Fig. 1). These scales depend non-linearly on the context of each observed reflection as illustrated in Fig. 1b-d. For example, beam properties like intensity fluctuations7 and polarization8, crystal imperfections like mosaicity9 and radiation damage10, and absorption and scattering of X-rays by material around the crystal all modulate the measured diffraction intensities in a manner, which varies throughout the experiment.

a Geometry of a conventional diffraction experiment: a crystal (shown as a hexagon) scatters an incident X-ray beam and yields a pattern of reflections (gray spots) on a detector. Three metadata of the measurements are indicated: scattering angle 2θ, crystal rotation angle, and polar angle, ϕ. b-d depict the dependence of the observed intensity distributions on these metadata for a hen egg-white lysozyme dataset. The 2-dimensional histograms show the number of counts in each of bins on a logarithmic scale. White dashed lines indicate the median intensity in each x-axis bin. Reflections with I/σI < = 0 were discarded from this analysis. b Diffraction intensities decrease with increasing scattering angle, or resolution. c Diffraction intensities vary with crystal rotation angle, a proxy for cumulative radiation dose and variations in diffracting volume. d Diffraction intensities depend on polar angle due to polarization of the X-ray source and absorption effects.

Traditionally, these artifacts are accounted for by estimating a series of scale parameters that are intended to explicitly model the physics of the sources of error6,11,12,13 (see the Supplementary Note for a description of crystallographic data reduction). The observed intensities are then corrected by each scale parameter to yield scaled intensities. To obtain consensus merged intensities, equivalent observations are then merged by weighted averaging assuming normally distributed errors. This approach thus uses a series of simplifying ‛data reduction’ steps that work well for standard diffraction experiments at synchrotron beamlines, but are less suited for a rapidly evolving array of next-generation X-ray diffraction experiments.

Although intensities are proportional to squared structure factor amplitudes, negative intensities can result from processing steps such as background subtraction and may persist through scaling and merging. French-Wilson corrections are commonly applied to ensure that inferred structure factor amplitudes are strictly positive14. Rather than being based on a physical model of diffraction, this step is based on a Bayesian argument. Namely, structure factor amplitudes are positive and can be expected to follow the so-called Wilson distribution15. In a Bayesian sense, the Wilson distribution serves as a prior probability distribution, or prior. This prior can be combined with a statistical model of the true intensity given the observed merged intensity to obtain a posterior probability distribution, or posterior, of the true merged intensity that is strictly positive.

To address the needs of new X-ray diffraction experiments, here we introduce a Bayesian model which builds on the paradigm of French and Wilson14. We implement this model in an open-source software, Careless, which performs scaling, merging, and French-Wilson corrections in a single step by directly inferring structure factor amplitudes from unscaled, unmerged intensities. In our model, the probability calculation is “forward," predicting integrated intensities from structure factor amplitudes and experimental metadata. As a consequence, the analytical tractability of the inference is no longer a concern and the model relating structure factor amplitudes to integrated intensities can be arbitrarily complex and include both explicit physics and machine learning concepts. We demonstrate that this model can accurately and sensitively extract anomalous signal from single-crystal, monochromatic diffraction at a synchrotron, time-resolved signal from single-crystal, polychromatic diffraction at a synchrotron, and anomalous signal from a serial femtosecond experiment at an XFEL. Our analyses show that this single model can implicitly account for the physical parameters of diffraction experiments with performance competitive with domain-specific, state-of-the-art analysis methods. Although we focus on X-ray diffraction, we believe the same principles can be applied to any diffraction experiment.

Results

Accurate inference of scale parameters and structure factor amplitudes from noisy observations

In a typical diffraction experiment, reflection intensities are recorded along with error estimates and metadata, like crystal orientation, location on the detector, image number, and Miller indices. As shown in Fig. 1, the observed intensities vary systematically due to physical artifacts correlated with the metadata, causing the reflections to be related to the squared structure factor amplitudes by different multiplicative scale factors, or scales. These different scales must be accounted for in the analysis of diffraction experiments. Here, we present a probabilistic forward model of X-ray diffraction, which we implemented in a software package called Careless. As illustrated in Fig. 2a, this model can be abstractly expressed as a probabilistic graphical model. Specifically, the distribution of observable intensities, Ihi, for Miller index h in image i, is predicted from structure factor amplitudes Fh and scale factors Σ, which are estimated concurrently. We do so in a Bayesian sense—we estimate the posterior distributions of both the structure factor amplitudes and scale function. We approximate the structure factor amplitudes as statistically independent across Miller indices and use the Wilson distribution as a prior on their magnitudes15.

a A probabilistic graphical model summarizes our basic statistical formalism: Careless calculates a probabilistic scale, Σ, as a function of the recorded metadata, M, and learned parameters, θ. Observed intensities, Ih,i, for Miller index h and image i are modeled as the product of the scale and the square of the structure factor amplitude Fh, that is, as \({{{\Sigma }}}_{h,i}\cdot {F}_{h}^{2}\). b The global scale function that maps the recorded metadata to the probabilistic scale, Σ, takes the form of a multilayer perceptron. c–e Inference of scales and structure factors from simulated data comprising 10 draws from the ground truth model. (c) Input parameters for the simulated data were chosen to recapitulate the non-linear scales observed in diffraction data. d Noisy observations were generated from these input parameters, which reflects the measurement errors in a diffraction experiment (ten per structure factor). Shown are simulated measured values. Error bars indicate 95% confidence intervals. e This statistical model allows for joint, rather than sequential, inference of the posterior distributions of structure factor amplitudes and scales, and therefore of implied intensity (bottom panel). The violin plots in the top panel show the posterior probability with whiskers indicating the extrema of 10,000 samples drawn from the posterior distribution of the inferred F. Shaded bands indicate 95% confidence intervals around the posterior means. The posterior mean of the scale function is indicated as a dashed line (middle panel), and the posterior mean for the reflection intensities are shown as circles (bottom panel). For this toy example, the inferred values in (e) can be compared to the known ground truth in (c).

By contrast, most contributions to scale factors vary slowly across the data set and are accounted for using a global parametrization. By default, this scale function, Σ, is implemented as a deep neural network, which takes the metadata as arguments and predicts the mean and standard deviation of the scale function for each observation (Fig. 2b). To describe measurement error, Careless supports both a normally distributed error model, and a robust Student’s t-distributed error model. The implementation of Careless is described in further detail in the methods section. The full Bayesian model will typically contain tens of thousands of unique structure factor amplitudes and a dense neural network for the scale function. Use of Markov chain Monte Carlo methods16, which sample from the posterior, would be computationally prohibitive. Instead, inference is made possible by variational inference17,18, in which the parameters of proposed posterior distributions are directly optimized.

We first illustrate the application of the Careless model using a small simulated dataset as shown in Fig. 2. As shown in Fig. 2c, we did so by generating true intensities for a toy “crystal" with 3 structure factors of different amplitudes in a mock diffraction experiment with a sharply varying scale function (similar to Fig. 1d). The observed intensities would be recorded with measurement error, yielding a small set of noisy observations (Fig. 2d). Using Careless, we can infer the posterior distributions of the structure factor amplitudes and of the scale factors, and therefore of the true intensities (Fig. 2e). The inferred parameters from Careless show a close correspondence with the true values used to simulate the noisy observations.

Robust inference of anomalous signal from monochromatic diffraction

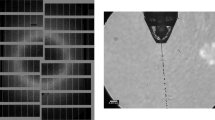

We next assessed the ability of Careless to extract small crystallographic signals from conventional monochromatic rotation series data (a detailed walk-through of each example is available at https://github.com/rs-station/careless-examples). To do so, we applied Careless to a sulfur single-wavelength anomalous diffraction (SAD) data set of lysozyme collected at ambient temperature19. It consists of a single 1,440 image rotation series collected in 0.5 degree increments at a low X-ray energy, 6.55 keV, at Advanced Photon Source beamline 24-ID-C. These data contain two sources of outliers (Fig. 3a). Most significantly, leakage from a higher energy undulator harmonic resulted in a second, smaller diffraction pattern in the center of each image. Additionally, a small number of reflections are located underneath a shadow from the beam stop mounting bracket near the edge of the detector (in the 2 to 2.2 Å range). These artifacts mean that there are many outliers at low resolution and some at high resolution in this data set. Conventional scaling and merging in Aimless or XDS12,20 is successful for these data because these approaches use outlier rejection to explicitly identify and remove spurious reflections. These data therefore represent a challenging test case for our approach which considers all integrated reflections without outlier rejection.

a A sample diffraction pattern from the lysozyme data set indicating strong spots which could not be indexed by DIALS52. Insets show sources of outliers in the data (left: a beam stop shadow; right: a secondary diffraction pattern resulting from an undulator harmonic). b Ten-fold cross-validation of merging as a function of the likelihood degrees of freedom. Lines: average values; gray bands: bootstrap 95% confidence intervals from 10 repeats with different randomly chosen test reflections. c Spearman correlation coefficient between anomalous differences estimated from half-datasets with jointly trained scale function parameters. d Density-modified experimental electron density maps produced with PHENIX Autosol21 using the sulfur substructure from a reference structure (PDBID: 7L84), contoured at 1.0 σ. Rendered with PyMOL62.

To address the outliers, we used the cross-validation implemented in Careless to select an appropriate degrees-of-freedom (d.f.) parameter of a Student’s t-distributed likelihood function for these data. Titrating the number of degrees of freedom, we found that 16 d.f. resulted in the best Spearman correlation coefficient between observations and model predictions (Fig. 3b). Accordingly, at 16 degrees of freedom, the structure factor amplitudes exhibit comparatively high half-dataset anomalous correlations (CCanomFig. 3c). By the same measure, Careless with this tuned likelihood function recovers significantly more consistent signal at intermediate and high resolution than the conventional merging program, Aimless20. A more extensive comparison of Careless’ scaling protocols with Aimless (Supplementary Fig. 5) shows that this pattern generally holds, whether quantified by Spearman’s anomalous correlation coefficient or weighted or unweighted Pearson correlation coefficients.

SAD phasing provides a further test of the quality of anomalous signal inferred by Careless. We used Autosol21 to phase our merging results, comparing the Careless output with a Student’s t-distributed likelihood with ∞ or 16 degrees of freedom to the same data merged by Aimless. In order to ensure a consistent origin, we supplied the sulfur atom substructure from the final refined model (PDBID: 7L84) during phasing. Although we provided the heavy atom substructure in the experiments reported here, we were also able to phase each of these data sets ab initio (Supplementary Table 1). It is evident from the density-modified experimental maps in Fig. 3d that the Careless output with the normally distributed error model (∞ d.f.) is of much lower quality. By contrast, both Careless with 16 d.f. and Aimless produced clearly interpretable experimental maps. We repeated these analyses with data processed entirely in XDS, another conventional program that performs all steps from indexing to merging. As shown in Supplementary Fig. 5, scaling in XDS results in CCanom similar to Aimless. XDS output, followed by ab initio phasing in AutoSol, further results in a similar Figure of Merit and somewhat worse Bayes-CC (the difference is within the estimated error). Consistent with this, the experimental electron density map from XDS (Supplementary Fig. 6a) is of slightly lower visual quality for the scenes illustrated in Fig. 3d.

Anomalous signal in real space, on cysteine and methionine S atoms, provides an additional measure of the accuracy of the estimated structure factor amplitudes. To this end, we performed limited automated refinement in Phenix using a sulfur-omit version of PDB ID 7L8419 as a starting model, and inferred peak heights from the resulting anomalous omit map. As shown in Supplementary Table 2, Aimless outperforms Careless with 16 d.f. and XDS in this regard, underscoring the subtle differences in the requirements each test imposes on the data.

As we illustrate in the online example “Boosting SAD signal with transfer learning", it is possible to further improve scaling in Careless by using a simple transfer-learning procedure in which the parameters of the scale function are learned by a non-anomalous pre-processing step. With this addition, Careless attains higher average anomalous peak height than Aimless and XDS (Supplementary Table 2), better Spearman CCanom (Supplementary Fig. 5), and equal (by figure of merit) or better (by Bayes-CC and visual appearance) phased map quality (Supplementary Table 1, Supplementary Fig. 6c). Moreover, Careless can post-process already scaled XDS data and improve anomalous peak heights (Supplementary Table 2). Relative performance may, of course, vary from dataset to dataset. In summary, Careless supports the robust recovery of high-quality experimental electron density maps and anomalous signal in the presence of physical artifacts.

Sensitive detection of time-resolved change from polychromatic diffraction data

Polychromatic (Laue) X-ray diffraction provides an attractive modality for serial and time-resolved X-ray crystallography, as many photons can be delivered in bright femto- or picosecond X-ray pulses22. In particular, most reflections in these diffraction snapshots are fully, rather than partially, observed even in still diffraction images. Laue diffraction processing remains, however, a major bottleneck3 due to its polychromatic nature: The spectrum of a Laue beam is typically peaked with a long tail toward lower energies. This so-called “pink” beam means that reflections recorded at different wavelengths are inherently on different scales. In addition, reflections, which lie on the same central ray in reciprocal space will be superposed on the detector. These “harmonic” reflections need to be deconvolved to be merged23.

Typical polychromatic data reduction software addresses these issues in a series of steps—it uses the experimental geometry to infer which photon energy contributed most strongly to each reflection observation. It then scales the reflections in a wavelength-dependent manner by inferring a wavelength normalization curve related to the spectrum of the X-ray beam24. Finally, it deconvolves the contributions to each harmonic reflection by solving a system of linear equations for each image23. The need for these steps made it difficult to scale and merge polychromatic data. Not surprisingly, there are no modern open-source merging packages supporting wavelength normalization and harmonic deconvolution.

By contrast, the forward modelling approach implemented in Careless readily extends to the treatment of Laue diffraction. First, to handle wavelength normalization, providing the wavelength of each reflection estimated from experimental geometry as metadata enables the scale function to account for the nonuniform spectrum of the beam. Harmonic deconvolution requires accounting for the fact that the intensity of a reflection is the sum over contributions from all Miller indices lying on the relevant central ray—in the forward probabilistic model implemented in Careless this is a simple extension of the monochromatic case.

To demonstrate that Careless effectively merges Laue data, we applied it to a time-resolved crystallography data set—20 images from a single crystal of photoactive yellow protein (PYP) in the dark state and 20 images each collected 2 ms after a blue laser pulse. Blue light induces a trans- to-cis isomerization in the p-coumaric acid chromophore in the PYP active site, which can be observed in time-resolved experiments25 (Fig. 4a). We first integrated the Bragg peaks using the commercial Laue data analysis software, Precognition (Renz Research). Then we merged the resulting intensities using Careless.

a When exposed to blue light, the PYP chromophore undergoes trans-to-cis isomerization. In total, 40 images from a single crystal of PYP were processed: 20 were recorded in the dark state, and 20 were recorded 2 ms after illumination with a blue laser pulse. Each image is the result of 6 accumulated X-ray pulses. The signal to noise and multiplicity of each time point is reported in Supplemental Fig. 4. b The data were randomly divided in half by image and merged with scale function parameters learned by merging the full data set. Merging with Careless gave excellent correlation between the structure factor estimates of both halves. Error bars indicate the standard deviation of the structure factor posteriors for the half data sets around the posterior means. c Half-dataset correlation coefficients as a function of resolution, including both the dark and 2 ms data. d Ground-state 2Fo − Fc map created by refining the ground-state model (PDBID: 2PHY) against the dark merging results (countoured at 1.5 σ). The phases of this refined model were used for the difference maps in (e-g). e F2ms − Fdark time-resolved difference map showing the accumulation of blue positive density around the excited state chromophore (blue model, PDBID: 3UME) and depletion of the ground state (yellow model, PDBID: 2PHY). f F2ms − Fdark weighted time-resolved difference map showing localization of the difference density to the region surrounding the chromophore. g F2ms − Fdark weighted time-resolved difference map showing large differences around the chromophore. All difference maps are contoured at ± 3.0σ.

Careless produces high-quality structure factor amplitudes for this data set, as judged by half-data set correlation coefficients (Fig. 4c and 4b). We refined a ground-state model against the ‘dark’ data starting from a reference model (PDBID: 2PHY)26, yielding excellent 2Fo − Fc electron density maps (Fig. 4d). Using the phases from this refined ground-state model, we then constructed unweighted difference maps \(|{{\Delta }}{F}_{h}|\, \approx \, (|{F}_{h}^{\,{{\mbox{2ms}}}}|-|{F}_{h}^{{{\mbox{dark}}}}|)\), \({\phi }_{h} \, \approx \, {\phi }_{h,calc}^{dark}\). As shown in Fig. 4e, these maps contain peaks around the PYP chromophore. To better visualize these maps, we applied a previously described weighting procedure27. The weighted maps (Fig. 4f, 4g) show strong difference density localized to the chromophore, consistent with published models of the dark and excited-state structures25.

Previous generations of Laue merging software required discarding reflections below a particular I/σI cutoff during scaling and merging. Otherwise, the resulting structure factor estimates are not accurate enough to be useful in the analysis of time-resolved structural changes. Here, we applied no such cutoff. Likewise, the appearance of interpretable difference electron density in the absence of a weighting scheme (Fig. 4e) is extraordinary. The ability of Careless to identify these difference signals demonstrates an unprecedented degree of accuracy and robustness to outliers. As such, Careless improves on the state of the art for the analysis of Laue experiments.

Recovering anomalous signal from a serial experiment at an X-ray Free-Electron Laser

X-ray Free-Electron Lasers (XFELs) are revolutionizing the study of light-driven proteins28,29,30,31,32, enzyme microcrystals amenable to rapid mixing33,34,35, and the determination of damage-free structures of difficult-to-crystallize targets36,37. Diffraction data from XFEL sources involve two unique challenges that result from the serial approach commonly used to outrun radiation damage4. The first challenge of serial crystallography is that each image originates from a different crystal with a different scattering mass, which diffracts one intense X-ray pulse before structural damage occurs. A completely global scaling model is therefore not appropriate. To overcome this limitation, we exploited the modular design of Careless to incorporate local parameters into the scale function. Specifically, we appended layers with per-image kernel and bias parameters to the global scale function (Fig. 5a). Effectively, these additional layers allow the model to learn a separate scale function for each image. To address the risk of overfitting posed by the additional parameters, we determined the optimal number of image layers by crossvalidation (see Supplementary Fig. 4 for determination of optimal the number of image-specific layers). A second challenge of serial crystallography is that all images are stills—there is neither time to rotate the crystal during exposure, nor the spectral bandwidth to observe the entirety of each reciprocal lattice point (Fig. 5b). For a still image, the maximal intensity for a given reflection is observed on the detector when the so-called Ewald sphere intersects the reflection centroid. The Ewald offset (EO) measures the degree to which a particular reflection observation deviates from its maximal diffraction condition (the length of the orange arrow in Fig. 5b) and can be estimated from the experimental geometry for each observation. To account for partiality, we hence provided the EO estimates as a metadata.

a By adding image-specific layers to the neural network, Careless can scale diffraction data from serial XFEL experiments. b The Ewald offset (EO), shown in red, can optionally be included during processing. S0 and S1 represent the directions of the unscattered and diffracted X-rays, respectively. c Half-dataset correlation coefficients by resolution bin for data processed in Careless, with and without Ewald offsets, and CCTBX. d Refinement R-factors from phenix.refine using Careless, with and without Ewald offsets, and CCTBX. e Careless 2Fo − Fc electron density map, from inclusion of EO, contoured at 2.0 σ (purple mesh) overlayed with thermolysin anomalous omit map contoured at 5.0 σ (orange mesh). f Peak heights of anomalous scatterers in an anomalous omit map, in σ units, for Careless output with and without Ewald offsets and conventional data processing with CCTBX.

To test if our model could leverage per-image scale parameters and EO estimates, we applied Careless to unmerged intensities from an XFEL serial crystallography experiment (CXIDB, entry 81). In this experiment38, a slurry of thermolysin microcrystals was delivered to the XFEL beam by a liquid jet. The data contain significant anomalous signal from the zinc and calcium ions in the structure. We limited analysis to a single run containing 3,160 images. We processed the integrated intensities in Careless using ten global layers and two image layers, both with and without the inclusion of the Ewald offset metadata to evaluate whether its inclusion improves the analysis.

As shown in Fig. 5, Careless successfully processes the serial XFEL data. In particular, use of the EO metadatum yielded markedly superior results as judged by the half-dataset correlation coefficient (Fig. 5c) as well as the refinement residuals (Fig. 5d). To verify that including the metadata also improved the information content of the output, we constructed anomalous omit maps after phasing the merged structure factor amplitudes by isomorphous replacement with PDBID 2TLI39. Specifically, we omitted the anomalously scattering ions in the reference structure during refinement against the Careless output. The anomalous difference peak heights at the former location of each of the anomalous scatterers, tabulated in Fig. 5f, confirm that the inclusion of Ewald offsets not only improved the accuracy of structure factor amplitudes estimates but also yielded anomalous differences on par with XFEL-specific analysis methods.

Discussion

Statistical modeling can account for diverse physical effects

We have shown that Careless successfully scales and merges X-ray diffraction data without the need for explicit physical models of X-ray scattering—comparing favorably to established algorithms tailored to specific crystallography experiments. This begs the question: What sorts of physical effects can the Careless scale function account for using a general-purpose neural network and Wilson’s prior distributions on structure factor amplitudes15? Though our scale function operates in a high-dimensional space, making it difficult to interrogate directly, we have found that the impact of excluding specific reflection metadata can be used to assess the physical effects that are implicitly accounted for by Careless.

For example, the resolution of each observed reflection is essential for proper data reduction in Careless. This suggests that, among other corrections, the model learns an isotropic scale factor akin to the per-image temperature factors included in most scaling packages6. We have also found that in some cases the merging model benefits from the inclusion of the observed Miller indices during scaling, as was the case with the lysozyme and PYP data presented here. This indicates that for some data sets Careless learns an effective anisotropic scaling model. Likewise, the inclusion of the detector positions of the reflections seems to improve merging performance. Since no prior polarization correction has been applied to the PYP and thermolysin intensities used here, this suggests that source polarization is implicitly corrected by the model. Specific crystallographic experiments can also benefit from the inclusion of domain-specific metadata. The success of merging Laue data implies that Careless can learn a function of the spectrum of the X-ray source (Fig. 4). Finally, the XFEL example shows the model is competent to infer partialities in still images (Fig. 5). In principle, the inclusion of local parameters in the form of image layers allows the model to make geometric corrections similar to the partiality models implemented in other packages40,41,42. The major difference is that our work does not require an explicit model of the line shape of Bragg peaks nor of the resolution-dependence of the peak size (mosaicity).

Robust statistics instead of outlier rejection

Occasionally, observed reflections have spurious measured intensities. These outliers can arise from various physical effects during a diffraction experiment, such as ice or salt crystals, detector readout noise, or absorption and scattering by surrounding material6. In conventional data reduction, outlier observations are frequently detected and filtered during data processing to improve the estimates of merged intensities and statistics6,13. This step is necessary because the inverse-variance weighting scheme43 is otherwise easily skewed by spurious observations.

Instead of outlier rejection, Careless employs robust statistical estimators. The processing of the native SAD data from lysozyme (Fig. 3) show its benefit. These data have significant outliers at low and high resolution (Fig. 3a), and the anomalous content is notably improved through the use of a robust error model (Fig. 3c). Importantly, doing so also improves experimental electron density maps compared to the corresponding normally distributed error models (Fig. 3d). The influence of outlier observations can be tuned using the degrees of freedom of the Student’s t-distribution during data processing. This approach to handling outlier observations highlights the flexibility of the Careless model and reduces the need for data filtering steps.

Sensitive detection of small structural signals in change-of-state experiments

Our processing of the time-resolved PYP photoisomerization Laue dataset demonstrates that Careless can accurately recover the signal from small structural changes (Fig. 4). The use of Careless in this context involved fitting a common scaling model for both the dark and 2ms datasets, while inferring two separate sets of structure factor amplitudes, one for each state. The quality of the difference maps generated from this processing (Fig. 5e-g) suggests that the common scaling model employed by Careless is effective for analyzing these change-of-state experiments. Importantly, the common scaling model may improve difference maps by ensuring that inferred structure factor amplitudes are on the same scale, producing a balanced difference map with both positive and negative features.

These features suggest that Careless will have strong applications in time-resolved experiments and related change-of-state crystallography experiments. Furthermore, although Careless currently only provides Wilson’s distributions as a prior over structure factor amplitudes, it is possible to imagine using stronger priors to further constrain the inference problem. Such priors are an active area of research, and further improve the sensitivity to small differences between conditions in time-resolved data sets (see the online example “Using a bivariate prior to exploit correlations between Friedel mates” for a prototype implementation).

Supporting next-generation diffraction experiments

In its current implementation, Careless requires that the full data set reside in memory on a single compute node or accelerator card (each presented examples can run on a consumer-grade NVIDIA 3000 series GPU in under an hour). This is a significant limitation for free-electron laser applications. The next generation of X-ray free-electron lasers will provide data acquisition rates of 103 − 106 diffraction images per second44 leading to very large data sets with images numbering in the millions or more. In this setting, it would be advantageous to have an online merging algorithm, which did not require access to the entire data set at each training step. Currently, the reliance on local parameters to handle serial crystallography data precludes this. However, we are exploring strategies to replace these local parameters with a global function. This will enable Careless to be implemented with stochastic training45, in which gradient descent is conducted on a subset of the data at each iteration. This training paradigm allows variational inference to be used for data sets too large to fit in memory. With stochastic training, variational inference will be an excellent candidate for merging large XFEL data sets on-the-fly during data acquisition.

In summary, we have described a general, extensible framework for the inference of structure factor amplitudes from integrated X-ray diffraction intensities. We find the approach to be accurate, and applicable to a wide range of X-ray diffraction modalities. Careless is modular and open-source. We encourage users to report their experiences with downstream software and to contribute extensions through github.com/rs-station/careless. Careless provides a foundation for the ongoing development and systematic application of advanced probabilistic models to the analysis of ever more powerful diffraction experiments.

Methods

Merging X-ray data by variational inference

Observed reflection intensities can be thought of as the product of diffraction in an ideal experiment and a local scale, which describes the systematic error in each reflection observation. This implies a graphical model (Supplementary Fig. 1), relating the observed intensities, Ih,i to two sets of latent variables, the reflection scales, Σh,i, and the structure factor amplitudes, Fh. The corresponding joint distribution factorizes as

In this setting, it is most desirable to estimate the posterior,

The exact posterior is generally intractable for such problems. So, we posit an approximate posterior q taken from a parametric family of distributions. This is the so-called variational distribution or surrogate posterior. We then use optimization to learn parameters of q such that it approximates the desired posterior. One way to accomplish this is to minimize the Kullback-Leibler divergence between q and the posterior.

Note that the expectation, \({{\mathbb{E}}}_{q}\left[\log p(I)\right]\) does not depend on the parameters of q. It is therefore a constant. Disregarding this constant term,

and negating,

leads to the optimization objective of variational inference, which is called the Evidence Lower BOund (ELBO)17,18. Maximizing this quantity with respect to the variational distribution, q, recovers an approximation to the posterior distribution. After re-arranging the terms,

it is clear that the ELBO can be thought of as the sum of expected log-likelihood of the data and the negative Kullback-Leibler divergence between the surrogate posterior and the the prior. The expected log-likelihood term encourages the model to faithfully represent the data. The KL divergence term acts as a penalty, which discourages the surrogate posterior from wandering too far from the prior distribution. From the frequentist perspective, this is similar to a regularized maximum-likelihood estimator. This general form of the ELBO applies equally to any parameterization of the graphical model in Supplementary Fig. 1.

The parameterization used in this work slightly simplifies Equation (10). The graphical model in Supplementary Fig. 1, implies that the prior distribution p(F, Σ) factorizes p(F)p(Σ). Therefore, it is convenient to assume the surrogate posterior,

consists of statistically independent distributions for F and Σ. This is a modeling choice, and it need not be the case in other variational merging models with this graph. Factorizing q leads to an ELBO,

with separate Kullback-Leibler divergences for F and Σ. We can now begin to consider priors for each of these surrogate posteriors.

An uninformative prior on scales

It is difficult to reason about the appropriate prior distribution for scales, p(Σ). In all likelihood, this prior depends intimately on the details of the experiment. It will vary by sample and apparatus. In this work we choose an uninformative prior, Σ ~ q(Σ). Thereby, the second divergence term in the ELBO becomes zero, and whatever parameters define qΣ are simply allowed to optimize as dictated by the likelihood term. The objective used in this work is therefore

Posterior structure factors

In this work, the surrogate posteriors of structure factors are independently parameterized by truncated normal distributions.

with location and scale parameters \({\mu }_{{F}_{h}}\) and \({\sigma }_{{F}_{h}}\) and support

Both the location and scale parameters are constrained to be positive. This constraint is implemented with the softplus function, \({{{{{{{\rm{softplus}}}}}}}}(x)=\log (\exp (x)+1)\) in Careless version 0.2.0 and with the \(\exp\) in subsequent versions including 0.2.3 used for the additional lysozyme analyses in the supplemental information.

Posterior scales

As noted in section ‘An Uninformative Prior on Scales’, we choose not to impose a structured prior on reflection scales. Rather, we assert that the scale of a reflection should be computable from the geometric metadata recorded about each reflection during integration. Therefore, our model infers a function, which ingests metadata and outputs scale factors (Fig. 2a).

By default, we parameterize this function as a deep neural network with parameters θ. In particular, we use a multilayer perceptron with leaky rectified linear units (ReLU) as the activation. The parameters, correspond to the kernels and biases of each layer. The kernels are initialized to the identity matrix and biases to zeros. The number of hidden units in each layer takes the dimensionality of the metadata by default, but this is user-configurable. We set the default depth of the neural network to twenty layers which we find offers a reasonable balance of performance and stability. The model has a final, linear layer with 2 units. The output of the last layer is interpreted as the mean and standard deviation of the scale distribution with the standard deviation constrained to be positive in the same manner as the structure factor posteriors' parameters .

The correct scale function is the one which allows the model to recapitulate the data while letting the structure factors follow the desired prior distribution. Provided rich enough metadata about each reflection observation, variational inference will recover such a function.

One must use caution when selecting metadata. If information about the reflection intensities is provided to the scale function, the scale function may bypass the structure factors to directly minimize the expected log-likelihood. This leads to poor structure factor estimates. We recommend against including data such as the reflection uncertainties in the metadata, as they are strongly correlated with the intensities.

Wilson’s Priors

Wilson’s priors,

express the expected distributions of structure factors under the assumption that atoms are uniformly distributed within the unit cell15. The probability distribution over the structure factor amplitude Fh with Miller index h is expressed in terms of the multiplicity of the reflection ϵh. The multiplicity, a feature of the crystal’s space group, is a constant which can be determined for each Miller index. It corresponds to the contribution to the relative intensity of each reflection solely due to crystal symmetry. The Wilson prior has separate parameterizations for centric and acentric reflections. This form of Wilson’s priors differs from the one employed in the French Wilson algorithm14 in that it is independent of the scale, Σ. Because of this choice, the scale function can be inferred in parallel with the structure factor amplitudes. However, it implies that the structure factors output by Careless are on the same scale across resolution bins. This is an important consideration for downstream processing. Careless output may, for some applications, need to be rescaled to meet the expectations of crystallographic data analysis packages. An input flag is available to apply a global Wilson B-factor.

Likelihood functions

The first term in the ELBO is an expected log-likelihood. In this work, we present two parameterizations of this term: a normal distribution

which is suitable for data with few outliers, as well as a robust t-distribution

which adds an additional hyperparameter. The degrees of freedom, ν, titrates the robustness of the model toward outliers. In the limit ν → ∞, the t-distribution approaches a normal distribution.

Model training

In this work, we use the reparameterization trick to estimate gradients of the ELBO with respect to the parameters of the variational distributions, q. First applied in the Variational Autoencoder46, reparameterization is a common tool to estimate gradients of probabilistic programs. In our implementation, the ELBO is approximated by random samples from the surrogate distributions,

where Fh,s and Σh,i,s denote reparameterized samples from the surrogate posteriors, and the number of Monte Carlo samples, S is a hyperparameter. By default a single sample is used (S = 1). For training, we use the Adam optimizer47 with hyperparameters α = 0.001, β1 = 0.9, and β2 = 0.99.

Cross-validation

Careless provides two modes of cross-validation. In the first paradigm, the model is first trained on the full data set yielding structure factor estimates and neural network weights. Next, the data are partitioned randomly into halves by image. Using the neural network weights learned from the full data set, each half is merged separately by optimizing the structure factors. During this process the neural network weights remain fixed. The resulting pair of structure factor estimates may be correlated to produce a measure similar to the canonical CC1/2 widely used in crystallography. This mode of cross-validation does not necessarily inform the user about the degree of overfitting. Rather, the CC1/2 value is more indicative of the data consistency.

The second type of cross-validation supported by Careless is intended to explicitly test for overfitting in the scale function. In this mode, a fraction of the data is held out during training time. After training, the model is applied to these data in order to predict intensities for the held-out fraction. The correlation between the observed intensities and the predictions provides an estimate for how well the model generalizes. The choice of summary statistic is up to the user. However, we recommend Spearman’s rank correlation coefficient as a robust alternative to Pearson’s. In the following section, we address the issue of how to recover intensity predictions and moments from our model.

Predictions

Model predictions are essential to quantify model overfitting by cross-validation. The predicted reflection intensities implied by our model are the product of random variables,

The variational distributions inferred by Careless imply a probability distribution for the intensity of each reflection. We do not have an analytical expression for this distribution but can compute the expected value,

taking advantage of the fact that in the Careless formalism Σh,i and Fh are assumed to be statistically independent in the posterior distribution. The first term in the product,

is computed by the scale function, fθ, from the metadata vector, Mh,i,

Note that fθ returns a two-element vector, the first of which is the expected value of Σh,i, and the second is the standard deviation. The second term, is calculated from the moments of the truncated normal surrogate posterior, \({q}_{{F}_{h}}\). These moments have analytical expressions which are implemented in many statistical libraries, including TensorFlow Probability48 which we use in this work.

It is also possible to compute the second moment of the predictions,

where the fourth non-central moment, \(\langle {F}_{h}^{4}\rangle\) of \({q}_{{F}_{h}}\) has an analytical expression which is implemented in SciPy49.

Harmonic deconvolution for Laue diffraction

To implement harmonic deconvolution, the Careless ELBO approximator needs to be modified to update the center of the likelihood distribution. By summing over each contributor on the central ray, the new ELBO approximation becomes

which is readily optimized by same protocol demonstrated in Supplementary Fig. 3. In the code base, harmonics are handled by having a separate class of likelihood objects for Laue experiments. In practice, one could use the polychromatic likelihood to merge monochromatic data with no ill effect on the quality of the results. In that sense, this is the more general version of the ELBO for diffraction data. However, doing so would incur a performance cost given the underlying implementation, which is why we maintain separate likelihoods for mono and polychromatic experiments. Regardless, the core merging class inside Careless is competent to fit both sorts of data.

Data collection and analysis

Data collection for hen egg white lysozyme was described in reference19. Data for photoactive yellow protein were collected as described in reference50. Collection of thermolysin data was described in38. Scaling and merging was performed using Careless version 0.2.0 or version 0.2.3 in the case of the “Image Layer" and “Transfer" protocols presented in Supplementary Tables 1 and 2 and Supplementary Figs. 5 and 6. DIALS version 3.1.4 was used to index and integrate observed reflections for hen egg white lysozyme. Aimless version 0.7.4 was used to merge the integrated intensities for hen egg white lysozyme data. For the XDS analysis, the Jan 10, 2022 version was used along with the 2021/1 version of SHELX. Precognition version 5.2.2 was used to index and integrate the polychromatic PYP data. cctbx.xfel version 2021.11.dev3+4.g05389c3054 was used to scale and merge thermolysin XFEL data; the merging parameters are available in the Zenodo deposition. All model refinement and phasing was performed in PHENIX version 1.18.2. The refinement outputs and log files, including parameter settings are deposited in Zenodo. The anomalous peak heights presented in Fig. 5 and Supplementary Table 2 report anomalous signal based on anomalous difference maps obtained after refinement of an S-omit (Supplementary Table 2) or Zn, Ca-omit refined model of each protein, and were quantified using the “Difference map peaks...” function Coot version 0.9.6. For Fig. 3, we used five cycles of isotropic atomic temperature factors and rigid body refinement. For thermolysin, Fig. 5, atomic coordinates were also refined as they improved refinement residuals. One could, instead, work with initial omit map peak heights reported during de novo phasing. In our experience, these anomalous peak heights depend strongly on substructure search parameters, and are therefore less suitable for comparison of scaling methods.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The refined lysozyme structure19 is deposited in the Protein Data Bank under PDBID 7L84 [https://www.rcsb.org/structure/7L84]. The raw lysozyme diffraction images are available through the SBGrid Data Bank under accession code 816 [https://data.sbgrid.org/dataset/816/]. The ground state photoactive yellow protein structure26 is deposited in the Protein Data Bank under 2PHY [https://www.rcsb.org/structure/2PHY]. The excited state photoactive yellow protein structure60 is deposited in the Protein Data Bank under 3UME [https://www.rcsb.org/structure/3UME]. The thermolysin structure39 is deposited in the Protein Data Bank under 2TLI [https://www.rcsb.org/structure/2TLI]. The integrated thermolysin diffraction data61 are available from the Coherent X-ray Imaging Data Bank under accession code 81 [http://www.cxidb.org/id-81.html]. Some data processing statistics are provided in Supplementary Tables 3–5 as well. The three data sets discussed in this manuscript have been adapted into examples available through the careless-examples GitHub page [https://github.com/rs-station/careless-examples] including the unmerged diffraction data and relevant merging scripts. The results presented here including all merged structure factors and crossvalidation data along with the intermediate analysis used to generate all figures and tables had been deposited in Zenodo under accession code 10.5281/zenodo.6408749 [https://doi.org/10.5281/zenodo.6408749].

Code availability

The source code used to generate all figures and tables is freely available from Zenodo [https://doi.org/10.5281/zenodo.6408749]. The algorithm described, Careless, is implemented in a python package which is available from our GitHub page (https://github.com/rs-station/careless). It can be installed on Mac OS or Linux with the popular python package manager, pip.

References

Šrajer, V. & Schmidt, M. Watching proteins function with time-resolved x-ray crystallography. J. Phys. D: Appl. Phys. 50, 373001 (2017).

Graber, T. et al. BioCARS: a synchrotron resource for time-resolved X-ray science. J. Synchrotron Radiation 18, 658–670 (2011).

Moffat, K. Laue diffraction and time-resolved crystallography: a personal history. Philos. Transac. Royal Soc. A: Math. Phys. Eng. Sci. 377, 20180243 (2019).

Chapman, H. N. et al. Femtosecond X-ray protein nanocrystallography. Nature 470, 73–77 (2011).

Pandey, S. et al. Time-resolved serial femtosecond crystallography at the European XFEL. Nat. Meth. 17, 73–78 (2020).

Evans, P. Scaling and assessment of data quality. Acta Crystallogr. Section D 62, 72–82 (2006).

Bonifacio, R., De Salvo, L., Pierini, P., Piovella, N. & Pellegrini, C. Spectrum, temporal structure, and fluctuations in a high-gain free-electron laser starting from noise. Phys. Rev. Lett. 73, 70–73 (1994).

Azároff, L. V. Polarization correction for crystal-monochromatized X-radiation. Acta Crystallogr. 8, 701–704 (1955).

Nave, C. A Description of Imperfections in Protein Crystals. Acta Crystallogr. Section D 54, 848–853 (1998).

Garman, E. F. Radiation damage in macromolecular crystallography: what is it and why should we care? Acta Crystallogr. Section D 66, 339–351 (2010).

Otwinowski, Z., Borek, D., Majewski, W. & Minor, W. Multiparametric scaling of diffraction intensities. Acta Crystallogr. Section A 59, 228–234 (2003).

Kabsch, W. Integration, scaling, space-group assignment and post-refinement. Acta Crystallogr. Section D 66, 133–144 (2010).

Beilsten-Edmands, J. et al. Scaling diffraction data in the DIALS software package: algorithms and new approaches for multi-crystal scaling. Acta Crystallogr. Section D 76, 385–399 (2020).

French, S. & Wilson, K. On the treatment of negative intensity observations. Acta Crystallogr. Section A 34, 517–525 (1978).

Wilson, A. J. C. The probability distribution of X-ray intensities. Acta Crystallogr. 2, 318–321 (1949).

Geyer, C. J. Practical Markov Chain Monte Carlo. Stat. Sci. 7, 473 – 483 (1992).

Jordan, M. I., Ghahramani, Z., Jaakkola, T. S. & Saul, L. K. An Introduction to Variational Methods for Graphical Models. Mach. Learn. 37, 183–233 (1999).

Blei, D. M., Kucukelbir, A. & McAuliffe, J. D. Variational Inference: A Review for Statisticians. J. Am. Stat. Association 112, 859–877 (2017).

Greisman, J. B. et al. Native SAD phasing at room temperature. Acta Crystallogr. Section D 78, 986–996 (2022).

Evans, P. R. & Murshudov, G. N. How good are my data and what is the resolution? Acta Crystallogr. Section D: Biol. Crystallogr. 69, 1204–1214 (2013).

Terwilliger, T. C. et al. Decision-making in structure solution using Bayesian estimates of map quality: the PHENIX AutoSol wizard. Acta Crystallogr. Section D: Biol. Crystallogr. 65, 582–601 (2009).

Meents, A. et al. Pink-beam serial crystallography. Nat. Commun. 8, 1281 (2017).

Ren, Z. & Moffat, K. Deconvolution of energy overlaps in Laue diffraction. J.; Appl. Crystallogr. 28, 482–494 (1995).

Ren, Z. et al. Laue crystallography: coming of age. J. Synchrot. Radiation 6, 891–917 (1999).

Genick, U. K. et al. Structure of a protein photocycle intermediate by millisecond time-resolved crystallography. Science 275, 1471–1475 (1997).

Borgstahl, G. E. O., Williams, D. R. & Getzoff, E. D. 1.4 Å structure of photoactive yellow protein, a cytosolic photoreceptor: Unusual fold, active site, and chromophore. Biochemistry 34, 6278–6287 (1995).

Hekstra, D. R. et al. Electric-field-stimulated protein mechanics. Nature 540, 400–405 (2016).

Tenboer, J. et al. Time-resolved serial crystallography captures high-resolution intermediates of photoactive yellow protein. Science 346, 1242–1246 (2014).

Pande, K. et al. Femtosecond structural dynamics drives the trans/cis isomerization in photoactive yellow protein. Science 352, 725–729 (2016).

Nango, E. et al. A three-dimensional movie of structural changes in bacteriorhodopsin. Science 354, 1552–1557 (2016).

Suga, M. et al. Light-induced structural changes and the site of O=O bond formation in PSII caught by XFEL. Nature 543, 131–135 (2017).

Shimada, A. et al. A nanosecond time-resolved XFEL analysis of structural changes associated with co release from cytochrome c oxidase. Sci. Adv. 3, e1603042–e1603042 (2017).

Stagno, J. R. et al. Structures of riboswitch RNA reaction states by mix-and-inject XFEL serial crystallography. Nature 541, 242–246 (2017).

Olmos, J. L. et al. Enzyme intermediates captured “on the fly”by mix-and-inject serial crystallography. BMC Biol. 16, 59 (2018).

Dasgupta, M. et al. Mix-and-inject XFEL crystallography reveals gated conformational dynamics during enzyme catalysis. Proc. Natl. Acad. Sci. 116, 25634–25640 (2019).

Kang, Y. et al. Crystal structure of rhodopsin bound to arrestin by femtosecond X-ray laser. Nature 523, 561–567 (2015).

Batyuk, A. et al. Native phasing of x-ray free-electron laser data for a G protein-coupled receptor. Sci. Adv. 2, e1600292–e1600292 (2016).

Kern, J. et al. Taking snapshots of photosynthetic water oxidation using femtosecond X-ray diffraction and spectroscopy. Nat. Commun. 5, 4371 (2014).

English, A. C., Done, S. H., Caves, L. S. D., Groom, C. R. & Hubbard, R. E. Locating interaction sites on proteins: The crystal structure of thermolysin soaked in 2% to 100% isopropanol. Proteins: Struct. Func. Bioinform. 37, 628–640 (1999).

Uervirojnangkoorn, M. et al. Enabling X-ray free electron laser crystallography for challenging biological systems from a limited number of crystals. eLife 4, e05421 (2015).

Sauter, N. K. XFEL diffraction: developing processing methods to optimize data quality. J. Synchrot.Radiation 22, 239–248 (2015).

White, T. A. Post-refinement method for snapshot serial crystallography. Philos. Transactions Royal Soc. B: Biol. Sci. 369, 20130330 (2014).

Hamilton, W. C., Rollett, J. S. & Sparks, R. A. On the relative scaling of X-ray photographs. Acta Crystallogr. 18, 129–130 (1965).

Wiedorn, M. O. et al. Megahertz serial crystallography. Nat. Commun. 9, 4025 (2018).

Hoffman, M. D., Blei, D. M., Wang, C. & Paisley, J. Stochastic variational inference. J. Mach. Learn. Res. 14, 1303–1347 (2013).

Kingma, D. P. & Welling, M. Auto-Encoding Variational Bayes. arXiv:1312.6114 [cs, stat] (2014). http://arxiv.org/abs/1312.6114.

Kingma, D. P. & Ba, J. Adam: A Method for Stochastic Optimization. arXiv:1412.6980 [cs] (2017). http://arxiv.org/abs/1412.6980.

Dillon, J. V. et al. TensorFlow Distributions. arXiv:1711.10604 [cs, stat] (2017). http://arxiv.org/abs/1711.10604.

Virtanen, P. et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Meth. 17, 261–272 (2020).

Schmidt, M. et al. Protein energy landscapes determined by five-dimensional crystallography. Acta Crystallogr. Section D Biol. Crystallogr. 69, 2534–2542 (2013).

Foreman-Mackey, D. et al. daft-dev/daft: daft v0.1.2. https://zenodo.org/record/4615289. https://doi.org/10.5281/zenodo.4615289.

Winter, G. et al. DIALS: implementation and evaluation of a new integration package. Acta Crystallogr. Section D: Struct. Biol. 74, 85–97 (2018).

Wojdyr, M. Gemmi: A library for structural biology. J. Open Source Software 7, 4200 (2022).

Hunter, J. D. Matplotlib: A 2d graphics environment. Computing Sci. Eng. 9, 90–95 (2007).

Harris, C. R. et al. Array programming with NumPy. Nature 585, 357–362 (2020).

Team, T. P. D. pandas-dev/pandas: Pandas 1.2.1 [software] (2021). https://doi.org/10.5281/zenodo.3509134.

Greisman, J. B., Dalton, K. M. & Hekstra, D. R. reciprocalspaceship: a Python library for crystallographic data analysis. J. Appl. Crystallogr. 54, 1521–1529 (2021).

Waskom, M. L. seaborn: statistical data visualization. J. Open Source Software 6, 3021 (2021).

Abadi, M. et al. TensorFlow: Large-scale machine learning on heterogeneous systems (2015). https://www.tensorflow.org/. Software available from tensorflow.org.

Tripathi, S., Šrajer, V., Purwar, N., Henning, R. & Schmidt, M. pH dependence of the photoactive yellow protein photocycle investigated by time-resolved crystallography. Biophys. J. 102, 325–332 (2012).

Brewster, A. S. et al. Improving signal strength in serial crystallography with DIALS geometry refinement. Acta Crystallogr. Section D: Struct. Biol. 74, 877–894 (2018).

Schrödinger, LLC. The PyMOL molecular graphics system, version 2.5 (2022).

Acknowledgements

We acknowledge the open source software projects used in this work including Daft51, DIALS52, GEMMI53, Matplotlib54, Numpy55, Pandas56, reciprocalspaceship57, SciPy49, Seaborn58, TensorFlow59, TensorFlow Probability48. We thank Vukica Šrajer and Marius Schmidt for providing Laue time-resolved data, Nick Sauter and Aaron Brewster for helpful discussions, and T.J. Lane and Takanori Nakane for comments on the manuscript. We thank Derek Mendez for advice on CCTBX. We thank the staff at the Northeastern Collaborative Access Team (NE-CAT), beamline 24-ID-C of the Advanced Photon Source for assistance with room-temperature crystallography, in particular Igor Kourinov. NE-CAT beamlines are supported by the National Institute of General Medical Sciences, NIH (P30 GM124165), using resources of the Advanced Photon Source, a U.S. Department of Energy (DOE) Office of Science User Facility operated for the DOE Office of Science by Argonne National Laboratory under Contract No. DE-AC02-06CH11357. This work was supported by the Searle Scholarship Program (SSP-2018-3240), a Merck Fellowship (338034) from the George W. Merck Fund of the New York Community Trust, and the NIH457 Director’s New Innovator Award (DP2-GM141000) to D.R.H. J.B.G. was supported by the National Science Foundation Graduate Research Fellowship under Grant No. DGE1745303. K.M.D. holds a Career Award at the Scientific Interface from the Burroughs Wellcome Fund.

Author information

Authors and Affiliations

Contributions

K.M.D. and D.R.H. conceived the project. K.M.D. conceived and implemented the inference algorithm. J.B.G. contributed to software development. K.M.D. drafted the manuscript. All of the authors contributed to analysis and edited the paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dalton, K.M., Greisman, J.B. & Hekstra, D.R. A unifying Bayesian framework for merging X-ray diffraction data. Nat Commun 13, 7764 (2022). https://doi.org/10.1038/s41467-022-35280-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-022-35280-8

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.