Abstract

Accurate information processing is crucial both in technology and in nature. To achieve it, any information processing system needs an initial supply of resources away from thermal equilibrium. Here we establish a fundamental limit on the accuracy achievable with a given amount of nonequilibrium resources. The limit applies to arbitrary information processing tasks and arbitrary information processing systems subject to the laws of quantum mechanics. It is easily computable and is expressed in terms of an entropic quantity, which we name the reverse entropy, associated to a time reversal of the information processing task under consideration. The limit is achievable for all deterministic classical computations and for all their quantum extensions. As an application, we establish the optimal tradeoff between nonequilibrium and accuracy for the fundamental tasks of storing, transmitting, cloning, and erasing information. Our results set a target for the design of new devices approaching the ultimate efficiency limit, and provide a framework for demonstrating thermodynamical advantages of quantum devices over their classical counterparts.

Similar content being viewed by others

Introduction

Many processes in nature depend on accurate processing of information. For example, the development of complex organisms relies on the accurate replication of the information contained in their DNA, which takes place with an error rate estimated to be less than one basis per billion1.

At the fundamental level, information is stored into patterns that stand out from the thermal fluctuations of the surrounding environment2,3. In order to achieve deviations from thermal equilibrium, any information-processing machine needs an initial supply of systems in a non-thermal state4,5. For example, an ideal copy machine for classical data requires at least a clean bit for every bit it copies6,7,8. For a general information-processing task, a fundamental question is: what is the minimum amount of nonequilibrium needed to achieve a target level of accuracy? This question is especially prominent at the quantum scale, where many tasks cannot be achieved perfectly even in principle, as illustrated by the no-cloning theorem9,10.

In recent years, there has been a growing interest in the interplay between quantum information and thermodynamics11,12,13, motivated both by fundamental questions14,15,16,17,18 and by the experimental realisation of new quantum devices19,20,21. Research in this area led to the development of resource-theoretic frameworks that can be used to study thermodynamics beyond the macroscopic limit22,23,24,25,26,27,28,29,30. These frameworks have been applied to characterise thermodynamically allowed state transitions, to evaluate the work cost of logical operations31,32 and to study information erasure and work extraction in the quantum regime33,34,35. From a different perspective, relations between accuracy and entropy production have been investigated in the field of stochastic thermodynamics36,37,38,39,40, referring to specific physical models such as classical Markovian systems in nonequilibrium steady states.

Here, we establish a fundamental tradeoff between accuracy and nonequilibrium, valid at the quantum scale and applicable to arbitrary information-processing tasks. The main result is a limit on the accuracy, expressed in terms of an entropic quantity, which we call the reverse entropy, associated with a time reversal of the information-processing task under consideration. The limit is attainable in a broad class of tasks, including all deterministic classical computations and all quantum extensions thereof. For the task of erasing quantum information, our limit provides, as a byproduct, the ultimate accuracy achievable with a given amount of work. For the tasks of storage, transmission, and cloning of quantum information, our results reveal a thermodynamic advantage of quantum setups over all classical setups that measure the input and generate their output based only on the measurement outcomes. In the cases of storage and transmission, we show that quantum machines can break the ultimate classical limit on the amount of work required to achieve a desired level of accuracy. This result enables the demonstration of work-efficient quantum memories and quantum communication systems outperforming all possible classical setups.

Results

The nonequilibrium cost of accuracy

At the most basic level, the goal of information processing is to set up a desired relation between an input and an output. For example, a deterministic classical computation amounts to transforming a bit string x into another bit string f(x), where f is a given function. In the quantum domain, information-processing tasks are often associated with ideal state transformations \({\rho }_{x}\mapsto \rho_{x}^{\prime}\), in which an input state described by a density operator ρx has to be converted into a target output state described by another density operator \(\rho_x^{\prime}\), where x is a parameter in some given set X.

Since every realistic machine is subject to imperfections, the physical realisations of an ideal information-processing task can have varying levels of accuracy. Operationally, the accuracy can be quantified by performing a test on the output of the machine and by assigning a score to the outcomes of the measurement. The resulting measure of accuracy is given by the expectation value of a suitable observable Ox, used to assess the closeness of the output to the target state \(\rho_x^{\prime}\). In the worst case over all possible inputs, the accuracy achieved in a given task \({{{{{{{\mathcal{T}}}}}}}}\) has the expression \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{\mathcal{T}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}})=\mathop{\min }_{x}{{{{{{{\rm{Tr}}}}}}}}[{O}_{x}{{{{{{{\mathcal{M}}}}}}}}({\rho }_{x})]\), where \({{{{{{{\mathcal{M}}}}}}}}\) is the quantum channel (completely positive trace-preserving map) describing the action of the machine. Here, the dependence of the input states ρx and output observables Ox on the parameter x is fully general, and includes in particular cases where multiple observables are tested for the same input state. The range of values for the function \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{\mathcal{T}}}}}}}}}\) depends on the choice of observables Ox: for example, if all the observables Ox are projectors, the range of \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{\mathcal{T}}}}}}}}}\) will be included in the interval [0, 1].

Accurate information processing generally requires an initial supply of systems away from equilibrium. The amount of nonequilibrium required to implement a given task can be rigorously quantified in a resource-theoretic framework where Gibbs states are regarded as freely available, and the only operations that can be performed free of cost are those that transform Gibbs states into Gibbs states28,32. These operations, known as Gibbs preserving, are the largest class of processes that maintain the condition of thermal equilibrium. The initial nonequilibrium resources can be represented in a canonical form by introducing an information battery31,32, consisting of an array of qubits with degenerate energy levels. The battery starts off with some qubits in a pure state (hereafter called the clean qubits), while all the remaining qubits are in the maximally mixed state. To implement the desired information-processing task, the machine will operate jointly on the input system and on the information battery, as illustrated in Fig. 1.

A source generates a set of input states for an information-processing machine. The machine uses an information battery (a supply of qubits initialised in a fixed pure state) and thermal fluctuations (a reservoir in the Gibbs state) to transform the input state ρx into an approximation of the ideal target states \(\rho_x^{\prime}\). Finally, the similarity between the output and the target states is assessed by a measurement. The number of pure qubits consumed by the machine is the nonequilibrium cost that needs to be paid in order to achieve the desired level of accuracy.

The number of clean qubits required by a machine is an important measure of efficiency, hereafter called the nonequilibrium cost. For a given quantum channel \({{{{{{{\mathcal{M}}}}}}}}\), the minimum nonequilibrium cost of any machine implementing channel \({{{{{{{\mathcal{M}}}}}}}}\) (or some approximation thereof) has been evaluated in refs. 31, 32. Many information-processing tasks, however, are not uniquely associated with a specific quantum channel: for example, most state transitions \(\rho \mapsto \rho^{\prime}\) can be implemented by infinitely many different quantum channels, which generally have different costs. When a task can be implemented perfectly by more than one quantum channel, the existing results do not identify, in general, the minimum nonequilibrium cost that has to be paid for a desired level of accuracy. Furthermore, there also exist information-processing tasks, such as quantum cloning9,10, that cannot be perfectly achieved by any quantum channel. In these scenarios, it is important to establish a direct relation between the accuracy achieved in the given task and the minimum cost that has to be paid for that level of accuracy. Such a relation would provide a direct bridge between thermodynamics and abstract information processing, establishing a fundamental efficiency limit valid for all machines allowed by quantum mechanics.

In this paper, we build concepts and methods for determining the nonequilibrium cost of accuracy in a way that depends only on the information-processing task under consideration, and not on a specific quantum channel. Let us denote by \(c({{{{{{{\mathcal{M}}}}}}}},{{{\Pi }}}_{A})\) the nonequilibrium cost required for implementing a given channel \({{{{{{{\mathcal{M}}}}}}}}\) on input states in the subspace specified by a projector ΠA. We then define the nonequilibrium cost for achieving accuracy F in a task \({{{{{{{\mathcal{T}}}}}}}}\) as \({c}_{{{{{{{{\mathcal{T}}}}}}}}}(F):=\min \{c({{{{{{{\mathcal{M}}}}}}}},{{{\Pi }}}_{A})\,|\,{{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{\mathcal{T}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}})\ge F\}\). Note that the the specification of the input subspace is included in the task \({{{{{{{\mathcal{T}}}}}}}}\). In the following we focus on tasks where the input subspace is invariant under time evolution, namely [ΠA, HA] = 0, where HA is the Hamiltonian of the input system. Our main goal will be to evaluate \({c}_{{{{{{{{\mathcal{T}}}}}}}}}(F)\), the nonequilibrium cost of accuracy.

In Methods, we provide an exact expression for \({c}_{{{{{{{{\mathcal{T}}}}}}}}}(F)\). The expression involves a semidefinite programme, which can be solved numerically for low-dimensional systems, thus providing the exact tradeoff between nonequilibrium and accuracy. Still, brute-force optimisation is intractable for high-dimensional systems. For this reason, it is crucial to have a computable bound that can be applied in a broader range of situations. The central result of the paper is a universal bound, valid for all quantum systems and to all information-processing tasks: the bound reads

where \({\kappa }_{{{{{{{{\mathcal{T}}}}}}}}}:=-\log {F}_{\max }^{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}\) is an entropic quantity, hereafter called the reverse entropy, and \({F}_{\max }^{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}\) is the maximum accuracy allowed by quantum mechanics to a time-reversed information-processing task \({{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}\), precisely defined in the following section (see Supplementary Note 1 for the derivation of Eq. (1)). Note that the reverse entropy is a monotonically decreasing function of \({F}_{\max }^{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}\), and becomes zero when the time-reversed task can be implemented with unit accuracy.

Eq. (1) can be equivalently formulated as a limit on the accuracy attainable with a given budget of nonequilibrium resources: for a given number of clean qubits c, the maximum achievable accuracy in the task \({{{{{{{\mathcal{T}}}}}}}}\), denoted by \({F}_{{{{{{{{\mathcal{T}}}}}}}}}(c):=\max \{{{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{\mathcal{T}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}})\,|\,c({{{{{{{\mathcal{M}}}}}}}},{{{\Pi }}}_{A})\le c\}\), satisfies the bound

This bound represents an in-principle limit on the performance of every information-processing machine. The bounds (1) and (2) are achievable in a number of tasks, and have a number of implications that will be discussed in the following sections.

Time-reversed tasks and reverse entropy

Here, we discuss the notion of time reversal of an information-processing task. Let us start from the simplest scenario, involving transformations of a fully degenerate system into itself. For a state transformation task \({\rho }_{x}\mapsto \rho_{x}^{\prime}\), we consider without loss of generality an accuracy measure for which the observables Ox are positive operators, proportional to quantum states. We then define a time-reversed task \({{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}\), where the role of the input states ρx and of the output observables Ox are exchanged. The accuracy of a generic channel \({{{{{{{\mathcal{M}}}}}}}}\) in the execution of the time-reversed task is specified by the reverse accuracy \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}}):=\mathop{\min }_{x}{{{{{{{\rm{Tr}}}}}}}}[{\rho }_{x}{{{{{{{\mathcal{M}}}}}}}}({O}_{x})]\). Maximising over all possible channels, we obtain \({F}_{\max }^{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}\) and define \({\kappa }_{{{{{{{{\mathcal{T}}}}}}}}}=-\log {F}_{\max }^{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}\).

For systems with nontrivial energy spectrum, we define the time-reversed task in terms of a time reversal of quantum operations introduced by Crooks41 and recently generalised in ref. 42 (this time-reversal operation is also related to Petz’s recovery map)43,44,45. In the Gibbs preserving context, this time-reversal exchanges states with observables, mapping Gibbs states into trivial observables (described by the identity matrix) and vice-versa. More generally, the time-reversal maps the states ρx into the observables \({\widetilde{O}}_{x}:={\Gamma }_{A}^{-\frac{1}{2}}{\rho }_{x}{\Gamma }_{A}^{-\frac{1}{2}}\) and the observables Ox into the (unnormalised) states \({\widetilde{\rho }}_{x}:={\Gamma }_{B}^{\frac{1}{2}}{O}_{x}{\Gamma }_{B}^{\frac{1}{2}}\), where ΓA and ΓB are the Gibbs states of the input and output systems, respectively. The reverse accuracy of a channel \({{{{{{{\mathcal{M}}}}}}}}\) is then defined as \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}}):=\mathop{\min }_{x}{{{{{{{\rm{Tr}}}}}}}}[{\widetilde{O}}_{x}{{{{{{{\mathcal{M}}}}}}}}({\widetilde{\rho }}_{x})]\). In the Methods section, we show that the reverse entropy can be equivalently written as

where \({{{{{{{\bf{p}}}}}}}}={({p}_{x})}_{x\in {\mathsf{X}}}\) is a probability distribution, \({\omega }_{{{{{{{{\mathcal{T}}}}}}}},{{{{{{{\bf{p}}}}}}}}}={\sum }_{x}\,{p}_{x}\,{\Gamma }_{A}^{-\frac{1}{2}}{\rho }_{x}^{T}{\Gamma }_{A}^{-1/2}\otimes {\Gamma }_{B}^{\frac{1}{2}}{O}_{x}{\Gamma }_{B}^{\frac{1}{2}}\) is an operator acting on the tensor product of the input and output systems, \({\rho }_{x}^{T}\) is the transpose of the density matrix ρx with respect to the energy eigenbasis, and \({H}_{\min }{(A|B)}_{{\omega }_{{{{{{{{\mathcal{T}}}}}}}},{{{{{{{\bf{p}}}}}}}}}}:=-\log \min \{{{{{{{{\rm{Tr}}}}}}}}[{{{\Lambda }}}_{B}]\,|\,({I}_{A}\otimes {{{\Lambda }}}_{B})\ge {\omega }_{{{{{{{{\mathcal{T}}}}}}}},{{{{{{{\bf{p}}}}}}}}}\}\) is the conditional min-entropy43,44,45.

Crucially, the reverse entropy depends only on the task under consideration, and not on a specific quantum channel used to implement the task. In fact, the reverse entropy is well-defined even for tasks that cannot be perfectly achieved by any quantum channel, as in the case of ideal quantum cloning, and even for tasks that are not formulated in terms of state transitions (see Methods).

To gain a better understanding of the reverse entropy, it is useful to evaluate it in some special cases. Consider the case of a classical deterministic computation, corresponding to the evaluation of a function y = f(x). In this case the reverse entropy is

where \({D}_{\max }(p\parallel q)=\mathop{\max }\limits_{y}\,p(y)/q(y)\) is the max Rényi divergence between two probability distributions p(y) and q(y)46, gB(y) is Gibbs distribution for the output system, and pf(y) is the probability distribution of the random variable y = f(x), when x is sampled from the Gibbs distribution (see Supplementary Note 2 for the derivation). Eq. (4) shows that the reverse entropy of a classical computation is a measure of how much the computation transforms thermal fluctuations into states that deviate from thermal equilibrium.

In the quantum case, however, physical limits to the execution of the time-reversed task can arise even without any deviation from thermal equilibrium. Consider for example the transposition task \({\rho }_{x}\mapsto {\rho }_{x}^{T}\)47,48,49,50,51,52, where x parametrises all the possible pure states of a quantum system. This transformation does not generate any deviation from equilibrium as it maps Gibbs states into Gibbs states. On the other hand, in the fully degenerate case the time-reversed task is still transposition, and perfect transposition is forbidden by the laws of quantum mechanics47,48,49,50,51,52. The maximum fidelity of an approximate transposition is Ftrans = 2/(d + 1) for d-dimensional quantum systems, and therefore \({\kappa }_{{{{{{{{\rm{trans}}}}}}}}}=\log [(d+1)/2]\).

Condition for achieving the limit

The appeal of the bounds (1) and (2) is that they are general and easy to use. But are they attainable? To discuss their attainability, it is important to first identify the parameter range in which these bounds are meaningful. First of all, the bound (1) is only meaningful when the desired accuracy does not exceed the maximum accuracy \({F}_{\max }\) allowed by the laws of physics for the task \({{{{{{{\mathcal{T}}}}}}}}\). Similarly, the bound (2) is only meaningful if the initial amount of nonequilibrium resources does not go below the smallest nonequilibrium cost of an arbitrary process acting on the given input subspace, hereafter denoted by \({c}_{\min }\). By maximising the accuracy over all quantum channels with minimum cost \({c}_{\min }\), we then obtain a minimum value \({F}_{\min }\) below which reducing the accuracy does not result in any reduction of the nonequilibrium cost.

We now provide a criterion that guarantees the attainability of the bounds (1) and (2) in the full interval \([{F}_{\min },{F}_{\max }]\). Since the two bounds are equivalent to one another, we will focus on bound (1). The condition for attainability in the full interval \([{F}_{\min },{F}_{\max }]\) is attainability at the maximum value \({F}_{\max }\). As we will see in the rest of the paper, this condition is satisfied by a number of information-processing tasks, notably including all classical computations and all quantum extensions thereof.

Theorem 1

For every information-processing task \({{{{{{{\mathcal{T}}}}}}}}\) with [ΠA, HA] = 0, if the bound (1) is attainable for a value of the accuracy F0, then it is attainable for every value of the accuracy in the interval \([{F}_{\min },{F}_{0}]\), with \({F}_{\min }={2}^{{c}_{\min }-{\kappa }_{{{{{{{{\mathcal{T}}}}}}}}}}\). In particular, if the bound is attainable for the maximum accuracy \({F}_{\max }\), then it is attainable for every value of the accuracy in the interval \([{F}_{\min },{F}_{\max }]\).

In Supplementary Note 3, we prove the theorem by explicitly constructing a family of channels that achieve the bound (1).

By evaluating the nonequilibrium cost of specific quantum channels, one can prove the attainability of the bound (1) for a variety of different tasks. For example, the bound (1) is attainable for every deterministic classical computation. Moreover, it is achievable for every quantum extension of a classical computation: on Supplementary Note 4 we show that for every value of the accuracy, the nonequilibrium cost is the same for the original classical computation and for its quantum extension, and therefore the achievability condition holds in both cases.

The nonequilibrium cost \({c}_{{{{{{{{\mathcal{T}}}}}}}}}(F)\) provides a fundamental lower bound to the amount of work that has to be invested in order to achieve accuracy F. Indeed, the minimum work cost of a specific channel \({{{{{{{\mathcal{M}}}}}}}}\), denoted by \(W({{{{{{{\mathcal{M}}}}}}}},{{{\Pi }}}_{A})\) can be quantified by the minimum number of clean qubits needed to implement the process in a scheme like the one in Fig. 1, with the only difference that Gibbs preserving operations are replaced by thermal operations, that is, operations resulting from a joint energy-preserving evolution of the system together with auxiliary systems in the Gibbs state24,25. Since thermal operations are a proper subset of the Gibbs preserving operations28, the restriction to thermal operations generally results into a larger number of clean qubits, and the work cost is lower bounded as \(W({{{{{{{\mathcal{M}}}}}}}},{{{\Pi }}}_{A})\ge kT(\ln 2)\,c({{{{{{{\mathcal{M}}}}}}}},{{{\Pi }}}_{A})\), where k is the Boltzmann constant and T is the temperature. By minimising both sides over all channels that achieve accuracy F, we then get the bound \({W}_{{{{{{{{\mathcal{T}}}}}}}}}(F)\ge kT(\ln 2)\,{c}_{{{{{{{{\mathcal{T}}}}}}}}}(F)\), where \({W}_{{{{{{{{\mathcal{T}}}}}}}}}(F):=\min \{W({{{{{{{\mathcal{M}}}}}}}},{{{\Pi }}}_{A})\,|\,{F}_{{{{{{{{\mathcal{T}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}})\ge F\}\) is the minimum work cost that has to be paid in order to reach accuracy F.

The achievability of this bound is generally nontrivial, except for operations on fully degenerate classical systems, wherein the sets of thermal operations and Gibbs preserving maps coincide due to Birkhoff’s theorem53. Another example is the task of erasing quantum states, corresponding to the state transformation \({\rho }_{x}\mapsto \left|0\right\rangle \left\langle 0\right|\), where ρx is an arbitrary state and \(\left|0\right\rangle\) is the ground state. In Supplementary Note 4, we show that the bound \({W}_{{{{{{{{\rm{erase}}}}}}}}}(F)\ge kT\ln 2\,{c}_{{{{{{{{\rm{erase}}}}}}}}}(F)\) holds with the equality sign, and the minimum work cost of approximate erasure is given by

where ΔA is the difference between the free energy of the ground state and the free energy of the Gibbs state, and the equality holds for every value of F in the interval \([{F}_{\min },{F}_{\max }]\), with \({F}_{\min }={e}^{-\Delta A/(kT)}\) and \({F}_{\max }=1\).

Nonequilibrium cost of classical cloning

Copying is the quintessential example of an information-processing task taking place in nature, its accurate implementation being crucial for processes such as DNA replication. In the following, we will refer to the copying of classical information as classical cloning. In abstract terms, the classical cloning task is to transform N identical copies of a pure state picked from an orthonormal basis into \(N{\prime} \ge N\) copies of the same state. Classically, this corresponds to the transformation \(\left|x\right\rangle {\left\langle x\right|}^{\otimes N}\mapsto \left|x\right\rangle {\left\langle x\right|}^{\otimes N{\prime} }\), where x labels the vectors of an orthonormal basis. The reverse entropy can be computed from Eq. (4), which gives

where \(\Delta N:=N^{\prime} -N\) the number of extra copies, and \(\Delta {A}_{\max }\) is the maximum difference between the free energy of a single-copy pure state and the free energy of the single-copy Gibbs state. Physically, \({\kappa }_{{{{{{{{\rm{clon}}}}}}}}}^{{{{{{{{\rm{C}}}}}}}}}\) coincides with the maximum amount of work needed to generate ΔN copies of a pure state from the thermal state25.

Since cloning is a special case of a deterministic classical computation, the bound (1) is attainable, and the minimum nonequilibrium cost of classical cloning is

This result generalises seminal results by Landauer and Bennett on the thermodynamics of classical cloning7,54,55, extending them from the ideal scenario to realistic settings where the copying process is approximate. For systems with fully degenerate energy levels, one also has the equality \({W}_{{{{{{{{\rm{clon}}}}}}}}}^{{{{{{{{\rm{C}}}}}}}}}(F)=kT\,(\ln 2)\,{c}_{{{{{{{{\rm{clon}}}}}}}}}^{{{{{{{{\rm{C}}}}}}}}}(F)\), which provides the minimum amount of work needed to replicate classical information with a target level of accuracy.

Nonequilibrium cost of quantum cloning

We now consider the task of approximately cloning quantum information56. The accuracy of quantum cloning is important both for foundational and practical reasons, as it is linked to the no signalling principle57, to quantum cryptography56, quantum metrology58, and a variety of other quantum information tasks59.

Here, we consider arbitrary cloning tasks where the set of single-copy states includes all energy eigenstates. This includes in particular the task of universal quantum cloning60,61,62, where the input states are arbitrary pure states. The reverse entropy of universal quantum cloning is at least as large as the reverse entropy of classical cloning: the bound \({\kappa }_{{{{{{{{\rm{clon}}}}}}}}}^{{{{{{{{\rm{Q}}}}}}}}}\ge {\kappa }_{{{{{{{{\rm{clon}}}}}}}}}^{{{{{{{{\rm{C}}}}}}}}}\) follows immediately from Eq. (3), by restricting the optimisation to probability distributions that are concentrated on the eigenstates of the energy.

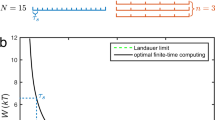

In Supplementary Note 5, we show that (i) the bound (1) is attainable for universal quantum cloning, and (ii) \({\kappa }_{{{{{{{{\rm{clon}}}}}}}}}^{{{{{{{{\rm{Q}}}}}}}}}={\kappa }_{{{{{{{{\rm{clon}}}}}}}}}^{{{{{{{{\rm{C}}}}}}}}}\). These results imply that classical and quantum cloning exhibit exactly the same tradeoff between accuracy and nonequilibrium: for every value of the accuracy, the minimum nonequilibrium cost of information replication is given by Eq. (7) both in the classical and in the quantum case. An illustration of this fact is provided in Fig. 2. In terms of accuracy/nonequilibrium tradeoff, the only difference between classical and quantum cloning is that the classical tradeoff curve goes all the way up to unit fidelity, while the quantum tradeoff curve stops at a maximum fidelity, which is strictly smaller than 1 due to the no-cloning theorem9,10.

The optimal accuracy-nonequilibrium tradeoff is depicted for \(N\to N{\prime}\) cloning machines with N = 1 and \(N{\prime}=2,3,4\). The fidelities for copying classical (red region) and quantum data (blue region) are limited by the same boundary curve, except that the fidelity for the task of copying quantum data cannot reach to 1 due to the no-cloning theorem.

Considering the differences between quantum and classical cloning, the fact that these two tasks share the same tradeoff curve is quite striking. An insight into this phenomenon comes from connection between the nonequilibrium cost and the time-reversed task of cloning. For fully degenerate systems, the time-reversed task is to transform \(N^{\prime}\) copies of a state into \(N\le N^{\prime}\) copies of the same state, and in both cases it can be realised by discarding \(N^{\prime} -N\) systems. The reverse accuracy of this task is the same for both classical and quantum systems, and so is the reverse entropy. In the nondegenerate case, the analysis is more complex, but the conclusion remains the same.

Although classical and quantum cloning share the same tradeoff curve, in the following we will show that they exhibit a fundamental difference in the way the tradeoff is achieved: to achieve the fundamental limit, cloning machines must use genuinely quantum strategies.

Limit on the accuracy of classical machines

Classical copy machines scan the input copies and produce replicas based on this information. Similarly, a classical machine for a general task can be modelled as a machine that measures the input and produces an output based on the measurement result. When this approach is used at the quantum scale, it leads to a special class of quantum machines, known as entanglement breaking63.

Here, we show that entanglement breaking machines satisfy a stricter bound. In fact, this stricter bound applies not only to entanglement breaking machines, but also to a broader class of machines, called entanglement binding64. An entanglement binding channel is a quantum channel that degrades every entangled state to a bound (a.k.a. PPT) entangled state65,66. In Methods, we show that the minimum nonequilibrium cost over all entanglement binding machines, denoted by \({c}_{{{{{{{{\mathcal{T}}}}}}}}}^{{{{{{{{\rm{eb}}}}}}}}}(F)\), must satisfy the inequality

where \({\kappa }_{{{{{{{{\mathcal{T}}}}}}}}}\) is the reverse entropy of the state transformation task \({\rho }_{x}\to \rho_x^{\prime}\), and \({\kappa }_{{{{{{{{{\mathcal{T}}}}}}}}}^{*}}\) is the reverse entropy of the transposed task \({{{{{{{{\mathcal{T}}}}}}}}}^{*}\), corresponding to the state transformation \({\rho }_{x}\mapsto {(\rho_x^{\prime} )}^{T}\). This bound can be used to demonstrate that a thermodynamic advantage of general quantum machines over all entanglement binding machines, including in particular all classical machines.

Quantum advantage in cloning

For quantum cloning, it turns out that no entanglement binding machine can achieve the optimal accuracy/nonequilibrium tradeoff. The reason for this is that the reverse entropy of the transpose task is strictly larger than the reverse entropy of the direct task, namely \({\kappa }_{{{{{{{{\rm{clo{n}}}}}}}^{*}}}} > {\kappa }_{{{{{{{{\rm{clon}}}}}}}}}\). In Supplementary Note 6, we prove the inequality

where ΔE is the difference between the maximum and minimum energy, and, dK = (K + d − 1)!/[K!(d − 1)!] for K = N or \(K=N+N^{\prime}\). Inserting this inequality into Eq. (8), we conclude that every entanglement binding machine necessarily requires a larger number of clean qubits compared to the optimal quantum machine.

When the energy levels are fully degenerate, we show that the bounds (8) and (9) are exact equalities. With this result at hand, we can compare the exact performance of entanglement binding machines and general quantum machines, showing that the latter achieve a higher accuracy for every given amount of nonequilibrium resources. The comparison is presented in Fig. 3.

The figure illustrates the accessible regions for the cloning fidelity when generating \(N^{\prime}=2,3,4\) output copies from N = 1 input copy, in the case of qubits with degenerate Hamiltonian. The values of the fidelity in the blue region are attainable by general quantum machines, while the values in the orange region are attainable by entanglement binding machines. The difference between the two regions indicates a thermodynamic advantage of general quantum machines over all classical machines.

Our result shows that entanglement binding machines are thermodynamically inefficient for the task of information replication. Achieving the ultimate efficiency limit requires machines that are able to preserve free (i.e., non-bound) entanglement. This observation fits with the known fact that classical machines cannot achieve the maximum copying accuracy allowed by quantum mechanics61,62,67. Here, we have shown that not only classical machines are limited in their accuracy, but also that, to achieve such limited accuracy, they require a higher amount of nonequilibrium resources. Interestingly, the thermodynamic advantage of general quantum machines vanishes in the asymptotic limit \(N^{\prime} \to \infty\), in which the optimal quantum cloning can be reproduced by state estimation68,69,70.

Thermodynamic benchmark for quantum memories and quantum communication

Quantum machines that preserve free entanglement also offer an advantage in the storage and transmission of quantum states, corresponding to the ideal state transformation ρx ↦ ρx where x parametrises the states of interest. In theory, a noiseless quantum machine can achieve perfect accuracy at zero work cost. In practice, however, the transmission is always subject to errors and inefficiencies, resulting into nonunit fidelity and/or nonzero work. For this reason, realistic experiments that aim to demonstrate genuine quantum transmission or storage need criteria to demonstrate superior performance with respect to all classical setups. A popular approach is to demonstrate an experimental fidelity larger than the maximum fidelity achievable by classical schemes71,72,73. In the qubit case, the maximum classical fidelity is \({F}_{\max }^{{{{{{{{\rm{eb}}}}}}}}}=2/3\)74, and is often used as a benchmark for quantum communication experiments75,76,77. Here, we provide a different benchmark, in terms of the nonequilibrium cost needed to achieve a target fidelity F. In Supplementary Note 7, we show that the minimum nonequilibrium cost over all entanglement binding machines for the storage/transmission of qubit states is

Eq. (10) is valid for every qubit Hamiltonian and for every value of F in the interval \([{F}_{\min }^{{{{{{{{\rm{eb}}}}}}}}},{F}_{\max }^{{{{{{{{\rm{eb}}}}}}}}}]\), with \({F}_{\max }^{{{{{{{{\rm{eb}}}}}}}}}=2/3\) and \({F}_{\min }^{{{{{{{{\rm{eb}}}}}}}}}=({e}^{\frac{\Delta E}{kT}}+1)/(2{e}^{\frac{\Delta E}{kT}}+1)\). The minimum cost \({c}_{{{{{{{{\rm{store/store}}}}}}}}}^{{{{{{{{\rm{eb}}}}}}}}}(F)\) can be achieved by state estimation, and therefore can be regarded as the classical limit on the nonequilibrium cost.

For every \(F > {F}_{\min }\), the minimum nonequilibrium cost (10) is strictly larger than zero for every nondegenerate Hamiltonian. Since the nonequilibrium cost is a lower bound to the work cost, Eq. (10) implies that every entanglement binding machine with fidelity F requires at least \(kT(\ln 2)\,{c}_{{{{{{{{\rm{store/transmit}}}}}}}}}^{{{{{{{{\rm{eb}}}}}}}}}(F)\) work. This value can be used as a benchmark to certify genuine quantum information processing: every realistic setup that achieves fidelity F with less than \(kT\ln \left[F+{e}^{\frac{\Delta E}{kT}}\,{(2F-1)}^{2}/(1-F)\right]\) work will necessarily exhibit a performance that cannot be achieved by any classical setup. Notably, the presence of a thermodynamic constraint (either on the nonequilibrium or on the work) provides a way to certify a quantum advantage even for noisy implementations of quantum memories and quantum communication systems with fidelity below the classical fidelity threshold \({F}_{\max }=2/3\). A generalisation of these results for higher dimensional systems is provided in Supplementary Note 7.

Discussion

An important feature of our bound (1) is that it applies also to state transformations that are forbidden by quantum mechanics, such as ideal quantum cloning or ideal quantum transposition. For state transformations that can be exactly implemented, instead, it is interesting to compare our bound with related results in the literature.

For exact implementations, the choice of accuracy measure is less important, and one can use any measure for which Eq. (1) yields a useful bound on the work cost. For example, consider the problem of generating a state ρ from the equilibrium state. By choosing a suitable measure of accuracy (see Methods for the details), we find that the nonequilibrium cost for the state transition Γ ↦ ρ is equal to \({D}_{\max }(\rho \parallel \Gamma )\), where \({D}_{\max }(\rho \parallel \sigma ):=\mathop{\lim }\limits_{\alpha \to \infty }{D}_{\alpha }(\rho \parallel \sigma )\) is the max relative entropy, \(D(\rho \parallel \sigma ):=\log {{{{{{{\rm{Tr}}}}}}}}[{\rho }^{\alpha }{\sigma }^{1-\alpha }]/(\alpha -1)\,,\alpha \ge 0\) being the the Rényi relative entropies. In this case, the nonequilibrium cost coincides (up to a proportionality constant \(kT\ln 2\)) with the minimal amount of work needed to generate the state ρ without errors25. Similarly, one can consider the task of extracting work from the state ρ, corresponding to the state transition ρ ↦ Γ. Ref. 25 showed that the maximum extractable work is \({D}_{\min }(\langle \rho \rangle \parallel \Gamma )\,kT\ln 2\), where \({D}_{\min }(\rho \parallel \sigma ):={D}_{0}(\rho \parallel \sigma )\) is the min relative entropy as per Datta’s definition78 and 〈ρ〉 is the time-average of ρ. This value can also be retrieved from our bound with a suitable choice of accuracy measure (see Supplementary Note 8 for the details). Smooth versions of these entropic quantities naturally arise by smoothing the task, that is, by considering small deviation from the input/output states that specify the desired state transformation (see Methods).

Our bound can also be applied to the task of information erasure with the assistance of a quantum memory33. There, a machine has access to a system S and to a quantum memory Q, and the goal is to reset system S to a pure state ηS, without altering the local state of the memory. When the initial states of system SQ are drawn from a time-invariant subspace, our bound (1) implies that the work cost satisfies the inequality \(W/(kT\,\ln 2)\ge {D}_{\max }({\eta }_{S}\otimes {\gamma }_{Q}\parallel {\Gamma }_{SQ})-{D}_{\max }({\widetilde{\Gamma }}_{SQ}\parallel {\Gamma }_{SQ})\), where \({\widetilde{\Gamma }}_{SQ}\) is the quantum state obtained by projecting the Gibbs state onto the input subspace, and \({\gamma }_{Q}={{{{{{{{\rm{Tr}}}}}}}}}_{S}[{\widetilde{\Gamma }}_{SQ}]\) is the marginal state of the memory. The bound is tight, and, for degenerate Hamiltonians, it matches the upper bound from ref. 33 up to logarithmic corrections in the error parameters (see Supplementary Note 9).

Another interesting issue is to determine when a given state transition \(\rho \mapsto \rho ^{\prime}\) can be implemented without investing work. For states that are diagonal in the energy basis, a necessary and sufficient condition was derived in ref. 26, adopting a framework where catalysts are allowed. In this setting, ref. 26 showed that the state transition \(\rho \mapsto \rho ^{\prime}\) can be implemented catalytically without work cost if and only if

These conditions can be compared with our bound (1). In Methods, we show that, with a suitable choice of figure of merit, Eq. (1) implies the lower bound \(W/(kT\ln 2)\ge \,{D}_{\max }(\rho ^{\prime} \parallel {\Gamma }_{B})-{D}_{\max }(\rho \parallel {\Gamma }_{A})\) for the perfect execution of the state transition \(\rho \mapsto \rho ^{\prime}\). Hence, the work cost for the state transition \(\rho \mapsto \rho ^{\prime}\) satisfies the bound \(W/(kT\ln 2)\ge {D}_{\max }(\rho ^{\prime} \parallel {\Gamma }_{B}) - {D}_{\max }(\rho \parallel {\Gamma }_{A})\), and the r.h.s. is nonpositive only if \({D}_{\max }(\rho ^{\prime} \parallel {\Gamma }_{B})\le {D}_{\max }(\rho \parallel {\Gamma }_{A})\). The last condition is a special case of Eq. (11), corresponding to α → ∞. Notably, this condition and Eq. (11) are equivalent when the input and output states have well-defined energy, including in particular the case where the Hamiltonians of systems A and B are fully degenerate. Further discussion on the relation between quantum relative entropies and the cost of accuracy is provided in Supplementary Note 10.

While the applications discussed in the paper focussed on one-shot tasks, our results also apply to the asymptotic scenario where the task is to implement the transformation \({\rho }_{x}^{\otimes n}\mapsto {\rho }_{x}^{{\prime} \otimes n}\) in the large n limit. In Methods we consider the amount of nonequilibrium per copy required by this transformation, allowing for small deviations in the input and output states. This setting leads to the definition of a smooth reverse entropy of a task, whose value per copy is denoted by \({\kappa }_{{{{{{{{\mathcal{T}}}}}}}},{{{{{{{\rm{i}}}}}}}}id}\) and is shown to satisfy the bound

where \(S(\rho \parallel \sigma ):={{{{{{{\rm{Tr}}}}}}}}[\rho (\log \rho -\log \sigma )]\) is the quantum relative entropy.

In the special case where the state transformation \({\rho }_{x}\to \rho_x ^{\prime}\) can be implemented perfectly, and where \({({\rho }_{x})}_{x\in {\mathsf{X}}}\) is the set of all possible quantum states of the input system, the r.h.s. of Eq. (12) (times \(kT\ln 2\)) coincides with the thermodynamic capacity introduced by Faist, Berta, and Brandão in ref. 79. In this setting, the results of ref. 79 imply that the thermodynamic capacity coincides with the amount of work per copy needed to implement the transformation \({\rho }_{x}\to \rho_x ^{\prime}\). Since the amount of work cannot be smaller than the nonequilibrium cost, this result implies that our fundamental accuracy/nonequilibrium tradeoff is asymptotically achievable for all information-processing tasks allowed by quantum mechanics.

In a different setting and with different techniques, questions related to the thermodynamical cost of physical processes have been studied in the field of stochastic thermodynamics36. Most of the works in this area focus on the properties of nonequilibrium steady states of classical systems with Markovian dynamics. An important result is a tradeoff relation between the relative standard deviation of the outputs associated with the currents in the nonequilibrium steady state and the overall entropy production37,38,39,40. This relation, called a thermodynamic uncertainty relation, is often interpreted as a tradeoff between the precision of a process and its thermodynamical cost. A difference with our work is that the notion of precision used in stochastic thermodynamics is not directly related to general information-processing tasks. Another difference is that thermodynamic uncertainty relations do not always hold for systems outside the nonequilibrium steady state39, whereas our accuracy/nonequilibrium tradeoff applies universally to all quantum systems. An interesting avenue of future research is to integrate the information-theoretic methods developed in this paper with those of stochastic thermodynamics, seeking for concrete physical models that approach the ultimate efficiency limits.

Methods

General performance tests

The performance of a machine in a given information-processing task can be operationally quantified by the probability to pass a test73,80,81. In the one-shot scenario, a general test \({{{{{{{\mathcal{T}}}}}}}}\) consists in preparing states of a composite system AR, consisting of the input of the machine and an additional reference system. The machine is requested to act locally on system A, while the reference system undergoes the identity process, or some other (generally noisy) process \({{{{{{{{\mathcal{R}}}}}}}}}_{x}\) implemented by the party that performs the test. Finally, the reference system and the output of the machine undergo a joint measurement, described by a suitable observable. The measurement outcomes are regarded as the score assigned to the machine. The test \({{{{{{{\mathcal{T}}}}}}}}\) is then described by the possible triples \({({\rho }_{x},{{{{{{{{\mathcal{R}}}}}}}}}_{x},{O}_{x})}_{x\in {\mathsf{X}}}\), consisting of an input state, a process on the reference system, and an output observable. In the worst case over all possible triples, one gets the accuracy \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{\mathcal{T}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}}):=\mathop{\min }_{x}{{{{{{{\rm{Tr}}}}}}}}[{O}_{x}\,({{{{{{{\mathcal{M}}}}}}}}\otimes {{{{{{{{\mathcal{R}}}}}}}}}_{x})({\rho }_{x})]\), where \({{{{{{{\mathcal{M}}}}}}}}\) is the map describing the machine’s action. Note that the dependence of the state ρx, transformation \({{{{{{{{\mathcal{R}}}}}}}}}_{x}\), and measurement Ox can be arbitrary, and that the parameter x can also be a vector x = (x1, …, xn). For example, the input state ρx could depend only on the subset of the entries of the vector x, while the output observable Ox could depend on all the entries, thus describing the situation where multiple observables are tested for the same input state.

Performance tests provide a more general way to define information-processing tasks. Rather than specifying a desired state transformation \({\rho }_{x}\mapsto \rho_x ^{\prime}\), one can directly specify a test that assigns a score to the machine. The test can be expressed in a compact way in the Choi representation82. In this representation, the test is described by a set of operators \({({\Omega }_{x})}_{x\in {\mathsf{X}}}\), called the performance operators81, acting on the product of the input and output Hilbert spaces. The accuracy of the test has the simple expression \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{\mathcal{T}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}})=\mathop{\min }_{x}{{{{{{{\rm{Tr}}}}}}}}[M\,{\Omega }_{x}]\), where \(M:=({{{{{{{{\mathcal{I}}}}}}}}}_{A}\otimes {{{{{{{\mathcal{M}}}}}}}})(\left|{I}_{A}\right\rangle \left\langle {I}_{A}\right|)\), \(\left|{I}_{A}\right\rangle :={\sum }_{i}\,\left|i\right\rangle \otimes \left|i\right\rangle\) is the Choi operator of channel \({{{{{{{\mathcal{M}}}}}}}}\), and \({{{{{{{{\mathcal{I}}}}}}}}}_{A}\) is the identity on system A. In the following we will take each operator Ωx to be positive semidefinite without loss of generality.

Exact expression for the nonequilibrium cost

In Supplementary Note 1, we show that the nonequilibrium cost of a general task \({{{{{{{\mathcal{T}}}}}}}}\) can be evaluated with the expression \({c}_{{{{{{{{\mathcal{T}}}}}}}}}(F)=\mathop{\max }\limits_{{{{{{{{\bf{p}}}}}}}}}{c}_{{{{{{{{\mathcal{T}}}}}}}},{{{{{{{\bf{p}}}}}}}}}(F)\), where the minimum is over all probability distributions \({{{{{{{\bf{p}}}}}}}}={({p}_{x})}_{x\in {\mathsf{X}}}\) and

with Ωp ≔ ∑x px Ωx, \(\Gamma ^{\prime} :={{{\Pi }}}_{A}{\Gamma }_{A}{{{\Pi }}}_{A}\). Here, the maximisation runs over all Hermitian operators XA(YB) acting on system A (B) and over all real numbers z.

For every fixed probability distribution p, the evaluation of \({c}_{{{{{{{{\mathcal{T}}}}}}}},{{{{{{{\bf{p}}}}}}}}}(F)\) is a semidefinite programme83, and can be solved numerically for low-dimensional systems. A simpler optimisation problem arises by setting XA = 0, which provides the lower bound

(see Supplementary Note 1 for the derivation).

Time-reversed tasks and reverse entropy

For a given task \({{{{{{{\mathcal{T}}}}}}}}\), implemented by operations with input A and output B, we define a time-reversed task \({{{{{{{{\mathcal{T}}}}}}}}}^{{{{{{{{\rm{rev}}}}}}}}}\), implemented by operations with input B and output A. For example, consider the case where the direct task is to transform pure states into pure states, according to a given mapping \({\rho }_{x}\mapsto \rho_x ^{\prime}\), on a quantum system with fully degenerate energy levels, and the accuracy of the implementation measured by the fidelity \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{\mathcal{T}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}})=\mathop{\min }_{x}{{{{{{{\rm{Tr}}}}}}}}[\rho_x ^{\prime} {{{{{{{\mathcal{M}}}}}}}}({\rho }_{x})]\). In this case, the time-reversed task is to implement the transformation \(\rho_{x} ^{\prime} \mapsto {\rho }_{x}\), using some channel \({{{{{{{\mathcal{M}}}}}}}}\) with input B and output A. The accuracy is then given by the reverse fidelity \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}})=\mathop{\min }_{x}{{{{{{{\rm{Tr}}}}}}}}[{\rho }_{x}{{{{{{{\mathcal{M}}}}}}}}(\rho_{x} ^{\prime} )]\). More generally, we define the time-reversed task \({{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}\) in terms of a time reversal for quantum operations41,42, related to Petz’s recovery map43,44,45. The specific version of the time reversal used here maps the states ρx into the observables \({\widetilde{O}}_{x}:={\Gamma }_{A}^{-\frac{1}{2}}{\rho }_{x}{\Gamma }_{A}^{-\frac{1}{2}}\) and the observables Ox into the (unnormalised) states \({\widetilde{\rho }}_{x}:={\Gamma }_{B}^{\frac{1}{2}}{O}_{x}{\Gamma }_{B}^{\frac{1}{2}}\)42. The reverse accuracy then becomes \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}}):=\mathop{\min }_{x}{{{{{{{\rm{Tr}}}}}}}}[{\widetilde{O}}_{x}{{{{{{{\mathcal{M}}}}}}}}({\widetilde{\rho }}_{x})]\).

For a general information-processing task with performance operators \({({\Omega }_{x})}_{x\in {\mathsf{X}}}\), we define the time-reversed task \({{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}\) with performance operators \({({\Omega }_{x}^{{{{{{{{\rm{rev}}}}}}}}})}_{x\in {\mathsf{X}}}\) defined by

where \({E}_{AB}:{{{{{{{{\mathcal{H}}}}}}}}}_{A}\otimes {{{{{{{{\mathcal{H}}}}}}}}}_{B}\to {{{{{{{{\mathcal{H}}}}}}}}}_{B}\otimes {{{{{{{{\mathcal{H}}}}}}}}}_{A}\) is the unitary operator that exchanges systems A and B. The reverse accuracy of a generic quantum channel \({{{{{{{\mathcal{M}}}}}}}}\) is then given by \({{{{{{{{\mathcal{F}}}}}}}}}_{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}}):=\mathop{\min }_{x}\,{{{{{{{\rm{Tr}}}}}}}}[{\Omega }_{x}^{{{{{{{{\rm{rev}}}}}}}}}\,M]\), where M is the Choi operator of \({{{{{{{\mathcal{M}}}}}}}}\). The maximum of the reverse accuracy over all quantum channels can be equivalently expressed in terms of a conditional min-entropy: indeed, one has

where the minimum is over all probability distributions p = (px), and \({\omega }_{{{{{{{{\mathcal{T}}}}}}}},{{{{{{{\bf{p}}}}}}}}}:={({\sum }_{x}{p}_{x}{\Omega }_{x}^{{{{{{{{\rm{rev}}}}}}}}})}^{T}\). Using von Neumann’s minimax theorem, we then obtain

where the second equality follows from the operational interpretation of the min-entropy45. Taking the logarithm on both sides of the equality, we then obtain the relation \({\kappa }_{{{{{{{{\mathcal{T}}}}}}}}}:=-\log {F}_{\max }^{{{{{{{{{\mathcal{T}}}}}}}}}_{{{{{{{{\rm{rev}}}}}}}}}}=\mathop{\max }\nolimits_{{{{{{{{\bf{p}}}}}}}}}{H}_{\min }{(A|B)}_{{\omega }_{{{{{{{{\bf{p}}}}}}}}}}\), corresponding to Eq. (3) in the main text. The bound (1) then follows from the relation \({c}_{{{{{{{{\mathcal{T}}}}}}}}}(F)=\mathop{\max }\nolimits_{{{{{{{{\bf{p}}}}}}}}}{c}_{{{{{{{{\mathcal{T}}}}}}}},{{{{{{{\bf{p}}}}}}}}}\) and from Eq. (14).

Bounds on the reverse entropy

When the test \({{{{{{{\mathcal{T}}}}}}}}\) consists in the preparation of a set of states \({({\rho }_{x})}_{x\in {\mathsf{X}}}\) of system A and in the measurement of a set of observables \({({O}_{x})}_{x\in {\mathsf{X}}}\) on system B, the reverse entropy can be lower bounded as

with the equality holding when ∣X∣ = 1 (see Supplementary Note 10 for the proof and for a discussion on the relation between the nonequilibrium cost of a state transformation task \({\rho }_{x}\mapsto \rho_{x} ^{\prime},\forall x\in {\mathsf{X}}\) and the nonequilibrium cost of the individual state transitions \({\rho }_{x}\mapsto \rho_{x} ^{\prime}\) for a fixed value of x).

A possible choice of observable is Ox = Px, where Px is the projector on the support of the target state \(\rho_{x} ^{\prime}\). In this case, the bound (18) becomes

An alternative choice of observables is \({O}_{x}={\Gamma }^{-1/2}\left|{\psi }_{x}\right\rangle \left\langle {\psi }_{x}\right|{\Gamma }^{-1/2}/\parallel {\Gamma }^{-1/2}\rho_{x} ^{\prime} {\Gamma }^{-1/2}\parallel\), where \(\left|{\psi }_{x}\right\rangle\) is the normalised eigenvector corresponding to the maximum eigenvalue of \({\Gamma }_{B}^{-1/2}\rho_{x} ^{\prime} {\Gamma }_{B}^{-1/2}\). With this choice, the bound (18) becomes \(\kappa \ge \mathop{\max }\limits_{x}\,{D}_{\max }(\rho_{x} ^{\prime} \parallel {\Gamma }_{B})-{D}_{\max }({\rho }_{x}\parallel {\Gamma }_{A})\), with the equality when ∣X∣ = 1. Combining this bound with Eq. (1), we obtain the following

Proposition 1

If there exists a quantum channel \({{{{{{{\mathcal{M}}}}}}}}\) such that \({{{{{{{\mathcal{M}}}}}}}}({\rho }_{x})=\rho_{x} ^{\prime}\) for every x ∈ X, then its nonequilibrium cost satisfies the bound \(c({{{{{{{\mathcal{M}}}}}}}})\ge \mathop{\max }\limits_{x}\,{D}_{\max }(\rho_{x} ^{\prime} \parallel {\Gamma }_{B})-{D}_{\max }({\rho }_{x}\parallel {\Gamma }_{A})\).

The proposition follows from Eq. (1) and from the fact that the channel \({{{{{{{\mathcal{M}}}}}}}}\) has accuracy \({{{{{{{\mathcal{F}}}}}}}}({{{{{{{\mathcal{M}}}}}}}})=\mathop{\min }_{x}{{{{{{{\rm{Tr}}}}}}}}[{{{{{{{\mathcal{M}}}}}}}}({\rho }_{x}){O}_{x}]=1\).

Smooth reverse entropy

For an information-processing task \({{{{{{{\mathcal{T}}}}}}}}\) with operators \({({\Omega }_{x})}_{x\in {\mathsf{X}}}\), one can consider an approximate version, described by another task \({{{{{{{\mathcal{T}}}}}}}}^{\prime}\) with operators \(({\Omega} ^{\prime }_{x})_{x \in {\mathsf{X}}^{\prime}}\) that are close to \(({{\Omega }_{x}})_{x\in {\mathsf{X}}}\) with respect to a suitable notion of distance. One can then define the worst (best) case smooth reverse entropy of the task \({\kappa }_{{{{{{{{\mathcal{T}}}}}}}},\epsilon }\) as the maximum (minimum) of \({\kappa }_{{{{{{{{\mathcal{T}}}}}}}}^{\prime} }\) over all tasks \({{{{{{{\mathcal{T}}}}}}}}^{\prime}\) that are within distance ϵ from the given task. The choice between the worst case and the best case irreversibility depends on the problem at hand. A best case irreversibility corresponds to introducing an error tolerance in the task, thus discarding low-probability events that would result in a higher cost25,33. Instead, a worst case irreversibility can be used to model noisy scenarios, where the input states may not be the ones in the ideal information-processing task. An example of this situation is the experimental implementation of quantum cloning, where the input states may not be exactly pure.

Smoothing is particularly useful in the asymptotic scenario. Consider the test \({{{{{{{{\mathcal{T}}}}}}}}}_{n}\) that consists in preparing a multi-copy input state \({\rho }_{x}^{\otimes n}\) and measuring the observable Px,n, where Px,n is the projector on the support of the target state \({\rho }_{x}^{{\prime} \,\otimes n}\). A natural approximation is to allow, for every x ∈ X, all inputs ρy,n that are ϵ-close to \({\rho }_{x}^{\otimes n}\), and all outputs \(\rho_{x} ^{\prime}\) that are ϵ-close to \({\rho }_{x}^{{\prime} \otimes n}\). Choosing \({\kappa }_{{{{{{{{{\mathcal{T}}}}}}}}}_{n},\epsilon }\) to be the worst case smooth reverse entropy of the task \({{{{{{{{\mathcal{T}}}}}}}}}_{n}\), Eq. (19) gives the bound \({\kappa }_{{{{{{{{{\mathcal{T}}}}}}}}}_{n},\epsilon }\ge \mathop{\max }\limits_{x}{D}_{\min }^{\epsilon }({\rho }_{x}^{{\prime} \otimes n}\parallel {\Gamma }_{B}^{\otimes n})-{D}_{\max }^{\epsilon }({\rho }_{x}^{\otimes n}\parallel {\Gamma }_{A}^{\otimes n})\), where \({D}_{\min }^{\epsilon }\) and \({D}_{\max }^{\epsilon }\) are the smooth versions of \({D}_{\min }\) and \({D}_{\max }\)78. One can then define the regularised reverse entropy of the task as \({\kappa }_{{{{{{{{\mathcal{T}}}}}}}},{{{{{{{\rm{i}}}}}}}}id}:=\mathop{\lim }\limits_{\epsilon \to 0}\,\mathop{\sup }\limits_{n}\,{\kappa }_{{{{{{{{{\mathcal{T}}}}}}}}}_{n},\epsilon }/n\). Using the relations \(\mathop{\lim }\limits_{\epsilon \to 0}\,\mathop{\sup }\limits_{n}{D}_{\min }^{\epsilon }({\rho }_{x}^{{\prime} \otimes n}\parallel {\Gamma }_{B}^{\otimes n})/n=S(\rho_{x} ^{\prime} \parallel {\Gamma }_{B})\) and \(\mathop{\lim }\limits_{\epsilon \to 0}\,\mathop{\inf }\limits_{n}{D}_{\max }^{\epsilon }({\rho }_{x}^{\otimes n}\parallel {\Gamma }_{A}^{\otimes n})/n=S({\rho }_{x}\parallel {\Gamma }_{A})\)78 we finally obtain the bound \({\kappa }_{{{{{{{{\bf{T}}}}}}}},{{{{{{{\rm{i}}}}}}}}id}\ge \mathop{\max }\limits_{x}S(\rho_{x} ^{\prime} \parallel {\Gamma }_{B})-S({\rho }_{x}\parallel {\Gamma }_{A})\). The quantity on the r.h.s. coincides with the thermodynamic capacity introduced by Faist, Berta, and Brandão in ref. 79, where it was shown that the thermodynamic capacity coincides with the amount of work per copy needed to implement the transformation \({\rho }_{x}\to \rho_{x} ^{\prime}\). Combining this result with our bounds, we obtain that the fundamental accuracy/nonequilibrium in Eq. (1) is asymptotically achievable for all transformations allowed by quantum mechanics.

Limit for entanglement binding channels

Entanglement binding channels generally satisfy a more stringent limit than (1). The derivation of this strengthened limit is as follows: first, the definition of an entanglement binding channel \({{{{{{{\mathcal{P}}}}}}}}\) implies that the map \({{{{{{{{\mathcal{P}}}}}}}}}^{{{{{{{{\rm{PT}}}}}}}}}\) defined by \({{{{{{{{\mathcal{P}}}}}}}}}^{{{{{{{{\rm{PT}}}}}}}}}(\rho ):={[{{{{{{{\mathcal{P}}}}}}}}(\rho )]}^{T}\) is a valid quantum channel. Now, the nonequilibrium cost of the channels \({{{{{{{\mathcal{P}}}}}}}}\) and \({{{{{{{{\mathcal{P}}}}}}}}}^{{{{{{{{\rm{PT}}}}}}}}}\) is given by \({D}_{\max }({{{{{{{\mathcal{P}}}}}}}}({{{\Pi }}}_{A}{\Gamma }_{A}{{{\Pi }}}_{A}\parallel {\Gamma }_{B}))\) and \({D}_{\max }({{{{{{{{\mathcal{P}}}}}}}}}^{{{{{{{{\rm{PT}}}}}}}}}({{{\Pi }}}_{A}{\Gamma }_{A}{{{\Pi }}}_{A}\parallel {\Gamma }_{B}))\) (cf. Supplementary Note 1). Since the max relative entropy satisfies the relation \({D}_{\max }(\rho \parallel \sigma )={D}_{\max }({\rho }^{T}\parallel {\sigma }^{T})\) for every pair of states ρ and σ, the costs of \({{{{{{{\mathcal{P}}}}}}}}\) and \({{{{{{{{\mathcal{P}}}}}}}}}^{{{{{{{{\rm{PT}}}}}}}}}\) are equal.

The second step is to note that the accuracy of the channel \({{{{{{{\mathcal{P}}}}}}}}\) for the task specified by the performance operators (Ωx) is equal to the accuracy of the channel \({{{{{{{{\mathcal{P}}}}}}}}}^{{{{{{{{\rm{PT}}}}}}}}}\) for the task specified by the performance operators \(({\Omega }_{x}^{{T}_{B}})\), where TB denotes the partial transpose over system B. Applying the bound (1) to channel \({{{{{{{{\mathcal{P}}}}}}}}}^{{{{{{{{\rm{PT}}}}}}}}}\), we then obtain the relation

where \({\kappa }_{{{{{{{{{\mathcal{T}}}}}}}}}^{*}}\) is the reverse entropy of the transpose task \({{{{{{{{\mathcal{T}}}}}}}}}^{*}\), with performance operators \(({\Omega }_{x}^{{T}_{B}})\). Since entanglement binding channel is subject to both bounds (1) and (20), Eq. (8) holds.

Data availability

The authors declare that the data supporting the findings of this study are available within the paper and in the supplementary information files.

References

McCulloch, S. D. & Kunkel, T. A. The fidelity of DNA synthesis by eukaryotic replicative and translesion synthesis polymerases. Cell Res. 18, 148–161 (2008).

Wang, T. et al. Self-replication of information-bearing nanoscale patterns. Nature 478, 225–228 (2011).

England, J. L. Statistical physics of self-replication. J. Chem. Phys. 139, 09B623_1 (2013).

Andrieux, D. & Gaspard, P. Nonequilibrium generation of information in copolymerization processes. Proc. Natl Acad. Sci. USA 105, 9516–9521 (2008).

Jarzynski, C. The thermodynamics of writing a random polymer. Proc. Natl Acad. Sci. USA 105, 9451–9452 (2008).

Bennett, C. H. The thermodynamics of computation-a review. Int. J. Theor. Phys. 21, 905–940 (1982).

Landauer, R. Irreversibility and heat generation in the computing process. IBM J. Res. Dev. 5, 183–191 (1961).

Leff, H. S. & Rex, A. F. Maxwell’s Demon: Entropy, Information, Computing (Princeton University Press, 2014).

Wootters, W. K. & Zurek, W. H. A single quantum cannot be cloned. Nature 299, 802–803 (1982).

Dieks, D. Communication by EPR devices. Phys. Lett. A 92, 271–272 (1982).

Goold, J., Huber, M., Riera, A., Del Rio, L. & Skrzypczyk, P. The role of quantum information in thermodynamics-a topical review. J. Phys. A Math. Theor. 49, 143001 (2016).

Vinjanampathy, S. & Anders, J. Quantum thermodynamics. Contemp. Phys. 57, 545–579 (2016).

Binder, F., Correa, L. A., Gogolin, C., Anders, J. & Adesso, G. Thermodynamics in the Quantum Regime: Fundamental Aspects and New Directions: Vol. 195 (Fundamental Theories of Physics). 1–2 (Springer, 2018).

Lloyd, S. Ultimate physical limits to computation. Nature 406, 1047–1054 (2000).

Sagawa, T. & Ueda, M. Minimal energy cost for thermodynamic information processing: measurement and information erasure. Phys. Rev. Lett. 102, 250602 (2009).

Linden, N., Popescu, S. & Skrzypczyk, P. How small can thermal machines be? The smallest possible refrigerator. Phys. Rev. Lett. 105, 130401 (2010).

Parrondo, J. M., Horowitz, J. M. & Sagawa, T. Thermodynamics of information. Nat. Phys. 11, 131–139 (2015).

Goold, J., Paternostro, M. & Modi, K. Nonequilibrium quantum landauer principle. Phys. Rev. Lett. 114, 060602 (2015).

Baugh, J., Moussa, O., Ryan, C. A., Nayak, A. & Laflamme, R. Experimental implementation of heat-bath algorithmic cooling using solid-state nuclear magnetic resonance. Nature 438, 470–473 (2005).

Toyabe, S., Sagawa, T., Ueda, M., Muneyuki, E. & Sano, M. Experimental demonstration of information-to-energy conversion and validation of the generalized jarzynski equality. Nat. Phys. 6, 988–992 (2010).

Vidrighin, M. D. et al. Photonic maxwell’s demon. Phys. Rev. Lett. 116, 050401 (2016).

Janzing, D., Wocjan, P., Zeier, R., Geiss, R. & Beth, T. Thermodynamic cost of reliability and low temperatures: tightening landauer’s principle and the second law. Int. J. Theor. Phys. 39, 2717–2753 (2000).

Horodecki, M., Horodecki, P. & Oppenheim, J. Reversible transformations from pure to mixed states and the unique measure of information. Phys. Rev. A 67, 062104 (2003).

Brandao, F. G., Horodecki, M., Oppenheim, J., Renes, J. M. & Spekkens, R. W. Resource theory of quantum states out of thermal equilibrium. Phys. Rev. Lett. 111, 250404 (2013).

Horodecki, M. & Oppenheim, J. Fundamental limitations for quantum and nanoscale thermodynamics. Nat. Commun. 4, 2059 (2013).

Brandao, F., Horodecki, M., Ng, N., Oppenheim, J. & Wehner, S. The second laws of quantum thermodynamics. Proc. Natl Acad. Sci. USA 112, 3275–3279 (2015).

Brandão, F. G. S. L. & Gour, G. Reversible framework for quantum resource theories. Phys. Rev. Lett. 115, 070503 (2015).

Faist, P., Oppenheim, J. & Renner, R. Gibbs-preserving maps outperform thermal operations in the quantum regime. N. J. Phys. 17, 043003 (2015).

Gour, G., Müller, M. P., Narasimhachar, V., Spekkens, R. W. & Halpern, N. Y. The resource theory of informational nonequilibrium in thermodynamics. Phys. Rep. 583, 1–58 (2015).

Gour, G., Jennings, D., Buscemi, F., Duan, R. & Marvian, I. Quantum majorization and a complete set of entropic conditions for quantum thermodynamics. Nat. Commun. 9, 1–9 (2018).

Faist, P., Dupuis, F., Oppenheim, J. & Renner, R. The minimal work cost of information processing. Nat. Commun. 6, 7669 (2015).

Faist, P. & Renner, R. Fundamental work cost of quantum processes. Phys. Rev. X 8, 021011 (2018).

Del Rio, L., Åberg, J., Renner, R., Dahlsten, O. & Vedral, V. The thermodynamic meaning of negative entropy. Nature 474, 61 (2011).

Åberg, J. Truly work-like work extraction via a single-shot analysis. Nat. Commun. 4, 1–5 (2013).

Skrzypczyk, P., Short, A. J. & Popescu, S. Work extraction and thermodynamics for individual quantum systems. Nat. Commun. 5, 1–8 (2014).

Seifert, U. Stochastic thermodynamics: from principles to the cost of precision. Phys. A: Stat. Mech. Appl. 504, 176–191 (2018).

Barato, A. C. & Seifert, U. Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015).

Gingrich, T. R., Horowitz, J. M., Perunov, N. & England, J. L. Dissipation bounds all steady-state current fluctuations. Phys. Rev. Lett. 116, 120601 (2016).

Barato, A. C. & Seifert, U. Cost and precision of brownian clocks. Phys. Rev. X 6, 041053 (2016).

Horowitz, J. M. & Gingrich, T. R. Thermodynamic uncertainty relations constrain non-equilibrium fluctuations. Nat. Phys. 16, 15–20 (2020).

Crooks, G. E. Quantum operation time reversal. Phys. Rev. A 77, 034101 (2008).

Chiribella, G., Aurell, E. & Życzkowski, K. Symmetries of quantum evolutions. Phys. Rev. Res. 3, 033028 (2021).

Renner, R. & Wolf, S. Smooth rényi entropy and applications. Proceedings. In: International Symposium on Information Theory, 2004. ISIT 2004. 233 (IEEE, 2004).

Datta, N. & Renner, R. Smooth entropies and the quantum information spectrum. IEEE Trans. Inf. Theory 55, 2807–2815 (2009).

König, R., Renner, R. & Schaffner, C. The operational meaning of min-and max-entropy. IEEE Trans. Inf. theory 55, 4337–4347 (2009).

Rényi, A. On measures of entropy and information. In: Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Contributions to the Theory of Statistics (The Regents of the University of California, 1961).

Bužek, V., Hillery, M. & Werner, F. Universal-not gate. J. Mod. Opt. 47, 211–232 (2000).

Horodecki, P. From limits of quantum operations to multicopy entanglement witnesses and state-spectrum estimation. Phys. Rev. A 68, 052101 (2003).

Buscemi, F., D’Ariano, G., Perinotti, P. & Sacchi, M. Optimal realization of the transposition maps. Phys. Lett. A 314, 374–379 (2003).

Ricci, M., Sciarrino, F., Sias, C. & De Martini, F. Teleportation scheme implementing the universal optimal quantum cloning machine and the universal not gate. Phys. Rev. Lett. 92, 047901 (2004).

De Martini, F., Pelliccia, D. & Sciarrino, F. Contextual, optimal, and universal realization of the quantum cloning machine and of the not gate. Phys. Rev. Lett. 92, 067901 (2004).

Lim, H.-T., Kim, Y.-S., Ra, Y.-S., Bae, J. & Kim, Y.-H. Experimental realization of an approximate partial transpose for photonic two-qubit systems. Phys. Rev. Lett. 107, 160401 (2011).

Birkhoff, G. Tres observaciones sobre el algebra lineal. Univ. Nac. Tucuman Ser. A 5, 147–154 (1946).

Landauer, R. Information is physical. Phys. Today 44, 23–29 (1991).

Bennett, C. H. Notes on Landauer’s principle, reversible computation, and maxwell’s demon. Stud. Hist. Philos. Sci. Part B Stud. Hist. Philos. Mod. Phys. 34, 501–510 (2003).

Scarani, V., Iblisdir, S., Gisin, N. & Acin, A. Quantum cloning. Rev. Mod. Phys. 77, 1225 (2005).

Gisin, N. Quantum cloning without signaling. Phys. Lett. A 242, 1–3 (1998).

Chiribella, G., Yang, Y. & Yao, A. C.-C. Quantum replication at the heisenberg limit. Nat. Commun. 4, 1–8 (2013).

Fan, H. et al. Quantum cloning machines and the applications. Phys. Rep. 544, 241–322 (2014).

Hillery, M. & Bužek, V. Quantum copying: fundamental inequalities. Phys. Rev. A 56, 1212 (1997).

Gisin, N. & Massar, S. Optimal quantum cloning machines. Phys. Rev. Lett. 79, 2153 (1997).

Werner, R. F. Optimal cloning of pure states. Phys. Rev. A 58, 1827 (1998).

Horodecki, M., Shor, P. W. & Ruskai, M. B. Entanglement breaking channels. Rev. Math. Phys. 15, 629–641 (2003).

Horodecki, P., Horodecki, M. & Horodecki, R. Binding entanglement channels. J. Mod. Opt. 47, 347–354 (2000).

Peres, A. Separability criterion for density matrices. Phys. Rev. Lett. 77, 1413 (1996).

Horodecki, P. Separability criterion and inseparable mixed states with positive partial transposition. Phys. Lett. A 232, 333–339 (1997).

Bruss, D., Ekert, A. & Macchiavello, C. Optimal universal quantum cloning and state estimation. Phys. Rev. Lett. 81, 2598 (1998).

Bae, J. & Acín, A. Asymptotic quantum cloning is state estimation. Phys. Rev. Lett. 97, 030402 (2006).

Chiribella, G. & D’Ariano, G. M. Quantum information becomes classical when distributed to many users. Phys. Rev. Lett. 97, 250503 (2006).

Chiribella, G. On quantum estimation, quantum cloning and finite quantum de finetti theorems. In: Conference on Quantum Computation, Communication, and Cryptography. 9–25 (Springer, 2010).

Boschi, D., Branca, S., De Martini, F., Hardy, L. & Popescu, S. Experimental realization of teleporting an unknown pure quantum state via dual classical and einstein-podolsky-rosen channels. Phys. Rev. Lett. 80, 1121 (1998).

Braunstein, S. L. & Kimble, H. J. Teleportation of continuous quantum variables. Phys. Rev. Lett. 80, 869 (1998).

Hammerer, K., Wolf, M. M., Polzik, E. S. & Cirac, J. I. Quantum benchmark for storage and transmission of coherent states. Phys. Rev. Lett. 94, 150503 (2005).

Massar, S. & Popescu, S. Optimal extraction of information from finite quantum ensembles. In: Asymptotic Theory Of Quantum Statistical Inference: Selected Papers. 356–364 (World Scientific, 2005).

Li, B. et al. Quantum state transfer over 1200 km assisted by prior distributed entanglement. Phys. Rev. Lett. 128, 170501 (2022).

Zhong, Y. et al. Deterministic multi-qubit entanglement in a quantum network. Nature 590, 571–575 (2021).

Kurpiers, P. et al. Deterministic quantum state transfer and remote entanglement using microwave photons. Nature 558, 264–267 (2018).

Datta, N. Min-and max-relative entropies and a new entanglement monotone. IEEE Trans. Inf. Theory 55, 2816–2826 (2009).

Faist, P., Berta, M. & Brandão, F. Thermodynamic capacity of quantum processes. Phys. Rev. Lett. 122, 200601 (2019).

Yang, Y., Chiribella, G. & Adesso, G. Certifying quantumness: Benchmarks for the optimal processing of generalized coherent and squeezed states. Phys. Rev. A 90, 042319 (2014).

Bai, G. & Chiribella, G. Test one to test many: a unified approach to quantum benchmarks. Phys. Rev. Lett. 120, 150502 (2018).

Choi, M.-D. Completely positive linear maps on complex matrices. Linear Algebra Appl. 10, 285–290 (1975).

Watrous, J. The Theory of Quantum Information (Cambridge University Press, 2018).

Acknowledgements

G.C. acknowledges a helpful discussion with Nilanjiana Datta on the quantum extensions of Rényi relative entropies. F.M. acknowledges Yuxiang Yang, Mile Gu, and Oscar Dahlsten for helpful comments that helped improving the presentation. This work was supported by the Hong Kong Research Grant Council through grants 17326616 (G.C.) and 17300918 (G.C.), and through the Senior Research Fellowship Scheme via SRFS2021-7S02 (G.C.), by the Swiss National Science Foundation via grant 200021_188541 (R.R.), by the National Natural Science Foundation of China through grants 11675136 (G.C.), 11875160 (M.Y.) and U1801661 (M.Y.), by the Key R&D Programme of Guangdong province through grant 2018B030326001 (M.Y.), by the Guangdong Provincial Key Laboratory through grant c1933200003 (M.Y.), the Guangdong Innovative and Entrepreneurial Research Team Programme via grant 2016ZT06D348 (M.Y.), the Science, Technology and Innovation Commission of Shenzhen Municipality through grant KYTDPT20181011104202253 (M.Y.). Research at the Perimeter Institute is supported by the Government of Canada through the Department of Innovation, Science and Economic Development Canada and by the Province of Ontario through the Ministry of Research, Innovation and Science.

Author information

Authors and Affiliations

Contributions

G.C. and M.Y. proposed the initial idea. G.C. introduced the notion of reverse entropy and proved the bound on the nonequilibrium cost. F.M. derived the achievability condition and computed the nonequilibrium cost of quantum cloning. R.R. and G.C. devised the notion of smoothing for the reverse entropy, and developed the connections with information erasure, work cost, and work extraction. All authors contributed substantially to the development of the preparation of the paper. G.C. and F.M. contributed equally.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chiribella, G., Meng, F., Renner, R. et al. The nonequilibrium cost of accurate information processing. Nat Commun 13, 7155 (2022). https://doi.org/10.1038/s41467-022-34541-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-022-34541-w

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.