Abstract

The phenomena of quantum criticality underlie many novel collective phenomena found in condensed matter systems. They present a challenge for classical and quantum simulation, in part because of diverging correlation lengths and consequently strong finite-size effects. Tensor network techniques that work directly in the thermodynamic limit can negotiate some of these difficulties. Here, we optimise a translationally invariant, sequential quantum circuit on a superconducting quantum device to simulate the groundstate of the quantum Ising model through its quantum critical point. We further demonstrate how the dynamical quantum critical point found in quenches of this model across its quantum critical point can be simulated. Our approach avoids finite-size scaling effects by using sequential quantum circuits inspired by infinite matrix product states. We provide efficient circuits and a variety of error mitigation strategies to implement, optimise and time-evolve these states.

Introduction

The simulation of chemical reactions and materials properties, and the discovery of new pharmaceutical compounds are anticipated to be major applications of quantum computers. The rapid development of various approaches to constructing noisy intermediate-scale quantum (NISQ) devices brings simple models of these use cases within the realm of possibility. Finding challenging but feasible problems that can be implemented on current devices is crucial. As well as demonstrating the progress that has been made, these serve to highlight required improvements and, ideally, have the possibility of quantum advantage when suitably powerful quantum computers are developed. Many problems that fit this bill are to be found in condensed matter. They can be scaled to fit current machines while retaining scientific and technological relevance.

Strongly correlated condensed matter systems are amongst those most likely to yield a quantum advantage1,2,3,4,5,6. These are systems in which the underlying spins or electrons are strongly renormalised and whose quantum properties are beyond the reach of standard perturbative or density functional type approaches. Quantum criticality7,8,9 is one of the few collective organising principles that has been able to make sense of a large class of strongly correlated phenomena. The term—first coined by Hertz10—refers to systems in the vicinity of a zero-temperature phase transition driven by quantum fluctuations. Such systems display universal spatial and temporal correlations that are not seen in purely classical problems and can underpin the formation of entirely new quantum phases. Diverging correlation lengths near the quantum critical point make these states challenging for numerics, driving the development of state-of-the-art tools such as dynamical mean field theory11 (to deal with the Mott transition), singlet Monte Carlo12 (to deal with deconfined quantum criticality) and tensor networks amongst many others.

As well as providing the best classical numerical approach for many spin systems, tensor network methods can be directly translated to quantum circuits13,14,15,16,17. Doing so has potential quantum advantage over the classical implementations16, and tensor networks allow initialisation and improvement of quantum circuits in a way that can circumvent the barren plateaux that potentially plague quantum circuits. There are particular advantages in applying these methods to describe quantum critical systems. Diverging correlation lengths lead to strong finite-size effects, which can be avoided with tensor networks by working directly in the thermodynamic limit. There may be advantages in combining tensor network methods with machine learning tools18,19,20 to extract simulation results, as used recently in classical numerics. Moreover, there is a potentially excellent fit between tensor network simulation methods and matrix product operator-based error mitigation21,22. While we focus on one-dimensional matrix product states (MPS), reflecting the limitations of current devices, translations of these methods to higher dimensions have been proposed23,24.

Here we demonstrate that translationally invariant MPS (iMPS) can be used to simulate quantum critical systems, in the thermodynamic limit, on Google’s Rainbow device—a quantum device that shares the Sycamore architecture25. We focus upon the quantum Ising model which has a quantum phase transition in its groundstate properties7,9 and in its dynamics26,27,28,29. We show that—with appropriate error mitigation—the groundstate of the quantum Ising model can be found with high accuracy even at the quantum critical point. We also demonstrate a cost-function that, by balancing analytical approximations and error mitigation strategies, faithfully tracks time-evolution through the dynamical quantum phase transition. The resulting circuits are considerably simpler than the previous proposals17.

Results

We begin discussion of our results by introducing the quantum Ising model and its key features. We then show how translationally invariant, quantum circuit iMPS, together with suitable error-mitigation strategies, can be used to determine its groundstate properties. We report the result of applying these methods on Rainbow. Next, we introduce circuits that can be used to simulate the quantum dynamics, and the balance of analytical approximations and error mitigation that permit them to be implemented on the Rainbow device.

Quantum phase transitions

The quantum or transverse field Ising model is one of the best understood models that exhibits quantum critical phenomena. Its Hamiltonian is given by

where \(\hat{Z}\) and \(\hat{X}\) are Pauli operators, J is the exchange coupling and g the transverse field. This model has a groundstate quantum phase transition at g/J = 1 and a dynamical quantum phase transition when a groundstate prepared on one side of the critical point (say g/J > 1) is evolved with a Hamiltonian on the opposite side (say g/J < 1). Though an exact solution can be obtained using a Jordan–Wigner transformation, much remains to be understood and its dynamical and thermalisation properties are the subject of current research interest30,31,32. In the following, we demonstrate that the groundstate and dynamical properties of this model may be fruitfully investigated on current quantum devices using quantum circuit MPS.
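As a reference point for the exact solution mentioned above, the following is a minimal sketch of the thermodynamic-limit groundstate energy per site, assuming the standard free-fermion (Jordan–Wigner) result \(e_{0}=-\frac{1}{\pi }\int_{0}^{\pi }\sqrt{J^{2}+g^{2}-2Jg\cos k}\,dk\) for a chain written in terms of Pauli operators. The function name and the midpoint-rule integration are illustrative choices, not taken from the paper. In the infinite chain this energy does not depend on the signs of J and g, since either sign can be removed by a local spin rotation.

```python
import numpy as np

def ising_ground_energy_per_site(g, J=1.0, n_k=20001):
    """Groundstate energy per site of the infinite transverse-field Ising chain.

    Evaluates e0 = -(1/pi) * int_0^pi sqrt(J^2 + g^2 - 2 J g cos k) dk
    with a midpoint rule on n_k points (hypothetical helper, for illustration).
    """
    k = (np.arange(n_k) + 0.5) * np.pi / n_k          # midpoints of [0, pi]
    eps = np.sqrt(J**2 + g**2 - 2.0 * J * g * np.cos(k))
    return -eps.mean()                                # mean == (1/pi) * integral

if __name__ == "__main__":
    for g in (0.2, 0.4, 1.0, 1.5):
        print(g, ising_ground_energy_per_site(g))
```

At the critical point g/J = 1 the integrand reduces to \(2J\sin (k/2)\), so the energy per site is \(-4J/\pi\), which gives a convenient check of the numerics.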

Groundstate optimisation

We approach this problem using one-dimensional sequential quantum circuits23 inspired by matrix product states15,16,17,33,34,35. Figure 1 illustrates the structure of these states and their representation on Rainbow. In this work we focus on bond order D = 2 (refs. 33,34,35), described by a staircase of two-qubit unitaries, U. Higher bond orders can be achieved with an efficient shallow circuit representation15,17. A crucial feature of these circuits is that although the fully translationally invariant state is infinitely deep and infinitely wide, local observables can be measured on a finite-depth, finite-width circuit with an additional unitary V ≡ V(U) describing the effect of distant parts of the system on the local observables. This additional unitary is determined by a set of auxiliary equations (discussed in the Methods section and shown in Fig. 6) that we solve on chip. We optimise this fixed-point equation together with measurement of terms in the Hamiltonian in order to find the groundstate of the quantum Ising model. The parametrisations of U and V are indicated in Fig. 1.
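The relation between a two-qubit unitary, the bond order D = 2 MPS tensor, and the environment can be illustrated classically. The sketch below is not the on-chip procedure: it assumes one particular index convention (fresh qubit enters in \(\left|0\right\rangle\), the second qubit carries the bond), uses a random unitary rather than the paper's reduced parametrisation, and finds the environment by diagonalising the transfer map instead of solving the fixed-point equations with a swap test. All function names are hypothetical.

```python
import numpy as np

def random_unitary(dim, rng):
    """Random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, r = np.linalg.qr(z)
    return q * (np.diag(r) / np.abs(np.diag(r)))      # fix the column phases

def mps_tensor(U):
    """A[s, a, b] = <s, b| U |0, a> (assumed convention: s physical, a/b bond)."""
    U4 = U.reshape(2, 2, 2, 2)                        # (out_phys, out_bond, in_phys, in_bond)
    return U4[:, :, 0, :].transpose(0, 2, 1)          # -> (s, a, b)

def left_environment(A):
    """Unit-trace fixed point l of the map l -> sum_s (A^s)^T l conj(A^s)."""
    D = A.shape[1]
    # M[(b,b'),(a,a')] = sum_s A[s,a,b] * conj(A[s,a',b'])
    M = np.einsum('sab,scd->bdac', A, A.conj()).reshape(D * D, D * D)
    w, v = np.linalg.eig(M)
    l = v[:, np.argmin(np.abs(w - 1.0))].reshape(D, D)
    return l / np.trace(l)

def expectation(A, l, O):
    """Single-site <O>; unitarity of U makes the right environment the identity."""
    return np.einsum('ac,sab,ts,tcb->', l, A, O, A.conj())

if __name__ == "__main__":
    rng = np.random.default_rng(7)
    A = mps_tensor(random_unitary(4, rng))
    l = left_environment(A)
    print("norm:", expectation(A, l, np.eye(2)))
    print("<Z> :", expectation(A, l, np.diag([1.0, -1.0])))
```

In this convention unitarity of U makes one environment trivial, so only the other one has to be determined, which is the role played by V(U) in the circuits.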

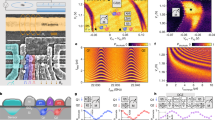

a iMPS circuits to calculate the three terms in the Ising Hamiltonian. The blue boxes indicate realisations of the state unitary U with its factorisation to the Rainbow gate set. The red boxes highlight the environment tensor V. Ry gates refer to the Pauli-Y rotation gates, \(Ry(\theta )=\exp (-i{\sigma }_{y}\theta )\) where σy is the Pauli-Y matrix. The W gate is an arbitrary single-qubit unitary with three free parameters. Parameters are shared across the blue tensors to enforce translational invariance. There are eight free parameters in total, four from the environment (red) and four from the state tensor (blue). b Example of the Ising circuits laid out on the Rainbow device. The best performing qubits were chosen on each day of experiment. c iMPS circuits to determine V ≡ V(U). The three circuits shown are the three elements required to compute the trace distance between the left- and right-hand sides of the fixed-point equations shown in Fig. 6. The gates needed to perform the required swap test are highlighted in green. d Example of the trace distance circuits laid out on the Rainbow device.

Error mitigation is essential for these algorithms. We deploy a number of strategies. Foremost amongst these is choosing the best qubits. Individual qubit and gate noise can vary dramatically on current devices. In our energy optimisation experiments, we run all of the circuits shown in Fig. 1 in parallel on an optimally chosen set of qubits on Rainbow. Next, we account for measurement errors and biases using a confusion matrix deduced from measurements on each of our chosen qubits. Finally, we utilise a Loschmidt echo to account for depolarisation, by implementing a circuit and its Hermitian conjugate to deduce the rescaling that depolarisation induces1,36.

We optimise the sum of the measured quantum Ising Hamiltonian given by the circuits in Fig. 1a, and the circuits in Fig. 1c that impose consistency between the unitary U defining the quantum state and the unitary V(U) describing the effect of the rest of the system on local measurements. The full cost function then is \(\langle \mathcal{H}\rangle+{\rm{Tr}}{({\hat{\rho }}_{L}-{\hat{\rho }}_{R})}^{2}\), where the second term is the trace distance between the left- and right-hand sides of the fixed-point equation first reported in ref. 17 and shown in Fig. 6 in Methods. We carry out this optimisation using the simultaneous perturbation stochastic approximation (SPSA)37. This optimisation strategy works well deep in either phase of the quantum Ising model. In order to approach the quantum critical point, we use a quasi-adiabatic method, changing the Hamiltonian parameters in steps toward those at the quantum critical point and using the optimised ansatz parameters from the preceding step to start each optimisation.
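For readers unfamiliar with SPSA, the following is a minimal sketch of the update rule on a toy problem. It is not the optimiser configuration used on the device: the gain-sequence constants, the eight-parameter quadratic stand-in for the measured cost, and the function names are all assumptions chosen for illustration. The key point is that each iteration needs only two cost evaluations, whatever the number of parameters.

```python
import numpy as np

def spsa_minimise(cost, theta0, n_iter=500, a=0.2, c=0.1, A=10.0,
                  alpha=0.602, gamma=0.101, seed=0):
    """Minimise cost(theta) with simultaneous perturbation stochastic approximation."""
    rng = np.random.default_rng(seed)
    theta = np.array(theta0, dtype=float)
    for k in range(n_iter):
        a_k = a / (k + 1 + A) ** alpha                 # decaying step size
        c_k = c / (k + 1) ** gamma                     # decaying perturbation size
        delta = rng.choice([-1.0, 1.0], size=theta.shape)
        diff = cost(theta + c_k * delta) - cost(theta - c_k * delta)
        # Gradient estimate from two evaluations; 1/delta_i equals delta_i for +-1.
        theta -= a_k * diff / (2.0 * c_k) * delta
    return theta

# Toy stand-in for the measured cost: a quadratic bowl in eight parameters.
target = np.linspace(-1.0, 1.0, 8)

def toy_cost(theta):
    return float(np.sum((theta - target) ** 2))

if __name__ == "__main__":
    theta_opt = spsa_minimise(toy_cost, np.zeros(8))
    print("initial cost:", toy_cost(np.zeros(8)))
    print("final cost  :", toy_cost(theta_opt))
```

A quasi-adiabatic schedule of the kind described above would call such a routine repeatedly, passing the optimised parameters from one value of g as the starting point for the next.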

The results of applying these methods on Rainbow are shown in Fig. 2. These results demonstrate that, with appropriate rescaling to allow for depolarisation errors, the measured and optimised energies are close to the exact value for our ansatz even at the quantum critical point. The deviations from the analytically exact results arise primarily because we have used a reduced parameterisation of our two qubit unitaries. Figure 2b shows a typical optimisation curve. A marked oscillation of unknown origin on a time-period of about half an hour was present in all of our experiments. It was particularly marked at g = 0.4 (see Fig. 2c) and is likely responsible for the larger error in energy at this value.

a The blue curve gives the exact energy of the quantum Ising model optimised over bond order D = 2 MPS within the ansatz class used on the device. The dashed purple curve gives the exact groundstate energy calculated analytically in the thermodynamic limit55,56. The green and orange curves show energies of the states optimised on the Rainbow device with and without Loschmidt rescaling to allow for depolarisation, respectively. Measurement errors are corrected using a confusion matrix in both cases. Except for the anomalous point at g = 0.4 affected by an uncontrolled oscillation on the device (see c), the rescaled results are within 2.2% of the exact-in-ansatz values. This is true even at the quantum critical point, g = 1. The inset shows the log-deviation in measured energy compared to the exact value for the circuit ansatz. J has been rescaled to 1 in these plots. The deviation between the analytically exact and exact-in-ansatz energies at large g is due to the reduced parametrisation used for the circuit unitaries, U. b A typical optimisation curve showing the measured Hamiltonian and the trace distance. The latter is defined in the main text. A value of zero indicates that U and V consistently describe the local properties of the translationally invariant state. Optimisation is carried out using the simultaneous perturbation stochastic approximation (SPSA) and a quasi-adiabatic change in the value of the transverse field g. The optimisation is made in stages, changing g by discrete amounts and allowing the optimisation to stabilise before incrementing further. The vertical dashed lines indicate junctures when the transverse field g is altered. c Oscillations in measured energy found around g = 0.4.

For the quantum Ising model, D = 2 does remarkably well and the improvement in going to D = 4 is below the resolution of our experiments. Other models such as the antiferromagnetic Heisenberg model show a larger improvement in optimised energies by increasing bond order and are within the resolution of future experiments.

Calculating and optimising overlaps

Before turning to quantum dynamics, we first discuss a key building block: the overlap of translationally invariant states. For states parametrised by circuit unitaries \({U}_{A}\) and \({U}_{B}\) this overlap is given by an infinitely wide and deep version of the circuit shown in Fig. 3a. It is formally zero. However, the distance between states can be quantified by the rate at which this overlap tends to zero as the length of the system, N, is taken to infinity. This occurs as \({\lambda }^{N}\), where λ is the principal eigenvalue of the transfer matrix, \({E}_{{U}_{A},{U}_{B}}\), indicated by the part of the circuit contained between the dashed red lines in Fig. 3a.

a The overlap of two translationally invariant states parametrised by U and \(U^{\prime}\) is given by \(\mathop{\lim }\limits_{n\to \infty }{C}_{n}\to {\lambda }^{n}\), where λ is the principal eigenvalue of the transfer matrix delimited by the red dotted line. \({C}_{n}\) is evaluated on circuit by measuring the probability of \({\left|0\right\rangle }^{\otimes (n+1)}\) at the output. In order to correct for depolarisation errors, we divide by the Loschmidt echo obtained by evaluating the circuit at \(U^{\prime}=U\). b Overlaps \({C}_{n}({U}_{A},{U}_{B})\) and Loschmidt echo \({C}_{n}({U}_{A},{U}_{A})\) evaluated on Rainbow as a function of the order of the power method, n. c The ratio \({C}_{n+1}/{C}_{n}\) obtained from the data in (b). The overlap begins to converge as the circuit depth, measured by n, rises. By n = 4 the measured value agrees within error bars with the exact value of the principal eigenvalue of the transfer matrix, aside from the outlier at n = 6, which occurs due to an error in the estimate of the Loschmidt echo and is corrected by the interpolation shown in (b). The circuit depth increases with n, leading to increased error bars. However, the result is still within errors, suggesting that useful information can be extracted even from these deeper circuits. d A demonstration that stochastic optimisation of \({U}_{B}\) using SPSA converges to \({U}_{B}={U}_{A}\).

Several methods can be used to determine λ. Ref. 17 uses a variational representation of the bottom and top eigenvectors B and T of the transfer matrix. These correspond to the left and right eigenvectors in the usual MPS notation, where the circuit is conventionally rotated 90° clockwise. This involves solving fixed-point equations for B and T akin to those used to calculate the expectations of the Hamiltonian in ‘Groundstate optimisation’. Here, we use a different approach invoking the power method. λ is given by

for approximations, \(\tilde{B}\) and \(\tilde{T}\), to the eigenvectors of the transfer matrix. This result converges exponentially quickly with n, and can be particularly accurate when good starting approximations \(\tilde{B}\) and \(\tilde{T}\) are chosen. Indeed, when either \(\tilde{B}\) or \(\tilde{T}\) is exact, Eq. (2) converges at n = 1. Figure 3a corresponds to n = 6 and the choices \(\tilde{B}=\boldsymbol{I}\) and \(\tilde{T}=\left|0\right\rangle \left\langle 0\right|\).

The results of calculating the overlaps on Rainbow in this way are shown in Fig. 3b, c. In order to correct for depolarisation errors, we divide \({C}_{n}({U}_{A},{U}_{B})\) by a Loschmidt echo \({C}_{n}({U}_{A},{U}_{A})\), whose value is one in the depolarisation-free case. Figure 3b shows \({C}_{n}({U}_{A},{U}_{B})\) and \({C}_{n}({U}_{A},{U}_{A})\) calculated for different n. The depolarisation effects become larger as the order n, and consequently the depth of the circuit, increases. Figure 3c shows how the Loschmidt-corrected results converge to λ with n. By n = 4 the estimate for λ has converged to within error bars, aside from the outlier at n = 6, which appears to arise from an overestimate of the Loschmidt echo; correcting for this with an interpolated value of the Loschmidt echo brings the estimate within errors. The optimum balance between convergence of the power method and increasing circuit errors occurs at n = 4 or 5. Finally, Fig. 3d shows the result of optimising the overlap by varying the parameters of \({U}_{B}\) using SPSA.
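The power-method estimate can be mimicked classically. The sketch below assumes that λ is estimated from the ratio of successive contractions \({C}_{n+1}/{C}_{n}\) (the quantity plotted in Fig. 3c), with \({C}_{n}\) formed by sandwiching n powers of the transfer matrix between two boundary vectors. The index convention, the random unitaries, the assignment of the identity and \(\left|0\right\rangle \left\langle 0\right|\) to the two boundaries, and all function names are assumptions for illustration only.

```python
import numpy as np

def random_unitary(dim, rng):
    """Random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, r = np.linalg.qr(z)
    return q * (np.diag(r) / np.abs(np.diag(r)))

def mps_tensor(U):
    """A[s, a, b] = <s, b| U |0, a> (assumed convention: s physical, a/b bond)."""
    return U.reshape(2, 2, 2, 2)[:, :, 0, :].transpose(0, 2, 1)

def overlap_transfer_matrix(A, B):
    """E[(a,a'),(b,b')] = sum_s A[s,a,b] * conj(B[s,a',b'])."""
    D = A.shape[1]
    return np.einsum('sab,scd->acbd', A, B.conj()).reshape(D * D, D * D)

def power_method_ratio(E, left, right, n):
    """Estimate the principal eigenvalue as C_{n+1}/C_n, C_n = left . E^n . right."""
    c_n = left @ np.linalg.matrix_power(E, n) @ right
    c_n1 = left @ np.linalg.matrix_power(E, n + 1) @ right
    return c_n1 / c_n

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    U_A = random_unitary(4, rng)
    K = rng.normal(size=(4, 4))
    w, v = np.linalg.eigh(K + K.T)                    # Hermitian generator
    U_B = U_A @ ((v * np.exp(0.1j * w)) @ v.conj().T) # U_B is a small rotation of U_A
    E = overlap_transfer_matrix(mps_tensor(U_A), mps_tensor(U_B))
    left = np.array([1, 0, 0, 0], dtype=complex)      # vec(|0><0|)
    right = np.eye(2, dtype=complex).reshape(4)       # vec(identity)
    exact = max(np.linalg.eigvals(E), key=abs)
    for n in range(1, 7):
        print(n, abs(power_method_ratio(E, left, right, n)), abs(exact))
```

When the two states coincide, the identity boundary is an exact eigenvector in this convention, so the ratio equals one already at n = 1, in line with the remark above about exact boundary vectors.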

Quantum dynamics

This method of computing overlaps can be used to time-evolve a quantum state \(\left|\psi \left(U(t)\right)\right\rangle\) parametrised by U(t) at time t to time t + dt according to refs. 16, 17, 38, 39

As in the case of direct overlaps between states, the overlap in Eq. (3) decays exponentially with the system size according to the principal eigenvalue of the transfer matrix. We identify circuits that approximate this principal eigenvalue as cost-functions for time-evolution.

Just as for finding groundstates, the key to operating time evolution algorithms on NISQ devices is the management and mitigation of errors. A balance must be found between the theoretical, error-free accuracy and the effect of errors incurred on a real device. We introduce time-evolution circuits for quantum iMPS (dramatically simplified compared to previous proposals17) that permit careful tradeoffs in implementation to enable quantum dynamics to be faithfully tracked.

The first such trade-off concerns the time-evolution operator, which must be expanded using a Trotterisation procedure40,41,42. Higher order Trotterisations improve the scaling of errors with the time-step dt, but require deeper circuits that are more exposed to gate infidelity. Moreover, errors incurred in stochastic optimisation may favour a larger time-step, offsetting increased resolution of the cost function against an increase in Trotter errors. In practice, the time-evolution operator is the deepest part of our circuit. In order to minimise this depth, we use a first order Trotterisation and a trick appropriate to translationally invariant states evolving with nearest-neighbour, translationally-invariant Hamiltonians. The circuit in Fig. 4a uses just the even bonds of the Hamiltonian but, by dint of the projection back to translationally invariant states, incurs errors at higher order in dt than expected with a naive accounting of Trotter errors. This can be seen by noting that the time-dependent variational principle equations for evolving a translationally invariant state with just the even- or odd-bond parts of the Hamiltonian are identical to evolving using the full Hamiltonian divided by two43.
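The naive accounting of first-order Trotter errors can be checked on a small example. The sketch below illustrates only the standard even/odd-bond splitting on a four-site open chain; it does not implement the translationally invariant even-bond trick described above. The bond term \(J{Z}_{i}{Z}_{i+1}+(g/2)({X}_{i}+{X}_{i+1})\), the chain length and the function names are assumptions made for illustration.

```python
import numpy as np
from functools import reduce

I2 = np.eye(2)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.diag([1.0, -1.0]).astype(complex)

def op_on(site_ops, n):
    """Tensor product placing the given {site: operator} on an n-qubit chain."""
    return reduce(np.kron, [site_ops.get(i, I2) for i in range(n)])

def bond_term(i, n, J, g):
    """Two-site term J Z_i Z_{i+1} + (g/2)(X_i + X_{i+1}) (assumed form)."""
    return (J * op_on({i: Z, i + 1: Z}, n)
            + 0.5 * g * (op_on({i: X}, n) + op_on({i + 1: X}, n)))

def evolve(H, dt):
    """exp(-i H dt) for Hermitian H via its eigendecomposition."""
    w, v = np.linalg.eigh(H)
    return (v * np.exp(-1j * w * dt)) @ v.conj().T

def trotter_error(dt, n=4, J=1.0, g=0.2):
    """Spectral-norm error of one first-order even/odd Trotter step."""
    H_even = sum(bond_term(i, n, J, g) for i in range(0, n - 1, 2))
    H_odd = sum(bond_term(i, n, J, g) for i in range(1, n - 1, 2))
    exact = evolve(H_even + H_odd, dt)
    split = evolve(H_even, dt) @ evolve(H_odd, dt)
    return np.linalg.norm(exact - split, ord=2)

if __name__ == "__main__":
    for dt in (0.04, 0.02, 0.01):
        print(dt, trotter_error(dt))
```

Halving dt should reduce the single-step error by roughly a factor of four, the \(O({dt}^{2})\) behaviour that the even-bond construction is reported to improve upon for translationally invariant states.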

a Time-evolution circuit: The probability of measuring \({\left|0\right\rangle }^{\otimes N}\) at the output, after post-selecting on the top two qubits on the right-hand side, gives an approximation to \({\lambda }^{2}\), the square of the principal eigenvalue of the transfer matrix (indicated by the dashed red lines). This circuit provides a cost function whose optimisation over \(U^{\prime}\) gives the state at time t + dt after starting at time t with the state parametrised by U. b Factorisation of the MPS and Time-evolution unitaries: The unitary U describing the iMPS quantum state of the system is parametrised on the circuit as shown. This is reduced from the full parametrisation of a two-qubit unitary in order to enable shallower circuits. There is an additional redundancy of the first z-rotation on the reference qubit state \(\left|0\right\rangle\), and two further angles contained in the parametrisation do not change through the dynamical quantum phase transition that we study. c Factorisation of the time-evolution unitary: The two-site time-evolution unitary is factorised to the Rainbow gate set as shown. This is one of the more costly parts of the simulation in terms of circuit depth.

The transfer matrix is indicated by the part of the circuit in Fig. 4a between the red dashed lines. Time-evolution cost functions can be obtained using the power method to approximate the principal eigenvalue of this transfer matrix. The circuit shown in Fig. 4a contains two powers of the transfer matrix, together with an approximation to the bottom fixed point of the transfer matrix equal to the identity and an approximation to the top fixed point constructed from a contraction of U with \({U^{\prime} }^{{\dagger} }\) with post-selection over the top two qubits. These approximations to the top and bottom fixed points are accurate to \(O({dt}^{2})\) so that overall, in the absence of errors, the circuit in Fig. 4a gives an approximation to the square of the principal eigenvalue of the transfer matrix to \(O({dt}^{2})\). Cost functions constructed at different orders of the power method and with different approximations to the fixed points are discussed in Supplementary Note 2.

In addition to these strategies for managing errors and making a good choice of qubits, we also require a degree of error mitigation. We average the results obtained from four copies of the time-evolution circuits: running two circuits in parallel and repeating to a total of four circuits. A Loschmidt echo is used to mitigate depolarisation errors; we deduce a rescaling by taking the circuit in Fig. 4a, setting \(U^{\prime}=U\) and including time-evolution unitaries with a negligible time step in comparison to dt. In this way, the circuit has a similar structure to our target circuit, but has a theoretical value close to unity.

The results of simulation with the circuit in Fig. 4a are shown in Fig. 5a, b. These results comprise two parts: (a) a demonstration that the circuit in Fig. 4a captures the true dynamics when run in the absence of noise; and (b) measurement of the time-evolution cost-function on the Rainbow chip. Crucially, the optimum value of the measured cost function (measured for each time-step in the evolution along a linear interpolation from the initial parameters through the optimum) is in the correct place. In this sense, the time-evolution shown in Fig. 5a is the output that would be obtained by full stochastic optimisation on the Rainbow chip.

a Dynamical Quantum Phase Transition in the Quantum Ising Model: The dynamics of the transverse field Ising model can be obtained analytically and form a good basis for the comparison with our quantum circuits. These are shown in blue and compared with dynamics obtained from numerically exact optimisation of the principal eigenvalue of the transfer matrix within our ansatz shown in orange, and the numerical optimisation of the circuits shown in Fig. 4 in the absence of noise shown in green. The results show that the circuit cost function can faithfully track the dynamics. b Cost-function evaluated on Rainbow: The cost function evaluated along a linear interpolation in the eight parameters from U and extending through the exact update is shown for each of the indicated time-steps in (a). The cost-function is rescaled by a Loschmidt echo obtained by overlapping the left hand of the circuit in (a), including the time-evolution operator, with its Hermitian conjugate. The optimum value of the rescaled circuit coincides with that calculated in a classical simulation without error.

The dynamical quantum phase transition studied in Fig. 5a is a stringent test of our time-evolution algorithm. Starting with the parametrised groundstate at g = 1.5 and evolving with g = 0.2, the logarithm of the overlap of the initial state with the time-evolved state, \(-\log|\langle \psi (0)|\psi (t)\rangle|\), shows periodic partial revivals (corresponding to the minima in the plot in Fig. 5a) and dynamical quantum phase transitions (corresponding to the cusps in the plot in Fig. 5a). Observing these features requires a delicate cancellation of phase coherences in the wavefunction. Our results demonstrate that the Rainbow device is capable of accurately capturing the subtle features of quantum time-evolution.
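The analytic benchmark for such a quench can be sketched from the free-fermion solution. The code below assumes the standard result that, for a quench \({g}_{0}\to {g}_{1}\), each momentum mode contributes a factor \(1-{\sin }^{2}(2{\phi }_{k}){\sin }^{2}({\epsilon }_{k}t)\) to \(|\langle \psi (0)|\psi (t)\rangle {|}^{2}\), where \(2{\phi }_{k}\) is the angle between the vectors \(({g}_{0}-J\cos k,J\sin k)\) and \(({g}_{1}-J\cos k,J\sin k)\) and \({\epsilon }_{k}\) is twice the length of the second vector. The normalisation per site, the energy convention (Pauli operators) and the function names are assumptions, so the time axis may differ from Fig. 5a by a convention-dependent factor.

```python
import numpy as np

def quench_rate_function(t, g0, g1, J=1.0, n_k=4001):
    """-(1/N) log|<psi(0)|psi(t)>| for a quench g0 -> g1 in the infinite chain."""
    k = (np.arange(n_k) + 0.5) * np.pi / n_k          # midpoints of [0, pi]
    d0 = np.stack([g0 - J * np.cos(k), J * np.sin(k)])
    d1 = np.stack([g1 - J * np.cos(k), J * np.sin(k)])
    n0 = np.linalg.norm(d0, axis=0)
    n1 = np.linalg.norm(d1, axis=0)
    cos2phi = np.sum(d0 * d1, axis=0) / (n0 * n1)     # cosine of the angle between d0, d1
    eps = 2.0 * n1                                    # post-quench mode energy
    p = 1.0 - (1.0 - cos2phi**2) * np.sin(eps * t) ** 2
    # |G|^2 = prod_{k>0} p_k, so -(1/N) log|G| = -(1/4) * mean_k log p_k
    return -0.25 * np.mean(np.log(np.maximum(p, 1e-300)))

def critical_times(g0, g1, J=1.0, n_max=3):
    """Times at which the rate function is non-analytic, if the quench crosses g = J."""
    cos_k = (g0 * g1 + J**2) / (J * (g0 + g1))
    if abs(cos_k) > 1:
        return np.array([])                           # no mode with cos(2 phi_k) = 0
    eps = 2.0 * np.sqrt(g1**2 + J**2 - 2.0 * g1 * J * cos_k)
    return (np.arange(n_max) + 0.5) * np.pi / eps

if __name__ == "__main__":
    print("critical times:", critical_times(1.5, 0.2))
    for t in np.linspace(0.0, 3.0, 7):
        print(t, quench_rate_function(t, 1.5, 0.2))
```

Cusps appear only when a momentum exists for which the pre- and post-quench vectors are orthogonal, which in this model requires the quench to cross the critical point.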

Discussion

We have demonstrated that tensor network methods endow NISQ devices with the power to simulate the groundstate and dynamics of quantum critical systems. Such systems pose some of the greatest challenges for classical simulation techniques and provide a forum with genuine potential for quantum advantage. Our algorithms operate directly in the thermodynamic limit, avoiding difficulties of finite-size scaling near criticality. We demonstrate that translationally-invariant variational approximations to the groundstate can be optimised on current devices to good accuracy even at the quantum critical point, and present a cost function for time-evolution (a significant simplification over previous proposals) that can faithfully track dynamics when implemented on the Rainbow device.

An explicit, stochastic optimisation of this cost function remains a subject for future study. There are many different schemes available for such optimisations and choosing an appropriate one is an important task. Moreover, sampling costs of the combined measurement of overlaps and stochastic optimisation of the updated state ansatz must be kept to realistic levels. Offsetting the accuracy with which the overlap of any particular trial update is determined against the number of potential updates that are tested provides a further space for optimisation. The quantum circuit effectively provides stochastic corrections to a classical model guiding the choice of test updates and poses an interesting problem in quantum control44,45,46. Ultimately, the task is one of performing tomography of the updated state to an MPS approximation47.

Effective error mitigation is crucial in using NISQ devices for quantum many-body simulation, and forms a central part of our discussion. Tensor networks permit a systematic tradeoff between the error-free accuracy of circuits and the errors incurred due to circuit infidelity. For example, the time-evolution circuits present a suite of refinement parameters (such as bond order, Trotterisation order and timestep, and order of the power method) that can be chosen to optimise performance on a given device, together with a confusion matrix to correct for measurement errors and a Loschmidt echo to correct for depolarisation. Other strategies can be deployed as the accuracy of simulations improves. Perhaps the most exciting follow the structure of the tensor networks themselves; averaging over the gauge freedom intrinsic to the auxiliary space of the tensor network states is a natural first step. Combining with the matrix product operator encoding of errors discussed in ref. 21 has potentially only constant overhead in circuit depth if the staircase of state and MPO unitaries are aligned (in a similar manner to the interferometric measurement of topological string order parameters in ref. 15).

Extensions include using higher bond order to refine simulation accuracy. High bond order quantum matrix product states can be generated with low depth (\({{{{{{{\mathcal{O}}}}}}}}(\log (D))\)) circuits. Such circuits have previously been shown to effectively capture states generated during time evolution, and show potential to achieve quantum advantage in finding ground states compared to classical tensor network simulations48,49 (classical algorithms scale polynomially with D yielding an advantage for the quantum circuit that is exponential in D). In the present case, improvements expected from increasing the bond order fall below the resolution of our experiments. Other problems (such as the antiferromagnetic Heisenberg model) show a greater improvement with bond order and may be feasible. Finite circuit fidelity is currently the main barrier to realising quantum advantage. However, incremental increases in fidelity can have dramatic impact due to the exponential quantum advantage. Though rastering one-dimensional MPS algorithms over a higher-dimensional system is competitive in classical applications, in the quantum setting, it may be favourable to take more direct advantage of the connectivity of the quantum device. Our code can be modified to accommodate the two-dimensional sequential circuits proposed in ref. 23, and the isometric two-dimensional algorithms of ref. 24 are another promising avenue.

The use of machine learning in many-body quantum physics is an intriguing recent development18,50,51,52. One use has been identifying phases in classical simulation (e.g., Monte Carlo simulations) of many-body quantum systems, thereby potentially enhancing the ability of these established approaches to reveal new physics. In its quantum application, such an approach might simultaneously mitigate intrinsic errors in the simulation and errors due to the infidelity of the device22. Combining with tensor network realisation of the machine learning components19,20 may enable a compact realisation of these combined aims. Indeed, combining the methods of ref. 21 with our scheme would constitute precisely this.

Tensor networks provide a systematic way to structure quantum simulations on NISQ devices. Our results show how they can be used to analyse quantum critical systems in the thermodynamic limit. There are many promising ways in which their application can be extended.

Methods

Fixed-point equations

A translationally-invariant MPS formally requires an infinitely wide, infinitely deep quantum circuit to represent it (see Fig. 6a). As demonstrated in ref. 17, local observables of this translationally invariant state can be measured on a finite circuit by introducing the environment tensor V, which summarises the effects of distant parts of the quantum state on the local measurement (see Fig. 6b). This tensor is determined as a function of the state unitary, U, by solving the fixed-point equations shown in Fig. 6c. The circuits implemented in Fig. 1 correspond to the terms involved in the trace distance between circuits on the left- and right-hand side of the fixed-point equation, Fig. 6c: \({\rm{Tr}}{({\hat{\rho }}_{L}-{\hat{\rho }}_{R})}^{2}\). There are three terms corresponding to the trace norm of each side of the equation and the cross terms between them. In practice, when optimising the energy, the cost-function for the fixed-point equations and the various contributions to the energy are optimised simultaneously using SPSA.

SPSA is used to optimise a cost function given by

where the first term gives the expected energy of the state, and the latter three terms penalise inconsistencies between the state and environment tensors. The energy term is calculated using the three circuits in Fig. 1a, and the three consistency terms using the circuits in Fig. 1c ordered from top to bottom. The last three terms are zero when the environment satisfies the fixed point equation in Fig. 6c. This cost function is minimised by the translationally invariant ground state of the given Hamiltonian.
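For orientation, expanding the square in the cost function quoted in the main text, \(\langle \mathcal{H}\rangle+{\rm{Tr}}{({\hat{\rho }}_{L}-{\hat{\rho }}_{R})}^{2}\), gives one explicit form with an energy term followed by three consistency terms. The symbol C and the ordering and relative weighting of the three terms are assumptions; only the expansion itself follows from the expression in the main text.

```latex
C(U, V) = \langle \mathcal{H} \rangle
        + \mathrm{Tr}\,\hat{\rho}_{L}^{2}
        + \mathrm{Tr}\,\hat{\rho}_{R}^{2}
        - 2\,\mathrm{Tr}\,\hat{\rho}_{L}\hat{\rho}_{R}
```

The last three terms sum to \({\rm{Tr}}{({\hat{\rho }}_{L}-{\hat{\rho }}_{R})}^{2}\ge 0\), which vanishes exactly when \({\hat{\rho }}_{L}={\hat{\rho }}_{R}\).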

Error mitigation strategies

We deploy a number of error mitigation strategies in order to obtain our results, summarised as follows:

The measured single- and two-qubit gate fidelities vary across the Rainbow device, and vary over time on each qubit. We can reduce the error in circuit executions by picking connected sets of qubits that have the lowest error rates. This is best done empirically by running a reference circuit across the target qubits and choosing the qubits that have the highest fidelity with the known target state. To best capture the effects of noise on the circuits that we are using, we apply an MPS circuit and then the Hermitian conjugate of the same MPS circuit. We measure the probability of returning to the initial all-zeros state after N applications of this Loschmidt echo circuit and use this to determine the best qubit sequences to use. We also employed qubit averaging, where the same circuit was run on multiple non-intersecting sets of qubits in parallel and the results averaged.

The readout of quantum states on the Rainbow device can be biased towards certain bit strings. To correct for this, we learn how the readout is biased by measuring states that produce fixed bit-string outputs. We encode any deviations from the known bit strings in a confusion matrix, which can be inverted and used to correct the measurement bias. The size of the confusion matrix is \(2^{M}\times 2^{M}\), where M is the number of measured qubits. For all circuits in this work where this error mitigation strategy is applied, the number of measured qubits is only two. In principle, this method may not be scalable for quantum MPS methods. The size of the confusion matrix needed to correct the fixed-point calculation circuits grows polynomially with the bond dimension of the MPS, and can potentially erode any performance benefits from running MPS simulations on a quantum device. However, where we are interested in the relative rather than absolute values of measured circuits, such as in our time-evolution and fixed-point circuits, this bias can be neglected without adversely affecting results.
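A minimal sketch of this correction for M = 2 measured qubits is given below. The helper names and the calibration counts are hypothetical, chosen only to illustrate the idea; the linear solve is written out in plain Python so that the example is self-contained.

```python
def confusion_matrix(calibration_counts, n_qubits):
    """Column j holds the measured distribution when basis state j was prepared."""
    dim = 2 ** n_qubits
    labels = [format(i, f"0{n_qubits}b") for i in range(dim)]
    matrix = [[0.0] * dim for _ in range(dim)]
    for j, prepared in enumerate(labels):
        counts = calibration_counts[prepared]
        shots = sum(counts.values())
        for i, measured in enumerate(labels):
            matrix[i][j] = counts.get(measured, 0) / shots
    return matrix


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


# Illustrative calibration data: prepared bit string -> measured counts.
calibration = {
    "00": {"00": 960, "01": 20, "10": 20},
    "01": {"01": 900, "00": 80, "11": 20},
    "10": {"10": 910, "00": 70, "11": 20},
    "11": {"11": 850, "01": 70, "10": 80},
}
C = confusion_matrix(calibration, n_qubits=2)

true_probs = [0.5, 0.0, 0.0, 0.5]   # an ideal distribution over 00, 01, 10, 11
measured = [sum(C[i][j] * true_probs[j] for j in range(4)) for i in range(4)]
corrected = solve(C, measured)       # undo the readout bias
print(corrected)
```

With finite shot noise the corrected vector can contain small negative entries, so in practice it may need to be clipped and renormalised. The matrix has \(2^{M}\) rows and columns, which is why the approach is comfortable for two measured qubits and becomes costly as M grows.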

Depolarisation is a major source of error in the measured outputs of our quantum circuits. We correct for this using a Loschmidt echo in two different forms for our energy optimisation and time-evolution circuits1,36. In the case of optimisation of the energy of the Ising model, we know the energy of the ground state when the coefficient of the interacting term is set to 1 and the single-site terms are set to 0. We also know the parameters in our ansatz corresponding to this ground state. We measure the energy of this state on the device to obtain a rescaling factor, and correct all subsequent interacting terms measured on the device with this value. For time-evolution circuits, we use the fact that the overlap of a circuit with its Hermitian conjugate should always be 1. We choose a test circuit that is representative of the complexity of our target circuits and measure its overlap with its Hermitian conjugate. The measured overlaps of the target circuits are divided by this value to compensate for depolarisation. In order to reflect the complexity of the time-evolution circuits in Fig. 5, we use a circuit MPS state and apply the time-evolution unitary with a time step that is negligible compared to dt, before finally overlapping with the Hermitian conjugate of the circuit MPS state.
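Both forms of the rescaling amount to dividing by a measured reference value, as in the sketch below. The function names and all numerical values are illustrative assumptions, not device data; the reference value of 1.0 for the interaction term is taken as a magnitude, since the sign depends on the Hamiltonian convention.

```python
def rescaling_factor(measured_reference, exact_reference):
    """Ratio by which noise has shrunk a quantity whose exact value is known."""
    return measured_reference / exact_reference


def rescale(measured_value, factor):
    """Divide a measured value by the reference factor to undo the shrinkage."""
    return measured_value / factor


# Energy optimisation: reference state with a known interaction-term value.
energy_factor = rescaling_factor(measured_reference=0.62, exact_reference=1.0)
corrected_interaction = rescale(0.31, energy_factor)

# Time evolution: a representative echo circuit whose ideal overlap is 1.
echo_factor = rescaling_factor(measured_reference=0.80, exact_reference=1.0)
corrected_overlap = rescale(0.40, echo_factor)

print(corrected_interaction, corrected_overlap)
```

The correction is only as good as the match between the reference circuit and the target circuits, which is why the reference is chosen to reflect the depth and structure of the circuits being corrected.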

Floquet calibration1,53 has been developed to correct the angles on the native Rainbow two qubit gate so that desired two qubit unitaries can be applied more accurately. Floquet calibration proceeds by repeating a two qubit gate multiple times on the chip to amplify small discrepancies in the angles that are being applied. The measured deviations can then be compensated to increase the fidelity of the implemented circuit with the intended circuit. This method was found to have only a small impact on the energy measurements, and was not as effective as the confusion matrix and Loschmidt rescaling techniques. For this reason, we did not apply Floquet calibration to our final calculations of energy or time evolution circuits.
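The amplification idea can be illustrated with a deliberately simplified single-qubit toy model, which is an assumption of this sketch and not the two-qubit procedure used on the device. A gate intended to rotate by \(\pi\) about X instead rotates by \(\pi+\delta\); after an even number n of repetitions the excited-state population is \(\sin^{2}(n\delta/2)\), so a small \(\delta\) becomes easier to resolve as n grows.

```python
import math


def excited_population(delta, n_gates):
    """P(1) after n_gates X-rotations by (pi + delta), starting from |0>."""
    return math.sin(n_gates * (math.pi + delta) / 2.0) ** 2


def estimate_angle_error(p_excited, n_gates):
    """Invert P(1) = sin^2(n * delta / 2) for |delta|.

    Valid for even n_gates and n_gates * |delta| < pi; the sign of delta
    is not recovered by this simple estimate.
    """
    return 2.0 * math.asin(math.sqrt(p_excited)) / n_gates


delta = 0.01                                   # hypothetical over-rotation in radians
p_short = excited_population(delta, 2)         # barely distinguishable from zero
p_long = excited_population(delta, 40)         # error amplified by repetition
estimate = estimate_angle_error(p_long, 40)
print(p_short, p_long, estimate)
```

Once the deviation has been estimated in this way, the programmed angle can be shifted to compensate for it.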

Data availability

The sampled data used in this work are available in a Zenodo repository at https://zenodo.org/badge/latestdoi/521165741 (ref. 54).

Code availability

The code used for the data analysis and circuit construction is available at https://github.com/jamesdborin/qmps_syc and https://zenodo.org/badge/latestdoi/521165741 (ref. 54).

References

Arute, F. et al. Observation of separated dynamics of charge and spin in the Fermi-Hubbard model. Preprint at https://arxiv.org/abs/2010.07965 (2020).

Babbush, R., Berry, D. W. & Neven, H. Quantum simulation of the Sachdev-Ye-Kitaev model by asymmetric qubitization. Phys. Rev. A 99, 040301 (2019).

Stanisic, S. et al. Observing ground-state properties of the Fermi-Hubbard model using a scalable algorithm on a quantum computer. Preprint at https://arxiv.org/abs/2112.02025 (2021).

Tazhigulov, R. N. et al. Simulating challenging correlated molecules and materials on the Sycamore quantum processor. Preprint at https://arxiv.org/abs/2203.15291 (2022).

Satzinger, K. et al. Realizing topologically ordered states on a quantum processor. Science 374, 1237–1241 (2021).

Chiaro, B. et al. Direct measurement of nonlocal interactions in the many-body localized phase. Phys. Rev. Res. 4, 013148 (2022).

Sachdev, S. Quantum criticality: competing ground states in low dimensions. Science 288, 475–480 (2000).

Coleman, P. & Schofield, A. J. Quantum criticality. Nature 433, 226–229 (2005).

Sachdev, S. Quantum phase transitions (Cambridge university press, 2011).

Hertz, J. A. Quantum critical phenomena. Phys. Rev. B 14, 1165 (1976).

Parcollet, O., Biroli, G. & Kotliar, G. Cluster dynamical mean field analysis of the Mott transition. Phys. Rev. Lett. 92, 226402 (2004).

Sandvik, A. W. Evidence for deconfined quantum criticality in a two-dimensional Heisenberg model with four-spin interactions. Phys. Rev. Lett. 98, 227202 (2007).

Schön, C., Solano, E., Verstraete, F., Cirac, J. I. & Wolf, M. M. Sequential generation of entangled multiqubit states. Phys. Rev. Lett. 95, 110503 (2005).

Schön, C., Hammerer, K., Wolf, M. M., Cirac, J. I. & Solano, E. Sequential generation of matrix-product states in cavity QED. Phys. Rev. A 75, 032311 (2007).

Smith, A., Jobst, B., Green, A. G. & Pollmann, F. Crossing a topological phase transition with a quantum computer. Phys. Rev. Res. 4, L022020 (2022).

Lin, S.-H., Dilip, R., Green, A. G., Smith, A. & Pollmann, F. Real- and imaginary-time evolution with compressed quantum circuits. PRX Quantum 2, 010342 (2021).

Barratt, F. et al. Parallel quantum simulation of large systems on small NISQ computers. NPJ Quantum Inform. 7, 1–7 (2021).

Carrasquilla, J. & Melko, R. G. Machine learning phases of matter. Nat. Phys. 13, 431–434 (2017).

Stoudenmire, E. & Schwab, D. J. Supervised learning with tensor networks. Adv Neural Inform. Process. Syst. 29 (2016).

Huggins, W., Patil, P., Mitchell, B., Whaley, K. B. & Stoudenmire, E. M. Towards quantum machine learning with tensor networks. Quantum Sci. Technol. 4, 024001 (2019).

Guo, Y. & Yang, S. Quantum error mitigation via matrix product operators. Preprint at https://arxiv.org/abs/2201.00752 (2022).

Herrmann, J. et al. Realizing quantum convolutional neural networks on a superconducting quantum processor to recognize quantum phases. Nat. Commun. 13, 4144 (2022).

Banuls, M.-C., Pérez-García, D., Wolf, M. M., Verstraete, F. & Cirac, J. I. Sequentially generated states for the study of two-dimensional systems. Phys. Rev. A 77, 052306 (2008).

Zaletel, M. P. & Pollmann, F. Isometric tensor network states in two dimensions. Phys. Rev. Lett. 124, 037201 (2020).

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510 (2019).

Pollmann, F., Mukerjee, S., Green, A. G. & Moore, J. E. Dynamics after a sweep through a quantum critical point. Phys. Rev. E 81, 020101 (2010).

Heyl, M., Polkovnikov, A. & Kehrein, S. Dynamical quantum phase transitions in the transverse-field Ising model. Phys. Rev. Lett. 110, 135704 (2013).

Guo, X.-Y. et al. Observation of a dynamical quantum phase transition by a superconducting qubit simulation. Phys. Rev. Appl. 11, 044080 (2019).

Xu, K. et al. Probing dynamical phase transitions with a superconducting quantum simulator. Sci. Adv. 6, eaba4935 (2020).

Bañuls, M. C., Cirac, J. I. & Hastings, M. B. Strong and weak thermalization of infinite nonintegrable quantum systems. Phys. Rev. Lett. 106, 050405 (2011).

Hallam, A., Morley, J. & Green, A. G. The Lyapunov spectra of quantum thermalisation. Nat. Commun. 10, 1–8 (2019).

Azad, F., Hallam, A., Morley, J. & Green, A. Phase transitions in the classical simulability of open quantum systems. Preprint at https://arxiv.org/abs/2111.06408 (2021).

Schollwöck, U. The density-matrix renormalization group in the age of matrix product states. Ann. Phys. (NY) 326, 96–192 (2011).

Perez-Garcia, D., Verstraete, F., Wolf, M. M. & Cirac, J. I. Matrix product state representations. Quantum Info. Comput. 7, 401–430 (2007).

Orús, R. A practical introduction to tensor networks: Matrix product states and projected entangled pair states. Ann. Phys. (NY) 349, 117–158 (2014).

Mi, X. et al. Information scrambling in quantum circuits. Science 374, 1479–1483 (2021).

Spall, J. C. et al. Multivariate stochastic approximation using a simultaneous perturbation gradient approximation. IEEE Transactions Autom. Control 37, 332–341 (1992).

Barison, S., Vicentini, F. & Carleo, G. An efficient quantum algorithm for the time evolution of parameterized circuits. Quantum 5, 512 (2021).

Berthusen, N. F., Trevisan, T. V., Iadecola, T. & Orth, P. P. Quantum dynamics simulations beyond the coherence time on noisy intermediate-scale quantum hardware by variational Trotter compression. Phys. Rev. Res. 4, 023097 (2022).

Trotter, H. F. On the product of semi-groups of operators. Proc. Am. Math. Soc. 10, 545–551 (1959).

Suzuki, M. Improved Trotter-like formula. Phys. Lett. A 180, 232–234 (1993).

Childs, A. M., Su, Y., Tran, M. C., Wiebe, N. & Zhu, S. Theory of Trotter error with commutator scaling. Phys. Rev. X 11, 011020 (2021).

Haegeman, J. et al. Time-dependent variational principle for quantum lattices. Phys. Rev. Lett. 107, 070601 (2011).

Vandersypen, L. M. & Chuang, I. L. NMR techniques for quantum control and computation. Rev. Mod. Phys. 76, 1037 (2005).

Dong, D. & Petersen, I. R. Quantum control theory and applications: a survey. IET Control Theor. Applications 4, 2651–2671 (2010).

Bukov, M. et al. Reinforcement learning in different phases of quantum control. Phys. Rev. X 8, 031086 (2018).

Lanyon, B. P. et al. Efficient tomography of a quantum many-body system. Nat. Phys. 13, 1158–1162 (2017).

Lin, S.-H., Dilip, R., Green, A. G., Smith, A. & Pollmann, F. Real- and imaginary-time evolution with compressed quantum circuits. PRX Quantum 2, 010342 (2021).

Haghshenas, R., Gray, J., Potter, A. C. & Chan, G. K.-L. Variational power of quantum circuit tensor networks. Phys. Rev. X 12, 011047 (2022).

Rodriguez-Nieva, J. F. & Scheurer, M. S. Identifying topological order through unsupervised machine learning. Nat. Phys. 15, 790–795 (2019).

Ch’Ng, K., Carrasquilla, J., Melko, R. G. & Khatami, E. Machine learning phases of strongly correlated fermions. Phys. Rev. X 7, 031038 (2017).

Broecker, P., Carrasquilla, J., Melko, R. G. & Trebst, S. Machine learning quantum phases of matter beyond the fermion sign problem. Sci. Rep. 7, 1–10 (2017).

Neill, C. et al. Accurately computing the electronic properties of a quantum ring. Nature 594, 508–512 (2021).

Dborin, J. et al. Simulating groundstate and dynamical quantum phase transitions on a superconducting quantum computer (2022). https://github.com/jamesdborin/qmps_syc.

Jordan, P. & Wigner, E. P. About the Pauli exclusion principle. Z. Phys. 47, 14–75 (1928).

Lieb, E., Schultz, T. & Mattis, D. Two soluble models of an antiferromagnetic chain. Ann. Phys. 16, 407–466 (1961).

Acknowledgements

J.D. and A.G.G. were supported by the EPSRC through grants EP/L015242/1 and EP/S005021/1. V.M. is supported by EPSRC Prosperity Partnership grant EP/S516090/1. F.B. was supported by the Air Force Office of Scientific Research under Grant No FA9550-21-1-0123. We thank the Google Quantum AI team for their support and advice.

Author information

Contributions

The research was based on ideas conceived by A.G.G. and F.B. Code executed on the device was written by J.D. and V.W. T.E.O. helped devise and implement the error mitigation strategies used. E.O. and T.E.O. helped devise the experiments to run on the Google devices. The paper was written by A.G.G. and J.D.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Michael Hartmann, and the other anonymous reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dborin, J., Wimalaweera, V., Barratt, F. et al. Simulating groundstate and dynamical quantum phase transitions on a superconducting quantum computer. Nat Commun 13, 5977 (2022). https://doi.org/10.1038/s41467-022-33737-4

DOI: https://doi.org/10.1038/s41467-022-33737-4
