Abstract

When the electric conductance of a nano-sized metal is measured at low temperatures, it often exhibits complex but reproducible patterns as a function of external magnetic fields called quantum fingerprints in electric conductance. Such complex patterns are due to quantum–mechanical interference of conduction electrons; when thermal disturbance is feeble and coherence of the electrons extends all over the sample, the quantum interference pattern reflects microscopic structures, such as crystalline defects and the shape of the sample, giving rise to complicated interference. Although the interference pattern carries such microscopic information, it looks so random that it has not been analysed. Here we show that machine learning allows us to decipher quantum fingerprints; fingerprint patterns in magneto-conductance are shown to be transcribed into spatial images of electron wave function intensities (WIs) in a sample by using generative machine learning. The output WIs reveal quantum interference states of conduction electrons, as well as sample shapes. The present result augments the human ability to identify quantum states, and it should allow microscopy of quantum nanostructures in materials by making use of quantum fingerprints.

Similar content being viewed by others

Introduction

In metals at low temperatures, the quantum–mechanical wave nature of conduction electrons comes to the fore, which can be described in terms of their wave functions. The phase of the wave function can be modulated by a magnetic field, causing wave interference of electrons as a function of external fields1,2. The simplest case can be found in a ring sample where the wave propagation is completely restricted along the circumference. In such a sample, an electron circling along the ring gains a phase proportional to the magnetic field inside the ring. If the phase is an even multiple of π, constructive interference of the electrons enhances the wave nature and electric conductance. When the phase is an odd multiple of π, on the other hand, destructive interference reduces electric conductance, giving rise to a periodic oscillation of the conductance with respect to the external field, called an Aharonov-Bohm oscillation3,4,5,6. In a real nanometal, however, there are many scatterers for the electron waves, such as impurities and nanostructures (Fig. 1a, b). As a result, interference among all electron waves propagating among the scatterers piles up to modulate the conductance, giving birth to a complicated magnetic field dependence reflecting the distribution of the scatterers7,8 (Fig. 1c). These complex patterns of electric conductance are called conductance fluctuations or quantum fingerprints in electric conductance1. The fingerprint thus carries information concerning the quantum electron states. Nevertheless, it has been considered difficult to interpret it due to its complexity. In the literature (refs. 9,10,11), conductance was shown to be predicted from microscope information such as defect positions by using machine learning methods. Here we show that, by developing machine learning12,13 for quantum interference, fingerprint patterns in magneto-conductance can be transcribed into a real image of electron WIs and a sample shape.

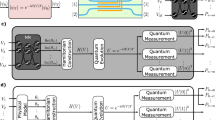

a, b A schematic illustration of a magneto-conductance measurement in a small metal sample (a) and its magnified view (b). The green stripe pattern describes electron wave function intensity (WI) in the sample with defects. c Conductance change \({\Delta}G\) for a nanowire sample. e, h, and a are the elementary charge, the Plank constant, and the lattice constant in the calculation model, respectively. \({\phi }_{0}=h/e\) is the magnetic flux quantum. d Concept of Quantum Geometric Decoding based on a deep neural network. First, the networks (A) and (B) compress the WI images into the latent space. Then, the networks (C) and (B) output a geometry image including the sample shape, defect distribution, and WI information from the input of the magneto-conductance.

Feature extraction and geometry generative deep-neural networks are combined to reconstruct electron WIs and sample shape images from the magneto-conductance data (Fig. 1d). We named the present network a quantum geometric decoder (QGD). As a training dataset, we use numerical-calculated conductance and WIs in two-dimensional nanowires with antidot defects exposed to magnetic fields (Fig. 2). The network (A) + (B) in the QGD (Fig. 1d) extracts essential features of the WI images using a dimensionality-reduction technique based on a variational autoencoder14,15 (VAE) (Fig. 3a). By training the network to compress the calculated real-space WI data onto a low dimensional latent space and to reconstruct the data from the latent-space data, the network (A) learns to convert the WI images into a vector in the latent space so as to best reproduce the original images using the network (B), and the network (B) to generate WI images from the compressed information represented by the vector (Fig. 3a). In the following, we will show that owing to the latent-space information, the QGD can find relations between complicated quantum interference and quantum fingerprints, which cannot be realised by conventional methods.

a A sample system for calculation with two antidot defects. One antidot is fixed at the upper centre of the nanowire, while the other is located so as not to overlap with the fixed one. Two leads are attached to the top and bottom ends of the sample to measure conductance. b Calculated WI distribution. The square of the absolute value of the calculated wave function is plotted in the sample region with 60 \({\times}\) 50 pixels. The WI values are normalised such that the sum of the intensity equals to unity for each antidot configuration; \({\sum }_{i=1}^{60}{\sum }_{j=1}^{60}{X}_{i,j}=1\), where \({{{\bf{X}}}}\) is a WI image, and the suffixes \(i,{j}\) represent the pixel label. We added zero padding with 5 sites to the left and right ends of the nanowire. c Magneto-conductance \(G\) for 10 samples with different defect distributions. Here, B is the magnetic flux density. d Normalised data of the calculated magneto-conductance \(\Delta G\) for the antidots distribution shown in a. \(\Delta G\) is obtained by subtracting the averaged \(G\ (\equiv {G}_{{{{{{\rm{ave}}}}}}})\) over all the nanowire configurations from the \(B\) dependence of \(G\ [\Delta G\left(B\right)\equiv G\left(B\right)-{G}_{{{{{{\rm{ave}}}}}}}(B)]\).

a, b The feature extraction network based on VAE, trained by using geometry images with WIs (a) and without WIs (b), and an example of input and output images. c, d UMAP data points generated in the latent space, trained with the geometry images together with WIs (c), and without WIs (d). \(\left(x,y\right)\) represents the location of the antidot defect. The data structure is mapped onto a three-dimensional space from the seven-dimensional latent space by using UMAP to visualise the data geometry. Each data point corresponds to one WI image and is coloured in blue or yellow depending on the parity of \(x\). e The definition of \(\Delta x\). f, g Images of the absolute difference between \(\left(x,y\right)\) data and \(\left(x+\Delta x,{y}\right)\) data, where \(\left(x,{y}\right)=\left(12,33\right)\), for \(\Delta x=1\) (f) and \(\Delta x=2\) (g), respectively. The RMS difference values are shown below the images. h The RMS differences at \((x,33)\).

Results

Calculation of wave function intensities and conductance

To prepare a training dataset, we perform numerical calculation; Fig. 2a shows a calculation model of the two-dimensional nanowire with a size of 60 × 50 single-orbital sites. For simplicity, defects in the nanowire are introduced as two antidots with a radius of 5 sites. One antidot is fixed around the upper centre of the system. With 1591 different antidot positions and five different random potentials applied to the systems, 7955 samples are prepared as a dataset. For each sample, we calculate WIs and two-terminal magneto-conductance by using a tight-binding method and Landauer’s formula (see “Methods”), where the magnetic field is introduced as a Peierls phase in the Landau gauge and inelastic scattering is not taken into consideration, assuming extremely low temperatures.

Figure 2c exemplifies the calculated magneto-conductance, G, for a sample as a function of the externally applied magnetic flux density B. Each conductance data exhibits different complex patterns. To highlight the patterns, we introduce the normalised magneto-conductance \(\Delta G(B)\) calculated by subtracting the averaged G over all the 7955 conductance data from the raw \(G(B)\) data (see Fig. 2d). We also calculate the wave functions under the zero magnetic flux density condition. We then obtain the WI images by squaring the modulus of the wave functions. Figure 2b shows WI images, where complicated quantum interference can be seen around the antidot defects. Zero padding16 with a width of 5 pixels is added to the left and right ends of each 60 × 50 pixel WI image, resulting in the final size of 60 × 60 pixels.

Quantum geometric decoder network

The QGD is trained to generate correct sample-shape images and quantum interference patterns from \(\Delta G(B)\) (Fig. 1d). To realise the generation, we combine a feature extraction network [(A) + (B) in Fig. 1d] and a geometry generative network [(C) + (B) in Fig. 1d] in the QGD. The feature extraction neural network is based on VAE14,15; VAE was shown to compress its input and extract the information necessary to reproduce the input on the output of the network14. The network converts a WI image with 3600 pixels into a seven-dimensional latent-space data [(A) in Fig. 1d] and then converts the data back to an image with 3600 pixels [(B) in Fig. 1d]. The network is trained so that the output image matches the input image (see Supplementary Fig. 1a and Tables 1, and 2 for more details). After the training, the latent space of the QGD acquires essential information to reconstruct the WI images.

The QGD can directly generate WI images from magneto-conductance data \(G\left(B\right)\) (Fig. 4a, b). First, the network (C) in Fig. 1d connects the input 101-points \(\Delta G\left(B\right)\) data and the seven-dimensional latent space, and then generates a WI image using the deconvolution part (B) of the VAE-based network. We trained the fully-connected neural network (C) so that the generated image well reproduces the input WI image associated with the magneto-conductance data (see Supplementary Fig. 1b and Table 3 for more details). Such a Y-shaped QGD was found to acquire an excellent capacity for WI generation as follows.

a, b The geometry generative network and an example of the input conductance and output deciphered image. \(\Delta G\) and B are the normalised magneto-conductance and the magnetic flux density, respectively. c The sample system whose magneto-conductance is shown in a. d The absolute difference image between the original image (inset) and the output deciphered image shown in b. e, f, g Examples of the input conductance (e), output deciphered images (f), and original images (g). Colour scales of the intensity images in f and g are the same as those of b and the inset to d, respectively.

Deciphering quantum fingerprint in magneto-conductance

Figure 4a, b shows a typical result of deciphering a quantum fingerprint into a WI image by using the QGD. Surprisingly, the QGD spontaneously generates a clear WI image just from conductance data (see Fig. 4a, b). The generated WI image and sample shape coincide with a separately calculated WI image (Fig. 4d) and the corresponding sample shape (Fig. 4c), respectively. To evaluate the generation fidelity, we calculated the root mean squared (RMS) error between the generated and calculated images for each sample in the test dataset (see “Methods” for more details). The average RMS error is \(2.1\times {10}^{-5}\). More examples are shown in Fig. 4e–g; WI images are almost correctly generated for all the magneto-conductance inputs. In addition to the locations of the antidots, significantly, the quantum interference patterns of the WIs are well generated from only the magneto-conductance data (see the fringe patterns in Fig. 4f). We also checked that similar generalisation performance of the QGD can be obtained even if some different system parameters are used (see “Methods”). The results show that, although a quantum fingerprint pattern looks random, it contains information on the quantum interference in the sample, and the QGD can interpret it.

Discussion

To check the performance of the feature extraction network [(A) + (B) in Fig. 1d], in Fig. 3a, we show the result of the WI autoencoding by the trained network. The output image clearly reproduces the input WI image (see Supplementary Figs. 3 and 10 for detailed error profiles), which can be attributed to the fact that the sharpness of the image generation is an advantage of the VAE. The RMS error is \(2.0\times {10}^{-5}\), comparable to the error of the QGD output. More examples are shown in Supplementary Fig. 3a–c. It is surprising that such complicated geometry information is reconstructed using only the seven-dimensional data in the latent space, which is three orders of magnitude smaller than the dimension of the input data: 3600. This demonstrates the excellent compressing ability of the feature extraction network.

The above decipherment using the QGD means that the present network can analyse the quantum fingerprints to reconstruct the microscopic images (geometry and WIs) in the sample. In the network, information on the interference is compressed into the seven-dimensional latent space. Each WI image is convoluted into a Gaussian distribution in the latent space, and the set of the distribution forms a global geometric structure describing similarity among the states. We now visualise and discuss the geometric structure of the data in the latent space. To this end, we use a dimensionality reduction method called the universal manifold approximation and projection (UMAP)17, which converts data points in the seven-dimensional latent space into those in a three-dimensional space while keeping their topological structure. Figure 3c shows the obtained three-dimensional representation of the latent-space data. Owing to the variational Bayesian approach of VAE14, VAE was found to construct well-organised data structures in the latent space (see also Supplementary Fig. 12). In the present feature extraction network, one can see that the dataset forms a two-dimensional curved surface in the latent space (Fig. 3c), but the surface shows a structure with some thickness (see, for instance, the regions with a dual-layered structure indicated by the red arrows in Fig. 3c). The two-dimensional nature of the dataset is consistent with the degree of freedom of the antidot location \((x,y)\). However, the appearance of the data-point scattering along the thickness direction cannot be explained by the location degree of freedom.

In order to understand the geometric structure, we performed a control experiment using geometry images without WIs (compare Fig. 3a, c and b, d and see “Methods” for details of the geometry images without WIs). In contrast to the images which contain WI as well as sample-shape images, the dataset without WI images forms a simple plane without the thickness structures in the three-dimensional representation (see Fig. 3d). By comparing the data in the latent space, we found that the dataset generated from the inputs with WI images is about ten times thicker than that generated from the inputs without WI images, where the thickness is defined as the variance of the data points (see “Methods” and Supplementary Fig. 11). The result suggests that the thickness structures, such as the dual-layered structure, carry electron information represented by WIs.

The dual-layered structure was found to be related clearly to the antidot location and interference; the upper layer consists of the data points for even x, while the lower one for odd x in the present parametrisation [see the points coloured in yellow (for even x) and blue (for odd x) around the red arrows in Fig. 3c]. To show how the interference patterns change with the antidot location \(\left(x,y\right)\), we calculate the absolute difference and the RMS difference of the WI images between \(\left(x,y\right)\) and \((x+\Delta x,y)\). Figure 3f, g shows the results at \(\left(x,y\right)=(12,33)\) for \(\Delta x=1,2\), respectively. The absolute difference for \(\Delta x=2\) is less than that for \(\Delta x=1\) [compare the images between Fig. 3f and g, e.g., at the left and right sides of the antidot position \(\left(x,y\right)\)]. In Fig. 3h, we plot the RMS difference in each image as a function of x at \(y=33\). For most of the x values, the RMS difference for \(\Delta x=2\) turned out to be less than that for \(\Delta x=1\), indicating that the WI image for the antidot position \(\left(x,y\right)\) has similar features to that for \((x+\Delta x,y)\) with \(\Delta x=\pm 2,\pm 4,\pm 6\cdots\) in the present parametrisation. In fact, we found that, near the antidot, the crest and trough patterns of the WIs agree with each other between \(\left(x,y\right)\) and \(\left(x+\Delta x,y\right)\) for even \(\Delta x\) numbers, while those are reversed for odd \(\Delta x\), suggesting that, in the present study, the constructive and deconstructive interference gives rise to the observed dual-layered structure in the latent space (see “Methods” for more details). The result suggests that the electron information, such as interference, is encoded in the latent space as the thickness-dimension information, which emerges on the two-dimensional curved surface representing the antidot location. Such extra-dimension information appears to allow the QGD to interpret quantum fingerprint patterns in the present study.

In summary, we demonstrated the decipherment of quantum fingerprints in conductance by developing QGD. The QGD was found to transcribe the complicated magneto-conductance patterns into geometric information such as defect distributions and WIs in the samples. The WI patterns turned out to be encoded in the latent space of QGD as an extra dimension of the manifold representing the defect position information. It is truly worthwhile to tune the network by using data from real objects to show the versatility of the present method (for experiments using physical samples, see Supplementary Fig. 4 and Supplementary Note 1). We expect that a wide range of signals from quantum systems can be interpreted by this method.

Methods

Numerical calculations of the input dataset

The WIs and two-terminal magneto-conductance calculations are based on a tight-binding model and the Landauer–Buttiker formula2. The Hamiltonian for the present calculation is \(H={\sum }_{i}(4t+{U}_{i}){c}_{i}^{{{\dagger}} }{c}_{i}-{\sum }_{ < i,j > }t\exp [-{{{{{\rm{i}}}}}}\,{{{{{\rm{\pi }}}}}}B{a}^{2}/{\phi }_{0}({x}_{i}-{x}_{j})({y}_{i}+{y}_{j})]{c}_{j}^{{{\dagger}} }{c}_{i}\), where \({c}_{i}^{{{\dagger}} }\) and \({c}_{i}\) are creation and annihilation operators, respectively, for electrons at the site \(\left({x}_{i},{y}_{i}\right)\), t is the hopping energy, \({U}_{i}\) is a random potential representing sample-specific randomness, and \({\phi }_{0}\equiv h/e\) is the magnetic flux quantum. The magnetic flux density B is introduced over the entire scattering region. a is the lattice constant and it is not a fixed parameter since our model is spatially scale-free calculation18. The second term in the Hamiltonian is a Peierls phase with the Landau gauge and is summed over the nearest-neighbour pairs. The hopping energy is set to \({t}=1\). The magnitude of the disorder potential is set to one tenth of the hopping energy; \({U}_{i}\) is sampled uniformly but randomly in the range from −0.05t to 0.05t. The Fermi energy is set to 2.0t except for the dataset of the Fermi-energy dependent magneto-conductance calculation in Supplementary Fig. 8. One antidot is fixed at the upper centre of the system, while the other is located so as not to overlap with the fixed one. The antidot is modelled by removing lattice points in a circular shape from the two-dimensional square lattice of the nanowire. Two leads with the same square lattice and hopping energy are attached to both ends of the scattering region. Kwant18, a code for numerical calculation of wave functions and quantum transport properties, is used to obtain the dataset of magneto-conductance and WIs for the samples with antidot defects. B dependence of the two-terminal conductance is calculated, where \(B\) is swept from \(0\) to \(0.12\) in 101 divisions. The normalised magneto-conductance \(\Delta G(B)\) is calculated by subtracting the averaged conductance \({G}_{{{{{{\rm{ave}}}}}}}(B)\) from the raw \(G(B)\), where we defined \({G}_{{{{{{\rm{ave}}}}}}}(B)\) as the conductance value averaged over 7955 conductance data with 1591 different antidot positions and 5 different random potentials. We checked that the conductance calculation works well (see Supplementary Figs. 13 and 14) and \(\Delta G\left(B\right)\) shows fluctuation with respect to B with the variance comparable to \({e}^{2}/h\)19. WIs are calculated at the zero magnetic flux density condition. We note that a part of wave function phase information is included in the WI because the amplitude is the result of complex interference between scattered waves. The WI images shown in the figures are normalised such that the sum of the intensity equals to unity for each antidot configuration; \({\sum }_{i=1}^{60}{\sum }_{j=1}^{60}{X}_{i,j}=1\), where \({{{{{\bf{X}}}}}}\) is a WI image, and the suffixes \(i,{j}\) represent the pixel index. Note that the normalisation is done only for the visualisation plots after the training, and unnormalised data is used during the training. A geometry image without WIs is defined as an image with a non-zero constant value on a nanowire and zero inside the antidots and outside the nanowire. No WI images are superimposed. The constant value is determined by the normalisation condition \({\sum }_{i=1}^{60}{\sum }_{j=1}^{60}{X}_{i,j}=1\), where \({{{{{\bf{X}}}}}}\) is an image, and the suffixes \(i,{j}\) represent the pixel label.

Feature extraction network and its training

The feature extraction network is based on a VAE network14,15 comprising image encoding and decoding networks. The encoding network is composed of 2 two-dimensional convolution layers with the kernel size of \(4\times 4\) and 3 fully-connected layers, where each layer has 1024, 512, and 7 nodes. The encoding network outputs a seven-dimensional gaussian distribution in the latent space via the reparameterisation trick used in VAE14,15. The dimension of the latent space is set to 7 to reconstruct the input WI images with high accuracy. The decoding network is composed of 2 fully-connected layers and 2 two-dimensional deconvolution layers with the kernel size of \(4\times 4\). The network architecture parameters are determined based on a recipe found in machine learning for image recognition15 and then fine-tuned. Rectified linear units (ReLUs)20 and Leaky ReLUs21 are used as activation functions. To improve the learning performance, the batch normalisation technique22 is used. For the training and evaluation of the network, each dataset is split into training and test datasets with the ratio of 7 to 3. The loss function is the evidence lower bound14, and the optimisation algorithm is Adam23 with a learning rate of 0.0001. See Supplementary Figs. 1a, 2a and Supplementary Tables 1, 2 for more details. We used the WI images as the inputs to the feature extraction network to extract defect position and WI information. The definition of the RMS error in evaluation is \(\sqrt{\frac{1}{N}{\sum }_{n=1}^{N}\left\{\frac{1}{3600}{\sum }_{i=1}^{60}{\sum }_{j=1}^{60}{\left[{X}_{i,j}^{(n)}-{Y}_{i,j}^{(n)}\right]}^{2}\right\}}\), where N is the number of samples in the test dataset, \({{{{{{\bf{X}}}}}}}^{{{{{{\boldsymbol{(}}}}}}n{{{{{\boldsymbol{)}}}}}}}\) is the n-th numerical-calculated WI image input, \({{{{{{\bf{Y}}}}}}}^{{{{{{\boldsymbol{(}}}}}}n{{{{{\boldsymbol{)}}}}}}}\) is the n-th output image of the feature extraction network [(A) + (B) in Fig. 1d], and the suffixes \(i,j\) represent the pixel index. The RMS error is a dimensionless quantity because the WI images \({{{{{{\bf{X}}}}}}}^{{{{{{\boldsymbol{(}}}}}}n{{{{{\boldsymbol{)}}}}}}}\) and \({{{{{{\bf{Y}}}}}}}^{{{{{{\boldsymbol{(}}}}}}n{{{{{\boldsymbol{)}}}}}}}\) are dimensionless quantities that satisfy the normalisation conditions: \({\sum }_{i=1}^{60}{\sum }_{j=1}^{60}{X}_{i,j}^{(n)}=1\) and \({\sum }_{i=1}^{60}{\sum }_{j=1}^{60}{Y}_{i,j}^{(n)}=1\).

Geometry generative network and its training

The geometry generative network is a combination of a fully-connected network and the decoding network in the feature extraction network. The fully-connected network has 4 layers, where the dropout technique24 is used to improve the learning performance. The loss function is the mean squared error. For the training and evaluation of the network, the dataset is split into training and test datasets (corresponding WIs of the test data are not seen by the QGD network). See Supplementary Figs. 1b, 2b and Supplementary Table 3 for more details. The definition of the RMS error in the evaluation is the same as that used in the feature extraction network, except that the \({{{{{{\bf{Y}}}}}}}^{{{{{{\boldsymbol{(}}}}}}n{{{{{\boldsymbol{)}}}}}}}\) is replaced by the generated WI image from the n-th magneto-conductance input. The definition of the absolute difference image \({{{{{{\bf{X}}}}}}}^{{{{{{\rm{dif}}}}}}}\) of the WI images between \({{{{{\bf{X}}}}}}\) and \({{{{{\bf{X}}}}}}^{\prime}\) is \({X}_{i,j}^{{{{{{\rm{dif}}}}}}}=\left|{X}_{i,j}-{{X}^{\prime} }_{i,j}\right|\). Although reconstructing a Hamiltonian from wave functions (an inverse problem) is difficult, from a theoretical point of view, potential reconstruction is possible if scattering states are known for all the incident wavenumbers25. In the present case, although the incident wavenumbers are limited to those on the Fermi surface, the spatial distribution of the potential is reproduced. This might be attributed to the fact that the potential shape is restricted to the antidot shape.

Latent-space dimension in QGD

To determine the appropriate dimension of the latent space, we performed control experiments. Supplementary Figure 5 shows the latent-space dimension dependence of the averaged RMS error of the decoded WI images. For the feature extraction network, the latent-space dimension less than 5 was found to show large RMS errors, which can be attributed to insufficient capacity of the latent space as shown in Supplementary Fig. 5a, b. On the other hand, higher latent-space dimension than 9 was found to show large RMS errors due to too many learning parameters. Due to the competition between the two, the RMS error takes a minimum around at dimension = 7. A similar behaviour was also found for the WI images decoded from the geometry generative network (Supplementary Fig. 5c, d). Therefore, we determined to use the seven-dimensional latent space for the QGD network.

Generalisation ability of the QGD network

In Supplementary Figs. 6 and 7, we show some training results of the network with different tight-binding parameters: the Fermi energy and disorder potential, respectively. The result shows that the network we proposed exhibits high fidelity regardless of the parameters. Although the interference fringe patterns in the WI images largely depend on the Fermi energy and the disorder potential (see Supplementary Figs. 6 and 7 and those captions for details), the method can reconstruct WI images. In Supplementary Fig. 8, we also show the WI images decoded from the Fermi energy dependence of the magneto-conductance, where the Fermi energy is swept from 1.2t to 2.0t at zero magnetic flux density. The data shows that the WI images can also be decoded with high fidelity when we use the Fermi energy dependence data instead of the magnetic flux density dependence.

Quantitative analysis of the data structure in the latent space

We quantitatively estimated the difference between Fig. 3c and d by calculating the thicknesses of the data structures as the variance of the data points in the thickness direction. Firstly, as shown in Supplementary Fig. 11a, we cut out a part of the data structure in the latent space, which locally forms two-dimensional plane with a thickness. The local variance was calculated for the in-plane and thickness directions. The ratio of the local variance along the in-plane and thickness directions is 0.10 for the data structure formed by the geometry images with WIs (Supplementary Fig. 11c). On the other hand, the data structure formed by the geometry images without WIs exhibits a much smaller value of the ratio, 0.01 (Supplementary Fig. 11b, d).

Dual-layered data structure in the latent space

In the present calculation, we chose the pixel size of the interference images so that the Fermi wavelength is equivalent to 2 pixels. In the condition, the data scattering in the latent space was found to apparently be quantised to show the most interpretable structure: the dual layers, which can be explained by the fact that a standing wave between an antidot and the sample end can be classified into two; destructive and constructive interference patterns. In fact, the dual-layer structure was found to change into a randomly scattered structure around the surface by changing the pixel size, showing that the essential feature here is the data scattering around the surface structure representing the information of the antidot positions.

Quantitative comparison between original and deciphered WI images

We analysed the WI images in terms of the normalised cross correlation:

where A and B are the original and generated WI images, respectively. As shown in Supplementary Fig. 9a, b, RNCC increases with the training epoch. RNCC after the training is much greater (RNCC > 0.997) than that before the training. We confirmed that the generated WI images show high fidelity in terms of the normalised cross correlation.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The codes that support the findings of this study are available from the corresponding author upon reasonable request.

References

Akkermans, E. & Montambaux, G. Mesoscopic Physics of Electrons and Photons (Cambridge University Press, 2007).

Datta, S. Electronic Transport in Mesoscopic Systems (Cambridge University Press, 1995).

Aharonov, Y. & Bohm, D. Significance of electromagnetic potentials in the quantum theory. Phys. Rev. 115, 485 (1959).

Webb, R. A., Washburn, S., Umbach, C. P. & Laibowitz, R. B. Observation of h/e Aharonov–Bohm oscillations in normal-metal rings. Phys. Rev. Lett. 54, 2696 (1985).

Saitoh, E., Kasai, S. & Miyajima, H. Aharonov Bohm oscillation in a multi-domain ferromagnetic NiFe ring. Physica E 18, 237–238 (2003).

Ramos, R. et al. Observation of quantum interference conductance fluctuations in metal rings with strong spin-orbit coupling. Appl. Phys. Lett. 117, 242402 (2020).

Mailly, D. & Sanquer, M. Sensitivity of quantum conductance fluctuations and of 1/f noise to time reversal symmetry. J. Phys. I Fr. 2, 357–364 (1992).

Lee, P. A. & Ramakrishnan, T. V. Disordered electronic systems. Rev. Mod. Phys. 57, 287–337 (1985).

Lopez-Bezanilla, A. & von Lilienfeld, O. A. Modeling electronic quantum transport with machine learning. Phys. Rev. B 89, 235411 (2014).

Korol, R. & Segal, D. Machine learning prediction of DNA charge transport. J. Phys. Chem. B 123, 2801–2811 (2019).

Li, K., Lu, J. & Zhai, F. Neural networks for modeling electron transport properties of mesoscopic systems. Phys. Rev. B 102, 064205 (2020).

Carleo, G. et al. Machine learning and the physical sciences. Rev. Mod. Phys. 91, 045002 (2019).

Ohtsuki, T. & Mano, T. Drawing phase diagrams of random quantum systems by deep learning the wave functions. J. Phys. Soc. Jpn. 89, 022001 (2020).

Kingma, D. P. & Welling, M. Auto-encoding variational bayes. Preprint at https://arxiv.org/abs/1312.6114 (2013).

Doersch, C. Tutorial on variational autoencoders. Preprint at https://arxiv.org/abs/1606.05908 (2016).

Albawi, S., Mohammed, T. A. & Al-Zawi, S. Understanding of a convolutional neural network. In International Conference on Engineering and Technology (ICET). (IEEE, 2017).

McInnes, L., Healy, J. & Melville, J. UMAP: Uniform manifold approximation and projection for dimension reduction. Preprint at https://arxiv.org/abs/1802.03426 (2018).

Groth, C. W., Wimmer, M., Akhmerov, A. R. & Waintal, X. Kwant: a software package for quantum transport. N. J. Phys. 16, 063065 (2014).

Lee, P. A., Stone, A. D. & Fukuyama, H. Universal conductance fluctuations in metals: Effects of finite temperature, interactions, and magnetic field. Phys. Rev. B 35, 1039 (1987).

Glorot, X., Bordes, A. & Bengio, Y. Deep sparse rectifier neural networks. In Proc. 14th International Conference on Artificial Intelligence and Statistics 315–323 (PMLR, 2011).

Xu, B., Wang, N., Chen, T. & Li, M. Empirical evaluation of rectified activations in convolutional network. Preprint at https://arxiv.org/abs/1505.00853 (2015).

Ioffe, S. & Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariant shift. Preprint at https://arxiv.org/abs/1502.03167 (2015).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. Preprint at https://arxiv.org/abs/1412.6980 (2014).

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I. & Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. JMLR 15, 1929–1958 (2014).

Takayanagi, K. & Oishi, M. Inverse scattering problem and generalized optical theorem. J. Math. Phys. 56, 022101 (2015).

Acknowledgements

The authors thank Y. Oikawa for valuable discussions. This work was supported by ERATO “Spin Quantum Rectification Project” (No. JPMJER1402) from JST, Japan; Grant-in-Aid for Scientific Research (S) (No. JP19H05600), (A) (No. JP19H00658), (B) (No. JP20H02599), and (C) (No. JP20K05297), Grant-in-Aid for Early-Career Scientists (No. 21K14519), Grant-in-Aid for Research Activity Start-up (Nos. JP19K21031 and JP19K21035, JP20K22476) from JSPS KAKENHI, Japan; Institute for AI and Beyond of the University of Tokyo, Japan; and the NEC Corporation. R.R. acknowledges support from the European Commission through the project 734187-SPICOLOST (H2020-MSCA-RISE-2016), the European Union’s Horizon 2020 research and innovation programme through the MSCA grant agreement SPEC-894006, Grant RYC 2019-026915-I funded by the MCIN/AEI/10.13039/501100011033 and by “ESF investing in your future”, the Xunta de Galicia (ED431B 2021/013, Centro Singular de Investigación de Galicia Accreditation 2019-2022, ED431G 2019/03) and the European Union (European Regional Development Fund - ERDF). K.O. acknowledges support from GP-Spin at Tohoku University.

Author information

Authors and Affiliations

Contributions

S.D. and K.T. contributed equally to this work. E.S. planned and supervised the study. S.D. and K.T. performed numerical calculations, analysed the data, and prepared the manuscript with help from T.O. S.K. built and optimised the machine learning models. T.K., R.R., K.O., T.O., and E.S. discussed the results, developed the explanation of the experiments, and commented on the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Rudolf Römer, Feng Zhai, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Daimon, S., Tsunekawa, K., Kawakami, S. et al. Deciphering quantum fingerprints in electric conductance. Nat Commun 13, 3160 (2022). https://doi.org/10.1038/s41467-022-30767-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-022-30767-w

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.