Abstract

In order to better understand how the brain perceives faces, it is important to know what objective drives learning in the ventral visual stream. To answer this question, we model neural responses to faces in the macaque inferotemporal (IT) cortex with a deep self-supervised generative model, β-VAE, which disentangles sensory data into interpretable latent factors, such as gender or age. Our results demonstrate a strong correspondence between the generative factors discovered by β-VAE and those coded by single IT neurons, beyond that found for the baselines, including the handcrafted state-of-the-art model of face perception, the Active Appearance Model, and deep classifiers. Moreover, β-VAE is able to reconstruct novel face images using signals from just a handful of cells. Together our results imply that optimising the disentangling objective leads to representations that closely resemble those in the IT at the single unit level. This points at disentangling as a plausible learning objective for the visual brain.


Introduction

It is well known that neurons in the ventral visual stream support the perception of faces and objects1. Decades of extracellular single neuron recordings have defined their canonical coding principles at different stages of the processing hierarchy, such as the sensitivity of early visual neurons to oriented contours and more anterior ventral stream neurons to complex objects and faces2,3. A sub-network of the inferotemporal (IT) cortex specialised for face processing is particularly well studied3,4,5. Faces appear to be represented within such patches using low-dimensional neural codes, where each neuron encodes an orthogonal axis of variation in the face space3. An important yet unanswered question is how such representations may arise through learning from the statistics of the visual input. The most successful computational model of face processing, the active appearance model (AAM)6, is a largely handcrafted framework which cannot help answer this question. Can we find a general learning principle that could match AAM in terms of its explanatory power, while having the potential to generalise beyond faces?

Recently, deep neural networks have emerged as popular models of computation in the primate ventral stream7,8. Unlike AAM, these models are not limited to the domain of faces, and they develop their tuning distributions through data-driven learning. Such contemporary deep networks are trained with high-density teaching signals on multiway object recognition tasks9, and in doing so form high-dimensional representations that, at the population level, closely resemble those in biological systems10,11,12. Such deep classifiers, however, currently do not explain the responses of single neurons in the primate face patch better than AAM6. Furthermore, deep classifiers and AAM differ in their representational form. While deep classifiers develop high-dimensional representations where information is multiplexed over many simulated neurons, AAM has a low-dimensional code where single dimensions encode orthogonal information. Hence, the question of whether there exists a learning objective that leverages the power of deep neural networks while preserving the “gold standard” representational form and explanatory power of the handcrafted AAM remains open.

An important further challenge for theories that rely on deep supervised networks is that external teaching signals are scarce in the natural world, and visual development relies heavily on untutored statistical learning13,14,15. Building on this intuition, one longstanding hypothesis1,16 is that the visual system uses self-supervision to recover the semantically interpretable latent structure of sensory signals, such as the shape or size of an object, or the gender or age of a face image. While appearing deceptively simple and intuitive to humans, such interpretable structure has proven hard to recover in practice, since it forms a highly complex non-linear transformation of pixel-level inputs. Recent advances in machine learning, however, have offered an implementational blueprint for this theory with the advent of deep self-supervised generative models that learn to “disentangle” high-dimensional sensory signals into meaningful factors of variation. One such model, known as the beta-variational autoencoder (β-VAE), learns to faithfully reconstruct sensory data from a low-dimensional embedding whilst being additionally regularised in a way that encourages individual network units to code for semantically meaningful variables, such as the colour of an object, the gender of a face, or the arrangement of a scene (Fig. 1a–c)17,18,19. These deep generative models thus continue the longstanding tradition from the neuroscience community of building self-supervised models of vision20,21, while moving in a new direction that allows strong generalisation, imagination, abstract reasoning, compositional inference and other hallmarks of biological visual cognition18,22,23,24.
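For concreteness, the β-VAE objective can be written as a reconstruction term plus a KL-divergence penalty that pulls the approximate posterior towards an isotropic Gaussian prior, with the penalty scaled by β17. The sketch below computes this loss for a single image in plain Python. It assumes a diagonal Gaussian posterior (a mean `mu` and log-variance `log_var` per latent unit) and a squared-error reconstruction term. The function names and the default `beta=4.0` are illustrative choices, not values taken from this work.

```python
import math


def gaussian_kl(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent units."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


def beta_vae_loss(x, x_recon, mu, log_var, beta=4.0):
    """Per-image beta-VAE loss: reconstruction error + beta * KL.

    Assumption: squared-error reconstruction (a Gaussian likelihood up to
    constants). beta = 1 recovers the standard VAE objective; beta > 1
    strengthens the pressure towards independent latent units.
    """
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + beta * gaussian_kl(mu, log_var)
```

Setting `beta=1.0` recovers the standard VAE objective. Larger values trade reconstruction quality for more independent latent units.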

a Latent traversals used to visualise the semantic meaning encoded by single disentangled latent units of a trained model. In each row the value of a single latent unit is varied between −3 and 3, while the other units are fixed. The resulting effect on the reconstruction is visualised. Each column represents a different model trained to disentangle a different dataset. Chair and face images in the leftmost two traversals are reproduced with the permission of Lee et al.19. Traversals of 3D scenes are reproduced with the permission of Burgess et al.18. b Schematic representation of a self-supervised deep neural network. The encoder maps the input image into a low-dimensional latent representation, which is used by the decoder to reconstruct the original image. Blue indicates trainable neural network units that are free to represent anything. Pink indicates latent representation units that are compared to neurons. CNN, convolutional neural network. FC, fully connected neural network. Face image reproduced with permission from Gao et al.57. c Latent traversals of eight units of a β-VAE model trained to disentangle 2100 natural face images. The initial values of all latent units were obtained by encoding the same input image.

In this work we compare the responses of single neurons in the primate IT face patches and single units learnt by different computational models when presented with the same face images. Our goal is to answer the question of whether a general learning objective can give rise to an encoding that matches the representational form employed by the real neurons. This question has so far been ignored in the literature, with most quantitative results instead reporting measures of explanatory power3,6,8,11,25,26,27,28 that are insensitive to the representational form15. Our results demonstrate that the disentangling objective optimised by β-VAE is a viable option for explaining how the ventral visual stream develops the observed low-dimensional face representations3. We find significantly stronger one-to-one correspondence between the responses of single units learnt by β-VAE and the responses of single IT neurons compared to all other baselines, including deep classifiers and AAM. β-VAE also produces more accurate reconstructions of novel faces than the alternative methods when decoding from the activity of a handful of face-patch neurons. Furthermore, β-VAE learns using a general self-supervised objective without relying on high-density teaching signals like deep classifiers, which makes it more biologically plausible.

Results

Single disentangled units explain the activity of single neurons

If the computations employed in biological sensory systems resemble those employed by this class of deep generative model to disentangle the visual world, then the tuning properties of single neurons should map readily onto the meaningful latent units discovered by the β-VAE. Here, we tested this hypothesis, drawing on a previously published dataset6 of neural recordings from 159 neurons in macaque face area AM, made whilst the animals viewed 2100 natural face images (Fig. 2a, see “Methods”). We first investigated whether the variation in average spike rates of any of the individual recorded neurons was explained by the activity in single units of a trained β-VAE that learnt to “disentangle” the same face dataset that was presented to the primates. For illustration, in Fig. 1c we show faces that were generated (or “imagined”) by such a β-VAE. Each row of faces is produced by gradually varying the output of a single network unit (we call these “latent units”), and it can be seen that they learnt to encode fairly interpretable variables—e.g. hairstyle, age, face shape or emotional variables, such as the presence of a smile. All presented labels are the consensus choice among 300 human raters. On average, across all 11 units discovered by the β-VAE, 32.1% of the participants agreed on a single distinct semantic label per unit when presented with a choice of 17 options including “none of the above” (significantly above the 11.82% chance level, p = 0.00011; minimum agreement per unit 10.9%, maximum agreement per unit 70.5%; see “Methods”), thus validating the human interpretability of the latents discovered by the β-VAE. These individual β-VAE units were also able to explain the response variance in single recorded neurons, as shown in Fig. 2b. For example, neuron 117 is shown to be sensitive to gender, and neuron 136 is shown to respond differentially to the presence of a smile.
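The traversals in Fig. 1 can be generated by encoding one image, then sweeping a single latent unit from −3 to 3 while holding all other units at their encoded values. The sketch below builds only the latent vectors for one row. `latent_traversal` is a hypothetical helper, and each returned vector would still have to be passed through the trained decoder (not shown) to render a face.

```python
def latent_traversal(z, unit, n_steps=7, low=-3.0, high=3.0):
    """Return n_steps copies of latent vector z with only `unit` swept from low to high."""
    if n_steps < 2:
        raise ValueError("n_steps must be at least 2")
    step = (high - low) / (n_steps - 1)
    rows = []
    for k in range(n_steps):
        z_k = list(z)              # copy, so all other units stay at their encoded values
        z_k[unit] = low + k * step
        rows.append(z_k)
    return rows
```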

a Coronal section showing the location of fMRI-identified face patches in two primates, with patch AM circled in red. Dark black lines, electrodes. Reproduced with permission from Chang et al.6. b Explained variance of single neuron responses to 2100 faces. Response variance in single neurons is explained primarily by single disentangled units encoding different semantically meaningful information (insets, latent traversals as in Fig. 1a, c). Source data are provided as a Source Data file.

To quantify this effect, we used a metric recently proposed in the machine learning literature, which we refer to as neural “alignment” for ease of exposition. It measures the extent to which variance in each neuron’s firing rate can be explained by a single latent unit29, but is insensitive to the converse, i.e. whether a single unit predicts the responses of many biological neurons (Fig. 3a, see “Methods”). The alignment score thus measures whether the representational form within a subset of the neural population is similar to the representational form discovered by the model. This goal is different from, yet complementary to, that of other measures commonly used in the literature8,28, which quantify the amount of linearly accessible information shared by the neural and model representations at the population level while being insensitive to the representational form. High alignment scores indicate that a neural population is intrinsically low-dimensional, with its factors of variation mapping onto the variables discovered by the latent units of the neural network15.
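As an illustration of the kind of entropy-based score described above, the sketch below takes a neuron-by-unit matrix of regression weights (for example, lasso weights predicting each neuron from all model units). It scores each neuron by one minus the normalised entropy of its absolute weights, so that one dominant weight gives a score of 1 and evenly spread weights give 0. The exact definition used in this work, including any weighting of neurons and the regression settings, is described in “Methods”. The unweighted mean over neurons and the handling of all-zero rows below are assumptions.

```python
import math


def alignment_score(weights):
    """Entropy-based alignment from a neuron-by-unit weight matrix.

    weights[j][i] is the regression weight from model unit i to neuron j.
    A neuron scores 1 when a single unit carries all of its weight and
    0 when the weight is spread evenly over all units.
    """
    n_units = len(weights[0])
    if n_units < 2:
        raise ValueError("need at least two model units")
    per_neuron = []
    for row in weights:
        mags = [abs(w) for w in row]
        total = sum(mags)
        if total == 0.0:
            per_neuron.append(0.0)  # assumption: neurons no unit predicts score 0
            continue
        p = [m / total for m in mags]
        entropy = -sum(q * math.log(q) for q in p if q > 0.0)
        per_neuron.append(1.0 - entropy / math.log(n_units))
    return sum(per_neuron) / len(per_neuron)  # assumption: unweighted mean over neurons
```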

a Schematic of alignment score29, 63. Green arrows, lasso regression weights obtained from predicting neural responses from model units (thickness indicates weight magnitude). High alignment scores are obtained when per-neuron regression weights have low entropy (one strong weight); high entropy (all incoming weights are of equal magnitude) results in low alignment scores. b β-VAE alignment scores match the ceiling provided by subsets of neurons (p = 0.4345, two-sided Welch’s t-test). Circles, alignment per model (n = 51) or neuron subsets (n = 50). Boxplot centre is median, box extends to 25th and 75th percentiles, whiskers extend to the most extreme data that are not considered outliers, outliers are plotted individually. Source data are provided as a Source Data file. c Alignment scores per model (n = 51) or neuron subsets (n = 50) against artificial neural responses (linear recombination of original neural responses). Boxplot centre is median, box extends to 25th and 75th percentiles, whiskers extend to the most extreme data that are not considered outliers, outliers are plotted individually. Source data are provided as a Source Data file. d Alignment scores correlate with the disentanglement quality of latent units obtained from 400 β-VAE models trained with different β values (indicated by colour). UDR, Unsupervised Disentanglement Ranking31, measures the quality of disentanglement, higher is better. Red line, least squares fit (r = 0.96, Pearson correlation). Source data are provided as a Source Data file. e Running correlation between UDR and alignment scores across subsets of models. Models in each subset were trained with different β values, with the number of β values in each subset indicated on the x-axis. Rightmost circle, Pearson correlation across 400 β-VAE models, spanning 40 β values as reported in (d). Leftmost circle, average across 40 Pearson correlations, each calculated with 10 models with a single β value. Bars, standard deviation.
Source data are provided as a Source Data file.

We first compared alignment scores between the β-VAE and the monkey data to a theoretical ceiling, obtained by subsampling the neural data to match the intrinsic dimensionality of the β-VAE latent representation (see “Methods”) and computing the alignment of each subset with the full neural population (Fig. 3b). The first surprising observation is that neuron subsets do not reach the maximal alignment score of 1 against the full set of 159 neurons, which points to a significant amount of redundancy in the coding preferences within the neural population. If all neurons encoded unique information, then each neuron in the sampled subset would align uniquely and one-to-one only to itself in the full neural population, resulting in a perfect alignment score. Lower alignment scores indicate that a number of other neurons in the population have similar coding properties, resulting in a few-to-one mapping. The second interesting observation is that alignment scores in the β-VAE met the ceiling provided by the neural subsets, with no reliable difference between the two estimates when the analysis was repeated on multiple subsamples and with multiple network instances (p = 0.4345, two-sided Welch’s t-test). This suggests that each trained β-VAE instance was able to discover, through learning alone, a small number of disentangled units with response properties equivalent to those of equally sized subsets of real neurons. Furthermore, when we repeated this analysis while computing alignment against fictitious neural responses obtained by linearly recombining the original neural data, we found a significant drop in scores for both the β-VAE and neural subsets (Fig. 3c, p = 3.2197e–29 for β-VAE, p = 5.6624e–60 for neuron subsets; two-sided Welch’s t-test). This indicates that the individual disentangled units discovered by the β-VAE map significantly better onto the responses of single neurons recorded from macaque IT than onto their linear combinations. Indeed, the average β-VAE alignment scores in Fig. 3c are almost as low as those of the random baseline matched in sparsity to the trained β-VAE instances (Fig. 4a, teal).
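The two reference populations used above, a random subset of recorded neurons sized to the β-VAE latent dimensionality and a fictitious population built by linearly recombining the recorded responses, can be sketched as follows. The helper names are hypothetical, responses are stored as an images-by-neurons list of lists, and the i.i.d. Gaussian mixing matrix is an assumption standing in for whatever recombination “Methods” specifies. Each resulting population would then be scored with the alignment metric against the full neural data.

```python
import random


def subsample_neurons(responses, k, rng):
    """Keep k randomly chosen neurons; responses[n][j] is neuron j's rate on image n."""
    cols = rng.sample(range(len(responses[0])), k)
    return [[row[j] for j in cols] for row in responses]


def recombine_neurons(responses, rng):
    """Build fictitious 'neurons', each a random linear mixture of all recorded neurons."""
    n = len(responses[0])
    # Assumption: i.i.d. standard Gaussian mixing weights.
    mix = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    return [
        [sum(row[i] * mix[i][j] for i in range(n)) for j in range(n)]
        for row in responses
    ]
```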

The extent to which the β-VAE is effective in disentangling a dataset into its latent factors can vary substantially with the way it is regularised, as well as with randomness in its initialisation and training conditions30. The parameter β, after which the network class is named, sets the weight of a regularisation term that encourages the latent factors to be independent. Networks with higher values of β thus typically give rise to more disentangled representations, as measured by a metric known as the Unsupervised Disentanglement Ranking (UDR, see “Methods”)31, a finding we replicate here. We additionally found that networks with higher UDR scores had higher alignment scores with the neural data (Fig. 3d), and that this relationship held both within and across values of β (Fig. 3e). In other words, the better the network was able to disentangle the latent factors in the face dataset, the more those factors were expressed in single neurons recorded from macaque IT.
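The relationship in Fig. 3d, e amounts to Pearson correlations between per-model UDR and alignment scores, computed either across all models or separately within models that share a single β value. A minimal sketch, with hypothetical function names and each model contributing one (UDR, alignment, β) triple:

```python
import math
from collections import defaultdict


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def mean_within_beta_r(udr, align, betas):
    """Average UDR-alignment correlation, computed separately within each beta value."""
    groups = defaultdict(list)
    for u, a, b in zip(udr, align, betas):
        groups[b].append((u, a))
    rs = [pearson_r([u for u, _ in g], [a for _, a in g]) for g in groups.values()]
    return sum(rs) / len(rs)
```

Calling `pearson_r` on all models at once corresponds to the rightmost point of Fig. 3e, and `mean_within_beta_r` to the leftmost one.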

All aspects of the disentanglement objective are important

Next, we compared the β-VAE alignment scores with a number of rival models. These baseline models were carefully chosen to disambiguate the role played by the different aspects of the β-VAE design and training in explaining the coding of neurally aligned variables in its single latent units (see “Methods”). We included a state-of-the-art deep supervised network (VGG)32 that has previously been proposed as a good model for comparison against neural data in face recognition tasks33,34, other generative models, such as a basic autoencoder (AE)35 and a variational autoencoder (VAE)36, as well as baselines provided by ICA, PCA and a classifier which used only the encoder from the β-VAE. We defined “latent units” as those emerging in the deepest layers of these networks and, where appropriate, used PCA or feature subsampling (e.g. for VGG raw) to equate the dimensionality of the latent units (to ≤50) to provide a fair comparison with the β-VAE. We also compared β-VAE to the “gold standard” provided by the previously published AAM3, which produced a low-dimensional code that explained the responses of single neurons to face images well3,6. Unlike the β-VAE, which relied on a general learning mechanism to discover its latent units, AAM relied on a manual process idiosyncratic to the face domain. Hence, β-VAE provides a learning-based counterpart to the handcrafted AAM units that could generalise beyond the domain of faces. Although the baselines considered varied in their average alignment scores (Fig. 4a), none approached those of the β-VAE, whose alignment was significantly higher than that of every other model (all p-values < 0.01, two-sided Welch’s t-test). Furthermore, high β-VAE alignment scores could not be explained solely by the sparse nature of disentangled representations, with the random baseline matched in sparseness to the trained β-VAE instances obtaining significantly lower alignment scores (Fig. 4a, teal).
The alignment scores broken down by individual neurons are plotted in Fig. 4b for the β-VAE and its baselines.
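Model comparisons throughout use Welch’s t-test, which assumes neither equal variances nor equal numbers of models per group (e.g. n = 51 β-VAE versus n = 21 AAM instances). The sketch below computes only the t statistic and the Welch–Satterthwaite degrees of freedom. In practice, `scipy.stats.ttest_ind(a, b, equal_var=False)` returns the same statistic together with a p-value, and its `alternative` argument selects a one-sided test.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for samples a, b."""
    va = variance(a) / len(a)  # squared standard error of mean(a) (sample variance, n - 1)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```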

a Alignment scores are significantly higher for the β-VAE than the baseline models and the “gold standard” provided by the AAM (all p < 0.01, two-sided Welch’s t-test; VGG (raw) p = 7.4220e–06, Classifier p = 0.0, AAM p = 9.1757e–42, VAE p = 9.7811e–43, VGG (PCA) p = 1.9383e–35, PCA p = 0.0, AE p = 0.0, ICA p = 0.0). Circles, alignment per model (β-VAE, n = 51; VGG (raw), n = 22; Classifier, n = 64; VAE, Variational AutoEncoder36, n = 50; AE, AutoEncoder35, n = 50; VGG (PCA)32, n = 41; PCA, n = 41; ICA, n = 50; AAM, active appearance model3, n = 21). Red circle indicates VGG (raw) with all N = 4096 units from the last hidden layer. Teal boxplot: random baseline with sparseness matched to the 51 β-VAE models. Boxplot centre is median, box extends to 25th and 75th percentiles, whiskers extend to the most extreme data that are not considered outliers, outliers are plotted individually. Source data are provided as a Source Data file. b Per-neuron alignment scores. Scores are discretised into equally spaced bins. Scores in each row are arranged in descending order. Results are shown for single models chosen to have the median alignment score; VGG (raw) results are presented from the model that contained all N = 4096 units from the last hidden layer. Arrows point to neurons from Fig. 2b within the β-VAE alignment scores. Source data are provided as a Source Data file.

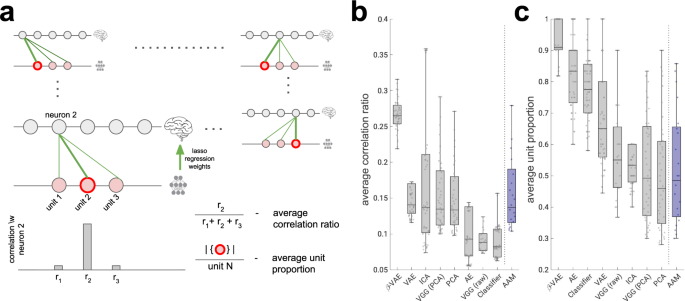

We validated the findings above using a more direct metric for the coding of latent factors in single neurons: for each neuron, we computed the ratio of the maximum correlation between its spike rate and the activation of any single latent unit to the sum of such correlations over all model units (average correlation ratio in Fig. 5a, see “Methods”). This ratio was higher for the β-VAE than for other models, confirming the results obtained with alignment scores (Fig. 5b). Interestingly, different neurons did not tend to covary with the same β-VAE latent unit. In fact, there was more heterogeneity among the β-VAE units that achieved maximum correlation with the neural responses than among the equivalent units of other models (Fig. 5c). Rich heterogeneity in the response properties of single neurons (or latent units) is exactly what would be desired to enable a population of computational units to encode the rich variation in the image dataset. This broad pattern of results also held when the models were presented with 62 novel face identities that were never seen during training (see Figs. S1b and S2b, c).
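Both scores in Fig. 5 can be computed from the absolute Pearson correlations between each neuron’s spike rates and each unit’s activations. In the sketch below, the average correlation ratio follows the description above (largest correlation divided by the sum of correlations, averaged over neurons). The unit proportion score is assumed, for illustration only, to be the number of distinct best-matching units divided by the number of units, which may differ from the definition in “Methods”. All response vectors must have non-zero variance.

```python
import math


def _pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def correlation_scores(neurons, units):
    """Return (average correlation ratio, unit proportion).

    neurons[j] and units[i] are response vectors over the same images.
    Ratio per neuron: max |r| over units divided by the sum of |r| over units.
    Unit proportion (assumed definition): distinct best-matching units / number of units.
    """
    ratios, best = [], []
    for resp in neurons:
        c = [abs(_pearson(resp, act)) for act in units]
        ratios.append(max(c) / sum(c))
        best.append(c.index(max(c)))
    return sum(ratios) / len(ratios), len(set(best)) / len(units)
```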

a Schematic of average correlation ratio and average unit proportion scores. A good computational model for explaining responses of single neurons should allow each neuron (grey circle) to be decodable from a single latent unit (pink circle). Green lines are Lasso regression weights as in Fig. 3a. The response of each neuron should correlate strongly with the response of only one latent unit (grey bars) as measured by the average correlation ratio (higher is better). Different neurons should correlate strongly with diverse single latent units (red circles) as measured by the average unit proportion score (higher is better). b Average correlation ratio scores are significantly higher for the β-VAE than the baseline models and the AAM model (all p < 0.01; AAM p = 3.4626e–07, ICA p = 4.3804e–07, VGG (PCA) p = 2.9275e–15, PCA p = 1.8577e–15, VAE p = 5.7259e–13, AE p = 8.2339e–16, Classifier p = 7.8206e−55, VGG (raw) p = 1.4072e–26, two-sided Welch’s t-test). Circles, average correlation ratio score per model (β-VAE, n = 51; VGG (raw), n = 22; Classifier, n = 64; VAE, Variational AutoEncoder36, n = 50; AE, AutoEncoder35, n = 50; VGG (PCA)32, n = 41; PCA, n = 41; ICA, n = 50; AAM, active appearance model3, n = 21). Boxplot centre is median, box extends to 25th and 75th percentiles, whiskers extend to the most extreme data that are not considered outliers, outliers are plotted individually. Source data are provided as a Source Data file. c Average unit proportion scores are significantly higher for the β-VAE than the baseline models and the AAM model (all p < 0.01; Classifier p = 4.5163e–10, AE p = 1.4289e–04, VAE p = 4.1792e–14, ICA p = 1.1129e–19, VGG (PCA) p = 1.7441e–19, PCA p = 3.5554e–19, AAM p = 1.8075e–09, VGG (raw) p = 1.2049e–08, two-sided Welch’s t-test). Circles, average unit proportion score per model (β-VAE, n = 51; VGG (raw), n = 22; Classifier, n = 64; VAE36, n = 50; AE35, n = 50; VGG (PCA)32, n = 41; PCA, n = 41; ICA, n = 50; AAM3, n = 21).
Boxplot centre is median, box extends to 25th and 75th percentiles, whiskers extend to the most extreme data that are not considered outliers, outliers are plotted individually. Source data are provided as a Source Data file.

Taken together, these results suggest that no single feature of the β-VAE was sufficient to explain the coding of neurally aligned latent variables in single units: neither its training objective (baselined by AE, VAE and classifier), nor its architecture and training data distribution (baselined by VGG), nor isolated aspects of its learning objective (baselined by PCA, ICA and the sparse random model). Rather, it was all of these design choices together that allowed the β-VAE to learn a set of disentangled latent units that explained the responses of single neurons so well.

Disentanglement discovers a subset of all face dimensions

So far we have shown that the disentangled representational form in the β-VAE is a closer match to the representational form of real neurons than the alternatives offered by the baselines. This, however, is not the whole story. A complementary question is to what degree the information captured by the β-VAE representation overlaps with the information captured by the neural population (see Fig. 6a). Chang et al.6 showed that, at lower dimensionality, β-VAE representations can match the “gold standard” AAM model in how well they explain neural responses at the population level. Here we found that, for around 10% of all neurons, a single β-VAE unit accounts for more than 50% of the neuronal variance explained by all 50 units of the highest scoring baseline, the AAM (see Fig. 6b and Supplementary Fig. 3). This further corroborates the results in Fig. 3b, which suggest that the representations discovered by the β-VAE are equivalent to a similarly sized subset of the original neurons.
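The quantity plotted in Fig. 6b can be sketched as a thresholded comparison of two per-neuron variance-explained values. The helper below is hypothetical and assumes these values have already been computed. For a single predictor fitted by least squares with an intercept, the variance explained equals the squared Pearson correlation, so the best single-unit value for a neuron is its largest squared correlation over model units.

```python
def fraction_exceeding(best_single_unit_r2, baseline_r2, x):
    """Fraction of neurons whose best single-unit R^2 exceeds x times the baseline R^2.

    best_single_unit_r2[j]: variance of neuron j explained by its best single model unit.
    baseline_r2[j]: variance of neuron j explained by the full baseline (e.g. 50 AAM units).
    x: threshold as a fraction of the baseline (0.5 means 50%).
    """
    hits = sum(1 for s, b in zip(best_single_unit_r2, baseline_r2) if s > x * b)
    return hits / len(baseline_r2)
```

Sweeping `x` from 0 to 1 traces out a curve of the kind shown in Fig. 6b.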

a Schematic representation of linearly accessible information overlap between the population of neurons (green) and model representation (blue) corresponding to the different combinations of magnitudes of the encoding and decoding scores. b Proportion of the neuron population (n = 159) for which a single β-VAE unit explains more than X% of the variance explained by the best baseline model (AAM, 50 units). See Supplementary Fig. 3 for more details. Source data are provided as a Source Data file. c Encoding variance explained. No significant difference is found between encoding variance explained by neuron subsets and AE, ICA, VAE, PCA and AAM (β-VAE p = 2.4689e–08, AAM p = 0.1211, VGG (PCA) p = 2.5055e–07, PCA p = 0.0174, Classifier p = 2.6311e–17, ICA p = 0.0185, AE p = 0.0368, VAE p = 0.0176, VGG (raw) p = 8.4568e–18, two-sided Welch’s t-test). Circles, median explained variance across 159 neurons (β-VAE, n = 51; VGG (raw), n = 22; Classifier, n = 64; VAE, Variational AutoEncoder36, n = 50; AE, AutoEncoder35, n = 50; VGG (PCA)32, n = 41; PCA, n = 41; ICA, n = 50; AAM, active appearance model3, n = 21). Boxplot centre is median, box extends to 25th and 75th percentiles, whiskers extend to the most extreme data that are not considered outliers, outliers are plotted individually. Source data are provided as a Source Data file. d Decoding variance explained. β-VAE variance explained is statistically significantly different from all other models (all p < 0.01; AAM p = 7.6510e–07, VGG (PCA) p = 0.0, PCA p = 6.0566e–23, Classifier p = 0.0, ICA p = 2.8584e–20, AE p = 0.0164, VAE p = 1.1390e–12, VGG (raw) p = 0.0, two-sided Welch’s t-test). Circles, median explained variance across model units (β-VAE, n = 51; VGG (raw), n = 22; Classifier, n = 64; VAE, Variational AutoEncoder36, n = 50; AE, AutoEncoder35, n = 50; VGG (PCA)32, n = 41; PCA, n = 41; ICA, n = 50; AAM, active appearance model3, n = 21).
Boxplot centre is median, box extends to 25th and 75th percentiles, whiskers extend to the most extreme data that are not considered outliers, outliers are plotted individually. Source data are provided as a Source Data file.

We also calculated how much of the total neural response variance was explained by the models (encoding variance explained), and how much variance in the model responses was explained by the neurons (decoding variance explained) at the population level (see Fig. 6c, d). While the absolute values presented are artificially low due to the lack of noise normalisation (see “Methods”), our results are consistent with those reported in Chang et al.6: β-VAE representations contain less information in general than the other baselines (apart from the Classifier and VGG raw), but the information that is preserved by the β-VAE overlaps with the information within the neural population more than that of the other baselines (apart from AE). Taken together, the results in Fig. 6c, d suggest that, in terms of linearly decodable information overlap with the neural population, the best models are AE and β-VAE (closer to the bottom left quadrant in Fig. 6a), while the other baselines are closer to the top left or right quadrants.

Disentangled units are sufficient to decode novel faces

Finally, we conducted an analysis that sought to link the virtues of the β-VAE as a tool in machine learning, namely its capacity to make strong inferences about held-out data, with its qualities emphasised here as a theory of visual cognition, namely strong one-to-one alignment between individual neurons and individual disentangled latent units. During training we omitted from the β-VAE training set 62 faces that had been viewed by the monkeys, allowing us to test whether these were reconstructed more faithfully by the β-VAE than by other networks. Critically, in order to reconstruct these faces, we applied the decoder of the β-VAE not to its latent units as inferred by its encoder, but rather to the latent unit responses predicted from the activity of a small subset of single neurons (as few as 12) that best aligned with each model unit on a different subset of data (Fig. 7a, see “Methods”). We found that such one-to-one decoding of latent units from the corresponding single neurons was significantly more accurate for the disentangled latent units learnt by the β-VAE than for the latent units learnt by the other baseline models (all p-values < 0.05, one-sided Welch’s t-test) (Fig. 7b). Furthermore, we visualised the β-VAE reconstructions decoded from just 12 matching neurons (Fig. 7c). Qualitatively, these appeared both more identifiable and of higher image quality than those produced by the latent units decoded from the nearest rival model, the AE, and comparable to those of the basic VAE (as validated by the subjective judgements obtained from 300 human participants, see “Methods”). These results suggest that both the small subset of just 12 neurons and the corresponding 12 disentangled units carried sufficient information to decode previously unseen faces, a stronger result than past work, which required a 1024-dimensional VAE representation to decode novel faces from fMRI voxels37.
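The one-to-one decoding step in Fig. 7a can be sketched as a separate one-dimensional linear regression per latent unit, each using only the neuron matched to that unit, followed by a cosine distance between real and decoded latent vectors. In this sketch the neuron-to-unit `matches` are taken as given, latents are not standardised, and all helper names are hypothetical. The decoded latent vectors would then be passed to the trained model decoder (not shown) to reconstruct the novel faces.

```python
import math


def fit_1d(x, y):
    """Ordinary least squares fit y ≈ a * x + b with a single predictor."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    a = sum((u - mx) * (v - my) for u, v in zip(x, y)) / sum((u - mx) ** 2 for u in x)
    return a, my - a * mx


def decode_latents(train_neurons, train_latents, test_neurons, matches):
    """Predict each latent unit from its single matched neuron.

    train_neurons[j] / test_neurons[j]: responses of neuron j over images.
    train_latents[i]: activations of latent unit i over the training images.
    matches[i]: index of the neuron paired with latent unit i (chosen on other data).
    Returns one decoded latent vector per test image.
    """
    coefs = [fit_1d(train_neurons[matches[i]], train_latents[i]) for i in range(len(matches))]
    n_test = len(test_neurons[0])
    return [
        [a * test_neurons[matches[i]][n] + b for i, (a, b) in enumerate(coefs)]
        for n in range(n_test)
    ]


def cosine_distance(u, v):
    """1 - cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return 1.0 - dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))
```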

a Responses of 159 neurons (grey circles) in face-patch area AM were recorded while two primates viewed 62 novel faces. One-to-one match was found between each model unit (pink circles) and a corresponding single neuron. Linear regression (blue arrow) was used to decode the responses of each individual model latent unit (pink circles) from the activations of its corresponding single neuron. The pre-trained model decoder was used to reconstruct the novel face. Face image reproduced with permission from Chang et al.6. b Cosine distances between real standardised latent unit responses and those decoded from single neurons are significantly smaller for β-VAE compared to baseline models and the “gold standard” provided by the AAM model (all p < 0.05, one-sided Welch’s t-test; AE p = 0.0195, VAE p = 1.0596e–14, PCA p = 4.0370e–25, ICA p = 1.5758e–12, VGG (PCA) p = 5.0467e–24, Classifier p = 0.0, AAM p = 2.3216e–14, VGG (raw) p = 4.8840e–20). Circles, median cosine distance per model (β-VAE, n = 51; VGG (raw), n = 22; Classifier, n = 64; VAE, Variational AutoEncoder36, n = 50; AE, AutoEncoder35, n = 50; VGG (PCA)32, n = 41; PCA, n = 41; ICA, n = 50; AAM, active appearance model3, n = 21). Boxplot centre is median, box extends to 25th and 75th percentiles, whiskers extend to the most extreme data that are not considered outliers, outliers are plotted individually. Source data are provided as a Source Data file. c β-VAE can decode and reconstruct novel faces from 12 matching single neurons. The reconstructions are better than those from the closest baselines, AE and VAE, which required 30 and 27 neurons for decoding, respectively. The β-VAE instance was chosen to have the best disentanglement quality as measured by the UDR score; AE and VAE instances were chosen to have the highest reconstruction accuracy on the training dataset. Face images reproduced with permission from Ma et al.53 and Phillips et al.55.

Furthermore, it should be noted that the AE and VAE were explicitly optimised for reconstruction quality, which resulted in better reconstruction performance than the β-VAE (which was optimised for disentangling at the cost of reconstruction quality17) at matched dimensionality during training (all p < 0.01, two-sided Welch’s t-test, see Supplementary Fig. 1a). Yet both AE and VAE required more than twice as many neurons as β-VAE for best decoding from neural data. This, together with the results in ref. 37, suggests that representational form can matter more than information content for decoding performance.

Discussion

The results we have presented here validate past evidence3,6 that the code for facial identity in the primate IT is low-dimensional, with single neurons encoding independent axes of variation. Unlike the previous work3,6, however, our results demonstrate that such a code can also be semantically interpretable at a single neuron level. In particular, we show that the axes of variation represented by single IT neurons align with single “disentangled” latent units that appear to be largely semantically meaningful and which are discovered by the β-VAE, a recent class of deep neural networks proposed in the machine learning community that does not rely on extensive teaching signals for learning. Given the strong alignment of single IT neurons with the single units discovered through disentangled representation learning, and the fact that disentangling can be done through self-supervision without the need for an external teaching signal, it is plausible that the ventral visual stream may also be optimising the disentangling learning objective.

Our work extends recent studies of the coding properties of single neurons in the primate face-patch area by reporting one-to-one correspondences between model units and neurons, as opposed to the few-to-one correspondences previously reported3. Moreover, we show that disentangling may occur at the end of the ventral visual stream (IT), extending the results recently reported for V138. Past studies have proposed that the ventral visual cortex may disentangle1,38 and represent visual information with a low-dimensional code3,39,40. However, these studies did not ask how such representations emerge via learning. Here, we propose a theoretically grounded41 computational model (the β-VAE) for how disentangled, low-dimensional codes may be learnt from the statistics of visual inputs17.

It is worth noting that, in general, the baselines, including AAM, contain a larger number of informative dimensions than β-VAE. Furthermore, it has been demonstrated that when the larger full set of AAM dimensions is used, it explains more neural variance at the population level than the smaller full set of disentangled β-VAE units6. Clearly, the handful of disentangled dimensions discovered by β-VAE in the current study are not sufficient to fully describe the whole space of faces. β-VAE discovers only a subset of the dimensions necessary to describe faces fully because of a known limitation of current methods for disentangled representation learning: they rely on training on large, well-aligned datasets to achieve maximal interpretability and disentangling quality. Indeed, applying β-VAE to a more suitable dataset of faces42 allows it to recover at least twice as many disentangled dimensions as found in the current study (see Supplementary Information for more details; example latent traversals are shown in Fig. 1a, second from the right). The limited scale and alignment of the 2100-face dataset used in this study leave room for future improvements, both in latent interpretability and in the amount of population neural variance explained.

That said, an important aspect of our proposed learning mechanism is that it generalises beyond the domain of faces17,18,19. We believe that the difficulty encountered in the past in identifying interpretable codes in the IT may have arisen because semantically meaningful axes of variation of complex visual objects are more challenging for humans to define (and hence to use as visual probes) than simple features, such as visual edges14. A computational model like the β-VAE, on the other hand, can automatically discover disentangled latent units that align with such axes, as demonstrated for the domain of faces in this work. Hence, we hope that the neuroscience community will be able to take advantage of further advances in disentangled representation learning within the machine learning community to study neural responses in the IT beyond the domain of faces. Using face perception as the test domain for building the connection between neural coding in the IT and disentangling deep generative models, as was done in this work, has unique advantages. Specifically, both neural responses and image statistics in this domain have been particularly well studied compared to other visual stimulus classes. This allows for comparisons with strong hand-engineered baselines3 using relatively densely sampled neural data5. Furthermore, although faces make up a small subset of all possible visual objects, and neurons that preferentially respond to faces tend to cluster in particular patches of the IT cortex5, the computational mechanisms and basic units of representation employed for face processing may in fact generalise more broadly within the ventral visual stream5,43. Indeed, face perception is seen by many as a “microcosm of object recognition”5. Assuming that this is the case, β-VAE and future, more advanced methods for disentangled representation learning may serve as promising tools for understanding IT codes at the single-neuron level, even for rich and complex visual stimuli.

One contribution of this paper is the introduction of novel measures for comparing neural and model representations. Unlike other commonly used representation comparison methods (e.g. explained variance of neuron-level regressions8,11,25,26,27 or representational similarity analysis (RSA)11,28), which are insensitive to invertible linear transformations, our methods measure the alignment between individual neurons and individual model units. Hence, they do not abstract away the representational form, and they preserve the ability to discriminate between alternative computational models that may otherwise score similarly15. In short, while the traditional methods compare the informativeness of representations, our approach compares their representational form; the two are therefore complementary.
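A toy example may make this distinction concrete. In the sketch below (synthetic data only), rotating the model units by a random orthogonal matrix leaves a population regression fit unchanged, yet lowers a one-to-one alignment score. The alignment score used here, the mean of each neuron's largest absolute correlation with any single unit, is a simplified stand-in chosen for illustration and is not one of the measures introduced in this paper.

```python
import numpy as np

rng = np.random.default_rng(1)
latents = rng.normal(size=(500, 5))                   # toy model units
neurons = latents + 0.1 * rng.normal(size=(500, 5))   # neuron j tracks unit j
q, _ = np.linalg.qr(rng.normal(size=(5, 5)))          # random orthogonal matrix
rotated = latents @ q                                 # same information, mixed units


def population_r2(units, cells):
    """Mean R^2 of regressing each neuron on all model units (with intercept)."""
    design = np.column_stack([units, np.ones(len(units))])
    coef, *_ = np.linalg.lstsq(design, cells, rcond=None)
    resid = cells - design @ coef
    return float(np.mean(1.0 - resid.var(axis=0) / cells.var(axis=0)))


def one_to_one_alignment(units, cells):
    """Mean over neurons of the largest |correlation| with any single unit."""
    k = units.shape[1]
    corr = np.corrcoef(units.T, cells.T)[:k, k:]      # rows: units, columns: neurons
    return float(np.mean(np.abs(corr).max(axis=0)))


print(population_r2(latents, neurons), population_r2(rotated, neurons))
print(one_to_one_alignment(latents, neurons), one_to_one_alignment(rotated, neurons))
```

The regression score cannot tell the two unit sets apart because both span the same space; the per-unit score can.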

While the development of β-VAE for learning disentangled representations was originally guided by high-level neuroscience principles44,45,46, subsequent work demonstrating the utility of such representations for intelligent behaviour was primarily done in the machine learning community22,23,24,47. In line with the rich history of mutually beneficial interactions between neuroscience and machine learning48, we hope that the latest insights from machine learning may now feed back to the neuroscience community to investigate the merit of disentangled representations for supporting intelligence in biological systems, in particular as the basis for abstract reasoning49, or generalisable and efficient task learning50.

Methods

Dataset

We used a dataset of 2162 natural greyscale, centred and cropped images of frontal views of faces without nuisance obstructions (e.g. facial hair or a head garment), under normal lighting conditions and without strong facial expressions, pasted on a grey 200 × 200 pixel background as described in ref. 6. The face images were collated from multiple publicly available datasets: 72 images from AR Face Database51, 48 images from CelebA52, 457 images from Chicago Face Database53, 64 faces from CVL54, 563 images from FERET55, 45 images from MR256 and 913 images from the PEAL57 dataset. In all, 62 held-out face images were randomly chosen. These faces were presented to the primates but were not among the 2100 faces used to train the models. All models (apart from VGG) were trained on the same set of faces, which were mirror flipped with respect to the images presented to the primates. This ensured that the train and test data distributions were similar, but not identical (see Supplementary Fig. 1a). To train the Classifier baseline, we augmented the data with 5 × 5 pixel translations of each face to ensure that multiple instances were present for each unique face identity. The data were split into 80%/10%/10% train/validation/test sets.
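The dataset bookkeeping and preprocessing above can be sketched as follows. The per-source counts come from the text; reading “5 × 5 pixel translations” as all 25 shifts of up to ±2 pixels, the grey fill value of 0.5 (assuming pixel values in [0, 1]), and all helper names are assumptions made for illustration.

```python
import numpy as np

# Per-source image counts listed above.
SOURCE_COUNTS = {"AR": 72, "CelebA": 48, "Chicago": 457, "CVL": 64,
                 "FERET": 563, "MR2": 45, "PEAL": 913}
TOTAL = sum(SOURCE_COUNTS.values())   # all collated faces
HELD_OUT = 62                         # novel faces kept out of model training
TRAIN_POOL = TOTAL - HELD_OUT         # faces used to train the models


def mirror_flip(images):
    """Flip a (num, height, width) stack of greyscale images left to right."""
    return images[:, :, ::-1]


def translations_5x5(image, fill=0.5):
    """All 25 shifts of one image by -2..2 pixels in x and y, padded with grey."""
    h, w = image.shape
    padded = np.pad(image, 2, constant_values=fill)
    return np.stack([padded[2 + dy:2 + dy + h, 2 + dx:2 + dx + w]
                     for dy in range(-2, 3) for dx in range(-2, 3)])


def split_80_10_10(num, seed=0):
    """Random train/validation/test index split in 80%/10%/10% proportions."""
    idx = np.random.default_rng(seed).permutation(num)
    a, b = int(0.8 * num), int(0.9 * num)
    return idx[:a], idx[a:b], idx[b:]
```

For example, `split_80_10_10(2100)` yields index sets of 1680, 210 and 210 images.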

Neurophysiological data

All neurophysiological data were re-used from Chang et al.6. The data were collected from two male rhesus macaques (Macaca mulatta) aged 7–10 years. The animals were pair-housed and kept on a 14h/10h light/dark cycle. All procedures conformed to local and US National Institutes of Health guidelines, including the US National Institutes of Health Guide for Care and Use of Laboratory Animals. All experiments were performed with the approval of the Caltech Institutional Animal Care and Use Committee (IACUC).

Face patches were identified as regions responding significantly more to 16 faces than to 80 non-face stimuli (bodies, fruits, gadgets, hands and scrambled patterns4) while the monkeys passively viewed images on a screen in a 3T TIM (Siemens, Munich, Germany) magnet. Feraheme contrast agent was injected to improve the signal-to-noise ratio. The results were confirmed across multiple independent scan sessions.

Single units were recorded with tungsten electrodes (18–20 MΩ at 1 kHz, FHC) backloaded into plastic guide tubes set to reach approximately 3–5 mm below the dura surface. The electrode was advanced slowly with a manual advancer (Narishige Scientific Instrument, Tokyo, Japan). Extracellular action potentials were isolated from amplified neural signals with the box method of an online spike sorting system (Plexon, Dallas, TX, USA). Spikes were sampled at 40 kHz. All spike data were further re-sorted offline using the Plexon spike sorting clustering algorithm. Only well-isolated units were considered for further analysis. The image stimuli were presented on a CRT monitor (DELL P1130). The intensity of the screen was measured using a colorimeter (PR650, Photo Research) and linearised for visual stimulation. The screen covered 27.7 × 36.9 visual degrees and stimuli spanned 5.7°. The fixation spot was 0.2° in diameter and the fixation window was a square with a diameter of 2.5°. The monkeys were head fixed and passively viewed the screen in a dark room. Eye position was monitored using an infrared eye tracking system (ISCAN). Juice reward was delivered every 2–4 s if fixation was properly maintained. Images were presented in random order. All images were presented for 150 ms, interleaved with 180 ms of a grey screen. Each image was presented 3–5 times. The number of spikes in a time window of 50–350 ms after stimulus onset was counted for each stimulus and used to calculate the face-selectivity index (FSI) of each cell according to

\[ \mathrm{FSI} = \frac{\overline{\mathbf{n}}_{\mathrm{face}} - \overline{\mathbf{n}}_{\mathrm{nonface}}}{\overline{\mathbf{n}}_{\mathrm{face}} + \overline{\mathbf{n}}_{\mathrm{nonface}}} \]

where \(\overline{\mathbf{n}}\) is the mean activity of a single neuron in response to either face or non-face stimuli. Only neurons with high face selectivity (FSI > 0.33) were selected for further analysis.
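A minimal sketch of this selection criterion, assuming the standard contrast form of the index, FSI = (mean face response − mean non-face response)/(mean face response + mean non-face response), computed from spike counts in the 50–350 ms window. Under this form, the 0.33 threshold roughly corresponds to a face response twice the non-face response. Function names are hypothetical, and the sketch does not guard against a zero denominator.

```python
import numpy as np


def face_selectivity_index(face_counts, nonface_counts):
    """FSI from spike counts, assuming the standard contrast form."""
    face, nonface = np.mean(face_counts), np.mean(nonface_counts)
    return float((face - nonface) / (face + nonface))


def select_face_cells(cells, threshold=0.33):
    """Indices of cells whose FSI exceeds the threshold.

    cells: list of (face_counts, nonface_counts) pairs, one per neuron.
    """
    return [i for i, (faces, others) in enumerate(cells)
            if face_selectivity_index(faces, others) > threshold]
```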

Artificial neurophysiological data

In order to investigate whether the responses of β-VAE units encoded linear combinations of neural responses, we created artificial neural data by linearly recombining the responses of the real neurons. We first standardised the responses of the 159 recorded neurons across the 2100 face images. We then multiplied the original matrix of neural responses by a random projection matrix A. Each value \(A_{ij}\) of the projection matrix was sampled from the unit Gaussian distribution. The absolute value of the matrix was then taken, and each column was normalised to sum to 1.
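The construction of the artificial data can be sketched in NumPy as follows. The square shape of A (as many artificial neurons as real ones), the seed and the function name are assumptions; the text does not state the number of artificial neurons.

```python
import numpy as np


def artificial_neurons(responses, num_out=None, seed=0):
    """Mix standardised neural responses with a random non-negative projection.

    responses: (images, neurons) array of real responses.
    num_out:   number of artificial neurons; defaults to the number of real
               neurons (an assumption, the shape of A is not stated).
    Returns (artificial responses, projection matrix A).
    """
    z = (responses - responses.mean(axis=0)) / responses.std(axis=0)
    rng = np.random.default_rng(seed)
    a = np.abs(rng.standard_normal((z.shape[1], num_out or z.shape[1])))
    a /= a.sum(axis=0, keepdims=True)  # each column of A sums to 1
    return z @ a, a


# Usage on stand-in data with the recorded dimensions (2100 images, 159 neurons).
rng = np.random.default_rng(42)
real = rng.normal(loc=10.0, scale=3.0, size=(2100, 159))
fake, A = artificial_neurons(real)
```

Because each column of A is non-negative and sums to 1, every artificial neuron is a weighted average of standardised real neurons.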

Neuron subsets

For fairer comparison with the models, which learnt latent representations of size N ∈ [10, 50] as will be described below, we sampled neural subsets with 50 or fewer neurons. To do this, we first uniformly sampled five values from N ∈ [10, 50] without replacement to indicate the size of the subsets. Then, for each size value we sampled 10 random neuron subsets without replacement, resulting in 50 neuron subsets in total.
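One reading of this sampling scheme is sketched below in NumPy. The function and parameter names are hypothetical, and we assume that “without replacement” applies both to the five sizes and to the neurons within each subset.

```python
import numpy as np


def sample_neuron_subsets(num_neurons=159, size_range=(10, 50), num_sizes=5,
                          subsets_per_size=10, seed=0):
    """Draw random neuron subsets whose sizes match the model latent sizes."""
    rng = np.random.default_rng(seed)
    lo, hi = size_range
    # Five distinct subset sizes drawn uniformly from [lo, hi].
    sizes = rng.choice(np.arange(lo, hi + 1), size=num_sizes, replace=False)
    # For each size, 10 subsets; neurons within a subset are drawn without replacement.
    return [rng.choice(num_neurons, size=int(s), replace=False)
            for s in sizes for _ in range(subsets_per_size)]


subsets = sample_neuron_subsets()
```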

Human participants

In order to validate the semantic meaningfulness of the β-VAE latent units shown in Figs. 1c and 2b, and the judged quality of the model reconstructions shown in Fig. 7c, we recruited 600 human participants, 300 for each of the two studies (identifying transformations applied to faces: age 30.81 ± 11.07 years, 117 females; comparing face reconstructions: age 30.75 ± 10.57 years, 123 females). The participants were recruited through the Prolific crowd-sourcing platform. The full details of our study design, including compensation rates, were reviewed and approved by DeepMind’s independent ethical review committee. All participants provided informed consent prior to completing tasks and were reimbursed for their time.

β-VAE model

We used the standard architecture and optimisation parameters introduced in ref. 17 for training the β-VAE (Fig. 8a). The encoder consisted of four convolutional layers (32 × 4 × 4 stride 2, 32 × 4 × 4 stride 2, 64 × 4 × 4 stride 2 and 64 × 4 × 4 stride 2), followed by a 256-d fully connected layer and a 50-d latent representation. The decoder architecture was the reverse of the encoder. We used ReLU activations throughout. The decoder parameterised a Bernoulli distribution. The model was implemented using TensorFlow 1.0 (e.g. see https://github.com/google-research/disentanglement_lib). We used the Adam optimiser with a learning rate of 1e−4 and trained the models for 1 million iterations with a batch size of 16, which was enough to achieve convergence. The models were trained to optimise the following disentangling objective:

\[ \mathcal{L}_{\beta} = \mathbb{E}_{p(\mathbf{x})}\Big[\mathbb{E}_{q_{\phi}(\mathbf{z}\mid\mathbf{x})}\big[\log p_{\theta}(\mathbf{x}\mid\mathbf{z})\big] - \beta\, D_{\mathrm{KL}}\big(q_{\phi}(\mathbf{z}\mid\mathbf{x}) \,\|\, p(\mathbf{z})\big)\Big] \]

where p(x) is the probability of the image data, q(z∣x) is the learnt posterior over the latent units given the data (the encoder, with parameters ϕ), p(x∣z) is the decoder likelihood (with parameters θ), and p(z) is the unit Gaussian prior with a diagonal covariance matrix. Note that due to the limited amount of training data (2100 images compared to typical dataset sizes approaching 1 million images), we were not able to achieve the best disentangling or reconstruction performance that this model class is capable of in principle, resulting in fewer disentangled dimensions discovered by each trained model and coarser reconstructions. That said, the reported models typically still converged on approximately the same disentangled representation. All the results are reported using disentangled dimensions discovered by single models; we never mix or combine disentangled dimensions across models.
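To make the objective concrete, the sketch below evaluates its negative (the quantity one would minimise) for a batch in NumPy. It assumes a diagonal Gaussian posterior given by `mu` and `log_var`, and decoder logits already computed from a sample of z; it illustrates the loss only and is not the TensorFlow training code. The function name is hypothetical. Setting `beta=1.0` recovers the standard VAE loss.

```python
import numpy as np


def beta_vae_loss(x, x_logits, mu, log_var, beta):
    """Negative β-VAE objective averaged over a batch (to be minimised).

    x:           (batch, pixels) images with values in [0, 1]
    x_logits:    (batch, pixels) logits of the Bernoulli decoder p(x|z)
    mu, log_var: (batch, latents) diagonal Gaussian posterior q(z|x)
    """
    # Bernoulli log-likelihood log p(x|z) = x * l - log(1 + exp(l)), computed stably.
    log_lik = np.sum(x * x_logits - np.logaddexp(0.0, x_logits), axis=1)
    # Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)).
    kl = 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=1)
    return float(np.mean(-log_lik + beta * kl))


x = np.zeros((2, 4))
logits = np.zeros((2, 4))
mu, log_var = np.zeros((2, 3)), np.zeros((2, 3))
print(beta_vae_loss(x, logits, mu, log_var, beta=4.0))  # KL term is 0 here
```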

Blue, trainable neural network units free to represent anything. Pink, latent representation units used for comparison with neurons in response to 2100 face images. Grey, units representing class probabilities. CNN, convolutional neural network. FC, fully connected neural network. N, number of latent units. a Self-supervised models: β-VAE17, autoencoder (AE)35 and variational autoencoder (VAE)36,58. Models were trained on the mirror flipped versions of the 2100 faces presented to the primates. Face image reproduced with permission from Gao et al.57. b Classifier baseline. Encoder network, same as in (a). Model trained to differentiate between the 2100 unique face identities using mirror flipped versions of the 2100 faces augmented with 5 × 5 pixel translations. Face image reproduced with permission from Gao et al.57. c VGG baseline32. Encoder network has larger and deeper CNN and FC modules than in (a) and (b). Representation dimensionality is reduced to match other models either by a projection on the first N principal components (PCs) (VGG (PCA)), or by taking a random subset of N units without replacement (VGG (raw)). VGG was trained to differentiate between 2622 unique faces using a face dataset32 unrelated to the 2100 faces presented to the primates. Face image is representative of the images used to train the model and is reproduced with permission from Liu et al.52. d Active appearance model (AAM)3. Keypoints were manually placed on the 2100 face images. First N/2 PCs over the keypoint locations formed the “shape” latent units. First N/2 PCs over the shape-normalised images formed the “appearance” latent units. Figure adapted with permission from Chang et al.3.

Baseline models

We compared β-VAE to a number of baselines to test whether any individual aspects of β-VAE training could account for the quality of its learnt latent units. To disambiguate the role of the learning objective, we compared β-VAE to a traditional autoencoder (AE)35 and a basic variational autoencoder (VAE)36,58. These models had the same architecture, training data and optimisation parameters as the β-VAE (Fig. 8a), and were also implemented in TensorFlow 1.0, but their learning objectives were different. The AE optimised the following objective, which measures the quality of its reconstructions:

\[ \mathcal{L}_{\mathrm{AE}} = \mathbb{E}_{p(\mathbf{x})}\big\| f(\mathbf{x};\theta,\phi) - \mathbf{x} \big\|^{2} \]

where f(x; θ, ϕ) is the image reconstruction produced by putting the original image through the encoder and decoder networks parameterised by ϕ and θ, respectively. The VAE optimised the variational lower bound on the data distribution p(x):

\[ \mathcal{L}_{\mathrm{VAE}} = \mathbb{E}_{p(\mathbf{x})}\Big[\mathbb{E}_{q_{\phi}(\mathbf{z}\mid\mathbf{x})}\big[\log p_{\theta}(\mathbf{x}\mid\mathbf{z})\big] - D_{\mathrm{KL}}\big(q_{\phi}(\mathbf{z}\mid\mathbf{x}) \,\|\, p(\mathbf{z})\big)\Big] \]

where q(z∣x) is the learnt posterior over the latent units given the data, and p(z) is the isotropic unit Gaussian prior.

To test whether the supervised classification objective could be a good alternative to the self-supervised disentangling objective, we compared β-VAE to two classifier neural network baselines. One of these baselines, referred to as the Classifier in all the figures and the text, shared the encoder architecture, the data distribution and the optimisation parameters with the β-VAE (Fig. 8b), but instead of disentangling, it was trained to differentiate between the 2100 faces using a supervised objective. In particular, the four convolutional layers and the fully connected layer of the encoder fed into an N-dimensional representation, which was followed by 2100 logits trained to recognise the 2100 unique face identities. To avoid overfitting, we used early stopping. The final models were trained for between 300k and 1 million training steps. This model was also implemented in TensorFlow 1.0.

The other classifier baseline was the VGG-Face model32 (referred to as the VGG in all the figures and the text), a more powerful deep network developed for state-of-the-art face recognition performance and previously chosen as an appropriate computational model for comparison against neural data in face recognition tasks33,34,59 (Fig. 8c). As in other work6,33,34,59, we used a standard pre-trained MatLab implementation (http://www.vlfeat.org/matconvnet/pretrained/) of the VGG network, trained to differentiate between 2622 unique individuals using a dataset of 982,803 images32. Note that the data used for VGG training were unrelated to the 2100 face images presented to the primates. The VGG therefore had a different architecture, training data distribution and optimisation parameters compared to the β-VAE. The model consisted of 16 convolutional layers, followed by 3 fully connected layers (see ref. 32 for more details). The last hidden layer before the classification logits contained 4096 units. Following the precedent set by refs. 6 and 59, we used PCA to reduce the dimensionality of the VGG representation by projecting the activations in its last hidden layer in response to the 2100 test faces onto the top N principal components (PCs) (Fig. 8c, referred to as VGG (PCA) in figures). Alternatively, we also randomly subsampled the units in the last hidden layer of VGG (without replacement) to control for any potential linear mixing of their responses that PCA could plausibly introduce (Fig. 8c, referred to as VGG (raw) in figures).
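The two ways of reducing the 4096-d VGG layer to N dimensions can be sketched as follows. This NumPy version computes PCA via an SVD of the centred activations rather than calling a particular library, and it uses random stand-in activations; the function names are hypothetical. The design difference shows up in the output: PCA projections are mutually uncorrelated linear mixtures of units, whereas random subsampling keeps the original units unmixed.

```python
import numpy as np


def vgg_pca(activations, n):
    """Project (images, units) activations onto their top-n principal components."""
    centred = activations - activations.mean(axis=0)
    # Rows of vt are principal axes ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return centred @ vt[:n].T


def vgg_raw(activations, n, seed=0):
    """Keep n randomly chosen hidden units (without replacement), unmixed."""
    idx = np.random.default_rng(seed).choice(activations.shape[1], size=n, replace=False)
    return activations[:, idx]


rng = np.random.default_rng(0)
acts = rng.normal(size=(200, 512))   # stand-in for last-hidden-layer activations
pcs, raw = vgg_pca(acts, 20), vgg_raw(acts, 20)
```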

To rule out that the responses of single neurons could be modelled by simply explaining the variance in the data, we compared β-VAE to N PCs produced by applying principal component analysis (PCA) to the 2100 faces using sklearn 0.2 PCA package. To rule out the role of simply finding the independent components of the data during β-VAE training, we compared β-VAE to the N independent components discovered by independent component analysis (ICA) applied to the 2100 face images using sklearn 0.2 FastICA package.

Finally, we also compared β-VAE to the active appearance model (AAM). Linear combinations of small numbers of its latent units (six on average) were previously reported to explain the responses of single neurons in the primate AM area well3,6. We re-used the AAM latent units from ref. 6. These were obtained by setting 80 landmarks on each of the 2100 facial images presented to the primates. The positions of landmarks were normalised to calculate the average shape template. Each face was warped to the average shape using spline interpolation. The warped image was normalised and reshaped to a 1-d vector. PCA was carried out on landmark positions and shape-free intensity independently. The first N/2 shape PCs and the first N/2 appearance PCs were concatenated to produce the N-dimensional AAM representations (Fig. 8d).
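The final concatenation step can be sketched as below, assuming landmark coordinates and shape-normalised images are already available as arrays; the spline warping step is omitted. Function names and input shapes are illustrative assumptions, and PCA is computed via SVD. The requirement that N be even is what limits the number of AAM instances for N ∈ [10, 50].

```python
import numpy as np


def top_pcs(data, k):
    """Scores of (samples, features) data on its top-k principal components."""
    centred = data - data.mean(axis=0)
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return centred @ vt[:k].T


def aam_representation(landmarks, shape_free_pixels, n):
    """Concatenate n/2 shape PCs and n/2 appearance PCs (n must be even).

    landmarks:         (faces, 160) flattened x/y positions of 80 landmarks.
    shape_free_pixels: (faces, pixels) images warped to the average shape.
    """
    if n % 2:
        raise ValueError("N must split evenly into shape and appearance units")
    return np.hstack([top_pcs(landmarks, n // 2), top_pcs(shape_free_pixels, n // 2)])


rng = np.random.default_rng(0)
landmarks = rng.normal(size=(100, 160))   # stand-in landmark coordinates
pixels = rng.normal(size=(100, 400))      # stand-in shape-free images
rep = aam_representation(landmarks, pixels, 20)
```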

Hyperparameter sweep

To ensure that all models had a fair chance of learning a useful representation, we trained multiple instances of each model class using different hyperparameter settings. The choice of hyperparameters and their values were dependent on the model class. However, all models went through the same model selection pipeline: (1) K model instances with different hyperparameter settings were obtained as appropriate; (2) S ⊆ K models with the best performance on the training objective were selected; (3) models that did not discover any latent units that shared information with the neural responses were excluded, resulting in M ⊆ S models retained for the final analyses. These steps are expanded below for each model class.

For the β-VAE model, the main hyperparameter of interest that affects the quality of the learnt latent units is the value of β. The β hyperparameter controls the degree of disentangling achieved during training, as well as the intrinsic dimensionality of the learnt latent representation17. Typically, β > 1 is necessary to achieve good disentangling; however, the exact value differs across datasets. Hence, we uniformly sampled 40 values of β in the [0.5, 20] range. Another factor that affects the quality of disentangled representations is the random initialisation seed for training the models30. Hence, for each β value, we trained 10 models from different random initialisation seeds, resulting in a total pool of 400 trained β-VAE model instances to choose from. All β-VAE models were initialised with N = 50 latent units; however, because of the variation in β, the intrinsic dimensionality of the trained models varied between 10 and 50.
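The resulting grid of β-VAE runs can be enumerated as below. Whether the 40 β values were drawn at random or evenly spaced is not stated; this sketch assumes random uniform draws, and the function name and seed are hypothetical.

```python
import numpy as np


def beta_vae_sweep(num_betas=40, beta_range=(0.5, 20.0), seeds_per_beta=10, seed=0):
    """List (beta, seed) pairs: num_betas uniform draws, seeds_per_beta seeds each."""
    rng = np.random.default_rng(seed)
    betas = rng.uniform(beta_range[0], beta_range[1], size=num_betas)
    return [(float(b), s) for b in betas for s in range(seeds_per_beta)]


configs = beta_vae_sweep()
```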

In order to isolate the role of disentangling within the β-VAE optimisation objective from the self-supervision aspect of training, we kept as many choices as possible unchanged between the β-VAE and the AE/VAE baselines: the model architecture, optimiser, learning rate, batch size and number of training steps. The remaining free hyperparameters that could affect the quality of the AE/VAE learnt latent units were the random initialisation seeds, and the number of latent units N. The latter was necessary to sweep over explicitly, since AE and VAE models do not have an equivalent to the β hyperparameter that affects the intrinsic dimensionality of the learnt representation. Hence, we trained 100 model instances for each of the AE and VAE model classes, with five values of N sampled uniformly without replacement from N ∈ [10, 50], each trained from 20 random initialisation seed values.

For the Classifier baseline, we used the following hyperparameters for the initial selection: five values of N ∈ [10, 50] sampled uniformly without replacement, as well as a number of learning rate values {1e−3, 1e−4, 1e−5, 1e−6, 1e−7} and batch sizes {16, 64, 128, 256}, resulting in 100 model instances. We trained the models with early stopping to avoid overfitting, and used the classification performance on the validation set to choose the settings for the learning rate and batch size. We found that the values used for training β-VAE, AE and VAE (learning rate 1e−4, batch size 16) were also reasonable for training the Classifier, achieving >95% classification accuracy. Hence, we trained the final set of 450 Classifier model instances with fixed learning rate and batch size, five values of N ∈ [10, 50] sampled uniformly without replacement and 50 random seeds.

We used the sklearn 0.2 FastICA algorithm60 to extract ICA units; its output depends on the random initialisation seed. Hence, for each N ∈ [10, 50] we extracted N independent components with 10 random initialisation seeds each, resulting in 410 ICA model instances.

The remaining baseline models relied on using a single canonical model instance (VGG and AAM) and/or on a deterministic dimensionality reduction process (PCA, AAM, VGG). Hence, the random seed hyperparameter did not apply to them. In order to make a fairer comparison with the other baselines, we therefore created different model instances by extracting different numbers of representation dimensions with N ∈ [10, 50], resulting in 41 PCA and VGG (PCA) model instances, and 21 AAM instances (since N needs to split evenly into shape- and appearance-related units). For the VGG (raw) variant, we first uniformly sampled five values from N ∈ [10, 50] without replacement to indicate the size of the hidden unit subsets. Then, for each size value we sampled 10 random hidden unit subsets without replacement, resulting in 50 VGG (raw) model instances in total.
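The instance counts above follow from simple arithmetic over N ∈ [10, 50], which the short sketch below checks (the function name is hypothetical).

```python
def deterministic_instance_counts(n_min=10, n_max=50, raw_sizes=5, raw_subsets=10):
    """Number of instances per deterministic baseline obtained by varying N."""
    ns = range(n_min, n_max + 1)
    return {
        "PCA": len(ns),                            # one instance per N
        "VGG (PCA)": len(ns),
        "AAM": sum(1 for n in ns if n % 2 == 0),   # N must be even
        "VGG (raw)": raw_sizes * raw_subsets,      # 5 sizes x 10 random subsets
    }


counts = deterministic_instance_counts()
```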

Model selection based on training performance

For each model class, apart from the deterministic baselines (PCA, AAM and VGG), we selected a subset of model instances based on their training performance. For the β-VAEs, we used the recently proposed Unsupervised Disentanglement Ranking (UDR) score31, implemented in MatLab R2017b, to select 51 model instances with the most disentangled representations (within the top 15% of UDR scores) for further analysis. For the AE baseline, we selected 50 model instances with the lowest reconstruction error per chosen value of N. For the VAE baseline, we selected 50 model instances with the highest lower bound on the training data distribution per chosen value of N. Finally, for the Classifier baseline, we selected 81 models that achieved >95% classification accuracy on the test set.

Filtering out uninformative models

To ensure that all models used in the final analyses shared at least some information with the recorded neural population, we performed the following filtering procedure. First, we trained Lasso regressors (MatLab R2017b), as described in the Variance explained section below, to predict the responses of each neuron across the 2100 faces from the population of latent units extracted from each trained model. We then calculated the mean variance explained (VE) across all neurons for each model. We filtered out all models where \(\mathrm{VE} < \overline{\mathrm{VE}} - \mathrm{SD}(\mathrm{VE})\), where \(\overline{\mathrm{VE}}\) and SD(VE) are the mean and standard deviation of VE scores across all models, respectively.
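The filtering rule takes only a few lines of NumPy. This is a sketch: it takes each model's mean VE as input, and it uses the sample standard deviation (ddof=1, the default of MATLAB's `std`) as an assumption about how SD(VE) was computed. The function name is hypothetical.

```python
import numpy as np


def filter_uninformative(mean_ve, ddof=1):
    """Keep models whose mean VE is not below mean(VE) - SD(VE).

    Returns (indices of retained models, cutoff).
    """
    ve = np.asarray(mean_ve, dtype=float)
    cutoff = ve.mean() - ve.std(ddof=ddof)
    return np.flatnonzero(ve >= cutoff), float(cutoff)


kept, cutoff = filter_uninformative([0.10, 0.20, 0.30, 0.40, 0.02])
```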

The full-model selection pipeline resulted in 51 β-VAE model instances, 50 AE, VAE and ICA model instances, 41 PCA and VGG (PCA) model instances, 22 VGG (raw) model instances, 21 AAM model instances and 64 Classifier model instances that were used for further analyses.

Human psychophysics: identifying transformations applied to faces

Because there is no readily available metric with which to quantify the interpretability of β-VAE latent units, we designed a psychophysical study to test the null hypothesis that people cannot agree upon the variable coded by each latent unit. To validate the semantic meaningfulness of β-VAE latent units, we asked 300 participants to select which of the 17 provided options best described the transformation generated by traversing a single latent unit. The following label options were presented to the participants: age, chin size, ethnicity, eye distance, eye size, eye slant, eyebrow position, face length, face shape, forehead size, fringe/bangs, gender, hair density, hair length, nose size, smiling and none of the above. Since all well-disentangled β-VAE models learnt approximately the same representation, we found corresponding units in two trained, well-disentangled β-VAE models and presented traversals of the two corresponding units, applied to two different faces, for the participants to label. The participants were presented with the following instructions: “You will be asked to identify 11 transformations applied to faces. For each transformation, you will be provided with two examples containing two different faces, each one transformed in the same way. You will be asked to make a judgement of what the transformation is, and select an answer from the drop-down list that best matches your guess.”. Each face transformation was generated by traversing the value of one chosen unit of a pre-trained β-VAE between −3 and 3, while keeping all other units fixed to their inferred values. The participants were asked to describe the resulting transformation of the reconstructed face using one of 16 label options (plus “none of the above”), which were extracted from a list of 46 descriptive face attributes compiled in consultation with sketch artists to produce reliable and identifiable sketches of faces61. The full set of 46 attributes was not used, because it would have been too long and confusing for the participants to parse. Furthermore, many of the attributes in the list were not applicable to the current study (e.g. eye colour). To select the subset of attributes to use in this study, we recruited 6 pilot participants, asked them to match the traversals to the most appropriate descriptors from the full list of 46 attributes, and used the union of their choices as the final set. We also re-worded some of the attribute names to make them more appropriate for labelling transformations (e.g. we changed “small eyes” to “eye size”). The participants were presented with three worked examples before being asked to make the judgements. The presentation order of the latent traversals to be judged was randomised.
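Generating the latent codes for one traversal can be sketched as follows; each row would then be passed through the trained decoder to render one frame. The number of steps (7) and the function name are assumptions, and the decoder itself is not shown.

```python
import numpy as np


def traversal_codes(inferred_z, unit, num_steps=7, low=-3.0, high=3.0):
    """Latent codes that sweep one unit from low to high, others held fixed.

    inferred_z: latent means inferred by the encoder for one face.
    """
    codes = np.tile(np.asarray(inferred_z, dtype=float), (num_steps, 1))
    codes[:, unit] = np.linspace(low, high, num_steps)
    return codes


codes = traversal_codes([0.5, -1.0, 2.0], unit=1)
```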

The experiment took on average 670 ± 430 s to complete. For each latent unit, we calculated the distribution of labels chosen by the participants over all labelling options. To measure how semantically meaningful each latent was, we calculated the entropy of the resulting distribution (being further away from the maximum entropy indicates better consensus by the participants on the semantic meaning of the latent), as well as the maximum proportion of participants who agreed on the label for each latent. We found that 6/11 latents had an agreement of >30% (significantly above the 11.82% agreement expected by chance, p = 0.001), with the observed entropy unlikely under the uniform prior distribution (p = 0.0001, see Supplementary Fig. 2). Note that for some of the latent units, we found that the participants’ label choices were split between closely related concepts, e.g. eye distance and eye size; hair density and hair length; face length, face shape and forehead size; gender and hair length; and age and hair density.
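The two consensus measures can be computed per latent unit as below, assuming each participant's choice is encoded as an integer index into the 17 options (the function name is hypothetical). The maximum possible entropy, log2(17) ≈ 4.09 bits, corresponds to participants choosing uniformly at random. This sketch does not attempt to reproduce the chance level or p-values reported above.

```python
import numpy as np


def label_consensus(labels, num_options=17):
    """Entropy (bits) of the chosen-label distribution and the top agreement.

    labels: integer option indices (0..num_options-1), one per participant.
    """
    counts = np.bincount(labels, minlength=num_options)
    p = counts / counts.sum()
    nonzero = p[p > 0]
    entropy = float(-(nonzero * np.log2(nonzero)).sum())
    return entropy, float(p.max())


max_entropy = np.log2(17)  # uniform choice over all 17 options
```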

Human psychophysics: comparing face reconstructions

We measured the subjective quality of VAE, AE and β-VAE reconstruction of novel faces from single neurons by asking 300 participants to rank the three reconstructions as “Best”, “OK” or “Worst” using a randomised block design. Out of the 62 novel faces used in this study, we had to remove 5 due to legal requirements. The participants were presented with the following instructions: “We would like to compare the quality of face image reconstructions produced by three different systems. You will be presented with 57 face images, each one with three reconstructions by three different systems. The order in which the reconstructions are presented is random, so its position (left, middle or right) does not indicate which system it came from. For each image, we would like you to rate how much the three reconstructions resemble the original. We recommend that you make your judgement on the first holistic impression.”.

The experiment took on average 1070 ± 725 s to complete. A Friedman test62 at the α = 0.05 significance level (Python 3.6 scipy stats.friedmanchisquare implementation) was applied to the collected ranking scores, and the null hypothesis that all three models had the same reconstruction quality was rejected. Post hoc pairwise comparisons across all 57 images revealed no statistically significant difference between β-VAE and VAE reconstructions, while significant differences were found between AE and each of the other two models. Post hoc pairwise comparisons for each image are presented in Supplementary Fig. 3.
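A minimal sketch of the omnibus test, assuming the answers are coded as 1 = “Best”, 2 = “OK”, 3 = “Worst” and arranged with one row per ranking (one participant's judgement of one image) and one column per model. The column order, coding and function name are illustrative; the post hoc pairwise procedure is not specified above, so it is not sketched here.

```python
import numpy as np
from scipy import stats

RANK_CODE = {"Best": 1, "OK": 2, "Worst": 3}  # assumed coding of the three answers


def friedman_on_rankings(rankings, alpha=0.05):
    """Friedman test on an (n_blocks, n_models) array of within-block ranks.

    Each row is one block (one participant ranking one image); each column is
    one model, e.g. AE, VAE and beta-VAE. Returns (statistic, p value, reject H0?).
    """
    rankings = np.asarray(rankings, dtype=float)
    columns = [rankings[:, k] for k in range(rankings.shape[1])]
    result = stats.friedmanchisquare(*columns)
    return result.statistic, result.pvalue, bool(result.pvalue < alpha)


# Synthetic example (not study data): AE is always ranked worst,
# while VAE and beta-VAE alternate between "Best" and "OK".
example = [[RANK_CODE["Worst"], RANK_CODE["Best"], RANK_CODE["OK"]],
           [RANK_CODE["Worst"], RANK_CODE["OK"], RANK_CODE["Best"]]] * 20
stat, p, reject = friedman_on_rankings(example)
```

In this synthetic case the rank sums are 120, 60 and 60 over 40 blocks, so the Friedman statistic is 60 and the null hypothesis of equal quality is rejected.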

Variance explained

We used Lasso regression to predict the response of each neuron \(\mathbf{n}_j\) from the model units. Using standardised units and neural responses, we ran 10-fold cross-validation over 100 lambda values and selected the sparsest weights whose mean squared error (MSE) between the predicted neural responses \(\hat{\mathbf{n}}_j\) and the real neural responses \(\mathbf{n}_j\) was within one standard error of the smallest MSE. The learnt weight vectors were then used to predict the neural responses from the model units on the test set of images. Variance explained (VE) was calculated on the test set as follows:

\[ \mathrm{VE}_j = 1 - \frac{\sum_i \left(n_{ij} - \hat{n}_{ij}\right)^2}{\sum_i \left(n_{ij} - \bar{n}_j\right)^2} \]

where j is the neuron index, i is the test image index, \(n_{ij}\) and \(\hat{n}_{ij}\) are the real and predicted responses of neuron j to test image i, and \(\bar{n}_j\) is the mean response magnitude for neuron j across all test images. To speed up the Lasso regression calculations, we zeroed out the responses of model units that carried little information about the face images. We defined units as “uninformative” if their standardised responses had low variance (σ² < 0.01) across the dataset of 2100 faces, and verified that this did not affect the sparsity of the resulting Lasso regression weights.
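The unit filtering, the one-standard-error selection rule and the test-set VE described above can be sketched with NumPy. This is an illustration under stated assumptions: the cross-validated MSE array is taken as given (the Lasso fitting itself is not shown), a larger lambda is assumed to give a sparser Lasso solution, and all function names are hypothetical.

```python
import numpy as np


def drop_uninformative(units, threshold=0.01):
    """Zero out model units whose response variance across images is below threshold.

    units: (n_images, n_units). Returns the filtered copy and the informative mask.
    """
    units = np.asarray(units, dtype=float).copy()
    informative = units.var(axis=0) >= threshold
    units[:, ~informative] = 0.0
    return units, informative


def one_se_lambda(lambdas, cv_mse):
    """Largest lambda (sparsest fit) whose mean CV MSE is within one SE of the minimum.

    cv_mse: (n_lambdas, n_folds) held-out MSE for each lambda and fold.
    """
    lambdas = np.asarray(lambdas, dtype=float)
    cv_mse = np.asarray(cv_mse, dtype=float)
    mean = cv_mse.mean(axis=1)
    se = cv_mse.std(axis=1, ddof=1) / np.sqrt(cv_mse.shape[1])
    best = np.argmin(mean)
    within = mean <= mean[best] + se[best]
    return float(lambdas[within].max())


def variance_explained(n_true, n_pred):
    """Per-neuron VE on test images; n_true, n_pred: (n_test_images, n_neurons)."""
    n_true = np.asarray(n_true, dtype=float)
    n_pred = np.asarray(n_pred, dtype=float)
    ss_res = ((n_true - n_pred) ** 2).sum(axis=0)
    ss_tot = ((n_true - n_true.mean(axis=0)) ** 2).sum(axis=0)
    return 1.0 - ss_res / ss_tot
```

A perfect prediction gives VE = 1, and predicting every image with the neuron's mean response gives VE = 0.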

Note that the proportion of explained variance for each neuron is typically normalised by the neuron’s Spearman-Brown corrected split-half self-consistency across image presentation repetitions (e.g. see Yamins et al.10). These data, however, were not available to us, so our encoding variance explained values are lower than typically reported values because they are not corrected for neural noise. Chang et al.6 used a different, classification-based method to quantify how well β-VAE, AAM and VGG explain the responses of the same 159 neurons. Their approach did not require noise normalisation, and they reported strong performance across models. Our variance explained results are consistent with those reported in Chang et al.6.
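For context, the noise normalisation that could not be applied here can be sketched as below. This assumes access to per-repetition responses of a single neuron (which, as noted, were not available) and shows one common form of the normalisation, dividing raw VE by the Spearman-Brown corrected split-half correlation \(r_{SB} = 2r/(1 + r)\); the function names and the random split are illustrative choices.

```python
import numpy as np


def split_half_reliability(responses, seed=0):
    """Spearman-Brown corrected split-half self-consistency of ONE neuron.

    responses: (n_repeats, n_images) responses to repeated image presentations.
    Repeats are split at random into two halves; the per-image means of the two
    halves are correlated and the correlation is corrected with 2r / (1 + r).
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(responses.shape[0])
    half = responses.shape[0] // 2
    a = responses[order[:half]].mean(axis=0)
    b = responses[order[half:]].mean(axis=0)
    r = np.corrcoef(a, b)[0, 1]
    return 2.0 * r / (1.0 + r)


def noise_normalised_ve(ve, reliability):
    """Raw variance explained divided by the neuron's corrected self-consistency."""
    return ve / reliability
```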

Alignment score

Two versions of the same measure were proposed simultaneously and independently in the machine learning literature, under the names “completeness”29 and “compactness”63. In this work we refer to this measure as “alignment” for a more intuitive exposition.

This score measures how well the responses of single neurons are explained by single model units. A perfect score of 1 is achieved if each well-explained neuron is explained well by the activity of only a single model unit. If more than one model unit is needed to explain the activity of a single neuron, the score is reduced. However, if the activity of a neuron cannot be explained by any of the model units, the score is unaffected.

First, we obtained the matrix R needed to calculate the score by training Lasso regressors to predict the responses of each neuron from the population of model latent units. When calculating completeness against the original neural responses, we followed the same procedure as for the variance explained calculations. When calculating completeness against the artificial (linearly recombined) neural responses, we did not zero out the responses of the “uninformative” units, because in this case doing so affected the sparsity of the resulting Lasso regression weights. Instead, to speed up calculations, we reduced the number of cross-validation splits from ten to three. The completeness score \(C_j\) for neuron j was calculated as follows:

\[ C_j = 1 - H_D\left(\tilde{R}_{\cdot j}\right) = 1 + \sum_{d=1}^{D} \tilde{R}_{dj} \log_D \tilde{R}_{dj}, \qquad \tilde{R}_{dj} = \frac{R_{dj}}{\sum_{k=1}^{D} R_{kj}} \]

where j indexes over neurons, d indexes over model units, D is the total number of model units, \(R_{dj}\) is the magnitude of the Lasso weight of model unit d for neuron j, and \(H_D\) denotes entropy with logarithm base D. The overall completeness score per model is equal to the sum of all per-neuron completeness scores, \(C = \sum_j C_j\). See ref. 29 for more details.
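The completeness calculation can be sketched in NumPy as below, a minimal illustration assuming R holds the magnitudes of the fitted Lasso weights with model units as rows and neurons as columns (D ≥ 2). Two details are assumptions rather than statements from the text: a neuron with an all-zero column is given \(C_j = 0\), and the optional weighted aggregate follows the DCI-style convention of weighting each neuron by its share of the total importance, under which a perfect score equals 1. The open-source disentanglement_lib implementation linked under Code availability is the one the authors point to.

```python
import numpy as np


def completeness_per_neuron(R):
    """C_j = 1 + sum_d P_dj log_D P_dj, with P_dj = |R_dj| / sum_k |R_kj|.

    R: (D model units, J neurons) importance matrix, e.g. Lasso weight magnitudes.
    A neuron that no unit explains (all-zero column) gets C_j = 0 (an assumption).
    """
    R = np.abs(np.asarray(R, dtype=float))
    D = R.shape[0]
    col_sums = R.sum(axis=0)
    P = np.divide(R, col_sums, out=np.zeros_like(R), where=col_sums > 0)
    safe_P = np.where(P > 0, P, 1.0)        # log(1) = 0, so zero entries add nothing
    plogp = P * np.log(safe_P) / np.log(D)  # entropy terms with log base D
    return np.where(col_sums > 0, 1.0 + plogp.sum(axis=0), 0.0)


def alignment_score(R, weighted=False):
    """Aggregate the per-neuron completeness scores.

    weighted=False: plain sum C = sum_j C_j, as stated in the text.
    weighted=True: assumed weighting by each neuron's share of total importance.
    """
    R = np.abs(np.asarray(R, dtype=float))
    c = completeness_per_neuron(R)
    if not weighted:
        return float(c.sum())
    rho = R.sum(axis=0) / R.sum()
    return float((rho * c).sum())
```

A one-to-one importance matrix (the identity) gives \(C_j = 1\) for every neuron, whereas spreading each neuron's importance evenly over all units gives \(C_j = 0\).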

Unsupervised Disentanglement Ranking (UDR) score

The UDR score31 measures the quality of disentanglement achieved by trained β-VAE models by performing pairwise comparisons between the representations learnt by models trained with the same hyperparameter setting but different seeds. The measure relies on the assumption that, for any particular dataset, well-disentangled β-VAE models will converge on the same representation up to permutation, subsetting (different models may discover different subsets of all disentangled dimensions) and sign inversion (for example, some models may represent age from young to old, while others represent it from old to young). This approach requires no access to labels or neural data. We used the Spearman version of the UDR score described in ref. 31. For each trained β-VAE model, we performed 9 pairwise comparisons with all other models trained with the same β value and calculated the corresponding UDRij score, where i and j index the two β-VAE models. Each UDRij score is calculated from the similarity matrix Rij, where each entry is the Spearman correlation between the responses of individual latent units of the two models. The absolute value of the similarity matrix, ∣Rij∣, is then taken, and the final score for each pair of models is calculated according to

where a and b index into the latent units of models i and j, respectively, \(r_a = \max_a R(a,b)\) and \(r_b = \max_b R(a,b)\). \(I_{KL}\) indicates the “informative” latent units within each model, and d is the number of such latent units. The final score for model i is calculated by taking the median of UDRij across all j.
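A NumPy sketch of the pairwise comparison, based on our reading of ref. 31: the absolute Spearman correlation matrix between the informative latents of two models is scored by how strongly each row and each column is dominated by a single entry (squared maximum divided by the sum), summed over rows and columns and divided by the total number of informative latents \(d_a + d_b\); a model's final score is the median over its pairwise scores. The informative masks are taken as inputs (in ref. 31 they are derived from each latent's KL divergence), ranks are computed without tie handling, and all function names are hypothetical.

```python
import numpy as np


def _abs_spearman(za, zb):
    """|Spearman r| between each latent of za (n, A) and of zb (n, B); assumes no ties."""
    ra = za.argsort(axis=0).argsort(axis=0).astype(float)
    rb = zb.argsort(axis=0).argsort(axis=0).astype(float)
    ra = (ra - ra.mean(axis=0)) / ra.std(axis=0)
    rb = (rb - rb.mean(axis=0)) / rb.std(axis=0)
    return np.abs(ra.T @ rb / za.shape[0])


def udr_pair(za, zb, info_a, info_b):
    """UDR_ij for two models; info_a, info_b are boolean masks of informative latents."""
    R = _abs_spearman(za, zb)[np.ix_(info_a, info_b)]
    d_a, d_b = R.shape
    col_term = (R.max(axis=0) ** 2 / R.sum(axis=0)).sum()  # one term per latent of model j
    row_term = (R.max(axis=1) ** 2 / R.sum(axis=1)).sum()  # one term per latent of model i
    return float((col_term + row_term) / (d_a + d_b))


def udr_model_score(latents, informative, i):
    """Median UDR_ij of model i against every other model trained with the same beta."""
    scores = [udr_pair(latents[i], latents[j], informative[i], informative[j])
              for j in range(len(latents)) if j != i]
    return float(np.median(scores))
```

Because each row and column term equals 1 when a single latent dominates it, two models that differ only by a permutation and sign flips of their latents score close to 1, while unrelated representations score close to 0.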

Average correlation ratio and average unit proportion

For each neuron, we calculated the absolute magnitude of Pearson correlation with each of the “informative” model units. We then calculated the ratio between the highest correlation and the sum of all correlations per neuron. The ratio scores were then averaged (mean) across the set of unique model units with the highest ratios, and this formed the average correlation ratio score per model. The number of unique model units with the highest ratios divided by the total number of informative model units formed the average unit proportion score.
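The two scores can be sketched as follows. The text does not fully specify how the ratios are aggregated per unique model unit, so this sketch assumes that, for each model unit that is the best match of at least one neuron, the highest ratio among those neurons is kept before averaging; the function names are hypothetical and `units` is assumed to contain only the informative model units.

```python
import numpy as np


def _zscore(x):
    return (x - x.mean(axis=0)) / x.std(axis=0)


def correlation_ratio_scores(units, neurons):
    """Average correlation ratio and average unit proportion for one model.

    units: (n_images, U) responses of the informative model units.
    neurons: (n_images, J) neural responses to the same images.
    """
    n_images, n_units = units.shape
    C = np.abs(_zscore(neurons).T @ _zscore(units) / n_images)  # (J, U) |Pearson r|
    best_unit = C.argmax(axis=1)           # best-correlated unit for each neuron
    ratio = C.max(axis=1) / C.sum(axis=1)  # highest |r| relative to the sum of |r|
    unique_units = np.unique(best_unit)
    # Assumption: keep the highest ratio among neurons that share a best unit.
    per_unit = [ratio[best_unit == u].max() for u in unique_units]
    avg_ratio = float(np.mean(per_unit))
    unit_proportion = len(unique_units) / n_units
    return avg_ratio, unit_proportion
```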

Decoding novel faces from single neurons

We first found the best one-to-one match between single model units and single neurons. To do this, we calculated a correlation matrix Dij = Corr(zi, rj) between the responses of each model unit zi and the responses of each neuron rj over the subset of 2100 face images seen by both the models and the primates, where Corr denotes Pearson correlation. We then used the MATLAB R2017b implementation of the Kuhn-Munkres64,65 algorithm (matchpairs) to find the one-to-one assignment between model units and unique neurons that minimised the total (1 − Dij) score across all matched pairs. For each matched pair, we regressed the responses of the latent unit on the responses of its corresponding neuron, estimating the regression parameters on the same subset of 2100 face images, and applied the fitted regression to the neuron’s responses to the 62 held-out faces. We standardised both model units and neural responses for the regression. The resulting predicted latent unit responses were fed into the pre-trained model decoder to obtain reconstructions of the novel faces. We calculated the cosine distance between the standardised predicted and real latent unit responses for each face (after filtering out the “uninformative” units), and report the mean across the 62 held-out faces for each model.
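The matching, per-pair regression and cosine-distance steps can be sketched in Python as below. This is an illustration, not the MATLAB code used: SciPy's `linear_sum_assignment` stands in for `matchpairs`, held-out neural responses are standardised with training-set statistics, latents without a matched neuron are left at 0 (their standardised mean), and the decoder step is omitted. All function names are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_units_to_neurons(units, neurons):
    """One-to-one assignment minimising the summed (1 - Pearson r).

    units: (n_images, U); neurons: (n_images, N), typically with N >= U.
    """
    zu = (units - units.mean(0)) / units.std(0)
    zn = (neurons - neurons.mean(0)) / neurons.std(0)
    D = zu.T @ zn / units.shape[0]  # D[i, j] = corr(z_i, r_j)
    rows, cols = linear_sum_assignment(1.0 - D)
    return dict(zip(rows.tolist(), cols.tolist()))


def decode_latents(train_units, train_neurons, test_neurons, matching):
    """Predict standardised latents for held-out images from matched single neurons."""
    u_mu, u_sd = train_units.mean(0), train_units.std(0)
    n_mu, n_sd = train_neurons.mean(0), train_neurons.std(0)
    zu = (train_units - u_mu) / u_sd
    zn_train = (train_neurons - n_mu) / n_sd
    zn_test = (test_neurons - n_mu) / n_sd  # held-out faces, training statistics
    pred = np.zeros((test_neurons.shape[0], train_units.shape[1]))
    for unit, neuron in matching.items():
        slope, intercept = np.polyfit(zn_train[:, neuron], zu[:, unit], 1)
        pred[:, unit] = slope * zn_test[:, neuron] + intercept
    return pred  # a decoder (not shown) would map these latents to images


def mean_cosine_distance(pred, true):
    """Mean over faces of 1 - cos(angle) between predicted and real latent vectors."""
    cos = (pred * true).sum(1) / (np.linalg.norm(pred, axis=1) * np.linalg.norm(true, axis=1))
    return float(np.mean(1.0 - cos))
```

Minimising the summed (1 − r) cost favours pairs with strong positive correlation, which is what makes the single-neuron regressions informative about the matched latents.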

Statistical tests

We used the MATLAB R2017b implementation of Welch’s t-test (ttest2), assuming unequal variances and α = 0.01, for all pairwise model comparisons.
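For readers working in Python, the same kind of comparison can be sketched with SciPy's `stats.ttest_ind` with `equal_var=False`, which performs Welch's test. This is an illustrative equivalent rather than the MATLAB code used, and the function name is hypothetical.

```python
import numpy as np
from scipy import stats


def welch_compare(scores_a, scores_b, alpha=0.01):
    """Two-sample t-test without assuming equal variances (Welch's t-test).

    Returns (t statistic, two-sided p value, significant at alpha?).
    """
    result = stats.ttest_ind(np.asarray(scores_a), np.asarray(scores_b), equal_var=False)
    return float(result.statistic), float(result.pvalue), bool(result.pvalue < alpha)
```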

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The unprocessed responses of all models to the 2162 face images generated in this study have been deposited in the figshare database (https://doi.org/10.6084/m9.figshare.c.5613197.v2). This includes AAM, VGG (PCA), VAE and β-VAE responses previously published in Chang et al.6. The figshare database also includes the anonymised psychophysics data, a file describing how the semantic labels used in one of the psychophysics studies were obtained from the larger list of 46 descriptive face attributes compiled in Klare et al.61, and the two sample forms used for data collection on Prolific. The raw neural data supporting the current study were previously published in Chang et al.6 and are available under restricted access because of the complexity of the customised data structure and the size of the data; access can be obtained by contacting Le Chang (stevenlechang@gmail.com) or Doris Tsao (tsao.doris@gmail.com). The face image data used in this study are available in the corresponding databases: FERET face database55 (https://www.nist.gov/itl/iad/image-group/color-feret-database), CVL face database54 (http://lrv.fri.uni-lj.si/facedb.html), MR2 face database56 (http://ninastrohminger.com/the-mr2), PEAL face database57, AR face database51 (http://www2.ece.ohio-state.edu/aleix/ARdatabase.html), Chicago face database53 (https://www.chicagofaces.org) and CelebA face database52 (http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html). Source data are provided with this paper.

Code availability

The code that supports the findings of this study is available upon request from Irina Higgins (irinah@google.com) due to its complexity and partial reliance on proprietary libraries. Open-source implementations of the β-VAE model, the alignment score and the UDR measure are available at https://github.com/google-research/disentanglement_lib.

References

DiCarlo, J., Zoccolan, D. & Rust, N. How does the brain solve visual object recognition? Neuron 73, 415–434 (2012).

Hubel, D. H. & Wiesel, T. N. Receptive fields of single neurones in the cat’s striate cortex. J. Physiol. 124, 574–591 (1959).

Chang, L. & Tsao, D. Y. The code for facial identity in the primate brain. Cell 169, 1013–1028 (2017).

Tsao, D. Y., Freiwald, W. A., Tootell, R. B. & Livingstone, M. S. A cortical region consisting entirely of face-selective cells. Science 311, 670–674 (2006).

Tsao, D. Y. & Livingstone, M. S. Mechanisms of face perception. Annu. Rev. Neurosci. 31, 411–437 (2008).

Chang, L., Egger, B., Vetter, T. & Tsao, D. Y. Explaining face representation in the primate brain using different computational models. Curr. Biol. 31, 2785–2795 (2021).

Richards, B. A. et al. A deep learning framework for neuroscience. Nat. Neurosci. 22, 1546–1726 (2019).

Yamins, D. L. K. & DiCarlo, J. J. Using goal-driven deep learning models to understand sensory cortex. Nat. Neurosci. 19, 356–365 (2016).

He, K., Zhang, X., Ren, S. & Sun, J. Delving deep into rectifiers: surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, ICCV. Vol. 1, 1026–1034 (ICCV, 2015).

Yamins, D. L. K. et al. Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proc. Natl Acad. Sci. USA 111, 8619–8624 (2014).

Khaligh-Razavi, S. & Kriegeskorte, N. Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS Comput. Biol. 10, e1003915 (2014).

Bashivan, P., Kar, K. & DiCarlo, J. J. Neural population control via deep image synthesis. Science 364, eaav9436 (2019).

Slone, L. & Johnson, S. Infants’ statistical learning: 2- and 5-month-olds’ segmentation of continuous visual sequences. J. Exp. Child Psychol. 133, 47–56 (2015).

Lindsay, G. Convolutional neural networks as a model of the visual system: past, present, and future. J. Cogn. Neurosci. 33, 2017–2031 (2021).

Thompson, J. A. F., Bengio, Y., Formisano, E. & Schönwiesner, M. How can deep learning advance computational modeling of sensory information processing? In NeurIPS Workshop on Representation Learning in Artificial and Biological Neural Networks Report number: MLINI/2016/04, arXiv:1810.08651v1 [cs.NE] (MLINI, 2016).

Bengio, Y., Courville, A. & Vincent, P. Representation learning: a review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 35, 1798–1828 (2013).

Higgins, I. et al. β-VAE: learning basic visual concepts with a constrained variational framework. In Proceedings of the 5th International Conference on Learning Representations, ICLR (ICLR, 2017).

Burgess, C. P. et al. MONet: Unsupervised scene decomposition and representation. Preprint at https://arxiv.org/abs/1901.11390 (2019).

Lee, W., Kim, D., Hong, S. & Lee, H. High-fidelity synthesis with disentangled representation. In Computer Vision – ECCV 2020 (eds Vedaldi, A., Bischof, H., Brox, T. & Frahm, J.-M.). Lecture Notes in Computer Science, Vol. 12371 (Springer, Cham, 2020). https://doi.org/10.1007/978-3-030-58574-7_10

Fukushima, K. A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol. Cybern. 36, 193–202 (1980).

Riesenhuber, M. & Poggio, T. Hierarchical models of object recognition in cortex. Nat. Neurosci. 2, 1019–1025 (1999).

Higgins, I. et al. DARLA: improving zero-shot transfer in reinforcement learning. In Proceedings of the 34th International Conference on Machine Learning, PMLR. Vol. 70, 1480–1490 (ICML, 2017).

Higgins, I. et al. SCAN: Learning hierarchical compositional visual concepts. In Proceedings of the 6th International Conference on Learning Representations, ICLR (ICLR, 2018).

Achille, A. et al. Life-long disentangled representation learning with cross-domain latent homologies. In Proceedings of Advances in Neural Information Processing Systems, NeurIPS. Vol. 31, 9873–9883 (NeurIPS, 2018).

Cadieu, C. et al. A model of V4 shape selectivity and invariance. J. Neurophysiol. 98, 1733–1750 (2007).

Güçlü, U. & van Gerven, M. A. Deep neural networks reveal a gradient in the complexity of neural representations across the ventral stream. J. Neurosci. 35, 10005–10014 (2015).