Abstract

Reproducibility is essential to open science, as there is limited relevance for findings that can not be reproduced by independent research groups, regardless of its validity. It is therefore crucial for scientists to describe their experiments in sufficient detail so they can be reproduced, scrutinized, challenged, and built upon. However, the intrinsic complexity and continuous growth of biomedical data makes it increasingly difficult to process, analyze, and share with the community in a FAIR (findable, accessible, interoperable, and reusable) manner. To overcome these issues, we created a cloud-based platform called ORCESTRA (orcestra.ca), which provides a flexible framework for the reproducible processing of multimodal biomedical data. It enables processing of clinical, genomic and perturbation profiles of cancer samples through automated processing pipelines that are user-customizable. ORCESTRA creates integrated and fully documented data objects with persistent identifiers (DOI) and manages multiple dataset versions, which can be shared for future studies.

Similar content being viewed by others

Introduction

The demand for large volumes of multimodal biomedical data has grown drastically, partially due to active research in personalized medicine, and further understanding diseases1,2,3. This shift has made reproducing research findings much more challenging because of the need to ensure the use of adequate data-handling methods, resulting in the validity and relevance of studies to be questioned4,5. Even though sharing of data immensely helps in reproducing study results6, current sharing practices are inadequate with respect to the size of data and corresponding infrastructure requirements for transfer and storage2,7. As computational processing required to process biomedical data is becoming increasingly complex3, expertise is now needed for building the tools and workflows for this large-scale handling1,2. There have been multiple community efforts in creating standardized workflow languages, such as the Common Workflow Language (CWL) and the Workflow Definition Language (WDL), along with associated workflow management systems such as Snakemake8 and Nextflow9, in order to promote reproducibility10,11. However, a steep learning curve is encountered for these programming-heavy solutions, in comparison to user-friendly data-processing platforms like Galaxy, which provide both storage and computational resources but have limited features and scalability12,13,14. While sharing these computational workflows, along with metadata, is of utmost importance, they are often missing15, negatively impacting data provenance and transparency16. There is a dire need for reproducible and transparent solutions for processing and analyzing large multimodal data that are scalable while providing full data provenance.

Biomedical data can expand into a plethora of data types such as in vitro and in vivo pharmacogenomics, toxicogenomics, radiogenomics, and clinical genomics. These data are a prime example of multimodal biomedical data with a long history of sharing in the field of biomarker discovery. Preclinical pharmacogenomics involves the use of a genome-wide association approach to identify correlations between compound/treatment response and molecular profiling, such as gene expression17,18,19. In addition, omics technologies have also been utilized in toxicological profiling for identifying the effect of compound toxicity on humans20, and in radiogenomics data to uncover genomic correlates of radiation response21. These rich preclinical data are often combined with clinical genomics data generated over the past decades22 with the aim to test whether preclinical biomarkers can be translated in clinical settings to ultimately improve patient care. Given the diversity of human diseases and therapies, researchers can hardly rely on a single dataset and benefit from collecting as much data as possible from all possible sources, calling for better sharing of data that are highly standardized and processed in a transparent and reproducible way.

The generation of large volumes of data has led to a sharing paradigm in the research community, where data are more accessible and open for public use. For studies to be reproduced and investigated for integrity and generalization by other researchers, the sharing of raw and processed data is crucial. However, providing open access to data is not enough to achieve full reproducibility. To increase the value of open data, one must clearly describe how the data are being curated and made amenable for analysis, and the shared data must be findable, accessible, interoperable, and reusable, as outlined in the FAIR data principles23. These foundational principles include providing rich metadata that is detail-oriented, including a persistent unique identifier (findability), accessing (meta)data with authentication and the unique identifier using a communications protocol (accessibility), assigning (meta)data with a commonly understood format/language (interoperability), and achieving data provenance with an accessible usage-license (reusability). The Massive Analysis and Quality Control (MAQC) Society24 has been established to promote the use of a community-agreed standard for sharing multimodal biomedical data in order to achieve reproducibility in the field, such as through the FAIR principles. Therefore, when translated into practice, these principles would promote the reproducible and transparent handling and sharing of data and code, which would allow researchers to utilize and build from each other’s work and accelerate new discoveries. However, there are many genomic data maintainers and repositories that do not meet the FAIR data principles for sharing data and pipelines. A common prevalent example of this is the use of one pipeline for data processing, with no documentation providing justification for the pipeline choice, impacting the dataset released, which is often only a single version.

In order to address these issues, we developed ORCESTRA (orcestra.ca), a cloud-based platform that provides a transparent, reproducible, and flexible computational framework for processing and sharing large multimodal biomedical data. The ORCESTRA platform orchestrates data-processing pipelines in order to curate customized, versioned, and fully documented data objects, which can be extended to a multitude of data types. This includes 11 pharmacogenomic (in vitro), 3 toxicogenomic, 1 xenographic pharmacogenomic (in vivo), 1 compendium of clinical genomic (21 studies), and 1 radiogenomics data objects that can be explored for a wide range of analyses. ORCESTRA is publicly accessible via orcestra.ca.

Results

The increasing utilization and demand for big data have resulted in the need for effective data orchestration25, which is a process that involves organizing, gathering, and coordinating the distribution of data from multiple locations across a cluster of computational resources (e.g., virtual machines) with specific processing requirements. An ideal orchestration platform for handling large-scale heterogeneous data would consist of the following: (1) a defined workflow; (2) a programming model/framework25, (3) broad availability of computing infrastructure (e.g., virtual machines with storage systems), and (4) a security framework to prevent unauthorized access to data and computational resources. At the workflow level, data from different sources/lineages, including data that are not static, must be effectively managed through the definition of workflow components (tasks) that interact and rely on one another25. Moreover, a programming model should be utilized for the workflow components responsible for handling the respective data (static and dynamic), such as a batch processing model (e.g., MapReduce)25. Lastly, the utilization of a scalable computational environment, such as academic and commercial cloud-computing platforms, would allow for the management and processing of big data, providing the necessary computational resources, ability to transfer data, and monitoring of executed workflows and respective components/tasks, further enabling tracking data provenance. There exist multiple orchestration tools with various features, to our knowledge, that are currently being used for the storage, processing, and sharing of genomic data, namely Pachyderm, DNAnexus, Databricks, and Lifebit (Table 1). We opted for Pachyderm, an open-source orchestration tool for multi-stage language-agnostic data-processing pipelines, maintaining complete reproducibility and provenance through the use of Kubernetes, as it provides the following functionalities:

Programming language: Pachyderm supports creating and deploying language-agnostic pipelines across on-premise or cloud infrastructures, a feature also supported by DNAnexus, Databricks, and Lifebit.

Large dataset support: Users can upload and process large datasets through the use of the Pachyderm file system (PFS), where the data are exposed in its respective container for utilization in pipelines while being placed in an object storage (e.g., Azure Blob, AWS bucket).

Automatic pipeline triggering: Reproducibility and provenance are guaranteed via automatic pipeline triggering when existing data are modified or newly added, which results in the generation of new versions of an output data object. However, because automatic triggering requires the state of each pod within the Kubernetes cluster to be saved, there is a permanent allocation of CPU/RAM for each pod (and therefore each pipeline), which requires a user to create a cluster with potentially costly resources. The other platforms do not require permanent allocation of resources, as for example, Lifebit allows users to spin up instances on-demand to meet the computational requirements for a given pipeline.

Reprocessing: A feature that is found in Pachyderm, DNANexus, and Lifebit is the prevention of recomputation for each pipeline trigger, which comes in handy when a pipeline contains processed raw data that does not need to be reprocessed if there is a change in metadata such as an annotation file.

Docker utilization: Each pipeline can be equipped with a Docker image connected to Docker Hub for running various toolkits, which allows for simplistic pipeline updating when there are future updates to any component of the Docker image. Docker usage is also translated across the other platforms as well.

Versioning of data and pipelines with unique identifiers: Each commit, an operation for submitting and tracking changes to a data source, is supplied with a unique identifier, which is updated with each new commit (parent–child system). This allows users to track different versions of a pipeline and dataset with ease. However, with Databricks and Lifebit, this feature is partially supported, as not every pipeline and respective input/output file(s) are provided with a unique identifier, even when data are updated through commits.

Parallelism support: A pipeline can be parallelized via a constant or coefficient strategy in Pachyderm using workers, which is useful for workloads with large computational requirements. When a constant is set Pachyderm will create the exact number of workers specified (e.g., constant: 5; 5 workers), that will parallelize across nodes in the cluster. The coefficient will result in Pachyderm creating a number of workers based on the number of nodes available (multiple nodes), which will also specify the number of workers per node (e.g., coefficient: 2.0; 20 nodes; 40 workers; 2.0 workers per node). The other platforms also support parallelization, including automatic parallelization of samples across instances.

Data-versioning system: Pachyderm provides direct GitHub integration for data versioning, which enables users to track changes at the file level and submit updates to Pachyderm through commits triggered through webhooks on GitHub. In addition, this also provides users with the ability to publicly view, track, and share all updates made to a pipeline or file connected to Pachyderm with ease.

Open access: Pachyderm provides a free and open-source version of the tool that contains all the functionalities required to develop a platform ensuring transparent and reproducible processing of multimodal data.

Despite these advantages, the choice of Pachyderm is not without compromises. We list below the functionalities that Pachyderm is lacking but would have been beneficial to develop our platform:

Direct mounting of the data: Pachyderm (v.1.9.3) does not allow for direct mounting of data from a cloud storage system (e.g., bucket) to a Pachyderm repository. Data must be manually transferred to the tool’s own file system using the Pachyderm put file command, resulting in essentially an additional copy of the data within a cloud environment. Databricks and Lifebit enable decreasing computation time and cost by not copying data into a file system for it to be used by the platform. This is important when large data sizes will be used in an analysis, which allows a user to simply store their data in a bucket/blob storage account, and mount it to the platform of interest, giving the user the ability to also use the data with other platforms or cloud services without having to repeatedly copy it in an inefficient manner.

Cost-efficiency: Pachyderm utilizes VM’s through a Kubernetes cluster of deployment on a cloud environment, which are costly to keep running indefinitely. Therefore, utilizing Pachyderm on a cloud infrastructure impacts cost-efficiency, in comparison to an on-premise high-performance computing (HPC) infrastructure. A notable feature that is supported by Lifebit, is cost-efficiency through low-priority instance utilization on a cloud provider, allowing for users to execute large-scale analyses at a reduced cost.

Resource allocation: Pachyderm requires persistent RAM/CPU allocation for each pipeline within the Kubernetes cluster, even after a pipeline is successfully executed, which permits automatic pipeline triggering. Thus, an increased amount of computational resources (VM’s scaled up/out) may be required for specific pipelines, which also impacts cost-efficiency.

The ORCESTRA platform

Building on the strengths of the Pachyderm orchestration tool, we have developed ORCESTRA, a cloud-based platform for data sharing and processing of biomedical data based on automation, reproducibility, and transparency. ORCESTRA allows users to create a custom data object that stores molecular profiles, perturbation (chemical and radiation) profiles, and experimental metadata for the respective samples and patients, allowing for integrative analysis of the molecular and perturbation and clinical data (Fig. 1). The platform utilizes datasets from the largest biomedical consortia, including 17 curated data objects containing genomics, pharmacological, toxicological, radiation, and clinical data (Supplementary Table 1). The data objects can accommodate all types of molecular profile data, however, ORCESTRA currently integrates gene expression (RNA-sequencing, microarray), copy number variation, mutation, and fusion molecular data. For RNA-seq data, users can select a reference genome of interest, a combination of quantification tools and their respective versions, along with reference transcriptomes from two genome databases (Ensembl, Gencode) to generate custom RNA-seq expression profiles for all of the cell lines in the dataset. Therefore, each data object will be generated through a custom orchestrated Pachyderm pipeline path, where each piece of input data, pipeline, and output data option is tracked and given a unique identifier to ensure the entire process is completely transparent and reproducible. To ensure data-object generation is fully transparent and that provenance is completely defined, each data object is automatically uploaded to Zenodo and given a public DOI, where the DOI is shared via a persistent web page that possesses a detailed overview of the data that each DOI-associated data object contains and how it was generated. This includes publication sources, treatment sensitivity information and source, raw data source, exact pipelines parameters used for the processing tools of choice, and URLs to reference genomes and transcriptomes used by the tool(s). Moreover, a BioCompute Object is automatically generated alongside each data object, which is a standardized record supported by the U.S. Food and Drug Administration (FDA) for communicating bioinformatic pipelines and verifying/validating them in order to aid in the reproducibility of experiments26. This includes sharing information such as pipeline steps, data input/output sources, and software utilized with their respective versions and parameters. In addition, release notes are also provided by ORCESTRA where the number of samples, treatments, sensitivity experiments, and molecular profile data are tracked between versions of a dataset, allowing users to identify changes between each new data update that were released from the respective consortium and pushed to the platform. This metadata page gets automatically sent to each user via email, providing users with one custom page that hosts all of the information required to understand how the data object was generated. Therefore, all of the data used in the data object is shared in a transparent manner, where researchers can identify the true origins of all data used with confidence and effectively reproduce results.

Data-object generation

ORCESTRA comes with a web-application interface allowing users to interact with the data-processing and data-sharing layers. Users can search existing data objects in the “Search/Request” view by filtering existing data objects with the “data object Parameters” panel. Users can filter existing data objects by selecting datasets with associated drug sensitivity releases, genome references, RNA-seq transcriptomes, RNA-seq processing tools with respective versions, which associates with other respective DNA data types (mutation or CNV) and RNA data types (microarray or RNA-seq). Changes in the parameter selections trigger the web app to submit a query request to a MongoDB database which returns a filtered list of data objects (Fig. 2). The data-object table is then re-rendered with an updated list of data objects. This allows users to search through existing data objects to determine if a data object that satisfies users’ parameter selections already exists, preventing recomputation. Information about the datasets and tools used to generate a data object can be viewed by clicking on a data-object name and navigating to its data-object metadata web page. Users can obtain information such as associated publications, links to the raw drug sensitivity and molecular profile data as well as a Zenodo DOI. In addition, the individual data-object view provides users with the option to download the data object of choice directly from the view.

The web-application layer receives pipeline requests under the form of JavaScript Object Notation (JSON) file and updates the ORCESTRA database with each data-object digital object identifier (DOI) and commit ID. The orchestration functionality scans for new pipeline requests and executes them to generate a versioned data object, which is uploaded to Zenodo to retrieve a DOI in the data-sharing layer.

Users can request a customized data object in the “Search/Request” view by turning the “Request data object” toggle on. This action reconfigures the dropdown options in the “data object Parameters” panel to be in request mode, and displays, on the “Summary” panel, two text input fields for entering a custom name for the data object and a user’s email to receive a notification upon data-object pipeline completion, with the accompanied Zenodo DOI and custom ORCESTRA metadata page link. Pachyderm continuously scans for a new request from the web-app, which will automatically trigger the respective pipelines to build the custom data object, while storing a unique ORCESTRA ID, Pachyderm pipeline commit ID, and Zenodo DOI into the MongoDB database, which increases the level of data provenance and reproducibility, as each data object can be identified through three unique identifiers after creation (Fig. 2). The data-object filtering process as described above continues to function as users select the request parameters, which displays existing data object(s) that satisfy users’ parameter selections. Upon selecting all the required parameters, the “Submit Request” button becomes active for users to submit the pipeline request.

Data-object usage

The data objects generated by ORCESTRA can be utilized to execute large-scale analyses for advancing biomedical research (Supplementary Methods). The platform harnesses various open-source R Bioconductor packages within its Pachyderm workflows in order to create the data objects for each biomedical data type offered. These packages include PharmacoGx for pharmacogenomics data27, ToxicoGx for toxicogenomics data20, Xeva for xenographic pharmacogenomics data19, MetaGxPancreas for clinical data22, and RadioGx for radiogenomics data28. The GRAY, UHNBreast, CCLE, and GDSC2 pharmacogenomic data objects were utilized to showcase the strong association between ERBB2 mRNA expression and Lapatinib drug response (AAC) across all datasets (Supplementary Fig. 1)29,30. In addition, the consistency of Lapatinib response was investigated between CTRPv2 and GDSC1/2, where a stronger consistency was observed between CTRPv2 and GDSC2, as they use the same pharmacological assay (Cell Titre Glo), in comparison to CTRPv2 and GDSC1 (Supplementary Fig. 2)31. To highlight drug compound toxicity, the Open TG-GATEs Human data object was used to identify top differentially expressed genes for “most drug-induced liver injury” (DILI) drug acetaminophen and “no DILI” drug chloramphenicol on primary human hepatocytes (Supplementary Fig. 3)20. For xenographic pharmacogenomics, the Novartis patient-derived xenograft encyclopedia (PDXE) data object expressed a strong correlation between trastuzumab response and ERBB2 expression from breast cancer patient-derived xenograft models (Supplementary Fig. 4)19. The prognostic value of the Pancreatic Cancer Overall Survival Predictor (PCOSP) and clinical models was investigated across pancreatic cancer patients in the MetaGxPancreas data object (Supplementary Fig. 5)32. Lastly, for radiogenomics, the Cleveland data object highlighted the correlation between gene expression and radiosensitivity (AUC—area under the fitted radiation survival curve) across tissue types (Supplementary Fig. 6)28. All analyses/figures can be reproduced via a Code Ocean custom compute capsule (https://codeocean.com/capsule/9215268/tree), which hosts the data objects, respective code, and generated figures, allowing for full transparency.

Data-object metrics

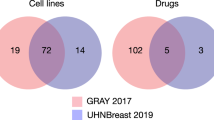

The platform provides several usage metrics for users. These metrics can be accessed through “Home”, “Statistics”, and “Request Status” views. The “Home” view provides an overview of currently available datasets, tools and references to generate data objects, most downloaded data objects, and a number of pending or in-process data-object requests. The “Statistics” view provides a visualized data-object popularity ranking, along with a plot of the number of cell lines, drugs, and genes for the canonical data objects, including intersection, which can be accessed by clicking the “View Statistics” button in the “Home” view. The “Request Status” view displays a tabulated list of data-object requests that are either “pending” (the request has been submitted and saved, but has not been processed in Pachyderm), or “in-process” (the request has been submitted and is processed in Pachyderm).

User accounts for data-object tracking

The platform offers users the option to register for an account with a valid email address. Registered users are able to select existing data objects in the “Search/Request” view and save them as their “favorites” which can be accessed in the “User Profile” view. However, the web application keeps track of data-object requests submitted by users based on their email addresses even without registration. These data objects are automatically added to a user’s favorite data objects and can be viewed in the “User Profile” view.

External data uploading and sharing

The platform enables users to request the processing of their own research data into a curated dataset through the “Data Submission” feature. This feature is accessible only to the registered users. In order to submit their data, the users are asked to complete the data submission form, with the help of data submission guideline documentation and sample data files provided in the documentation section. Upon submitting the request, an email notification is sent to ORCESTRA administrator. The administrator then verifies the submitted data and configures Pachyderm to process the submitted data.

Platform security

In order to prevent unauthorized access to our computational resources, including data and virtual machines, security measures have been implemented throughout our Azure ecosystem. Because Pachyderm resides within a Kubernetes cluster, it is important to prevent outside access to the Kubernetes API server, which was executed through Azure Active Directory. This enforces role-based access control (RBAC) to the cluster, allowing selective access to it. RBAC is also extended to the storage solutions utilized by ORCESTRA, allowing us to monitor data that is imported and accessed while preventing unauthorized access.

Discussion

The high-dimensionality, complexity, and scale of multimodal data present unprecedented challenges for researchers in the biomedical field, in regard to their ability to effectively manage, track, and process the data. The nature of heterogeneous and complex data negatively impacts data provenance, through incomplete or no accompaniment of metadata for a dataset, resulting in the uncertainty of a data lineage33,34,35. Because the granularity of metadata is a determinant of the value of a dataset36, it should provide a rich description of dataset content, following the FAIR data principles, which includes information about dataset origin, how it was generated, if there were any modifications that were made to it from precedent versions, and what these modifications were23,37,38. When the FAIR data principles are not met, issues with reproducibility in the biomedical sciences follow, where data are either not shared or results/estimates and claims cannot be checked for correctness. However, datasets published online, including ones that reside in repositories and from journals, are often not accompanied by sufficient metadata39. In the field of genomics, issues with metadata often include mislabelling or misannotation of data (e.g., incorrect identification numbers), improper data characterization (e.g., mapping files to respective samples and protocols), and inconsistency in the way metadata are presented (nonuniform structure used across consortia)16. Provenance also extends to the computational workflows that are developed to process datasets2, as sharing relevant source code is often not provided15 along with relevant documentation about the workflow, such as in graphical-user interface (GUI) based systems like Galaxy, affecting the ability to reproduce results2. In addition, data maintainers and consortia, such as the Cancer Cell Line Encyclopedia (CCLE)40 and the Genomics of Drug Sensitivity in Cancer (GDSC), often only process the dataset using one pipeline that they believe is the most suitable, without documenting supporting evidence as to why the chosen processing pipeline was selected over other competing ones in the field41,42. This issue is also present in other data types such as xenographic or metagenomics data, where the molecular data are processed and normalized using only one pipeline22,43. Therefore, only a single version of the dataset is released, which makes it difficult for other researchers to perform a diverse set of analyses that require the use of different processing pipelines on the dataset. Lastly, it is important to note that datasets evolve and are therefore not static, as new data are added and respectively depreciated, which further highlights the need for transparent data-sharing practices, especially at the file level where updates can be easily identified.

There are multiple data portals created for accessing and sharing biomedical data, but with limitations in regard to reproducibility (Supplementary Table 2). Below, are sharing practices that are adopted across various data types, such as pharmacogenomics, toxicogenomics, radiogenomics, xenographic pharmacogenomics, and clinical genomics data:

Pharmacogenomics

The Genomic Data Commons Data Portal (NIH/NCI GDC) hosts raw data for the Cancer Cell Lines Encyclopedia (CCLE) from the Broad Institute, including RNA, whole-exome, and whole-genome sequencing data, allowing users to select and download the data type(s) of interest. Obtaining the data can be done through direct download or their GDC Data Transfer Tool by providing a manifest file that possesses the unique identifiers (UUID) of each file, which also allow users to locate the files again through the portal, along with their corresponding run, analysis, and experimental metadata. This is advantageous, as all the raw data (public and controlled access), for both datasets, are located within one portal and can be accessed in an efficient manner. However, the recent addition of new CCLE data (e.g., additional RNA-seq cell lines)41, is found on the European Nucleotide Archive (ENA), but not on GDC, resulting in data source inconsistency that becomes difficult to manage and follow for users. Current and previous versions of other CCLE data (i.e., annotation, drug response) are hosted on a Broad Institute portal, with no release notes or documentation present with each version, forcing researchers to manually identify changes within each file after every release. GRAY, a dataset generated by Dr. Joe Gray’s lab at the Oregon Health and Science University, has had three updates with raw data hosted on NCBI, with drug response and annotation data hosted on SYNAPSE, DRYAD, and/or the papers supplementary section44,45,46. In addition, drug-response data can also be found on the LINCS data portal. Because each version of the dataset is associated with a different respective paper, the data are scattered among various repositories, which makes it challenging to keep track of each source, and for each source to ensure that the data remain readily available, as one failed link would make it difficult for a researcher to reproduce any results. However, for the GRAY dataset, NCBI provides detailed information about the methodology used for the experiments, SYNAPSE provides a wiki and contact source for the dataset and a provenance tracker for each file that is uploaded, and DRYAD stores each publications data as a package organized with subsequent descriptions to keep data organized. A prominent example in effective data-sharing practices is DepMap (depmap.org)47, which provides a portal to download molecular and pharmacological data from a variety of consortia, with an interface that allows users to dive into the multiple data releases for a given dataset, which is accompanied by descriptive metadata such as associated publications and file-level descriptions. This provides users with the ability to download a dataset directly from a source or combine them together to form a custom dataset, all while being able to compare different updates/versions in an interactive manner. However, the portal does not allow users to select different processing pipelines and lacks details regarding the pipelines used for some of the processed data hosted, such as molecular data (e.g., genomic tools used), which highlights a need for increased granularity in the portal.

Toxicogenomics

The Life Science Database (LSDB) Archive is a database that hosts datasets by Japanese researchers (https://dbarchive.biosciencedbc.jp/), such as the TG-GATE toxicogenomics dataset48. The database provides rich metadata for users such as a DOI and clickable sections that provide granular details about each file in the dataset, which includes a description of the file contents and file attributes (e.g., data columns and respective descriptions for each column). In addition, the database allowed for TG-GATE to provide a timeline of updates to the dataset, where data corrections are posted with accompanying corrected files and a description of the update, which allows maintainers to be transparent with users about the dataset lineage. However, even though the maintainers for TG-GATE have indicated that the dataset was updated, detailed file-level changes are not provided, along with the processing pipelines and/or information regarding how the data was generated/processed into their resulting formats.

Xenographic and radiogenomics

The largest datasets for patient-derived tumor xenograft and radiogenomics studies are available through supplementary materials attached to their scientific publications21,43. These supplementary data provide users with information about the methods used to generate the data; however, access is dependent on the journal itself, which raises issues regarding the potential of broken data links. In addition, the amount of data that can be added to a publication via a supplementary section may be limited due to journal restrictions, which increases the likelihood of files being distributed across other data-sharing platforms (e.g., SYNAPSE), increasing the difficulty in locating and keeping track of dataset updates, or resulting in a reliance of contacting authors to obtain a complete dataset that cannot be otherwise shared via the journals web interface.

Clinical genomics

Over the years, clinical genomics data has been stored and shared across a wide range of consortia such as NCBI (GEO/EGA) and/or as supplementary material to a publication. However, this inconsistency has led to the development of several data compendia to consolidate the data for transparent mining/managing, sharing, and analysis, such as Oncomine49, MultiAssayExperiments R package for multiple experimental assays50, and curatedData R packages for molecular profile analysis51. In addition, the MetaGx R packages were developed to allow users to retrieve a compendium of transcriptomic data and standardized metadata from a wide array of studies and cancer types (pancreas, breast, ovarian), allowing for integrative analysis of the data for biomarker discovery22.

In order to address these issues of primary data acquisition and sharing pertaining to multiple studies across a range of biomedical data types, ORCESTRA harnesses a flexible framework that allows for sharing the respective data in a transparent manner. More specifically, all data sources and associated publications are clearly communicated to users for a specified data object, in order to limit the need to execute additional source searches outside of the platform. In addition, all pipelines are shared with users via a direct GitHub link on the metadata web pages, ensuring that users can re-create the objects themselves from the respective data sources. This also includes the Docker image used for each data-object creation, ensuring that computational environments remain consistent for data-object generation outside of the platform, if needed. All data objects also have a persistent identifier (DOI), allowing them to be referenced back to one source, increasing data transparency and provenance, unlike other consortia. Lastly, the platform provides credit and data disclaimers to all primary data generators, which are packaged with data sources, respective pipeline code, associated publications, and persistent identifiers, into one package/environment that is beneficial, as it promotes the FAIR principles in the platform. However, it must be noted that one platform solution alone will not be sufficient to solve all issues with data sharing, which is still facing multiple sociopolitical challenges in the scientific community. ORCESTRA provides a space that enables the unification of all primary data sources across multiple studies into one location, which is a step forward in standardizing the manner in which these data are processed and shared within the research community.

To encourage user uptake, we plan on regularly updating the platform with additional datasets and data types. In addition, we plan on automating the manner in which users can upload their own data for processing through standardized processing pipelines, which will further limit human intervention for generating data objects. Due to the open-source nature of the platform, we hope to invoke more community involvement by allowing users to run local instances of the platform to process their own data in a reproducible and transparent manner using built-in pipelines. Lastly, we aim to implement a metrics system to keep track of data objects used in future publications, in order to demonstrate its impact in the research community.

In conclusion, the ORCESTRA platform provides a new paradigm for sharing ready-to-analyze multimodal data while ensuring full transparency and reproducibility of the curation, processing, and annotation processes. ORCESTRA provides the data provenance and versioning tools necessary to maximize the reusability of data, a cornerstone of Open Science.

Methods

In order for the platform to be as transparent as possible, it harnesses an architecture with three distinct layers that not only works independently to process and interpret precedent data, but also have the capacity to scale (Fig. 3).

The web-application layer allows users to request custom data objects, which are generated through Pachyderm in a Kubernetes cluster within the data-processing layer. Each versioned data object is automatically pushed to the data-sharing layer and uploaded to Zenodo to obtain a DOI. Data objects that have already been processed result in the immediate sharing of custom metadata web pages with users via email.

Web-app layer

The first layer contains the web application which was developed using a Node.js API and React front-end with MongoDB as a database. The layer provides the user with an interaction point to the ORCESTRA platform, allowing users to first select the data type they wish to explore, either Pharmacogenomics; Toxicogenomics; Xenographic pharmacogenomics; Radiogenomics; or Clinical genomics. They can then search for existing data objects, request a new data object by entering pipeline parameters, view data-object request status, and register a personal account to save existing data objects of choice.

Data-processing layer

The second data-processing layer encompasses a Kubernetes cluster on Microsoft Azure that hosts Pachyderm, which utilizes Docker images for running R packages. All of the RNA-seq raw data have been pre-processed with Kallisto and Salmon Snakemake pipelines using an HPC environment, and subsequently pushed to assigned data repositories on Pachyderm, allowing for specified selection from the web-app (transcriptome and tool version). Microarray, cnv, mutation, and fusion data are either processed directly with Pachyderm due to low computational requirements or aggregated into the data objects from public sources. The Pachyderm pipelines aggregate repositories that host data generated on an HPC environment or on GitHub (https://github.com/BHKLAB-Pachyderm) into a Docker image that builds a data object based on user specifications (e.g., RNA-seq data processed by Kallisto v.0.46.1, inclusion of only CNV data) (Fig. 4). The GitHub hosted files can be viewed at the file level for changes and edited which automatically triggers the Pachyderm pipeline with the new modifications to produce a new data object. A unique feature of Pachyderm is the prevention of reprocessing computed data, such as where an update of RNA-seq annotations will not trigger the reprocessing of thousands of drug-response data, which reduces computation time. In addition, Pachyderm can be turned on/off by shutting on/off the computational resources that it utilizes in a cloud environment (e.g., virtual machines). Therefore, ORCESTRA can control costs by preventing the need for the resources to be constantly running, as it will only generate data objects when needed, including requests sent in by users. The ORCESTRA costs for both virtual machines and storage per year on average is collectively ~$2800 CAD. Virtual machines contribute to ~$1300 CAD of this cost, while storage contributes to ~$1500 CAD.

Pipelines are located in ORCESTRAs GitHub page (https://github.com/BHKLAB-Pachyderm), which are executed by running pachtl create-pipeline on their respective JSON file. Each JSON file has specified inputs that are accessed by the pipeline, along with a command section that runs a given script. These scripts are responsible for data generation, which is output into a storage container on Azure. Pipeline repositories are denoted by a “pipelines” suffix in the repository name.

Data-sharing layer

Each generated data object enters the third data-sharing layer where the data object gets automatically uploaded to an online data-sharing repository known as Zenodo, with a DOI so that the data object can be given a persistent location on the internet to be uniquely identified. The generated DOI is then associated with a custom metadata web page that is generated based on the contents of the data object. A BioCompute Object is also generated alongside the data objects, which are automatically deposited to Zenodo, where their DOI is also shared via the custom metadata web pages. Data disclaimers, usage policies, and credits to the original data generators are communicated to users in order to ensure the data is accessed and shared in an acceptable manner.

In addition to publicly sharing curated datasets, the platform leverages Zenodo’s access control feature to enable users to keep their curated dataset access restricted. Users may choose to keep the dataset “private” when submitting their own data for curation through the Data Submission feature. When pachyderm uploads the processed dataset to Zenodo by using their API, it adds a set of parameters to the upload request to keep access right of the uploaded data to the “restricted” status. The uploaded data with “restricted” status is only accessible upon request. Similarly, on the ORCESTRA web application, the information about the private dataset is only accessible to the user who submitted the data. The user may choose to grant access to view information about the private dataset by using the shareable link generation feature that is available on the private dataset page. Finally, the platform offers users an ability to publish the dataset by clicking the “Publish Dataset” button on the private dataset page. Upon receiving this request, the web application updates the database to indicate that the visibility of the dataset is “public”, and executes a series of API requests to Zenodo to change the access right to “open”, making the dataset publicly accessible.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The GRAY dataset used in this study has been deposited at https://doi.org/10.5061/dryad.03n60 and is provided under CC0 1.0 Universal (CC0 1.0) Public Domain Dedication license. The CCLE dataset used in this study has been deposited at https://data.broadinstitute.org/ccle_legacy_data and is provided under the Creative Commons Attribution 4.0 license. The CTRPv2 dataset used in this study has been deposited at https://portals.broadinstitute.org/ctrp and is provided under the Creative Commons Attribution 4.0 license. The gCSI dataset used in this study has been deposited at http://research-pub.gene.com/gCSI_GRvalues2019/ and is provided under the Creative Commons BY 4.0 license. The FIMM dataset used in this study has been deposited at https://doi.org/10.1038/nature20171 and is provided under the Creative Commons BY 4.0 license. The GDSC dataset used in this study has been deposited at https://www.cancerrxgene.org and has the following data-usage policy: https://depmap.sanger.ac.uk/documentation/data-usage-policy/. The UHNBreast dataset used in this study has been deposited at https://codeocean.com/capsule/6718332/ and is provided under the Creative Commons BY 4.0 license. The Open TG-GATEs dataset used in this study has been deposited at Lifescience Database Archive: https://dbarchive.biosciencedbc.jp/en/open-tggates/download.html and are provided under Creative Commons Attribution-Share Alike 2.1 Japan. The EMEXP2458 dataset used in this study has been deposited at https://www.ebi.ac.uk/arrayexpress/experiments/E-MEXP-2458/ and is provided under permissive license at https://www.ebi.ac.uk/arrayexpress/help/FAQ.html#data_restrictions. The DrugMatrix dataset used in this study has been deposited at (diXa Data Warehouse—www.dev.ebi.ac.uk/fg/dixa/—study ID DIXA-033) and is attributed to the National Toxicology Program and may be copied and distributed without permission. The PDXE dataset used in this study has been deposited at https://pubmed.ncbi.nlm.nih.gov/26479923/ and may be utilized under NCBI and author guidelines. The MetaGxPancreas dataset used in this study has been deposited at http://bioconductor.org/packages/release/data/experiment/html/MetaGxPancreas.html and is provided under Creative Commons Attribution 4.0 International License. All of the data objects are publicly available on ORCESTRA (orcestra.ca) via dedicated documented web pages, which include respective digital object identifiers (DOI) and Zenodo links for each data object generated. Data for the case studies in the manuscript can be accessed in a custom compute capsule on Code Ocean at https://codeocean.com/capsule/9215268/tree.

Code availability

All of the code used by ORCESTRA is publicly available on GitHub via the Apache 2.0 license: https://github.com/BHKLAB-Pachyderm. All analyses performed using the data objects can be reproduced through a custom compute capsule on Code Ocean: https://codeocean.com/capsule/9215268/tree.

References

Madduri, R. et al. Reproducible big data science: a case study in continuous FAIRness. PLoS ONE 14, e0213013 (2019).

Kanwal, S., Khan, F. Z., Lonie, A. & Sinnott, R. O. Investigating reproducibility and tracking provenance—a genomic workflow case study. BMC Bioinforma. 18, 337 (2017).

Toga, A. W. & Dinov, I. D. Sharing big biomedical data. J. Big Data 2, 1–12 (2015).

Huang, Y. & Gottardo, R. Comparability and reproducibility of biomedical data. Brief. Bioinform. 14, 391–401 (2013).

Patil, P., Peng, R. D. & Leek, J. T. A visual tool for defining reproducibility and replicability. Nat. Hum. Behav. 3, 650–652 (2019).

Finak, G. et al. DataPackageR: reproducible data preprocessing, standardization and sharing using R/Bioconductor for collaborative data analysis. Gates Open Res. 2, 31 (2018).

Suthakar, U., Magnoni, L., Smith, D. R., Khan, A. & Andreeva, J. An efficient strategy for the collection and storage of large volumes of data for computation. J. Big Data 3, 21 (2016).

Köster, J. & Rahmann, S. Snakemake–a scalable bioinformatics workflow engine. Bioinformatics 28, 2520–2522 (2012).

Di Tommaso, P. et al. Nextflow enables reproducible computational workflows. Nat. Biotechnol. 35, 316–319 (2017).

Goble, C. et al. FAIR computational workflows. Data Intell. 2, 108–121 (2020).

Kulkarni, N. et al. Reproducible bioinformatics project: a community for reproducible bioinformatics analysis pipelines. BMC Bioinforma. 19, 349 (2018).

Ahmed, A. E. et al. Managing genomic variant calling workflows with Swift/T. PLoS ONE 14, e0211608 (2019).

Bourgey, M. et al. GenPipes: an open-source framework for distributed and scalable genomic analyses. https://doi.org/10.1101/459552 (2019).

Afgan, E. et al. The Galaxy platform for accessible, reproducible and collaborative biomedical analyses: 2016 update. Nucleic Acids Res. 44, W3–W10 (2016).

Mangul, S. et al. Systematic benchmarking of omics computational tools. Nat. Commun. 10, 1393 (2019).

Learned, K. et al. Barriers to accessing public cancer genomic data. Sci. Data 6, 98 (2019).

Sanoudou, D., Mountzios, G., Arvanitis, D. A. & Pectasides, D. Array-based pharmacogenomics of molecular-targeted therapies in oncology. Pharmacogenomics J. 12, 185–196 (2012).

T. P., A., M., S. S., Jose, A., Chandran, L. & Zachariah, S. M. Pharmacogenomics: the right drug to the right person. J. Clin. Med. Res. 1, 191–194 (2009).

Mer, A. S. et al. Integrative pharmacogenomics analysis of patient-derived xenografts. Cancer Res. 79, 4539–4550 (2019).

Nair, S. K. et al. ToxicoDB: an integrated database to mine and visualize large-scale toxicogenomic datasets. Nucleic Acids Res. 48, W455–W462 (2020).

Yard, B. D. et al. A genetic basis for the variation in the vulnerability of cancer to DNA damage. Nat. Commun. 7, 1–14 (2016).

Gendoo, D. M. A. et al. MetaGxData: clinically annotated breast, ovarian and pancreatic cancer datasets and their use in generating a multi-cancer gene signature. Sci. Rep. 9, 8770 (2019).

Wilkinson, M. D. et al. The FAIR guiding principles for scientific data management and stewardship. Sci. Data 3, 160018 (2016).

Shi, L. et al. The international MAQC Society launches to enhance reproducibility of high-throughput technologies. Nat. Biotechnol. 35, 1127–1128 (2017).

Barika, M. et al. Orchestrating big data analysis workflows in the cloud: research challenges, survey, and future directions. ACM Comput. Survey 52, 1–41 (2019).

Simonyan, V., Goecks, J. & Mazumder, R. Biocompute objects—a step towards evaluation and validation of biomedical scientific computations. PDA J. Pharm. Sci. Technol. 71, 136–146 (2017).

Smirnov, P. et al. PharmacoGx: an R package for analysis of large pharmacogenomic datasets. Bioinformatics 32, 1244–1246 (2016).

Manem, V. S. K. et al. Modeling cellular response in large-scale radiogenomic databases to advance precision radiotherapy. Cancer Res. 79, 6227–6237 (2019).

Haibe-Kains, B. et al. Inconsistency in large pharmacogenomic studies. Nature 504, 389–393 (2013).

Safikhani, Z. et al. Revisiting inconsistency in large pharmacogenomic studies. F1000Res. 5, 2333 (2016).

Hatzis, C. et al. Enhancing reproducibility in cancer drug screening: how do we move forward? Cancer Res. https://doi.org/10.1158/0008-5472.CAN-14-0725 (2014).

Sandhu, V. et al. Meta-analysis of 1,200 transcriptomic profiles identifies a prognostic model for pancreatic ductal adenocarcinoma. JCO Clin. Cancer Inform. 355602. https://doi.org/10.1101/355602 (2019).

Razick, S. et al. The eGenVar data management system–cataloguing and sharing sensitive data and metadata for the life sciences. Database 2014, bau027 (2014).

Hu, R., Yan, Z., Ding, W. & Yang, L. T. A survey on data provenance in IoT. World Wide Web J. Biol. https://doi.org/10.1007/s11280-019-00746-1 (2019).

Sivarajah, U., Kamal, M. M., Irani, Z. & Weerakkody, V. Critical analysis of big data challenges and analytical methods. J. Bus. Res. 70, 263–286 (2017).

Corpas, M., Kovalevskaya, N. V., McMurray, A. & Nielsen, F. G. G. A FAIR guide for data providers to maximise sharing of human genomic data. PLoS Comput. Biol. 14, e1005873 (2018).

Wise, J. et al. Implementation and relevance of FAIR data principles in biopharmaceutical R&D. Drug Discov. Today 24, 933–938 (2019).

Boeckhout, M., Zielhuis, G. A. & Bredenoord, A. L. The FAIR guiding principles for data stewardship: fair enough? Eur. J. Hum. Genet. 26, 931–936 (2018).

Kanduri, C., Domanska, D., Hovig, E. & Sandve, G. K. Genome build information is an essential part of genomic track files. Genome Biol. 18, 175 (2017).

Barretina, J. et al. The Cancer Cell Line Encyclopedia enables predictive modelling of anticancer drug sensitivity. Nature 483, 603–607 (2012).

Ghandi, M. et al. Next-generation characterization of the Cancer Cell Line Encyclopedia. Nature 569, 503–508 (2019).

Haverty, P. M. et al. Reproducible pharmacogenomic profiling of cancer cell line panels. Nature 533, 333–337 (2016).

Gao, H. et al. High-throughput screening using patient-derived tumor xenografts to predict clinical trial drug response. Nat. Med. 21, 1318–1325 (2015).

Hafner, M. et al. Quantification of sensitivity and resistance of breast cancer cell lines to anti-cancer drugs using GR metrics. Sci. Data 4, 170166 (2017).

Daemen, A. et al. Modeling precision treatment of breast cancer. Genome Biol. 14, R110 (2013).

Heiser, L. M. et al. Subtype and pathway specific responses to anticancer compounds in breast cancer. Proc. Natl Acad. Sci. USA 109, 2724–2729 (2012).

Tsherniak, A. et al. Defining a cancer dependency map. Cell 170, 564–576.e16 (2017).

Igarashi, Y. et al. Open TG-GATEs: a large-scale toxicogenomics database. Nucleic Acids Res. 43, D921–D927 (2015).

Rhodes, D. R. et al. Oncomine 3.0: genes, pathways, and networks in a collection of 18,000 cancer gene expression profiles. Neoplasia 9, 166–180 (2007).

Ramos, M. et al. Software for the integration of multiomics experiments in bioconductor. Cancer Res. 77, e39–e42 (2017).

Ganzfried, B. F. et al. curatedOvarianData: clinically annotated data for the ovarian cancer transcriptome. Database 2013, bat013 (2013).

Acknowledgements

This project is supported by the Canadian Institutes of Health Research (CIHR), under the frame of ERA PerMed. The implementation of the ORCESTRA platform has been partially supported by Genome Canada and Ontario Genomics via a Bioinformatics and Computational Biology (B/CB) grant and the Natural Sciences and Engineering Research Council of Canada Natural Sciences and Engineering Research Council of Canada. We thank Helia Mohammadi (Microsoft Canada), Stephanie Chicoine (Microsoft Canada), Nicole Mumford (AirGate) and Michael Masters (Pachyderm) for helping setting up the Pachyderm on Microsoft Azure and optimizing the cloud services.

Author information

Authors and Affiliations

Consortia

Contributions

A.M. created the Pachyderm pipelines, deployed the Azure Kubernetes environment, and wrote the manuscript. P.S. contributed to the Pachyderm pipelines and code for processing gCSI and GDSC data. M.N. contributed the front and back-end of the web application and the manuscript writing. Z.S. provided the code for processing the GRAY and gCSI data objects. C.E. assisted with the web-application front-end, updated R packages used by the platform for compatibility, and contributed to the interpretation of the results (data objects). H.S. assisted with incorporating the mutation profiles for the data objects. S.K.N., A.S.M., and I.S. contributed to the interpretation of the results (data objects). C.H. and G.B. contributed to the front-end of the web application. R.K. and M.S. provided guidance for ensuring the platform meets FAIR data-sharing principles. E.L., Y.Y., S.M., and M.H. contributed data for the gCSI data object. B.H.-K. designed and supervised the work.

Corresponding author

Ethics declarations

Competing interests

B.H.K. is a shareholder and paid consultant for Code Ocean Inc. Code Ocean Inc. did not participate in the design and the execution of the study. All remaining authors declare no competing interests.

Additional information

Peer review information Nature Communications thanks Chancey Christenson, Sarah Harris and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mammoliti, A., Smirnov, P., Nakano, M. et al. Orchestrating and sharing large multimodal data for transparent and reproducible research. Nat Commun 12, 5797 (2021). https://doi.org/10.1038/s41467-021-25974-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-021-25974-w

This article is cited by

-

Reusability report: Evaluating reproducibility and reusability of a fine-tuned model to predict drug response in cancer patient samples

Nature Machine Intelligence (2023)

-

Multimodal data fusion for cancer biomarker discovery with deep learning

Nature Machine Intelligence (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.