Abstract

Being fundamentally a non-equilibrium process, synchronization comes with unavoidable energy costs and has to be maintained under the constraint of limited resources. Such resource constraints are often reflected as a finite coupling budget available in a network to facilitate interaction and communication. Here, we show that introducing temporal variation in the network structure can lead to efficient synchronization even when stable synchrony is impossible in any static network under the given budget, thereby demonstrating a fundamental advantage of temporal networks. The temporal networks generated by our open-loop design are versatile in the sense of promoting synchronization for systems with vastly different dynamics, including periodic and chaotic dynamics in both discrete-time and continuous-time models. Furthermore, we link the dynamic stabilization effect of the changing topology to the curvature of the master stability function, which provides analytical insights into synchronization on temporal networks in general. In particular, our results shed light on the effect of network switching rate and explain why certain temporal networks synchronize only for intermediate switching rate.

Similar content being viewed by others

Introduction

Synchronization is critical to the function of many interconnected systems1, from physical2 to technological3 and biological4. Many such systems need to synchronize under the constraint of limited resources. For instance, energy dissipation is required to couple molecular biochemical oscillators through oscillator–oscillator exchange reactions, which are responsible for synchronization in systems such as the cyanobacterial circadian clock5. For multiagent networks with distributed control protocols, including robotic swarms, the synchronization performance is limited by the available budget of control energy6.

Similarly, for networks of coupled oscillators, one important resource is the total coupling budget7, which determines how strongly the oscillators can influence each other. For a typical oscillator network, a minimum coupling strength σc is needed to overcome transversal instability and maintain synchronization. The network structures that achieve synchronization with the minimum coupling strength are optimal, and they are characterized by a complete degenerate spectrum8—all eigenvalues of the Laplacian matrix are identical, except the trivial zero eigenvalue associated with perturbations along the synchronization trajectory. Below σc, there is no network structure that can maintain synchrony without violating the resource constraint.

The results above, however, are derived assuming the network to be static. That is, the network connections do not change over time. Previous studies have shown that temporal networks9,10,11,12,13,14,15 can synchronize better than two of their static counterparts—namely, those obtained either by freezing the network at given time instants16,17,18,19 or by averaging the network structure over time20,21,22. But it remains unclear whether there are temporal networks that can outperform all possible static networks. In particular, can temporal variations synchronize systems beyond the fundamental limit set by the optimal static networks? This question is especially interesting given that past studies have often focused on the fast-switching limit, for which the network structure changes much faster than the node dynamics. These fast-switching networks are equivalent to their static, time-averaged counterparts in terms of synchronization stability17,23,24,25. Thus, no temporal networks can outperform optimal static networks in the fast-switching limit.

In this article, we show that the full potential of temporal networks lies beyond the fast-switching limit, a message echoed by several recent studies21,26,27. Importantly, by allowing a network to vary in time at a suitable rate, synchronization can be maintained even when the coupling strength is below σc for all time t. We also develop a general theory to characterize the synchronizability of commutative temporal networks. The use of commutative graphs in synchronization was pioneered in refs. 18,20 and subsequently adopted in numerous studies22,26,28 for its potential of generating analytical insights beyond the fast-switching limit. An insight provided by our theory is that the effectiveness of introducing time-varying coupling depends critically on the curvature of the master stability function29 at its first zero, which extends the results presented in ref. 30. Moreover, we demonstrate analytically that the condition for improved synchronizability in temporal networks is universally satisfied by coupled one-dimensional maps.

Results

Networks of coupled oscillators

We start by considering systems described by the following dynamical equations:

where L = (Lij) is the normalized Laplacian matrix representing a diffusively coupled network. Here, Lij = δij∑kAik − Aij, with δij being the Kronecker delta and Aij encoding the edge weight from node j to node i. An overall normalization factor is chosen so that the sum of all entries in A, ∑1≤i,j≤nAij, equals n−1. As a consequence, \(\frac{1}{n-1}\mathop{\sum }\nolimits_{i = 1}^{n}{L}_{ii}(t)=\frac{1}{n-1}\mathop{\sum }\nolimits_{i = 2}^{n}{\lambda }_{i}(t)=1\), where the sum over the eigenvalues λi(t) starts from i = 2 because the trivial eigenvalue λ1 associated with the eigenvector v1 = \({(1, 1,\ldots,1)^{\top}}\) is always 0. As a result of the normalization, the amount of resources (per node) used to maintain synchronization can be quantified solely by the coupling strength σ for networks of different sizes and densities. The d-dimensional vector xi describes the state of node i, F is the vector field dictating the intrinsic node dynamics, and H is the coupling function mediating interactions between different nodes.

To determine the stability of the synchronization state x1(t) = x2(t) = ⋯ = xn(t) = s(t), we study the variational equation

Here, \({\boldsymbol{\delta }}={({{\bf{x}}}_{1}-{\bf{s}},\ldots ,{{\bf{x}}}_{n}-{\bf{s}})}^{\top }\) is the perturbation vector, \({{\mathbb{1}}}_{n}\) is the n × n identity matrix, ⊗ represents the Kronecker product, and J is the Jacobian operator. When the Laplacian matrices L(t) and \({\bf{L}}(t^{\prime} )\) commute for any t and \(t^{\prime}\), following the master stability function formalism18,29, we can find an orthogonal matrix Q such that Q⊤L(t)Q is diagonal for all time t, thus decoupling Eq. (2) into n independent d-dimensional equations

Here, {ηi} is linked to the original coordinates through the relation \({({{\boldsymbol{\eta }}}_{1},\ldots ,{{\boldsymbol{\eta }}}_{n})}^{\top }=({{\bf{Q}}}^{\top }\otimes{{\mathbb{1}}}_{d}){\boldsymbol{\delta }}\). Each decoupled equation describes the evolution of an independent perturbation mode ηi. In order for synchronization to be stable, all perturbation modes transverse to the synchronization manifold (namely, the modes η2 to ηn) must asymptotically decay to zero. Since the decoupled variational equations are all of the same form and only differ in λi(t), it is informative to study the maximum Lyapunov exponent of the equation

as a function of α. We refer to this function as the master stability function and denote it as Λ(α).

As we will show throughout the rest of the paper, if Λ″(α0) < 0 when Λ(α) first becomes negative at α0 = σc (Fig. 1), then it is guaranteed that there exist temporal networks that outperform optimal static networks. Intuitively, this is because introducing temporal variation in the network structure allows all nonzero λi(t) to spend a significant amount of time above 1, the optimal value achievable by static networks. (For static networks, because \(\mathop{\sum }\nolimits_{i = 2}^{n}{\lambda }_{i}=n-1\), there must exist 0 < λi < 1 unless all nonzero eigenvalues are identical, in which case λi = 1 for all i ≥ 2 and the network is optimal.) If Λ″(α0) < 0, the synchronization state can gain more stability while λi(t) > 1 than the stability it loses during the period when λi(t) < 1.

Temporal networks that outperform optimal static networks

In order to illustrate a simple scheme for designing temporal networks that synchronize for coupling strength below the critical value σc, we construct a class of Laplacian matrices that have the following spectrum (Fig. 2a):

The nonzero eigenvalues split into two groups with a time-varying gap between them, whereas their sum remains equal to n−1 for all time t. Intuitively, some of the perturbation modes borrow resources from the others to remain stable and then return the favor at a later time. As a result, this kind of dynamic stabilization achieves global synchronization with very limited resources.

a Evolution of the nonzero Laplacian eigenvalues described in Eq. (5), which are split into two degenerate groups. b Temporal network constructed from the Laplacian eigenvalues in a. The weight of each edge is represented by its thickness. In addition, edges whose weight is larger than \(\frac{1}{n}\) are colored orange, whereas those with weight less than \(\frac{1}{n}\) are colored cyan. For this network diagram, we set n = 11 and m = 5, and the corresponding weighted adjacency matrix is given by Eq. (9). Visually, we can see that different parts of the network are being strengthened in an alternating fashion.

One can design networks with a given spectrum by specifying a set of orthonormal eigenvectors {vi}18. For our purpose, any choice of {vi} containing v1 = (1, 1, …, 1)⊤ is valid, which gives rise to a whole range of synchronization-boosting temporal networks. Here, for concreteness, we adopt the eigenbasis proposed in ref. 31:

where i ≥ 2. Combining Eqs. (5) and (6) using the formula \({\bf{L}}(t)=\mathop{\sum }\nolimits_{i = 2}^{n}{\lambda }_{i}(t){{\bf{v}}}_{i}{{\bf{v}}}_{i}^{\top }\) gives rise to a temporal network described by the following weighted adjacency matrix (Fig. 2b):

Substituting Eq. (5) into Eq. (7) shows that edges connecting the first m + 1 nodes have a time-dependent weight of \(\frac{1}{n}+\frac{n{(n-1)}^{2}-m(m+1)(n-1)}{nm(m+1)(n-m-1)}A\sin (\omega t)\), whereas the weight of the other edges evolves according to \(\frac{1}{n}-\frac{(n-1)}{n(n-m-1)}A\sin (\omega t)\). The choice of the time-varying term \(\sin (\omega t)\) is not essential; the sine function can be replaced by any other periodic function p(t) with period T that satisfies \(\mathop{\int}\nolimits_{0}^{T}p(t)\ {\rm{d}}t=0\).

When assuming n odd and \(m=\frac{n-1}{2}\), we get a particularly simple class of temporal networks whose transverse perturbation modes all have the same stability (analogous to the defining property of optimal static networks):

Critical role of the switching rate

To demonstrate the effectiveness of our design, we equip the temporal networks described by Eq. (9) with concrete node dynamics and probe their synchronizability in depth. Here, we choose Stuart–Landau oscillators as our first example, as they represent the canonical dynamics of systems in the vicinity of a Hopf bifurcation32. The oscillators evolve according to the following dynamical equation:

where \({Z}_{j}={x}_{j}+{\rm{i}}{y}_{j}={r}_{j}{{\rm{e}}}^{{\rm{i}}{\theta }_{j}}\in {\mathbb{C}}\) represents the state of the jth oscillator. Equation (10) is the discrete-space counterpart of the Ginzburg–Landau equation33 and admits a limit-cycle synchronous state \({Z}_{j}(t)={{\rm{e}}}^{-{\rm{i}}{c}_{2}t}\ \forall j\). By writing the perturbations in polar coordinates, we find that the Jacobian terms in Eq. (4) become \(J{\bf{F}}=\left(\begin{array}{ll}-2&0\\ -2{c}_{2}&0\end{array}\right)\)and \(J{\bf{H}}=\left(\begin{array}{ll}1&-{c}_{1}\\ {c}_{1}&1\end{array}\right)\), both of which are constant matrices. Thus, according to Eq. (4), the master stability function can be obtained by solving a characteristic polynomial equation and has the following form28:

Figure 3a shows Λ(α) for c1 = −1.8 and c2 = 4, which clearly has Λ″(α0) < 0 at its first zero α0 ≈ 3.

a Master stability function for Stuart–Landau oscillators. Parameters are set to c1 = − 1.8 and c2 = 4. b Maximum Lyapunov exponent Γ as a function of the switching rate ω for different values of the temporal activity A (solid lines). The dashed lines indicate the slow-switching limit predicted by the averaged master stability function \(\overline{{{\Lambda }}}\).

For Stuart–Landau oscillators coupled on temporal networks, Eq. (3) dictates the stability of individual perturbation modes and can be written as

where \({{\bf{B}}}_{i}(t)=\left(\begin{array}{ll}-2-\sigma {\lambda }_{i}(t)&{c}_{1}\sigma {\lambda }_{i}(t)\\ -2{c}_{2}-{c}_{1}\sigma {\lambda }_{i}(t)&-\sigma {\lambda }_{i}(t)\end{array}\right)\) is periodic with period \(T=\frac{2\pi }{\omega }\) (henceforth, we drop the subscript i to ease the notation). According to Floquet theory34, the solution to Eq. (12) must be of the form eμtP(t), where P(t) has period T. The Floquet exponents μ1 and μ2 can be extracted by finding the principal fundamental matrix, and their real parts are the corresponding Lyapunov exponents35. Figure 3b shows the maximum Lyapunov exponent \({{\Gamma }}=\max \{{\rm{Re}}({\mu }_{1}),{\rm{Re}}({\mu }_{2})\}\) as a function of ω for different values of the temporal activity A. (It is clear from Eq. (8) that all transverse perturbation modes have the same Γ. Thus, Γ is also the maximum transverse Lyapunov exponent and determines the synchronization stability.) We set the coupling strength to slightly below σc at σ = 2.9 so that no static network can synchronize. As the temporal activity A is increased, Γ becomes negative for an increasingly wide range of switching rate ω, signaling that the temporal variation in the network structure is successfully stabilizing synchronization under the given coupling budget.

Since the only difference between Eqs. (3) and (4) is the periodic λ(t) vs. the fixed α, it is natural to expect the stability of the temporal network to be related to the master stability function averaged over a suitable range of α. Specifically, one might reasonably associate Γ with the averaged master stability function18,22,26,27,30

where W(λ) is the probability distribution of λ (it follows that \(\mathop{\int}\nolimits_{{\lambda }_{\min }}^{{\lambda }_{\max }}W(\lambda )\ {\rm{d}}\lambda =1\)). However, it is clear that \({\bar{\Lambda}}\) cannot be used to predict Γ in general. One immediate observation is that \({\bar{\Lambda}}\) does not depend on the rate in which λ(t) is changing (it only depends on the distribution of λ), whereas the curves representing Γ in Fig. 3b clearly depend on the switching rate ω. Indeed, in order to go from Γ to \({\bar{\Lambda}}\), we are required to shuffle B(t) temporally in Eq. (12). This operation is forbidden when the matrices \(\{{\bf{B}}(t)| t\in {\mathbb{R}}\}\) do not commute (or, equivalently, when \(\{{\bf{B}}(t)| t\in {\mathbb{R}}\}\) cannot be simultaneously diagonalized). To see why, we can look at the formal solution to Eq. (12) expressed in terms of the matrix exponential:

where Ω(t) is given by the Magnus expansion36:

Here, \(\left[{\bf{B}}(\tau ),{\bf{B}}(\tau ^{\prime} )\right]={\bf{B}}(\tau ){\bf{B}}(\tau ^{\prime} )-{\bf{B}}(\tau ^{\prime} ){\bf{B}}(\tau )\) is the matrix commutator. Equation (15) makes it clear that {B(τ)∣0 < τ < t} can be shuffled without affecting Ω(t) if and only if \(\left[{\bf{B}}(\tau ),{\bf{B}}(\tau ^{\prime} )\right]=0\) for all \(\tau ^{\prime} <\tau \,<\, t\), in which case everything on the right-hand side except the first term vanishes.

However, \({\bar{\Lambda}}\) is still extremely informative on whether a given temporal network can synchronize or not. In particular, for ω → 0 (i.e., slow-switching networks30), Γ approaches the value of \({\bar{\Lambda}}\), as demonstrated in Fig. 3b. Intuitively, this can be understood through a process we call "grow and rotate”. When the matrices \(\{{\bf{B}}(t)| t\in {\mathbb{R}}\}\) commute, η can be decomposed into components that grow independently along the eigendirections of B(t), whose growth rates are dictated by the corresponding eigenvalues. Eventually, the component along the direction with the largest eigenvalue becomes dominant. However, when \(\{{\bf{B}}(t)| t\in {\mathbb{R}}\}\) do not commute, the growth along the eigendirections are often "interrupted”, as the eigenvectors of B(t) are no longer fixed and will rotate over time. To keep track of the growth of the dominant component, we must project η onto the new dominant eigendirection upon rotation. These frequent projections can significantly influence the asymptotic growth rate (this is also why the maximum Lyapunov exponent is usually not the mean of the maximum local Lyapunov exponents). At the slow-switching limit, η can grow along an eigendirection uninterrupted for long enough that the effect of the projections becomes negligible. In this case, Γ is determined by the average growth rate of η in the dominant direction of each B(t), which is exactly \({\bar{\Lambda}}\).

It is worth noting that the equivalence between Γ and \({\bar{\Lambda}}\) at the slow-switching limit is not specific to Stuart–Landau oscillators and can be expected for generic oscillator models26,30. As a result, Λ″(α0) < 0 is a robust indicator that synchronization in a system can benefit from temporal networks. This observation echoes recent results in ref. 30, which demonstrates the importance of a master stability function’s curvature for synchronization in the special case of networks with fixed topology and time-varying overall coupling strength. To see why curvature has such a critical role, we assume the temporal variation of λ around 1 to be small and Taylor expand Λ(α) around α0. Then, the averaged master stability function for coupling strength σ = σc is

Thus, if Λ″(α0) = Λ″(σc) < 0, then \({\bar{\Lambda}}\, <\, 0\) at σ = σc and stability is guaranteed to be improved at the slow-switching limit, where \(\Gamma = {\bar{\Lambda}}\). This improvement is expected to extend into the intermediate switching rate due to the continuity of Γ as a function of ω.

At the other limit, for ω → ∞ (i.e., fast-switching networks), Γ clearly does not match with \(\bar{{{\Lambda }}}\). In particular, Γ does not depend on the temporal activity A. For the system in Fig. 3b, Γ approaches Λ(σ) as ω → ∞, which is the value expected for an optimal static network at coupling strength σ (in this case the time-averaged network is a complete graph with uniform edge weights). The mapping from a temporal network to its time-averaged counterpart at the fast-switching limit is intuitive and well established in the literature17,23,25.

The results above provide new insights into the intriguing phenomenon that certain temporal networks only synchronize for intermediate switching rate21,26,27: when switching is too fast, the temporal network reduces to its static counterpart and one cannot take full advantage of the temporal variation in the connections; when switching is too slow, although the asymptotic stability might be maximized, the system would have desynchronized long before the network experiences any meaningful change. Thus, the sweet spot often emerges at an intermediate switching rate.

In Fig. 4, we show typical trajectories of n = 11 Stuart–Landau oscillators on the temporal networks described by Eq. (9), with the temporal activity set to A = 0.15. Systems in all three panels are initiated close to the synchronous state, and their only difference lies in the switching rate ω, which allows us to compare networks with static, moderate-switching, and fast-switching topologies. By monitoring the synchronization error Δ(t), defined as the standard deviation among Zj(t), we see that only the system with an intermediate switching rate (ω = 1, panel b) can maintain stable synchrony. Interestingly, Δ(t) in that system goes down non-monotonically and is bounded from above by periodic envelopes. The width of each envelope is 2π, which coincides with the period of the changing network topology.

Universal stabilization of low-dimensional maps

The framework developed so far can be readily transferred from differential equations to discrete maps, from continuous variation in the network topology to discrete switching, and from periodic oscillator dynamics to chaotic ones. The discrete-time analog of Eq. (1) can be written as

To demonstrate the advantage of temporal networks in these settings, we focus on the following class of coupled one-dimensional discrete maps:

where \(F:{\mathbb{R}}\to {\mathbb{R}}\) is the mapping function. As we show below, this setup allows us to develop an elegant theory that offers new insights.

Similar to the continuous-time case, the synchronization stability is determined by the decoupled variational equations

For fixed λ, the Lyapunov exponent of Eq. (19) is given by \({\mathrm{ln}}\,| \beta -\sigma \lambda | +{{{\Gamma }}}_{s}\), where Γs = lim\({}_{{\mathcal{T}}\to \infty }\frac{1}{{\mathcal{T}}}\mathop{\sum }\nolimits_{t = 1}^{{\mathcal{T}}}{\mathrm{ln}}\,\left|F^{\prime} (s[t])\right|\) is a finite constant. Thus, the master stability function has a universal form (illustrated in Fig. 1)

Taking the second derivative with respect to α, we see that

Thus, synchronization in any system described by Eq. (18) can benefit from the temporal networks designed in this paper. In particular, this holds for any mapping function F, which encompasses important dynamical systems such as logistic maps, circle maps, and Bernoulli maps.

For concreteness, we set \(F(x)={\sin }^{2}(x+\pi /4)\) and β = 2.8 (the corresponding Γs = − 0.5855), which models the dynamics of coupled optoelectronic oscillators37 and exhibits chaotic dynamics. The time-discretized version of the temporal networks described by Eq. (7) works out-of-the-box for the optoelectronic oscillators, despite the vastly different node dynamics. Here, to demonstrate the flexibility of our network design, we consider the following slightly modified switching scheme, which is also more natural for discrete-time systems:

where ⌊⋅⌋ is the floor function. Basically, the network switches between two configurations every T iterations, with each configuration being the extremal in the continuous scheme described by Eq. (9). Consequently, every nonzero eigenvalue of the temporal Laplacian alternates between 1 + 2A and 1 − 2A with period T.

Again, the averaged master stability function \({\bar{\Lambda}}\) accurately predicts the stability of the temporal network at the slow-switching limit. More interestingly, for systems described by Eq. (18), the connection is much stronger: \({\overline{\Lambda}}\) determines the stability of the temporal network for all switching periods T. To see why, we note that the synchronization stability is determined by the limit product \(\mathop{\prod }\nolimits_{t = 1}^{\infty }\left(\beta -\sigma \lambda [t]\right)F^{\prime} (s[t])\). Normally, these are matrix products and cannot be reordered. However, since 1 × 1 matrix multiplications commute, for one-dimensional maps we can reorder them to obtain

This independence of Γ on T might seem contradictory to the fact that, at the fast-switching limit, temporal networks can be reduced to their static counterparts. But notice that there is usually no fast switching in discrete-time systems—even if the network topology changes at every iteration, it is still evolving at the same timescale as the node dynamics. Moreover, unlike in continuous-time systems16,17,23,25, the discrete nature of the dynamics precludes the use of the averaging techniques16,25 essential for connecting fast-switching networks with their time-averaged counterparts. Thus, one cannot map a temporal network to its time-averaged counterpart in discrete-time systems even when the network topology changes much more rapidly than the node dynamics.

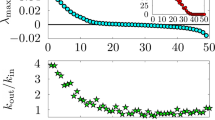

In Fig. 5, we show the maximum transverse Lyapunov exponent Γ of the synchronization state in the optoelectronic system for σ = 1, which is slightly below σc. The dashed line corresponds to the theoretical prediction of Γ based on the averaged master stability function \({\bar{\Lambda }}=\frac{1}{2}\left({\mathrm{ln}}\,| 1+2A-\beta | +{\mathrm{ln}}\,| 1-2A-\beta | \right)+{{{\Gamma }}}_{s}\). As expected, the static network (A = 0), despite being optimal, is unstable. As the temporal activity A is increased, \({\overline{\Lambda}}\) deceases and synchronization is eventually stabilized. On the other hand, the solid lines represent Γ obtained numerically by evolving Eq. (19) for different switching periods T. These lines are shifted vertically by different amounts in Fig. 5, purely as an aid to the eye. The unshifted versions are shown in the inset. Notice that all the lines collapse onto a single curve, demonstrating the excellent agreement between theory and simulations.

The maximum transverse Lyapunov exponent Γ decreases as the temporal activity A is increased. The dashed line represents the theoretical prediction based on \(\overline{{{\Lambda }}}\), whereas the solid lines (shifted vertically for visibility) are calculated directly from Eq. (19) for different switching periods T. Without the shift, all curves completely overlap (inset), which confirms our prediction that the stabilization provided by temporal networks does not depend on the switching rate for coupled one-dimensional maps.

An interesting question is what happens when we introduce random fluctuations to the network structure at each time step t, which makes the temporal network aperiodic and the graph Laplacians noncommutative. In Fig. 6, through direct simulations35, we show that temporal networks still outperform optimal static networks in the presence of these random fluctuations. Here, we use the same model of optoelectronic oscillators and the discrete-switching network considered in Fig. 5, except that independent random Gaussian perturbations of zero mean and standard deviation 0.1/n (10% of the average edge weight) are added to the strength of each edge at every time step. For temporal activity A = 0 (Fig. 6a), synchronization cannot be sustained at coupling strength σ = 1.05. For temporal activity A = 0.15 (Fig. 6b), synchronization is stabilized at the same coupling strength by the variation in network structure. The network size is set to n = 99 and the switching period to T = 10 in our simulations, although the results do not depend sensitively on these two parameters.

The temporal networks are based on the discrete-switching networks given by Eq. (22), which are further made aperiodic and noncommutative by applying random Gaussian perturbations of zero mean to the strength of each edge independently at every time step t. The standard deviation of the perturbations is fixed at 0.1/n (10% of the average edge weight). The spacetime plots show the evolution of the optoelectronic oscillators on temporal networks with a: temporal activity A = 0 and b: temporal activity A = 0.15. Both systems are initialized close to the synchronous state. Synchronization persists only in the second system, even though the network in the first system is an optimal static network (a complete graph with uniform edge weights). Other parameters are set to n = 99, T = 10, β = 2.8, and σ = 1.05.

Discussion

To summarize, we have designed temporal networks that synchronize more efficiently than optimal static networks. These temporal networks are particularly relevant when the coupling budget available in a system to maintain stable synchrony is limited. We provided analytical insight into the synchronizability of commutative temporal networks by linking it to the curvature of the corresponding master stability function. In particular, our analysis reveals the subtle relation between the performance of a temporal network and its switching rate. The switching rate has an especially critical role in systems with high-dimensional oscillator dynamics, and networks with intermediate switching rates often emerge as the most effective.

Our open-loop design has several advantages compared with closed-loop schemes where the network structure is adjusted on-the-fly based on feedback from the node states (often modeled by adaptive networks38). First, our design does not depend sensitively on the node dynamics. As we have shown, the same design works for systems with vastly different node dynamics, and it applies readily to both continuous-time and discrete-time systems. Second, we do not need to monitor all the nodes constantly, which also eliminates the possibility of being detrimentally influenced by measurement errors. Third, the evolution of the network is highly predictable and we can easily control the coupling budget allocated to the system at any given time t, a task that is far more difficult in adaptive networks. On the other hand, closed-loop schemes have the advantage of being readily adaptive to the changing environment and can react quickly to unexpected perturbations39,40. A promising future direction would be to devise hybrid schemes that combine the best from both worlds, which could enable even more efficient and robust synchronization.

In this work, for the sake of analytical tractability, we mostly focused on temporal networks whose Laplacian matrices from different time instants commute. There is evidence that synchronization in temporal networks can benefit when \({\bf{L}}(t){\bf{L}}(t^{\prime} )\ne {\bf{L}}(t^{\prime} ){\bf{L}}(t)\)20. It would therefore be interesting to see whether our design of temporal networks could be further optimized by allowing noncommuting Laplacian matrices. In particular, can random fluctuations in the network structure (which give rise to noncommuting Laplacian matrices in general) outperform our designed temporal networks? More generally, do optimal temporal networks exist for the purpose of synchronization, just like there are optimal static networks? And if so, what are their defining characteristics?

Finally, we hope our results can serve as an important step towards achieving efficient synchronization in complex interconnected systems. For example, many temporal networks arise naturally in the real world through moving agents, whose interactions depend on their spatial distance41,42,43,44. An exciting next step is to understand how our design can be implemented in such systems and how the time-varying connections can be translated into the spatial movement of individual agents.

Data availability

All data needed to evaluate the conclusions are presented in the paper. Additional data related to this paper may be requested from the authors.

Code availability

Code for performing network dynamics simulations and stability calculations are available at https://github.com/y-z-zhang/temporal_sync. An archived version of the code is also provided35. Additional source code may be requested from the authors.

References

Strogatz, S. H. Exploring complex networks. Nature 410, 268–276 (2001).

Roy, R. & Thornburg Jr, K. S. Experimental synchronization of chaotic lasers. Phys. Rev. Lett. 72, 2009–2012 (1994).

Motter, A. E., Myers, S. A., Anghel, M. & Nishikawa, T. Spontaneous synchrony in power-grid networks. Nat. Phys. 9, 191–197 (2013).

Mirollo, R. E. & Strogatz, S. H. Synchronization of pulse-coupled biological oscillators. SIAM J. Appl. Math 50, 1645–1662 (1990).

Zhang, D., Cao, Y., Ouyang, Q. & Tu, Y. The energy cost and optimal design for synchronization of coupled molecular oscillators. Nat. Phys. 16, 95–100 (2020).

Xi, J., Wang, C., Liu, H. & Wang, Z. Dynamic output feedback guaranteed-cost synchronization for multiagent networks with given cost budgets. IEEE Access 6, 28923–28935 (2018).

Nishikawa, T. & Motter, A. E. Maximum performance at minimum cost in network synchronization. Physica D 224, 77–89 (2006).

Nishikawa, T. & Motter, A. E. Network synchronization landscape reveals compensatory structures, quantization, and the positive effect of negative interactions. Proc. Natl. Acad. Sci. USA 107, 10342–10347 (2010).

Pan, R. K. & Saramäki, J. Path lengths, correlations, and centrality in temporal networks. Phys. Rev. E 84, 016105 (2011).

Starnini, M., Baronchelli, A., Barrat, A. & Pastor-Satorras, R. Random walks on temporal networks. Phys. Rev. E 85, 056115 (2012).

Holme, P. & Saramäki, J. Temporal networks. Phys. Rep. 519, 97–125 (2012).

Masuda, N., Klemm, K. & Eguíluz, V. M. Temporal networks: slowing down diffusion by long lasting interactions. Phys. Rev. Lett. 111, 188701 (2013).

Valdano, E., Ferreri, L., Poletto, C. & Colizza, V. Analytical computation of the epidemic threshold on temporal networks. Phys. Rev. X 5, 021005 (2015).

Paranjape, A., Benson, A. R. & Leskovec, J. Motifs in temporal networks. In Proceedings of the Tenth ACM International Conference on Web Search and Data Mining, 601–610 (2017).

Li, A., Cornelius, S. P., Liu, Y.-Y., Wang, L. & Barabási, A.-L. The fundamental advantages of temporal networks. Science 358, 1042–1046 (2017).

Belykh, I. V., Belykh, V. N. & Hasler, M. Blinking model and synchronization in small-world networks with a time-varying coupling. Physica D 195, 188–206 (2004).

Stilwell, D. J., Bollt, E. M. & Roberson, D. G. Sufficient conditions for fast switching synchronization in time-varying network topologies. SIAM J. Appl. Dyn. Syst. 5, 140–156 (2006).

Boccaletti, S. et al. Synchronization in dynamical networks: evolution along commutative graphs. Phys. Rev. E 74, 016102 (2006).

Porfiri, M., Stilwell, D. J. & Bollt, E. M. Synchronization in random weighted directed networks. IEEE Trans. Circuits Syst. I, Reg. Papers 55, 3170–3177 (2008).

Amritkar, R. & Hu, C.-K. Synchronized state of coupled dynamics on time-varying networks. Chaos 16, 015117 (2006).

Jeter, R. & Belykh, I. Synchronization in on-off stochastic networks: windows of opportunity. IEEE Trans. Circuits Syst. I, Reg. Papers 62, 1260–1269 (2015).

Zhou, S., Guo, Y., Liu, M., Lai, Y.-C. & Lin, W. Random temporal connections promote network synchronization. Phys. Rev. E 100, 032302 (2019).

Porfiri, M., Stilwell, D. J., Bollt, E. M. & Skufca, J. D. Random talk: random walk and synchronizability in a moving neighborhood network. Physica D 224, 102–113 (2006).

Kohar, V., Ji, P., Choudhary, A., Sinha, S. & Kurths, J. Synchronization in time-varying networks. Phys. Rev. E 90, 022812 (2014).

Petit, J., Lauwens, B., Fanelli, D. & Carletti, T. Theory of Turing patterns on time varying networks. Phys. Rev. Lett. 119, 148301 (2017).

Chen, L., Qiu, C. & Huang, H. Synchronization with on-off coupling: role of time scales in network dynamics. Phys. Rev. E 79, 045101 (2009).

Golovneva, O., Jeter, R., Belykh, I. & Porfiri, M. Windows of opportunity for synchronization in stochastically coupled maps. Physica D 340, 1–13 (2017).

Pereti, C. & Fanelli, D. Stabilizing Stuart-Landau oscillators via time-varying networks. Chaos Solitons Fractals 133, 109587 (2020).

Pecora, L. M. & Carroll, T. L. Master stability functions for synchronized coupled systems. Phys. Rev. Lett. 80, 2109–2112 (1998).

Zhou, J., Zou, Y., Guan, S., Liu, Z. & Boccaletti, S. Synchronization in slowly switching networks of coupled oscillators. Sci. Rep. 6, 35979 (2016).

Forrow, A., Woodhouse, F. G. & Dunkel, J. Functional control of network dynamics using designed Laplacian spectra. Phys. Rev. X 8, 041043 (2018).

Kuramoto, Y. Chemical Oscillations, Waves, and Turbulence, vol. 19 (Springer Science & Business Media, 2012).

Aranson, I. S. & Kramer, L. The world of the complex Ginzburg-Landau equation. Rev. Mod. Phys. 74, 99–143 (2002).

Kuchment, P. A. Floquet theory for partial differential equations, vol. 60 (Birkhäuser, 2012).

Zhang, Y. Synchronization on temporal networks, https://doi.org/10.5281/zenodo.4663523 (2021).

Blanes, S., Casas, F., Oteo, J.-A. & Ros, J. The Magnus expansion and some of its applications. Phys. Rep. 470, 151–238 (2009).

Hart, J. D., Schmadel, D. C., Murphy, T. E. & Roy, R. Experiments with arbitrary networks in time-multiplexed delay systems. Chaos 27, 121103 (2017).

Gross, T. & Blasius, B. Adaptive coevolutionary networks: a review. J. R. Soc. Interface 5, 259–271 (2008).

Schröder, M., Mannattil, M., Dutta, D., Chakraborty, S. & Timme, M. Transient uncoupling induces synchronization. Phys. Rev. Lett. 115, 054101 (2015).

Berner, R., Sawicki, J. & Schöll, E. Birth and stabilization of phase clusters by multiplexing of adaptive networks. Phys. Rev. Lett. 124, 088301 (2020).

Frasca, M., Buscarino, A., Rizzo, A., Fortuna, L. & Boccaletti, S. Synchronization of moving chaotic agents. Phys. Rev. Lett. 100, 044102 (2008).

Fujiwara, N., Kurths, J. & Díaz-Guilera, A. Synchronization in networks of mobile oscillators. Phys. Rev. E 83, 025101 (2011).

O’Keeffe, K. P., Hong, H. & Strogatz, S. H. Oscillators that sync and swarm. Nat. Commun. 8, 1504 (2017).

Levis, D., Pagonabarraga, I. & Díaz-Guilera, A. Synchronization in dynamical networks of locally coupled self-propelled oscillators. Phys. Rev. X 7, 011028 (2017).

Acknowledgements

Y.Z. acknowledges support from the Schmidt Science Fellowship.

Author information

Authors and Affiliations

Contributions

Y.Z. and S.H.S. conceived the project. Y.Z. performed the research. Y.Z. wrote the paper with input from S.H.S.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Communications thanks Duccio Fanelli and the other anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, Y., Strogatz, S.H. Designing temporal networks that synchronize under resource constraints. Nat Commun 12, 3273 (2021). https://doi.org/10.1038/s41467-021-23446-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-021-23446-9

This article is cited by

-

Higher-order interactions shape collective dynamics differently in hypergraphs and simplicial complexes

Nature Communications (2023)

-

Emergence and suppression of chaos in small interdependent networks by localized periodic excitations

Nonlinear Dynamics (2023)

-

AI Pontryagin or how artificial neural networks learn to control dynamical systems

Nature Communications (2022)

-

Unified treatment of synchronization patterns in generalized networks with higher-order, multilayer, and temporal interactions

Communications Physics (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.