Abstract

Structure-forming systems are ubiquitous in nature, ranging from atoms building molecules to self-assembly of colloidal amphibolic particles. The understanding of the underlying thermodynamics of such systems remains an important problem. Here, we derive the entropy for structure-forming systems that differs from Boltzmann-Gibbs entropy by a term that explicitly captures clustered states. For large systems and low concentrations the approach is equivalent to the grand-canonical ensemble; for small systems we find significant deviations. We derive the detailed fluctuation theorem and Crooks’ work fluctuation theorem for structure-forming systems. The connection to the theory of particle self-assembly is discussed. We apply the results to several physical systems. We present the phase diagram for patchy particles described by the Kern-Frenkel potential. We show that the Curie-Weiss model with molecule structures exhibits a first-order phase transition.


Introduction

Ludwig Boltzmann defined entropy as the logarithm of state multiplicity. The multiplicity of independent (but possibly interacting) systems is typically given by multinomial factors that lead to the Boltzmann–Gibbs entropy and to an exponential growth of phase space volume as a function of the degrees of freedom. In recent decades, much attention has been given to systems with long-range and coevolving interactions that are sometimes referred to as complex systems1. Many complex systems do not exhibit an exponential growth of phase space2,3,4,5. For correlated systems, phase space typically grows subexponentially6,7,8,9,10,11,12,13,14, whereas systems with superexponential phase space growth were recently identified as those capable of forming structures from their components5,15. A typical example of this kind is complex networks16, where complex behavior may lead to ensemble inequivalence17. The most prominent examples of structure-forming systems are chemical reaction networks18,19,20. The usual approach to chemical reactions, in which free particles may compose molecules, is via the grand-canonical ensemble, where particle reservoirs ensure that the number of particles is conserved on average. Much attention has been given to finite-size corrections of the chemical potential21,22 and to the nonequilibrium thermodynamics of small chemical networks23,24,25,26. However, for small closed systems, fluctuations in particle reservoirs may become nonnegligible, and predictions from the grand-canonical ensemble become inaccurate. In the context of nanotechnology and colloidal physics, the theory of self-assembly27 has recently gained interest. Examples of self-assembly include lipid bilayers and vesicles28, microtubules and molecular motors29, amphibolic particles30, and RNA31. The thermodynamics of self-assembly has been studied both experimentally and theoretically, often for particular systems such as Janus particles32. 
Theoretical and computational work has explored self-assembly under nonequilibrium conditions33,34. A review can be found in Arango-Restrepo et al.35.

Here, we present a canonical approach for closed systems in which particles interact and form structures. The main idea is not to start with a grand-canonical approach to structure-forming systems, but to identify, within a canonical description, which terms in the entropy emerge that play the role of the chemical potential in large systems. A simple example of a structure-forming system, the magnetic coin model, was recently introduced in Jensen et al.15. There, each of n coins can be in one of two states (head or tail); in addition, since the coins are magnetic, any two of them can form a bond, which constitutes a third state. The phase space of this model, W(n), grows superexponentially, \(W(n) \sim {n}^{n/2}{e}^{2\sqrt{n}} \sim {e}^{n\mathrm{log}\,n}\). We first generalize this model to arbitrary cluster sizes and to an arbitrary number of states. We then derive the entropy of the system from the corresponding log multiplicity and use it to compute thermodynamic quantities, such as the Helmholtz free energy. Compared with the Boltzmann–Gibbs entropy, an additional term appears that captures the molecule states. Using stochastic thermodynamics, we obtain the appropriate second law for structure-forming systems and derive the detailed fluctuation theorem. Under the assumption that external driving preserves microreversibility, i.e., detailed balance of transition rates in quasi-stationary states, we derive the nonequilibrium Crooks’ fluctuation theorem for structure-forming systems. It relates the probability distribution of the stochastic work done on a nonequilibrium system to thermodynamic variables, such as the partial Helmholtz free energy, temperature, and size of the initial and final cluster states. Finally, we apply our results to several physical systems: first, we calculate the phase diagram of patchy particles described by the Kern–Frenkel potential. Second, we discuss the fully connected Ising model in which molecule formation is allowed. 
We show that the usual second-order transition in the fully connected Ising model changes to first-order.

Results

Entropy of structure-forming systems

To calculate the entropy of structure-forming systems, we first define a set of possible microstates and mesostates. Let us consider a system of n particles. Each single particle can attain states from the set \({{\mathcal{X}}}^{(1)}=\{{x}_{1}^{(1)},\ldots ,{x}_{{m}_{1}}^{(1)}\}\). The superscript (1) indicates that the states correspond to a single-particle state, and m1 denotes the number of these states. A typical set of states could be the spin of the particle {↑,↓}, or a set of energy levels. With only single-particle states, the microstate of a system of n particles is a vector (X1, X2, …, Xn), where \({X}_{k}\in {{\mathcal{X}}}^{(1)}\) is the state of the kth particle. Let us now assume that any two particles can create a two-particle state. This two-particle state can be a molecule composed of two atoms, a cluster of two colloidal particles, etc. We call this state a cluster. This two-particle cluster can attain states \({{\mathcal{X}}}^{(2)}=\{{x}_{1}^{(2)},\ldots ,{x}_{{m}_{2}}^{(2)}\}\). A microstate of a system of n particles is again a vector (X1, X2, …, Xn), but now either \({X}_{k}\in {{\mathcal{X}}}^{(1)}\) or \({X}_{k}\in {{\mathcal{X}}}^{(2)}\times {{\mathbb{Z}}}_{n}^{2}\). For instance, a state of particle k belonging to a two-particle cluster can be written as \({X}_{k}={x}_{1}^{(2)}({k}_{1},{k}_{2})\). The indices in the brackets tell us that particle k belongs to a cluster of size two in the state \({x}_{1}^{(2)}\) and that the cluster is formed by particles k1 and k2 (k1 < k2). Necessarily, either k1 = k or k2 = k.

Now assume that particles can also form larger clusters up to a maximal size, m, which we treat as fixed, with m ≤ n. Generally, clusters of size j have states \({{\mathcal{X}}}^{(j)}=\{{x}_{1}^{(j)},\ldots ,{x}_{{m}_{j}}^{(j)}\}\). The corresponding states of a particle are always elements of the sets \({{\mathcal{X}}}^{(j)}\times {{\mathbb{Z}}}_{n}^{j}\), with the restriction that if the kth particle is in a state \({x}_{i}^{(j)}({k}_{1},\ldots ,{k}_{j})\), then \({k}_{l} < {k}_{l+1}\) for all l, and \({k}_{l}=k\) for exactly one l. Consider an example of four particles that are either free or form clusters of size two. The state of each particle is either x(1), a free particle, or x(2)(i, j), a cluster composed of particles i and j. A typical microstate is Ψ = (x(1), x(2)(2,3), x(2)(2,3), x(1)), which means that particles 1 and 4 are free and particles 2 and 3 form a cluster.

Now consider a mesoscopic scale, where the mesostate of the system is given only by the number of clusters in each state \({x}_{i}^{(j)}\). Let us denote by \({n}_{i}^{(j)}\) the number of clusters in state \({x}_{i}^{(j)}\). The mesostate is therefore characterized by a vector \({\mathbb{N}}=\left({n}_{i}^{(j)}\right)\), which corresponds to a histogram of the cluster states that appear in a microstate. The normalization condition is given by the fact that the total number of particles is n, i.e., \({\sum }_{ij}j{n}_{i}^{(j)}=n\). For example, the mesostate \({{\mathbb{N}}}_{{{\Psi }}}\) corresponding to the microstate Ψ is \({{\mathbb{N}}}_{{{\Psi }}}=\left({n}^{(1)}=2,{n}^{(2)}=1\right)\), denoting that there are two free particles and one two-particle cluster.

The Boltzmann entropy36 of this mesostate is given by
\[ S({\mathbb{N}})=\log W({\mathbb{N}}), \tag{1} \]
where W is the multiplicity of the mesostate, which is the number of all distinct microstates corresponding to the same mesostate. To determine the number of all distinct microstates corresponding to a given mesostate, let us order the particles and number them from 1 to n. By permutation of the particles we obtain the different possible microstates. The number of all permutations is simply n!. However, some permutations correspond to the same microstate and we are overcounting. In our example with one cluster and two free particles, the permutations (4, 2, 3, 1) and (1, 3, 2, 4) correspond to the same microstate Ψ = (x(1), x(2)(2, 3), x(2)(2, 3), x(1)). However, permutation (2, 1, 3, 4) corresponds to the microstate \({{\Psi }}^{\prime} =({x}^{(2)}(1,3),{x}^{(1)},{x}^{(2)}(1,3),{x}^{(1)})\). This microstate is a distinct microstate corresponding to the same mesostate, \({{\mathbb{N}}}_{{{\Psi }}}\equiv {{\mathbb{N}}}_{{{\Psi }}^{\prime} }=\left({n}^{(1)}=2,{n}^{(2)}=1\right)\).

The number of permutations that lead to the same microstate factorizes into contributions from each state \({x}_{i}^{(j)}\). Let us begin with the particles that do not form clusters. The number of equivalent representations for a single-particle state \({x}_{i}^{(1)}\) is \(\left({n}_{i}^{(1)}\right)!\), i.e., the number of permutations of all particles in that state. For the cluster states, equivalent representations of a microstate can be counted in two steps: first, permuting all clusters in state \({x}_{i}^{(j)}\) gives \(\left({n}_{i}^{(j)}\right)!\) possibilities. Then, permuting the particles within each cluster gives j! possibilities for every cluster, i.e., \({(j!)}^{{n}_{i}^{(j)}}\) combinations for this step.

As an example, consider the case of four particles. First, we look at free particles that attain states \({x}_{1}^{(1)}\) or \({x}_{2}^{(1)}\). Let us consider a mesostate \({{\mathbb{N}}}_{1}=\left({n}_{1}^{(1)}=2,{n}_{2}^{(1)}=2\right)\), i.e., two particles in the first state and two particles in the second. The number of distinct microstates corresponding to the mesostate \({{\mathbb{N}}}_{1}\) is given by \(W({{\mathbb{N}}}_{1})=4!/(2!2!)=6\). All microstates that belong to the mesostate \({{\mathbb{N}}}_{1}\) are
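\[ \left({x}_{1}^{(1)},{x}_{1}^{(1)},{x}_{2}^{(1)},{x}_{2}^{(1)}\right),\ \left({x}_{1}^{(1)},{x}_{2}^{(1)},{x}_{1}^{(1)},{x}_{2}^{(1)}\right),\ \left({x}_{1}^{(1)},{x}_{2}^{(1)},{x}_{2}^{(1)},{x}_{1}^{(1)}\right),\ \left({x}_{2}^{(1)},{x}_{1}^{(1)},{x}_{1}^{(1)},{x}_{2}^{(1)}\right),\ \left({x}_{2}^{(1)},{x}_{1}^{(1)},{x}_{2}^{(1)},{x}_{1}^{(1)}\right),\ \left({x}_{2}^{(1)},{x}_{2}^{(1)},{x}_{1}^{(1)},{x}_{1}^{(1)}\right). \]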
Now imagine that the four particles are either free or form two-particle clusters. The microstate of a particle is either x(1) or x(2)(i, j). Let us consider a mesostate \({{\mathbb{N}}}_{2}=\left({n}^{(1)}=0,{n}^{(2)}=2\right)\), i.e., two clusters of size two. The number of distinct microstates is just \(W({{\mathbb{N}}}_{2})=4!/(2!{(2!)}^{2})=3\). The microstates corresponding to the mesostate \({{\mathbb{N}}}_{2}\) are
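\[ \left({x}^{(2)}(1,2),{x}^{(2)}(1,2),{x}^{(2)}(3,4),{x}^{(2)}(3,4)\right),\ \left({x}^{(2)}(1,3),{x}^{(2)}(2,4),{x}^{(2)}(1,3),{x}^{(2)}(2,4)\right),\ \left({x}^{(2)}(1,4),{x}^{(2)}(2,3),{x}^{(2)}(2,3),{x}^{(2)}(1,4)\right). \]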
For example, the microstate (x(2)(2, 1), x(2)(2, 1), x(2)(4, 3), x(2)(4,3)) is the same as the first microstate, because we have only relabeled 1↔2 and 3↔4. In summary, each state \({x}_{i}^{(j)}\) contributes a factor \(({n}_{i}^{(j)})!{(j!)}^{{n}_{i}^{(j)}}\) of equivalent representations, and we can express the total multiplicity as
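\[ W\left({n}_{i}^{(j)}\right)=\frac{n!}{\prod_{ij}\left({n}_{i}^{(j)}\right)!\,{(j!)}^{{n}_{i}^{(j)}}}. \tag{2} \]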
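
Eq. (2) can be checked by brute force for a handful of particles. The following is a minimal sketch, not the authors' code: it enumerates all ways to split labeled particles into clusters, assigns each cluster a state, and tallies microstates per mesostate. The helper names (`set_partitions`, `count_microstates`, `multiplicity`) are our own, and a mesostate is encoded as a mapping from (cluster size j, state index i) to \({n}_{i}^{(j)}\).

```python
from collections import Counter
from itertools import combinations, product
from math import factorial, prod


def set_partitions(items, max_size):
    """Yield every partition of `items` into blocks of size <= max_size."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for size in range(1, min(max_size, len(items)) + 1):
        # choose the other members of the block that contains `first`
        for partners in combinations(rest, size - 1):
            remaining = [x for x in rest if x not in partners]
            for tail in set_partitions(remaining, max_size):
                yield [(first, *partners)] + tail


def count_microstates(n, n_states):
    """Brute-force count of microstates for each mesostate.

    n_states maps an allowed cluster size j to the number m_j of its states.
    A mesostate is returned as a frozenset of ((j, i), n_i^(j)) pairs.
    """
    counts = Counter()
    for partition in set_partitions(list(range(n)), max(n_states)):
        sizes = [len(block) for block in partition]
        if any(j not in n_states for j in sizes):
            continue  # this cluster size is not allowed
        for states in product(*(range(n_states[j]) for j in sizes)):
            mesostate = frozenset(Counter(zip(sizes, states)).items())
            counts[mesostate] += 1
    return counts


def multiplicity(n, mesostate):
    """Eq. (2): W = n! / prod_ij (n_i^(j))! (j!)^(n_i^(j))."""
    denominator = prod(
        factorial(count) * factorial(j) ** count
        for (j, _state), count in mesostate.items()
    )
    return factorial(n) // denominator


# Four free particles with two single-particle states: mesostate N_1
free_only = count_microstates(4, {1: 2})
print(free_only[frozenset({(1, 0): 2, (1, 1): 2}.items())])  # 6
# Four particles that may pair (one free state, one pair state): mesostate N_2
pairs = count_microstates(4, {1: 1, 2: 1})
print(pairs[frozenset({(2, 0): 2}.items())])  # 3
```

The enumeration grows like the Bell numbers, so it is only practical for very small n; its purpose is to confirm the counting argument, not to replace Eq. (2).
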
Using Stirling’s formula \(\mathrm{log}\,n!\approx n\mathrm{log}\,n-n\), we get for the entropy
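\[ S\approx n\log n-n-\sum_{ij}\left[{n}_{i}^{(j)}\log {n}_{i}^{(j)}-{n}_{i}^{(j)}+{n}_{i}^{(j)}\log j!\right]. \tag{3} \]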
Using the normalization condition, \(n={\sum }_{ij}j{n}_{i}^{(j)}\), and combining the first term with the remaining ones, we get the entropy per particle in terms of ratios \({\wp }_{i}^{(j)}={n}_{i}^{(j)}/n\)
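\[ \frac{S}{n}\approx -\sum_{ij}{\wp }_{i}^{(j)}\left(\log {\wp }_{i}^{(j)}-1\right)-\sum_{ij}{\wp }_{i}^{(j)}\log \frac{j!}{{n}^{j-1}}-1. \tag{4} \]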
Normalization is given by \({\sum }_{ij}\,j{\wp }_{i}^{(j)}=1\). Therefore, \({p}_{i}^{(j)}=j{\wp }_{i}^{(j)}\) can be interpreted as the probability that a particle is part of a cluster in state \({x}_{i}^{(j)}\), whereas \({\wp }_{i}^{(j)}\) is the relative number of clusters. Since \({\sum }_{ij}\frac{j{n}_{i}^{(j)}}{n}=1\), the constant term in the entropy per particle is fixed by normalization, and we can neglect it without changing the thermodynamic relations.

In the remainder, we denote thermodynamic quantities per particle by calligraphic script and total quantities by normal script. We express the entropy per particle as
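\[ {\mathcal{S}}(\wp )=-\sum_{ij}{\wp }_{i}^{(j)}\left(\log {\wp }_{i}^{(j)}-1\right)-\sum_{ij}{\wp }_{i}^{(j)}\log \frac{j!}{{n}^{j-1}}, \tag{5} \]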
or equivalently in terms of the probability distribution, \({p}_{i}^{(j)}\), as
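\[ {\mathcal{S}}(p)=-\sum_{ij}\frac{{p}_{i}^{(j)}}{j}\left(\log \frac{{p}_{i}^{(j)}}{j}-1\right)-\sum_{ij}\frac{{p}_{i}^{(j)}}{j}\log \frac{j!}{{n}^{j-1}}. \tag{6} \]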
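
How good is the Stirling step? The sketch below compares the exact log W/n from Eq. (2), evaluated with log-gamma functions, with the Stirling form of the entropy per particle (optionally keeping the constant −1 that is dropped above). The mesostate and the helper names are hypothetical illustrations.

```python
from math import lgamma, log


def exact_log_multiplicity(n, mesostate):
    """log W from Eq. (2), evaluated exactly via log-gamma.

    mesostate maps (j, i) -> n_i^(j), the number of clusters of size j in state i.
    """
    log_w = lgamma(n + 1)
    for (j, _i), count in mesostate.items():
        log_w -= lgamma(count + 1) + count * lgamma(j + 1)  # log(n_i^(j)!) + n_i^(j) log j!
    return log_w


def entropy_per_particle(n, mesostate, keep_constant=True):
    """Stirling form: -sum wp (log wp - 1) - sum wp log(j!/n^(j-1)) [- 1]."""
    s = 0.0
    for (j, _i), count in mesostate.items():
        wp = count / n  # relative number of clusters
        s -= wp * (log(wp) - 1.0)
        s -= wp * (lgamma(j + 1) - (j - 1) * log(n))  # wp * log(j!/n^(j-1))
    return s - 1.0 if keep_constant else s


n = 1_000_000
# hypothetical mesostate: two free states and one pair state (600000 + 2 * 200000 = n)
meso = {(1, 0): 300_000, (1, 1): 300_000, (2, 0): 200_000}
exact = exact_log_multiplicity(n, meso) / n
approx = entropy_per_particle(n, meso)
print(exact, approx, abs(exact - approx))
```

The residual difference stems from the neglected \(\tfrac{1}{2}\log(2\pi k)\) terms of Stirling's series and shrinks roughly like \(\log n/n\).
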
Finite interaction range

Up to now, we assumed an infinite range of interaction between particles, which is unrealistic for chemical reactions, where only atoms within a short range form clusters. A simple correction is obtained by dividing the system into a fixed number of boxes: particles within the same box can form clusters, whereas particles in different boxes cannot. We begin by calculating the multiplicity for two boxes. For simplicity, assume that both contain n/2 particles. The multiplicity of a system with two boxes, \(\tilde{W}\left({n}_{i}^{(j)}\right)\), is given by the sum over all possible partitions of the \({n}_{i}^{(j)}\) clusters in state \({x}_{i}^{(j)}\) into the first box (containing \({\,}^{1}{n}_{i}^{(j)}\) clusters) and the second box (containing \({\,}^{2}{n}_{i}^{(j)}\) clusters), such that \({n}_{i}^{(j)}={\,}^{1}{n}_{i}^{(j)}+{\,}^{2}{n}_{i}^{(j)}\). The multiplicity is therefore
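\[ \tilde{W}\left({n}_{i}^{(j)}\right)=\sum_{{\,}^{1}{n}_{i}^{(j)}+{\,}^{2}{n}_{i}^{(j)}={n}_{i}^{(j)}}W\left({\,}^{1}{n}_{i}^{(j)}\right)W\left({\,}^{2}{n}_{i}^{(j)}\right), \tag{7} \]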
where W is the multiplicity in Eq. (2), evaluated for a box of n/2 particles. The dominant contribution to the sum comes from the term where \({\,}^{1}{n}_{i}^{(j)}={\,}^{2}{n}_{i}^{(j)}={n}_{i}^{(j)}/2\), so that we can approximate the multiplicity by \(\tilde{W}({n}_{i}^{(j)})\approx W{({n}_{i}^{(j)}/2)}^{2}\). Similarly, for b boxes we obtain the multiplicity
\[ \tilde{W}\left({n}_{i}^{(j)}\right)\approx W{\left({n}_{i}^{(j)}/b\right)}^{b}. \tag{8} \]
By defining the concentration of particles as \(c=n/b\), the entropy per particle becomes
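\[ {\mathcal{S}}(\wp )=-\sum_{ij}{\wp }_{i}^{(j)}\left(\log {\wp }_{i}^{(j)}-1\right)-\sum_{ij}{\wp }_{i}^{(j)}\log \frac{j!}{{c}^{j-1}}, \tag{9} \]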
or, equivalently, in terms of \({p}_{i}^{(j)}\),
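\[ {\mathcal{S}}(p)=-\sum_{ij}\frac{{p}_{i}^{(j)}}{j}\left(\log \frac{{p}_{i}^{(j)}}{j}-1\right)-\sum_{ij}\frac{{p}_{i}^{(j)}}{j}\log \frac{j!}{{c}^{j-1}}. \tag{10} \]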
Note that the entropy of structure-forming systems is both additive and extensive in the sense of Lieb and Yngvason37. It is also concave, which ensures that the maximum entropy principle has a unique solution. For more details and connections to axiomatic frameworks, see Supplementary Discussion.
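
The dominant-term approximation behind Eq. (8) can be checked numerically for two boxes. This sketch assumes the simplest case of one free state and one pair state, sums Eq. (7) exactly with each box holding n/2 particles, and compares it with the single term \(W{({n}_{i}^{(j)}/2)}^{2}\); the function names and the chosen numbers are illustrative.

```python
from math import exp, lgamma, log


def log_w(n, n_free, n_pairs):
    """log W from Eq. (2) for one free state and one pair state in a box of n particles."""
    assert n == n_free + 2 * n_pairs, "particle number must be conserved"
    return lgamma(n + 1) - lgamma(n_free + 1) - lgamma(n_pairs + 1) - n_pairs * log(2.0)


def log_w_two_boxes(n_free, n_pairs):
    """log of the exact two-box sum, Eq. (7), with n/2 particles per box (n even)."""
    n = n_free + 2 * n_pairs
    half = n // 2
    terms = []
    for pairs_1 in range(n_pairs + 1):
        free_1 = half - 2 * pairs_1
        free_2, pairs_2 = n_free - free_1, n_pairs - pairs_1
        if free_1 < 0 or free_2 < 0:
            continue  # no way to place exactly n/2 particles in each box
        terms.append(log_w(half, free_1, pairs_1) + log_w(half, free_2, pairs_2))
    top = max(terms)  # log-sum-exp for numerical stability
    return top + log(sum(exp(t - top) for t in terms))


n_free, n_pairs = 1000, 500
n = n_free + 2 * n_pairs
exact = log_w_two_boxes(n_free, n_pairs)
dominant = 2 * log_w(n // 2, n_free // 2, n_pairs // 2)  # log of W(n_i/2)^2
gap = (exact - dominant) / n
print(f"per-particle gap between Eq. (7) and its dominant term: {gap:.2e}")
```

Because the sum has at most \({n}^{(2)}+1\) terms, the per-particle gap is bounded by \(\log({n}^{(2)}+1)/n\) and vanishes in the large-n limit, which is what justifies Eq. (8).
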

Equilibrium thermodynamics of structure-forming systems

We now focus on equilibrium thermodynamics, obtained, for example, from the maximum entropy principle. Denoting by \({\epsilon }_{i}^{(j)}\) the energy of a cluster in state \({x}_{i}^{(j)}\), the internal energy per particle is
\[ {\mathcal{U}}=\sum_{ij}{\wp }_{i}^{(j)}{\epsilon }_{i}^{(j)}. \tag{11} \]
Using Lagrange multipliers to maximize the functional
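\[ {\mathcal{L}}(\wp )={\mathcal{S}}(\wp )-\alpha \left(\sum_{ij}j{\wp }_{i}^{(j)}-1\right)-\beta \left(\sum_{ij}{\wp }_{i}^{(j)}{\epsilon }_{i}^{(j)}-{\mathcal{U}}\right) \tag{12} \]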
leads to the following:
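\[ -\log {\hat{\wp }}_{i}^{(j)}-\log \frac{j!}{{c}^{j-1}}-\alpha j-\beta {\epsilon }_{i}^{(j)}=0, \tag{13} \]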
and the resulting distribution is
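\[ {\hat{\wp }}_{i}^{(j)}=\frac{{c}^{j-1}}{j!}{e}^{-\alpha j-\beta {\epsilon }_{i}^{(j)}}={\Lambda }^{j}\frac{{c}^{j-1}}{j!}{e}^{-\beta {\epsilon }_{i}^{(j)}}. \tag{14} \]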
Here, we introduce the partial partition functions, \({{\mathcal{Z}}}_{j}=\frac{{c}^{j-1}}{j!}\sum _{i}{e}^{-\beta {\epsilon }_{i}^{(j)}}\), and the quantity Λ = e−α. Λ is obtained from
\[ \sum_{j=1}^{m}j\,{{\mathcal{Z}}}_{j}\,{\Lambda }^{j}=1, \tag{15} \]
which is a polynomial equation of order m in Λ. The connection with thermodynamics follows through Eq. (13). By multiplying with \({\hat{\wp }}_{i}^{(j)}\) and summing over i,j, we get \({\mathcal{S}}(\wp )-\sum _{ij}{\hat{\wp }}_{i}^{(j)}-\alpha -\beta \ {\mathcal{U}}=0\). Note that \(\sum _{ij}{\hat{\wp }}_{i}^{(j)}=\sum _{ij}{\hat{n}}_{i}^{(j)}/n=M/n={\mathcal{M}}\) is the number of clusters, divided by the number of particles in the system. The number of clusters per particle is
\[ {\mathcal{M}}=\sum_{j=1}^{m}{{\mathcal{Z}}}_{j}\,{\Lambda }^{j}. \tag{16} \]
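
Solving Eq. (15) is the only numerical step needed for equilibrium. Since its left-hand side increases monotonically in Λ > 0, bisection is a simple and robust choice. The sketch below is our own illustration, not the authors' implementation: it builds \({{\mathcal{Z}}}_{j}\) from hypothetical energy spectra, finds Λ, and returns the distribution of Eq. (14) together with \({\mathcal{M}}\) from Eq. (16).

```python
from math import exp, factorial


def partial_partition_functions(energies, beta, c):
    """Z_j = c^(j-1)/j! * sum_i exp(-beta eps_i^(j)) for each cluster size j."""
    return {
        j: c ** (j - 1) / factorial(j) * sum(exp(-beta * e) for e in eps)
        for j, eps in energies.items()
    }


def solve_lambda(Z, tol=1e-14):
    """Positive root of sum_j j Z_j Lambda^j = 1 (Eq. 15) by bisection."""
    g = lambda lam: sum(j * z * lam ** j for j, z in Z.items()) - 1.0
    lo, hi = 0.0, 1.0
    while g(hi) < 0:  # g(0) = -1 and g increases, so enlarge hi until it brackets the root
        hi *= 2.0
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if g(mid) < 0 else (lo, mid)
    return 0.5 * (lo + hi)


def equilibrium(energies, beta, c):
    """Return Lambda, the distribution of Eq. (14), and M from Eq. (16)."""
    Z = partial_partition_functions(energies, beta, c)
    lam = solve_lambda(Z)
    wp = {
        (j, i): lam ** j * c ** (j - 1) / factorial(j) * exp(-beta * e)
        for j, eps in energies.items()
        for i, e in enumerate(eps)
    }
    clusters_per_particle = sum(lam ** j * z for j, z in Z.items())
    return lam, wp, clusters_per_particle


energies = {1: [0.0, 1.0], 2: [-2.0], 3: [-3.5, -3.0]}  # hypothetical spectra eps_i^(j)
lam, wp, M = equilibrium(energies, beta=1.0, c=0.5)
print(f"Lambda = {lam:.6f}, clusters per particle M = {M:.6f}")
```

With only free particles (m = 1), Eq. (15) reduces to \(\Lambda =1/{{\mathcal{Z}}}_{1}\), which is a quick sanity check for the solver.
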
The Helmholtz free energy is thus obtained as
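\[ {\mathcal{F}}={\mathcal{U}}-T{\mathcal{S}}=-\frac{1}{\beta }\left({\mathcal{M}}+\alpha \right)=-\frac{1}{\beta }\left({\mathcal{M}}-\log \Lambda \right). \tag{17} \]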
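
The closed form of the free energy follows from multiplying Eq. (13) by \({\hat{\wp }}_{i}^{(j)}\) and summing. As a numerical cross-check, the sketch below restricts itself (by assumption) to clusters of sizes 1 and 2, where Eq. (15) is a quadratic in Λ, and evaluates \({\mathcal{F}}\) both as \({\mathcal{U}}-T{\mathcal{S}}\) with the entropy of Eq. (9) and via the closed form. The energies are hypothetical.

```python
from math import exp, factorial, log, sqrt


def free_energy_two_ways(eps_free, eps_pair, beta, c):
    """Return (U - T S, -(M - log Lambda)/beta) for clusters of size 1 and 2 only."""
    z1 = sum(exp(-beta * e) for e in eps_free)
    z2 = c / 2.0 * sum(exp(-beta * e) for e in eps_pair)  # c^(j-1)/j! with j = 2
    # Eq. (15) for m = 2 reads 2 z2 L^2 + z1 L - 1 = 0; numerically stable positive root
    lam = 2.0 / (z1 + sqrt(z1 * z1 + 8.0 * z2))
    states = [(1, e) for e in eps_free] + [(2, e) for e in eps_pair]
    # Eq. (14): equilibrium relative cluster numbers
    wp = [lam ** j * c ** (j - 1) / factorial(j) * exp(-beta * e) for j, e in states]
    U = sum(w * e for w, (_j, e) in zip(wp, states))  # Eq. (11)
    S = sum(  # Eq. (9)
        -w * (log(w) - 1.0) - w * log(factorial(j) / c ** (j - 1))
        for w, (j, _e) in zip(wp, states)
    )
    M = sum(wp)  # Eq. (16)
    return U - S / beta, -(M - log(lam)) / beta


f_direct, f_closed = free_energy_two_ways([0.0, 0.7], [-1.5], beta=1.3, c=0.2)
print(f_direct, f_closed)
```

The two numbers agree to rounding error for any positive β and c; passing an empty list of pair energies reduces the example to free particles only.
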
Finally, we can write the total partition function as
\[ Z={e}^{-\beta n{\mathcal{F}}}={\Lambda }^{-n}{e}^{n{\mathcal{M}}}. \tag{18} \]
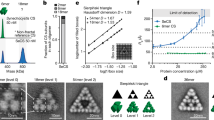

Comparison with the grand-canonical ensemble

To compare the presented exact approach with the grand-canonical ensemble, consider the simple chemical reaction 2X⇌X2. Without loss of generality, assume that free particles carry some energy, ϵ. We calculate the Helmholtz free energy for both approaches in Supplementary Information. In Fig. 1, we show the corresponding specific heat, \(c(T)=-T\frac{{\partial }^{2}{\mathcal{F}}}{\partial {T}^{2}}\). For small systems, the two approaches differ notably: only for large n and low concentrations, c, do the specific heat for the exact approach (squares) and that for the grand-canonical ensemble (triangles) become identical. The inset shows the relative difference of the specific heats, cC/cGC − 1, which vanishes for large n. Hence, for large systems, the exact approach and the grand-canonical ensemble are equivalent.

Fig. 1: The specific heat for the canonical ensemble (C) is drawn with squares, and the specific heat for the grand-canonical ensemble (GC) with triangles. n denotes the number of particles. For small systems, the difference between the approaches becomes apparent. The inset shows the relative difference between the specific heat calculated from the exact approach and the one obtained from the grand-canonical ensemble, cC/cGC − 1. For large n, this quantity decays to zero for any temperature.
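
The Supplementary calculations behind Fig. 1 are not reproduced here. As a hedged illustration of a canonical computation with a strictly conserved particle number, the sketch below assumes a single box (b = 1), free particles with energy ϵ and pairs with energy 0, sums the exact multiplicities of Eq. (2) over the number of pairs, and obtains \(c(T)=-T\,{\partial }^{2}{\mathcal{F}}/\partial {T}^{2}\) per particle by a central finite difference. The grand-canonical counterpart is not included.

```python
from math import exp, lgamma, log


def log_partition(n, beta, eps=1.0):
    """Exact log Z for 2X <-> X2 with n particles in a single box (b = 1).

    A mesostate with k pairs has multiplicity n!/((n-2k)! k! 2^k) from Eq. (2)
    and energy (n - 2k) * eps (free particles carry eps, pairs carry 0).
    """
    terms = [
        lgamma(n + 1) - lgamma(n - 2 * k + 1) - lgamma(k + 1) - k * log(2.0)
        - beta * (n - 2 * k) * eps
        for k in range(n // 2 + 1)
    ]
    top = max(terms)  # log-sum-exp for numerical stability
    return top + log(sum(exp(t - top) for t in terms))


def specific_heat(n, T, eps=1.0, h=1e-3):
    """c(T) = -T d^2F/dT^2 per particle, via a central finite difference in T."""
    def free_energy(t):
        return -t * log_partition(n, 1.0 / t, eps) / n

    second = (free_energy(T + h) - 2.0 * free_energy(T) + free_energy(T - h)) / h ** 2
    return -T * second


for n in (2, 10, 100):
    print(n, [round(specific_heat(n, T), 4) for T in (0.5, 1.0, 2.0)])
```

For n = 2 the system is a two-level system with gap 2ϵ, so the result can be compared with the textbook Schottky formula; this is a useful check on the finite-difference step size.
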

Relation to the theory of self-assembly

In many applications, the number of energetic configurations for each cluster size is so large that one is only interested in the distribution of cluster sizes. For this case, it is possible to formulate an effective theory, known as the theory of self-assembly, that accounts for the contributions of all configurations. For an overview, see Likos et al.27.

To compute the free energy in terms of the cluster-size distribution, we define the latter as
\[ {\wp }^{(j)}=\sum_{i}{\hat{\wp }}_{i}^{(j)}={{\mathcal{Z}}}_{j}\,{\Lambda }^{j}. \tag{19} \]
This is the distribution obtained from the free energy of an ideal gas of clusters, as discussed in Fantoni et al.32 for the case of Janus particles and in Vissers et al.38 for the more general case of one-patch colloids. The entropy of the relative cluster size can be introduced as
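\[ {\mathcal{S}}\left({\wp }^{(j)}\right)=-\sum_{j=1}^{m}{\wp }^{(j)}\left(\log {\wp }^{(j)}-1\right). \tag{20} \]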
By introducing the partial free energy as
\[ {{\Phi }}_{j}=-\frac{1}{\beta }\log {{\mathcal{Z}}}_{j}, \tag{21} \]
the energy constraint takes the form of the expected free energy, averaged over cluster size, \({{\Phi }}=\mathop{\sum }\nolimits_{j = 1}^{m}{\wp }^{(j)}{{{\Phi }}}_{j}\). The cluster-size distribution is obtained by maximization of the functional
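\[ {\mathcal{L}}={\mathcal{S}}\left({\wp }^{(j)}\right)-\alpha \left(\sum_{j=1}^{m}j{\wp }^{(j)}-1\right)-\beta \left(\sum_{j=1}^{m}{\wp }^{(j)}{{\Phi }}_{j}-{{\Phi }}\right). \tag{22} \]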
Setting the derivatives of this functional to zero shows that Eq. (19) is its maximizer. The free energy can now be expressed as
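\[ {\mathcal{F}}={{\Phi }}-T{\mathcal{S}}\left({\wp }^{(j)}\right)=-\frac{1}{\beta }\left({\mathcal{M}}+\alpha \right), \tag{23} \]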
which has the same structure as when calculated in terms of \({\wp }_{i}^{(j)}\).
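
The coarse-grained description can be checked directly from a set of partial partition functions. In the sketch below the values of \({{\mathcal{Z}}}_{j}\) are hypothetical; the code solves Eq. (15), builds \({\wp }^{(j)}\) from Eq. (19) and \({{\Phi }}_{j}\) from Eq. (21), verifies that the stationarity condition of the functional in Eq. (22) holds, and compares \({{\Phi }}-T{\mathcal{S}}\) with the closed form of Eq. (23).

```python
from math import log


def cluster_size_distribution(Z):
    """Solve sum_j j Z_j Lambda^j = 1 (Eq. 15) by bisection; return Lambda and wp^(j) (Eq. 19)."""
    g = lambda lam: sum(j * z * lam ** j for j, z in Z.items()) - 1.0
    lo, hi = 0.0, 1.0
    while g(hi) < 0.0:  # g increases monotonically for Lambda > 0
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if g(mid) < 0.0 else (lo, mid)
    lam = 0.5 * (lo + hi)
    return lam, {j: z * lam ** j for j, z in Z.items()}


beta = 2.0
Z = {1: 1.0, 2: 0.8, 3: 1.5, 4: 0.3}  # hypothetical partial partition functions Z_j
lam, wp = cluster_size_distribution(Z)
alpha = -log(lam)
phi = {j: -log(z) / beta for j, z in Z.items()}  # Eq. (21), partial free energies
entropy = -sum(p * (log(p) - 1.0) for p in wp.values())  # Eq. (20)
F_coarse = sum(wp[j] * phi[j] for j in Z) - entropy / beta  # Phi - T S
F_closed = -(sum(wp.values()) + alpha) / beta  # Eq. (23)
# stationarity of the functional, Eq. (22): -log wp_j - alpha j - beta Phi_j = 0
gradient = {j: -log(wp[j]) - alpha * j - beta * phi[j] for j in Z}
print(F_coarse, F_closed, max(abs(v) for v in gradient.values()))
```

Only the cluster-size level enters here, which is precisely why the approach is useful when the individual configurations inside \({{\mathcal{Z}}}_{j}\) are too numerous to enumerate.
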

Examples for thermodynamics of structure-forming systems

We now apply the results obtained in the previous sections to several examples of structure-forming systems. We focus particularly on how the presence of mesoscopic structures of clustered states shapes macroscopic physical properties. In the presence of structure formation, there is a phase transition between a fluid phase of free particles and a condensed phase containing clusters of particles. This phase transition is demonstrated in two examples.

The first example on soft-matter self-assembly describes the process of condensation of one-patch colloidal amphibolic particles. This condensation is relevant in applications in nanomaterials and biophysics. The second example covers the phase transition of the Curie–Weiss spin model for the situation where particles form molecules. In Supplementary Information, we discuss the additional examples of a magnetic gas and a size-dependent chemical potential.

Kern–Frenkel model of patchy particles

Recently, the theory of soft-matter self-assembly has successfully predicted the creation of various structures of colloidal particles, including clusters of Janus particles32, polymerization of colloids38, and the crystallization of multipatch colloidal particles39. Kern and Frenkel40 introduced a simple model to describe the self-assembly of amphibolic particles with two-particle interactions. Let \({\hat{{\bf{r}}}}_{ij}\) denote the unit vector connecting the centers of particles i and j, \({r}_{ij}\) the corresponding distance, and \({{\bf{n}}}_{i}\) and \({{\bf{n}}}_{j}\) the unit vectors encoding the patch directions of the two spheres. The Kern–Frenkel potential is defined as
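\[ {U}_{ij}={U}^{{\rm{SW}}}({r}_{ij})\,{{\Omega }}\left({\hat{{\bf{r}}}}_{ij},{{\bf{n}}}_{i},{{\bf{n}}}_{j}\right), \tag{24} \]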
where
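\[ {U}^{{\rm{SW}}}(r)=\begin{cases}\infty , & r < \sigma ,\\ -\epsilon , & \sigma \le r < \lambda \sigma ,\\ 0, & r\ge \lambda \sigma ,\end{cases} \]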
and
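\[ {{\Omega }}=\begin{cases}1, & {{\bf{n}}}_{i}\cdot {\hat{{\bf{r}}}}_{ij}\ge \cos \theta \ \text{and}\ -{{\bf{n}}}_{j}\cdot {\hat{{\bf{r}}}}_{ij}\ge \cos \theta ,\\ 0, & \text{otherwise}.\end{cases} \]
Here, σ is the particle diameter, λσ the range of the square-well attraction of depth ϵ, and θ the half-opening angle of the patch.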
The characteristic quantity \(\chi ={\sin }^{2}(\theta /2)\) is the particle coverage. In the theory of self-assembly, the cluster-size distribution is determined by the partial partition functions, Eq. (19). Due to the enormous number of possible configurations, \({{\mathcal{Z}}}_{j}\) cannot be calculated analytically, and simulation methods were introduced, including a grand-canonical Monte Carlo method and successive umbrella sampling; for a review, see Rovigatti et al.41. Instead of calculating the exact value of \({{\mathcal{Z}}}_{j}\), we use a stylized model based on Fantoni et al.32. There, the partial partition function is parameterized as \(\frac{\mathrm{log}\,{{\mathcal{Z}}}_{j}}{j\epsilon }=b\tanh (aj)\), where b < 0 and a > 0 are model parameters. While the free energy per particle decreases linearly with size for small clusters, it saturates at b for larger clusters. To calculate the average cluster size, Eq. (16), one has to solve the equation for Λ, Eq. (15). In Fig. 2, we show the phase diagram of the patchy particles for b = −3, a = 25, and n = 100. The average number of clusters, M, plays the role of the order parameter. In the phase diagram, one can clearly distinguish three phases. At high temperatures, we observe the liquid phase, where most particles are not bound to others. At low temperatures, we have a condensed phase with macroscopic clusters. The two phases are separated by a coexistence phase, where both large clusters and unbound particles are present. The coexistence phase (gray region) is characterized by a bimodal distribution that can be recognized by calculating the bimodality coefficient42. The results presented in Fig. 2 qualitatively correspond to those obtained in Fantoni et al.32 for Janus particles with χ = 0.5.

Fig. 2: The average cluster size (M) as a function of temperature (T) and concentration (c). The cluster size is given by the color and ranges from M = 0 (purple) to M = 100 (red). We observe three phases: the liquid and condensed phases are separated by a coexistence phase (gray area). Coexistence is characterized by a bimodal distribution that can be detected by a shift in the bimodality coefficient.
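
The coexistence region is identified through the bimodality coefficient42. The exact estimator used for Fig. 2 is not given in this section, so the sketch below assumes the common population form \(BC=({\gamma }^{2}+1)/\kappa\), with skewness γ and ordinary (non-excess) kurtosis κ of a discrete distribution; sample-based variants add finite-size corrections. Values above 5/9, the value for a uniform distribution, are commonly read as a sign of bimodality.

```python
def bimodality_coefficient(values, weights):
    """Population bimodality coefficient BC = (skewness**2 + 1) / kurtosis.

    `weights` need not be normalized; kurtosis is the ordinary (non-excess) one.
    """
    total = float(sum(weights))
    probs = [w / total for w in weights]
    mean = sum(p * x for p, x in zip(probs, values))

    def central_moment(k):
        return sum(p * (x - mean) ** k for p, x in zip(probs, values))

    var = central_moment(2)
    skewness = central_moment(3) / var ** 1.5
    kurtosis = central_moment(4) / var ** 2
    return (skewness ** 2 + 1.0) / kurtosis


# Hypothetical distributions: two separated peaks vs. a single binomial peak
print(bimodality_coefficient([0, 1], [1, 1]))
print(bimodality_coefficient([0, 1, 2, 3, 4], [1, 4, 6, 4, 1]))
```

The two-peak example gives BC = 1, well above 5/9, whereas the single binomial peak gives BC = 0.4; applied to a cluster-size histogram, such a threshold is one way to draw a coexistence boundary like the gray region in Fig. 2.
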

Curie–Weiss model with molecule formation

To discuss an example of a spin system with molecule states, consider the fully connected Ising model43,44,45,46 with a Hamiltonian that allows for possible molecule states

Particles bound in molecules feel neither the spin–spin interaction nor the external magnetic field, h; therefore, the sums extend only over free particles. In a mean-field approximation, we use the magnetization \(m=\frac{1}{n-1}{\sum }_{i\ne j}{\sigma }_{i}\) and express the Hamiltonian as HMF(σi) = −(Jm + h)∑j,freeσj. The self-consistency equation \(m=-\frac{\partial F}{\partial h}{| }_{h = 0}\) leads to an equation for m that is solved numerically (Supplementary Information); the solution is shown in Fig. 3. In contrast to the mean-field approximation of the usual fully connected Ising model (without molecule states), the phase transition is no longer second-order but becomes first-order. There is a bifurcation beyond which both the m = 0 and the m > 0 solutions are stable. The second-order transition is recovered for small systems, n→0. The critical temperature is shifted toward zero for increasing n. We performed Monte Carlo simulations to check the results of the mean-field approximation; see Supplementary Information.

Fig. 3: Results of the mean-field approximation (solid lines) are in good agreement with Monte Carlo simulations (symbols). Error bars show the standard deviation of the average value obtained from 1000 independent simulation runs (see Supplementary Information for more details). The inset shows the well-known result for the fully connected Ising model without molecule states, which exhibits the usual second-order transition. With molecules, the critical temperature decreases with the number of particles, and the phase transition becomes first-order.
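
The self-consistency equation itself is derived in Supplementary Information and is not reproduced here. The following is only a hedged sketch of how the framework of Eqs. (14) and (15) can enter a mean-field iteration. It assumes (hypothetically) that free spins have energies \(\mp (Jm+h)\), that a molecule is a single pair state with energy `eps_mol`, so that \({{\mathcal{Z}}}_{2}=\frac{c}{2}{e}^{-\beta {\epsilon }_{\rm{mol}}}\), and that m is the per-particle difference of up and down free spins. It is not guaranteed to reproduce Fig. 3 quantitatively.

```python
from math import cosh, exp, sinh, sqrt


def magnetization(T, J=1.0, h=0.0, c=0.0, eps_mol=0.0, m0=1.0,
                  max_iter=100_000, tol=1e-13):
    """Iterate m -> 2 Lambda(m) sinh(beta (J m + h)) to a fixed point.

    Hypothetical mean-field setup (not taken from the text): free spins have
    energies -(J m + h) and +(J m + h); a molecule (j = 2) has one state with
    energy eps_mol; c is the concentration entering Z_2 = c/2! exp(-beta eps_mol).
    """
    beta = 1.0 / T
    z2 = 0.5 * c * exp(-beta * eps_mol)
    m = m0
    for _ in range(max_iter):
        field = beta * (J * m + h)
        z1 = 2.0 * cosh(field)  # partial partition function of free particles
        lam = 2.0 / (z1 + sqrt(z1 * z1 + 8.0 * z2))  # root of z1 L + 2 z2 L^2 = 1
        m_new = 2.0 * lam * sinh(field)  # wp_up - wp_down; molecules carry no spin
        if abs(m_new - m) < tol:
            return m_new
        m = m_new
    return m


for T in (0.4, 0.6, 0.8, 1.2):
    print(T, round(magnetization(T), 4), round(magnetization(T, c=20.0, eps_mol=-0.5), 4))
```

Without molecules (c = 0), Λ = 1/Z1 and the iteration reduces to the familiar \(m=\tanh (\beta Jm)\). Starting from m0 = 1 follows the upper branch, so scanning T from both sides would be needed to expose hysteresis at a first-order transition.
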

Stochastic thermodynamics of structure-forming systems

Consider an arbitrary nonequilibrium state given by \({\wp }_{i}^{(j)}\equiv {\wp }_{i}^{(j)}(t)\), and imagine that the evolution of the probability distribution is defined by a first-order Markovian linear master equation, as is usually assumed in stochastic thermodynamics47,48

\({w}_{ik}^{jl}\) are the transition rates. Note that probability normalization leads to \({\sum }_{ij}j{\dot{\wp }}_{i}^{(j)}=0\). Given that detailed balance holds, \({w}_{ik}^{jl}{\hat{\wp }}_{k}^{(l)}={w}_{ki}^{lj}{\hat{\wp }}_{i}^{(j)}\), the underlying stationary distribution, obtained from \({\dot{\wp }}_{i}^{(j)}=0\), coincides with the equilibrium distribution Eq. (14). From this we get
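\[ \log \frac{{w}_{ik}^{jl}}{{w}_{ki}^{lj}}=\log \frac{{\hat{\wp }}_{i}^{(j)}}{{\hat{\wp }}_{k}^{(l)}}=-\beta \left({\epsilon }_{i}^{(j)}-{\epsilon }_{k}^{(l)}\right)-\alpha (j-l)+\log \frac{l!\,{c}^{j-l}}{j!}. \tag{27} \]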
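
The text does not specify the transition rates. One common way to build rates that satisfy detailed balance with respect to Eq. (14) is the Metropolis rule \(w=\min (1,{\hat{\wp }}_{\rm{target}}/{\hat{\wp }}_{\rm{source}})\); the sketch below uses this choice as an assumption and checks that the log-ratio of forward and backward rates reproduces the expression above. States are written as (cluster size, energy) pairs with hypothetical values.

```python
from math import exp, factorial, log


def equilibrium_wp(j, energy, lam, beta, c):
    """Eq. (14): wp_i^(j) = Lambda^j c^(j-1)/j! exp(-beta eps_i^(j))."""
    return lam ** j * c ** (j - 1) / factorial(j) * exp(-beta * energy)


def metropolis_rate(target, source, lam, beta, c):
    """Hypothetical Metropolis-type rate for source -> target; states are (j, energy)."""
    ratio = equilibrium_wp(*target, lam, beta, c) / equilibrium_wp(*source, lam, beta, c)
    return min(1.0, ratio)


lam, beta, c = 0.3, 1.7, 2.5
state_i, state_k = (3, -1.2), (1, 0.4)  # (cluster size, energy), hypothetical values
w_ik = metropolis_rate(state_i, state_k, lam, beta, c)  # rate from (k, l) to (i, j)
w_ki = metropolis_rate(state_k, state_i, lam, beta, c)  # rate from (i, j) to (k, l)
(j, eps_i), (l, eps_k) = state_i, state_k
alpha = -log(lam)
predicted = -beta * (eps_i - eps_k) - alpha * (j - l) + log(factorial(l) * c ** (j - l) / factorial(j))
print(log(w_ik / w_ki), predicted)
```

Any other rule with the same forward/backward ratio (for example, Glauber-type rates) would satisfy detailed balance equally well; the Metropolis form is chosen here only for brevity.
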
The time derivative of the entropy per particle is
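\[ \dot{{\mathcal{S}}}=-\sum_{ij}{\dot{\wp }}_{i}^{(j)}\log {\wp }_{i}^{(j)}-\sum_{ij}{\dot{\wp }}_{i}^{(j)}\log \frac{j!}{{c}^{j-1}}. \tag{28} \]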
Using the master Eq. (26) and some straightforward calculations, we end up with the usual second law of thermodynamics
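\[ \dot{{\mathcal{S}}}={\dot{{\mathcal{S}}}}_{i}+\beta \dot{{\mathcal{Q}}},\qquad {\dot{{\mathcal{S}}}}_{i}\ge 0, \tag{29} \]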
where \(\dot{{\mathcal{Q}}}\) is the heat flow per particle and \({\dot{{\mathcal{S}}}}_{i}\) is the nonnegative entropy production per particle, see Supplementary Information.

Let us now consider a stochastic trajectory, x(τ) = (i(τ),j(τ)), denoting that at time τ, the particle is in state \({x}_{i(\tau )}^{(j(\tau ))}\). We introduce the time-dependent protocol, l(τ), that controls the energy spectrum of the system. The stochastic energy for trajectory x(τ) and protocol l(τ) can be expressed as \(\epsilon (\tau )\equiv {\epsilon }_{i(\tau )}^{(j(\tau ))}(l(\tau ))\). We assume microreversibility, which implies that detailed balance holds even when the energy spectrum is time-dependent (due to protocol l(τ)). We define the stochastic entropy as
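\[ s({\bf{x}}(\tau ))=-\log {\wp }_{i(\tau )}^{(j(\tau ))}(\tau )-\log \frac{j(\tau )!}{{c}^{j(\tau )-1}}+1. \tag{30} \]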
In Supplementary Information, we show that \(\dot{s}({\bf{x}}(\tau ))={\dot{s}}_{i}({\bf{x}}(\tau ))+{\dot{s}}_{e}({\bf{x}}(\tau ))\), where \({\dot{s}}_{i}\) is the stochastic entropy production rate and \({\dot{s}}_{e}\) is the entropy flow, equal to \(\dot{q}/T\), with \(\dot{q}\) the heat flow.

The time-reversed trajectory is \(\tilde{{\bf{x}}}(\tau )=(i(T-\tau ),j(T-\tau ))\), and the time-reversed protocol is \(\tilde{l}(\tau )=l(T-\tau )\). The log-ratio of the probability, \({\mathcal{P}}\), of a forward trajectory and the probability, \(\tilde{{\mathcal{P}}}\), of the time-reversed trajectory under the time-reversed protocol is equal to \({{\Delta }}\sigma ={{\Delta }}{s}_{i}+{\mathrm{log}}\,\frac{{j}_{0}}{{\tilde{j}}_{0}}\), where j0 = j(τ = 0) and \({\widetilde{j}}_{0}=\widetilde{j}(\tau =0)\), see Supplementary Information. Hence, \({\mathrm{log}}\,\frac{{\mathcal{P}}({\bf{x}}(\tau ))}{\tilde{{\mathcal{P}}}(\tilde{{\bf{x}}}(\tau ))}={{\Delta }}\sigma\), which leads to the fluctuation theorem49
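\[ \frac{{\mathcal{P}}({{\Delta }}\sigma )}{\tilde{{\mathcal{P}}}(-{{\Delta }}\sigma )}={e}^{{{\Delta }}\sigma }. \tag{31} \]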
Assuming that the initial state is an equilibrium state, introducing the stochastic free energy, f(τ) = ϵ(τ) − Ts(τ), and combining the first and the second law of thermodynamics, we get Δsi = β(w − Δf). The stochastic free energy of an equilibrium state is \(f({\hat{\wp }}_{i}^{(j)})=-j\frac{\alpha }{\beta }-\frac{1}{\beta }\), see Supplementary Information.

If we start in an equilibrium distribution with j(τ = 0) = j0 and the reverse experiment also starts in an equilibrium distribution with \(\tilde{j}(\tau =0)={\tilde{j}}_{0}\), by plugging this into Eq. (31) and a simple manipulation, we have

where Φj is the partial free energy Eq. (21). Finally, by a straightforward calculation, we obtain Crooks’ fluctuation theorem49,50

where \({{\Delta }}{{{\Phi }}}_{j}={{{\Phi }}}_{{\tilde{j}}_{0}}(\tilde{l}(0))-{{{\Phi }}}_{{j}_{0}}(l(0))\). For technical details, see Supplementary Information.

Discussion

We presented a straightforward way to establish the thermodynamics of structure-forming systems (e.g., molecules made from atoms or clusters of colloidal particles) based on the canonical ensemble with a modified entropy that is obtained by the proper counting of the system’s configurations. The approach is an alternative to the grand-canonical ensemble that yields identical results for large systems. However, there are significant deviations that might have important consequences for small systems, where the interaction range becomes comparable with system size. Note that our results are valid for large systems (in the thermodynamic limit) as well as small systems at nanoscales. We showed that fundamental relations such as the second law of thermodynamics and fluctuation theorems remain valid for structure-forming systems. In addition, we demonstrated that the choice of a proper entropic functional has profound physical consequences. It determines, for example, the order of phase transitions in spin models.

We note that we follow reasoning similar to that used for Shannon’s entropy: the formula now known as Shannon’s entropy was originally derived by Gibbs in the thermodynamic limit using a frequentist approach to statistics (probability is given by a large number of repetitions). However, once the formula had been derived, its validity was extended beyond the thermodynamic limit, which corresponds to the Bayesian approach. It has been shown, e.g., by methods of stochastic thermodynamics, that the formula for Shannon’s entropy and the laws of thermodynamics remain valid for systems of arbitrary size (with the exception of systems with quantum corrections) and arbitrarily far from equilibrium47. In this paper, we follow the same type of reasoning for structure-forming systems.

Typical examples where our results apply are chemical reactions at small scales, the self-assembly of colloidal particles, active matter, and nanoparticles. The presented results might also be of direct use for chemical nanomotors51 and nonequilibrium self-assembly35. A natural question is how the framework can be extended to the well-known statistical physics of chemical reactions23,24,25,26 where systems are composed of more than one type of atom.

Data availability

Source Data are provided with this paper. All relevant data are available at: https://github.com/complexity-science-hub/Thermodynamics-of-structure-forming-systems.

References

Thurner, S., Klimek, P. & Hanel, R. Introduction to the Theory of Complex Systems (Oxford University Press, 2018).

Hanel, R. & Thurner, S. A comprehensive classification of complex statistical systems and an axiomatic derivation of their entropy and distribution functions. Europhys. Lett. 93, 20006 (2011).

Hanel, R. & Thurner, S. When do generalized entropies apply? How phase space volume determines entropy. Europhys. Lett. 96, 50003 (2011).

Hanel, R., Thurner, S. & Gell-Mann, M. How multiplicity determines entropy and the derivation of the maximum entropy principle for complex systems. Proc. Natl Acad. Sci. USA 111, 6905 (2014).

Korbel, J., Hanel, R. & Thurner, S. Classification of complex systems by their sample-space scaling exponents. New J. Phys. 20, 093007 (2018).

Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 52, 479 (1988).

Rajagopal, A. K. Dynamic linear response theory for a nonextensive system based on the Tsallis prescription. Phys. Rev. Lett. 76, 3469 (1996).

Kaniadakis, G. Statistical mechanics in the context of special relativity. Phys. Rev. E 66, 056125 (2002).

Jizba, P. & Arimitsu, T. The world according to Rényi: thermodynamics of multifractal systems. Ann. Phys. 312, 17 (2004).

Anteneodo, C. & Plastino, A. R. Maximum entropy approach to stretched exponential probability distributions. J. Phys. A 32, 1089 (1999).

Lutz, E. & Renzoni, F. Beyond Boltzmann-Gibbs statistical mechanics in optical lattices. Nat. Phys. 9, 615–619 (2013).

Dechant, A., Kessler, D. A. & Barkai, E. Deviations from Boltzmann-Gibbs statistics in confined optical lattices. Phys. Rev. Lett. 115, 173006 (2015).

Jizba, P. & Korbel, J. Maximum entropy principle in statistical inference: case for non-Shannonian entropies. Phys. Rev. Lett. 122, 120601 (2019).

Jizba, P. & Korbel, J. When Shannon and Khinchin meet Shore and Johnson: equivalence of information theory and statistical inference axiomatics. Phys. Rev. E 101, 042126 (2020).

Jensen, H. J., Pazuki, R. H., Pruessner, G. & Tempesta, P. Statistical mechanics of exploding phase spaces: ontic open systems. J. Phys. A 51, 375002 (2018).

Latora, V., Nicosia, V. & Russo, G. Complex Networks: Principles, Methods and Applications (Cambridge University Press, 2017).

Squartini, T., de Mol, J., den Hollander, F. & Garlaschelli, D. Breaking of ensemble equivalence in networks. Phys. Rev. Lett. 115, 268701 (2015).

Berge, C. Graphs and Hypergraphs (North-Holland Mathematical Library, 1973).

Temkin, O. N., Zeigarnik, A. V. & Bonchev, D. G. Chemical Reaction Networks: a Graph-Theoretical Approach (CRC Press, 1996).

Flamm, C., Stadler, B. M. R. & Stadler, P. F. Generalized topologies: hypergraphs, chemical reactions, and biological evolution. In Advances in Mathematical Chemistry and Applications, 300–328 (Bentham Science Publishers, 2015).

Smit, B. & Frenkel, D. Explicit expression for finite size corrections to the chemical potential. J. Phys.: Condens. Matter 1, 8659 (1989).

Siepmann, J. I., McDonald, I. R. & Frenkel, D. Finite-size corrections to the chemical potential. J. Phys.: Condens. Matter 4, 679 (1992).

Chandler, D. & Pratt, L. R. Statistical mechanics of chemical equilibria and intramolecular structures of nonrigid molecules in condensed phases. J. Chem. Phys. 65, 2925–2940 (1976).

Kreuzer, H. J. Nonequilibrium Thermodynamics and its Statistical Foundations (Clarendon Press, 1981).

Cummings, P. T. & Stell, G. Statistical mechanical models of chemical reactions: analytic solution of models of A+B⇌AB in the Percus-Yevick approximation. Mol. Phys. 51, 253–287 (1984).

Schmiedl, T. & Seifert, U. Stochastic thermodynamics of chemical reaction networks. J. Chem. Phys. 126, 044101 (2007).

Likos, C. N., Sciortino, F., Zaccarelli, E. & Ziherl, P. Soft matter self-assembly. In Proc. International School of Physics “Enrico Fermi” 193 (IOS Press, 2016).

Israelachvili, J. N., Mitchell, D. J. & Ninham, B. W. Theory of self-assembly of lipid bilayers and vesicles. Biochim. Biophys. Acta 470, 185–201 (1977).

Aranson, I. S. & Tsimring, L. S. Theory of self-assembly of microtubules and motors. Phys. Rev. E 74, 031915 (2006).

Walther, A. & Müller, A. H. E. Janus particles: synthesis, self-assembly, physical properties, and applications. Chem. Rev. 113, 5194–5261 (2013).

Grabow, W. W. & Jaeger, L. RNA self-assembly and RNA nanotechnology. Acc. Chem. Res. 47, 1871–1880 (2014).

Fantoni, R., Giacometti, A., Sciortino, F. & Pastore, G. Cluster theory of Janus particles. Soft Matter 7, 2419–2427 (2011).

Nguyen, M. & Vaikuntanathan, S. Design principles for nonequilibrium self-assembly. Proc. Natl Acad. Sci. USA 113, 14231–14236 (2016).

Bisker, G. & England, J. L. Nonequilibrium associative retrieval of multiple stored self-assembly targets. Proc. Natl Acad. Sci. USA 115, E10531–E10538 (2018).

Arango-Restrepo, A., Barragán, D. & Rubi, J. M. Self-assembling outside equilibrium: emergence of structures mediated by dissipation. Phys. Chem. Chem. Phys. 21, 17475–17493 (2019).

Boltzmann, L. Über das Arbeitsquantum, welches bei chemischen Verbindungen gewonnen werden kann. Annalen der Physik 258, 39–72 (1884).

Lieb, E. H. & Yngvason, J. The physics and mathematics of the second law of thermodynamics. Phys. Rep. 310, 1–96 (1999).

Vissers, T., Smallenburg, F., Munaò, G., Preisler, Z. & Sciortino, F. Cooperative polymerization of one-patch colloids. J. Chem. Phys. 140, 144902 (2014).

Preisler, Z., Vissers, T., Munaò, G., Smallenburg, F. & Sciortino, F. Equilibrium phases of one-patch colloids with short-range attractions. Soft Matter 10, 5121–5128 (2014).

Kern, N. & Frenkel, D. Fluid-fluid coexistence in colloidal systems with short-ranged strongly directional attraction. J. Chem. Phys. 118, 9882–9889 (2003).

Rovigatti, L., Russo, J. & Romano, F. How to simulate patchy particles. Eur. Phys. J. E 41, 59 (2018).

Pfister, R., Schwarz, K., Janczyk, M., Dale, R. & Freeman, J. Good things peak in pairs: a note on the bimodality coefficient. Front. Psychol. 4, 700 (2013).

Griffiths, R. B., Weng, C.-Y. & Langer, J. S. Relaxation times for metastable states in the mean-field model of a ferromagnet. Phys. Rev. 149, 301 (1966).

Botet, R., Jullien, R. & Pfeuty, P. Size scaling for infinitely coordinated systems. Phys. Rev. Lett. 49, 478 (1982).

Gulbahce, N., Gould, H. & Klein, W. Zeros of the partition function and pseudospinodals in long-range Ising models. Phys. Rev. E 69, 036119 (2004).

Colonna-Romano, L., Gould, H. & Klein, W. Anomalous mean-field behavior of the fully connected Ising model. Phys. Rev. E 90, 042111 (2014).

Seifert, U. Stochastic thermodynamics: principles and perspectives. Eur. Phys. J. B 64, 423–431 (2008).

Esposito, M. & Van den Broeck, C. The three faces of the second law: I. Master equation formulation. Phys. Rev. E 82, 011143 (2010).

Esposito, M. & Van den Broeck, C. Three detailed fluctuation theorems. Phys. Rev. Lett. 104, 090601 (2010).

Crooks, G. E. Entropy production fluctuation theorem and the nonequilibrium work relation for free-energy differences. Phys. Rev. E 60, 2721 (1999).

Kagan, D. et al. Chemical sensing based on catalytic nanomotors: motion-based detection of trace silver. J. Am. Chem. Soc. 131, 12082–12083 (2009).

Acknowledgements

The authors acknowledge support from the Austrian Science Fund projects I 3073 and P 29252 and from the Austrian Research Promotion Agency (FFG) under project 857136. The authors would like to thank Tuan Pham for helpful discussions.

Author information

Contributions

J.K., R.H., and S.T. conceptualized the work, S.D.L. performed the computational work, and all authors contributed to analytic calculations and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Communications thanks Henrik Jensen, Fabien Paillusson and the other, anonymous, reviewer for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Korbel, J., Lindner, S.D., Hanel, R. et al. Thermodynamics of structure-forming systems. Nat Commun 12, 1127 (2021). https://doi.org/10.1038/s41467-021-21272-7
