Abstract

Quantum sensors are highly sensitive because they capitalise on fragile quantum properties such as coherence, while also enabling ultra-high spatial resolution. For sensing, the crux is to minimise the measurement uncertainty over a chosen range within a given time. However, basic quantum sensing protocols cannot simultaneously achieve both a high sensitivity and a large range. Here, we demonstrate a non-adaptive algorithm for alternating-current field sensing that increases this range, in principle without limit, while getting arbitrarily close to the best possible sensitivity. It therefore outperforms the standard measurement concept in both sensitivity and range. We also explore this algorithm thoroughly by simulation, and discuss the \({T}^{-2}\) scaling that it approaches in the coherent regime, as opposed to the \({T}^{-1/2}\) scaling of the standard measurement. The same algorithm can be applied to any modulo-limited sensor.

Introduction

Supreme sensitivities are realisable by exploiting the coherence of quantum sensors1. For quantum-sensing applications, nitrogen-vacancy (NV) centres in diamond have attracted considerable attention due to their exceptional quantum-mechanical properties1,2, including long spin-coherence times3,4, and due to their great potential for far-field optical nanoscopy5,6,7,8. Furthermore, the sensitivity to alternating current (AC) fields can be increased by prolonging the NV spin coherence through dynamical decoupling of the centre’s spin from its environment2,3,9,10,11,12. Therefore, AC field sensing is applied in various areas of physics, chemistry and biology: to detect single spins13,14,15, for nuclear magnetic resonance of tiny sample volumes16,17,18,19,20, for nanoscale magnetic-resonance imaging13,21,22,23 and to search for new particles beyond the standard model24,25. In these applications, both a wide range of AC field amplitudes and a high sensitivity are very important, because the magnitude of the AC field strongly depends on the distance r from the NV spin (\({r}^{-3}\) in the case of a magnetic dipole field). This outlines the most relevant quantity for this field of research: the dynamic range, which is the ratio of the range to the sensitivity, the latter being a measure of the smallest measurable field amplitude.

In previous research, NV centres were utilised for sensitive high-dynamic-range direct current (DC) magnetic field measurements. A theory paper26 discussed the application of a more general phase-estimation method27 to a single NV nuclear spin in diamond, read out with single-shot measurements. The authors combined Ramsey interferometry on the nuclear spin with different delays to improve the sensitivity via Bayes’ theorem applied to binary data, whose precision, given full visibility, scaled as \({T}_{{\rm{meas}}}^{-1}\) (with Tmeas the measurement time), dubbed Heisenberg-like scaling28. Adaptive27 and non-adaptive28,29 approaches were discussed, but, to their surprise, the authors found that under more realistic circumstances only the non-adaptive method could still show sub-\({T}_{{\rm{meas}}}^{-0.5}\) scaling, by varying the number of iterations linearly29. The range itself remained the same as with the standard measurement, but the sensitivity for this range improved, hence improving the dynamic range. This theory was applied to the electron spin30 and the nuclear spin31 of NV centres via the non-adaptive method. Indeed, the uncertainty scaled sub-\({T}_{{\rm{meas}}}^{-0.5}\) (\({T}_{{\rm{meas}}}^{-0.77}\) in ref. 30 and \({T}_{{\rm{meas}}}^{-0.85}\) in ref. 31), while the dynamic range improved by factors of 8.5 (ref. 30) and 7.4 (ref. 31). More recently, in an experiment at low temperature, the adaptive method showed improved results, with scaling close to \({T}_{{\rm{meas}}}^{-1}\) and a claimed improvement (compared with refs. 30,31) of the dynamic range by two orders of magnitude32.

A similar method for AC magnetic field sensing applied dynamical-decoupling sequences of different orders33. It improved the dynamic range by a factor of about 26 compared with a sequence of 16 π-pulses, and the effect of the phase of the measured field was explored in depth. In addition, one of the advantages of the previously reported dynamical sensitivity control11 was a 4000-fold increase in the range, with a theoretical maximum of 5000-fold. Its uncertainty for a single measurement was about double that of a similar standard measurement, while the multiple measurements required for the large range worsened the sensitivity (the uncertainty times \(\sqrt{{T}_{{\rm{meas}}}}\)) further by a factor \(\sqrt{{N}_{\phi }}\), with Nϕ the number of phases applied in that method (the more phases, the larger the range, but each phase requires an additional measurement).

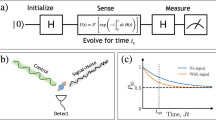

To see why algorithms that increase the dynamic range are required, we look at the standard measurement. In the standard method to measure an AC magnetic field with NV centres, a synchronised Hahn-echo measurement2,3,9,10 (Fig. 1b), the spin is initialised with a laser pulse and brought into a superposition state with a first microwave (MW) π/2-pulse, after which the AC magnetic field is applied. The spin then precesses about the z-axis, so its phase changes. Halfway through the period of the magnetic field, a MW π-pulse flips the spin, so that the phase accumulated during the negative half of the period adds to, rather than cancels, the phase acquired during the positive half, doubling the total. A final MW π/2-pulse essentially converts this phase into a population before read-out with a laser pulse. The larger the amplitude of the field, the further the spin rotates; thus the final phase of the spin relates directly to this amplitude.
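To make the phase bookkeeping concrete, the Python sketch below integrates the phase accumulated during one period of a field B(t) = B sin(2πt/T), modelling the π-pulse at t = T/2 as a sign flip of all later phase. It assumes ideal, instantaneous pulses and uses the free-electron gyromagnetic ratio (about 2π × 28 GHz/T) for the NV electron spin; the function names are illustrative only. Analytically the phase is φ = 2γB/(πf), so it wraps by 2π every B_period = π²f/γ.

```python
import math

# Assumed electron gyromagnetic ratio in rad s^-1 T^-1 (approximately 2*pi*28 GHz/T).
GAMMA_E = 2 * math.pi * 28.0e9


def hahn_echo_phase(b_amp: float, freq: float, steps: int = 100_000) -> float:
    """Phase (rad) accumulated under B(t) = b_amp*sin(2*pi*freq*t) over one period,
    with an ideal pi-pulse at half the period (modelled as a sign flip)."""
    period = 1.0 / freq
    dt = period / steps
    phase = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt  # midpoint rule
        sign = 1.0 if t < period / 2 else -1.0  # echo flip at the zero crossing
        phase += sign * GAMMA_E * b_amp * math.sin(2 * math.pi * freq * t) * dt
    return phase


def field_period(freq: float) -> float:
    """Amplitude after which the phase has wrapped by 2*pi.

    From phase = 2*GAMMA_E*b_amp/(pi*freq): B_period = pi**2 * freq / GAMMA_E.
    """
    return math.pi ** 2 * freq / GAMMA_E
```

Because the read-out depends on φ only through a sinusoid, the signal repeats every B_period, which is the origin of the limited range of the standard measurement.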

Fig. 1: a Since the measured signal (red dotted line) oscillates due to the rotating spin, the magnetic field B can be determined only up to a certain range. Example ranges are indicated by different colours, and for each range the magnetic field resulting from the measured signal (horizontal black dashed line) is marked with a blue cross. b The conventional and most sensitive way to measure an AC field is shown at the top left, with the π/2-pulses of a Hahn-echo sequence at the beginning and end of the period, and the π-pulse halfway, at the inflection point. The measured area A0 can be reduced by moving the π/2-pulses closer to the centre (top right A0/2, bottom left A0/4, bottom right A0/8). c The fraction of the maximum area A0 vs the fraction of the longest time delay between the π/2-pulses for DC (green dotted line) and AC (cyan line) fields. For DC, this relation is linear, while for AC it depends on the area under a sinusoid, which resembles a line near the inflection point, so the relation becomes quadratic for short time delays. The area, and thus the uncertainty and range, can be changed continuously by changing this delay. d For smaller measured areas, the probed field decreases proportionally, as does the effective frequency. Whereas a shows the signal for area A0, here the signals for areas A0/2 and A0/4 are drawn in similar fashion, offset for clarity. Combining several measured areas reduces the number of potential fields (vertical grey dashed arrows), thus increasing the overall range. e After measuring the signal (horizontal green dotted line), with the known uncertainty of the signal (green line along the vertical axis), the probability distribution of the field (magenta line along the horizontal axis) follows via the sinusoidal relationship (red dotted sine-shaped line for area A0/2). f The measurement with the largest area A0 gives several similar peaks in the probability distribution (blue line).
However, when combining measurements with different areas (magenta dashed line: A0/2 added; olive dotted line: A0/4 added as well), the number of remaining peaks decreases, while their sharpness (and thus the uncertainty) remains similar to that of the first measurement.

However, the phase of the spin can be determined only modulo 2π at best, so the range of amplitudes is limited. If the sensor is more sensitive, the spin accumulates more phase, so it completes a full 2π revolution already at a smaller AC field amplitude. Therefore, the more sensitive the system, the smaller its range. Thus, to benefit from extremely sensitive sensors which utilise entanglement34,35,36 without being restricted by their minuscule range, it is important to increase this range while retaining their high sensitivity (and thus low uncertainty) as much as possible. Moreover, since measurements of the electron spin of a single NV centre consist of iterating a sequence many times to accumulate sufficient signal (photons for NV centres), the uncertainty scales as \({T}_{{\rm{meas}}}^{-0.5}\) 37.

In this work, we demonstrate and explore, both by measurement and by extensive simulation, a non-adaptive algorithm for quantum sensors to measure AC fields over a large range with a negligible loss in sensitivity (thus maximising the dynamic range). We show that our algorithm scales nearly Heisenberg-like (here \({T}_{{\rm{meas}}}^{-2}\)) under realistic circumstances, that is, even with the reduced contrast of the spin read-out (normally about 30% for NV centres); we explain why this happens, and why it matters. Finally, we establish how our algorithm can increase the range beyond the limit given by the best possible standard measurement, which in principle allows the range to be extended without bound. Throughout this paper, we use the electron spin of a single NV centre to measure magnetic fields via the phase of the spin coherence. However, the insights of this paper apply equally to similar quantum systems.

Results

Base algorithm

We start by explaining the base of our algorithm (illustrated in Fig. 1) and clarifying the terms used throughout the paper and the supplementary information. The standard measurement for AC magnetic fields, applying the Hahn-echo sequence, has a limited range Brange = Bperiod/2 due to the sinusoidal shape (with period Bperiod) of the signal response to the magnetic field amplitude (Fig. 1a). The sensitivity is defined as \({\sigma }_{B}\sqrt{{T}_{{\rm{meas}}}}\), with σB the uncertainty of the sensed quantity (here the magnetic field amplitude) and Tmeas the measurement time. For this standard measurement, \({\sigma }_{B}={\sigma }_{S}/{{\rm{grad}}}_{\max }\), where σS is the uncertainty in the measured signal of a single measurement (in our case shot-noise limited) and \({{\rm{grad}}}_{\max }\) is the maximum gradient of the response3 (for example at the inflection point of the sinusoid in Fig. 1a). Therefore, for these measurements, the shorter Bperiod (and thus the smaller the range), the steeper the slope and the more sensitive the measurement, as mentioned earlier.
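As a minimal numerical check of \({\sigma }_{B}={\sigma }_{S}/{{\rm{grad}}}_{\max }\), the sketch below assumes an idealised normalised read-out S(B) = [1 + C cos(2πB/Bperiod)]/2 with contrast C = 0.3 (the typical NV value quoted in the Introduction), finds the maximum gradient numerically on a grid and divides σS by it. Fields are in nT; the signal model, the numbers and the function names are illustrative assumptions, not the measured NV response.

```python
import math


def signal(b: float, b_period: float, contrast: float = 0.3) -> float:
    """Assumed normalised Hahn-echo read-out: sinusoidal in b with period b_period."""
    return 0.5 * (1.0 + contrast * math.cos(2 * math.pi * b / b_period))


def max_gradient(b_period: float, contrast: float = 0.3, n: int = 10_000) -> float:
    """Largest |dS/dB| on a grid over one period, using central differences."""
    h = b_period * 1e-6
    return max(
        abs(signal(b + h, b_period, contrast) - signal(b - h, b_period, contrast)) / (2 * h)
        for b in (k * b_period / n for k in range(n))
    )


def sigma_b(sigma_s: float, b_period: float, contrast: float = 0.3) -> float:
    """Standard-measurement uncertainty: sigma_S divided by the steepest slope."""
    return sigma_s / max_gradient(b_period, contrast)
```

In this model grad_max = πC/Bperiod, so halving Bperiod halves σB: the measurement becomes more sensitive exactly as its range shrinks.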

For the maximum sensitivity, a standard Hahn-echo sequence is performed over the full period of the magnetic field (Fig. 1b; for single NV centres this period should be shorter than about half the coherence time3). Since the acquired phase of the spin is proportional to the area under the magnetic field curve (see Supplementary Information of ref. 3), Bperiod is inversely proportional to this area, so reducing the measured area M times increases the effective period M times (Fig. 1a, d). The time delay between the π/2-pulses in the sequence follows from integrating the field to compute the probed area (Fig. 1c). Hereafter, measuring an area A means applying a sequence with this calculated time delay, and A0 denotes the maximum area. Measuring with a sufficiently small area would thus be the simplest approach to a large-range measurement. However, compared with the measurement over the maximum area using the same number of iterations of the sequence (so σS is similar) and the same measurement time (no optimisations), the gradient for the reduced area is \({{\rm{grad}}}_{\max ,M}={{\rm{grad}}}_{\max ,1}/M\), so its sensitivity is M times worse.
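The delay-to-area relation of Fig. 1c can be made explicit under one assumption: the field is B sin(2πt/T), the π-pulse sits at the zero crossing t = T/2, and the π/2-pulses are placed symmetrically at T/2 ± τ/2, as drawn in Fig. 1b. Integrating the echo-weighted field then gives an area proportional to (T/π)[1 − cos(πτ/T)], so A/A0 = sin²(πτ/2T), which is quadratic for short delays and reaches 1 at τ = T. This closed form is our own derivation under that assumption, and the Python helpers below (names are illustrative) simply evaluate and invert it.

```python
import math


def ac_area_fraction(delay_fraction: float) -> float:
    """A/A0 for a sine field when the pi/2-pulses are delay_fraction*T apart,
    centred on the pi-pulse at the zero crossing: sin(pi*tau/(2T))**2."""
    return math.sin(math.pi * delay_fraction / 2) ** 2


def ac_delay_for_area(area_fraction: float) -> float:
    """Inverse relation: delay (in units of the field period T) probing area_fraction*A0."""
    return 2 / math.pi * math.asin(math.sqrt(area_fraction))


def dc_delay_for_area(area_fraction: float) -> float:
    """For a DC field the probed area grows linearly with the delay."""
    return area_fraction
```

In this model, halving the area needs half the delay, whereas a quarter of the area already needs one third of it, reflecting the quadratic regime near the inflection point.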

To improve the sensitivity over a large range, a number of measurements with different areas are first combined to uniquely define the magnetic field amplitude within a range limited by the measurement with the smallest area (Fig. 1d). Consequently, only part of the measurement time is spent on the largest area, which has the best sensitivity (but a small range), while the remainder is spent on areas with worse sensitivity. Therefore, the sensitivity of the combined measurement is strictly worse than this best sensitivity. Using halved areas (hence requiring at least \({\mathrm{log}\,}_{2}\left(M\right)\) additional areas), the same number of iterations for each area and no optimisations, the combined sequence reaches roughly the same σB in a measurement time \({T}_{{\rm{meas,}}M}=\lceil 1+{\mathrm{log}\,}_{2}\left(M\right)\rceil {T}_{{\rm{meas}},1}\). Thus, the sensitivity becomes \(\sqrt{\lceil 1+{\mathrm{log}\,}_{2}\left(M\right)\rceil }\) times worse, which is already a significant improvement over the factor M of the straightforward approach in the previous paragraph.
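The rough estimate above reduces to a few lines of arithmetic. This sketch assumes, as the paragraph does, halved areas, equal iterations per area and no overhead; the function names are illustrative.

```python
import math


def n_areas(m: int) -> int:
    """Halved areas A0, A0/2, ... needed to reach A0/m: ceil(1 + log2(m))."""
    return math.ceil(1 + math.log2(m))


def penalty_single_small_area(m: int) -> float:
    """Sensitivity penalty when only the reduced area A0/m is measured."""
    return float(m)


def penalty_combined(m: int) -> float:
    """Penalty when all halved areas are measured with equal iterations."""
    return math.sqrt(n_areas(m))
```

For example, a range eight times larger (M = 8) costs a factor 8 in sensitivity with a single small area, but only a factor 2 when the four halved areas are combined.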

The measurements resulting from different areas are combined via Bayes’ theorem. For area An, the measurement gives signal Sn (for example the crosses/circles/triangles on the sinusoids in Fig. 1a, d for three areas). The posterior probability distribution for the magnetic field B given the measured signal Sn is

\[P\left(B| {S}_{n}\right)=\frac{P\left({S}_{n}| B\right)P\left(B\right)}{P\left({S}_{n}\right)},\]

with \(P\left(B\right)\) the prior distribution, \(P\left({S}_{n}\right)\) independent of B, and

\[P\left({S}_{n}| B\right)=P\left({S}_{n}| S\left(B\right)\right),\]

with \(S\left(B\right)\) the relation between the signal S and the applied field B (the sinusoids in Fig. 1a, d, e). Fig. 1e visualises these equations. \(P\left({S}_{n}| S\right)\) is a Poisson distribution (counting photons), but it can be approximated by a normal distribution (green line along y-axis in Fig. 1e) when more than ~10 photons arrive (with continuity correction). This is generally the case when the uncertainty is below the maximum uncertainty, as described later. For the first measurement, the prior distribution is flat since there is no initial knowledge about the field, and for the remainder of the measurements, the previous posterior is the new prior distribution. This results in a combined distribution as demonstrated in Fig. 1f.

Uncertainty

Before performing measurements and simulating the algorithm, we need a figure of merit that captures both the sensitivity and the range. We therefore choose the uncertainty in the magnetic field σB, defined as the standard deviation of the magnetic-field distribution centred around its maximum value. For sufficiently long measurement times, this gives the same result as the usual formula for the standard deviation. For short measurement times, however, the difference is visible, since this definition takes the range into account: we know that the magnetic field lies within the given range, which means that if the probability distribution is flat, the uncertainty is at its maximum \({\sigma }_{B,\max }={B}_{{\rm{range}}}/\sqrt{12}\) (see Supplementary Note 1). σB multiplied by \(\sqrt{{T}_{{\rm{meas}}}}\) gives the sensitivity, but this is unsuitable as a figure of merit at short measurement times, since it tends to 0 nT Hz\(^{-1/2}\) as Tmeas → 0 s while σB approaches its asymptotic maximum.
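One way to implement this definition on a discretised distribution is sketched below. The circular shift that moves the maximum to the middle of the range is our reading of "centred around its maximum value" and is an assumption, as are the function names. For a flat distribution the result reproduces \({B}_{{\rm{range}}}/\sqrt{12}\).

```python
import math


def centred_sigma(prob, b_range):
    """Standard deviation of a distribution over [0, b_range), after circularly shifting
    it so that its maximum sits in the middle of the range (assumed interpretation).
    prob holds the probabilities of equally spaced bin centres."""
    n = len(prob)
    step = b_range / n
    k_max = max(range(n), key=lambda i: prob[i])
    shift = n // 2 - k_max
    total = sum(prob)
    # Bin-centre position of each bin after the circular shift.
    positions = [((i + shift) % n + 0.5) * step for i in range(n)]
    mean = sum(p * x for p, x in zip(prob, positions)) / total
    var = sum(p * (x - mean) ** 2 for p, x in zip(prob, positions)) / total
    return math.sqrt(var)


def sigma_max(b_range):
    """Uncertainty of a flat distribution over the range."""
    return b_range / math.sqrt(12)
```

For a narrow peak that straddles the edge of the range, the centring keeps σB small, whereas a plain standard deviation over [0, Brange) would be inflated by the wrap-around.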

First, since the uncertainty over a range is limited by the worst uncertainty within it, we simulated the homogeneity of the uncertainty across the complete range. For a standard measurement, the usually reported uncertainty (\({\sigma }_{B}={\sigma }_{S}/{{\rm{grad}}}_{\max }\)) holds only for a single magnetic field amplitude at infinite measurement time; otherwise it is worse and inhomogeneous. Combining two measurements with the same area but with responses shifted by a phase of π/2 makes the uncertainty more homogeneous and guarantees a lower worst-case uncertainty than the standard measurement across its range. Such a measurement thus consists of two phases (see for example Supplementary Fig. 7a). Increasing the number of phases improves the homogeneity further; four phases are used throughout this paper. Supplementary Note 2 describes the homogeneity of our algorithm in detail, and ref. 38 explores it for previous algorithms. Since the uncertainty is nearly homogeneous, the value of the applied field is irrelevant when determining this uncertainty. Without prior knowledge or feedback, the uncertainty is ultimately limited by this combination of four phases for the largest possible area3.
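The effect of the extra phases can be illustrated with a linearised (Fisher-information) estimate of the local uncertainty. The sketch assumes a sinusoidal signal with contrast 0.3, Gaussian noise, and a fixed budget split equally over the phases, so a phase receiving 1/Nϕ of the iterations has its σS inflated by \(\sqrt{{N}_{\phi }}\). These modelling choices and all names are illustrative assumptions, not the simulation of Supplementary Note 2.

```python
import math


def local_sigma_b(b, phases, b_period=1.0, contrast=0.3, sigma_s_total=0.01):
    """Linearised uncertainty at field b with the budget split equally over phases."""
    k = 2 * math.pi / b_period
    sigma_s = sigma_s_total * math.sqrt(len(phases))  # fewer iterations per phase
    info = 0.0
    for phase in phases:
        grad = 0.5 * contrast * k * math.sin(k * b + phase)  # dS/dB for this phase
        info += (grad / sigma_s) ** 2
    return math.inf if info == 0.0 else 1.0 / math.sqrt(info)


def best_single_phase_sigma(b_period=1.0, contrast=0.3, sigma_s_total=0.01):
    """sigma_S / grad_max: the usual figure, valid only at the optimal field."""
    return sigma_s_total / (0.5 * contrast * 2 * math.pi / b_period)
```

With a single phase the uncertainty diverges wherever the gradient vanishes; with two or four phases it is flat across the range, at \(\sqrt{2}\) times σS/gradmax in this idealised model, consistent with the factor of about \(\sqrt{2}\) discussed later for the homogeneous limit.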

Measurement compared with simulation

For our measurements, we use an n-type diamond sample, epitaxially grown on an Ib-type (111)-oriented diamond substrate by microwave plasma-assisted chemical-vapour deposition with isotopically enriched \({}^{12}\)C (99.998%) and a phosphorus concentration of ~\(6\times {10}^{16}\) atoms cm\(^{-3}\) (refs. 3,39). We address individual electron spins of NV centres with a standard in-house-built confocal microscope. MW pulses are applied via a thin copper wire, while magnetic fields are induced with a coil around the sample. All experiments are conducted at room temperature. We use single NV centres with coherence times T2 of about 2 ms.

We measure and simulate σB for five sequences to show the consistency between measurements and simulations, and to illustrate how the base of our algorithm works. Note that the only difference between our measurements and simulations is that the simulations compute the signal, instead of measuring it, from the known sequence, the set magnetic field amplitude and the parameters of the measured NV centre. The analysis is otherwise exactly the same, so realistic circumstances are simulated (small contrast, shot noise as described in Supplementary Note 2, and decay due to the coherence time T2). The first sequence measures the largest area (here a single period of the field); the second, third and fourth use half, a quarter and an eighth of the largest area; and the fifth includes these four sequences equally in a separate measurement/simulation. All include four phases, as described in the previous subsection. Initially, the objective is to investigate the details of the algorithm itself; hence, to avoid artefacts stemming from overhead times (which are implementation-dependent and could include laser pulses, MW pulses and waiting times), these are ignored at first and addressed in the Discussion.

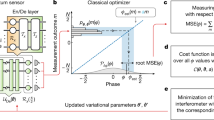

The results are shown in Fig. 2a, which reveals a number of important points. Firstly, the measurements closely match the simulations. Secondly, below a certain measurement time, no knowledge about the field is gained, and hence σB is at its maximum. Thirdly, for longer measurement times, σB scales as \({T}_{{\rm{meas}}}^{-0.5}\). Fourthly, for the combined sequence there is a region in Tmeas where σB scales more steeply (here referred to as the steep region). Finally, as explained in the base-algorithm subsection, the uncertainty of the combined sequence is always higher than that of the largest-area sequence, since the former spends measurement time on sequences other than the largest-area sequence, which has the lowest uncertainty. The advantage of the combined sequence over the largest-area sequence is, of course, its larger range (recall that \({B}_{{\rm{range}}}\propto {\sigma }_{B,\max }\), see Supplementary Note 1).

Fig. 2: a Example uncertainty σB vs measurement time Tmeas for AC sensing (1 kHz), comparing simulations (blue dashed line for the largest area A0; magenta, olive and grey dashed lines for A0/2, A0/4 and A0/8; green line for the equally combined sequence with these areas) with measurements (blue crosses, magenta circles, olive triangles, grey diamonds and green pluses, respectively; error bars indicate single standard deviations). The simulations use the same parameters and analysis as the measurements; they are not fits. b Optimised uncertainty vs measurement time for AC sensing (2 kHz) around the steep region. Green pluses give the measurement results; the error bars are single standard deviations. Blue triangles are the simulation results. Red circles display the estimated large-range limit. Cyan diamonds plot the Heisenberg limit for infinite T2 (see Supplementary Note 3 for details). The diagonal black dashed lines are guides to the eye for scaling \({T}_{{\rm{meas}}}^{-2}\) and \({T}_{{\rm{meas}}}^{-1.6}\). The overhead time is ignored in order to show the effect of the algorithm only. c Sensitivity vs magnetic field range (at 2 kHz). Blue dots with error bars (single standard deviations) give the sensitivity of our algorithm measured at each range, excluding all overhead time. Grey circles plot the sensitivity including all overhead time, assuming a basic compact sequence design (see Supplementary Note 8), while grey pentagons plot the sensitivity assuming each area requires a separate period. The green diamonds plot the sensitivity of the standard measurement (excluding overhead time) extracted from the smallest area of our algorithm. Note that our algorithm goes beyond the range possible with the standard measurement (vertical green dashed line) by combining non-integer-multiple areas (see Supplementary Note 6 for details).
The horizontal magenta line indicates the sensitivity of the most sensitive standard measurement, extracted from the largest area of our algorithm, which therefore has only a single small range (the leftmost: ~102 nT). The horizontal black dashed line (on top of the magenta line) gives the fitted sensitivity of our algorithm.

Algorithm design

To design our eventual algorithm, we explore its principle in more detail with additional simulations. Fig. 3a shows the effect of varying the relative number of iterations for each area, which reveals a trade-off between the lowest uncertainty reached in the steep region and that reached at long measurement times. In other words, depending on Tmeas, a different relative number of iterations gives the lowest uncertainty. When these are fixed (Figs. 2a and 3a), the uncertainty is not optimised, and the curves can become very steep; they can even be tuned to scale more steeply than Heisenberg-like (for example \({T}_{{\rm{meas}}}^{-4.0}\) in Fig. 3a).

Fig. 3: a The uncertainty σB vs measurement time Tmeas changes for different relative numbers of iterations (written directly left of each curve) of the subsequences of areas A. Depending on the measurement time, a different combination gives the lowest uncertainty. b Minimised uncertainty for a large-range sequence obtained by optimally combining the subsequences (red line). The dashed lines give the uncertainty for single-area sequences (A0 blue, A0/2 magenta, A0/4 olive, A0/8 grey). See Supplementary Note 1 for maximum uncertainty ∝ range. c The relative number of iterations for each area (A0 blue crosses, A0/2 magenta circles, A0/4 olive triangles, A0/8 grey diamonds, kept at 100) that minimises the uncertainty at each measurement time, resulting in the red line in b. The vertical arrows indicate when the subsequence for each area turns on, i.e. when its relative number of iterations becomes significant. The green dashed line gives the relative difference between the most sensitive small-range sequence (blue dashed line in b) and the optimally combined large-range sequence. This difference scales inversely with the measurement time. d The turning-on points (yellow crosses, fitted with the black dotted line) for many sequences with different areas (largest area blue line, smallest area red line) scale as \({T}_{{\rm{meas}}}^{-2}\) for short measurement times. Note that the optimally combined result in b scales as \({T}_{{\rm{meas}}}^{-0.98}\), since it includes only relatively large areas, equivalent to the lowest lines in this plot.

For our algorithm, we optimise the relative number of iterations at each measurement time to minimise the uncertainty. The result for this measurement-time-wise optimisation is plotted in Fig. 3b. This shows that the longer Tmeas, the closer the sensitivity gets to its ultimate limit, where the scaling approaches \({T}_{{\rm{meas}}}^{-0.5}\). At the steep region of this optimum, the scaling is \({T}_{{\rm{meas}}}^{-0.98}\).

When we look at Fig. 3c, which depicts the relative number of iterations, we can understand how our algorithm works. For very short Tmeas, all measurement time is allotted to the smallest area, since the larger areas are at their maximum uncertainty and hence cannot contribute. But for longer Tmeas, at some time the next area becomes relevant and thus turns on, since it can receive sufficient measurement time to lower σB below its maximum uncertainty. This continues until the largest area turns on, which then keeps increasing in relative importance, at which point the scaling of the uncertainty is about \({T}_{{\rm{meas}}}^{-0.5}\). Thus for longer Tmeas, the largest area receives increasingly more relative measurement time, meaning the uncertainty continuously approaches this ultimate uncertainty, as plotted by the green dashed line in Fig. 3c.

If we increase the number of areas in the sequence, the uncertainty becomes steeper during the turning-on region (which is the steep region). Figure 3d plots the result for a large number of areas, indicating that the uncertainty scales as \({T}_{{\rm{meas}}}^{-2}\) until close to the largest area. The scaling follows from the quadratic dependence of the area on the subsequence length (see Fig. 1c). Closer to the largest area, this dependence is no longer quadratic, so the scaling becomes less steep (lowest yellow crosses in Fig. 3d). The decay in coherence due to the finite T2 also negatively affects the uncertainty in this region, further decreasing the steepness. Analogously, for DC measurements, where the area is linear in the subsequence length, the uncertainty scales as \({T}_{{\rm{meas}}}^{-1}\) in the steep region. Supplementary Note 3 discusses the scaling in more detail beyond the indication given here. When any overhead time is taken into account, the effective measurement time decreases, so the curves would become even steeper.
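The origin of the \({T}_{{\rm{meas}}}^{-2}\) scaling can be checked with a toy model of the turning-on points. Assumptions (ours, for illustration only): for an area a (fraction of A0), both the single-area uncertainty after N iterations and the maximum uncertainty scale as 1/a, so every area turns on after the same hypothetical number of iterations n_on; the time spent is then n_on times the echo delay, and the uncertainty reached is about σB,max ∝ 1/a. T2 decay and overhead are ignored, and the delay relation assumes the symmetric pulse placement of Fig. 1b.

```python
import math


def ac_delay(area_fraction):
    """Echo delay (units of the field period) probing area_fraction*A0 of a sine field."""
    return 2 / math.pi * math.asin(math.sqrt(area_fraction))


def dc_delay(area_fraction):
    """For a DC field the probed area grows linearly with the delay."""
    return area_fraction


def turn_on_points(areas, delay, n_on=100):
    """Toy (T, sigma) turning-on points: T = n_on*delay(a), sigma ~ sigma_B,max ~ 1/a."""
    return [(n_on * delay(a), 1.0 / a) for a in areas]


def loglog_slope(p, q):
    """Slope of log(sigma) vs log(T) between two (T, sigma) points."""
    return math.log(q[1] / p[1]) / math.log(q[0] / p[0])
```

For small areas this toy model gives a slope of −2 for AC and −1 for DC, matching the quadratic and linear area-delay relations; between the two largest areas (A0/2 and A0) the AC slope flattens to about −1.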

So far in the examples of our algorithm, we used halved areas (\({A}_{n}={A}_{0}/{2}^{n}\) for integer n ≥ 0). Even though the uncertainty is mostly defined by the largest area and the range by the smallest, the intermediate areas are important for reaching the lowest uncertainty (see Fig. 3c: they partake in the optimal combination). Adding more areas at integer multiples of the smallest area decreases the uncertainty, though only slightly (see Supplementary Note 4).

Algorithm measurement

In Fig. 2b, measurement results of our algorithm in the steep region are plotted (for details of the measurement see Supplementary Note 5), together with the Heisenberg limit (which is only true for a small range and infinite T2) and the approximate large-range limit explained in Supplementary Note 3. As mentioned before, and just like in Fig. 3d, the focus is on the scaling that originates from the algorithm, hence all overhead time is ignored. Our algorithm is very close to the limit, as could be expected since at long measurement times most time is spent on the sequence with the largest area. Moreover, our results scale approximately as \({T}_{{\rm{meas}}}^{-1.6}\), which is less steep than the Heisenberg-like scaling of \({T}_{{\rm{meas}}}^{-2}\), since our algorithm keeps approaching this limit.

When merely halving areas in a measurement sequence, its range is defined by the smallest area. Such a sequence therefore improves only the uncertainty with respect to the standard single-area measurement, not the range. Given a limit on the time delay between the π/2-pulses, for example owing to a maximum time resolution or waiting-time requirements, the maximum range is thus restricted. However, the range of our algorithm is the inverse of the greatest common divisor of the frequencies in the measured signals of all included areas (see Supplementary Note 6). For halved areas, all larger areas are integer multiples of the smaller ones, so the greatest common divisor is the lowest frequency, i.e. the one related to the smallest area. To increase the range beyond this limit, we combine areas that are not integer multiples of each other. Purely in terms of range, combining two sequences with slightly different areas increases the range far beyond that of the standard measurement. Thus, in principle, the range can be extended without limit. When the large areas are added as well, it is still possible to get arbitrarily close to the ultimate uncertainty (for details see Supplementary Note 6).
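The greatest-common-divisor rule can be evaluated exactly with rational arithmetic. In this idealised sketch the signal of an area a (fraction of A0) oscillates in B with a frequency proportional to a, so the combined signal repeats with period 1/gcd of these frequencies, here in units of Bperiod of A0. The helper names are illustrative, and pulse imperfections, T2 decay and noise are ignored.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def fraction_gcd(x: Fraction, y: Fraction) -> Fraction:
    """Greatest common divisor of two positive rationals."""
    return Fraction(gcd(x.numerator * y.denominator, y.numerator * x.denominator),
                    x.denominator * y.denominator)


def combined_period(areas):
    """Period in B of the combined signal, in units of B_period of A0.

    Areas must be exact rationals (fractions of A0); irrational ratios never repeat.
    """
    return 1 / reduce(fraction_gcd, (Fraction(a) for a in areas))
```

For halved areas down to A0/8 the period is that of the smallest area (8 in these units). Adding a single area at 1.5, 1.25, 1.1 or 1.05 times the smallest area, the values used for the final four ranges of Fig. 2c, multiplies that period by 2, 4, 10 or 20 in this idealised picture. Use exact rationals such as `Fraction(21, 20)` rather than `Fraction(1.05)`, which would pick up the binary rounding of the float.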

The dynamic range of our algorithm is explored with measurements in Fig. 2c, which plots the sensitivity against the range of the measurement sequence. Initially, for each increase in the range, an additional subsequence with half the smallest area is added. For the final four ranges, however, a single area is added at 1.5, 1.25, 1.1 or 1.05 times the smallest area. To compare fairly with shorter sequences and with other results, the sensitivity is used instead of the uncertainty (to calculate the dynamic range), and the overhead time is still ignored. The sensitivity is computed by combining measurements from both the left and the right side of the designed range (as explained before, given the homogeneity of the uncertainty in our algorithm, the applied magnetic field does not matter). Also plotted are the sensitivity of the standard measurement with the same range (derived from the smallest area of our algorithm) and that of the most sensitive sequence (derived from the largest area of our algorithm), the latter having only a small range (~102 nT). Our algorithm is nearly as sensitive as the most sensitive sequence, and its range can exceed that of a standard measurement. In these measurements, the maximum range was limited only by our equipment and could be increased further.

Discussion

Given a fixed sequence, a subsequence contributes to the result only when the measurement time it receives is sufficiently long to lower the measured uncertainty below its maximum (see Fig. 3c). Therefore, in our algorithm, the optimum sequence for a given measurement time includes only contributing subsequences. In contrast, when subsequences are combined in a fixed way, with the least sensitive subsequence measured most often, the more sensitive subsequences do not contribute at short measurement times, so their measurement time is wasted. This results in a steeper measurement-time dependence, in the same way as overhead time does. This illustrates one conclusion of Supplementary Note 3: a steeper dependence indicates a worse algorithm, since when the measurement time decreases, the uncertainty increases more quickly for a steeper curve.

For our algorithm, if one can choose while measuring for which subsequence to increase the number of iterations, the uncertainty can be minimised for all measurement times (red line in Fig. 3b), since the absolute number of iterations for each subsequence increases monotonically with measurement time (see Supplementary Note 7). Note that which subsequence to increase is known beforehand and does not depend on the measurement results, so this is a non-adaptive method. Moreover, since our algorithm spends most time on the largest area, any overhead time (which is generally independent of the subsequence) is as small as possible relative to the total measurement time. This is visualised in Fig. 2c, which plots the sensitivities both with and without all potential overhead times, illustrating that the overhead is indeed negligible.

Since quantum sensing is generally chosen for its high sensitivity, a sensor is unlikely to be operated at measurement times in the steep region, given the high uncertainty there. Therefore, this region and its scaling are fairly irrelevant: if a short measurement time is desired, fewer subsequences are required, which effectively places the sensor just at the inflection point of the curve (where the scaling starts to be \({T}_{{\rm{meas}}}^{-0.5}\)).

For measurement times beyond the steep region, σB, and thus the sensitivity, are very close to the limit for a homogeneous range. This limit is still about \(\sqrt{2}\) worse than the standard sensitivity for a single field at infinite measurement time (Supplementary Fig. 2). One can get closer to the latter by feeding back intermediate results during the measurement. All but two phases are then dropped, keeping the two phases for which the field to be measured lies at the maximum gradient, since these give the smallest uncertainty. Such an adaptive measurement32 involves a trade-off. Its added complexity includes real-time processing of data, changing the sequence during the measurement and/or setting arbitrary phases instead of only the four available with in-phase-quadrature modulation. Against this stands the gained sensitivity, at best \(\sqrt{2}\) for infinite measurement time, even when ignoring the processing overhead. Moreover, even under these ideal circumstances, the dynamic range is actually \(\sqrt{2}\) worse for standard adaptive measurements than for non-adaptive measurements (see Supplementary Note 2). The dynamic range is the most relevant figure for large-range measurements.
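The classical Fisher information of a two-outcome readout with fringe \(P = (1 + C\cos\phi)/2\) gives one hedged way to see where a factor of \(\sqrt{2}\) can come from. Assume a low contrast \(C\), as with fluorescence-based single-spin readout (an assumption about the readout). Spreading shots evenly over the four in-phase-quadrature phases then yields, on average, half the Fisher information obtained by placing every shot at the maximum-gradient phase. That corresponds to an uncertainty larger by about \(\sqrt{2}\). In this simple model the gap closes as \(C \to 1\), so the sketch illustrates the statement above rather than deriving it. The function names are hypothetical.

```python
import numpy as np


def fisher_per_shot(phase, contrast):
    """Classical Fisher information per shot about `phase` for a two-outcome readout
    with P(bright) = (1 + contrast * cos(phase)) / 2, assuming contrast < 1."""
    p = 0.5 * (1 + contrast * np.cos(phase))
    dp = -0.5 * contrast * np.sin(phase)
    return dp ** 2 / (p * (1 - p))


def quadrature_penalty(field_phase, contrast):
    """Uncertainty ratio between spreading shots evenly over the four quadrature phases
    and putting every shot at the phase of maximum gradient."""
    offsets = np.array([0.0, 0.5, 1.0, 1.5]) * np.pi
    f_avg = fisher_per_shot(field_phase + offsets, contrast).mean()
    f_best = contrast ** 2   # maximum over phase, reached where sin(phase) = +-1
    return np.sqrt(f_best / f_avg)
```

For a contrast of 0.05 the penalty is close to \(\sqrt{2}\) at any field phase. For a contrast of 0.99 it drops to about 1.05.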

An important point ignored so far is how to implement this algorithm for AC fields at all, since the field's shape has to be taken into account. For DC measurements, the area can be reduced simply by shortening the time delay between the π/2 pulses proportionally. For AC fields it is more complicated, as illustrated in Fig. 1b, c. Moreover, it might seem that each iteration requires another period of the magnetic field (resulting in the practical but non-optimal sensitivity plotted with pentagons in Fig. 2c). For DC fields, by contrast, all measurements can be strung together, which keeps the total measurement time short. However, something similar is possible for AC fields, since the DC part cancels, as explained in Supplementary Note 8. In our results, we neglected the effect of this stringing on the total measurement time to focus on the working of the algorithm. Ignoring the overhead time of these stringing effects is justified for two reasons. AC stringing is only slightly less effective than DC stringing, and most of the measurement time is often dedicated to the largest area, which has no stringing disadvantage. Supplementary Note 8 explores this in detail and describes how to design compact measurement sequences (resulting in the practical, closer-to-optimal sensitivity plotted with circles in Fig. 2c). Measurements with these compact sequences use a basic sequence design. They show that including all overhead time would worsen the practical sensitivity by about 5% compared with the overhead-ignored sensitivity (see Supplementary Fig. 10).
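Period-locked iterations cost time mainly for small areas. The sketch below compares a hypothetical timing model in which every iteration occupies a whole number of field periods with idealised stringing, in which only the free-evolution time counts. The function `sequence_time` and the numbers in the example are illustrative assumptions. Initialisation and readout overhead are ignored, and real AC stringing is slightly less effective than this DC-like ideal.

```python
import math


def sequence_time(tau, n_iter, f_ac, strung):
    """Hypothetical timing model that ignores initialisation and readout overhead.

    strung=False: every iteration occupies its own whole number of field periods.
    strung=True: iterations are concatenated, so only the free evolution tau counts
    (idealised, DC-like stringing).
    """
    if strung:
        return n_iter * tau
    periods = math.ceil(tau * f_ac)   # whole field periods needed for one iteration
    return n_iter * periods / f_ac


locked = sequence_time(50e-9, 1000, 1e6, strung=False)   # 1 MHz field, 50 ns delay
strung = sequence_time(50e-9, 1000, 1e6, strung=True)
```

For a 1 MHz field, 1000 iterations with a 50 ns delay take 1 ms when period-locked but only 50 µs when strung. The factor of 20 matters mostly for the small, range-defining areas.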

Additionally, note that the description of the algorithm focussed on areas so that it translates easily to any field shape, such as DC fields or square waves. Moreover, the frequency of the AC field does not matter for the algorithm. For lower frequencies the largest area will not span a whole period, while for higher frequencies additional π-pulses are required to maximise the largest area. This largest area defines the lowest uncertainty, which our algorithm approaches in every situation. This uncertainty increases for lower frequencies, since a smaller area is measured within the coherence-limited time delay. For higher frequencies it decreases, owing to the longer coherence time under a dynamical-decoupling sequence. The decoupling is applied only for the larger areas, the largest of which defines the lowest uncertainty. Of course, the shape of the area versus time-delay graph (Fig. 1c) depends on the shape of the field and the chosen pulse sequences.
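The area-versus-time-delay dependence can be computed numerically for a given field shape and pulse sequence. The sketch assumes ideal, instantaneous π pulses with CPMG-like timing. The helper `echo_area` is hypothetical: it integrates the field with a sign that flips at each π pulse.

```python
import numpy as np


def echo_area(field, tau, n_pi=1, n_steps=20001):
    """Signed area of field(t) over the free evolution [0, tau].

    n_pi equally spaced pi pulses at tau*(2j+1)/(2*n_pi) (CPMG-like timing) each flip the
    sign with which the field adds to the phase; n_pi=0 is a Ramsey measurement.
    """
    t = np.linspace(0.0, tau, n_steps)
    if n_pi == 0:
        sign = np.ones_like(t)
    else:
        pulse_times = tau * (2 * np.arange(n_pi) + 1) / (2 * n_pi)
        sign = (-1.0) ** np.searchsorted(pulse_times, t)   # flips after each pulse
    y = sign * field(t)
    return float(np.sum((y[1:] + y[:-1]) * np.diff(t)) / 2)   # trapezoidal rule


def dc(t):
    return np.ones_like(t)


def ac(t, f=3.0):
    return np.cos(2 * np.pi * f * t)
```

A constant field gives area \(\tau\) in a Ramsey measurement but cancels in a Hahn echo. For \(\cos(2\pi f t)\) with \(f\tau = 3\), six π pulses placed at the zero crossings give the largest area \(2\tau/\pi\), and no π pulses give zero.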

For practical implementations of the algorithm, such as the example with a single NV centre, note that the larger the range becomes, the more prominent the effects of off-resonant MW pulses become. For DC fields this is even more important (see for example ref. 31). For AC fields, the pulses often coincide with low field values (for example, for the sensitivity-defining largest area they are at the inflection points). Thus, care should be taken during the design, depending on the chosen quantum system and the available technology.
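The textbook Rabi formula for a rectangular pulse gives a rough estimate of how off-resonant pulses matter more as the range grows. A field \(B\) present during the pulse detunes the spin by \(\gamma B\). The sketch assumes an NV electron spin with \(\gamma/2\pi \approx 28\) GHz/T, an ideal two-level system and a rectangular pulse. Relaxation and the pulse shapes of a real setup are ignored, and the function name is hypothetical.

```python
import math

# NV electron gyromagnetic ratio, rad s^-1 T^-1 (gamma / 2pi of about 28 GHz/T).
GAMMA_NV = 2 * math.pi * 28.0e9


def pi_pulse_flip_probability(detuning, rabi):
    """Rabi formula: spin-flip probability of a rectangular pulse of length pi/rabi
    (a pi pulse on resonance) at angular detuning `detuning`; both in rad/s."""
    omega_eff = math.hypot(rabi, detuning)
    t_pi = math.pi / rabi
    return (rabi / omega_eff) ** 2 * math.sin(omega_eff * t_pi / 2) ** 2


rabi = 2 * math.pi * 10e6                        # hypothetical 10 MHz Rabi frequency
p_01mT = pi_pulse_flip_probability(GAMMA_NV * 1e-4, rabi)   # 0.1 mT during the pulse
```

With a 10 MHz Rabi frequency, a field of 0.1 mT during the pulse already lowers the flip probability of a nominal π pulse to about 0.92. A detuning equal to the Rabi frequency lowers it to about 0.32.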

As a final remark, applying the optimal number of iterations for a long measurement time gives a small probability of concluding the wrong field. This is because relatively little time is spent on the smaller, range-defining areas (see Supplementary Note 9). However, the analysis does not return a single measured field amplitude but a probability distribution of the field. As demonstrated in Supplementary Note 9, when the expected field of the measurement is within a few σB of the actual field, this distribution has a single pronounced peak. Conversely, there are multiple strong peaks if the expected field of the measurement differs significantly from the actual field. Such a result can therefore easily be discarded. Since the measurement then has to be redone, this effectively reduces the sensitivity slightly.
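A simple Bayesian toy model reproduces the single-peak versus multi-peak behaviour. The sketch makes three assumptions, none taken from Supplementary Note 9:

- a von Mises likelihood for each subsequence, with a concentration `kappa` standing in for how often it was measured;
- integer areas in units where the accumulated phase is `area * B`;
- a relative peak threshold of 0.5.

The function names are hypothetical.

```python
import numpy as np


def posterior(areas, phases, kappas, n_grid=4096):
    """Product of hypothetical von Mises likelihoods, one per subsequence, on a field grid.

    Subsequence k accumulates phase areas[k] * B (rad); with integer areas the
    posterior is 2*pi-periodic in B, so the grid covers [0, 2*pi).
    """
    b = np.linspace(0.0, 2 * np.pi, n_grid, endpoint=False)
    log_p = np.zeros_like(b)
    for a, theta, kappa in zip(areas, phases, kappas):
        log_p += kappa * np.cos(a * b - theta)
    p = np.exp(log_p - log_p.max())          # subtract the maximum to avoid overflow
    return b, p / p.sum()


def count_strong_peaks(p, rel_height=0.5):
    """Count circular local maxima at least rel_height times the global maximum."""
    is_max = (p > np.roll(p, 1)) & (p >= np.roll(p, -1))
    return int(np.sum(is_max & (p >= rel_height * p.max())))


areas, kappas, b_true = [1, 8], [5.0, 50.0], 2.0
consistent = [b_true, (8 * b_true) % (2 * np.pi)]
shifted = [b_true + 0.39, consistent[1]]     # range-defining phase off by ~half a lobe
```

With a consistent range-defining phase, the posterior has one strong peak at the true field. Shifting that phase by about half the lobe spacing of the large area produces two comparable peaks, which would flag the result for rejection.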

To conclude, we have introduced an ultra-high dynamic-range algorithm for measuring magnetic fields with a quantum sensor, such as a single NV centre. Its uncertainty, and hence its sensitivity, can be brought arbitrarily close to the ultimate uncertainty/sensitivity by increasing the measurement time. The maximum range depends on the smallest attainable difference in areas, which yields a larger range than a standard measurement can achieve. As an example, we demonstrated a dynamic range of \(\sim {10}^{7}\), an improvement of two orders of magnitude over previous algorithms32. Note that a fair comparison between algorithms must correct for the coherence time, the minimum/maximum time delays, the applied spin-measurement method and the experimental equipment, as these change the results independently of the applied algorithm. Moreover, we explained the origin of Heisenberg-like scaling in algorithms and why steeper scaling indicates a worse algorithm. Our algorithm and its implications carry over to other modulo-limited sensors. It thus paves the way to optimally benefit from extremely sensitive entanglement-based sensors in large-range applications.
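The classic vernier argument illustrates why the range is set by the smallest difference in areas. Two wrapped phases \(\phi_k = A_k B \bmod 2\pi\) (units with \(\gamma = 1\)) differ by \((A_2 - A_1)B \bmod 2\pi\). This difference is unambiguous only for \(0 \le B < 2\pi/(A_2 - A_1)\). The helpers below are a hypothetical sketch of that argument, not the estimator used in this work. In a real measurement, the coarse estimate must also be accurate to better than \(\pi/A\) before it is refined with a large area \(A\).

```python
import math

TWO_PI = 2 * math.pi


def wrap(x):
    """Map a phase onto [0, 2*pi)."""
    return x % TWO_PI


def vernier_estimate(phi1, phi2, a1, a2):
    """Coarse field from two wrapped phases phi_k = (a_k * B) mod 2*pi, with a2 > a1.
    Unambiguous only for 0 <= B < 2*pi / (a2 - a1) (units with gamma = 1)."""
    return wrap(phi2 - phi1) / (a2 - a1)


def refine(b_coarse, phi, a):
    """Choose the branch of phi = (a * B) mod 2*pi closest to the coarse estimate."""
    m = round((a * b_coarse - phi) / TWO_PI)
    return (phi + TWO_PI * m) / a


a1, a2, a_big = 10.0, 10.5, 100.0            # hypothetical areas
coarse = vernier_estimate(wrap(a1 * 7.3), wrap(a2 * 7.3), a1, a2)
fine = refine(coarse, wrap(a_big * 7.3), a_big)
aliased = vernier_estimate(wrap(a1 * 15.0), wrap(a2 * 15.0), a1, a2)
```

For \(A_1 = 10\) and \(A_2 = 10.5\) the range is \(4\pi\). A field of 7.3 is therefore recovered, whereas a field of 15 aliases to \(15 - 4\pi\).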

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Degen, C. L., Reinhard, F. & Cappellaro, P. Quantum sensing. Rev. Mod. Phys. 89, 035002 (2017).

Rondin, L. et al. Magnetometry with nitrogen-vacancy defects in diamond. Rep. Prog. Phys. 77, 056503 (2014).

Herbschleb, E. D. et al. Ultra-long coherence times amongst room-temperature solid-state spins. Nat. Commun. 10, 3766 (2019).

Bar-Gill, N., Pham, L. M., Jarmola, A., Budker, D. & Walsworth, R. L. Solid-state electronic spin coherence time approaching one second. Nat. Commun. 4, 1743 (2013).

Rittweger, E., Han, K. Y., Irvine, S. E., Eggeling, C. & Hell, S. W. STED microscopy reveals crystal colour centres with nanometric resolution. Nat. Photonics 3, 144–147 (2009).

Maurer, P. C. et al. Far-field optical imaging and manipulation of individual spins with nanoscale resolution. Nat. Phys. 6, 912–918 (2010).

Jaskula, J.-C. et al. Superresolution optical magnetic imaging and spectroscopy using individual electronic spins in diamond. Opt. Express 25, 11048–11064 (2017).

Jin, D. et al. Nanoparticles for super-resolution microscopy and single-molecule tracking. Nat. Methods 15, 415–423 (2018).

Taylor, J. M. et al. High-sensitivity diamond magnetometer with nanoscale resolution. Nat. Phys. 4, 810–816 (2008).

Wolf, T. et al. Subpicotesla diamond magnetometry. Phys. Rev. X 5, 041001 (2015).

Lazariev, A., Arroyo-Camejo, S., Rahane, G., Kavatamane, V. K. & Balasubramanian, G. Dynamical sensitivity control of a single-spin quantum sensor. Sci. Rep. 7, 6586 (2017).

Knowles, H. S., Kara, D. M. & Atatüre, M. Observing bulk diamond spin coherence in high-purity nanodiamonds. Nat. Mater. 13, 21–25 (2013).

Grinolds, M. S. et al. Nanoscale magnetic imaging of a single electron spin under ambient conditions. Nat. Phys. 9, 215–219 (2013).

Shi, F. et al. Single-protein spin resonance spectroscopy under ambient conditions. Science 347, 1135–1138 (2015).

Shi, F. et al. Single-DNA electron spin resonance spectroscopy in aqueous solutions. Nat. Methods 15, 697–699 (2018).

Aslam, N. et al. Nanoscale nuclear magnetic resonance with chemical resolution. Science 357, 67–71 (2017).

Mamin, H. J. et al. Nanoscale nuclear magnetic resonance with a nitrogen-vacancy spin sensor. Science 339, 557–560 (2013).

Glenn, D. R. et al. High-resolution magnetic resonance spectroscopy using a solid-state spin sensor. Nature 555, 351–354 (2018).

Boss, J. M., Cujia, K. S., Zopes, J. & Degen, C. L. Quantum sensing with arbitrary frequency resolution. Science 356, 837–840 (2017).

Schmitt, S. et al. Submillihertz magnetic spectroscopy performed with a nanoscale quantum sensor. Science 356, 832–837 (2017).

Rugar, D. et al. Proton magnetic resonance imaging using a nitrogen-vacancy spin sensor. Nat. Nanotechnol. 10, 120–124 (2014).

DeVience, S. J. et al. Nanoscale NMR spectroscopy and imaging of multiple nuclear species. Nat. Nanotechnol. 10, 129–134 (2015).

Perunicic, V. S., Hill, C. D., Hall, L. T. & Hollenberg, L. C. L. A quantum spin-probe molecular microscope. Nat. Commun. 7, 12667 (2016).

Rong, X. et al. Searching for an exotic spin-dependent interaction with a single electron-spin quantum sensor. Nat. Commun. 9, 739 (2018).

Budker, D., Graham, P. W., Ledbetter, M., Rajendran, S. & Sushkov, A. O. Proposal for a cosmic axion spin precession experiment (CASPEr). Phys. Rev. X 4, 021030 (2014).

Said, R. S., Berry, D. W. & Twamley, J. Nanoscale magnetometry using a single-spin system in diamond. Phys. Rev. B 83, 125410 (2011).

Higgins, B. L., Berry, D. W., Bartlett, S. D., Wiseman, H. M. & Pryde, G. J. Entanglement-free Heisenberg-limited phase estimation. Nature 450, 393–396 (2007).

Berry, D. W. et al. How to perform the most accurate possible phase measurements. Phys. Rev. A 80, 052114 (2009).

Higgins, B. L. et al. Demonstrating Heisenberg-limited unambiguous phase estimation without adaptive measurements. N. J. Phys. 11, 073023 (2009).

Nusran, N. M., Momeen, M. U. & Dutt, M. V. G. High-dynamic-range magnetometry with a single electronic spin in diamond. Nat. Nanotechnol. 7, 109–113 (2011).

Waldherr, G. et al. High-dynamic-range magnetometry with a single nuclear spin in diamond. Nat. Nanotechnol. 7, 105–108 (2011).

Bonato, C. et al. Optimized quantum sensing with a single electron spin using real-time adaptive measurements. Nat. Nanotechnol. 11, 247–252 (2015).

Nusran, N. M. & Dutt, M. V. G. Dual-channel lock-in magnetometer with a single spin in diamond. Phys. Rev. B 88, 220410 (2013).

Leibfried, D. et al. Toward Heisenberg-limited spectroscopy with multiparticle entangled states. Science 304, 1476–1478 (2004).

Nagata, T., Okamoto, R., O’Brien, J. L., Sasaki, K. & Takeuchi, S. Beating the standard quantum limit with four-entangled photons. Science 316, 726–729 (2007).

Jones, J. A. et al. Magnetic field sensing beyond the standard quantum limit using 10-spin NOON states. Science 324, 1166–1168 (2009).

Giovannetti, V., Lloyd, S. & Maccone, L. Advances in quantum metrology. Nat. Photonics 5, 222–229 (2011).

Nusran, N. M. & Dutt, M. V. G. Optimizing phase-estimation algorithms for diamond spin magnetometry. Phys. Rev. B 90, 024422 (2014).

Kato, H., Ogura, M., Makino, T., Takeuchi, D. & Yamasaki, S. N-type control of single-crystal diamond films by ultra-lightly phosphorus doping. Appl. Phys. Lett. 109, 142102 (2016).

Acknowledgements

The authors acknowledge the financial support from MEXT Q-LEAP (No. JPMXS0118067395), KAKENHI (No. 15H05868, 16H06326) and the Collaborative Research Program of ICR, Kyoto University (2019-103). They also thank Prof H. Kosaka for helpful discussions.

Author information

Contributions

E.D.H. designed the algorithm, performed the experiments/simulations/analyses and conceived the Supplementary Information; H.K. grew the phosphorus-doped diamond, assisted by T.M. and S.Y.; N.M. supervised the work; E.D.H. and N.M. wrote the manuscript, and all authors discussed it.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Herbschleb, E.D., Kato, H., Makino, T. et al. Ultra-high dynamic range quantum measurement retaining its sensitivity. Nat Commun 12, 306 (2021). https://doi.org/10.1038/s41467-020-20561-x

DOI: https://doi.org/10.1038/s41467-020-20561-x
