Abstract

The vast amount of design freedom in disordered systems expands the parameter space for signal processing. However, this large degree of freedom has hindered the deterministic design of disordered systems for target functionalities. Here, we employ a machine learning approach for predicting and designing wave-matter interactions in disordered structures, thereby identifying scale-free properties for waves. To abstract and map the features of wave behaviors and disordered structures, we develop disorder-to-localization and localization-to-disorder convolutional neural networks, each of which enables the instantaneous prediction of wave localization in disordered structures and the instantaneous generation of disordered structures from given localizations. We demonstrate that the structural properties of the network architectures lead to the identification of scale-free disordered structures having heavy-tailed distributions, thus achieving multiple orders of magnitude improvement in robustness to accidental defects. Our results verify the critical role of neural network structures in determining machine-learning-generated real-space structures and their defect immunity.

Similar content being viewed by others

Introduction

Disordered systems cover all regimes of structural phases, including periodic, quasiperiodic, and correlated or uncorrelated disordered structures, each of which has its carefully tailored strength and pattern of disorder. The classification of disorder according to microscopic structural information has thus attracted great attention in various fields, such as many-body systems1, network science2, and wave–matter interactions3. In wave physics, rich degrees of freedom in disordered systems enable exotic wave phenomena distinct from those of periodic or quasiperiodic systems, including strong4 or weak5 localizations, broadband responses in wave coupling6 or absorption7, and topological transitions with disorder-induced conductivity8. In particular, localization phenomena have received an extensive amount of attention as the origin of material phase transitions9 and as the toolkit for energy confinement3,10,11 that enables multimode lasing12 and nanoscale sensing13.

Traditional approaches for exploring disordered structures and their related wave behaviors have employed mapping between disordered structures and wave properties through different types of mathematical microstructural descriptors1, such as n-point probability, percolation, or cluster functions. Each descriptor unveils a specific aspect of structural patterns, which enables the classification of disordered structures according to their correlations and topologies and reveals the origin of distinct wave behaviors in each class of disorder. By including the descriptors in the cost function for the optimization process, numerous inverse design methods have also been developed for generating disordered structures from target wave properties: stochastic1,14, genetic15, or topological16 optimizations. However, traditional approaches are still challenging owing to the large design freedom inherited from disordered structures; thus, these approaches require very time-consuming and problem-specific processes to extract microstructural information at each stage of iterative and case-by-case design procedures. Until now, most works have focused on lower orders of microstructural descriptors (for example, two- or three-point probability functions) due to the significant complexity in calculating and interpreting higher-order descriptors1. However, even such simple descriptors have stimulated intriguing concepts and dynamics for disordered structures, such as hyperuniformity17,18,19 for disordered bandgap materials20.

To substitute the time-consuming and problem-specific process of calculating analytical microstructural descriptors while making full use of microstructural information, we can envisage the use of multiple-layer neural network (NN) models as data-driven descriptors to identify the relationship between disordered structures and wave behaviors. This deep-learning-based framework21,22, one of the powerful machine-learning (ML) tools, has proven successful for abstracting the features of data sets in pattern recognition, decision making, and language translation23,24 when carefully preprocessed data can be used. Because of its applicability to general-purpose data formats, deep learning has recently been extended to handle a number of physics problems25,26, such as classifications of crystals27 or topological order28, phase transitions and order parameters29,30,31, optical device designs32,33,34,35,36,37, and image reconstructions38. When we consider the vast amount of design freedom in disordered systems, deep learning will compose a powerful toolkit for resolving complexities in wave behaviors inside disordered structures, as shown in the inference of phases of matter using eigenfunctions26,31.

Here, we employ deep convolutional neural networks (CNNs)39 to identify the physical relationships between disordered structures and wave localization. The prediction of localization properties in disordered structures and the generation of necessary structures for target localizations are achieved with disorder-to-localization (D2L) and localization-to-disorder (L2D) CNNs, respectively, by transforming disordered structures to multicolor images. Using dropout40 or L2 regularization22 techniques to avoid overfitting, the CNNs implemented with Google TensorFlow41 are successfully trained with the expanded training data set of collective and individual lattice deformations, even drawing an extrapolatory inference for the untrained regimes of disorder. Most importantly, our CNN-based generative model identifies disordered structures with scale invariance following the power law. The heavy-tailed distributions in these scale-free structures lead to an increase of two to four orders of magnitude in robustness to unexpected structural errors when compared to conventional disordered structures having normal distributions. We show that the ML-generated scale-free material with hub atoms inherits the properties of robustness to accidental attacks (or defects) and relative fragility to targeted attacks (or modulations)42, in contrast to the democratic robustness of conventional normal-random disordered structures. The proposed approach can be applied to discover unexplored regimes of disorder in general wave systems and paves the way towards the design of materials by manipulating the ML architecture or the training process of NN structures.

Results

Imaging disorder and localization

We consider disordered structures obtained from the random deformation of a finite-size, two-dimensional (2D) square lattice of identical atoms (from Fig. 1a, b). Each atomic site of the lattice can describe a quantum-mechanical wavefunction of an atom, a phononic resonance of a metamaterial, or a propagating mode of an optical waveguide. The standard tight-binding Hamiltonian of an N-atomic system governed by the eigenvalue equation HΨm = EmΨm (m = 0, 1, …, N − 1) is

where ε is the on-site energy, âi† (or âi) is the creation (or annihilation) operator in the ith lattice site, tij is the random hopping integral between the ith and jth lattice sites (1 ≤ i, j ≤ N), and h.c. denotes the Hermitian conjugate. The disordered pattern is described by tij, which is determined by the spatial distance dij between the ith and jth lattice sites. For generality, we consider all orders of hopping between lattice sites by defining the near-field hopping condition tij = t0exp(−αdij), where the coefficients t0 and α are determined by an individual atomic Wannier function43. The distance dij is adjusted by the perturbation on the position of each atom site (see Eq. (5) in “Methods” section).

a A two-dimensional (2D) square lattice crystal and b its deformation that generates a disordered structure. c The projections of the 2D displacement of each atomic site along the x and y axes (Δx and Δy), which define the pixel values of the x axis and y axis color images, respectively. d, e The resulting two-color images obtained from the disordered structure in b. d The red-to-white image for the x-axis projection Δx and e the blue-to-white image for the y-axis projection Δy.

To develop D2L and L2D CNNs for the inference of wave–matter interactions, we devise a multicolor image representation of a disordered structure to be used as the CNN input. In this scenario, a 2D random displacement of an atomic site is projected along x and y spatial axes (Δx and Δy in Fig. 1c), and the resulting two (x and y) projected layers from the entire disordered structure are assigned as two-color images for CNNs (Fig. 1d, e). This projection can be directly extended into a 3D disordered structure, which leads to the sets of three-color images with a tensor form.

The localization property of the proposed structure is quantified by the normalized mode area44 wm, which is defined by the inverse of the inverse participation ratio (IPR) as

where ψms denotes the sth component of the eigenstate Ψm (s = 1, 2, …, N). The operation of the CNNs will then be the inference of the relationships between two-color images (disordered structures) and a 1D array (mode area). The 1D mode area array is reshaped into a single-color 2D image when it is used as the input to the L2D CNN, as discussed later.

Disorder-to-Localization CNN

Figure 2a shows the network structure of the D2L CNN. For the two-color image input, the CNN is composed of 3 cascaded convolution-pooling stages and the fully connected (FC) layer in front of the N-neuron output layer for the 1D array of wm (see “Methods” section for network parameters). Each convolution-pooling stage is a series of the convolution (Conv) layer with 3 × 3 filters to extract a feature map and the max-pooling layer to reduce the feature map size21,22,39. Because each mode has different degrees of localization, it is necessary to fairly estimate the regression error for a wide range of wm values. We thus employ the mean absolute percentage error (MAPE) as the cost function, which has been widely applied to regression and machine learning for forecasting models45,46. The MAPE cost function for the D2L CNN is expressed as

where wmML is the D2L-CNN-calculated mode area and wmTrue is the ground-truth mode area calculated by the Hamiltonian H in Eq. (1).

a The network structure of the disorder-to-localization (D2L) convolutional neural network (CNN). The details of the network parameters are shown in the “Methods” section. b–e The prediction of localization properties wm for b, d weakly disordered, and c, e strongly disordered structures. f, g Comparison of localizations between f the ground truth and g the D2L CNN prediction for a broad range of average mode area wavg of the test data set (1 × 104 realizations). The period of the 16 × 16 unperturbed square lattice (256 atoms) is set to 1 and the hopping parameters are t0 = 3.14 × 10−2 and α = 1.1454 throughout the manuscript. Although the on-site energy does not affect mode areas, we set the on-site energy as ε = 1 for energy spectra in the later discussion (Supplementary Note 6). Mode numbers m are sorted according to localization values in all examples throughout the manuscript.

The CNN is trained with the training data set of randomly deformed lattices and their localization properties. The expanded training sets of 2 × 104 realizations are obtained by introducing both collective and individual deformations of atomic sites to improve the inference ability of the CNN (see “Methods” section, Supplementary Note 1, and Supplementary Fig. 1 for details of the deformation process). The validation accuracy of the CNN defined by 1 – LD2L is monitored with the validation data set of 1 × 104 realizations during the training. After training with the error backpropagation method47, we calculate the test accuracy 1 − LD2L of the trained CNN with the test data set of 1 × 104 realizations (see “Methods” section, Supplementary Note 2, and Supplementary Figs. 2–4 for the extended discussion of the training process, such as avoiding overfitting and selecting the cost function). To monitor overfitting during and after the training, different random seeds for the deformation have been used in the training, validation, and test data sets.

Through the training process, we successfully trained D2L CNN to predict disorder-induced localization. Figure 2b–e shows the ground-truth and ML prediction of the mode areas wm from given disordered structures: nearly crystallized (or weak disorder) (Fig. 2b, d) and nearly random (or strong disorder) (Fig. 2c, e) structures. We also compare the ground-truth (Fig. 2f) and ML-predicted (Fig. 2g) localization for a wide range of localization values of the test data set (1 × 104 realizations). Figure 2f, g is obtained by plotting wm of each realization as a function of the mode number m and coloring each point according to the average mode area \(w_{\mathrm{avg}} = \mathop {\sum}\nolimits_{m = 1}^N {w_m} /N\) of each realization. We note that the ground-truth and ML-predicted localization shows excellent agreement for different values of wavg, achieving the test accuracy 1 − LD2L ~ 94.80%. The trained D2L CNN enables an almost instantaneous prediction of localization properties for each mode from a given disordered structure without solving the eigenvalue problem of the Hamiltonian H in Eq. (1).

Localization-to-disorder CNN

As demonstrated in a classic question48 of “Can one hear the shape of a drum?” and its answer49, the relationship between a wave property (such as the localization or eigenspectrum) and material (or structural) platforms is non-unique, allowing multiple possible structures for a given wave property. This one-to-many relationship between a wave property and matter has made it difficult to achieve a stable inverse design of material from a given wave property because the existence of many solutions (matter) for an input (wave property) prohibits the stable convergence of the optimization for a cost function. In the inverse design of material using the ML method, several different approaches have been proposed to resolve this non-uniqueness problem: training of the input through a trained NN32, training of the inverse NN from a trained forward NN33,34, reinforcement learning35, and iterative design of multiple NNs for each family of material structures with a given scattering property36. Considering the large design freedom in disordered structures, we employ the second approach33,34: training of the inverse L2D CNN using the pre-trained forward D2L CNN.

Figure 3a shows the network structure of the L2D CNN. The L2D CNN has the same network configuration as the D2L CNN (three convolution-pooling stages and the FC layer), except for the input and output layer (see “Methods” section for network parameters). The results of the L2D CNN from the 2N output neurons are reshaped to the two-color images that represent the spatial profile of the ML-generated disordered structure. To guarantee the physical reality of the obtained solution, we utilize the trained D2L CNN with the fixed weight and bias parameters, which instantaneously predicts the localization in ML-generated disordered structures. The connection of the L2D CNN with the trained D2L CNN constructs the localization-to-disorder-to-localization (L2D2L) network (Fig. 3b), which effectively operates as the autoencoder for localization data. The MAPE cost function of the L2D2L CNN is defined as

where wmML is the mode area calculated by the L2D2L CNN and wmTarget is the target mode area. The training of the entire L2D2L CNN (i.e., the partial training of the L2D CNN part) then allows the generation of disordered structures for the target wave localization (see “Methods” section, Supplementary Note 2, and Supplementary Fig. 2 for the training process, including the comparison between the validation and training accuracies). Training, validation, and test data sets are again prepared with different random seeds. We note that although the training data set for the L2D2L CNN consists of localization data obtained from the tight-binding Hamiltonian in Eq. (1), the microstructural information used for the target localization data is not applied to the training of the L2D2L CNN.

a The network structure of the localization-to-disorder (L2D) convolutional neural network (CNN). b The network structure of the localization-to-disorder-to-localization (L2D2L) CNN for training the L2D CNN with the pre-trained disorder-to-localization (D2L) CNN. The details of the network parameters are shown in the “Methods” section. c–e Comparisons of localizations between c the target values, d the machine-learning-predicted values from the L2D2L CNN, and e the Hamiltonian-calculated true values with the disordered structures generated by the L2D CNN for a broad range of average mode area wavg of the test data set (1 × 104 realizations).

The trained L2D CNN achieves a high test accuracy of 1 − LL2D2L ~ 94.21%. We compare the target localizations (Fig. 3c) to the ML-predicted localizations obtained through the L2D2L CNN (Fig. 3d) and the Hamiltonian-calculated true values of the disordered structures generated by the L2D CNN (Fig. 3e), using the same data plotting format with those in Fig. 2f, g. Despite the good agreement between the target and true values (~79.10% between Fig. 3c, e), a non-negligible discrepancy exists near the strong localization regime with large deformations of atomic sites. We note that this test accuracy degradation originates from the emergence of large deformations in the L2D-CNN-generated structure, which easily exceeds the maximum deformation value inside the training data sets for the D2L CNN. Therefore, the test accuracy of the L2D CNN is restricted by the limit of the extrapolation: the inference of the untrained regime of localization. The current good extrapolation could be further improved by expanding the range and type of training data sets and the number of hidden layers. However, we emphasize that large deformations themselves unveil a very intriguing but little recognized property in ML inverse designs32,33,34,35,36,37: the effect of the NN structure on the ML-generated real-space structure, which enables the identification of scale-free properties for waves, as discussed in the later sections.

Scale invariance in ML-generated microstructures

Due to the one-to-many relationship between a wave property and matter, the obtained ML-generated disordered structure corresponds to only one realization among numerous possible options for the target wave property. To examine the property of this ML identification, in Fig. 4a–f, we compare the ML-generated structure with a seed structure having very similar localization properties. For the regimes of weak (Fig. 4a–c) and strong (Fig. 4d–f) disorder, we use initial seed structures (Fig. 4a, d) to obtain the target localization (red curves in Fig. 4c, f). By employing this target localization as an input of the trained L2D CNN, we achieve the corresponding ML-generated structures (Fig. 4b, e), which represent localization properties that are very similar to those of seed structures (black curves in Fig. 4c, f). However, surprisingly, the ML-generated structures consist of lattice deformations that are evidently different from the original deformations in the seed structures. This result originates from the training process of the L2D CNN, which is achieved from the training of the L2D2L CNN using only localization data (Fig. 3a) without the data of seed microstructures. The identification of the microstructure from the target localization can then have many possible options and is determined by the network structure of the L2D CNN, as discussed later.

a–f Comparison between seed and machine-learning- (ML-) generated structures for a–c weak and d–f strong disorder. a, d Seed structures that provide the target localizations for the localization-to-disorder (L2D) convolutional neural network (CNN). b, e ML-generated disordered structures obtained from the L2D CNN. c, f Localization of seed (“Truth”, red dotted lines) and ML-generated (“ML Truth”, black dotted lines) structures, obtained from the tight-binding Hamiltonian in Eq. (1). g Statistical distributions of the strength of the lattice deformation Δr in the seed (green) and ML-generated (orange) structures for 3200 realizations satisfying 0.20 ≤ wavg ≤ 0.30 in the ML design. The first inset g-1 shows the log–log plot of g for the ML design, illustrating the power-law distribution. The orange line (composed of discretized points) represents the complementary cumulative distribution function (CDF) obtained from the data set in g. The black dashed line represents the best fit to the data using the method in refs. 50,51, showing the power-law fitting of (Δr)−3.79. The black dot represents the lower bound Δrmin = 0.432 to the power-law behavior. h The extended plot of the range 0.4 ≤ Δr ≤ 0.8 in g demonstrating the heavy-tailed distribution of the ML design.

For a deeper understanding of the differences between seed and ML-generated structures, we analyze the microstructural statistics of disordered structures by counting the distributions of the atomic site deformation Δri = [(Δrix)2 + (Δriy)2]1/2, where Δrix and Δriy are the displacements of the ith atom along the x and y axes, respectively (1 ≤ i ≤ N; see Eq. (5) in “Methods” section for seed structures, whereas Δri of ML-generated structures is obtained from the L2D CNN). Figure 4g shows the microstructural statistics of the seed and ML-generated structures for 3200 realizations where the ML-generated structures have an average mode area wavg in the range of 0.20 ≤ wavg ≤ 0.30. We note that the seed and ML-generated structures show apparently differentiated statistics. First, the microstructural statistics of the seed structures follows a normal distribution due to the definition of Eq. (5) in Methods. However, the analysis based on the maximum-likelihood fitting method with goodness-of-fit tests50,51 shows that the ML-generated class follows power-law statistics (Δr)−α (inset (g-1) of Fig. 4g) and possesses a heavy-tail distribution (Fig. 4h). To guarantee the reliability of the power-law fitting result, in Supplementary Note 3 and Supplementary Fig. 5, we analyze the power-law exponent α and the lower bound of the heavy tail Δrmin for a different number of realizations. The result shows that the unique statistical distribution of ML-generated structures is maintained for a small number of realizations, from roughly 101 (2560 atoms) to 102 (25,600 atoms) realizations, and even a single realization also provides a similar value of α and Δrmin.

The result in Fig. 4g, h demonstrates that ML-generated disordered structures are composed of scale-invariant deformation without the characteristic perturbation strength of Δr. This finding is in sharp contrast to the characteristic Δr of seed disordered structures, which is defined as the statistical center of their normal distribution. We note that the scale invariance of ML-generated disordered structures is universally observed for varying degrees of localization (Supplementary Note 4 and Supplementary Figs. 6 and 7), which strongly implies that the identification of scale-invariant disordered structures originates from the properties of the L2D CNN, not from the observed wave–matter interactions. In Supplementary Note 5 and Supplementary Fig. 8, we also study the fitting with other heavy-tailed distributions2,50, such as a power-law distribution with an exponential cutoff and a log-normal distribution, again confirming the reliability of the power-law fitting and the observed scale-free invariance.

Furthermore, the seed and ML-generated structures show very similar localization properties and distinct energy spectra (see Supplementary Note 6 and Supplementary Fig. 9 for energy spectra). Therefore, the L2D CNN enables the independent and systematic handling of a part of wave quantities: here, the conservation of localization with an altered energy spectrum through the transformation of microstructural statistics from normal-random to scale-invariant distributions. On the other side, among various possible realizations of disordered structures for a given wave property (here, localization) due to the one-to-many relationship between a wave and matter, the L2D CNN successfully selects one particular realization, which notably has the scale invariance in the structural profile.

Because the values of the output neurons in the L2D CNN determines the lattice deformation in ML-generated structures, the scale invariance in the deformation is strongly related to the NN structure (weight and bias distributions) of the L2D CNN. To examine this conjecture, in Fig. 5a, b, we analyze the relationship between the microstructural statistics of ML-generated structures and the network structure of the L2D CNN, including an ablation study. Among numerous weight and bias parameters (roughly 1.5 × 107 parameters each in the D2L and L2D CNNs), the most critical parameters are the weights from the FC layer (2048 neurons) to the output layer (512 neurons) in the L2D CNN, which are described by 2048 × 512 matrix. Although the weights and bias in hidden layers should also affect the output layer neurons indirectly, we expect that this indirect effect is less significant than the direct effect from the FC-output weights.

a Power-law fitting of the statistical distribution of Δr in machine-learning-generated disordered structures, which is the same figure with Fig. 4g-1 and is shown for comparison. b Power-law fitting of the statistical distribution of the weight strength parameter Wj. c, d Power-law fitting results of the realizations for c high (≥84%) and d low (≤69%) test accuracies. All of the fitting results are based on the same method using in Fig. 4g-1.

For wjix and wjiy, which denote the weights from the ith FC neuron to the jth x axis and y axis output neurons, respectively (1 ≤ i ≤ 2048 and 1 ≤ j ≤ 256 in our design), we define the strength of the weights to the jth output neuron (or the jth atom in an ML-generated disordered structure) as Wj = ∑i[(wjix)2 + (wjiy)2]. Figure 5b shows the CDF of Wj, which represents a very similar statistical distribution with Δr in terms of its inflection point (Fig. 5a) and also possesses the heavy-tailed distribution. The comparison between Fig. 5a, b provides clear-cut evidence of the effect of the NN structure on ML-generated materials. This finding becomes more evident by examining different ML architectures which lead to different weights and bias distributions. In Supplementary Note 7 and Supplementary Figs. 10–12, we conduct an ablation study by investigating another D2L and L2D CNN each with a single-pooling stage, which enables the control of the Wj distribution and the following alteration of ML-generated structures. We note that the heavy-tailed distribution is also maintained in this single-pooling-layer design.

To guarantee the generality of the observed scale-free properties, we also examine the effect of the test accuracy on the scale invariance (Fig. 5c, d). Among 3200 realizations in the example in Fig. 4, we select the sets of ML-generated structures having high (≥84%, 194 realizations, Fig. 5c) and low (≤69%, 191 realizations, Fig. 5d) test accuracies. We note that both cases possess very similar statistical distributions with the power-law fitting result. This result again confirms that the scale invariance originates from the statistical distribution of the ML architecture, not from the mismatch between the ML result and theoretical truth.

Scale-free materials with heavy tails and hub atoms

The scale invariance in microstructural statistics (Figs. 4 and 5) imposes intriguing characteristics on ML-generated disordered structures: “scale-free” properties on waves. Scale-free properties, which represent the power-law probabilistic distribution with heavy-tailed statistics, have been one of the most influential concepts in network science2,52, data science50,51, and random matrix theory53,54. In addition to its ubiquitous nature in biological, social, and technological systems2, the most important impact of scale-free property is the emergence of core nodes, also known as “hubs”, which possess a very large number of links or interactions, thereby governing signal transport inside the system2,42,52. The existence of hub nodes strongly correlates with the robustness of scale-free systems: fault-tolerant behaviors, especially superior robustness to accidental attacks and relative fragility to targeted attacks2,42,55, which can also be extended to other heavy-tailed distributions (Supplementary Note 5) without the perfect scale-free (or power-law) features.

Although the scale-free nature is well-defined in the infinite-size limit2,42,52, similar to the condition of ergodicity in random heterogeneous materials1, the power-law microstructural statistics of our systems with the heavy-tailed distribution leads to well-defined hub behaviors and the following robustness of wave properties. To investigate the robustness of our wave systems, we exert the “attack” (material imperfection, system error, or modulation) on each atom of disordered structures to adjust their localization properties. The attack is defined by the position perturbation of each atom as rix = rix0 + ρacos[ui(0, 2π)] and riy = riy0 + ρasin[ui(0, 2π)], where rix,y (or rix0,y0) are the x and y perturbed (or original) positions of the ith atom in a disordered structure, ρa is the perturbation strength, and ui(p, q) is the random value for the ith atom from the uniform random distribution between p and q.

Figure 6a, b shows the degree of robustness in two disordered structures with different microstructural statistics in terms of the perturbation of localization Δwm. The attack is applied to each atom of normal-random seed (wavg = 0.145) and scale-free ML-generated (wavg = 0.140) disordered structures, which have similar localization properties (~84.05% test accuracy). Remarkably, compared with the seed structure, the scale-free disordered structure shows a reduction of two to four orders of magnitude in the perturbation of mode areas Δwm, especially in highly localized modes (small m). This result demonstrates that the scale-free ML-generated disorder provides more robust localization properties than the normal-random seed disorder, following fault-tolerant behaviors in general scale-free systems2,42,55.

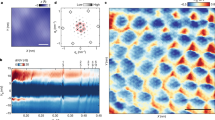

a, b Comparison of the robustness in a seed and b machine-learning- (ML-) generated disordered structures in terms of the perturbation in the mode area from the attack (or error) to a specific atom. Each red point denotes the perturbation of the mth mode area Δwm by imposing the attack to a specific atom. Blue solid lines represent the average perturbation. The blue dashed line in b is the average perturbation of the seed structure shown in a for comparison. c, d Normalized errors δ for attacking each atom in the c seed and d ML-generated disordered structures. Larger δ denotes a more sensitive response of wave localization to the attack. nx and ny denote the x and y indices in the unperturbed square lattice, respectively (0 ≤ nx, ny ≤ 15 for 256 atoms).

In Fig. 6c, d, we also demonstrate the existence of hub atoms, which is the origin of the robustness of scale-free systems2,42. To detect hub atoms in disordered structures, we define the normalized error δ that measures the average perturbation of the mode area Δwm obtained by attacking a specific atom. First, the apparent democratic response of δ, which represents the nearly equal perturbation of Δwm regardless of the perturbed atom position, is observed in the normal-random seed structure (Fig. 6c), following the signal behavior in Erdős–Rényi random systems2. In contrast, our ML-generated scale-free disordered structure is no longer democratic; some hub atoms derive more sensitive responses (larger δ) to the perturbation (Fig. 6d), following the signal behavior in Barabási-Albert scale-free systems2,42. This result successfully demonstrates the scale-free nature of our ML-generated disorder: highly robust localization to accidental perturbations and relatively fragile localization to targeted perturbations on hub atomic sites. Notably, because ML-generated disorder partly exhibits imperfect scale-free, but heavy-tailed distributions, the relationships between the scale-freeness, heavy-tailed distributions, and the defect robustness and modulation sensitivity will require further study.

Discussion

Because the ML-generated lattice deformation is strongly related to the weights of the output neurons in the L2D CNN, the apparent stochastic difference between normal-random seed structures and scale-free L2D CNN outputs raises an interesting open question; the training process of deep NNs could inherently possess the scale-free property. Recently, in random matrix theory, it was demonstrated that the correlations in the weight matrices of well-trained deep NNs can be fit to a power-law with the heavy-tailed distribution53,54. This theory enables the successful analogy between NN structures and ML-generated real-space wave structures in our result: the identification of the “heavy-tailed perturbation distribution” of atomic sites using the “heavy-tailed weight distribution” of CNN neurons. While these complex systems in software and real-space emphasize the role of the “heavy tail” in the statistical distribution, the optimization process of the CNNs in this viewpoint corresponds to the evolutionary process of realizing general scale-free systems2,42,52. We also note that exploring ML architectures to control scale-free properties or even realize non-scale-free distributions will inspire exciting future research in material science and wave physics. For the inverse design of disordered systems and the following statistical analysis of ML-generated materials in terms of scale-free properties, the applications of reinforcement learning, unsupervised learning, or well-trained NNs such as U-net56,57 would be an excellent topic for study. Notably, the utilization of an attention mechanism and the transformer architecture58 would also be helpful to model the relationships between atomic information in disordered structures or wave localization, as similar to an attention score to model the influence each word has on another in natural language processing.

In terms of interpreting tight-binding lattices as graph networks59,60,61, the change in lattice deformations through the ML method (from Fig. 4a, d to Fig. 4b, e) can be explained as the change of isoperimetric parameters62: the relative size of graph vertex subsets to the size of their boundary. Our result then corresponds to the control of isoperimetric parameters while preserving a wave property (here, localization), which enables the independent control of other wave properties (here, error robustness). The further study on graph properties of ML-generated structures is thus necessary to clarify the relationship between physical systems, their graph representations, and the ML-based design.

In terms of the previous studies63,64,65 on disordered structures with power-law correlation distributions, the power-law exponent α is closely related to localization lengths (Supplementary Note 4) and the emergence of an Anderson-like metal-insulator transition. Because we employed the 2D seed structures with an uncorrelated disorder, which eventually lead to Anderson localization according to the scaling theory of localization66, the allowed range of the power-law exponent should be restricted due to the similar degrees of localization. The finding of ML-generated structures with more tunable α is then necessary to extend the regime of disorder achieved by the ML approach. This goal would be enabled by utilizing seed structures that break the traditional assumptions in the scaling theory67, using inhomogeneity, anisotropy, and inelastic scattering.

In conclusion, we demonstrated that the ML approach can identify disordered materials with the target localization, which also have scale-free properties for waves. Instead of calculating microstructural descriptors for analyzing disordered structures, we proposed a CNN-based modeling approach for wave–matter interactions, by using convolution processes in CNNs to abstract and map the relationship between localization and disordered structures. With successful training results for the ML prediction and generation of wave–matter interactions, we showed that ML-generated disordered structures possess scale invariance with power-law microstructural statistics, which is the result of the structural properties of the ML architecture. We demonstrated that the ML-generated disordered structures can operate as scale-free materials for waves with excellent robustness in terms of wave behaviors and hub dynamics. Scale-free materials, or, more broadly, the materials with heavy-tailed distributions discovered by the ML method will stimulate a new design strategy for general wave devices in disordered structures, such as lasing12, energy storage68, and complete bandgap materials20. Scale invariance can significantly improve the performance of these wave devices by achieving robustness to accidental errors (such as unwanted defects in fabrications or measurements) and the fragility to targeted errors (such as the intended system modulation for active devices). Along with the ML generation of scale-free structures with target wave properties, our results will motivate further research on controlling CNN training or selecting different CNN architectures, which will enable the generation of wave structures analogous to various types of complex systems, such as small-world, modular, or self-similar systems. The obtained scale-free wave material will also offer new insight into other scale-free-type material structures, such as Lévy glasses with superdiffusion69,70: the microstructural realization of a random walk having step lengths with a power-law distribution.

Methods

Neural network structures and training hyperparameters of D2L and L2D CNNs

For N = 16 × 16 atomic lattices, the D2L CNN accepts two 16 × 16 images as the input (a disordered structure), whereas the L2D CNN accepts a single 16 × 16 image as the input (a reshaped mode area). For both D2L and L2D CNNs, the numbers of filters (or the thicknesses) of the convolution layers are set to 256, 512, and 1024 in the first, second, and third layers, respectively. We use zero padding to maintain the spatial dimensions of feature maps during the convolution processes22,39. The max-pooling layer leads to the down-sampling of feature maps by extracting the maximum value of each patch with a stride of 2 pixels21,22. The result of three cascaded convolution-pooling states is reshaped (or flattened) to a 1D array and is then connected to the FC layer, which has 2048 neurons. The FC layer is connected to the N-atomic output layer in the D2L CNN for the mode area wm and is connected to the 2N-atomic output layer in the L2D CNN for two-color images that describe a disordered structure.

To avoid a vanishing gradient problem during training, we use the rectified linear unit (ReLU) activation for each layer of CNNs. We utilize the Adam optimization function47 with an exponential decay in the learning rate for stable convergence and employ a mini-batch of size 10 for efficient learning. To avoid overfitting, we apply the dropout method40 in the D2L CNN by randomly keeping 50% of neurons in the FC layer during training and apply the L2 regularization22 in the L2D CNN (TensorFlow scale parameter: 0.05) to suppress excessively large values of weights. The learning processes of the D2L and L2D CNNs are shown in Supplementary Note 2. All ML computations were performed on a single desktop computer with two NVIDIA GeForce RTX 2080 Ti GPUs.

Deformation of lattices for data sets

To train the CNNs, avoiding overfitting to a certain type of disordered structures, the carefully preprocessed training data set has to cover a wide range of the relationship between disordered structures and localization from large to small values of \(w_{\mathrm{avg}} = \mathop {\sum}\nolimits_{m = 1}^N {w_m} /N\). For this purpose, we assign the collective and individual deformations of atomic sites as

where Δrix and Δriy denote the displacements of the ith atom along the x and y axes (1 ≤ i ≤ N), respectively; ui(p, q) is the random value for the ith atom from the uniform random distribution between p and q; ρ is the amplitude of the collective displacement of all atoms, and σ is the amplitude of the individual displacement of each atom. The strengths of the collective and individual deformations are randomly assigned for each realization of the data set, as ρ = ρmaxu(0, 1) and σ = σmaxu(0, 1), where u(a, b) is the random value assigned to each realization from the uniform random distribution between a and b. We set ρmax = 0.6 and σmax = 0.6 for all examples in this manuscript. The comparison between collective and individual deformations through different values of ρmax and σmax are shown in Supplementary Note 1.

Data availability

The data that support the plots and other findings of this study are available from the corresponding author upon request.

Code availability

All code developed in this work will be made available from the corresponding author upon request.

References

Torquato, S. Random Heterogeneous Materials: Microstructure and Macroscopic Properties. Vol. 16 (Springer Science & Business Media, 2002).

Barabási, A.-L. Network Science (Cambridge University Press, 2016).

Wiersma, D. S. Disordered photonics. Nat. Photon. 7, 188–196 (2013).

Anderson, P. W. Absence of diffusion in certain random lattices. Phys. Rev. 109, 1492 (1958).

Van Albada, M. P. & Lagendijk, A. Observation of weak localization of light in a random medium. Phys. Rev. Lett. 55, 2692 (1985).

Jiang, X. et al. Chaos-assisted broadband momentum transformation in optical microresonators. Science 358, 344–347 (2017).

Hsu, C. W., Goetschy, A., Bromberg, Y., Stone, A. D. & Cao, H. Broadband coherent enhancement of transmission and absorption in disordered media. Phys. Rev. Lett. 115, 223901 (2015).

Stützer, S. et al. Photonic topological Anderson insulators. Nature 560, 461 (2018).

Chabé, J. et al. Experimental observation of the Anderson metal-insulator transition with atomic matter waves. Phys. Rev. Lett. 101, 255702 (2008).

Wiersma, D. S., Bartolini, P., Lagendijk, A. & Righini, R. Localization of light in a disordered medium. Nature 390, 671 (1997).

Segev, M., Silberberg, Y. & Christodoulides, D. N. Anderson localization of light. Nat. Photon 7, 197–204 (2013).

Liu, J. et al. Random nanolasing in the Anderson localized regime. Nat. Nanotechnol. 9, 285–289 (2014).

Sheinfux, H. H. et al. Observation of Anderson localization in disordered nanophotonic structures. Science 356, 953–956 (2017).

Yeong, C. & Torquato, S. Reconstructing random media. Phys. Rev. E 57, 495 (1998).

Weber, T. & Bürgi, H.-B. Determination and refinement of disordered crystal structures using evolutionary algorithms in combination with Monte Carlo methods. Acta Crystallogr. A 58, 526–540 (2002).

Eschenauer, H. A. & Olhoff, N. Topology optimization of continuum structures: a review. Appl. Mech. Rev. 54, 331–390 (2001).

Torquato, S. & Stillinger, F. H. Local density fluctuations, hyperuniformity, and order metrics. Phys. Rev. E 68, 041113 (2003).

Torquato, S., Zhang, G. & Stillinger, F. Ensemble theory for stealthy hyperuniform disordered ground states. Phys. Rev. X 5, 021020 (2015).

Torquato, S. Hyperuniform states of matter. Phys. Rep. 745, 1 (2018).

Man, W. et al. Isotropic band gaps and freeform waveguides observed in hyperuniform disordered photonic solids. Proc. Natl Acad. Sci. USA 110, 15886–15891 (2013).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436 (2015).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

Silver, D. et al. Mastering the game of Go with deep neural networks and tree search. Nature 529, 484 (2016).

Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518, 529 (2015).

Carleo, G. et al. Machine learning and the physical sciences. Rev. Mod. Phys. 91, 045002 (2019).

Ohtsuki, T. & Mano, T. Drawing phase diagrams of random quantum systems by deep learning the wave functions. J. Phys. Soc. Jpn. 89, 022001 (2020).

Ziletti, A., Kumar, D., Scheffler, M. & Ghiringhelli, L. M. Insightful classification of crystal structures using deep learning. Nat. Commun. 9, 2775 (2018).

Rodriguez-Nieva, J. F. & Scheurer, M. S. Identifying topological order through unsupervised machine learning. Nat. Phys. 15, 790–795 (2019).

Carrasquilla, J. & Melko, R. G. Machine learning phases of matter. Nat. Phys. 13, 431 (2017).

Broecker, P., Carrasquilla, J., Melko, R. G. & Trebst, S. Machine learning quantum phases of matter beyond the fermion sign problem. Sci. Rep. 7, 1–10 (2017).

Ohtsuki, T. & Ohtsuki, T. Deep learning the quantum phase transitions in random two-dimensional electron systems. J. Phys. Soc. Jpn. 85, 123706 (2016).

Peurifoy, J. et al. Nanophotonic particle simulation and inverse design using artificial neural networks. Sci. Adv. 4, eaar4206 (2018).

Liu, Z., Zhu, D., Rodrigues, S. P., Lee, K.-T. & Cai, W. Generative model for the inverse design of metasurfaces. Nano Lett. 18, 6570–6576 (2018).

Liu, D., Tan, Y., Khoram, E. & Yu, Z. Training deep neural networks for the inverse design of nanophotonic structures. ACS Photon 5, 1365–1369 (2018).

Sajedian, I., Badloe, T. & Rho, J. Optimisation of colour generation from dielectric nanostructures using reinforcement learning. Opt. Express 27, 5874–5883 (2019).

Baxter, J. et al. Plasmonic colours predicted by deep learning. Sci. Rep. 9, 8074 (2019).

Ma, W., Cheng, F. & Liu, Y. Deep-learning-enabled on-demand design of chiral metamaterials. ACS Nano 12, 6326–6334 (2018).

Rivenson, Y., Zhang, Y., Günaydın, H., Teng, D. & Ozcan, A. Phase recovery and holographic image reconstruction using deep learning in neural networks. Light Sci. Appl. 7, 17141 (2018).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105 (2012).

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I. & Salakhutdinov, R. Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15, 1929–1958 (2014).

Abadi, M. et al. Tensorflow: Large-scale machine learning on heterogeneous distributed systems. Preprint at http://arxiv.org/abs/1603.04467 (2016).

Barabási, A.-L. & Bonabeau, E. Scale-free networks. Sci. Am. 288, 60–69 (2003).

Ashcroft, N. W., Mermin, N. D. & Rodriguez, S. Solid State Physics (Cengage Learning, 1976).

Schwartz, T., Bartal, G., Fishman, S. & Segev, M. Transport and Anderson localization in disordered two-dimensional photonic lattices. Nature 446, 52–55 (2007).

Yu, R., Li, Y., Shahabi, C., Demiryurek, U. & Liu, Y. Deep learning: a generic approach for extreme condition traffic forecasting. In Proceedings of the 2017 SIAM international Conference on Data Mining 777–785 (2017).

Yildiz, B., Bilbao, J. I. & Sproul, A. B. A review and analysis of regression and machine learning models on commercial building electricity load forecasting. Renew. Sust. Energ. Rev. 73, 1104–1122 (2017).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at http://arxiv.org/abs/1412.6980 (2014).

Kac, M. Can one hear the shape of a drum? Am. Math. Monthly 73, 1–23 (1966).

Gordon, C., Webb, D. L. & Wolpert, S. One cannot hear the shape of a drum. Bull. Am. Math. Soc. 27, 134–138 (1992).

Clauset, A., Shalizi, C. R. & Newman, M. E. Power-law distributions in empirical data. SIAM Rev. 51, 661–703 (2009).

Alstott, J. & Bullmore, D. P. powerlaw: a Python package for analysis of heavy-tailed distributions. PLoS ONE 9, e85777 (2014).

Barabási, A.-L. & Albert, R. Emergence of scaling in random networks. Science 286, 509–512 (1999).

Martin, C. H. & Mahoney, M. W. Implicit self-regularization in deep neural networks: Evidence from random matrix theory and implications for learning. Preprint at http://arxiv.org/abs/1810.01075 (2018).

Martin, C. H. & Mahoney, M. W. Heavy-tailed Universality predicts trends in test accuracies for very large pre-trained deep neural networks. Preprint at http://arxiv.org/abs/1901.08278 (2019).

Cohen, R., Erez, K., Ben-Avraham, D. & Havlin, S. Breakdown of the internet under intentional attack. Phys. Rev. Lett. 86, 3682 (2001).

Ronneberger, O., Fischer, P. & Brox, T. U-net: convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention 234–241 (2015).

Rosu, R. A., Schütt, P., Quenzel, J. & Behnke, S. Latticenet: fast point cloud segmentation using permutohedral lattices. Preprint at http://arxiv.org/abs/1912.05905 (2019).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inform. Process. Syst. 30, 5998–6008 (2017).

Kollár, A. J., Fitzpatrick, M. & Houck, A. A. Hyperbolic lattices in circuit quantum electrodynamics. Nature 571, 45–50 (2019).

Yu, S., Piao, X., Hong, J. & Park, N. Interdimensional optical isospectrality inspired by graph networks. Optica 3, 836–839 (2016).

Yu, S., Piao, X. & Park, N. Topological hyperbolic lattices. Phys. Rev. Lett. 125, 053901 (2020).

Hoory, S., Linial, N. & Wigderson, A. Expander graphs and their applications. Bull. Am. Math. Soc. 43, 439–561 (2006).

De Moura, F. A. & Lyra, M. L. Delocalization in the 1D Anderson model with long-range correlated disorder. Phys. Rev. Lett. 81, 3735 (1998).

Izrailev, F. & Krokhin, A. Localization and the mobility edge in one-dimensional potentials with correlated disorder. Phys. Rev. Lett. 82, 4062 (1999).

Croy, A., Cain, P. & Schreiber, M. The role of power-law correlated disorder in the Anderson metal-insulator transition. Eur. Phys. J. B 85, 165 (2012).

Abrahams, E., Anderson, P., Licciardello, D. & Ramakrishnan, T. Scaling theory of localization: absence of quantum diffusion in two dimensions. Phys. Rev. Lett. 42, 673 (1979).

Sheng, P. Introduction to Wave Scattering, Localization and Mesoscopic Phenomena Vol. 88 (Springer Science & Business Media, 2006).

Liu, C. et al. Enhanced energy storage in chaotic optical resonators. Nat. Photon. 7, 473–478 (2013).

Bertolotti, J. et al. Engineering disorder in superdiffusive Levy glasses. Adv. Funct. Mater. 20, 965–968 (2010).

Burresi, M. et al. Weak localization of light in superdiffusive random systems. Phys. Rev. Lett. 108, 110604 (2012).

Acknowledgements

We acknowledge financial support from the National Research Foundation of Korea (NRF) through the Global Frontier Program (S.Y., X.P., N.P.: 2014M3A6B3063708), the Basic Science Research Program (S.Y.: 2016R1A6A3A04009723), and the Korea Research Fellowship Program (X.P., N.P.: 2016H1D3A1938069), all funded by the Korean government.

Author information

Authors and Affiliations

Contributions

S.Y. and N.P. conceived the idea presented in the manuscript. S.Y. and X.P. developed the theory and ML codes using Google TensorFlow. N.P. encouraged S.Y. and X.P. to investigate disordered systems for waves using ML and network theory while supervising the findings of this work. All authors discussed the results and contributed to the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Communications thanks Nima Dehmamy and the other, anonymous reviewers for their contribution to the peer review of this work. Peer review reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yu, S., Piao, X. & Park, N. Machine learning identifies scale-free properties in disordered materials. Nat Commun 11, 4842 (2020). https://doi.org/10.1038/s41467-020-18653-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-020-18653-9

This article is cited by

-

Heavy tails and pruning in programmable photonic circuits for universal unitaries

Nature Communications (2023)

-

Raman microspectroscopy for microbiology

Nature Reviews Methods Primers (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.