Abstract

Optical imaging techniques, such as light detection and ranging (LiDAR), are essential tools in remote sensing, robotic vision, and autonomous driving. However, the presence of scattering places fundamental limits on our ability to image through fog, rain, dust, or the atmosphere. Conventional approaches for imaging through scattering media operate at microscopic scales or require a priori knowledge of the target location for 3D imaging. We introduce a technique that co-designs single-photon avalanche diodes, ultra-fast pulsed lasers, and a new inverse method to capture 3D shape through scattering media. We demonstrate acquisition of shape and position for objects hidden behind a thick diffuser (≈6 transport mean free paths) at macroscopic scales. Our technique, confocal diffuse tomography, may be of considerable value to the aforementioned applications.

Introduction

Scattering is a physical process that places fundamental limits on all optical imaging systems. For example, light detection and ranging (LiDAR) systems are crucial for automotive, underwater, and aerial vehicles to sense and understand their surrounding 3D environment. Yet, current LiDAR systems fail in adverse conditions where clouds, fog, dust, rain, or murky water induce scattering. This limitation is a critical roadblock for 3D sensing and navigation systems, hindering robust and safe operation. Similar challenges arise in other macroscopic applications relating to remote sensing or astronomy, where an atmospheric scattering layer hinders measurement capture. In microscopic applications, such as biomedical imaging and neuroimaging1, scattering complicates imaging through tissue or into the brain, and is an obstacle to high-resolution in vivo imaging2. Robust, efficient imaging through strongly scattering media in any of these applications is a challenge because it generally requires solving an inverse problem that is highly ill-posed.

Several different approaches have been proposed to address the challenging problem of imaging through and within scattering media. The various techniques can be broadly classified as relying on ballistic photons, interference of light, or being based on diffuse optical tomography. Ballistic photons travel on a direct path through a medium without scattering and can be isolated using time-gating3,4, coherence-gating5,6, or coherent probing and detection of a target at different illumination angles7. By filtering out scattered photons, the effects of the scattering media can effectively be ignored. While detecting ballistic photons is possible in scattering regimes where the propagation distance is small (e.g., optical coherence tomography8), ballistic imaging becomes impractical for greater propagation distances or more highly scattering media because the number of unscattered photons rapidly approaches zero. Moreover, 3D ballistic imaging typically requires a priori knowledge of the target position in order to calibrate the gating mechanism. Alternatively, methods based on interference of light exploit information in the speckle pattern created by the scattered wavefront to recover an image9,10,11; however, these techniques rely on the memory effect, which holds only for a limited angular field of view, making them most suited to microscopic scales. Other interference-based techniques use wavefront shaping to focus light through or within scattering media, but often require invasive access to both sides of the scattering media12. Guidestar methods13 similarly use wavefront shaping, typically relying on fluorescence14,15,16 or photoacoustic modulation17,18,19 to achieve a sharp focus. Finally, another class of methods reconstructs objects by explicitly modeling and inverting scattering of light. 
For example, non-line-of-sight imaging techniques invert scattering off of a surface or through a thin layer20,21,22,23,24,25,26, but do not account for diffusive scattering. Diffuse optical tomography (DOT) reconstructs objects within thick scattering media by modeling the diffusion of light from illumination sources to detectors placed around the scattering volume27,28. While conventional CMOS detectors have been used for DOT29,30, time-resolved detection2,31,32,33,34,35,36 is promising because it enables direct measurement of the path lengths of scattered photons.

In all cases, techniques for imaging through or within scattering media operate in a tradeoff space: as the depth of the scattering media increases, resolution degrades. So while ballistic imaging and interference-based techniques can achieve micron-scale resolution at microscopic scales37, for highly scattering media at large scales the resolution worsens and key assumptions fail. For example, the number of ballistic photons drops off, the memory effect no longer holds, and coherent imaging requires long reference arms or becomes a challenge due to large bandwidth requirements38. DOT operates without a requirement for isolating ballistic photons or exploiting interference of light. As such, it is one of the most promising directions for capturing objects obscured by highly scattering media at meter-sized scales or greater with centimeter-scale resolution. Still, current techniques for DOT are often invasive, requiring access to both sides of the scattering media35,39, limited to 2D reconstruction, or they require computationally expensive iterative inversion procedures with generally limited reconstruction quality40.

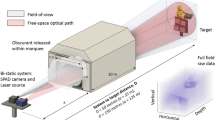

Here, we introduce a technique for noninvasive 3D imaging through scattering media: confocal diffuse tomography (CDT). We apply this technique to a complex and challenging macroscopic imaging regime, modeling and inverting the scattering of photons that travel through a thick diffuser (≈6 transport mean free paths), propagate through free space to a hidden object, and scatter back again through the diffuser. Our insight is that a hardware design patterned after confocal scanning systems (such as commercial LiDARs), combined with emerging single-photon-sensitive, picosecond-accurate detectors and newly developed signal processing transforms, allows for an efficient approximate solution to this challenging inverse problem. By explicitly modeling and inverting scattering processes, CDT incorporates scattered photons into the reconstruction procedure, enabling imaging in regimes where ballistic imaging is too photon-inefficient to be effective. The approach operates with low computational complexity at relatively long range for large, meter-sized imaging volumes.

Results

Experimental setup

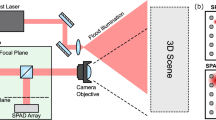

In our experiments, measurements are captured by illuminating points on the surface of the scattering medium using short (≈35 ps) pulses of light from a laser (see Fig. 1). The pulsed laser shares an optical path with a single-pixel, single-photon avalanche diode (SPAD), which is focused on the illuminated point and detects the returning photons (see Supplementary Fig. 1). The SPAD is time gated to prevent saturation of the detector from the direct return of photons from the surface of the scattering medium, preserving sensitivity and bandwidth for photons arriving later in time from the hidden object. A pair of scanning mirrors controlled by a two-axis galvanometer scan the laser and SPAD onto a grid of 32 by 32 points across a roughly 60 by 60 cm area on the scattering medium.

Fig. 1: (a) A pulsed laser and time-resolved single-photon detector raster-scan the surface of the scattering medium. (b) Light diffuses through the medium, is back-reflected by the hidden object, and diffuses back through the medium to the detector. (c) Returning photons from the hidden object are captured by the detector over time, with earlier arriving photons being gated out (dashed line). SG, scanning galvanometer; BS, beamsplitter; OL, objective lens; SPAD, single-photon avalanche diode; TCSPC, time-correlated single-photon counter.

The pulsed laser source has a wavelength of 532 nm and is configured for a pulse repetition rate of 10 MHz with 400 mW average power. For the scattering medium, we use a 2.54-cm thick slab of polyurethane foam. We estimate the scattering properties of the foam by measuring the temporal scattering response and fitting the parameters using a nonlinear regression (see Methods and Supplementary Note 1). The estimated value of the absorption coefficient (\({\mu }_{{\rm{a}}}\)) is \(5.26\times {10}^{-3}\pm 5.5\times {10}^{-5}\ {{\rm{cm}}}^{-1}\) and the reduced scattering coefficient (\({\mu }_{{\rm{s}}}^{\prime}\)) is \(2.62\pm 0.43\ {{\rm{cm}}}^{-1}\). Here, the confidence intervals indicate possible variation in the fitted parameters given uncertainty in the modeling coefficients (see Supplementary Figs. 2 and 3). Thus the length of one transport mean free path is ~3.8 ± 0.6 mm, which is several times smaller than the total thickness of the slab, allowing us to approximate the propagation of light through the foam using diffusion.
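
The arithmetic behind the quoted mean free path can be checked with a short sketch. It assumes the definition \({l}^{* }=1/({\mu }_{{\rm{a}}}+{\mu }_{{\rm{s}}}^{\prime})\) used later in the Discussion and plugs in the nominal fitted values; the function and variable names are illustrative only.

```python
# Worked arithmetic: transport mean free path of the foam and the slab
# thickness expressed in units of that path length.
MU_A_PER_CM = 5.26e-3      # absorption coefficient quoted in the text
MU_S_PRIME_PER_CM = 2.62   # reduced scattering coefficient quoted in the text
SLAB_THICKNESS_CM = 2.54   # foam thickness quoted in the text


def transport_mean_free_path_cm(mu_a: float, mu_s_prime: float) -> float:
    """Return l* = 1 / (mu_a + mu_s') in centimeters."""
    return 1.0 / (mu_a + mu_s_prime)


l_star_cm = transport_mean_free_path_cm(MU_A_PER_CM, MU_S_PRIME_PER_CM)
slab_in_mean_free_paths = SLAB_THICKNESS_CM / l_star_cm

print(f"l* = {10 * l_star_cm:.2f} mm")
print(f"slab thickness = {slab_in_mean_free_paths:.1f} transport mean free paths")
```

With the nominal values this gives a path length of about 3.8 mm and a slab between 6 and 7 transport mean free paths thick, in line with the ≈6 quoted for the diffuser.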

Image formation model

To model light transport through the scattering medium, we solve the diffusion equation for the slab geometry of our setup. In this geometry, the physical interface between the scattering medium and the surrounding environment imposes boundary conditions that must be considered. A common approximation is to use an extrapolated boundary condition where the diffusive intensity is assumed to be zero at a flat surface located some extrapolation distance, \({z}_{{\rm{e}}}\), away from either side of the slab. In other words, for a slab of thickness \({z}_{{\rm{d}}}\), this condition states that the diffusive intensity is zero at \(z=-{z}_{{\rm{e}}}\) and \(z={z}_{{\rm{d}}}+{z}_{{\rm{e}}}\). As we detail in Supplementary Note 2, the value of \({z}_{{\rm{e}}}\) depends on the amount of internal reflection of diffusive intensity due to the refractive index mismatch at the medium-air interface41. To simplify the solution, we further assume that incident photons from a collimated beam of light are initially scattered isotropically at a distance \({z}_{0}=1/{\mu }_{{\rm{s}}}^{\prime}\) into the scattering medium41,42,43.

The solution of the diffusion equation satisfies the extrapolated boundary condition by placing a positive and negative (dipole) source about \(z=-{z}_{{\rm{e}}}\) such that the total diffusive intensity at the extrapolation distance is zero. However, a single dipole source does not satisfy the boundary condition at \(z={z}_{{\rm{d}}}+{z}_{{\rm{e}}}\). Instead, an infinite number of dipole sources is required, where the dipole of the near interface (\(z=-{z}_{{\rm{e}}}\)) is mirrored about the far interface, which is then mirrored about the near interface, and so on, as illustrated in Supplementary Fig. 4. The positions of these positive and negative sources are44
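
Following the mirroring construction described above, the source positions can be written in the standard slab form below. This is a reconstruction from the stated boundary conditions; the indexing convention (\({z}_{i,+}\) for positive sources, \({z}_{i,-}\) for negative sources) is an assumption.

\[
{z}_{i,+}=2i({z}_{{\rm{d}}}+2{z}_{{\rm{e}}})+{z}_{0},\qquad {z}_{i,-}=2i({z}_{{\rm{d}}}+2{z}_{{\rm{e}}})-2{z}_{{\rm{e}}}-{z}_{0},\qquad i=0,\pm 1,\pm 2,\ldots \qquad (1)
\]

For \(i=0\) this places the positive source at \({z}_{0}\) and its negative image at \(-2{z}_{{\rm{e}}}-{z}_{0}\), which are symmetric about \(z=-{z}_{{\rm{e}}}\); the \(i=1\) pair is the mirror image of that dipole about \(z={z}_{{\rm{d}}}+{z}_{{\rm{e}}}\).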

The resulting solution to the diffusion equation is41,44
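
In the standard time-resolved slab transmittance formulation with the dipole positions above, the solution takes the following form. It is a reconstruction consistent with the symbols defined in this section, and it assumes that only the lateral (x, y) offset between \({{\bf{r}}}_{{\bf{0}}}\) and \({{\bf{r}}}_{{\bf{1}}}\) enters the first exponential.

\[
\phi (t,{{\bf{r}}}_{{\bf{0}}},{{\bf{r}}}_{{\bf{1}}})=\frac{1}{2{(4\pi Dc)}^{3/2}{t}^{5/2}}\exp \left(-c{\mu }_{{\rm{a}}}t-\frac{{({r}_{1,x}-{r}_{0,x})}^{2}+{({r}_{1,y}-{r}_{0,y})}^{2}}{4Dct}\right)\sum_{i=-\infty }^{\infty }\left[({z}_{{\rm{d}}}-{z}_{i,+})\exp \left(-\frac{{({z}_{{\rm{d}}}-{z}_{i,+})}^{2}}{4Dct}\right)-({z}_{{\rm{d}}}-{z}_{i,-})\exp \left(-\frac{{({z}_{{\rm{d}}}-{z}_{i,-})}^{2}}{4Dct}\right)\right]\qquad (2)
\]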

where ϕ is the power transmitted through the slab per unit area, \({{\bf{r}}}_{{\bf{0}}}\in {\Omega }_{0}=\{({r}_{0,x},{r}_{0,y},{r}_{0,z})\in {\mathbb{R}}\times {\mathbb{R}}\times {\mathbb{R}}\ | \ {r}_{0,z}=0\}\) is the position illuminated by the laser and imaged by the detector, and \({{\bf{r}}}_{{\bf{1}}}\in {\Omega }_{{z}_{{\rm{d}}}}=\{({r}_{1,x},{r}_{1,y},{r}_{1,z})\in {\mathbb{R}}\times {\mathbb{R}}\times {\mathbb{R}}\ | \ {r}_{1,z}={z}_{{\rm{d}}}\}\) is a spatial position on the far side of the scattering medium (see Fig. 1). We also have that c and t are the speed of light within the medium and time, respectively, and D is the diffusion coefficient, given by \(D={(3({\mu }_{{\rm{a}}}+{\mu }_{{\rm{s}}}^{\prime}))}^{-1}\). Generally, truncating the solution to 7 dipole pairs (i.e., i = 0, ±1, ±2, ±3) is sufficient to reduce the error to a negligible value44.
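
A minimal sketch of the truncated dipole sum follows, assuming the standard slab transmittance form with dipole positions \(2i({z}_{{\rm{d}}}+2{z}_{{\rm{e}}})+{z}_{0}\) and \(2i({z}_{{\rm{d}}}+2{z}_{{\rm{e}}})-2{z}_{{\rm{e}}}-{z}_{0}\). The extrapolation distance `Z_E_CM` is a hypothetical placeholder (its calibrated value is not given in this section), and the in-medium speed of light assumes the refractive index of 1.12 reported in Methods.

```python
import math

C_VACUUM_CM_PER_NS = 29.9792458
REFRACTIVE_INDEX = 1.12   # value reported in Methods
MU_A = 5.26e-3            # cm^-1, from the text
MU_S_PRIME = 2.62         # cm^-1, from the text
Z_D_CM = 2.54             # slab thickness, from the text
Z_E_CM = 0.35             # hypothetical extrapolation distance (placeholder)


def slab_transmittance(t_ns, rho_cm, n_max=3):
    """Time-resolved transmittance at lateral offset rho_cm, truncated to
    the dipole pairs i = -n_max..n_max (n_max=3 gives 7 pairs)."""
    if t_ns <= 0.0:
        return 0.0
    c = C_VACUUM_CM_PER_NS / REFRACTIVE_INDEX      # speed of light in the medium
    diffusion = 1.0 / (3.0 * (MU_A + MU_S_PRIME))  # diffusion coefficient D
    z_0 = 1.0 / MU_S_PRIME                         # depth of the isotropic source
    four_dct = 4.0 * diffusion * c * t_ns
    prefactor = math.exp(-c * MU_A * t_ns - rho_cm**2 / four_dct) / (
        2.0 * (4.0 * math.pi * diffusion * c) ** 1.5 * t_ns**2.5
    )
    total = 0.0
    for i in range(-n_max, n_max + 1):
        period = 2 * i * (Z_D_CM + 2.0 * Z_E_CM)
        d_plus = Z_D_CM - (period + z_0)                   # z_d - z_{i,+}
        d_minus = Z_D_CM - (period - 2.0 * Z_E_CM - z_0)   # z_d - z_{i,-}
        total += d_plus * math.exp(-d_plus**2 / four_dct)
        total -= d_minus * math.exp(-d_minus**2 / four_dct)
    return prefactor * total


seven_pairs = slab_transmittance(1.0, 0.0, n_max=3)
many_pairs = slab_transmittance(1.0, 0.0, n_max=10)
print(f"relative truncation error at 1 ns: {abs(seven_pairs - many_pairs) / many_pairs:.1e}")
```

Comparing `n_max=3` against a longer sum is one way to see why a 7-pair truncation is adequate for this slab at nanosecond time scales.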

The complete measurement model, consisting of diffusion of light through the scattering medium, free-space propagation to and from the hidden object, and diffusion back through the scattering medium is given as
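
Based on the three propagation steps described in this section, the model can be sketched in the following form. This is a reconstruction from the surrounding definitions rather than a verbatim equation; in particular, the arrangement of the time variables \(t^{\prime}\) and \(t^{\prime\prime}\) is an assumption, and \(c\) inside the delta function is taken to be the propagation speed in free space between the slab and the hidden object.

\[
\tau (t,{{\bf{r}}}_{{\bf{0}}})=\int_{{\Omega }_{{z}_{{\rm{d}}}}}\int_{{\Omega }_{{z}_{{\rm{d}}}}}\iint \phi (t^{\prime} ,{{\bf{r}}}_{{\bf{0}}},{{\bf{r}}}_{{\bf{1}}})\,I(t^{\prime\prime} ,{{\bf{r}}}_{{\bf{1}}},{{\bf{r}}}_{{\bf{2}}})\,\phi (t-t^{\prime} -t^{\prime\prime} ,{{\bf{r}}}_{{\bf{2}}},{{\bf{r}}}_{{\bf{0}}})\,{\rm{d}}t^{\prime} \,{\rm{d}}t^{\prime\prime} \,{\rm{d}}{{\bf{r}}}_{{\bf{1}}}\,{\rm{d}}{{\bf{r}}}_{{\bf{2}}},
\]

\[
I(t,{{\bf{r}}}_{{\bf{1}}},{{\bf{r}}}_{{\bf{2}}})=\int_{\Psi }f({\bf{x}},{{\bf{r}}}_{{\bf{1}}},{{\bf{r}}}_{{\bf{2}}})\,\delta \left(\parallel {\bf{x}}-{{\bf{r}}}_{{\bf{1}}}\parallel +\parallel {\bf{x}}-{{\bf{r}}}_{{\bf{2}}}\parallel -ct\right){\rm{d}}{\bf{x}}.\qquad (3)
\]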

Here, the measurements τ(t, r0) are described by integrals over three propagation operations (see Fig. 1): (1) the diffusion of light through the scattering medium from point r0 to r1 as modeled using Eq. (2), (2) the free-space propagation of light from r1 to a point x on the hidden object and back to another point r2, and (3) the diffusion of light back through the scattering medium from r2 to r0. The free-space propagation operator, \(I(t^{\prime} ,{{\bf{r}}}_{{\bf{1}}},{{\bf{r}}}_{{\bf{2}}})\), is composed of a function, f, which describes the light throughput from a point on the scattering medium to a point on the hidden object and incorporates the bidirectional scattering distribution function (BSDF), as well as albedo, visibility, and inverse-square falloff factors45. A delta function, δ, relates distance and propagation time, and integration is performed over time and the hidden volume \({\bf{x}}\in \Psi =\{(x,y,z)\in {\mathbb{R}}\times {\mathbb{R}}\times {\mathbb{R}}\ | \ z\ge {z}_{{\rm{d}}}\}\).

The measurements can be modeled using Eq. (3); however, inverting this model directly to recover the hidden object is computationally infeasible. The computational complexity is driven by the requirement of convolving the time-resolved transmittance of Eq. (2) with I(t, r1, r2) of Eq. (3) for all light paths from all points r1 to all points r2. We introduce an efficient approximation to this model, which takes advantage of our confocal acquisition procedure, where the illumination source and detector share an optical path, and measurements are captured by illuminating and imaging a grid of points on the surface of the scattering medium.

The confocal measurements capture light paths which originate and end at a single illuminated and imaged point on the scattering medium. As light diffuses through the scattering medium, it illuminates a patch on the far side of the scattering medium whose lateral extent is small relative to the axial distance to the hidden object. Likewise, backscattered light incident on that same small patch diffuses back to the detector. We therefore approximate the free-space propagation operator I by modeling only paths that travel from an illuminated point r1, to the hidden object, and back to the same point. In other words, we make the approximation r1 ≈ r2. This approximation results in a simplified convolutional image formation model (see Supplementary Note 2, Supplementary Figs. 5 and 6)
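
With \({{\bf{r}}}_{{\bf{1}}}\approx {{\bf{r}}}_{{\bf{2}}}\), the model reduces to a convolution over time and lateral position, which can be sketched as follows. This is a reconstruction under the stated approximation; the argument convention of the kernel \(\bar{\phi }\) (a function of elapsed time and of the lateral offset \({{\bf{r}}}_{{\bf{1}}}-{{\bf{r}}}_{{\bf{0}}}\)) is an assumption.

\[
\hat{\tau }(t,{{\bf{r}}}_{{\bf{0}}})=\int_{{\Omega }_{{z}_{{\rm{d}}}}}\int \bar{\phi }(t^{\prime} ,{{\bf{r}}}_{{\bf{1}}}-{{\bf{r}}}_{{\bf{0}}})\,I(t-t^{\prime} ,{{\bf{r}}}_{{\bf{1}}},{{\bf{r}}}_{{\bf{1}}})\,{\rm{d}}t^{\prime} \,{\rm{d}}{{\bf{r}}}_{{\bf{1}}}\qquad (4)
\]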

where \(\hat{\tau }\) is the approximated measurement and \(\bar{\phi }\) is a convolutional kernel used to model diffusion through the scattering medium and back.

The continuous convolution operator \(\bar{\phi }\) and the continuous free-space propagation operator I are implemented with discrete matrix operations in practice. We denote the discrete diffusion operator as the convolution matrix (or its equivalent matrix-free operation), \(\bar{{\boldsymbol{\Phi }}}\). Then, let A be the matrix that describes free-space propagation to the hidden object and back, and let ρ represent the hidden object albedo. The full discretized image formation model is then given as \(\hat{{\boldsymbol{\tau }}}=\bar{{\boldsymbol{\Phi }}}{\bf{A}}{\boldsymbol{\rho }}\).

Inversion procedure

We seek to recover the hidden object albedo ρ. In this case, a closed-form solution exists using the Wiener deconvolution filter and a confocal inverse filter A−1 used in non-line-of-sight imaging20,21 (e.g., the Light-Cone Transform22,26 or f–k migration23):
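
Combining a Wiener deconvolution filter with the confocal inverse filter gives an estimate of the following form. It is written here from the definitions of \({\bf{F}}\), \(\hat{\bar{{\boldsymbol{\Phi }}}}\), and \(\alpha\) in this section, with \({\boldsymbol{\tau }}\) denoting the captured measurements; the exact placement of \(\alpha\) in the denominator is an assumption based on the usual Wiener filter.

\[
\hat{{\boldsymbol{\rho }}}={{\bf{A}}}^{-1}{{\bf{F}}}^{-1}\left[\frac{{\hat{\bar{{\boldsymbol{\Phi }}}}}^{* }}{| \hat{\bar{{\boldsymbol{\Phi }}}}{| }^{2}+\frac{1}{\alpha }}\right]{\bf{F}}{\boldsymbol{\tau }}\qquad (5)
\]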

F denotes the discrete Fourier transform matrix, \(\hat{\bar{{\boldsymbol{\Phi }}}}\) is the diagonal matrix whose elements correspond to the Fourier coefficients of the 3D convolution kernel, α is a parameter that varies depending on the signal-to-noise ratio at each frequency, and \(\hat{{\boldsymbol{\rho }}}\) is the recovered solution. Notably, the computational complexity of this method is \(O({N}^{3}\mathrm{log}\,N)\) for an N × N × N measurement volume, where the most costly step is taking the 3D Fast Fourier Transform. We illustrate the reconstruction procedure using CDT in Fig. 2 for a hidden scene consisting of a retroreflective letter ‘S’ placed ~50 cm behind the scattering layer. The initial captured 3D measurement volume is deconvolved with the diffusion model, and f–k migration is used to recover the hidden object. A detailed description of f–k migration and pseudocode for the inversion procedure are provided in Supplementary Notes 3–4. While the Wiener deconvolution procedure assumes that the measurements contain white Gaussian noise, we also derive and demonstrate an iterative procedure to account for Poisson noise in Supplementary Notes 5–6 (see also Supplementary Table 1 and Supplementary Figs. 7–13).
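
The deconvolution step can be illustrated with a short NumPy sketch. It is not the authors' implementation: the kernel is a hypothetical three-tap temporal blur standing in for the calibrated diffusion kernel, the convolution is treated as circular, and the confocal inverse filter \({{\bf{A}}}^{-1}\) (e.g., f–k migration) is omitted.

```python
import numpy as np


def wiener_deconvolve(volume, kernel, alpha):
    """Wiener-deconvolve a 3D volume by a same-shaped kernel.

    Assumes a circular convolution model. alpha plays the role of the
    signal-to-noise parameter and may be a scalar or an array that
    broadcasts against the frequency grid.
    """
    kernel_f = np.fft.fftn(kernel)
    volume_f = np.fft.fftn(volume)
    wiener_filter = np.conj(kernel_f) / (np.abs(kernel_f) ** 2 + 1.0 / alpha)
    return np.real(np.fft.ifftn(wiener_filter * volume_f))


# Toy check on a small (time, y, x) volume with two point-like returns.
shape = (16, 8, 8)
truth = np.zeros(shape)
truth[5, 3, 4] = 1.0
truth[9, 1, 6] = 0.5

# Hypothetical temporal blur (placeholder for the diffusion kernel).
kernel = np.zeros(shape)
kernel[0, 0, 0], kernel[1, 0, 0], kernel[2, 0, 0] = 1.0, 0.5, 0.25

# Simulate the blurred measurement, then invert it.
blurred = np.real(np.fft.ifftn(np.fft.fftn(kernel) * np.fft.fftn(truth)))
recovered = wiener_deconvolve(blurred, kernel, alpha=1e8)
max_error = float(np.max(np.abs(recovered - truth)))
print(f"max reconstruction error: {max_error:.2e}")
```

The cost is dominated by the 3D FFTs, which is where the \(O({N}^{3}\mathrm{log}\,N)\) scaling comes from. In noisy data a much smaller `alpha` would be needed to avoid amplifying frequencies where the kernel response is weak.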

Fig. 2: The captured time-resolved measurements (a) are deconvolved with the calibrated diffusion operator \(\bar{{\boldsymbol{\Phi }}}\) to compensate for the time delay induced by the scattering layer and estimate a measurement volume without diffusive scattering effects (b, top). An x–t slice shows the estimated streak measurement (b, bottom). Applying a confocal inverse filter recovers the hidden retroreflective letter ‘S’ (c, top), which resembles a photograph of the hidden scene (c, bottom). The reconstructed volume measures 60 cm by 60 cm by 50 cm along the x, y, and z dimensions, respectively, and a gamma of 1/3 is applied for visualization. Scale bars indicate 15 cm. The measurement volume is captured with a 1 min acquisition time (60 ms per spatial sample). An overview of the reconstruction procedure is provided in Supplementary Movie 1.

Additional captured measurements and reconstructions of objects behind the scattering medium are shown in Fig. 3. The scenes consist of retroreflective and diffusely reflecting objects: a mannequin figure, two letters at different positions (separated axially by 9 cm), and a single diffuse hidden letter. Each of these scenes is centered ~50 cm behind the scattering medium. Another captured scene consists of three traffic cones positioned behind the scattering medium at axial distances of 45, 65, and 78 cm. While retroreflective hidden objects enable imaging with shorter exposure times due to their light-efficient reflectance properties, we also demonstrate recovery of shape and position in the more general case of the diffuse letter. All measurements shown in Fig. 3 are captured by sampling a 70 cm by 70 cm grid of 32 by 32 points on the scattering layer. Exposure times and recorded photon counts for all experiments are detailed in Supplementary Table 2. The total time required to invert a measurement volume of size 32 by 32 by 128 is approximately 300 ms on a conventional CPU (Intel Core i7 9750H) or 50 ms with a GPU implementation (NVIDIA GTX 1650). We compare the reconstruction from CDT to a time-gating approach, which attempts to capture hidden object structure by isolating minimally scattered photons in a short time slice. Additional comparisons and a sensitivity analysis to the calibrated scattering parameters are described and shown in Supplementary Note 7, Supplementary Figs. 14 and 15.

Fig. 3: A photograph of the hidden retroreflective mannequin (a) and maximum intensity projections of the captured time-resolved measurements (b), a time-gated measurement slice (c), and the CDT reconstruction (d) are shown. A photograph, measurements, and reconstructions are also shown for two letter-shaped retroreflective hidden objects at different distances (e–h), a diffuse hidden object (i–l), and a group of traffic cones (m–p). We apply a depth-dependent scaling to the traffic cone visualization to account for radiometric falloff. Scale bars indicate 15 cm or 1 ns, and a gamma of 1/3 is applied to each maximum intensity projection51. Captured data are included in Supplementary Data 1 and additional visualizations are provided in Supplementary Movie 2.

Discussion

The approximate image formation model of Eq. (4) is valid when the difference in path length to the hidden object from two points within the illuminated spot on the far side of the scattering medium (r1 → x → r2) and a single illuminated point (r1 → x → r1) is less than the system resolution. As the standoff distance between the scattering medium and hidden object increases, this path length difference decreases, and the approximation becomes more accurate. Interestingly, speckle correlation approaches have a similar characteristic where axial range improves with standoff distance10. In our case, using the paraxial approximation allows us to express the condition where Eq. (4) holds as \(c\Delta t\, > \, \frac{{L}^{2}}{2H}\), where Δt is the system temporal resolution, L is the lateral extent of the illuminated spot on the far side of the scattering medium, and H is the standoff distance. In the diffusive regime, spreading of light causes L to scale approximately as the thickness of the scattering layer, \({z}_{{\rm{d}}}\)46. For large incidence angles outside the paraxial regime, for example, for scanning apertures much greater than H, the worst-case approximation error is ≈L. For our prototype system, Δt ≈ 70 ps and cΔt ≈ L, and so even the maximum anticipated approximation error is close to the system resolution.
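
The paraxial condition can be checked numerically for the prototype. The sketch below assumes Δt = 70 ps, H = 50 cm, and L = 2.2 cm (the spot width reported in Methods), and uses the vacuum speed of light for the free-space path; the function name is illustrative.

```python
C_CM_PER_PS = 0.0299792458  # speed of light in vacuum, cm per picosecond


def confocal_condition(dt_ps, spot_cm, standoff_cm):
    """Evaluate c*dt > L^2 / (2H) and return both sides of the inequality."""
    path_resolution_cm = C_CM_PER_PS * dt_ps
    path_difference_cm = spot_cm**2 / (2.0 * standoff_cm)
    return path_resolution_cm > path_difference_cm, path_resolution_cm, path_difference_cm


holds, c_dt_cm, bound_cm = confocal_condition(dt_ps=70.0, spot_cm=2.2, standoff_cm=50.0)
print(f"c*dt = {c_dt_cm:.2f} cm, L^2/(2H) = {bound_cm:.3f} cm, condition holds: {holds}")
```

For these values the left-hand side is roughly 2.1 cm, close to L itself, while the paraxial path length difference is well under a millimeter, so the condition holds with a wide margin.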

In practice, the imaging resolution of the system is mostly dependent on the thickness of the scattering layer and the transport mean free path, \({l}^{* }=1/({\mu }_{{\rm{a}}}+{\mu }_{{\rm{s}}}^{\prime})\). Thick scattering layers cause the illumination pulse to spread out over time, and high-frequency scene information becomes increasingly difficult to recover. The temporal spread of the pulse can be approximated using the diffusive traversal time, \(\Delta {t}_{{\rm{d}}}=\frac{{z}_{{\rm{d}}}^{2}}{6Dc}\), which is the typical time it takes for a photon to diffuse one way through the medium47. If we take the temporal spread for two-way propagation to be approximately twice the diffusive traversal time, we can derive the axial resolution (Δz) and lateral resolution (Δx) in a similar fashion to non-line-of-sight imaging22. This results in \(\Delta z\ge c\Delta {t}_{{\rm{d}}}\) and \(\Delta x\ge \frac{c\sqrt{{w}^{2}+{H}^{2}}}{w}\Delta {t}_{{\rm{d}}}\), where 2w is the width or height of the scanned area on the scattering medium (see Supplementary Note 8, Supplementary Fig. 16). This approximation compares well with our experimental results, where \(2\Delta {t}_{{\rm{d}}}\approx 632\) ps and we measure the full width at half maximum of a pulse transmitted and back-reflected through the scattering medium to be 640 ps (shown in Supplementary Fig. 17). Thus, the axial resolution of the prototype system is ~9 cm and the lateral resolution is ~15 cm for H = 50 cm and w = 35 cm.
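
These estimates can be reproduced with a short calculation. The sketch assumes the nominal calibrated coefficients, an in-medium speed of light based on the refractive index of 1.12 from Methods for the traversal time, and the vacuum speed of light for the resolution bounds; which speed to use in the bounds is an assumption.

```python
import math

C_VACUUM_CM_PER_NS = 29.9792458
REFRACTIVE_INDEX = 1.12  # value reported in Methods


def diffusive_traversal_time_ns(z_d_cm, mu_a, mu_s_prime):
    """One-way diffusive traversal time z_d^2 / (6 D c), with c in the medium."""
    diffusion = 1.0 / (3.0 * (mu_a + mu_s_prime))
    c_medium = C_VACUUM_CM_PER_NS / REFRACTIVE_INDEX
    return z_d_cm**2 / (6.0 * diffusion * c_medium)


def resolution_bounds_cm(dt_d_ns, half_width_cm, standoff_cm, c=C_VACUUM_CM_PER_NS):
    """Return (axial, lateral) lower bounds: c*dt_d and c*sqrt(w^2+H^2)/w*dt_d."""
    axial = c * dt_d_ns
    lateral = c * math.hypot(half_width_cm, standoff_cm) / half_width_cm * dt_d_ns
    return axial, lateral


dt_d_ns = diffusive_traversal_time_ns(2.54, 5.26e-3, 2.62)
axial_cm, lateral_cm = resolution_bounds_cm(dt_d_ns, half_width_cm=35.0, standoff_cm=50.0)
print(f"2*dt_d = {2000 * dt_d_ns:.0f} ps")
print(f"axial >= {axial_cm:.1f} cm, lateral >= {lateral_cm:.1f} cm")
```

Under these assumptions the two-way spread comes out near 633 ps. The bounds are about 9.5 cm and 16.5 cm with the vacuum speed of light, or about 8.5 cm and 14.8 cm if the in-medium speed is used instead, which brackets the quoted ~9 cm and ~15 cm.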

The current image formation model assumes that the scattering medium is of uniform thickness and composition. In practice, materials could have inhomogeneous scattering and absorption coefficients and non-uniform geometry. The proposed technique could potentially be extended to account for non-uniform scattering layer thickness by modeling the variation in geometry when performing a convolution with the solution to the diffusion equation. The confocal inverse filter could likewise be adjusted to account for scattering from nonplanar surfaces, as has been demonstrated23. Modeling light transport through inhomogeneous scattering media is generally more computationally expensive, but can be accomplished by solving the radiative transfer equation48.

While we demonstrate CDT using sensitive SPAD detectors, combining CDT with other emerging detector technologies may enable imaging at still faster acquisition speeds, with thicker scattering layers, or at longer standoff distances, where the number of backscattered photons degrades significantly. For example, superconducting nanowire single-photon detectors can be designed with higher temporal resolution, lower dark-count rates, and shorter dead-times49 than SPADs. Likewise, silicon photomultipliers (SiPMs) with photon-counting capabilities improve photon throughput by timestamping multiple returning photons from each laser pulse50. On the illumination side, using femtosecond lasers would potentially offer improved temporal resolution if paired with an equally fast detector, though this may require increased acquisition times if the average illumination power is decreased.

CDT is a robust, efficient technique for 3D imaging through scattering media enabled by sensitive single-photon detectors, ultra-fast illumination, and a confocal scanning system. By modeling and inverting an accurate approximation of the diffusive scattering processes, CDT overcomes fundamental limitations of traditional ballistic imaging techniques and recovers 3D shape without a priori knowledge of the target depth. We demonstrate computationally efficient reconstruction of object shape and position through thick diffusers at meter-sized scales.

Methods

Details of experimental setup

In the proposed method, measurements are captured by illuminating points on the surface of the scattering medium using short (≈35 ps) pulses of light from a laser (NKT Katana 05HP). The pulsed laser shares an optical path through a polarizing beamsplitter (Thorlabs PBS251) with a single-pixel, single-photon avalanche diode with a 50 × 50 μm active area (Micro Photon Devices PDM Series Fast-Gated SPAD), which is focused on the illuminated point using a 50-mm objective lens (Nikon Nikkor f/1.4). We use the gating capability of the SPAD to turn the detector on just before scattered photons arrive from the hidden object, and detected photons are timestamped using a time-correlated single-photon counter or TCSPC (PicoQuant PicoHarp 300). The combined timing resolution of the system is ~70 ps. The laser and SPAD are scanned onto a grid of 32 by 32 points on the surface of the scattering medium using a pair of mirrors scanned with a two-axis galvanometer (Thorlabs GVS-012) and controlled using a National Instruments data acquisition device (NI-DAQ USB-6343). The pulsed laser source has a wavelength of 532 nm and is configured for a pulse repetition rate of 10 MHz with 400 mW average power. Please refer to Supplementary Fig. 1 and Supplementary Movie 1 for visualizations of the hardware prototype.

Calibration of the scattering layer

The reduced scattering and absorption coefficients of the scattering layer (comprising a 2.54-cm thick piece of polyurethane foam) are calibrated by illuminating the scattering layer from one side using the pulsed laser source and measuring the temporal response of the transmitted light at the other side (see Supplementary Figs. 2 and 3) using a single-pixel SPAD detector (Micro Photon Devices PDM series free-running SPAD). The captured measurement is modeled as the temporal response of the foam (given by Eq. (2)) convolved with the calibrated temporal response of the laser and SPAD. Measurements are captured for 15 different thicknesses of the scattering layer, from ~2.54 to 20.32 cm in increments of 1.27 cm. The reduced scattering and absorption coefficients are then found by minimizing the squared error between the measurement model and the observed data across all measurements. A main source of uncertainty in the calibrated values is the value used for the extrapolation distance, which depends on the refractive index of the medium. We find that fixing the refractive index to a value of 1.12 achieves the best fit; however, to quantify uncertainty in the model parameters we also run the optimization after perturbing the refractive index within ±10% of the nominal value (from 1.01 to 1.23). The resulting parameters are \({\mu }_{{\rm{s}}}^{\prime}=2.62\pm 0.43\ {{\rm{cm}}}^{-1}\) and \({\mu }_{{\rm{a}}}=5.26\times {10}^{-3}\pm 5.5\times {10}^{-5}\ {{\rm{cm}}}^{-1}\), where the confidence intervals indicate the range containing 95% of the optimized values. We provide additional details about the optimization procedure in Supplementary Note 1.
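
The calibration sweep described above can be enumerated with a short sketch, which confirms that 1.27 cm steps from 2.54 to 20.32 cm give 15 thicknesses and that ±10% around a refractive index of 1.12 spans roughly 1.01 to 1.23. The helper names are illustrative and are not part of the released code.

```python
def calibration_thicknesses_cm(first=2.54, last=20.32, step=1.27):
    """List the slab thicknesses used for calibration, in centimeters."""
    count = round((last - first) / step) + 1
    return [round(first + k * step, 2) for k in range(count)]


def perturbed_index_range(nominal=1.12, fraction=0.10):
    """Return the lower and upper refractive index after a +/- fraction perturbation."""
    return nominal * (1.0 - fraction), nominal * (1.0 + fraction)


thicknesses = calibration_thicknesses_cm()
index_low, index_high = perturbed_index_range()
print(f"{len(thicknesses)} thicknesses from {thicknesses[0]} to {thicknesses[-1]} cm")
print(f"refractive index range: {index_low:.2f} to {index_high:.2f}")
```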

The full width at half maximum of the spot illuminated by the laser on the far side of the scattering medium is measured to be 2.2 cm, which approximately corresponds to the thickness of the scattering medium (see Supplementary Fig. 5).

Data availability

Measured data supporting the results shown in Fig. 2 and Fig. 3 are available within Supplementary Data 1. Data are also available online at https://github.com/computational-imaging/confocal-diffuse-tomography and from the authors upon request.

Code availability

Computer code supporting the findings of this study is available within Supplementary Data 1 and online at https://github.com/computational-imaging/confocal-diffuse-tomography.

References

Ntziachristos, V. Going deeper than microscopy: the optical imaging frontier in biology. Nat. Methods 7, 603–614 (2010).

Eggebrecht, A. T. et al. Mapping distributed brain function and networks with diffuse optical tomography. Nat. Photon. 8, 448–454 (2014).

Wang, L., Ho, P., Liu, C., Zhang, G. & Alfano, R. Ballistic 2-D imaging through scattering walls using an ultrafast optical Kerr gate. Science 253, 769–771 (1991).

Redo-Sanchez, A. et al. Terahertz time-gated spectral imaging for content extraction through layered structures. Nat. Commun. 7, 1–7 (2016).

Indebetouw, G. & Klysubun, P. Imaging through scattering media with depth resolution by use of low-coherence gating in spatiotemporal digital holography. Opt. Lett. 25, 212–214 (2000).

Dunsby, C. & French, P. Techniques for depth-resolved imaging through turbid media including coherence-gated imaging. J. Phys. D. 36, R207 (2003).

Kang, S. et al. Imaging deep within a scattering medium using collective accumulation of single-scattered waves. Nat. Photon. 9, 253–258 (2015).

Huang, D. et al. Optical coherence tomography. Science 254, 1178–1181 (1991).

Bertolotti, J. et al. Non-invasive imaging through opaque scattering layers. Nature 491, 232–234 (2012).

Katz, O., Heidmann, P., Fink, M. & Gigan, S. Non-invasive single-shot imaging through scattering layers and around corners via speckle correlations. Nat. Photon. 8, 784–790 (2014).

Popoff, S., Lerosey, G., Fink, M., Boccara, A. C. & Gigan, S. Image transmission through an opaque material. Nat. Commun. 1, 1–5 (2010).

Vellekoop, I. M. & Mosk, A. Focusing coherent light through opaque strongly scattering media. Opt. Lett. 32, 2309–2311 (2007).

Horstmeyer, R., Ruan, H. & Yang, C. Guidestar-assisted wavefront-shaping methods for focusing light into biological tissue. Nat. Photon. 9, 563–571 (2015).

Vellekoop, I. M., Cui, M. & Yang, C. Digital optical phase conjugation of fluorescence in turbid tissue. Appl. Phys. Lett. 101, 81108 (2012).

Wang, K. et al. Rapid adaptive optical recovery of optimal resolution over large volumes. Nat. Methods 11, 625–628 (2014).

Katz, O., Small, E., Guan, Y. & Silberberg, Y. Noninvasive nonlinear focusing and imaging through strongly scattering turbid layers. Optica 1, 170–174 (2014).

Xu, X., Liu, H. & Wang, L. V. Time-reversed ultrasonically encoded optical focusing into scattering media. Nat. Photon. 5, 154–157 (2011).

Judkewitz, B., Wang, Y. M., Horstmeyer, R., Mathy, A. & Yang, C. Speckle-scale focusing in the diffusive regime with time reversal of variance-encoded light (TROVE). Nat. Photon. 7, 300–305 (2013).

Lai, P., Wang, L., Tay, J. W. & Wang, L. V. Photoacoustically guided wavefront shaping for enhanced optical focusing in scattering media. Nat. Photon. 9, 126–132 (2015).

Velten, A. et al. Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat. Commun. 3, 1–8 (2012).

Liu, X. et al. Non-line-of-sight imaging using phasor-field virtual wave optics. Nature 572, 620–623 (2019).

O’Toole, M., Lindell, D. B. & Wetzstein, G. Confocal non-line-of-sight imaging based on the light-cone transform. Nature 555, 338–341 (2018).

Lindell, D. B., Wetzstein, G. & O’Toole, M. Wave-based non-line-of-sight imaging using fast f–k migration. ACM Trans. Graph. 38, 1–13 (2019).

Faccio, D., Velten, A. & Wetzstein, G. Non-line-of-sight imaging. Nat. Rev. Phys. 2, 318–327 (2020).

Liu, X., Bauer, S. & Velten, A. Phasor field diffraction based reconstruction for fast non-line-of-sight imaging systems. Nat. Commun. 11, 1–13 (2020).

Young, S., Lindell, D. B. & Wetzstein, G. Non-line-of-sight surface reconstruction using the directional light-cone transform. in Proc. CVPR pp. 1407–1416 (2020).

Boas, D. A. et al. Imaging the body with diffuse optical tomography. IEEE Signal Process. Mag. 18, 57–75 (2001).

Gibson, A. & Dehghani, H. Diffuse optical imaging. Philos. Trans. R. Soc. A 367, 3055–3072 (2009).

Arridge, S. R. & Schweiger, M. A gradient-based optimisation scheme for optical tomography. Opt. Express 2, 213–226 (1998).

Konecky, S. D. et al. Imaging complex structures with diffuse light. Opt. Express 16, 5048–5060 (2008).

Hebden, J. C., Hall, D. J. & Delpy, D. T. The spatial resolution performance of a time-resolved optical imaging system using temporal extrapolation. Med. Phys. 22, 201–208 (1995).

Cai, W. et al. Time-resolved optical diffusion tomographic image reconstruction in highly scattering turbid media. Proc. Natl Acad. Sci. USA 93, 13561 (1996).

Satat, G., Tancik, M. & Raskar, R. Towards photography through realistic fog. in Proc. ICCP pp. 1–10 (2018).

Gariepy, G. et al. Single-photon sensitive light-in-flight imaging. Nat. Commun. 6, 1–7 (2015).

Lyons, A. et al. Computational time-of-flight diffuse optical tomography. Nat. Photon. 13, 575–579 (2019).

Durduran, T., Choe, R., Baker, W. B. & Yodh, A. G. Diffuse optics for tissue monitoring and tomography. Rep. Prog. Phys. 73, 076701 (2010).

Badon, A. et al. Smart optical coherence tomography for ultra-deep imaging through highly scattering media. Sci. Adv. 2, e1600370 (2016).

Wang, Z. et al. Cubic meter volume optical coherence tomography. Optica 3, 1496–1503 (2016).

Satat, G., Heshmat, B., Raviv, D. & Raskar, R. All photons imaging through volumetric scattering. Sci. Rep. 6, 1–8 (2016).

Hoshi, Y. & Yamada, Y. Overview of diffuse optical tomography and its clinical applications. J. Biomed. Opt. 21, 091312 (2016).

Patterson, M. S., Chance, B. & Wilson, B. C. Time resolved reflectance and transmittance for the noninvasive measurement of tissue optical properties. Appl. Opt. 28, 2331–2336 (1989).

Farrell, T. J., Patterson, M. S. & Wilson, B. A diffusion theory model of spatially resolved, steady-state diffuse reflectance for the noninvasive determination of tissue optical properties in vivo. Med. Phys. 19, 879–888 (1992).

Haskell, R. C. et al. Boundary conditions for the diffusion equation in radiative transfer. J. Opt. Soc. Am. A 11, 2727–2741 (1994).

Contini, D., Martelli, F. & Zaccanti, G. Photon migration through a turbid slab described by a model based on diffusion approximation. I. Theory. Appl. Opt. 36, 4587–4599 (1997).

Xin, S. et al. A theory of Fermat paths for non-line-of-sight shape reconstruction. in Proc. CVPR pp. 6800–6809 (2019).

Freund, I. Looking through walls and around corners. Phys. A 168, 49–65 (1990).

Landauer, R. & Büttiker, M. Diffusive traversal time: effective area in magnetically induced interference. Phys. Rev. B 36, 6255–6260 (1987).

Gkioulekas, I., Levin, A. & Zickler, T. An evaluation of computational imaging techniques for heterogeneous inverse scattering. in Proc. ECCV pp. 685–701 (2016).

Natarajan, C. M., Tanner, M. G. & Hadfield, R. H. Superconducting nanowire single-photon detectors: physics and applications. Supercond. Sci. Technol. 25, 063001 (2012).

Buzhan, P. et al. Silicon photomultiplier and its possible applications. Nucl. Instrum. Methods Phys. Res. A 504, 48–52 (2003).

Pettersen, E. F. et al. UCSF Chimera – a visualization system for exploratory research and analysis. J. Comput. Chem. 25, 1605–1612 (2004).

Acknowledgements

D.B.L. is supported by a Stanford Graduate Fellowship in Science and Engineering. G.W. is supported by a National Science Foundation CAREER award (IIS 1553333), a Sloan Fellowship, the DARPA REVEAL program, the ARO (PECASE Award W911NF-19-1-0120), and by the KAUST Office of Sponsored Research through the Visual Computing Center CCF grant.

Author information

Contributions

D.B.L. conceived the method, developed the experimental setup, captured the measurements, and implemented the reconstruction procedures. G.W. supervised all aspects of the project. Both authors took part in designing the experiments and writing the paper and Supplementary Information.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information: Nature Communications thanks Daniele Faccio and the other anonymous reviewer(s) for their contribution to the peer review of this work.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access: This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third-party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lindell, D.B., Wetzstein, G. Three-dimensional imaging through scattering media based on confocal diffuse tomography. Nat Commun 11, 4517 (2020). https://doi.org/10.1038/s41467-020-18346-3

DOI: https://doi.org/10.1038/s41467-020-18346-3
