Abstract

Real-time imaging of countless femtosecond dynamics requires extreme speeds orders of magnitude beyond the limits of electronic sensors. Existing femtosecond imaging modalities either require event repetition or provide single-shot acquisition with no more than \(10^{13}\) frames per second (fps) and \(3 \times 10^{2}\) frames. Here, we report compressed ultrafast spectral photography (CUSP), which attains several new records in single-shot multi-dimensional imaging speeds. In active mode, CUSP achieves both \(7 \times 10^{13}\) fps and \(10^{3}\) frames simultaneously by synergizing spectral encoding, pulse splitting, temporal shearing, and compressed sensing, enabling unprecedented quantitative imaging of rapid nonlinear light-matter interaction. In passive mode, CUSP provides four-dimensional (4D) spectral imaging at \(0.5 \times 10^{12}\) fps, allowing the first single-shot spectrally resolved fluorescence lifetime imaging microscopy (SR-FLIM). As a real-time multi-dimensional imaging technology with the highest speeds and most frames, CUSP is envisioned to play instrumental roles in numerous pivotal scientific studies without the need for event repetition.

Introduction

Cameras’ imaging speeds fundamentally limit humans’ capability in discerning the physical world. Over the past decades, imaging technologies based on silicon sensors, such as CCD and CMOS, were extensively improved to offer imaging speeds up to millions of frames per second (fps)1. However, they fall short in capturing a rich variety of extremely fast phenomena, such as ultrashort light propagation2, radiative decay of molecules3, soliton formation4, shock wave propagation5, nuclear fusion6, photon transport in diffusive media7, and morphologic transients in condensed matters8. Successful studies into these phenomena lay the foundations for modern physics, biology, chemistry, material science, and engineering. To observe these events, a frame rate well beyond a billion fps or even a trillion fps (Tfps) is required. Currently, the most widely implemented method is to trigger the desired event numerous times and meanwhile observe it through a narrow time window at different time delays, which is termed the pump-probe method9,10. Unfortunately, it is unable to record the event in real-time and thus only applicable to phenomena that are highly repeatable. Here, real-time imaging is defined as multi-dimensional observation at the same time as the event occurs without event repetition. It has been a long-standing challenge for researchers to invent real-time ultrafast cameras11.

Recently, a handful of groups presented several exciting single-shot trillion-fps imaging modalities, including sequentially-timed all-optical mapping photography12,13,14, frequency-dividing imaging15, non-collinear optical parametric amplifier16, frequency-domain streak imaging17, and compressed ultrafast photography (CUP)18,19. Nevertheless, none of them has imaging speeds beyond 10 Tfps. In addition, the first three methods have their sequence depths (i.e., the number of captured frames in each acquisition) limited to <10 frames because the complexity of their systems grows proportionally to the sequence depth. One promising approach is CUP19, which combines a streak camera with compressed sensing18. A standard streak camera, which has a narrow entrance slit, is a one-dimensional (1D) ultrafast imaging device20 that first converts photons to photoelectrons, then temporally shears the electrons by a fast sweeping voltage, and finally converts electrons back to photons before they are recorded by an internal camera (see the “Methods” section and Supplementary Fig. 1). In CUP, imaging two-dimensional (2D) transient events is enabled by a wide open entrance slit and a scheme of 2D spatial encoding combined with temporal compression19. Unfortunately, CUP’s frame rate relies on the streak camera’s capability in deflecting electrons, and its sequence depth (300 frames) is tightly constrained by the number of sensor pixels in the shearing direction.

Here, we present CUSP, as the fastest real-time imaging modality with the largest sequence depth, overcoming these barriers with the introduction of multiple advanced concepts. It breaks the limitation in speed by employing spectral dispersion in the direction orthogonal to temporal shearing, extending to spectro-temporal compression. Furthermore, CUSP sets a new milestone in sequence depth by exploiting pulse splitting. We experimentally demonstrated 70-Tfps real-time imaging of a spatiotemporally chirped pulse train and ultrashort light pulse propagation inside a solid nonlinear Kerr medium. With minimum modifications, CUSP can function as the fastest single-shot 4D spectral imager [i.e., \(\left( {x,y,t,\lambda } \right)\) information], empowering single-shot spectrally resolved fluorescence lifetime imaging microscopy (SR-FLIM). We monitored the spectral evolution of fluorescence in real time and studied the unusual relation between fluorophore concentration and lifetime at high concentration.

Results

Principles of CUSP

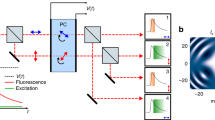

The CUSP system (Fig. 1a) consists of an imaging section and an illumination section. It can work in either active or passive mode, depending on whether a specially engineered illumination beam is required for imaging12,13,14,15,16,17,18,19. The imaging section is shared by both modes, while the illumination section is for active mode only. In the imaging section, after a dynamic scene \(I\left( {x,y,t,\lambda } \right)\) is imaged by an interchangeable lens system, the light path is split into two. In one path, an external camera captures a time-unsheared spectrum-undispersed image (defined as u-View). In the other path, the image is encoded by a digital micromirror device (DMD), displaying a static pseudo-random binary pattern, and is then relayed to the fully opened entrance port of a streak camera (see the “Methods” section). Spatial encoding by either pseudo-random14,18,19,21 or designed22,23,24 patterns is a technique extensively applied in compressed sensing. A diffraction grating, inserted in front of the streak camera, spectrally disperses the scene in the horizontal direction (see Fig. 1b). After being detected by the streak camera’s photocathode, the spatially encoded and spectrally dispersed image experiences temporal shearing in the vertical direction inside the streak tube first and then spatiotemporal-spectrotemporal integration by an internal camera (see Fig. 1c). The streak camera, at the end, acquires a time-sheared spectrum-dispersed image (defined as s-View). See Supplementary Notes 1 and 2 for the characterizations of the streak camera and the imaging section, respectively. Retrieving \(I\) from the raw images in u-View and s-View is an under-sampled inverse problem. Fortunately, the encoded recording allows us to reconstruct the scene by solving the minimization problem aided by regularization (detailed in the “Methods” section)18,19.

a Complete schematic of the system. The beamsplitter pair followed by a glass rod converts a single femtosecond pulse into a temporally linearly chirped pulse train with neighboring sub-pulses separated by \(t_{{\mathrm{sp}}}\), which can be tuned according to the experiment. b Detailed illustration of the spectral dispersion scheme (black dashed box). c Composition of a raw CUSP image in s-View, which includes both spectral dispersion by the grating in the horizontal direction and temporal shearing by the streak camera in the vertical direction. BS, beamsplitter; DMD, digital micromirror device; G, diffraction grating; L, lens; M, mirror. Equipment details are listed in the “Methods” section.

In active mode, we encode time into spectrum via the illumination section, which first converts a broadband femtosecond pulse to a pulse train with neighboring sub-pulses separated by time \(t_{{\mathrm{sp}}}\), using a pair of high-reflectivity beamsplitters. In the following step, the pulse train is sent through a homogeneous glass rod to temporally stretch and chirp each sub-pulse. Since this chirp is linear, each wavelength in the pulse bandwidth carries a specific time fingerprint. Thereby, this pulse train is sequentially timed by \(t\left( {p,\lambda } \right) = pt_{{\mathrm{sp}}} + \eta \left( {\lambda - \lambda _0} \right)\), where \(p = 0,\,1,\,2\,, \ldots ,\,\left( {P - 1} \right)\) represents the sub-pulse sequence, \(\eta\) is the overall chirp parameter, and \(\lambda _0\) is the minimum wavelength in the pulse bandwidth. This timed pulse train then illuminates a dynamic scene \(I\left( {x,y,t} \right) = I\left( {x,y,t\left( {p,\lambda } \right)} \right)\), which is subsequently acquired by the imaging section. See Supplementary Note 3 for experimental details on the illumination section.
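The time-to-spectrum mapping above can be sketched numerically. This is a minimal illustration, not the authors' code: the function and constant names are hypothetical, and the numerical values (\(t_{{\mathrm{sp}}} = 2\) ps, \(\eta = 52.6\) fs nm−1, \(\lambda _0 = 785\) nm) are taken from the parameters quoted elsewhere in this paper.

```python
import numpy as np

# Illustrative values quoted elsewhere in the paper; all names are hypothetical.
T_SP_FS = 2000.0       # sub-pulse separation t_sp (2 ps), in fs
ETA_FS_PER_NM = 52.6   # overall chirp parameter eta, in fs/nm
LAMBDA0_NM = 785.0     # minimum wavelength lambda_0 of the used bandwidth, in nm


def arrival_time_fs(p, wavelength_nm):
    """Time stamp t(p, lambda) = p * t_sp + eta * (lambda - lambda_0), in fs."""
    wavelength_nm = np.asarray(wavelength_nm, dtype=float)
    return p * T_SP_FS + ETA_FS_PER_NM * (wavelength_nm - LAMBDA0_NM)


# Time stamps carried by three wavelengths in the first three sub-pulses.
wavelengths_nm = np.array([785.0, 804.0, 823.0])
for p in range(3):
    print(p, arrival_time_fs(p, wavelengths_nm))
```

With these values, the chirp across the 38-nm bandwidth spans about 2 ps, which is close to the sub-pulse separation, so consecutive sub-pulses cover the observation window almost without gaps.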

In active CUSP, the imaging frame rate is determined by \(R_{\mathrm{a}} = \left| \mu \right|/\left( {\left| \eta \right|d} \right)\), where \(\mu\) is the spectral dispersion parameter of the system, and \(d\) is the streak camera’s pixel size. The sequence depth is \(N_{{\mathrm{ta}}} = PB_{\mathrm{i}}\left| \mu \right|/d\), where \(P\) is the number of sub-pulses, and \(B_{\mathrm{i}}\) is the used spectral bandwidth of the illuminating light pulse (785 nm to 823 nm). Passive CUSP does not rely on engineered illumination, and therefore, it is well suited to image various luminescent objects11. The independence of \(t\) and \(\lambda\) allows a 4D transient, \(I\left( {x,y,t,\lambda } \right)\), to be imaged using the same algorithm without translating wavelength into time. Instead of extracting \(P\) discrete sub-pulses, passive CUSP needs to resolve \(N_{{\mathrm{tp}}}\) consecutive frames with a frame rate of \(R_{\mathrm{p}} = v/d\), where \(v\) is the sweeping speed of the streak tube. This calculation is based on the fact that the scene is sheared by one pixel per frame in the vertical direction (\(y_{\mathrm{s}}\)) so that the time interval between adjacent frames is \(d/v\). The number of sampled wavelengths is \(N_\lambda = B_{\mathrm{e}}\left| \mu \right|/d\), where \(B_{\mathrm{e}}\) is the bandwidth of the emission spectrum from the object. Supplementary Notes 4 and 5 contain additional information on the data acquisition model and reconstruction algorithm.

Imaging an ultrafast linear optical phenomenon

Simultaneous characterization of an ultrashort light pulse spatially, temporally, and spectrally is essential for studies on laser dynamics4 and multimode fibers25. Here, in the first demonstration, we created a spatially and temporally chirped pulse by a grating pair (Fig. 2a). Negative and positive temporal chirps from a 270-mm-long glass rod and the grating pair, respectively, were carefully balanced so that the combined temporal spread \(t_{\mathrm{d}}\) was close to the sub-pulse separation \(t_{{\mathrm{sp}}} = 2\,{\mathrm{ps}}\) (detailed in Supplementary Note 6). The pulse train irradiated a sample of printed letters, which served as the dynamic scene (see its location in Fig. 1a). Exemplary frames from CUSP reconstruction with a field of view (FOV) of 12.13 mm × 9.03 mm are summarized in Fig. 2b. See the full movie in Supplementary Movie 1. It shows that each sub-pulse swiftly sweeps across the letters from left to right. Due to spatial chirping by the grating pair, the illumination wavelength also changes from short to long over time. The normalized light intensity at a selected spatial point, plotted in Fig. 2c, contains five peaks that correspond to five sub-pulses. Each peak represents one temporal point-spread-function (PSF) of the active CUSP system. The peaks have an average full width at half maximum (FWHM) of 240 fs, corresponding to 4.5 nm in the spectrum domain (i.e., spectral resolution of active CUSP). Fourier transforming the intensity in the spectrum domain to the time domain gives a pulse with a FWHM of 207 fs, indicating that our system operates at the optimal condition bounded by the time-bandwidth limit and temporal chirp13. In the high-spectral-resolution regime, the Fourier-transformation relation between pulse bandwidth and duration dominates in determining temporal resolution, and thus a finer spectral resolution broadens the temporal PSF. In the low-spectral-resolution regime, by contrast, temporal chirp takes over, such that a poorer spectral resolution also leads to a broader temporal PSF. Hence, there exists an optimal spectral resolution that enables the best temporal resolution26.

a Schematic of the system used to impart both spatial and temporal chirps to the incoming pulse train using a pair of reflective diffraction gratings (G1 and G2). Each sub-pulse has a positive temporal chirp and illuminates the sample successively. b Selected frames from the reconstructed 70-Tfps movie of the spatially and temporally chirped pulse train sweeping a group of letters. Each frame is cropped from the full field of view to reduce blank space. A 2D map uses color to represent illumination wavelength and grayscale to represent intensity. Intensity is normalized to the maximum, and its color map is saturated at 0.5 to display weak intensities better. See the entire sequence in Supplementary Movie 1. Scale bars: 1 mm. c Temporal profile of the light intensity at \((x_1, y_1)\) (white arrow in b) from the state-of-the-art T-CUP (red dashed line), CUSP (black solid line) and the transform limit (cyan dash-dotted line) calculated based on the CUSP curve in the spectral domain. Inset: normalized light intensity profiles from CUSP and T-CUP in the first 2 ps. d Spatially integrated total intensity over wavelength for five sub-pulses (magenta lines). The reference spectrum (green line) was measured by a spectrometer.

Using a dispersion parameter μ = 23.5 μm nm−1, a chirp parameter \(\eta = 52.6\,{\mathrm{fs}}\,{\mathrm{nm}}^{ - 1}\) and a pixel size d = 6.45 μm, our active CUSP offers a frame rate as high as 70 Tfps. A control experiment imaged the same scene using the state-of-the-art trillion-frame-per-second CUP (T-CUP) technique with 10 Tfps18 (see Supplementary Movie 1). Our system design allows flexible and reliable transitions between CUSP and T-CUP, as explained in Supplementary Note 7 and Supplementary Fig. 10. T-CUP’s reconstructed intensity evolution at the same point exhibits a temporal spread 3.2× wider than that of CUSP. In addition, within any time window, CUSP achieves 7× increase in the number of frames compared with T-CUP (see the blue solid lines in the inset of Fig. 2c). Thus, CUSP surpasses the currently fastest single-shot imaging modality in terms of both temporal resolution and sequence depth. Figure 2d plots the reconstructed total light intensities of the five sub-pulses versus the illumination wavelength. Their profiles are close to the ground truth measured by a spectrometer.
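As a quick check of the quoted frame rate, the expression \(R_{\mathrm{a}} = \left| \mu \right|/\left( {\left| \eta \right|d} \right)\) can be evaluated with the parameters stated above. The sketch below is only a unit-conversion aid; the function name is hypothetical.

```python
def active_frame_rate_tfps(mu_um_per_nm, eta_fs_per_nm, pixel_um):
    """Evaluate R_a = |mu| / (|eta| * d) and return it in Tfps.

    Units: (um/nm) / ((fs/nm) * um) = frames per femtosecond.
    """
    frames_per_fs = abs(mu_um_per_nm) / (abs(eta_fs_per_nm) * pixel_um)
    return frames_per_fs * 1e3  # 1 frame/fs = 1e15 fps = 1e3 Tfps


rate_tfps = active_frame_rate_tfps(23.5, 52.6, 6.45)
frame_interval_fs = 1e3 / rate_tfps  # time between adjacent frames, in fs

print(f"R_a ~ {rate_tfps:.1f} Tfps, frame interval ~ {frame_interval_fs:.1f} fs")
```

The result is about 69 Tfps, which the text rounds to 70 Tfps; the corresponding frame interval is roughly 14 fs.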

Imaging an ultrafast nonlinear optical phenomenon

Nonlinear light-matter interactions are indispensable in optical communications27 and quantum optics28. Optical-field-induced birefringence, as a result of third-order nonlinearity, has been widely utilized in mode-locked laser29 and ultrafast imaging30,31. Here, in our second demonstration, we focused a single 48-fs laser pulse (referred to as the ‘gate’ pulse), centered at 800 nm and linearly polarized along the y direction, into a Bi4Ge3O12 (BGO) slab to induce transient birefringence, as schematically illustrated in Fig. 3a. A second beam (referred to as the ‘detection’ pulse)—a temporally chirped pulse train from the illumination section of the CUSP system—was incident on the slab from an orthogonal direction, going through a pair of linear polarizers that sandwich the BGO. This is a Kerr gate setup since the two polarizers have polarization axes aligned at +45° and –45°, respectively31,32. The Kerr gate has a finite transmittance of \(T_{{\mathrm{Kerr}}} = \left( {1 - \cos \varphi } \right)/2\) only where the gate pulse travels. Here, \(\varphi\), proportional to the gate pulse intensity, represents the gate-induced phase delay between the two orthogonal polarization directions x and y (see Supplementary Note 8 for its definition and measurement).
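The Kerr-gate transmittance formula can be explored with a short numerical sketch. It only evaluates \(T_{{\mathrm{Kerr}}} = \left( {1 - \cos \varphi } \right)/2\); the function name and the sample phase values are illustrative assumptions, not measured quantities.

```python
import numpy as np


def kerr_transmittance(phi):
    """T_Kerr = (1 - cos(phi)) / 2 for a gate-induced phase delay phi (radians)."""
    return (1.0 - np.cos(phi)) / 2.0


# Illustrative phase delays only: no gate pulse, weak gate, and half-wave delay.
for phi in (0.0, 0.1, np.pi):
    print(f"phi = {phi:.3f} rad -> T_Kerr = {kerr_transmittance(phi):.5f}")
```

The gate is closed where no gate pulse is present (\(\varphi = 0\)), and for small phase delays the transmittance grows approximately as \(\varphi ^2/4\), so it depends nonlinearly on the gate pulse intensity.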

a Schematic of single-shot imaging of an ultrashort intense light pulse propagating in a Kerr medium (BGO). Polarizers P1 and P2 have polarization axes aligned at +45° and –45°, respectively. A long-pass filter, LPF, is used to block multi-photon fluorescence. A complete schematic is in Supplementary Fig. 10. b, c 3D visualizations of the reconstructed 980-frame movies acquired at 70 Tfps when the gate focus is (b) outside and (c) inside the field of view (FOV). Transmittance is normalized to each maximum. See the full movies in Supplementary Movie 2. The bottom surfaces plot the centroid x positions of the reconstructed gate pulse versus time and the corresponding theoretical calculations. d, e Representative snapshots of the normalized transmittance profiles (i.e. beam profiles of the gate pulse) in the \(x\)–\(y\) plane for d Experiment 1 and e Experiment 2, at selected time frames defined by blue dashed boxes in (b) and (c), respectively. Scale bars: 0.1 mm. f Evolution of the locally normalized light transmittance at a selected spatial point in Experiment 1 [cyan cross in (d)].

CUSP imaged the gate pulse, with a peak power density of \(5.6 \times 10^{14}\,{\mathrm{mW}}\,{\mathrm{cm}}^{ - 2}\) at its focus, propagating in the BGO slab. In the first and second experiments, the gate focus was outside and inside the FOV (2.48 mm × 0.76 mm in size), respectively. Figures 3b and c contain 3D visualizations of the reconstructed dynamics, which are also shown in Supplementary Movie 2. Snapshots are shown in Figs. 3d and e. As the gate pulse travels and focuses, the accumulated phase delay \(\varphi\) increases, and therefore \(T_{{\mathrm{Kerr}}}\) becomes larger. The centroid positions of the gate pulse (i.e., the transmission region in the Kerr medium) along the horizontal axis x versus time t are plotted at the bottom of Figs. 3b and c, matching well with the theoretical estimation based on a refractive index of 2.07. Note that seven sub-pulses were included in the illumination to provide a 14-ps-long observation window and capture a total of 980 frames with an imaging speed of 70 Tfps. Based on the definition of \(N_{{\mathrm{ta}}}\), each 2-ps sub-pulse encodes 140 frames in its spectrum, and seven sub-pulses arranged in sequence offer 980 frames in total.
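The frame count can be cross-checked in two ways: from the definition \(N_{{\mathrm{ta}}} = PB_{\mathrm{i}}\left| \mu \right|/d\) with the parameters quoted earlier, and from the observation window multiplied by the nominal frame rate. The sketch below uses hypothetical names and assumes the 785 nm to 823 nm bandwidth stated in the principles section.

```python
def sequence_depth(num_subpulses, bandwidth_nm, mu_um_per_nm, pixel_um):
    """N_ta = P * B_i * |mu| / d (number of frames in one acquisition)."""
    return num_subpulses * bandwidth_nm * abs(mu_um_per_nm) / pixel_um


# Parameters quoted in the paper: P = 7, B_i = 823 - 785 nm,
# mu = 23.5 um/nm, d = 6.45 um.
frames_per_subpulse = sequence_depth(1, 823.0 - 785.0, 23.5, 6.45)
frames_total = sequence_depth(7, 823.0 - 785.0, 23.5, 6.45)

# Alternative estimate: 2-ps sub-pulse window at the nominal 70 Tfps
# (2000 fs * 0.07 frames/fs), repeated for seven sub-pulses.
nominal_per_subpulse = round(2000.0 * 0.07)
nominal_total = 7 * nominal_per_subpulse

print(f"formula: {frames_per_subpulse:.1f} per sub-pulse, {frames_total:.0f} total")
print(f"nominal: {nominal_per_subpulse} per sub-pulse, {nominal_total} total")
```

The formula gives roughly 138 frames per sub-pulse, and the nominal estimate gives 140; the small difference presumably reflects rounding of the frame rate to 70 Tfps.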

Re-distribution of electronic charges in BGO lattices driven by an intense light pulse, like in other solids, serves as the dominant mechanism underlying the transient birefringence30,33, which is much faster than re-orientation of anisotropic molecules in liquids, such as carbon disulfide14,15. To study this ultrafast process, one spatial location from Fig. 3d is chosen to show its locally normalized transmittance evolution (Fig. 3f). Its FWHM of 455 fs estimates a relaxation time of ~380 fs after deconvolution from the temporal PSF (Fig. 2c). This result is close to the BGO’s relaxation time reported in the literature33. Note that T-CUP fails in quantifying this process due to its insufficient temporal resolution (see Supplementary Movie 3 and Supplementary Note 7).

In stark contrast with the well-established pump-probe method, CUSP requires only one single laser pulse to observe the entire time course of its interaction with the material in 2D space. As shown in Supplementary Note 8, the Kerr gate in our experiment was designed to be highly sensitive to random fluctuations in the gate pulse intensity, a sensitivity caused by the nonlinear relation between the Kerr gate transmittance and the gate pulse intensity. The experimental comparison in Supplementary Movie 3 reveals that the pump-probe measurement flickers conspicuously, due to shot-to-shot variations, while CUSP exhibits a smooth transmittance evolution, owing to single-shot acquisition. Supplementary Fig. 12 shows that the fractional fluctuation in intensity is amplified 11 times in transmittance. As detailed in Supplementary Note 8, the pump-probe method would require \(> 10^{5}\) image acquisitions to capture the dynamics in Fig. 3b with the same stability as CUSP’s.

SR-FLIM

One application of passive CUSP is SR-FLIM. Both the emission spectrum and lifetime are important properties of molecules, which have been broadly exploited by biologists and material scientists to investigate a variety of biological processes34 and material characteristics35. Over the past decades, time-correlated single photon counting (TCSPC) has been the gold-standard tool for SR-FLIM36,37. However, TCSPC typically takes tens of milliseconds to even seconds to acquire one dataset, since it depends on repeated measurements. To our knowledge, single-shot SR-FLIM has not been reported so far.

Our experimental implementation, illustrated in Fig. 4a, is a fluorescence microscope interfacing the imaging section of the CUSP system (detailed in the “Methods” section and Supplementary Note 9). This system provides a spectral resolution of 13 nm over the 200-nm bandwidth. A single 532-nm picosecond pulse was deployed to excite fluorescence from the sample of Rhodamine 6G dye (Rh6G) in methanol. Three Rh6G concentrations (22, 36, and 40 mM) with three different spatial patterns were imaged and reconstructed at 0.5 Tfps. The final data has an FOV of 180 μm × 180 μm, contains \(N_{{\mathrm{tp}}} = 400\) frames over an exposure time of 0.8 ns, and \(N_\lambda = 100\) wavelength samples. Supplementary Movie 4 shows the reconstructed light intensity evolutions in three dimensions (2D space and 1D spectrum). Fluorescence lifetime can be readily extracted by single-exponential fitting. Figures 4b–d summarize the spatio-spectral distributions of lifetimes. Rh6G with a higher concentration has a shorter lifetime due to increased pathways for non-radiative relaxation38. The spatial intensity distributions (insets of Fig. 4b–d) show well-preserved spatial resolutions. Figure 4e plots the intensity distribution of the 22-mM sample in the t-λ space, clearly revealing that the emission peaks at ~570 nm and decays rapidly after excitation. Finally, we show in Fig. 4f that lifetimes remain relatively constant over the entire emission spectra and exhibit minute variations over the spatial domain. These uniform spatial distributions are also confirmed by the spectrally averaged lifetime maps in Fig. 4g. See Supplementary Fig. 14 for more quantitative results from our SR-FLIM.
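Single-exponential lifetime extraction from one reconstructed decay trace can be sketched as follows. This is an illustrative log-linear least-squares fit, which is an assumption; the paper does not specify its fitting procedure here. The function name and the lifetime value are hypothetical, and the time axis mirrors the stated 400 frames at 0.5 Tfps (2 ps per frame).

```python
import numpy as np


def fit_lifetime_ps(t_ps, intensity):
    """Estimate tau in I(t) = A * exp(-t / tau) by a linear fit of log(I) versus t."""
    t_ps = np.asarray(t_ps, dtype=float)
    intensity = np.asarray(intensity, dtype=float)
    valid = intensity > 0  # the logarithm is only defined for positive samples
    slope, _intercept = np.polyfit(t_ps[valid], np.log(intensity[valid]), 1)
    return -1.0 / slope


# Synthetic, noise-free decay for one (x, y, lambda) sample.
t_ps = np.arange(400) * 2.0   # 400 frames, 2 ps apart (0.8 ns in total)
true_tau_ps = 300.0           # hypothetical lifetime, for illustration only
decay = 5.0 * np.exp(-t_ps / true_tau_ps)

tau_hat_ps = fit_lifetime_ps(t_ps, decay)
print(f"fitted lifetime ~ {tau_hat_ps:.1f} ps")
```

On real data, the fit would be applied after the excitation peak at every spatial and spectral sample, and a noise-aware nonlinear fit may be preferable to the log-linear form used here.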

a Schematic of the fluorescence microscopy setup, connected with the passive CUSP system. DM, dichroic mirror; EmF, emission filter; ExF, excitation filter; FL, focusing lens; M, mirror; Obj, microscope objective; TL, tube lens. b–d Reconstructed lifetime distributions over the spatial (2D) and the spectral (1D) domains for three samples of different patterns and Rh6G concentrations: b 22 mM, c 36 mM, and d 40 mM. The entire spatial-spectral evolutions are in Supplementary Movie 4. Insets: temporally and spectrally integrated light intensity distributions in the \(x\)–\(y\) plane. e Spatially integrated light intensity distribution in the \(t\)–\(\lambda\) space for the sample of 22 mM in (b). This 2D distribution is normalized to its maximum. f Reconstructed average lifetimes at different wavelengths. The gray bands represent the standard deviations of lifetimes in the spatial domain. g Spectrally averaged lifetime distributions in the \(x\)–\(y\) plane for the three samples. Scale bars: 30 μm.

Contrary to the common observation that fluorescence lifetime is independent of concentration3,39, our experiments demonstrate that lifetime can actually decrease with an increased concentration. Such a phenomenon was also observed in a previous study38, attributed to the populated non-radiative relaxations. As the sample becomes highly concentrated, the fluorophores tend to stay at the excited state for a shorter time since the formations of dimers and aggregates increase pathways for relaxation (see Supplementary Note 9)38. To directly characterize our samples’ lifetimes, we conducted a reference experiment using traditional streak camera imaging40,41,42. A uniform sample is projected onto the streak camera with a narrow entrance slit and then the lifetime is readily extracted from the temporal trace of the emission decay in the streak image. The results are plotted in Supplementary Figs. 14d and e. The difference in lifetime measurements between CUSP and the reference experiment is only 10 ps on average, much less than the lifetime.

Discussion

CUSP’s superior real-time imaging speed of 70 Tfps in active mode is three orders of magnitude greater than the physical limit of semiconductor sensors43. Owing to this new speed, CUSP can quantify physical phenomena that are inaccessible using the previous record-holding system (see Supplementary Movie 3). Moreover, active CUSP captures data more than \(10^{5}\) times faster than the pump-probe approach. When switching CUSP to passive mode for single-shot SR-FLIM, the total exposure time for one acquisition (<1 ns) is more than \(10^{7}\) times shorter than that of TCSPC36,37. Additionally, CUSP is to date the only single-shot ultrafast imaging technique that can operate in either active or passive mode. As a generic hybrid imaging tool, CUSP’s scope of application far exceeds the demonstrations above. The imaging speed and sequence depth are highly scalable via parameter tuning. CUSP’s spectral coverage can extend from X-ray to NIR20, and even to matter waves such as electron beams24, given the availability of sources and sensing devices. In addition, CUSP is advantageous in photon throughput, compared with existing ultrafast imaging technologies11.

Both the pump-probe and TCSPC methods require event repetition. Consequently, these techniques are not only slower than CUSP by orders of magnitude as aforementioned, but are also inapplicable in imaging the following classes of phenomena: (1) high-energy radiations that cannot be readily pumped such as annihilation radiation (basis for PET)44, Cherenkov radiation45, and nuclear reaction radiation6; (2) self-luminescent phenomena that occur randomly in nature, such as sonoluminescence in snapping shrimps46; (3) astronomical events that are light-years away44,47; and (4) chaotic dynamics that cannot be repeated48,49. Yet, CUSP can observe all of these phenomena. For randomly occurring phenomena, the beginning of the signal can be used to trigger CUSP. Large amounts of theoretical and technical efforts are required before CUSP can be widely adapted for these applications.

A trade-off between imaging speed and recording time is always found in single-shot ultrafast imaging modalities7,8,12,13,14,15,16,17,18,19,24,25,26. Due to the vast discrepancies in imaging speeds, it is inconvenient to compare different approaches using recording time. Therefore, we introduced a parameter for fair comparison, sequence depth11, which is independent of imaging speeds. In CUSP with a fixed hardware configuration, the maximum sequence depth is solely determined by the FOV [see Eqs. (5) and (6) in the “Methods” section]. In a practical setting, active CUSP can acquire more than \(10^{3}\) frames in one snapshot, which is several times7,18,19 or even orders of magnitude8,12,13,14,15,16,17 higher than those achievable by the state-of-the-art techniques (see the “Methods” section). Additionally, this interplay between sequence depth and FOV limits the maximum FOVs for scenarios where the desired sequence depths are given [see Eqs. (7) and (8) in the “Methods” section].

Compressed-sensing-enabled single-shot imaging typically has to pay the penalty of compromised spatial resolutions14,18,21,22,50 that are caused by the multiplexing of multi-dimensional information and finite encoding pixel size. A characterization experiment (see Supplementary Note 10 and Supplementary Fig. 15) suggests a 2–3× degradation in spatial resolutions in CUSP. Advanced concepts, such as lossless encoding7 and multi-view projection51, are promising in compensating for this resolution loss. The success of compressed sensing relies on the premise that the unknown object is sparse in some space. For CUSP, this precondition is justified via calculations in Supplementary Table 1. All the scenes in this work have data sparsity >90%, which is sufficient to lead to satisfactory reconstructions, according to a former study52. This restriction on sparsity may be alleviated by optimizing the encoding mask23,53 or the regularizer54 in Equation (2).

CUSP’s temporal resolution in active mode is essentially time-bandwidth limited13. Alternative encoding mechanisms, such as polarization encoding, may be explored to break this barrier and boost the imaging speed to the \(10^{15}\)-fps regime. The imaging speed of passive CUSP is confined by the streak camera. In the past, streak camera technology has been a powerful workhorse in science and engineering20,40,41,42,55. However, it suffers from low quantum efficiency, the space-charge effect, and intrinsic electronic jitter. Most importantly, any boost in its imaging speed is at the mercy of the development of faster electronics. We envision that optical shearing that avoids the complex photon–electron conversions could bring forth the next leap in ultrafast imaging. Recent progress in machine learning may facilitate image reconstruction56,57. All of these directions represent only a small portion of the overall efforts in pushing the boundaries of ultrafast imaging technologies.

Methods

Setups and samples

In the imaging section of the active CUSP system (Fig. 1a), the light path of s-View starts by routing the intermediate image, formed by the interchangeable imaging system, to the DMD by a 4f imaging system. A static pseudo-random binary pattern with a non-zero filling ratio of 35% is displayed on the DMD. The encoded dynamic scene is then relayed to the entrance port of the streak camera via the same 4f system. In order to maximize photon utilization efficiency of s-View, a dual-projection scheme was adopted instead in the passive CUSP system for SR-FLIM (see Supplementary Note 9 for details). In the experiment in Fig. 2a, the group of letters were printed on a piece of transparency film to impart complex spatial features. The BGO slab (MTI, BGO12b101005S2) used in the experiments in Fig. 3 has a thickness of 0.5 mm. Its edges were delicately polished by a series of polishing films (Thorlabs) to ensure minimal light scattering for optimal coupling of the gate pulse. In the fluorescence microscopy setup in Fig. 4a, a single excitation pulse was focused on the back focal plane of an objective to provide wide-field illumination in the FOV. The Rh6G solution was masked by a negative USAF target, placed at the sample plane. A dichroic mirror and a long-pass filter effectively blocked stray excitation light. After a tube lens, the image was directed into the imaging section of the passive CUSP system.

Equipment

The imaging section in Fig. 1a includes a 50/50 non-polarizing beamsplitter (Thorlabs, BS014), a DMD (Texas Instruments, LightCrafter 3000), an external CCD camera (Point Grey, GS3-U3-28S4M), 150-mm-focal-length lenses (Thorlabs, AC254-150-B), a 300 lp mm−1 transmissive diffraction grating (Thorlabs, GTI25-03), and a streak camera (Hamamatsu, C6138). In the illumination section, a 270-mm-long N-SF11 glass rod (Newlight Photonics, two SF11L1100-AR800, SF11G1500-AR800, combined with SF11G1200-AR800) was used for the experiment in Fig. 2, while a 95-mm-long N-SF11 glass rod (Newlight Photonics, SF11G1500-AR800, SF11G1400-AR800, combined with SF11G1050-AR800) was used for the experiments in Fig. 3. A femtosecond laser (Coherent, Libra HE) was used as the light source in active CUSP. A picosecond laser (Huaray, Olive-1064-1BW) was used in passive CUSP for fluorescence excitation.

In Fig. 2a, the pair of 300 lp mm−1 reflective gratings are G1 (Newport, 33025FL01-270R) and G2 (Thorlabs, GR25-0310). Figure 3a includes two linear polarizers P1 (Thorlabs, LPVIS100-MP2), P2 (Newport, 05P109AR.16), and a long pass filter (Thorlabs, FGL715). The imaging optics for the experiment in Fig. 2 includes two lenses (Thorlabs, AC508-100-B, and AC127-025-B), giving a ×0.25 magnification. A pair of lenses (Thorlabs, AC254-125-B, and AC254-100-B), with a magnification of ×0.8, was used for the experiments in Fig. 3. The SR-FLIM setup, shown in Fig. 4a, consists of a dichroic mirror (Thorlabs, DMLP550R), a long-pass emission filter (Thorlabs, FEL0550), a short-pass excitation filter (Thorlabs, FES0600), a 75-mm-focal-length focusing lens (Thorlabs, LA1608), a ×4 infinity-corrected objective (Olympus, RMS4X), and a 200-mm-focal-length tube lens (Thorlabs, ITL200).

The DMD spatially encodes the scene by turning each micromirror to either +12° (ON) or –12° (OFF) from the DMD’s surface normal. Each micromirror has a flat metallic coating and reflects the incident light to one of the two directions. Therefore, we can collect the encoded scene either in a retro-reflection mode by tilting the DMD by 12°, which is used in active CUSP (Fig. 1a), or by using two separate sets of relay optics, which is used in passive CUSP (see Supplementary Fig. 13a). Here, one DMD code has a lateral dimension of 56.7 μm × 56.7 μm. Thus, the relay optics with ~0.08 NA has enough spatial resolution to image the DMD onto the streak camera.
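
As an illustration of the spatial encoding, a static pseudo-random binary code with a 35% filling ratio can be generated as follows. This is a minimal sketch, not the pattern used in the experiments: the function name `make_dmd_code`, the seed, and the 350 × 470 grid are hypothetical choices, and each array element stands for one DMD code (ON = 1, OFF = 0).

```python
import numpy as np

def make_dmd_code(n_rows, n_cols, fill_ratio=0.35, seed=0):
    """Return a static pseudo-random binary code (1 = ON, 0 = OFF).

    Hypothetical helper for illustration; the filling ratio is the
    fraction of ON codes, set to 35% as quoted in the text.
    """
    rng = np.random.default_rng(seed)
    n_total = n_rows * n_cols
    n_on = int(round(fill_ratio * n_total))
    flat = np.zeros(n_total, dtype=np.uint8)
    flat[:n_on] = 1
    # Shuffle so that the ON codes are scattered pseudo-randomly.
    return rng.permutation(flat).reshape(n_rows, n_cols)

code = make_dmd_code(350, 470)
print(code.shape, code.mean())
```

Fixing the number of ON codes and shuffling, rather than thresholding random numbers, keeps the filling ratio at the requested value instead of only approximately so.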

As schematically detailed in Supplementary Fig. 1, in the streak camera, the image of the fully opened entrance slit is first relayed to the 3-mm-wide photocathode by the input optics. Then the photocathode converts photons into photoelectrons, which are accelerated through an accelerating mesh. The streak camera can operate in two modes: focus mode and streak mode. In the focus mode, no sweep voltage is applied so that an internal CCD camera (Hamamatsu, Orca R2) only captures time-unsheared images, akin to a conventional sensor. In the streak mode, these photoelectrons experience a temporal shearing on the vertical axis driven by an ultrafast linear sweep voltage. The highest sweeping speed is 100 fs per pixel, equivalently 10 THz18,20. The photoelectron current is amplified by a microchannel plate via the generation of secondary electrons. After a phosphor screen converts the electrons back to photons, an internal CCD camera captures a single 2D image of the photons.

Image acquisition and reconstruction

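We denote the optical energy distributions recorded in u-View and s-View as \(E_{\mathrm{u}}\) and \(E_{\mathrm{s}}\), which are related to the dynamic scene \(I\left( {x,y,t,\lambda } \right)\) by

\[E_{\mathrm{u}} = {\boldsymbol{T}}{\boldsymbol{Q}}_{\mathbf{u}}{\boldsymbol{F}}_{\mathbf{u}}I\left( {x,y,t,\lambda } \right),\quad E_{\mathrm{s}} = {\boldsymbol{T}}{\boldsymbol{S}}_{\mathbf{t}}{\boldsymbol{Q}}_{\mathbf{s}}{\boldsymbol{S}}_{\boldsymbol{\lambda }}{\boldsymbol{D}}{\boldsymbol{F}}_{\mathbf{s}}{\boldsymbol{C}}I\left( {x,y,t,\lambda } \right), \qquad (1)\]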

where C represents the spatial encoding by the DMD; \({\boldsymbol{F}}_{\mathbf{u}}\) and \({\boldsymbol{F}}_{\mathbf{s}}\) describe the spatial low-pass filtering due to the optics in u-View and s-View, respectively; D represents image distortion in s-View with respect to the u-View; \({\boldsymbol{S}}_{\boldsymbol{\lambda }}\) denotes the spectral dispersion in the horizontal direction; \({\boldsymbol{Q}}_{\mathbf{u}}\) and \({\boldsymbol{Q}}_{\mathbf{s}}\) are the quantum efficiencies of the external CCD and the photocathode of the streak camera, respectively; \({\boldsymbol{S}}_{\mathbf{t}}\) denotes the temporal shearing in the vertical direction; T represents spatiotemporal-spectrotemporal integration over the exposure time of each CCD; and α is the experimentally calibrated energy ratio between the streak camera and the external CCD. Here, we generalize the intensity distributions of the dynamic scenes observed by both active CUSP and passive CUSP as \(I\left( {x,y,t,\lambda } \right)\) for simplicity. The concatenated form of Eq. (1) is \(E = {\boldsymbol{O}}I\left( {x,y,t,\lambda } \right)\), where \(E = \left[ {E_{\mathrm{u}},\,\alpha E_{\mathrm{s}}} \right]\) and O stands for the joint operator.
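
The chain of operators can be made concrete with a toy discrete forward model. The sketch below is a simplification under stated assumptions, not the calibrated operator used in the experiments: the low-pass filtering, distortion, and quantum-efficiency operators are taken as identity, each time step shears the image by exactly one pixel vertically, and each wavelength step shears it by one pixel horizontally. The function names and the tiny scene size are hypothetical.

```python
import numpy as np

def u_view_forward(scene):
    """Toy u-View: integrate a (y, x, t, lambda) scene over t and lambda."""
    return scene.sum(axis=(2, 3))

def s_view_forward(scene, code):
    """Toy s-View: encode (C), disperse horizontally (S_lambda),
    shear vertically in time (S_t), then integrate on the sensor (T).
    F_s, D and Q_s are assumed to be identity in this sketch."""
    n_y, n_x, n_t, n_l = scene.shape
    image = np.zeros((n_y + n_t - 1, n_x + n_l - 1))
    for t in range(n_t):
        for l in range(n_l):
            # Frame (t, l) lands t pixels lower and l pixels to the right.
            image[t:t + n_y, l:l + n_x] += code * scene[:, :, t, l]
    return image

rng = np.random.default_rng(0)
scene = rng.random((6, 5, 4, 3))                  # (N_y, N_x, N_t, N_lambda)
code = (rng.random((6, 5)) < 0.35).astype(float)  # pseudo-random binary mask
E_u = u_view_forward(scene)
E_s = s_view_forward(scene, code)
print(E_u.shape, E_s.shape)
```

In this toy model the sheared image grows by one row per additional time step and one column per additional wavelength sample, which is why a single 2D snapshot can hold the whole encoded data cube.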

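By assuming the spatiotemporal-spectrotemporal sparsity of the scene and calibrating for O, \(I\left( {x,y,t,\lambda } \right)\) can be retrieved by solving the inverse problem defined as18,58

\[\hat I = \mathop {{\arg \min }}\limits_I \left\{ {\frac{1}{2}\left\| {E - {\boldsymbol{O}}I} \right\|_2^2 + \xi \phi \left( I \right)} \right\}. \qquad (2)\]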

In Equation (2), argmin represents the argument that minimizes the function in the following bracket. The first term denotes the discrepancy between the solution I and the measurement E via the operator O, and \(\left\| \cdot \right\|_2\) represents the L2 norm. The second term enforces sparsity in the domain defined by the regularizer ϕ(I), while the regularization parameter \(\xi\) balances these two terms. We opt to use total variation (TV) in the four-dimensional \(x\)–\(y\)–\(t\)–\(\lambda\) space as our regularizer. For an accurate and stable reconstruction, a software program adapted from the two-step iterative shrinkage/thresholding (TwIST) algorithm58 was utilized. More details on data acquisition and reconstruction can be found in Supplementary Notes 4 and 5. In addition, the assumption of data sparsity is justified in Supplementary Table 1.
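
The structure of this regularized least-squares problem can be illustrated on a small synthetic example. The sketch below is not the TwIST-based program used for CUSP: it swaps in plain iterative shrinkage/thresholding (ISTA) with an L1 regularizer on the unknown itself instead of 4D total variation, and it uses a random matrix as a stand-in for the joint operator O. All sizes and the value of ξ are arbitrary assumptions.

```python
import numpy as np

def soft_threshold(x, tau):
    """Proximal operator of tau * ||x||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def objective(O, E, I, xi):
    """0.5 * ||E - O I||_2^2 + xi * ||I||_1 (L1 stands in for TV here)."""
    return 0.5 * np.sum((E - O @ I) ** 2) + xi * np.sum(np.abs(I))

def ista(O, E, xi, n_iter=200):
    """Plain ISTA: gradient step on the data term, then shrinkage."""
    step = 1.0 / np.linalg.norm(O, 2) ** 2  # 1 / Lipschitz constant
    I = np.zeros(O.shape[1])
    history = []
    for _ in range(n_iter):
        grad = O.T @ (O @ I - E)
        I = soft_threshold(I - step * grad, step * xi)
        history.append(objective(O, E, I, xi))
    return I, history

rng = np.random.default_rng(0)
O = rng.standard_normal((40, 100))   # toy stand-in for the joint operator
I_true = np.zeros(100)
I_true[[5, 37, 80]] = [1.0, 2.0, 1.5]  # sparse toy "scene"
E = O @ I_true                        # noiseless toy measurement
I_hat, history = ista(O, E, xi=0.1)
print(history[0], history[-1])
```

The system is underdetermined (40 measurements for 100 unknowns), so the data term alone cannot single out a solution; the regularizer supplies the sparsity prior, and ξ sets how strongly it is weighted against data fidelity.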

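CUSP’s reconstruction of a data matrix of dimensions \(N_x \times N_y \times N_{{\mathrm{ta}}}\) (active mode) or \(N_x \times N_y \times N_{{\mathrm{tp}}} \times N_\lambda\) (passive mode) requires a 2D image of \(N_x \times N_y\) in u-View and a 2D image of \(N_{{\mathrm{col}}} \times N_{{\mathrm{row}}}\) in s-View. In active mode,

\[N_{{\mathrm{col}}} = N_x + \left( {N_{{\mathrm{ta}}}/P} \right) - 1,\quad N_{{\mathrm{row}}} = N_y + \left( {vt_{{\mathrm{sp}}}/d} \right) \times P - 1; \qquad (3)\]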

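in passive mode,

\[N_{{\mathrm{col}}} = N_x + N_\lambda - 1,\quad N_{{\mathrm{row}}} = N_y + N_{{\mathrm{tp}}} - 1. \qquad (4)\]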

In Equations (3) and (4), P is the number of sub-pulses, \(v\) is the streak camera’s shearing speed, \(t_{{\mathrm{sp}}}\) is the temporal separation between adjacent sub-pulses, and d is the streak camera’s pixel size. The finite pixel counts of the streak camera (\(N_x^{{\mathrm{sc}}} \times N_y^{{\mathrm{sc}}} = 672 \times 512\) after 2 × 2 binning) physically restrict \(N_{{\mathrm{col}}} \le 672\) and \(N_{{\mathrm{row}}} \le 512\). In active CUSP imaging shown in Fig. 2, a raw streak camera image of 609 × 449 pixels was required to reconstruct a data matrix of \(N_x \times N_y \times N_{{\mathrm{ta}}} = 470 \times 350 \times 700\). Similarly, in Fig. 3, reconstruction of a data matrix of \(N_x \times N_y \times N_{{\mathrm{ta}}} = 310 \times 90 \times 980\) requires an image of 449 × 229 pixels from the streak camera. Note that here \(N_{{\mathrm{ta}}}/P\) equals the number of wavelength samples within one sub-pulse, which is 140 in our active CUSP system. In the passive-mode imaging shown in Fig. 4, an SR-FLIM data matrix of \(N_x \times N_y \times N_{{\mathrm{tp}}} \times N_\lambda = 110 \times 110 \times 400 \times 100\) is associated with a streak camera image of 209 × 509 pixels.
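
The pixel counts quoted above can be cross-checked with simple bookkeeping. The sketch below is a consistency check under assumptions rather than part of the acquisition software: the size relations are written out as inferred from the quoted numbers and from the FOV limits given later in this section, the sub-pulse counts (5 and 7) follow from \(N_{{\mathrm{ta}}}/P = 140\), and the shear of 20 pixels per sub-pulse (the value of \(vt_{{\mathrm{sp}}}/d\)) is an assumed value chosen because it reproduces the quoted image sizes.

```python
def active_raw_size(n_x, n_y, n_ta, p, shear_px_per_subpulse=20):
    """Raw s-View size (N_col, N_row) in active mode.

    shear_px_per_subpulse plays the role of v * t_sp / d; the default of
    20 pixels is an assumption inferred from the quoted image sizes.
    """
    n_col = n_x + n_ta // p - 1
    n_row = n_y + shear_px_per_subpulse * p - 1
    return n_col, n_row

def passive_raw_size(n_x, n_y, n_tp, n_lambda):
    """Raw s-View size (N_col, N_row) in passive mode."""
    return n_x + n_lambda - 1, n_y + n_tp - 1

fig2 = active_raw_size(470, 350, 700, p=5)      # Fig. 2, 700 / 140 = 5 sub-pulses
fig3 = active_raw_size(310, 90, 980, p=7)       # Fig. 3, 980 / 140 = 7 sub-pulses
fig4 = passive_raw_size(110, 110, 400, 100)     # Fig. 4, SR-FLIM
print(fig2, fig3, fig4)
```

All three results stay within the 672 × 512 limit of the binned streak camera sensor.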

Limits in sequence depth and FOV

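Based on Equations (3) and (4) above, when assuming fixed FOVs, there are upper limits on the sequence depth achievable by CUSP. It is straightforward to derive this limit for active mode:

\[N_{{\mathrm{ta}}}^{{\mathrm{max}}} = 140 \times \left\lfloor {\frac{{N_y^{{\mathrm{sc}}} - N_y + 1}}{{vt_{{\mathrm{sp}}}/d}}} \right\rfloor . \qquad (5)\]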

In Eq. (5), 140 represents the number of wavelength samples in one sub-pulse. \(N_{{\mathrm{ta}}}^{{\mathrm{max}}}\) is fundamentally determined by how many sub-pulses (\(P\)) can be accommodated in temporal shearing. For passive mode, the maximum sequence depth is simply

\[N_{{\mathrm{tp}}}^{{\mathrm{max}}} = N_y^{{\mathrm{sc}}} - N_y + 1. \qquad (6)\]

Taking practical numbers for example, when using the 70 Tfps active CUSP to image a scene of \(N_x \times N_y = 350 \times 350\) pixels, we can obtain a maximum sequence depth \(N_{{\mathrm{ta}}}^{{\mathrm{max}}} = 1120\) frames. This number is more than 3 times the maximum sequence depth obtained in T-CUP18 and more than 18 times that of the best single-shot femtosecond imaging modality other than CUP17. The recording time in this case is 16 ps. For the 0.5 Tfps passive CUSP, if the scene is \(N_x \times N_y = 100 \times 100\), then \(N_{{\mathrm{tp}}}^{{\mathrm{max}}} = 413\) frames, which corresponds to a recording time of 826 ps.
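
The numbers in this example can be reproduced with a few lines of arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the shear of 20 pixels per sub-pulse (the value of \(vt_{{\mathrm{sp}}}/d\)) is an assumption inferred from the image sizes quoted earlier in this section.

```python
def max_depth_active(n_y, n_y_sc=512, samples_per_subpulse=140, shear_px=20):
    """Largest active-mode sequence depth for a scene with n_y rows.

    shear_px stands for v * t_sp / d and is an assumed value.
    """
    p_max = (n_y_sc - n_y + 1) // shear_px   # sub-pulses that fit on the sensor
    return samples_per_subpulse * p_max

def max_depth_passive(n_y, n_y_sc=512):
    """Largest passive-mode sequence depth for a scene with n_y rows."""
    return n_y_sc - n_y + 1

n_ta_max = max_depth_active(350)             # 350 x 350 scene, active mode
n_tp_max = max_depth_passive(100)            # 100 x 100 scene, passive mode
t_active_ps = n_ta_max / 70e12 * 1e12        # recording time at 70 Tfps
t_passive_ps = n_tp_max / 0.5e12 * 1e12      # recording time at 0.5 Tfps
print(n_ta_max, t_active_ps, n_tp_max, t_passive_ps)
```

With 350 rows, 163 rows remain for shearing, which accommodates 8 sub-pulses of 20 pixels each and therefore 8 × 140 frames.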

Similarly, for scenarios with both sequence depth and number of wavelength samples fixed, the streak camera limits the spatial pixel counts in the reconstructed data in active mode to be \(N_x \le N_x^{{\mathrm{sc}}} - \left( {N_{{\mathrm{ta}}}/P} \right) + 1\) and \(N_y \le N_y^{{\mathrm{sc}}} - \left( {vt_{{\mathrm{sp}}}/d} \right) \times P + 1\). In passive mode, these limits are \(N_x \le N_x^{{\mathrm{sc}}} - N_\lambda + 1\) and \(N_y \le N_y^{{\mathrm{sc}}} - N_{{\mathrm{tp}}} + 1\). Considering the camera pixel size \(d\) and the magnification of the imaging optics \(M\), we can obtain the maximum FOV for active mode:

\[{\mathrm{FOV}}_{\mathrm{a}}^{{\mathrm{max}}} = \frac{{\left[ {N_x^{{\mathrm{sc}}} - \left( {N_{{\mathrm{ta}}}/P} \right) + 1} \right]d}}{M} \times \frac{{\left[ {N_y^{{\mathrm{sc}}} - \left( {vt_{{\mathrm{sp}}}/d} \right) \times P + 1} \right]d}}{M}, \qquad (7)\]

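and for passive mode:

\[{\mathrm{FOV}}_{\mathrm{p}}^{{\mathrm{max}}} = \frac{{\left( {N_x^{{\mathrm{sc}}} - N_\lambda + 1} \right)d}}{M} \times \frac{{\left( {N_y^{{\mathrm{sc}}} - N_{{\mathrm{tp}}} + 1} \right)d}}{M}. \qquad (8)\]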

Inserting practical numbers into Equations (7) and (8) and using the configurations of our three demonstrations (Figs. 2–4), we have the maximum FOVs of 13.75 mm × 10.66 mm, 4.30 mm × 3.00 mm, and 0.92 mm × 0.18 mm, respectively.
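
These FOV values can be approximately reproduced from the pixel-count limits stated above by scaling with the pixel size and dividing by the magnification. The sketch below rests on several assumptions that are not given in this section: an effective pixel size of 6.45 μm and a shear of 20 pixels per sub-pulse (both chosen because they reproduce the quoted numbers), 5 and 7 sub-pulses for Figs. 2 and 3, and a magnification of ×4 for the microscope in Fig. 4. The function names are hypothetical.

```python
def active_limits(p, n_x_sc=672, n_y_sc=512, samples=140, shear_px=20):
    """Maximum (N_x, N_y) in active mode; shear_px is an assumed v*t_sp/d."""
    return n_x_sc - samples + 1, n_y_sc - shear_px * p + 1

def passive_limits(n_tp, n_lambda, n_x_sc=672, n_y_sc=512):
    """Maximum (N_x, N_y) in passive mode."""
    return n_x_sc - n_lambda + 1, n_y_sc - n_tp + 1

def max_fov_mm(limits, magnification, pixel_um=6.45):
    """Convert pixel limits to an object-space FOV in mm.

    pixel_um is an assumed effective pixel size, not a value from the text.
    """
    n_x_max, n_y_max = limits
    scale_mm = pixel_um / magnification / 1000.0
    return n_x_max * scale_mm, n_y_max * scale_mm

fov_fig2 = max_fov_mm(active_limits(p=5), magnification=0.25)
fov_fig3 = max_fov_mm(active_limits(p=7), magnification=0.8)
fov_fig4 = max_fov_mm(passive_limits(n_tp=400, n_lambda=100), magnification=4.0)
print(fov_fig2, fov_fig3, fov_fig4)
```

Under these assumptions the computed values agree with the quoted FOVs to within about 0.01 mm.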

Data availability

The data that support the findings of this study are available from the corresponding author on reasonable request.

Code availability

The reconstruction algorithm is described in detail in Methods and Supplementary Information. We have opted not to make the computer code publicly available because the code is proprietary and used for other projects.

References

El-Desouki, M. et al. CMOS image sensors for high speed applications. Sensors 9, 430–444 (2009).

Bowlan, P., Fuchs, U., Trebino, R. & Zeitner, U. D. Measuring the spatiotemporal electric field of tightly focused ultrashort pulses with sub-micron spatial resolution. Opt. Express 16, 13663–13675 (2008).

Berezin, M. Y. & Achilefu, S. Fluorescence lifetime measurements and biological imaging. Chem. Rev. 110, 2641–2684 (2010).

Herink, G., Kurtz, F., Jalali, B., Solli, D. R. & Ropers, C. Real-time spectral interferometry probes the internal dynamics of femtosecond soliton molecules. Science 356, 50–54 (2017).

Matlis, N. H. et al. Snapshots of laser wakefields. Nat. Phys. 2, 749–753 (2006).

Kodama, R. et al. Fast heating of ultrahigh-density plasma as a step towards laser fusion ignition. Nature 412, 798–802 (2001).

Liang, J. et al. Single-shot real-time video recording of a photonic Mach cone induced by a scattered light pulse. Sci. Adv. 3, e1601814 (2017).

Barty, A. et al. Ultrafast single-shot diffraction imaging of nanoscale dynamics. Nat. Photonics 2, 415–419 (2008).

Balistreri, M. L. M., Gersen, H., Korterik, J. P., Kuipers, L. & van Hulst, N. F. Tracking femtosecond laser pulses in space and time. Science 294, 1080–1082 (2001).

Zewail, A. H. Four-dimensional electron microscopy. Science 328, 187–193 (2010).

Liang, J. & Wang, L. V. Single-shot ultrafast optical imaging. Optica 5, 1113–1127 (2018).

Nakagawa, K. et al. Sequentially timed all-optical mapping photography (STAMP). Nat. Photonics 8, 695–700 (2014).

Tamamitsu, M. et al. Design for sequentially timed all-optical mapping photography with optimum temporal performance. Opt. Lett. 40, 633–636 (2015).

Lu, Y., Wong, T. T. W., Chen, F. & Wang, L. Compressed ultrafast spectral-temporal photography. Phys. Rev. Lett. 122, 193904 (2019).

Ehn, A. et al. FRAME: femtosecond videography for atomic and molecular dynamics. Light Sci. Appl. 6, e17045 (2017).

Zeng, X. et al. High-resolution single-shot ultrafast imaging at ten trillion frames per second. Preprint at https://arxiv.org/abs/1807.00685 (2018).

Li, Z., Zgadzaj, R., Wang, X., Chang, Y.-Y. & Downer, M. C. Single-shot tomographic movies of evolving light-velocity objects. Nat. Commun. 5, 3085 (2014).

Liang, J., Zhu, L. & Wang, L. V. Single-shot real-time femtosecond imaging of temporal focusing. Light Sci. Appl. 7, 42 (2018).

Gao, L., Liang, J., Li, C. & Wang, L. V. Single-shot compressed ultrafast photography at one hundred billion frames per second. Nature 516, 74–77 (2014).

Guide to Streak Cameras, https://www.hamamatsu.com/resources/pdf/sys/SHSS0006E_STREAK.pdf (Hamamatsu Corp., Hamamatsu City, Japan, 2008).

Llull, P. et al. Coded aperture compressive temporal imaging. Opt. Express 21, 10526–10545 (2013).

Wagadarikar, A., John, R., Willett, R. & Brady, D. Single disperser design for coded aperture snapshot spectral imaging. Appl. Opt. 47, B44–B51 (2008).

Yang, C. et al. Optimizing codes for compressed ultrafast photography by the genetic algorithm. Optica 5, 147–151 (2018).

Liu, X., Zhang, S., Yurtsever, A. & Liang, J. Single-shot real-time sub-nanosecond electron imaging aided by compressed sensing: Analytical modeling and simulation. Micron 117, 47–54 (2019).

Zhu, P., Jafari, R., Jones, T. & Trebino, R. Complete measurement of spatiotemporally complex multi-spatial-mode ultrashort pulses from multimode optical fibers using delay-scanned wavelength-multiplexed holography. Opt. Express 25, 24015–24032 (2017).

Suzuki, T. et al. Single-shot 25-frame burst imaging of ultrafast phase transition of Ge2Sb2Te5 with a sub-picosecond resolution. Appl. Phys. Express 10, 092502 (2017).

Leuthold, J., Koos, C. & Freude, W. Nonlinear silicon photonics. Nat. Photonics 4, 535–544 (2010).

Chang, D. E., Vuletić, V. & Lukin, M. D. Quantum nonlinear optics—photon by photon. Nat. Photonics 8, 685–694 (2014).

Morgner, U. et al. Sub-two-cycle pulses from a Kerr-lens mode-locked Ti:sapphire laser. Opt. Lett. 24, 411–413 (1999).

Takeda, J. et al. Time-resolved luminescence spectroscopy by the optical Kerr-gate method applicable to ultrafast relaxation processes. Phys. Rev. B 62, 10083–10087 (2000).

Duguay, M. A. & Mattick, A. T. Ultrahigh speed photography of picosecond light pulses and echoes. Appl. Opt. 10, 2162–2170 (1971).

Shen, Y. R. The Principles of Nonlinear Optics. (Wiley, 2002).

Yu, Z., Gundlach, L. & Piotrowiak, P. Efficiency and temporal response of crystalline Kerr media in collinear optical Kerr gating. Opt. Lett. 36, 2904–2906 (2011).

Pian, Q., Yao, R., Sinsuebphon, N. & Intes, X. Compressive hyperspectral time-resolved wide-field fluorescence lifetime imaging. Nat. Photonics 11, 411–414 (2017).

Cadby, A., Dean, R., Fox, A. M., Jones, R. A. L. & Lidzey, D. G. Mapping the fluorescence decay lifetime of a conjugated polymer in a phase-separated Blend using a scanning near-field optical microscope. Nano Lett. 5, 2232–2237 (2005).

Bergmann, A. & Becker, W. Multiwavelength fluorescence lifetime imaging by TCSPC. Proc. SPIE, Advanced Photon Counting Techniques, 637204 (2006).

Shrestha, S. et al. High-speed multispectral fluorescence lifetime imaging implementation for in vivo applications. Opt. Lett. 35, 2558–2560 (2010).

Selanger, K. A., Falnes, J. & Sikkeland, T. Fluorescence lifetime studies of Rhodamine 6G in methanol. J. Phys. Chem. 81, 1960–1963 (1977).

Marcu, L. Fluorescence lifetime techniques in medical applications. Ann. Biomed. Eng. 40, 304–331 (2012).

Doukas, A. G., Lu, P. Y. & Alfano, R. R. Fluorescence relaxation kinetics from rhodopsin and isorhodopsin. Biophys. J. 35, 547–550 (1981).

Krishnan, R. V., Saitoh, H., Terada, H., Centonze, V. E. & Herman, B. Development of a multiphoton fluorescence lifetime imaging microscopy system using a streak camera. Rev. Sci. Instrum. 74, 2714–2721 (2003).

Zhang, J. L. et al. Strongly cavity-enhanced spontaneous emission from silicon-vacancy centers in diamond. Nano Lett. 18, 1360–1365 (2018).

Etoh, G. T. et al. The theoretical highest frame rate of silicon image sensors. Sensors 17, 483 (2017).

Merritt, D., Milosavljević, M., Verde, L. & Jimenez, R. Dark matter spikes and annihilation radiation from the galactic center. Phys. Rev. Lett. 88, 191301 (2002).

Rohrlich, D. & Aharonov, Y. Cherenkov radiation of superluminal particles. Phys. Rev. A 66, 042102 (2002).

Lohse, D., Schmitz, B. & Versluis, M. Snapping shrimp make flashing bubbles. Nature 413, 477–478 (2001).

Hawking, S. W. Gravitational radiation from colliding black holes. Phys. Rev. Lett. 26, 1344–1346 (1971).

Solli, D., Ropers, C., Koonath, P. & Jalali, B. Optical rogue waves. Nature 450, 1054–1057 (2007).

Jiang, X. et al. Chaos-assisted broadband momentum transformation in optical microresonators. Science 358, 344–347 (2017).

Wang, P. & Menon, R. Ultra-high-sensitivity color imaging via a transparent diffractive-filter array and computational optics. Optica 2, 933–939 (2015).

Li, L. et al. Single-impulse panoramic photoacoustic computed tomography of small-animal whole-body dynamics at high spatiotemporal resolution. Nat. Biomed. Eng. 1, 0071 (2017).

Liu, X., Liu, J., Jiang, C., Vetrone, F. & Liang, J. Single-shot compressed optical-streaking ultra-high-speed photography. Opt. Lett. 44, 1387–1390 (2019).

Yang, C. et al. Compressed ultrafast photography by multi-encoding imaging. Laser Phys. Lett. 15, 116202 (2018).

Zhu, L. et al. Space-and intensity-constrained reconstruction for compressed ultrafast photography. Optica 3, 694–697 (2016).

Glebov, V. Y. et al. Development of nuclear diagnostics for the National Ignition Facility (invited). Rev. Sci. Instrum. 77, 10E715 (2006).

Zhu, B., Liu, J. Z., Cauley, S. F., Rosen, B. R. & Rosen, M. S. Image reconstruction by domain-transform manifold learning. Nature 555, 487–492 (2018).

Rivenson, Y. et al. Deep learning microscopy. Optica 4, 1437–1443 (2017).

Bioucas-Dias, J. M. & Figueiredo, M. A. A new TwIST: two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 16, 2992–3004 (2007).

Acknowledgements

The authors thank Dr. Liren Zhu for assistance with the reconstruction algorithm and Dr. Junhui Shi for providing the control program of the precision linear stage. This work was supported in part by National Institutes of Health grant R01 CA186567 (NIH Director’s Transformative Research Award).

Author information

Contributions

P.W. conceived the system design, built the system, performed the experiments, developed the reconstruction algorithm and analyzed the data. J.L. contributed to the early stage development and experiment. L.V.W. initiated the concept and supervised the project. All authors wrote and revised the paper.

Ethics declarations

Competing interests

The authors disclose the following patent applications: WO2016085571 A3 (L.V.W. and J.L.), U.S. Provisional 62/298,552 (L.V.W. and J.L.), and U.S. Provisional 62/904,442 (L.V.W. and P.W.).

Additional information

Peer review information Nature Communications thanks Mário A. T. Figueiredo and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, P., Liang, J. & Wang, L. Single-shot ultrafast imaging attaining 70 trillion frames per second. Nat Commun 11, 2091 (2020). https://doi.org/10.1038/s41467-020-15745-4

DOI: https://doi.org/10.1038/s41467-020-15745-4
