Abstract

Identifying dissipation is essential for understanding the physical mechanisms underlying nonequilibrium processes. In living systems, for example, the dissipation is directly related to the hydrolysis of fuel molecules such as adenosine triphosphate (ATP). Nevertheless, detecting broken time-reversal symmetry, which is the hallmark of dissipative processes, remains a challenge in the absence of observable directed motion, flows, or fluxes. Furthermore, quantifying the entropy production in a complex system requires detailed information about its dynamics and internal degrees of freedom. Here we introduce a novel approach to detect time irreversibility and estimate the entropy production from time-series measurements, even in the absence of observable currents. We apply our technique to two different physical systems, namely, a partially hidden network and a molecular motor. Our method does not require complete information about the system dynamics and thus provides a new tool for studying nonequilibrium phenomena.

Introduction

Irreversibility is the telltale sign of nonequilibrium dissipation1,2. Systems operating far from equilibrium utilize part of their free energy budget to perform work, while the rest is dissipated into the environment. Estimating the amount of free energy lost to dissipation is mandatory for a complete energetic characterization of such physical systems. For example, it is essential for understanding the underlying mechanism and efficiency of natural Brownian engines, such as RNA-polymerases or kinesin molecular motors, or for optimizing the performance of artificial devices3,4,5. Often the manifestation of irreversibility is quite dramatic, signaled by directed flow or movement, as in transport through mesoscopic devices6, traveling waves in nonlinear chemical reactions7, directed motion of molecular motors along biopolymers8, and the periodic beating of a cell’s flagellum9,10 or cilia11. This observation has led to a handful of experimentally validated methods to identify irreversible behavior by confirming the existence of such flows or fluxes3,12,13,14. However, in the absence of directed motion, it can be challenging to determine if an observed system is out of equilibrium, especially in small noisy systems where fluctuations could mask any obvious irreversibility15. One possibility is to observe a violation of the fluctuation–dissipation theorem16,17,18. This approach, however, requires not just passive observations of a correlation function but also active perturbations in order to measure response properties, which can be challenging in practice. Thus, the development of noninvasive methods to quantitatively measure irreversibility and dissipation is necessary to characterize nonequilibrium phenomena.

Our understanding of the connection between irreversibility and dissipation has deepened in recent years with the formulation of stochastic thermodynamics, which has been verified in numerous experiments on meso-scale systems19,20,21,22. Within this framework, it is possible to evaluate quantities such as the entropy along single nonequilibrium trajectories23. A cornerstone of this approach is the establishment of a quantitative identification of dissipation, or more specifically entropy production rate \(\dot S\), as the Kullback–Leibler divergence (KLD) between the probability \({\cal{P}}(\gamma _t)\) to observe a trajectory γt of length t and the probability \({\cal{P}}(\tilde \gamma _t)\) to observe the time-reversed trajectory \(\tilde \gamma _t\)1,24,25,26,27,28,29:

\[\dot S \ge \dot S_{{\mathrm{KLD}}} \equiv \mathop {\lim }\limits_{t \to \infty } \frac{{k_{\mathrm{B}}}}{t}D\left[ {{\cal{P}}(\gamma _t)||{\cal{P}}(\tilde \gamma _t)} \right], \tag{1}\]

where kB is Boltzmann’s constant. The KLD between two probability distributions p and q is defined as \(D[p||q] \equiv \mathop {\sum}\nolimits_x p(x)\,{\mathrm{ln}}\,(p(x)/q(x))\) and is an information-theoretic measure of distinguishability30. For the rest of the paper we take kB = 1, so the entropy production rate has units of time\(^{-1}\). The entropy production \(\dot S\) in Eq. (1) has a clear physical meaning. It is the usual entropy production defined in irreversible thermodynamics by assuming that the reservoirs surrounding the system are in equilibrium. For instance, in the case of isothermal molecular motors hydrolyzing ATP to ADP+P at temperature T, the entropy production in Eq. (1) is \(\dot S = r{\mathrm{\Delta }}\mu /T - \dot W/T\), where r is the ATP consumption rate, \({\mathrm{\Delta }}\mu = \mu _{{\mathrm{ATP}}} - \mu _{{\mathrm{ADP}}} - \mu _{\mathrm{P}}\) is the difference between the chemical potential of ATP and those of ADP and P, and \(\dot W\) is the power of the motor31. In many experiments, all these quantities can be measured except the rate r. Therefore, the techniques that we develop in this paper can help to estimate the ATP consumption rate, even at stalling conditions.
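
As a concrete illustration of the KLD definition above, the following minimal sketch evaluates \(D[p||q]\) for two discrete distributions. The function name `kld` and the example vectors are illustrative choices, not part of the original work.

```python
import numpy as np

def kld(p, q):
    """Kullback-Leibler divergence D[p||q] = sum_x p(x) ln(p(x)/q(x)), in nats."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    if np.any((q == 0) & (p > 0)):
        return np.inf              # p puts weight where q has none
    mask = p > 0                   # terms with p(x) = 0 contribute nothing
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

# Hypothetical example distributions over three outcomes.
p = np.array([0.5, 0.3, 0.2])
q = np.array([0.1, 0.3, 0.6])

print(kld(p, p))            # identical distributions are indistinguishable
print(kld(p, q), kld(q, p)) # the divergence is not symmetric in its arguments
```

The asymmetry is what makes the KLD useful here: comparing forward statistics against reversed statistics yields a non-negative number that is zero only when the two are identical.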

The equality in Eq. (1) is reached if the trajectory γt contains all the meso- and microscopic variables out of equilibrium. Hence the relative entropy in Eq. (1) links the statistical time-reversal symmetry breaking in the mesoscopic dynamics directly to dissipation. Based on this connection, estimators of the relative entropy between stationary trajectories and their time reversals allow one to determine if a system is out of equilibrium or even bound the amount of energy dissipated to maintain a nonequilibrium state. Such an approach, however, is challenging to implement accurately as it requires large amounts of data, especially when there is no observable current32.

Despite the absence of observable average currents, irreversibility can still leave a mark in fluctuations. Consider, for example, a particle hopping on a 1D lattice, as in Fig. 1, where up and down jumps have equal probabilities, but the timing of the jumps has different likelihoods. Although there is no net drift on average, the process is irreversible, since any trajectory can be distinguished from its time reverse due to the asymmetry in jump times. Thus, beyond the sequence of events, the timing of events can reveal statistical irreversibility. Such a concept was used, for example, to determine that the E. coli flagellar motor operates out of equilibrium based on the motor dwell-time statistics33.

In this work, we establish a technique that allows one to identify and quantify irreversibility in fluctuations in the timing of events, by applying Eq. (1) to stochastic jump processes with arbitrary waiting time distributions, that is, semi-Markov processes, also known as continuous time random walks (CTRW) in the context of anomalous diffusion. Such models emerge in a plethora of contexts34,35,36 ranging from economics and finance37 to biology, as in the case of kinesin dynamics38 or in the anomalous diffusion of the Kv2.1 potassium channel39. In fact, as we show below and in the Methods section, semi-Markov processes arise in experimentally relevant scenarios where one has access only to a limited set of observables of Markov kinetic networks with certain topologies. We begin by reviewing the semi-Markov framework and presenting our main result, an estimator of the entropy production rate. Next, we apply our approach to general hidden networks, where an observer has access only to a subset of the states, comparing our estimator with previous proposals for partial entropy production that are zero in the absence of currents. Finally, we address the particularly important case of molecular motors, where their translational motion is easily observed, but the biochemical reactions that power their motion are hidden. Remarkably, our technique even allows us to reveal the existence of parasitic mechano-chemical cycles at stalling (where the observed current vanishes or the motor is stationary) simply from the distribution of step times. In addition, our quantitative lower bound on the entropy production rate can be used to shed light on the efficiency of molecular motor operation and on the entropic cost of maintaining their far-from-equilibrium dynamics40,41,42,43,44.

Results

Irreversibility in semi-Markov processes

A semi-Markov process is a stochastic renewal process α(t) that takes values in a discrete set of states, α = 1, 2, …. The renewal property implies that the waiting time intervals tα in a given state α are positive, independent, and identically distributed random variables. If the system arrives at state α at t = 0, the probability to jump to a different state β in the time interval [t, t + dt] is ψβα(t)dt, with ψβα(t) being the probability density of transition times45. These densities are not normalized, with \(p_{\beta \alpha } \equiv {\int}_0^{\infty} {\psi _{\beta \alpha }} (t){\mathrm{d}}t\) being the probability for the next jump to be α → β given that the walker arrived at α. We assume that the particle eventually leaves any site α, i.e., ψαα(t) = 0 and \(\mathop {\sum}\nolimits_\beta p_{\beta \alpha } = 1\), so the matrix pβα is a stochastic matrix. Its normalized (right) eigenvector Rα with eigenvalue 1 then represents the fraction of visits to each state α.

The waiting time distribution at site α, \(\psi _\alpha (t) = \mathop {\sum}\nolimits_\beta \psi _{\beta \alpha }(t)\), is normalized with average waiting time τα. We can also define the waiting time distribution conditioned on a given jump α → β as ψ(t|α → β) ≡ ψβα(t)/pβα, which is already normalized.
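
The quantities just defined can be checked numerically. The sketch below tabulates the transition-time densities for a single state with two exit channels, assuming purely for illustration that both channels are Poissonian with hypothetical rates `k["b"]` and `k["c"]`, and recovers the jump probabilities, the mean waiting time, and the conditional waiting time distributions by numerical integration.

```python
import numpy as np

def trapezoid(y, t):
    """Plain trapezoidal rule, written out to avoid version-specific NumPy helpers."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(t)))

t = np.linspace(0.0, 40.0, 400001)

# Hypothetical Poissonian exit rates from a state "a" to states "b" and "c".
k = {"b": 1.0, "c": 3.0}
k_tot = sum(k.values())

# Unnormalized densities psi_{beta a}(t) for competing Poisson channels.
psi = {beta: rate * np.exp(-k_tot * t) for beta, rate in k.items()}

# p_{beta a}: probability that the next jump is a -> beta.
p = {beta: trapezoid(psi[beta], t) for beta in psi}

# psi_a(t): waiting time distribution at "a", and its mean tau_a.
psi_a = sum(psi.values())
tau_a = trapezoid(t * psi_a, t)

# psi(t | a -> beta): normalized conditional waiting time distributions.
psi_cond = {beta: psi[beta] / p[beta] for beta in psi}

print(p, tau_a)
```

For Poissonian channels the two conditional distributions coincide, which is why the timing of jumps carries no extra information in a Markov process; a state-dependent difference between them is what the waiting-time estimator exploits.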

Consider now a generic semi-Markovian trajectory γt of length t with n jumps, which is fully described by the sequence of jumps and jump times, \(\gamma _t = \{ \alpha _1\mathop{\longrightarrow}\limits^{{t_1}}\alpha _2\mathop{\longrightarrow}\limits^{{t_2}} \ldots \mathop{\longrightarrow}\limits^{{t_{n - 1}}}\alpha _n\mathop{\longrightarrow}\limits^{{t_n}}\alpha _{n + 1}\}\) with \(\mathop {\sum}\nolimits_{k = 1}^n t_k = t\), occurring with probability \({\cal{P}}(\gamma _t) = \psi _{\alpha _2,\alpha _1}(t_1)\psi _{\alpha _3,\alpha _2}(t_2) \ldots \psi _{\alpha _{n + 1},\alpha _n}(t_n)\). In order to characterize the dissipation of this single trajectory, we must define its time reverse \(\tilde \gamma _t = \{ \alpha _n\mathop{\longrightarrow}\limits^{{t_n}}\alpha _{n - 1}\mathop{\longrightarrow}\limits^{{t_{n - 1}}} \ldots \mathop{\longrightarrow}\limits^{{t_2}}\alpha _1\mathop{\longrightarrow}\limits^{{t_1}}\alpha _0\}\), whose probability is given by \({\cal{P}}(\tilde \gamma _t) = \psi _{\alpha _0,\alpha _1}(t_1) \ldots \psi _{\alpha _{n - 1},\alpha _n}(t_n)\) (see Methods and Fig. 5).

Directly applying Eq. (1) to this scenario shows that the KLD between the probability distributions of the forward and backward trajectories can be split into two contributions (see Methods):

\[\dot S_{{\mathrm{KLD}}} = \dot S_{{\mathrm{aff}}} + \dot S_{{\mathrm{WTD}}}. \tag{2}\]

The first term, \(\dot S_{{\mathrm{aff}}}\), or affinity entropy production, results entirely from the divergence between the state trajectories, regardless of the jump times, σ ≡ {α1, α2,…,αn+1} and \(\tilde \sigma \equiv \{ \alpha _n, \ldots ,\alpha _1,\alpha _0\}\), that is, it accounts for the affinity between states:

\[\dot S_{{\mathrm{aff}}} = \frac{1}{{\cal{T}}}\mathop {\sum}\limits_{\alpha \beta } p_{\beta \alpha }R_\alpha \,{\mathrm{ln}}\frac{{p_{\beta \alpha }}}{{p_{\alpha \beta }}} = \frac{1}{{\cal{T}}}\mathop {\sum}\limits_{\alpha < \beta } J_{\beta \alpha }^{{\mathrm{ss}}}\,{\mathrm{ln}}\frac{{p_{\beta \alpha }}}{{p_{\alpha \beta }}}, \tag{3}\]

where \(J_{\beta \alpha }^{{\mathrm{ss}}} = p_{\beta \alpha }R_\alpha - p_{\alpha \beta }R_\beta\) is the net probability flow per step, or current, from α to β, and the factor \({\cal{T}} = \mathop {\sum}\nolimits_\alpha \tau _\alpha R_\alpha\) is the mean duration of each step, which can be used to transform the units from per-step to per-time46. We see that the affinity entropy production vanishes in the absence of currents, as occurs in arbitrary Markov systems32,47.
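
The affinity contribution can be evaluated directly from the jump probabilities and mean waiting times. The following is a minimal sketch under stated assumptions: `p[b, a]` stores the probability of the jump a → b (so columns sum to one), every allowed jump has a nonzero reverse, and the three-state ring and the waiting times `tau` are made-up illustrative values.

```python
import numpy as np

def visit_distribution(p):
    """Right eigenvector of the column-stochastic matrix p with eigenvalue 1."""
    w, v = np.linalg.eig(p)
    r = np.real(v[:, np.argmin(np.abs(w - 1.0))])
    return r / r.sum()

def affinity_entropy_rate(p, tau):
    """Sum over pairs of J_{ba} ln(p_{ba}/p_{ab}), divided by the mean step duration."""
    R = visit_distribution(p)
    mean_step = float(np.dot(tau, R))
    total = 0.0
    n = len(R)
    for a in range(n):
        for b in range(a + 1, n):
            if p[b, a] == 0.0 and p[a, b] == 0.0:
                continue  # states a and b are not connected
            J = p[b, a] * R[a] - p[a, b] * R[b]   # net flow per step from a to b
            total += J * np.log(p[b, a] / p[a, b])
    return total / mean_step

# Hypothetical biased ring 0 -> 1 -> 2 -> 0 and an unbiased one for comparison.
p_biased = np.array([[0.0, 0.3, 0.7],
                     [0.7, 0.0, 0.3],
                     [0.3, 0.7, 0.0]])
p_unbiased = np.full((3, 3), 0.5) - 0.5 * np.eye(3)
tau = np.array([1.0, 2.0, 3.0])

print(affinity_entropy_rate(p_biased, tau))
print(affinity_entropy_rate(p_unbiased, tau))
```

In the unbiased ring every current is zero, so the affinity term vanishes no matter how the waiting times are distributed.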

The contribution due to the waiting times is expressed in terms of the KLD between the waiting time distributions

\[\dot S_{{\mathrm{WTD}}} = \frac{1}{{\cal{T}}}\mathop {\sum}\limits_{\alpha \beta \mu } p_{\mu \beta }\,p_{\beta \alpha }R_\alpha \,D\left[ {\psi (t|\beta \to \mu )||\psi (t|\beta \to \alpha )} \right], \tag{4}\]

which is the main result of this paper and allows one to detect irreversibility in stationary trajectories with zero current.

Notice that, since Rα is the fraction of visits to state α, pβαRα is the probability to observe the sequence α → β in a stationary forward trajectory, while pμβpβαRα is the probability to observe the sequence α → β → μ.

Equation (2) is the chain rule of the relative entropy applied to the semi-Markov process and the core of our proposed estimator. In the special case of Poisson jumps, D[ψ(t|β → μ)||ψ(t|β → α)] = 0 since all waiting time distributions for jumps starting at a given site β are equal (see Methods), and we recover the standard expression for the relative entropy of Markov processes \(\dot S = \dot S_{{\mathrm{aff}}}\). It is worth mentioning that previous attempts to establish the entropy production of semi-Markov processes failed to identify the term \(\dot S_{{\mathrm{WTD}}}\) because they assumed that the waiting time distributions were independent of the final state, as occurs in Markov processes48,49,50. However, such a strong assumption does not hold in many situations of interest, as in the ones discussed below.
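
To see how the waiting-time term behaves, consider a toy version of the unbiased lattice walk described in the Introduction. The sketch below assumes, for illustration only, a ring of at least three sites with jump probabilities 1/2 in each direction and exponential conditional waiting times whose rates (`rate_up`, `rate_down`) depend on the direction of the jump. With these assumptions only the sequences up-up and down-down contribute, each with weight 1/4 per step, and the KLD between two exponentials has a closed form.

```python
import numpy as np

def kld_exponential(a, b):
    """D[Exp(rate a) || Exp(rate b)] = ln(a/b) + b/a - 1, in nats."""
    return np.log(a / b) + b / a - 1.0

def wtd_entropy_rate(rate_up, rate_down):
    """Waiting-time entropy production rate for the unbiased toy walk."""
    # Mean duration of a step: average of the two conditional mean waiting times.
    mean_step = 0.5 * (1.0 / rate_up + 1.0 / rate_down)
    # up-up compares psi(t|up) with psi(t|down); down-down does the opposite.
    per_step = 0.25 * (kld_exponential(rate_up, rate_down)
                       + kld_exponential(rate_down, rate_up))
    return per_step / mean_step

print(wtd_entropy_rate(2.0, 2.0))  # identical timing: no detectable irreversibility
print(wtd_entropy_rate(1.0, 3.0))  # asymmetric timing: positive despite zero current
```

The net current is zero in both cases, so the affinity term vanishes; only the asymmetry in jump timing distinguishes the second case from equilibrium.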

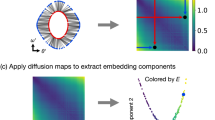

Decimation of Markov chains and second-order semi-Markov processes

Semi-Markov processes appear when sites are decimated from Markov chains of certain topologies. Figure 2 shows representative examples. In Fig. 2a, b, we show two models of a molecular motor that runs along a track with sites {…, i − 1, i, i + 1, …} and has six internal states. If the spatial jumps (red lines) and the transitions between internal states (black lines) are Poissonian jumps, then the motor is described by a Markov process. On the other hand, when the internal states are not accessible to the experimenter, the waiting time distributions corresponding to the spatial jumps i → i ± 1 are no longer exponential and the motion of the motor must be described by a semi-Markov process. Figure 2a shows an example where the decimation of internal states directly yields a semi-Markov process ruling the spatial motion of the motor. The second example, sketched in Fig. 2b, is more involved since the upward and the downward jumps end in different sets of internal states. As a consequence, the waiting time distribution of, say, the jump i → i + 1, depends on the site that the motor visited before site i. Then, the resulting dynamics must be described by a second-order semi-Markov process, that is, one has to consider the states α(t) = [iprev(t), i(t)], where i(t) is the current position of the motor and iprev(t) is the previous position, right before the jump.

Fig. 2: Decimation of Markov processes. a, b Molecular motor model: An observer with access only to the position (vertical axis) cannot resolve the internal states (circles). a Decimation to position results in a first-order semi-Markov process, since spatial jumps connect the same internal state. b Decimation results in a second-order semi-Markov process, where the waiting time distribution for spatial transitions depends on whether the motor previously jumped down or up. c Hidden kinetic network: An observer unable to resolve states 3, 4, and 5 treats them as a single hidden state H. The resulting decimated network is a second-order semi-Markov process on the three states 1, 2, and H, where the non-Poissonian waiting time distributions for transitions out of state H depend on the past.

The same applies to generic kinetic networks, such as the one depicted in Fig. 2c. Suppose that the original network is Markovian with states i = 1, …, 5. If the experimenter only has access to states 1 and 2, with the rest lumped together into a hidden state H, then the resulting dynamics is also a second-order semi-Markov process with the reduced set i = 1, 2, H.

For second-order semi-Markov processes the affinity entropy production reads

\[\dot S_{{\mathrm{aff}}} = \frac{1}{{\cal{T}}}\mathop {\sum}\limits_{i,j,k} p(ijk)\,{\mathrm{ln}}\frac{{p([ij] \to [jk])}}{{p([kj] \to [ji])}}, \tag{5}\]

where p(ijk) ≡ R[ij]p([ij] → [jk]) is the probability to observe the sequence i→j→k. This entropy is still proportional to the current for one-dimensional processes and therefore vanishes in the absence of flows in the observed dynamics, see Methods. The entropy production contribution due to the irreversibility of the waiting time distributions is

\[\dot S_{{\mathrm{WTD}}} = \frac{1}{{\cal{T}}}\mathop {\sum}\limits_{i,j,k} p(ijk)\,D\left[ {\psi (t|[ij] \to [jk])||\psi (t|[kj] \to [ji])} \right]. \tag{6}\]

Let us emphasize that the calculation of \(\dot S_{{\mathrm{WTD}}}\) requires collecting statistics on sequences of two consecutive jumps, i.e., i → j → k. We now proceed to apply these results to generic cases of simple kinetic networks and molecular motors.
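
In practice, the two-jump statistics can be gathered in a single pass over a recorded trajectory. The sketch below assumes a hypothetical data layout in which `states` lists the visited states in order and `waits[m]` is the time spent in `states[m]` before the next jump; the function name and the short example trajectory are illustrative.

```python
from collections import defaultdict

def triplet_statistics(states, waits):
    """Collect, for each sequence i -> j -> k, the waiting times spent in j.

    Returns (probs, times): probs[(i, j, k)] is the empirical frequency of the
    triplet, and times[(i, j, k)] lists the residence times in j for that triplet.
    """
    if len(waits) != len(states) - 1:
        raise ValueError("expected one waiting time per jump")
    times = defaultdict(list)
    for m in range(1, len(states) - 1):
        triplet = (states[m - 1], states[m], states[m + 1])
        times[triplet].append(waits[m])
    total = sum(len(v) for v in times.values())
    probs = {trip: len(v) / total for trip, v in times.items()}
    return probs, dict(times)

# Hypothetical short trajectory over the observed states 1, 2, and H.
states = ["1", "H", "2", "1", "H", "1", "2", "H", "2"]
waits = [0.4, 1.3, 0.2, 0.5, 0.9, 0.3, 0.1, 2.0]

probs, times = triplet_statistics(states, waits)
print(probs)
print(times[("1", "H", "2")])
```

The lists in `times` are the raw samples from which the conditional waiting time distributions would be estimated, for example with a kernel density estimate; far more data than in this toy trajectory is needed for that step.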

Hidden networks

We first apply our formalism to estimate the dissipation in kinetic networks with hidden states, which have received increasing attention in recent years owing to their many practical and experimental implications24,32,47,51,52,53.

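Consider a network where ωij is the transition rate from state j to i, with πi the steady-state distribution. The total entropy production rate at steady state is54

\[\dot S = \mathop {\sum}\limits_{i < j} \left( {\omega _{ij}\pi _j - \omega _{ji}\pi _i} \right){\mathrm{ln}}\frac{{\omega _{ij}\pi _j}}{{\omega _{ji}\pi _i}}, \tag{7}\]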

where the positivity of \(\dot S\) stems from the positivity of each individual term in the sum40,52,55. In order to calculate the total entropy production \(\dot S\) according to Eq. (7), full knowledge of the steady-state probability distribution {πi} and the transition rates between all the microstates {ωij} is required. We would like to assign a partial entropy production rate when one only has access to a limited set of states and transitions. To be concrete, we focus on the scenario depicted in Fig. 2c, where only states 1 and 2 can be observed. Previously, two approaches for assigning partial entropy production rate in such a case have been defined in the literature, both of which provide a lower bound on the total entropy production rate56: the passive partial entropy production rate due to Shiraishi and Sagawa52, and the informed partial entropy production rate due to Polettini and Esposito53,57. The passive partial entropy production rate \(\dot S_{{\mathrm{PP}}}\) for the single observed link is simply given by the corresponding term in Eq. (7)

\[\dot S_{{\mathrm{PP}}} = \left( {\omega _{21}\pi _1 - \omega _{12}\pi _2} \right){\mathrm{ln}}\frac{{\omega _{21}\pi _1}}{{\omega _{12}\pi _2}}, \tag{8}\]

where the observer is assumed to have access to the steady-state populations of the two states, π1 and π2, as well as the transition rates between them.
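
The total and passive partial entropy production rates can be computed directly from a rate matrix. The sketch below uses the transition rates quoted in the caption of Fig. 3, taken as given and without any force-dependent factor; the helper names `steady_state` and `link_entropy_rate` are illustrative.

```python
import numpy as np

# omega[i-1, j-1] is the rate of the jump j -> i, in 1/s (values from the Fig. 3 caption).
omega = np.array([
    [0.0,  2.0,  0.0,  1.0],
    [3.0,  0.0,  2.0, 35.0],
    [0.0, 50.0,  0.0,  0.7],
    [8.0,  0.2, 75.0,  0.0],
])

def steady_state(omega):
    """Solve W pi = 0 with sum(pi) = 1, where W is the generator with zero-sum columns."""
    W = omega - np.diag(omega.sum(axis=0))
    A = W.copy()
    A[-1, :] = 1.0                      # replace one balance equation by normalization
    b = np.zeros(len(W))
    b[-1] = 1.0
    return np.linalg.solve(A, b)

def link_entropy_rate(omega, pi, i, j):
    """Contribution of the link between states i and j to the total entropy production."""
    forward = omega[i, j] * pi[j]
    backward = omega[j, i] * pi[i]
    if forward == 0.0 and backward == 0.0:
        return 0.0                      # the two states are not connected
    return (forward - backward) * np.log(forward / backward)

pi = steady_state(omega)
n = len(pi)
S_total = sum(link_entropy_rate(omega, pi, i, j)
              for i in range(n) for j in range(i + 1, n))
S_pp = link_entropy_rate(omega, pi, 0, 1)   # observed link between states 1 and 2

print(pi, S_total, S_pp)
```

Because every term in the sum is non-negative, dropping all links but the observed one can only lower the estimate, which is why the passive partial rate is a lower bound.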

The informed partial entropy production \(\dot S_{{\mathrm{IP}}}\) for the single link requires additional information: the observer is assumed to have control over the transition rates of the observed link, without affecting any of the hidden transitions, such that they can stall the corresponding current and record the ratio of populations in the two observed states, \(\pi _1^{{\mathrm{stall}}}/\pi _2^{{\mathrm{stall}}}\). The stalling distribution \(\pi _i^{{\mathrm{stall}}}\) produces an effective thermodynamic description of the observed subsystem53 and an effective affinity with which the informed partial entropy production rate is calculated:

\[\dot S_{{\mathrm{IP}}} = \left( {\omega _{21}\pi _1 - \omega _{12}\pi _2} \right){\mathrm{ln}}\frac{{\omega _{21}\pi _1^{{\mathrm{stall}}}}}{{\omega _{12}\pi _2^{{\mathrm{stall}}}}}. \tag{9}\]

Although the informed partial entropy production was proven to produce a better estimation of the total dissipation compared with the passive partial entropy production, i.e., \(\dot S_{{\mathrm{PP}}} \le \dot S_{{\mathrm{IP}}} \le \dot S\)56, both vanish at stalling conditions. Hence, even if the system is in a nonequilibrium steady-state, when the current over the observed link is zero, these estimators cannot give a nontrivial lower bound on the total entropy production. To be fair, we point out that each estimator uses different information.

For the KLD estimator, we assume that the observer can record whether the system is in states 1 or 2, or in the hidden part of the network, H, which is a coarse-grained state representing the unobserved subsystem. In this case, the resulting contracted network has three states, {1, 2, H}. Jumps between states 1 and 2 follow Poissonian statistics, as in a general continuous-time Markov process, with the same rates as in the original network. On the other hand, jumps from H to 1 or 2 are not Poissonian and depend on the state just prior to entering the hidden part. To apply our results for semi-Markov processes, we thus have to consider the states α(t) = [iprev(t) i(t)], where i(t) = 1, 2, H is the current state and iprev(t) = 1, 2, H is the state right before the last jump. To make the equations more compact, we will use the short-hand notation ij ≡ [i j] for the remainder of this section.

Similar to Eq. (2), the semi-Markov entropy production rate for hidden networks, \(\dot S_{{\mathrm{KLD}}}\), consists of two contributions: the affinity estimator \(\dot S_{{\mathrm{aff}}}\) and the WTD estimator \(\dot S_{{\mathrm{WTD}}}\). In this case, the affinity estimator, Eq. (5), is given by

\[\dot S_{{\mathrm{aff}}} = \frac{{J_{21}^{{\mathrm{ss}}}}}{{\cal{T}}}\,{\mathrm{ln}}\frac{{p([12] \to [2H])\,p([2H] \to [H1])\,p([H1] \to [12])}}{{p([21] \to [1H])\,p([1H] \to [H2])\,p([H2] \to [21])}}, \tag{10}\]

where \(J_{21}^{{\mathrm{ss}}}\) is the stationary current per step from 1 to 2, defined as \(J_{21}^{{\mathrm{ss}}} = R_{[12]} - R_{[21]}\). As expected, this term vanishes when detailed balance holds and the current is zero (see Methods). Applying Eq. (6) to the semi-Markov process results in the following expression for the contribution of the hidden estimator

\[\dot S_{{\mathrm{WTD}}} = \frac{1}{{\cal{T}}}\left\{ {p(1H2)\,D\left[ {\psi (t|[1H] \to [H2])||\psi (t|[2H] \to [H1])} \right] + p(2H1)\,D\left[ {\psi (t|[2H] \to [H1])||\psi (t|[1H] \to [H2])} \right]} \right\}, \tag{11}\]

where p(ijk) = R[ij]p(ij → jk). In Methods, we further show that for a network of a single cycle of states the informed partial entropy production \(\dot S_{{\mathrm{IP}}}\) equals the affinity estimator \(\dot S_{{\mathrm{aff}}}\) defined in Eq. (5). Summarizing, we have the hierarchy \(\dot S_{{\mathrm{PP}}} \le \dot S_{{\mathrm{IP}}} = \dot S_{{\mathrm{aff}}} \le \dot S_{{\mathrm{KLD}}} \le \dot S\).

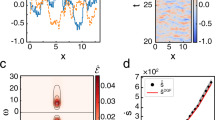

Let us apply the hidden semi-Markov entropy production framework to a specific example of a network with four states, two of which are hidden (Fig. 3a). We have chosen a random 4 × 4 matrix, with non-negative off-diagonal entries and zero-sum columns, as a generator of a continuous-time Markov jump process over the four states. The rates over the observed link were varied according to \(\omega _{12}(F) = \omega _{12}e^{\beta FL}\) and \(\omega _{21}(F) = \omega _{21}e^{ - \beta FL}\) over a range of values of a force F that included the stalling force Fstall, where β = 1/T is the inverse temperature and L is a characteristic length scale. For each value of F, we contracted the dynamics to the three states, 1, 2, and H (Fig. 3b, c), and estimated the waiting time distributions ψ(t|2H → H1) and ψ(t|1H → H2) using a kernel density estimate with a positive support58,59 (see Methods), depicted in Fig. 3d. From those distributions, we derived the hidden semi-Markov entropy production rate \(\dot S_{{\mathrm{KLD}}}\) (Fig. 3e). We further calculated both the passive- and informed-partial entropy production rates to compare all the estimators to the total entropy production rate (Fig. 3e). Our results clearly demonstrate the advantage of using the waiting time distributions for bounding the total entropy production rate compared with the two previous approaches. Our framework can reveal the irreversibility and the underlying dissipation, even when the observed current vanishes, without the need to manipulate the system.

Fig. 3: Hidden network. a Four-state network, as seen by an observer with access only to states 1, 2, and H. b, c Illustration of a trajectory over the four possible states (b), where the gray region corresponds to the hidden part, and the resulting observed semi-Markov dynamics (c). d Kernel density estimation of the waiting time distributions at F = Fstall. e Estimated total entropy production rate \(\dot S\) (solid red line), entropy production for semi-Markov model \(\dot S_{{\mathrm{KLD}}}\) (dashed blue curve), informed partial entropy production rate \(\dot S_{{\mathrm{IP}}}\) (dashed-dotted black curve), the passive partial entropy production rate \(\dot S_{{\mathrm{PP}}}\) (dotted green curve), and the experimental entropy production rate estimated according to the semi-Markov model \(\dot S_{{\mathrm{KLD}}}^{{\mathrm{Exp}}}\) (blue crosses). f, g Relative error (ratio of experimental entropy production rate to analytical value) for three random trajectories as a function of the number of steps at F = Fstall (f) and \(F = 3\beta ^{ - 1}L^{ - 1}\) (g), showing faster convergence away from the stalling force. Inset: p-value for rejecting the null hypothesis that the experimental data was sampled from a zero-mean distribution as a function of the number of steps for F = Fstall (blue curve) and \(F = 3\beta ^{ - 1}L^{ - 1}\) (red curve), showing that the average is statistically significantly different from zero. The numerical simulations were done using the Gillespie algorithm with the following transition rates: ω12 = 2 s\(^{-1}\), ω13 = 0 s\(^{-1}\), ω14 = 1 s\(^{-1}\), ω21 = 3 s\(^{-1}\), ω23 = 2 s\(^{-1}\), ω24 = 35 s\(^{-1}\), ω31 = 0 s\(^{-1}\), ω32 = 50 s\(^{-1}\), ω34 = 0.7 s\(^{-1}\), ω41 = 8 s\(^{-1}\), ω42 = 0.2 s\(^{-1}\), ω43 = 75 s\(^{-1}\), where the diagonal elements were chosen to have zero-sum columns.

The KLD entropy production rate was also estimated from simulated experimental data, obtained by sampling random trajectories of \(10^7\) jumps using the Gillespie algorithm60. The simulated trajectories (Fig. 3b) were coarse-grained into the set of states of the hidden semi-Markov model (Fig. 3c), and the hidden semi-Markov entropy production rate for the simulated experimental data, \(\dot S_{{\mathrm{KLD}}}^{{\mathrm{Exp}}}\), was estimated as above (Fig. 3e, blue crosses). In order to assess the rate of convergence with increasing number of simulated steps, we calculated \(\dot S_{{\mathrm{KLD}}}^{{\mathrm{Exp}}}\) for different fractions of the \(10^7\)-step trajectories, showing <20% error above \(10^5\) steps at stalling, and <5% error away from stalling for trajectories with as few as \(10^4\) steps (Fig. 3f, g). Let us stress that the hidden semi-Markov entropy production rate averaged over three simulated experimental trajectories produced a lower bound on the total entropy production rate, which was strictly positive and statistically significantly different from zero (p < 0.05, Fig. 3g, inset) for all trajectory lengths tested.
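
The sampling and coarse-graining steps described above can be sketched as follows. This is a minimal illustration, not the authors' code: it uses the rates from the Fig. 3 caption without a force factor, a much shorter trajectory, and hypothetical function names (`gillespie`, `coarse_grain`). States are indexed from zero internally, so states 3 and 4 of the network correspond to indices 2 and 3.

```python
import numpy as np

# omega[i, j] is the rate of the jump j -> i (Fig. 3 caption values, in 1/s).
omega = np.array([
    [0.0,  2.0,  0.0,  1.0],
    [3.0,  0.0,  2.0, 35.0],
    [0.0, 50.0,  0.0,  0.7],
    [8.0,  0.2, 75.0,  0.0],
])

def gillespie(omega, n_jumps, start=0, seed=0):
    """Sample a continuous-time Markov jump trajectory with n_jumps jumps."""
    rng = np.random.default_rng(seed)
    n = omega.shape[0]
    s = start
    states, waits = [s], []
    for _ in range(n_jumps):
        rates = omega[:, s]
        k_out = rates.sum()
        waits.append(rng.exponential(1.0 / k_out))    # residence time in s
        s = int(rng.choice(n, p=rates / k_out))       # destination of the jump
        states.append(s)
    return states, waits

def coarse_grain(states, waits, hidden, label="H"):
    """Merge consecutive hidden states into one state and add up their residence times."""
    cg_states, cg_waits = [], []
    for idx, s in enumerate(states):
        obs = label if s in hidden else s + 1         # report observed states as 1, 2
        w = waits[idx] if idx < len(waits) else None  # last state has no complete wait
        if cg_states and cg_states[-1] == obs:
            if w is not None:
                cg_waits[-1] += w
        else:
            cg_states.append(obs)
            if w is not None:
                cg_waits.append(w)
    # Drop a possibly incomplete residence time in the final observed state.
    return cg_states, cg_waits[:len(cg_states) - 1]

states, waits = gillespie(omega, 5000, seed=1)
cg_states, cg_waits = coarse_grain(states, waits, hidden={2, 3})
print(cg_states[:10])
```

The coarse-grained sequence never repeats a state twice in a row, and the time spent wandering inside the hidden part appears as a single, generally non-exponential, residence time in H.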

Molecular motors

A slight modification of the case analyzed in the previous section allows us to study molecular motors with hidden internal states. We are interested in the schemes previously sketched in Fig. 2a, b, where a motor can physically move in space or switch between internal states. The observed motor position is labeled by {..., i − 1, i, i + 1, ...}. All jumps are Poissonian and obey local detailed balance, with an external source of chemical work, Δμ, and an additional mechanical force F that can act only on the spatial transitions.

Analogous to the previous example, the observed dynamics is a second-order semi-Markov process. To make the following equations more intuitive, we use the graphical notation ↑↑ for two consecutive upward jumps (i − 1 → i → i + 1), ↓↑ for a downward jump followed by an upward one, ↑↓ for an upward followed by a downward jump, and ↓↓ for two consecutive downward jumps. Notice that the probabilities are normalized as \(p_{ \uparrow \uparrow } + p_{ \downarrow \uparrow } + p_{ \uparrow \downarrow } + p_{ \downarrow \downarrow } = 1\).

Similar to Eq. (2), we have the decomposition of the KLD estimator into a contribution from state affinities given by

\[\dot S_{{\mathrm{aff}}} = \frac{{J^{{\mathrm{ss}}}}}{{\cal{T}}}\,{\mathrm{ln}}\frac{{p([i - 1,i] \to [i,i + 1])}}{{p([i + 1,i] \to [i,i - 1])}}, \tag{12}\]

where the current per step is Jss = Rup − Rdown with Rup = R[i,i+1] (Rdown = R[i,i−1]) corresponding to the occupancy rate of states moving upward (downward). The contribution due to the relative entropy between waiting time distributions is

\[\dot S_{{\mathrm{WTD}}} = \frac{1}{{\cal{T}}}\left\{ {p_{ \uparrow \uparrow }\,D\left[ {\psi (t| \uparrow \uparrow )||\psi (t| \downarrow \downarrow )} \right] + p_{ \downarrow \downarrow }\,D\left[ {\psi (t| \downarrow \downarrow )||\psi (t| \uparrow \uparrow )} \right]} \right\}. \tag{13}\]

As in the previous examples, the latter term can produce a lower bound on the total entropy production rate even in the absence of observable currents, in which case \(\dot S_{{\mathrm{aff}}} = 0\). Without chemical work (Δμ = 0), however, the waiting time distributions of the up–up (↑↑) and down–down (↓↓) processes become identical and the contribution of \(\dot S_{{\mathrm{WTD}}}\) vanishes as well.

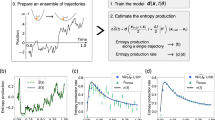

Let us apply the molecular motor semi-Markov entropy production framework to a specific example. We consider the following two-state molecular motor model of a power stroke engine that works by hydrolyzing ATP against an external force F, see Fig. 4a.

Fig. 4: Molecular motor. a Illustration: Active states (red boxed squares) can use a source of chemical energy while passive states (circles) cannot. The chemical energy is used to power the motor against an external force F. b Illustration of a trajectory for four positions, where the hidden internal active state is denoted by the red shaded regions. c, d Waiting time distributions ψ(t) for the up–up (red) and down–down (blue) transitions at stalling for \({\mathrm{\Delta }}\mu = 0\,\beta ^{ - 1}\) (c) and \({\mathrm{\Delta }}\mu = 10\,\beta ^{ - 1}\) (d). Notice that the distributions are only different in the presence of a chemical drive. e Total entropy production rate \(\dot S\) (red), affinity estimator \(\dot S_{{\mathrm{aff}}}\) (green), and entropy production for semi-Markov model \(\dot S_{{\mathrm{KLD}}}\) for Δμ = 0 (left) up to Δμ = 10 (right), as a function of force F, centered at the stall force. f Same as (e) at stalling as a function of chemical drive. The affinity estimator \(\dot S_{{\mathrm{aff}}}\) offers a lower bound constrained by the statistical uncertainty due to the finite amount of data (green shaded region). Calculations were done using the parameters ks = 1 s\(^{-1}\), k0 = 0.01 s\(^{-1}\), and the trajectories were sampled using the Gillespie algorithm61.

The state of the motor is described by its physical position and its internal state, which can be either active, that is, capable of hydrolyzing ATP, or passive. We label the active and passive states as i′ and i, respectively, with i = 0, ±1, ±2, …. Owing to the translational symmetry in the system, all the spatial positions are essentially equivalent. The position of the motor is accessible to an external observer, whereas the two internal states i and i′ are indistinguishable. An example of a trajectory is illustrated in Fig. 4b.

The chemical affinity Δμ, arising from ATP hydrolysis, determines the degree of nonequilibrium in our system and biases the transitions i′↔i + 1, whereas the external force F affects all the spatial transitions, regardless of the internal state. The transition rates between the two internal states are defined as ωi′i = ωii′ = ks. Transition rates between passive states obey local detailed balance: \(\omega _{i,i + 1}/\omega _{i + 1,i} = e^{\beta FL}\), where L is the length of a single spatial jump. From the active state, the system can use the ATP to move upward with rates verifying local detailed balance \(\omega _{i{\prime},i + 1}/\omega _{i + 1,i{\prime}} = e^{\beta (FL - {\mathrm{\Delta }}\mu )}\).
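
The local detailed balance conditions fix only the ratios of the spatial rates. The sketch below shows one way to build a consistent set of rates; the symmetric splitting of the exponent between forward and backward jumps and the use of k0 as the common prefactor of the spatial rates are assumptions made here for illustration and are not stated in the text.

```python
import math

def motor_rates(F, dmu, beta=1.0, L=1.0, ks=1.0, k0=0.01):
    """Rates for one lattice site of the two-state motor (hypothetical parametrization).

    Only the ratios are constrained by local detailed balance; the symmetric
    split of the exponent used below is an assumption of this sketch.
    """
    half_passive = 0.5 * beta * F * L
    half_active = 0.5 * beta * (F * L - dmu)
    return {
        "internal": ks,                                   # i <-> i'
        "passive_down": k0 * math.exp(half_passive),      # omega_{i,i+1}: jump i+1 -> i
        "passive_up": k0 * math.exp(-half_passive),       # omega_{i+1,i}: jump i -> i+1
        "active_down": k0 * math.exp(half_active),        # omega_{i',i+1}: jump i+1 -> i'
        "active_up": k0 * math.exp(-half_active),         # omega_{i+1,i'}: jump i' -> i+1
    }

rates = motor_rates(F=2.0, dmu=10.0)
print(rates["passive_down"] / rates["passive_up"])   # equals exp(beta * F * L)
print(rates["active_down"] / rates["active_up"])     # equals exp(beta * (F * L - dmu))
```

Any other splitting that preserves the two ratios would satisfy the same thermodynamic constraints while changing the kinetics, and therefore the waiting time distributions.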

The resulting waiting time distributions are shown in Fig. 4c, d, and the estimated entropy production rates as a function of external force are depicted in Fig. 4e, with chemical potential ranging from \({\mathrm{\Delta }}\mu = 0\,\beta ^{ - 1}\) to \(10\,\beta ^{ - 1}\). The total entropy production rate \(\dot S\) is calculated using Eq. (7). As expected, the dissipation increases with the nonequilibrium driving force, and vanishes when Δμ = FL = 0. Notice that the affinity estimator \(\dot S_{{\mathrm{aff}}}\) does not provide a nontrivial lower bound on the total entropy production rate \(\dot S\) at stalling, as it is not statistically different from zero (Fig. 4f), and thus cannot distinguish between nonequilibrium and equilibrium processes. In contrast, the semi-Markov estimator \(\dot S_{{\mathrm{KLD}}}\), which accounts for the asymmetry of the waiting time distributions, provides a nontrivial positive bound, even in the absence of observable current.

Discussion

We have analytically derived an estimator of the total entropy production rate using the framework of semi-Markov processes. The novelty of our approach is the utilization of the waiting time distributions, which can be non-Poissonian, allowing us to unravel irreversibility in hidden degrees of freedom arising in any time-series measurement of an arbitrary experimental setup. Our estimator can thus provide a lower bound on the total entropy production rate even in the absence of observable currents. Hence, it can be applied to reveal an underlying nonequilibrium process, even if no net current, flow, or drift is present. We stress that our method fully quantifies irreversibility. Owing to the direct link between the entropy production rate and the relative entropy between a trajectory and its time reversal, as manifested in Eq. (1), our estimator provides the best possible bound on the dissipation rate utilizing time irreversibility. One can consider utilizing other properties of the waiting time distribution to bound the entropy production, through the thermodynamic uncertainty relations4,61,62, for example.

We have illustrated our method with two possible applications: a situation where only a subsystem is accessible to an external observer and a molecular motor whose internal degrees of freedom cannot be resolved. Using these examples, we have demonstrated the advantage of our semi-Markov estimator compared with other entropy production bounds, namely, the passive- and informed-partial entropy production rates, both of which vanish at stalling conditions.

In summary, we have developed an analytic tool that can expose irreversibility otherwise undetectable, and distinguish between equilibrium and nonequilibrium processes. This framework is completely generic and thus opens opportunities in numerous experimental scenarios by providing a new perspective for data analysis.

Methods

Semi-Markov processes, waiting time distributions and steady states

A semi-Markov stochastic process is a renewal process α(t) with a discrete set of states α = 1, 2, …, N. The dynamics is determined by the probability densities of transition times ψβα(t), which are defined as ψβα(t)dt being equal to the probability that the system jumps from state α to state β in the time interval [t, t + dt] if it arrived at site α at time t = 0. By definition ψαα(t) = 0. When the system is a particle jumping between the sites of a lattice, the semi-Markov process is also called a CTRW. For clarity, we will assume this CTRW picture, that is, the system in our discussion will be a particle jumping between sites α.

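The probability densities ψβα(t) are not normalized:

\[p_{\beta \alpha } \equiv \int_0^\infty \psi _{\beta \alpha }(t)\,{\mathrm{d}}t \tag{14}\]

is the probability that, given that the particle arrived at site α, the next jump is α → β. We will assume that the particle eventually leaves any site α, i.e., \(\mathop {\sum}\nolimits_\beta p_{\beta \alpha } = 1\). Then

\[\psi _\alpha (t) \equiv \mathop {\sum}\limits_\beta \psi _{\beta \alpha }(t) \tag{15}\]

is normalized and it is the probability density of the residence time at site α. It is also called the waiting time distribution. Its average

\[\tau _\alpha \equiv \int_0^\infty t\,\psi _\alpha (t)\,{\mathrm{d}}t \tag{16}\]

is the mean residence time or mean waiting time. We can also define the waiting time distribution conditioned on a given jump α → β,

\[\psi (t|\alpha \to \beta ) \equiv \frac{{\psi _{\beta \alpha }(t)}}{{p_{\beta \alpha }}}, \tag{17}\]

which is normalized. The function ψβα(t) is in fact the joint probability distribution of the time t and the jump α → β.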

The transition probabilities pβα determine a Markov chain given by the visited states α1, α2, α3, ..., regardless of the times when the jumps occur. The transition matrix of this Markov chain is {pβα} and the stationary probability distribution Rα verifies

\[R_\beta = \mathop {\sum}\limits_\alpha p_{\beta \alpha }R_\alpha , \tag{18}\]

i.e., the distribution Rα is the right eigenvector of the stochastic matrix {pβα} with eigenvalue 1. Moreover, if the Markov chain is ergodic, then the distribution Rα is precisely the fraction of visits the system makes to site α in the stationary regime. Thus, we call Rα the distribution of visits.

From the distribution of visits one can easily obtain the stationary distribution of the process α(t),

\[\pi _\alpha = \frac{{R_\alpha \tau _\alpha }}{{\cal{T}}}, \tag{19}\]

since the particle visits the state α a fraction of steps Rα and spends an average time τα in each step. The normalization constant \({\cal{T}} \equiv \mathop {\sum}\nolimits_\alpha R_\alpha \tau _\alpha\) is the average time per step.

The stationary current in the Markov chain from state α to β is

\[J_{\beta \alpha }^{{\mathrm{ss}}} = p_{\beta \alpha }R_\alpha - p_{\alpha \beta }R_\beta . \tag{20}\]

This is in fact the current per step in the original semi-Markov system since, in an ensemble of very long trajectories, it is the net number of particles that jump from α to β divided by the number of steps. Since the duration of a long stationary trajectory with K steps (K ≫ 1) is K\({\cal{T}}\), the current per unit of time is \(J_{\beta \alpha }^{{\mathrm{ss}}}/{\cal{T}}\). Notice that the average time per step \({\cal{T}}\) acts as a conversion factor that allows one to express currents, entropy production, etc. either as per step or as per unit of time.
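As a minimal numerical sketch of these definitions, the Python snippet below computes the distribution of visits, the average time per step, the stationary distribution, and the currents per step for a hypothetical three-site ring. The matrix `p`, the vector `tau`, and the function names are illustrative assumptions and are not taken from the analysis in this paper; `p[b, a]` stores the jump probability from a to b, so each column sums to one.

```python
import numpy as np

def visit_distribution(p):
    """Right eigenvector of the column-stochastic matrix p with eigenvalue 1."""
    vals, vecs = np.linalg.eig(p)
    k = np.argmin(np.abs(vals - 1.0))
    R = np.real(vecs[:, k])
    return R / R.sum()          # normalization also fixes the overall sign

def stationary_quantities(p, tau):
    R = visit_distribution(p)
    T = float(R @ tau)          # average time per step
    pi = R * tau / T            # stationary distribution of alpha(t)
    flux = p * R[None, :]       # flux[b, a] = p_ba * R_a
    J = flux - flux.T           # J[b, a]: net current per step from a to b
    return R, T, pi, J

# Hypothetical example: biased three-site ring with site-dependent mean waiting times.
p = np.array([[0.0, 0.3, 0.7],
              [0.7, 0.0, 0.3],
              [0.3, 0.7, 0.0]])
tau = np.array([1.0, 2.0, 0.5])

R, T, pi, J = stationary_quantities(p, tau)
J_per_time = J / T              # conversion from per step to per unit of time
```

Because this example matrix is doubly stochastic, the distribution of visits is uniform, while the stationary distribution is weighted by the mean waiting times.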

The Markovian case

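If the process α(t) is Markovian, then the jumps are Poissonian and transition time densities are exponential. Let ωβα be the rate of jumps from α to β. The mean waiting time at site α is the inverse of the total outgoing rate:

\[\tau _\alpha = \frac{1}{{\mathop {\sum}\nolimits_\beta \omega _{\beta \alpha }}}, \tag{21}\]

and the waiting time distributions are

\[\psi _{\beta \alpha }(t) = \omega _{\beta \alpha }\,e^{ - t/\tau _\alpha },\qquad \psi (t|\alpha \to \beta ) = \psi _\alpha (t) = \frac{{e^{ - t/\tau _\alpha }}}{{\tau _\alpha }}, \tag{22}\]

with jump probabilities pβα = ταωβα. Notice that the waiting time distribution ψ(t|α → β) does not depend on β. The distribution of visits Rα verifies

\[R_\beta = \mathop {\sum}\limits_\alpha \tau _\alpha \omega _{\beta \alpha }R_\alpha , \tag{23}\]

and the stationary distribution πα obeys

\[\mathop {\sum}\limits_\alpha \omega _{\beta \alpha }\pi _\alpha = \frac{{\pi _\beta }}{{\tau _\beta }} = \mathop {\sum}\limits_\alpha \omega _{\alpha \beta }\pi _\beta , \tag{24}\]

which is the equation for the stationary distribution that one obtains from the master equation

\[\dot \pi _\beta (t) = \mathop {\sum}\limits_\alpha \left[ {\omega _{\beta \alpha }\pi _\alpha (t) - \omega _{\alpha \beta }\pi _\beta (t)} \right]. \tag{25}\]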
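The Markovian relations can be checked numerically. The sketch below, with a hypothetical rate matrix `w` (where `w[b, a]` is the rate of jumps from a to b), builds the mean waiting times and jump probabilities, finds the stationary distribution from the master-equation generator, and recovers the distribution of visits from it. All names and numerical values are assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical rates: w[b, a] is the jump rate from a to b (zero diagonal).
w = np.array([[0.0, 1.0, 4.0],
              [2.0, 0.0, 1.0],
              [1.0, 3.0, 0.0]])

tau = 1.0 / w.sum(axis=0)        # mean waiting time = 1 / total outgoing rate
p = w * tau[None, :]             # jump probabilities p[b, a] = tau_a * w[b, a]

# Generator of the master equation: d(pi)/dt = gen @ pi.
gen = w - np.diag(w.sum(axis=0))

# Stationary distribution: eigenvector of gen with eigenvalue closest to zero.
vals, vecs = np.linalg.eig(gen)
pi = np.real(vecs[:, np.argmin(np.abs(vals))])
pi = pi / pi.sum()

# Distribution of visits implied by pi: R_a is proportional to pi_a / tau_a.
R = pi / tau
R = R / R.sum()
```

The last step inverts the relation between the stationary distribution and the distribution of visits, so `R` should be a fixed point of the jump-probability matrix `p`.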

Decimation of Markov chains

Semi-Markov processes arise in a natural way when states are removed or decimated from Markov processes with certain topologies. Consider a Markov process where two sites, 1 and 2, are connected through a closed network of states i = 3, 4, … that we want to decimate, as sketched in Fig. 2c. If the observer cannot discern between states i = 3, 4, …, the resulting three-state process with i(t) = 1, 2, H is a second-order semi-Markov chain. We want to calculate the effective transition time distribution \(\psi _{21}^{{\mathrm{decim}}}(t)\) from state 1 to state 2 in terms of the distributions ψij(t) of the initial Markov chain. For this purpose, we have to sum over all possible paths from 1 to 2 through the decimated network.

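Consider first the paths with exactly n + 1 jumps, like γn+1 = {1 → i1 → i2…in → 2}, where ik = 3, 4, …. The probability that such a path occurs with an exact duration t is

\[P(\gamma _{n + 1},t) = \int_0^\infty {\mathrm{d}}t_1 \cdots \int_0^\infty {\mathrm{d}}t_{n + 1}\,\delta \left( {t - \mathop {\sum}\limits_{k = 1}^{n + 1} t_k} \right)\psi _{2i_n}(t_{n + 1})\,\psi _{i_ni_{n - 1}}(t_n) \cdots \psi _{i_11}(t_1). \tag{26}\]

This is a convolution. If one performs the Laplace transform on all time-dependent functions, generically denoted by a tilde,

\[\tilde f(s) \equiv \int_0^\infty e^{ - st}f(t)\,{\mathrm{d}}t, \tag{27}\]

then Eq. (26) simplifies to

\[\tilde P(\gamma _{n + 1},s) = \tilde \psi _{2i_n}(s)\,\tilde \psi _{i_ni_{n - 1}}(s) \cdots \tilde \psi _{i_11}(s). \tag{28}\]

The transition time distribution \(\psi _{21}^{{\mathrm{decim}}}(t)\) in the decimated network is the sum of P(γn+1, t) over all possible paths with an arbitrary number of steps. For Laplace transformed distributions, this is written as

\[\tilde \psi _{21}^{{\mathrm{decim}}}(s) = \mathop {\sum}\limits_{n = 1}^\infty \mathop {\sum}\limits_{i_1, \ldots ,i_n} \tilde \psi _{2i_n}(s)\,\tilde \psi _{i_ni_{n - 1}}(s) \cdots \tilde \psi _{i_11}(s), \tag{29}\]

where the sum runs over all possible paths, that is, the indexes ik = 3, 4, … take on all possible values corresponding to decimated sites. Then the sum can be expressed in terms of the matrix Ψ(t) whose entries are the transition time densities [Ψ(t)]ji = ψji(t), i, j = 3, 4, …. If \(\tilde \Psi (s)\) is the corresponding Laplace transform of that matrix, one has

\[\tilde \psi _{21}^{{\mathrm{decim}}}(s) = \mathop {\sum}\limits_{i,j} \tilde \psi _{2j}(s)\left[ {\left( {{\mathbb{I}} - \tilde \Psi (s)} \right)^{ - 1}} \right]_{ji}\tilde \psi _{i1}(s), \tag{30}\]

which is a sum only over all the decimated sites i, j = 3, 4, … that are connected to sites 1 and 2, respectively.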
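The resummation over hidden paths can be sketched numerically for an underlying Markov process, where the Laplace transform of each transition time density is a rate divided by (s plus the total exit rate). The code below compares the matrix-inverse expression with an explicit, truncated sum over paths. The five-state rate matrix `w`, the zero-based index convention (indices 0 and 1 for the observed sites 1 and 2, indices 2 to 4 for the decimated sites), and the function names are assumptions made for illustration only.

```python
import numpy as np

def laplace_psi(w, s):
    """psi~[j, i](s) = w[j, i] / (s + total exit rate of i), valid for exponential waiting times."""
    return w / (s + w.sum(axis=0))[None, :]

def decimated_transition(w, src, dst, hidden, s):
    """Laplace transform of the src -> dst transition density through the hidden sites."""
    psi = laplace_psi(w, s)
    Psi_h = psi[np.ix_(hidden, hidden)]   # transitions among hidden sites
    enter = psi[hidden, src]              # src -> hidden site i
    leave = psi[dst, hidden]              # hidden site j -> dst
    resolvent = np.linalg.inv(np.eye(len(hidden)) - Psi_h)
    return float(leave @ resolvent @ enter)

def path_sum(w, src, dst, hidden, s, n_max=200):
    """Same quantity as an explicit sum over paths with up to n_max hidden-to-hidden jumps."""
    psi = laplace_psi(w, s)
    Psi_h = psi[np.ix_(hidden, hidden)]
    vec = psi[hidden, src]
    total = 0.0
    for _ in range(n_max + 1):
        total += float(psi[dst, hidden] @ vec)
        vec = Psi_h @ vec
    return total

# Hypothetical rates, w[j, i] = rate of jumps i -> j.
w = np.array([[0.0, 1.0, 1.0, 0.0, 1.0],
              [1.0, 0.0, 0.0, 2.0, 0.0],
              [2.0, 0.0, 0.0, 1.0, 1.0],
              [0.0, 3.0, 2.0, 0.0, 2.0],
              [1.0, 0.0, 1.0, 1.0, 0.0]])
hidden = [2, 3, 4]

closed_form = decimated_transition(w, 0, 1, hidden, s=0.5)
series = path_sum(w, 0, 1, hidden, s=0.5)
```

At s = 0 the transforms reduce to probabilities, so the probabilities of leaving the hidden block toward either observed site should add up to the probability of entering it.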

The decimation procedure can be used to derive transition time distributions in a kinetic network when the observer cannot discern among a set of states, say 3, 4, 5,…, that are generically labeled as H for hidden, as in Fig. 2c. For the specific case of the figure, the effective transition time distribution from site 1 to site H, for instance, can be written as

whereas the distributions for jumps starting at H depend on the previous state. For instance, if H is reached from 1, the random walk within H starts at site 3 with probability p31/(p31 + p51) and site 5 with probability p51/(p31 + p51). The transition time distribution corresponding to the jump [1H] → [H2] is

where the matrix \(\tilde \Psi (s)\) is a 3 × 3 matrix corresponding to the Laplace transform of the transition time distributions among sites 3, 4, and 5.

Irreversibility in semi-Markov processes

Here we calculate the relative entropy between a stationary trajectory γ and its time reversal \(\tilde \gamma\) in a generic semi-Markov process. A trajectory γ is fully described by the sequence of jumps (see Fig. 5):

and occurs with a probability (conditioned on the initial jump α0 → α1 at t = 0)

The reverse trajectory is

where we assume, for the sake of generality, that states can change under time reversal, \(\tilde \alpha\) being the time reversal of state α. The probability to observe \(\tilde \gamma\), conditioned on the initial jump \(\tilde \alpha _{n + 1} \to \tilde \alpha _n\) at t = 0, is

It is again convenient to consider the forward and backward trajectories without the waiting times, i.e.,

and the probability to observe those trajectories are

The initial jumps of γ and \(\tilde \gamma\) do not contribute to the entropy production in the stationary regime. Then the relative entropy per jump reads

Each time integral can be written as

where α, β, μ is a substring of the forward trajectory σ (α = αk, β = αk+1, μ = αk+2). Inserting this expression in Eq. (41),

Notice that pβαRα is the probability to observe the sequence α, β in the stationary forward trajectory and pμβpβαRα is the probability to observe the sequence α, β, μ. Finally, we can obtain the expression used in the main text for the entropy production per unit of time dividing by the conversion factor \({\cal{T}}\) (average time per step), that is \(\dot S = \delta S/{\cal{T}}\). The result is

where the entropy production corresponding to the affinity of states reads

and the one corresponding to the waiting time distributions is

If \(\alpha = \tilde \alpha\), then the affinity entropy production can be written as

which vanishes in the absence of currents.
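The two contributions can be illustrated with a short numerical sketch. It assumes that, for states that are even under time reversal, the affinity contribution per step is the sum over ordered pairs of pβαRα ln(pβα/pαβ), and that the waiting time contribution is built from Kullback-Leibler divergences between conditional waiting time distributions. The example matrix, the exponential test densities, and the function names are hypothetical and serve only to check the numerics against known closed forms.

```python
import numpy as np

def affinity_per_step(p, R):
    """Sum over ordered pairs of p[b, a] * R[a] * ln(p[b, a] / p[a, b])."""
    total = 0.0
    n = len(R)
    for a in range(n):
        for b in range(n):
            if a != b and p[b, a] > 0 and p[a, b] > 0:
                total += p[b, a] * R[a] * np.log(p[b, a] / p[a, b])
    return total

def kld_on_grid(f, g, t):
    """Trapezoidal estimate of D[f||g] = integral of f ln(f/g) on a uniform grid t."""
    integrand = f * np.log(f / g)
    dt = t[1] - t[0]
    return dt * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1]))

# Hypothetical biased three-site ring; it is doubly stochastic, so R is uniform.
p = np.array([[0.0, 0.3, 0.7],
              [0.7, 0.0, 0.3],
              [0.3, 0.7, 0.0]])
R = np.full(3, 1.0 / 3.0)
s_aff = affinity_per_step(p, R)

# Two exponential waiting time densities with rates 2 and 1 (illustrative only).
t = np.linspace(0.0, 40.0, 400001)
f = 2.0 * np.exp(-2.0 * t)
g = np.exp(-t)
d = kld_on_grid(f, g, t)
```

For this ring the affinity term equals the net current per step times ln(0.7/0.3) summed over the three links, and the divergence between the two exponentials has the closed form ln 2 − 1/2, which gives two independent checks of the code.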

Second-order semi-Markov processes

A second-order semi-Markov process i(t) also describes the trajectory of a system that jumps among a discrete set of states i = 1, 2, …. However, i(t) is not semi-Markov because the transition time distributions depend on the previous state iprev(t) visited right before the last jump. Since this dependence extends only to the previous state, the vector α(t) ≡ [iprev(t) i(t)] is indeed a semi-Markov process.

To quantify the irreversibility of a second-order Markov chain, we introduce the time-reversal state of α = [ij], which is \(\tilde \alpha = [ji]\). However, this is not enough to reconstruct the backward trajectory, since there is a shift compared with the simple semi-Markov case, as illustrated in Fig. 6. In the forward trajectory, the system spends a time tk in state αk = [ik−1ik], with k = 1, …, n, whereas in the backward trajectory it spends the same time tk in state \(\tilde \alpha _{k + 1} = [i_{k + 1}i_k]\). Consequently, the probabilities of the forward and backward trajectories are, respectively,

Repeating the arguments of the previous section, one obtains

The contribution to the entropy production (per step) due to the state affinities now reads

and the contribution due to the waiting time distributions is given by

where p(ijk) = R[ij]p([ij] → [jk]) is the probability to observe the sequence i→j→k in the trajectory and p(ij) = R[ij] is the probability to observe the sequence i→j.
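In practice, the triplet statistics can be estimated by counting in an observed sequence of visited states. The sketch below assumes the affinity contribution per step takes the form of a sum over triplets of p(ijk) ln{p([ij] → [jk])/p([kj] → [ji])}, which follows from comparing each forward transition with its time-reversed counterpart. The function name, the synthetic trajectory, and the choice to skip triplets whose reverse is never observed are all assumptions of this illustration, not the estimator used for the figures.

```python
import numpy as np
from collections import Counter

def second_order_affinity(seq):
    """Plug-in estimate of the affinity term per step from a sequence of visited states."""
    seq = list(seq)
    triplets = Counter(zip(seq[:-2], seq[1:-1], seq[2:]))
    n_trip = sum(triplets.values())
    # pairs[(i, j)]: number of times the jump i -> j is followed by another jump
    pairs = Counter()
    for (i, j, k), c in triplets.items():
        pairs[(i, j)] += c
    total = 0.0
    for (i, j, k), c in triplets.items():
        rev = triplets.get((k, j, i), 0)
        if rev == 0:
            continue  # reverse triplet never observed: term skipped (crude regularization)
        p_fwd = c / pairs[(i, j)]      # estimate of p([ij] -> [jk])
        p_bwd = rev / pairs[(k, j)]    # estimate of p([kj] -> [ji])
        total += (c / n_trip) * np.log(p_fwd / p_bwd)
    return total

# Synthetic first-order ring with three sites: step +1 with probability 0.7, -1 with 0.3.
rng = np.random.default_rng(0)
steps = rng.choice([1, -1], size=200_000, p=[0.7, 0.3])
seq = (np.cumsum(steps) % 3).tolist()

estimate = second_order_affinity(seq)
expected = 0.4 * np.log(7.0 / 3.0)     # exact value for this first-order example
```

For a first-order chain the second-order expression reduces to the ordinary Markov-chain result, so the estimate should approach `expected` as the trajectory grows. With short trajectories, the skipped terms and the plug-in probabilities can bias the estimate.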

It is interesting to particularize Eq. (51) to a ring with N sites. This is the case of our examples—the hidden network and the molecular motor. In this case, in the stationary regime,

since each site has only two neighbors and therefore p(ijk) + p(iji) = p(ij) for any triplet of contiguous sites ijk. Here Jss is the stationary current between any pair of contiguous sites. Hence, we can write the affinity as

which is proportional to the current. The argument of the logarithm also vanishes at zero current (see Eq. (57) below); consequently, the affinity entropy tends to zero quadratically as the force is tuned to the stalling condition. This is the usual behavior in linear irreversible thermodynamics, but recall that for semi-Markov processes the affinity entropy production misses the nonequilibrium signature that is present in the waiting time distributions and is assessed by \(\dot S_{{\mathrm{WTD}}}\).

Affinity and informed partial entropy production

Here we show that the informed partial entropy production equals the affinity entropy production for the case of a generic hidden kinetic network proposed in the main text where the observed network forms a single cycle.

First, let us generalize the detailed balance condition for a second-order Markov ring with three states, i = 1, 2, H, and zero stationary current. The stationary distribution R[ij] verifies the master Eq. (18):

If the current vanishes, R[ij] = R[ji] for all i, j, and these equations reduce to

Multiplying the three equations we get the generalized detailed balance condition:

In the observable network, the transitions from states 1 and 2 are still Poissonian and independent of the previous state:

At stall force, the generalized detailed balance condition in Eq. (57) holds and can be written as

where we have taken into account that only the rates ω12 and ω21 are tuned in the protocol proposed by Polettini and Esposito to obtain the informed partial entropy production.

The current in the direction 1 → 2 → H → 1 in the stationary regime can be written as Jss/T = ω12π2 − ω21π1. Then, at stall force \(\omega _{12}^{{\mathrm{stall}}}\pi _2^{{\mathrm{stall}}} = \omega _{21}^{{\mathrm{stall}}}\pi _1^{{\mathrm{stall}}}\). With all these considerations, the argument of the logarithm in Eq. (9) of the main text can be written as

Comparing Eq. (9) in the main text with Eq. (54), one immediately gets \(\dot S_{{\mathrm{IP}}} = \dot S_{{\mathrm{aff}}}\).

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

Source code is available from the corresponding authors upon reasonable request.

References

Maes, C. & Netočný, K. Time-reversal and entropy. J. Stat. Phys. 110, 269–310 (2003).

Parrondo, J. M., Van den Broeck, C. & Kawai, R. Entropy production and the arrow of time. New J. Phys. 11, 073008 (2009).

Gnesotto, F., Mura, F., Gladrow, J. & Broedersz, C. Broken detailed balance and non-equilibrium dynamics in living systems: a review. Rep. Prog. Phys. 81, 066601 (2018).

Li, J., Horowitz, J. M., Gingrich, T. R. & Fakhri, N. Quantifying dissipation using fluctuating currents. Nat. Commun. 10, 1666 (2019).

Brown, A. I. & Sivak, D. A. Toward the design principles of molecular machines. Physics in Canada 73 (2017).

Datta, S. Electronic Transport in Mesoscopic Systems (Cambridge University Press, 1997).

Castets, V., Dulos, E., Boissonade, J. & De Kepper, P. Experimental evidence of a sustained standing Turing-type nonequilibrium chemical pattern. Phys. Rev. Lett. 64, 2953 (1990).

Astumian, R. D. & Bier, M. Fluctuation driven ratchets: molecular motors. Phys. Rev. Lett. 72, 1766 (1994).

Brokaw, C. Calcium-induced asymmetrical beating of triton-demembranated sea urchin sperm flagella. J. Cell Biol. 82, 401 (1979).

Battle, C. et al. Broken detailed balance at mesoscopic scales in active biological systems. Science 352, 604–607 (2016).

Vilfan, A. & Jülicher, F. Hydrodynamic flow patterns and synchronization of beating cilia. Phys. Rev. Lett. 96, 058102 (2006).

Gladrow, J., Fakhri, N., MacKintosh, F., Schmidt, C. & Broedersz, C. Broken detailed balance of filament dynamics in active networks. Phys. Rev. Lett. 116, 248301 (2016).

Fodor, É. et al. Nonequilibrium dissipation in living oocytes. EPL (Europhys. Lett.) 116, 30008 (2016).

Zia, R. & Schmittmann, B. Probability currents as principal characteristics in the statistical mechanics of non-equilibrium steady states. J. Stat. Mech.: Theory Exp. 2007, P07012 (2007).

Rupprecht, J.-F. & Prost, J. A fresh eye on nonequilibrium systems. Science 352, 514–515 (2016).

Martin, P., Hudspeth, A. & Jülicher, F. Comparison of a hair bundle's spontaneous oscillations with its response to mechanical stimulation reveals the underlying active process. Proc. Natl Acad. Sci. USA 98, 14380–14385 (2001).

Mizuno, D., Tardin, C., Schmidt, C. F. & MacKintosh, F. C. Nonequilibrium mechanics of active cytoskeletal networks. Science 315, 370 (2007).

Bohec, P. et al. Probing active forces via a fluctuation-dissipation relation: Application to living cells. EPL (Europhys. Lett.) 102, 50005 (2013).

Liphardt, J., Dumont, S., Smith, S. B., Tinoco, I. & Bustamante, C. Equilibrium information from nonequilibrium measurements in an experimental test of Jarzynski's equality. Science 296, 1832–1835 (2002).

Collin, D. et al. Verification of the Crooks fluctuation theorem and recovery of RNA folding free energies. Nature 437, 231 (2005).

Toyabe, S., Sagawa, T., Ueda, M., Muneyuki, E. & Sano, M. Experimental demonstration of information-to-energy conversion and validation of the generalized Jarzynski equality. Nat. Phys. 6, 988 (2010).

Xiong, T. et al. Experimental verification of a Jarzynski-related information-theoretic equality by a single trapped ion. Phys. Rev. Lett. 120, 010601 (2018).

Seifert, U. Entropy production along a stochastic trajectory and an integral fluctuation theorem. Phys. Rev. Lett. 95, 040602 (2005).

Kawai, R., Parrondo, J. & Van den Broeck, C. Dissipation: the phase-space perspective. Phys. Rev. Lett. 98, 080602 (2007).

Maes, C. The fluctuation theorem as a Gibbs property. J. Stat. Phys. 95, 367–392 (1999).

Roldán, É., Barral, J., Martin, P., Parrondo, J. M. & Jülicher, F. Arrow of time in active fluctuations. Preprint at https://arxiv.org/pdf/1803.04743 (2018).

Horowitz, J. & Jarzynski, C. Illustrative example of the relationship between dissipation and relative entropy. Phys. Rev. E 79, 021106 (2009).

Gaveau, B., Granger, L., Moreau, M. & Schulman, L. Dissipation, interaction, and relative entropy. Phys. Rev. E 89, 032107 (2014).

Gaveau, B., Granger, L., Moreau, M. & Schulman, L. S. Relative entropy, interaction energy and the nature of dissipation. Entropy 16, 3173–3206 (2014).

Cover, T. M. & Thomas, J. A. Elements of Information Theory (John Wiley & Sons, 2012).

Parrondo, J. & de Cisneros, B. J. Energetics of Brownian motors: a review. Appl. Phys. A 75, 179–191 (2002).

Roldán, É. & Parrondo, J. M. Estimating dissipation from single stationary trajectories. Phys. Rev. Lett. 105, 150607 (2010).

Tu, Y. The nonequilibrium mechanism for ultrasensitivity in a biological switch: Sensing by Maxwell's demons. Proc. Natl Acad. Sci. USA 105, 11737–11741 (2008).

Kindermann, F. et al. Nonergodic diffusion of single atoms in a periodic potential. Nat. Phys. 13, 137 (2017).

Schulz, J. H., Barkai, E. & Metzler, R. Aging renewal theory and application to random walks. Phys. Rev. X 4, 011028 (2014).

Metzler, R., Jeon, J.-H., Cherstvy, A. G. & Barkai, E. Anomalous diffusion models and their properties: nonstationarity, non-ergodicity, and ageing at the centenary of single particle tracking. Phys. Chem. Chem. Phys. 16, 24128–24164 (2014).

Scalas, E. The application of continuous-time random walks in finance and economics. Phys. A: Stat. Mech. Appl. 362, 225–239 (2006).

Fisher, M. E. & Kolomeisky, A. B. Simple mechanochemistry describes the dynamics of kinesin molecules. Proc. Natl Acad. Sci. USA 98, 7748–7753 (2001).

Weigel, A. V., Simon, B., Tamkun, M. M. & Krapf, D. Ergodic and nonergodic processes coexist in the plasma membrane as observed by single-molecule tracking. Proc. Natl Acad. Sci. USA 108, 6438–6443 (2011).

Horowitz, J. M., Zhou, K. & England, J. L. Minimum energetic cost to maintain a target nonequilibrium state. Phys. Rev. E 95, 042102 (2017).

Horowitz, J. & England, J. Information-theoretic bound on the entropy production to maintain a classical nonequilibrium distribution using ancillary control. Entropy 19, 333 (2017).

Pietzonka, P., Barato, A. C. & Seifert, U. Universal bound on the efficiency of molecular motors. J. Stat. Mech.: Theory Exp. 2016, 124004 (2016).

Brown, A. I. & Sivak, D. A. Allocating dissipation across a molecular machine cycle to maximize flux. Proc. Natl Acad. Sci. USA 114, 11057–11062 (2017).

Large, S. J. & Sivak, D. A. Optimal discrete control: minimizing dissipation in discretely driven nonequilibrium systems. Preprint at https://arxiv.org/pdf/1812.08216 (2018).

Cinlar, E. Introduction to Stochastic Processes (Courier Corporation, 2013).

Bedeaux, D., Lakatos-Lindenberg, K. & Shuler, K. E. On the relation between master equations and random walks and their solutions. J. Math. Phys. 12, 2116–2123 (1971).

Roldán, É. & Parrondo, J. M. Entropy production and Kullback-Leibler divergence between stationary trajectories of discrete systems. Phys. Rev. E 85, 031129 (2012).

Esposito, M. & Lindenberg, K. Continuous-time random walk for open systems: fluctuation theorems and counting statistics. Phys. Rev. E 77, 051119 (2008).

Maes, C., Netočný, K. & Wynants, B. Dynamical fluctuations for semi-Markov processes. J. Phys. A: Math. Theor. 42, 365002 (2009).

Wang, H. & Qian, H. On detailed balance and reversibility of semi-Markov processes and single-molecule enzyme kinetics. J. Math. Phys. 48, 013303 (2007).

Andrieux, D. et al. Entropy production and time asymmetry in nonequilibrium fluctuations. Phys. Rev. Lett. 98, 150601 (2007).

Shiraishi, N. & Sagawa, T. Fluctuation theorem for partially masked nonequilibrium dynamics. Phys. Rev. E 91, 012130 (2015).

Polettini, M. & Esposito, M. Effective thermodynamics for a marginal observer. Phys. Rev. Lett. 119, 240601 (2017).

Van den Broeck, C. & Esposito, M. Ensemble and trajectory thermodynamics: a brief introduction. Phys. A: Stat. Mech. Appl. 418, 6–16 (2015).

Esposito, M. & Parrondo, J. M. Stochastic thermodynamics of hidden pumps. Phys. Rev. E 91, 052114 (2015).

Bisker, G., Polettini, M., Gingrich, T. R. & Horowitz, J. M. Hierarchical bounds on entropy production inferred from partial information. J. Stat. Mech.: Theory Exp. 2017, 093210 (2017).

Polettini, M. & Esposito, M. Effective fluctuation and response theory. Preprint at https://arxiv.org/pdf/1803.03552 (2018).

Terrell, G. R. & Scott, D. W. Variable kernel density estimation. Ann. Stat. 20, 1236–1265 (1992).

Botev, Z. I. et al. Kernel density estimation via diffusion. Ann. Stat. 38, 2916–2957 (2010).

Gillespie, D. T. Exact stochastic simulation of coupled chemical reactions. J. Phys. Chem. 81, 2340–2361 (1977).

Gingrich, T. R., Horowitz, J. M., Perunov, N. & England, J. L. Dissipation bounds all steady-state current fluctuations. Phys. Rev. Lett. 116, 120601 (2016).

Barato, A. C. & Seifert, U. Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015).

Acknowledgements

I.A.M. and J.M.R.P. acknowledge funding from the Spanish Government through grants TerMic (FIS2014-52486-R) and Contract (FIS2017-83709-R). I.A.M. acknowledges funding from Juan de la Cierva program. G.B. acknowledges the Zuckerman STEM Leadership Program. J.M.H. is supported by the Gordon and Betty Moore Foundation as a Physics of Living Systems Fellow through Grant No. GBMF4513.

Contributions

J.M.R.P. conceived the project. I.A.M. and G.B. performed the numerical simulations and analyzed the data. All authors discussed the results and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Martínez, I.A., Bisker, G., Horowitz, J.M. et al. Inferring broken detailed balance in the absence of observable currents. Nat Commun 10, 3542 (2019). https://doi.org/10.1038/s41467-019-11051-w
