Abstract

Systems coupled to multiple thermodynamic reservoirs can exhibit nonequilibrium dynamics, breaking detailed balance to generate currents. To power these currents, the entropy of the reservoirs increases. The rate of entropy production, or dissipation, is a measure of the statistical irreversibility of the nonequilibrium process. By measuring this irreversibility in several biological systems, recent experiments have detected that particular systems are not in equilibrium. Here we discuss three strategies to replace binary classification (equilibrium versus nonequilibrium) with a quantification of the entropy production rate. To illustrate, we generate time-series data for the evolution of an analytically tractable bead-spring model. Probability currents can be inferred and utilized to indirectly quantify the entropy production rate, but this approach requires prohibitive amounts of data in high-dimensional systems. This curse of dimensionality can be partially mitigated by using the thermodynamic uncertainty relation to bound the entropy production rate using statistical fluctuations in the probability currents.

Introduction

Nonequilibrium dynamics is an essential physical feature of biological and active matter systems1,2,3. By harvesting a fuel—in the form of solar energy, a redox potential, or a metabolic sugar—the molecular dynamics in these systems differs profoundly from the equilibrium case. Some of the fuel’s free energy is utilized to perform work or is stored in an alternative form, but the remainder is dissipated into the environment, often in the form of heat1,4. The energetic loss can alternatively be cast as an increase in entropy of the environment, and the entropy production is associated with broken time-reversal symmetry in the system’s dynamics5,6,7. This connection has been leveraged to experimentally classify particular biophysical processes as thermal or active8,9 based on the existence of probability currents10,11. There is great interest in going beyond this binary classification—thermal versus active—to experimentally quantify how active, or how nonequilibrium, a process is12,13,14. Such a quantification could, for example, provide insight into how efficiently molecular motors are able to work together to drive large-scale motions15,16,17,18,19.

One way to quantify this nonequilibrium activity is to measure the dissipation rate, or how much free energy is lost per unit time. In a biophysical setting, a direct local calorimetric measurement is challenging, but signatures of the dissipation are encoded in stochastic fluctuations of the system20, even far from equilibrium21,22,23,24,25,26,27,28,29. We set out to develop and explore strategies for inferring the dissipation rate from these experimentally accessible nonequilibrium fluctuations. In a system of interacting driven colloids, where all degrees of freedom are tracked, Lander et al. have indirectly measured dissipation from fluctuations27. However, it should also be possible to bound dissipation on the basis of nonequilibrium fluctuations in a subset of the relevant degrees of freedom. As a tangible example of our motivation, consider the experiment of Battle et al., which tracked cilia shape fluctuations to determine that the cilia dynamics were driven out of equilibrium9. With suitable analysis of those shape fluctuations, might one determine, or at least constrain, the free-energy cost of sustaining the cilia’s motion?

Though our ultimate motivations pertain to these complex systems, here we present an exhaustive analytical and numerical study of a tractable model30. Using a model consisting of beads coupled by linear springs, we demonstrate how the statistical properties of trajectories provide information about the dissipation rate. The bead-spring model furthermore allows us to address various practical considerations that will be important for future experimental applications of the inference techniques: how much data is required, what is the role of coarse graining, and what can be done about the curse of dimensionality. We show that fluctuations in nonequilibrium currents can provide a route to bound the dissipation rate, even in high-dimensional dynamical systems operating outside a linear-response regime. Crucially, we anticipate many of these insights will support the data analysis of experimentally accessible biological and active matter systems.

Results

Bead-spring model

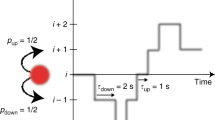

As one of the simplest possible nonequilibrium models, we consider two coupled beads, each allowed to fluctuate in one dimension. The beads are connected to each other and to the boundary walls by linear springs with stiffness k (see Fig. 1). We imagine that the beads are embedded in two different viscous fluids, one hot with temperature Th and the other cold with temperature Tc. These fluids exert a friction γ on each bead, absorbing energy from the beads’ motion. In the absence of coupling between the beads, the average rate at which each thermal bath injects energy exactly balances with the rate it absorbs energy due to frictional drag. By coupling the beads, however, there is a net steady-state rate of heat flow \(\dot Q_{{\mathrm{ss}}}\) from the hot reservoir into the system and out to the cold reservoir. The hot reservoir’s entropy changes with rate \(\dot S_{\mathrm{h}} = - \dot Q_{{\mathrm{ss}}}/T_{\mathrm{h}}\) while the cold reservoir’s entropy increases with rate \(\dot S_{\mathrm{c}} = \dot Q_{{\mathrm{ss}}}/T_{\mathrm{c}}\). In total, the steady-state entropy production rate can therefore be written as

\[\dot S_{{\mathrm{ss}}} = \dot Q_{{\mathrm{ss}}}\left( {\frac{1}{{T_{\mathrm{c}}}} - \frac{1}{{T_{\mathrm{h}}}}} \right). \tag{1}\]

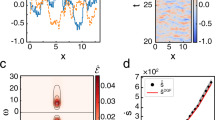

Fig. 1: Two coupled beads at different temperatures. a An illustration of the model with the red bead immersed in a hot temperature bath Th and the blue bead immersed in a cold temperature bath Tc. Three springs with equal spring constant k connect the beads and the walls. Displacements away from the equilibrium position of the hot and cold beads are denoted by x1 and x2, respectively. b The steady-state probability density and current as a function of bead displacements for spring constant k = 1, friction γ = 1, and thermal energy scales kBTc = 25 and kBTh = 250. c The local entropy production rate calculated from Eq. (7) of the system as a function of bead displacements for the same parameters

This equation expresses the entropy production rate as the product of a flux \(\dot Q_{{\mathrm{ss}}}\) and the conjugate thermodynamic driving force \((T_{\mathrm{c}}^{ - 1} - T_{\mathrm{h}}^{ - 1})\). The typical situation is that the driving force may be tuned in the lab and the flux is measured as a response.

Suppose, however, that it is not simple to measure the heat flux. Rather, we imagine directly observing the bead positions as a function of time. Those measurements are sufficient to extract the entropy production rate, but to do so we must go beyond the thermodynamics and explicitly consider the system’s dynamics, an approach known as stochastic thermodynamics1,31,32. The starting point is to mathematically describe the bead-spring dynamics with a coupled overdamped Langevin equation \(\dot{\mathbf{x}} = A{\mathbf{x}} + F{\boldsymbol{\xi}}\), where \({\mathbf{x}} = (x_1, x_2)^T\) is the vector consisting of each bead’s displacement from its equilibrium position, \({\boldsymbol{\xi}} = (\xi_1, \xi_2)^T\) is a vector of independent Gaussian white noises, and

\[A = \begin{pmatrix} { - 2k/\gamma } & {k/\gamma } \\ {k/\gamma } & { - 2k/\gamma } \end{pmatrix},\qquad F = \begin{pmatrix} {\sqrt {2k_{\mathrm{B}}T_{\mathrm{h}}/\gamma } } & 0 \\ 0 & {\sqrt {2k_{\mathrm{B}}T_{\mathrm{c}}/\gamma } } \end{pmatrix}. \tag{2}\]

The matrix A captures deterministic forces acting on the beads due to the springs, while F describes the random forces imparted by the medium. The strength of these random forces depends on the temperature and the Boltzmann constant kB, consistent with the fluctuation-dissipation theorem33.
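
As a minimal sketch of how such dynamics can be sampled numerically, the snippet below integrates the Langevin equation with an Euler-Maruyama step. The matrices are the ones implied by the model description (three identical springs, diagonal noise fixed by the bath temperatures). The function name `simulate`, the use of independent replicas, and the units with kB = 1 are assumptions made for illustration and need not match the scheme described in Methods.

```python
import numpy as np

# Parameters quoted in the Fig. 1 caption (units with k_B = 1 are an assumption).
k, gamma, T_h, T_c = 1.0, 1.0, 250.0, 25.0

# Drift matrix for two beads joined to each other and to the walls by springs k.
A = (k / gamma) * np.array([[-2.0, 1.0], [1.0, -2.0]])
# Noise amplitudes chosen so that D = F F^T / 2 = diag(T_h, T_c) / gamma.
F = np.diag(np.sqrt(2.0 * np.array([T_h, T_c]) / gamma))


def simulate(n_traj, n_steps, dt, rng):
    """Euler-Maruyama integration of dx = A x dt + F dW for n_traj replicas.

    Returns an array of shape (n_steps + 1, n_traj, 2), starting from x = 0.
    """
    x = np.zeros((n_steps + 1, n_traj, 2))
    for i in range(n_steps):
        noise = rng.standard_normal((n_traj, 2)) * np.sqrt(dt)
        x[i + 1] = x[i] + x[i] @ A.T * dt + noise @ F.T
    return x


rng = np.random.default_rng(0)
traj = simulate(n_traj=400, n_steps=5000, dt=1e-3, rng=rng)
print(np.cov(traj[-1].T))  # sample covariance of (x1, x2) after relaxation
```

After a few relaxation times the replicas have forgotten the initial condition, so the final frame can be used as a sample of the steady state.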

It is useful to cast the Langevin equation as a corresponding Fokker-Planck equation for the probability of observing the system in configuration x at time t, ρ(x, t):

\[\frac{{\partial \rho }}{{\partial t}} = - \nabla \cdot \left( {A{\mathbf{x}}\rho - D\nabla \rho } \right) \equiv - \nabla \cdot {\mathbf{j}}({\mathbf{x}},t), \tag{3}\]

with \(D = FF^{T}/2\). Though we are modeling a two-particle system, it can be helpful to think of the entire system as being a single point diffusing through x space with diffusion tensor D and with deterministic force γAx. The second equality in Eq. (3) defines the probability current j(x, t). These probability currents (and their fluctuations) will play a central role in our strategies for inferring the rate of entropy production.

Due to its analytic and experimental tractability, this bead-spring system and related variants have been extensively studied as models for nonequilibrium dynamics34,35,36,37,38,39. In particular, the steady-state properties are well-known. Correlations between the position of bead i at time 0 and that of bead j at time t are given by Cij(t) = 〈xi(0)xj(t)〉. The expectation value is taken over realizations of the Gaussian noise to give

The steady-state density and current are expressed simply as

in terms of the long-time limit of the correlation matrix

The steady-state current jss(x) is a vector field that specifies the probability current conditioned upon the system being in configuration x. Associated with this current is a local conjugate thermodynamic force \({\mathbf{F}}({\mathbf{x}}) = k_{\mathrm{B}}{\mathbf{j}}_{{\mathrm{ss}}}^T({\mathbf{x}})D^{ - 1}/\rho _{{\mathrm{ss}}}({\mathbf{x}})\)40,41. The product of the microscopic current and force is the local entropy production rate at configuration x: \(\dot \sigma _{{\mathrm{ss}}}({\mathbf{x}}) = {\mathbf{F}}({\mathbf{x}}) \cdot {\mathbf{j}}_{{\mathrm{ss}}}({\mathbf{x}})\). Upon integrating over all configurations, we obtain the total entropy production rate

\[\dot S_{{\mathrm{ss}}} = \int {\mathrm{d}}{\mathbf{x}}\,{\mathbf{F}}({\mathbf{x}}) \cdot {\mathbf{j}}_{{\mathrm{ss}}}({\mathbf{x}}) = \frac{{k_{\mathrm{B}}k(T_{\mathrm{h}} - T_{\mathrm{c}})^2}}{{4\gamma T_{\mathrm{h}}T_{\mathrm{c}}}}. \tag{7}\]

Comparing with Eq. (1), we see that the rate of net heat flow is \(\dot Q_{{\mathrm{ss}}} = k_{\mathrm{B}}k(T_{\mathrm{h}} - T_{\mathrm{c}})/4\gamma\). Our ability to analytically compute the heat flow derives from the linear coupling between beads, yet we are ultimately interested in experimental scenarios in which linear coupling could not be assumed. In those more complicated systems, there is no simple analytical expression for the local entropy production rate, but we could still estimate \(\dot \sigma _{{\mathrm{ss}}}\) by sampling trajectories from the steady-state distributions—either in a computer or in the lab. We now consider strategies for this estimation by sampling the bead-spring dynamics and comparing with the analytical expression, Eq. (7).
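
The analytical result can be cross-checked numerically. The sketch below assumes kB = 1 and the Fig. 1 parameters. It obtains the stationary covariance C from the Lyapunov equation \(AC + CA^T = -2D\), a standard property of linear Langevin dynamics that is used here as an assumption rather than quoted from the text. It then builds the steady-state current \((A + DC^{-1}){\mathbf{x}}\rho_{\mathrm{ss}}\) and integrates the local entropy production rate over the Gaussian density in closed form.

```python
import numpy as np

k, gamma, T_h, T_c = 1.0, 1.0, 250.0, 25.0   # k_B = 1 (assumed units)
A = (k / gamma) * np.array([[-2.0, 1.0], [1.0, -2.0]])
D = np.diag([T_h, T_c]) / gamma

# Solve A C + C A^T = -2 D by vectorising C in row-major order:
# (A kron I + I kron A) vec(C) = vec(-2 D).
I2 = np.eye(2)
C = np.linalg.solve(np.kron(A, I2) + np.kron(I2, A), (-2.0 * D).ravel()).reshape(2, 2)

# j_ss(x) = Omega x rho_ss(x) with Omega = A + D C^{-1},
# because grad(rho_ss) = -C^{-1} x rho_ss for a zero-mean Gaussian.
Omega = A + D @ np.linalg.inv(C)

# Integrating F . j_ss = x^T Omega^T D^{-1} Omega x rho_ss over the Gaussian gives a trace.
S_numeric = np.trace(Omega.T @ np.linalg.inv(D) @ Omega @ C)

# Closed form implied by Eq. (1) and the heat flow Q_ss = k (T_h - T_c) / (4 gamma).
S_closed = k * (T_h - T_c) ** 2 / (4.0 * gamma * T_h * T_c)

print(S_numeric, S_closed)
```

For these parameters both numbers evaluate to 2.025 in units of kB per unit time, and the trace of `Omega` vanishes, reflecting a divergence-free steady-state current.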

Estimating the steady state from sampled trajectories

We first seek estimates of jss(x) and ρss(x) from a long trajectory x(t) of bead positions over an observation time τobs. We estimate the steady-state density by the empirical density, the fraction of time the trajectory spends in state x:

\[\rho ({\mathbf{x}}) = \frac{1}{{\tau _{{\mathrm{obs}}}}}\int_0^{\tau _{{\mathrm{obs}}}} {\mathrm{d}}t\,\delta ({\mathbf{x}}(t) - {\mathbf{x}}), \tag{8}\]

where δ is a Dirac delta function. The empirical density is an unbiased estimate of the steady-state density, meaning the fluctuating density ρ(x) tends to ρss(x) in the long-time limit. Similarly, an unbiased estimate for the steady-state currents is the empirical current

\[{\mathbf{j}}({\mathbf{x}}) = \frac{1}{{\tau _{{\mathrm{obs}}}}}\int_0^{\tau _{{\mathrm{obs}}}} \delta ({\mathbf{x}}(t) - {\mathbf{x}}) \circ {\mathrm{d}}{\mathbf{x}}(t). \tag{9}\]

This Stratonovich integral can be colloquially read as the time-average of all displacement vectors that were observed when the system occupied configuration x. In practice, experiments typically record the configuration x at discrete time intervals Δt such that the trajectory is given by the timeseries {xΔt, x2Δt,...}. Consequently we work with estimates of the density and currents42:

where K and L are kernel functions43. The kernel functions make it natural to spatially coarse grain the data, a necessity because experiments have a limited resolution and because most microscopic configurations will never be sampled by a finite-length trajectory. The function K(xiΔt, x) controls how observing the ith data point at position xiΔt impacts the estimate of \(\hat \rho\) at a nearby position x. Similarly, L controls how currents are estimated in the neighborhood of the observed data points. Specific choices for K and L are discussed in the Methods section. Using \(\hat \rho\) and \(\hat {\mathbf{ \jmath}}\) we can now construct direct estimates of the entropy production rate.
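
To make the coarse-grained estimators concrete, the following sketch bins a simulated time series on a square grid. It assumes the simplest possible kernels, namely indicator functions of rectangular bins, and assigns each displacement to the bin containing the midpoint of the step. The specific kernels K and L discussed in Methods are not reproduced here. The grid range, the bin width, and the pooling of independent replicas are illustrative assumptions.

```python
import numpy as np

k, gamma, T_h, T_c = 1.0, 1.0, 250.0, 25.0   # k_B = 1 (assumed units)
A = (k / gamma) * np.array([[-2.0, 1.0], [1.0, -2.0]])
amp = np.sqrt(2.0 * np.array([T_h, T_c]) / gamma)   # noise amplitudes
rng = np.random.default_rng(1)

# Discrete time series from pooled Euler-Maruyama replicas (relaxation discarded).
dt, n_burn, n_steps, n_traj = 1e-3, 5000, 10_000, 100
x = np.zeros((n_burn + n_steps + 1, n_traj, 2))
for i in range(n_burn + n_steps):
    kick = amp * np.sqrt(dt) * rng.standard_normal((n_traj, 2))
    x[i + 1] = x[i] + x[i] @ A.T * dt + kick
x = x[n_burn:]

mid = 0.5 * (x[1:] + x[:-1]).reshape(-1, 2)   # midpoints (Stratonovich convention)
dx = (x[1:] - x[:-1]).reshape(-1, 2)          # displacement over each time step
tau_obs = len(dx) * dt                        # total observation time, all replicas

edges = np.linspace(-75.0, 75.0, 26)          # 25 x 25 square bins (hypothetical choice)
h = edges[1] - edges[0]

# Density: fraction of sampled points per unit area in each bin.
counts = np.histogram2d(mid[:, 0], mid[:, 1], bins=[edges, edges])[0]
rho_hat = counts / (len(mid) * h**2)

# Current: summed displacement per unit time and unit area in each bin.
j_hat = np.stack(
    [np.histogram2d(mid[:, 0], mid[:, 1], bins=[edges, edges], weights=dx[:, a])[0]
     for a in range(2)],
    axis=-1,
) / (tau_obs * h**2)

# Net circulation of the estimated current field about the origin.
centers = 0.5 * (edges[1:] + edges[:-1])
X1, X2 = np.meshgrid(centers, centers, indexing="ij")
circulation = np.sum(X1 * j_hat[..., 1] - X2 * j_hat[..., 0]) * h**2
print(rho_hat.sum() * h**2, circulation)
```

The first printed number checks that the estimated density is normalized. A circulation that is clearly nonzero is the binned signature of a steady-state probability current.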

Direct strategies for entropy production inference

In computing Eq. (7), we integrated the local entropy production rate F(x) ⋅ jss(x) over all configurations x. When jss(x) and F(x) are not known, it is natural to replace them by the estimators \(\hat {\mathbf{ \jmath}}({\mathbf{x}})\) and \(\hat {\mathbf{F}}({\mathbf{x}}) \equiv k_{\mathrm{B}}\hat {\mathbf{ \jmath}}^T({\mathbf{x}})D^{ - 1}/\hat \rho ({\mathbf{x}})\). Though \(\hat {\mathbf{F}}\) is constructed from the unbiased estimators \(\hat {\mathbf{ \jmath}}\) and \(\hat \rho\), \(\hat {\mathbf{F}}\) is only asymptotically unbiased, necessitating sufficiently long trajectories for the bias to become negligible. Utilizing \(\hat {\mathbf{F}}\), we approximate \(\dot S_{{\mathrm{ss}}}\) by either a spatial or a temporal average:

The performance of these estimators is assessed using data sampled from numerical simulations of the Langevin equation, described further in Methods. As illustrated in Fig. 2, the estimators are biased for any finite trajectory length, but they converge to the analytical result, Eq. (7), with sufficiently long sampling times.
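
The finite-sampling bias can be illustrated with a small numerical experiment. The sketch below is not the estimator of Methods: it assumes rectangular histogram bins as kernels, pools independent replicas into one data set, and uses kB = 1. With this particular kernel choice the spatial sum over bins and the temporal sum over sampled midpoints are algebraically the same number, so a single plug-in estimate is reported for a short and a long data set.

```python
import numpy as np

k, gamma, T_h, T_c = 1.0, 1.0, 250.0, 25.0   # k_B = 1 (assumed units)
A = (k / gamma) * np.array([[-2.0, 1.0], [1.0, -2.0]])
D_diag = np.array([T_h, T_c]) / gamma         # diagonal of the diffusion tensor D
S_exact = k * (T_h - T_c) ** 2 / (4.0 * gamma * T_h * T_c)


def sample_steps(n_traj, n_steps, dt, rng, n_burn=5000):
    """Euler-Maruyama replicas; returns midpoints and displacements, shape (N, 2)."""
    x = np.zeros((n_burn + n_steps + 1, n_traj, 2))
    amp = np.sqrt(2.0 * D_diag * dt)
    for i in range(n_burn + n_steps):
        x[i + 1] = x[i] + x[i] @ A.T * dt + amp * rng.standard_normal((n_traj, 2))
    x = x[n_burn:]                              # discard the relaxation from x = 0
    mid = 0.5 * (x[1:] + x[:-1]).reshape(-1, 2)
    dx = (x[1:] - x[:-1]).reshape(-1, 2)
    return mid, dx


def plug_in_estimate(mid, dx, dt, edges):
    """Sum of F_hat . j_hat over occupied bins, with F_hat = j_hat^T D^{-1} / rho_hat."""
    n_bins = len(edges) - 1
    h = edges[1] - edges[0]
    # Bin index of every midpoint; rare outliers are clipped into the edge bins.
    idx = np.clip(np.digitize(mid, edges) - 1, 0, n_bins - 1)
    flat = idx[:, 0] * n_bins + idx[:, 1]
    counts = np.bincount(flat, minlength=n_bins**2)
    disp = np.stack([np.bincount(flat, weights=dx[:, a], minlength=n_bins**2)
                     for a in range(2)], axis=1)
    tau = len(dx) * dt
    occ = counts > 0
    rho_hat = counts[occ] / (len(dx) * h**2)
    j_hat = disp[occ] / (tau * h**2)
    F_hat = j_hat / D_diag / rho_hat[:, None]
    return np.sum(F_hat * j_hat) * h**2


rng = np.random.default_rng(3)
dt, edges = 1e-3, np.linspace(-75.0, 75.0, 26)
mid, dx = sample_steps(n_traj=100, n_steps=10_000, dt=dt, rng=rng)
S_short = plug_in_estimate(mid[:100_000], dx[:100_000], dt, edges)   # total time 100
S_long = plug_in_estimate(mid, dx, dt, edges)                        # total time 1000
print(S_exact, S_short, S_long)
```

Because the plug-in estimate is a sum of non-negative quadratic forms, noise in the binned current inflates it. The short data set should therefore overshoot more than the long one, which is the finite-length bias described above.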

Fig. 2: Convergence of dissipation estimates. The spatial (blue solid circles) and temporal (red solid squares) dissipation rate estimates converge to the steady-state value \(\dot S_{{\mathrm{ss}}}\) (red line) of Eq. (7). Estimates of the total dissipation rate, calculated from Eqs. (12) and (13), are extracted from Langevin trajectories simulated for time τobs with timestep \(10^{-3}\) using k = γ = 1, kBTc = 25, and kBTh = 250 as in Fig. 1. Error bars are the standard error of the mean, computed from 10 independent trajectories. Estimates of the local dissipation rate from the spatial estimator with different trajectory lengths are plotted in the inset

At first glance \(\widehat {\dot S}_{{\mathrm{ss}}}^{{\mathrm{spat}}}\) and \(\widehat {\dot S}_{{\mathrm{ss}}}^{{\mathrm{temp}}}\) may appear equivalent due to ergodicity. Indeed, with an infinite amount of sampling, both schemes must yield the same result, \(\dot S_{{\mathrm{ss}}}\), but the temporal estimator converges significantly faster with finite sampling. Plots of the estimated local dissipation rate (Fig. 2 inset) hint at the reason \(\widehat {\dot S}_{{\mathrm{ss}}}^{{\mathrm{spat}}}\) converges more slowly: \(\dot \sigma _{{\mathrm{ss}}}({\mathbf{x}})\) must be accurately estimated by \(\hat {\dot \sigma }_{{\mathrm{ss}}}({\mathbf{x}}) = \hat {\mathbf{F}}({\mathbf{x}}) \cdot \hat {\mathbf{ \jmath}}({\mathbf{x}})\) throughout the entire configuration space. The integral in Eq. (12) equally weights \(\hat {\dot \sigma }_{{\mathrm{ss}}}({\mathbf{x}})\) at all x, even those points which have been infrequently (or never) visited by the stochastic trajectory. Our x has dimension two, but we will also consider higher-dimensional configuration spaces, for example by coupling more than two beads in a linear chain. If that configuration space has dimension greater than three or four, it becomes impractical to estimate \(\dot \sigma _{{\mathrm{ss}}}\) across the entire space. Furthermore, estimating Eq. (12) for high-dimensional x confronts the classic problem of performing numerical quadrature on a high-dimensional grid, where it is well-known that Monte Carlo integration becomes a superior method.

The temporal integral can be thought of as a convenient way to implement such a Monte Carlo integration, with sampled x’s coming from the configurations of the stochastic trajectory. Notably, Eq. (13) is computed from estimates of the thermodynamic force near the sampled configurations xiΔt, precisely where the finite trajectory has been most reliably sampled. In contrast, Eq. (12) requires spurious extrapolation of the kernel density estimates (\(\hat \rho\) and \(\hat{\mathbf{ \jmath}}\)) to points which are far from any sampled configuration. The advantage of the temporal estimator over the spatial one becomes even more pronounced as dimensionality increases. Nevertheless, even \(\widehat {\dot S}_{{\mathrm{ss}}}^{{\mathrm{temp}}}\) becomes harder to estimate when x grows in dimensionality. Getting accurate estimates of F around the xiΔt requires observing several trajectories which have cut through that part of configuration space while traveling in each direction. But when the dimensionality is large, recurrence to the same configuration-space neighborhood takes a long time. Consequently, we turn to a complementary method which can be informative even when x is too high-dimensional to accurately estimate F.

Indirect strategy for entropy production inference

Thus far our estimators have been based on detailed microscopic information, but as the dimensionality of x increases, estimating the microscopic steady-state properties requires exponentially more data. To combat this curse of dimensionality, it is standard to project high-dimensional dynamics onto a few preferred degrees of freedom9,44,45,46. For example, the projected coordinates could be two principal components from a principal component analysis. Such projected dynamics have been used to detect broken detailed balance9; however, these reduced dynamics overlook hidden dissipation from the discarded degrees of freedom.

An alternative strategy that retains contributions from all degrees of freedom is provided by recent theoretical results relating entropy production and current fluctuations in general nonequilibrium steady-state dynamics28,29,47,48,49,50,51,52. To this end, we introduce a single projected macroscopic current, constructed as a linear combination of the microscopic currents:

\[j_{\mathbf{d}} = \int {\mathrm{d}}{\mathbf{x}}\,{\mathbf{d}}({\mathbf{x}}) \cdot {\mathbf{j}}({\mathbf{x}}), \tag{14}\]

where d(x) is a vector field that weights how much a microscopic current at x contributes to the macroscopic current jd. Any physically measurable current—electrons flowing through a wire, heat passing from one bead to the other, or the production of a chemical species in a reaction network—can be cast as such a linear superposition of microscopic currents. Figure 3 illustrates one particular example by applying the weighting field d(x) = F(x) to project microscopic currents onto the single macroscopic current jF. Each step of the trajectory is weighted by the value of d associated with the observed transition, and this weighted average, accumulated as a function of time, is the fluctuating macroscopic current (fluctuating because it depends on the particular stochastic trajectory). Each trajectory observed for a time τobs yields a measurement jd of the fluctuating current, and many such trajectories give a distribution P(jd) characterized by mean 〈jd〉 and variance Var(jd). The thermodynamic uncertainty relation (TUR)28,29,48,49,50 then constrains the entropy production rate in terms of the dynamical fluctuations of this macroscopic current:

\[\dot S_{{\mathrm{ss}}} \ge \frac{{2k_{\mathrm{B}}\left\langle {j_{\mathbf{d}}} \right\rangle ^2}}{{\tau _{{\mathrm{obs}}}{\mathrm{Var}}(j_{\mathbf{d}})}}. \tag{15}\]

Fig. 3: Extracting current fluctuations from trajectories. a A realization of a long trajectory diffusing through configuration space. The macroscopic current is computed by choosing a vector field d(x), here chosen as the thermodynamic force field F(x). b On the microscopic scale, the trajectory may be modeled as discrete jumps between neighboring lattice sites (here labeled with symbols: lozenge, star, square, …). The continuous-space vector field is decomposed into components along the direction of possible jumps, i.e., d evaluated at the black triangle site can be expressed in terms of the weight d◊,▲ associated with a jump from the black triangle to the white diamond. c A realization of the trajectory gives a single value of the empirical current jd. By recording many realizations, the empirical current distribution P(jd) is sampled to give 〈jd〉 and Var(jd) (inset). In the case that d = F, the mean slope of this accumulated current is the average entropy production rate. d The empirical current for a single realization is constructed as the sum of the d weights for each microscopic transition of the jump process

Note that we have used Var(jd) to denote the variance of the macroscopic empirical current distribution, but some prior work29,48 used this notation to denote the way the variance scaled with observation time. The difference between these notations is the factor of τobs in the denominator of the right hand side of Eq. (15).

Unlike the field of microscopic currents, j(x), the macroscopic current jd is a single scalar quantity, allowing estimates of its cumulants—particularly the mean \(\widehat {\left\langle {j_{\mathbf{d}}} \right\rangle }\) and variance \(\widehat {{\mathrm{Var}}(j_{\mathbf{d}})}\)—to be extracted from a modest amount of experimental data. Indeed, measurements of kinesin fluctuations have recently been used to infer constraints on the efficiency of these molecular motors18,53. Importantly, the TUR is valid for any choice of d, granting freedom to consider fluctuations of arbitrary macroscopic currents, some of which will yield tighter bounds than others. In a later section, we use Monte Carlo sampling to seek a choice for d which yields the tightest possible bound, but first we consider an important physically motivated choice, d = F. In this case, the macroscopic current jF is the fluctuating entropy production rate (cf. Eqs. (7) and (14)), so \(\left\langle {j_{\mathbf{F}}} \right\rangle = \dot S_{{\mathrm{ss}}}\). With access to F, we can thus compute the entropy production rate by simply taking the mean of the generalized current (the average slope in Fig. 3), or we could use the fluctuations from repeated realizations of jF to get a bound on \(\dot S_{{\mathrm{ss}}}\) via Eq. (15).

It perhaps seems foolish to settle for a bound if one could compute the actual entropy production rate, but in practice one would not typically have access to F. More likely, it would only be possible to estimate F from data as \(\hat {\mathbf{F}}\). With sufficient data, \(\hat {\mathbf{F}}\) converges to F such that a temporal estimate of the entropy production rate would eventually become accurate, but this convergence is slow in high dimensions. Alternatively, by choosing \({\mathbf{d}} = \hat {\mathbf{F}}\), we obtain a TUR lower bound estimate

A key advantage of this estimate is that it is less sensitive to whether \(\hat {\mathbf{F}}\) has converged than either \(\widehat {\dot S}_{{\mathrm{ss}}}^{{\mathrm{spat}}}\) or \(\widehat {\dot S}_{{\mathrm{ss}}}^{{\mathrm{temp}}}\). When \(\hat {\mathbf{F}}\) is noisily estimated due to little data or high dimensionality, the TUR estimate can nevertheless provide an accessible route to constraining the entropy production rate from experimental data.
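
The mean-and-variance recipe can be sketched in a few lines. The example assumes kB = 1, pools many short steady-state replicas, and uses two linear weighting fields d(x) = Gx. One is the exact force field of the two-bead model, which would not be available in an experiment. The other is a deliberately crude, hypothetical rotation field standing in for a poorly converged estimate. The helper names `sample_currents` and `tur_bound` are illustrative.

```python
import numpy as np

k, gamma, T_h, T_c = 1.0, 1.0, 250.0, 25.0   # k_B = 1 (assumed units)
A = (k / gamma) * np.array([[-2.0, 1.0], [1.0, -2.0]])
D = np.diag([T_h, T_c]) / gamma
amp = np.sqrt(2.0 * np.diag(D))               # noise amplitudes, D = F F^T / 2

# Exact force field for this linear model, F(x) = G_force x (assumes F is known).
I2 = np.eye(2)
C = np.linalg.solve(np.kron(A, I2) + np.kron(I2, A), (-2.0 * D).ravel()).reshape(2, 2)
G_force = np.linalg.inv(D) @ (A + D @ np.linalg.inv(C))
# A cruder, hypothetical weighting field: rigid rotation d(x) = (-x2, x1).
G_rot = np.array([[0.0, -1.0], [1.0, 0.0]])


def sample_currents(G, n_traj, tau_obs, dt, rng, t_burn=5.0):
    """Return one value of j_d per trajectory for the linear field d(x) = G x."""
    x = np.zeros((n_traj, 2))
    acc = np.zeros(n_traj)
    n_burn, n_obs = int(round(t_burn / dt)), int(round(tau_obs / dt))
    for i in range(n_burn + n_obs):
        dx = x @ A.T * dt + amp * np.sqrt(dt) * rng.standard_normal((n_traj, 2))
        if i >= n_burn:
            mid = x + 0.5 * dx                            # Stratonovich midpoint
            acc += np.einsum("ni,ni->n", mid @ G.T, dx)   # d(mid) . dx
        x = x + dx
    return acc / tau_obs


def tur_bound(j, tau_obs):
    """Lower bound 2 <j>^2 / (tau_obs Var(j)) of Eq. (15), with k_B = 1."""
    return 2.0 * j.mean() ** 2 / (tau_obs * j.var(ddof=1))


rng = np.random.default_rng(2)
tau_obs = 10.0
j_F = sample_currents(G_force, 500, tau_obs, 1e-3, rng)
j_rot = sample_currents(G_rot, 500, tau_obs, 1e-3, rng)
S_exact = k * (T_h - T_c) ** 2 / (4.0 * gamma * T_h * T_c)
print(S_exact, j_F.mean(), tur_bound(j_F, tau_obs), tur_bound(j_rot, tau_obs))
```

With d = F the sample mean of the current should approach the exact entropy production rate. Both weighting fields give bounds that should sit below the exact rate up to sampling noise, and the cruder field is expected to give the looser one.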

Convergence of the entropy production rate estimates

To assess the costs and benefits of the various estimation schemes, we numerically sampled trajectories for the two-bead model of Fig. 1 and for a variant with five beads coupled along a one-dimensional chain with spring constant k, the five beads being embedded in thermal baths whose temperatures linearly ramp from Tc to Th. Equation (7) gives the entropy production rate for the two-bead model as a function of the bath temperatures. An analogous expression is derived in Supplementary Note 1 for the model with five beads, and both expressions are plotted with a solid red line in Fig. 4. The temporal and spatial estimators both converge to these analytical expressions in the long trajectory limit, while the TUR estimate tends to the lower bound \(\dot S_{{\mathrm{TUR}}}^{{\mathbf{(d)}}}\). We performed a series of calculations to assess: (1) how close this lower bound is to the true dissipation rate and (2) how long a trajectory is needed to converge all three estimates.

Fig. 4: Performance of dissipation rate estimators. Data are shown both for the model with two beads (a) and the higher-dimensional model with five beads (b). The TUR bound with d = F (dashed black line) becomes tighter to the actual dissipation rate (solid red line) when the dynamics is closer to equilibrium (Tc/Th → 1) and in the limit of many beads. Inset plots show the estimator convergence rates for temperature ratios of 0.1 and 0.5, with error bars reporting standard error, computed from 10 independent samples. The blue dashed line in a is the TUR bound resulting from a Monte Carlo optimization scheme, as described further in Fig. 5. The bottom right inset of b reflects that the TUR estimator may be useful as a practical proxy for the entropy production rate for high-dimensional systems when the dynamics is weakly driven

We discuss the convergence results first, plotted as insets in Fig. 4. Using a trajectory of length τobs, \(\hat {\mathbf{F}}\) was estimated, and this estimated thermodynamic force field was used to plot how quickly \(\widehat {\dot S}_{{\mathrm{ss}}}^{{\mathrm{spat}}}\) and \(\widehat {\dot S}_{{\mathrm{ss}}}^{{\mathrm{temp}}}\) converged to their expected value of \(\dot S_{{\mathrm{ss}}}\). To compare convergence of the TUR bound on an equal footing, we recognize that the τobs → ∞ limit of a long trajectory with perfect sampling will not yield \(\dot S_{{\mathrm{ss}}}\) but rather the bound \(\dot S_{{\mathrm{TUR}}}^{({\mathbf{F}})}\). In all three cases we scale the estimate by its appropriate infinite-sampling limit and observe how quickly this ratio decays to one. The superiority of the temporal estimator over the spatial one is clear in the two-bead model, and the inadequacy of the spatial estimator is so stark in the higher-dimensional five-bead model that it was prohibitive to compute. The TUR estimator performance is comparable to the temporal average estimator when F can be estimated well (low dimensionality and large thermodynamic driving). In the more challenging situation that the phase space is high dimensional and the statistical irreversibility is more subtle, the TUR estimator appears to offer some advantage. It converges with roughly an order of magnitude fewer samples than are required for \(\widehat {\dot S}_{{\mathrm{ss}}}^{{\mathrm{temp}}}\) (see bottom right inset of Fig. 4b).

To understand how well one can estimate the entropy production rate from current fluctuations, we must also address how close the TUR lower bound is to \(\dot S_{{\mathrm{ss}}}\). The dashed black line of Fig. 4 shows that the TUR lower bound equals the actual entropy production rate in the near-equilibrium limit Tc → Th. Far from equilibrium, the TUR lower bound remains the same order of magnitude as the entropy production rate, with the deviation increasing with the size of the temperature difference. Comparing the dashed black lines in two different dimensions, we can see that as more beads are added to the model, this deviation between \(\dot S_{{\mathrm{TUR}}}^{({\mathbf{F}})}\) and \(\dot S_{{\mathrm{ss}}}\) decreases. Hence the TUR bound more closely approximates the actual entropy production rate with increasing dimensionality and decreasing thermodynamic force, precisely the conditions when the TUR estimate converges more rapidly.

Optimizing the macroscopic current

Thus far we have focused on measuring the statistics of a particular macroscopic empirical current, the fluctuating entropy production, constructed by choosing d = F. This choice was a natural starting point since the fluctuations are known to saturate Eq. (15) in the equilibrium limit Tc → Th29. However, when working with timeseries data we had to replace F by the estimate \(\hat {\mathbf{F}}\), and this estimated thermodynamic force is error-prone in high dimensions. In the previous section we saw that the TUR estimator is sufficiently robust that a tight bound for \(\dot S_{{\mathrm{ss}}}\) may be inferred even when \(\hat {\mathbf{F}}\) has not fully converged to F. This robustness derives from the validity of Eq. (15) for all possible choices of d. The generality of the TUR can be further leveraged by optimizing over d:

We are not aware of methods to explicitly compute the optimal choice of d, but a vector field d*(x) which outperforms F(x) can be found readily by Monte Carlo (MC) sampling with a preference for macroscopic currents with a large TUR ratio 〈jd〉2/Var(jd).

Each step of the MC algorithm requires 〈jd〉 and Var(jd), which could be estimated with trajectory sampling, as illustrated in Fig. 3a, c. In fact, one could collect a single long trajectory (from an experiment or from simulation), then sample d* based on mean and variance estimates \(\widehat {\left\langle {j_{{\mathbf{d}}^ \ast }} \right\rangle }\) and \(\widehat {{\mathrm{Var}}(j_{{\mathbf{d}}^ \ast })}\) for that fixed trajectory. Such a scheme is enticing, but we warn that the procedure is susceptible to over-optimization of the TUR ratio, since maximizing the estimated ratio \(\widehat {\left\langle {j_{{\mathbf{d}}^ \ast }} \right\rangle }^2/\widehat {{\mathrm{Var}}(j_{{\mathbf{d}}^ \ast })}\) is not the same as maximizing the true ratio \(\left\langle {j_{{\mathbf{d}}^ \ast }} \right\rangle ^2/{\mathrm{Var}}(j_{{\mathbf{d}}^ \ast })\). The former can yield a large value just because the trajectory happens to return anomalous estimates for the mean and variance of the generalized current. The latter ratio does not depend on any one trajectory but is instead averaged over all trajectories. Avoiding over-optimization requires appropriate cross-validation. For example, d* could be selected based on one sampled trajectory and the dissipation bound then inferred from an independently sampled trajectory.

Rather than implementing such a cross-validation scheme, we avoided the over-optimization problem for this model system by putting the dynamics on a grid to compute the means and variances exactly. As described in Methods, we construct a continuous-time Markov jump process on a square lattice with grid spacing h = {h1, h2} such that, in the limit h → 0, the jump process converges to the same Fokker-Planck description, Eq. (3), as the continuous-space Langevin dynamics48. The vector field d(x) is also discretized as a set of weights dx+h,x associated with the transition from x to the neighboring microstate at x + h (see Fig. 3b, d). In place of trajectory sampling, the mean and variance can be extracted from a standard computation of the current’s scaled cumulant generating function as a maximum eigenvalue of a tilted rate matrix54,55,56.
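
The tilted-matrix computation can be sketched on a much smaller system than the square lattice used here. The example below is a hypothetical toy, a three-state ring with uniform clockwise rate `p` and counter-clockwise rate `q`, chosen only because its cumulants are known in closed form. The derivatives of the largest eigenvalue are taken by finite differences, which is an illustrative shortcut and an assumption about how one might implement the step.

```python
import numpy as np

# Hypothetical toy: a 3-state ring with clockwise rate p and counter-clockwise rate q.
p, q, n = 3.0, 1.0, 3
W = np.zeros((n, n))                 # W[j, i] = rate of the jump i -> j
d = np.zeros((n, n))                 # d[j, i] = weight of the jump i -> j
for i in range(n):
    W[(i + 1) % n, i] = p
    W[(i - 1) % n, i] = q
    d[(i + 1) % n, i] = 1.0          # count clockwise jumps as +1
    d[(i - 1) % n, i] = -1.0         # and counter-clockwise jumps as -1


def scgf(s):
    """Largest eigenvalue of the tilted rate matrix (scaled cumulant generating function)."""
    tilted = W * np.exp(s * d)                 # off-diagonal rates reweighted by exp(s d)
    tilted -= np.diag(W.sum(axis=0))           # diagonal: minus the total escape rate
    return np.max(np.linalg.eigvals(tilted).real)


h = 1e-3
mean_rate = (scgf(h) - scgf(-h)) / (2 * h)               # first derivative at s = 0
var_rate = (scgf(h) - 2 * scgf(0.0) + scgf(-h)) / h**2   # second derivative at s = 0

sigma = (p - q) * np.log(p / q)      # entropy production rate of the ring (k_B = 1)
tur = 2 * mean_rate**2 / var_rate    # long-time TUR lower bound on sigma
print(mean_rate, var_rate, tur, sigma)
```

For this ring the net jump count is a difference of two Poisson processes, so the mean rate is p − q and the variance rate is p + q. Here `var_rate` is the variance of the time-integrated current per unit time, which corresponds to τobs Var(jd) in the notation of Eq. (15).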

The MC sampling returns an ensemble of nearly-optimal choices for d* such that \(\dot S_{{\mathrm{ss}}} \ge \dot S_{{\mathrm{TUR}}}^{({\mathbf{d}}^ \ast )} \ge \dot S_{{\mathrm{TUR}}}^{({\mathbf{F}})}\). Each d* from the ensemble yields a similar TUR ratio, but the near-optimal vector fields are qualitatively distinct (see Fig. 5). We lack a physical understanding of the differences between the various near-optimal choices d* and the thermodynamic force field F. Even without a clear physical interpretation, we have a straightforward numerical procedure for extracting as tight an entropy production bound as can be obtained from macroscopic current fluctuations.

Fig. 5: Monte Carlo sampling for maximally informative currents. We seek a weighting vector field d such that the TUR bound is as close to the true entropy production rate as possible. Starting either with d = F (blue curve) or with a random vector field (red curve), a Markov chain Monte Carlo procedure was used to change d in search of a higher \(\dot S_{{\mathrm{TUR}}}^{({\mathbf{d}})}/\dot S_{{\mathrm{ss}}}\) ratio. The Monte Carlo sampling discovers diverse ways to yield a similar maximal value of the TUR ratio, suggesting that while the optimization problem is not dominated by a single basin, competitive near-optimal solutions can be discovered from a variety of starting points

Discussion

Physical systems in contact with multiple thermodynamic reservoirs support nonequilibrium dynamics that manifest as probability currents in phase space. Detection of these currents has been used in a biophysical context to differentiate between dissipative and equilibrium processes. In this paper, we have explored how the currents can be further utilized to infer the rate of entropy production. Using a solvable toy model, we demonstrated three inference strategies: one based on a spatial average, one based on a temporal average, and one based on fluctuations in the currents.

Regardless of strategy, the entropy production inference becomes more challenging and requires more data as the thermodynamic drive decreases. This challenge results from the fact that weakly driven systems produce trajectories which look very similar when played forward or backward in time. The weaker the drive, the more data it requires to confidently detect the statistical irreversibility.

It is in this weak driving limit that we see the starkest difference between the performance of the three studied estimators. As we move to higher-dimensional but weakly driven systems, detecting the statistical irreversibility at every point in phase space requires too much data, so performing spatial averages is out of the question. The temporal average can still be taken, but for a fixed amount of data, estimates of F become systematically more error-prone with increased dimensionality. In that limit we find it useful to measure not just the average current, but also the variance. By leveraging the TUR we circumvent the need to accurately estimate F and achieve more rapid convergence.

The TUR-inspired estimator is not without pitfalls. Most prominently, it only returns a bound on the entropy production rate, and it is not simple to understand how tight this bound will be. That tightness, characterized by \(\eta \equiv \dot S_{{\mathrm{TUR}}}^{({\mathbf{F}})}/\dot S_{{\mathrm{ss}}}\), does not, for example, depend solely on the strength of the thermodynamic drive. In Supplementary Note 2 and Supplementary Figure 2, we make this point by separately tuning the various spring constants to show how η depends on properties of the system in addition to the ratio of reservoir temperatures. Though our modestly sized toy systems (no more than five coupled beads) always produce η of order unity, there is little reason to believe that the TUR bound will remain a good proxy for the entropy production rate in the limit of a high-dimensional system in which only a few degrees of freedom are visible. Future experiments are needed to elucidate whether these inference strategies can be usefully applied to the complex biophysical dynamics that has motivated our study.

Methods

Numerically generating the bead-spring dynamics

We simulate the bead-spring dynamics in two complementary ways: as discrete-time trajectories in continuous space and as continuous-time trajectories in discrete space. The results presented in Figs. 2 and 4 stem from continuous-space calculations. Trajectories are generated by numerically integrating the overdamped Langevin equation using the stochastic Euler integrator with timestep Δt according to \({\mathbf{x}}_{(i + 1){\mathrm{\Delta }}t} = {\mathbf{x}}_{i{\mathrm{\Delta }}t} + A{\mathbf{x}}_{i{\mathrm{\Delta }}t}{\mathrm{\Delta }}t + F{\boldsymbol{\eta }}\), where η is a vector of random numbers drawn independently at each timestep from the zero-mean normal distribution with variance Δt. Setting k = γ = 1, we numerically integrate the equation of motion with timestep Δt = 0.001. The initial condition x0 is effectively drawn from the steady state by starting the clock after integrating the dynamics for a long time from a random initial configuration. In addition to the discrete-time simulations, continuous-time jump trajectories were simulated in discrete space with a rate

for transitioning from a lattice site at position x to a neighboring site at position \({\mathbf{x}} + {\mathbf{h}}\)48. This discrete-space trajectory was generated by first discretizing the phase space on a 200 by 200 grid with x1 ranging from −50 to 50 and x2 ranging from −20 to 20 as shown in Fig. 3a. The Markov jump process is simulated using the Gillespie algorithm57.
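The stochastic Euler update described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' released code: the function name `simulate_langevin`, the two-bead forms chosen for A and F, the temperature values, and the burn-in length are all assumptions made for the example; only k = γ = 1 and Δt = 0.001 are taken from the text.

```python
import numpy as np

def simulate_langevin(A, F, dt, n_steps, n_burn=0, seed=0):
    """Stochastic Euler integration of dx = A x dt + F dW (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    dim = A.shape[0]
    x = rng.standard_normal(dim)              # arbitrary random initial configuration
    traj = np.empty((n_steps, dim))
    for i in range(n_burn + n_steps):
        # Gaussian increments with zero mean and variance dt
        eta = rng.normal(0.0, np.sqrt(dt), size=dim)
        x = x + A @ x * dt + F @ eta
        if i >= n_burn:                       # record only after the burn-in period
            traj[i - n_burn] = x
    return traj

# Assumed two-bead parameters (k = gamma = k_B = 1); temperatures are illustrative.
k, gamma, T_hot, T_cold = 1.0, 1.0, 1.0, 0.1
A = np.array([[-2 * k, k], [k, -2 * k]]) / gamma
F = np.diag([np.sqrt(2 * T_hot / gamma), np.sqrt(2 * T_cold / gamma)])

traj = simulate_langevin(A, F, dt=0.001, n_steps=20_000, n_burn=5_000)
```

Discarding the first `n_burn` steps mimics starting the clock only after the dynamics have relaxed from the random initial configuration.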

Estimating density and current

To form histogrammed estimates, we bin the data into a 100 by 100 grid with x1 ranging from −50 to 50 and x2 ranging from −20 to 20. We can write the kernel functions as \(K({\mathbf{x}}_{i{\mathrm{\Delta }}t},{\mathbf{x}}) = L({\mathbf{x}}_{i{\mathrm{\Delta }}t},{\mathbf{x}}) = \mathop {\sum}\nolimits_{m,n} \chi _{mn}({\mathbf{x}})\chi _{mn}({\mathbf{x}}_{i{\mathrm{\Delta }}t})\), where χmn is the indicator function taking the value 1 only if the argument lies in the bin with row and column indices m and n. Alternatively, a continuous estimate of the density and current can be constructed using smooth non-negative functions for K and L, each of which integrates to one. For our kernel density estimates, we place a Gaussian at each data point by choosing \(K({\mathbf{x}}_{i{\mathrm{\Delta }}t},{\mathbf{x}}) \propto {\mathrm{exp}}[ - ({\mathbf{x}} - {\mathbf{x}}_{i{\mathrm{\Delta }}t})^{\mathrm{T}}\Sigma ^{ - 1}({\mathbf{x}} - {\mathbf{x}}_{i{\mathrm{\Delta }}t})/2]\). The breadth \(b_i\) of the Gaussian along the ith coordinate, known as the bandwidth, sets the diagonal matrix Σ via \(\Sigma _{ii} = b_i^2\). The estimation of currents proceeds similarly using kernel regression with the Epanechnikov kernel58

where d is the spatial dimension and \(x_{i{\mathrm{\Delta }}t;j}\) is the jth component of the configuration \({\mathbf{x}}_{i{\mathrm{\Delta }}t}\) at discrete time i. The bandwidths for both the Gaussian and Epanechnikov kernels are chosen using the rule of thumb suggested by Bowman and Azzalini58, specifically

Here N denotes the length of the data, and \(\tilde {\boldsymbol{\sigma }}\) is the median absolute deviation estimator computed by \(\tilde {\boldsymbol{\sigma }} = \sqrt {{\mathrm{median}}\{ |v - {\mathrm{median}}(v)|\} {\mathrm{median}}\{ |{\mathbf{x}} - {\mathrm{median}}({\mathbf{x}})|\} }\), where v is the magnitude of the velocities. In general the bandwidth will go to zero with increasing data length, so the kernel estimator should be asymptotically unbiased. In that limit of infinite data, the differences between histogram and kernel density estimates are insignificant. When data is limited, we find the fastest convergence by using kernel density estimates with a multivariate Gaussian for K and the Epanechnikov kernel for L.
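The two density estimators discussed above, the histogram and the Gaussian kernel estimate, can be compared with a short sketch. The helper names `gaussian_kde_density` and `histogram_density` are hypothetical, the bandwidth is passed in by hand rather than computed from the rule of thumb, and the synthetic Gaussian samples merely stand in for trajectory data.

```python
import numpy as np

def gaussian_kde_density(data, query, bandwidth):
    """Gaussian kernel density estimate at `query` points.

    data: (N, d) samples, query: (Q, d) points, bandwidth: (d,) widths b_i.
    """
    data, query = np.atleast_2d(data), np.atleast_2d(query)
    b = np.asarray(bandwidth, dtype=float)
    # Scaled displacements (x - x_i) / b for every query/sample pair
    z = (query[:, None, :] - data[None, :, :]) / b
    norm = np.prod(b) * (2 * np.pi) ** (data.shape[1] / 2)
    kernels = np.exp(-0.5 * np.sum(z ** 2, axis=-1)) / norm
    return kernels.mean(axis=1)               # average kernel over all samples

def histogram_density(data, bins, ranges):
    """Histogram counterpart: indicator-function kernel on a regular grid."""
    counts, edges = np.histogramdd(data, bins=bins, range=ranges, density=True)
    return counts, edges

# Synthetic stand-in data: 5000 samples from a 2D standard normal.
rng = np.random.default_rng(0)
samples = rng.standard_normal((5000, 2))
rho_origin = gaussian_kde_density(samples, [[0.0, 0.0]], bandwidth=[0.3, 0.3])[0]
counts, edges = histogram_density(samples, bins=(20, 20), ranges=[(-5, 5), (-5, 5)])
bin_area = (edges[0][1] - edges[0][0]) * (edges[1][1] - edges[1][0])
```

For this test distribution, the kernel estimate at the origin should sit near 1/[2π(1 + b²)], the true density smoothed by the kernel. The offset from 1/(2π) is the smoothing bias, which shrinks as the bandwidth goes to zero.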

To optimally handle limited data, the bandwidth is typically chosen to minimize the mean squared error (MSE) of the estimated function59,60,61:

where the expectation value is taken over realizations of trajectories. The MSE is naturally a function of the bandwidth since the value of the estimator depends on b. Supplementary Figure 1 shows this bandwidth-dependence of the MSE estimated from the five-bead model temporal estimator and TUR lower bound with τobs = 1200 and Tc/Th = 0.1. Notice that the TUR lower bound tends to be less sensitive to the choice of bandwidth.

Estimation of the TUR lower bound

To get estimates for the current’s mean and variance, \(\widehat {\left\langle {j_{\mathbf{d}}} \right\rangle }\) and \(\widehat {{\mathrm{Var}}(j_{\mathbf{d}})}\), from a single realization of length τobs, we first divide the trajectory into τobs/Δτ subtrajectories of length Δτ. For the continuous-time Markov jump process shown in Fig. 3b, the vector field d(x) is discretized as a set of weights \(d_{{\mathbf{x}} + {\mathbf{h}},{\mathbf{x}}}\) associated with the edges of the lattice, and the trajectory is a series of lattice sites occupied over time. The accumulated current, as illustrated in Fig. 3d, is computed as the sum of weights along subtrajectory k: \(J_{\mathbf{d}}^{(k)} = \mathop {\sum}\nolimits_i d_{{\mathbf{x}}_{i + 1},{\mathbf{x}}_i}\), where the ith jump takes the system from \({\mathbf{x}}_i\) to \({\mathbf{x}}_{i + 1}\). For the continuous-space Langevin dynamics, the accumulated current for subtrajectory k is given by \(J_{\mathbf{d}}^{(k)} = \mathop {\sum}\nolimits_i {\mathbf{d}} \left( {\frac{{{\mathbf{x}}_{i{\mathrm{\Delta }}t} + {\mathbf{x}}_{(i - 1){\mathrm{\Delta }}t}}}{2}} \right) \cdot \left( {{\mathbf{x}}_{i{\mathrm{\Delta }}t} - {\mathbf{x}}_{(i - 1){\mathrm{\Delta }}t}} \right)\). This accumulated current is scaled by the subtrajectory length to get the fluctuating macroscopic current for subtrajectory k: \(j_{\mathbf{d}}^{(k)} = J_{\mathbf{d}}^{(k)}/\Delta \tau\). The sample mean and variance of \(\left\{ {j_{\mathbf{d}}^{(1)},j_{\mathbf{d}}^{(2)}},... \right\}\) give \(\widehat {\left\langle {j_{\mathbf{d}}} \right\rangle }\) and \(\widehat {{\mathrm{Var}}(j_{\mathbf{d}})}\), respectively.
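The subtrajectory procedure for continuous-space data can be sketched as follows. The function name `tur_lower_bound` is hypothetical, and the bound is written in the standard form 2〈jd〉²/[Δτ Var(jd)] with kB = 1, which is assumed here because the formula is not restated in this section. The test trajectory is a drifted Brownian motion with an assumed drift v and diffusion constant D, chosen because its entropy production rate v²/D is known and the TUR is saturated when d points along the drift.

```python
import numpy as np

def tur_lower_bound(traj, d_field, dt, n_sub):
    """TUR bound 2 <j>^2 / (dtau Var(j)) from one trajectory (k_B = 1)."""
    steps = np.diff(traj, axis=0)                    # x_i - x_{i-1}
    mid = 0.5 * (traj[1:] + traj[:-1])               # midpoint evaluation of d
    increments = np.einsum("ij,ij->i", d_field(mid), steps)
    per_sub = len(increments) // n_sub               # steps per subtrajectory
    J = increments[: per_sub * n_sub].reshape(n_sub, per_sub).sum(axis=1)
    dtau = per_sub * dt                              # subtrajectory duration
    j = J / dtau                                     # fluctuating macroscopic current
    mean, var = j.mean(), j.var(ddof=1)
    return 2.0 * mean ** 2 / (dtau * var), mean, var

# Illustrative data: 2D Brownian motion drifting along x1 (assumed v and D).
rng = np.random.default_rng(0)
v, D, dt, n = 1.0, 0.5, 0.01, 200_000
steps = np.sqrt(2 * D * dt) * rng.standard_normal((n, 2))
steps[:, 0] += v * dt
traj = np.vstack([np.zeros(2), np.cumsum(steps, axis=0)])

unit_x1 = lambda x: np.tile([1.0, 0.0], (len(x), 1))  # constant weighting field
bound, mean_j, var_j = tur_lower_bound(traj, unit_x1, dt, n_sub=1000)
exact_rate = v ** 2 / D
```

With many subtrajectories, `bound` should land close to `exact_rate`, up to the sampling error of the variance estimate.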

Computing the mean and variance by tilting

It is useful to conceptualize 〈jd〉 and Var(jd) in terms of sampled trajectories, but finite trajectory sampling will result in statistical errors. We may alternatively compute the mean and variance as the first two derivatives of the scaled cumulant generating function \(\phi (\lambda ) = {\mathrm{lim}}_{\tau _{{\mathrm{obs}}} \to \infty }\frac{1}{{\tau _{{\mathrm{obs}}}}}{\mathrm{ln}}\left\langle {e^{\lambda j_{\mathbf{d}}\tau _{{\mathrm{obs}}}}} \right\rangle\), evaluated at λ = 0. The expectation value averages over all trajectories of length τobs, and in the long-time limit, ϕ(λ) coincides with the maximum eigenvalue of the tilted operator with matrix elements \({\Bbb W}(\lambda )_{{\mathbf{x}} + {\mathbf{h}},{\mathbf{x}}} = {\Bbb W}_{{\mathbf{x}} + {\mathbf{h}},{\mathbf{x}}}e^{\lambda d_{{\mathbf{x}} + {\mathbf{h}},{\mathbf{x}}}}\)54,55,56. By discretizing space, we computed ϕ(λ) around λ = 0 as the maximal eigenvalue of the tilted operator. Using numerical derivatives, we estimate

with δλ = 0.00001.
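A minimal sketch of the tilting calculation on a toy model may help make the procedure concrete. It assumes central finite differences for the two derivatives and uses a three-state ring with uniform rates, for which ϕ(λ) is known in closed form; neither the ring nor the helper names `scgf` and `current_mean_and_variance` come from the paper, and dense eigenvalue routines replace the sparse Arnoldi solver that a large lattice would need.

```python
import numpy as np

def scgf(W, d, lam):
    """Largest real eigenvalue of the tilted rate matrix W(lam).

    W[y, x] is the jump rate from x to y (columns sum to zero) and
    d[y, x] is the weight accumulated on that jump.
    """
    tilted = W * np.exp(lam * d)
    np.fill_diagonal(tilted, np.diag(W))      # escape rates are left untilted
    return np.max(np.linalg.eigvals(tilted).real)

def current_mean_and_variance(W, d, dlam=1e-5):
    """Central finite differences of the SCGF at lam = 0."""
    phi_p, phi_0, phi_m = scgf(W, d, dlam), scgf(W, d, 0.0), scgf(W, d, -dlam)
    mean = (phi_p - phi_m) / (2 * dlam)
    var_rate = (phi_p - 2 * phi_0 + phi_m) / dlam ** 2  # tau_obs * Var(j_d) at long times
    return mean, var_rate

# Toy example: three-state ring, clockwise rate 2, counter-clockwise rate 1.
k_plus, k_minus, n = 2.0, 1.0, 3
W = np.zeros((n, n))
d = np.zeros((n, n))
for x in range(n):
    W[(x + 1) % n, x], d[(x + 1) % n, x] = k_plus, 1.0
    W[(x - 1) % n, x], d[(x - 1) % n, x] = k_minus, -1.0
np.fill_diagonal(W, -W.sum(axis=0))           # make each column sum to zero

mean, var_rate = current_mean_and_variance(W, d)
tur_bound = 2 * mean ** 2 / var_rate
entropy_rate = (k_plus - k_minus) * np.log(k_plus / k_minus)
```

For this ring, ϕ(λ) = k₊(e^λ − 1) + k₋(e^−λ − 1), so the mean current is k₊ − k₋ = 1 and the scaled variance is k₊ + k₋ = 3. The resulting bound of 2/3 lies just below the exact entropy production rate ln 2.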

MC optimization

We seek a vector field d(x) such that the TUR bound is as large as possible. To identify such a choice of d, we first decompose it into a basis of M = 100 Gaussians:

The ith Gaussian, centered at position x(i), carries a weight w(i). The centers for the first 50 Gaussians are uniformly sampled with x1 ranging from −50 to 50 and x2 from −20 to 20. The breadth of the Gaussians along the i direction, Bii, is set to 10% of the length of the interval from which uniform samples are drawn. Only the weights for these 50 Gaussians will be allowed to freely vary. The remaining 50 Gaussians are paired with the first 50 to impose the antisymmetry d(x) = −d(−x). Practically, this antisymmetry constraint is achieved by placing a second Gaussian at −x with the opposite weight to that of the Gaussian positioned at x.
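The paired-Gaussian construction can be sketched as below. Several details are assumptions made for illustration: each weight is taken to be a two-component vector (since d is a vector field), the breadths are interpreted as standard deviations, and the Gaussians are left unnormalized. The helper name `make_antisymmetric_field` is hypothetical.

```python
import numpy as np

def make_antisymmetric_field(centers, weights, widths):
    """Return d(x) built from Gaussians at +centers and mirrored ones at -centers.

    centers: (M/2, dim), weights: (M/2, dim) vector weights, widths: (dim,).
    """
    all_centers = np.vstack([centers, -centers])
    all_weights = np.vstack([weights, -weights])   # opposite weight on the mirror image

    def d_field(x):
        x = np.atleast_2d(x)
        z = (x[:, None, :] - all_centers[None, :, :]) / widths
        basis = np.exp(-0.5 * np.sum(z ** 2, axis=-1))   # (n_points, M)
        return basis @ all_weights                        # (n_points, dim)

    return d_field

rng = np.random.default_rng(1)
low, high = np.array([-50.0, -20.0]), np.array([50.0, 20.0])
centers = rng.uniform(low, high, size=(50, 2))     # 50 free Gaussians
weights = rng.uniform(-1.0, 1.0, size=(50, 2))
widths = 0.1 * (high - low)                        # 10% of each sampling interval
d_field = make_antisymmetric_field(centers, weights, widths)

points = rng.uniform(low, high, size=(10, 2))
antisymmetry_error = np.max(np.abs(d_field(points) + d_field(-points)))
```

Because every Gaussian is even about its center, mirroring the centers and flipping the weights gives d(x) = −d(−x) by construction, so `antisymmetry_error` vanishes up to rounding.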

With this regularization, we replace the optimization of d with a sampling problem. We sample the first 50 weights w in proportion to \({\mathrm{exp}}(\beta \dot S_{{\mathrm{TUR}}}^{({\mathbf{d}})})\), where β is an effective inverse temperature and \(\dot S_{{\mathrm{TUR}}}^{({\mathbf{d}})}\) depends on the weights since d depends on w. By choosing β = 5000, the sampling is strongly biased toward weights that give a near-optimal value of the TUR bound. After initializing the weights with uniform random numbers from [−1, 1], Monte Carlo moves w → w′ were proposed by perturbing the wi's by random uniform numbers drawn from [−0.5, 0.5]. The d′ corresponding to these new weights was computed according to Eq. (24), and the TUR bound for that proposed macroscopic current was computed using numerical derivatives of the tilted operator \({\Bbb W}(\lambda )\) around λ = 0 as described above. The maximum eigenvalue calculations made use of Mathematica’s implementation of the Arnoldi method, performed using sparse matrices. Each proposed move to w′ was accepted with the Metropolis criterion \({\mathrm{min}}[1,{\mathrm{exp}}( - \beta (\dot S_{{\mathrm{TUR}}}^{({\mathbf{d}})} - \dot S_{{\mathrm{TUR}}}^{({\mathbf{d}}' )}))]\).
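The Metropolis sampling of the weights can be sketched generically. In this illustration the objective is a simple stand-in with a single maximum, not the TUR bound, so that the example stays self-contained; in the procedure described above, `objective` would instead build d from the weights and evaluate the bound by tilting. The function name `metropolis_maximize`, the starting point, and the step size are assumptions.

```python
import numpy as np

def metropolis_maximize(objective, w0, beta, step, n_steps, seed=0):
    """Sample weights in proportion to exp(beta * objective(w))."""
    rng = np.random.default_rng(seed)
    w = np.array(w0, dtype=float)
    value = objective(w)
    history = [value]
    for _ in range(n_steps):
        # Propose w -> w' by a uniform perturbation of every weight
        w_new = w + rng.uniform(-step, step, size=w.shape)
        value_new = objective(w_new)
        # Metropolis criterion: accept with min[1, exp(-beta * (value - value_new))]
        if value_new >= value or rng.random() < np.exp(-beta * (value - value_new)):
            w, value = w_new, value_new
        history.append(value)
    return w, np.array(history)

# Stand-in objective with a single maximum at the origin (NOT the TUR bound).
toy_objective = lambda w: -np.sum(w ** 2)
w_final, history = metropolis_maximize(toy_objective, [1.0, -1.0],
                                       beta=5000.0, step=0.1, n_steps=500)
```

With β as large as 5000, downhill moves are accepted only when the decrease is tiny, so the chain behaves almost like a stochastic hill climb while retaining some ability to wander among near-optimal weights.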

In addition to starting from a random choice of d, we performed MC sampling about the thermodynamic force by expressing d as

Again, we have 100 Gaussians, half of them placed uniformly at random throughout the space and the rest positioned to make the perturbation antisymmetric. We stochastically update the weights by adding a uniform random number drawn from [−0.05, 0.05], and conditionally accept the update with the same Metropolis factor as before. The resulting TUR lower bound tends toward higher values until it hits a plateau (Fig. 5 blue line). For each temperature ratio in Fig. 4a, the MC sampling was run for 500 steps, after which the TUR bound had reached a plateau and further optimization was either impossible or at least significantly more challenging.

Data availability

Representative data generated from sampling trajectories with the aforementioned codes can be accessed online at https://doi.org/10.5281/zenodo.2576526.

Code availability

Computer codes implementing all simulations and analyses described in this manuscript are available for download at https://doi.org/10.5281/zenodo.2576526.

References

Seifert, U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75, 126001 (2012).

Marchetti, M. C. et al. Hydrodynamics of soft active matter. Rev. Mod. Phys. 85, 1143 (2013).

Gnesotto, F. S., Mura, F., Gladrow, J. & Broedersz, C. P. Broken detailed balance and non-equilibrium dynamics in living systems: a review. Rep. Prog. Phys. 81, 066601 (2018).

Qian, H., Kjelstrup, S., Kolomeisky, A. B. & Bedeaux, D. Entropy production in mesoscopic stochastic thermodynamics: nonequilibrium kinetic cycles driven by chemical potentials, temperatures, and mechanical forces. J. Phys.: Condens. Matter 28, 153004 (2016).

Feng, E. H. & Crooks, G. E. Length of time’s arrow. Phys. Rev. Lett. 101, 090602 (2008).

Roldán, É., Martinez, I. A., Parrondo, J. M. R. & Petrov, D. Universal features in the energetics of symmetry breaking. Nat. Phys. 10, 457 (2014).

Roldán, É. Irreversibility and Dissipation in Microscopic Systems (Springer, 2014).

Martin, P., Hudspeth, A. J. & Jülicher, F. Comparison of a hair bundle’s spontaneous oscillations with its response to mechanical stimulation reveals the underlying active process. Proc. Natl Acad. Sci. USA 98, 14380–14385 (2001).

Battle, C. et al. Broken detailed balance at mesoscopic scales in active biological systems. Science 352, 604–607 (2016).

Qian, H. Vector field formalism and analysis for a class of thermal ratchets. Phys. Rev. Lett. 81, 3063 (1998).

Zia, R. K. P. & Schmittmann, B. Probability currents as principal characteristics in the statistical mechanics of non-equilibrium steady states. J. Stat. Mech.: Theory Exp. 2007, P07012 (2007).

Fodor, É. et al. How far from equilibrium is active matter? Phys. Rev. Lett. 117, 038103 (2016).

Fodor, É. et al. Nonequilibrium dissipation in living oocytes. EPL (Europhys. Lett.) 116, 30008 (2016).

Ghanta, A., Neu, J. C. & Teitsworth, S. Fluctuation loops in noise-driven linear dynamical systems. Phys. Rev. E 95, 032128 (2017).

Mizuno, D., Tardin, C., Schmidt, C. F. & MacKintosh, F. C. Nonequilibrium mechanics of active cytoskeletal networks. Science 315, 370–373 (2007).

Lau, A. W. C., Lacoste, D. & Mallick, K. Nonequilibrium fluctuations and mechanochemical couplings of a molecular motor. Phys. Rev. Lett. 99, 158102 (2007).

Fakhri, N. et al. High-resolution mapping of intracellular fluctuations using carbon nanotubes. Science 344, 1031–1035 (2014).

Pietzonka, P., Barato, A. C. & Seifert, U. Universal bound on the efficiency of molecular motors. J. Stat. Mech.: Theory Exp. 2016, 124004 (2016).

Brown, A. I. & Sivak, D. A. Allocating dissipation across a molecular machine cycle to maximize flux. Proc. Natl Acad. Sci. USA 114, 11057–11062 (2017).

Kubo, R. The fluctuation-dissipation theorem. Rep. Prog. Phys. 29, 255 (1966).

Kurchan, J. Fluctuation theorem for stochastic dynamics. J. Phys. A. Math. Gen. 31, 3719 (1998).

Crooks, G. E. Entropy production fluctuation theorem and the nonequilibrium work relation for free energy differences. Phys. Rev. E 60, 2721 (1999).

Harada, T. & Sasa, S.-i. Equality connecting energy dissipation with a violation of the fluctuation-response relation. Phys. Rev. Lett. 95, 130602 (2005).

Collin, D. et al. Verification of the Crooks fluctuation theorem and recovery of RNA folding free energies. Nature 437, 231 (2005).

Seifert, U. & Speck, T. Fluctuation-dissipation theorem in nonequilibrium steady states. EPL (Europhys. Lett.) 89, 10007 (2010).

Jarzynski, C. Equalities and inequalities: irreversibility and the second law of thermodynamics at the nanoscale. Annu. Rev. Condens. Matter Phys. 2, 329–351 (2011).

Lander, B., Mehl, J., Blickle, V., Bechinger, C. & Seifert, U. Noninvasive measurement of dissipation in colloidal systems. Phys. Rev. E 86, 030401 (2012).

Barato, A. C. & Seifert, U. Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015).

Gingrich, T. R., Horowitz, J. M., Perunov, N. & England, J. L. Dissipation bounds all steady-state current fluctuations. Phys. Rev. Lett. 116, 120601 (2016).

Gladrow, J. Broken Detailed Balance in Active Matter—Theory, Simulation and Experiment. Master’s thesis, Georg-August-Universität Göttingen (2015).

Sekimoto, K. Kinetic characterization of heat bath and the energetics of thermal ratchet models. J. Phys. Soc. Jpn. 66, 1234–1237 (1997).

Sekimoto, K. Langevin equation and thermodynamics. Prog. Theor. Phys. Suppl. 130, 17–27 (1998).

Van Kampen, N. G. Stochastic Processes in Physics and Chemistry, Vol. 1 (Elsevier, 1992).

Rieder, Z., Lebowitz, J. L. & Lieb, E. Properties of a harmonic crystal in a stationary nonequilibrium state. J. Math. Phys. 8, 1073–1078 (1967).

Bonetto, F., Lebowitz, J. L. & Lukkarinen, J. Fourier’s law for a harmonic crystal with self-consistent stochastic reservoirs. J. Stat. Phys. 116, 783–813 (2004).

Ciliberto, S., Imparato, A., Naert, A. & Tanase, M. Heat flux and entropy produced by thermal fluctuations. Phys. Rev. Lett. 110, 180601 (2013).

Falasco, G., Baiesi, M., Molinaro, L., Conti, L. & Baldovin, F. Energy repartition for a harmonic chain with local reservoirs. Phys. Rev. E 92, 022129 (2015).

Chun, H.-M. & Noh, J. D. Hidden entropy production by fast variables. Phys. Rev. E 91, 052128 (2015).

Mura, F., Gradziuk, G. & Broedersz, C. P. Non-equilibrium scaling behaviour in driven soft biological assemblies. Phys. Rev. Lett. 121, 038002 (2018).

Qian, H. Mesoscopic nonequilibrium thermodynamics of single macromolecules and dynamic entropy-energy compensation. Phys. Rev. E 65, 016102 (2001).

Van den Broeck, C. & Esposito, M. Three faces of the second law. II. Fokker-Planck formulation. Phys. Rev. E 82, 011144 (2010).

Just, W., Kantz, H., Ragwitz, M. & Schmüser, F. Nonequilibrium physics meets time series analysis: Measuring probability currents from data. EPL (Europhys. Lett.) 62, 28 (2003).

Lamouroux, D. & Lehnertz, K. Kernel-based regression of drift and diffusion coefficients of stochastic processes. Phys. Lett. A 373, 3507–3512 (2009).

Weiss, J. B. Fluctuation properties of steady-state Langevin systems. Phys. Rev. E 76, 061128 (2007).

Gladrow, J., Fakhri, N., MacKintosh, F. C., Schmidt, C. F. & Broedersz, C. P. Broken detailed balance of filament dynamics in active networks. Phys. Rev. Lett. 116, 248301 (2016).

Gladrow, J., Broedersz, C. P. & Schmidt, C. F. Nonequilibrium dynamics of probe filaments in actin-myosin networks. Phys. Rev. E 96, 022408 (2017).

Pietzonka, P., Barato, A. C. & Seifert, U. Universal bounds on current fluctuations. Phys. Rev. E 93, 052145 (2016).

Gingrich, T. R., Rotskoff, G. M. & Horowitz, J. M. Inferring dissipation from current fluctuations. J. Phys. A: Math. Theor. 50, 184004 (2017).

Pietzonka, P., Ritort, F. & Seifert, U. Finite-time generalization of the thermodynamic uncertainty relation. Phys. Rev. E 96, 012101 (2017).

Horowitz, J. M. & Gingrich, T. R. Proof of the finite-time thermodynamic uncertainty relation for steady-state currents. Phys. Rev. E 96, 020103 (2017).

Proesmans, K. & Van den Broeck, C. Discrete-time thermodynamic uncertainty relation. EPL (Europhys. Lett.) 119, 20001 (2017).

Chiuchiù, D. & Pigolotti, S. Mapping of uncertainty relations between continuous and discrete time. Phys. Rev. E 97, 032109 (2018).

Seifert, U. Stochastic thermodynamics: from principles to the cost of precision. Phys. A: Stat. Mech. its Appl. 504, 176–191 (2018).

Lebowitz, J. L. & Spohn, H. A Gallavotti–Cohen-type symmetry in the large deviation functional for stochastic dynamics. J. Stat. Phys. 95, 333–365 (1999).

Lecomte, V., Appert-Rolland, C. & van Wijland, F. Thermodynamic formalism for systems with Markov dynamics. J. Stat. Phys. 127, 51–106 (2007).

Touchette, H. The large deviation approach to statistical mechanics. Phys. Rep. 478, 1–69 (2009).

Gillespie, D. T. Exact stochastic simulation of coupled chemical reactions. J. Phys. Chem. 81, 2340–2361 (1977).

Bowman, A. W. & Azzalini, A. Applied Smoothing Techniques for Data Analysis: the Kernel Approach with S-Plus Illustrations, Vol. 18 (OUP Oxford, 1997).

Sheather, S. J. & Jones, M. C. A reliable data-based bandwidth selection method for kernel density estimation. J. R. Stat. Soc. Series B Stat. Methodol. 53, 683–690 (1991).

Sheather, S. J. Density estimation. Stat. Sci. 588–597 (2004).

Turlach, B. A. Bandwidth selection in kernel density estimation: a review. CORE Inst. de. Stat. 19, 1–33 (1993).

Acknowledgements

We gratefully acknowledge the Gordon and Betty Moore Foundation for supporting T.R.G. and J.M.H. as Physics of Living Systems Fellows through Grant GBMF4513. This research was supported by a Sloan Research Fellowship (to N.F.), the J.H. and E.V. Wade Fund Award (to N.F.), and the Human Frontier Science Program Career Development Award (to N.F.).

Author information

Contributions

J.M.H, T.R.G. and N.F. designed research, J.L. and T.R.G. performed research and analyzed data, J.L., J.M.H., T.R.G. and N.F. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Journal peer review information: Nature Communications thanks the anonymous reviewers for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, J., Horowitz, J.M., Gingrich, T.R. et al. Quantifying dissipation using fluctuating currents. Nat Commun 10, 1666 (2019). https://doi.org/10.1038/s41467-019-09631-x

DOI: https://doi.org/10.1038/s41467-019-09631-x
