Abstract

The topology of interactions in network dynamical systems fundamentally underlies their function. Accelerating technological progress creates massively available data about collective nonlinear dynamics in physical, biological, and technological systems. Detecting direct interaction patterns from those dynamics still constitutes a major open problem. In particular, current nonlinear dynamics approaches mostly require a model of the (often high-dimensional) system dynamics to be known a priori. Here we develop a model-independent framework for inferring direct interactions solely from recordings of the nonlinear collective dynamics generated. Introducing an explicit dependency matrix in combination with a block-orthogonal regression algorithm, the approach works reliably across many dynamical regimes, including transient dynamics toward steady states, periodic and non-periodic dynamics, and chaos. Together with its capability to reveal network (two-point) as well as hypernetwork (e.g., three-point) interactions, this framework may thus open up nonlinear dynamics options for inferring direct interaction patterns across systems where no model is known.

Introduction

The collective dynamics of many natural systems ranging from regulatory circuits and metabolic systems1,2,3,4,5,6,7 to communication, distribution, and supply networks8,9 is derived from the direct interactions of their parts. Determining how such systems are connected may help us in understanding and controlling their function10,11. Current nonlinear dynamics approaches may recover direct interactions from the collective dynamics of a system if a mathematical model is provided in advance and only its unknown parameters, network links, and nonlinear terms are to be determined11,12,13,14,15,16,17,18,19. Such models, however, are usually not at hand under most experimental conditions, thereby constraining the applicability of these methods to a limited number of examples. Recent works20,21 on low-dimensional systems suggest that approximating the dynamics through expansions in basis functions may reveal the interaction patterns if such dynamics admit a sparse representation in the proposed basis. A more recent model-free approach that takes into account the nonlinear network dynamics requires externally driving the system in a controlled way, thus enabling reconstruction for one particular range of experimental settings22. Common model-free approaches that do not consider the nonlinear system dynamics construct functional links by detecting statistical dependencies (e.g., correlations, mutual information, Granger causality, and extensions thereof)23,24,25,26,27,28,29,30,31 and thus are prone to recover indirect interactions among the units of a network, for instance, due to common external inputs or decorrelating effects induced by other units in the network11,27,28,32,33,34. Although the latest efforts have focused on filtering indirect connections27,28 from pairwise statistical dependencies, recent studies show that these functional links can only match direct connections under specific homogeneity conditions35, which rarely occur in real-world systems.

In this article, we propose a novel concept for inferring direct interactions in coupled dynamical systems that relies only on their nonlinear collective dynamics, without assuming specific dynamic models to be known in advance, without assuming that the dynamics admits a sparse representation, without imposing controlled driving, and without expecting statistical dependencies to faithfully reveal direct, physical interactions. To achieve this goal, we here change the perspective and ask which units j of the network provide direct physical interactions to a given unit i and appear on the right hand side of its differential equation, rather than asking for details of the interaction functions among those units. We demonstrate that the problem of inferring direct interactions based on observed nonlinear dynamics may be posed as a multivariate regression problem by introducing an explicit dependency matrix and thereby systematically decomposing each unit's dynamics into pairwise, three-point, and higher-order interactions with other units in the network. Such decompositions provide restricting equations for mapping the collective dynamics to direct interactions. We validate and characterize the predictive power of our approach by successfully revealing the structure of generic as well as specific biological model systems. These model systems may exhibit complex noisy dynamics such as transient dynamics toward steady states, periodic and non-periodic dynamics, or chaos, and have standard pairwise as well as hypernetwork (such as three-point) interactions. Interaction networks may even be revealed if some units are not measured (and thus hidden during observation).

Results

Mapping time series to direct interactions

To understand which information a time series contains about the direct interactions in networks, consider a system whose time evolution is given by

\[{\bf{\dot x}} = {\bf{F}}({\bf{x}}) + {\boldsymbol{\xi }}(t) \quad (1)\]

where \({\bf{x}}(t) = \left[ {x_1(t),...,x_N(t)} \right]^\intercal \in {\Bbb R}^N\) is the state of the entire system consisting of units with variables \(x_i(t)\), \({\bf{\dot x}} = d{\bf{x}}(t){\mathrm{/}}dt\) denotes its temporal derivative, \({\boldsymbol{\xi }}(t) = \left[ {\xi _1(t), \ldots ,\xi _N(t)} \right] \in {\Bbb R}^N\) represents external noise acting on the whole system, and \({\bf{F}}:{\Bbb R}^N \to {\Bbb R}^N\) is any smooth, typically nonlinear function that we assume to be unknown. Common examples are the regulation functions in models for gene regulatory networks3,5,7 or rate laws in metabolic systems36.

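Given a multivariate time series

\[x_{i,m} = x_i(t_m), \quad i \in \{ 1,2, \ldots ,N\} , \quad m \in \{ 1,2, \ldots ,M\} \quad (2)\]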

recorded at discrete time points \(t_m = m\Delta t + t_0\), system identification aims to reveal the exact functional form of F and to exactly predict the system's future37. Owing to the high dimensionality of most networks, such identification is typically restricted or even impossible. Here we address the problem in a slightly yet essentially different manner, asking only: which of the variables \(x_j\) directly act on a given unit i and thus explicitly appear on the right hand side of Eq. (1)? We aim to reveal not only pairwise network interactions, specified by terms of the form \(\dot x_i = ... + g_j^i\left( {x_j} \right) + g_{ij}^i\left( {x_i,x_j} \right) + \ldots ,\) but also higher-order hypernetwork interactions, induced, for instance, by terms of the form \(\dot x_i = ... + g_{jk}^i\left( {x_j,x_k} \right) + g_{ijk}^i\left( {x_i,x_j,x_k} \right) + ...\) where two or more units j and k different from i jointly influence unit i directly.

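To distinguish among units and at the same time treat all orders of interactions simultaneously, we introduce explicit dependency matrices \({\mathrm{\Lambda }}^i \in \{ 0,1\} ^{N \times N}\), diagonal matrices defined by

\[{\mathrm{\Lambda }}_{jj}^i = \left\{ \begin{array}{ll} 1 & {\mathrm{if}}\,\partial F_i{\mathrm{/}}\partial x_j \not\equiv 0, \\ 0 & {\mathrm{if}}\,\partial F_i{\mathrm{/}}\partial x_j \equiv 0. \end{array} \right. \quad (3)\]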

Hence, if a unit j directly acts on unit i, we have \({\mathrm{\Lambda }}_{jj}^i = 1\), and \({\mathrm{\Lambda }}_{jj}^i = 0\) otherwise. With this notation, the dynamics of the units becomes

\[\dot x_i = f_i\left( {{\mathrm{\Lambda }}^i{\bf{x}}} \right) + \xi _i(t) \quad (4)\]

where \(f_i:{\Bbb R}^N \to {\Bbb R}\) is a smooth function that specifies the deterministic evolution of component i and \(\xi _i(t) \in {\Bbb R}\) represents external noise acting on i.

The explicit dependency matrix \({\mathrm{\Lambda }}^i\) selects which variables \(x_j\) directly control the rate of change of \(x_i\), thus going beyond the related graph-theoretical notions of adjacency and incidence matrices and thereby emphasizing aspects of the dynamics: first, it offers a uniform representation of pairwise and higher-order interactions; and second, it is thus suitable for generic dynamical systems representations, as it appears exactly once in the right hand side of Eq. (4).

The resulting generic model (Eq. (4)) links state space points x(t) at time t to their rate of change \(\dot x_i(t)\). In particular, the complemented system state \(s_i(t) = \left[ {{\bf{x}}(t),\dot x_i(t)} \right]^\intercal \in {\Bbb R}^{N + 1}\) is an element of a higher-dimensional “dynamics space” \({\cal D}_i\) for each i formed by the state space and the rate of change of unit i. Therefore, the \(f_i\) specifying the dynamics defines a smooth manifold \({\cal M}_i \subset {\cal D}_i\), with the \({\mathrm{\Lambda }}_{jj}^i\) indicating whether or not \({\cal M}_i\) is constant in direction \(x_j\) (cf. Fig. 1).
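The selecting role of the explicit dependency matrix can be illustrated with a minimal sketch. Everything in it is hypothetical and chosen only for illustration: the rate function `f_i`, the helper `masked_state`, and the diagonal entries in `lam_diag`. It shows that the rate of change is constant along directions \(x_j\) with \({\mathrm{\Lambda }}_{jj}^i = 0\) and varies along directions with \({\mathrm{\Lambda }}_{jj}^i = 1\).

```python
import math


def masked_state(lam_diag, x):
    """Return Lambda^i x for a diagonal 0/1 matrix given by its diagonal."""
    return [lam * x_j for lam, x_j in zip(lam_diag, x)]


def f_i(z):
    # Hypothetical smooth rate function (an assumption, not a model from the text):
    # pairwise-like terms sin(z_j) plus one joint term in units 2 and 3.
    return sum(math.sin(z_j) for z_j in z) + z[2] * z[3]


# Units 1, 2, and 3 act directly on unit i; units 0 and 4 do not.
lam_diag = [0, 1, 1, 1, 0]

x_a = [0.3, 0.5, -1.0, 2.0, 0.7]
x_b = [9.9, 0.5, -1.0, 2.0, -4.2]  # differs from x_a only in units 0 and 4
x_c = [0.3, 0.6, -1.0, 2.0, 0.7]   # differs from x_a in unit 1

rate_a = f_i(masked_state(lam_diag, x_a))
rate_b = f_i(masked_state(lam_diag, x_b))
rate_c = f_i(masked_state(lam_diag, x_c))
```

Here `rate_a` and `rate_b` coincide because the two states differ only along directions that the dependency matrix masks out, whereas `rate_c` differs because unit 1 acts directly on unit i.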

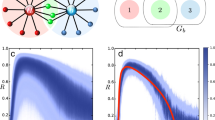

Fig. 1 Sampling dynamics spaces to reveal network structure. a Eq. (4) determines a mapping from state space components x to rates of change \(\dot x_i\), defining a smooth manifold \({\cal M}_i\) (in dynamics space \({\cal D}_i\)) determined by \(f_i\) and \({\mathrm{\Lambda }}^i\). b Two-stage decomposition of unknown functions \(f_i\), first into interactions of different orders with other units in the network, second each interaction into basis functions, thereby resulting in a linear system (Eq. (6)) restricting the connectivity structure. c Quality of inferences versus the number M of measurements (displayed here for non-periodic dynamics of networks of phase-coupled oscillators of N = 20 and \(n_i\) = 10 incoming connections per unit). “Continuous sampling” takes as observed dynamics one long trajectory of M sample time steps; “distributed sampling” takes M/m short time series of m = 10 sample time steps starting from different initial conditions, creating a longer time series of length M. The initial conditions were randomly drawn from uniform distributions defined in the interval [−π, π]

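In practical scenarios, the functions \(f_i\) are generally not accessible. We address this challenge in two stages. First, we functionally decompose the dynamics of units i ∈ {1, 2, ..., N} into interaction terms with the entire network as

\[\dot x_i = \mathop {\sum}\limits_{j = 1}^N {\mathrm{\Lambda }}_{jj}^i g_j^i\left( {x_j} \right) + \mathop {\sum}\limits_{j = 1}^N \mathop {\sum}\limits_{s = 1}^N {\mathrm{\Lambda }}_{jj}^i{\mathrm{\Lambda }}_{ss}^i g_{js}^i\left( {x_j,x_s} \right) + \mathop {\sum}\limits_{j = 1}^N \mathop {\sum}\limits_{s = 1}^N \mathop {\sum}\limits_{w = 1}^N {\mathrm{\Lambda }}_{jj}^i{\mathrm{\Lambda }}_{ss}^i{\mathrm{\Lambda }}_{ww}^i g_{jsw}^i\left( {x_j,x_s,x_w} \right) + \ldots + \xi _i(t) \quad (5)\]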

where \(g_j^i:{\Bbb R} \to {\Bbb R}\), \(g_{js}^i:{\Bbb R}^2 \to {\Bbb R}\), \(g_{jsw}^i:{\Bbb R}^3 \to {\Bbb R}\) and, in general, \(g_{j_1j_2 \ldots j_K}^i:{\Bbb R}^K \to {\Bbb R}\), represent the (unknown) K-th order interactions between units \(j_k\) for all k ∈ {1, 2, …, K} and unit i. Specifically, the decomposition (Eq. (5)) separates contributions to unit i arising from different orders, e.g., pairwise and higher-order interactions with other units in the system. The \({\mathrm{\Lambda }}^i\) are defined such that, if \({\mathrm{\Lambda }}_{rr}^i \equiv 0\), all functions \(g_{j_1,j_2,...,j_K}^i\) with any of the indices \(j_k = r\) disappear from the right hand side of Eq. (5).

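Given that functions \(g_{j_1,j_2,...,j_K}^i\) are taken to not be accessible, we decompose each \(g_{j_1,j_2,...,j_K}^i\) into basis functions h as

\[g_j^i\left( {x_j} \right) = \mathop {\sum}\limits_{p = 1}^{P_1} c_{j,p}^ih_{j,p}\left( {x_j} \right), \quad g_{js}^i\left( {x_j,x_s} \right) = \mathop {\sum}\limits_{p = 1}^{P_2} c_{js,p}^ih_{js,p}\left( {x_j,x_s} \right), \quad \ldots , \quad g_{j_1j_2 \ldots j_K}^i\left( {x_{j_1}, \ldots ,x_{j_K}} \right) = \mathop {\sum}\limits_{p = 1}^{P_K} c_{j_1j_2 \ldots j_K,p}^ih_{j_1j_2 \ldots j_K,p}\left( {x_{j_1}, \ldots ,x_{j_K}} \right) \quad (6)\]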

where \(P_k\) indicates the number of basis functions employed in the expansion, cf. ref. 38. Thus, provided a time series (2) where Δt is sufficiently small to reliably estimate time derivatives \(\dot x_{i,m}\), revealing direct interactions becomes identifying the non-zero coefficients in the right hand side of Eq. (6) that best fit the estimated \(\dot x_{i,m}\). Such expansions (Eq. (6)) differ qualitatively from those developed in refs. 15,16,18,20 since ours require neither that the functions \(g_{j_1,j_2,...,j_K}^i\) be represented exactly by the basis functions chosen nor that they admit a sparse representation in the basis. Instead, we only require the functions h to form any basis of a relevant function space, thereby additionally allowing the investigator to choose basis functions not appearing explicitly in any of the \(g_.^i\). In particular, this reduced requirement implies that, for instance, all coefficients \(c_{j,p}^i\) are (indistinguishable from) zero for all p if there is no functional dependency, i.e., if \(g_j^i\left( {x_j} \right) = \mathop {\sum}\nolimits_p c_{j,p}^ih_{j,p}(x_j) \equiv 0\).

This weaker requirement is sufficient to impose a structure of blocks of zero and non-zero coefficients in Eq. (6), representing absent and existing interactions, respectively, thereby posing a mathematical regression problem with grouped variables39,40,41,42. To solve such structured problems, we developed the Algorithm for Revealing Network Interactions (ARNI) (Supplementary Note 1), a greedy approach based on the Block Orthogonal Least Squares (BOLS) algorithm40. Specifically, our approach takes the time series of all units in the network as inputs and returns a ranked list of interactions indicating the order in which interactions in the right hand side of Eq. (6) were identified as most strongly lowering a cost function (see text below and Supplementary Note 1/Supplementary Fig. 3). We remark that here we do not intend to recover the actual functional form of interactions; instead, we aim at determining the existence or absence of interactions between units. So, even if our scheme infers an optimal model from a given time series, it is not guaranteed that such a model would agree with the actual model generating the dynamics43. Indeed, the fact that we only ask for the units interacting with a given unit and not for details of the coupling functions enables robust performance across systems (compare Figs. 1, 2, 3, 4, 5, and 6).
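The idea of ranking blocks of coefficients by how strongly they lower a cost function can be sketched as follows. This is a simplified greedy block selection written for illustration only; it is not the ARNI implementation of Supplementary Note 1. Several choices are assumptions: only single-unit blocks are considered, the basis consists of the monomials \(x_j, x_j^2, x_j^3\), the cost is the mean squared residual, and the function names and toy data are hypothetical.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def project_out(v, basis):
    """Remove from v its components along the orthonormal vectors in basis."""
    for q in basis:
        c = dot(v, q)
        v = [a - c * b for a, b in zip(v, q)]
    return v


def extend_basis(basis, columns, tol=1e-10):
    """Orthonormalize the columns of one block against the current basis."""
    extended = list(basis)
    for col in columns:
        r = project_out(col, extended)
        norm = math.sqrt(dot(r, r))
        if norm > tol:  # skip columns that are numerically already in the span
            extended.append([a / norm for a in r])
    return extended


def greedy_block_selection(rates, blocks, n_select):
    """Rank candidate units by how much their block lowers the mean squared residual."""
    basis, ranking, costs = [], [], []
    for _ in range(n_select):
        best = None
        for unit, columns in blocks.items():
            if unit in ranking:
                continue
            candidate = extend_basis(basis, columns)
            residual = project_out(rates, candidate)
            cost = dot(residual, residual) / len(rates)
            if best is None or cost < best[0]:
                best = (cost, unit, candidate)
        cost, unit, basis = best
        ranking.append(unit)
        costs.append(cost)
    return ranking, costs


# Toy data (hypothetical): the rate of change depends on units 1 and 3 only.
rng = random.Random(0)
n_units, n_samples = 5, 200
states = [[rng.uniform(-1.0, 1.0) for _ in range(n_units)] for _ in range(n_samples)]
rates = [2.0 * x[1] - x[3] ** 2 for x in states]

# One block of basis-function columns per candidate unit j.
blocks = {j: [[x[j] ** p for x in states] for p in (1, 2, 3)] for j in range(n_units)}
ranking, costs = greedy_block_selection(rates, blocks, n_select=3)
```

In this toy setting the two units that actually enter the rate of change are ranked first, and the cost is essentially zero once both blocks are included; further blocks cannot lower it any more.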

Fig. 2 Inferring interactions from transients to steady state and from non-periodic dynamics. We simulated short transient time series of m = 5 time points starting from different initial conditions and created composite longer time series as in Fig. 1. Thus M = S × m, where S is the number of distinct transient dynamics. a–c Revealing interactions from transients toward steady state. a Adjacency matrix of a network of N = 100 units under Michaelis–Menten kinetics with \(n_i\) = 10 incoming connections. b Example of transient dynamics toward steady states, where \(\dot x_j = 0\) for all j ∈ {1, 2, ..., N}. c Quality of inferences with respect to M using our approach (ARNI), correlations (Corr), partial correlations (PCorr), and transfer entropy (TE). d–f Revealing interactions from non-periodic dynamics. d Adjacency matrix of a network of N = 50 phase-coupled oscillators with \(n_i\) = 10 incoming connections (black squares) per unit. e Example of derivatives for several oscillators. f Quality of reconstruction from short trajectories with respect to M = S × m with m = 10

Fig. 3 Inferring interactions from noisy and chaotic dynamics. Reconstruction of chaotic oscillator networks of N = 20 and \(n_i\) = 5 incoming interactions. a Rössler oscillators themselves constitute subnetworks of three interconnected units. b Example of noisy derivatives in a Rössler oscillator. c Quality of reconstruction from transient trajectories with respect to M = S × m with m = 10, using our approach (ARNI), correlations (Corr), partial correlations (PCorr), and transfer entropy (TE)

Fig. 4 Performance on networks and hypernetworks. a Minimum length \(M_{0.95}\) of time series required for achieving AUC score >0.95 versus the number of units N with a logarithmic fit. The inset shows the same data with N on logarithmic scale. The number of incoming interactions \(n_i\) = 10 was fixed for all networks. b \(M_{0.95}\) versus \(n_i\) with a linear fit, for networks of fixed size N = 50. c \(M_{0.95}\) versus standard deviation of noise level η with an exponential fit. The inset shows the same data with \(M_{0.95}\) on logarithmic scale. d Cartoon of networks with \(p_h\) = 0 and \(p_h\) = 1. e Systematic reconstructions of hypernetworks of N = 20 phase-coupled oscillators for varying \(p_h\) ∈ [0, 1] and M ∈ {200, 500, 1000} and fixed \(n_i\) = 5. f Systematic reconstructions of networks of N = 20 (\(n_i\) = 10) units versus the fraction of observed (non-hidden) units

Fig. 5 Learning curves with respect to the number of iterations l reveal appropriate expansions. a–f Learning curves for basis functions shown in Table 1; indices in Table 1 match the panel labels. The training and validation sets comprise 60% and 40% of the recordings, respectively. For all basis functions a–d chosen from an appropriate class (here: true pairwise interactions), the curve exhibits an L-shape with a clear plateau starting at the correct number of incoming interactions (vertical dashed line), beyond which adding more interactions reduces the fitting costs only weakly or not at all. If basis functions are chosen from an inappropriate class (e, f), the cost functions decrease only mildly and do not exhibit an L-shape

Fig. 6 Reconstructions are still viable if units are hidden and measurements are noisy. Revealing direct interactions within a subset of units from noisy transients toward steady states. The network size is fixed at N = 100. a Representation of a network with a subset of measured units (green) and a subset of hidden units (red). b, c \(\dot x_i\) in noise-free and noisy transients. d Quality of reconstruction versus number of measurements employing our approach (ARNI), correlations (Corr), partial correlations (PCorr), and transfer entropy (TE) on a subset of 40 (randomly selected) recorded units. e Systematic reconstruction over different collections of subsets. The variable R indicates the fraction of recorded units. Averages over 50 random subsets of R < 1 indicate that our approach outperforms correlations, partial correlations, and transfer entropy across different R values

Revealing direct links in model systems

To demonstrate the robustness of our approach, we inferred the interactions of model systems and compared our results to those obtained from thresholding correlations11,44, partial correlations45, and transfer entropy46. We selected these quantities because they are model independent and have traditionally been used to quantify interactions in networked systems. We tested our framework on systems displaying diverse types of collective dynamics, such as transient dynamics toward steady states, non-periodic dynamics, and chaotic and noisy dynamics, as emerging in models of Michaelis–Menten kinetics in gene regulation, generic heteroclinic dynamics, and generic chaotic oscillatory dynamics. We measured the quality of reconstruction in terms of the area under the receiver-operating-characteristic curve (AUC) score (Supplementary Note 3). The AUC score equals 1 for perfect reconstruction and 1/2 for predictions equivalent to random guessing.
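For orientation, the AUC score can be computed directly from link scores and ground-truth labels. The following minimal sketch uses the standard pairwise-comparison (Mann–Whitney) form of the AUC; the function name `auc_score` and the example scores are hypothetical, and the exact procedure of Supplementary Note 3 may differ in detail.

```python
def auc_score(scores, labels):
    """AUC as the probability that an existing link outscores an absent one.

    scores: one prediction score per candidate link (higher = more likely).
    labels: 1 for links that exist in the true network, 0 for absent links.
    Ties between an existing and an absent link count as one half.
    """
    positives = [s for s, l in zip(scores, labels) if l == 1]
    negatives = [s for s, l in zip(scores, labels) if l == 0]
    if not positives or not negatives:
        raise ValueError("need at least one existing and one absent link")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


labels = [1, 1, 0, 0]
perfect = auc_score([0.9, 0.8, 0.2, 0.1], labels)       # every link ranked correctly
uninformative = auc_score([0.5, 0.5, 0.5, 0.5], labels)  # scores carry no information
inverted = auc_score([0.1, 0.2, 0.8, 0.9], labels)       # every link ranked wrongly
```

A score that cannot distinguish existing from absent links yields 1/2, matching the random-guessing baseline stated above.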

Predictions improve with longer time series as well as by composing one long time series out of different short ones, as illustrated for non-periodic dynamics in Fig. 1c. This indicates that sampling sufficient parts of state space is essential for revealing direct network interactions. Generally, we found that if long time series are not available (or not preferred, see the following), compositions of short time series are at least equally appropriate for reconstruction, see, e.g., Fig. 1c. Exemplary tests demonstrate that even time series as short as m = 5 time points recorded from different trajectories evolving toward a steady state might be sufficient. Moreover, reconstruction quality improves with the total number of available recordings M = S × m, where S is the number of experiments, in contrast to inferences from thresholding correlations, partial correlations, and transfer entropy, which cannot predict existing interactions under these minimal sampling conditions (Fig. 2a–c). In addition, inference studies on collections of short time series extracted from non-periodic dynamics further confirm that larger numbers M = S × m of recordings improve quality (as expected). Again, correlations, partial correlations, and transfer entropy are in general less capable of capturing the intrinsic structure of interactions under equally minimal conditions (Fig. 2d–f). Finally, interactions may still be recovered in networks of higher-dimensional units by extending Eq. (5) to include all components \(x_i^d(t)\) of i ∈ {1, 2, …, N}, where d ∈ {1, 2, …, \(D_i\)} and \(D_i\) is the number of components of unit i, Fig. 3a–c.
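When short time series from different experiments are pooled, time derivatives have to be estimated within each short series and never across the boundary between two of them. The sketch below illustrates this under stated assumptions: it uses simple forward differences (so each series of m points contributes m − 1 samples), and the function name `pooled_rate_samples` and the toy trajectories are hypothetical.

```python
def pooled_rate_samples(trajectories, dt):
    """Pool (state, rate) samples from several short time series.

    trajectories: list of short time series, each a list of state vectors
                  recorded at spacing dt.
    Forward differences are taken only between consecutive points of the
    same trajectory, so no derivative straddles two experiments.
    """
    states, rates = [], []
    for trajectory in trajectories:
        for x_now, x_next in zip(trajectory[:-1], trajectory[1:]):
            states.append(list(x_now))
            rates.append([(b - a) / dt for a, b in zip(x_now, x_next)])
    return states, rates


# Toy example: S = 2 experiments with m = 5 points each, both moving at a
# constant (hypothetical) velocity from different initial conditions.
dt = 0.01
velocity = [1.0, -2.0]
starts = [[0.0, 0.0], [3.0, 1.0]]
trajectories = [
    [[x0 + v * k * dt for x0, v in zip(start, velocity)] for k in range(5)]
    for start in starts
]
states, rates = pooled_rate_samples(trajectories, dt)
```

The pooled `states` and `rates` then play the role of samples of the dynamics space, regardless of which experiment they came from.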

Performance

To further characterize the performance of our approach, we carried out systematic reconstructions of various networks of different sizes, numbers of incoming connections per unit, noise levels, fractions of higher-order (hypernetwork) interactions, and numbers of hidden units (Fig. 4). We report four classes of results. First, the number \(M_\theta\) of time points necessary for AUC scores larger than a threshold θ scales sublinearly with the size of the network, Fig. 4a, and linearly with the number of incoming connections per unit, Fig. 4b. Moreover, inferring the incoming connections of single units in large sparse networks (N = 1000, \(n_i\) = 10) on conventional hardware (Intel® Core™ i5-2430M) takes 65 ± 26 s per unit. Such results highlight the potential applicability of our approach in combination with parallel computing for revealing interactions in real-world networks, which are often large in size and sparsely connected. Second, \(M_\theta\) depends supralinearly on the noise level η, Fig. 4c. Here, sampling longer time series (more data) improves reconstruction quality. These results indicate that inference is still viable for highly noisy dynamics at the expense of recording longer time series. Third, systematic reconstructions of hypernetwork interactions in exemplary models of phase-coupled oscillators (Supplementary Note 4) suggest that our results are independent of the probability \(p_h\) of having hypernetwork interactions, Fig. 4d, e. This is a consequence of treating pairwise and higher-order interactions equally, by decomposing the coupling into orders of jointly acting units via explicit dependency matrices (Eq. (3)). Thus the approach is insensitive to the appearance of higher-order interactions. Finally, even if some units of the network are not measured (hidden units), existing and non-existing links among measured units may still be reliably inferred, Fig. 4f.
To compute AUC scores, we compare our predictions for the existence and absence of links among the measured units with those actually existing and not existing among those units, making no statement about indirect interactions mediated by hidden units. As more units are hidden, the quality of reconstruction decreases because the hidden units act upon the measured units in an unknown way. Still, sampling longer time series again improves reconstruction quality. Thereby, the model-free approach provides accurate predictions even if only a fraction of the network is recorded.

Proper basis functions and learning curves

Selecting an appropriate class of basis functions to represent the network interactions in system (Eq. (6)) is vital for any such approach. Choosing basis functions that capture the intrinsic nature of interactions (e.g., \(h(x_i)\), \(h(x_i,x_j)\), \(h(x_i,x_j,x_w)\), and so on) by construction yields optimal results. However, picking exactly the correct interaction function requires prior knowledge of the potential functions involved in coupling units of the system under consideration. To overcome this limitation, we aim only at appropriate classes of coupling functions and do not require picking a correct function (that would enable prediction of time series). While the former means finding basis functions of the correct order, the latter means finding a unique set of basis functions capable of fitting the recorded dynamics (see below for further consequences). We remark that a particular chosen basis function constitutes a representative of an entire class of appropriate functions. For instance, the functions indexed a–d in Table 1 are all equally appropriate representatives of the class of pairwise functions \(g_{ij}^i\left( {x_i,x_j} \right)\), Fig. 5.

We investigated the effects of selecting different basis functions. For the example shown in Fig. 5, we studied networks of phase-coupled oscillators and divided the time series in a training set (60% of time points) for inferring interactions and a validation set (40% of time points) for evaluating the predictions; we tracked the evolution of a fitting cost function with respect to the l-th discovered interaction. Specifically, the fitting cost function is defined as

where \(M_s\) is the number of time points in the set and \(\widehat {\dot x}_{i,m}(l) \in {\Bbb R}\) is the prediction by our approach of a computed \(\dot x_{i,m}\) using the inferred interactions up to the l-th discovered interaction.

The functional forms of the cost function \(C_i(l)\), depending on the number l of interactions considered, are either L-shaped, indicating the number of incoming connections at the knee \(l^*\) of the L (basis functions a–d of Table 1, Fig. 5a–d), or not, thereby not revealing any features of the network (basis functions e and f of Table 1, Fig. 5e, f). In addition to revealing the number of incoming connections, the first \(l^*\) interactions actually chosen provide the full information about which units j directly act on unit i. We remark that, for sufficiently short sampling intervals, both the time derivative \(\dot x_{i,m}\) and its estimator \(\widehat {\dot x}_{i,m}\) are obtainable from recorded dynamics data without any model assumption.
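Reading the knee off a learning curve can be automated with a simple heuristic. The sketch below rests on two assumptions that are not taken from the text: the fitting cost is taken to be a mean squared error between computed and predicted rates, and the knee is defined as the first iteration after which the cost drops by less than a fraction `rel_tol` of its initial value. The function names and the example curves are hypothetical.

```python
def fitting_cost(rates, predictions):
    """Mean squared error between computed and predicted rates (assumed form)."""
    return sum((r - p) ** 2 for r, p in zip(rates, predictions)) / len(rates)


def find_knee(costs, rel_tol=0.05):
    """Return the 1-based iteration l at which the learning curve plateaus.

    costs[l - 1] is the cost after the l-th discovered interaction. The knee is
    the first l after which adding one more interaction lowers the cost by less
    than rel_tol times the initial cost. Returns None if the curve never
    plateaus within the recorded iterations.
    """
    for l in range(1, len(costs)):
        if costs[l - 1] - costs[l] < rel_tol * costs[0]:
            return l
    return None


# Hypothetical L-shaped curve: the plateau starts at the fourth interaction.
l_shaped = [1.0, 0.6, 0.3, 0.01, 0.0099, 0.0098]
knee = find_knee(l_shaped)

# Hypothetical curve that keeps decreasing steadily: no plateau is detected.
no_plateau = find_knee([1.0, 0.8, 0.6, 0.4])
```

This heuristic only locates a plateau; it does not by itself decide whether the chosen class of basis functions is appropriate, for which the overall shape of the training and validation curves should be inspected as described above.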

These findings confirm that basis functions that merely capture the essential structure of the interactions, without necessarily representing the full dynamics exactly, are sufficient to reveal network connectivity. As a consequence, reconstruction of direct network interactions is possible without prior knowledge about a system model.

Effects of noise and hidden units

In experimentally relevant biological settings, there may be several uncontrolled factors affecting the recorded time series. For instance, in gene networks, noisy dynamics is simultaneously present at several different levels (e.g., gene-intrinsic, network-intrinsic, and cell-intrinsic)2. Fundamentally, noise complicates the inference process by corrupting measurements of the units' dynamics, thereby masking network interactions. Moreover, one may not have complete access to measure all units in the network. This may induce correlating or decorrelating effects among units, thus promoting the recovery of indirect interactions34,47.

To test the robustness of our approach against the combination of both noise and hidden units, we simulated transients toward steady states under the external influence of Gaussian noise and recorded the dynamics of only a subset of randomly selected units in the network. Results indicate that both noise and hidden units moderately reduce the performance of our approach, Fig. 6. However, the inference quality still increases with M, Fig. 6d, such that larger sampling collections may still reveal interaction topology. Moreover, systematic reconstructions of different sets of recorded units indicate that our predictions generally outperform those extracted from correlations, partial correlations, and transfer entropy.

Robust inference of biological networks

Next we establish the potential of our framework to reconstruct interactions for biological system settings. Specifically, we demonstrate results on two networked model biological systems: the glycolytic oscillator in yeast48 and the circadian clock in Drosophila49. The glycolytic oscillator, one of the classical examples of cellular oscillations, accounts for the main reactions of glycolysis. Here we focus on a model for anaerobic glycolytic oscillations in yeast, containing the influx of glucose and outflux of pyruvate and/or acetaldehyde48 (see Supplementary Note 5 for an extended description). The circadian clock underlies the biological response to the day–night cycle, and the oscillations it exhibits in Drosophila are driven by a negative feedback between two genes and the complex that is formed by the proteins they code for. The model equations for the circadian clock are based on ref. 49 (see Supplementary Note 5).

Employing the above approach of combining a dynamics space representation, expanding in suitable families of basis functions, and solving the resulting linear regression problem by an orthogonal least squares method, we reconstructed the interactions between the different components of the glycolytic oscillator (Fig. 7a, b) and the circadian clock (Fig. 7c, d) from transient dynamics toward their periodic orbits. As for the other system settings, the results confirm that larger numbers M of observations improve the predictions. Moreover, the reconstruction quality of this method again exceeds that resulting from correlations, partial correlations, and transfer entropy.

Fig. 7 Reconstructing biological model systems. a, b Revealing interactions of the glycolytic oscillator in yeast. a Glycolytic oscillator network; red and green links represent network and hypernetwork interactions, respectively. b Quality of reconstruction from transient trajectories as a function of M = S × m with m = 10. c Circadian clock network in Drosophila. d Quality of reconstruction as in b

Discussion

We proposed a model-free framework for inferring direct interaction networks from only the time series of collective nonlinear system dynamics. First, defining the notion of explicit dependency matrices enabled us to systematically decompose each unit's dynamics into pairwise, three-point, and higher-order interactions and at the same time treat present influences from one unit to another on the same footing independently of the interaction order. Second, by capturing the structure (but not necessarily the exact functional form) of the dynamical influences through appropriately chosen basis functions, we posed the reconstruction problem based on nonlinear dynamics as a mathematical regression problem with grouped variables. Given that the reconstructions of the sets of incoming connections to different units of the network are mathematically independent (despite using overlapping recorded dynamical data), the framework is scalable (see Supplementary Note 2) and computationally parallelizable for large networks. Reconstruction is robust across a wide range of dynamical regimes, combined pairwise and hypernetwork interactions, noise, and hidden units.

The main advantage of our framework is its minimal sampling conditions. For instance, in systems during transients to steady states (such as in gene regulation3,5,7) or periodic orbits (such as in glycolytic oscillations48), we reconstructed direct interactions without the need to know the actual strength or actual distributed patterns of perturbations from those states. In contrast to several previous studies1,11,13,50,51, our framework in general does not require applying external driving signals, and if a system is externally driven, e.g., to create transients, these signals need not be controlled; thus our framework might be suitable for systems not easily accessible to controlled driving or to external driving at all. Moreover, collections of very short time series, in practice potentially resulting from different experiments on the same system, are sufficient for reconstruction. In particular, collective dynamics that is transient, stochastically driven, or otherwise sufficiently complex helps reveal interactions, whereas certain stable dynamics on low-dimensional subsets of state space only sample limited regions of the dynamics space and thus in principle do not provide full information about network interactions. Lower-dimensional dynamics may in particular be induced by symmetries or other invariants represented by algebraic conditions, such as z(x) = 0. For instance, in systems evolving in synchronized states, the existence and directionality of interactions are impossible to extract from time series32. Furthermore, the number of independent measurements required for successful reconstruction grows linearly with the local number of interaction partners and sublinearly with the number of units in the network, providing an advantage for reconstructing large systems.
As we illustrated by examples, our framework may be easily combined with learning curves derivable from recorded data only and thus enables researchers to determine the accuracy of inferences when there is no ground truth available.

Previous studies on inferring the direct interaction structure from time series have focused on the reconstruction of networks with known local dynamics and coupling functions11,12,14,15,16,17,18. Such prior knowledge reduces the task to a standard linear algebra problem, where one has to solve linear systems of equations to reveal the network connections, cf. ref. 11 for a comprehensive review. Recent work on low-dimensional dynamical systems20, based on expanding the system dynamics in basis functions, requires the dynamics to admit a sparse representation in the proposed basis. Moreover, a work21 applying an extension of the method described in ref. 20 to models for gene regulation also suggests that such approaches scale supralinearly with the dimensionality of the network for both the number of candidate coupling functions and the time points necessary for successful reconstruction. The theory presented above requires neither prior knowledge of parameters and coupling functions involved in the network dynamics nor that these functions admit a sparse representation in any basis chosen; it is not limited to low-dimensional networked systems, also because the number of necessary time points for successful reconstruction scales sublinearly with network size.

Taken together, this model-free, robust framework can be based on collections of short time series, noisy data, partially inaccessible units, and essentially arbitrary nonlinear dynamics, and may thus enable the reconstruction of direct interaction networks from a new range of time series from coupled dynamical systems for which no model is known.

Methods

Overview

To generate dynamical trajectories displaying transients toward steady states, we simulated dynamical systems employing Michaelis–Menten kinetics (Supplementary Note 4), systems frequently used to model gene regulation3,5,27. To generate dynamical trajectories exhibiting transients to periodic dynamics, we employed two biological model systems, (i) glycolytic oscillations in yeast48 and (ii) the circadian clock in Drosophila49 (Supplementary Note 5), which possess hypernetwork interactions, where two units jointly and directly influence a third such that their interaction function cannot be disentangled into sums of pairwise interactions. To study the effects of non-periodicity, we simulated networks of phase-coupled oscillators (Supplementary Note 4) whose coupling stems from a simple model of weakly coupled populations of biological neurons52,53,54. Finally, to test robustness against chaos and noise, we simulated networks of noisy and asynchronous Rössler oscillators (Supplementary Note 4), prototypical systems for studying chaos55.

In what follows, we provide a brief description of each model (see Supplementary Notes 4 and 5 for further details).

Gene regulatory circuits

To mimic gene regulation, we simulated networks of dynamical systems with Michaelis–Menten kinetics3,27

having \(n_i\) randomly selected incoming connections per node. Here \(J_{ij}\) of \(J \in {\Bbb R}^{N \times N}\) represents a weighted and directed link from unit j to i.
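A minimal simulation sketch of such a network follows. It assumes dynamics of the form \(\dot x_i = -x_i + \sum_j J_{ij}\, x_j/(1 + x_j)\), a common Michaelis–Menten-type choice; the exact form and parameters used in the study are given in Supplementary Note 4 and may differ. The weight range, the exclusion of self-loops, the forward-Euler integrator, and the names `random_network` and `simulate` are illustrative assumptions.

```python
import numpy as np

def random_network(N, n_in, rng):
    """Weighted, directed J with n_in randomly selected incoming links per node."""
    J = np.zeros((N, N))
    for i in range(N):
        # Assumption: no self-loops, so unit i is excluded from its own sources.
        sources = rng.choice(np.delete(np.arange(N), i), size=n_in, replace=False)
        J[i, sources] = rng.uniform(0.5, 1.0, size=n_in)  # assumed weight range
    return J

def simulate(J, x0, dt=0.01, steps=500):
    """Forward-Euler integration of the assumed Michaelis-Menten dynamics."""
    traj = np.empty((steps + 1, len(x0)))
    traj[0] = x0
    for t in range(steps):
        x = traj[t]
        traj[t + 1] = x + dt * (-x + J @ (x / (1.0 + x)))
    return traj

rng = np.random.default_rng(1)
N, n_in = 10, 3                       # hypothetical sizes
J = random_network(N, n_in, rng)
traj = simulate(J, x0=rng.uniform(0.0, 2.0, N))   # one transient toward a steady state
```

Repeating `simulate` from several random initial conditions yields a collection of short transient time series of the kind the framework takes as input.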

Networks and hypernetworks of phase-coupled oscillators

To generate non-periodic dynamics, we simulated a model52 of phase-coupled oscillators with coupling functions having two Fourier modes

with constant natural frequencies \(\omega_i\).
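The following sketch shows one way to simulate such a network. It assumes the form \(\dot \phi_i = \omega_i + \sum_j J_{ij}\, g(\phi_j - \phi_i)\) with a two-mode coupling function \(g(\Delta) = \sin(\Delta - a) + r\sin(2\Delta)\). The parameter values `a` and `r`, the network density, and the function names are illustrative assumptions; the specification used in the study is given in Supplementary Note 4.

```python
import numpy as np

def coupling(delta, a=1.05, r=0.33):
    """Coupling function with two Fourier modes (a and r are assumed values)."""
    return np.sin(delta - a) + r * np.sin(2.0 * delta)

def phase_velocity(phi, omega, J):
    """d(phi_i)/dt = omega_i + sum_j J_ij * g(phi_j - phi_i)."""
    delta = phi[None, :] - phi[:, None]   # delta[i, j] = phi_j - phi_i
    return omega + np.sum(J * coupling(delta), axis=1)

def simulate(phi0, omega, J, dt=0.01, steps=2000):
    """Forward-Euler integration; phases are left unwrapped."""
    traj = np.empty((steps + 1, len(phi0)))
    traj[0] = phi0
    for t in range(steps):
        traj[t + 1] = traj[t] + dt * phase_velocity(traj[t], omega, J)
    return traj

rng = np.random.default_rng(2)
N = 8                                  # hypothetical network size
J = rng.uniform(0.5, 1.0, (N, N)) * (rng.random((N, N)) < 0.3)
np.fill_diagonal(J, 0.0)               # assumption: no self-coupling
omega = rng.uniform(-2.0, 2.0, N)      # constant natural frequencies
traj = simulate(rng.uniform(0.0, 2.0 * np.pi, N), omega, J)
```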

We extended this model to hypernetworks of the form

In contrast to Eq. (9), here we introduce the second-order interaction matrix \(E^i \in {\Bbb R}^{N \times N}\) for all i ∈ {1, 2, …, N}. Specifically, the element \(E_{jk}^i\) quantifies how strongly units j and k jointly and directly influence unit i.
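To make the role of \(E^i\) concrete, the sketch below evaluates a three-point contribution of the assumed form \(\sum_{j,k} E^i_{jk} \sin(\phi_j + \phi_k - 2\phi_i)\) and adds it to a pairwise term. Both the three-point function and the single-mode pairwise coupling used here for brevity are illustrative assumptions, as are the array layout `E[i, j, k]` and the function names.

```python
import numpy as np

def three_point_term(phi, E):
    """sum_{j,k} E[i, j, k] * sin(phi_j + phi_k - 2 * phi_i) for every unit i."""
    arg = phi[None, :, None] + phi[None, None, :] - 2.0 * phi[:, None, None]
    return np.einsum("ijk,ijk->i", E, np.sin(arg))

def phase_velocity(phi, omega, J, E):
    """Natural frequency plus pairwise and three-point contributions."""
    delta = phi[None, :] - phi[:, None]            # delta[i, j] = phi_j - phi_i
    pairwise = np.sum(J * np.sin(delta), axis=1)   # assumed single-mode pairwise coupling
    return omega + pairwise + three_point_term(phi, E)

N = 4                                  # hypothetical network size
phi = np.array([0.0, 0.3, 0.5, 1.0])
omega = np.zeros(N)
J = np.zeros((N, N))
E = np.zeros((N, N, N))
E[0, 1, 2] = 0.7                       # units 1 and 2 jointly influence unit 0
velocity = phase_velocity(phi, omega, J, E)
```

In this example only unit 0 receives input, equal to 0.7 sin(0.3 + 0.5). The dependence on the sum of the two source phases is what prevents the term from being written as a sum of separate pairwise contributions from units 1 and 2.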

Networks of Rössler oscillators

To generate chaotic dynamics, we simulated networks of coupled Rössler oscillators55. The dynamics of each oscillator \({\boldsymbol{x}}_i = \left[ {x_i^1,x_i^2,x_i^3} \right] \in {\Bbb R}^3\) is set by three differential equations

where \(\xi _i^k\) with k ∈ {1, 2, 3} represent external noisy signals acting on the unit’s components.
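A minimal sketch of such a simulation is given below. Each unit follows the standard Rössler equations; the coupling through the first component via \(\sum_j J_{ij}\sin(x_j^1)\), the parameter values a = b = 0.2 and c = 5.7, the modelling of \(\xi_i^k\) as independent Gaussian white noise, and the Euler-Maruyama integrator are assumptions made for illustration. The specification used in the study is given in Supplementary Note 4.

```python
import numpy as np

def rossler_field(X, J, a=0.2, b=0.2, c=5.7):
    """Deterministic part of dX/dt; X has shape (N, 3), one row per oscillator."""
    x1, x2, x3 = X[:, 0], X[:, 1], X[:, 2]
    dX = np.empty_like(X)
    dX[:, 0] = -x2 - x3 + J @ np.sin(x1)   # assumed coupling via the first component
    dX[:, 1] = x1 + a * x2
    dX[:, 2] = b + x3 * (x1 - c)
    return dX

def simulate(X0, J, noise=0.01, dt=0.002, steps=5000, seed=0):
    """Euler-Maruyama integration with additive Gaussian noise on every component."""
    rng = np.random.default_rng(seed)
    traj = np.empty((steps + 1,) + X0.shape)
    traj[0] = X0
    for t in range(steps):
        xi = rng.normal(size=X0.shape)
        traj[t + 1] = traj[t] + dt * rossler_field(traj[t], J) + noise * np.sqrt(dt) * xi
    return traj

rng = np.random.default_rng(3)
N = 5                                   # hypothetical network size
J = rng.uniform(0.1, 0.3, (N, N)) * (rng.random((N, N)) < 0.4)
np.fill_diagonal(J, 0.0)                # assumption: no self-coupling
traj = simulate(rng.uniform(-1.0, 1.0, (N, 3)), J)
```

The small step size is chosen because forward-Euler schemes are only conditionally stable; a higher-order integrator would be preferable for long runs.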

Glycolytic oscillator model

To test performance on biological model systems, we first simulated the glycolytic oscillator defined as48

where \(S_1\) represents the concentration of glucose, \(S_2\) that of the glyceraldehyde-3-phosphate and dihydroxyacetone phosphate pool, \(S_3\) that of 1,3-bisphosphoglycerate, \(S_4\) that of the cytosolic pyruvate and acetaldehyde pool, \(S_5\) that of NADH, \(S_6\) that of ATP, and \(S_7\) that of the extracellular pyruvate and acetaldehyde pool.

Circadian clock

A second biological model system we have studied is the circadian clock, underlying the response to the day–night cycle. It is defined as49:

where \(M_T\) and \(M_P\) are tim and per mRNAs, respectively. \(T_0\), \(T_1\), and \(T_2\) are forms of the TIM protein, \(P_0\), \(P_1\), and \(P_2\) are forms of the PER protein, and \(C\) and \(C_N\) are forms of the PER–TIM complex.

Data availability

All data reported in this study are available from the corresponding authors upon request. Example codes for simulating and reconstructing network dynamical systems may be found at https://github.com/networkinference/ARNI.

References

Gardner, T. S., di Bernardo, D., Lorenz, D. & Collins, J. J. Inferring genetic networks and identifying compound mode of action via expression profiling. Science 301, 102–105 (2003).

Kaern, M., Elston, T. C., Blake, W. J. & Collins, J. J. Stochasticity in gene expression: from theories to phenotypes. Nat. Rev. Genet. 6, 451–464 (2005).

Karlebach, G. & Shamir, R. Modelling and analysis of gene regulatory networks. Nat. Rev. Mol. Cell Biol. 9, 770–780 (2008).

Fujita, A. et al. Modeling nonlinear gene regulatory networks from time series gene expression data. J. Bioinform. Comput. Biol. 6, 961–979 (2008).

Marbach, D. et al. Revealing strengths and weaknesses of methods for gene network inference. Proc. Natl. Acad. Sci. USA 107, 6286–6291 (2010).

Bar-Joseph, Z., Gitter, A. & Simon, I. Studying and modelling dynamic biological processes using time-series gene expression data. Nat. Rev. Genet. 13, 552–564 (2012).

Marbach, D. et al. Wisdom of crowds for robust gene network inference. Nat. Methods 9, 796–804 (2012).

Ronellenfitsch, H., Lasser, J., Daly, D. C. & Katifori, E. Topological phenotypes constitute a new dimension in the phenotypic space of leaf venation networks. PLoS Comput. Biol. 11, e1004680 (2015).

Kirst, C., Timme, M. & Battaglia, D. Dynamic information routing in complex networks. Nat. Commun. 7, 11061 (2016).

Cornelius, S. P., Kath, W. L. & Motter, A. E. Realistic control of network dynamics. Nat. Commun. 4, 1942 (2013).

Timme, M. & Casadiego, J. Revealing networks from dynamics: an introduction. J. Phys. A Math. Theor. 47, 343001 (2014).

Yu, D., Righero, M. & Kocarev, L. Estimating topology of networks. Phys. Rev. Lett. 97, 188701 (2006).

Timme, M. Revealing network connectivity from response dynamics. Phys. Rev. Lett. 98, 224101 (2007).

Shandilya, S. G. & Timme, M. Inferring network topology from complex dynamics. New J. Phys. 13, 013004 (2011).

Wang, W.-X., Yang, R., Lai, Y.-C., Kovanis, V. & Harrison, M. A. F. Time-series based prediction of complex oscillator networks via compressive sensing. Europhys. Lett. 94, 48006 (2011).

Wang, W. X., Yang, R., Lai, Y. C., Kovanis, V. & Grebogi, C. Predicting catastrophes in nonlinear dynamical systems by compressive sensing. Phys. Rev. Lett. 106, 154101 (2011).

Han, X., Shen, Z., Wang, W. X. & Di, Z. Robust reconstruction of complex networks from sparse data. Phys. Rev. Lett. 114, 028701 (2015).

Wang, W.-X., Lai, Y.-C. & Grebogi, C. Data based identification and prediction of nonlinear and complex dynamical systems. Phys. Rep. 644, 1–76 (2016).

Liu, Y.-Y. & Barabási, A.-L. Control principles of complex systems. Rev. Mod. Phys. 88, 035006 (2016).

Brunton, S. L., Proctor, J. L. & Kutz, J. N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. USA 113, 3932–3937 (2016).

Mangan, N. M., Brunton, S. L., Proctor, J. L. & Kutz, J. N. Inferring biological networks by sparse identification of nonlinear dynamics. IEEE Trans. Mol. Biol. Multiscale Commun. 2, 52–63 (2016).

Nitzan, M., Casadiego, J. & Timme, M. Revealing physical interaction networks from statistics of collective dynamics. Sci. Adv. 3, e1600396 (2017).

Granger, C. W. J. Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37, 424–438 (1969).

Ren, J., Wang, W. X., Li, B. & Lai, Y. C. Noise bridges dynamical correlation and topology in coupled oscillator networks. Phys. Rev. Lett. 104, 058701 (2010).

Quinn, C. J., Coleman, T. P., Kiyavash, N. & Hatsopoulos, N. G. Estimating the directed information to infer causal relationships in ensemble neural spike train recordings. J. Comput. Neurosci. 30, 17–44 (2011).

Friston, K. J. Functional and effective connectivity: a review. Brain Connect. 1, 13–36 (2011).

Barzel, B. & Barabási, A.-L. Network link prediction by global silencing of indirect correlations. Nat. Biotechnol. 31, 720–725 (2013).

Feizi, S., Marbach, D., Médard, M. & Kellis, M. Network deconvolution as a general method to distinguish direct dependencies in networks. Nat. Biotechnol. 31, 726–733 (2013).

Guo, X., Zhang, Y., Hu, W., Tan, H. & Wang, X. Inferring nonlinear gene regulatory networks from gene expression data based on distance correlation. PLoS ONE 9, e87446 (2014).

Tirabassi, G., Sevilla-Escoboza, R., Buldú, J. M. & Masoller, C. Inferring the connectivity of coupled oscillators from time-series statistical similarity analysis. Sci. Rep. 5, 10829 (2015).

Ching, E. S. C. & Tam, H. C. Reconstructing links in directed networks from noisy dynamics. Phys. Rev. E 95, 010301 (2017).

Paluš, M. & Vejmelka, M. Directionality of coupling from bivariate time series: How to avoid false causalities and missed connections. Phys. Rev. E 75, 056211 (2007).

Nawrath, J. et al. Distinguishing direct from indirect interactions in oscillatory networks with multiple time scales. Phys. Rev. Lett. 104, 038701 (2010).

Zou, Y., Romano, M. C., Thiel, M., Marwan, N. & Kurths, J. Inferring indirect coupling by means of recurrences. Int. J. Bifurc. Chaos 21, 1099–1111 (2011).

Lin, W., Wang, Y., Ying, H., Lai, Y. C. & Wang, X. Consistency between functional and structural networks of coupled nonlinear oscillators. Phys. Rev. E 92, 012912 (2015).

Kaplan, U., Türkay, M., Biegler, L. & Karasözen, B. Modeling and simulation of metabolic networks for estimation of biomass accumulation parameters. Discret. Appl. Math. 157, 2483–2493 (2009).

Bekey, G. A. System Identification-An Introduction and a Survey 15 (Springer London, London, 1970).

Hastie, T., Tibshirani, R. & Friedman, J. The Elements of Statistical Learning, Springer Series in Statistics. (Springer New York, NY, 2009).

Eldar, Y. C. & Mishali, M. Robust recovery of signals from a structured union of subspaces. IEEE Trans. Inf. Theory 55, 5302–5316 (2009).

Majumdar, A. & Ward, R. K. Fast group sparse classification. Can. J. Electr. Comput. Eng. 34, 136–144 (2009).

Eldar, Y. C., Kuppinger, P. & Bölcskei, H. Block-sparse signals: uncertainty relations and efficient recovery. IEEE Trans. Signal Process. 58, 3042–3054 (2010).

Duarte, M. F. & Eldar, Y. C. Structured compressed sensing: from theory to applications. IEEE Trans. Signal Process. 59, 4053–4085 (2011).

Judd, K. & Nakamura, T. Degeneracy of time series models: the best model is not always the correct model. Chaos 16, 033105 (2006).

Lünsmann, B. J., Kirst, C. & Timme, M. Transition to reconstructibility in weakly coupled networks. PLoS ONE 12, 1–12 (2017).

Opgen-Rhein, R. & Strimmer, K. From correlation to causation networks: a simple approximate learning algorithm and its application to high-dimensional plant gene expression data. BMC Syst. Biol. 1, 37 (2007).

Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 85, 461–464 (2000).

Hirata, Y. & Aihara, K. Identifying hidden common causes from bivariate time series: a method using recurrence plots. Phys. Rev. E 81, 016203 (2010).

Wolf, J. & Heinrich, R. Effect of cellular interaction on glycolytic oscillations in yeast: a theoretical investigation. Biochem. J. 345, 321–334 (2000).

Leloup, J.-C. & Goldbeter, A. Chaos and birhythmicity in a model for circadian oscillations of the PER and TIM proteins in Drosophila. J. Theor. Biol. 198, 445–459 (1999).

Yeung, M. K., Tegner, J. & Collins, J. J. Reverse engineering gene networks using singular value decomposition and robust regression. Proc. Natl. Acad. Sci. USA 99, 6163–6168 (2002).

Yu, D. & Parlitz, U. Estimating parameters by autosynchronization with dynamics restrictions. Phys. Rev. E 77, 066221 (2008).

Hansel, D., Mato, G. & Meunier, C. Clustering and slow switching in globally coupled phase oscillators. Phys. Rev. E 48, 3470–3477 (1993).

Hansel, D., Mato, G. & Meunier, C. Phase dynamics for weakly coupled Hodgkin-Huxley neurons. Europhys. Lett. 23, 367–372 (1993).

Izhikevich, E. M. Dynamical Systems in Neuroscience: The Geometry of Excitability and Bursting. (MIT Press, Cambridge, 2007).

Rössler, O. E. An equation for continuous chaos. Phys. Lett. A 57, 397–398 (1976).

Buhmann, M. D. Radial Basis Functions: Theory and Implementations (Cambridge University Press, Cambridge, 2003).

Acknowledgements

We thank Fabio Schittler Neves, Benedict Lünsmann and Fenna Müller for useful discussions. M.T. thanks Albert Laszlo Barabasi for hospitality and useful discussions during a visit in March 2016. We acknowledge support by the German Research Foundation and the Open Access Publication Funds of the TU Dresden. This work is supported through the German Science Foundation (DFG) by a grant toward the Center of Excellence “Center for Advancing Electronics Dresden” (cfaed). We also gratefully acknowledge support from the Federal Ministry of Education and Research (BMBF Grant Nos. 03SF0472E and 03SF0472F) and the Max Planck Society.

Author information

Contributions

All authors conceived the research and contributed materials and analysis tools. J.C. and M.T. developed the theory and algorithms and designed the research. All authors provided model systems and the quality measures. J.C., M.N., and S.H. carried out the numerical experiments. All authors analyzed the data, discussed and interpreted the results, and wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Casadiego, J., Nitzan, M., Hallerberg, S. et al. Model-free inference of direct network interactions from nonlinear collective dynamics. Nat Commun 8, 2192 (2017). https://doi.org/10.1038/s41467-017-02288-4

DOI: https://doi.org/10.1038/s41467-017-02288-4
