Abstract

Today, breeders perform genomic-assisted breeding to improve more than one trait. However, frequently there are several traits under study at one time, and the implementation of current genomic multiple-trait and multiple-environment models is challenging. Consequently, we propose a four-stage analysis for multiple-trait data in this paper. In the first stage, we perform singular value decomposition (SVD) on the matrix of trait responses; in the second stage, we perform multiple trait analysis on transformed responses. In stages three and four, we collect the results and transform them back to obtain the parameter estimates and the predictions on the original scale of the traits. The results of the proposed method are compared, in terms of parameter estimation and prediction accuracy, with the results of the Bayesian multiple-trait and multiple-environment model (BMTME) previously described in the literature. We found that the proposed method based on SVD produced similar results, in terms of parameter estimation and prediction accuracy, to those obtained with the BMTME model. Moreover, the proposed multiple-trait method is attractive because it can be implemented using current single-trait genomic prediction software, which yields a more efficient algorithm in terms of computation.

Introduction

Breeders often want to improve more than one trait simultaneously in their breeding programs and thus conduct various experiments. For example, Teixeira et al. (2016) reported that a breeding program in Brazil measured 41 pig traits obtained by crossing 345 F2 pig populations of Brazilian Piau × commercial pigs. To analyze this type of experiment, breeders implement one of the following two approaches: (i) they perform a univariate analysis (one trait at a time), thereby ignoring the correlation between traits and forgoing any improvement in parameter estimates or prediction accuracy that this correlation could provide, or (ii) they perform a multiple-trait analysis, which takes into account the correlation between traits but may also significantly increase the computing intensity.

Implementing the first approach is valid when the correlation between traits is low or close to zero, but it is less desirable when the correlation between traits is moderate to strong. However, implementing the second approach is sometimes challenging, for example, when there are a large number of traits; under these circumstances, breeders opt for the first approach. Furthermore, implementing the second approach is also challenging because early breeding programs start with at least 1000 lines that are evaluated in multiple environments, which complicates the analysis, as including genotype × environment (G × E) interaction increases the dimensionality of the data considerably (Chiquet et al. 2013).

As mentioned above, multiple-trait analysis improves parameter estimates and prediction accuracy. With regard to parameter estimates, Schulthess et al. (2017) found that multiple-trait analysis improves parameter estimates, while Calus and Veerkamp (2011) found modest improvement using multiple-trait analysis compared to separate-trait analysis for prediction accuracy; these authors also showed that the performance of multiple-trait analysis depends considerably on whether only some traits are missing in only some individuals or in all individuals. Jia and Jannink (2012) also showed evidence favoring multiple-trait analysis compared to single-trait analysis, finding that the genetic correlation between traits is the basis for the benefit of multiple-trait analysis. Jiang et al. (2015) later arrived at the same conclusion in favor of multiple-trait analysis. Montesinos-López et al. (2016) also found modest improvement in the prediction accuracy of multiple-trait analysis when comparing correlated traits to the analysis that assumes null correlation between traits. Along these lines, He et al. (2016) concluded that modeling multiple traits could improve the prediction accuracy for correlated traits in comparison to univariate-trait analysis. Schulthess et al. (2017) also found that multiple-trait analysis is better in terms of prediction accuracy than separate-trait analysis, pointing out that the multiple-trait model is better when the degree of relatedness between genotypes is weaker.

There is evidence suggesting that even when traits are correlated, genomic-enabled prediction accuracy is not improved, as stated by Montesinos-López et al. (2017a) and as shown by Märtens et al. (2016), who compared multiple-trait analysis to single-trait analysis and found no difference in terms of prediction accuracy. This issue was also documented by Oliveira and Teixeira-Pinto (2015), who showed that, in the multivariate linear regression case (when the covariates in each equation are the same), the multivariate model gives the same result (both point estimates and standard errors) as fitting individual regressions with ordinary least squares for each outcome, regardless of the level of correlation between the errors.
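The equivalence for point estimates can be checked numerically. The following is a minimal NumPy sketch, not taken from the cited work: the data, dimensions, and error covariance are hypothetical, and the joint model is fitted by generalized least squares on the stacked system with error covariance Σ ⊗ I.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, L = 50, 3, 2                       # observations, covariates, outcomes (hypothetical)

# Same design matrix for every outcome, as in the multivariate regression case.
X = np.column_stack([np.ones(n), rng.normal(size=(n, p - 1))])
Sigma = np.array([[1.0, 0.9],
                  [0.9, 1.0]])            # strongly correlated errors
E = rng.normal(size=(n, L)) @ np.linalg.cholesky(Sigma).T
B_true = np.array([[1.0, -2.0], [0.5, 0.0], [0.0, 3.0]])
Y = X @ B_true + E

# Separate ordinary least squares fit for each outcome (column of Y).
B_ols = np.column_stack(
    [np.linalg.lstsq(X, Y[:, l], rcond=None)[0] for l in range(L)]
)

# Joint GLS fit on vec(Y) = (I_L kron X) vec(B) + vec(E),
# where Cov(vec(E)) = Sigma kron I_n.
X_big = np.kron(np.eye(L), X)
W = np.kron(np.linalg.inv(Sigma), np.eye(n))    # inverse error covariance
y_vec = Y.reshape(-1, order="F")                 # column-stacking vec(Y)
b_gls = np.linalg.solve(X_big.T @ W @ X_big, X_big.T @ W @ y_vec)
B_gls = b_gls.reshape(p, L, order="F")

max_abs_diff = np.max(np.abs(B_ols - B_gls))     # agreement up to rounding error
```

The sketch covers only the coefficient estimates; it does not address standard errors or models in which the covariates differ between equations.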

As a breeding tool, genomic selection (GS) uses all available molecular markers (Meuwissen et al. 2001) to design genomic-assisted breeding programs and develop new marker-based models for genetic evaluation. GS provides opportunities to obtain higher rates of genetic gain than traditional phenotypic selection in less time and at a reasonable cost. For example, in animal breeding, GS allows animal scientists to select young animals early in life that do not have records, greatly reducing evaluation costs and generation intervals when compared to the traditional progeny test schemes (Schaeffer 2006; Boichard et al. 2016). In general, for traits that have a long generation time or are difficult to evaluate (e.g., insect resistance, bread-making quality, and others), GS is cheaper and/or easier than traditional phenotypic selection because more candidates can be characterized for a given cost, thus enabling increased selection intensity. Hence, GS has a number of merits over traditional selection because it reduces selection duration and increases selection accuracy, intensity, efficiency, and gains per unit of time. In addition, it saves time and financial investment, along with producing reliable results (Rutkoski et al. 2011; Desta and Ortiz 2014). This enables faster development of improved crop varieties to cope with the challenges of climate change and the decrease in arable land (Bhat et al. 2016).

Therefore, we propose an alternative method for analyzing multiple-trait and multi-environment data—one that takes into account the correlation between traits. This model will be useful for analyzing multiple-trait and multi-environment data because the linear predictor may include the following interaction terms: environment × trait, genotype × trait, and three-way interaction (environment × genotype × trait), assuming an unstructured variance–covariance matrix in the genetic and residual covariance matrices of traits and an identity matrix for the correlation matrix between environments.

The proposed method consists of four steps. In the first step, we transform the original matrix of response variables into a matrix of response variables of the same dimension but between uncorrelated transformed traits using singular value decomposition (SVD), which is equivalent to using principal component analyses (PCA). This first step is performed ignoring all the information of the design effect and other covariates. In the second step, given that the traits are not correlated, we apply a single-trait analysis where we can take into account the design effect, along with the effects of genotypes, environments, genotype × environment interaction, and other covariates, if they are available. In the third step, we collect and put together all the parameter estimates of the single analysis, which are transformed in the fourth step to obtain the parameter estimates and/or predictions for the traits in the original scale of the multiple-trait and multiple-environment data. Our approach has the same goal as the canonical transformation method proposed by Thompson (1977), which involves using special matrices to transform the observations on several correlated traits into new variables that are uncorrelated to each other, which means that these new variables can be analyzed as single-trait analysis, but the results (predictions) are transformed back to the original scale of the observations (Mrode 2014). However, our method is different to the Thompson (1977) method since our approach directly decorrelates the matrix of response variables with the SVD, while the Thompson (1977) method transforms the variance of the response var(Y) = Σt + R, such that QRQT = I and QΣtQT = W, where I is an identity matrix and W is a diagonal matrix, of course assuming that Σt and R are positive definite matrices and that there is a matrix Q. More details of this method can be found in chapter 6 of the book by Mrode (2014). 
A detailed example of the Thompson (1977) method is available in Appendix E, section E.1, in the book by Mrode (2014). The Thompson (1977) method has the inconvenience that if the (co)variances R and Σt are unknown, the transformations mentioned above need to be applied at each iteration of the Henderson mixed model equations. For this reason, its implementation is not straightforward using current univariate software.

The advantage of the proposed alternative method is that the analysis can be performed directly using the current software for univariate genomic selection and prediction. However, it is important to point out that when the distribution of the traits has considerably departed from normality, the proposed method does not guarantee independence between the transformed traits, a key assumption for the successful implementation of the proposed model, since for non-normal traits lack of correlation does not imply independence. Also, the proposed method can be implemented to perform the analysis even when there are some missing traits per individual and some individuals are missing in some environments, as long as the level of unbalance is not strong.

Materials and methods

Statistical models

Multiple-trait multiple-environment model

Since genotype × environment interaction is of paramount importance in plant breeding, the following univariate linear mixed model is usually used for each trait:

\(y_{ij} = E_i + g_j + gE_{ij} + e_{ij}\quad (1)\)

where yij represents the normal response from the jth line in the ith environment (i = 1, 2, …, I, j = 1, 2, …, J). Ei represents the effect of the ith environment and is assumed as a fixed effect, gj represents the random genomic effect of the jth line, with g = (g1, …, gJ)T ~ \(N\left( {{\mathbf{0}},\sigma _1^2{\kern 1pt} {\boldsymbol{G}}_g} \right)\), where \(\sigma _1^2\) denotes the genomic variance and Gg, of order J × J, is the genomic relationship matrix (GRM), calculated using the Van Raden (2008) method as Gg = \(\frac{{{\boldsymbol{ZZ}}^T}}{p}\), where p denotes the number of markers and Z the matrix of markers of order J × p. The Gg covariance matrix is constructed using the observed similarity at the genomic level between lines, rather than the expected similarity based on pedigree. gEij is the random interaction term between the genomic effect of the jth line and the ith environment, where gE = (gE11, …, gEIJ)T ~ \(N\left( {{\mathbf{0}},\sigma _2^2{\kern 1pt} {\bf{I}}_I \otimes {\boldsymbol{G}}_g} \right)\), \(\sigma _2^2\) denotes the variance of the genotype × environment interaction term, and eij is a random error term associated with the jth line in the ith environment distributed as N(0, σ2), with σ2 denoting the residual variance. This model is usually used for each of the l = 1, …, L traits, where L denotes the number of traits under study. Next we present the multivariate version of model (1); to do so, we first provide the notation for the matrix variate normal distribution, which is a generalization of the multivariate normal distribution. 
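As an illustration of the GRM formula Gg = ZZT/p, the following minimal NumPy sketch computes the matrix from a small marker matrix. The marker values are simulated and hypothetical, and the column-centering step is an assumption, since the text does not state how the markers are coded or scaled.

```python
import numpy as np

def genomic_relationship_matrix(Z):
    """Return G_g = Z Z^T / p for a J x p marker matrix Z."""
    Z = np.asarray(Z, dtype=float)
    p = Z.shape[1]                      # number of markers
    return Z @ Z.T / p

rng = np.random.default_rng(1)
J, p = 5, 100                           # hypothetical numbers of lines and markers
markers = rng.integers(0, 3, size=(J, p)).astype(float)   # hypothetical 0/1/2 codes

# Assumption: centre each marker column before forming G_g.
Z = markers - markers.mean(axis=0)
G_g = genomic_relationship_matrix(Z)    # J x J, symmetric, positive semi-definite
```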
In particular, let the (n × p) random matrix, M, be distributed as matrix variate normal distribution denoted as M ~ NMn×p(Η, Ω, Σ), if and only if, the (np × 1) random vector vec(M) is distributed as multivariate normal denoted as Nnp(vec(Η), Σ ⊗ Ω); therefore, NMn×p denotes the (n × p) dimensional matrix variate normal distribution, Η is a (n × p) location matrix, Σ is a (p × p) first covariance matrix, and Ω is a (n × n) second covariance matrix (Srivastava and Khatri 1979). vec(.) and ⊗ are the standard vector operator and Kronecker product, respectively.
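The link between the matrix variate normal distribution and the Kronecker-structured covariance of vec(M) can be sketched in NumPy as follows. This is an illustrative construction under stated assumptions: the function name and the numerical values are hypothetical, and a draw is formed as M = Η + AEBT, with Ω = AAT, Σ = BBT, and E a matrix of independent standard normal entries.

```python
import numpy as np

def sample_matrix_normal(H, Omega, Sigma, rng):
    """Draw M ~ NM(H, Omega, Sigma): Omega (n x n) acts on rows, Sigma (p x p) on columns."""
    A = np.linalg.cholesky(Omega)       # Omega = A A^T
    B = np.linalg.cholesky(Sigma)       # Sigma = B B^T
    E = rng.standard_normal(H.shape)    # independent N(0, 1) entries
    return H + A @ E @ B.T

def vec(M):
    """Stack the columns of M into one vector."""
    return M.reshape(-1, order="F")

n, p = 3, 2
Omega = 0.3 * np.eye(n) + 0.7 * np.ones((n, n))      # hypothetical row covariance
Sigma = np.array([[0.81, 0.18],
                  [0.18, 0.64]])                      # hypothetical column covariance
H = np.zeros((n, p))
rng = np.random.default_rng(2)
M = sample_matrix_normal(H, Omega, Sigma, rng)

# vec(A E B^T) = (B kron A) vec(E), so Cov(vec(M)) = (B kron A)(B kron A)^T = Sigma kron Omega.
A = np.linalg.cholesky(Omega)
B = np.linalg.cholesky(Sigma)
T = np.kron(B, A)
cov_vec = T @ T.T
E = rng.standard_normal((n, p))
```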

To account for the correlation between traits, all of the L traits given in Eq. (1) are jointly modeled in a whole multiple-trait, multiple-environment mixed model as follows:

\({\boldsymbol{Y}} = {\boldsymbol{X\beta }} + {\boldsymbol{Z}}_1{\boldsymbol{b}}_1 + {\boldsymbol{Z}}_2{\boldsymbol{b}}_2 + {\boldsymbol{e}}\quad (2)\)

where Y is of order n × L, X is of order n × I, β is of order I × L, Z1 is of order n × J, b1 is of order J × L and contains the first interaction term genotype × trait, Z2 is of order n × IJ, b2 is of order IJ × L and contains the second interaction term genotype × environment × trait, and e is of order n × L, with b1 distributed under matrix variate normal distribution as NMJ×L (0, Gg, Σt), where Σt is the unstructured genetic (co)variance matrix of traits of order L × L, b2 ~ NMJI×L(0, ΣE ⊗ Gg, Σt), where ΣE is an unstructured (co)variance matrix of order I × I, and e ~ NMn×L(0, In, Re), where Re is the unstructured residual (co)variance matrix of traits of order L × L, and Gg is the GRM described above. The Bayesian multiple-trait and multiple-environment (BMTME) model resulting from Eq. (2) was implemented by Montesinos-López et al. (2016).

Here, we present a modified version of the original BMTME model proposed by Montesinos-López et al. (2016); the next section gives the Gibbs sampler for this modified BMTME model.

Gibbs sampler for the BMTME model

Outlined below is the Gibbs sampler for estimating the parameters of interest in the BMTME model. While the order is somewhat arbitrary, we suggest the following:

Step 1. Simulate β according to the normal distribution given in Supplementary material (A.1).

Step 2. Simulate b1 according to the normal distribution given in Supplementary material (A.2).

Step 3. Simulate b2 according to the normal distribution given in Supplementary material (A.3).

Step 4. Simulate Σt according to the inverse Wishart (IW) distribution given in Supplementary material (A.4).

Step 5. Simulate ΣE according to the IW distribution given in Supplementary material (A.5).

Step 6. Simulate Re according to the IW distribution given in Supplementary material (A.6).

Step 7. Return to step 1 or terminate when chain length is adequate to meet convergence diagnostics.

The main differences between this Gibbs sampler and that given by Montesinos-López et al. (2016) are: (i) that this modified Gibbs sampler assumes an unstructured variance–covariance matrix for environments, while the original BMTME model assumes a diagonal variance–covariance matrix for environments, and (ii) that the original BMTME model used non-informative priors based on the Half-t distribution of each standard deviation term and uniform priors on each correlation of the covariance matrices of traits (genetic and residual). The modified BMTME model presented here assumes weak informative priors not based on the Half-t distribution of each standard deviation (details of the hyperparameters of the BMTME model are given in Supplementary material). The hyperparameters for the BMTME model were set similar to those used in the BGLR software (Pérez-Rodríguez and de los Campos 2014). The modified full conditional of this modified BMTME model, which supports the Gibbs sampler given above, is provided in Supplementary material. Next, we will describe the parameterization of the model given in Eq. (2) to develop the proposed alternative method that is based on SVD.

BMTME_Thompson version

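Following Thompson (1977) and Ducrocq and Chapuis (1993), we define Q as a matrix of order L × L such that QΣtQT = Dt and QReQT = It, where Dt is a diagonal matrix and It is the identity matrix, both of order L × L. The Q matrix always exists and can be calculated as Q = LTP, where \({\boldsymbol{P}} = {\bf{U}}_e{\boldsymbol{B}}_e^{ - 0.5}{\boldsymbol{U}}_e^T\), where Ue and Be are obtained by applying the SVD to \({\bf{R}}_e = {\bf{U}}_e{\bf{B}}_e{\boldsymbol{U}}_e^T\), while LT is obtained also by applying the SVD to PΣtPT = LDtLT. Then, by applying a linear transformation to Eq. (2), we obtain:

\({\boldsymbol{Y}}^\& = {\boldsymbol{X\beta }}^\& + {\boldsymbol{Z}}_1{\boldsymbol{b}}_1^\& + {\boldsymbol{Z}}_2{\boldsymbol{b}}_2^\& + {\boldsymbol{e}}^\& \quad (3)\)

where \({\boldsymbol{Y}}^\& = {\boldsymbol{Y}}{\boldsymbol{Q}}^T\), \({\boldsymbol{\beta }}^\& = {\boldsymbol{\beta }}{\boldsymbol{Q}}^T\), \({\boldsymbol{b}}_1^\& = {\boldsymbol{b}}_1{\boldsymbol{Q}}^T\), \({\boldsymbol{b}}_2^\& = {\boldsymbol{b}}_2{\boldsymbol{Q}}^T\), and \({\boldsymbol{e}}^\& = {\boldsymbol{e}}{\boldsymbol{Q}}^T\).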
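The construction of Q can be sketched in NumPy as follows. This is an illustrative sketch rather than the implementation used in this study: the function names are hypothetical, the covariance values are borrowed from the first simulated data set purely as example inputs, and `numpy.linalg.eigh` is used in place of the SVD because the two decompositions coincide for symmetric positive definite matrices.

```python
import numpy as np

def canonical_transform(Sigma_t, R_e):
    """Return Q and the diagonal of D_t with Q R_e Q^T = I and Q Sigma_t Q^T = D_t."""
    B_e, U_e = np.linalg.eigh(R_e)                   # R_e = U_e B_e U_e^T
    P = U_e @ np.diag(B_e ** -0.5) @ U_e.T           # P = U_e B_e^{-0.5} U_e^T
    d_t, L_mat = np.linalg.eigh(P @ Sigma_t @ P.T)   # P Sigma_t P^T = L D_t L^T
    Q = L_mat.T @ P                                  # Q = L^T P
    return Q, d_t

def cov_from(sd, corr):
    """Build a covariance matrix from standard deviations and a correlation matrix."""
    D_half = np.diag(sd)
    return D_half @ corr @ D_half

R = 0.75 * np.eye(3) + 0.25 * np.ones((3, 3))        # example correlation matrix
Sigma_t = cov_from([0.9, 0.8, 0.9], R)               # example genetic covariance of traits
R_e = cov_from([0.6, 0.42, 0.33], R)                 # example residual covariance of traits

Q, d_t = canonical_transform(Sigma_t, R_e)
whitened_residual = Q @ R_e @ Q.T                    # should be the identity matrix
diagonal_genetic = Q @ Sigma_t @ Q.T                 # should be diag(d_t)
```

Both covariance matrices must be positive definite for the inverse square root in P to exist.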

Then note that:

Since Dt and It are diagonal matrices of order L × L, the full conditional distributions for the transformed random effects \({\boldsymbol{b}}_1^\&\) and \({\boldsymbol{b}}_2^\&\) of the BMTME model are:

Full conditional for \(\mathrm{vec}\left( {{\boldsymbol{b}}_1^\& } \right)\)

where \(\widetilde {\bf{\Sigma }}_{{\boldsymbol{b}}_1^\& }\) = \(( {{\bf{D}}_t^{ - 1} \otimes {\boldsymbol{G}}_g^{ - 1} + {\boldsymbol{I}}_L \otimes {\boldsymbol{Z}}_1^T{\boldsymbol{Z}}_1} )^{ - 1}\) and \(\mathrm{vec}( {\widetilde {\boldsymbol{b}}_1^\& } )\) = \(\widetilde {\bf{\Sigma }}_{{\boldsymbol{b}}_1^\& }\left( {{\boldsymbol{I}}_L \otimes {\boldsymbol{Z}}_1^T} \right)\left[ {vec\left( {\boldsymbol{Y}^\& } \right) - vec\left( {{\boldsymbol{X\beta }}^\& } \right) - vec({\boldsymbol{Z}}_2{\boldsymbol{b}}_2^\& )} \right]\).

Full conditional for \(vec\left( {{\boldsymbol{b}}_2^\& } \right)\)

where \(\widetilde {\bf{\Sigma }}_{{\boldsymbol{b}}_2^\& }\) = \(( {{\bf{D}}_t^{ - 1} \otimes {\mathbf{\Sigma }}_E^{ - 1} \otimes {\boldsymbol{G}}_g^{ - 1} + {\boldsymbol{I}}_L \otimes {\boldsymbol{Z}}_2^T{\boldsymbol{Z}}_2} )^{ - 1}\) and \(vec\left( {\widetilde {\boldsymbol{b}}_2^\& } \right)\) = \(\widetilde {\bf{\Sigma }}_{{\boldsymbol{b}}_2^\& }\left( {{\boldsymbol{I}}_L \otimes {\boldsymbol{Z}}_2^T} \right)\left\{ {\mathrm{vec}\left( {\boldsymbol{Y}^\& } \right) - \mathrm{vec}\left( {{\boldsymbol{X\beta }}^\& } \right) - \mathrm{vec}\left( {{\boldsymbol{Z}}_1{\boldsymbol{b}}_1^\& } \right)} \right\}\).

It is important to point out that the full conditionals of \({\boldsymbol{b}}_1^\&\) and \({\boldsymbol{b}}_2^\&\) are diagonals for traits; for this reason, these full conditionals can be sampled independently for each trait, as:

Full conditional for \({\boldsymbol{b}}_1^{\& (l)}\) for l = 1, 2, …, L

where \(\widetilde {\bf{\Sigma }}_{{\boldsymbol{b}}_1^{\& ({\boldsymbol{l}})}}\) = \(\left( {d_l^{ - 1} \otimes {\boldsymbol{G}}_g^{ - 1} + {\boldsymbol{Z}}_1^T{\boldsymbol{Z}}_1} \right)^{ - 1}\) and \(\widetilde{\boldsymbol{b}}_1^{\& (l)} = \widetilde{\bf{\Sigma }}_{{\boldsymbol{b}}_1^{\& ({\boldsymbol{l}})}}( {{\boldsymbol{Z}}_1^T} )[ {{\boldsymbol{Y}}^{\& (l)} - {\boldsymbol{X\beta }}^{\& (l)} - {\boldsymbol{Z}}_2{\boldsymbol{b}}_2^{\& (l)}} ]\), with dl denoting the lth diagonal element of Dt.

Full conditional for \({\boldsymbol{b}}_2^{\& (l)}\) for l = 1, 2, …, L

where \(\widetilde {\bf{\Sigma }}_{{\boldsymbol{b}}_2^{\& (l)}}\) = \(( {d_l^{ - 1} \otimes {\mathbf{\Sigma }}_E^{ - 1} \otimes {\boldsymbol{G}}_g^{ - 1} + {\boldsymbol{Z}}_2^T{\boldsymbol{Z}}_2} )^{ - 1}\) and \(\widetilde {\boldsymbol{b}}_2^{\& (l)}\) = \(\widetilde {\bf{\Sigma }}_{{\boldsymbol{b}}_2^{\& ({\boldsymbol{l}})}}\left( {{\boldsymbol{Z}}_2^T} \right)\left\{ {\boldsymbol{Y}^{\& (l)} - {\boldsymbol{X\beta }}^{\& (l)} - {\boldsymbol{Z}}_1{\boldsymbol{b}}_1^{\& (l)}} \right\}\).
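The reason the transformed random effects can be sampled trait by trait is that the joint covariance of the full conditional is block diagonal. A minimal NumPy sketch for \({\boldsymbol{b}}_1^\&\) is given below; the dimensions, the diagonal of Dt, the incidence matrix, and the working residuals are hypothetical values chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
n, J, L = 12, 4, 3                               # hypothetical sizes
Z1 = np.zeros((n, J))
Z1[np.arange(n), np.arange(n) % J] = 1.0         # each record belongs to one line
G_g = 0.3 * np.eye(J) + 0.7 * np.ones((J, J))
G_inv = np.linalg.inv(G_g)
d = np.array([2.0, 1.0, 0.5])                    # hypothetical diagonal of D_t

# Joint covariance of vec(b_1^&): (D_t^{-1} kron G_g^{-1} + I_L kron Z_1^T Z_1)^{-1}.
joint = np.linalg.inv(np.kron(np.diag(1.0 / d), G_inv) + np.kron(np.eye(L), Z1.T @ Z1))

def trait_conditional(l, resid_l):
    """Mean and covariance of the full conditional of b_1^{&(l)} for trait l."""
    cov = np.linalg.inv(G_inv / d[l] + Z1.T @ Z1)
    cov = (cov + cov.T) / 2.0                    # remove rounding asymmetry
    mean = cov @ Z1.T @ resid_l
    return mean, cov

# resid stands in for Y^& - X beta^& - Z_2 b_2^& (one column per trait).
resid = rng.standard_normal((n, L))
draws = np.column_stack(
    [rng.multivariate_normal(*trait_conditional(l, resid[:, l])) for l in range(L)]
)
```

Because the per-trait draws do not depend on one another, the loop over traits could be distributed across workers.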

Therefore, the Gibbs sampler for the BMTME Thompson version should be:

Step 1. Simulate β according to the normal distribution given in Supplementary material (A.1).

Step 2. Simulate \({\boldsymbol{b}}_1^{\& (l)}\) for l = 1, 2, …, L according to the normal distribution given in Eq. (6).

Step 3. Simulate \({\boldsymbol{b}}_2^{\& (l)}\) for l = 1, 2, …, L according to the normal distribution given in Eq. (7).

Step 4. Then transform back Y = Y&Q(−1)T, \({\boldsymbol{\beta }} = {\boldsymbol{\beta }}^\& {\boldsymbol{Q}}^{( - 1)T}\), \({\boldsymbol{b}}_1 = {\boldsymbol{b}}_1^\& {\boldsymbol{Q}}^{( - 1)T}\) and \({\boldsymbol{b}}_2 = {\boldsymbol{b}}_2^\& {\boldsymbol{Q}}^{( - 1)T}\).

Step 5. Simulate Σt according to the IW distribution given in Supplementary material (A.4).

Step 6. Simulate ΣE according to the IW distribution given in Supplementary material (A.5).

Step 7. Simulate Re according to the IW distribution given in Supplementary material (A.6).

Step 8. Return to step 1 or terminate when chain length is adequate to meet convergence diagnostics.

The Gibbs sampler for the BMTME Thompson version is similar to the original modified Gibbs sampler except that steps 2 and 3 were replaced by univariate sampling for the transformed random effects (b1 and b2), which are back transformed in Step 4. The advantage of the BMTME Thompson version is that it allows sampling the random effects of b1 and b2 independently for each trait, which allows improving the speed of the Gibbs sampler because it can be parallelized. However, the remaining parameters (β, Σt, ΣE, and Re) are sampled exactly as in the original Gibbs sampler.

BMTME_Approx model with SVD

An alternative but only approximate method is to transform the matrix of response variables with SVD as Y = UDVT, where U and V are orthogonal matrices called the left and right singular vectors, respectively, of dimensions n × n and L × L, while D is a rectangular diagonal matrix of the singular values of order n × L (where only the first L values of D are positive while the rest are zeros). Therefore, the reparametrized model given in Eq. (2) can be rewritten as:

\({\boldsymbol{Y}}^ \ast = {\boldsymbol{X\beta }}^ \ast + {\boldsymbol{Z}}_1{\boldsymbol{b}}_1^ \ast + {\boldsymbol{Z}}_2{\boldsymbol{b}}_2^ \ast + {\boldsymbol{e}}^ \ast \quad (8)\)

where Y* = UD = YV, β* = βV, \({\boldsymbol{b}}_1^ \ast\)=b1V, \({\boldsymbol{b}}_2^ \ast = {\boldsymbol{b}}_2{\it{V}}\), e* = eV. Y* is of order n × L, β* is of order I × L, \({\boldsymbol{b}}_1^ \ast\) is of order J × L, \({\boldsymbol{b}}_2^ \ast\) is of order IJ × L, and e* is of order n × L. Note that the reparametrized model does not have the same conceptual definition as the original model (2): first, because β* is a random matrix rather than a matrix of unknown constants like the matrix of fixed effects, β; and second, because \({\boldsymbol{b}}_1^ \ast\), \({\boldsymbol{b}}_2^ \ast\), and e* are no longer independent. However, if we treat V as fixed, as part of the underlying data structure, and suppose that Σt = VDt1VT and Re = VDteVT (that is, they have a restricted parameter space; Lin and Smith 1990), then \({\boldsymbol{b}}_1^ \ast\) is distributed as a matrix variate normal distribution as NMJ×L (0, Gg, Dt1), \({\boldsymbol{b}}_2^ \ast\) ~ \(NM_{JI \times L}\left( {{\mathbf{0}},{\mathbf{\Sigma }}_E \otimes {\boldsymbol{G}}_{\boldsymbol{g}},{\bf{D}}_{t1}} \right)\), and e* ~ NMn×L(0, In, Dte), where Dt1 and Dte are diagonal variance–covariance matrices of dimension L × L. It is important to point out that, under this approximate model, the (co)variance matrix for environments is assumed to be an identity matrix, ΣE = II.
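The transformation Y* = UD = YV can be sketched in NumPy as follows. The response matrix is simulated and hypothetical. The thin SVD (`full_matrices=False`) is used here as an assumption for convenience: it returns only the first L columns of U, which give the same Y* as the full n × n form described in the text.

```python
import numpy as np

rng = np.random.default_rng(4)
n, L = 30, 3                                     # hypothetical sizes
C = np.linalg.cholesky(0.75 * np.eye(L) + 0.25 * np.ones((L, L)))
Y = rng.standard_normal((n, L)) @ C.T + np.array([13.0, 10.0, 5.0])

# Thin SVD: Y = U diag(s) V^T with U (n x L), s (L,), V (L x L).
U, s, Vt = np.linalg.svd(Y, full_matrices=False)
V = Vt.T

Y_star = Y @ V                                   # transformed responses, equal to U diag(s)
gram = Y_star.T @ Y_star                         # diag(s**2): the columns are orthogonal
Y_back = Y_star @ V.T                            # back-transformation recovers Y
```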

To use the existing software, we replaced the distribution of transformed random effects \({\boldsymbol{b}}_2^ \ast\) with \({\boldsymbol{b}}_2^ \ast\) ~ NMJI×L(0, II ⊗ Gg, Dt2), where Dt2 is another diagonal variance–covariance matrix of traits of dimension L × L. With this, the resulting model (8) can be estimated with the R package BGLR, which is appropriate for univariate analysis. From Eq. (8), it is clear that the parameter estimates and predicted values of the original model (Eq. (2)) without transformation can be approximated as:

\(\widehat {\boldsymbol{\beta }} = \widehat {\boldsymbol{\beta }}^ \ast {\boldsymbol{V}}^T\quad (9)\)

\(\widehat {\boldsymbol{b}}_1 = \widehat {\boldsymbol{b}}_1^ \ast {\boldsymbol{V}}^T\quad (10)\)

\(\widehat {\boldsymbol{b}}_2 = \widehat {\boldsymbol{b}}_2^ \ast {\boldsymbol{V}}^T\quad (11)\)

\(\widehat {\boldsymbol{Y}} = \widehat {\boldsymbol{Y}}^ \ast {\boldsymbol{V}}^T\quad (12)\)

\(\widehat {\bf{\Sigma }}_{t1} = {\boldsymbol{V}}\widehat {\bf{D}}_{t1}{\boldsymbol{V}}^T\quad (13)\)

\(\widehat {\bf{\Sigma }}_{t2} = {\boldsymbol{V}}\widehat {\bf{D}}_{t2}{\boldsymbol{V}}^T\quad (14)\)

\(\widehat {\bf{R}}_e = {\boldsymbol{V}}\widehat {\bf{D}}_{te}{\boldsymbol{V}}^T\quad (15)\)

Steps for implementing the proposed BMTME_Approx model

Step 1: De-correlate the original traits with the SVD as Y = UDVT and use it as response variable Y* = UD = YV.

Step 2: Implement model (1) but using one column at a time of the uncorrelated matrix Y* as the response variable. This means that a total of L single analyses are done with the model in Eq. (1).

Step 3: With the output of Step 2, the predicted values can be calculated in terms of the transformed values with \(\widehat {\boldsymbol{Y}}^ \ast = {\boldsymbol{X}}\widehat {\boldsymbol{\beta }}^ \ast + {\boldsymbol{Z}}_1\widehat {\boldsymbol{b}}_1^ \ast + {\boldsymbol{Z}}_2\widehat {\boldsymbol{b}}_2^ \ast\). With this new output, we construct the diagonal matrices \(\widehat {\bf{D}}_{t1}\), \(\widehat {\bf{D}}_{t2}\), and \(\widehat {\bf{D}}_{te}\).

Step 4: Finally, with Eq. (12), we obtain the predicted values in terms of the original traits. Furthermore, with Eqs. (9–11, 13–15), we obtain the parameter estimates of the beta coefficients, β, random effects b1 and b2, as well as the variance–covariance matrices of traits corresponding to traits in the first interaction term, Σt1, for traits in the second interaction term, Σt2, and the residual variance–covariance matrix of traits, Re. The R code for implementing this proposed BMTME_Approx model in BGLR is given in Supplementary material.
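The four steps can be sketched end to end in NumPy. This is not the R code from the Supplementary material: the single-trait fit below is a simplified stand-in that solves Henderson's mixed model equations for environment and line effects only, with a known variance ratio `lam`, and it omits the genotype × environment term. All function names, sizes, and `lam` values are hypothetical.

```python
import numpy as np

def fit_single_trait(y, X, Z1, G_inv, lam):
    """Stand-in single-trait fit: solve the mixed model equations for (beta, b1)."""
    n_fixed = X.shape[1]
    lhs = np.block([[X.T @ X, X.T @ Z1],
                    [Z1.T @ X, Z1.T @ Z1 + lam * G_inv]])
    rhs = np.concatenate([X.T @ y, Z1.T @ y])
    sol = np.linalg.solve(lhs, rhs)
    return sol[:n_fixed], sol[n_fixed:]

def bmtme_approx_sketch(Y, X, Z1, G_inv, lams):
    # Step 1: de-correlate the traits, Y* = YV.
    _, _, Vt = np.linalg.svd(Y, full_matrices=False)
    V = Vt.T
    Y_star = Y @ V
    # Step 2: one single-trait analysis per column of Y*.
    fits = [fit_single_trait(Y_star[:, l], X, Z1, G_inv, lams[l])
            for l in range(Y.shape[1])]
    # Step 3: collect the estimates and predict on the transformed scale.
    beta_star = np.column_stack([f[0] for f in fits])
    b1_star = np.column_stack([f[1] for f in fits])
    Y_star_hat = X @ beta_star + Z1 @ b1_star
    # Step 4: back-transform to the original trait scale.
    return Y_star_hat @ V.T, beta_star @ V.T, b1_star @ V.T

rng = np.random.default_rng(5)
n_env, J, L = 3, 20, 3
env = np.repeat(np.arange(n_env), J)             # environment of each record
line = np.tile(np.arange(J), n_env)              # line of each record
X = np.eye(n_env)[env]                           # environment incidence matrix
Z1 = np.eye(J)[line]                             # line incidence matrix
G_inv = np.linalg.inv(0.3 * np.eye(J) + 0.7 * np.ones((J, J)))
Y = rng.standard_normal((n_env * J, L)) + np.array([13.0, 10.0, 5.0])

Y_hat, beta_hat, b1_hat = bmtme_approx_sketch(Y, X, Z1, G_inv, lams=[1.0, 2.0, 4.0])
```

In this stand-in the fit is linear in the response, so if every transformed trait used the same `lam` the result would match fitting the original traits one at a time; the per-trait variance components are what make the transformed analysis differ.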

For implementing the proposed BMTME_Approx model with random cross-validation, we follow the four-step procedure exactly as described above, except that the first step is modified. In Step 1, we now de-correlate the traits in the training data set (Yt) with the SVD as \({\boldsymbol{Y}}_t = {\boldsymbol{U}}_t{\boldsymbol{D}}_t{\it{V}}_t^{\boldsymbol{T}}\), where the subscript t denotes that these matrices were estimated with the training data set. Then we transform the response variable into Y* = UtDt = YtVt, and expand Y* with as many rows as there are observations in the testing data set; the added rows are filled with NA to represent missing values, and their positions correspond to the testing data set. Once this is done, the remaining steps are followed exactly as in the procedure described above for the full data set. Note that the response variable matrix of the training data set has fewer rows than that of the full data set.
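The modified first step can be sketched as follows. The function name, the data, and the train/test split are hypothetical, and `numpy.nan` plays the role of NA. As a simplifying assumption, the testing rows are kept at their original positions instead of being appended.

```python
import numpy as np

def transform_for_cv(Y, train_idx):
    """De-correlate the training rows with their own SVD; leave all other rows missing."""
    Y_train = Y[train_idx]
    _, _, Vt_t = np.linalg.svd(Y_train, full_matrices=False)
    V_t = Vt_t.T                                 # estimated from training data only
    Y_star = np.full(Y.shape, np.nan)            # testing rows stay missing
    Y_star[train_idx] = Y_train @ V_t            # Y* = Y_t V_t
    return Y_star, V_t

rng = np.random.default_rng(6)
Y = rng.standard_normal((10, 3)) + np.array([13.0, 10.0, 5.0])
train_idx = np.array([0, 1, 2, 4, 5, 7, 8, 9])
test_idx = np.array([3, 6])

Y_star, V_t = transform_for_cv(Y, train_idx)
```

Predictions obtained for the missing rows on the transformed scale would then be multiplied by the transpose of `V_t` to return to the original trait scale.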

It is important to point out that the BMTME model was built to estimate only one variance–covariance matrix of traits, Σt, involved in the two interaction terms, genotype × trait and genotype × environment × trait. However, the BMTME_Approx model allows estimating a variance–covariance matrix of traits for each interaction term in which the traits are involved. The BMTME model is also able to estimate an unstructured variance–covariance matrix for the environments, ΣE; this is not reported for the BMTME_Approx model because an identity matrix is assumed.

Hyperparameters

First we provide the hyperparameters for the BMTME model: β ~ NMI×L (β0, II, Sβt), b1|Σt ~ NMJ×L (0, Gg, Σt), b2|Σt, ΣE ~ NMIJ×L (0, ΣE ⊗ Gg, Σt), ΣE ~ IW(νE = 5, SE = SE), Σt ~ IW(νt = 5, St = St), and Re ~ IW(νe = 5, Se = Se); β0 was obtained as the least squares estimate for each trait. The remaining hyperparameters Sβt, St, SE, and Se are given in Supplementary material. The hyperparameters for the BMTME_Approx model were the same as those of the BMTME model, except that for the univariate analyses they were those used in the BGLR software (Pérez-Rodríguez and de los Campos 2014). The proposed Gibbs sampler was implemented in the R software (R Core Team 2018). A total of 60,000 iterations were performed with a burn-in of 20,000, so that 40,000 samples were used for inference. To eliminate potential problems due to autocorrelation, we considered a thinning of 5. The convergence of the MCMC chains was monitored using trace plots, the autocorrelation function (ACF), and Gelman-Rubin diagnostics. We used weakly informative priors to implement the proposed models. It is important to point out that the proposed BMTME_Approx model only works for normally distributed traits. However, when there is considerable departure from normality, we suggest using independent component analysis (ICA) instead of SVD for transforming the matrix of response variables (Y) (see Supplementary material for its implementation).

Simulated data set 1 and data set 2

To test the proposed models and methods, we simulated multiple-trait and multiple-environment data using the model in Eq. (2). For this first data set, we used the following parameters: 3 environments, 3 traits, 200 genotypes, and 1 replication of the environment–trait–genotype combination. We assumed that βT = [13, 10, 5, 12, 8, 7, 11, 9, 6], where the first three beta coefficients belong to traits 1, 2, and 3 in environment 1, the second three values belong to the three traits in environment 2, and the last three belong to environment 3. We assumed that the GRM is known and equal to Gg = 0.3I200 + 0.7J200, where I200 is an identity matrix of order 200 and J200 is a matrix of order 200 × 200 of ones. The parameters used for building the GRM were chosen to provide a high-level relationship between lines (Montesinos-López et al. 2016).

Therefore, the total number of observations is 3 × 200 × 3 × 1 = 1800, that is, 600 for each trait. A covariance matrix can be expressed in terms of a correlation matrix (Rr) and a standard deviation matrix \(\left( {{\boldsymbol{D}}_r^{1/2}} \right)\) as \({\mathbf{\Sigma }}_r = {\boldsymbol{D}}_r^{1/2}{\boldsymbol{R}}_{\boldsymbol{r}}{\boldsymbol{D}}_r^{1/2}\), with r = t, E, e, where r = t represents the genetic covariance matrix between traits, r = E represents the genetic covariance matrix between environments, and r = e represents the residual covariance matrix between traits. For the three covariance matrices (r = t, E, e), we used Rr = 0.75I3 + 0.25J3, where J3 is a matrix of order 3 × 3 of ones, and \({\boldsymbol{D}}_t^{1/2}\) = diag(0.9, 0.8, 0.9), \({\boldsymbol{D}}_E^{1/2}\) = diag(0.5, 0.65, 0.75) and \({\boldsymbol{D}}_e^{1/2}\) = diag(0.6, 0.42, 0.33). For the second data set, the parameters used in the simulation were: βT = [13, 12.5, 12, 11.5, 11, 10.5, 10, 12, 11.5, 11, 10.5, 10.5, 10, 10, 11, 11.5, 12, 12, 11, 10, 10.5], where the first seven beta coefficients belong to traits 1–7 in environment 1, the second seven values to the 7 traits in environment 2, and the last seven belong to environment 3.
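For the first simulated data set, the covariance matrices and the GRM follow directly from the stated parameters. The NumPy sketch below builds them; the helper name is hypothetical, and no data are simulated here.

```python
import numpy as np

def covariance(sd, corr):
    """Sigma_r = D_r^{1/2} R_r D_r^{1/2} from standard deviations and a correlation matrix."""
    D_half = np.diag(sd)
    return D_half @ corr @ D_half

R = 0.75 * np.eye(3) + 0.25 * np.ones((3, 3))    # unit diagonal, 0.25 off-diagonal

Sigma_t = covariance([0.9, 0.8, 0.9], R)         # genetic covariance between traits
Sigma_E = covariance([0.5, 0.65, 0.75], R)       # covariance between environments
R_e = covariance([0.6, 0.42, 0.33], R)           # residual covariance between traits

G_g = 0.3 * np.eye(200) + 0.7 * np.ones((200, 200))   # GRM of the 200 simulated lines

# environments x genotypes x traits x replications
n_obs = 3 * 200 * 3 * 1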

The matrix of the relationship between lines was generated as Gg = 0.3I200 + 0.7J200 and was equal to the first simulation data set. Here the total number of observations is 3 × 200 × 7 × 1 = 4200, that is, 600 for each trait. For two of the three covariance matrices (f = t, e), we used

\({\boldsymbol{D}}_f^{1/2}\) = diag(1, 1.0003, 1.0003, 1, 0.9996, 1, 1.0003), while \({\boldsymbol{R}}_E = {\boldsymbol{D}}_E^{1/2}\) = diag(1, 1, 1), that is, we assumed independence between the environments. It is important to point out that this second data set has a high genetic and environmental correlation between traits.

Experimental data sets

Maize data set

The first real data set used for implementing the proposed model is composed of 309 double-haploid maize lines. Traits available in this data set include grain yield (GY), anthesis-silking interval (ASI), and plant height (PH); each of these traits was evaluated in three optimum rain-fed environments (EBU, KAT, and KTI). After editing, information from 158,281 markers was used. This data set was also used by Montesinos-López et al. (2016) and includes best linear unbiased estimates (BLUEs) obtained based on a mixed model analysis of individual trials of a first analysis.

Wheat data set

Here we present information on the second real data set used for implementing the proposed model. This real data set is composed of 250 wheat lines that were extracted from a large set of 39 yield trials grown during the 2013–2014 crop season in Ciudad Obregon, Sonora, Mexico (Rutkoski et al. 2016). The traits under study were days to heading (DH), GY, PH, and the green normalized difference vegetation index (NDVI). Each of these traits was evaluated in three environments (Bed2IR, Bed5IR, and Drip). After editing, information from 12,083 markers was used. This data set was also used by Montesinos-López et al. (2016); those interested in obtaining more details about this data set can consult that publication. The phenotypes of each trait are BLUEs obtained after a first analysis where they were adjusted by the experimental field design.

High-throughput (HTP) data set

This data set belongs to an experiment that used HTP wheat plant phenotyping conducted at Ciudad Obregon, Sonora, México. The data set is comprised of 976 wheat lines that were extracted from a large set of 1170 lines from the CIMMYT Global Wheat Program. The following traits were under study: GY, DH, red normalized difference vegetation index (RNDVI), green normalized difference vegetation index (GNDVI), simple ratio (SRa), ratio analysis of reflectance spectra chlorophyll a (RARSa), ratio analysis of reflectance spectra chlorophyll b (RARSb), ratio analysis of reflectance spectra chlorophyll c (RARSc), normalized pheophytinization index (NPQI), and photochemical reflectance index (PR). Each of these traits was evaluated in three environments (drought, irrigated, and reduced irrigation). After marker editing, information from 1448 markers was used. This data set was also used by Montesinos-López et al. (2017b, c). The phenotypes of each trait are BLUEs obtained after a first analysis where they were adjusted by the experimental field design.

Large EYT set

This data set belongs to CIMMYT’s three elite yield trial (EYT) nurseries, consisting of 2505 lines of wheat, genotyped by genotyping-by-sequencing (GBS). These were evaluated for GY, DH, and PH in five environments (BED_5IR, FLAT_5IR, BED_2IR, FLAT_DRIP, and LHT) evaluated in Ciudad Obregon, Mexico, under bed and flat planting systems. The EYT nurseries were sown in 39 trials, each containing 28 lines and two checks that were arranged in an alpha lattice design with three replications and six blocks. The nurseries were evaluated for the three traits under study on a plot basis during 2014 (EYT 13–14), 2015 (EYT 14–15), and 2016 (EYT 15–16). We used BLUEs as observed values of the breeding lines resulting from adjusting for the corresponding experimental design. All the 2505 lines were genotyped using GBS (Elshire et al. 2011; Poland et al. 2012) at Kansas State University, with an Illumina HiSeq2500 for obtaining genome-wide markers. Markers with missing data >60% (minor allele frequency <5% and percentage of heterozygosity >10%) were removed, and we obtained 2038 markers, which were used for the analysis. Also, the traits used are BLUEs obtained after a first analysis where they were adjusted by the experimental field design in each trial.
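The marker editing step can be sketched as a per-marker filter. This is a minimal sketch, not the authors' pipeline: it assumes genotypes coded as 0, 1 (heterozygote), 2, or `None` for missing, and it reads the three thresholds in the text as independent removal criteria. The function name `keep_marker` is hypothetical.

```python
def keep_marker(calls, max_missing=0.60, min_maf=0.05, max_het=0.10):
    """Decide whether one marker passes the quality filter.

    calls: genotype codes across lines, 0/1/2 with 1 = heterozygote,
           or None when the call is missing (assumed coding).
    """
    n = len(calls)
    observed = [g for g in calls if g is not None]
    if n == 0 or not observed:
        return False
    missing_rate = 1.0 - len(observed) / n
    if missing_rate > max_missing:
        return False
    # Frequency of the allele counted by the 0/1/2 coding.
    alt_freq = sum(observed) / (2.0 * len(observed))
    maf = min(alt_freq, 1.0 - alt_freq)
    het_rate = sum(1 for g in observed if g == 1) / len(observed)
    return maf >= min_maf and het_rate <= max_het
```

Applying `keep_marker` to every column of a marker matrix and retaining the columns that return `True` gives the edited marker set under these assumptions.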

Random cross-validation scheme

To test the prediction ability of the BMTME_Approx and BMTME models, we implemented a type of cross-validation in which all the traits of some individuals are missing in some environments (that is, the line and all its traits are missing in those environments), while information on those individuals is available in at least one environment. We used 20 random partitions for all the data sets under study, with the exception of the HTP and the large EYT data sets, for which we used 10 random partitions. With 20 random partitions, each partition assigned 20% of the data to the testing set and the remaining 80% to the training set; with 10 random partitions, 30% of the data were assigned to the testing set and 70% to the training set. The models were fitted with the information in the training data sets, and the prediction accuracy was evaluated with the testing data sets.
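One way to generate such partitions is sketched below in Python. It is an illustrative sketch under stated assumptions, not the authors' code: records are (line, environment) pairs, sending a record to the testing set means all its traits are treated as missing, and a line's last remaining environment is never removed from training. The function name `random_partition` and the seed are hypothetical.

```python
import random

def random_partition(lines, envs, test_frac=0.20, rng=None):
    """Split (line, env) records into training and testing sets.

    Every line keeps at least one environment in the training set.
    """
    rng = rng or random.Random()
    records = [(l, e) for l in lines for e in envs]
    rng.shuffle(records)
    n_test = round(test_frac * len(records))
    in_train = {l: len(envs) for l in lines}  # environments still in training
    test = []
    for rec in records:
        if len(test) == n_test:
            break
        if in_train[rec[0]] > 1:  # never remove a line's last environment
            test.append(rec)
            in_train[rec[0]] -= 1
    test_set = set(test)
    train = [r for r in records if r not in test_set]
    return train, test

# Example with the dimensions of the wheat data set: 250 lines, 3 environments.
rng = random.Random(1)
partitions = [random_partition(range(250), ["Bed2IR", "Bed5IR", "Drip"], 0.20, rng)
              for _ in range(20)]
```

For the HTP and large EYT data sets, the same function would be called 10 times with `test_frac=0.30`.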

The metrics used for reporting the prediction accuracy were Pearson’s correlation (Cor) and the mean square error of prediction (MSEP), each averaged over the testing sets of the 20 (or 10) random partitions. The models were implemented in R (R Core Team 2018), and the proposed BMTME_Approx model can be implemented with the BGLR package of de los Campos and Pérez-Rodríguez (2014). The BMTME model was implemented in R as proposed by Montesinos-López et al. (2016).
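The two metrics and their averaging over partitions can be written out as follows. This is a minimal Python sketch using only the standard library; the function names are illustrative and the input format (one pair of observed and predicted vectors per testing set) is an assumption.

```python
from math import sqrt
from statistics import mean

def pearson(obs, pred):
    """Pearson's correlation between observed and predicted values."""
    mo, mp = mean(obs), mean(pred)
    sxy = sum((o - mo) * (p - mp) for o, p in zip(obs, pred))
    sxx = sum((o - mo) ** 2 for o in obs)
    syy = sum((p - mp) ** 2 for p in pred)
    return sxy / sqrt(sxx * syy)

def msep(obs, pred):
    """Mean square error of prediction."""
    return mean((o - p) ** 2 for o, p in zip(obs, pred))

def average_accuracy(partitions):
    """partitions: list of (observed, predicted) pairs, one per testing set.

    Returns the average correlation and the average MSEP across partitions.
    """
    cors = [pearson(o, p) for o, p in partitions]
    errs = [msep(o, p) for o, p in partitions]
    return mean(cors), mean(errs)
```

In the setting of this paper, `average_accuracy` would be called once per trait–environment combination.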

Data repository

The phenotypic and genotypic information of the experimental wheat and maize data sets included in this study can be downloaded from the link http://hdl.handle.net/11529/10646 (Montesinos-López et al. 2016). This link includes phenotypic data on maize (Data.maize) and wheat (Data.trigo), as well as genomic data on maize (G.maize) and wheat (G.trigo).

The HTP data and materials used in this study can be downloaded from the link given in Montesinos-López et al. (2017c) (http://hdl.handle.net/11529/10693), which contains a file with the phenotypic and band data for each environment: Drought.Phe and Bands.RData, EarlyHeat.Phe and Bands.RData, and Irrigated.Phe and Bands.RData. The large EYT data (Data.EYT.2018) can be downloaded from the link http://hdl.handle.net/11529/10547920.

Results

The results are described in two main sections: The first section presents the results of the simulated data sets, while the second presents the results of the real data sets. It is important to point out that we do not present results for the BMTME Thompson version because it is only a different reparametrization of the original BMTME model.

Simulated data sets

Data set 1

First, we present the parameter estimates of the BMTME and the BMTME_Approx models. Table 1 shows that the beta coefficients of both models are similar. In general, the beta coefficients of the BMTME model are larger than those of the BMTME_Approx model; the smallest difference is 3%, observed in environment 1 and trait 1, and the largest difference is 15.7%, observed in environment 3 and trait 3. The variance–covariance matrix of traits (Σt) of the BMTME model is quite similar to the variance–covariance matrices of the BMTME_Approx model (Σt1, Σt2). The variance–covariance components of the residual of both models are quite similar, with the smallest difference (1.6%) observed in trait 2 and trait 1 and the largest difference (33.7%) observed in trait 3 and trait 2. It is worth pointing out again that the BMTME and BMTME_Approx models are different; consequently, we cannot expect exactly the same parameter estimates. Finally, when comparing the observed versus the predicted values for each trait using Pearson’s correlation and MSEP, we observed that the BMTME_Approx model produced predicted values that are very similar to those of the BMTME model (see Table 1).

With regard to prediction accuracy, Table 2 shows that the BMTME model was the best: In six out of the nine trait–environment combinations, it was superior to the BMTME_Approx model in terms of Pearson’s correlation and MSEP. On average, the BMTME model was superior to the BMTME_Approx model by 0.94% and 0.88% in terms of Pearson’s correlation and MSEP, respectively. From the results of these simulated data, it is evident that the BMTME_Approx model is very similar to the BMTME model in terms of prediction accuracy (Table 2).

Data set 2

First, we present the parameter estimates of the BMTME and BMTME_Approx models. Table 3 shows that the beta coefficients of both models are similar, and in general, the beta coefficients of the BMTME model are larger than those of the BMTME_Approx model. The smallest difference is 5.15%, which is observed in environment 3 and trait 6, while the largest difference is 12.37%, observed in environment 1 and trait 5. The variance–covariance matrix of traits (Σt) in the BMTME model is very similar to the variance–covariance matrices of the BMTME_Approx model (Σt1, Σt2). The variance–covariance components of the residual of both models are quite similar, with the smallest difference (0.48%) observed in trait 7 and trait 1 and the largest difference (27.38%) observed in the variance of trait 5. It is worth reiterating that, with the BMTME and BMTME_Approx, we should not expect exactly the same parameter estimates, as the models are different. Finally, when comparing the observed versus the predicted values for each trait using Pearson’s correlation and MSEP, the BMTME_Approx model produced predicted values that were slightly better than those of the BMTME model (see Table 3). In terms of Pearson’s correlation, the smallest difference in favor of the BMTME_Approx model was 6.73% observed in trait 6, while the largest difference, also in favor of the BMTME_Approx model, was 14.16% observed in trait 3. In terms of MSEP, the smallest difference was 13.81% in trait 1 and the largest difference was 19.34% in trait 5, both in favor of the BMTME_Approx model.

Table 4 shows that the proposed approximate model, BMTME_Approx, was better than the BMTME model in terms of Pearson’s correlation in 14 out of the 21 trait–environment combinations. However, in terms of MSEP, the BMTME model was superior to the BMTME_Approx model in 12 out of the 21 trait–environment combinations. On average, in terms of Pearson’s correlation, the BMTME_Approx model was better than the BMTME model by 7.24%, while in terms of MSEP, both models were, on average, almost identical.

Experimental data sets

Maize data set

First, we compared the parameter estimates of the two models (BMTME and BMTME_Approx). Table 5 shows that the beta coefficients of the proposed BMTME_Approx model are all similar to those of the BMTME model. The variance–covariance matrix of traits (Σt) in the BMTME model is quite similar to the variance–covariance matrices of the BMTME_Approx model (Σt1,Σt2). When comparing the variance–covariance components of the residual of both models, we observe that 6 out of the 9 terms are not significantly different; however, the remaining 3 terms are quite different, as they belong to the covariance of trait PH with the other traits. Finally, when comparing the observed versus the predicted values for each trait using Pearson’s correlation and MSEP, we see that the BMTME_Approx model is slightly better, since in Pearson’s correlation, it outperformed the BMTME model in 2 out of the 3 traits and, on average, the BMTME_Approx model was 2.1% (for trait GY) and 1.4% (for trait ASI) better than the BMTME model. In terms of MSEP, the BMTME_Approx model was better than the BMTME model in 2 out of the 3 traits, and, on average, the BMTME_Approx model was 7% better (for trait GY) and 4.11% better (for trait ASI) than the BMTME model (see Table 5). In general, the parameter estimates and predictions are relatively similar in this data set.

Next, in Table 6, we see that the proposed approximate model, BMTME_Approx, was better than the BMTME model in terms of Pearson’s correlation, as shown in 5 out of the 9 trait–environment combinations. However, in terms of MSEP, the BMTME model was superior to the BMTME_Approx model in 7 out of the 9 trait–environment combinations. On average, the BMTME_Approx model was better than the BMTME model by 11.72% in terms of Pearson’s correlation, while conversely, the BMTME model was better than the BMTME_Approx by 37.94% (Table 6) in terms of MSEP.

Wheat data set

First, we compared the parameter estimates of the two models (BMTME and BMTME_Approx) and then their prediction accuracy. Table 7 shows that the beta coefficients of the proposed BMTME_Approx model are similar to those of the BMTME in 9 out of the 12 parameters; however, there are substantial differences in three of the beta coefficients corresponding to trait NDVI. The variance–covariance matrix of traits (Σt) in the BMTME model is significantly different from the variance–covariance matrices of the BMTME_Approx model (Σt1, Σt2).

When comparing the variance–covariance components of the residual of both models, it is evident that 9 out of the 16 terms are not notably different, while the remaining 7 terms are. These very different terms belong to the covariance of trait NDVI with the other traits. Finally, when we compared the models in terms of the observed versus the predicted values for each trait using Pearson’s correlation and MSEP, we saw that the BMTME_Approx model was marginally better, and it was superior to the BMTME model for Pearson’s correlation in 3 out of the 4 traits and, on average, 0.99% better for trait DH, 22.04% better for trait GY, and 17.16% better for trait PH. Furthermore, for MSEP, the BMTME_Approx model was better than the BMTME model in 3 out of the 4 traits and, on average, 18.88% better for trait DH, 48.85% better for trait GY, and 57.5% better for trait PH (see Table 7).

When comparing both models in terms of prediction accuracy with only the testing set of the 20 random partitions implemented, Table 8 shows that the proposed alternative model, BMTME_Approx, was better than the BMTME model in 8 out of the 12 trait–environment combinations in terms of Pearson’s correlation and in 7 out of the 12 trait–environment combinations in terms of MSEP. On average, the BMTME_Approx model was also better than the BMTME model by 2.1% in terms of Pearson’s correlation and by 6.87% in terms of MSEP (Table 8).

HTP data set

In Table 9 we provide the parameter estimates of the HTP data set, which has 10 traits evaluated in 3 environments, with 976 lines evaluated in each environment. The estimates of the beta coefficients are reasonable since they are consistent with the sample average for each trait in each environment, which is equivalent to the least squares estimate.

Rather than reporting the variance–covariance of traits, we report the correlation between traits, which can be more useful, as it gives a better idea of the level of correlation between traits in the whole data set. Our proposed BMTME_Approx model estimates two variance–covariance matrices for the genetic part of the traits and one for the residual part of the traits; in this vein, we report two correlation matrices for the genetic part of the traits and one for the residual correlation of the traits. In the section “genetic correlation between traits” in Table 9, the upper diagonal part gives the correlations between traits corresponding to the term genotype × trait, where we can observe that 33 (73.33%) of the 45 possible correlations were, in absolute values, >0.5. On the other hand, in the lower diagonal part of the genetic correlation section are given the genetic correlations that correspond to the three-way interaction term environment × genotype × trait. Here we can observe that only 16 (35.56%) of the 45 possible correlations are >0.5.
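The counts reported above follow from the number of distinct trait pairs: with 10 traits there are 10 × 9 / 2 = 45 pairs, so 33 pairs correspond to 73.33% and 16 pairs to 35.56%. The following Python sketch shows how such a tally could be computed from a correlation matrix; the function name `share_above` and the toy matrix are illustrative assumptions, not values from Table 9.

```python
from itertools import combinations

def share_above(corr, threshold=0.5):
    """Count trait pairs whose absolute correlation exceeds the threshold.

    corr: square matrix as a list of lists; only entries with i < j
          (the upper triangle) are read.
    Returns (count, number of pairs, percentage of pairs).
    """
    pairs = list(combinations(range(len(corr)), 2))
    count = sum(1 for i, j in pairs if abs(corr[i][j]) > threshold)
    return count, len(pairs), 100.0 * count / len(pairs)

# Number of distinct pairs among 10 traits.
n_pairs_10_traits = len(list(combinations(range(10), 2)))

# Toy 3-trait example (made-up correlations).
toy = [[1.0, 0.8, -0.6],
       [0.8, 1.0, 0.2],
       [-0.6, 0.2, 1.0]]
count, n_pairs, pct = share_above(toy)
```

Because Table 9 stores one matrix in the upper triangle and another in the lower triangle, the lower-triangle matrix would need to be transposed before being passed to this function.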

In general, there is evidence of a reasonably high genetic correlation between traits in this HTP data set. In Table 9, the section on the residual correlation between traits shows that only 15 (33.33%) out of the 45 possible residual correlations are >0.5. This information indicates that the phenotypic correlation between the ten traits is more influenced by the genetic part than by the residual part. Finally, the “prediction accuracy” section of Table 9 shows the Pearson’s correlation and MSEP obtained for the whole data set between the observed and predicted values for each trait. In general, all the observed Pearson’s correlations are >0.86, with the exception of the RNDVI, GNDVI, and SRa traits, which had a Pearson’s correlation <0.8.

The prediction accuracies for the HTP data set are given in Table 10. The predictions reported were the average of the ten random partitions of the testing data set. Here we only report the prediction accuracy for the BMTME_Approx model, since the size of the data set makes the implementation time of the BMTME model prohibitive. Table 10 shows that the best predictions in terms of Pearson’s correlation were observed for trait DH, while the predictions for the rest of the traits in general were low. Also, in terms of Pearson’s correlation, the best predictions were observed in the irrigated environment. On the other hand, in terms of MSEP for traits GY and DH, the best predictions were observed in the drought environment; however, there were no significant differences in terms of prediction accuracy between the remaining traits, since in all of them the MSEP was almost zero.

Large EYT data set

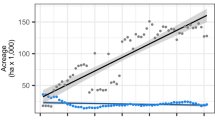

In Table 11, we provide the parameter estimates of the EYT data set, which has 3 traits evaluated in 5 environments, with 2505 lines evaluated in each environment. We found that the estimates of the beta coefficients are reasonable since they are consistent with the least squares estimates.

Here we also report the correlation between traits to give a better idea of the level of correlation between traits in the whole data set. As before, we report two correlation matrices for the genetic part of the traits and one for the residual correlation of the traits. In the section “genetic correlation between traits” in Table 11, the upper diagonal part gives the correlations between traits corresponding to the term genotype × trait, where we can observe that the correlation between PH and DH was the only one >0.5. On the other hand, the lower diagonal part of the genetic correlation section gives the genetic correlations that correspond to the three-way interaction term environment × genotype × trait. Here we can observe that only the correlation between GY and PH was >0.5 (Table 11).

In Table 11, in the section on the residual correlation between traits, we can observe that only the correlation between GY and PH was >0.5. Finally, in the “prediction accuracy” section of Table 11, we can observe the Pearson’s correlation and MSEP obtained for the whole data set between the observed and predicted values for each trait. In general, all the observed Pearson’s correlations are >0.89.

The prediction accuracies for this large wheat data set are given in Table 12. The predictions reported are the average of the ten random partitions of the testing data set. Here we only report the prediction accuracy for the BMTME_Approx model; owing to the size of the data set, it is almost impossible to run the BMTME model. Table 12 shows that the best predictions in terms of Pearson’s correlation were for trait GY, while the worst were for trait DH. At the same time, the best predictions for GY, DH, and PH in terms of Pearson’s correlation were observed in environments BED_5IR, FLAT_5IR, and BED_2IR, respectively. On the other hand, in terms of MSEP for traits GY, DH, and PH, the best predictions were observed in BED_2IR, LHT, and FLAT_5IR, respectively (Table 12).

Discussion

The amount of data that breeding programs around the world are generating continues to increase; consequently, there is a growing need to extract more knowledge from the data being produced. To this end, multiple-trait models are commonly used to take advantage of correlated traits to improve parameter estimation and prediction accuracy. However, when there is a large number of traits, implementing these types of models is challenging. Therefore, it is necessary to develop efficient multiple-trait and multiple-environment models for whole-genome selection in order to take advantage of multiple correlated traits. In this paper, we propose an alternative method for analyzing multi-trait data that could be useful for whole-genome selection in the context of an abundance of traits. Some advantages of the proposed method are: (i) it can be implemented in current genomic selection software that was built for univariate analysis (e.g., BGLR, ASREML, and the R package sommer); (ii) it can be implemented with a large number of traits and, in general, with a large data set, since this model is implemented in four steps; (iii) it can be more efficient in terms of implementation time because it only requires a univariate analysis for each trait (step 2 of the procedure), which can be run in parallel and in low dimensions, whereas the BMTME model models all the traits simultaneously; and (iv) it decreases the probability of collinearity and convergence problems, since both problems increase when more correlated traits are added to a multivariate analysis (Schulthess et al. 2016).
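The parallel structure of step 2 can be sketched as follows. This is only an illustration in Python: `fit_single_trait` is a hypothetical placeholder that returns the sample mean so that the sketch runs, standing in for a call to single-trait genomic prediction software on one transformed trait. A thread pool is used for simplicity; for CPU-bound model fitting, separate processes or cluster jobs would be the more likely choice.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

def fit_single_trait(y):
    """Placeholder for a univariate genomic model fit (hypothetical).

    It only returns the sample mean of one transformed trait; a real
    implementation would call single-trait software here.
    """
    return {"intercept": mean(y)}

def fit_all_traits(columns, max_workers=4):
    """Fit each transformed trait independently; results keep column order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fit_single_trait, columns))

# Two made-up transformed traits, one list of values per trait.
fits = fit_all_traits([[1.0, 2.0, 3.0], [4.0, 6.0]])
```

Because each call receives only one trait, the fits share no state and can be distributed across workers without coordination.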

In the Supplementary material, we provide the R code for implementing the proposed method. When the matrix of response variables (Y) departs considerably from normality, the proposed BMTME_Approx model is expected to be inefficient because, for non-normal data, uncorrelated traits are not necessarily independent. For these circumstances, the Supplementary material also provides a solution based on independent component analysis (ICA), which transforms the original, not necessarily Gaussian, matrix of response variables (Y) into a matrix of independent variables (Y*).

Also, it is important to point out that the BMTME_Thompson version is more efficient computationally than the original BMTME model since it allows sampling independently for each trait the random effects of b1 and b2, which improves computational efficiency because it avoids sampling from huge multivariate normal distributions. More specifically, the dimensions of the full conditionals are L times smaller than the original BMTME model. Another advantage is that the BMTME_Thompson model is not an approximation to the BMTME model, since it is only a reparametrization of the original model, which allows obtaining exactly the same parameter estimates and prediction accuracy at a lower cost in terms of implementation time, since the sampling process of the full conditionals of the random effects of b1 and b2 is done individually for each trait.

The proposed BMTME_Approx model takes advantage of the fact that optimal point estimates of any linear combination of the means and variances of the various separate analyses for each response variable can be obtained. Therefore, our proposed method uses a linear transformation of the separate parameter estimates to provide reasonable estimates of the beta coefficients (β), random effects (b1 and b2), and variance–covariance matrices (Σt1, Σt2, and Re) for a multivariate model. However, although the proposed model is able to provide reasonable approximate parameter estimates for the multivariate model by doing a separate analysis for each trait, it is unable to provide estimates of the standard error for off diagonal elements in the variance–covariance estimates.
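The back-transformation described above can be illustrated with a small numerical sketch. It assumes that the transformed responses are Y* = YV for an orthogonal matrix V (as obtained from an SVD of Y), so that per-trait estimates map back through β = β*V' and Σ = V diag(σ*²) V'. The rotation used for V and all numerical values are made up for illustration; the helper names are hypothetical.

```python
from math import cos, sin, pi

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def transpose(A):
    return [list(r) for r in zip(*A)]

def diag(values):
    n = len(values)
    return [[values[i] if i == j else 0.0 for j in range(n)] for i in range(n)]

# Assumed orthogonal matrix V: a 2-D rotation stands in for the right
# singular vectors of the response matrix.
theta = pi / 6
V = [[cos(theta), -sin(theta)],
     [sin(theta), cos(theta)]]

# Hypothetical estimates from two separate single-trait analyses
# on the transformed scale.
beta_star = [[10.0, 2.0]]  # one intercept per transformed trait
var_star = [4.0, 1.0]      # one variance per transformed trait

# Back-transformation to the original trait scale.
beta = matmul(beta_star, transpose(V))                    # beta = beta* V'
Sigma = matmul(matmul(V, diag(var_star)), transpose(V))   # Sigma = V diag V'
```

The off-diagonal elements of `Sigma` arise only through this linear combination of the separate variance estimates, which is consistent with the remark that standard errors for them are not available from the per-trait analyses.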

Based on the results of the simulated and real data sets, we have reason to argue that the proposed BMTME_Approx model produces competitive predictions compared to those produced by the BMTME model, even though the parameter estimates resulting from the proposed BMTME_Approx model are quite different from those resulting from the BMTME model (mainly in the wheat real data set). However, the differences in parameter estimates between the BMTME and the BMTME_Approx models can be attributed to the fact that the BMTME_Approx model estimates two genetic variance–covariance matrices for traits (one for the interaction term genotype × trait and the other for the three-way interaction term environment × genotype × trait), while the BMTME only estimates one variance–covariance for both interaction terms. Also, in terms of prediction accuracies for simulated data set 1 (simulated with environments and correlated traits), the BMTME was better than the BMTME_Approx model, but in the second simulated data set, we observed that the BMTME_Approx (that assumes independence between environments and correlated traits) was better than the BMTME (that assumes correlated traits between environments and traits), which can be attributed to the fact that this second data set was simulated assuming independence between environments and correlated traits.

It is also important to point out that the proposed BMTME_Approx model can be implemented when there are many traits. For example, in the HTP and Large EYT data sets where there are a large number of traits, the application of the BMTME model becomes almost impossible due to the fact that samples are extracted from a very large number of multivariate normal distributions, and the modeling process is performed jointly for all the traits and not separately for each trait, as in the proposed BMTME_Approx model. This is a key element for achieving an efficient estimation process in terms of implementation time. Additionally, the proposed BMTME_Approx model can be implemented simultaneously since the procedure for estimating the required parameters requires a separate analysis for each trait.

Another point that we would like to highlight is that our proposed model is multiple-trait and multiple-environment but with the restriction that an identity matrix is assumed for the variance–covariance matrix of environments. However, even with this restrictive assumption in the variance–covariance matrix of environments, the model has the advantage of taking into account the interaction terms environment × trait, genotype × trait, and the three-way interaction environment × genotype × trait. Furthermore, it takes into account the correlated traits and can be implemented using conventional software for whole-genome prediction.

Conclusions

The results of the simulated and real data sets show that the proposed alternative method produced results that are similar to those of the conventional multiple-trait analysis. For this reason, the proposed method is an attractive alternative for analyzing multiple-trait data in the context of a large number of traits. However, it is important to point out that the significant differences found in parameter estimates between the proposed BMTME_Approx model and the BMTME model can be attributed mainly to the fact that the BMTME_Approx model allows the estimation of two genetic variance–covariance matrices for traits, one for the interaction term genotype × trait, and the other for the term environment × genotype × trait, while the BMTME model only estimates one genetic matrix of variance–covariance for traits.

References

Bhat JA, Ali S, Salgotra RK, Mir ZA, Dutta S, Jadon V, Prabhu KV (2016) Genomic selection in the era of next generation sequencing for complex traits in plant breeding. Front Genet 7:221. https://doi.org/10.3389/fgene.2016.00221

Boichard D, Ducrocq V, Croiseau P, Fritz S (2016) Genomic selection in domestic animals: principles, applications and perspectives. C R Biol 339:274–277

Calus MP, Veerkamp RF (2011) Accuracy of multi-trait genomic selection using different methods. Genet Sel Evol 43:26. https://doi.org/10.1186/1297-9686-43-26

Chiquet J, Tristan M, Robin S (2013) Multi-trait genomic selection via multivariate regression with structured regularization. MLCB NIPS 2013, Oct 2013, South Lake Tahoe, United States

de los Campos G, Pérez-Rodríguez P (2014) Bayesian Generalized Linear Regression. R package version 1.0.4. http://CRAN.R-project.org/package=BGLR

Desta ZA, Ortiz R (2014) Genomic selection: genome-wide prediction in plant improvement. Trends Plant Sci 19:592–601. https://doi.org/10.1016/j.tplants.2014.05.006

Ducrocq V, Besbes B (1993) Solution of multiple trait animal models with missing data on some traits. J Anim Breed Genet 110:81–92

Elshire RJ, Glaubitz JC, Sun Q, Poland JA, Kawamoto K, Buckler ES, Mitchell SE (2011) A robust, simple genotyping-by-sequencing (GBS) approach for high diversity species. PLoS ONE 6:e19379. https://doi.org/10.1371/journal.pone.0019379

He D, Kuhn D, Parida L (2016) Novel applications of multitask learning and multiple output regression to multiple genetic trait prediction. Bioinformatics 32:i37–i43. https://doi.org/10.1093/bioinformatics/btw249

Hyvärinen A, Karhunen J, Oja E (2001) Independent component analysis, 1st edn. John Wiley & Sons, New York, ISBN 0-471-22131-7

Jia Y, Jannink J-L (2012) Multiple-trait genomic selection methods increase genetic value prediction accuracy. Genetics 192:1513–1522. https://doi.org/10.1534/genetics.112.144246

Jiang J, Zhang Q, Ma L, Li J, Wang Z, Liu JF (2015) Joint prediction of multiple quantitative traits using a Bayesian multivariate antedependence model. Heredity 115:29–36

Lin CY, Smith SP (1990) Transformation of multitrait to mixed model analysis of data with multiple random effects. J Dairy Sci 73:2494–2502

Märtens K, Hallin J, Warringer J, Liti G, Parts L (2016) Predicting quantitative traits from genome and phenome with near perfect accuracy. Nat Commun 7:11512. https://doi.org/10.1038/ncomms11512

Meuwissen THE, Hayes BJ, Goddard ME (2001) Prediction of total genetic value using genome-wide dense marker maps. Genetics 157:1819–1829

Montesinos-López OA, Montesinos-López A, Crossa J, Toledo F, Pérez-Hernández O, Eskridge KM, Rutkoski J (2016) A genomic Bayesian multi-trait and multi-environment model. G3 6:2725–2744

Montesinos-López OA, Montesinos-López A, Crossa J, Toledo FH, Montesinos-López JC, Singh P, Salinas-Ruiz J (2017a) A Bayesian Poisson-lognormal model for count data for multiple-trait multiple-environment genomic-enabled prediction. G3 7:1595–1606. https://doi.org/10.1534/g3.117.039974

Montesinos-López A, Montesinos-López OA, Cuevas J, Mata-López WA, Burgueño J, Mondal S, Huerta J, Singh R, Autrique E, González-Pérez L, Crossa J (2017b) Genomic Bayesian functional regression models with interactions for predicting wheat grain yield using hyper-spectral image data. Plant Methods 13:1–29

Montesinos-López OA, Montesinos-López A, Crossa J, de los Campos G, Alvarado G, Mondal S, Rutkoski J, González-Pérez L (2017c) Predicting grain yield using canopy hyperspectral reflectance in wheat breeding data. Plant Methods 13:1–23

Mrode RA (2014) Linear models for the prediction of animal breeding values. CABI, Boston, MA

Oliveira R, Teixeira-Pinto A (2015) Analyzing multiple outcomes: is it really worth the use of multivariate linear regression? J Biom Biostat 6:256. https://doi.org/10.4172/2155-6180.1000256

Poland JA, Brown PJ, Sorrells ME, Jannink JL (2012) Development of high-density genetic maps for barley and wheat using a novel two-enzyme genotyping-by-sequencing approach. PLoS ONE 7:e32253. https://doi.org/10.1371/journal.pone.0032253

R Core Team (2018) R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria, ISBN 3-900051-07-0. http://www.R-project.org/

Rutkoski JE, Heffner EL, Sorrells ME (2011) Genomic selection for durable stem rust resistance in wheat. Euphytica 179:161–173. https://doi.org/10.1007/s10681-010-0301-1

Rutkoski J, Poland J, Mondal S, Autrique E, González-Pérez L, Crossa J, Reynolds M, Singh R (2016) Predictor traits from high-throughput phenotyping improve accuracy of pedigree and genomic selection for grain yield in wheat. G3 6:2799–2808

Schaeffer LR (2006) Strategy for applying genome-wide selection in dairy cattle. J Anim Breed Genet 123:218–223

Schulthess AW, Wang Y, Miedaner T, Wilde P, Reif JC, Zhao Y (2016) Multiple-trait and selection indices-genomic predictions for grain yield and protein content in rye for feeding purposes. Theor Appl Genet 129:273–287

Schulthess AW, Zhao Y, Longin CFH, Reif JC (2017) Advantages and limitations of multiple-trait genomic prediction for Fusarium head blight severity in hybrid wheat (Triticum aestivum L.). Theor Appl Genet. https://doi.org/10.1007/s00122-017-3029-7

Srivastava MS, Khatri CG (1979) An introduction to multivariate statistics. Elsevier North Holland, New York, NY, USA

Stone JV (2004) Independent component analysis: a tutorial introduction. MIT Press, Cambridge, Massachusetts, ISBN 0-262-69315-1

Teixeira FRF, Nascimento M, Nascimento ACC, Silva FF, Cruz CD, Azevedo CF, Paixão DM, Barroso LMA, Verardo LL, Resende MDV, Guimarães SEF, Lopes PS (2016) Factor analysis applied to genome prediction for high-dimensional phenotypes in pigs. Genet Mol Res 15:gmr.15028231

Thompson R (1977) Estimation of quantitative genetic parameters. In: Pollak E, Kempthorne O, Bailey TB (eds) Proceedings of the International Conference on Quantitative Genetics. Iowa State University Press, Ames, IA, pp 639–657

Van Raden PM (2008) Efficient methods to compute genomic predictions. J Dairy Sci 91:4414–4423

Acknowledgements

We thank all CIMMYT scientists who were involved in conducting the extensive multi-environment maize and wheat trials and generated the phenotypic and genotypic data used in this study. We acknowledge the financial support provided by USAID and the Cornell-CIMMYT Genomic Selection projects in maize and wheat breeding financed by the Bill and Melinda Gates Foundation.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Montesinos-López, O.A., Montesinos-López, A., Crossa, J. et al. A singular value decomposition Bayesian multiple-trait and multiple-environment genomic model. Heredity 122, 381–401 (2019). https://doi.org/10.1038/s41437-018-0109-7

DOI: https://doi.org/10.1038/s41437-018-0109-7