Abstract

Purpose

Noninvasive prenatal screening (NIPS) for fetal aneuploidy via cell-free DNA has been commercially available in the United States since 2011. In 2016, the American College of Medical Genetics and Genomics (ACMG) issued a position statement with specific recommendations for testing laboratories. We sought to evaluate adherence to these recommendations.

Methods

We focused on commercial laboratories performing NIPS testing in the United States as of 1 January 2018. Sample laboratory reports and other materials were scored for compliance with ACMG recommendations. Variables scored for common and sex chromosome aneuploidy detection included detection rate, specificity, positive and negative predictive value, and fetal fraction. Labs that performed analysis of copy-number variants and results for aneuploidies other than those commonly reported were identified. Available patient education materials were similarly evaluated.

Results

Nine of 10 companies reported fetal fraction in their reports, and 8 of 10 did not offer screening for autosomal aneuploidies beyond trisomy 13, 18, and 21. There was inconsistency in the application and reporting of other measures recommended by ACMG.

Conclusions

Laboratories varied in the degree to which they met ACMG position statement recommendations. No company adhered to all laboratory guidance.


INTRODUCTION

Since 2011, noninvasive prenatal screening (NIPS) has entered clinical practice through independent commercial companies and academic and hospital-based laboratories, with some estimates projecting an annualized growth rate of 15% between 2017 and 2027.1 In 2016, the American College of Medical Genetics and Genomics (ACMG) published an updated position statement on NIPS for fetal aneuploidy.2 While some recommendations overlapped with those from the American College of Obstetricians and Gynecologists (ACOG)/Society for Maternal–Fetal Medicine (SMFM),3 the National Society of Genetic Counselors (NSGC),4 and International Society for Prenatal Diagnosis (ISPD),5 the ACMG recommendations were distinct in suggesting that testing laboratories adhere to a specific format and content when reporting results. The 2016 ACMG document states that detection rate (DR), specificity (SPEC), positive predictive value (PPV), negative predictive value (NPV), and fetal fraction (FF) should be included when reporting results for trisomies 13, 18, 21, sex chromosome aneuploidies, and copy-number variants (CNVs).2 Laboratories were also “encouraged to meet the needs of providers and patients by delivering meaningful screening reports, engaging in education, and identifying ways to address distributive justice.”2 This study attempted to assess laboratory adherence to the ACMG’s NIPS recommendations two years after their publication.2
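For readers less familiar with the four statistics named in the ACMG statement, the following minimal Python sketch shows how each is conventionally computed from a two-by-two table of screening results. The class name, function name, and counts are illustrative assumptions and are not data from any laboratory in this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreenCounts:
    """Counts from a hypothetical validation cohort (illustrative only)."""
    true_pos: int   # affected pregnancies that screened positive
    false_pos: int  # unaffected pregnancies that screened positive
    false_neg: int  # affected pregnancies that screened negative
    true_neg: int   # unaffected pregnancies that screened negative


def screening_metrics(c: ScreenCounts) -> dict[str, float]:
    """Return DR, SPEC, PPV, and NPV as fractions between 0 and 1."""
    return {
        # Detection rate (sensitivity): share of affected cases detected.
        "DR": c.true_pos / (c.true_pos + c.false_neg),
        # Specificity: share of unaffected cases correctly screened negative.
        "SPEC": c.true_neg / (c.true_neg + c.false_pos),
        # Positive predictive value: share of positive screens truly affected.
        "PPV": c.true_pos / (c.true_pos + c.false_pos),
        # Negative predictive value: share of negative screens truly unaffected.
        "NPV": c.true_neg / (c.true_neg + c.false_neg),
    }


# Hypothetical counts chosen only to exercise the formulas.
example = ScreenCounts(true_pos=99, false_pos=10, false_neg=1, true_neg=9890)
metrics = screening_metrics(example)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

DR and SPEC describe the test itself, whereas PPV and NPV also depend on how common the condition is in the screened population, which is why the position statement treats them as separate reporting items.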

MATERIALS AND METHODS

Identification of NIPS

Our team identified NIPS tests commercially available in the United States as of 1 January 2018. For this analysis, we excluded NIPS from single-health systems, single-hospital systems, or academic/university settings. We also excluded any umbrella companies (e.g., LabCorp), which did not directly sell commercially available NIPS; instead, we focused on their subsidiary testing companies (e.g., Integrated Genetics and Sequenom). We chose not to include companies that advertised selling NIPS as of 1 January 2018, but either did not appear to have launched their NIPS or stopped selling NIPS during our analysis period. An initial listing of NIPS was obtained from Concert Genetics (www.concertgenetics.com) and the National Society of Genetic Counselors. We also conducted an Internet search using the terms “prenatal test,” “prenatal screen,” “NIPT,” “NIPS,” “noninvasive prenatal screen,” and “noninvasive prenatal test.”

Analyses

We collected patient education materials and sample reports for each NIPS test result via the companies’ web pages, exhibit booths at the 2018 ACMG Annual Meeting, and/or direct request (see Supplementary Materials for listing of source documents). Authors were divided into groups of two, and each group analyzed one NIPS laboratory for its adherence to all of the recommendations that pertained to laboratories in the 2016 ACMG position statement.2 This primary analysis used a categorical rating scale: green (full adherence), yellow (partial adherence), and red (little to no evidence of adherence). Where we were unable to obtain information or test reports to assess compliance, the criterion was marked as “could not assess.”

After the initial analyses were performed, a second analysis of the same material was carried out. This time one team member was assigned to analyze one or two recommendations across all NIPS laboratories. The same categorical rating was applied. Differences between primary and secondary analyses were reconciled through discussion and, when necessary, full-team analysis. In all cases, reconciliation was achieved.
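The two-pass rating procedure described above can be sketched as a simple comparison of two rating tables. This is only an illustration of the bookkeeping, assuming ratings are keyed by a (laboratory, recommendation) pair; the names `Rating`, `find_discrepancies`, "Lab A", and "Rec 1" are hypothetical and do not reflect the tools the team actually used.

```python
from enum import Enum


class Rating(Enum):
    """Categorical scale used in the primary and secondary analyses."""
    GREEN = "full adherence"
    YELLOW = "partial adherence"
    RED = "little to no evidence of adherence"
    NOT_ASSESSED = "could not assess"


# A rating table maps (laboratory, recommendation) to a Rating.
Ratings = dict[tuple[str, str], Rating]


def find_discrepancies(primary: Ratings, secondary: Ratings) -> list[tuple[str, str]]:
    """List the (laboratory, recommendation) pairs whose two ratings differ.

    A pair rated in only one pass is also flagged, because it still
    needs discussion before a final rating can be recorded.
    """
    keys = sorted(set(primary) | set(secondary))
    return [key for key in keys if primary.get(key) != secondary.get(key)]


# Placeholder laboratory and recommendation labels (hypothetical).
primary = {("Lab A", "Rec 1"): Rating.YELLOW, ("Lab A", "Rec 3"): Rating.GREEN}
secondary = {("Lab A", "Rec 1"): Rating.RED, ("Lab A", "Rec 3"): Rating.GREEN}

to_reconcile = find_discrepancies(primary, secondary)
print(to_reconcile)
```

Only the flagged pairs would then go to discussion or full-team analysis; matching pairs keep their shared rating.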

RESULTS

In total, we identified 11 NIPS tests that met our selection criteria, and we obtained sufficient materials to analyze ten companies (Table 1). Progenity’s Innatal Prenatal Screen was not analyzed due to a lack of available materials during the analysis timeframe. A summary of results organized by laboratory is shown in Table 2. We present adherence to the recommendations in the order in which they first appear in the ACMG guidelines.

Recommendation 1 [Common Aneuploidy]

Laboratories should provide readily visible and clearly stated detection rate (DR), specificity (SPEC), positive predictive value (PPV), and negative predictive value (NPV) for conditions being screened, in pretest marketing materials, and when reporting laboratory results to assist patients and providers in making decisions and interpreting results.

None of the ten NIPS laboratories consistently provided all four of these statistics on both the pretest marketing materials and the laboratory reports (Table 2). InformaSeq did not delineate PPV and NPV for trisomies 13 and 18. MaterniT21 PLUS did not consistently have DR and specificity listed on pretest marketing materials; PPV and NPV were not included on result reports or in pretest marketing materials. Harmony did not include PPV and NPV in pretest marketing materials. QNatal did not include PPV and NPV on laboratory results or in pretest marketing materials; DR and sensitivities were not listed in all available pretest marketing materials. Panorama did not list SPEC, PPV, or NPV in their pretest marketing materials for patients. Determine 10 did not provide PPV or NPV on the result reports; none of the four statistics were listed in the pretest marketing materials. Prelude and the Informed Prenatal Test did not consistently report the four statistics in the pretest marketing materials. PathGroup’s NIPS did not list NPV on laboratory results or pretest marketing materials. ClariTest did not mention any of the four statistics in the laboratory results, and only a few of them were mentioned in patient-specific pretest marketing materials.

Recommendation 2

ACMG does not recommend NIPS to screen for autosomal aneuploidies other than those involving chromosomes 13, 18, and 21.

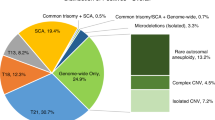

Eight of the laboratories met this criterion, while two did not (Table 2). Those two were MaterniT21 PLUS, which screened for trisomies 16 and 22, and Determine 10, which screened for trisomies 9 and 16.

Recommendation 3

All laboratories should include a clearly visible fetal fraction on NIPS reports.

Nine of the laboratories met this criterion, while one did not (Table 2). Determine 10 did not include fetal fractions on the results report.

Recommendation 4

All laboratories should specify the reason for a no-call when reporting NIPS results.

Five of the laboratories fully met this criterion (Table 2). We were unsuccessful in eliciting responses from the remaining laboratories after requests for clarification.

Recommendation 5 [Sex Chromosome Aneuploidy]

Laboratories include easily recognizable and highly visible DR, SPEC, PPV, and NPV for each sex chromosome aneuploidy when reporting results to assist patients and providers in making decisions and interpreting results.

None of the laboratories met this criterion fully (Table 2). InformaSeq did not offer PPV or NPV on result reports. MaterniT21 PLUS, Harmony, and PathGroup’s NIPS did not offer any of the four statistics. QNatal listed DR, SPEC, and PPV for all of the sex chromosome aneuploidies combined, but not individually; NPV was not listed. A sample report also stated, “Y chromosomal material was not detected. Consistent with female fetus [sic].” The Panorama sample report for XXY did not include NPV, and DR and SPEC were listed as “not applicable.” Determine 10 did not include PPV or NPV on the result reports. Prelude did not include PPV or NPV on the sample report for Turner syndrome; none of the statistics were reported on the result report for trisomy X. Informed Prenatal Test did not include PPV or NPV regularly. ClariTest did not list PPV or NPV for Turner syndrome, and limited data were cited for XXY, XXX, and XYY.

Recommendation 6

Laboratory requisitions and pretest counseling information should specify the DR, SPEC, PPV, and NPV of each copy-number variant (CNV) screened. This material should state whether PPV and NPV are modeled or derived from clinical utility studies (natural population or sample with known prevalence).

Six NIPS laboratories offered screening for CNVs. Of these, MaterniT21 PLUS did not list PPV or NPV for the CNVs in its pretest marketing materials. Harmony included DR and SPEC for 22q11 microdeletion syndrome in pretest marketing materials for providers, but not for patients. In no locations were PPV and NPV listed. Panorama, Prelude, QNatal, and PathGroup’s NIPS did not routinely report the four statistics.

Recommendation 7

Laboratories should include easily recognizable and highly visible DR, SPEC, PPV, and NPV for each copy-number variant (CNV) screened when reporting laboratory results to assist patients and providers in making decisions and interpreting results. Reports should state whether PPV and NPV are modeled or derived from clinical utility studies (natural population or sample with known prevalence).

Of the laboratories offering CNV screening, the Panorama test did list all four statistics on its sample report for 22q11 deletion syndrome. MaterniT21 PLUS, Harmony, QNatal, Prelude, and PathGroup’s NIPS did not regularly provide all four statistics; some of these companies cited “insufficient prevalence data,” even when such data were available. Furthermore, the ACMG position statement allows the use of modeled data when laboratory-specific data are not available.

Recommendation 8

Laboratories provide patient-specific PPV when reporting positive test results. Laboratories provide population-derived PPV when reporting positive results in cases in which patient-specific PPV could not be determined due to unavailable clinical information. Laboratories provide modeled PPV when reporting positive results for which neither patient-specific nor population-derived PPV are possible.

QNatal’s sample report for Down syndrome listed an “age-adjusted” PPV, demonstrating to the clinician that patient-specific variables like age were taken into account. InformaSeq’s sample report and pretest marketing materials relied on a single clinical study and were not patient-specific, nor did they seem to be based on the laboratory’s experience with actual results. MaterniT21 PLUS, Determine 10, Harmony, Prelude, and ClariTest did not regularly offer PPVs or explanations for the clinician on how they might be derived. On a sample report for Panorama, the PPV was described as incorporating the “Panorama Algorithm,” but no information was offered for the clinician on what this included. The Informed Prenatal Test made use of Illumina’s published performance with verification using their own data. This screen also appeared to provide a patient-specific PPV for a “positive” Down syndrome report when maternal age was known. PathGroup’s NIPS reports have a spot for PPV information for autosomal aneuploidies; however, we did not have a sample report with an actual value to determine how this was explained. On a sample report for a sex chromosome aneuploidy, PathGroup stated that performance data are limited, “precluding accurate calculation of a PPV.”
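To illustrate why a patient-specific PPV differs from a single published figure, the sketch below applies Bayes' rule to a fixed DR and SPEC across different prior risks. The DR, SPEC, and prior-risk values are assumptions chosen for illustration; they are not the performance figures of any laboratory assessed here, and this is not a description of any laboratory's actual algorithm.

```python
def ppv(dr: float, spec: float, prior: float) -> float:
    """Positive predictive value from detection rate, specificity, and prior risk.

    Bayes' rule: PPV = DR * prior / (DR * prior + (1 - SPEC) * (1 - prior)).
    """
    true_pos = dr * prior                    # affected and screen positive
    false_pos = (1.0 - spec) * (1.0 - prior) # unaffected and screen positive
    return true_pos / (true_pos + false_pos)


# Assumed test performance (hypothetical, for illustration only).
DR, SPEC = 0.99, 0.999

# Assumed prior risks, e.g. as might be derived from maternal age.
priors = {"1 in 1000": 1 / 1000, "1 in 100": 1 / 100}

for label, prior in priors.items():
    print(f"prior risk {label}: PPV = {ppv(DR, SPEC, prior):.3f}")
```

With test performance held constant, the PPV rises as the prior risk rises, which is the reason the recommendation asks for patient-specific values first, then population-derived values, and modeled values only as a last resort.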

Concluding Comment

Laboratories are encouraged to meet the needs of providers and patients by delivering meaningful screen reports, engaging in education, and identifying ways to address distributive justice, a medical ethical principle that challenges genomics-based innovative and clinically useful technologies.

This concluding comment occurs at the end of the 2016 ACMG position statement.2 In the absence of explicit benchmarks for this multifaceted comment, but in light of its important and distinctive charges, we evaluated companies based on (1) the inclusion of information on screen reports to guide results interpretation, (2) the inclusion of educational information on websites and pretest marketing materials, (3) offering links to independent educational resources and patient support organizations, (4) offering programs to support costs for low-resource and underinsured patients, and (5) providing clear and easily accessible information on costs and available discount programs. While it can be difficult to fully distinguish between materials that might be considered educational as opposed to marketing-based, we based our criteria on whether the language and statistics presented were solely focused on the benefits of the specific test being marketed versus materials that included other clinical options in a value neutral way. We also assessed whether materials provided links to neutral third parties, such as professional societies or well-known advocacy organizations.

No laboratory fully met this charge, but likewise no laboratory completely failed to meet it. Test reports from several laboratories provided little or no explanatory information, or links to such information, to guide interpretation of results. However, PathGroup’s NIPS included a second page in its screen reports that contained information, aimed at providers counseling patients, to aid results interpretation and next steps (including links to patient support organizations). Prelude screen reports included clear, patient-centered information about screen-positive conditions, along with links to patient support resources online. Harmony screen reports contained a link to its website, leading to significant patient- and provider-directed information about the test and the screened conditions.

Reports varied in their overall level of readability according to standard reading grade level scales. Websites and pretest marketing materials contained variable amounts of educational information, typically intermingled with marketing messaging. The most common deficiency in this area was a failure to provide or link to contextual, psychosocially nuanced materials regarding the conditions for which NIPS is available.

Prelude and PathGroup’s NIPS included educational information that duplicated the information included on screen reports, with the same links to external patient support resources and organizations. Few other laboratories contained links to independent educational or patient support resources; Harmony and Informed Prenatal Test were two exceptions. ClariTest offered educational materials on its website and included a guide to help providers explain PPV. Panorama, Determine 10, QNatal, InformaSeq, and MaterniT21 PLUS also offered variable amounts of educational information on their websites and in printed materials. Several laboratories noted that they offer genetic counseling support for patients and/or providers to aid in results interpretation.

Regarding access to NIPS for low-income or underinsured patients, we found limited information on laboratory websites or printed materials. Few laboratories offered explicit cost information or up-front information on specific discounts for patients with limited resources. Exceptions included QNatal, which offered free or discounted testing based on income, with amounts clearly stated on their website along with an application for this program. Prelude offered discounted or free testing to qualified patients, and the income qualifications for free testing were detailed on the website. Six other tests (InformaSeq, MaterniT21 PLUS, Harmony, Panorama, Informed Prenatal Test, and ClariTest) mentioned programs offering discounts to qualified patients but required patients or providers to contact them for further information and/or to negotiate such access. We found no mention of any patient discount or accessibility initiatives for Determine 10 or PathGroup’s NIPS.

When assessing the labs for their provision of or references to patient education resources as recommended in the ACMG recommendations, we applied a broad assessment to acknowledge good faith efforts by labs to provide condition-specific patient educational resources. Labs were noted as making progress if any ACMG-recommended patient education materials (or other nationally recognized patient advocacy groups) were cited on at least one of the following mediums: websites, lab reports, or marketing materials. Labs were noted as fulfilling the ACMG criteria if they listed the majority of ACMG-recommended resources through at least one of the aforementioned mediums. One lab, QNatal, met this criterion by providing links to the majority of ACMG-recommended resources in their online patient content resources, in addition to links to nationally recognized patient advocacy groups. Half of the tests (InformaSeq, MaterniT21 PLUS, Informed Prenatal Test, Panorama, and Determine 10) provided no references to ACMG-recommended patient education resources or nationally recognized patient advocacy groups and did not meet the ACMG criteria in any form. ClariTest listed some nationally recognized patient advocacy groups in their patient brochure but none of the resources recommended by ACMG, and PathGroup’s NIPS made a good faith effort to create patient education sheets for different conditions but only included one of the ACMG-recommended resources. Both Prelude and Harmony listed some ACMG-recommended resources in their online patient portals, and Prelude further provided references to the recommended resources for specific conditions in their lab reports.
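The scoring rule for patient education resources can be expressed as a short decision function. The sketch below is one possible reading of the rule, assuming that "majority" means more than half of the ACMG-recommended resources appearing within a single medium; the function name, the status labels, and the placeholder resource names are hypothetical.

```python
# The three mediums named in the scoring rule.
MEDIUMS = ("website", "lab_report", "marketing")


def education_status(
    cited: dict[str, set[str]],
    recommended: set[str],
    advocacy_groups: set[str],
) -> str:
    """Classify a lab's patient education references.

    cited maps each medium to the set of resources it references.
    recommended is the set of ACMG-recommended resources.
    advocacy_groups is the set of nationally recognized advocacy groups.
    """
    # Largest number of recommended resources found in any single medium.
    best = max(len(cited.get(m, set()) & recommended) for m in MEDIUMS)
    if recommended and best > len(recommended) / 2:
        return "fulfilled"
    # Any recommended resource or recognized group in any medium counts as progress.
    accepted = recommended | advocacy_groups
    if any(cited.get(m, set()) & accepted for m in MEDIUMS):
        return "making progress"
    return "not met"


# Placeholder resource names (hypothetical).
recommended = {"R1", "R2", "R3", "R4"}
advocacy_groups = {"Group X"}

print(education_status({"website": {"R1", "R2", "R3"}}, recommended, advocacy_groups))
print(education_status({"lab_report": {"Group X"}}, recommended, advocacy_groups))
print(education_status({}, recommended, advocacy_groups))
```

A stricter reading, in which the majority must be reached across all mediums combined, would only require taking the union of the cited sets before counting.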

DISCUSSION

This is the first report that evaluates laboratory compliance with professional organization recommendations around reporting results for noninvasive prenatal screening. As recommended by the ACMG, 9 of 10 companies reported fetal fractions on their reports; 8 of 10 did not offer screening for autosomal aneuploidies beyond trisomy 13, 18, and 21; and all specified the reasons for reporting no-call results for reports that could be assessed. Conversely, there was considerable inconsistency and inadequacy in reporting the full range of statistics recommended by the ACMG. The 2016 ACMG recommendations state the provision of DR, SPEC, PPV, and NPV on results is a necessary precondition for valid provision of testing for common trisomies, sex aneuploidies, and copy-number variants.2 Based on this analysis, none of the laboratories analyzed met the standard for common trisomies and sex aneuploidies and only Panorama met the standards for copy-number variants. The ACMG recommendations were clear that “laboratories should not offer screening” for these genetic conditions “if they cannot report DR, SPEC, PPV, and NPV for these conditions.” In sum, all laboratories followed some of the 2016 ACMG recommendations, but none of the laboratories met all of them.

While laboratories are not obligated to adhere to ACMG guidelines, what remains unclear from this type of study is why they at times chose not to do so. One possibility is that portions of the 2016 ACMG recommendations have become outdated (or were not even fully justified when originally published) as laboratory methodologies have been refined. Another possibility is that the provisions of the 2016 ACMG recommendations are both current and well justified, but that commercial forces are at play: laboratories that offer NIPS testing need to compete well in a crowded and lucrative market, as other authors have assessed.6 Another possibility is that the 2016 ACMG recommendations were disregarded by some or all laboratories, which nevertheless showed apparent compliance where those recommendations overlapped with recommendations from other groups. At the very least, the 2016 ACMG recommendations are not viewed by laboratories as authoritative, as none of the laboratories followed all of the recommendations. Confirmation bias speaks to the tendency to interpret information consistent with a predetermined point of view. The 2016 ACMG position statement was the first professional guideline to recommend “informing all pregnant women that NIPS is the most sensitive screening option for traditionally screened aneuploidies (i.e., Patau, Edwards, and Down syndromes).” This recommendation has not gone unnoticed by laboratories.

The ACMG recommendations also say that laboratories should support the educational and access needs of patients and providers. However, a lack of specific, measurable benchmarks limits the ability of laboratories to make well-informed and good faith efforts to meet this criterion.

Patients receiving a prenatal diagnosis of Down syndrome are 2.5 times more likely to rate their experience as negative than positive.7 The ACMG recommendation to provide patient education resources offers labs a unique opportunity to give clinicians meaningful tools to improve the diagnostic experience and better meet the needs of patients.8 In the past 10 years, a number of patient education resources and tools have been developed that represent a consensus between the medical and patient advocacy communities to fulfill the needs of patients as acknowledged in the ACMG criteria. While about half the labs made good faith efforts to meet our scoring criteria, about half the labs did not recommend any specific resources to patients or providers. To ensure patients and providers can locate and identify credible, medically accurate patient education materials, we recommend that labs be consistent in including references to all ACMG-recommended resources in lab reports, patient education materials, and online patient content. Aligned with the principle of distributive justice, we suggest that laboratories provide these materials to providers when high-risk results are delivered. Expectant parents have reported that providers do not always provide accurate and up-to-date information around the time of result disclosure.9 By providing patient educational materials identified by ACMG at these vulnerable times, laboratories could help ensure that clinicians have the timely resources for their counseling sessions. The ACMG and other national organizations (e.g., ACOG, SMFM, NSGC) might also consider the roles that they can play in the distribution of these patient educational materials.

Our analysis obligated us to take a closer look at the 2016 ACMG recommendations. At the time the position statement was written, there was considerable national discussion around PPV of NIPS and prenatal genetic screening in general. Expectations, by some, were that the PPV for NIPS would be low relative to the DR. Instead, randomized controlled trials established two important findings: the first was that the PPV for classical approaches to aneuploidy screening was very low (<10%); the second was that PPV for NIPS was generally over 50% in low-risk and high-risk groups.10,11,12 The ACMG placed heavy weight on reporting all test metrics (DR, SPEC, PPV, and NPV). In some cases, reporting all test metrics does not make sense. For example, it would not make sense to report a PPV when a test is negative. In other cases, a true PPV cannot be determined. In most cases of sex chromosome aneuploidy a true positive is not readily determined at birth, and it is impractical for laboratories to follow patients for years. To this extent, some of our ratings for the laboratories might be restrictively low because we assessed adherence against the guidelines as they were written in 2016.

Similarly, the ACMG recommendation that fetal fraction be reported was based on findings from randomized controlled trials that suggested an increased risk of fetal aneuploidy after a “no-call” due to low fetal fraction.11 In addition, a controversial study emphasized the need to confirm there was fetal DNA in maternal circulation.13 A low fetal fraction in 2015, when the ACMG writing group convened, was defined as 4%;14 however, at its inception this number was not based on stringent criteria. In fact, laboratories can make a “call” today at fetal fractions below 4%. The External Quality Assessment Specialist Advisory Group, a consortium of European genetics labs, recently published guidance for the reporting of NIPS for their laboratories.15 The group felt that the minimal fetal fraction needed for the limit of detection is not only related to the specific approach but also to the sequencing depth. As such, while the group “could not reach consensus on reporting fetal fraction, it was decided that if reported, the significance in relation to the test result should be made clear.”15 Whether some laboratories did not report fetal fraction for any of these reasons is unknown.

Our study is not without limitations. Our team acknowledged that the provisions of the ACMG recommendations should not necessarily receive equal weight. A failure to link to one specific patient resource is, of course, much less problematic than failing to provide the statistics necessary to correctly interpret a test result. In addition, based on the information we received from laboratories, we were unable to assess one of the recommendations from the 2016 ACMG document: “all laboratories should establish and monitor analytical and clinical validity for fetal fraction.”2 Overall, while we made concerted efforts to obtain all available information from each assessed laboratory, we also recognize that we may have overlooked information that some laboratories communicate to providers and patients. In particular, some laboratories rely on patient and provider portals to which we did not have access, and these may contain additional interpretive and educational information. As further information is received, we intend to update a version of Table 2, which will be posted at the Prenatal Research Information Consortium (https://prenatalinformation.org/table). Future research might include a survey of laboratories, asking why they did not embrace all of the 2016 ACMG recommendations so that revisions of these recommendations can be implemented.

As laboratories continue to improve and revise their NIPS reports and communication with patients and providers, we believe our findings can be useful. Laboratories are increasingly leaving the confines of their lab (e.g., analysis and reporting) and are edging closer to the clinical arena (e.g., interpreting within a clinical context and counseling). They should be held to standards of care and best practices. We maintain that consistency and transparency in test content, education, and reporting would benefit patients and providers by improving medical practice assessment and patient informed decision making. In addition, payers would be able to more accurately determine the relative merits of NIPS tests and better understand pricing structures. Finally, when ACMG updates its existing recommendations, we hope that this assessment process will help to clarify the benchmarks and milestones useful for evaluating laboratory NIPS services.

References

Global non-invasive prenatal testing and newborn screening market, 2017-2027. https://www.prnewswire.com/news-releases/global-non-invasive-prenatal-testing-and-newborn-screening-market-2017-2027-300479661.html. Accessed 24 October 2018.

Gregg AR, Skotko BG, Benkendorf JL, et al. Noninvasive prenatal screening for fetal aneuploidy, 2016 update: a position statement of the American College of Medical Genetics and Genomics. Genet Med. 2016;18:1056–1065.

Committee on Practice Bulletins—Obstetrics, Committee on Genetics, and the Society for Maternal-Fetal Medicine. Practice bulletin no. 163: screening for fetal aneuploidy. Obstet Gynecol. 2016;127:e123–137.

Devers PL, Cronister A, Ormond KE, Facio F, Brasington CK, Flodman P. Noninvasive prenatal testing/noninvasive prenatal diagnosis: the position of the National Society of Genetic Counselors. J Genet Couns. 2013;22:291–295.

Benn P, Borrell A, Chiu RW, et al. Position statement from the Chromosome Abnormality Screening Committee on behalf of the Board of the International Society for Prenatal Diagnosis. Prenat Diagn. 2015;35:725–734.

Glenn EP, Robert GB. Sequencing cell-free DNA in the maternal circulation to screen for Down syndrome, other common trisomies, and selected genetic disorders. In: Netto GJ, Kaul KL, Best, BPG, eds. Genomic Applications in Pathology. 2nd ed. 2019 edition. New York, NY: Springer; 2018. p. 721–749.

Nelson Goff BS, Springer N, Foote LC, et al. Receiving the initial Down syndrome diagnosis: a comparison of prenatal and postnatal parent group experiences. Intellect Dev Disabil. 2013;51:446–457.

Levis DM, Harris S, Whitehead N, Moultrie R, Duwe K, Rasmussen SA. Women’s knowledge, attitudes, and beliefs about Down syndrome: a qualitative research study. Am J Med Genet A. 2012;158A:1355–1362.

Skotko BG, Kishnani PS, Capone GT, Down Syndrome Diagnosis Study Group. Prenatal diagnosis of Down syndrome: how best to deliver the news. Am J Med Genet A. 2009;149A:2361–2367.

Bianchi DW, Parker RL, Wentworth J, et al. DNA sequencing versus standard prenatal aneuploidy screening. N Engl J Med. 2014;370:799–808.

Norton ME, Jacobsson B, Swamy GK, et al. Cell-free DNA analysis for noninvasive examination of trisomy. N Engl J Med. 2015;372:1589–1597.

Zhang H, Gao Y, Jiang F, et al. Non-invasive prenatal testing for trisomies 21, 18 and 13: clinical experience from 146,958 pregnancies. Ultrasound Obstet Gynecol. 2015;45:530–538.

Takoudes T, Hamar B. Performance of non-invasive prenatal testing when fetal cell-free DNA is absent. Ultrasound Obstet Gynecol. 2015;45:112.

Pergament E, Cuckle H, Zimmermann B, et al. Single-nucleotide polymorphism-based noninvasive prenatal screening in a high-risk and low-risk cohort. Obstet Gynecol. 2014;124:210–218.

Deans ZC, Allen S, Jenkins L, et al. Recommended practice for laboratory reporting of non‐invasive prenatal testing of trisomies 13, 18 and 21: a consensus opinion. Prenat Diagn. 2017;37:699–704.

Acknowledgements

The authors would like to thank Judith Benkendorf for her early assistance with this research.

Ethics declarations

Disclosure

Financial conflicts of interest: B.G.S. occasionally consults on the topic of Down syndrome (DS) through Gerson Lehrman Group. He receives remuneration from DS nonprofit organizations for speaking engagements and associated travel expenses. B.G.S. receives annual royalties from Woodbine House, Inc., for the publication of his book, Fasten Your Seatbelt: A Crash Course on Down Syndrome for Brothers and Sisters. Within the past 2 years, B.G.S. has received research funding from F. Hoffmann–La Roche, Inc. to conduct clinical trials on study drugs for people with DS. B.G.S. is occasionally asked to serve as an expert witness for legal cases where DS is discussed. M.W.L. and S.M. are employees of the National Center for Prenatal & Postnatal Resources at the University of Kentucky, which produces and sells patient educational material recommended by professional guidelines; M.W.L. and S.M. receive no direct compensation from the sale of such materials. K.B. has financial holdings in GenomeSmart, a patient education platform. M.A.A. is an employee of Mayo Clinic, which provides cell-free DNA testing services through Mayo Medical Laboratories; M.A.A. receives no direct compensation from the sale of such tests. A.R.G. participated in the production of a continuing medical education (CME) activity on NIPT for Medscape.

Other conflicts of interest: Beyond the items mentioned in the financial disclosures above, B.G.S. serves in a nonpaid capacity on the Honorary Board of Directors for the Massachusetts Down Syndrome Congress, the Board of Directors for the Band of Angels Foundation, and the Professional Advisory Committee for the National Center for Prenatal and Postnatal Down Syndrome Resources. B.G.S. has a sister with DS. M.W.L. serves in a nonpaid capacity on the D.S. Education Foundation for Down Syndrome of Louisville. M.W.L. has a daughter with DS. S.M. has a son with DS. S.K. and A.R.G. serve in a nonpaid capacity on the Board of Directors for the American College of Medical Genetics and Genomics (ACMG). A.R.G. also serves on the ACMG Foundation Board of Directors. M.A.A. has received travel funding compensation from the Association for X and Y Chromosome Variations. The other authors declare no conflicts of interest.

Cite this article

Skotko, B.G., Allyse, M.A., Bajaj, K. et al. Adherence of cell-free DNA noninvasive prenatal screens to ACMG recommendations. Genet Med 21, 2285–2292 (2019). https://doi.org/10.1038/s41436-019-0485-2
