Abstract

To evaluate all simulation models for ophthalmology technical and non-technical skills training and the strength of evidence to support their validity and effectiveness. A systematic search was performed using PubMed and Embase for studies published from inception to 01/07/2019. Studies were analysed according to the training modality: virtual reality; wet-lab; dry-lab models; e-learning. The educational impact of studies was evaluated using Messick’s validity framework and McGaghie’s model of translational outcomes for evaluating effectiveness. One hundred and thirty-one studies were included in this review, with 93 different simulators described. Fifty-three studies were based on virtual reality tools; 47 on wet-lab models; 26 on dry-lab models; 5 on e-learning. Only two studies provided evidence for all five sources of validity assessment. Models with the strongest validity evidence were the Eyesi Surgical, Eyesi Direct Ophthalmoscope and Eye Surgical Skills Assessment Test. Effectiveness ratings for simulator models were mostly limited to level 2 (contained effects) with the exception of the Sophocle vitreoretinal surgery simulator, which was shown at level 3 (downstream effects), and the Eyesi at level 5 (target effects) for cataract surgery. A wide range of models have been described but only the Eyesi has undergone comprehensive investigation. The main weakness is in the poor quality of study design, with a predominance of descriptive reports showing limited validity evidence and few studies investigating the effects of simulation training on patient outcomes. More robust research is needed to enable effective implementation of simulation tools into current training curriculums.


Introduction

Historically, training in ophthalmology, as in other surgical specialties, has been based on a Halstedian model of apprenticeship learning. Trainees are assumed to be competent upon completing a minimum number of surgical procedures. Changes to the clinical environment and professional values have forced a review of this approach [1]. One of the problems associated with this model is the inconsistency in levels of knowledge and skills gained due to variations in clinical exposure and educational opportunities [2]. Using the total number of procedures that a trainee has performed as a benchmark for skill is also problematic, as quantity does not equate to quality and competency cannot be accurately discerned in this way. Reductions in training hours due to regulations such as the European Working Time Directive further limit potential training opportunities [3]. Furthermore, growing ethical concerns over the use of patients for training purposes [4] are having a major impact on training, particularly in the early stages of the learning curve. Studies have shown a close correlation between experience and complication rate [5, 6].

These issues highlight the need for improved training programmes with the development and objective assessment of proficiency prior to treating patients. Simulation models offer a platform for trainees to improve their clinical and surgical skills, enabling focussed, competency-based training without putting patients at risk. The healthcare sector is continually making rapid technological advances and the development of simulator models as safe and effective tools for training and assessment has risen dramatically. This trend has been observed within the field of ophthalmology [7], but the extent to which simulation is used varies widely between different training programmes. Its role remains limited by a lack of formal, standardised integration into existing curricula.

The purpose of this systematic review is to comprehensively evaluate the effectiveness and validity of all simulator models developed for ophthalmic training to date.

Methods

This review was carried out following the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-analyses) statement and was registered on the international prospective register of systematic reviews, “PROSPERO”, before the study was conducted (registration number: CRD42018087929).

Eligibility criteria

All original studies were included if they described simulation or e-learning for technical or non-technical skills development in ophthalmic training. Inclusion criteria for study participants were ophthalmologists of any grade and medical students who had completed or were completing their ophthalmology attachment. Studies were excluded if they did not provide original data, were not specific to ophthalmology, or did not use simulation for training or assessment purposes. We included all papers irrespective of language.

Search methods

A systematic search of PubMed and Embase was carried out, using the terms “(simulat* OR virtual reality OR wet lab OR cadaver OR model OR e-learning) AND ophthalm* AND (training OR programme OR course)”. Search date was from inception to 01/07/2019. Reference lists from included articles and relevant reviews were hand searched for eligible studies.

Study selection

Two authors, RL and WYL, carried out independent, duplicate searches. All abstracts were reviewed and articles that were potentially eligible were read in full. A final list of studies meeting the eligibility criteria was compared and disagreements resolved by discussion (Fig. 1).

Data collection

The same two authors extracted data for each study separately and differences were resolved through discussion. Data collected included details of the simulator model, type of study design, number of participants and their training level, training task(s) involved, duration of training, and outcome data addressing validity and effectiveness of the model.

Data analysis

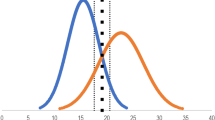

Studies were grouped according to simulator type: virtual reality; wet lab (live or cadaveric animal models and human cadaveric models); dry lab (synthetic models); and e-learning models. Validity was evaluated based on Messick’s modern validity framework [8] and the strength of each source of validity evidence was measured using a validated rating scale [9]. Effectiveness was quantified using an adaptation of McGaghie’s proposed levels of simulation-based translational outcomes (Table 1) [10]. Qualitative analysis was carried out due to the heterogeneity of study designs.
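As an illustration of how the bracketed per-model summaries used in the Results could be handled programmatically, the following is a minimal sketch that parses one summary line into a dictionary of ratings. The function name `parse_summary` is hypothetical, and treating "N" as "no rating reported" is an assumption made for this sketch rather than a definition taken from the rating scale.

```python
import re


def parse_summary(line):
    """Parse a '[Summary: ...]' line into {evidence source: rating}.

    Ratings are returned as integers. 'N' is mapped to None, which is
    an assumption here (taken to mean that no rating was reported).
    """
    match = re.search(r"\[Summary:\s*(.*?)\]", line)
    if match is None:
        raise ValueError("not a summary line")
    ratings = {}
    for field in match.group(1).split(";"):
        # Fields separate name and value with either '=' or ':'.
        name, value = re.split(r"\s*[=:]\s*", field.strip(), maxsplit=1)
        # Translational outcomes are written as 'level 2'; keep the number.
        value = value.replace("level", "").strip()
        ratings[name] = None if value == "N" else int(value)
    return ratings


example = (
    "[Summary: content = 1; response processes = 1; internal structure = N; "
    "relations to other variables = 1; consequences = N; "
    "translational outcomes: level 2]."
)
print(parse_summary(example))
```

Keeping the five validity sources and the translational level in one mapping makes it straightforward to compare simulators side by side.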

Results

A total of 3989 articles were screened, of which 3751 were excluded following abstract review. After reading the remaining 238 articles in full, a further 107 were excluded. A total of 131 original articles were included in this systematic review (Fig. 1). Details of findings are summarised in Tables 2–5 according to simulator type.
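The screening figures above can be checked with simple arithmetic. The short sketch below only restates the reported counts; the variable names are illustrative.

```python
# Counts as reported in the text.
screened = 3989
excluded_at_abstract = 3751
excluded_at_full_text = 107

# Articles read in full, then articles finally included.
full_text_reviewed = screened - excluded_at_abstract
included = full_text_reviewed - excluded_at_full_text

assert full_text_reviewed == 238
assert included == 131
print(full_text_reviewed, included)
```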

Virtual reality

Eyesi Surgical

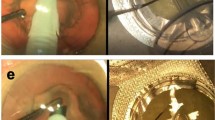

The Eyesi Surgical (VRmagic, Mannheim, Germany) is a high-fidelity virtual reality simulator designed for practising intraocular procedures. It consists of a mannequin head that houses a model eye connected to a computer interface and an operating microscope. The movements and positions of surgical instruments are tracked by internal sensors, producing a virtual image that is viewed through the microscope, as well as on a separate touchscreen. The software contains training modules that simulate different steps in cataract and vitreoretinal surgeries. The system records performance metrics, enabling scores and feedback to be generated [11]. Of all virtual reality simulator models developed for use in ophthalmology training, the Eyesi has been the most extensively assessed, with a total of 33 validity studies.

Cataract surgery

[Summary: content = 2; response processes = 1; internal structure = 2; relations to other variables = 2; consequences = 2; translational outcomes = level 5].

Twenty-eight studies assessed the Eyesi cataract training modules, collectively demonstrating all five sources of validity evidence, with data strongly supporting each parameter (score = 2) except for response processes, which had more limited evidence (score = 1) [11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38]. A randomised controlled trial (RCT) by Feudner et al. showed that those who trained with the Eyesi achieved significant improvements in their capsulorhexis performance in the wet lab compared with the no-training control group [14]. Another RCT suggested that virtual reality training was comparable to wet-lab training [13]. Residents were assessed on their first capsulorhexis in the operating room following either Eyesi or wet-lab training. Overall technical scores were equivalent. The study also provided evidence of predictive validity, with a direct correlation between time taken to complete the training modules on the Eyesi and true operating room time, as well as overall performance score.

Regarding patient outcomes, five studies demonstrated the transfer effects of Eyesi with reduced complications in live cataract surgery following training [12, 21, 29, 35, 38]. Of note, a multi-centre retrospective study involving 265 ophthalmology trainees across the UK showed that complication rates dropped from 4.2 to 2.6% (38% reduction) following the introduction of Eyesi simulators into training programmes [35]. Similarly, a study by Baxter et al. demonstrated that the use of a structured curriculum with wet lab and Eyesi training led to a considerable reduction in complication rates compared with reported figures for traditional training programmes [38]. However, a recent study also testing transfer of skills showed some limitations to Eyesi training [36]. Performance during Eyesi training was compared with subsequent performance in theatre. Results showed that improvements in OR performance were only observed for ophthalmologists who were less experienced and that the ability of Eyesi scores to discriminate between novice and experienced surgeons could only be seen in the first few training sessions.
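The 38% figure quoted for the multi-centre study is a relative reduction, which differs from the absolute drop of 1.6 percentage points. A minimal sketch of the calculation, using only the two rates reported above (the function name is illustrative):

```python
def relative_reduction(before, after):
    """Relative reduction of a rate, as a fraction of the starting rate."""
    return (before - after) / before


rate_before = 4.2  # complication rate (%) before simulators were introduced
rate_after = 2.6   # complication rate (%) afterwards

absolute_drop = round(rate_before - rate_after, 1)  # percentage points
relative_drop = relative_reduction(rate_before, rate_after)

print(f"absolute: {absolute_drop} points, relative: {relative_drop:.0%}")
```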

Vitreoretinal surgery

[Summary: content = 1; response processes = 1; internal structure = N; relations to other variables = 1; consequences = N; translational outcomes: level 2].

Only four studies have evaluated the vitreoretinal modules on the Eyesi Surgical Simulator [39,40,41,42]. These studies support the content validity for vitreoretinal surgery training, as well as response processes and relations to other variables. Similar to cataract surgery training, scores on the vitreoretinal modules were able to discriminate between experienced and inexperienced surgeons. One study reported evidence for response processes through the standardisation of testing and assessment, such as allocating set time periods for training, giving standardised instructions and using the same supervisor. This evidence remains limited at best [43]. Studies on the vitreoretinal modules also demonstrated a learning curve, with overall scores increasing and completion time decreasing with repeated attempts, indicating contained effects in using the Eyesi for vitreoretinal training. No evidence has been published to support internal structure and consequences or transfer of skills to the operating room.

MicroVisTouch

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = 1; consequences = N; translational outcomes = N].

The MicroVisTouch (ImmersiveTouch, Inc, Chicago, USA) is another commercially available virtual reality simulator that was introduced after the Eyesi, with a report of the prototype published in 2012 [44]. Unlike the Eyesi, the MicroVisTouch features a single handpiece that is attached to a robotic arm and is used to control the appropriate instrument according to the procedure being simulated. It also differs from the Eyesi in that it has an integrated tactile feedback interface, reportedly the first ophthalmic simulator to have this feature [45]. Currently, simulation is limited to three key steps in cataract surgery (clear corneal incision, capsulorhexis and phacoemulsification), although further modules are being developed.

Compared with the Eyesi, fewer studies have assessed the MicroVisTouch. Two groups have reported, implicitly, that the simulator demonstrates content validity for simulating capsulorhexis and that there is evidence of relations to other variables [44, 45], but other sources of validity evidence are lacking. Evidence supporting the effectiveness of using the simulator is also lacking. A third group adapted the MicroVisTouch by customising the algorithm and integrating OCT (Optical Coherence Tomography) scans of varying vitreoretinal conditions into the simulator, enabling patient-specific simulation training of vitreoretinal procedures (epiretinal membrane and internal limiting membrane peeling) [46]. However, the validity and effectiveness of this model were not tested in the original study and no further reports have been found.

Eyesi Ophthalmoscopes

Direct

[Summary: content = 1; response processes = 2; internal structure = 2; relations to other variables = 2; consequences = 1; translational outcomes: level 2].

The Eyesi Direct Ophthalmoscope (VRmagic, Mannheim, Germany) is a virtual reality simulator that enables fundoscopy examination practice, consisting of an ophthalmoscope handpiece with built-in display and a patient model head connected to a touchscreen. A range of patient cases and pathologies can be selected from the programme and objective feedback is provided based on the trainee’s performance [47].

Although only two studies were found evaluating this simulator, there was strong evidence for its validity. Borgersen et al. published the only study in this review to assess validity using all five parameters in Messick’s framework, and examined the consequences of using a set pass/fail score, which accurately discriminated between inexperienced participants (medical students), who were given a fail, and experienced participants (ophthalmology consultants), who all passed [48]. The second study showed that participants who trained with the simulator achieved higher scores in an OSCE (Objective Structured Clinical Examination) assessment compared with a control group who only received classical training, thus demonstrating contained effects for translational outcomes [49].

Indirect

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = 1; consequences = N; translational outcomes: level 1].

The Eyesi Indirect Ophthalmoscope (VRmagic, Mannheim, Germany) is similar to the Eyesi Direct but consists of an ophthalmoscope headband connected to a display that shows a 3D virtual patient, with virtual lenses appearing when physical diagnostic lenses are placed over the model head. As with the Eyesi Direct, physiologic and pathologic functions for the virtual patient can be controlled and varied.

Only two studies were found for this simulator [50, 51]. In contrast to the Eyesi Direct, validity evidence was limited to relations to other variables, as one study showed that the simulator could discriminate between medical students and ophthalmology trainees [50]. Effectiveness was limited to internal acceptability, as participants gave positive feedback on their experience of using the simulator.

Others

A variety of other virtual reality simulators have also been described, including three models for cataract surgery [52,53,54]; five for vitreoretinal surgery [55,56,57,58,59]; one for endoscopic endonasal surgery [60]; two for general ophthalmic surgery [61, 62]; one for ophthalmic anaesthesia [63]; one for ocular ultrasound [64]; and one for indirect ophthalmoscopy [54]. However, these have all been stand-alone reports with limited evidence of content validity only (scores of 0 or 1). An exception is the Endoscopic Endonasal Surgery Simulator by Weiss et al., which was tested in an RCT and demonstrated good internal structure [60]. Effectiveness was only tested in four models, with the Sophocle retinal photocoagulation simulator shown to be the most effective (downstream effects), as live assessment on real patients showed that the simulator group performed similarly to the control group who had previously practised on patients [58]. As with the other descriptive study models, these simulators have not been further investigated.

Wet lab

A total of 47 studies on wet-lab models were found, of which 12 were mixed models used in conjunction with an inanimate device or artificial system. Of the animal model studies, 22 used porcine-related specimens, 3 used sheep specimens, 4 used goat eyes and 3 used rabbit eyes. Seventeen studies used human cadaveric eyes or isolated lenses, of which 3 used them in combination with animal tissue.

Cataract surgery

[Summary: content = 2; response processes = 0; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = level 2].

There were 16 studies describing the use of wet-lab models for cataract surgery [65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80]. These demonstrated content validity only, with no evidence for other validity parameters. The models that showed the strongest evidence for validity were pig eyes filled with cooked chestnuts for practising phacoemulsification [71] and rabbit eyes fixed with paraformaldehyde for simulating capsulorhexis [74]. These two models demonstrated contained effects and internal acceptability, respectively.

Vitreoretinal surgery

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = N].

One study described the use of rabbit eyes for performing pars plana vitrectomy, from which content validity could be inferred [81]. However, all other sources of validity evidence and indications of effectiveness were lacking.

Glaucoma surgery

[Summary: content = 1; response processes = 2; internal structure = N; relations to other variables = 1; consequences = N; translational outcomes = level 2].

Six studies were found for glaucoma surgery [82,83,84,85,86,87], with the majority lacking formal validity assessment. One study, which tested placement of human cadaveric eyes into a model head, Marty the Surgical Simulator (Iatrotech Inc., Del Mar, USA), for goniotomy simulation, demonstrated good response processes and evidence of internal acceptability [84]. Dang et al. also showed that performing trabeculectomies on porcine eyes with added canalograms for outflow quantification had some evidence for relations to other variables and contained effects [82].

Corneal surgery

[Summary: content = 1; response processes = N; internal structure = N; relations to other variables = 2; consequences = N; translational outcomes = 1].

The use of wet-lab models for practising corneal surgery has been described in four studies [88,89,90,91]. Content validity and relations to other variables were demonstrated in one study [91], which tested the feasibility of simulating Descemet’s membrane endothelial keratoplasty on human corneas with an artificial anterior chamber and a 3D-printed iris. However, evidence of other validity parameters and effects was not demonstrated in the other studies.

Strabismus surgery

[Summary: content = 0; response processes = 0; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = level 2].

Two wet-lab models were found for strabismus surgery, both using porcine eyes. White et al. added bacon to the eyes to simulate extraocular muscles [92], whereas Vagge et al. asked residents to practise on a chicken breast model followed by the pig eyes [93]. Both studies discussed content validity and response processes but reported no data. Internal acceptability and contained effects were demonstrated for the two models, respectively.

Oculoplastic surgery

[Summary: content = 2; response processes = 0; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = level 2].

Four studies described the use of wet-lab oculoplastic simulators [94,95,96,97]. These all demonstrated content validity, with one study by Pfaff showing the strongest evidence for this parameter [95]. One group showed that using a split pig head for practising lid procedures had good internal acceptability [94], and another group using human cadaver eyes showed that trainees had improved comfort, confidence and technical skills in performing canthotomy and cantholysis procedures [97].

Orbital surgery

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = N].

Altunrende et al. described using a sheep cranium to practise ocular dissection for orbital surgery. Content validity was reported but the effectiveness of the model was not tested [98].

Ocular trauma

[Summary: content = 1; response processes = N; internal structure = N; relations to other variables = 0; consequences = N; translational outcomes = level 1].

A recent study by Mednick et al. showed that placing iron particles on human cadaver eyes for corneal rust ring removal simulation had evidence of content validity and relations to other variables [99]. Internal acceptability was shown to be high. Another study on ocular trauma surgery described the use of goats’ eyes for practising corneoscleral perforation repair [100]. However, as the study was purely descriptive, it was not possible to assess its validity or effectiveness.

Diagnostic examination

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = N].

One study by Uhlig and Gerding tested the use of porcine eyes placed inside an adjustable, artificial orbit for practising direct and indirect fundoscopy, as well as gonioscopy [101]. As this was a descriptive study, no evidence for validity or effectiveness was given.

Others

The remaining wet-lab models were either used to simulate a wide range of anterior and/or posterior segment surgeries or general micro-surgical skills [102,103,104,105,106,107,108,109,110,111].

Only two models, both using porcine eyes for micro-surgical skills assessment, provided data supporting their validity. Ezra et al. investigated the use of a video-based, modified Objective Structured Assessment of Technical Skill (OSATS) assessment tool. They demonstrated good internal structure, with high inter-rater reliability, and relations to other variables, with significant correlation between the OSATS scores and results from a separate motion-tracking device [109].

The Eye Surgical Skills Assessment Test (ESSAT), involving the use of porcine eyes and feet as part of a three-station assessment, demonstrated all five sources of validity evidence. One study showed, via a panel of ophthalmic surgery experts, that there was strong evidence of content validity [110]. A further masked study demonstrated that the ESSAT showed strong inter-rater reliability (internal structure) and that the senior resident in the study scored higher than the junior resident (relations to other variables) [111]. Unlike other models, the study authors also went on to discuss the potential consequences of using the ESSAT as an assessment tool, weighing the benefits of setting a competence score that trainees would need to meet before operating on real patients against the potential problem of the ESSAT becoming a stressful test that prevents less confident residents from entering the operating room. The effectiveness of using this test, however, was not tested.

Altogether, the wet-lab studies that assessed effectiveness evaluated only responses to participant surveys (internal acceptability) [105] and performance improvements on the models themselves (contained effects) [108, 109]; downstream effects were not demonstrated.

Dry lab

Twenty-six studies on synthetic models were identified, of which eight were developed for practising diagnostic examination techniques (slit lamp, direct and indirect ophthalmoscopy), six for vitreoretinal surgery, one for strabismus surgery, four for laser procedures, two for orbital surgery, one for cataract surgery, one for oculoplastic surgery, one for ocular trauma, one for general ophthalmic surgery and one for combined fundoscopy examination and laser procedures.

Cataract surgery

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = level 2].

Abellán et al. developed a low-cost cataract surgery simulator using a methacrylate support and aluminium foil for capsulorhexis simulation [112]. This was the only inanimate simulator to be tested in an RCT and demonstrated transfer effects as those who trained using the model achieved a higher percentage of satisfactory capsulorhexis in subsequent practice with animal eye models compared with those who had begun training with the animal eyes. Further validity evidence was lacking from the study.

Vitreoretinal surgery

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = 1; consequences = N; translational outcomes = level 2].

For vitreoretinal surgery, two different validated models were found. Hirata et al. used quail eggs within a silicone cap to simulate membrane peeling. The model was shown to discriminate between experienced and inexperienced surgeons in terms of operating time and the success rate of membrane peeling (relations to other variables) [113]. This was similarly tested in a study by Yeh et al. where, using the artificial VitRet Eye Model (Phillips Studio, Bristol, UK) filled with vitreous-like fluid to simulate a variety of vitreoretinal surgery procedures, a positive correlation was observed between the trainees’ level of experience and total score [114]. In terms of effectiveness, the models by Yeh and Hirata showed internal acceptability and contained effects, respectively.

Other dry-lab models for vitreoretinal surgery included the use of an artificial orbit with diascleral illumination [115]; a modified rubber eye [116]; a medium-fidelity model constructed using a wooden frame and tennis ball to simulate the globe [117]; and an artificial eye with an internal limiting membrane made using hydrogel [118]. However, these models were only described, with no assessment of validity or effectiveness.

Strabismus

[Summary: content = 1; response processes = 2; internal structure = 2; relations to other variables = N; consequences = N; translational outcomes = level 2].

One study was found on the use of a low-fidelity, dry-lab model for strabismus surgery simulation [119]. The model consisted of a rubber ball simulating the globe, elastic bands simulating the recti muscles and a piece of latex simulating the conjunctiva and cornea. Results showed no significant differences between a higher-fidelity wet-lab model and this dry-lab model. The study showed strong evidence of valid response processes and internal structure, as the authors performed a pre-randomisation test to determine baseline dexterity and ensured stratified randomisation of participants into two different groups with equal baseline dexterity. The process for evaluating the participants’ skills after training was also robust, as their performance was evaluated by two independent ophthalmologists using three different validated assessment scales (ICO-OSCAR, OSATS and ASS).
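The study's exact randomisation procedure is not described here, so the following is only a sketch of one common way to balance two groups on a baseline score: participants are ranked by score and then randomised within consecutive pairs. The function name, participant identifiers and scores are all hypothetical.

```python
import random


def stratified_pairs(baseline_scores, seed=None):
    """Split participants into two groups balanced on a baseline score.

    baseline_scores maps a participant id to a baseline dexterity score.
    Participants are ranked by score, and each consecutive pair is
    randomly split between the two groups. With an odd number of
    participants, the last one is placed in the first group.
    """
    rng = random.Random(seed)
    ranked = sorted(baseline_scores, key=baseline_scores.get)
    group_a, group_b = [], []
    for i in range(0, len(ranked), 2):
        pair = ranked[i:i + 2]
        rng.shuffle(pair)
        group_a.append(pair[0])
        if len(pair) == 2:
            group_b.append(pair[1])
    return group_a, group_b


# Hypothetical baseline scores, for illustration only.
scores = {"P1": 12, "P2": 15, "P3": 11, "P4": 16, "P5": 13, "P6": 14}
group_a, group_b = stratified_pairs(scores, seed=0)
print(group_a, group_b)
```

Because each pair contains neighbours in the ranking, the two groups end up with similar mean baseline scores regardless of how the coin flips fall.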

Laser surgery

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = 1; consequences = N; translational outcomes = level 2].

Out of the four laser simulators found [120,121,122,123], only the model designed by Simpson et al. showed evidence of validity through relations to other variables [103]. The effectiveness of training with this model, however, was not investigated. Conversely, a capsulotomy simulator by Moisseiev and Michaeli demonstrated contained effects but did not test for validity [121].

Oculoplastic surgery

[Summary: content = 0; response processes = 0; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = N].

One oculoplastic surgery dry-lab model was found, using an anatomically correct skull model for simulating nasolacrimal duct surgery [124]. However, this was descriptive only, with no assessment of validity or effectiveness.

Orbital surgery

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = N].

There were two studies using 3D-printed orbit models for simulating orbital surgery [125, 126]. However, as these were also descriptive only, evidence for their validity and effectiveness was not shown.

Trauma management

[Summary: content = 1; response processes = N; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = level 1].

The Newport Eye is a simple training phantom using a craft eye, resins and ground black pepper to simulate corneal foreign body removal [127]. This demonstrated evidence for content validity as experts agreed that the model was realistic in terms of its tissue colour, consistency and anatomy. Trainees also reported being more confident with the procedure after using the model, demonstrating internal acceptability. However, this simulator does not appear to have been used by other groups and no further reports were identified.

Diagnostic examination

[Summary: content = 0; response processes = 0; internal structure = N; relations to other variables = 1; consequences = N; translational outcomes = 0].

The largest proportion of dry-lab models were designed for practising examinations, including slit lamp and fundoscopy (direct and indirect) [128,129,130,131,132,133,134,135,136]. Two studies tested the validity and effectiveness of the EYE Exam Simulator (Kyoto Kagaku Co., Japan), a popular tool for fundoscopy practice. This consists of a head model with adjustable pupil sizes and changeable fundus slides to represent different retinal conditions. McCarthy et al. showed that response processes were generally poor as letters were added to the slides to check the participant’s field of vision through the ophthalmoscope and the majority were unable to identify the markers or pathology on the slides [132]. Survey responses also indicated that there was low user satisfaction with the model as trainees did not feel it was realistic or that the exercise improved their skills. On the other hand, Akaishi et al. showed that there was a strong correlation between accuracy of examination on the EYE simulator and previous experience of performing fundoscopy in the clinic, providing some evidence for its validity [128].

All other studies of ophthalmoscopy and slit-lamp simulators were descriptive, showing internal content validity only.

E-learning

Aside from training technical skills, tools have also been developed for improving cognitive and other non-technical skills such as teamwork and leadership. A total of five studies were found using this modality, with the majority testing its use amongst medical students. All studies incorporated both training and assessment as part of the course.

Computer-Assisted Learning Ophthalmology Program

[Summary: content = 2; response processes = 2; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = level 1].

The Computer-Assisted Learning Ophthalmology Program designed by Kaufman and Lee is a multi-media, interactive tutorial, which aims to help medical students learn about the pupillary light reflex [137]. Content validity was demonstrated extensively by experts and response processes were thoroughly assessed as each student’s experience and thought process during the training was evaluated by an external interviewer after the programme. Despite the positive responses from all groups showing that it was a valid and effective simulator (internal acceptability), it has not been used by other medical schools and no further reports have been found.

Case-based e-learning modules with Q&A games

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = N; consequences = 1; translational outcomes = level 2].

A study by Stahl et al. also tested the consequences of using e-learning modules as a part of ophthalmology teaching for a group of 272 medical students [138]. Although validity parameters were not formally tested, the authors found that students who used e-learning more frequently achieved better exam results.

Ophthalmic Operation Vienna

[Summary: content = 0; response processes = N; internal structure = 2; relations to other variables = N; consequences = N; translational outcomes = level 2].

An RCT by the Medical University of Vienna evaluated the use of a 3D animated programme for learning different steps in ophthalmic surgery [139]. This demonstrated strong internal structure as a reliability analysis of the multiple-choice questions used at the end of the programme showed a Cronbach’s α coefficient of 0.7, indicating high reliability. Those in the simulation group also outperformed the control group in the final test, showing contained effects.
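As an illustration of the reliability statistic mentioned above, the following is a minimal sketch of how a Cronbach's α coefficient can be computed from a table of item scores. It is not the analysis used in the cited study: the function name `cronbach_alpha` and the example data are hypothetical, and sample variances are assumed throughout.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Return Cronbach's alpha for a respondents-by-items score table.

    scores: list of rows, one row per respondent, one column per test item.
    """
    n_items = len(scores[0])
    if len(scores) < 2 or n_items < 2:
        raise ValueError("need at least two respondents and two items")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance([row[i] for row in scores]) for i in range(n_items)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: 5 students x 4 multiple-choice items (1 = correct).
example_scores = [
    [1, 1, 1, 0],
    [1, 0, 1, 1],
    [0, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]
print(round(cronbach_alpha(example_scores), 2))
```

The coefficient approaches 1 when items vary together across respondents and falls when item scores are unrelated, which is why it is used as evidence for internal structure.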

3D computer animations for learning neuro-ophthalmology and anatomy

[Summary: content = 0; response processes = N; internal structure = N; relations to other variables = N; consequences = N; translational outcomes = level 2].

A similar study by Glittenberg and Binder investigated the use of a combination of 3D design software packages for teaching complex topics in neuro-ophthalmology [140]. No evidence was provided supporting its validity. However, effectiveness was demonstrated as students responded very positively to the programme in a satisfaction questionnaire (internal acceptability) and also achieved significantly better results in a post-lecture test compared with the control group (contained effects).

The Virtual Mentor

[Summary: content = 2; response processes = N; internal structure = 0; relations to other variables = 1; consequences = N; translational outcomes = level 2].

Whilst most e-learning studies were designed to help medical students, one model was developed for ophthalmology residents to develop non-technical skills. A multi-centre RCT tested the effects of using The Virtual Mentor, an interactive, computer-based programme teaching the cognitive aspects of performing hydrodissection in cataract surgery, including decision making and error recognition [141]. Test questions demonstrated good content validity as they were developed and modified by cataract surgery experts across nine academic institutions. Test scores also demonstrated relations to experience, with correlation between total marks and residency year of training. Despite the lack of data quantifying the reliability of this model, the study showed a degree of internal structure as residents were randomised using a stratified design according to their academic centre and residency year, factors which would likely have influenced the test scores. Internal acceptability was demonstrated by positive user feedback and contained effects through higher post-test scores and a greater mean increase in pre- to post-test results in the simulator group compared with the control group.
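The stratified design described above can be sketched as follows. This is an illustrative example only and does not reproduce the allocation procedure of the cited trial: the function name, arm labels, participant identifiers and centres are all hypothetical. Participants are grouped by centre and residency year, shuffled within each stratum, and then assigned alternately to the two arms.

```python
import random
from collections import defaultdict


def stratified_randomise(participants, seed=0):
    """Assign each participant to 'simulator' or 'control', balanced within strata.

    participants: list of (participant_id, centre, residency_year) tuples.
    Returns a dict mapping participant_id to an arm name.
    """
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    strata = defaultdict(list)
    for pid, centre, year in participants:
        strata[(centre, year)].append(pid)

    allocation = {}
    for key in sorted(strata):
        members = strata[key]
        rng.shuffle(members)
        # Randomise which arm goes first so odd-sized strata do not always favour one arm.
        arms = ["simulator", "control"]
        rng.shuffle(arms)
        for index, pid in enumerate(members):
            allocation[pid] = arms[index % 2]
    return allocation


# Hypothetical cohort: identifiers, centres and years are invented for illustration.
cohort = [
    ("r01", "centre_A", 1), ("r02", "centre_A", 1), ("r03", "centre_A", 2),
    ("r04", "centre_A", 2), ("r05", "centre_B", 1), ("r06", "centre_B", 1),
    ("r07", "centre_B", 3), ("r08", "centre_B", 3),
]
allocation = stratified_randomise(cohort)
```

Because allocation is balanced within each centre and year, differences in test scores between arms are less likely to reflect differences in institution or seniority.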

Discussion

This systematic review of simulation training in ophthalmology provides a comprehensive evaluation of all available simulation tools using the modern taxonomy. Virtual reality simulators were the most widely evaluated, the Eyesi Surgical Simulator in particular. For cataract surgery, evidence to support all aspects of content validity has been reported. Critically, data support the collateral effects of using the Eyesi, with training being shown to result in improved operating room performance and fewer complications. In contrast, only a much more limited assessment of other ophthalmic simulation training tools has been undertaken, including the vitreoretinal training modules for the Eyesi Surgical system. A wide variety of dry-lab and wet-lab training models were reported. Use of dry-lab models in ophthalmology was more limited compared with other surgical specialities [142], with no evidence to suggest any model was particularly effective. In contrast, a relatively high number of wet-lab models was reported. In general, acceptability was high with positive participant feedback and there was evidence, albeit limited, to support the educational impact of wet-lab training. Cadaveric animal tissue was most commonly used and no significant benefits of human over animal cadaveric models were reported. Only five studies reported the use of e-learning. These results do support its potential for ophthalmology training but further assessment needs to be undertaken before incorporation into the training curriculum. Lastly, there was a paucity of studies addressing non-technical skills training in this area. The impact of human factors on patient safety is well recognised, corresponding to the rapid increase in non-technical skills training in medicine [143]. One study in this review, the Virtual Mentor e-learning programme, included cognitive components of cataract surgery training [141]. A pilot study by Saleh et al. also demonstrated the feasibility of using high-fidelity, immersive simulation for cataract surgery, using scenarios based on previous patient safety incidents and evaluating the cross-validity and reliability of four established assessment tools (OTAS, NOTECHS, ANTS and NOTSS) [144].

Simulation tools are increasingly being used for assessment of technical and non-technical skills, both formative and summative. In this review, only one assessment tool, ESSAT, has been described. Strong validity evidence has been shown for the ESSAT but further research on the development of standards and application of the ESSAT tool has not yet been performed. Effective skills assessment is becoming increasingly important, both to support competency-based training and to enable objective proficiency assessment. In response to growing calls for greater transparency and accountability, formal ongoing credentialing and certification are being considered to ensure doctors maintain the necessary skills and knowledge throughout their professional careers. Simulators are being used to provide objective skills assessment but, especially in such high-stakes assessment, rigorous validation of the assessment tools is required before they can be implemented.

Overall, the majority of studies lacked a formal validation process, with 45% of studies (n = 59) being purely descriptive. Furthermore, most validity assessments used outdated validity frameworks, which greatly limits the value of these results. “Face validity” was commonly reported as validity evidence despite the recognition that such subjective assessment of the perceived realism of a simulator is largely irrelevant to its educational impact [145]. Likewise, the concept of construct validity using expert–novice comparisons remains widely used but again offers little useful insight into a simulator’s educational impact. The lack of validation studies appears greater than in other specialties. Similar systematic reviews of otolaryngology and orthopaedic simulation training reported rates of descriptive studies of 23% and 38%, respectively [146, 147]. This resonates with findings from a recent review of simulation-based validation studies across all surgical specialities, which reported that only 6.6% used Messick’s validity framework [148]. Evidence for a number of components was particularly deficient. Internal structure, a fundamental area evaluating the reliability and generalisability of scores, was rarely assessed. For the wet-lab and dry-lab groups, a large number of authors attempted to establish validity through feedback from study participants on whether the simulator was a valid representation of the surgical correlate. However, this is flawed since the majority of these participants are inexperienced and input should come from those who have more expertise in the procedure of interest. Effectiveness and translational outcomes were also not extensively tested. In particular, wet-lab and dry-lab simulation studies predominantly reported evidence from user satisfaction surveys, with few assessing for skill improvement and none investigating the relationship to OR performance or patient-related outcomes.
Although several studies have linked Eyesi training with reduced complication rates, the majority of these have been retrospective studies which did not control for important confounders such as participants undertaking other forms of training. A few studies explored the collateral effects of simulation training on a systemic level, such as cost saving or policy changes. Two separate preliminary analyses on cost effectiveness were carried out in 2013, both of which suggested that the cost-to-benefit ratio was unfavourable. One study predicted, on the basis of cost modelling, that residency programmes would not be able to recoup the costs of purchasing one Eyesi model within 10 years, even under the most optimistic scenario [149]. The other study suggested that, realistically, it would take 34 years to make a cost recovery [150]. In contrast, the most recent study by the Royal College of Ophthalmologists in the UK argued that the Eyesi was a cost-effective method if the costs of complications were included. Access to an Eyesi simulator led to a 1.5% decrease in complication rates, which was inferred to result in an estimated 280 fewer cases of posterior capsular rupture alone per year. This would amount to a saving of roughly £560,000 per year and, using this figure, the authors calculated that the cost of purchasing 20 Eyesi simulators would be regained within 4 years. Due to the contrast in findings between these three studies and the implications for both patient safety and costs for healthcare providers, further attempts should be made to provide an updated reflection of the current cost effectiveness of Eyesi simulators. In addition, there should be more studies to test whether the same potential benefits gained from Eyesi training can be achieved with a lower-cost model.
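The arithmetic behind the Royal College of Ophthalmologists figures quoted above can be made explicit with a short sketch. Only the four constants are taken from the text. The derived values (saving per avoided case, and the maximum outlay consistent with a 4-year payback) are implied by those figures rather than reported in the source, and the calculation assumes a simple undiscounted payback with no maintenance or running costs.

```python
# Figures quoted in the text for the Royal College of Ophthalmologists analysis.
AVOIDED_PCR_CASES_PER_YEAR = 280
ANNUAL_SAVING_GBP = 560_000  # described in the text as a rough figure
SIMULATORS_PURCHASED = 20
PAYBACK_YEARS = 4


def implied_figures():
    """Derive quantities implied by the quoted figures (not reported in the source)."""
    saving_per_case = ANNUAL_SAVING_GBP / AVOIDED_PCR_CASES_PER_YEAR
    # Recouping the outlay within the payback window means the outlay cannot
    # exceed the savings accumulated over that window.
    max_total_outlay = ANNUAL_SAVING_GBP * PAYBACK_YEARS
    max_outlay_per_simulator = max_total_outlay / SIMULATORS_PURCHASED
    return saving_per_case, max_total_outlay, max_outlay_per_simulator


def payback_years(total_outlay_gbp, annual_saving_gbp=ANNUAL_SAVING_GBP):
    """Simple undiscounted payback period in years (an assumed cost model)."""
    return total_outlay_gbp / annual_saving_gbp


saving_per_case, max_total_outlay, max_outlay_per_simulator = implied_figures()
print(saving_per_case, max_total_outlay, max_outlay_per_simulator)
```

Under these assumptions, the quoted saving corresponds to about £2,000 per avoided case, and a 4-year payback on 20 simulators implies a total outlay of no more than about £2.24 million, or roughly £112,000 per simulator. The much longer payback periods in the two 2013 analyses reflect different cost models that are not reproduced here.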

This study has several limitations. Although broad search criteria and comprehensive search terms were applied, it is possible that some reports using different terminology were missed. As discussed above, a large proportion of studies suffered from poor methodologies, utilising outdated concepts of validation and greatly limiting the conclusions that can be drawn. The heterogeneity in methodology and outcomes across the studies also prevented the use of quantitative analysis. In addition, the majority of e-learning studies included in this review recruited medical students rather than ophthalmic professionals, thus the results obtained may not be reflective of specialised training.

Conclusion

The increasing importance of simulation training in ophthalmology is reflected by the number and variety of models described in the literature. The Eyesi Surgical remains the only model to have undergone extensive testing and the necessary evidence supporting its use has been reported. The main limitations of current research lie in the use of outdated validity frameworks, a lack of attempts to establish the collateral, systemic effects of using simulator models and the low quality of validation study designs. Future studies need to follow current recommendations on the assessment and validation of educational tools to ensure that simulation-based training is successfully incorporated into current systems of training in ophthalmology, especially for high-stakes applications such as credentialing and assessment.

References

Reznick RK, MacRae H. Teaching surgical skills-changes in the wind. N Engl J Med. 2006;355:2664–9.

Zhou AW, Noble J, Lam WC. Canadian ophthalmology residency training: an evaluation of resident satisfaction and comparison with international standards. Can J Ophthalmol. 2009;44:540–7.

Chikwe J, de Souza AC, Pepper JR. No time to train the surgeons. BMJ. 2004;328:418–9.

Gallagher AG, Traynor O. Simulation in surgery: opportunity or threat? Ir J Med Sci. 2008;177:283–7.

Tarbet KJ, Mamalis N, Theurer J, Jones BD, Olson RJ. Complications and results of phacoemulsification performed by residents. J Cataract Refract Surg. 1995;21:661–5.

Rutar T, Porco TC, Naseri A. Risk factors for intraoperative complications in resident-performed phacoemulsification surgery. Ophthalmology. 2009;116:431–6.

McCannel CA. Simulation surgical teaching in ophthalmology. Ophthalmology. 2015;122:2371–2.

Messick S. Meaning and values in test validation: the science and ethics of assessment. Educ Res. 1989;18:5–11.

Beckman TJ, Cook DA, Mandrekar JN. What is the validity evidence for assessments of clinical teaching? J Gen Intern Med. 2005;20:1159–64.

McGaghie WC, Issenberg SB, Barsuk JH, Wayne DB. A critical review of simulation-based mastery learning with translational outcomes. Med Educ. 2014;48:375–85.

Ahmed Y, Scott IU, Greenberg PB. A survey of the role of virtual surgery simulators in ophthalmic graduate medical education. Graefes Arch Clin Exp Ophthalmol. 2011;249:1263–5.

Belyea DA, Brown SE, Rajjoub LZ. Influence of surgery simulator training on ophthalmology resident phacoemulsification performance. J Cataract Refract Surg. 2011;37:1756–61.

Daly MK, Gonzalez E, Siracuse-Lee D, Legutko PA. Efficacy of surgical simulator training versus traditional wet-lab training on operating room performance of ophthalmology residents during the capsulorhexis in cataract surgery. J Cataract Refract Surg. 2013;39:1734–41.

Feudner EM, Engel C, Neuhann IM, Petermeier K, Bartz-Schmidt KU, Szurman P. Virtual reality training improves wet-lab performance of capsulorhexis: results of a randomized, controlled study. Graefes Arch Clin Exp Ophthalmol. 2009;247:955–63.

Gonzalez-Gonzalez LA, Payal AR, Gonzalez-Monroy JE, Daly MK. Ophthalmic surgical simulation in training dexterity in dominant and nondominant hands: results from a pilot study. J Surg Educ. 2016;73:699–708.

Le TD, Adatia FA, Lam WC. Virtual reality ophthalmic surgical simulation as a feasible training and assessment tool: results of a multicentre study. Can J Ophthalmol. 2011;46:56–60.

Li E, Fay P, Greenberg PB. A virtual cataract surgery course for ophthalmologists-in-training. R I Med J. 2013;96:18–9.

Li E, Paul AA, Greenberg PB. A revised simulation-based cataract surgery course for ophthalmology residents. R I Med J. 2016;99:26–7.

McCannel CA. Continuous curvilinear capsulorhexis training and non-rhexis related vitreous loss: the specificity of virtual reality simulator surgical training (An American Ophthalmological Society Thesis). Trans Am Ophthalmol Soc. 2017;115:T2.

Nathoo N, Ng M, Ramstead CL, Johnson MC. Comparing performance of junior and senior ophthalmology residents on an intraocular surgical simulator. Can J Ophthalmol. 2011;46:87–8.

Pokroy R, Du E, Alzaga A, Khodadadeh S, Steen D, Bachynski B, et al. Impact of simulator training on resident cataract surgery. Graefes Arch Clin Exp Ophthalmol. 2013;251:777–81.

Privett B, Greenlee E, Rogers G, Oetting TA. Construct validity of a surgical simulator as a valid model for capsulorhexis training. J Cataract Refract Surg. 2010;36:1835–8.

Roohipoor R, Yaseri M, Teymourpour A, Kloek C, Miller JB, Loewenstein JI. Early performance on an eye surgery simulator predicts subsequent resident surgical performance. J Surg Educ. 2017;74:1105–15.

Saleh GM, Theodoraki K, Gillan S, Sullivan P, O’Sullivan F, Hussain B, et al. The development of a virtual reality training programme for ophthalmology: repeatability and reproducibility (part of the International Forum for Ophthalmic Simulation Studies). Eye. 2013;27:1269–74.

Selvander M, Asman P. Virtual reality cataract surgery training: learning curves and concurrent validity. Acta Ophthalmol. 2012;90:412–7.

Selvander M, Asman P. Ready for OR or not? Human reader supplements Eyesi scoring in cataract surgical skills assessment. Clin Ophthalmol. 2013;7:1973–7.

Solverson DJ, Mazzoli RA, Raymond WR, Nelson ML, Hansen EA, Torres MF, et al. Virtual reality simulation in acquiring and differentiating basic ophthalmic microsurgical skills. Simul Healthc. 2009;4:98–103.

Spiteri AV, Aggarwal R, Kersey TL, Sira M, Benjamin L, Darzi AW, et al. Development of a virtual reality training curriculum for phacoemulsification surgery. Eye. 2014;28:78–84.

Staropoli PC, Gregori NZ, Junk AK, Galor A, Goldhardt R, Goldhagen BE, et al. Surgical simulation training reduces intraoperative cataract surgery complications among residents. Simul Healthc. 2018;13:11–5.

Thomsen AS, Kiilgaard JF, Kjaerbo H, la Cour M, Konge L. Simulation-based certification for cataract surgery. Acta Ophthalmol. 2015;93:416–21.

Thomsen AS, Smith P, Subhi Y, Cour M, Tang L, Saleh GM, et al. High correlation between performance on a virtual-reality simulator and real-life cataract surgery. Acta Ophthalmol. 2017;95:307–11.

Lucas L, Schellini SA, Lottelli AC. Complications in the first 10 phacoemulsification cataract surgeries with and without prior simulator training. Arq Bras Oftalmol. 2019;82:289–94.

Ng DS, Sun Z, Young AL, Ko ST, Lok JK, Lai TY, et al. Impact of virtual reality simulation on learning barriers of phacoemulsification perceived by residents. Clin Ophthalmol. 2018;12:885–93.

Colne J, Conart JB, Luc A, Perrenot C, Berrod JP, Angioi-Duprez K. EyeSi surgical simulator: construct validity of capsulorhexis, phacoemulsification and irrigation and aspiration modules. J Fr Ophtalmol. 2019;42:49–56.

Ferris JD, Donachie PH, Johnston RL, Barnes B, Olaitan M, Sparrow JM. Royal College of Ophthalmologists’ National Ophthalmology Database study of cataract surgery: report 6. The impact of EyeSi virtual reality training on complications rates of cataract surgery performed by first and second year trainees. Br J Ophthalmol. 2019;104:324–9.

la Cour M, Thomsen ASS, Alberti M, Konge L. Simulators in the training of surgeons: is it worth the investment in money and time? 2018 Jules Gonin lecture of the Retina Research Foundation. Graefes Arch Clin Exp Ophthalmol. 2019;257:877–81.

Bozkurt Oflaz A, Ekinci Koktekir B, Okudan S. Does cataract surgery simulation correlate with real-life experience? Turk J Ophthalmol. 2018;48:122–6.

Baxter JM, Lee R, Sharp JA, Foss AJ, Intensive Cataract Training Study Group. Intensive cataract training: a novel approach. Eye. 2013;27:742–6.

Koch F, Koss MJ, Singh P, Naser H. Virtual reality in ophthalmology. Klin Monbl Augenheilkd. 2009;226:672–6.

Park L, Song JJ, Dodick JM, Helveston EM. The EYESI 2.2 ophthalmosurgical simulator: is it a good teaching tool? Investig Ophthalmol Vis Sci. 2006;47:4421.

Rossi JV, Verma D, Fujii GY, Lakhanpal RR, Wu SL, Humayun MS, et al. Virtual vitreoretinal surgical simulator as a training tool. Retina. 2004;24:231–6.

Vergmann AS, Vestergaard AH, Grauslund J. Virtual vitreoretinal surgery: validation of a training programme. Acta Ophthalmol. 2017;95:60–5.

Cisse C, Angioi K, Luc A, Berrod JP, Conart JB. EYESI surgical simulator: validity evidence of the vitreoretinal modules. Acta Ophthalmol. 2019;97:e277–e82.

Banerjee PP, Edward DP, Liang S, Bouchard CS, Bryar PJ, Ahuja R, et al. Concurrent and face validity of a capsulorhexis simulation with respect to human patients. Stud Health Technol Inform. 2012;173:35–41.

Sikder S, Luo J, Banerjee PP, Luciano C, Kania P, Song JC, et al. The use of a virtual reality surgical simulator for cataract surgical skill assessment with 6 months of intervening operating room experience. Clin Ophthalmol. 2015;9:141–9.

Kozak I, Banerjee P, Luo J, Luciano C. Virtual reality simulator for vitreoretinal surgery using integrated OCT data. Clin Ophthalmol. 2014;8:669–72.

Ricci LH, Ferraz CA. Ophthalmoscopy simulation: advances in training and practice for medical students and young ophthalmologists. Adv Med Educ Pract. 2017;8:435–9.

Borgersen NJ, Skou Thomsen AS, Konge L, Sorensen TL, Subhi Y. Virtual reality-based proficiency test in direct ophthalmoscopy. Acta Ophthalmol. 2018;96:e259–e61.

Boden KT, Rickmann A, Fries FN, Xanthopoulou K, Alnaggar D, Januschowski K, et al. Evaluation of a virtual reality simulator for learning direct ophthalmoscopy in student teaching. Ophthalmologe. 2019;117:44–9.

Chou J, Kosowsky T, Payal AR, Gonzalez Gonzalez LA, Daly MK. Construct and face validity of the Eyesi Indirect Ophthalmoscope Simulator. Retina. 2017;37:1967–76.

Loidl M, Schneider A, Keis O, Ochsner W, Grab-Kroll C, Lang GK, et al. Augmented reality in ophthalmology: technical innovation complements education for medical students. Klin Monbl Augenheilkd. 2019. [Epub ahead of print].

Agus M, Gobbetti E, Pintore G, Zanetti G, Zorcolo A. Real time simulation of phaco-emulsification for cataract surgery training. The third workshop on virtual reality interactions and physical simulations (VRIPHYS 2006). Madrid: The Eurographics Association; 2006. p. 10.

Choi KS, Soo S, Chung FL. A virtual training simulator for learning cataract surgery with phacoemulsification. Comput Biol Med. 2009;39:1020–31.

Laurell CG, Soderberg P, Nordh L, Skarman E, Nordqvist P. Computer-simulated phacoemulsification. Ophthalmology. 2004;111:693–8.

Hikichi T, Yoshida A, Igarashi S, Mukai N, Harada M, Muroi K, et al. Vitreous surgery simulator. Arch Ophthalmol. 2000;118:1679–81.

Jonas JB, Rabethge S, Bender HJ. Computer-assisted training system for pars plana vitrectomy. Acta Ophthalmol Scand. 2003;81:600–4.

Neumann PF, Sadler LL, Gieser J, editors. Virtual reality vitrectomy simulator. Medical image computing and computer-assisted intervention—MICCAI’98. Berlin: Springer Berlin Heidelberg; 1998.

Peugnet F, Dubois P, Rouland JF. Virtual reality versus conventional training in retinal photocoagulation: a first clinical assessment. Comput Aided Surg. 1998;3:20–6.

Verma D, Wills D, Verma M. Virtual reality simulator for vitreoretinal surgery. Eye. 2003;17:71–3.

Weiss M, Lauer SA, Fried MP, Uribe J, Sadoughi B. Endoscopic endonasal surgery simulator as a training tool for ophthalmology residents. Ophthalmic Plast Reconstr Surg. 2008;24:460–4.

Hunter IW, Jones LA, Sagar MA, Lafontaine SR, Hunter PJ. Ophthalmic microsurgical robot and associated virtual environment. Comput Biol Med. 1995;25:173–82.

Sinclair MJ, Peifer JW, Haleblian R, Luxenberg MN, Green K, Hull DS. Computer-simulated eye surgery. A novel teaching method for residents and practitioners. Ophthalmology. 1995;102:517–21.

Merril JR, Notaroberto NF, Laby DM, Rabinowitz AM, Piemme TE. The Ophthalmic Retrobulbar Injection Simulator (ORIS): an application of virtual reality to medical education. Proc Annu Symp Comput Appl Med Care. 1992:702–6.

Mustafa M, Montgomery J, Atta H. A novel educational tool for teaching ocular ultrasound. Clin Ophthalmol. 2011;5:857–60.

Dada VK, Sindhu N. Cataract in enucleated goat eyes: training model for phacoemulsification. J Cataract Refract Surg. 2000;26:1114–6.

Farooqui JH, Jaramillo A, Sharma M, Gomaa A. Use of modified international council of ophthalmology-ophthalmology surgical competency assessment rubric (ICO-OSCAR) for phacoemulsification-wet lab training in residency program. Indian J Ophthalmol. 2017;65:898–9.

Hashimoto C, Kurosaka D, Uetsuki Y. Teaching continuous curvilinear capsulorhexis using a postmortem pig eye with simulated cataract. J Cataract Refract Surg. 2001;27:814–6.

Kayikcioglu O, Egrilmez S, Emre S, Erakgun T. Human cataractous lens nucleus implanted in a sheep eye lens as a model for phacoemulsification training. J Cataract Refract Surg. 2004;30:555–7.

Leuschke R, Bhandari A, Sires B, Hannaford B. Low cost eye surgery simulator with skill assessment component. Stud Health Technol Inform. 2007;125:286–91.

Liu ES, Eng KT, Braga-Mele R. Using medical lubricating jelly in human cadaver eyes to teach ophthalmic surgery. J Cataract Refract Surg. 2001;27:1545–7.

Mekada A, Nakajima J, Nakamura J, Hirata H, Kishi T, Kani K. Cataract surgery training using pig eyes filled with chestnuts of various hardness. J Cataract Refract Surg. 1999;25:622–5.

Pandey SK, Werner L, Vasavada AR, Apple DJ. Induction of cataracts of varying degrees of hardness in human eyes obtained postmortem for cataract surgeon training. Am J Ophthalmol. 2000;129:557–8.

Puri S, Srikumaran D, Prescott C, Tian J, Sikder S. Assessment of resident training and preparedness for cataract surgery. J Cataract Refract Surg. 2017;43:364–8.

Ruggiero J, Keller C, Porco T, Naseri A, Sretavan DW. Rabbit models for continuous curvilinear capsulorhexis instruction. J Cataract Refract Surg. 2012;38:1266–70.

Saraiva VS, Casanova FH. Cataract induction in pig eyes using viscoelastic endothelial protection and a formaldehyde-methanol mixture. J Cataract Refract Surg. 2003;29:1479–81.

Sengupta S, Dhanapal P, Nath M, Haripriya A, Venkatesh R. Goat’s eye integrated with a human cataractous lens: a training model for phacoemulsification. Indian J Ophthalmol. 2015;63:275–7.

Sudan R, Titiyal JS, Rai H, Chandra P. Formalin-induced cataract in goat eyes as a surgical training model for phacoemulsification. J Cataract Refract Surg. 2002;28:1904–6.

Sugiura T, Kurosaka D, Uezuki Y, Eguchi S, Obata H, Takahashi T. Creating cataract in a pig eye. J Cataract Refract Surg. 1999;25:615–21.

Tolentino FI, Liu HS. A laboratory animal model for phacoemulsification practice. Am J Ophthalmol. 1975;80:545–6.

van Vreeswijk H, Pameyer JH. Inducing cataract in postmortem pig eyes for cataract surgery training purposes. J Cataract Refract Surg. 1998;24:17–8.

Abrams GW, Topping T, Machemer R. An improved method for practice vitrectomy. Arch Ophthalmol. 1978;96:521–5.

Dang Y, Waxman S, Wang C, Parikh HA, Bussel II, Loewen RT, et al. Rapid learning curve assessment in an ex vivo training system for microincisional glaucoma surgery. Sci Rep. 2017;7:1605.

Lee GA, Chiang MY, Shah P. Pig eye trabeculectomy-a wet-lab teaching model. Eye. 2006;20:32–7.

Patel HI, Levin AV. Developing a model system for teaching goniotomy. Ophthalmology. 2005;112:968–73.

Patel SP, Sit AJ. A practice model for trabecular meshwork surgery. Arch Ophthalmol. 2009;127:311–3.

Arora A, Nazarali S, Sawatzky L, Gooi M, Schlenker M, Ahmed IK, et al. K-RIM (Corneal Rim) angle surgery training model. J Glaucoma. 2019;28:146–9.

Nazarali S, Arora A, Ford B, Schlenker M, Ahmed IK, Poulis B, et al. Cadaver corneoscleral model for angle surgery training. J Cataract Refract Surg. 2019;45:76–9.

Droutsas K, Petrak M, Melles GR, Koutsandrea C, Georgalas I, Sekundo W. A simple ex vivo model for teaching Descemet membrane endothelial keratoplasty. Acta Ophthalmol. 2014;92:e362–5.

Fontana L, Dal Pizzol MM, Tassinari G. Experimental model for learning and practicing big-bubble deep anterior lamellar keratoplasty. J Cataract Refract Surg. 2008;34:710–1.

Vasquez Perez A, Liu C. Human ex vivo artificial anterior chamber model for practice DMEK surgery. Cornea. 2017;36:394–7.

Famery N, Abdelmassih Y, El-Khoury S, Guindolet D, Cochereau I, Gabison EE. Artificial chamber and 3D printed iris: a new wet lab model for teaching Descemet’s membrane endothelial keratoplasty. Acta Ophthalmol. 2019;97:e179–e83.

White CA, Wrzosek JA, Chesnutt DA, Enyedi LB, Cabrera MT. A novel method for teaching key steps of strabismus surgery in the wet lab. J AAPOS. 2015;19:468–70.e1.

Vagge A, Gunton K, Schnall B. Impact of a strabismus surgery suture course for first- and second-year ophthalmology residents. J Pediatr Ophthalmol Strabismus. 2017;54:339–45.

Kersey TL. Split pig head as a teaching model for basic oculoplastic procedures. Ophthalmic Plast Reconstr Surg. 2009;25:253.

Pfaff AJ. Pig eyelid as a teaching model for eyelid margin repair. Ophthalmic Plast Reconstr Surg. 2004;20:383–4.

Zou C, Wang JQ, Guo X, Wang TL. Pig eyelid as a teaching model for severe ptosis repair. Ophthalmic Plast Reconstr Surg. 2012;28:472–4.

Patel SR, Mishall P, Barmettler A. A human cadaveric model for effective instruction of lateral canthotomy and cantholysis. Orbit. 2020;39:87–92.

Altunrende ME, Hamamcioglu MK, Hicdonmez T, Akcakaya MO, Birgili B, Cobanoglu S. Microsurgical training model for residents to approach to the orbit and the optic nerve in fresh cadaveric sheep cranium. J Neurosci Rural Pract. 2014;5:151–4.

Mednick Z, Tabanfar R, Alexander A, Simpson S, Baxter S. Creation and validation of a simulator for corneal rust ring removal. Can J Ophthalmol. 2017;52:447–52.

Pujari A, Kumar S, Markan A, Chawla R, Damodaran S, Kumar A. Buckling surgery on a goat’s eye: A simple technique to enhance residents’ surgical skill. Indian J Ophthalmol. 2019;67:1327–8.

Uhlig CE, Gerding H. A dummy orbit for training in diagnostic procedures and laser surgery with enucleated eyes. Am J Ophthalmol. 1998;126:464–6.

Auffarth GU, Wesendahl TA, Solomon KD, Brown SJ, Apple DJ. A modified preparation technique for closed-system ocular surgery of human eyes obtained postmortem: an improved research and teaching tool. Ophthalmology. 1996;103:977–82.

Lenart TD, McCannel CA, Baratz KH, Robertson DM. A contact lens as an artificial cornea for improved visualization during practice surgery on cadaver eyes. Arch Ophthalmol. 2003;121:16–9.

Borirak-chanyavat S, Lindquist TD, Kaplan HJ. A cadaveric eye model for practicing anterior and posterior segment surgeries. Ophthalmology. 1995;102:1932–5.

Ramakrishnan S, Baskaran P, Fazal R, Sulaiman SM, Krishnan T, Venkatesh R. Spring-action Apparatus for Fixation of Eyeball (SAFE): a novel, cost-effective yet simple device for ophthalmic wet-lab training. Br J Ophthalmol. 2016;100:1317–21.

Mohammadi SF, Mazouri A, Jabbarvand M, Rahman AN, Mohammadi A. Sheep practice eye for ophthalmic surgery training in skills laboratory. J Cataract Refract Surg. 2011;37:987–91.

Porrello G, Giudiceandrea A, Salgarello T, Tamburrelli C, Scullica L. A new device for ocular surgical training on enucleated eyes. Ophthalmology. 1999;106:1210–3.

Todorich B, Shieh C, DeSouza PJ, Carrasco-Zevallos OM, Cunefare DL, Stinnett SS, et al. Impact of microscope-integrated OCT on ophthalmology resident performance of anterior segment surgical maneuvers in model eyes. Investig Ophthalmol Vis Sci. 2016;57:146–53.

Ezra DG, Aggarwal R, Michaelides M, Okhravi N, Verma S, Benjamin L, et al. Skills acquisition and assessment after a microsurgical skills course for ophthalmology residents. Ophthalmology. 2009;116:257–62.

Fisher JB, Binenbaum G, Tapino P, Volpe NJ. Development and face and content validity of an eye surgical skills assessment test for ophthalmology residents. Ophthalmology. 2006;113:2364–70.

Taylor JB, Binenbaum G, Tapino P, Volpe NJ. Microsurgical lab testing is a reliable method for assessing ophthalmology residents’ surgical skills. Br J Ophthalmol. 2007;91:1691–4.

Abellan E, Calles-Vazquez MC, Cadarso L, Sanchez FM, Uson J. Design and validation of a simulator for training in continuous circular capsulotomy for phacoemulsification. Arch Soc Esp Oftalmol. 2013;88:387–92.

Hirata A, Iwakiri R, Okinami S. A simulated eye for vitreous surgery using Japanese quail eggs. Graefes Arch Clin Exp Ophthalmol. 2013;251:1621–4.

Yeh S, Chan-Kai BT, Lauer AK. Basic training module for vitreoretinal surgery and the Casey Eye Institute Vitrectomy Indices Tool for Skills Assessment. Clin Ophthalmol. 2011;5:1249–56.

Uhlig CE, Gerding H. Illuminated artificial orbit for the training of vitreoretinal surgery in vitro. Eye. 2004;18:183–7.

Iyer MN, Han DP. An eye model for practicing vitreoretinal membrane peeling. Arch Ophthalmol. 2006;124:108–10.

Rice JC, Steffen J, du Toit L. Simulation training in vitreoretinal surgery: a low-cost, medium-fidelity model. Retina. 2017;37:409–12.

Omata S, Someya Y, Adachi S, Masuda T, Hayakawa T, Harada K, et al. A surgical simulator for peeling the inner limiting membrane during wet conditions. PLoS ONE. 2018;13:e0196131.

Adebayo T, Abendroth M, Elera GG, Kunselman A, Sinz E, Ely A, et al. Developing and validating a simple and cost-effective strabismus surgery simulator. J AAPOS. 2018;22:85–8.e2.

Ganne P, Krishnappa NC, Baskaran P, Sulaiman SM, Venkatesh R. Retinal laser and photography practice eye model: a cost-effective innovation to improve training through simulation. Retina. 2018;38:207–10.

Moisseiev E, Michaeli A. Simulation of neodymium:YAG posterior capsulotomy for ophthalmologists in training. J Cataract Refract Surg. 2014;40:175–8.

Simpson SM, Schweitzer KD, Johnson DE. Design and validation of a training simulator for laser capsulotomy, peripheral iridotomy, and retinopexy. Ophthalmic Surg Lasers Imaging Retina. 2017;48:56–61.

Weidenthal DT. The use of a model eye to gain endophotocoagulation skills. Arch Ophthalmol. 1987;105:1020.

Coats DK. A simple model for practicing surgery on the nasolacrimal drainage system. J AAPOS. 2004;8:509–10.

Adams JW, Paxton L, Dawes K, Burlak K, Quayle M, McMenamin PG. 3D printed reproductions of orbital dissections: a novel mode of visualising anatomy for trainees in ophthalmology or optometry. Br J Ophthalmol. 2015;99:1162–7.

Scawn RL, Foster A, Lee BW, Kikkawa DO, Korn BS. Customised 3D printing: an innovative training tool for the next generation of orbital surgeons. Orbit. 2015;34:216–9.

Marson BA, Sutton LJ. The Newport eye: design and initial evaluation of a novel foreign body training phantom. Emerg Med J. 2014;31:329–30.

Akaishi Y, Otaki J, Takahashi O, Breugelmans R, Kojima K, Seki M, et al. Validity of direct ophthalmoscopy skill evaluation with ocular fundus examination simulators. Can J Ophthalmol. 2014;49:377–81.

Chew C, Gray RH. A model eye to practice indentation during indirect ophthalmoscopy. Eye. 1993;7:599–600.

Kumar KS, Shetty KB. A new model eye system for practicing indirect ophthalmoscopy. Indian J Ophthalmol. 1996;44:233–4.

Lewallen S. A simple model for teaching indirect ophthalmoscopy. Br J Ophthalmol. 2006;90:1328–9.

McCarthy DM, Leonard HR, Vozenilek JA. A new tool for testing and training ophthalmoscopic skills. J Grad Med Educ. 2012;4:92–6.

Miller KE. Origami model for teaching binocular indirect ophthalmoscopy. Retina. 2015;35:1711–2.

Morris WR. A simple model for demonstrating abnormal slitlamp findings. Arch Ophthalmol. 1998;116:93–4.

Romanchuk KG. Enhanced models for teaching slit-lamp skills. Can J Ophthalmol. 2003;38:507–11.

Kylstra JA, Diaz JD. A simple eye model for practicing indirect ophthalmoscopy and retinal laser photocoagulation. Digit J Ophthalmol. 2019;25:1–4.

Kaufman D, Lee S. Formative evaluation of a multimedia CAL program in an ophthalmology clerkship. Med Teach. 1993;15:327–40.

Stahl A, Boeker M, Ehlken C, Agostini H, Reinhard T. Evaluation of an internet-based e-learning ophthalmology module for medical students. Ophthalmologe. 2009;106:999–1005.

Prinz A, Bolz M, Findl O. Advantage of three dimensional animated teaching over traditional surgical videos for teaching ophthalmic surgery: a randomised study. Br J Ophthalmol. 2005;89:1495–9.

Glittenberg C, Binder S. Using 3D computer simulations to enhance ophthalmic training. Ophthalmic Physiol Opt. 2006;26:40–9.

Henderson BA, Kim JY, Golnik KC, Oetting TA, Lee AG, Volpe NJ, et al. Evaluation of the virtual mentor cataract training program. Ophthalmology. 2010;117:253–8.

Cook DA, Brydges R, Zendejas B, Hamstra SJ, Hatala R. Technology-enhanced simulation to assess health professionals: a systematic review of validity evidence, research methods, and reporting quality. Acad Med. 2013;88:872–83.

Wood TC, Raison N, Haldar S, Brunckhorst O, McIlhenny C, Dasgupta P, et al. Training tools for nontechnical skills for surgeons—a systematic review. J Surg Educ. 2017;74:548–78.

Saleh GM, Wawrzynski JR, Saha K, Smith P, Flanagan D, Hingorani M, et al. Feasibility of human factors immersive simulation training in ophthalmology: the London Pilot. JAMA Ophthalmol. 2016;134:905–11.

Downing SM. Face validity of assessments: faith-based interpretations or evidence-based science? Med Educ. 2006;40:7–8.

Musbahi O, Aydin A, Al Omran Y, Skilbeck CJ, Ahmed K. Current status of simulation in otolaryngology: a systematic review. J Surg Educ. 2017;74:203–15.

Morgan M, Aydin A, Salih A, Robati S, Ahmed K. Current status of simulation-based training tools in orthopedic surgery: a systematic review. J Surg Educ. 2017;74:698–716.

Borgersen NJ, Naur TMH, Sorensen SMD, Bjerrum F, Konge L, Subhi Y, et al. Gathering validity evidence for surgical simulation: a systematic review. Ann Surg. 2018;267:1063–8.

Lowry EA, Porco TC, Naseri A. Cost analysis of virtual-reality phacoemulsification simulation in ophthalmology training programs. J Cataract Refract Surg. 2013;39:1616–7.

Young BK, Greenberg PB. Is virtual reality training for resident cataract surgeons cost effective? Graefes Arch Clin Exp Ophthalmol. 2013;251:2295–6.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Lee, R., Raison, N., Lau, W.Y. et al. A systematic review of simulation-based training tools for technical and non-technical skills in ophthalmology. Eye 34, 1737–1759 (2020). https://doi.org/10.1038/s41433-020-0832-1
