Abstract

Purpose

To determine whether a purpose-built glare tester, in combination with new glare-specific questions, can better assess glaucoma patients’ experience of glare and visual disability than visual acuity (VA) or visual fields alone.

Patients and methods

Consecutive patients attending for visual field testing in a single glaucoma clinic from 3 June 2019 to 1 November 2019 underwent VA assessment using a Brightness Acuity Tester as a glare source and examination for media opacities, and completed the VFQ-25 with four additional glare-related questions. One hundred and sixteen eyes (64 patients) were included in the analysis. The amount of disability glare (VA reduction due to glare) was compared according to the presence or absence of media opacity and glaucoma severity. Subjective glare symptoms were compared with objective disability glare measurement using a Bland–Altman plot.

Results

Patients with advanced–severe glaucoma had significantly worse disability glare than those with mild–moderate glaucoma. The difference in VA reduction due to glare between mild–moderate and advanced–severe glaucoma (mean −3.4 letters, P = 0.01, CI −6.0 to −0.8) was of almost identical magnitude to that associated with the presence of any media opacity (mean −3.3 letters, P = 0.03, CI −6.2 to −0.4). There was a trend towards greater disability glare in more symptomatic patients. Subjective and objective glare measurements agreed well for patients with few glare signs or symptoms, but varied progressively more either side of agreement as either measurement worsened.

Conclusions

Glaucoma is associated with objective and subjective acuity reduction in the presence of glare. We demonstrated an increase in disability glare in patients with more severe glaucoma. The worse the glare symptoms or signs, the more variable the agreement between objective and subjective assessments.


Introduction

Research has found that in older patients and those with glaucoma, visual function under non-ideal circumstances could not be predicted from standard visual acuity (VA) testing in clinic [1, 2]. Lighting conditions such as glare are not present in standard clinical tests of visual function. Patients’ visual difficulties experienced outside of the clinic may therefore be underrepresented and underappreciated by clinicians. The purpose of this paper is to determine whether a glare tester and glare-specific questions added to a visual function questionnaire can more comprehensively assess the glaucoma patient’s experience of glare and visual disability.

Patients with glaucoma have been demonstrated to suffer from poorer quality of life (QoL) compared to those without. With increasing severity of glaucoma, QoL worsens [3]. Patients may report greater visual difficulties than expected from the results of visual function tests. Bhorade et al. investigated reasons for this by testing glaucoma patients’ vision first in clinic, and then at home [1]. They found that all tests of visual function produced worse results at home than in clinic, with a third of participants reading ≥2 lines better in clinic. The disparity between vision in clinic and at home demonstrates how clinical testing may not represent day-to-day conditions or usual visual functioning.

Only a very small proportion of glaucoma research considers the impact of lighting conditions such as glare on people with glaucoma. A recent systematic review by Enoch et al., including 56 studies, is the first to focus on the impacts of different lighting conditions on the vision and QoL of people with primary open-angle glaucoma (POAG) [4]. It provides evidence that lighting conditions may frequently cause difficulties for the visual function, QoL and daily activities of people with glaucoma. Questionnaire-based studies have found that difficulties with activities involving lighting, glare and dark adaptation were the most frequently reported and most disabling visual-function symptoms in glaucoma patients. They have also found that glare sensitivity is a risk factor for self-reported difficulty with everyday activities [3–6]. Nelson et al. found that questionnaire scores relating to glare, lighting and dark adaptation worsened with increasing glaucomatous visual field loss (P = 0.001) [7]. Psychophysical studies also suggest that glaucoma negatively affects low-luminance contrast sensitivity (CS) and worsens glare symptoms [4]. Standard VA or visual field testing will typically not detect glare symptoms.

Disability glare is glare that impairs vision. It occurs when light from a glare source elsewhere in the visual field is scattered forwards within the eye (straylight) by imperfections in the optical media. This straylight casts a veiling luminance over the retinal image, reducing its contrast and impairing vision [2, 8, 9]. It can be measured clinically as the reduction in VA or CS caused by a nearby glare source [10]. Common causes of disability glare include cataract, capsular opacification, keratoconus and corneal oedema [8].
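The contrast loss described above can be illustrated with Michelson contrast. The minimal Python sketch below makes a simplifying assumption: a uniform veiling luminance is added equally to the light and dark parts of the retinal image. Real straylight is not uniform, and the luminance values in the example are hypothetical.

```python
def michelson_contrast(l_max: float, l_min: float, veil: float = 0.0) -> float:
    """Michelson contrast of a light/dark pattern with a uniform veil added.

    The veil raises the light and dark parts of the image by the same amount,
    so it cancels in the difference (numerator) but adds 2 * veil to the
    sum (denominator).
    """
    if l_min < 0 or l_max < l_min or veil < 0:
        raise ValueError("expected l_max >= l_min >= 0 and veil >= 0")
    total = l_max + l_min + 2.0 * veil
    if total == 0:
        return 0.0  # completely dark field: no contrast
    return (l_max - l_min) / total


# Hypothetical luminances (cd/m^2): dark letter at 10 on a background at 100.
for veil in (0.0, 25.0, 100.0):
    print(f"veil {veil:5.1f} cd/m^2 -> contrast {michelson_contrast(100.0, 10.0, veil):.3f}")
```

The veil cancels in the luminance difference but adds to the luminance sum. Contrast can therefore only fall as the veil increases.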

Glare conditions can be recreated in clinic using glare test devices. These emit a light source of adjustable brightness, whilst concurrent VA or CS measurements quantify vision with and without glare illumination. There is no gold standard approach for measuring disability glare; Mainster and Turner note that ‘many clinical disability glare testers have been developed but results have been variable and no widely accepted testing protocol exists despite over 2 decades of study’ [9]. Results depend on the luminance and location of the glare source, and the resultant straylight. Therefore, the distance and angle of the light from the eye must be kept constant in any glare test [2].

One such glare tester is the handheld brightness acuity tester (BAT), of which there are several manufacturers (e.g., Marco Ophthalmic Inc., VisionMax Inc.). Authors have previously used this device to assess the effect of glare on VA and CS in both cataract and glaucoma, with varying results. One study found it reliable, with good repeatability, and it has performed well in predicting outdoor Snellen VA [10, 11]. Another study found an association between BAT-induced disability glare and scores on a visual symptoms questionnaire [7]. However, other investigators have found the BAT to have poor validity and reliability, possibly because of the pupillary miosis it induces [2]. Advantages of the BAT are that luminance is easily controlled with three fixed brightness settings, and that the patient holds it against their own eye.

The use of glare testers is much more common in the USA than the UK; surveys from the USA in the 1990s showed that 60–70% of cataract surgeons were using glare tests [2]. In the year 2000, a questionnaire survey of 473 cataract surgeons in the UK found only 12% (n = 53) used glare tests, most commonly using any readily available glare source such as pen torches, ophthalmoscopes or anglepoise lamps. Whilst the BAT was the most frequently named purpose-built glare tester, only seven surgeons reported its use [12].

To better understand and more fully assess glaucoma patients’ visual function, all patients attending one glaucoma clinic completed a questionnaire and underwent VA testing with a glare tester. This report compares their reported visual symptoms with the objectively measured disability glare.

Methods

Inclusion criteria: every consecutive patient attending for visual field testing in a single glaucoma clinic between 3 June 2019 and 1 November 2019.

Exclusion criteria: eight eyes from six patients were removed from the data analysis. The reasons were VA reduced to hand movements or no perception of light (NPL) (n = 3), inability to locate the aperture of the glare tester (n = 2) and incomplete data entry (n = 3).

The Glaucoma Severity Staging system was used for glaucoma staging [13]. Mild to moderate (MM) glaucoma was defined as a Humphrey mean deviation (MD) of −0.01 to −12.0 dB. Advanced to severe (AS) glaucoma was defined as an MD of −12.01 dB or worse.
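The two-group split can be written as a simple threshold rule. In the hypothetical helper below, MD is taken in dB. The text does not say how an MD of 0 dB or above would be classified, so the sketch labels such values 'unstaged'; this is an assumption.

```python
def glaucoma_group(md_db: float) -> str:
    """Two-group stage from Humphrey mean deviation (dB), as defined in the text.

    MM: -0.01 to -12.0 dB; AS: -12.01 dB or worse.
    MD >= 0 dB lies outside both definitions and is reported as 'unstaged'
    (an assumption of this sketch).
    """
    if md_db >= 0.0:
        return "unstaged"
    if md_db >= -12.0:
        return "MM"
    return "AS"


print(glaucoma_group(-3.5), glaucoma_group(-17.2))  # MM AS
```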

Presence of cataract was assessed in all patients using a slit lamp. For the purposes of comparison, patients were split into two groups: clear visual axis vs. media opacity. Media opacity was defined as any cataract graded above trace (+), i.e., +, ++ or +++ (equivalent to LOCS III NC3, C3 or P2 or worse [14]). Patients with a clear visual axis included those who had undergone cataract surgery with intraocular lens (IOL) implantation.

The glare tester used was a handheld BAT (Vmax Vision Inc., FL, USA). It has three pre-set glare levels, selected using a dial: (1) high, (2) medium and (3) low. The brightness levels at the 12-mm diameter entrance pupil are calibrated to 400 FL (1370.4 cd/m²), 100 FL (342.6 cd/m²) and 12 FL (41.11 cd/m²), respectively. Ambient brightness was measured at 222 lux (64.8 FL) using an Isotech ILM-350 digital light meter (RS Components, Corby, UK).
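The cd/m² values above follow from a single conversion factor. This Python sketch uses the factor implied by the text, 1 FL ≈ 3.426 cd/m²; the exact value is 1/π cd/ft², about 3.4263 cd/m². Lux measures illuminance rather than luminance. The ambient figure of 222 lux (64.8 FL) therefore matches this factor only if the reading is treated numerically as cd/m² (222 / 3.426 ≈ 64.8).

```python
# Factor implied by the text: 1 foot-lambert (FL) ~= 3.426 cd/m^2.
# (Exactly, 1 FL = 1/pi cd/ft^2, which is about 3.4263 cd/m^2.)
FL_TO_CD_PER_M2 = 3.426

# BAT settings as described in the text, in foot-lamberts.
BAT_SETTINGS_FL = {"high": 400.0, "medium": 100.0, "low": 12.0}


def fl_to_cd_per_m2(foot_lamberts: float) -> float:
    """Convert a luminance in foot-lamberts to candela per square metre."""
    return foot_lamberts * FL_TO_CD_PER_M2


for name, fl in BAT_SETTINGS_FL.items():
    print(f"{name:>6}: {fl:5.0f} FL = {fl_to_cd_per_m2(fl):7.1f} cd/m^2")
```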

Participants’ best-corrected visual acuity (BCVA) was measured with forced-choice testing on a digital ETDRS VA chart at 3 m, giving an ETDRS letter score [15]. The digital chart presents a new combination of letters each time the test is repeated, to avoid memorisation. Each eye’s VA was then measured using the BAT. Patients were instructed to hold the device up to the eye and look through the 12-mm aperture. The device was used with the patient’s glasses if these were needed for BCVA. The test was repeated at all three brightness levels. A disability glare score was calculated by subtracting the number of letters correctly identified without glare from the number correctly identified with glare, so that a loss of acuity under glare gives a negative score.
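The subtraction above can be sketched in Python as follows. The helper names and the example letter counts are hypothetical, for illustration only.

```python
from typing import Dict


def disability_glare_score(letters_no_glare: int, letters_with_glare: int) -> int:
    """Letters read with glare minus letters read without glare.

    Negative = acuity fell under glare; positive = more letters were read
    with the glare source on (e.g. from a pinhole effect).
    """
    return letters_with_glare - letters_no_glare


def scores_by_setting(baseline: int, with_bat: Dict[int, int]) -> Dict[int, int]:
    """Disability glare score at each BAT setting, keyed by brightness in FL."""
    return {fl: disability_glare_score(baseline, n) for fl, n in with_bat.items()}


# Hypothetical eye: 70 letters without glare; 71, 68 and 64 letters at 12, 100 and 400 FL.
print(scores_by_setting(70, {12: 71, 100: 68, 400: 64}))  # {12: 1, 100: -2, 400: -6}
```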

Patients were invited to complete the National Eye Institute Visual Function Questionnaire (NEI VFQ-25, version 2000) [16]. These 25 QoL-related questions were supplemented with four additional glare-related questions (A1–A4, Table 1), designed to better understand the impact of glare on patients. These questions were derived primarily from patients’ descriptions in a qualitative study we undertook in 2002 [17]. The questions were piloted with patients not included in the study sample and modified in response to their feedback before inclusion.

As for the VFQ-25, a Likert scale (from 0 to 10) was used for responses to these questions, with 0 representing the worst possible symptoms and 10 the best. A composite score of the four questions (the ‘A’ score) was calculated by rescaling the maximum possible total of 40 to a maximum of 100, i.e., by multiplying the summed responses by 2.5. This is similar to the VFQ-25 scoring protocol, in which responses are recoded to a score out of 100, with higher scores representing better visual functioning [18]. The composite ‘A’ scores were intended as an additional glare-specific subscale and were therefore kept separate from the overall composite VFQ-25 scores. Patients completed the questionnaire by ticking the boxes unaided, with assistance only if they had problems with reading or comprehension.
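The ‘A’ score calculation can be sketched as below. The sketch requires all four items to be answered; this is an assumption, because the text does not describe how missing responses were handled.

```python
from typing import Sequence


def a_score(responses: Sequence[int]) -> float:
    """Composite glare subscale from items A1-A4 (each 0-10, 10 = best).

    The four responses are summed (maximum 40) and multiplied by 2.5,
    so the composite runs from 0 (worst) to 100 (best).
    Requiring exactly four answered items is an assumption of this sketch.
    """
    if len(responses) != 4:
        raise ValueError("expected exactly four responses (A1-A4)")
    if any(not 0 <= r <= 10 for r in responses):
        raise ValueError("each response must lie between 0 and 10")
    return sum(responses) * 2.5


print(a_score([7, 7, 9, 8]))  # 77.5
```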

The subjective report of glare was compared with the objective measurement in a Bland–Altman plot [19]. Results were converted to percentages to give a comparable scale for both measurements. For the subjective results, the composite score of the four questions was expressed as a percentage of the asymptomatic maximum possible score (i.e., 0% the worst, 100% the best). For the objective measurement, the drop in VA under glare (ETDRS letter score) was expressed relative to the maximum recorded acuity reduction due to glare in the group. The biggest drop in acuity under glare (−18 letters) scored 0% and the minimum reduction (0 letters, or any letters gained) scored 100%, so a drop of 9 letters (−9) scored 50%. This was calculated for both the better and the worse eye of each individual, defined by acuity reduction from glare. Because we were unsure whether patients would be most symptomatic from their worse or their better eye, Bland–Altman plots were generated for each.
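The rescaling and the quantities plotted in a Bland–Altman analysis can be sketched in Python. This is a minimal illustration, not the study’s analysis code. It assumes that the difference is taken as subjective minus objective, and it uses the conventional 95% limits of agreement (mean difference ± 1.96 SD of the differences). The example data are hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple


def objective_percent(change_letters: Sequence[float]) -> List[float]:
    """Rescale glare-induced acuity change to 0-100% (100% = no loss).

    The largest loss in the supplied group scores 0%; no loss, or any
    gain in letters, scores 100%.
    """
    losses = [max(0.0, -c) for c in change_letters]
    worst = max(losses)
    if worst == 0:
        return [100.0] * len(losses)
    return [100.0 * (1.0 - loss / worst) for loss in losses]


def bland_altman(a: Sequence[float], b: Sequence[float]
                 ) -> Tuple[List[float], List[float], float, float, float]:
    """Per-pair means and differences (a - b), bias and 95% limits of agreement."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length sequences with at least two pairs")
    means = [(x + y) / 2.0 for x, y in zip(a, b)]
    diffs = [x - y for x, y in zip(a, b)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    return means, diffs, bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical data for four eyes: glare-induced change (letters) and 'A' scores.
objective = objective_percent([-18, -9, 0, 3])   # [0.0, 50.0, 100.0, 100.0]
subjective = [60.0, 55.0, 95.0, 90.0]           # 'A' scores are already 0-100
means, diffs, bias, lower, upper = bland_altman(subjective, objective)
print(f"bias {bias:.1f}, limits of agreement {lower:.1f} to {upper:.1f}")
```

Plotting `diffs` against `means`, with horizontal lines at the bias and at the two limits, gives the usual Bland–Altman plot. Any plotting library can be used for that step.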

Results

The records of a total of 64 patients (116 eyes) were included in the data analysis. As this was a pilot study, the sample size was pragmatic. A sample of 64 was adequate to estimate an expected 60% of individuals with reduced acuity due to glare to within ±12%, with 95% confidence. The mean age was 70 years (range 41–92) and 37 (58%) were male. Three quarters (75%, n = 48) were Caucasian, 10 (16%) were West African and the remainder were of other ethnicities.
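The ±12% figure is consistent with the normal-approximation (Wald) confidence interval for a proportion, whose half-width is z·√(p(1 − p)/n). The text does not state which method was used, so the sketch below assumes this one, with z = 1.96.

```python
import math


def proportion_margin(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the Wald (normal-approximation) confidence interval for a proportion."""
    if not 0.0 <= p <= 1.0 or n <= 0:
        raise ValueError("expected 0 <= p <= 1 and n > 0")
    return z * math.sqrt(p * (1.0 - p) / n)


print(f"{proportion_margin(0.60, 64):.3f}")  # 0.120
```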

All eyes had glaucoma, and MD results were available for 108 eyes. Seventy-eight (72%) had MM glaucoma, with a mean MD of −3.5 dB (SD 3.9). The remaining 30 (28%) had AS glaucoma, with a mean MD of −17.2 dB (SD 3.2). A clear visual axis was recorded in 72 (62%) of all eyes.

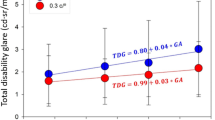

The ambient lighting was 64.8 FL. Figure 1 shows the mean VA plotted against increasing brightness of the BAT with standard error bars (s.e.m.). It can be seen that the first BAT setting made no impact on VA. There was an incremental loss of acuity for 100 FL (mean 2 letters loss) and 400 FL (mean 4 letters loss).

There was a slight increase in mean visual acuity (VA) at the lowest brightness setting (12 FL) of the Brightness Acuity Tester (BAT) compared to in ambient lighting conditions without a glare source (64.8 FL). With increasing BAT brightness (from 12–400 FL) mean VA decreased. Points are plotted with standard error (s.e.m.) bars.

Using the results for ambient lighting without glare and at 400 FL, Table 2 compares acuity reduction by glaucoma severity and by presence of media opacity, using two-tailed unpaired t-tests. Both media opacity and greater glaucoma severity were associated with greater visual loss under glare.

The response rate was 67% (n = 43) for the VFQ-25 and 64% (n = 41) for the four additional glare questions. Patients with AS glaucoma scored worse than those with MM glaucoma in self-rated general health (58.3 vs. 68.8 out of 100) and general vision (53.3 vs. 80 out of 100). The composite scores of the additional glare questions (‘A’ scores) ranged from 20 to 97.5 out of 100, with a mean of 77.4 (SD 19.3). The lowest-scoring questions were A1 and A2 (mean 6.7 and 7.3 out of 10, respectively), both of which relate to bright lighting.

Comparison with the disability glare results is complicated because many patients had different acuity changes in each eye. We therefore compared the self-reported visual symptoms both with the acuity change in the eye more affected by glare and with that in the less affected eye. In the more affected eyes, the mean drop in acuity with 400 FL glare was −4.7 letters (range −18 to 0); in the less affected eyes, it was −0.9 letters (range −12 to +13). Bland–Altman plots comparing the composite ‘A’ scores with the change in VA were constructed as described in the Methods (Fig. 2). The plots were very similar for the eyes most and least affected by glare. Both showed general agreement, with high variation for large amounts of VA reduction or perceived visual disability and closer agreement where there was little VA reduction or perceived visual disability.
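Selecting each patient’s more and less affected eye amounts to taking the per-patient minimum and maximum of the glare-induced change. In this hypothetical helper, a patient with only one analysable eye contributes that eye to both groups. This is an assumption, because the text does not say how such patients were handled.

```python
import statistics
from typing import Dict, List, Tuple


def worse_and_better_eye(changes: Dict[str, List[float]]) -> Dict[str, Tuple[float, float]]:
    """Map each patient to (worse-eye change, better-eye change) in letters.

    The worse eye has the larger drop, i.e. the more negative change under
    400 FL glare. A patient with one analysable eye contributes that eye to
    both columns (an assumption of this sketch); patients with no data are skipped.
    """
    return {pid: (min(c), max(c)) for pid, c in changes.items() if c}


# Hypothetical patients: glare-induced change in letters for each analysable eye.
pairs = worse_and_better_eye({"P01": [-6, 2], "P02": [-1, -1], "P03": [-4]})
print(pairs)  # {'P01': (-6, 2), 'P02': (-1, -1), 'P03': (-4, -4)}
print(statistics.mean(w for w, _ in pairs.values()))  # mean worse-eye change
```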

We have no reference body of work from which to determine the minimal clinically important difference for responses to the glare questions. The median response to the glare questions was 8, with most responses between 8 and 10. Two cut-offs were therefore investigated to create a binary outcome separating less symptomatic from more symptomatic patients: a response of 7 or below, or of 8 or below, defined ‘more symptomatic’. The summed score for the four questions combined was dichotomised in the same way. The mean reduction in acuity with glare in less symptomatic individuals was then compared with that in more symptomatic individuals using a two-tailed unpaired t-test, as shown in Table 1 for the better and worse eye. As some groups had quite unequal sample sizes, Welch’s t-test, which does not assume equal variances, was also undertaken; its P value is shown in brackets. Both cut-offs suggested concordance between the subjective and objective results. This was more pronounced for the cut-off of 7, which is why that cut-off is given in Table 1. For better eyes, questions A2 and A4 and the summed questions were most concordant.
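For reference, Student’s and Welch’s t statistics differ only in how the standard error and degrees of freedom are formed. The sketch below computes both in plain Python, using hypothetical data. Two-tailed P values then come from the t distribution. If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=True)` gives Student’s test and `equal_var=False` gives Welch’s test.

```python
import math
import statistics
from typing import Sequence, Tuple


def student_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Pooled-variance (Student) two-sample t statistic and degrees of freedom."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)
    pooled = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, float(na + nb - 2)


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch t statistic and Welch-Satterthwaite degrees of freedom (no equal-variance assumption)."""
    na, nb = len(a), len(b)
    sa, sb = statistics.variance(a) / na, statistics.variance(b) / nb
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(sa + sb)
    df = (sa + sb) ** 2 / (sa ** 2 / (na - 1) + sb ** 2 / (nb - 1))
    return t, df


# Hypothetical acuity changes (letters) in less and more symptomatic groups.
less_symptomatic = [0, -1, 2, -2, 0, 1, -1, 0]
more_symptomatic = [-6, -2, -9, -4]
print(student_t(less_symptomatic, more_symptomatic))
print(welch_t(less_symptomatic, more_symptomatic))
```

With equal group sizes, the two t statistics coincide and only the degrees of freedom differ. Welch’s correction matters most when unequal group sizes are combined with unequal variances.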

The possibility that ocular surface disturbance contributed to both objective and subjective glare was investigated. No association was found between the use of drops, the number of drops, or preserved vs. non-preserved drops and either the acuity drop with the BAT or responses to questions A1–A4. Logistic regression was undertaken to investigate the contributions of media opacity, severity of glaucoma and age to the objective reduction in acuity in the presence of glare. A binary outcome was created: those with improvement or no change in acuity were coded 0 (n = 12) and those with any decrease in acuity with glare were coded 1 (n = 33). The univariable and multivariable logistic regression results are shown in Table 3 and give no real suggestion of confounding.
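The outcome coding and a single-predictor logistic model can be sketched in plain Python. This is an illustrative Newton–Raphson fit, not the software used in the study, and the example data are hypothetical. For the multivariable model, a package such as statsmodels (`sm.Logit(y, sm.add_constant(X)).fit()`) would normally be used.

```python
import math
from typing import Sequence, Tuple


def code_outcome(change_letters: float) -> int:
    """1 = any decrease in acuity with glare; 0 = improvement or no change."""
    return 1 if change_letters < 0 else 0


def fit_univariate_logistic(x: Sequence[float], y: Sequence[int],
                            iters: int = 25) -> Tuple[float, float]:
    """Fit logit(P(y = 1)) = b0 + b1 * x by Newton-Raphson; returns (b0, b1)."""
    b0 = b1 = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p              # score (gradient) for b0
            g1 += (yi - p) * xi       # score for b1
            h00 += w                  # information matrix entries
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        if det == 0:
            raise ValueError("singular information matrix (e.g. constant predictor)")
        # Newton step: beta += I^{-1} g, using the closed-form 2x2 inverse.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (-h01 * g0 + h00 * g1) / det
    return b0, b1


# Hypothetical eyes: glare-induced change (letters) and media opacity (1 = present).
change = [-2, -1, -3, 0, 1, 0, -4, -2, -5, -1, -3, -6, 0, 2]
opacity = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1]
y = [code_outcome(c) for c in change]
b0, b1 = fit_univariate_logistic(opacity, y)
print(f"odds ratio for opacity: {math.exp(b1):.2f}")  # 3.00
```

With a binary predictor such as media opacity, exp(b1) is the odds ratio for any acuity decrease. In the hypothetical data it equals the cross-product ratio of the 2 × 2 table, (6/2)/(3/3) = 3.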

Discussion

In common with previous reports, patients’ VA decreased as glare brightness increased. The maximum brightness setting (400 FL) yielded the greatest VA reduction (mean loss of 4.0 letters) and was the most useful for assessing the effect of glare on vision, so measuring VA at all three BAT brightness levels seems unnecessary. Our finding of an association between the presence of media opacity and VA reduction from glare (−6.1 vs. −2.8 letters with and without media opacity, P = 0.03) agrees with previous reports [10].

AS glaucoma was associated with significantly worse disability glare than MM glaucoma (mean VA reduction of −5.0 vs. −1.6 letters), and this remained true when those with media opacities were excluded. The difference in mean VA reduction due to glare between MM and AS glaucoma (−3.4 letters, P = 0.01) was of almost identical magnitude to that between those with and without a clear visual axis in our cohort (mean difference −3.3 letters, P = 0.03). This finding has been less widely reported. Siah et al. recently evaluated the relationship between macular pigment optical density (MPOD) and disability glare in open-angle glaucoma [20]. They found that lower MPOD levels were associated with poorer CS under glare and with self-reported symptoms of glare.

In our study, patients reported worse symptoms relating to sunny outdoor conditions and glare from headlights (A1 + A2) than to supermarkets or navigating stairs (A3 + A4). More symptomatic patients tended to show greater VA reduction due to glare than less symptomatic patients, with greater concordance when a cut-off of 7/10 or below was used to define ‘more symptomatic’. The Bland–Altman plots showed good agreement between subjective and objective glare measurements for patients with little disability glare and few symptoms, with progressively more variation either side of agreement as either score worsened. This was true both for eyes most affected by glare and for those least affected. The variation could be due to the simplistic approach used to put the subjective and objective findings on a comparable scale for the Bland–Altman plot. The symptoms in question may not scale linearly, and a much larger study would be needed to investigate the validity of this approach. Other authors have also reported variable agreement between subjective and objective glare assessment [21, 22]. Just as with pain, the same stimulus may be perceived as severe by some individuals and minor by others, so the subjective appreciation of an objective finding varies. Despite this, patients found the additional glare questions very relevant to their experience of glaucoma, and found the BAT easy to use.

The concept of the ‘patient acceptable symptom state’ (PASS) could be applied to future studies to estimate an acceptable level of glare symptoms for patients. It has been defined as the value beyond which patients consider themselves well. With an anchoring question to determine whether their current state is satisfactory, it can be used to determine relevant cut-offs for patient-reported outcome measures. The PASS cut-off has been defined as the 75th centile of scores of those who consider their current state satisfactory [23]. Though developed for rheumatology trials, it has also been used to evaluate VA and patient-reported visual function outcomes of cataract extraction [24].
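The PASS rule can be sketched as a percentile of scores among patients who answer the anchoring question as satisfied. In this hypothetical Python helper, the 75th centile applies to scales where higher scores mean worse symptoms, as in the cited definition. Two further choices are assumptions of the sketch: taking the 25th centile instead for a higher-is-better scale such as the ‘A’ score, and using the 'inclusive' quantile method.

```python
import statistics
from typing import Sequence


def pass_cutoff(scores: Sequence[float], satisfied: Sequence[bool],
                higher_is_worse: bool = True) -> float:
    """PASS threshold from the scores of patients satisfied with their current state.

    Cited definition: 75th centile, for scales where higher = worse.
    For higher-is-better scales this sketch mirrors the rule and returns
    the 25th centile instead (an assumption).
    """
    sat = [s for s, ok in zip(scores, satisfied) if ok]
    if len(sat) < 2:
        raise ValueError("need at least two satisfied patients")
    q1, _, q3 = statistics.quantiles(sat, n=4, method="inclusive")
    return q3 if higher_is_worse else q1


# Hypothetical symptom scores (higher = worse) and anchoring-question answers.
print(pass_cutoff([10, 20, 30, 40, 50, 90], [True] * 5 + [False]))  # 40.0
```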

Limitations of the study include the BAT’s pinhole effect, the use of high-contrast VA testing and the small number of eyes with severe objective visual reduction: only 15 eyes lost more than 10 letters whilst using the BAT. Viewing the ETDRS chart through the central aperture of the BAT, and the miosis induced by the glare source, may both cause a pinhole effect that improves distance VA, as other authors have noted [2, 9, 25]. Our results showed a slight increase in mean VA at the lowest BAT brightness compared with VA under ambient lighting (Fig. 1). This may lead to underestimation of the VA reduction caused by glare in non-clinical settings. A high-contrast ETDRS chart was used, as per standard practice in the clinic. Elliott and Bullimore tested multiple glare testers for reliability and validity. They found that CS or low-contrast acuity measured in the presence of glare was superior to disability glare measured using high-contrast tests such as the ETDRS chart [10]. High-contrast acuity charts may not best reflect everyday vision of low-contrast objects such as faces.

It is well known that increasing severity of glaucoma is correlated with poorer QoL [3]. As noted by Enoch et al., clinicians must be aware of the effects of illumination on glaucoma patients and that ‘visual function as measured in the clinic may not reflect their real-world visual performance, especially at night or under scotopic conditions’ [4]. The purpose of this data collection was to determine whether additional tools such as the BAT, in combination with new glare-specific questions, can help us assess the glaucoma patient’s experience of glare and visual disability better than VA or visual field measurements alone. Our study highlights two key points. First, severe glaucoma is associated with significant disability glare. Second, glaucoma is associated with both objective and subjective worsening of vision due to glare, but agreement between the two measures is poor where either is more than minimal.

Summary

What was known before

- Glaucoma patients suffer from poorer QoL.
- Optic nerve assessment, visual fields and VA are the mainstay of clinical assessment.
- In glaucoma, visual function in non-ideal circumstances outside the clinic cannot be predicted from standard acuity testing in clinic.
- Glare is a significant problem for glaucoma patients but is not often measured or enquired about by clinicians.

What this study adds

- Severity of glaucoma is associated with worse VA under glare conditions.
- Glaucoma is associated with both objective and subjective acuity reduction in the presence of glare.
- The worse the glare symptoms or signs, the more variable the agreement between objective and subjective assessments.

References

Bhorade A, Perlmutter M, Wilson B, Kambarian J, Chang S, Pekmezci, et al. Differences in vision between clinic and home and the effect of lighting in older adults with and without glaucoma. JAMA Ophthalmol. 2013;131:1554.

Aslam T, Haider D, Murray I. Principles of disability glare measurement: an ophthalmological perspective. Acta Ophthalmol Scand. 2007;85:354–60.

Goldberg I, Clement C, Chiang T, Walt J, Lee L, Graham S, et al. Assessing quality of life in patients with glaucoma using the glaucoma quality of Life-15 (GQL-15) Questionnaire. J Glaucoma. 2009;18:6–12.

Enoch J, Jones L, Taylor D, Bronze C, Kirwan J, Jones P, et al. How do different lighting conditions affect the vision and quality of life of people with glaucoma? A systematic review. Eye. 2019;34:138–54.

Janz N, Wren P, Lichter P, Musch D, Gillespie B, Guire K, et al. The collaborative initial glaucoma treatment study interim quality of life findings after initial medical or surgical treatment of glaucoma. Ophthalmology. 2001;108:1954–65.

Rubin G, Bandeen-Roche K, Huang G, Muñoz B, Schein O, Fried L, et al. The association of multiple visual impairments with self-reported visual disability: SEE Project. Invest Ophthalmol Vis Sci. 2001;42:64–72.

Nelson P, Aspinall P, Papasouliotis O, Worton B, O’Brien C. Quality of life in glaucoma and its relationship with visual function. J Glaucoma. 2003;12:139–50.

American Academy of Ophthalmology. Contrast sensitivity and glare testing in the evaluation of anterior segment disease. Ophthalmology. 1990;97:1233–7.

Mainster M, Turner P. Glare’s causes, consequences, and clinical challenges after a century of ophthalmic study. Am J Ophthalmol. 2012;153:587–93.

Elliott D, Bullimore M. Assessing the reliability, discriminative ability, and validity of disability glare tests. Invest Ophthalmol Vis Sci. 1993;34:108–19.

Neumann A, McCarty G, Locke J, Cobb B. Glare disability devices for cataractous eyes: a consumer’s guide. J Cataract Refract Surg. 1988;14:212–6.

Frost N, Sparrow J. Use of vision tests in clinical decision making about cataract surgery: results of a national survey. Br J Ophthalmol. 2000;84:432–4.

Mills R, Budenz D, Lee P, Noecker R, Walt J, Siegartel L, et al. Categorizing the stage of glaucoma from pre-diagnosis to end-stage disease. Am J Ophthalmol. 2006;141:24–30.

Chylack L, Wolfe J, Singer D, Leske M, Bullimore M, Bailey I, et al. The lens opacities classification system III. Arch Ophthalmol. 1993;111:831.

Kniestedt C, Stamper R. Visual acuity and its measurement. Ophthalmol Clin North Am. 2003;16:155–70.

Mangione C, Lee P, Gutierrez P, Spritzer K, Berry S, Hays R. Development of the 25-list-item National Eye Institute Visual Function Questionnaire. Arch Ophthalmol. 2001;119:1050.

Green J, Siddall H, Murdoch I. Learning to live with glaucoma: a qualitative study of diagnosis and the impact of sight loss. Soc Sci Med. 2002;55:257–67.

Mangione C. National Eye Institute. 2000. https://www.nei.nih.gov/sites/default/files/2019-06/manual_cm2000.pdf. Accessed 17 Feb 2020.

Giavarina D. Understanding Bland Altman analysis. Biochem Med. 2015;25:141–51.

Siah W, O’Brien C, Loughman J. Macular pigment is associated with glare-affected visual function and central visual field loss in glaucoma. Br J Ophthalmol. 2017;102:929–35.

Adamsons I, Vitale S, Stark W, Rubin G. The association of postoperative subjective visual function with acuity, glare, and contrast sensitivity in patients with early cataract. Arch Ophthalmol. 1996;114:529.

Elliott D, Hurst M, Weatherill J. Comparing clinical tests of visual function in cataract with the patient’s perceived visual disability. Eye. 1990;4:712–7.

Tubach F. Evaluation of clinically relevant states in patient reported outcomes in knee and hip osteoarthritis: the patient acceptable symptom state. Ann Rheum Dis. 2005;64:34–7.

Quintana J, Aguirre U, Las-Hayas C, Gonzalez N, Garcia S, Escobar A. Use of the patient acceptable symptom state and the minimal clinically important difference to evaluate the outcomes of cataract extraction. Am J Ophthalmol. 2011;152:234–43.e3.

Tan J, Spalton D, Arden G. Comparison of methods to assess visual impairment from glare and light scattering with posterior capsule opacification. J Cataract Refract Surg. 1998;24:1626–31.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethics statement

This study was a retrospective review of routinely collected clinical data. As such it is registered with our audit department as data exploration.


Cite this article

Hamedani, M., Dulley, B. & Murdoch, I. Glaucoma and glare. Eye 35, 1741–1747 (2021). https://doi.org/10.1038/s41433-020-01164-8


DOI: https://doi.org/10.1038/s41433-020-01164-8
