Abstract

Background

In mCRC, CEA is used together with imaging to monitor response to systemic therapy. After the end of induction, no major improvement in tumour shrinkage is expected, and a marker able to predict progressive disease (PD) versus no-PD might allow CT scans to be avoided.

Methods

We pooled data from patients with baseline CEA ≥ 10 ng/mL included in TRIBE and TRIBE2 studies with the aim of identifying a threshold for percent increase of CEA from nadir able to predict PD after the end of the induction therapy.

Results

In total, 1178 paired CEA and radiological assessments from 434 patients were included. According to the optimal cut-off determined by ROC analysis, a CEA increase of at least 120% from nadir differentiated between PD and no-PD with a sensitivity of 74% and a specificity of 78%, excluding PD in 92% of radiological assessments and allowing 67% of CT scans to be avoided. However, the 120% cut-off failed to detect radiological PD in 26% of cases. To mitigate this issue, a different clinically relevant threshold was evaluated based on the best sensitivity cut-off. Using any CEA increase from nadir as the threshold, sensitivity rose to 93% and radiological PD went undetected in only 7% of cases.

Conclusions

In mCRC with baseline CEA ≥ 10 ng/mL, CEA values can accurately predict PD versus no-PD after the end of the first-line induction therapy.

Background

Carcinoembryonic antigen (CEA) is a glycoprotein involved in cell adhesion and is normally produced in gastrointestinal tissues during foetal development. Serum CEA levels are usually low in healthy individuals (0–5 ng/ml), but may rise in some non-neoplastic conditions, including inflammatory bowel disease, hepatitis, pancreatitis and pulmonary infections, in smokers (rarely over 10 ng/ml), and in patients affected by gastrointestinal, lung and breast tumours [1, 2].

Although CEA is elevated in approximately two-thirds of colorectal cancer (CRC) patients, international guidelines do not recommend its use as a screening or diagnostic tool due to the low sensitivity and specificity [3,4,5].

In metastatic CRC (mCRC), changes in CEA serum levels are used to monitor response to systemic therapies together with radiological imaging. In particular, a series of retrospective studies showed a correlation between CEA variation and response to chemotherapy, thus suggesting a role for CEA in avoiding radiological imaging [6,7,8,9]. A recent pooled analysis of mCRC patients with elevated baseline CEA from seven randomised clinical trials of first-line chemotherapy with or without targeted therapy, collected in the ARCAD database, identified a threshold for CEA decrease from baseline to the first and second CT scan reassessment that selects patients without progressive disease, in whom CT scans could be avoided [10]. However, in this phase, information provided by imaging strongly affects subsequent treatment choices, including the possibility of performing locoregional treatments and the choice between maintenance therapy and a treatment holiday. Therefore, radiological assessment during upfront chemotherapy may be highly informative even in patients with decreased CEA levels.

Nowadays, after the induction phase, maintenance periods or treatment breaks are common choices in the treatment of mCRC patients [11]. Indeed, after 4–6 months of combination therapy, no major further tumour shrinkage is expected. Therefore, in this phase, a marker able to predict disease control status (progressive disease (PD) versus no-PD) would be useful to avoid or defer CT scans. To the best of our knowledge, no specific data are available in this regard.

Drawing from these considerations, we assessed the role of CEA in predicting PD after induction therapy in mCRC patients enrolled in two Phase III randomised trials by Gruppo Oncologico del Nord Ovest, TRIBE and TRIBE2, which compared FOLFOXIRI (5-fluorouracil, leucovorin, oxaliplatin and irinotecan) plus bevacizumab with FOLFIRI (5-fluorouracil, leucovorin and irinotecan) or FOLFOX (5-fluorouracil, leucovorin and oxaliplatin) plus bevacizumab as first-line treatment. In both studies, maintenance therapy with 5-fluorouracil and bevacizumab until disease progression, unacceptable adverse events or consent withdrawal was planned following the upfront combination therapy [12,13,14].

Methods

Study design and procedures

TRIBE and TRIBE2 are two Phase III randomised, open-label, multicentre trials involving 1187 initially unresectable untreated mCRC patients. In the TRIBE study, 508 patients were randomised in a 1:1 ratio to receive FOLFIRI/bevacizumab or FOLFOXIRI/bevacizumab, while in the TRIBE2 trial, 679 patients were randomised in a 1:1 ratio to receive FOLFOX/bevacizumab followed by FOLFIRI/bevacizumab after disease progression (PD) or FOLFOXIRI/bevacizumab followed by the reintroduction of the same agents after PD. All treatments were administered up to 12 cycles in TRIBE and up to 8 cycles in TRIBE2, followed by 5-fluorouracil plus bevacizumab until PD, unacceptable adverse events, or consent withdrawal in both trials [12,13,14].

CEA and radiological disease assessments (contrast-enhanced chest and abdominal CT scan, or abdomen MRI and chest CT if contrast-enhanced CT scan was contraindicated) were performed every 8 weeks. PD assessment was based on investigator reported measurements according to RECIST version 1.0 and version 1.1 in TRIBE and TRIBE2, respectively.

In the present analysis, only patients with a baseline value of CEA ≥ 10 ng/mL prior to the beginning of the induction treatment, not progressing during induction therapy, and with at least one paired radiological evaluation and CEA assessment after the end of the induction therapy were included. CEA and CT scan assessments were considered paired if no more than 15 days between the two exams had elapsed.
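The pairing rule above can be sketched in a few lines of Python. This is only an illustration: the function name `pair_assessments`, the record layout and the "closest CEA within the window" tie-break are assumptions, since the text states only the 15-day limit.

```python
from datetime import date

MAX_GAP_DAYS = 15  # pairing window stated in the text


def pair_assessments(cea_records, ct_records, max_gap_days=MAX_GAP_DAYS):
    """Pair each CT scan with a CEA measurement taken within the window.

    cea_records: list of (date, cea_value) tuples for one patient
    ct_records:  list of (date, is_pd) tuples for the same patient
    Returns a list of (ct_date, cea_value, is_pd) tuples.
    """
    pairs = []
    for ct_date, is_pd in ct_records:
        # Keep only CEA measurements inside the allowed window around the scan
        candidates = [
            (abs((cea_date - ct_date).days), cea_value)
            for cea_date, cea_value in cea_records
            if abs((cea_date - ct_date).days) <= max_gap_days
        ]
        if candidates:
            # Assumption: when several qualify, take the closest in time
            _, cea_value = min(candidates, key=lambda c: c[0])
            pairs.append((ct_date, cea_value, is_pd))
    return pairs


# Hypothetical patient: only the first scan has a CEA value within 15 days
cea = [(date(2013, 1, 10), 12.0), (date(2013, 3, 1), 30.0)]
ct = [(date(2013, 1, 20), False), (date(2013, 4, 1), True)]
paired = pair_assessments(cea, ct)
```

Scans without a CEA value inside the window are simply dropped, which mirrors the requirement of at least one paired assessment per patient.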

Objectives

The primary aim was to investigate whether the increase of CEA from nadir (the lowest value of CEA after baseline) could predict progressive disease during maintenance or follow-up after the end of the induction therapy in mCRC patients enrolled in the TRIBE and TRIBE2 studies. In particular, we aimed at identifying a threshold for percent increase in CEA from nadir able to predict disease progression. To this purpose, all paired CEA and radiological assessments after the end of induction were analysed.
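As a hedged illustration of the quantity being thresholded, the sketch below computes the percent increase from nadir for a chronological series of post-baseline CEA values. The function name is hypothetical, and treating the nadir as a running minimum (lowest value observed up to each time point) is an assumption; the text defines the nadir only as the lowest value after baseline.

```python
def percent_increase_from_nadir(post_baseline_cea):
    """Percent increase over the running nadir for each CEA value.

    post_baseline_cea: CEA values (ng/mL) after baseline, in chronological order.
    Assumption: the nadir is the lowest value seen up to and including each
    time point, so the increase is 0 whenever a new nadir is reached.
    """
    increases = []
    nadir = None
    for value in post_baseline_cea:
        nadir = value if nadir is None else min(nadir, value)
        if nadir > 0:
            increases.append(100.0 * (value - nadir) / nadir)
        else:
            # A nadir of 0 makes the ratio undefined; flag any rise as infinite
            increases.append(float("inf") if value > 0 else 0.0)
    return increases


# Hypothetical series with a nadir of 5 ng/mL
increases = percent_increase_from_nadir([40.0, 5.0, 8.0, 11.0, 12.0])
```

With a nadir of 5 ng/mL, a value of 11 ng/mL corresponds to an increase of exactly 120%.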

Statistics

The cut-off for percentage increase in CEA from nadir was selected using two approaches:

- The optimal cut-off determined by ROC analysis to maximise the Youden index (i.e. sensitivity + specificity − 1). Internal validation was conducted by calculating the bootstrap optimism-corrected AUC.

- A clinically relevant cut-off to maximise sensitivity (i.e. to minimise the cases of radiological PD not associated with an increase in CEA).

Using each of the above criteria, the prediction performance of the selected cut-offs was evaluated by sensitivity, specificity, positive predictive value (PPV) and negative predictive value (NPV).
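The two steps described above, choosing the cut-off that maximises the Youden index and then reporting sensitivity, specificity, PPV and NPV, can be sketched as follows. The analysis itself was run in SAS; this pure-Python version, its function names and the toy data are assumptions for illustration only, and it omits the bootstrap validation.

```python
def confusion_counts(increases, is_pd, cutoff):
    """Count TP, FP, FN, TN, treating 'increase >= cutoff' as a positive test."""
    tp = fp = fn = tn = 0
    for increase, progressed in zip(increases, is_pd):
        test_positive = increase >= cutoff
        if test_positive and progressed:
            tp += 1
        elif test_positive:
            fp += 1
        elif progressed:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def ratio(numerator, denominator):
    """Return None instead of failing when a denominator is empty."""
    return numerator / denominator if denominator else None


def performance(tp, fp, fn, tn):
    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


def youden_cutoff(increases, is_pd):
    """Scan observed values and keep the cut-off maximising J = sens + spec - 1.

    Requires at least one PD and one no-PD assessment in the data.
    """
    best_cutoff, best_j = None, float("-inf")
    for cutoff in sorted(set(increases)):
        tp, fp, fn, tn = confusion_counts(increases, is_pd, cutoff)
        j = tp / (tp + fn) + tn / (tn + fp) - 1
        if j > best_j:  # ties keep the lower cut-off
            best_cutoff, best_j = cutoff, j
    return best_cutoff, best_j


# Toy data (hypothetical): percent increases from nadir and PD status
increases = [0, 10, 50, 130, 200, 300, 0, 150]
is_pd = [False, False, False, True, True, True, True, False]
best_cutoff, best_j = youden_cutoff(increases, is_pd)
perf = performance(*confusion_counts(increases, is_pd, best_cutoff))
```

The sensitivity-driven criterion corresponds to flagging any value above 0, that is, a strict inequality rather than the `>=` comparison used here.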

The chi-square test, Fisher’s exact test and Mann–Whitney test were used, when appropriate, to compare clinical and biological features between patients with CEA value <10 ng/mL and ≥10 ng/mL, and between patients with radiological PD not associated with CEA increase and all other patients. Odds ratios (OR) and the corresponding 95% Confidence Intervals (CIs) were estimated using the logistic regression model. Progression-free survival (PFS) was determined according to the Kaplan–Meier estimates method.
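For a single binary predictor, the odds ratio from a logistic regression coincides with the cross-product ratio of the 2×2 table, so the calculation can be sketched without a modelling library. The counts below are hypothetical (chosen to mirror PD probabilities of 48% and 8% per 100 assessments), the function name is an assumption, and the Wald interval ignores any correlation between repeated assessments of the same patient.

```python
import math


def odds_ratio_with_ci(a, b, c, d, z=1.96):
    """Odds ratio and Wald 95% CI from a 2x2 table.

    a: test positive with PD      b: test positive without PD
    c: test negative with PD      d: test negative without PD
    """
    odds_ratio = (a * d) / (b * c)
    # Standard error of the log odds ratio (Woolf method)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts, not the study data
or_value, ci_low, ci_high = odds_ratio_with_ci(a=48, b=52, c=8, d=92)
```

Because these counts come from rounded percentages and an arbitrary sample size, the result will not reproduce the odds ratios or confidence intervals reported in the Results.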

All statistical tests were two-sided, and P values of 0.05 or less were deemed significant. Statistical analyses were performed using SAS version 9.4 (SAS Institute, Inc., Cary, NC). The data cut-off for the present analysis was July 31, 2014 and July 30, 2019 for TRIBE and TRIBE2, respectively.

Results

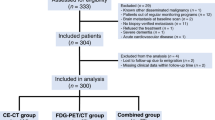

Among 1187 patients enrolled in the TRIBE and TRIBE2 studies, 1117 had a baseline CEA value available (733 (66%) with CEA ≥ 10 ng/mL and 384 (34%) with CEA < 10 ng/mL) (Fig. 1). As shown in Supplementary Table 1, patients with CEA < 10 ng/mL more frequently had an ECOG-PS of 0 (P < 0.01), metachronous disease (P < 0.01), a previously resected primary tumour (P < 0.01), no liver metastases (P < 0.01), oligometastatic disease (P < 0.01) and low tumour burden (P < 0.01) as previously defined [15], and BRAF mutated tumours (P = 0.01), and had more frequently received previous adjuvant therapy (P < 0.01).

Overall, 434 patients fulfilled the inclusion criteria of this study (Fig. 1). Their clinicopathological baseline characteristics are listed in Table 1. Most of the patients were males (61%) and had ECOG-PS 0 (89%), left-sided primary tumour (67%), synchronous disease (87%), liver metastases (89%) and RAS mutated tumour (63%). Median CEA levels were 99 ng/ml and 5 ng/ml at baseline and at nadir, respectively.

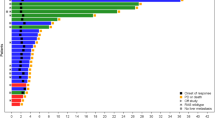

In the ROC analysis, the rise in CEA from nadir was significantly associated with PD, with high predictive accuracy (AUC: 0.81, 95% CI: 0.79–0.83, P < 0.01) (Fig. 2). Internal validation of the model using the bootstrap optimism-corrected AUC showed similar results (AUC: 0.81, 95% CI: 0.78–0.84, P < 0.01). The optimal cut-off determined by the ROC analysis was 120%: a CEA increase of at least 120% from nadir differentiated between PD and no-PD with a sensitivity of 74% (95% CI: 68–79%) and a specificity of 78% (95% CI: 75–81%) (Table 2 and Fig. 3a). Using this cut-off, PD could be properly excluded in 92% (95% CI: 90–93%) of radiological assessments, and 67% of CT scans (i.e. those with no CEA increase or an increase <120%) could be avoided. Patients with an increase in CEA of at least 120% from nadir had a 48% probability of PD compared to 8% in patients with no increase or an increase of CEA < 120% (OR: 10.0, 95% CI: 7.29–13.82, P < 0.01). However, in 26% of PD cases, no CEA increase or a CEA increase <120% was observed (Fig. 3b). No specific characteristics could be identified that distinguished these patients from those in whom PD occurred with a CEA increase of at least 120% (Table 3). In patients with no radiological evidence of PD at the time of a CEA increase of at least 120% from nadir, such an increase anticipated the evidence of disease progression by a median time of 3.9 months. Of note, after a median follow-up of 26.3 months, PD never occurred in 6% of cases (Supplementary Fig. 1A).

Based on the best sensitivity cut-off, any CEA increase from nadir differentiated between PD and no-PD with a sensitivity of 93% (95% CI: 89–95%) and a specificity of 35% (95% CI: 32–39%) (Table 2 and Fig. 3c). Using this cut-off, PD could be excluded in 95% (95% CI: 92–96%) of patients and 29% of CT scans could be avoided. Patients with an increase in CEA from nadir had a 28% probability of PD compared to 5% in patients without CEA increase (OR: 6.8, 95% CI: 4.16–11.02, P < 0.01). In only 7% of PD cases did the CEA levels not increase from nadir (Fig. 3d). This group more frequently had oligometastatic disease at baseline compared with other patients (P = 0.04) (Table 3). In patients with no radiological evidence of PD at the time of any CEA increase from nadir, such an increase anticipated the evidence of disease progression by a median time of 5.8 months. After a median follow-up of 28.3 months, 11% of these patients never experienced PD (Supplementary Fig. 1B).
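The reported figures for the two cut-offs are tied together by Bayes' rule, and a short sketch can show how PPV, NPV and the share of avoidable scans follow from sensitivity, specificity and the proportion of assessments with PD. That proportion is not stated in this text; the value 0.215 used below is back-calculated from the reported sensitivity, specificity and PPV, and should be read as an assumption rather than a study result.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Derive PPV, NPV and the share of negative tests via Bayes' rule."""
    tp = sensitivity * prevalence
    fn = (1 - sensitivity) * prevalence
    fp = (1 - specificity) * (1 - prevalence)
    tn = specificity * (1 - prevalence)
    return {
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        # Share of assessments below the cut-off, i.e. potentially avoidable scans
        "negative_share": tn + fn,
    }


# Assumption: back-calculated from the reported figures, not given in the text
ASSUMED_PD_PREVALENCE = 0.215

at_120 = predictive_values(0.74, 0.78, ASSUMED_PD_PREVALENCE)
any_increase = predictive_values(0.93, 0.35, ASSUMED_PD_PREVALENCE)
```

Under this assumed prevalence, both cut-offs give values within one percentage point of the reported PPV (48% and 28%), NPV (92% and 95%) and avoidable scans (67% and 29%), which suggests the reported figures are internally consistent.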

Discussion

The evaluation of response to systemic therapies is based on radiological imaging by means of a CT scan or MRI every two to three months, according to RECIST [16].

During the upfront induction treatment, other measures of response, such as early tumour shrinkage and depth of response, provide additional useful prognostic information [17]. Moreover, radiological imaging is also essential to determine eligibility for local treatment modalities, including surgery, ablation, or radiation especially in potentially resectable diseases [11].

On the other hand, during maintenance or treatment breaks, the main objective of disease reassessment by radiological imaging is the detection of PD or disease relapse. To this end, monitoring tumour evolution through a simple and cheap serum marker may limit the use of CT scans, thus avoiding unnecessary and relatively expensive series of imaging assessments.

According to international guidelines, CEA is the marker of choice to monitor mCRC in addition to radiological imaging. However, ASCO recommendations also highlight that data are not sufficient to routinely use CEA serum levels as an alternative to imaging [18]. Indeed, CEA increase during chemotherapy may also be related to treatment-induced changes in liver function [19] and to other non-cancer-related causes, including acute or chronic inflammatory conditions. Moreover, the available evidence comes only from retrospective studies based on small numbers of patients, which did not identify a cut-off value for CEA variation able to predict radiological PD [6,7,8,9,10].

Here, we sought to evaluate the effectiveness of different cut-off values of CEA increase that could be adopted in daily practice to predict PD during maintenance or treatment breaks following the completion of the first-line induction therapy. The optimal cut-off identified by the ROC analysis, a CEA increase of at least 120% from nadir, showed a predictive accuracy >80%, with high sensitivity and specificity (74% and 78%, respectively), confirmed by bootstrap internal validation. Due to the high NPV, PD could be excluded in more than 90% of CEA measurements. On the other hand, the lower PPV (48%) means that our data support the need to confirm PD with a radiological assessment. As a consequence, around 70% of the radiological assessments could be avoided by adopting this cut-off value. The main limitation is that the CEA cut-off of 120% was not able to detect PD in 26% of cases.

To mitigate this issue, a different clinically relevant cut-off was chosen. A previous study, evaluating the association between CEA increase and disease control in 66 mCRC patients enrolled in a Phase I–II study, showed that any CEA increase from nadir or baseline is the best threshold to predict radiological PD at different time points, with sensitivity and NPV of 100% [20]. Using any increase of CEA from nadir, the sensitivity rose from 74% to 93% and PD went undetected in only 7% of cases. Patients with no CEA increase at the time of PD were more frequently oligometastatic at baseline. Due to the lower specificity (35% vs 78%) and PPV (28% vs 48%), the number of avoidable radiological assessments was reduced to around 30% of cases.

Clear limitations of our work are the lack of centralised radiological review of imaging and the local assessment of CEA. Indeed, the study protocols gave no specific recommendation to always measure CEA at the same site for a given patient during follow-up. Because the upper limit of normal for CEA varies across laboratories, and in order to exclude both patients with normal CEA values (usually < 5 ng/ml) and those with CEA raised by non-neoplastic conditions (generally < 10 ng/ml), we included only patients with baseline CEA ≥ 10 ng/mL in our analysis. Another limitation is the retrospective nature of the study, although the large sample size based on prospectively collected data from two large Phase III randomised trials may mitigate this issue. In addition, all the patients included in this series received chemotherapy plus bevacizumab, so we cannot draw conclusions about whether the present results apply to patients receiving an anti-EGFR-based upfront treatment.

In conclusion, in mCRC patients with baseline CEA ≥ 10 ng/mL, after the end of induction therapy, a CEA increase from nadir of at least 120% is able to predict the disease control status (PD vs no-PD) with the best balance of sensitivity and specificity and with very high NPV, and allows imaging to be avoided in around 70% of cases. Therefore, this threshold could be used during follow-up in most patients who are not candidates for resection of metastases after the end of induction chemotherapy, thus sparing a relevant number of radiological assessments. Using any increase of CEA as the cut-off further increases the sensitivity of this assessment while reducing specificity. This threshold should be considered especially when missing PD may cause immediate deterioration of patients’ conditions due to a high risk of disease-related symptoms (e.g. liver failure due to multiple liver metastases, intestinal occlusion due to peritoneal carcinomatosis, uncontrolled pain due to pelvic relapse). While novel tumour monitoring approaches, including circulating tumour DNA [21,22,23], are currently under investigation to properly assess their added value in the therapeutic route of affected patients and their cost-effectiveness, present data require confirmation before being translated into clinical practice.

Data availability

Datasets supporting the results of this work are available to editors, referees and readers promptly upon request.

References

Hammarström S. The carcinoembryonic antigen (CEA) family: structures, suggested functions and expression in normal and malignant tissues. Semin Cancer Biol. 1999;9:67–81.

Kuipers EJ, Grady WM, Lieberman D, Seufferlein T, Sung JJ, Boelens PG, et al. Colorectal cancer. Nat Rev Dis. Prim. 2015;1:15065.

Argilés G, Tabernero J, Labianca R, Hochhauser D, Salazar R, Iveson T, et al. Localised colon cancer: ESMO Clinical Practice Guidelines for diagnosis, treatment and follow-up. Ann Oncol J Eur Soc Med Oncol. 2020;31:1291–305.

Konishi T, Shimada Y, Hsu M, Tufts L, Jimenez-Rodriguez R, Cercek A, et al. Association of preoperative and postoperative serum carcinoembryonic antigen and colon cancer outcome. JAMA Oncol. 2018;4:309–15.

Duffy MJ, van Dalen A, Haglund C, Hansson L, Klapdor R, Lamerz R, et al. Clinical utility of biochemical markers in colorectal cancer: European Group on Tumour Markers (EGTM) guidelines. Eur J Cancer. 2003;39:718–27.

Iwanicki-Caron I, Di Fiore F, Roque I, Astruc E, Stetiu M, Duclos A, et al. Usefulness of the serum carcinoembryonic antigen kinetic for chemotherapy monitoring in patients with unresectable metastasis of colorectal cancer. J Clin Oncol. 2008;26:3681–6.

Yu P, Zhou M, Qu J, Fu L, Li X, Cai R, et al. The dynamic monitoring of CEA in response to chemotherapy and prognosis of mCRC patients. BMC Cancer. 2018;18:1076.

Michl M, Stintzing S, Fischer von Weikersthal L, Decker T, Kiani A, Vehling-Kaiser U, et al. CEA response is associated with tumor response and survival in patients with KRAS exon 2 wild-type and extended RAS wild-type metastatic colorectal cancer receiving first-line FOLFIRI plus cetuximab or bevacizumab (FIRE-3 trial). Ann Oncol. 2016;27:1565–72.

Ward U, Primrose JN, Finan PJ, Perren TJ, Selby P, Purves DA, et al. The use of tumour markers CEA, CA-195 and CA-242 in evaluating the response to chemotherapy in patients with advanced colorectal cancer. Br J Cancer. 1993;67:1132–5.

Gulhati P, Yin J, Pederson L, Schmoll H-J, Hoff P, Douillard J-Y, et al. Threshold change in CEA as a predictor of non-progression to first-line systemic therapy in metastatic colorectal cancer patients with elevated CEA. JNCI J Natl Cancer Inst. 2020;112:1127–36.

Van Cutsem E, Cervantes A, Adam R, Sobrero A, Van Krieken JH, Aderka D, et al. ESMO consensus guidelines for the management of patients with metastatic colorectal cancer. Ann Oncol J Eur Soc Med Oncol. 2016;27:1386–422.

Loupakis F, Cremolini C, Masi G, Lonardi S, Zagonel V, Salvatore L, et al. Initial therapy with FOLFOXIRI and bevacizumab for metastatic colorectal cancer. N Engl J Med. 2014;371:1609–18.

Cremolini C, Loupakis F, Antoniotti C, Lupi C, Sensi E, Lonardi S, et al. FOLFOXIRI plus bevacizumab versus FOLFIRI plus bevacizumab as first-line treatment of patients with metastatic colorectal cancer: updated overall survival and molecular subgroup analyses of the open-label, phase 3 TRIBE study. Lancet Oncol. 2015;16:1306–15.

Cremolini C, Antoniotti C, Rossini D, Lonardi S, Loupakis F, Pietrantonio F, et al. Upfront FOLFOXIRI plus bevacizumab and reintroduction after progression versus mFOLFOX6 plus bevacizumab followed by FOLFIRI plus bevacizumab in the treatment of patients with metastatic colorectal cancer (TRIBE2): a multicentre, open-label, phase 3, randomised, controlled trial. Lancet Oncol. 2020;21:497–507.

Moretto R, Rossini D, Zucchelli G, Lonardi S, Bergamo F, Santini D, et al. Oligometastatic colorectal cancer: prognosis, role of locoregional treatments and impact of first-line chemotherapy-a pooled analysis of TRIBE and TRIBE2 studies by Gruppo Oncologico del Nord Ovest. Eur J Cancer. 2020;139:81–9.

Eisenhauer EA, Therasse P, Bogaerts J, Schwartz LH, Sargent D, Ford R, et al. New response evaluation criteria in solid tumours: revised RECIST guideline (version 1.1). Eur J Cancer. 2009;45:228–47.

Heinemann V, Stintzing S, Modest DP, Giessen-Jung C, Michl M, Mansmann UR. Early tumour shrinkage (ETS) and depth of response (DpR) in the treatment of patients with metastatic colorectal cancer (mCRC). Eur J Cancer. 2015;51:1927–36.

Locker GY, Hamilton S, Harris J, Jessup JM, Kemeny N, Macdonald JS, et al. ASCO 2006 update of recommendations for the use of tumor markers in gastrointestinal cancer. J Clin Oncol J Am Soc Clin Oncol. 2006;24:5313–27.

Moertel CG, Fleming TR, Macdonald JS, Haller DG, Laurie JA, Tangen C. An evaluation of the carcinoembryonic antigen (CEA) test for monitoring patients with resected colon cancer. J Am Med Assoc. 1993;270:943–7.

Hermunen K, Lantto E, Poussa T, Haglund C, Österlund P. Can carcinoembryonic antigen replace computed tomography in response evaluation of metastatic colorectal cancer? Acta Oncol Stock Swed. 2018;57:750–8.

Tie J, Kinde I, Wang Y, Wong HL, Roebert J, Christie M, et al. Circulating tumor DNA as an early marker of therapeutic response in patients with metastatic colorectal cancer. Ann Oncol J Eur Soc Med Oncol. 2015;26:1715–22.

Corcoran RB, Chabner BA. Application of cell-free DNA analysis to cancer treatment. N Engl J Med. 2018;379:1754–65.

Siravegna G, Mussolin B, Venesio T, Marsoni S, Seoane J, Dive C, et al. How liquid biopsies can change clinical practice in oncology. Ann Oncol. 2019;30:1580–90.

Acknowledgements

The authors are grateful to GONO and ARCO Foundations, to all participating patients and their families, and to the GONO investigators from the participating Italian centres.

Funding

The study was supported by GONO and ARCO Foundations (no grant number applicable).

Author information

Contributions

Study concepts: RM, DR, VC, AF and CC. Study design: RM, DR, VC, AF and CC. Data acquisition: DR and VC. Quality control of the data and algorithms: DR and VC. Data analysis and interpretation: RM, DR, VC, AF and CC. Statistical analysis: RR and DR. Paper preparation: RM, DR and VC. Paper editing: AF and CC. Paper review: all authors.

Ethics declarations

Competing interests

DR: travel, accommodations and expenses—Takeda. SL: consulting or advisory role—Amgen, Lilly, Merck Serono and Servier. Speakers’ Bureau—Bristol-Myers Squibb, Lilly, Merck Serono, Roche and Servier. Research funding—Amgen and Merck Serono. AZ: consulting or advisory role—Amgen, Bayer, Merck Serono and Servier. Speakers’ Bureau—Servier. AF: honoraria—Amgen, Lilly, Merck, Roche and Servier. Consulting or advisory role—Amgen, Bayer, Bristol-Myers Squibb, Lilly, Merck, Roche and Servier. Research funding—Amgen (Inst), Bayer (Inst), Merck (Inst), MSD (Inst), Roche (Inst), Sanofi (Inst) and Servier (Inst). Travel, accommodations and expenses—Amgen, Bayer, Merck, Roche and Servier. CC: honoraria—Amgen, Bayer, Merck, Roche and Servier. Consulting or advisory role—Amgen, Bayer, MSD, Roche. Speakers’ Bureau—Servier. Research funding—Bayer, Merck, Servier. Travel, accommodations and expenses—Roche and Servier. The remaining authors declare no competing interests.

Ethics approval and consent to participate

All patients provided written informed consent to study procedures before enrolment. TRIBE and TRIBE2 studies were conducted in accordance with the Declaration of Helsinki. Approval for TRIBE and TRIBE2 protocols was obtained from local ethics committees of participating sites.

Consent to publish

Not applicable.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Moretto, R., Rossini, D., Conca, V. et al. CEA increase as a marker of disease progression after first-line induction therapy in metastatic colorectal cancer patients. A pooled analysis of TRIBE and TRIBE2 studies. Br J Cancer 125, 839–845 (2021). https://doi.org/10.1038/s41416-021-01483-x

DOI: https://doi.org/10.1038/s41416-021-01483-x
