Abstract

Mental health is an integral part of the quality of life of cancer patients. Mental health issues, such as depression and anxiety, are more common in cancer patients and may result in catastrophic consequences, including suicide. Therefore, monitoring mental health metrics (such as hope, anxiety, and mental well-being) is recommended. Currently, there is a lack of objective methods for mental health evaluation, and most of the available methods are limited to subjective face-to-face discussions between the patient and psychotherapist. In this study, we introduced an objective method for mental health evaluation using a combination of convolutional neural network and long short-term memory (CNN-LSTM) algorithms trained and validated on visual metrics time series. Data were recorded by Tobii Pro eyeglasses from 16 patients with cancer after major oncologic surgery and nine individuals without cancer while they viewed 18 artworks in an in-house art gallery. Pre-study and post-study questionnaires of the Herth Hope Index (HHI; for evaluation of hope), the State-Trait Anxiety Inventory for Adults (STAI; for evaluation of anxiety), and the Warwick-Edinburgh Mental Wellbeing Scale (WEMWBS; for evaluation of mental well-being) were completed by participants. Clinical psychotherapy and statistical suggestions for cutoff scores were used to assign an individual’s mental health metrics level during each session to low (class 0), intermediate (class 1), or high (class 2). Our proposed model was used to objectify evaluation and categorize the HHI, STAI, and WEMWBS status of individuals. Classification accuracy of the model was 93.81%, 94.76%, and 95.00% for HHI, STAI, and WEMWBS metrics, respectively. The proposed model can be integrated into applications for home-based mental health monitoring to be used by patients after oncologic surgery to identify patients at risk.

Introduction

Cancer significantly affects the quality of life of patients. Psychological evaluation and support of patients are key to alleviating emotional distress, enhancing coping, and improving the ability to handle cancer diagnosis, subsequent management, and overall prognosis1,2. It has been shown that the extent of disease and physical impairment from treatment is associated with the severity of mood disorders among patients with lung cancer3. Moreover, the frequency of intrusive thoughts in many patients with a cancer diagnosis, especially breast cancer survivors, is primarily related to psychological distress4. One study found that health metrics are associated with behavioral and psychological changes, and that reducing psychological distress was crucial for better health2. Among patients with pancreatic cancer, 71% had symptoms of depression and 48% had anxiety-related disorders5. A strong association between suicidal ideation and depression in patients with advanced cancer has been reported, and the incidence of suicide in patients diagnosed with cancer is approximately double the incidence in the general population6,7.

Despite the general agreement about the importance of psychological assessment and/or intervention for patients with malignancy, there is a lack of an objective method for evaluating the mental health of this patient cohort8. Currently, mental health evaluation is performed by self-reported, subjective, questionnaire-based evaluation that may be time consuming and complicated9,10,11,12. One form included 65 items, and a shorter 37-item version was developed to facilitate administration among patients on cancer therapies9,10,11,13. Another study utilized typing patterns, with self-reported Patient Health Questionnaire-9 (PHQ-9) compound scores as the reference, to detect a tendency towards depression14.

The development of an objective mental health evaluation method that automatically monitors mental health metrics can improve and promote mental health evaluation, streamline referrals to psychosocial services, and improve patient care.

Some of the studies proposing objective methods for mental health evaluation are summarized in Table 1. However, the majority of these studies propose methods that require feature engineering, and none of them takes into account a clinically approved assessment method.

Eye movements are a function of brain activity and extra-ocular muscle properties15. Motor function of the eye is linked to the central nervous system; therefore, disorders that affect the cerebral cortex, the brainstem, or the cerebellum can disturb ocular motor function16,17,18,19. Visual perception demands proper functioning of the ocular motor systems, which control the position and movement of the eyes (to focus on corresponding areas of the retinas of both eyes20), in addition to pupil size adjustment. Human perception of the environment relies on the capacity of brain neural networks (especially within the visual cortex) to adapt to changes in stimuli21,22,23,24. Understanding the rate of adaptation to stimuli by cortical networks is essential to understanding the relationship between sensory coding and behavior21,22,23,24. Prior studies have confirmed a strong association between ocular motor function and cognitive and mental disorders, including Alzheimer’s, Parkinson’s, Huntington’s, and Wilson’s diseases17,25,26,27,28,29,30, in addition to psychiatric disorders such as autism31,32, attentional disorder18, antisocial personality disorder33, and post-traumatic stress disorder34,35. This relationship has been shown in experimental psychology and clinical neuroscience. For example, inhibitory saccades are impaired in Alzheimer’s disease, which is attributed to neurodegeneration in the frontal and prefrontal lobes17,29,30. Prior studies have also shown that after an unpleasant stimulus, emotions were indexed by eye-blink startle, and left-sided frontal EEG activation occurred36. Visual metrics have also been proposed for detection of human physiological changes, including mental fatigue37, cognition and cognitive development38, mental workload evaluation39,40, stress level in students41, threat-related emotional facial expressions in infancy42, shared attention for virtual agents43, and emotional arousal and autonomic activation44.

Deep learning is a set of training methods that allows automatic processing of inputs without feature engineering via hierarchical layers24,25. Due to its superiority in complex pattern recognition, deep learning has become the state of the art and has achieved success in areas such as strategic games26, speech recognition27, medical imaging28, and health informatics29. However, data about deep learning techniques for the evaluation of mental health remain scarce.

The combination of CNN and LSTM techniques has been frequently applied to physiological parameters for diagnosis, detection, and monitoring of health in several medical applications45,46,47. The LSTM technique is a special form of recurrent neural network (RNN)48 and is well suited for the classification of time-series signals49. The CNN technique, on the other hand, is known for extracting the significant features in data50.

In this study, we investigate the feasibility of using deep learning and developing an algorithm that utilizes visual metrics for objective evaluation of mental health.

Methods

Subjects

Sixteen patients who underwent an oncologic surgical procedure at Roswell Park Comprehensive Cancer Center and nine volunteers without cancer were included (I 43217, NCT03688945). Procedures included: gastrointestinal (46%: gastrectomy, HIPEC, colectomy, and Whipple), urologic (35%: radical cystectomy, radical nephrectomy, and parastomal hernia repair), thoracic (13%: minimally invasive esophagostomy), and soft tissue procedures (7%: amputation). Patients did not require continuous monitoring and had no visual impairment. Informed consent was obtained from all participants.

Experimental setup

All participants (n = 25) attended at least one 15-min session at the dedicated in-house art gallery, developed in collaboration with the Albright-Knox Art Gallery. Eighteen pieces of art were selected by an expert panel of art professionals from the Albright-Knox Art Gallery, based on suggestions within the existing literature51,52,53. All participants viewed the 18 artworks in the same order and looked at each artwork for 50 s. Only the time portions during which the subject was looking directly at each artwork were included in the analyses.

Art selection was guided by the Albright-Knox’s mission-philosophy of providing inspirational spaces that support learning and inquiry. Art that rewards multiple or prolonged viewing was selected in order to hold the viewer’s attention. Compositions and content interpreted as uplifting, positive, or transcendent were also considered.

Selected artwork included three categories: abstract, figurative, and landscape (Fig. 1). Based on Getty’s Art and Architecture Thesaurus, abstract art referred to works that were not clear representations of objects from reality and instead relied on shapes, colors, forms, and gestures to achieve their effects. Figurative art featured a representation of a form or figure, generally that of a human or animal, and retained ties to the visible world. Landscape art depicted outdoor scenes typically dominated by the land, hills, fields, and other natural elements. The art gallery was divided into three spaces, each corresponding to one type of art. Patients and participants were blinded to the type of art.

Tobii Pro Glasses 2 were used to record eye movement time-series metrics (sampling rate: 100 Hz) from participants while they viewed the art in the gallery. The recorded time series included 20 features: time, gaze point X, gaze point Y, gaze point 3D X, gaze point 3D Y, gaze point 3D Z, gaze direction left X, gaze direction left Y, gaze direction left Z, gaze direction right X, gaze direction right Y, gaze direction right Z, pupil position left X, pupil position left Y, pupil position left Z, pupil position right X, pupil position right Y, pupil position right Z, pupil diameter left, and pupil diameter right. Total recordings were 370, and the duration of each recording was 15 min.

Participant-based evaluations

All participants completed three validated post-study questionnaires assessing hope (HHI; Herth Hope Index), anxiety (STAI; State-Trait Anxiety Inventory for Adults), and mental wellbeing (WEMWBS; Warwick-Edinburgh Mental Wellbeing Scale).

HHI (range 12–48) is a validated 12-item scale with 4 response categories evaluating the level of hope. Higher HHI scores indicated higher hope level54. Scores were categorized into class 0 (low) (12 < HHI < 36), class 1 (intermediate) (36 < HHI < 42), and class 2 (high) (42 < HHI < 48) levels of hope55,56.

STAI (range 20–80) is a 20-item scale with four response categories. Higher values indicate higher anxiety. Based on psychotherapy literature, we considered 20 < STAI < 44 as class 0 (normal), 44 < STAI < 54 as class 1 (risk of anxiety, suggesting a mood disorder), and 54 < STAI < 80 as class 2 (significant symptoms of anxiety)57.

WEMWBS (range 14–70) is a 14-item scale with five response categories covering functioning aspects of mental well-being. Three categories were considered for WEMWBS: class 0 (low), 14 < WEMWBS < 42; class 1 (intermediate), 42 < WEMWBS < 59; and class 2 (high), 59 < WEMWBS < 70 58,59.
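The cutoff-based labelling described for the three questionnaires can be sketched in Python as follows. This is a minimal illustration and not the authors' code: the names `CUTOFFS`, `RANGES`, and `score_to_class` are hypothetical, and because the stated ranges use strict inequalities, the handling of a score that falls exactly on a cutoff (here, assigned to the higher class) is an assumption.

```python
# Cutoffs separating class 0/1 and class 1/2, taken from the ranges in the text.
CUTOFFS = {
    "HHI": (36, 42),
    "STAI": (44, 54),
    "WEMWBS": (42, 59),
}

# Valid score range of each questionnaire, as stated in the text.
RANGES = {
    "HHI": (12, 48),
    "STAI": (20, 80),
    "WEMWBS": (14, 70),
}


def score_to_class(metric: str, score: int) -> int:
    """Map a questionnaire score to class 0, 1, or 2.

    Assumption: a score equal to a cutoff goes to the higher class;
    the source does not say how boundary scores were handled.
    """
    low, high = RANGES[metric]
    if not low <= score <= high:
        raise ValueError(f"{metric} score {score} is outside {low}-{high}")
    first, second = CUTOFFS[metric]
    if score < first:
        return 0
    if score < second:
        return 1
    return 2


if __name__ == "__main__":
    print(score_to_class("HHI", 30))     # 0
    print(score_to_class("STAI", 50))    # 1
    print(score_to_class("WEMWBS", 65))  # 2
```

Note that for STAI the class index follows anxiety level (class 2 is the most anxious), whereas for HHI and WEMWBS a higher class indicates a more favorable state.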

Visual data preprocessing

Tobii Pro Glasses 2 are an infrared video-based remote eye-tracking system used to record visual time series at 100 Hz. A moving average filter with a window size of three points was applied to the gaze data for noise reduction.
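A three-point moving average of the kind described here can be sketched as below. The function name `moving_average` is hypothetical, and two details are assumptions because the text does not specify them: the window is centered on each sample, and it shrinks at the two ends of the signal instead of padding.

```python
from typing import List


def moving_average(signal: List[float], window: int = 3) -> List[float]:
    """Centered moving average; the window shrinks at both ends (assumption)."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(signal)):
        start = max(0, i - half)
        stop = min(len(signal), i + half + 1)
        segment = signal[start:stop]
        smoothed.append(sum(segment) / len(segment))
    return smoothed


if __name__ == "__main__":
    gaze_x = [0.0, 3.0, 6.0, 3.0, 0.0]  # made-up gaze samples, not study data
    print(moving_average(gaze_x))  # [1.5, 3.0, 4.0, 3.0, 1.5]
```

In practice the same filter would be applied independently to each gaze channel of a recording.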

Deep neural network

Our mental health metrics (HHI, STAI, WEMWBS) evaluation method was formalized as a supervised three-class classification problem. The inputs to the model were extracted from multivariate visual time series. The output of the model was the predicted label representing the corresponding mental health metric level of an individual, encoded as class ‘0’, class ‘1’, or class ‘2’. The ground-truth mental health metrics were acquired from participants’ subjective assessments and clinical cutoff scores. The objective function for training the network was defined as a categorical cross-entropy cost, so that the CNN-LSTM was trained to output a probability over the three classes for each input.
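To make the training objective concrete, the sketch below computes a softmax over three class scores and the categorical cross-entropy for a single sample. It is a plain-Python illustration of the standard definitions, not the Keras implementation used in the study; the function names are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(probs: List[float], true_class: int) -> float:
    """Loss for one sample: negative log-probability of the true class."""
    return -math.log(probs[true_class])


if __name__ == "__main__":
    probs = softmax([0.0, 0.0, 0.0])  # uninformative prediction: 1/3 per class
    loss = categorical_cross_entropy(probs, true_class=1)
    print(round(loss, 4))  # 1.0986, i.e. ln(3)
```

A network that guesses uniformly over the three classes therefore starts at a loss of about ln 3, and the loss approaches zero as the probability assigned to the true class approaches one.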

Network architecture

The architecture of our proposed deep neural network consists of 1D convolution, dropout, max pooling, flatten, LSTM, fully connected, and softmax layers (Table 2). The depth of the network was chosen by trial and error during the training/validation procedure.

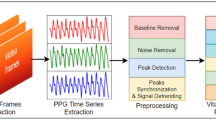

The CNN-LSTM architecture, an LSTM architecture specifically designed for sequence prediction problems, uses convolutional neural network (CNN) layers for feature extraction on input data, combined with LSTMs to support sequence prediction60. The CNN-LSTM model reads subsequences of the main sequence (time series) as blocks, extracts features from each block, and then allows the LSTM to interpret the features extracted from each block45.

Input

We used the aforementioned recorded visual metrics to develop inputs to the deep neural network architecture. Visual metrics recorded from 16 patients and 9 volunteers without cancer were used to train the model and tune the deep neural network parameters. Data augmentation: the limited size of the available dataset might cause overfitting of the deep model61. To prevent this problem, we applied data augmentation techniques to the time-series data to enlarge the dataset and increase classification accuracy62,63,64. Before inputting data into our network, we carried out label-preserving cropping, in which sub-sequences were extracted within each trial using a fixed-size sliding window. The annotation for each window is identical to the class label of the original trial from which the sub-sequence was extracted. A time window was moved across the 20 visual time series to extract blocks. To implement this model, each window of 51 time points was split into subsequences for the CNN model to process: a wrapper layer split the 51 time points in each window into three subsequences of 17 time points. Sequences with a length of 17 time points and 20 features were then used as input to the CNN-LSTM algorithm.
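The windowing and splitting steps can be sketched as follows. This is an illustration under stated assumptions, not the authors' pipeline: the function names are hypothetical, the stride of the sliding window is not given in the text (a stride of 1 is used here only as a placeholder), and the synthetic trial stands in for real recordings.

```python
from typing import List, Tuple

Sample = List[float]   # one time point: 20 visual features
Window = List[Sample]  # consecutive time points


def sliding_windows(trial: List[Sample], label: int,
                    length: int = 51, stride: int = 1) -> List[Tuple[Window, int]]:
    """Crop fixed-length windows from a trial; each window inherits the trial label."""
    return [(trial[start:start + length], label)
            for start in range(0, len(trial) - length + 1, stride)]


def split_window(window: Window, parts: int = 3) -> List[Window]:
    """Split a window into equal, non-overlapping subsequences (51 -> 3 x 17)."""
    if len(window) % parts != 0:
        raise ValueError("window length must be divisible by parts")
    step = len(window) // parts
    return [window[i:i + step] for i in range(0, len(window), step)]


if __name__ == "__main__":
    # Synthetic trial: 100 time points x 20 features (placeholder values).
    trial = [[float(t)] * 20 for t in range(100)]
    windows = sliding_windows(trial, label=2)
    blocks = split_window(windows[0][0])
    print(len(windows))                                    # 50
    print(len(blocks), len(blocks[0]), len(blocks[0][0]))  # 3 17 20
```

Each window thus becomes an array of shape (3, 17, 20): three blocks for the convolutional layers to process one at a time, whose extracted features are then read in order by the LSTM.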

Implementation

A convolutional neural network-long short-term memory (CNN-LSTM) algorithm was implemented using the Keras library with a TensorFlow backend in Python 3.6. During optimization, the network was trained by minimizing the categorical cross-entropy cost between the predicted and ground-truth labels. To train the network efficiently, we used mini-batch gradient descent with a batch size of 64, which updates the network parameters on a subset of the training data at each iteration65. Training ran for a total of 250 epochs, and the network parameters were optimized with the Adam optimizer66 using learning rate = 0.0001, beta1 = 0.9, beta2 = 0.999, and epsilon = 1 × 10^-8. An overview of the developed model is shown in Fig. 2.
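For readers unfamiliar with the optimizer, a single Adam update with the hyperparameters listed here can be written out in plain Python. This sketch follows the standard update rule from Kingma and Ba66; it is not the Keras implementation used in the study, and the function name `adam_step` and the example gradients are hypothetical.

```python
import math
from typing import List, Tuple


def adam_step(params: List[float], grads: List[float],
              m: List[float], v: List[float], t: int,
              lr: float = 1e-4, beta1: float = 0.9,
              beta2: float = 0.999, eps: float = 1e-8
              ) -> Tuple[List[float], List[float], List[float]]:
    """One Adam update for step t >= 1; returns new params, m, and v."""
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g      # first-moment estimate
        v_i = beta2 * v_i + (1 - beta2) * g * g  # second-moment estimate
        m_hat = m_i / (1 - beta1 ** t)           # bias correction
        v_hat = v_i / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v


if __name__ == "__main__":
    params, m, v = [0.5, -0.5], [0.0, 0.0], [0.0, 0.0]
    grads = [2.0, -4.0]  # made-up mini-batch gradients
    params, m, v = adam_step(params, grads, m, v, t=1)
    print(params)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so each parameter moves by roughly the learning rate (0.0001) regardless of the gradient's magnitude.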

Three different networks with the same architecture are trained to evaluate the HHI, STAI, and WEMWBS metrics. The HHI, STAI, and WEMWBS are metrics used for subjective evaluation of hope, stress/anxiety, and mental well-being, respectively. Blocked data from the visual metrics time series were used as input to the CNN-LSTM model. In this study, the sliding window length ‘L’ is 51, and the 20 visual metrics form the feature dimension of the input to the network. The output of the model is the level of mental well-being (evaluated by the WEMWBS metric), anxiety/stress (evaluated by the STAI metric), and hope (evaluated by the HHI metric), each in one of three categories: low (class label: 0), intermediate (class label: 1), and high (class label: 2).

To validate the model classification, we used a leave-one-supertrial-out (LOSO) cross-validation scheme. This process was repeated over five folds, with each fold holding out one of the five supertrials as the test set. The average of the performance measures across the five test sets is reported as the classification result.
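The fold construction in a leave-one-group-out scheme of this kind can be sketched as below. The text does not describe how recordings were assigned to the five supertrials, so the `groups` list here is a made-up assignment, and the function names and fold scores are placeholders for illustration only.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_group_out(groups: Sequence[int]
                        ) -> Iterator[Tuple[int, List[int], List[int]]]:
    """Yield (held_out_group, train_indices, test_indices) for each distinct group."""
    for held_out in sorted(set(groups)):
        train = [i for i, g in enumerate(groups) if g != held_out]
        test = [i for i, g in enumerate(groups) if g == held_out]
        yield held_out, train, test


def mean(values: Sequence[float]) -> float:
    """Average of per-fold performance measures."""
    return sum(values) / len(values)


if __name__ == "__main__":
    # Hypothetical supertrial id (0-4) for each of ten windows.
    groups = [0, 1, 2, 3, 4, 0, 1, 2, 3, 4]
    for held_out, train, test in leave_one_group_out(groups):
        print(held_out, test)  # first line printed: 0 [0, 5]
```

Within each fold the model would be trained on the `train` indices and scored on the `test` indices, and the five per-fold scores would then be averaged with `mean`.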

Results

We evaluated the proposed deep learning approach for classification of self-reported mental health status. The confusion matrices of the classification results were obtained from the test sets under the five-fold LOSO cross-validation scheme. Figures 3–5 show the results of the three-class self-reported mental health status classification.

Hope evaluation

For HHI level classification, the F1-score was 93.81% and the accuracy was 93.81%. The confusion matrix for HHI level classification is shown in Fig. 3.

STAI evaluation

For STAI level classification, the F1-score was 94.77% and the accuracy was 94.76% (confusion matrix shown in Fig. 4).

WEMWBS evaluation

For WEMWBS level classification, the F1-score was 95% and the accuracy was 95% (confusion matrix shown in Fig. 5).
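Accuracy and F1-score can both be derived from a three-class confusion matrix, as the sketch below shows. The text does not state which F1 averaging was used, so the macro average here is an assumption, and the example matrix is made up and does not reproduce the study's results.

```python
from typing import List


def accuracy(cm: List[List[int]]) -> float:
    """Fraction of samples on the diagonal (rows = true class, columns = predicted)."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def macro_f1(cm: List[List[int]]) -> float:
    """Unweighted mean of the per-class F1 scores (averaging choice is an assumption)."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(cm[k]) - tp                       # true k, predicted class differs
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n


if __name__ == "__main__":
    cm = [[8, 1, 1],
          [1, 8, 1],
          [1, 1, 8]]  # illustrative counts only
    print(round(accuracy(cm), 4), round(macro_f1(cm), 4))  # 0.8 0.8
```

When classes are balanced and errors are spread evenly, as in this toy matrix, accuracy and macro F1 coincide, which is one situation in which near-identical values for the two measures would be expected.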

Discussion

In this study, we used visual metrics as the input to a CNN-LSTM model to objectify the evaluation of mental health status metrics (HHI, STAI, and WEMWBS), using participants’ responses to validated questionnaires as the reference. The model achieved promising accuracies of 93.81%, 94.76%, and 95.00% for HHI, STAI, and WEMWBS, respectively. Our primary goal was to introduce and evaluate the applicability of a deep learning-based approach for objective evaluation of mental health in patients after major oncologic surgery.

We developed an end-to-end algorithm that integrates temporal dynamics on rich representations of visual time series while simultaneously classifying mental health. LSTM resolves the problem of vanishing gradients, which affects RNNs48. LSTM introduces the concept of a memory unit, which can decide when to forget and when to remember hidden states for future time steps. Hence, LSTMs are able to train models on long-term dependencies49. The characteristics of the CNN and LSTM algorithms make their combination suitable for mental health evaluation using visual metrics time series. We recorded visual metrics using an acceptable and patient-friendly approach that would pose minimal or no additional burden or discomfort for patients after surgery. Most studies of mental health evaluation rely on subjective evaluations in combination with physiological parameters. The proposed evaluation method is end-to-end and does not require feature engineering and preprocessing. In the future, the developed model may be used for formal evaluation or assessment at home to objectively monitor patients’ mental health without the need for a hospital visit.

Despite the uniqueness of this study, several limitations exist. Subjective mental health metrics evaluations were based on scores given at the end of each session (after observing 18 art pieces), so the effect of a particular artwork on mental health was not considered. The small number of participants is another limitation. The developed model also lacks rigorous testing and evaluation for medical application. However, we believe it has the potential to monitor mental health in patients after oncologic surgery in an objective fashion, with minimal discomfort to patients.

Conclusion

A novel data-driven deep architecture model for the objective classification of mental health metrics was developed. This model, once validated, will serve as a real-time, patient-friendly model of mental health assessment. Future work would include further validation of the model for objective and remote monitoring of mental health, as well as investigating the effect of the specific surgery type or artwork viewed.

References

Andersen, B. L. Psychological interventions for cancer patients to enhance the quality of life. In R. M. Suinn & G. R. VandenBos (Eds.), Cancer patients and their families: Readings on disease course, coping, and psychological interventions (pp. 131–162). American Psychological Association. https://doi.org/10.1037/10338-006 (1999).

Andersen, B. L. et al. Distress reduction from a psychological intervention contributes to improved health for cancer patients. Brain, Behav., Immun. 21, 953–961 (2007).

Cella, D. F. et al. The relationship of psychological distress, extent of disease, and performance status in patients with lung cancer. Cancer 60, 1661–1667 (1987).

Vickberg, S. M., Bovbjerg, D. H., DuHamel, K. N., Currie, V. & Redd, W. H. Intrusive thoughts and psychological distress among breast cancer survivors: Global meaning as a possible protective factor. Behav. Med. 25, 152–160 (2000).

Passik, S. D. & Breitbart, W. S. Depression in patients with pancreatic carcinoma: diagnostic and treatment issues. Cancer 78, 615–626 (1996).

Anguiano, L., Mayer, D. K., Piven, M. L. & Rosenstein, D. A literature review of suicide in cancer patients. Cancer Nurs. 35, E14–E26 (2012).

Misono, S., Weiss, N. S., Fann, J. R., Redman, M. & Yueh, B. Incidence of suicide in persons with cancer. J. Clin. Oncol. 26, 4731 (2008).

Brunault, P. et al. Major depressive disorder, personality disorders, and coping strategies are independent risk factors for lower quality of life in non‐metastatic breast cancer patients. Psycho‐Oncology 25, 513–520 (2016).

Hann, D., Winter, K. & Jacobsen, P. Measurement of depressive symptoms in cancer patients: evaluation of the Center for Epidemiological Studies Depression Scale (CES-D). J. Psychosom. Res. 46, 437–443 (1999).

Manne, S. & Schnoll, R. Measuring cancer patients’ psychological distress and well-being: a factor analytic assessment of the Mental Health Inventory. Psychol. Assess. 13, 99–109 (2001).

Cassileth, B. R. et al. A psychological analysis of cancer patients and their next-of-kin. Cancer 55, 72–76 (1985).

Mystakidou, K., Tsilika, E., Parpa, E., Galanos, A. & Vlahos, L. Brief cognitive assessment of cancer patients: evaluation of the Mini-Mental State Examination (MMSE) psychometric properties. Psychooncology 16, 352–357 (2007).

Baker, F., Denniston, M., Zabora, J., Polland, A. & Dudley, W. N. A POMS short form for cancer patients: psychometric and structural evaluation. Psychooncology 11, 273–281 (2002).

Mastoras, R. E. et al. Touchscreen typing pattern analysis for remote detection of the depressive tendency. Sci. Rep. 9, 13414 (2019).

Holland, C. & Komogortsev, O. V. Biometric identification via eye movement scanpaths in reading. 2011 International Joint Conference on Biometrics (IJCB). pp. 1–8 (Washington, DC, 2011) https://doi.org/10.1109/IJCB.2011.6117536.

Vidal, M., Turner, J., Bulling, A. & Gellersen, H. Wearable eye tracking for mental health monitoring. Comput. Commun. 35, 1306–1311 (2012).

Crawford, T. J. et al. Inhibitory control of saccadic eye movements and cognitive impairment in Alzheimer’s disease. Biol. psychiatry 57, 1052–1060 (2005).

Ramat, S., Leigh, R. J., Zee, D. S. & Optican, L. M. What clinical disorders tell us about the neural control of saccadic eye movements. Brain 130, 10–35 (2006).

Cogan, D. G., Chu, F. C. & Reingold, D. B. Ocular signs of cerebellar disease. Arch. Ophthalmol. 100, 755–760 (1982).

Sharpe, J. & Wong, A. M. Anatomy and physiology of ocular motor systems. Walsh Hoyt’s Clin. Neuro-Ophthalmol. 1, 809–885 (2005).

Sharpee, T. O. et al. Adaptive filtering enhances information transmission in visual cortex. Nature 439, 936 (2006).

Schwabe, L. & Obermayer, K. Rapid adaptation and efficient coding. Biosystems 67, 239–244 (2002).

Wainwright, M. J. Visual adaptation as optimal information transmission. Vis. Res. 39, 3960–3974 (1999).

Vinje, W. E. & Gallant, J. L. Sparse coding and decorrelation in primary visual cortex during natural vision. Science 287, 1273–1276 (2000).

Rosenhall, U., Johansson, E. & Gillberg, C. Oculomotor findings in autistic children. J. Laryngol. Otol. 102, 435–439 (1988).

Kuskowski, M. A. Eye movements in progressive cerebral neurological disease. In Neuropsychology of Eye Movement. p. 159–188 (Psychology Press, 2013).

White, O. B., Saint-Cyr, J. A., Tomlinson, R. D. & Sharpe, J. A. Ocular motor deficits in Parkinson’s disease: II. Control of the saccadic and smooth pursuit systems. Brain 106, 571–587 (1983).

Fletcher, W. A. & Sharpe, J. A. Smooth pursuit dysfunction in Alzheimer’s disease. Neurology 38, 272–272 (1988).

Kaufman, L. D., Pratt, J., Levine, B. & Black, S. E. Antisaccades: a probe into the dorsolateral prefrontal cortex in Alzheimer’s disease. A critical review. J. Alzheimer’s Dis. 19, 781–793 (2010).

Pierrot-Deseilligny, C., Milea, D. & Müri, R. M. Eye movement control by the cerebral cortex. Curr. Opin. Neurol. 17, 17–25 (2004).

Noris, B., Benmachiche, K., Meynet, J., Thiran, J. P. & Billard, A. G. Analysis of head-mounted wireless camera videos for early diagnosis of autism. In Computer Recognition Systems 2. p. 663–670 (Springer, 2007).

Boraston, Z. & Blakemore, S. J. The application of eye‐tracking technology in the study of autism. J. Physiol. 581, 893–898 (2007).

Costa, L. & Bauer, L. O. Smooth pursuit eye movement dysfunction in substance-dependent patients: mediating effects of antisocial personality disorder. Neuropsychobiology 37, 117–123 (1998).

Davidson, P. R. & Parker, K. C. Eye movement desensitization and reprocessing (EMDR): a meta-analysis. J. Consulting Clin. Psychol. 69, 305 (2001).

Ross, R. J. et al. Rapid eye movement sleep disturbance in posttraumatic stress disorder. Biol. Psychiatry 35, 195–202 (1994).

Jackson, D. C. et al. Now you feel it, now you don’t: Frontal brain electrical asymmetry and individual differences in emotion regulation. Psychological Sci. 14, 612–617 (2003).

Yamada, Y. & Kobayashi, M. Detecting mental fatigue from eye-tracking data gathered while watching video: evaluation in younger and older adults. Artif. Intell. Med. 91, 39–48 (2018).

Eckstein, M. K., Guerra-Carrillo, B., Singley, A. T. & Bunge, S. A. Beyond eye gaze: What else can eyetracking reveal about cognition and cognitive development?. Dev. Cogn. Neurosci. 25, 69–91 (2017).

Wu, C. et al. Eye-tracking metrics predict perceived workload in robotic surgical skills training. Hum. Factors 62, 1365–1386 (2019).

Di Stasi, L. L., Antolí, A., Gea, M. & Cañas, J. J. A neuroergonomic approach to evaluating mental workload in hypermedia interactions. Int. J. Ind. Ergonomics 41, 298–304 (2011).

Jyotsna, C. & Amudha, J. Eye Gaze as an Indicator for Stress Level Analysis in Students. In 2018 International Conference on Advances in Computing, Communications and Informatics (ICACCI). (IEEE, 2018).

Hoehl, S. & Striano, T. Neural processing of eye gaze and threat‐related emotional facial expressions in infancy. Child Dev. 79, 1752–1760 (2008).

Peters, C., Asteriadis, S., Karpouzis, K. & de Sevin, E. Towards a real-time gaze-based shared attention for a virtual agent. In International Conference on Multimodal Interfaces. (2008).

Bradley, M. M., Miccoli, L., Escrig, M. A. & Lang, P. J. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 45, 602–607 (2008).

Oh, S. L., Ng, E. Y., San Tan, R. & Acharya, U. R. Automated diagnosis of arrhythmia using combination of CNN and LSTM techniques with variable length heart beats. Comput. Biol. Med. 102, 278–287 (2018).

Tan, J. H. et al. Application of stacked convolutional and long short-term memory network for accurate identification of CAD ECG signals. Comput. Biol. Med. 94, 19–26 (2018).

Yıldırım, Ö., Pławiak, P., Tan, R. S. & Acharya, U. R. Arrhythmia detection using deep convolutional neural network with long duration ECG signals. Comput. Biol. Med. 102, 411–420 (2018).

Hüsken, M. & Stagge, P. Recurrent neural networks for time series classification. Neurocomputing 50, 223–235 (2003).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

LeCun, Y. & Bengio, Y. Convolutional networks for images, speech, and time series. Handb. Brain Theory Neural Netw. 3361, 1995 (1995).

Friedrich, M. The arts of healing. JAMA 281, 1779–1781 (1999).

Lankston, L., Cusack, P., Fremantle, C. & Isles, C. Visual art in hospitals: case studies and review of the evidence. J. R. Soc. Med. 103, 490–499 (2010).

Poggi, E. Beyond traditional treatment: establishing art as therapy. Healthcare Design Magazine (2006).

Herth, K. Abbreviated instrument to measure hope: development and psychometric evaluation. J. Adv. Nurs. 17, 1251–1259 (1992).

Dembo, J. S. & Clemens, N. A. The ethics of providing hope in psychotherapy. J. Psychiatr. Pract. 19, 316–322 (2013).

Duggleby, W., Ghosh, S., Cooper, D. & Dwernychuk, L. Hope in newly diagnosed cancer patients. J. Pain. Symptom Manag. 46, 661–670 (2013).

Kvaal, K., Ulstein, I., Nordhus, I. H. & Engedal, K. The Spielberger state‐trait anxiety inventory (STAI): the state scale in detecting mental disorders in geriatric patients. Int. J. Geriatr. Psychiatry 20, 629–634 (2005).

Warwick-Edinburgh Mental Well-Being Scale (WEMWBS) User Guide. (2008).

Stranges, S., Samaraweera, P. C., Taggart, F., Kandala, N. B. & Stewart-Brown, S. Major health-related behaviours and mental well-being in the general population: the Health Survey for England. BMJ open 4, e005878 (2014).

Donahue, J. et al. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2015).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Cui, Z., Chen, W. & Chen, Y. Multi-scale convolutional neural networks for time series classification. arXiv preprint arXiv: https://arxiv.org/abs/1603.06995 (2016).

Le Guennec, A., Malinowski, S. & Tavenard, R. Data augmentation for time series classification using convolutional neural networks. In ECML/PKDD workshop on advanced analytics and learning on temporal data (2016).

Um, T. T. et al. Data augmentation of wearable sensor data for parkinson’s disease monitoring using convolutional neural networks. In Proceedings of the 19th ACM International Conference on Multimodal Interaction. pp. 216–220 (2017).

Li, M., Zhang, T., Chen, Y. & Smola, A. J. Efficient mini-batch training for stochastic optimization. In Proc. 20th ACM SIGKDD international conference on Knowledge discovery and data mining. (ACM, 2014).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. arXiv preprint arXiv: https://arxiv.org/abs/1412.6980 (2014).

Zhai, J. & Barreto, A. Stress recognition using non-invasive technology. In Proceedings of the 19th International Florida Artificial Intelligence Research Society Conference FLAIRS. pp. 395–400 (2006).

Alghowinem, S. et al. Multimodal depression detection: fusion analysis of paralinguistic, head pose and eye gaze behaviors. IEEE Trans. Affect. Comput. 9, 478–490 (2016).

Acknowledgements

This work was funded by the Roswell Park Alliance Foundation. The authors thank Joe Lin-Hill and Russell Davidson, artists from the Albright-Knox Art Gallery, Buffalo, NY, for providing art pieces.

Author information

Contributions

Conceptualization: S.B.S., K.G. Data curation: S.B.S., Z.L., A.A.H., K.A.G. Formal analysis: S.B.S. Funding acquisition: K.A.G. Methodology: S.B.S., K.G. Validation: S.B.S. Writing – original draft: S.B.S. Writing – review & editing: S.B.S., A.A.H., A.S.E., and K.A.G.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shafiei, S.B., Lone, Z., Elsayed, A.S. et al. Identifying mental health status using deep neural network trained by visual metrics. Transl Psychiatry 10, 430 (2020). https://doi.org/10.1038/s41398-020-01117-5
