Abstract

BACKGROUND

Screen-based media use is prevalent in children and is associated with health risks. American Academy of Pediatrics (AAP) recommendations involve access to screens, frequency, content, and co-viewing. The aim of this study was to test the ScreenQ, a composite measure of screen-based media use.

METHODS

ScreenQ is a 15-item parent report measure reflecting AAP recommendations. Range is 0–26, higher scores reflecting greater non-adherence. With no “gold standard” available, four validated measures of skills and parenting practices cited as influenced by overuse were applied as the external criteria, including expressive language, speed of processing, emergent literacy, and cognitive stimulation at home. Psychometric analyses involved Rasch methods and Spearman’s ρ correlations.

RESULTS

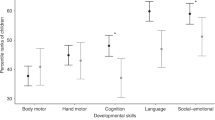

Sixty-nine families were administered ScreenQ. Child age ranged from 36 to 63 months old (52 ± 8; 35 girls). Mean ScreenQ score was 9.6 (±5.0; 1–22). Psychometric properties were strong (rCo-α = 0.74). ScreenQ scores were negatively correlated with CTOPP-2 (Comprehensive Test of Phonological Processing, Second Edition) (rρ = −0.57), EVT-2 (Expressive Vocabulary Test, Second Edition) (rρ = −0.45), GRTR (Get Ready to Read!) (rρ = −0.30) and StimQ-P (rρ = −0.42) scores (all p ≤ 0.01).

CONCLUSIONS

ScreenQ shows potential as a composite measure of screen-based media use in young children in the context of AAP recommendations. Higher ScreenQ scores were correlated with lower executive, language, and literacy skills and a less stimulating home cognitive environment.

Introduction

Screen-based media are prevalent in children’s lives beginning in infancy, and are a major aspect of the “ecosystem” in which they learn and form relationships.1 In addition to traditional programming, rapidly emerging technologies, particularly portable devices, provide unprecedented access to media of a vast range of content.1,2 American Academy of Pediatrics (AAP) recommendations cite cognitive, behavioral, and health risks of excessive and inopportune use.3 These include obesity,4 language delay,5 poor sleep,2,6 impaired executive function7 and general cognition,8 and decreased parent–child engagement.9,10,11 Recent evidence suggests potential neurobiological risks, as well.12,13,14 Despite these risks and recommendations, use has been increasing, recently estimated at almost 3 h per day between 3 and 8 years old.1

Accompanying this technological upheaval is a growing number of variables fueling risks and benefits at different ages. Categories cited in AAP recommendations include access to screens (e.g., exclude from bedrooms, monitor portable devices), frequency of use (e.g., limit to under 1 h per day, defer initiation until age 18 months), content (e.g., non-violent, slower-paced), and potential to facilitate grownup–child interaction (e.g., encourage co-viewing).3 To accurately assess the impact of these variables on child development and health, it is critical to measure them. However, existing measures largely involve television,15 and other approaches often used involve parent report diaries16 or single frequency items for various media.17 There is currently no validated composite measure reflecting current modes of use (e.g., tablets, apps), which represents an important evidence gap.

The purpose of this study was to introduce and psychometrically assess a novel, composite parent report measure of screen-based media use (ScreenQ) in a sample of preschool-age children. Such a measure is useful to provide a holistic view of adherence with AAP recommendations at this age, which in turn reflect increasingly multi-dimensional aspects of use (notably, via portable devices) that are unwieldy or impossible to capture with individual items. The ScreenQ was developed in accordance with a conceptual model involving four domains cited in 2016 AAP recommendations that are generalizable across technological platforms: access to screens, frequency of use, media content, and caregiver–child co-viewing.3 It has been pilot tested and refined over the past 2 years, applying rigorous psychometric criteria.18 Given the lack of a “gold standard” measure of screen time use in children reflecting current use and that direct observation was not feasible for this study, external validity was explored using validated measures reflecting documented cognitive and parenting-related risks of excessive or inopportune use: Comprehensive Test of Phonological Processing, Second Edition (CTOPP-2; Rapid Object Naming subtest), Expressive Vocabulary Test, Second Edition (EVT-2; expressive language), Get Ready to Read! (GRTR; emergent literacy skills),19 and StimQ-P (cognitive stimulation in the home).20 Psychometric analyses explored internal consistency and criterion-related validity referenced to these measures. In addition to beginning to establish the validity of ScreenQ for use in young children, we hypothesized that higher scores would correlate with lower executive, language, and literacy skills and a less stimulating home cognitive environment.

Methods

Sample

This study was conducted from August 2017 to November 2018, and involved 69 parent–child dyads enrolled in a magnetic resonance imaging (MRI)-based study of emergent literacy development. Recruitment was via advertisement at a large children’s medical center and local pediatric primary care clinics. Inclusion criteria were: age 3 to 5 years, birth at 36 weeks’ gestation or later, native English-speaking household, no history of neurodevelopmental disorder conferring risk of delays, and no contraindications to MRI. Demographic information collected for analyses during the study included child age, gender, race, parental marital status, household income, and maternal education. Families were compensated for time and travel, and this study was approved by our Institutional Review Board.

ScreenQ

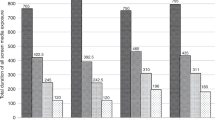

The conceptual model for the ScreenQ was derived from aspects of media use cited in current AAP recommendations: access to screens, frequency of use, media content, and caregiver–child co-viewing.3 These aspects are incorporated as subscales. The measure’s name was inspired by the StimQ assessment of home cognitive environment, which involves similar conceptual domains in its reading subscale.20 The ScreenQ is intended to be administered by a clinical provider. Wording was refined in consultation with experts in measure development at our institution and parents of young children (largely of low SES) attending a hospital-based primary care clinic, and a previous version was pilot tested with parents of preschool-age children during a preceding study and revised.18 Of note, based on these analyses and concerns about item wording and scoring, an item regarding “educational” use was removed, and will be revised and added to a future version. The current version is scripted and has 15 items, summarized in Fig. 1 and Table 2, with an estimated reading level of 6th grade (Flesch–Kincaid criteria). Item responses are largely binary or ordinal (Likert scale), although some involving frequency are numerical and translated to an ordinal score in the context of AAP recommendations. Ordinal scoring assigns 0 to 2 points. Weightings for binary items were determined a priori based on the level of evidence of risks, such as use in bedrooms (2 points; high) and fast- vs. slower-paced content (1 point; moderate). Total range is 0–26 points, with a score of 0 reflecting perfect adherence to AAP recommendations and higher scores reflecting greater use contrary to recommendations.

Four domains (subscales) of the ScreenQ measure, which correspond to aspects of use cited in AAP screen time recommendations for young children: Access to screens, frequency of use, content, and caregiver–child co-viewing. The 15 ScreenQ items are summed for a total score ranging from 0 (perfect adherence with recommendations) to 26 (extreme non-adherence).

For this study, research coordinators administered the ScreenQ to a custodial parent in a private room before or during the child’s MRI, with responses entered into a REDCap database21 for subsequent analysis.

Reference measures

Four criterion-referenced standards of child cognitive abilities and parenting practices were utilized, which were selected on the basis of cited associations with screen-based media use in children. The EVT-2 (Pearson) is a norm-referenced assessment of expressive vocabulary for children of age 2.5 years and older.22 The CTOPP-2 (Pearson; Rapid Object Naming subtest) is a comprehensive, norm-referenced instrument designed to assess phonological processing abilities as prerequisites to reading fluency in children.23 The Rapid Object Naming subtest was selected given the pre-kindergarten age range of this study, as it is designed to assess efficiency of retrieval of information from memory and execution of operations quickly (speed of processing) in younger children who have not yet mastered letters, numbers, or colors. EVT-2 and CTOPP-2 each generate an age-adjusted standard score. The GRTR is a norm-referenced assessment of core emergent literacy skills for children 3–6 years of age, predictive of reading outcomes.19 The StimQ-P is a validated measure of cognitive stimulation in the home for children 3 to 6 years old, and consists of four subscales involving mostly “yes/no” questions: (1) availability of learning materials, (2) reading, (3) parental involvement in developmental advance, and (4) parental verbal responsivity.20

For this study, the EVT-2, CTOPP-2, and GRTR were administered to the child prior to MRI, and the StimQ-P was administered to the parent after the ScreenQ.

Statistical analyses

Statistical analyses proceeded in four steps. First, demographic characteristics were computed for the entire sample of 69 children. Second, descriptive statistics were computed for all ScreenQ items and subscales, as well as our external standards and relevant demographic variables. Third, ScreenQ items were analyzed individually using a combination of classical and modern-theory Rasch analysis. Finally, relationships between ScreenQ scores and those on external standards were explored.

Classical analyses are generally correlation-based techniques used to document reliability and validity at the test level. Rasch models, on the other hand, are a family of one-parameter logistic models that estimate item difficulty and are designed for use with categorical, item-level data. Within this family of models, partial-credit Rasch modeling was deemed most appropriate given the ordinal but varying nature of response options across items (e.g., some items were scored as 0, 1, while others were scored as 0, 1, 2).24 Rasch coefficients are expressed as log odds (logits), with the average difficulty (or endorsability) centered at zero. Successively higher values (+1, +2, +3 logits) represent increasingly higher difficulty, and successively lower values (−1, −2, −3 logits) represent increasingly lower difficulty relative to the average, comparable in many ways to interpreting other standardized scores that are 1, 2, or 3 standard deviations (SDs) away from their mean. All 15 ScreenQ items were evaluated for difficulty, smoothness, modality, polarity, and sufficiency of observations (density) across responses. Model fit was tested for each item to identify any that were markedly or unnecessarily influencing scale-level distributions.
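To make the logit scale concrete, the following is a minimal sketch of how a partial-credit Rasch model turns a person measure and an item's step difficulties into category probabilities. It is an illustration only and not the Winsteps estimation used in this study; the function name `pcm_category_probs` and all numeric values are assumptions chosen for the example.

```python
import math


def pcm_category_probs(theta, thresholds):
    """Partial-credit Rasch model: probability of each score category 0..m.

    theta      -- person measure in logits (illustrative value, not study data)
    thresholds -- step difficulties delta_1..delta_m in logits for one item
    """
    # Cumulative sums of (theta - delta_k); the empty sum for category 0 is 0.
    cumulative = [0.0]
    for delta in thresholds:
        cumulative.append(cumulative[-1] + (theta - delta))
    # Subtract the largest value before exponentiating for numerical stability.
    peak = max(cumulative)
    weights = [math.exp(c - peak) for c in cumulative]
    total = sum(weights)
    return [w / total for w in weights]


# A binary item (scored 0, 1) has one step and reduces to the dichotomous
# Rasch model. The step difficulty here is a made-up value.
binary = pcm_category_probs(theta=0.0, thresholds=[1.45])

# An ordinal item (scored 0, 1, 2) has two steps (made-up values).
ordinal = pcm_category_probs(theta=0.0, thresholds=[-0.5, 0.8])

print(binary)
print(ordinal)
```

In this sketch, a person whose measure equals a binary item's difficulty has a 50% chance of endorsing it, and each additional logit of difficulty lowers the odds of endorsement by a factor of e.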

Preliminary estimates of reliability and validity were computed, beginning with Cronbach’s coefficient alpha (rCo-α) as our measure of internal consistency, followed by Spearman’s ρ (rρ) correlation coefficients between ScreenQ total score and EVT-2 standard, CTOPP-2 Rapid Object Naming standard, GRTR, and StimQ-P scores as measures of criterion-related validity. Spearman’s ρ coefficients were chosen as our measure of association due to the non-normal nature of the distributions.
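The two statistics named above can be sketched in a few lines. This is a hedged illustration using only the Python standard library, not the SAS procedures used in the study; the function names and the tiny data set are invented for the example.

```python
from statistics import mean, variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(item_scores[0])
    item_vars = [variance([row[i] for row in item_scores]) for i in range(k)]
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions in the tie
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Made-up responses: 5 respondents x 3 items, plus a made-up external score.
responses = [[0, 1, 0], [1, 1, 1], [2, 1, 1], [2, 2, 1], [0, 0, 0]]
totals = [sum(row) for row in responses]
outcome = [115, 104, 98, 90, 120]

alpha = cronbach_alpha(responses)
rho = spearman_rho(totals, outcome)
print(alpha, rho)
```

Because Spearman's ρ depends only on rank order, it does not require normally distributed scores, which is the stated reason for preferring it here.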

For all analyses, the criterion for statistical significance was set at an unadjusted α = 0.05 level. All analyses were conducted using SAS v9.4 and Winsteps v4.0 software.

Results

Demographic characteristics

Mean age for the 69 children in the study was 52 ± 8 months old (range 36–63 months; 51% girls). Our sample was diverse and balanced in terms of household income and maternal education. Demographics are summarized in Table 1.

Descriptive statistics for ScreenQ and external standards

Research coordinators reported no difficulty administering the ScreenQ, with all parents completing the survey in <2 min with no concerns with item clarity noted. Mean ScreenQ score was 9.6 (±5.0; range 1–22).

Mean CTOPP-2 standard score was 9.1 (±3.2; range 2–15). Mean EVT-2 standard score was 110.3 (±15.4; range 87–144), with 70% of scores in the average range for age (±1 SD; 85–115). Mean GRTR score was 16.5 (±6.4; range 5–25), with 18% below average for age, 38% average, and 44% above average. Mean StimQ-P score was 41.8 (±6.9; range 21–52).

ScreenQ item analysis

Item analytics for the ScreenQ are provided in Table 2. These assess the performance of individual items, whether each adds value to the measure, and how well they work together, referenced to established criteria. Item response density exceeded the 5% minimum for each response option. Rasch estimates of item difficulty were balanced overall and symmetric around zero (average difficulty), falling within the desired ±2 SD range, from −1.22 (less difficult; item 4) to 1.45 (more difficult; item 10). “Difficulty,” also called endorsability, reflects the degree to which parents feel that the item reflects their child’s screen use. Point-measure correlations were all positive and ranged from 0.14 (item 12) to 0.71 (item 7), indicating a low to moderately high relationship between each of the ScreenQ items and the entire scale, with all items contributing unique variance to the overall score. Item fit statistics using empirically derived z values were all at or below the traditional ±2 SDs, suggesting no outliers likely to influence the distributions.25 Inter-item correlations were low to moderate, with significant correlations ranging from rρ = 0.25 (items 1 and 2; bedroom access and having a portable device) to 0.72 (items 1 and 8; bedroom access and use to help sleep), shown in Table 3. Items 1 and 7 (bedroom access, hours per day of use) were significantly correlated with the greatest number of other items (8), followed by item 8 (use to help sleep).

Demographic associations

There were significant correlations between higher ScreenQ scores and male child gender (rρ = 0.31), non-Caucasian race (rρ = 0.44), unmarried parent (rρ = 0.41), lower household income (rρ = −0.54), and lower maternal education (rρ = −0.41; all p < 0.05), but not child age.

Internal consistency and validity

Internal consistency as estimated by Cronbach’s coefficient α was rCo-α = 0.74. For criterion-related validity, correlations between ScreenQ total score and external standards were: EVT-2 standard (rρ = −0.45), CTOPP-2 Rapid Object Naming (rρ = −0.57), GRTR (rρ = −0.30), and StimQ-P (rρ = −0.42; all p ≤ 0.01). Scatter plots of ScreenQ total score vs. scores for each of these standards are shown in Fig. 2.

Discussion

Over the course of a single generation, the landscape of childhood has transformed—work, play, relationships—outpacing the ability of pediatric providers and researchers to clearly define it. This upheaval has fueled confusion and controversy among parents, clinicians, educators, and advocates regarding promise or peril of rapidly changing technologies and the media that they convey. AAP recommendations cite many variables conferring or mitigating risk, notably portable devices.3 To understand the influence of these variables, it is critical to measure them as reliably and ecologically as possible. While objective assessments are feasible in limited contexts (e.g., categories of iPhone use), parent report remains efficient, inexpensive, and appealing for clinical and research use, with potential to capture quantitative and qualitative behaviors, contextual insights (e.g., in bedrooms), and fewer privacy concerns. This study provides initial evidence supporting internal consistency and validity of a brief, composite parental report measure of screen-based media use in young children (ScreenQ), and associations with child cognitive skills and parenting practices.

We attribute strong performance of the ScreenQ to an evidence-based conceptual model derived from AAP recommendations,3 which guided item and scale development. Range of item difficulty—here interpreted as aspects of use that parents endorsed more (“easier”) or less (“harder”) often, also referred to as endorsability—was balanced, suggesting the potential to capture diverse usage patterns. Importantly, difficulty level for items 14–15 is interpreted in reverse, as higher scores for these reflect less AAP-recommended behavior. The easiest items were item 4 (use on school nights), item 2 (child has a portable device), and item 13b (use of video games/apps alone), which are consistent with recent statistics of overall and portable device use in children,1 and possibly use in preschool settings.26 Violent content (item 10), use for calming (item 9), and fast-paced content (item 12) were the hardest items, possibly attributable to the relatively young age of children in this study, adherence with AAP recommendations,3 and/or social desirability bias for oft-stigmatized behaviors subject to interpretation. Overall, items from the content domain (items 10–12) were slightly more difficult, suggesting greater parental restriction on content, and those from access (items 1–5) less difficult, suggesting fewer restrictions on access, likely attributable to portable devices.

Inter-item correlations for the ScreenQ suggest that each item contributed uniquely to the composite score, yet relationships among them reveal important aspects of use and risk. Item 1 (bedroom screens) had the broadest and most strongly positive inter-item correlations, including with hours of use (rρ = 0.52), earlier age of initiation (rρ = 0.35), use to help the child sleep (rρ = 0.72), use alone (rρ = 0.30), and the child selecting/downloading media by themselves (rρ = 0.51). These are consistent with the well-described relationship between bedroom screens and maladaptive use, particularly in terms of impaired sleep.27,28 Inter-item correlations involving access to portable devices (item 2) were also robust and consistent with current evidence,1 including higher overall use (rρ = 0.46), earlier age of initiation (rρ = 0.48), use for calming (rρ = 0.29), and use of games/apps alone (rρ = 0.32). Correlation between item 3 (use at meals) and item 9 (use for calming; rρ = 0.44) is consistent with the recently described association between media use during meals and negative child emotionality,29 suggesting patterns of maladaptive social–emotional use. This is similarly suggested by correlation between item 5 (use while waiting) and item 8 (use to help sleep; rρ = 0.37). Interestingly, item 11 (the child choosing media independently) was associated with a range of other factors, including bedroom access (rρ = 0.51), use at meals (rρ = 0.36), use while waiting (rρ = 0.32), higher overall use (rρ = 0.37), use to help sleep (rρ = 0.37), and use alone (rρ = 0.27), suggesting potentially high impact of greater parental oversight of media choices. However, despite moderate correlation between items 14 and 15 (dialog during and after use; rρ = 0.42), neither of these protective co-viewing behaviors was substantially associated with lower levels of potentially risky ones.
Altogether, these inter-item relationships suggest an appealing feature of the ScreenQ: the ability to detect and characterize interrelationships of use during this formative stage of development when habits are reinforced.30 Interestingly, total ScreenQ scores were more strongly correlated with EVT-2, CTOPP-2, and GRTR scores than was any individual item, and more strongly correlated with StimQ-P scores than was any individual item except discussion after viewing/use (Table 3), highlighting the value of the composite measure. This finding suggests limitations of measuring and applying a single aspect of media use, such as hours per day.

Moderate to high correlation between ScreenQ and lower EVT-2 standard scores is consistent with the documented relationship between excessive screen time and language delays.5,31 This finding is particularly striking given the relatively small sample size and magnitude of effect. Similarly, high negative correlation with CTOPP-2 Rapid Object Naming is consistent with cited effects on working memory and executive function.7,32 Negative correlations between ScreenQ and GRTR scores—of which language is a major component—are also consistent with prior evidence.33 It is unclear whether these effects were fueled by maladaptive qualities of media itself (e.g., violent content), or mediated via less constructive stimulation in the home (e.g., less parental interactivity), which was found via negative correlation between ScreenQ and StimQ-P scores. Screen time has been linked to decreased parent–child engagement,9,11,29 including shared reading,34,35 and a mediating effect seems likely, and worthy of future study. Correlations between higher ScreenQ scores and male gender, lower household income, and lower maternal education (i.e., lower socioeconomic status [SES]) are consistent with current usage statistics1 reflecting higher use and risk for boys and households of lower SES.36

Our study has limitations that should be noted. While reasonably diverse, our sample size was relatively small, and our results may not be generalizable to larger populations or groups, such as other ethnicities or fathers. Our study involved a relatively narrow age range (3–5 years old); yet, this span is formative in terms of child development and viewing habits, and media use tends to increase with age, particularly for entertainment.1,30 Further study involving wider age ranges would also be worthwhile. All children scored average or better on the EVT-2, and it is unclear if a negative correlation with ScreenQ scores would apply in children with lower language abilities, although we believe that it could be even stronger. External standards were chosen in the context of cited negative effects of screen-based media, and may not adequately identify potential benefits. Like all parent report measures, ScreenQ is subject to social desirability bias, and comparison with direct observation would be useful to quantify such bias and provide an objective external criterion, although this was not feasible within the confines of this study and is planned as an important next step. ScreenQ development did not involve rigorous qualitative methods such as focus groups, although items were pilot tested for clarity with families in clinical settings across versions and no parental concerns during this study were noted. While most items performed well, one (educational content) was removed due to negative correlation with total ScreenQ score and concerns that this was attributable to unclear wording. As educational content is stressed in AAP recommendations3 and germane to our conceptual model (although “educational” claims can be misleading9,37), this item will be revised for a future version.
ScreenQ was administered by research coordinators and feasibility for clinical use is uncertain, although with simple reading level and brief administration time, we believe that it could be adapted for parents to complete during a well visit. Most importantly, while this study offers a respectable first step towards validation in the preschool-age range, more extensive, longitudinal studies including an objective screen time criterion are needed.

This study also has important strengths. The ScreenQ measure is grounded in an evidence-based conceptual model mirroring current AAP recommendations,3 which guided item and measure development. While indirect, analysis of criterion-related validity was also evidence-based in the context of four validated measures, including one regarding parenting practices and three directly administered to the child. Correlations of these with ScreenQ were highly significant, concordant, and in directions predicted by our hypotheses and literature documenting risks of screen-based media use in children. Analysis involved rigorous psychometric techniques, providing insights into individual ScreenQ items and the composite measure, which showed strong internal consistency, especially for a new measure.38 The issue of screen-based media use in children is a major and growing public health concern, and ScreenQ begins to address a major evidence gap, with potential for further study. This includes predictive validity, other measures of parenting and health, and development of a reduced version for clinical use. Overall, at this preliminary stage, ScreenQ shows promise as a brief, psychometrically-sound composite measure of screen-based media use in children, providing important insights into the increasingly digitized ecosystem in which they grow up.

Conclusion

In this study involving a small yet diverse sample of parents of preschool-age children, the 15-item ScreenQ measure exhibited strong psychometric properties, including internal consistency and validity referenced to external standards reflecting documented risks of excessive screen-based media use. Higher ScreenQ scores were correlated with significantly lower performance in each of these standards, including expressive language, speed of processing, emergent literacy, and cognitive stimulation in the home. At this preliminary stage, the ScreenQ shows promise as an efficient and valid composite measure of screen time reflecting current AAP recommendations, warranting further investigation.

References

1. Rideout, V. The Common Sense Census: Media Use by Kids Age Zero to Eight (Common Sense Media, San Francisco, 2017).

2. Carter, B., Rees, P., Hale, L., Bhattacharjee, D. & Paradkar, M. S. Association between portable screen-based media device access or use and sleep outcomes: a systematic review and meta-analysis. JAMA Pediatr. 170, 1202–1208 (2016).

3. AAP Council on Communications and Media. Media and Young Minds. Pediatrics (American Academy of Pediatrics, Elk Grove, 2016).

4. Robinson, T. N. et al. Screen media exposure and obesity in children and adolescents. Pediatrics 140, S97–S101 (2017).

5. Anderson, D. R. & Subrahmanyam, K. Digital screen media and cognitive development. Pediatrics 140, S57–S61 (2017).

6. Garrison, M. M., Liekweg, K. & Christakis, D. A. Media use and child sleep: the impact of content, timing, and environment. Pediatrics 128, 29–35 (2011).

7. Lillard, A. S., Li, H. & Boguszewski, K. Television and children’s executive function. Adv. Child Dev. Behav. 48, 219–248 (2015).

8. Walsh, J. J. et al. Associations between 24 h movement behaviours and global cognition in US children: a cross-sectional observational study. Lancet Child Adolesc. Health 2, 783–791 (2018).

9. Choi, J. H. et al. Real-world usage of educational media does not promote parent–child cognitive stimulation activities. Acad. Pediatr. 18, 172–178 (2018).

10. Tomopoulos, S. et al. Is exposure to media intended for preschool children associated with less parent-child shared reading aloud and teaching activities? Ambul. Pediatr. 7, 18–24 (2007).

11. Mendelsohn, A. L. et al. Infant television and video exposure associated with limited parent-child verbal interactions in low socioeconomic status households. Arch. Pediatr. Adolesc. Med. 162, 411–417 (2008).

12. Paulus, M. P. et al. Screen media activity and brain structure in youth: evidence for diverse structural correlation networks from the ABCD study. Neuroimage 185, 140–153 (2019).

13. Hutton, J. S., Dudley, J., Horowitz-Kraus, T., DeWitt, T. & Holland, S. K. Differences in functional brain network connectivity during stories presented in audio, illustrated, and animated format in preschool-age children. Brain Imaging Behav. 14, 130–141 (2020).

14. Horowitz-Kraus, T. & Hutton, J. S. Brain connectivity in children is increased by the time they spend reading books and decreased by the length of exposure to screen-based media. Acta Paediatr. 107, 685–693 (2018).

15. Bryant, M. J., Lucove, J. C., Evenson, K. R. & Marshall, S. Measurement of television viewing in children and adolescents: a systematic review. Obes. Rev. 8, 197–209 (2007).

16. Magee, C. A., Lee, J. K. & Vella, S. A. Bidirectional relationships between sleep duration and screen time in early childhood. JAMA Pediatr. 168, 465–470 (2014).

17. Nightingale, C. M. et al. Screen time is associated with adiposity and insulin resistance in children. Arch. Dis. Child 102, 612–616 (2017).

18. Hutton, J. S., Xu, Y., DeWitt, T. & Ittenbach, R. F. Assessment of Screen-Based Media Use in Children: Development and Psychometric Refinement of the ScreenQ Pediatric Academic Societies Meeting (Toronto, Canada, 2018).

19. Phillips, B. M., Lonigan, C. J. & Wyatt, M. A. Predictive validity of the get ready to read! Screener: concurrent and long-term relations with reading-related skills. J. Learn Disabil. 42, 133–147 (2009).

20. Bellevue Project for Early Language Literacy and Education Success (BELLE). STIMQ Home Cognitive Environment (Bellevue Project for Early Language, Literacy, and Education Success (BELLE), New York University School of Medicine, Department of Developmental and Behavioral Pediatrics, 2018).

21. Harris, P. et al. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J. Biomed. Inf. 42, 377–381 (2009).

22. Williams, K. T. Expressive Vocabulary Test 2nd edn (EVT-2) (Pearson Assessments, Minneapolis, 2007).

23. Wagner, R. K., Torgesen, J. K., Rashotte, C. A. & Pearson, N. A. CTOPP-2: Comprehensive Test of Phonological Processing–Second Edition (Pro-Ed, Austin, 2019).

24. Smith, E. V. & Smith, R. M. Rasch Measurement: Advanced and Specialized Applications (JAM Press, Maple Grove, 2007).

25. Smith, R. M. Detecting item bias with the Rasch model. J. Appl Meas. 5, 430–449 (2004).

26. Early Childhood Teacher. ECE Technology: 10 Trending Tools for Teachers (EarlyChildhoodTeacher.org, Washington, 2019).

27. Pagani, L. S., Harbec, M. J. & Barnett, T. A. Prospective associations between television in the preschool bedroom and later bio-psycho-social risks. Pediatr. Res. 85, 967–973 (2019).

28. Duggan, M. P., Taveras, E. M., Gerber, M. W., Horan, C. M. & Oreskovic, N. M. Presence of small screens in bedrooms is associated with shorter sleep duration and later bedtimes in children with obesity. Acad. Pediatr. 19, 515–519 (2019).

29. Domoff, S. E., Lumeng, J. C., Kaciroti, N. & Miller, A. L. Early childhood risk factors for mealtime TV exposure and engagement in low-income families. Acad. Pediatr. 17, 411–415 (2017).

30. Hamilton, K., Spinks, T., White, K. M., Kavanagh, D. J. & Walsh, A. M. A psychosocial analysis of parents’ decisions for limiting their young child’s screen time: an examination of attitudes, social norms and roles, and control perceptions. Br. J. Health Psychol. 21, 285–301 (2016).

31. Ma, J. & Birken, C. Handheld Screen Time Linked with Speech Delays in Young Children (Pediatric Academic Societies Meeting, San Francisco, 2017).

32. McNeill, J., Howard, S. J., Vella, S. A. & Cliff, D. P. Longitudinal associations of electronic application use and media program viewing with cognitive and psychosocial development in preschoolers. Acad. Pediatr. 19, 520–528 (2019).

33. Radesky, J. S. & Christakis, D. A. Increased screen time: implications for early childhood development and behavior. Pediatr. Clin. N. Am. 63, 827–839 (2016).

34. Yuill, N. & Martin, A. F. Curling up with a good E-Book: mother–child shared story reading on screen or paper affects embodied interaction and warmth. Front. Psychol. 7, 1951 (2016).

35. Chiong, C., Ree, J. & Takeuchi, L. Comparing Parent–Child Co-reading on Print, Basic, and Enhanced e-Book Platforms (The Joan Ganz Cooney Center at Sesame Workshop, New York, 2012).

36. Ribner, A., Fitzpatrick, C. & Blair, C. Family socioeconomic status moderates associations between television viewing and school readiness skills. J. Dev. Behav. Pediatr. 38, 233–239 (2017).

37. Bentley, G. F., Turner, K. M. & Jago, R. Mothers’ views of their preschool child’s screen-viewing behaviour: a qualitative study. BMC Public Health 16, 718 (2016).

38. Peterson, R. A. A meta-analysis of Cronbach’s coefficient alpha. J. Consumer Res. 21, 381–391 (1994).

Acknowledgements

We would like to thank research coordinators Arielle Wilson and Amy Kerr for overseeing data collection, data entry, and quality control. We also acknowledge Dr. Alan Mendelsohn, M.D. and his team at the Bellevue Project for Early Language, Literacy, and Education Success (BELLE Project) for the development of the StimQ measure, which provided inspiration for the ScreenQ name, and for their generosity sharing StimQ for the benefit of children and families. This study was funded by a Procter Scholar Award from the Cincinnati Children’s Research Foundation (Hutton).

Author information

Contributions

J.S.H. researched and drafted the ScreenQ screening instrument used in this study, designed the study protocol, supervised data collection, collaborated in data analysis and measure refinement, and drafted the initial manuscript and subsequent revisions. G.H. and R.D.S. performed all data analysis for this study, created the tables and graphs, and reviewed and revised the manuscript and subsequent revisions. T.D.W. provided guidance on the study protocol design, analysis, and measure refinement, and reviewed and revised the manuscript. R.I. directed and supervised psychometric and other data analyses, consulted in the development and refinement of ScreenQ screening instrument, reviewed and revised the manuscript, and approved the final manuscript as submitted.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Hutton, J.S., Huang, G., Sahay, R.D. et al. A novel, composite measure of screen-based media use in young children (ScreenQ) and associations with parenting practices and cognitive abilities. Pediatr Res 87, 1211–1218 (2020). https://doi.org/10.1038/s41390-020-0765-1

DOI: https://doi.org/10.1038/s41390-020-0765-1
