Abstract

Background

Technology holds promise for delivery of accessible, individualized, and destigmatized obesity prevention and treatment to youth.

Objectives

This review examined the efficacy of recent technology-based interventions on weight outcomes.

Methods

Seven databases were searched in April 2020 following PRISMA guidelines. Inclusion criteria were: participants aged 1–18 y, use of technology in a prevention/treatment intervention for overweight/obesity; weight outcome; randomized controlled trial (RCT); and published after January 2014. Random effects models with inverse variance weighting estimated pooled mean effect sizes separately for treatment and prevention interventions. Meta-regressions examined the effect of technology type (telemedicine or technology-based), technology purpose (stand-alone or adjunct), comparator (active or no-contact control), delivery (to parent, child, or both), study type (pilot or not), child age, and intervention duration.

Findings

In total, 3406 records were screened for inclusion; 55 studies representing 54 unique RCTs met inclusion criteria. Most included articles (89%) were of high or moderate quality. Thirty studies relied mostly or solely on technology for intervention delivery. Meta-analyses of the 20 prevention RCTs did not show a significant effect of prevention interventions on weight outcomes (d = 0.05, p = 0.52). The pooled mean effect size of n = 32 treatment RCTs showed a small, significant effect on weight outcomes (d = −0.13, p = 0.001), although 27 of 33 treatment studies (82%) did not find significant differences between treatment and comparators. Treatment effects were significantly greater for pilot interventions, for interventions delivered to the child compared to parent-delivered interventions, and as child age increased and intervention duration decreased. No other subgroup analyses were significant.

Conclusions

Recent technology-based interventions for the treatment of pediatric obesity show small effects on weight; however, evidence is inconclusive on the efficacy of technology-based prevention interventions. Research is needed to determine the comparative effectiveness of technology-based interventions relative to gold-standard interventions and to elucidate the potential for mHealth/eHealth to increase scalability and reduce costs while maximizing impact.

Introduction

The global prevalence of overweight and obesity among children and adolescents aged 5–19 years has quadrupled over the past four decades [1]. Although effective prevention and treatment interventions exist [2], widespread access to evidence-based interventions remains a significant challenge. Furthermore, inadequate insurance coverage and reimbursement for childhood obesity treatment prevents children and families from obtaining affordable care [3], which may exacerbate global obesity disparities among disadvantaged youths [4].

Pediatric weight-management interventions that incorporate digital “eHealth” technologies or mobile “mHealth” technologies are promising low-cost solutions to increase access to care, as they can often be accessed anywhere, anytime. Furthermore, eHealth/mHealth technologies may bolster engagement through novel “kid-friendly” programming (e.g., exergaming, virtual reality) and may prevent weight gain among youth at-risk for overweight/obesity or enhance the durability of weight change through intervention personalization (e.g., momentary feedback, booster texts). Preliminary meta-analytic findings support the acceptability and feasibility of eHealth/mHealth technologies as both stand-alone and adjunctive interventions for pediatric obesity [5, 6]. However, the heterogeneity of eHealth/mHealth technologies included in prior studies precludes conclusions on whether or not intervention efficacy varies by type of technology and target population (e.g., parent- vs child-facing) [7, 8]. Evaluation of these factors is crucial in order to understand the extent to which technological interventions should be considered in the development of effective, affordable, integrated care models for pediatric obesity. Furthermore, given the constant advancement of technology, the application of emerging eHealth/mHealth technologies to pediatric obesity prevention and treatment warrants an updated investigation. Therefore, we synthesized the recent literature on technology-based interventions for the prevention and treatment of overweight/obesity in youth.

Method

Literature search and selection of studies

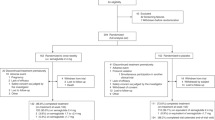

The review methodology was preregistered on PROSPERO (registration number: CRD42020150683). A systematic literature search was conducted following PRISMA guidelines [9] (see Appendix A for PRISMA checklist). A medical librarian (LHY) searched the literature for records including the concepts of obesity prevention and treatment, technology-based interventions, children, and randomized controlled trials. The librarian created search strategies using a combination of keywords and controlled vocabulary (see Appendix B for fully reproducible search strategies) in Ovid Medline 1946-, Embase.com 1947-, Scopus 1823-, Cochrane Central Register of Controlled Trials (CENTRAL), The Cumulative Index to Nursing and Allied Health Literature (CINAHL) 1937-, PsycINFO 1927-, and Clinicaltrials.gov 1997-. All search strategies were completed in September 2019. A total of 5036 results were found. After using the de-duplication processes based on previously published guidance [10], 2436 duplicate records were deleted, resulting in a total of 2600 unique citations included in the project library. Searches were updated April 25, 2020 by running all searches again and removing duplicates against the original Endnote library. An additional 806 unique records were found, resulting in a total of 3406 screened for inclusion.

Inclusion and exclusion criteria

Identified studies were eligible if they met the following inclusion criteria: (1) pediatric population (1–18 years old); (2) use of technology in an intervention targeting prevention or treatment of overweight and/or obesity (the intervention had to include technology but did not have to be delivered solely through technology; e.g., text messaging, phone calls, telehealth, mobile applications (apps), email, machine learning adaptive interventions); (3) primary or secondary outcome of relative weight or adiposity (e.g., BMIz, body fat percentage, fat mass index); (4) randomized controlled trial (RCT); and (5) published after January 2014 (previous 5 years). The publication cutoff was chosen because recent reviews either ended their searches with literature published in 2014 [6, 11, 12] or focused exclusively on parent-delivered [8], self-monitoring [5], or mobile technology [13] interventions for pediatric weight management. Furthermore, the present review aimed to advance the current state of evidence by focusing on recent and current technology interventions. Phone calls were included as technology in this review because this approach to delivering telemedicine is often included in mHealth reviews [14, 15] and represents one way that technology reduces barriers to treatment by remotely delivering intervention components through mobile devices.

Studies were ineligible if they met any of the following exclusion criteria: (1) target population exclusively infants or adults (i.e., <1 or >18 years old); (2) lack of original data (e.g., trial protocol, review, commentary, secondary analysis of included study, or conceptual study) and/or lack of evaluation of participant outcomes (e.g., software, hardware, or computing/engineering proof of concepts); (3) primary purpose of the technology was for assessment (e.g., ecological momentary assessment) as opposed to intervention; (4) intervention primarily targeted chronic condition other than weight management (e.g., diabetes, chronic pain, psychiatric disorders); (5) intervention targeted children with a specific chronic condition (e.g., children with developmental delays, children with diabetes); (6) no reporting of weight outcome; or (7) not an RCT.

If the primary purpose of the technology was for intervention and not assessment and the technology was not the main intervention element, the technology still needed to be an essential component in the intervention. Therefore, in the first stage of the abstract screening process, records were examined for technology use. If the technology component was ancillary to the intervention such that it did not warrant mention in the abstract, it was believed that the trial did not warrant the label of “eHealth/mHealth intervention” or inclusion in this review. Importantly, if there was ambiguity about technology use, we were conservative in our exclusion of articles in the abstract screening, and we included records in the full text review if it was unclear whether technology was used and whether it was for assessment or intervention (or both).

Data extraction and synthesis

Search results received from the medical librarian (LHY) were uploaded into Covidence systematic review software (Veritas Health Innovation, Melbourne, Australia) and screened by two authors (LAF and ACG) for inclusion/exclusion. Duplicates were removed prior to title and abstract screening. Disagreements were resolved through consensus or with a third reviewer (EFC). Year of publication, country, study design, participant characteristics (e.g., age, sex), intervention description, and main outcomes related to weight were abstracted using Microsoft Excel, version 1908. Studies were classified as prevention or treatment interventions given the differences in expected outcome for participants in these trials (i.e., anticipated decreases in adiposity for treatment interventions versus anticipated maintenance or stable adiposity for prevention interventions). Treatment interventions were defined as interventions targeting weight loss among youth with overweight or obesity. Prevention interventions were interventions that could have included youth across the weight spectrum and that did not target decreases in adiposity for the entire sample of participants.

Quality assessment

The quality assessment was completed in Covidence systematic review software (Veritas Health Innovation, Melbourne, Australia). Studies were independently rated by two authors (LAF and ACG) following the criteria established for studies included in Cochrane reviews [16]. Risk of bias was assessed for the following: sequence generation, allocation concealment, blinding of participants and personnel, blinding of outcome assessors, incomplete outcome data, selective outcome reporting, and other sources of bias. Studies were rated for each of the seven criteria as low risk of bias, high risk of bias, or unclear. Raters discussed any disagreements and reached consensus. Following established quality threshold recommendations [17], studies were judged overall based on the number of unclear and high risk of bias judgments. A study was judged to have overall high risk of bias if more than one criterion was rated as high risk or more than four criteria were rated as unclear. Studies were judged to have some concerns regarding bias if they had a high risk of bias for one of the seven criteria and at least one criterion rated as “unclear.” Previously outlined algorithms for reaching risk of bias judgments [17] were applied, for example, when measures were used to mitigate the risk arising from not masking participants and personnel to treatment condition, which is often not possible in behavioral interventions.
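The two overall-judgment rules stated in this section can be expressed as a small decision function. The sketch below is illustrative only: the function name and the rating labels are hypothetical, and it encodes just the two thresholds given in the text. Any study not covered by those two rules is left unclassified, because the remaining thresholds come from the cited recommendations [17] and are not restated here.

```python
from collections import Counter

VALID_RATINGS = {"low", "high", "unclear"}


def overall_risk_of_bias(ratings):
    """Apply the two overall-judgment rules stated in the text.

    `ratings` is a list of per-criterion ratings (one per criterion),
    each being "low", "high", or "unclear".
    Returns "high", "some concerns", or None when neither stated rule applies.
    """
    unknown = set(ratings) - VALID_RATINGS
    if unknown:
        raise ValueError(f"unexpected rating(s): {sorted(unknown)}")

    counts = Counter(ratings)
    n_high = counts["high"]
    n_unclear = counts["unclear"]

    # Rule 1: more than one criterion at high risk, or more than four unclear.
    if n_high > 1 or n_unclear > 4:
        return "high"
    # Rule 2: exactly one criterion at high risk and at least one unclear.
    if n_high == 1 and n_unclear >= 1:
        return "some concerns"
    # Remaining cases are not determined by the two rules quoted above.
    return None
```

For example, a study with two criteria rated high would be returned as "high" regardless of how the other five criteria were rated.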

Meta-analysis

Given the inherent differences in expected outcome change for prevention trials and treatment trials, two separate analyses were conducted. Means and standard deviations, or effect estimates and standard errors, were extracted from all included articles (see Appendix C for tables of data that were extracted or provided by authors to calculate effect sizes). When data were not reported, authors were contacted (n = 5), of which two provided unpublished raw means and standard deviations for the present analyses [18, 19]. The studies of authors who did not provide data were excluded from the meta-analysis but included in the narrative review [20,21,22]. Two studies [23, 24] reported median and interquartile range, which were converted to mean and standard deviation using previously published equations [25].

The effects were then converted into a standardized effect size to compare between studies. Effect sizes (Cohen’s d) were calculated by subtracting the mean score of the comparator group (Mc) from the mean score of the intervention group (Mi) and dividing the result by the pooled standard deviation of both groups, so that negative values indicate lower weight outcomes in the intervention group. This was done at post-test unless baseline differences on outcome variables existed despite randomization; in that case, Cohen’s d values were calculated as the difference between the groups’ standardized pre- to post-intervention change scores. Using previously published methods [26], 95% confidence intervals (CI) were calculated for the effect size of each study. Effect size conventions proposed by Cohen were used (i.e., d = .20, d = .50, and d = .80 indicate small, medium, and large effects, respectively) [27].
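As an illustration of the post-test calculation, the following minimal sketch computes Cohen’s d with a pooled standard deviation and an approximate 95% CI. It uses the sign convention under which negative values favor the intervention, as in the results reported below. The variance formula is the common large-sample approximation and is an assumption here; the exact method of reference [26] is not reproduced in this section. All function names and the example numbers are hypothetical.

```python
import math


def pooled_sd(sd_i, n_i, sd_c, n_c):
    """Pooled standard deviation of two independent groups."""
    numerator = (n_i - 1) * sd_i ** 2 + (n_c - 1) * sd_c ** 2
    return math.sqrt(numerator / (n_i + n_c - 2))


def cohens_d(mean_i, sd_i, n_i, mean_c, sd_c, n_c):
    """Standardized mean difference: (intervention - comparator) / pooled SD."""
    return (mean_i - mean_c) / pooled_sd(sd_i, n_i, sd_c, n_c)


def d_variance(d, n_i, n_c):
    """Large-sample approximation to the sampling variance of d (assumed)."""
    return (n_i + n_c) / (n_i * n_c) + d ** 2 / (2 * (n_i + n_c))


def d_confidence_interval(d, n_i, n_c, z=1.96):
    """Normal-approximation confidence interval around d."""
    se = math.sqrt(d_variance(d, n_i, n_c))
    return d - z * se, d + z * se


# Hypothetical post-test BMIz values, not taken from any included study.
d = cohens_d(mean_i=1.9, sd_i=0.5, n_i=50, mean_c=2.0, sd_c=0.5, n_c=50)
ci_low, ci_high = d_confidence_interval(d, n_i=50, n_c=50)
```

The variance returned by `d_variance` is also the quantity an inverse variance weighting scheme would use to weight each study.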

We used a random effects model with inverse variance weighting [28] to estimate a pooled mean effect size with 95% CI due to the diversity of studies and populations. Presence of heterogeneity was examined with the I² statistic and τ². I² is a percentage indicating the proportion of the total variability in a set of effect sizes that is due to true heterogeneity (i.e., between-studies variability). A value of 0% indicates an absence of heterogeneity, and larger values show increasing levels of heterogeneity (i.e., 25%, 50%, and 75% can be considered low, moderate, and high levels of heterogeneity, respectively) [29]. The DerSimonian-Laird estimator [30] was used to calculate τ², and the Jackson method [31] was used to calculate the 95% CI for τ² as a measure of between-studies variance, with values closer to 0 suggesting less heterogeneity [32].
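The pooling procedure described here can be sketched in a few lines. The code below is a simplified, dependency-free illustration of inverse variance weighting with the DerSimonian-Laird moment estimator, not the analysis code used in this review (which was run in R). The function name and return keys are hypothetical, and the confidence interval for the between-studies variance (Jackson method) is deliberately omitted.

```python
import math


def dersimonian_laird(effects, variances):
    """Random-effects pooling with the DerSimonian-Laird tau^2 estimator.

    `effects` are per-study effect sizes; `variances` are their sampling variances.
    """
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")

    # Fixed-effect (inverse variance) weights and pooled estimate.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # Cochran's Q and its degrees of freedom.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1

    # Moment estimator of the between-studies variance, truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # I^2: share of total variability attributed to between-studies heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Random-effects weights add tau^2 to each study's variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))

    z = pooled / se
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal test

    return {
        "pooled": pooled,
        "se": se,
        "ci": (pooled - 1.96 * se, pooled + 1.96 * se),
        "p": p_value,
        "Q": q,
        "df": df,
        "tau2": tau2,
        "I2": i2,
    }
```

When the estimated between-studies variance is zero, the random-effects weights reduce to the fixed-effect weights and both models give the same pooled estimate.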

Meta-regressions examined whether intervention effects differed based on: (1) comparator type (i.e., active comparator vs. waitlist control), (2) technology role (i.e., adjunct to treatment vs. mostly [e.g., one initial in-person session] or solely technology-delivered), (3) technology use (i.e., provider-delivered telehealth such as phone calls or video chats vs. other technologies that do not require a trained provider to deliver the content, such as web programs, apps, texts, video/exergames, sensors, e-mails, and social media), (4) delivery target (i.e., parent-, child-, or both parent- and child-delivered interventions), (5) trial type (i.e., pilot trial and/or n < 100 vs. non-pilot trial with n ≥ 100), (6) mean participant age, and (7) intervention duration in months.

For three-arm RCTs, the true control (e.g., wait list control or minimal contact) was used as the comparison group, with two exceptions: the two non-technology-based comparison conditions were combined, e.g., [33], unless the active intervention also contained the technology component, e.g., [34], in which case the two technology-based conditions were combined. All analyses were conducted in R Version 4.0.2 [35], using the meta package [36] for analyses, the metaviz package [37] for data visualization, and the dmetar package for meta-regressions.

Results

Study selection

Qualitative synthesis

Ninety-one full text articles were reviewed for inclusion; 55 articles [18,19,20,21,22,23,24, 33, 34, 38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83] representing 54 unique RCTs were identified as meeting the inclusion criteria for the qualitative synthesis (Fig. 1). Although two articles reported on the same RCT, one provided outcomes at post-intervention [49] and the other at long-term follow-up [48]; therefore, both articles were included in the review. Ten additional studies were identified from the reference lists of the included articles. However, all 10 articles were excluded due to the following reasons: not an RCT (n = 4), no weight outcome (n = 3), no technology component (n = 2), and no full text (n = 1).

Quantitative synthesis

Overall, data were available for 52 of 54 unique RCTs (n = 32 treatment trials and n = 20 prevention trials) at post-intervention and 14 RCTs (n = 6 treatment trials and n = 8 prevention trials) at long-term follow-up. Meta-regression models were only computed for post-intervention outcomes given the small number of prevention and treatment RCTs with data at long-term follow-up.

Study characteristics

Most studies (n = 27) were conducted with community samples, 15 with a clinical sample (e.g., patients at an outpatient clinic for obesity treatment), and 13 with a school sample. Twenty-two interventions focused on prevention of overweight and obesity and the other 33 articles reported on treatment interventions. Twenty-seven studies were conducted in the United States, 13 studies were from western or central Europe, four were from southeast Asia, four were from Australia, two from New Zealand, two from South America, one from the Middle East, one from eastern Europe, and one from Canada. Target participants ranged from 1.5 to 18 years of age. Studies typically targeted a specific age group, i.e., young children (<5 years) or infants (n = 9), children (<13 years; n = 27), or adolescents (n = 18); however, one study targeted both children and adolescents aged 4 to 17 years [65]. Although all studies were RCTs, 25 were cluster RCTs, five identified as pilot RCTs, and one was a crossover RCT. Seventeen studies did not include parents, 17 studies included parents in the intervention but they were not the primary targets, 13 studies targeted both the parent and the child, and the remaining eight studies primarily targeted parents. Table 1 displays study characteristics for all studies included in the review.

Study quality

Twenty-nine of the 55 articles (53%) were judged as having an overall low risk of bias, defined as having fewer than three criteria judged as “unclear.” Thirteen articles (24%) were judged as having some concerns overall, based on a priori judgment algorithms [17]. Seven articles (13%) were judged as having some concerns but not likely to significantly bias the studies’ results. These articles did not mask participants and personnel to treatment condition; however, since it is often not possible to blind participants and personnel in behavioral interventions, this criterion was judged to be less likely to significantly bias results when other measures were used to reduce the risk of differential behaviors by patients and healthcare providers [17]. Finally, six articles (11%) were judged as having an overall high risk of bias. Information on the quality assessment ratings for all articles is included in Table 2.

Type of intervention

Study interventions varied immensely in treatment modality and content. The interventions included mobile phone app interventions [42, 48, 49], text-based interventions [19, 60, 61], home-delivered interventions with technology adjunct components [34, 63], group sessions or interactive classes with phone calls [53, 58], group-based exergames [78], exergaming in addition to family-based behavioral treatment [82], and active video games as replacements for non-active video games [75], among others.

Although 21 studies did not report that the intervention was informed by any theoretical basis, most studies (n = 34, 62%) described at least one theory or evidence-based approach that informed the development of the intervention, and many (n = 15, 27%) reported several frameworks. The most common theories reported were social cognitive theory (n = 21), motivational interviewing (n = 7), cognitive-behavioral therapy (n = 4), and self-determination theory (n = 6).

The type of technology that each intervention utilized was also variable, and 11 interventions used more than one type of technology. Of the interventions that utilized only one type of technology (n = 44), six used text messaging, six involved exergaming, 10 relied on phone calls for remote delivery of the intervention, one used a wearable sensor, six used a mobile app, one used email, 10 used a web platform, one used a computerized decision tree for tailored intervention, two used video games, and one used video chat for delivering the intervention. Counting every technology used across all studies, including those that combined several types, the technologies were the internet (n = 15), phone calls (n = 14), text messaging (n = 12), mobile app (n = 8), exergames (n = 7), wearable sensors (n = 5), emails (n = 3), video chat (n = 3), computerized decision tree (n = 1), and social media (n = 1).

We created three separate classifications for the technology function for the interventions (i.e., solely technology-delivered, mostly technology-delivered, and technology used as adjunct to intervention). Using technology adjunctively refers to complementing in-person treatment with digital technology components. A study was classified as solely technology-delivered when it did not rely on any in-person elements. Finally, a “mostly” technology-delivered intervention involved one to two in-person sessions followed by a completely technology-delivered intervention. This was created to distinguish different ways of employing technology in an intervention, whether it involved regular in-person elements (i.e., using technology adjunctively), involved no in-person elements (i.e., solely technology-based) or minimal initial in-person elements (i.e., mostly technology-based). Twenty-four studies tested interventions delivered solely through technology. Twenty-four studies used technology as an adjunct to in-person delivered intervention. Seven studies were mostly delivered through technology but involved one or two in-person counseling sessions.

Intervention length and dosage

Intervention length ranged from 1 to 24 months, with an average intervention duration of 6.5 (SD = 4.5) months. Dosage varied from being structured contact (e.g., online modules for 60 min one time per week; biweekly phone calls with behavioral coach; three text messages per day) to self-paced use (i.e., engagement with the intervention content depended on the participant).

Comparison and control conditions

Although all included studies had comparison groups, the type of comparison varied greatly depending on the scope of the research question. Many studies (40%; n = 22) included an active control comparison condition that did not have a technology component, which ranged from an educational pamphlet to in-person treatment (e.g., family-based treatment, cognitive-behavioral therapy). Fifteen studies (27%) included a no-contact control condition (i.e., waitlist), 10 studies included a usual care comparison condition, and 13 studies had an active intervention comparison with a technology component. Six studies (11%) had three intervention arms [24, 33, 34, 41, 55, 80]; most (n = 5) included a usual care or no-contact comparison condition along with an active intervention comparison, which involved technology components for some (n = 4).

Follow-up

Seventeen studies (31%) measured outcomes at an additional time point after the post-intervention time point. Studies varied in length of long-term follow-up, from 2 to 18 months, with an average of 8.6 (SD = 4.6) months.

Intervention efficacy

Prevention of overweight or obesity

Narrative

Out of 22 prevention studies representing 21 unique RCTs, six articles (five unique prevention RCTs; 24% of unique prevention RCTs) found significant intervention effects at post-intervention. Three of the RCTs that found significant intervention effects were solely technology-delivered interventions [44, 48, 49, 71], compared to no-contact waitlist controls [44] and active comparators without technology components [49, 71]. The other two RCTs that reported significant intervention effects used technology components as an adjunct to treatment [34, 50], compared to an active control with technology [34] and a waitlist control [50]. Two of the significant studies reported on the same RCT [48, 49].

Sixteen of 22 prevention studies representing 21 unique RCTs did not find significant differences between intervention and comparison conditions on adiposity or weight outcomes at post-intervention (76% of unique prevention RCTs). Four prevention studies that reported overall null findings did report significant effects for the intervention in subgroups. Two studies reported intervention effects among children with overweight [73] or obesity [59] at baseline, another reported greater reductions in weight status among girls at post-intervention and long-term follow-up [74], and the last study reported attendance at intervention classes as a predictor of slower increases of BMIz [58].

Of the nine prevention studies that included an additional follow-up measure post-intervention, eight trials reported no significant differences in adiposity measures between intervention and comparison conditions at follow-up; one study reported significant effects of the waitlist condition on BMIz at long-term follow-up, which was 6 months post-intervention [75]. Of the five unique RCTs that reported significant short-term intervention effects, only one reported long-term follow-up outcomes, finding no sustained intervention effects at long-term follow-up [48].

Meta-analysis

The random effects model with inverse variance weighting was used to calculate the pooled mean effect size for n = 20 prevention trials (Fig. 2). Negative effect sizes represent greater effects of the intervention condition on outcomes compared to the comparator/control condition. The estimated mean effect size was 0.004 (95% CI = −0.078, 0.086), which was not significantly different from zero (p = 0.930). Heterogeneity of the effect sizes at post-intervention was moderate (I² = 42.6%; 95% CI = 2.4%, 66.2%), confirmed by a significant test of heterogeneity, Q(19) = 33.08, p = 0.024. The estimated between-studies variance suggests some heterogeneity among the true effects (τ² = 0.01, 95% CI = 0.00, 0.07).
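As a worked check on the heterogeneity figures above, and assuming the standard definition of the statistic in terms of Cochran's Q and its degrees of freedom (k − 1 for k studies), the reported percentage can be recovered directly from the reported Q:

```latex
I^2 = \max\!\left(0,\ \frac{Q - \mathrm{df}}{Q}\right) \times 100\%
    = \frac{33.08 - 19}{33.08} \times 100\%
    \approx 42.6\%
```

With 20 prevention trials the degrees of freedom are 19, so the computed value agrees with the 42.6% reported for post-intervention outcomes.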

The estimated mean effect size for n = 8 prevention trials at long-term follow-up was not significantly different from zero (d = 0.063, 95% CI = −0.019, 0.145; Fig. 3). Heterogeneity of the effect sizes at follow-up was low (I² = 7.0%, 95% CI = 0.0%, 69.9%), confirmed by a nonsignificant test of heterogeneity (Q(7) = 7.53, p = 0.376). The estimated τ² indicated low heterogeneity between studies (τ² = 0.00, 95% CI = 0.00, 0.04).

Meta-regressions at post-intervention

Meta-regression models with random effects demonstrated no significant differences in study effect size by comparator type (n = 11 active comparators, n = 9 waitlist control, p = 0.735), technology role (n = 9 adjunct to treatment, n = 11 mostly or solely technology-delivered, p = 0.294), and technology use (n = 4 provider-delivered telehealth, n = 16 non-provider-delivered technology, p = 0.367), delivery target (child-delivered (n = 12), parent-delivered (n = 3), or both parent- and child-delivered (n = 5), p = 0.697), study type (n = 7 pilot trials and/or n < 100, n = 13 non-pilot trials, p = 0.516), age (p = 0.818) or intervention duration (p = 0.151).

Post-hoc analyses

Publication bias was assessed using a funnel plot (Fig. 4) and Egger’s test, which did not indicate funnel plot asymmetry (intercept = −0.19, 95% CI = −1.61, 1.23, p = 0.800). This suggests that the scatter in the funnel plot may be due to sampling variation and does not suggest publication bias [84]. Influence analyses involved examination of outliers, Baujat plot and diagnostics, influence diagnostics, and a sensitivity analysis. Outlier analyses at post-intervention suggested that excluding one study, Rerksuppaphol et al. [71], would reduce the heterogeneity of effect sizes from 42.6% to 2.3% and the test of heterogeneity would no longer be significant (p > 0.05). This study was also identified as having the largest contribution to the heterogeneity of study effect sizes (14.0) in Baujat plots and diagnostics. The pooled mean effect of n = 19 RCTs when excluding this study was still not significantly different from zero (d = 0.027, 95% CI = −0.032, 0.086, p = 0.364). Meta-regressions with this study excluded produced the same results (all ps > 0.05). Finally, a sensitivity analysis excluding the seven prevention RCTs that identified as pilot studies or had samples smaller than 100 demonstrated a pooled mean effect size of 0.015 (95% CI = −0.075, 0.105), which was not significantly different from zero (p = 0.745). Meta-analytic results for prevention RCTs are presented with all studies retained in analyses, given that the pooled mean effect remains non-significant whether potential outliers are included or excluded.
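To make the asymmetry test concrete, the sketch below implements the classical regression formulation: each study's standardized effect (effect divided by its standard error) is regressed on its precision (one over the standard error), and an intercept far from zero suggests funnel plot asymmetry. This is an assumption about the variant; the software used in this review may apply a different weighting or small-sample adjustment. The function name and return keys are hypothetical, and the p-value (which requires a t distribution with k − 2 degrees of freedom) is left out to keep the example dependency-free.

```python
import math


def egger_regression(effects, std_errors):
    """Classical Egger regression: standardized effect on precision (OLS)."""
    k = len(effects)
    if k < 3 or k != len(std_errors):
        raise ValueError("need at least three studies with matching standard errors")

    x = [1.0 / se for se in std_errors]                  # precision
    y = [e / se for e, se in zip(effects, std_errors)]   # standardized effect

    mean_x = sum(x) / k
    mean_y = sum(y) / k
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    # Residual variance and the standard error of the intercept.
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r ** 2 for r in residuals) / (k - 2)
    se_intercept = math.sqrt(s2 * (1.0 / k + mean_x ** 2 / sxx))

    # t statistic for the intercept; undefined if the fit is exact.
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")

    return {
        "intercept": intercept,
        "slope": slope,
        "se_intercept": se_intercept,
        "t": t_stat,
        "df": k - 2,
    }
```

In this formulation the slope estimates the underlying effect and the intercept captures any systematic drift of effect size with study precision.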

Number Study: (1) Coknaz et al. (2019, Turkey, n = 106). (2) DaSilva et al. (2019, Brazil, n = 895). (3) Delisle Nystrom et al. (2018, Sweden, n = 263). (4) Faith et al. (2019, USA, n = 28). (5) Fulkerson et al. (2015, USA, n = 160). (6) Gao et al. (2019, USA, n = 32). (7) Gutierrez-Martinez et al. (2018, Colombia, n = 120). (8) Haines et al. (2018, Canada, n = 44). (9) Hammersley et al. (2019, Australia, n = 86). (10) Hull et al. (2018, USA, n = 277). (11) Kennedy et al. (2018, Australia, n = 607). (12) Love-Osborne et al. (2014, USA, n = 165). (13) Lubans et al. (2016, Australia, n = 361). (14) Maddison et al. (2014, New Zealand, n = 251). (15) Nollen et al. (2014, USA, n = 51). (16) Rerksuppaphol et al. (2017, Thailand, n = 217). (17) Sherwood et al. (2015, USA, n = 60). (18) Sherwood et al. (2019, USA, n = 421). (19) Simons et al. (2015, The Netherlands, n = 260). (20) Smith et al. (2014, Australia, n = 361).

Treatment of overweight or obesity

Narrative

Six of 33 treatment studies, all representing unique RCTs (18%), found significant differences in weight loss outcomes in the treatment group compared to the comparison group at post-intervention. Four of the efficacious interventions were mostly or solely delivered through technology [38, 42, 77, 80] and the remaining two used technology adjunctively [55, 82]. Four of the six RCTs with significant results involved active comparators while two had no-contact control comparison groups.

Twenty-seven of 33 treatment studies (82%) did not find significant differences between treatment and comparison conditions on weight outcomes at post-intervention. Two of these 27 studies (7%) reported significant improvement in measures of adiposity (e.g., percent body fat, BMIz) in both the intervention and active comparator groups at post-intervention, with one intervention delivered solely through technology [41] and the other using technology adjunctively [81]. Despite finding no differences between conditions, five studies reported significant improvements in measures of adiposity among those in the intervention groups [19, 51, 60, 66, 70]; all five had active comparators. Two treatment studies that reported overall null findings did report significant effects for the intervention in subgroups. One trial reported significant intervention effects among treatment adherers compared to no-treatment control [78], and another reported significant intervention effects among boys, but not girls, compared to usual care [69].

Eight treatment studies reported on weight outcomes at an additional follow-up after the intervention, with one study using a cross-over trial and another only assessing the intervention group at long-term follow-up [43, 51]. Both studies, which used technology adjunctively and included active comparators, reported maintenance of weight status at follow-up for the intervention group, despite no significant differences with the control group at post-intervention. Two of the other six studies reported significant treatment effects at post-intervention vs. the comparator; these treatment effects were sustained at 6-month [38] and 10-month follow-up [55], respectively. The first RCT [38] delivered the intervention mostly through technology compared to waitlist, and the other RCT [55] used technology adjunctively compared to both an active comparator and waitlist.

Meta-analysis

A random effects model with inverse variance weighting was used to calculate the pooled mean effect size for n = 32 treatment trials (Fig. 5). The estimated mean effect size was small (d = ‒0.133, 95% CI = ‒0.199, ‒0.067) but significantly different from zero (p < 0.001). Heterogeneity of the effect sizes at post-intervention was low (I² = 15.3%, 95% CI = 0.0%, 45.2%), confirmed by a nonsignificant test of heterogeneity (Q (31) = 36.58, p = 0.226). The estimated τ² indicated low heterogeneity between studies (τ² = 0.00, 95% CI = 0.00, 0.05).
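The pooling described above can be sketched in a few lines of Python. This is a minimal illustration only: it assumes the DerSimonian-Laird estimator of τ² (that method appears in the reference list, but the exact estimator settings used for the analysis are an assumption here), the function name random_effects_pool is hypothetical, and the effect sizes and variances passed to it are made-up values, not data from the included trials. The last line checks that the reported I² follows from the reported Q and its degrees of freedom.

```python
import math

def random_effects_pool(effects, variances):
    """Random-effects pooling with inverse-variance weights.

    Assumes the DerSimonian-Laird moment estimator for tau^2.
    """
    k = len(effects)
    if k < 2:
        raise ValueError("need at least two studies")
    w = [1.0 / v for v in variances]                       # fixed-effect weights
    sw = sum(w)
    fixed = sum(wi * d for wi, d in zip(w, effects)) / sw  # fixed-effect mean
    q = sum(wi * (d - fixed) ** 2 for wi, d in zip(w, effects))  # Cochran's Q
    df = k - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)                          # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0    # I^2 in percent
    w_re = [1.0 / (v + tau2) for v in variances]           # random-effects weights
    pooled = sum(wi * d for wi, d in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return {
        "pooled": pooled,
        "ci": (pooled - 1.96 * se, pooled + 1.96 * se),    # normal-approximation 95% CI
        "Q": q, "df": df, "I2": i2, "tau2": tau2,
    }

# Hypothetical effect sizes (d) and sampling variances, for illustration only
example = random_effects_pool([-0.30, -0.10, 0.05, -0.20], [0.04, 0.02, 0.05, 0.03])

# Consistency check on the reported statistics: I^2 = (Q - df) / Q
i2_reported = (36.58 - 31) / 36.58 * 100   # close to the reported 15.3%
```

Because the random-effects weights are 1 / (v + τ²), a τ² near zero, as reported here, makes the random-effects estimate nearly identical to the fixed-effect estimate.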

The estimated mean effect size for n = 6 treatment trials at long-term follow-up was also significantly different from zero (d = ‒0.352, 95% CI = ‒0.521, ‒0.184) (Fig. 6). Heterogeneity of the effect sizes at follow-up was low (I² = 0.0%, 95% CI = 0.0%, 51.1%), confirmed by a non-significant test of heterogeneity (Q (5) = 2.60, p = 0.762). The estimated τ² indicated low heterogeneity between studies (τ² = 0.00, 95% CI = 0.00, 0.12).

Meta-regressions at post-intervention

There were no significant effects of comparator type (n = 17 active comparators, n = 15 waitlist control; p = 0.121), technology role (n = 14 adjunct to treatment, n = 18 mostly or solely technology-delivered; p = 0.555), or technology use (n = 19 provider-delivered telehealth, n = 9 non-provider-delivered technology, n = 4 both; p = 0.362).

Random effects models demonstrated a significant effect of study type (point estimate = −0.17, 95% CI = −0.31, −0.03, QM (1) = 6.00, p = 0.014), where effects were larger for pilot trials (reference group) and/or RCTs with n < 100 (n = 20). Shorter intervention duration was related to greater intervention effects (point estimate = 0.01, 95% CI = 0.00, 0.02, QM (1) = 4.26, p = 0.040). Greater child age was related to greater effects of the intervention (point estimate = −0.03, 95% CI = −0.05, −0.01, QM (1) = 6.23, p = 0.012). Finally, there was a significant difference of effect size across the three categories of delivery: child-delivered (n = 21), parent-delivered (n = 3), or both parent- and child-delivered (n = 8), QM (2) = 8.81, p = 0.012. Specifically, study effect sizes favored the technology arm for child-delivered interventions compared to parent-delivered interventions (point estimate = 0.40, 95% CI = 0.13, 0.66, p = 0.003).
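The moderator tests above report an omnibus QM statistic with its degrees of freedom, and the child- versus parent-delivered contrast reports a point estimate with a 95% CI. The following Python sketch shows how p-values can be recovered from such summaries. It assumes that QM is referred to a chi-square distribution and that the CI is a normal-approximation (Wald) interval; the function names chi2_sf and wald_p_from_ci are hypothetical helpers introduced for illustration and are not part of the original analysis.

```python
import math

def chi2_sf(q, df):
    """Upper-tail chi-square probability, using closed forms for df = 1 or 2."""
    if df == 1:
        return math.erfc(math.sqrt(q / 2.0))
    if df == 2:
        return math.exp(-q / 2.0)
    raise ValueError("closed form implemented only for df = 1 or 2")

def wald_p_from_ci(estimate, lower, upper):
    """Two-sided p-value from a 95% CI, assuming a symmetric normal interval."""
    se = (upper - lower) / (2 * 1.96)   # standard error implied by the interval width
    z = estimate / se
    return math.erfc(abs(z) / math.sqrt(2.0))

# Statistics as reported in the text above
p_study_type = chi2_sf(6.00, 1)   # pilot or small trial vs. larger RCT
p_duration = chi2_sf(4.26, 1)     # intervention duration
p_age = chi2_sf(6.23, 1)          # mean child age
p_delivery = chi2_sf(8.81, 2)     # three delivery categories
p_child_vs_parent = wald_p_from_ci(0.40, 0.13, 0.66)
```

Under these assumptions the recomputed values land close to the reported p-values, with differences of about 0.001 or less, as would be expected when the published test statistics are rounded.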

Post-hoc analyses

Publication bias was assessed using a funnel plot (Fig. 7) and Egger’s test, which did not suggest the presence of funnel plot asymmetry or publication bias (intercept = −0.59, 95% CI = −1.25, 0.06, p = 0.086) [84]. Influence analyses involved examination of outliers, Baujat plot and diagnostics, and influence diagnostics. Outlier analyses suggested that excluding two studies, Armstrong et al. [23] and Garza et al. [55], would reduce the heterogeneity of effect sizes from 15.3% to 0.0% and result in a non-significant test of heterogeneity (p > 0.05). Both studies were also identified as having a large contribution to the heterogeneity of study effect sizes (11.48 and 5.13, respectively) in Baujat plots and diagnostics, with influence analyses identifying Armstrong et al. as the largest contributor to the heterogeneity of effect sizes, although excluding either study would not result in substantial changes in I² or the estimated mean effect size. The pooled mean effect of n = 30 RCTs when excluding these two studies is still significantly different from zero (d = −0.128, 95% CI = −0.182, −0.074, p < 0.001). Finally, a sensitivity analysis excluding the 20 treatment RCTs that were identified as pilot studies or had samples smaller than 100 demonstrated a pooled mean effect size of −0.084 (95% CI = −0.168, −0.001), which still significantly favored the technology treatment arm (p = 0.048) but was smaller in magnitude. Meta-analytic results for treatment RCTs are presented with all studies retained in analyses given the initial low heterogeneity of effect sizes and that the pooled mean effect remains significant whether potential outliers are included or excluded.
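For readers unfamiliar with the regression test for funnel plot asymmetry, the following Python sketch illustrates its classical form: each study's standardized effect (d divided by its standard error) is regressed on its precision (1 divided by the standard error), and an intercept far from zero suggests asymmetry. Whether the analysis above used exactly this variant is an assumption, the function name egger_intercept is hypothetical, and the example inputs are invented values rather than data from the included trials.

```python
import math

def egger_intercept(effects, std_errors):
    """Classical Egger regression: (d / SE) regressed on (1 / SE) by ordinary least squares.

    Returns the intercept, its standard error, and the t statistic (df = k - 2).
    """
    k = len(effects)
    if k < 3:
        raise ValueError("need at least three studies")
    x = [1.0 / se for se in std_errors]                    # precision
    y = [d / se for d, se in zip(effects, std_errors)]     # standardized effect
    mx, my = sum(x) / k, sum(y) / k
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r ** 2 for r in residuals) / (k - 2)          # residual variance
    se_intercept = math.sqrt(s2 * (1.0 / k + mx ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")
    return intercept, se_intercept, t_stat

# Hypothetical effect sizes and standard errors, for illustration only
intercept, se_intercept, t_stat = egger_intercept(
    [-0.25, -0.10, -0.18, -0.05, -0.30],
    [0.20, 0.10, 0.15, 0.08, 0.25],
)
```

In this formulation the slope estimates the underlying effect and the intercept captures small-study effects, which is why the test result above is reported as an intercept with a confidence interval.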

Number Study: (21) Abraham et al. (2015, Hong Kong, n = 48). (22) Ahmad et al. (2018, Malaysia, n = 134). (23) Armstrong et al. (2018, USA, n = 101). (24) Bagherniya et al. (2018, Iran, n = 172). (25) Banos et al. (2019, Spain, n = 47). (26) Baranowski et al. (2019, USA, n = 200). (27) Bohlin et al. (2017, Sweden, n = 37). (28) Bruno et al. (2018, Spain, n = 52). (29) Chen et al. (2019, USA, n = 40). (30) Christison et al. (2016, USA, n = 80). (31) Currie et al. (2018, USA, n = 64). (32) Davis et al. (2016, USA, n = 103). (33) Fleischman et al. (2016, USA, n = 40). (34) Foley et al. (2014, New Zealand, n = 322). (35) Garza et al. (2019, USA, n = 71). (36) Gerards et al. (2015, Netherlands, n = 86). (37) Jensen et al. (2019, USA, n = 47). (38) Kulendran et al. (2016, United Kingdom, n = 27). (39) Mameli et al. (2018, Italy, n = 30). (40) Markert et al. (2014, Germany, n = 303). (41) Moschonis et al. (2019, Greece, n = 65). (42) Nawi et al. (2015, Malaysia, n = 97). (43) Norman et al. (2016, USA, n = 106). (44) Pfeiffer et al. (2019, USA, n = 1519). (45) Rifas-Shiman et al. (2017, USA, n = 441). (46) Staiano et al. (2017, USA, n = 41). (47) Staiano et al. (2018, USA, n = 46). (48) Sze et al. (2015, USA, n = 40). (49) Taveras et al. (2015, USA, n = 549). (50) Taveras et al. (2017, USA, n = 721). (51) Trost et al. (2014, USA, n = 75). (52) Wald et al. (2018, USA, n = 73).

Discussion

Technology use in pediatric weight management interventions is burgeoning, as evidenced by the identification of 54 unique RCTs published in the last 6 years alone. Studies included in this review utilized web-based platforms, smartphone apps, emails, telemedicine (phone calls or videochats), exergames, video games, text messages, and computerized decision tools, with ~25% of the studies employing multiple types of technology throughout the intervention. Twenty-two of these articles targeted prevention of overweight or obesity among pediatric populations across the weight spectrum, and 33 of the articles reported outcomes from treatment interventions. Most (89%) of the interventions were of high or moderate quality. A substantial proportion (62%) of the interventions were informed by at least one theory or evidence-based approach.

Prevention interventions

Meta-analyses of the 20 prevention trials did not find a significant mean effect of prevention interventions on weight outcomes at post-intervention. Heterogeneity of the true effects was moderate, but did not appear to be associated with the inclusion of pilot studies or studies with small samples according to sensitivity analyses [85]. The five prevention RCTs with significant post-intervention effects were compared to both active (n = 3) and no-contact (n = 2) comparators, involved exergames, an app, a web-based platform, e-mail, and a wearable sensor, and used technology to deliver the intervention (n = 3) or as an adjunctive component to the intervention (n = 2).

There was also no significant mean effect of prevention interventions at long-term follow-up. Overall, the heterogeneity of comparators, technology type, study design, and intervention design precludes conclusions regarding the efficacy of digital prevention interventions for the maintenance of pediatric weight outcomes. Null prevention effects may provide important insights regarding the non-inferiority of mHealth/eHealth solutions compared to in-person interventions; however, more research is needed to understand the variability in effects.

Treatment interventions

Overall, the vast majority (79%) of treatment studies did not find significant differences between the comparator and intervention conditions on child weight outcomes. However, meta-analyses of the 32 treatment trials showed a small, albeit significant, effect of the mHealth/eHealth interventions on post-intervention weight outcomes. These results extend the findings from previous reviews, which reported low or no technology-based treatment effects on weight outcomes but significant treatment effects on weight-related behaviors (e.g., physical activity, diet) [12, 13, 86, 87, 88]. The low heterogeneity of effect sizes at post-intervention suggests the small treatment effects are similar across studies. Gold-standard in-person family-based behavioral interventions demonstrate efficacy for treating childhood obesity [89], with moderate to large effect sizes [90]. Only four studies in the present review compared gold-standard interventions to gold-standard plus technology interventions [40, 43, 47, 82]; however, one study used technology in both treatment arms [47], and only one study found enhanced effects in the technology condition [82]. More research is needed to compare effects of gold-standard treatments to those supplemented by or delivered solely through technology.

Significant effects of the digital treatment interventions were also found at long-term follow-up; however, only two of the six studies reported differences between comparator and treatment on weight outcomes at long-term follow-up. Although heterogeneity of the effect sizes was low, the small number of RCTs included in the long-term meta-analyses (n = 6) suggests that more research is needed to draw conclusions regarding long-term efficacy of digital treatment interventions on child weight outcomes.

There were significantly greater effects of the treatment interventions on weight outcomes for trials that were shorter in duration and had small sample sizes and/or were pilot trials. It is unsurprising that pilot trials were associated with greater treatment effects, as it has been found that the largest effects may come from smaller trials [85]; importantly, the small treatment effects of technology-based interventions were still significant in sensitivity analyses when removing these pilot trials. Previous meta-analyses of pediatric weight interventions [91] and mHealth weight interventions [92] have not found associations between duration and treatment efficacy; however, it has been shown that adult weight loss interventions with longer duration were more likely to report no intervention effects [93]. Although counterintuitive, the negative association between intervention duration and treatment effect found in this study could reflect the inconclusive long-term efficacy of digital interventions, as well as documented issues with engagement in and long-term adherence to digital interventions [94].

In addition, digital intervention effects varied based on the delivery target, such that treatment interventions delivered to the child showed greater effects of the technology arm on child outcomes than interventions delivered only to the parent. Relatedly, greater treatment effects were associated with greater mean child age; these findings coincide in that interventions targeting older children are more likely to be delivered to the child as opposed to the parent. Previous meta-analytic findings on the effectiveness of parent/caregiver-delivered eHealth/mHealth interventions are mixed. A review of eight parent-focused eHealth interventions for pediatric obesity found no significant effect of the interventions on child weight-related outcomes [8]; however, results from a review on the effect of mHealth interventions on youth health outcomes suggest that treatment effects are stronger with the inclusion of parents/caregivers in the intervention [86]. Traditional (i.e., non-technology-based) childhood obesity interventions that are delivered to the parent have been shown to be effective on child weight outcomes [95], and parent-only interventions may be as efficacious as interventions delivered to both the parent and child [89, 96]. It is unclear why parent-only studies in this review did not outperform the comparators. The relatively small number of treatment studies in this subgroup (n = 3) highlights the need for more work to draw conclusions regarding the efficacy of parent-delivered technology interventions to treat childhood obesity.

Other subgroup analyses

Meta-regression analyses did not suggest that the effect of treatment or prevention interventions on weight outcomes differed by technology use (i.e., provider-delivered telehealth, non-provider-delivered technology, or both). Two studies in this review did not attempt to separately evaluate technology from the intervention; these studies manipulated intervention content or dosage between study conditions but employed technology as a method of intervention delivery in both conditions [34, 60]. Notably, durations and dosages (contact frequency) of the interventions were highly variable. Study designs made it difficult to separately evaluate the effect of the technology from other intervention components. This is particularly relevant when studies compare a no-contact control with a multi-component, technology-assisted intervention, where it is unclear whether the intervention, separate from technology use, is driving the effect. Furthermore, the content of the intervention varied substantially across the studies, although most targeted energy-balance behaviors and were informed by theory or evidence-based practice. Elucidating the impact of intervention content on outcomes is an important future direction for mHealth/eHealth research.

In addition, meta-regressions suggest that the effects of prevention and treatment studies are not different when technology is used as an adjunct or enhancement to the intervention as compared to when the intervention is primarily delivered through technology. Both solely technology-based interventions and interventions with technology-based adjunctive components were represented among prevention and treatment studies that reported positive effects on adiposity measures. However, given the variability in study designs, comparators, and interventions, further research is needed to evaluate the role of these factors on outcomes.

Other subgroup analyses suggested that the effectiveness of prevention and treatment studies did not differ when the intervention was compared to an active comparator condition (e.g., in-person treatment; attentional control) or a no-contact control condition (e.g., waitlist, usual care). These findings may provide important context in which to interpret the results; however, as more studies emerge, it will be valuable to explore potential differences in intervention effects for studies with different types of “active” comparators such as comparing effects between RCTs with active educational/attentional controls versus active, evidence-based intervention comparators. Many studies tested the efficacy of the technology-based intervention by comparing outcomes to those in non-active control conditions [18, 38, 46, 50, 52, 59, 62, 65, 75, 77, 78]. Among those studies that found positive effects, it is unclear whether that is driven by the type of technology; the sample population (e.g., clinical, adolescent); the intervention content, duration, dose; or a complex interplay of several or all these components. As research continues in this area, researchers should work to elucidate the factors associated with optimal outcomes, including utilizing novel methodologies to optimize mHealth/eHealth interventions [97].

Other studies in this review attempted to isolate the technology component, and several studies demonstrated the non-inferiority of technology-delivered interventions to other treatment modalities [68, 79, 82], such as print-based materials compared to web-based materials [41], telephone-delivered coaching compared to videochats [47], and in-person treatment compared to telephone-delivered treatment [22]. Technology-assisted interventions may provide important benefits that promote valuable treatment outcomes such as increased access to treatment, decreased reliance on personnel and consumable resources, and acceptability and feasibility to the families [6]. Therefore, the small treatment effects and null prevention effects may provide important insights into the non-inferiority of mHealth/eHealth solutions compared to in-person or more resource-intensive interventions.

Challenges and future directions

Engagement and adherence

Engagement and adherence have been shown to be important for weight loss in mHealth/eHealth interventions [98, 99]; however, studies do not consistently measure and report adherence data, and when reported, adherence rates are often suboptimal [75] and decline over time [68, 83]. To elucidate the potential of technology, measures of engagement such as how often a participant logs onto the study website or when a study text message is read could enable stronger conclusions about technology efficacy. Research is needed to understand how to increase motivation to engage in technology-delivered interventions, particularly for self-guided interventions [100]. Identification of technology-based strategies to increase and sustain engagement in treatment is an important future direction. Researchers should strive to creatively integrate features of gamification, entertainment, relaxation, and social connectedness into digital interventions to promote engagement. Finally, greater attention to targeted and patient-centered designs can inform engagement approaches.

Long-term efficacy

Results highlight the limited long-term efficacy of technology-based interventions for pediatric weight management. Of the 19 RCTs in this review that included a follow-up assessment, only four (21%) reported significant intervention effects at long-term follow-up, most (n = 3) of which used technology adjunctively and included an active comparator. Future research should focus on identifying strategies to extend the benefits of treatment beyond the intervention period, including risk factors for behavioral “relapse” and mechanisms that facilitate long-term treatment response. Greater attention to the environmental, social, cultural, and psychological context in which interventions are delivered can also provide critical insights [101]. Active (e.g., ecological momentary assessment) and passive (e.g., wearable sensors, global positioning systems in mobile devices) digital data collection, combined with advanced analytic approaches (e.g., machine learning), can enhance the prediction of important microtemporal mechanisms that may partially explain the limited long-term efficacy of interventions. Ultimately, these discoveries could inform the tailoring of interventions that provide personalized, adaptive approaches to support youth in long-term weight maintenance [102].

Recruitment and attrition

Numerous studies in this review reported challenges with recruiting adequate sample sizes [24, 79] and were therefore underpowered to find intervention effects. Research is needed to understand how to address challenges involved in recruiting pediatric populations [103]. Attrition is also a noteworthy challenge highlighted in this review [18, 23, 43, 83], with some studies reporting as high as 70% attrition [45]. This challenge is not exclusive to mHealth/eHealth interventions and was reported across study conditions. Prior meta-analyses of four pediatric obesity mHealth interventions suggest that mHealth interventions may ameliorate drop-out rates [13]. Future research should examine correlates of drop-out, including non-response.

This review also suggests the relative nascency of other novel technologies in pediatric weight management interventions. For example, there is increasing evidence for the potential of virtual and augmented reality in changing health behaviors [104] and preliminary evidence for improving children’s health outcomes [105]. With the increasing accessibility and popularity of these types of media, this medium may be ripe for translation of treatment. Similarly, interventions that are informed by machine learning algorithms that tailor aspects of the intervention to an individual based on the variability of measured factors of the individual over time may prove to be important next steps or useful tools for future interventions [106]. Only one article in this review used a computerized algorithm to inform treatment decisions [66], although a recent review suggests that computer decision support tools may be useful in the management of pediatric obesity [107].

Strengths and limitations

The rigorous methodology following PRISMA guidelines and the collaboration with a medical librarian to inform the search strategy are strengths of this study. The trials in this review all employed a randomized controlled design, which allowed for examination of intervention efficacy on weight outcomes across the studies. Included studies were of moderate quality overall. We were also able to synthesize studies using subgroup analyses examining type of technology, delivery population, comparator condition, and technology use (i.e., whether technology is useful as an adjunct to more traditional treatment or whether interventions delivered solely through technology are superior), allowing for a more nuanced understanding of the various mHealth/eHealth intervention designs. Research should further explore these subgroups.

Technology use in weight management interventions can greatly increase the scalability of treatment, both in terms of increased reach of interventions and reduced cost of delivery. Only evaluating the effect of an intervention on weight outcomes may not adequately convey the value of technology-assisted solutions in terms of their increased scalability as well as their effect on other health-related outcomes [108]. Indeed, previous research has shown that technology-based interventions are highly feasible and acceptable among pediatric populations and their families [6] and significantly impact weight-related health behaviors, self-monitoring behavior, and psychosocial functioning [5, 6, 12]. An assessment of the psychological and behavioral impact of technology-based interventions was beyond the scope of this review; however, future evaluations of treatment efficacy should consider these important outcomes. Cost-benefit analyses would also enable greater understanding of the utility of technology-based solutions in pediatric weight management.

Due to the relatively low number of studies that reported long-term outcomes, we were unable to examine moderators of the long-term efficacy of technology-assisted interventions for pediatric weight management. Heterogeneity in intervention content, dose of treatment, and study design including comparator type prevented conclusions regarding the efficacy of these components.

As the COVID-19 pandemic catalyzes health care systems and ongoing interventions to rapidly shift from in-person appointments to telemedicine and use of digital tools [109], evaluation of the non-inferiority of digital solutions and identification of specific disadvantages for one modality versus another may be particularly important inquiries. Moreover, results should be interpreted with a lens toward scalability, cost-benefits, and population impact, as small effects may still provide substantial population-level impact with less use of resources compared to traditional in-person treatment. An average weight loss of 0.07 BMIz units (compared to 0.04 BMIz units in the comparator arms) was observed among the 20 treatment RCTs in this review that measured BMIz as an outcome, which may not meet clinically significant thresholds, yet may still offer lifetime medical cost savings [110] and temper the projected impact of the COVID-19 pandemic on child weight gain [111]. The ubiquity of technology provides a unique opportunity to increase access to care in ways that may be less invasive, burdensome, or costly; further, technological solutions can reach populations that may not otherwise have had access to necessary evidence-based treatment.

Conclusion

This review suggests that mHealth/eHealth interventions for pediatric obesity are viable solutions that may be effective at promoting short- and long-term decreases in adiposity outcomes, but evidence is inconclusive regarding the efficacy for prevention of pediatric overweight or obesity. Research should utilize novel study designs [97] and harness technology in innovative ways to address challenges in pediatric weight management interventions, such as adherence, engagement, attrition, and long-term efficacy. More research is needed to determine the comparative effectiveness of technology-based interventions to efficacious gold-standard interventions and elucidate the potential for mHealth/eHealth to increase scalability and reduce costs while also providing significant public health impact.

References

World Health Organization. Obesity and Overweight Fact Sheet. 2020. https://www.who.int/en/news-room/fact-sheets/detail/obesity-and-overweight.

Altman M, Wilfley DE. Evidence update on the treatment of overweight and obesity in children and adolescents. J Clin Child Adolesc Psychol. 2015;44:521–37.

Wilfley DE, Staiano AE, Altman M, Lindros J, Lima A, Hassink SG, et al. Improving access and systems of care for evidence-based childhood obesity treatment: conference key findings and next steps. Obesity (Silver Spring). 2017;25:16–29.

Wang Y, Lim H. The global childhood obesity epidemic and the association between socio-economic status and childhood obesity. Int Rev Psychiatry. 2012;24:176–88.

Darling KE, Sato AF. Systematic review and meta-analysis examining the effectiveness of mobile health technologies in using self-monitoring for pediatric weight management. Childhood Obesity. 2017;13:347–55.

Turner T, Spruijt-Metz D, Wen CKF, Hingle MD. Prevention and treatment of pediatric obesity using mobile and wireless technologies: a systematic review. Pediatr Obes. 2015;10:403–9.

Bradley LE, Smith-Mason CE, Corsica JA, Kelly MC, Hood MM. Remotely delivered interventions for obesity treatment. Curr Obes Rep. 2019;8:354–62.

Hammersley ML, Jones RA, Okely AD. Parent-focused childhood and adolescent overweight and obesity eHealth Interventions: a systematic review and meta-analysis. J Med Internet Res. 2016;18:e203.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6:e1000097.

Bramer WM, Giustini D, de Jonge GB, Holland L, Bekhuis T. De-duplication of database search results for systematic reviews in EndNote. J Med Library Assoc. 2016;104:240–3.

Chaplais E, Naughton G, Thivel D, Courteix D, Greene D. Smartphone interventions for weight treatment and behavioral change in pediatric obesity: a systematic review. Telemed e-Health. 2015;21:822–30.

Chen J-L, Wilkosz ME. Efficacy of technology-based interventions for obesity prevention in adolescents: a systematic review. Adolesc Health Med Ther. 2014;5:159–70.

Lee J, Piao M, Byun A, Kim J. A systematic review and meta-analysis of intervention for pediatric obesity using mobile technology. Stud Health Technol Inform. 2016;225:491–4.

Son Y-J, Lee Y, Lee H-J. Effectiveness of mobile phone-based interventions for improving health outcomes in patients with chronic heart failure: a systematic review and meta-analysis. Int J Environ Res Public Health. 2020;17:1749.

Wali S, Hussain-Shamsy N, Ross H, Cafazzo J. Investigating the use of mobile health interventions in vulnerable populations for cardiovascular disease management: scoping review. JMIR mHealth uHealth. 2019;7:e14275.

Higgins JP, Green S, eds. Cochrane handbook for systematic reviews of interventions. Version 5.1.0 ed. www.handbook.cochrane.org. 2011.

Higgins JP, Savovic J, Page MJ, Sterne JA, Development Group for RoB 2.0. Revised Cochrane risk of bias tool for randomized trials (RoB 2.0). https://www.bristol.ac.uk/media-library/sites/social-community-medicine/images/centres/cresyda/RoB2-0_indiv_main_guidance.pdf. 2016.

Baranowski T, Baranowski J, Chen TA, Buday R, Beltran A, Dadabhoy H, et al. Videogames that encourage healthy behavior did not alter fasting insulin or other diabetes risks in children: randomized clinical trial. Games Health J. 2019;8:257–64.

Jensen CD, Duraccio KM, Barnett KA, Fortuna C, Woolford SJ, Giraud-Carrier CG. Feasibility, acceptability, and preliminary effectiveness of an adaptive text messaging intervention for adolescent weight control in primary care. Clin Pract Pediatric Psychol. 2019;7:57–67.

van Grieken A, Vlasblom E, Wang L, Beltman M, Boere-Boonekamp MM, L’Hoir MP, et al. Personalized web-based advice in combination with well-child visits to prevent overweight in young children: cluster randomized controlled trial. J Med Internet Res. 2017;19:e268.

Fonseca H, Prioste A, Sousa P, Gaspar P, Machado MC. Effectiveness analysis of an internet-based intervention for overweight adolescents: Next steps for researchers and clinicians. BMC Obesity. 2016;3:15.

Bohlin A, Hagman E, Klaesson S, Danielsson P. Childhood obesity treatment: telephone coaching is as good as usual care in maintaining weight loss – a randomized controlled trial. Clinical Obesity. 2017;7:199–205.

Armstrong S, Mendelsohn A, Bennett G, Taveras EM, Kimberg A, Kemper AR. Texting motivational interviewing: a randomized controlled trial of motivational interviewing text messages designed to augment childhood obesity treatment. Childhood Obesity. 2018;14:4–10.

Abraham AA, Chow WC, So HK, Yip BHK, Li AM, Kumta SM, et al. Lifestyle intervention using an internet-based curriculum with cell phone reminders for obese Chinese teens: A randomized controlled study. PLoS ONE. 2015;10:e0125673.

Wan X, Wang W, Liu J, Tong T. Estimating the sample mean and standard deviation from the sample size, median, range and/or interquartile range. BMC Med Res Methodol. 2014;14:135.

Hedges L, Olkin I. Statistical methods for meta-analysis. Orlando: Academic Press Inc; 2014.

Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Hillsdale: Lawrence Erlbaum; 1988.

Lipsey MW, Wilson DB. Practical meta-analysis. Thousand Oaks, CA, US: Sage Publications, Inc; 2001. ix, 247 p.

Higgins JP, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327:557–60.

DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7:177–88.

Jackson D. Confidence intervals for the between-study variance in random effects meta-analysis using generalised Cochran heterogeneity statistics. Res Synth Methods. 2013;4:220–9.

Higgins JPT. Commentary: heterogeneity in meta-analysis should be expected and appropriately quantified. Int J Epidemiol. 2008;37:1158–60.

Gutiérrez-Martínez L, Martínez RG, González SA, Bolívar MA, Estupiñan OV, Sarmiento OL. Effects of a strategy for the promotion of physical activity in students from Bogotá. Rev Saude Publica. 2018;52:79.

Haines J, Douglas S, Mirotta JA, O’Kane C, Breau R, Walton K, et al. Guelph Family Health Study: pilot study of a home-based obesity prevention intervention. Can J Public Health = Revue canadienne de sante publique. 2018;109:549–60.

R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2018.

Balduzzi S, Rücker G, Schwarzer G. How to perform a meta-analysis with R: a practical tutorial. Evid Based Ment Health. 2019;22:153–60.

Schild AHE, Voracek M. Finding your way out of the forest without a trail of bread crumbs: development and evaluation of two novel displays of forest plots. Res Synth Methods. 2015;6:74–86.

Ahmad N, Shariff ZM, Mukhtar F, Lye MS. Family-based intervention using face-to-face sessions and social media to improve Malay primary school children’s adiposity: a randomized controlled field trial of the Malaysian REDUCE programme. Nutr J. 2018;17:74.

Bagherniya M, Darani FM, Sharma M, Maracy MR, Birgani RA, Ranjbar G, et al. Assessment of the efficacy of physical activity level and lifestyle behavior interventions applying social cognitive theory for overweight and obese girl adolescents. J Res Health Sci. 2018;18:e00409.

Banos RM, Oliver E, Navarro J, Vara MD, Cebolla A, Lurbe E, et al. Efficacy of a cognitive and behavioral treatment for childhood obesity supported by the ETIOBE web platform. Psychol Health Med. 2019;24:703–13.

Bruñó A, Escobar P, Cebolla A, Álvarez-Pitti J, Guixeres J, Lurbe E, et al. Home-exercise childhood obesity intervention: a randomized clinical trial comparing print versus web-based (move it) platforms. J Pediatr Nurs. 2018;42:e79–e84.

Chen JL, Guedes CM, Cooper BA, Lung AE. Short-term efficacy of an innovative mobile phone technology-based intervention for weight management for overweight and obese adolescents: pilot study. Interact J Med Res. 2017;6:e12.

Christison AL, Evans TA, Bleess BB, Wang H, Aldag JC, Binns HJ. Exergaming for health: a randomized study of community-based exergaming curriculum in pediatric weight management. Games Health J. 2016;5:413–21.

Coknaz D, Mirzeoglu AD, Atasoy HI, Alkoy S, Coknaz H, Goral K. A digital movement in the world of inactive children: favourable outcomes of playing active video games in a pilot randomized trial. Eur J Pediatr. 2019;178:1567–76.

Currie J, Collier D, Raedeke TD, Lutes LD, Kemble CD, Dubose KD. The effects of a low-dose physical activity intervention on physical activity and body mass index in severely obese adolescents. Int J Adolesc Med Health. 2018;30: /j/ijamh.2018.30.issue-6/ijamh-2016-0121/ijamh-2016-0121.xml.

Da Silva KBB, Ortelan N, Murta SG, Sartori I, Couto RD, Fiaccone RL, et al. Evaluation of the computer-based intervention program staying fit Brazil to promote healthy eating habits: the results from a school cluster-randomized controlled trial. Int J Environ Res Public Health. 2019;16:1674.

Davis AM, Sampilo M, Gallagher KS, Dean K, Saroja MB, Yu QHJ, et al. Treating rural paediatric obesity through telemedicine vs. telephone: outcomes from a cluster randomized controlled trial. J Telemed Telecare. 2016;22:86–95.

Delisle Nyström C, Sandin S, Henriksson P, Henriksson H, Maddison R, Löf M. A 12-month follow-up of a mobile-based (mHealth) obesity prevention intervention in pre-school children: the MINISTOP randomized controlled trial. BMC Public Health. 2018;18:658.

Delisle Nyström C, Sandin S, Henriksson P, Henriksson H, Trolle-Lagerros Y, Larsson C, et al. Mobile-based intervention intended to stop obesity in preschool-aged children: The MINISTOP randomized controlled trial. Am J Clin Nutr. 2017;105:1327–35.

Faith MS, Diewald LK, Crabbe S, Burgess B, Berkowitz RI. Reduced eating pace (RePace) behavioral intervention for children prone to or with obesity: does the turtle win the race? Obesity. 2019;27:121–9.

Fleischman A, Hourigan SE, Lyon HN, Landry MG, Reynolds J, Steltz SK, et al. Creating an integrated care model for childhood obesity: a randomized pilot study utilizing telehealth in a community primary care setting. Clinical Obesity. 2016;6:380–8.

Foley L, Jiang Y, Ni Mhurchu C, Jull A, Prapavessis H, Rodgers A, et al. The effect of active video games by ethnicity, sex and fitness: Subgroup analysis from a randomised controlled trial. Int J Behav Nutr Phys Act. 2014;11:46.

Fulkerson JA, Friend S, Flattum C, Horning M, Draxten M, Neumark-Sztainer D, et al. Promoting healthful family meals to prevent obesity: HOME Plus, a randomized controlled trial. Int J Behav Nutr Phys Act. 2015;12:154.

Gao Z, Lee JE, Zeng N, Pope ZC, Zhang Y, Li X. Home-based exergaming on preschoolers’ energy expenditure, cardiovascular fitness, body mass index and cognitive flexibility: a randomized controlled trial. J Clin Med. 2019;8:1745.

Garza C, Martinez DA, Yoon J, Nickerson BS, Park KS. Effects of telephone aftercare intervention for obese Hispanic children on body fat percentage, physical fitness, and blood lipid profiles. Int J Environ Res Public Health. 2019;16:5133.

Gerards SMPL, Dagnelie PC, Gubbels JS, Van Buuren S, Hamers FJM, Jansen MWJ, et al. The effectiveness of lifestyle triple P in the Netherlands: a randomized controlled trial. PLoS ONE. 2015;10:e0122240.

Hammersley ML, Okely AD, Batterham MJ, Jones RA. An internet-based childhood obesity prevention program (Time2bHealthy) for parents of preschool-aged children: randomized controlled trial. J Med Internet Res. 2019;21:e11964.

Hull PC, Buchowski M, Canedo JR, Beech BM, Du L, Koyama T, et al. Childhood obesity prevention cluster randomized trial for Hispanic families: outcomes of the healthy families study. Pediatr Obes. 2018;13:686–96.

Kennedy SG, Smith JJ, Morgan PJ, Peralta LR, Hilland TA, Eather N, et al. Implementing resistance training in secondary schools: a cluster randomized controlled trial. Med Sci Sports Exerc. 2018;50:62–72.

Kulendran M, King D, Schmidtke KA, Curtis C, Gately P, Darzi A, et al. The use of commitment techniques to support weight loss maintenance in obese adolescents. Psychol Health. 2016;31:1332–41.

Love-Osborne K, Fortune R, Sheeder J, Federico S, Haemer MA. School-based health center-based treatment for obese adolescents: feasibility and body mass index effects. Childhood Obesity. 2014;10:424–31.

Lubans DR, Smith JJ, Plotnikoff RC, Dally KA, Okely AD, Salmon J, et al. Assessing the sustained impact of a school-based obesity prevention program for adolescent boys: the ATLAS cluster randomized controlled trial. Int J Behav Nutr Phys Act. 2016;13:92.

Maddison R, Marsh S, Foley L, Epstein LH, Olds T, Dewes O, et al. Screen-time weight-loss intervention targeting children at home (SWITCH): a randomized controlled trial. Int J Behav Nutr Phys Act. 2014;11:111.

Mameli C, Brunetti D, Colombo V, Bedogni G, Schneider L, Penagini F, et al. Combined use of a wristband and a smartphone to reduce body weight in obese children: randomized controlled trial. Pediatr Obes. 2018;13:81–7.

Markert J, Herget S, Petroff D, Gausche R, Grimm A, Kiess W, et al. Telephone-based adiposity prevention for families with overweight children (T.A.F.F.-Study): one year outcome of a randomized, controlled trial. Int J Environ Res Public Health. 2014;11:10327–44.

Moschonis G, Michalopoulou M, Tsoutsoulopoulou K, Vlachopapadopoulou E, Michalacos S, Charmandari E, et al. Assessment of the effectiveness of a computerised decision-support tool for health professionals for the prevention and treatment of childhood obesity. Results from a randomised controlled trial. Nutrients. 2019;11:706.

Nawi AM, Jamaludin FIC. Effect of internet-based intervention on obesity among adolescents in Kuala Lumpur: a school-based cluster randomised trial. Malays J Med Sci. 2015;22:47–56.

Nollen NL, Mayo MS, Carlson SE, Rapoff MA, Goggin KJ, Ellerbeck EF. Mobile technology for obesity prevention: a randomized pilot study in racial- and ethnic-minority girls. Am J Prev Med. 2014;46:404–8.

Norman G, Huang J, Davila EP, Kolodziejczyk JK, Carlson J, Covin JR, et al. Outcomes of a 1-year randomized controlled trial to evaluate a behavioral ‘stepped-down’ weight loss intervention for adolescent patients with obesity. Pediatr Obes. 2016;11:18–25.

Pfeiffer KA, Robbins LB, Ling J, Sharma DB, Dalimonte-Merckling DM, Voskuil VR, et al. Effects of the Girls on the Move randomized trial on adiposity and aerobic performance (secondary outcomes) in low-income adolescent girls. Pediatr Obes. 2019;14:e12559.

Rerksuppaphol L, Rerksuppaphol S. Internet based obesity prevention program for Thai school children-a randomized control trial. J Clin Diagn Res. 2017;11:SC07–11.

Rifas-Shiman SL, Taveras EM, Gortmaker SL, Hohman KH, Horan CM, Kleinman KP, et al. Two-year follow-up of a primary care-based intervention to prevent and manage childhood obesity: the high five for kids study. Pediatr Obes. 2017;12:e24–e7.

Sherwood NE, JaKa MM, Crain AL, Martinson BC, Hayes MG, Anderson JD. Pediatric primary care-based obesity prevention for parents of preschool children: a pilot study. Childhood Obesity. 2015;11:674–82.

Sherwood NE, Levy RL, Seburg EM, Crain AL, Langer SL, JaKa MM, et al. The healthy homes/healthy kids 5-10 obesity prevention trial: 12 and 24-month outcomes. Pediatr Obes. 2019;14:e12523.

Simons M, Brug J, Chinapaw MJM, De Boer M, Seidell J, De Vet E. Replacing non-active video gaming by active video gaming to prevent excessive weight gain in adolescents. PLoS ONE. 2015;10:e0126023.

Smith JJ, Morgan PJ, Plotnikoff RC, Dally KA, Salmon J, Okely AD, et al. Smart-phone obesity prevention trial for adolescent boys in low-income communities: The ATLAS RCT. Pediatrics. 2014;134:e723–e31.

Staiano AE, Beyl RA, Guan W, Hendrick CA, Hsia DS, Newton RL. Home-based exergaming among children with overweight and obesity: a randomized clinical trial. Pediatr Obes. 2018;13:724–33.

Staiano AE, Marker AM, Beyl RA, Hsia DS, Katzmarzyk PT, Newton RL. A randomized controlled trial of dance exergaming for exercise training in overweight and obese adolescent girls. Pediatr Obes. 2017;12:120–8.

Sze YY, Daniel TO, Kilanowski CK, Collins RL, Epstein LH. Web-based and mobile delivery of an episodic future thinking intervention for overweight and obese families: a feasibility study. JMIR mHealth uHealth. 2015;3:e97.

Taveras EM, Marshall R, Kleinman KP, Gillman MW, Hacker K, Horan CM, et al. Comparative effectiveness of childhood obesity interventions in pediatric primary care: a cluster-randomized clinical trial. JAMA Pediatrics. 2015;169:535–42.

Taveras EM, Marshall R, Sharifi M, Avalon E, Fiechtner L, Horan C, et al. Comparative effectiveness of clinical-community childhood obesity interventions: a randomized clinical trial. JAMA Pediatrics. 2017;171:e171325.

Trost SG, Sundal D, Foster GD, Lent MR, Vojta D. Effects of a pediatric weight management program with and without active video games: a randomized trial. JAMA Pediatrics. 2014;168:407–13.

Wald ER, Ewing LJ, Moyer SCL, Eickhoff JC. An interactive web-based intervention to achieve healthy weight in young children. Clin Pediatr (Phila). 2018;57:547–57.

Egger M, Smith GD, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997;315:629.

Dechartres A, Trinquart L, Boutron I, Ravaud P. Influence of trial sample size on treatment effect estimates: meta-epidemiological study. BMJ. 2013;346:f2304.

Fedele DA, Cushing CC, Fritz A, Amaro CM, Ortega A. Mobile health interventions for improving health outcomes in youth: a meta-analysis. JAMA Pediatrics. 2017;171:461–9.

Wickham CA, Carbone ET. Who’s calling for weight loss? A systematic review of mobile phone weight loss programs for adolescents. Nutr Rev. 2015;73:386–98.

Quelly SB, Norris AE, DiPietro JL. Impact of mobile apps to combat obesity in children and adolescents: A systematic literature review. J Spec Pediatr Nurs. 2016;21:5–17.

Chai LK, Collins C, May C, Brain K, Wong See D, Burrows T. Effectiveness of family-based weight management interventions for children with overweight and obesity: an umbrella review. JBI Database Syst Rev Implement Rep. 2019;17:1341–427.

Berge JM, Everts JC. Family-based interventions targeting childhood obesity: a meta-analysis. Child Obes. 2011;7:110–21.

Wilfley DE, Tibbs TL, Van Buren DJ, Reach KP, Walker MS, Epstein LH. Lifestyle interventions in the treatment of childhood overweight: a meta-analytic review of randomized controlled trials. Health Psychol. 2007;26:521–32.

Schippers M, Adam PCG, Smolenski DJ, Wong HTH, de Wit JBF. A meta-analysis of overall effects of weight loss interventions delivered via mobile phones and effect size differences according to delivery mode, personal contact, and intervention intensity and duration. Obes Rev. 2017;18:450–9.

Teixeira PJ, Going SB, Sardinha LB, Lohman TG. A review of psychosocial pre-treatment predictors of weight control. Obes Rev. 2005;6:43–65.

Donkin L, Christensen H, Naismith SL, Neal B, Hickie IB, Glozier N. A systematic review of the impact of adherence on the effectiveness of e-therapies. J Med Internet Res. 2011;13:e52.

Boutelle KN, Rhee KE, Liang J, Braden A, Douglas J, Strong D, et al. Effect of attendance of the child on body weight, energy intake, and physical activity in childhood obesity treatment: a randomized clinical trial. JAMA Pediatrics. 2017;171:622–8.

Loveman E, Al-Khudairy L, Johnson RE, Robertson W, Colquitt JL, Mead EL, et al. Parent-only interventions for childhood overweight or obesity in children aged 5 to 11 years. Cochrane Database Syst Rev. 2015;12:CD012008.

Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): new methods for more potent eHealth interventions. Am J Prev Med. 2007;32:S112–8.

Patrick K, Calfas KJ, Norman GJ, Rosenberg D, Zabinski MF, Sallis JF, et al. Outcomes of a 12-month web-based intervention for overweight and obese men. Ann Behav Med. 2011;42:391–401.

Shapiro JR, Koro T, Doran N, Thompson S, Sallis JF, Calfas K, et al. Text4Diet: a randomized controlled study using text messaging for weight loss behaviors. Prev Med. 2012;55:412–7.

Newman MG, Szkodny LE, Llera SJ, Przeworski A. A review of technology-assisted self-help and minimal contact therapies for anxiety and depression: is human contact necessary for therapeutic efficacy? Clin Psychol Rev. 2011;31:89–103.

Walton GM, Yeager DS. Seed and soil: psychological affordances in contexts help to explain where wise interventions succeed or fail. Curr Dir Psychol Sci. 2020;29:219–26.

Almirall D, Nahum-Shani I, Sherwood NE, Murphy SA. Introduction to SMART designs for the development of adaptive interventions: with application to weight loss research. Transl Behav Med. 2014;4:260–74.

Kelleher E, Davoren MP, Harrington JM, Shiely F, Perry IJ, McHugh SM. Barriers and facilitators to initial and continued attendance at community-based lifestyle programmes among families of overweight and obese children: a systematic review. Obes Rev. 2017;18:183–94.

Wiederhold BK, Riva G, Gutierrez-Maldonado J. Virtual reality in the assessment and treatment of weight-related disorders. Cyberpsychology Behav Soc Netw. 2016;19:67–73.

Kiefer AW, Pincus D, Richardson MJ, Myer GD. Virtual reality as a training tool to treat physical inactivity in children. Front Public Health. 2017;5:349.