Abstract

Background/Objectives

Because no validated tool exists to assess nutrition knowledge regarding weight management, we developed and tested the Weight Management Nutrition Knowledge Questionnaire (WMNKQ).

Subjects/Methods

The questionnaire assesses nutrition knowledge in these categories: energy density of food, portion size/serving size, alcohol and sugar sweetened beverages, how food variety affects food intake, and reliable nutrition information sources. In total, 60 questions were reviewed by 6 experts for face validity, and quantitative analysis was used to assess item difficulty, item discrimination, internal consistency, inter-item correlation, test-retest reliability, construct validity, criterion validity, and convergent validity.

Results

The final WMNKQ contained 43 items. Experts removed 3 of the original 60 questions and modified 41. Eighteen items did not meet criteria for item difficulty, item discrimination, and/or inter-item correlation; 4 of these were retained. The WMNKQ met criteria for internal consistency (Cronbach's alpha = 0.88), test-retest reliability (ρ = 0.90, P < 0.0001), construct validity (known-groups comparison: dietitians scored 16% higher than information technology workers, P < 0.0001), and criterion validity (pre- to post-intervention improvement in knowledge scores = 11.2%, 95% CI 9.8–12.5, P < 0.0001). Participants younger than 55 years scored significantly better than those aged 55 or older (convergent validity).

Conclusions

The WMNKQ measures how well nutrition principles of weight management are understood.


Introduction

The high prevalence [1] and associated medical costs of obesity in the United States provide an incentive to optimize obesity treatment, which is most often based on comprehensive lifestyle interventions [2]. Factors contributing to fat gain include excess energy intake and low physical activity [3]. Some properties of food and food consumption patterns clearly contribute to excess energy intake. These include consumption of high energy dense foods [4, 5], large portion sizes [6], a greater variety of foods [7, 8] high in palatability [9], and sugar sweetened beverages [10]. Successful comprehensive lifestyle weight management programs help clients learn these nutrition concepts, teach cognitive behavior principles, and support increased physical activity [2]. Most programs rely on the amount and duration of weight loss as the primary basis for evaluating the success of all three components. There have been no systematic attempts to determine whether clients have understood the nutrition knowledge components of these programs sufficiently well. When knowledge has been assessed, lifestyle programs have used a general nutrition knowledge tool [11].

Lack of success in losing weight may reflect inadequate teaching of important nutrition principles, failure to implement behavior change skills or both. We hypothesized that teaching nutrition principles would be more useful than asking clients to memorize a series of facts, because those who understand major concepts can apply them to novel situations not covered by a series of examples. A questionnaire that addresses knowledge related to the key dietary principles of successful weight management will allow programs to identify whether the nutrition education component has successfully transmitted fundamental concepts.

Understanding nutrition principles does not necessarily guarantee behavioral changes that result in successful long-term weight loss. However, the knowledge, attitude, and behavior model of health education [12, 13] implies that, although attitude is an important factor, it must be accompanied by a gain in knowledge to result in successful behavior change. With the goal of evaluating the nutrition education component of our lifestyle intervention for obesity [14], we conducted a thorough literature review that included a PubMed search for the key words “nutrition” or “nutritional” and “knowledge” and “questionnaire” or “survey” in the title. We found no validated instruments that tested dietary principles of weight management; instead, all published validated instruments tested general nutrition knowledge.

To address this gap, we developed a questionnaire that measures nutrition knowledge as it relates to weight management.

Methods

The questionnaire will be referred to as the Weight Management Nutrition Knowledge Questionnaire (WMNKQ). We included questions regarding both the types of information that people should understand and potential sources of information to avoid, because many of our patients rely on media that promote products with no proven weight loss benefit [15]. As part of the WMNKQ, we included two questions regarding sources of reliable nutrition information to allow future evaluations of whether knowledge of this topic predicts long-term weight management success. The aim of this report is to describe the development and validation steps for the WMNKQ. All protocols used to test the WMNKQ were reviewed and approved by the Mayo Clinic Institutional Review Board (IRB).

Developing the questionnaire item pool

A pool of ~200 questions was developed by gathering items from published nutrition questionnaires and writing new items (Fig. 1). The questions were designed so that as a whole they would assess the understanding of concepts, as opposed to a series of nutrition facts. We focused on areas of nutrition knowledge that are most specific to weight management – energy density of food, how portion size or serving size affects food intake, alcohol-containing and sugar sweetened beverages, the influence of the variety of available foods on food intake; we also included questions on reliable nutrition information sources.

A total of 18 items did not fulfill criteria for item difficulty, item discrimination, and/or inter-item correlation. Four of these items were retained. Although item 34 did not fulfill criteria for item difficulty and item discrimination, it was retained because it was one of only two items addressing the energy content of alcoholic beverages.

Questions were gathered from different sources, including the Parmenter and Wardle questionnaire [16], NHLBI [17], CDC [18], the Mayo Clinic Patient Education Center, and USDA [19]. Springer Nature granted permission to use one item modified from the Parmenter and Wardle questionnaire [16]. We solicited questions and critiques from a registered dietitian and five scientists outside Mayo Clinic with recognized expertise in this field. The WMNKQ was written at a 9th grade reading level, as assessed with the Flesch–Kincaid readability tests [20], so that the questions would be clear, unambiguous, non-debatable, and simply worded. We included questions in the five subtopic areas of nutrition knowledge listed above. By eliminating questions with similar content and those that were too complex or arcane, we reduced the item pool to 60 questions for further assessment.
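The readability target relies on the Flesch–Kincaid grade level, which combines average sentence length with average syllables per word. As a minimal sketch, assuming word, sentence, and syllable counts have already been obtained (syllable counting needs a dictionary or heuristic and is not shown), the standard grade-level formula can be computed as below; the function name and the counts are illustrative and are not taken from the WMNKQ.

```python
def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid grade level from pre-computed text counts (hypothetical helper)."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    # 0.39 x (words per sentence) + 11.8 x (syllables per word) - 15.59
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


# Hypothetical counts for a block of questionnaire text.
grade = flesch_kincaid_grade(words=100, sentences=5, syllables=150)
print(round(grade, 2))  # 9.91
```

A result close to 9 would correspond to the 9th grade target; shorter sentences and fewer multi-syllable words lower the grade.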

Face validity

Face validity refers to how relevant the items appear to be to the respondents [16]. These 60 questions were sent for assessment of face validity to six experts, who were PhD or MD scientists working in the field of nutrition and registered dietitians. Experts were asked whether the topics covered were comprehensive within the domain of weight management specific nutrition knowledge and whether the questions were unequivocal. They provided feedback on modification, elimination, and/or addition of questions. After these recommendations were incorporated, the draft consisted of 57 items.

Quantitative assessment

Two hundred eighty-six adults residing in Olmsted County, MN (n = 187) and Duval County, FL (n = 99) completed the original 57-item questionnaire in person. Of the Olmsted County participants, 136 were recruited through Mayo Clinic campus advertisements and 51 were recruited from a church in Rochester, Minnesota. The 99 participants from Duval County were recruited at a health education event on the Edward Waters College campus. All participants were ≥ 18 years old and able to read and speak English proficiently. All demographic data, including BMI, were self-reported.

Validity Testing

Construct validity is the extent to which an instrument measures what it is intended to measure [16]. Construct validity was assessed with the known-groups method [21] by administering the WMNKQ to 18 registered dietitians and 25 information technology (IT) specialists, who were recruited through flyers and website advertisements. These two groups have similar amounts of post-secondary education, and we hypothesized that they would differ in nutrition knowledge because of the nature of their careers. Independent samples t-tests were used to assess the difference in scores between groups, both for the overall questionnaire and for each section. Analysis of covariance was performed to control for demographic differences between the dietitians and IT specialists. Test-retest reliability was assessed by giving the questionnaire to the dietitians and IT specialists on two separate occasions 2 weeks apart and then assessing the correlation between the scores obtained on the two occasions.
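The known-groups comparison rests on an independent samples t-test. The sketch below shows Student's pooled-variance version, assuming equal group variances (the text does not state which variant was used), applied to hypothetical scores; `scipy.stats.ttest_ind` computes the same statistic together with a p-value. The ANCOVA adjustment for demographic differences is not reproduced here, and the function name is illustrative.

```python
import math
from statistics import mean, variance


def pooled_t_test(a, b):
    """Student's two-sample t statistic (pooled variance) and its degrees of freedom."""
    n_a, n_b = len(a), len(b)
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(a) - mean(b)) / se, df


# Hypothetical percentage-correct scores, not study data.
dietitians = [90, 92, 94]
it_specialists = [70, 76, 82]
t, df = pooled_t_test(dietitians, it_specialists)
print(round(t, 3), df)  # 4.382 4
```

The p-value then comes from the t distribution with `df` degrees of freedom; with unequal variances, Welch's test is the usual alternative.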

In the psychometric analysis of questionnaires, criterion validity testing assesses whether scores on an instrument correlate with an expected outcome [22]. We hypothesized that an intervention designed to increase weight management nutrition knowledge would increase scores on our questionnaire; this is a well-recognized approach to testing criterion validity. Criterion validity was therefore assessed by providing a nutrition knowledge intervention, consisting of three one-hour classes, to 119 participants from the Olmsted County cohort as part of the quantitative assessment component of this study. The class materials were adapted from material used in the Look AHEAD trial [23]; some content was created from materials developed by registered dietitians, a physician trained in nutrition, and a PhD student in clinical and translational science. The sessions were delivered over a 1–2 week period to twelve groups of approximately 10 participants each. They were designed to address the general concepts we identified as important for weight management without using examples that appear in the questionnaire. The didactic content was delivered with PowerPoint presentations, hands-on learning activities with food models, and 15 min for discussion, questions, and answers. The WMNKQ was administered pre- and post-intervention. A $45 gift card was given as an incentive for attending all three sessions and completing both the pre- and post-intervention questionnaires. Three interventionists (two registered dietitians experienced in weight management and a PhD student) taught the classes using identical materials; each taught four groups.
The improvement in scores was compared across all participants and between the groups taught by the different interventionists, to determine whether knowledge gain depended on the interventionist to whom participants were assigned. Pre- to post-intervention changes in scores were compared for the questionnaire overall and for each section to assess criterion validity.
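The pre- to post-intervention comparison is a paired analysis of each participant's change in score. The sketch below computes the mean change, the paired t statistic, and an approximate confidence interval from hypothetical data; the function name is illustrative, and it deliberately substitutes a normal quantile for the t quantile (df = n - 1), an approximation that is only reasonable for larger samples such as the 119 completers.

```python
import math
from statistics import NormalDist, mean, stdev


def paired_change(pre, post, level=0.95):
    """Mean change, paired t statistic, and approximate CI for pre/post scores."""
    if len(pre) != len(post):
        raise ValueError("pre and post must be paired")
    diffs = [b - a for a, b in zip(pre, post)]  # per-participant change
    n = len(diffs)
    d_bar = mean(diffs)
    se = stdev(diffs) / math.sqrt(n)
    t = d_bar / se
    # Large-sample approximation: normal quantile in place of the t quantile.
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return d_bar, t, (d_bar - z * se, d_bar + z * se)


# Hypothetical percentage scores for four participants.
pre = [50, 60, 70, 80]
post = [60, 65, 80, 85]
change, t, ci = paired_change(pre, post)
print(change, round(t, 3))  # 7.5 5.196
```

Pairing removes between-person differences in baseline knowledge, which is why the change scores, rather than the two sets of raw scores, are tested.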

Convergent validity assesses whether expected associations exist between instrument responses and demographic variables that should differ between groups. If we observed the predicted associations of age and education with nutrition knowledge as assessed by our questionnaire, we would take this convergent validity as additional evidence for the usefulness of the WMNKQ. Convergent validity was assessed using data from 126 participants from Duval County and Olmsted County. We hypothesized that nutrition knowledge scores would be higher in more educated participants [24] and in younger than in older participants [25]. Correlations between scores and demographic variables were examined, and the associations of nutrition knowledge scores with age group and education were assessed using analysis of covariance (ANCOVA).

Scoring System

The final questionnaire, with notations for the category of each question and the correct answers, is provided in the Supplementary Information. For validation purposes, the total score was expressed as percentage correct. We also examined a weighted scoring system that may be more useful in guiding the nutrition teaching and evaluation of comprehensive lifestyle intervention programs. In this system, the energy density questions are weighted 40%, the portion size questions 40%, and the beverage questions 20%. The questions on how food variety affects food intake and on reliable nutrition information sources are scored separately.
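The weighted score can be written as a weighted sum of section percentages. This is a sketch under the assumption that each weight applies to the percentage correct within its section; the section keys and example counts are hypothetical, and the actual assignment of items to sections should be taken from the Supplementary Information.

```python
# Section weights as stated in the text; the dictionary keys are hypothetical labels.
WEIGHTS = {"energy_density": 0.40, "portion_size": 0.40, "beverages": 0.20}


def weighted_score(section_results: dict[str, tuple[int, int]]) -> float:
    """Weighted total (0-100) from (number correct, number of items) per section.

    Food-variety and information-source items are not included here because
    the text scores them separately.
    """
    total = 0.0
    for section, weight in WEIGHTS.items():
        correct, n_items = section_results[section]
        total += weight * 100 * correct / n_items  # section percentage x weight
    return total


score = weighted_score({
    "energy_density": (10, 20),  # 50% correct
    "portion_size": (8, 10),     # 80% correct
    "beverages": (2, 4),         # 50% correct
})
print(round(score, 1))  # 62.0
```

Because the weights sum to 1, a respondent who answers every weighted item correctly scores 100 regardless of how many items each section contains.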

Statistical Analysis Methods

All analyses used two-sided tests with a significance level (alpha) set a priori at 0.05. We report the relevant P values and 95% confidence intervals. Binary variables are reported as proportions, and means are accompanied by standard deviations. Statistical power analyses were performed as part of the IRB approval process, before each of these studies began, to determine the appropriate number of volunteers to recruit.

For item difficulty, Kline et al. suggested that items answered correctly by less than 20% or more than 80% of respondents are of limited value and should be discarded [26]. However, Parmenter and Wardle used a range of > 30% and < 90% for their nutrition knowledge questionnaire because they determined that their population had higher than average nutrition knowledge [16]. We adopted this wider range, flagging items answered correctly by > 90% or < 30% of participants, because the Olmsted County population has higher than expected health literacy [27], which may confer higher nutrition knowledge. For item discrimination, Kline, Streiner, and Norman [26, 28, 29] suggest that items with an item-total score correlation of less than 0.2 can be discarded; we used this as our screening value. For inter-item correlation, we calculated the correlation between scores on every pair of items in the questionnaire; pairs with r > 0.9 were flagged as potentially redundant [26]. For internal consistency reliability, Kline suggested a minimum Cronbach's alpha of 0.7 [26, 30]. We calculated Cronbach's alpha for the questionnaire overall and for each section.
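These screening rules can be applied mechanically to a matrix of scored responses (1 = correct, 0 = incorrect). The sketch below, using a small hypothetical response matrix, computes item difficulty, item-total correlation, redundant item pairs, and Cronbach's alpha with the thresholds stated above. It assumes the uncorrected item-total correlation (the item is included in the total); a corrected version would subtract the item from each total first. All function names are illustrative.

```python
import math
from statistics import mean, pvariance


def pearson(x, y):
    """Pearson correlation; returns 0.0 when either variable is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def item_analysis(responses, easy=0.90, hard=0.30, min_disc=0.20, max_r=0.90):
    """responses: one row per participant, one 0/1 entry per item."""
    items = list(zip(*responses))             # one tuple of scores per item
    totals = [sum(row) for row in responses]  # total score per participant
    k = len(items)
    difficulty = [sum(col) / len(col) for col in items]  # proportion correct
    discrimination = [pearson(col, totals) for col in items]
    flagged = [i for i in range(k)
               if difficulty[i] > easy or difficulty[i] < hard
               or discrimination[i] < min_disc]
    redundant = [(i, j) for i in range(k) for j in range(i + 1, k)
                 if pearson(items[i], items[j]) > max_r]
    # Cronbach's alpha = k/(k-1) * (1 - sum of item variances / variance of totals)
    alpha = k / (k - 1) * (1 - sum(pvariance(c) for c in items) / pvariance(totals))
    return difficulty, discrimination, flagged, redundant, alpha


# Hypothetical responses: 5 participants x 5 items.
responses = [
    [1, 1, 1, 0, 1],
    [1, 1, 0, 0, 1],
    [1, 0, 1, 1, 1],
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 1],
]
difficulty, discrimination, flagged, redundant, alpha = item_analysis(responses)
print(difficulty)       # [0.8, 0.6, 0.6, 0.4, 1.0]
print(flagged)          # [4]
print(redundant)        # []
print(round(alpha, 3))  # 0.652
```

Item 4 is flagged twice over: everyone answered it correctly, so it is too easy and, having no variance, cannot discriminate between respondents.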

For construct validity testing, we used an independent samples t-test to compare overall mean scores between dietitians and IT specialists and an analysis of covariance to adjust for demographic differences. For test-retest reliability, we used Spearman's correlation to compare results from questionnaires completed 2 weeks apart with no intervention; Kline [26] suggests a minimum ρ of 0.7 for test-retest reliability. For criterion validity, we used a paired t-test to examine changes in score from before to after the nutrition education intervention.
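Spearman's ρ is Pearson's correlation computed on ranks, with tied scores assigned their average rank. A minimal standard-library sketch follows, applied to hypothetical total scores from two administrations; `scipy.stats.spearmanr` is a library alternative, and the function names here are illustrative.

```python
import math
from statistics import mean


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    num = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    den = math.sqrt(sum((a - mx) ** 2 for a in rx) * sum((b - my) ** 2 for b in ry))
    if den == 0:
        raise ValueError("rho is undefined when all scores are tied")
    return num / den


# Hypothetical total scores (%) for five respondents, 2 weeks apart.
first = [80, 90, 70, 60, 85]
second = [86, 92, 72, 65, 80]
print(round(spearman_rho(first, second), 3))  # 0.9
```

Rank-based correlation is insensitive to uniform shifts in scores between administrations, so it reflects whether respondents keep their relative standing.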

Results

Face validity

Of the original ~200 items, 60 were retained for face validity assessment. Expert feedback was incorporated into the questionnaire, and three questions were eliminated because they were considered too ambiguous or arcane, leaving an initial draft of 57 items.

Quantitative analysis

Demographics for the Olmsted County and Duval County participants are provided in Table 1. Eighteen items were flagged for possible exclusion based on the criteria described in the Methods. Of these, four were retained because they tested important concepts that we considered necessary in the questionnaire (see below).

Item difficulty

Six items were deemed unhelpful in assessing weight management nutrition knowledge because they were answered correctly by greater than 90% of participants; all 6 were excluded.

Five items were answered correctly by less than 30% of participants; such items may be poorly worded (ambiguous) or may deal with topics that are less commonly known. Of these 5 items, 4 were excluded and 1 (number 34) was retained. Item 34 tested knowledge regarding the energy content of different alcoholic beverages. This item displayed the greatest single-question improvement (56%) in score following the nutrition education intervention (see below), indicating that the low score reflected a lack of knowledge rather than difficult wording. A total of 9 items were excluded on the basis of item difficulty.

Item discrimination

Eight items had an item-to-total score correlation of less than 0.2. Three items were excluded and four (numbers 2, 6, 28 and 34) were retained. Item 2 tested knowledge of how adding a low energy density food to a high energy density food can decrease the overall calories per serving, a concept that we deemed necessary to test; in addition, the score for this question improved by 29% following the intervention. Item 6 tested knowledge of the “buffet effect,” which describes how the variety of foods available at a meal affects food intake; the score for this question improved by 27% following the nutrition education intervention. Item 28 likewise tested the concept that a larger number of food options at a meal increases the likelihood of consuming excess calories; the score for this question improved by 40% in response to the educational intervention. Item 34 was retained as described above.

Item to item correlation

Two items had an inter-item correlation greater than 0.9; both had already been excluded for not meeting item difficulty criteria.

Internal consistency

Overall Cronbach’s alpha for the remaining 43 items was 0.88. Cronbach’s alpha for each category of questions is provided in Table 2.

Construct validity (known-groups comparison)

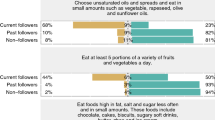

Eighteen dietitians and 25 IT workers completed the questionnaire; their demographics are provided in Table 3. The dietitians were mainly women and the IT workers mainly men. The dietitians scored an average of 92% (95% CI 90–95%) and the IT workers 76% (95% CI 72–81%), an overall difference of 16% (p < 0.0001) that remained significant after adjusting for sex and income (Table 4). The dietitians did not score significantly better on the topic of how food variety affects food intake; however, there were only two questions on this topic.

Test-retest reliability

The registered dietitians and IT workers were administered the questionnaire 14 ± 2 days apart. Spearman correlation for total score was ρ = 0.90, p < 0.0001, indicating acceptable test-retest reliability (Table 5).

Criterion validity

Of the original 286 participants, 136 enrolled in the nutrition knowledge intervention classes. Of these, 119 attended all 3 classes and completed the post-intervention questionnaire. These participants completed the 57-item questionnaire, but criterion validity was calculated using only the 43 final items. The improvement in overall scores was 11.2% (95% CI 9.8–12.5, p < 0.0001, Table 6), indicating that the questionnaire met the standards for criterion validity. Table 6 provides the differences in scores by topic. The improvement in scores did not differ between the three interventionists (P = 0.35).

Two of the interventionists had no access to the survey for at least 6 months before they began teaching the classes. The third interventionist (DM) was responsible for providing the paper surveys to the participants and thus had access to the survey. Two findings suggest that this does not invalidate the criterion validity results: there were no between-group differences, and the 79 participants taught by the first two interventionists improved their scores by 11.6% (95% CI 8.6–14.6%, P < 0.0001).

Based on the internal consistency results, it was more reasonable to score specific sets of questions together (Table 6). A weighted scoring algorithm was developed for use in future lifestyle intervention programs. The sections on trustworthy nutrition information sources and on how food variety affects energy intake were not included in the weighted total score because of their low internal consistency; the four questions in these two sections remain in the questionnaire as standalone questions.

Convergent validity

Consistent with our hypothesis, participants with undergraduate and graduate degrees scored significantly better on this questionnaire than those with a high school diploma or less (Table 7). Nutrition knowledge scores were also higher among the younger (age < 35) and middle age (35–54) participants compared to the older participants (age ≥ 55).

Discussion

We developed and tested the WMNKQ, a 43-item questionnaire that measures nutrition knowledge as it relates to weight management. We subjected our questionnaire to tests of item difficulty, item discrimination, and item-to-item correlation in order to remove unnecessary questions. Using published criteria, the WMNKQ met standards for internal consistency, construct validity (known-groups comparison), test-retest reliability, and criterion validity, and we found evidence for convergent validity. The results indicate that the WMNKQ is a useful tool for assessing nutrition knowledge relevant to weight management, which will allow investigators to determine how closely this knowledge is linked to long-term success.

Our results indicate that some energy intake concepts are not well understood, but that deficits in these knowledge areas are readily correctable. Our participants scored < 50% correct in the categories of alcohol/sugar sweetened beverages and how food variety affects energy intake. However, after attending classes to improve nutrition knowledge as it relates to weight management, scores on both sections improved significantly (Table 6). The potential quantitative effects of these concepts are not trivial. Sixty-nine percent of US adults consume at least 255 kcal/day from alcohol [31] and 49% consume at least one sugar sweetened beverage per day (average 145 kcal/day) [32], which may contribute to excess energy intake and obesity [33, 34]. With respect to the potential effects of greater food variety, previous studies have shown that volunteers offered three food choices rather than one consume ~20% more food [8]. Furthermore, consumption of a greater variety of energy dense foods is associated with overweight [35]. Thus, greater food variety is a quantitatively important contributor to excess energy intake. The WMNKQ should allow investigators to assess whether a better understanding of these two concepts is associated with greater success in weight management.

The energy density category (19 questions) [36] was deliberately given the most questions because of its quantitative importance in controlled studies [37]. A direct association between dietary energy density and weight gain has been shown [36]. We developed questions using food labels with calorie content and portion size to assess whether people can evaluate energy density information, including how fiber-rich foods relate to energy density. The second largest category (15 questions) was portion size, for which we tested understanding of serving sizes and standard portions, knowledge that is essential for achieving weight loss [38]. The ability to understand food labels [39, 40] was incorporated into several categories to determine whether knowledge deficits are related to food label understanding or to the topic itself. We included 2 questions on “reliable nutrition information sources” because, although digital nutrition information is widely available, it is often inaccurate [15]. We addressed this issue in our comprehensive lifestyle weight management program in response to participant feedback and chose to include questions that briefly assess knowledge of this topic. We hope eventually to determine whether knowing which sources of nutrition information are reliable has predictive value for successful weight management. The topics of alcohol/sugar sweetened beverages and how food variety affects energy intake [7, 41] were less amenable to non-repetitive examples, so we included fewer questions on these topics. However, the small number of questions in these categories makes some statistical analyses difficult to perform for them. That said, the analyses of the questionnaire overall support our contention that it provides a useful tool for testing nutrition knowledge specific to weight management. 
Although other nutrition knowledge questionnaires contain questions or sections related to weight management [15, 24, 42,43,44,45], our questionnaire is unique in covering the five categories described above as they pertain to weight management.

Most nutrition knowledge questionnaires [16, 25, 42,43,44,45] examine general nutrition knowledge and have undergone a series of validation tests [16, 25, 44, 45]. All 4 general nutrition knowledge questionnaires [16, 25, 42,43,44,45] underwent tests of construct validity, test-retest reliability, and internal consistency. Item difficulty analysis was performed for the GNKQ, Jones, and Feren questionnaires, but not for the GNKQ-R. Item discrimination analysis was performed for the GNKQ, GNKQ-R, and Jones questionnaires, but not for the Feren questionnaire. The GNKQ-R was also tested for criterion validity and convergent validity. None of these questionnaires underwent item-to-item correlation analysis. The WMNKQ was analyzed and modified as appropriate after testing for item difficulty, item discrimination, item-to-item correlation, and internal consistency, and it met validity criteria for test-retest reliability, construct validity, criterion validity, and convergent validity. Thus, our evaluation of the WMNKQ was more stringent than those of the available general nutrition knowledge questionnaires.

As was done with other questionnaires [16, 25, 42,43,44,45], we retained some questions that did not meet the usual statistical criteria for difficulty or item discrimination. The retained questions showed marked improvements in scores after a standardized weight management nutrition education intervention, suggesting that these knowledge domains are distinct and that deficits are correctable. The overall average improvement in score was 11%, whereas the 4 retained items that did not meet the usual criteria for inclusion demonstrated much greater improvements. If these items were difficult or ambiguously worded, a nutrition knowledge intervention would be unlikely to improve scores on them. We propose that retaining knowledge-related items that do not meet the usual criteria for difficulty or item discrimination is acceptable if criterion validity assessment provides evidence that the questions address areas of uncommon knowledge.

Improvement in scores following a nutrition knowledge intervention is important for questionnaire validation. We used several tactics to ensure that we were not teaching to the questionnaire. The presentations were developed around the topics, not the specific questions. Only one of the three interventionists was involved in question development, and the interventionists were not allowed to have the questionnaire available around the time of the classes. Had an interventionist’s specific knowledge of the questionnaire been an important factor in score improvement, the participants in that individual’s classes should have outperformed those taught by the other two interventionists. Instead, the improvement in scores was consistent across all three interventionists.

The test-retest correlations for the WMNKQ were highly statistically significant, with most sections demonstrating a Spearman correlation of ρ ≥ 0.7 (Table 5). The exception was the section on how food variety affects food intake, which contains only 2 questions. With only 2 questions, a section score can take just three values (0, 1, or 2 correct), so a high test-retest correlation would require nearly all 43 respondents to give the same answers to both questions on both occasions; a correlation of ≥ 0.70 is therefore very difficult to achieve. The rationale for including only two questions in this category is discussed above. The WMNKQ has acceptable test-retest reliability, as demonstrated by the test-retest correlation of 0.90 for the overall score.

One limitation of the study is that the sample size power calculations were designed to test the questionnaire as a whole and not each subsection. Only 45 dietitians and information technologists participated in the test-retest and known-groups validation tests, which limited statistical power for analyses of questionnaire subsections. However, we were still able to demonstrate that the questionnaire as a whole is a robust tool. Another limitation is that the Olmsted County participants were mostly white and, for the most part, more educated than the volunteers from Duval County, which could introduce bias. However, in the convergent validity analysis, nutrition knowledge was related to education level, not race. Finally, the questionnaire was designed for adults and was not tested in children or adolescents; however, most participants in lifestyle intervention programs are older than 18.

The WMNKQ has multiple potential applications. It could be used to assess the effectiveness of nutrition knowledge interventions as part of lifestyle intervention programs. The WMNKQ may also help determine whether nutrition knowledge in the areas included in the questionnaire predicts long-term weight loss success. We acknowledge that a gain in nutrition knowledge does not necessarily predict weight loss [46], because behavior change is needed to translate that knowledge into action. The WMNKQ could also help guide the allocation of public nutrition education efforts to different demographic groups, since section scores can pinpoint specific knowledge deficits and thereby guide funding. We also plan to use the questionnaire at patient intake before nutrition consultations, to focus health education efforts on closing specific knowledge gaps. In conclusion, we propose that the WMNKQ helps quantify the understanding of basic nutrition concepts related to weight management and will provide a useful tool to test whether that knowledge relates to improved outcomes.

References

Ogden CL, Carroll MD, Kit BK, Flegal KM. Prevalence of obesity in the United States, 2009–2010. NCHS Data Brief. 2012;82:1–8.

Jensen MD, Ryan DH, Apovian CM, Ard JD, Comuzzi AG, Donato KA, et al. 2013 AHA/ACC/TOS guideline for the management of overweight and obesity in adults: a report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines and The Obesity Society. Circulation. 2014;129:S102–38.

Church TS, Thomas DM, Tudor-Locke C, Katzmarzyk PT, Earnest CP, Rodarte RQ, et al. Trends over 5 decades in U.S. occupation-related physical activity and their associations with obesity. PLoS ONE. 2011;6:e19657.

Rolls BJ. The relationship between dietary energy density and energy intake. Physiol Behav. 2009;97:609–15.

Rouhani MH, Haghighatdoost F, Surkan PJ, Azadbakht L. Associations between dietary energy density and obesity: a systematic review and meta-analysis of observational studies. Nutrition. 2016;32:1037–47.

Ledikwe JH, Ello-Martin JA, Rolls BJ. Portion sizes and the obesity epidemic. J Nutr. 2005;135:905–9.

Rolls BJ, Van Duijvenvoorde PM, Rowe EA. Variety in the diet enhances intake in a meal and contributes to the development of obesity in the rat. Physiol Behav. 1983;31:21–7.

Rolls BJ, Rowe EA, Rolls ET, Kingston B, Megson A, Gunary R. Variety in a meal enhances food intake in man. Physiol Behav. 1981;26:215–21.

McCrickerd K, Forde CG. Sensory influences on food intake control: moving beyond palatability. Obes Rev. 2016;17:18–29.

Schulze MB, Manson JE, Ludwig DS, Colditz GA, Stampfer MJ, Willett WC, et al. Sugar-sweetened beverages, weight gain, and incidence of type 2 diabetes in young and middle-aged women. JAMA. 2004;292:927–34.

Barbosa LB, Vasconcelos SM, Correia LO, Ferreira RC. Nutrition knowledge assessment studies in adults: a systematic review. Cien Saude Colet. 2016;21:449–62.

Ajzen I. The theory of planned behaviour: reactions and reflections. Psychol Health. 2011;26:1113–27.

Fishbein M, Ajzen I. Belief, attitude, intention and behaviour: An introduction to theory and research, 27 ed. Addison-Wesley Pub. Co.: Reading, MA, 1975.

Mikhail DS, Jensen TB, Wade TW, Myers JF, Frank JM, Wieland M, et al. Methodology of a multispecialty outpatient Obesity Treatment Program. Contemp Clin Trials Commun. 2018;10:36–41.

Tonsaker T, Bartlett G, Trpkov C. Health information on the Internet: gold mine or minefield? Can Fam Physician. 2014;60:407–8.

Parmenter K, Wardle J. Development of a general nutrition knowledge questionnaire for adults. Eur J Clin Nutr. 1999;53:298–308.

National Heart, Lung, and Blood Institute. Choosing Foods for Your Family. 2013, May [Available from: https://www.nhlbi.nih.gov/health/educational/wecan/eat-right/choosing-foods.htm].

Centers for Disease Control and Prevention. Healthy Eating for a Healthy Weight. 2016, September [Available from: https://www.cdc.gov/healthyweight/healthy_eating/index.html].

United States Department of Agriculture. Choose MyPlate. 2018 January 31 [Available from: https://www.choosemyplate.gov/].

Flesch R. A new readability yardstick. J Appl Psychol. 1948;32:221–33.

Hofman CS, Lutomski JE, Boter H, Buurman BM, de Craen AJ, Donders R, et al. Examining the construct and known-group validity of a composite endpoint for The Older Persons and Informal Caregivers Survey Minimum Data Set (TOPICS-MDS); A large-scale data sharing initiative. PLoS ONE. 2017;12:e0173081.

Cordier R, Milbourn B, Martin R, Buchanan A, Chung D, Speyer R. A systematic review evaluating the psychometric properties of measures of social inclusion. PLoS ONE. 2017;12:e0179109.

Ryan DH, Espeland MA, Foster GD, Haffner SM, Hubbard VS, Johnson KC, et al. Look AHEAD (Action for Health in Diabetes): design and methods for a clinical trial of weight loss for the prevention of cardiovascular disease in type 2 diabetes. Control Clin Trials. 2003;24:610–28.

Furnee CA, Groot W, van den Brink HM. The health effects of education: a meta-analysis. Eur J Public Health. 2008;18:417–21.

Kliemann N, Wardle J, Johnson F, Croker H. Reliability and validity of a revised version of the General Nutrition Knowledge Questionnaire. Eur J Clin Nutr. 2016;70:1174–80.

Kline P. The handbook of psychological testing. Routledge: London; New York, 1993. vi, 627.

Fabbri M, Yost K, Finney Rutten LJ, Manemann SM, Boyd CM, Jensen D, et al. Health literacy and outcomes in patients with heart failure: a prospective community study. Mayo Clin Proc. 2018;93:9–15.

Streiner DL, Norman GR. Health measurement scales: a practical guide to their development and use, 2nd ed. Oxford University Press: Oxford; New York, 1995. viii, 231 p.

Everitt B, Skrondal A. The Cambridge dictionary of statistics, 4th ed. Cambridge University Press: Cambridge, UK; New York, 2010. ix, 468.

Cronbach LJ, Meehl PE. Construct validity in psychological tests. Psychol Bull. 1955;52:281–302.

World Health Organization. Alcohol Consumption Levels and Patterns. 2014.

Rosinger A, Herrick K, Gahche J, Park S. Sugar-sweetened beverage consumption among U.S. adults, 2011–2014. NCHS Data Brief. 2017;270:1–8.

Sayon-Orea C, Martinez-Gonzalez MA, Bes-Rastrollo M. Alcohol consumption and body weight: a systematic review. Nutr Rev. 2011;69:419–31.

Bebb HT, Houser HB, Witschi JC, Littell AS, Fuller RK. Calorie and nutrient contribution of alcoholic beverages to the usual diets of 155 adults. Am J Clin Nutr. 1971;24:1042–52.

Rolls BJ. The role of portion size, energy density, and variety in obesity and weight management. In: Wadden TA, Bray GA, editors. Handbook of Obesity Treatment. 2nd ed. New York, NY: The Guilford Press; 2018. p. 93–104.

Ledikwe JH, Blanck HM, Kettel Khan L, Serdula MK, Seymour JD, Tohill BC, et al. Dietary energy density is associated with energy intake and weight status in US adults. Am J Clin Nutr. 2006;83:1362–8.

Flood JE, Rolls BJ. Soup preloads in a variety of forms reduce meal energy intake. Appetite. 2007;49:626–34.

Rolls BJ. What is the role of portion control in weight management? Int J Obes (Lond). 2014;38(Suppl 1):S1–8.

Ollberding NJ, Wolf RL, Contento I. Food label use and its relation to dietary intake among US adults. J Am Diet Assoc. 2010;110:1233–7.

Satia JA, Galanko JA, Neuhouser ML. Food nutrition label use is associated with demographic, behavioral, and psychosocial factors and dietary intake among African Americans in North Carolina. J Am Diet Assoc. 2005;105:392–402; discussion 402–3.

Norton GN, Anderson AS, Hetherington MM. Volume and variety: relative effects on food intake. Physiol Behav. 2006;87:714–22.

Pellegrini N, Salvatore S, Valtuena S, Bedogni G, Porrini M, Pala V, et al. Development and validation of a food frequency questionnaire for the assessment of dietary total antioxidant capacity. J Nutr. 2007;137:93–8.

Obayashi S, Bianchi LJ, Song WO. Reliability and validity of nutrition knowledge, social-psychological factors, and food label use scales from the 1995 Diet and Health Knowledge Survey. J Nutr Educ Behav. 2003;35:83–91.

Jones AM, Lamp C, Neelon M, Nicholson Y, Schneider C, Wooten Swanson P, et al. Reliability and validity of nutrition knowledge questionnaire for adults. J Nutr Educ Behav. 2015;47:69–74.

Feren A, Torheim LE, Lillegaard IT. Development of a nutrition knowledge questionnaire for obese adults. Food Nutr Res. 2011;55. https://doi.org/10.3402/fnr.v55i0.7271.

Worsley A. Nutrition knowledge and food consumption: can nutrition knowledge change food behaviour? Asia Pac J Clin Nutr. 2002;11(Suppl 3):S579–85.

Acknowledgements

We appreciate the help of Pam Reich for study coordination and the cooperation of our volunteers.

Funding

Supported by grants DK40484 and DK45343 and by CTSA Grant Number UL1 TR002377 from the National Center for Advancing Translational Sciences (NCATS), a component of the National Institutes of Health (NIH). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Author information


Contributions

DM – designed and performed the studies, analyzed the data, and wrote the manuscript. MG, KB, and MA performed the studies and reviewed and edited the manuscript. BR, KY, and JB-B designed the study and edited the manuscript. PN analyzed the data and edited the manuscript. MDJ designed, performed, and oversaw the study and reviewed and edited the manuscript.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, and provide a link to the Creative Commons license. You do not have permission under this license to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Mikhail, D., Rolls, B., Yost, K. et al. Development and validation testing of a weight management nutrition knowledge questionnaire for adults. Int J Obes 44, 579–589 (2020). https://doi.org/10.1038/s41366-019-0510-1
