Abstract

Despite great advances in basic neuroscience knowledge, the improved understanding of brain functioning has not yet led to the introduction of truly novel pharmacological approaches to the treatment of central nervous system (CNS) disorders. This situation has been partly attributed to the difficulty of predicting efficacy in patients based on results from preclinical studies. To address these issues, this review critically discusses the traditional role of animal models in drug discovery, the difficulties encountered, and the reasons why this approach has led to suboptimal utilization of the information animal models provide. The discussion focuses on how animal models can contribute most effectively to translational medicine and drug discovery and the changes needed to increase the probability of achieving clinical benefit. Emphasis is placed on the need to improve the flow of information from the clinical/human domain to the preclinical domain and the benefits of using truly translational measures in both preclinical and clinical testing. Few would dispute the need to move away from the concept of modeling CNS diseases in their entirety using animals. However, the current emphasis on specific dimensions of psychopathology that can be objectively assessed in both clinical populations and animal models has not yet provided concrete examples of successful preclinical–clinical translation in CNS drug discovery. The purpose of this review is to strongly encourage ever more intensive clinical and preclinical interactions to ensure that basic science knowledge gained from improved animal models with good predictive and construct validity readily becomes available to the pharmaceutical industry and clinical researchers to benefit patients as quickly as possible.


INTRODUCTION

Since the founding of the American College of Neuropsychopharmacology (ACNP) in December 1961, there have been tremendous advances in neuroscience knowledge that have greatly improved our understanding of brain functioning in normal and diseased individuals. Unfortunately, however, these scientific advancements have not yet led to the introduction of truly novel pharmacological approaches to the treatment of central nervous system (CNS) disorders in general, and psychiatric disorders in particular (Hyman and Fenton, 2003; Fenton et al, 2003; Pangalos et al, 2007). The reasons for this mismatch between progress in basic neuroscience knowledge and the introduction of novel medications for CNS disorders continue to be discussed and debated extensively (eg Sams-Dodd, 2005; Schmid and Smith, 2005; Pangalos et al, 2007; Conn and Roth, 2008). In addition, toxicological problems have raised concerns about, or even led to the discontinued development of, a number of putative CNS medications that capitalized on our improved understanding of brain function, such as corticotropin-releasing factor 1 receptor antagonists (Steckler and Dautzenberg, 2006), glutamate receptor antagonists (Sveinbjornsdottir et al, 1993), phosphodiesterase 4 (PDE4) inhibitors (Horowski and Sastre-y-Hernandez, 1985), cannabinoid CB1 receptor antagonists (Murray Law, 2007), and a β-amyloid vaccine (Imbimbo, 2002). The poor predictive power of animal models for efficacy in humans has been suggested as another reason for the high attrition of drugs in the clinic (Food and Drug Administration, 2004; Kola, 2008). Although toxicological issues appear to be better controlled by frontloading the drug development process with the appropriate tests, thereby decreasing attrition, the high rate at which drug development candidates drop out in the clinic because of insufficient efficacy remains unchanged (Kola and Landis, 2004).
In response to these challenges, ACNP formed a Medication Development Task Force (2004–2008) to contribute to efforts to improve this situation. Under the auspices of this Task Force, the subcommittee on the Role of Animal Models in CNS Drug Discovery (Chair: Athina Markou) solicited input from ACNP members and from the wider scientific community in industry and academia on how animal models may best contribute to the drug discovery process and what changes may be needed. The present review primarily reflects the views of the authors, together with input that several scientists listed in the ‘Acknowledgments’ section provided to the chair of the subcommittee, by email or in unstructured discussions, as part of the Task Force's work. The topic has garnered great interest among those working in, or interacting with, this area of drug discovery. Most noteworthy is the overwhelming agreement among scientists in both industry and academia regarding the function of animal models in drug discovery, what is currently lacking, and what is needed to ensure that the information that animal models can provide is utilized most effectively.

An animal model is defined as any experimental preparation developed in an animal for the purpose of studying a human condition (Geyer and Markou, 1995). As the term ‘modeling’ implies, no perfect animal model exists for any aspect of any CNS disorder. One needs to be aware of the strengths and limitations of a model to interpret the data it provides appropriately. The limitations and strengths of most models have been extensively discussed, debated, and empirically investigated, leading to refinements and improved understanding of the extent of the utility of each model (eg Markou et al, 1993; Cryan et al, 2002; Millan and Brocco, 2003; Matthews and Robbins, 2003; Willner, 2005; Einat and Manji, 2006; Steckler et al, 2008; Gotz et al, 2004; Janus et al, 2007; Geyer, 2006a; Ellenbroek and Cools, 2000; Carter et al, 2008; Enomoto et al, 2007; McGowan et al, 2006; Gotz and Ittner, 2008). A strong focus on the limitations of the models in the 1990s led to the view that in vivo animal models represent a bottleneck in drug discovery (Tallman, 1999). These issues are strongly intertwined with the healthy debate about the risk/benefit ratio of animal testing in general, which has led to the establishment of organizations such as the United Kingdom's National Centre for the three R's (Replacement, Refinement, and Reduction of animal use in research, http://www.nc3rs.org.uk/). Such efforts are supported by both academia and industry and focus on finding alternatives to whole-animal testing procedures. This reductionist approach has also been encouraged by the explosion of genomic and proteomic technologies that opened up new areas of discovery in the biomedical sciences (Drews and Ryser, 1997; Hopkins and Groom, 2002; Imming et al, 2006).
Recently, however, with the increased emphasis on translational medicine (see below) and the escalating costs of drug development, the use of high quality, predictive, in vivo animal models has been recognized as an essential component of modern drug discovery if late-stage failure for lack of clinical efficacy is to be avoided.

This review addresses the above issues by first describing the traditional role of animal models in drug discovery and the reasons why this approach has led to suboptimal utilization of the information that animal models provide. The difficulties encountered in the use of animal models in translational medicine are discussed extensively. Finally, the authors offer their views on how animal models can contribute effectively to drug discovery and translational medicine and what changes need to be made to improve the probability of achieving clinical benefit. This review is not intended to provide a listing of currently available animal models; nor will it discuss the advantages and limitations of specific models. References to specific animal models are made only as examples to clarify general points.

THE ROLE OF ANIMAL MODELS IN TRANSLATIONAL APPROACHES TO DRUG DISCOVERY TODAY

Proof of concept (PoC), translational medicine, endophenotypes, and biomarkers are almost synonymous descriptors of the ‘desired’ approach to early 21st century drug discovery. Whether efforts that utilize these concepts will bring the success that is sought, both for the patient and for the industry, remains to be determined.

Magic Bullet Approach to CNS Drug Discovery

The 1980s and 1990s were a time of great excitement, with the prospect of translating advances in G-protein-coupled receptor and ionotropic receptor subtyping into effective and selective ‘magic bullets’ for CNS disorders (see for example, the American Chemical Society's Pharmaceutical Century, Ten Decades of Drug Discovery; http://pubs.acs.org/journals/pharmcent/Ch8.html; Imming et al, 2006). Neurotransmitters such as serotonin and dopamine are generally believed to be involved in many CNS disorders (eg Iversen, 2006; Iversen and Iversen, 2007; Feuerstein, 2008). For example, hypotheses have been proposed regarding the involvement of serotonin and norepinephrine in mood disorders (Schildkraut, 1965; Glowinski and Axelrod, 1965; Coppen, 1967; Bunney and Davis, 1965; Coppen and Doogan, 1988; Caldecott-Hazard et al, 1991; Markou et al, 1998), dopamine dysfunction in schizophrenia (Creese et al, 1976), and cholinergic abnormalities in Alzheimer's disease (Davies and Maloney, 1976; Perry et al, 1978; Drachman, 1977). Thus, once serotonin was shown to exert its effects through interactions with at least 14 different receptor subtypes (Hoyer et al, 1994), the design and synthesis of selective ligands that could be screened in animal models of schizophrenia, depression, or anxiety were expected to allow the benefits of the magic bullet approach to emerge. Unfortunately, however, this approach to drug discovery has not proven as successful as anticipated (eg Tricklebank, 1996; Green, 2006; Jones and Blackburn, 2002; Van der Schyf et al, 2007). This difficulty may be attributed partly to the fact that insufficient attention was paid to the type of information provided by the assays and models used to build the case for clinical evaluation.
For example, tests based on the exploration of novel environments, such as the open field, light–dark box, and elevated plus maze, were and continue to be the most widely used animal tests for assessing anxiolytic potential (Dawson and Tricklebank, 1995; Shekhar et al, 2001; Carobrez and Bertoglio, 2005; Crawley, 2008; Crawley et al, 1997; Holmes, 2001; File, 2001). These tests rely on a classic approach–avoidance conflict that allows an inhibition of exploration to be interpreted as a marker of anxiety (eg Holmes, 2001).
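To make the approach–avoidance logic concrete, the sketch below computes two indices commonly derived from an elevated plus maze session: the percentage of arm time, and of arm entries, spent on the open arms, with closed-arm entries kept as a rough control for general activity. The field names, the example numbers, and the choice of Python are illustrative assumptions, not data or code from any cited study.

```python
from dataclasses import dataclass


@dataclass
class PlusMazeSession:
    """One elevated plus maze session (hypothetical field names)."""
    open_arm_time_s: float
    closed_arm_time_s: float
    open_arm_entries: int
    closed_arm_entries: int


def anxiety_indices(s: PlusMazeSession) -> dict:
    """Return conventional open-arm avoidance indices plus an activity control."""
    arm_time = s.open_arm_time_s + s.closed_arm_time_s
    entries = s.open_arm_entries + s.closed_arm_entries
    if arm_time <= 0 or entries == 0:
        raise ValueError("animal never entered an arm; indices are undefined")
    return {
        # Lower values are read as greater avoidance of the open arms ('anxiety').
        "pct_open_time": 100.0 * s.open_arm_time_s / arm_time,
        "pct_open_entries": 100.0 * s.open_arm_entries / entries,
        # Closed-arm entries are often used as a general-activity control measure.
        "closed_entries": s.closed_arm_entries,
    }


# Hypothetical sessions: vehicle-treated versus drug-treated animal.
vehicle = PlusMazeSession(30.0, 210.0, 3, 12)
drug = PlusMazeSession(90.0, 150.0, 8, 12)
print(anxiety_indices(vehicle)["pct_open_time"])  # 12.5
print(anxiety_indices(drug)["pct_open_time"])     # 37.5
```

In this hypothetical example the drug triples open-arm time while closed-arm entries are unchanged, which argues against a simple increase in locomotion; it cannot, however, distinguish increased approach (eg novelty-seeking) from decreased avoidance.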

The underlying assumption, that an animal that moves less in bright light or in the absence of an enclosure must be more ‘anxious,’ is supported by the ability of clinically effective benzodiazepine anxiolytics to increase exploration under these conditions (Crawley, 1985). These models have both face and predictive validity for classical mechanisms of anxiolytic action and can also be used to distinguish effectively among different classes of drugs. The problem, however, is that these tests do not necessarily detect the desired effects of compounds with novel mechanisms of action accurately and, in particular, are not always correct in predicting efficacy in the clinic. For example, the clear efficacy of antidepressants in the treatment of generalized anxiety disorder does not back-translate to the elevated plus maze (for review, see Borsini et al, 2002), where the results are at best inconsistent (Lister, 1987; Handley and McBlane, 1993; Silva et al, 1999; Cole and Rodgers, 1995; Silva and Brandao, 2000; Kurt et al, 2000). Several issues need to be considered when using these tests to assess putative anxiolytic activity; if ignored, they may have contributed to poor predictability, at least in the case of antidepressants. First, consideration should be given to the possible contribution of changes in approach behavior elicited by novelty-seeking, rather than changes in the avoidance dimension of the conflict (Dulawa et al, 1999). Second, an attempt should be made to separate state from trait anxiety. State anxiety is more likely to reflect a natural, biologically advantageous response to an unfamiliar environment, whereas trait anxiety may mimic pathological anxiety states in which anxiety is present in the absence of threatening stimuli.
The animal's trait anxiety may interact with manipulation-induced state anxiety (the two may be mediated by different brain circuits) and thus confound the response to pharmacological interventions (Steckler et al, 2008). Determining the cross-species predictability of these tests requires evaluating the effects of ‘active’ compounds in analogous human paradigms (see Young et al, 2007, for a description of how this may be accomplished).

Since the 1980s, the pressure to test compounds in relatively high-throughput animal models has increased further, as it has become progressively easier to design, synthesize, and screen molecules with high affinity and selectivity for biological activity at receptors or enzymes involved in controlling cell function (Imming et al, 2006; Lundstrom, 2007a). Now that recombinant DNA technology is widely used (Lundstrom, 2007b), interest in a target is often based initially on its presence in brain regions perceived as relevant to the disorder(s) of interest rather than on well-defined functions of the target. Consider a hypothetical example: receptor X is found in rodent cortex, hippocampus, and amygdala, so the target is assumed to be important in the control of cognition and emotional states. The receptor is then genetically knocked out, and the resultant genetically altered mouse shows increased locomotor activity in a novel environment, heightened responsiveness to psychostimulant drugs, decreased prepulse inhibition of the acoustic startle response, and impaired ability to learn to navigate a water maze. Such data are often considered sufficient justification to initiate a search for small molecules that could realize the therapeutic potential of pharmacologically interfering with the function of this receptor in humans, with schizophrenia as the targeted disorder. That is, the target becomes a ‘target of interest’ that justifies setting up medicinal chemistry programs, in vitro screening, and counter-screening assays (ie testing for the presence of desired properties in screening and of undesired properties in counter-screening).
Molecules gradually emerge with significant affinity for the target and an ability to alter the target's function, and the project moves from ‘target-to-hit’ to ‘hit-to-lead’ (a hit is defined as a chemical having a significant degree of activity at a particular molecular target; a lead is defined as a chemical having significant activity at a molecular target whose structure is, or is thought to be, readily modified to improve the selectivity, toxicological, and pharmacokinetic properties necessary for investigation in man). The activity of the lead molecules is progressively refined, such that affinity increases to low nanomolar levels and selectivity increases to 30, 50, or 100 times the affinity for closely related recognition sites. Measures of lipophilicity and polar surface area (a major determinant of drug transport, generally defined as the surface area associated with nitrogen and oxygen atoms and the hydrogen atoms bonded to them) suggest that the compound will cross the blood-brain barrier or pass from gut to blood when administered orally. In vitro tests using hepatic microsomes from a range of species, including human, predict the likely metabolism of the compounds, that is, the speed and extent to which they are metabolized by a particular class of hepatic enzymes. Gross safety concerns, such as hERG channel activity (the patch-clamp hERG assay is a reliable model of QT-interval prolongation, an effect that considerably increases the risk of cardiac arrhythmia) or cellular toxicity, are addressed quickly and efficiently in vitro. After these ‘practical’ considerations are addressed, the question of the putative clinical efficacy of the compound(s) still remains. The answer to this question depends partly on how predictive the specific knockout models are of the effects of acute or chronic pharmacological intervention in patients.
How well do increased locomotor activity in a novel environment, heightened responsiveness to the administration of psychostimulants, or altered water maze learning predict the antipsychotic and cognition-enhancing effects of novel compounds in schizophrenia patients?
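The in vitro progression gates described above can be sketched as a simple filter. The thresholds (low nanomolar affinity, 100-fold selectivity over related sites, and a 30-fold ratio of hERG IC50 to target Ki as a crude safety proxy), the field names, and the example compounds are all hypothetical; real programs typically compare hERG potency with expected free plasma concentrations rather than with target affinity. The point of the sketch is that every gate is an in vitro property, so a compound can pass them all while the question of clinical efficacy remains untouched.

```python
from dataclasses import dataclass, field


@dataclass
class Compound:
    """Hypothetical in vitro profile of one molecule (all values in nM)."""
    name: str
    target_ki: float                                    # affinity at the intended target
    off_target_ki: dict = field(default_factory=dict)   # Ki at closely related sites
    herg_ic50: float = float("inf")                     # potency at the hERG channel


def fold_selectivity(c: Compound) -> float:
    """Worst-case (smallest) ratio of off-target Ki to target Ki."""
    if not c.off_target_ki:
        return float("inf")
    return min(ki / c.target_ki for ki in c.off_target_ki.values())


def passes_gates(c: Compound, max_ki=10.0, min_fold=100.0, min_herg_ratio=30.0) -> bool:
    """Illustrative progression gates; the thresholds are assumptions, not standards."""
    return (
        c.target_ki <= max_ki
        and fold_selectivity(c) >= min_fold
        and c.herg_ic50 / c.target_ki >= min_herg_ratio
    )


candidates = [
    Compound("cpd-1", 2.0, {"related-1": 400.0, "related-2": 250.0}, 5000.0),
    Compound("cpd-2", 1.5, {"related-1": 60.0}, 10000.0),  # only 40-fold selective
    Compound("cpd-3", 5.0, {"related-1": 5000.0}, 100.0),  # hERG ratio only 20
]
for c in candidates:
    print(c.name, fold_selectivity(c), passes_gates(c))
```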

This well-tried, refined, and perfected approach leads to the availability of a tool molecule with high affinity and selectivity that can be administered orally to laboratory animals for preclinical PoC assessment. These preclinical tests, preferably conducted in a disease model (disease models are purported to reflect a pathological aspect of the disorder, whereas assays are screens for therapeutic activity of compounds and may not accurately reflect phenomenological or neuropathological aspects of the disorder), address the important question of whether the compound has, in the whole animal, the activity predicted from the in vitro tests. Considering the long timelines for bringing a drug to market (12 years 10 months for all drugs registered in 2002; Kola and Landis, 2004; http://www.fda.gov/cder/regulatory/applications/), considerable pressure exists to achieve preclinical PoC as rapidly as possible so that clinical trials may be initiated. The ease of obtaining preclinical PoC depends on the strength of the hypothesis that motivated the synthetic program.

CNS Drug Discovery in a Disease with a Hypothesized Biomarker

The functions of animal models in drug discovery differ between psychiatric disorders and neurological disorders, in which the molecular basis of the disease may be better understood and disease biomarkers are more likely to be known, at least to some extent. Although this review focuses primarily on drug discovery for psychiatry, the following example about Alzheimer's disease highlights the challenges in CNS drug discovery, even in disorders for which a hypothesized biomarker is available. Drug discovery for Alzheimer's disease may involve the hypothesis that compound X will lower amyloid Aβ1−42 in mice carrying gene mutations that lead to overexpression of the protein. The desired PoC appears straightforward in this case. After a suitable drug administration regimen is devised and declining brain levels of amyloid Aβ1−42 are measured with drug treatment, a sufficient degree of PoC is obtained to encourage further development of the molecule. The hypothesis that leads to this approach to PoC is very different from the one that must be tested if the hypothesis is that decreasing amyloid Aβ1−42 will lead to cognitive improvement in mice overexpressing the protein. The latter PoC approach requires much more from the genetic model than the neurobiological PoC described above. First, a cognitive deficit needs to be identified in the genetically modified animal. Decisions need to be made regarding which test or tests would be most appropriate to detect a potential improvement and, if more than one test is used, how many would need to show a positive therapeutic response to treatment before PoC is accepted. Second, an indication of the timing and progression of the deficit in the genetically modified mice is needed so that the treatment may be applied at the appropriate age of the mouse.
Possible outcomes include confirmation of the biochemical hypothesis with no effect of the drug treatment on the cognitive measures or, alternatively, improvement of the cognitive measures in response to treatment with no change in amyloid Aβ1−42 expression. One then needs to determine, ideally a priori, which PoC provides the best basis on which to move forward with the particular pharmacological approach. However, if the decision regarding which PoC to rely on is made a priori, it is not clear why one should spend time and resources on the other type of PoC. There are no obvious answers to these issues because many unknown variables are involved. Some may argue that the cognitive measures are both more sensitive and more meaningful than the biochemical parameters because the whole-brain concentration of amyloid Aβ1−42 poorly reflects the cellular events in the specific brain circuitry that underlies the behavioral deficits. Alternatively, the cognitive measures may be considered less sensitive because (1) they do not measure the relevant cognitive modality, (2) they do not measure the relevant cognitive modality accurately, or (3) amyloid Aβ1−42 deposition progresses so much more rapidly in the animal model than in the clinical condition that the narrow time window for intervention decreases the probability of selecting the optimum time for treatment.

With suitable quantitative imaging approaches, the same biochemical PoC measures used in experimental animals are feasible in Alzheimer's patients (Edison et al, 2006; Newberg et al, 2006; Rowe et al, 2007). Proof of concept would be achieved if amyloid Aβ1−42 expression were reduced, and the decision would then be made to invest the funds necessary to carry the compound to market. If amyloid Aβ1−42 is not lowered significantly after a specified chronic treatment protocol, further development of the compound(s) can be halted. Note that this hypothetical PoC is not based on ‘patient outcomes’ (ie reduction in clinical symptoms or improvement in quality of life), but rather on ‘improved’ hypothesized biomarkers that may be considered more readily amenable to translational work than functional outcomes. This biochemical PoC is attractive because it relies on the mechanistic action of the drug and thus allows decisions to be made on the basis of quantitative data in relatively small samples of patients or experimental animals.
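An a priori go/no-go rule of the kind described above can be written down before any data are collected. The sketch below assumes a hypothetical criterion: a minimum mean percentage reduction in amyloid Aβ1−42 relative to baseline. The reduction actually needed for a clinically meaningful response is not yet known, so the threshold is a placeholder, and a real protocol would also prespecify sample size and a statistical test; the sketch only shows what committing to the criterion in advance looks like.

```python
from statistics import mean


def percent_reduction(baseline, treated):
    """Mean percentage reduction of a biomarker relative to baseline."""
    base = mean(baseline)
    return 100.0 * (base - mean(treated)) / base


def biomarker_poc_decision(baseline, treated, min_reduction_pct):
    """Hypothetical prespecified rule: 'go' only if the mean reduction meets the threshold."""
    reduction = percent_reduction(baseline, treated)
    decision = "go" if reduction >= min_reduction_pct else "no-go"
    return decision, reduction


# Hypothetical Abeta(1-42) levels (arbitrary units) before and after chronic treatment.
baseline = [100, 110, 90]
treated = [70, 80, 75]
print(biomarker_poc_decision(baseline, treated, min_reduction_pct=20))  # ('go', 25.0)
print(biomarker_poc_decision(baseline, treated, min_reduction_pct=30))  # ('no-go', 25.0)
```

The same data give opposite decisions under the two thresholds, which is why the criterion has to be fixed before the experiment rather than after.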

In practice, PoC-based decision-making is more difficult to execute than described above. Strict adherence to the accept-or-reject decision is often softened because (1) the reliability of the measures may be poorly understood, and (2) each movement of a compound from Phase 0 to Phase III still represents a considerable investment that many are reluctant to make on the basis of a single experiment whose predictive power has not yet been validated, however well designed. Furthermore, the degree to which a biomarker must change to allow reliable predictions of clinical efficacy is often unknown. For example, the field is still learning how much drug-induced lowering of amyloid Aβ1−42 is required to see a clinically meaningful response in Alzheimer's patients.

Feed-Forward Loop

As with scientific concepts, compounds have their champions, who vary in their tenacity in the face of adversity. The occasional success of a deeply troubled drug that finally provides clinical benefit against all odds provides enough partial reinforcement for some champions not to give up when others attempt to terminate a program rapidly. Positive outcomes carry compounds forward, but a negative outcome usually fuels tremendous debate and often additional studies, thus compromising the efficiency that is inherent in the approach. Each movement of a compound from Phase 0 (preclinical) through Phase I (safety and tolerability testing in volunteers) and Phase IIa (safety and tolerability in patients), before embarking on trials of clinical efficacy (Phase IIb and Phase III), represents a considerable investment that many are reluctant to initiate on the basis of the outcome of a single preclinical experiment, however well designed.

Nevertheless, because of these difficult issues and the considerable preclinical monetary and time investments made in the particular target and compound(s), whatever positive preclinical PoC is available is often taken as sufficient to rationalize the expenditures made thus far and to justify further spending and moving the compounds forward to human PoC tests. Thus, unless PoC fails at every level of experimental testing, a feed-forward loop tends to occur for lead compounds. This situation is highly detrimental to the drug discovery process and is one of several reasons that in vivo animal models are considered nonpredictive of the clinical outcome of putative medications. That is, even if the predictions from the animal models are mixed (some positive and some negative) and provide only a few glimpses of hope for efficacy in humans, the global prediction from the aggregate of the preclinical animal data is considered positive because of the forces of the feed-forward loop. If the compound fails in human PoC tests, or even worse in Phase III clinical trials, then most in vivo animal testing conducted in the context of the particular project is considered nonpredictive and thus useless, regardless of the cautious or qualified predictions that such testing may have generated. In conclusion, confidence in the hypothesis to be tested is clearly key to the proper use of PoC, as is a priori agreement on the path to be taken for each potential outcome.
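One simple way to see why reading a mixed set of preclinical results as positive is risky: if a compound has no true effect and each of n independent tests has a false-positive rate α, the chance that at least one test comes out ‘positive’ is 1 − (1 − α)^n. The sketch below evaluates this under the simplifying assumptions of independent tests and α = 0.05; real preclinical tests are correlated, so the numbers are illustrative only.

```python
def prob_any_positive(n_tests: int, false_positive_rate: float) -> float:
    """P(at least one false 'positive') across n independent tests of an inactive compound."""
    return 1.0 - (1.0 - false_positive_rate) ** n_tests


for n in (1, 3, 5, 10):
    print(n, round(prob_any_positive(n, 0.05), 3))
# 1 0.05
# 3 0.143
# 5 0.226
# 10 0.401
```

With five such tests, nearly one inactive compound in four would yield at least one ‘positive’ result, which is why the decision rule for each outcome should be fixed before the data arrive.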

Preclinical PoCs based on biochemical activity have, perhaps unwittingly, been used quite successfully in drug discovery for many years, out of necessity rather than for heightened efficiency or reliance on hypotheses about the etiology of the disorders. For example, inhibitors of monoamine oxidase and of serotonin reuptake were developed on the basis of the PoC hypothesis that they should increase neurotransmitter availability, but without any behavioral testing in small animal models (Jacobsen, 1986; Wong et al, 2005). Such data were a sufficient PoC in the context of a general, unproven hypothesis about a specific neurotransmitter deficit in a particular disease. Had robust, validated animal models of depression been available at the time, the decision-making process might have been different. Indeed, if the forced swim test (Porsolt et al, 1978) had been the most frequently used preclinical assay for the detection of antidepressant-like compounds when fluoxetine was developed (the Investigational New Drug application for permission to examine the drug in man was filed in 1977; Wong et al, 2005), it is interesting to consider what fluoxetine's fate might have been, given its lack of activity in the original rat forced swim test before the test was modified to detect the efficacy of compounds such as fluoxetine (Cryan et al, 2002). If activity in the original forced swim test had been a requirement for full development of the compound, depressed patients would not have benefited from the availability of fluoxetine.

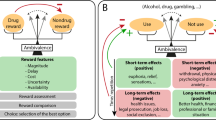

Another recent success story that relied on a strong PoC hypothesis, and in which animal models played a critical and decisive role, is the 2006 approval by the Food and Drug Administration (FDA) of varenicline, a partial agonist at the α4β2 nicotinic acetylcholine receptor, as a smoking cessation aid (Rollema et al, 2007b). Basic science findings led to the hypothesis that compounds acting at this receptor should be effective smoking cessation therapeutics (for review, see Picciotto and Corrigall, 2002). Pfizer initiated the search for an efficacious α4β2 nicotinic acetylcholine receptor partial agonist in 1993. After considerable medicinal chemistry efforts that optimized pharmacological activity, oral bioavailability, brain penetration, and pharmacokinetic properties, varenicline was discovered in 1998 (Dr H. Rollema, personal communication). The key aspect of the biochemical and behavioral PoC was the availability of widely accepted neurochemical and behavioral measures of efficacy in tests of nicotine dependence. Specifically, varenicline was shown to have functional partial agonist activity and to reverse nicotine-induced increases in dopamine in the nucleus accumbens (Rollema et al, 2007a). In behavioral models, varenicline decreased intravenous nicotine self-administration and was less reinforcing than nicotine under a progressive-ratio schedule of reinforcement, although it substituted for nicotine in the drug discrimination test in rats (Rollema et al, 2007a, 2007b).
Although none of these neurochemical (ie dopamine levels in the nucleus accumbens) or behavioral (eg intravenous nicotine self-administration) measures has proven predictive validity as a test of anti-smoking efficacy (bupropion, another FDA-approved smoking cessation aid, increases dopamine in the nucleus accumbens and has inconsistent effects on nicotine self-administration; eg Paterson et al, 2007; Bruijnzeel and Markou, 2003), the strong a priori hypothesis, together with wide acceptance of and trust in the construct validity of these not-yet-validated animal models, led to the subsequent clinical trials that demonstrated the efficacy of varenicline in the clinical population (Gonzales et al, 2006; Jorenby et al, 2006). This elegantly simple hypothesis and approach, coupled with strong trust in the yet unvalidated animal models (which, notably, have good construct validity for the specific therapeutic indication; Markou et al, 1993; see below), led to the first compound acting at CNS nicotinic receptors to be introduced to the market (Arneric et al, 2007). It should be noted that drug discovery for drug abuse and dependence may be considered easier than for other psychiatric disorders because the etiology of these disorders is known to be excessive exposure to the drug of abuse, which makes it feasible to design animal models with good etiological and construct validity, even when these models do not have proven predictive value.

THE ROLE (OR LACK THEREOF) FOR ANIMAL MODELS IN DRUG PROFILING TODAY: THE CRISIS OF VALIDATION

Perhaps one of the most important roles that animal models should play in drug discovery, and one that unfortunately is not fully realized today (see below), is contributing the data they generate to drug profiling (Williams, 1990; Spruijt and DeVisser, 2006; Hart, 2005). The desired profile of a pharmaceutical product is a list of features, such as desired efficacy, therapeutic indications, safety, absorption, metabolism, and elimination, current knowledge about the disease (including etiology), putative molecular targets and mechanisms, unmet medical needs, and analysis of the market (eg market size, competition, risks, opportunities) (Nwaka and Ridley, 2003). Such product profiling is the result of a multidisciplinary analysis involving the regulatory, commercial, clinical, and basic research branches of a pharmaceutical company. The product profile defines the set of criteria that the ‘ideal’ drug candidate is expected to satisfy to progress through the various stages of drug discovery and development. The pharmacological criteria in a product profile are very important because in vivo efficacy (eg the effective dose 50 [ED50], the dose that gives 50% of the maximum possible response) can be compared with the drug levels needed to induce the first signs of toxicity to estimate the ‘margin of safety’ or ‘therapeutic window’ (margins of safety are more commonly determined as the ratio of the plasma drug concentration at the lowest dose at which an adverse event is recorded to the plasma concentration at the dose inducing the required efficacy).
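The two quantities named in this paragraph can be illustrated with a short calculation. The sketch below estimates an ED50 by interpolating, on a log-dose scale, between the two tested doses that bracket 50% of the maximum response, and computes a margin of safety as the ratio of plasma concentrations defined above. Log-linear interpolation is a simplification chosen for brevity (dose–response curves are usually fitted by nonlinear regression), and the doses, responses, and concentrations are hypothetical.

```python
import math


def ed50_log_interp(doses, responses, max_response):
    """Dose giving 50% of max_response, interpolated linearly in log10(dose).

    Assumes ascending doses and responses that rise monotonically with dose.
    """
    target = 0.5 * max_response
    points = list(zip(doses, responses))
    for (d0, r0), (d1, r1) in zip(points, points[1:]):
        if r0 <= target <= r1 and r1 > r0:
            frac = (target - r0) / (r1 - r0)
            log_d = math.log10(d0) + frac * (math.log10(d1) - math.log10(d0))
            return 10 ** log_d
    raise ValueError("50% response is not bracketed by the tested doses")


def margin_of_safety(conc_lowest_adverse_dose, conc_efficacious_dose):
    """Plasma concentration at the lowest adverse-event dose / concentration at the efficacious dose."""
    return conc_lowest_adverse_dose / conc_efficacious_dose


# Hypothetical data: doses in mg/kg, responses as % of maximum possible response.
doses = [1, 3, 10, 30]
responses = [10, 30, 70, 90]
print(round(ed50_log_interp(doses, responses, max_response=100), 2))  # 5.48
# Hypothetical plasma concentrations (ng/mL) at the adverse and efficacious doses.
print(margin_of_safety(1200.0, 150.0))  # 8.0
```

Here the 50% point falls midway between 3 and 10 mg/kg on the log scale, so the estimate is their geometric mean, about 5.48 mg/kg.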

Although descriptions of the animal model(s) to be used in the selection of drug candidates are not necessarily included in the product profile, efficacy data are expected to be obtained in valid model(s) of the targeted disease, with experimental protocols that could ideally be translated to the clinic. Thus, not only are the animal models expected to have both predictive and construct validity, they are also expected to use experimental designs and dependent measures comparable to those that are or will be used in humans (Hyman and Fenton, 2003). Generally, the product profile definition is a commercially and clinically driven process meant to clarify, set, and align goals and objectives shared by the different branches of the company (Poland and Wada, 2001). For preclinical research and development, the product profile is meant to help optimize efforts at the earliest possible stages and to allow ‘in-progress’ continuous monitoring of the pharmacological characterization of the drug candidate in the light of the compound's purported clinical uses (for a non-industrial product profile, see the National Institute on Drug Abuse definition at http://www.fda.gov/CDER/guidance/6910dft.htm). Importantly, the key to increasing the contribution of animal models to successful drug discovery is the continued effort to align experimental paradigms and parameters across species.

Gold Standard Reference Compound and Its Role in Drug Discovery

One of the most common contributions of animal models to the traditional product profile today is a comparison of the pharmacological features of the drug candidate with those of a best-in-class reference drug, referred to as the ‘gold standard.’ The gold standard is often a currently used medication for the targeted disorder that is perceived as being the best, or one of the best, treatments. In the absence of a clinically used drug, the gold standard may be a drug acting through the same mechanism that has shown efficacy in human laboratory studies, but not necessarily in clinical trials. Although efficacy is the most important point of comparison with the gold standard, predicting improved efficacy of a candidate drug over a reference drug from current animal models may be difficult, at least in psychiatry, simply because the signal-to-noise ratio is often relatively low (Conn and Roth, 2008). However, it may be possible to demonstrate improved margins of safety or duration of action/frequency of drug administration, which are other important features of drug innovation (Erice statement on drug innovation, 2008).

Because no significant innovative progress has been made in CNS drug discovery for decades (Kola and Landis, 2004), there is a great shortage of suitable gold standards against which to evaluate novel compounds. Despite this situation, high predictive validity is the feature most often demanded of in vivo assays and models. Gold standards simply do not exist for certain disorders, such as the cognitive deficits of schizophrenia (Floresco et al, 2005; Geyer and Heinssen, 2005). This situation has led to the ‘crisis of validation’ now experienced by researchers working with animal models. Overreliance on gold standard reference compounds (many of which are suboptimal or simply lacking) and/or on a few animal models considered ‘standard’ and ‘validated’ often leads to circular arguments and a high risk of only ever being able to develop ‘me-too’ compounds. Although these limitations are widely recognized (eg Brodie, 2001; Geyer and Markou, 2002), moving beyond the self-imposed confines of this approach has thus far proven difficult.

UNIDIRECTIONAL INFORMATION FLOW AS AN IMPEDIMENT TO IMPROVED PREDICTIONS FROM ANIMAL MODELS

Despite extensive criticism of animal models (eg Horrobin, 2003), they continue to play a major role in drug discovery because of the need to calculate parameters such as the margin of safety, referred to in the section ‘The role (or lack thereof) for animal models in drug profiling today: the crisis of validation’, as well as for the primary purpose of target validation. These potential contributions can materialize only if preclinical testing is part of an effective and efficient decision tree (Gorodetzky and Grudzinskas, 2005) and if preclinical/clinical cross-validation is a real day-to-day process (Pangalos et al, 2007). Unfortunately, in the vast majority of cases, information flow is rigidly unidirectional. The drug discovery pipeline is often considered a progression from preclinical to clinical, with animal data flowing to the clinical domain but not vice versa. Conversely, the product profile is defined primarily on clinical and commercial grounds and is handed to the preclinical scientists. What is lacking is sufficient cross-talk between the two disciplines. Such a unidirectional flow of information does not allow for pragmatic and rational modification of the animal models and leads to unrealistic expectations about how animal model data may best contribute to this process. Unless this situation changes, no progress will be made in learning how to prevent false negatives and false positives originating from animal model data.

HOW ANIMAL MODELS CAN CONTRIBUTE EFFECTIVELY TO DRUG DISCOVERY AND TRANSLATIONAL MEDICINE IN THE FUTURE

How to Deal with the Crisis of Validation

Although in vivo animal models have relatively low throughput compared with molecular assays, and in some cases it may not be feasible to evaluate their predictive validity, the issue is not whether a molecular assay, a rodent behavioral model, or a human test is preferable. Instead, the important question is how each assay, disease model, and test may be used optimally, and how the data derived from each may be interpreted and applied most appropriately and effectively in the drug discovery and decision-making processes. For example, one possible strategy is to establish the model early on for target validation, using existing (although perhaps less than ideal) compounds that act through the novel mechanism of action, or other methods such as siRNA or knockout technologies. This approach can confirm the sensitivity and specificity of the model. Once assay ‘connectivity’ has been established (ie a significant correlation between in vitro activity at the target and in vivo activity in the model has been demonstrated), medicinal chemistry efforts can be directed toward improving those features of a molecule that will convert it from a tool into a medicine. Only lead compounds shown to exhibit the desired physicochemical and in vitro properties, pharmacokinetics, and safety need to be tested in these models. Moreover, if wisely chosen, the preclinical model can aid the design of the clinical trial needed for human PoC studies. In summary, sophisticated animal models can be used to increase confidence in the functional significance of a target and to determine the pathway for further drug development, facilitating a rapid ‘win or kill’ decision-making process.
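
Assay ‘connectivity’ as defined above can be checked with a simple correlation. The Python sketch below assumes potencies expressed as negative log values (a hypothetical in vitro pIC50 at the target and a hypothetical in vivo pED50 in the model) for a small, invented set of tool compounds, and uses an exact permutation test as one way to judge significance. The choice of statistic, the function names (`pearson_r`, `connectivity_test`), and the data are illustrative assumptions, not a prescribed method.

```python
from itertools import permutations
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length (n >= 3)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant sequence has no defined correlation")
    return sxy / sqrt(sxx * syy)


def connectivity_test(in_vitro, in_vivo):
    """Return (r, p): r between in vitro and in vivo potency, and a two-sided
    exact permutation p-value obtained by re-pairing the in vivo values in
    every possible order. Enumeration grows as n!, so keep n small."""
    r_obs = pearson_r(in_vitro, in_vivo)
    hits = total = 0
    for perm in permutations(in_vivo):
        total += 1
        # Small tolerance so floating-point noise does not drop ties.
        if abs(pearson_r(in_vitro, perm)) >= abs(r_obs) - 1e-12:
            hits += 1
    return r_obs, hits / total


if __name__ == "__main__":
    # Hypothetical potencies for six tool compounds (not real data).
    pic50_in_vitro = [6.0, 6.5, 7.0, 7.5, 8.0, 8.5]
    ped50_in_vivo = [4.1, 4.4, 5.0, 5.3, 5.9, 6.2]
    r, p = connectivity_test(pic50_in_vitro, ped50_in_vivo)
    print(f"r = {r:.3f}, exact permutation p = {p:.4f}")
```

With more compounds, exhaustive enumeration becomes impractical, and a random subset of permutations or a standard t-based test would be used instead. A high r across a handful of tool compounds is suggestive rather than conclusive.
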
Especially when the predictive validity of a model is largely unknown because no clinically active reference drugs exist, it is critical to avoid using behavioral assays (as discussed above) that have limited construct validity simply because they happen to be fast and high-throughput. Such an approach would only lead to faster but wrong decisions (Sarter, 2006). Furthermore, one should exclude models that produce false positives; that is, models in which currently used medications show beneficial effects even though these medications do not clinically treat the deficit that the model purports to assess. For example, consider the search for procognitive cotreatments for schizophrenia patients already maintained on stable regimens of antipsychotics with robust antagonist actions at dopamine D2 receptors. Given the level of dopamine receptor occupancy in these patients, an animal model of cognition that was responsive to D2 dopamine receptor antagonists would need to be excluded from efforts to discover procognitive adjunctive treatments (Geyer, 2006a). By contrast, α7 nicotinic acetylcholine receptor activators (agonists and positive allosteric modulators) induce cognitive improvement in animal models of schizophrenia, although currently they do not appear to meet the criteria of the ‘ideal’ product profile (Chiamulera and Fumagalli, 2007). On the basis of positive effects in animal models of cognition whose relevance to schizophrenia has not yet been validated, the α7 nicotinic acetylcholine receptor partial agonist DMXBA has been evaluated in schizophrenia patients, with preliminary positive results (Olincy et al, 2006). However, because of the reliance on models whose translational value has not yet been tested, it is difficult to evaluate whether the statistically significant effects of DMXBA in the preclinical and preliminary human data reflect the potential for a significant clinical outcome.
That is, it is not known what effect size in either the animal model or the early human PoC will translate into a true clinical benefit with functional significance for the patients. Human-specific placebo effects further complicate the measurement of effect size in the clinic.
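
The notion of effect size invoked here can be made concrete. One common metric, assumed here for illustration because no specific metric is named above, is the standardized mean difference (Cohen's d with a pooled standard deviation). The Python sketch below uses invented scores; its point is that d computed in a rodent model or in an early human PoC study is a purely statistical quantity, and nothing in the calculation indicates whether it corresponds to a functionally meaningful clinical benefit.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treated, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treated), len(control)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    s1, s2 = stdev(treated), stdev(control)
    pooled_sd = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(treated) - mean(control)) / pooled_sd


if __name__ == "__main__":
    # Hypothetical task scores for drug- and vehicle-treated groups (not real data).
    drug = [12, 14, 15, 13, 16]
    vehicle = [10, 11, 12, 10, 12]
    print(f"Cohen's d = {cohens_d(drug, vehicle):.2f}")
```

Because d is scaled by the variability of the particular measure and population, equal values in animals and humans need not indicate equivalent effects.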

In summary, when approaching therapeutic indications where there is still great unmet medical need, we need to shift the focus away from overreliance on predictive validity and the classic drug target validation described at the beginning of this review, toward reliance on construct and etiological validity. This is certainly a high-risk/high-benefit approach, but it should be viewed as a much-needed long-term investment in translational research that will eventually decrease timelines and costs.

How to Deal with Our Poor Understanding of the Etiology of CNS Disorders

One of the major problems in model development is the lack of a good understanding of the etiology of CNS disorders, and of psychiatric disorders in particular. Because animal models consist of both an inducing condition or perturbation and one or several dependent measures (Geyer and Markou, 2002; Steckler, 2002), the choice of an inducing condition necessarily involves a hypothesis about the etiology of the disorder that may or may not be correct. The decision whether to use nonperturbed animals (ie without an inducing condition) or animals that exhibit a deficit because of an experimental manipulation presents another challenge (Floresco et al, 2005). There are examples of compounds that affect behavior, and presumably neurobiological processes, differently in healthy vs diseased organisms. For example, in an animal model of anhedonia with relevance to depression, the combination of a selective serotonin reuptake inhibitor (eg fluoxetine or paroxetine) with a serotonin-1A receptor antagonist permanently reversed the anhedonia associated with psychostimulant withdrawal in rats, while inducing a transient anhedonic state in control rats (Harrison et al, 2001; Harrison and Markou, 2001; Markou et al, 2005). Similarly, the response of rats to atomoxetine in the stop signal reaction time test of impulsivity is much greater in slow than in fast responders (Robinson et al, 2008).

One experimental strategy increasingly used in drug development is a multifactorial approach that employs, alongside behavioral measures, several dependent measures that can readily be obtained in both animals and humans: imaging (eg PET ligands to predict the receptor occupancy needed to elicit a meaningful effect, fMRI, or phMRI), electrophysiological approaches (eg EEG, ERPs), psychophysiological measures (eg prepulse inhibition of startle), neurochemical approaches (eg cerebrospinal fluid measures), and neuroendocrine and autonomic parameters. A high degree of coherence among multiple dependent variables lends support to the PoC hypothesis, either by indicating the involvement or recruitment of the brain circuitry hypothesized to underlie the disorder or by better defining the active dose range. Another approach that complements the use of different dependent variables is the use of multiple experimental manipulations to model several different inducing conditions and/or engage several dependent measures. An example is to use animals genetically predisposed to a deficit and then apply environmental manipulations to reveal or exacerbate the deficit. Such a multifactorial approach provides ample correlational data to improve the prediction of outcome in humans. In addition to being of value for drug development, models with good etiological and predictive validity could also be used for further target identification (eg using genomics or proteomics technologies) and thus provide additional opportunities for drug discovery.

Use of Translational Measures

An important issue relevant to the development of translational science for CNS disorders is the identification and design of improved measures with shared construct validity between preclinical and clinical research to assist in validating animal models (Geyer and Markou, 1995). A distinction needs to be made between endophenotypes and symptoms of the disorder. Endophenotypes, also referred to as intermediate phenotypes (Tan et al, 2008), are heritable, primarily state-independent markers seen in diseased individuals. Owing to their heritability, endophenotypes are also observed more frequently in nondiseased family members of patients than in the general population (Gottesman and Shields, 1973; Gould and Gottesman, 2006; McArthur and Borsini, 2008). Two examples of endophenotypes are the deficits in prepulse inhibition and in P50 gating seen in schizophrenia patients and their relatives (Braff et al, 2008; Javitt et al, 2008; Geyer, 2006b; Patterson et al, 2008). In addition, one may consider assessing constructs that are characteristic of the disorder but not necessarily heritable or observed in asymptomatic relatives of patients. Thus, some symptoms may be endophenotypes, but in most cases they are not. Some phenotypes may also be readily measurable in both animals and humans (eg deficits in prepulse inhibition or attentional set-shifting; Javitt et al, 2008). Identical measures in humans and experimental animals are likely to be analogous or even homologous (in the sense of being mediated by the same neural substrates) and thus greatly facilitate translation. Such measures are highly desirable and cross-predictive but are not always feasible to design and assess in one or the other population (ie experimental animals, healthy human volunteers, patients). As a caveat, such homologous measures do not necessarily represent clinical trial endpoints as defined in Phase II or III protocols and as presently accepted by health authorities, which adds another level of complexity.
In many cases, one may be limited to analogous measures that are intended to assess the same construct or process in both experimental animals and humans.

An example may be drawn from the anxiety field. The ‘anxious’ rodent endophenotype would be displayed by an animal showing a consistent and heritable anxiety-like profile across a variety of situations, not necessarily involving a threatening environment. That is, natural avoidance of ‘threatening’ situations, such as those in the elevated plus maze or in open, well-lit spaces, may not be considered an endophenotype in rodents (Steckler et al, 2008).

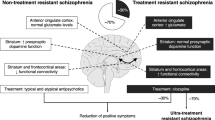

Another example comes from the field of schizophrenia. Administration of an N-methyl-D-aspartate (NMDA) receptor antagonist, such as ketamine or phencyclidine, induces in rodents behavioral changes that model some of the clinical aspects of schizophrenia (eg Amitai et al, 2007; Freeman et al, 1984; Halberstadt, 1995; Javitt and Zukin, 1991). NMDA receptor antagonists induce schizophrenia-like symptoms in healthy volunteers (Luby et al, 1959; Javitt, 1987; Krystal et al, 1994; Malhotra et al, 1996) and worsen symptoms in schizophrenia patients (Lahti et al, 1995; Malhotra et al, 1997). Again, care must be exercised in choosing the variables to be measured if NMDA receptor antagonists are to serve usefully as an inducing condition for schizophrenia-like symptoms. One has to avoid the trap of assuming that every CNS response to ketamine reflects its psychotomimetic actions. For example, changes in locomotor activity induced by NMDA receptor antagonists are not likely to closely reflect their psychotomimetic effects or the cognitive deficits they induce (Geyer and Markou, 1995; Cartmell et al, 2000; Henry et al, 2002). One approach may be to focus on a variable known to be impaired both in schizophrenia and after ketamine administration, such as working memory (Honey et al, 2004; Morgan and Curran, 2006) or prepulse inhibition (Geyer et al, 2001). In the former case, the key is to identify the rodent working memory assay that most closely resembles the human working memory assay and is sensitive to ketamine. If compound X reverses the ketamine-induced working memory impairment in rats, then the same compound should also reverse the working memory impairment induced by ketamine in human volunteers. Reversal of working memory deficits in schizophrenia patients, using the same assay system, would greatly enhance confidence in the therapeutic potential of the compound.
Nevertheless, such a series of positive and promising experimental outcomes would not necessarily indicate that compound X will provide clinical benefit to schizophrenia patients. If consistency is achieved from rats to human volunteers but not to schizophrenia patients, and no tangible benefit to the patient is apparent, it may be concluded either that the assay is a poor measure of working memory or that the impairment and/or the improvement produced by the drug candidate is of little clinical consequence.
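
The cross-species reasoning above requires a common yardstick for ‘reversal’. One convention, assumed here for illustration, expresses reversal as the percentage of the induced deficit that treatment restores, relative to the vehicle-control level. The Python sketch below uses invented working memory accuracy values for rats and human volunteers; the function name `percent_reversal` is hypothetical.

```python
def percent_reversal(control: float, deficit: float, treated: float) -> float:
    """Percentage of an induced deficit restored by treatment.

    0 means no reversal (treated == deficit); 100 means full return to the
    control level (treated == control). Assumes higher scores = better.
    """
    window = control - deficit
    if window <= 0:
        raise ValueError("no deficit was induced, so reversal is undefined")
    return 100.0 * (treated - deficit) / window


if __name__ == "__main__":
    # Hypothetical working memory accuracy (% correct), not real data.
    rat = percent_reversal(control=85.0, deficit=65.0, treated=78.0)
    human = percent_reversal(control=90.0, deficit=70.0, treated=76.0)
    print(f"rat: {rat:.0f}% reversal; human volunteers: {human:.0f}% reversal")
```

Partial reversal in both species, as in this invented example, would support the consistency of the assay across species but, as argued above, would not by itself establish clinical benefit in patients.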

In defining the neurobehavioral tests to be used, some preclinical scientists have tried to design their tests to be as close as possible to those used in humans. A good example of this approach is Logan's stop signal reaction time test of motor inhibition and corticostriatal impulsivity, which Eagle and Robbins (2003) adapted for use in the rat. In humans, stop signal reaction time can be decreased by treatment with the selective norepinephrine reuptake inhibitor atomoxetine (Chamberlain et al, 2006), and similar results have been obtained in the rat (Robinson et al, 2008). As indicated above, an alternative approach is to make the human test more ‘rat-like’, as described by Shipman and Astur (2008). Using a virtual reality approach, the Morris water maze test of hippocampal spatial memory in the rat has been adapted for use in human volunteers in an MRI scanner. These experiments not only indicated the level of hippocampal involvement in executing the task but also showed that impaired performance in elderly subjects correlated with reduced hippocampal activation. Similar efforts are underway to establish a human version of the rodent novel environment exploration paradigm (Young et al, 2007).

It must be recognized, however, that no matter how similar human and rodent neurobehavioral tests can be made to appear, it would be unrealistic to expect behavioral tests to be totally aligned across species. Although similarities exist, dissimilarities are evident, including anatomical discontinuity that is likely matched by some cognitive discontinuity (Premack, 2007). Many cognitive tests in animals are also confounded by the animal adopting mediating strategies that influence overall performance in the task. This also occurs in human tests, but such artifacts are easier to control in humans by giving explicit instructions. The ‘virtual water maze’ experiment described above not only provides a behavioral readout but also reveals the neuronal substrates underlying the response. Both the behavior and the anatomy cross-translate between humans and rats, with convergent validity and with predictive validity in the broader sense of the latter term (Geyer and Markou, 1995). If a pharmacological manipulation alters both the behavior and the activation of the hippocampal substrate, for example, then the neuroanatomical PoC (that compound X influences spatial memory through an action on hippocampal activity) can be accepted with confidence. A missing component, however, is an understanding of how the effect of compound X on hippocampal activity leads to altered performance in the cognitive task. Although fMRI and phMRI have been applied to the rat, such testing is feasible only when movement is severely restricted or the animal is anesthetized. Some of these limitations of measuring neuronal activation in vivo will eventually be overcome either by electrophysiological monitoring or by online electrochemical measurement of tissue oxygen, glucose, or blood flow in freely moving animals (ie measures that underlie the fMRI signal; Viswanathan and Freeman, 2007; see Lowry and O'Neill, 2006).
Predictions from animal models to the human condition can be only as good as the correspondence between the measures used in humans and those used in experimental animals. Current animal models may be very predictive of specific measures and constructs in humans, but unfortunately such measures are not those currently assessed in the various phases of most clinical trials.

The Impact on Preclinical Models of a Dimensional, Rather Than Syndromal, Approach to Psychiatric Treatments

In addition to the recognition that one needs to focus on specific aspects of disorders (see above; Hyman and Fenton, 2003), researchers and clinicians in psychiatry have long recognized that few signs and symptoms of psychiatric disorders are specific to any particular diagnostic category; rather, they reflect dimensions of illness that cut across diagnoses (eg Segal and Geyer, 1986; Geyer and Markou, 1995; Hyman and Fenton, 2003). Discussions have addressed whether this phenomenon reflects the comorbidity of multiple diagnostic entities within individual patients or substantial overlap in the symptoms that characterize various diagnostic syndromes (Geyer, 2006b; Markou et al, 1998; Gould and Gottesman, 2006; McArthur and Borsini, 2008). Because the latter view appears to be the most widely accepted (see references above), the new American Psychiatric Association Diagnostic and Statistical Manual of Mental Disorders (DSM-V) now in development is taking a far more dimensional approach than ever before (Lecrubier, 2008). That is, a deficit within a specific domain, such as cognitive dysfunction in schizophrenia, may not respect diagnostic boundaries but rather be an important feature of multiple disorders (eg schizophrenia, bipolar disorder, attention deficit disorders, mild cognitive impairment, Alzheimer's disease; Andrews et al, 2008; Young et al, 2007). Another example is the symptom of anhedonia, which can be seen as a core aspect of both depression and the negative symptoms of schizophrenia (eg Markou and Kenny, 2002; Paterson and Markou, 2007). Accordingly, different dimensions of a particular diagnostic entity may require treatment by different pharmacological approaches (Hyman and Fenton, 2003). As discussed above, specific signs and symptoms, whether seen in a particular diagnostic category or across multiple categories, are amenable to cross-species translational study.
Furthermore, this approach is more likely to lead to the identification of neurobiological substrates subserving behavioral abnormalities, the pharmacological amelioration of such abnormalities, and potential etiologies relevant to psychiatric disturbances (Geyer and Markou, 2002). The dimensional approach to psychiatric disorders and their treatment adds further strength to the arguments above emphasizing the importance of achieving the closest possible correspondence between the measures used in preclinical models and the PoC measures used to assess potential efficacy relatively early in the drug development process (ie Phase I or II).

The example of recent efforts to identify treatments specifically targeting cognitive deficits, rather than the entire multidimensional syndrome of schizophrenia, provides some insight into how translational psychiatry and drug development may proceed. To summarize the recent history (see Geyer, 2006a), one critical bottleneck limiting the development of treatments directed at the cognitive deficits in schizophrenia was identified as the inability of companies to register a compound specifically for this indication (Fenton et al, 2003). Although cognitive deficits are core features of schizophrenia and are not treated adequately by current antipsychotic drugs (Bilder et al, 1992; Gallhofer et al, 1996; Mortimer, 1997), the US FDA was not prepared to evaluate drugs for this indication. Therefore, the National Institute of Mental Health (NIMH) developed an initiative called ‘Measurement and Treatment Research to Improve Cognition in Schizophrenia’ (MATRICS; http://www.matrics.ucla.edu/) that built broad consensus on how the cognitive impairments in schizophrenia may be assessed and treated (Marder and Fenton, 2004). MATRICS helped establish a way for the FDA to consider registering compounds intended to treat cognitive deficits in schizophrenia, independent of treating psychosis per se. Through a series of conferences over a two-year period, MATRICS identified seven primary domains of cognitive deficits in schizophrenia and developed a neurocognitive test battery, now publicly available, for use in clinical assessments of potential cognitive enhancers (Nuechterlein et al, 2004).

The need to identify and develop cross-species tools with which to predict and evaluate novel treatments of cognitive deficits is being addressed partially by another NIMH-funded program: ‘Treatment Units for Research on Neurocognition in Schizophrenia’ (TURNS; http://www.turns.ucla.edu). This multisite clinical trials network is implementing the clinical trial design developed by the MATRICS program (see website). In some instances, compounds that were nominated for consideration by TURNS have become the focus of NIMH-funded industry-academic collaborative grants using special funding mechanisms designed for this purpose. TURNS includes a Biomarkers Subcommittee designed to facilitate the inclusion of specific biomarkers in conjunction with clinical tests (http://www.turns.ucla.edu/preclinical-TURNS-report-2006b.pdf). The supplementation of clinical neurocognitive assessments with biochemical, genetic, psychophysiological, or brain imaging measures having the potential to serve as biomarkers may facilitate the processes of drug discovery and development. One unanticipated outcome of the TURNS program has been an agreement that NIMH would provide specific support to small businesses through the mechanism of Small Business Innovation Research grants to further either preclinical or early clinical studies of promising new targets identified by TURNS as opportunities for novel treatments of impaired cognition in schizophrenia. Such programs are encouraged to be designed as collaborations between the small business and academic centers, with TURNS serving as an optional conduit of information and recommendations to assist companies in finding the appropriate academic partners.

As discussed above, given the absence of any treatments known to ameliorate the cognitive deficits in schizophrenia, preclinical drug discovery programs have difficulty assessing the predictive validity of the many cognitive tests available (Floresco et al, 2005). As a result, current efforts are based primarily on our understanding of the theoretical constructs and neurobiology related to cognition (Carter and Barch, 2007; Chudasama and Robbins, 2006). A subsequent program, ‘Cognitive Neuroscience measures of Treatment Response of Impaired Cognition in Schizophrenia’ (CNTRICS; http://cntrics.ucdavis.edu), is designed to bring the modern tools and concepts of cognitive neuroscience to bear on the assessment of cognitive deficits in schizophrenia and of the efficacy of pharmacotherapeutics in ameliorating these deficits. The literature indicates that the more sophisticated tests needed have often received very limited validation, because in many cases fewer than a handful of compounds have ever been assessed in them, and several of these tests have been established in only a single laboratory. CNTRICS sought to build a consensus regarding potential new translational paradigms that might be adaptable for use in preclinical and early clinical assessments of treatment effects on specific cognitive domains that are impacted in schizophrenia (Carter et al, 2008). In CNTRICS, paradigms that had already been applied to cross-species and PoC studies in schizophrenia, such as prepulse inhibition of startle, were not the focus. Rather, the goal was to identify new neurocognitive tasks with robust construct validity that showed promise for adaptation to the study of schizophrenia but had not yet been examined in this context.
In response to the discussions led by CNTRICS, NIMH has developed new funding programs to encourage and support the further development of such paradigms and their adaptation to make them suitable for use in psychiatric patient populations. Thus, the process initiated by the MATRICS program has led to a series of developments involving consensus-building, task definition, task development, clinical trials paradigms, construct validation, information sharing, industry-academic collaboration, and governmental efforts to design appropriate funding mechanisms to move the field forward.

On the European front, the European Commission has recently approved a budget of €2 billion, half of it provided by the pharmaceutical industry, to be applied specifically to improving preclinical–clinical translation through industrial-academic collaborative consortia (Innovative Medicines Initiative, http://imi.europa.eu/documents_en.html). Of this appropriation, €10 million will be dedicated to the first wave of projects focusing on the specific need to improve preclinical–clinical translation in psychiatric drug discovery.

The efforts in the United States focusing on cognitive deficits are a unique example of industry, academia, and government coming together to address a large unmet medical need. It is too soon, however, to assess whether this approach and these efforts will be successful. On the basis of this experience and the lessons learned, similar and improved approaches may also be applied to other dimensions of psychopathology. Despite promising discussions and some progress, the remaining task of animal model validation is of such magnitude that no single pharmaceutical company or academic center can effectively address the relevant issues, even within a specific context such as cognitive deficits. Thus, developing mechanisms for data sharing is essential, and both industrial and academic researchers must contribute. Government funding, coupled with financial support from industry, could help provide the mechanisms to accomplish this data sharing. At least two additional programs that may foster such discussions over the next few years are being proposed and evaluated, one focused on further efforts related to cognition in schizophrenia and the other much broader in scope. Such organized conference-based programs may provide critical support, because many questions remain. Is a more coordinated approach combining industry and academia required? Should consortia from industry and academia be recommended to tackle some of the issues related to preclinical discovery approaches at a precompetitive level (Floresco et al, 2005)? Should a ‘virtual institution’ coordinate such activities? For example, a Roadmap initiative from the NIH has established a Wikipedia-style data-management resource, currently focused on psychiatric disorders, that may facilitate data sharing (Sabb et al, 2008).
Is a shift in mind-set needed, with (1) industry being willing to share more data, resources, and compounds on a longer term basis, and (2) academia being prepared to do more practically oriented groundwork rather than cutting-edge scientific experimentation leading to high-impact publications? Whether such industry, academia, and government initiatives will lead to the successful market introduction of new CNS medications with novel mechanisms of action remains to be determined. Nevertheless, such collaborative work has brought renewed enthusiasm and hope that have greatly revitalized CNS drug discovery efforts, at least in the fields of cognition and schizophrenia. If these initiatives prove successful, similar ones will likely be undertaken for other dimensions of psychopathology.

SUMMARY AND CONCLUSIONS

It is now recognized that whole animal models are an integral part of CNS drug discovery because of (1) the nature of CNS disorders, particularly psychiatric disorders, and (2) the continued emphasis on translational approaches by workers in the industrial, governmental, and academic sectors (eg Lindsay, 2003; Arguello and Gogos, 2006; Littman and Williams, 2005; Spedding et al, 2005; Van Dam and De Deyn, 2006; O'Connell and Roblin, 2006; Sultana et al, 2007; Nordquist et al, 2008). In addition to the extensive review articles written on the topic, the unstructured input received by the Animal Model Subcommittee of the ACNP Medication Task Force almost unanimously indicated the need for translational efforts to enhance the utility of animal models in drug discovery. This emphasis on translational science is not new. The plea for such efforts continues because true translational work is slow and difficult and requires enormous cooperation and collaboration among workers in diverse fields that often use different languages and have different emphases in their work.

Realigning the objectives of particular scientific groups and regulatory agencies so that translational work can be conducted is a major challenge to the field. Researchers need to develop a common language across all fields and familiarize themselves with each other's experimental approaches to identify the most relevant measures to use. Preclinical and clinical measures need to assess, as closely as possible, homologous or at least analogous biological variables. In some cases, translation from humans to animals is required, whereas in other cases the converse is needed. Experimental animal tests need to be made more human-like, and human tests can be devised that more closely resemble the animal procedures. Such correspondence between preclinical and clinical measures will greatly enhance predictability and thus promote translation back and forth between animal and human studies. Furthermore, the measures used both preclinically and clinically should have construct validity, defined as accurately measuring the theoretical behavioral and neurobiological variables that are considered core to the disorder of interest (eg Cronbach and Meehl, 1955; Geyer and Markou, 1995). Reliance on construct validity is particularly relevant when no clinically effective medications exist for a particular indication and too little is known about etiology to support the demonstration of etiological validity. A multifactorial approach that uses several behavioral and biological endpoints is likely to be fruitful for all disorders, particularly those for which no known therapeutics are available. Such an approach provides converging data that facilitate decision-making, especially when decisions are based on a solid theoretical rationale and the decision criteria are set a priori.
Much of the above can be accomplished only when emphasis is placed on specific dimensions of psychopathology that often characterize more than one clinical diagnostic group. Finally, it cannot be overemphasized that there is great need for clinical trials, even in Phase III, to include measures of putative biomarkers and/or translational tests that provide objective laboratory-based measures, along with measures of clinical outcome (Kraemer et al, 2002; Frank and Hargreaves, 2003; De Gruttola et al, 2001). Such data are enormously valuable for determining the predictive value of measures provided by animal models and clinical experimental work, such as PoC in humans, and for promoting our understanding of the neuropathology of such disorders.

This approach requires substantial investments of money and time. Government agencies in both the United States and Europe have already made such investments and have strongly signaled their intent to fund work in academia or industry-academia collaborations that will enhance translational science and drug discovery, with the long-term goal of bringing new chemical entities to market as therapeutics. Thus, the challenge for the industry is to allow sufficient time for this approach to produce the desired results. In short, a cooperative approach is required to assemble the diverse multidisciplinary expertise needed to cope with the magnitude of the task and to share the significant investment of time and money required of all parties involved.

Within the aforementioned general principles, several decision-making scenarios may be envisioned as fruitful for making the best use of animal model data (eg Sams-Dodd, 2005; Sultana et al, 2007; Pangalos et al, 2007). As illustrated by the examples provided in this review and other similar reviews in the literature, each drug discovery quest in CNS disorders is characterized by its own issues, including (1) the limitations and strengths of the animal models, target(s), and available lead compounds, (2) clinical requirements, (3) the effect sizes needed to produce significant clinical improvement, and (4) the availability, or lack thereof, of biomarkers and/or knowledge of neurosubstrates. Thus, although we suggest that preclinical and clinical researchers agree a priori on a translational decision flow chart for each particular project, designing a detailed universal decision-making plan for all cases may be difficult. Often, there are several different solutions and approaches to each drug discovery project, each with its own merits and limitations.

In summary, the current translational approach recognizes that accurate predictions depend on the quality, reliability, and relevance to the disorder of both the preclinical and clinical measures. Although this requirement increases the burden on animal models because extensive refinement and revalidation are needed, the improved predictability of the models is expected to outweigh the effort required. Moreover, the need for extensive validation is not limited to animal studies; it applies equally to challenge studies in healthy volunteers and to sophisticated, neurobiologically informed tests in patient trials, whose validity must be demonstrated to regulatory authorities.

In conclusion, the new translational approach, combined with the evolving focus on constructs and dimensions that are core to CNS disorders and with the emphasis on identifying reliable biomarkers for CNS disorders that correlate with clinical and functional endpoints (Kraemer et al, 2002; Frank and Hargreaves, 2003; De Gruttola et al, 2001), provides a fresh and optimistic way to minimize risk in drug discovery and development. This approach involves parallel and theoretically linked advances in the preclinical and clinical aspects of the process.

References

Amitai N, Semenova S, Markou A (2007). Cognitive-disruptive effects of the psychotomimetic phencyclidine and attenuation by atypical antipsychotic medications in rats. Psychopharmacology 193: 521–537.

Andrews G, Anderson TM, Slade T, Sunderland M (2008). Classification of anxiety and depressive disorders: problems and solutions. Depress Anxiety 25: 274–281.

Apolone G, Bassi M, Begaud B, Cascorbi I, Chiamulera C, Dodoo A et al (2008). Erice statement on drug innovation. Br J Clin Pharmacol 65: 440–441.

Arguello PA, Gogos JA (2006). Modeling madness in mice: one piece at a time. Neuron 52: 179–196.

Arneric SP, Holladay M, Williams M (2007). Neuronal nicotinic receptors: a perspective on two decades of drug discovery research. Biochem Pharmacol 74: 1092–1101.

Bilder RM, Lieberman JA, Kim Y, Alvir JM, Reiter G (1992). Methylphenidate and neuroleptic effects on oral word production in schizophrenia. Neuropsychiatry Neuropsychol Behav Neurol 5: 262–271.

Borsini F, Podhorna J, Marazziti D (2002). Do animal models of anxiety predict anxiolytic-like effects of antidepressants? Psychopharmacology 163: 121–141.

Braff DL, Greenwood TA, Swerdlow NR, Light GA, Schork NJ (2008). Advances in endophenotyping schizophrenia. World Psychiatry 7: 11–18.

Brodie MJ (2001). Do we need any more new antiepileptic drugs? Epilepsy Res 45: 3–6.

Bruijnzeel AW, Markou A (2003). Characterization of the effects of bupropion on the reinforcing properties of nicotine and food in rats. Synapse 50: 20–28.

Bunney Jr WE, Davis JM (1965). Norepinephrine in depressive reactions: a review. Arch Gen Psychiatry 13: 483–494.

Caldecott-Hazard S, Morgan DG, DeLeon-Jones M, Overstreet DH, Janowsky D (1991). Clinical and biochemical aspects of depressive disorders: II. Transmitter/receptor theories. Synapse 9: 251–301.

Carobrez AP, Bertoglio LJ (2005). Ethological and temporal analyses of anxiety-like behavior: the elevated plus maze model 20 years on. Neurosci Biobehav Rev 29: 1193–1205.

Carter CS, Barch DM (2007). Cognitive neuroscience-based approaches to measuring and improving treatment effects on cognition in schizophrenia: the CNTRICS initiative. Schizophr Bull 33: 1131–1137.

Carter CS, Barch DM, Buchanan RW, Bullmore E, Krystal JH, Cohen J et al (2008). Identifying cognitive mechanisms targeted for treatment development in schizophrenia: an overview of the first meeting of the Cognitive Neuroscience Treatment Research to Improve Cognition in Schizophrenia Initiative. Biol Psychiatry 64: 4–10.

Cartmell J, Monn JA, Schoepp DD (2000). Attenuation of specific PCP-evoked behaviors by the potent mGlu2/3 receptor agonist, LY379268 and comparison with the atypical antipsychotic, clozapine. Psychopharmacology 148: 423–429.

Chamberlain SR, Muller U, Blackwell AD, Clark L, Robbins TW, Sahakian BJ (2006). Neurochemical modulation of response inhibition and probabilistic learning in humans. Science 311: 861–863.

Chiamulera C, Fumagalli G (2007). Nicotinic receptors and the treatment of attentional and cognitive deficits in neuropsychiatric disorders: focus on the α7 nicotinic acetylcholine receptor as a promising drug target for schizophrenia. Cent Nerv Syst Agents Med Chem 7: 269–288.

Chudasama Y, Robbins TW (2006). Functions of frontostriatal systems in cognition: comparative neuropsychopharmacological studies in rats, monkeys and humans. Biol Psychol 73: 19–38.

Cole JC, Rodgers RJ (1995). Ethological comparison of the effects of diazepam and acute/chronic imipramine on the behavior of mice in the elevated plus maze. Pharmacol Biochem Behav 52: 473–478.

Conn PJ, Roth BL (2008). Opportunities and challenges of psychiatric drug discovery: roles for scientists in academic, industry, and government settings. Neuropsychopharmacology 33: 2300.

Coppen A (1967). The biochemistry of affective disorders. Br J Psychiatry 113: 1237–1264.

Coppen AJ, Doogan DP (1988). Serotonin and its place in the pathogenesis of depression. J Clin Psychiatry 49 (Suppl 4): 4–11.

Crawley JN (1985). Exploratory behavior models of anxiety in mice. Neurosci Biobehav Rev 9: 37–44.

Crawley JN (2008). Behavioral phenotyping strategies for mutant mice. Neuron 57: 809–818.

Crawley JN, Belknap JK, Collins A, Crabbe JC, Frankel W, Henderson N et al (1997). Behavioral phenotypes of inbred mouse strains: implications and recommendations for molecular studies. Psychopharmacology 132: 107–124.

Creese I, Burt DR, Snyder SH (1976). Dopamine receptor binding predicts clinical and pharmacological potencies of antischizophrenic drugs. Science 192: 481–483.

Cronbach LJ, Meehl PE (1955). Construct validity in psychological tests. Psychol Bull 52: 281–302.

Cryan JF, Markou A, Lucki I (2002). Assessing antidepressant activity in rodents: recent developments and future needs. Trends Pharmacol Sci 23: 238–245.

Davies P, Maloney AJF (1976). Selective loss of central cholinergic neurons in Alzheimer's disease. Lancet 2: 1403.

Dawson GR, Tricklebank MD (1995). Use of the elevated plus maze in the search for novel anxiolytic agents. Trends Pharmacol Sci 16: 33–36.

De Gruttola VG, Clax P, DeMets DL, Downing GJ, Ellenberg SS, Friedman L et al (2001). Considerations in the evaluation of surrogate endpoints in clinical trials: summary of a National Institutes of Health workshop. Control Clin Trials 22: 485–502.

Drachman DA (1977). Memory and cognitive function in man: does the cholinergic system have a specific role? Neurology 27: 783–790.

Drews J, Ryser S (1997). The role of innovation in drug development. Nat Biotechnol 15: 1318–1319.

Dulawa SC, Grandy DK, Low MJ, Paulus MP, Geyer MA (1999). Dopamine D4 receptor-knock-out mice exhibit reduced exploration of novel stimuli. J Neurosci 19: 9550–9556.

Eagle DM, Robbins TW (2003). Inhibitory control in rats performing a stop-signal reaction-time task: effects of lesions of the medial striatum and d-amphetamine. Behav Neurosci 117: 1302–1317.

Edison P, Archer HA, Hinz R, Hammers A, Pavese N, Tai YF et al (2006). Amyloid, hypometabolism, and cognition in Alzheimer disease: an [11C]PIB and [18F]FDG PET study. Neurology 68: 501–508.

Einat H, Manji HK (2006). Cellular plasticity cascades: genes-to-behavior pathways in animal models of bipolar disorder. Biol Psychiatry 59: 1160–1171.

Ellenbroek BA, Cools AR (2000). Animal models for the negative symptoms of schizophrenia. Behav Pharmacol 11: 223–233.

Enomoto T, Noda Y, Nabeshima T (2007). Phencyclidine and genetic animal models of schizophrenia developed in relation to the glutamate hypothesis. Methods Find Exp Clin Pharmacol 29: 291–301.

Fenton WS, Stover EL, Insel TR (2003). Breaking the log-jam in treatment development for cognition in schizophrenia: NIMH perspective. Psychopharmacology 169: 365–366.

Feuerstein TJ (2008). Presynaptic receptors for dopamine, histamine, and serotonin. Handb Exp Pharmacol 184: 289–338.

File SE (2001). Factors controlling measures of anxiety and responses to novelty in the mouse. Behav Brain Res 125: 151–157.

Floresco S, Geyer MA, Gold LH, Grace AA (2005). Developing predictive animal models and establishing a preclinical trials network for assessing treatment effects on cognition in schizophrenia. Schizophr Bull 31: 888–894.

Food and Drug Administration (2004). Innovation or Stagnation? Challenge and Opportunity on the Critical Path to New Medical Products. U.S. Food and Drug Administration.

Frank R, Hargreaves R (2003). Clinical biomarkers in drug discovery and development. Nat Rev Drug Discov 2: 566–580.