Abstract

From thermodynamic origins, the concept of entropy has expanded to a range of statistical measures of uncertainty, which may still be thermodynamically significant1,2. However, laboratory measurements of entropy continue to rely on direct measurements of heat. New technologies that can map out myriads of microscopic degrees of freedom suggest direct determination of configurational entropy by counting in systems where it is thermodynamically inaccessible, such as granular3,4,5,6,7,8 and colloidal9,10,11,12,13 materials, proteins14 and lithographically fabricated nanometre-scale arrays. Here, we demonstrate a conditional-probability technique to calculate entropy densities of translation-invariant states on lattices using limited configuration data on small clusters, and apply it to arrays of interacting nanometre-scale magnetic islands (artificial spin ice)15. Models for statistically disordered systems can be assessed by applying the method to relative entropy densities. For artificial spin ice, this analysis shows that nearest-neighbour correlations drive longer-range ones.

Main

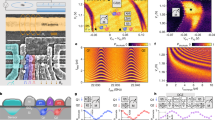

Our artificial spin-ice15 systems are arrays of lithographically defined single-domain ferromagnetic islands (25 nm thick and 220 nm×80 nm in area) on the links of square and honeycomb lattices (Fig. 1). Shape anisotropy forces island moments to point along the long axes, forming effective Ising spins. The coercive field is about 770 Oe (that is, a barrier of order 10^5 K), whereas the field from one island on a neighbour is only of order 10 Oe. The arrays are demagnetized by rotating in an in-plane external magnetic field Hext, initially strong enough to produce complete polarization, subsequently reduced to zero in small increments15,16 ΔHext, with reversal of the field at each step. For small step sizes, the result is a statistically reproducible macrostate, operationally defined by the demagnetization protocol15,16, which is probed by magnetic force microscopy to obtain the static moments of individual islands. We want the entropy of a single macrostate, but distinct runs might produce distinct macrostates (for example, a residual magnetization at larger step size). In most cases, data are collected in a single run, averting the problem, but the entropy of a macrostate mixture would be relatively unimportant anyway, as is shown in Supplementary Section S3. For large structurally regular systems such as ours, it is more appropriate to work not with total entropy, but with entropy density (see equation (1)) having units of bits per island, a value of 1 corresponding to complete disorder.

The strongest interactions, between islands meeting at a vertex, favour head-to-tail moment alignment. However, not all of these can be satisfied simultaneously, resulting in a kind of frustration. Still, for the square lattice, the ground state is only twofold degenerate15, because type-I vertices, as defined in Fig. 1, are lowest in energy. That the ordered ground state is never found experimentally16,17 suggests that the evolution is kinetically constrained18,19. For instance, one spin flip converts a type-II vertex to a type-III vertex; flips of two perpendicular islands are required to reach type-I. In contrast to the square lattice, the honeycomb lattice has a macroscopically degenerate ground state when only nearest- or next-nearest-neighbour interactions are effective (longer-range interactions break the degeneracy20 at a much lower energy scale). The interactions prefer a 2-in/1-out or 1-in/2-out arrangement at every vertex (quasi-ice rule). This constraint alone produces a state, ideal quasi-ice, with an entropy density of 0.724 bits per island. Interactions between (mono)pole strengths Q at neighbouring vertices21,22 reduce the ground-state entropy density to 0.15 bits per island by favouring Q=−1 (2-in/1-out) next to Q=+1. The contrast between the square- and honeycomb-lattice ground states (twofold degenerate versus macroscopically degenerate) provides an opportunity to investigate the interplay between the strictures of kinetic constraint and the freedom of massive degeneracy.

We now develop a method to extract the entropy densities on our lattices from the measured configurations of the island magnetic moments. Consider a finite cluster Λ of islands, for example, the five-island cluster comprising two adjacent vertices (see Fig. 2 legend) and the collection of random variables σΛ that are the spins belonging to Λ. The Shannon–Gibbs–Boltzmann entropy23,24,25 of PΛ(σΛ), the distribution of σΛ, is

$$S(P_\Lambda) = -\sum_{\sigma_\Lambda} P_\Lambda(\sigma_\Lambda)\,\log_2 P_\Lambda(\sigma_\Lambda),$$

where the sum runs over all possible values of the random variable(s) σΛ. Note that S is rendered dimensionless by omitting Boltzmann’s constant, and the base of the logarithm is 2, so that the units are bits. If Λ is taken ever larger while the fraction of islands on the edge tends to zero (van Hove limit), we obtain the bulk entropy density s:

$$s = \lim_{\Lambda\to\infty} \frac{S(P_\Lambda)}{|\Lambda|}. \qquad (1)$$

If each island moment independently points either way with probability 1/2, then the entropy density is one bit per island, the largest possible. Lower entropy density indicates correlations in a generic sense. For example, the fully polarized initial state created by a large Hext has zero entropy density.
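As a minimal sketch of how a cluster entropy S(PΛ) might be estimated from observed configurations, the Python below counts how often each configuration of a cluster occurs and applies the Shannon formula in bits. The function names and the encoding of moments as ±1 tuples are illustrative assumptions, not taken from the analysis behind this Letter.

```python
from collections import Counter
from itertools import product
from math import log2

def shannon_entropy_bits(counts):
    """Plug-in Shannon entropy (bits) of the empirical distribution given by counts."""
    total = sum(counts.values())
    return sum((n / total) * log2(total / n) for n in counts.values() if n > 0)

def entropy_per_island(samples):
    """S(P_cluster)/|cluster| from equal-length spin tuples (hypothetical +1/-1 encoding)."""
    counts = Counter(tuple(s) for s in samples)
    return shannon_entropy_bits(counts) / len(samples[0])

# Independent, unbiased moments: every configuration of a 3-island cluster seen equally often.
disordered = list(product((+1, -1), repeat=3))
# Fully polarized state: the same configuration in every observation.
polarized = [(+1, +1, +1)] * 10

print(entropy_per_island(disordered))  # one bit per island
print(entropy_per_island(polarized))   # zero entropy density
```

The two test cases reproduce the limiting values quoted above: one bit per island for independent unbiased moments and zero for the fully polarized state.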

Figure 2: All bounds are derived using configuration statistics for the five-island cluster Λ5 shown on the right. Crosses are the direct estimate S(Λ5)/5, whereas filled diamonds use inequality (5) and open circles use inequality (4). The dashed lines are the result of our technique applied to ideal quasi-ice (every vertex type-I with no other restrictions), the upper from the simple cluster-estimate, and the lower two (indistinguishable) from the two conditioning approximations. The simple cluster-estimate applied to a single vertex would give 0.86 bits per island. The solid line at 0.724 shows the actual entropy density of ideal quasi-ice. At the smallest lattice constants and smallest step sizes, subtle signs of longer-ranged monopole correlations appear.

The obvious approximation to s suggested by equation (1) is simply S(PΛ)/|Λ| for the biggest practicable cluster. However, this ‘simple cluster-estimate’ is not very good because the configuration space grows exponentially with cluster size |Λ|, and boundary-crossing correlations are completely neglected. To understand the last point, suppose the entire lattice covered without gaps or overlap by translates of Λ. The state constructed from the marginals of P on those translates, taking them to be independent, has entropy density exactly S(PΛ)/|Λ|. However, short-range boundary-crossing correlations are the same as corresponding intracluster correlations, so are reflected in small-cluster data and can be properly counted using conditional entropy. The method resembles one proposed some years ago26,27 for Monte Carlo simulations of lattice-spin models in equilibrium.

One way to think of the total entropy of a given macrostate is as the average uncertainty about the particular microstate at hand. Imagine a microstate of the honeycomb lattice revealed three islands (one vertex) at a time, row-by-row. One instant in the process looks like this (the grey vertex is about to be revealed):

Neglecting the (far distant) lattice edge, each newly revealed vertex bears the same spatial relation to those already known, so the revelation, on average, reduces the uncertainty by exactly three times the entropy per spin. Cast this way, the entropy density appears as a conditional entropy28. The conditional entropy of σΛ given σΓ is

$$S(\sigma_\Lambda|\sigma_\Gamma) = -\sum_{\sigma_\Lambda,\sigma_\Gamma} P(\sigma_\Lambda,\sigma_\Gamma)\,\log_2 P(\sigma_\Lambda|\sigma_\Gamma), \qquad (2)$$

where P(σΛ|σΓ)=P(σΛ,σΓ)/P(σΓ) is the conditional probability of σΛ given σΓ. The joint entropy of σΛ and σΓ then has the pleasant decomposition S(σΛ,σΓ)=S(σΛ|σΓ)+S(σΓ). (Learning σΓ and σΛ at once is the same as learning σΓ and then σΛ.) Note that if Λ and Γ overlap, common spins contribute zero to S(σΛ|σΓ).

As a simple illustration, suppose Λ and Γ are single islands, with the probabilities for P(σΛ,σΓ) being given by P(↓,↑)=0 and P(↑,↑)=P(↑,↓)=P(↓,↓)=1/3. If we know that σΓ=↑, then the remaining uncertainty about σΛ is zero, but if we know that σΓ=↓, then the uncertainty is total: 1 bit. Weighting by the probabilities of σΓ to be ↑ or ↓ gives the entropy of σΛ conditioned on σΓ: P(σΓ=↑)·(0)+P(σΓ=↓)·(1)=2/3 bit.
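The arithmetic of this illustration can be checked with a short Python sketch. The dictionary layout (keys ordered as (σΛ, σΓ)) and the function names are assumptions made for the example only.

```python
from math import log2

def entropy_bits(probs):
    """Shannon entropy in bits of a collection of probabilities."""
    return sum(p * log2(1.0 / p) for p in probs if p > 0)

def conditional_entropy_bits(joint):
    """S(lam | gam) in bits; joint maps (lam, gam) -> probability."""
    marginal = {}
    for (_, gam), p in joint.items():
        marginal[gam] = marginal.get(gam, 0.0) + p
    return sum(p * log2(marginal[gam] / p) for (_, gam), p in joint.items() if p > 0)

# Joint distribution from the text, keyed as (sigma_Lambda, sigma_Gamma).
joint = {
    ("up", "up"): 1 / 3,
    ("up", "down"): 1 / 3,
    ("down", "down"): 1 / 3,
    ("down", "up"): 0.0,
}

s_cond = conditional_entropy_bits(joint)      # S(sigma_Lambda | sigma_Gamma)
s_joint = entropy_bits(joint.values())        # S(sigma_Lambda, sigma_Gamma)
s_gamma = entropy_bits([1 / 3, 2 / 3])        # S(sigma_Gamma): up with 1/3, down with 2/3

print(s_cond)             # 2/3 bit
print(s_joint - s_gamma)  # same value, from the decomposition S(L,G) = S(L|G) + S(G)
```

Both routes give 2/3 bit, which also confirms the decomposition of the joint entropy for this distribution.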

The Methods section explains how conditional entropy and other basic notions of information theory can be used to obtain good approximations to the entropy density s from limited data. The result of applying two such approximations to the experimental data for honeycomb lattices is plotted in Fig. 2 as a function of field step size ΔHext for each lattice constant, along with one simple cluster-estimate for comparison. Data-set sizes are reported in Supplementary Section S1. The simple cluster-estimate S(Λ)/|Λ| using the five-island di-vertex (Fig. 2 legend) provides a very poor bound compared with our conditioning technique. Reducing the lattice constant or step size should lower the entropy because the first leads to stronger interactions, and the second gives interactions a better chance to be the decisive factor for island flips. The expected lattice constant trend is seen but there is an unexpected plateau with respect to ΔHext.
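Why conditioning can outperform the simple cluster-estimate is easiest to see in a toy case. The sketch below is not the lattice calculation of this Letter: it assumes a one-dimensional chain of two-state spins in which each spin differs from its predecessor with a hypothetical probability q, for which the exact entropy density is known to be the binary entropy of q. It compares S(cluster)/|cluster| with the conditional entropy of one spin given its neighbour, both computed from the same two-spin statistics.

```python
from math import log2

def entropy_bits(probs):
    """Shannon entropy in bits of a collection of probabilities."""
    return sum(p * log2(1.0 / p) for p in probs if p > 0)

q = 0.1  # hypothetical probability that neighbouring spins differ

# Stationary symmetric chain: P(x1, x2) = 1/2 * (1 - q if x1 == x2 else q).
joint = {(a, b): 0.5 * ((1 - q) if a == b else q) for a in (0, 1) for b in (0, 1)}

s_pair = entropy_bits(joint.values())     # entropy of the two-spin cluster
s_single = entropy_bits([0.5, 0.5])       # entropy of one spin (1 bit)

simple_estimate = s_pair / 2              # S(P_cluster)/|cluster|
conditional_estimate = s_pair - s_single  # S(x2 | x1) = S(x1, x2) - S(x1)
exact_density = entropy_bits([q, 1 - q])  # entropy rate of this Markov chain

print(simple_estimate, conditional_estimate, exact_density)
```

In this toy chain the conditional estimate coincides with the exact density, whereas the simple cluster-estimate is an upper bound that lies well above it, because it treats the correlation across the cluster boundary as absent.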

It makes sense to compare the experimental states with ideal honeycomb quasi-ice through entropy densities. That of honeycomb quasi-ice is 0.724 bits per island (Supplementary Section S2). However, proper comparison requires using the same estimates for both systems. The dashed lines in the plot show the estimates for the model system, the upper for the simple cluster-estimate (compare crosses) and the lower two (indistinguishable) for the conditioning estimates. Hence, the 520 nm array at ΔHext=1.6 Oe has lower entropy than ideal quasi-ice. This can be explained by correlations between net magnetic charge Q=±1 of nearest-neighbour vertices. Ideal quasi-ice has a weak anticorrelation: 〈QiQj〉≈−1/9. In some experimental samples, this correlation reaches −0.25, reflected in a small entropy decrease. Complete sublattice ordering, 〈QiQj〉=−1, would reduce the entropy to s≈0.15 bits per island (Supplementary Section S2). This extra correlation may explain the slightly better performance of the bound with a complete vertex in the conditioning data.
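The values 0.86 bits per island and −1/9 can be recovered by direct enumeration under a simplifying assumption: that all configurations of an isolated cluster obeying the quasi-ice rule are equally likely. The true marginal of ideal quasi-ice on a cluster need not be exactly uniform, so the sketch below is a plausibility check rather than the calculation of Supplementary Section S2. The spin encoding and variable names are hypothetical.

```python
from itertools import product
from math import log2

# Hypothetical encoding: +1 means a moment points out of the vertex it is listed under.
# Islands b, c belong to vertex i; d, e to vertex j; a is shared, +1 meaning "from i to j".
allowed = []
for a, b, c, d, e in product((+1, -1), repeat=5):
    q_i = a + b + c    # out minus in at vertex i
    q_j = -a + d + e   # the shared island points into j when it points out of i
    if abs(q_i) == 1 and abs(q_j) == 1:  # quasi-ice rule at both vertices
        allowed.append((q_i, q_j))

n_allowed = len(allowed)
charge_corr = sum(qi * qj for qi, qj in allowed) / n_allowed

# Single vertex: 6 of the 8 three-island configurations obey the rule.
single_vertex_estimate = log2(6) / 3

print(n_allowed)               # number of rule-obeying five-island configurations
print(charge_corr)             # nearest-neighbour charge correlation under the uniform assumption
print(single_vertex_estimate)  # simple cluster-estimate for one vertex, bits per island
```

Under this assumption the charge correlation is exactly −1/9 and the single-vertex estimate rounds to 0.86 bits per island; the product of charges does not depend on which sign convention is chosen for Q.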

The entropy of honeycomb artificial spin ice reveals a state close to ideal quasi-ice, with slight antiferromagnetic vertex charge ordering. The contrasting failure of a.c. demagnetization of the square lattice to approach the completely ordered ground state is precisely quantified by entropy. We use three approximations (upper bounds) for the square-lattice entropy density. They are found by a procedure parallel to that for the honeycomb lattice and are shown in the legend of Fig. 3. The three agree well, and this rough convergence test suggests that they are close to the true entropy densities. As for the honeycomb lattice, we expect smaller entropy for smaller ΔHext or smaller lattice spacing. In general, this seems to be the case, but the ground state is never approached. Even extrapolations ΔHext→0 have large entropy densities.

Figure 3: The three approximations agree closely. Even extrapolated to zero step size, a.c.-demagnetized square spin ice never approaches the ground state. The diamond estimate is always lower than the square because the former retains more conditioning, whereas the added islands are the same. Data-set sizes are reported in Supplementary Information.

Closer inspection suggests jamming at ΔHext. A kinetically constrained approach to ground states defines the behaviour of complex systems across many fields19, such as protein folding14, self-assembly, glasses and granular systems3,4,5,6,7,8. Ergodicity is thwarted by both tall energy barriers and configuration-space constrictions, combined into free-energy barriers. An ergodic system explores all of configuration space, whereas folding proteins live within a ‘folding funnel’. This dynamic constriction of allowed configurations introduces many deep conceptual challenges. a.c.-demagnetized artificial spin ice puts the conceptual challenge of kinetic constraint into sharp relief: as the rotating external field weakens, islands one-by-one fall out of ‘field following’ mode, driven by inter-island interactions that suppress the local depolarization field and lock in the orientation of the fallen-away island. Thus, each spin probably makes only a single decision on how to point on escaping coercion, with no prospect of later surmounting barriers. The system’s approach to the ground state is essentially one-way. Thus, it is not surprising that only a macroscopically degenerate ground-state target can be ‘hit’. Notwithstanding this failure of ergodicity, square-lattice artificial spin ice can still be described by a statistical model based, like thermodynamics, on maximum entropy16,17. As an extreme case of kinetic constraint, rotationally annealed artificial spin ice can afford unique insights into statistical mechanics of complex systems. For example, array topology can control the ground-state degeneracy, as seen here.

Even without detailed knowledge of how the final square-lattice states develop, a concisely descriptive model may be sought. We conjecture that the lattice state is fully determined by correlations between nearest-neighbour pairs diagonally or straight across a vertex, and thus model it by a constrained maximum-entropy state, which is as random as possible, consistent with those correlations. Adapting the conditioning techniques (see the Methods section), we can efficiently estimate the entropy density of the experimental states relative to the maximum-entropy state, s(expt||ME). This global measure of dissimilarity does not depend on identifying the ‘right’ correlations, and allows an assessment of the model. The results are given in Table 1.

Note that29 the probability that a typical experimental state in region Λ would be mistaken for a maximum-entropy state decays asymptotically as exp[−|Λ|s(expt||ME)]. Apparently, the entropy reduction below that of independent islands in the square lattices is well accounted for by nearest-neighbour correlations, and those they entail.

The reduction in the entropy of an interacting system below that of uncoupled degrees of freedom is due mostly to short-range correlations, even near a critical point. Thus, the efficient conditional entropy technique described here can be applied to a wide variety of complex systems, such as granular media and colloidal systems, that can now be spatially resolved in the required detail9,10,11,12,13. Entropy density is a general measure of order that is not tied to pre-identified correlations. Hence, it is especially valuable for states such as square ice or other jammed, glassy states that are far from identifiable landmarks.

Methods

According to the discussion around equation (2), the entropy density s of the infinite honeycomb lattice is given by (ignore the colour for now)

Now we find small-cluster approximations to this entropy density, using two principles28. (1) If σΓ is known there is no uncertainty about it, so S(σΛ,σΓ|σΓ)=S(σΛ|σΓ) for arbitrary Λ and Γ. Thus, in pictorial equations, visual perspicuity will dictate retention or omission of conditioning variables on the left of the bar. (2) Providing more conditioning information lessens uncertainty: S(σΛ|σΓ)≥S(σΛ|σΓ,σΓ′). Unlike a simple application of equation (1), our conditional entropy method fully accounts for short-range correlations without boundary error. Like the simple cluster-estimate, it provides upper bounds on the true entropy density. Dropping all but the red islands in equation (3) yields

Alternatively, if we add a vertex in two stages rather than all at once as in equation (3), we immediately arrive at

The red islands again provide a visual cue. Following it, we get the bound

Bounds for a translation-invariant state on the square lattice can be obtained in a similar way. We use the one-step bounds

and

and the two-step bound

A constrained maximum-entropy state on the square lattice with given correlations for diagonal and across-the-vertex nearest-neighbour pairs coincides with a Gibbs state for an Ising model with effective pair interactions for the two types of nearest neighbour of whatever strength is required to reproduce the required correlations. We have previously16 studied specific pair correlations in such maximum-entropy states using Monte Carlo simulation. Building on the conditioning techniques developed in this Letter, we compute s(expt||ME), the relative entropy density of an experimental state to the corresponding constrained maximum-entropy state. In the case of two probability measures P and Q on the configuration space of a lattice system, the relative entropy of their restrictions to some finite region Λ is

$$S(P_\Lambda\|Q_\Lambda) = \sum_{\sigma_\Lambda} P_\Lambda(\sigma_\Lambda)\,\log_2\frac{P_\Lambda(\sigma_\Lambda)}{Q_\Lambda(\sigma_\Lambda)}. \qquad (6)$$

The limiting relative entropy density that we want is

$$s(P\|Q) = \lim_{\Lambda\to\infty} \frac{S(P_\Lambda\|Q_\Lambda)}{|\Lambda|}.$$

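The logarithm of the probability in equation (6) can be expanded in terms of conditionals just as was done for the entropy. For any collection {X1,…,XN} of random variables (the m=1 term being read as an unconditioned probability),

$$\log_2 P(X_1,\ldots,X_N) = \sum_{m=1}^{N} \log_2 P(X_m|X_1,\ldots,X_{m-1})$$

parallels exactly the formula

$$S(X_1,\ldots,X_N) = \sum_{m=1}^{N} S(X_m|X_1,\ldots,X_{m-1}).$$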

The main difference is that log2P(·) is a random variable, whereas S(·) is a number. Any of the class of approximations for the conditional entropy densities can now be applied to the conditional probabilities to obtain the relative entropy. However, we do not get bounds in this way, just ordinary estimates.
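The expansion of the log-probability into conditionals can be checked numerically on a small example. The sketch below uses two hypothetical two-spin distributions, P (correlated) and Q (independent fair spins), and confirms that the relative entropy computed directly equals the sum of a first-spin term and a conditional term. All names and numbers are illustrative assumptions.

```python
from math import log2

def marginal_first(joint):
    """Marginal distribution of the first variable of a two-variable joint."""
    m = {}
    for (x1, _), p in joint.items():
        m[x1] = m.get(x1, 0.0) + p
    return m

def relative_entropy_bits(p, q):
    """Sum over k of p[k] * log2(p[k] / q[k]); assumes q[k] > 0 wherever p[k] > 0."""
    return sum(pv * log2(pv / q[k]) for k, pv in p.items() if pv > 0)

# Hypothetical two-spin distributions.
P = {(0, 0): 0.5, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.3}
Q = {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.25, (1, 1): 0.25}

P1, Q1 = marginal_first(P), marginal_first(Q)

direct = relative_entropy_bits(P, Q)
first_term = relative_entropy_bits(P1, Q1)
# P-average of log2 [ P(x2 | x1) / Q(x2 | x1) ]
conditional_term = sum(
    p * log2((p / P1[x1]) / (Q[(x1, x2)] / Q1[x1]))
    for (x1, x2), p in P.items() if p > 0
)

print(direct, first_term + conditional_term)
```

The agreement of the two numbers is the two-variable case of the chain rule; on a lattice, the conditional term is where a small-cluster approximation would be substituted.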

If PME is a maximum-entropy state constrained to have expectations of specified observables match their expectations in P, then the relative entropy density s(P||PME) ought to equal the difference in the absolute densities. We believe that the method we have used is superior to this simple subtraction because it suppresses the unwanted effects of fluctuations in the counting of low-probability configurations. Owing to limited experimental data, configurations that would be expected to have only a few occurrences may have none at all, which has an anomalously large effect in the subtraction.
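The sensitivity to limited data can be illustrated generically. The sketch below does not reproduce the analysis of this Letter: it draws a hypothetical small sample from independent fair spins on a five-island cluster and shows that a plug-in entropy estimate falls below the true value when the number of samples is comparable to the number of configurations. The sample size, seed and names are assumptions.

```python
import random
from collections import Counter
from math import log2

def plug_in_entropy_bits(samples):
    """Entropy (bits) of the empirical distribution of the observed configurations."""
    counts = Counter(samples)
    total = len(samples)
    return sum((n / total) * log2(total / n) for n in counts.values())

rng = random.Random(0)  # fixed seed so that the sketch is reproducible
n_islands = 5           # cluster size: 2**5 = 32 possible configurations
n_samples = 40          # hypothetical, deliberately small data set

samples = [
    tuple(rng.choice((+1, -1)) for _ in range(n_islands))
    for _ in range(n_samples)
]

true_entropy = float(n_islands)  # independent fair spins: one bit per island
estimate = plug_in_entropy_bits(samples)
n_unseen = 2 ** n_islands - len(set(samples))

print(true_entropy, estimate, n_unseen)
```

With 40 samples spread over 32 configurations the empirical distribution cannot be uniform, so the plug-in estimate is necessarily below 5 bits; errors of this kind in two separately estimated absolute entropies need not cancel when the two are subtracted.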

References

Landauer, R. Irreversibility and heat generation in the computing process. IBM J. Res. Dev. 5, 183–191 (1961).

Leff, H. S. & Rex, A. F. (eds) Maxwell’s Demon 2: Entropy, Classical and Quantum Information, Computing 2nd edn (Institute of Physics, 2003).

Liu, A. J. & Nagel, S. R. Jamming is not just cool any more. Nature 396, 21–22 (1998).

Coniglio, A. & Nicodemi, M. The jamming transition of granular media. J. Phys. Condens. Matter 12, 6601–6610 (2000).

O’Hern, C. S., Sibert, L. E., Liu, A. J. & Nagel, S. R. Jamming at zero temperature and zero applied stress: The epitome of disorder. Phys. Rev. E 68, 011306 (2003).

Corwin, E. I., Jaeger, H. M. & Nagel, S. R. Structural signatures of jamming in granular media. Nature 435, 1075–1078 (2005).

Majumdar, T. S., Sperl, M., Luding, S. & Behringer, R. P. Jamming transition in granular systems. Phys. Rev. Lett. 98, 058001 (2007).

Behringer, R. P., Daniels, K. E., Majumdar, T. S. & Sperl, M. Fluctuations, correlations and transitions in granular materials: Statistical mechanics for a non-conventional system. Phil. Trans. R. Soc. A 366, 493–504 (2008).

Kegel, W. K. & van Blaaderen, A. Direct observation of dynamical heterogeneities in colloidal hard-sphere suspensions. Science 287, 290–293 (2000).

Weeks, E. R., Crocker, J. C., Levitt, A. C., Schofield, A. & Weitz, D. A. Three-dimensional direct imaging of structural relaxation near the colloidal glass transition. Science 287, 627–631 (2000).

Cui, B., Lin, B. & Rice, S. A. Dynamical heterogeneity in a dense quasi-two-dimensional colloidal liquid. J. Chem. Phys. 114, 9142–9155 (2001).

Gasser, U., Weeks, E. R., Schofield, A., Pusey, P. N. & Weitz, D. A. Real-space imaging of nucleation and growth in colloidal crystallization. Science 292, 258–262 (2001).

Alsayed, A. M., Islam, M. F., Zhang, J., Collings, P. J. & Yodh, A. G. Premelting at defects within bulk colloidal crystals. Science 309, 1207–1210 (2005).

Pande, V. S., Grosberg, A. Y. & Tanaka, T. Heteropolymer freezing and design: Towards physical models of protein folding. Rev. Mod. Phys. 72, 259–314 (2000).

Wang, R. et al. Artificial ‘spin ice’ in a geometrically frustrated lattice of nanoscale ferromagnetic islands. Nature 439, 303–306 (2006).

Ke, X. et al. Energy minimization and ac demagnetization in a nanomagnet array. Phys. Rev. Lett. 101, 037205 (2008).

Nisoli, C. et al. Ground state lost but degeneracy found: The effective thermodynamics of artificial spin ice. Phys. Rev. Lett. 98, 217203 (2007).

Ritort, F. & Sollich, P. Glassy dynamics of kinetically constrained models. Adv. Phys. 52, 219–342 (2003).

Janke, W. (ed.) Rugged Free Energy Landscapes (Lecture Notes in Physics, Vol. 736, Springer, 2008).

Möller, G. & Moessner, R. Magnetic multipole analysis of kagome and artificial spin-ice dipolar arrays. Phys. Rev. B 80, 140409(R) (2009).

Tanaka, M., Saitoh, E. & Miyajima, H. Magnetic interactions in a ferromagnetic honeycomb nanoscale network. Phys. Rev. B 73, 052411 (2006).

Qi, Y., Brintlinger, T. E. & Cumings, J. Direct observation of the ice rule in an artificial kagomé spin ice. Phys. Rev. B 77, 094418 (2008).

Wehrl, A. General properties of entropy. Rev. Mod. Phys. 50, 221–260 (1978).

Penrose, O. Foundations of Statistical Mechanics (Pergamon, 1970).

Jaynes, E. T. Information theory and statistical mechanics. Phys. Rev. 106, 620–630 (1957).

Schlijper, A. G. & Smit, B. Two-sided bounds on the free energy from local states in Monte Carlo simulations. J. Stat. Phys. 56, 247–259 (1989).

Schlijper, A. G. & van Bergen, A. R. D. Local-states method for the calculation of free energies in Monte Carlo simulations of lattice models. Phys. Rev. A 41, 1175–1178 (1990).

Cover, T. M. & Thomas, J. A. Elements of Information Theory (Wiley, 1991).

Dembo, A. & Zeitouni, O. Large Deviations Techniques and Applications 2nd edn (Springer, 1998).

Acknowledgements

We acknowledge the financial support from the Army Research Office and the National Science Foundation MRSEC program (DMR-0820404) and the National Nanotechnology Infrastructure Network. We thank C. Leighton and M. Erickson for permalloy growth.

Author information

Contributions

P.S. and V.H.C. conceived the initial idea of this project and kept it on track. X.K., J.L. and D.M.G. carried out the experiments and collected data. C.N. made theoretical contributions. P.E.L. analysed data and developed theory.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Supplementary information

Supplementary Information (PDF 254 kb)

About this article

Cite this article

Lammert, P., Ke, X., Li, J. et al. Direct entropy determination and application to artificial spin ice. Nature Phys 6, 786–789 (2010). https://doi.org/10.1038/nphys1728

DOI: https://doi.org/10.1038/nphys1728
