Abstract

Network science investigates the architecture of complex systems to understand their functional and dynamical properties. Structural patterns such as communities shape diffusive processes on networks. However, these results hold under the strong assumption that networks are static entities where temporal aspects can be neglected. Here we propose a generalized formalism for linear dynamics on complex networks, able to incorporate statistical properties of the timings at which events occur. We show that the diffusion dynamics is affected by the network community structure and by the temporal properties of waiting times between events. We identify the main mechanism—network structure, burstiness or fat tails of waiting times—determining the relaxation times of stochastic processes on temporal networks, in the absence of temporal–structure correlations. We identify situations when fine-scale structure can be discarded from the description of the dynamics or, conversely, when a fully detailed model is required due to temporal heterogeneities.

Similar content being viewed by others

Introduction

The relation between network structure and dynamics has attracted the attention of researchers from different disciplines over the years1,2,3,4,5. These works are rooted in the observation that in contexts as diverse as the Internet, society and biology, networks tend to possess complex patterns of connectivity, with a significant level of heterogeneity4. In addition, a broad range of real-world dynamical processes, from information to virus spreading, is akin to diffusion. If the effect of structure, such as communities or degree heterogeneity, on diffusive processes is now well known6,7, the impact of the temporal properties of individual nodes is poorly understood1,8. Yet, empirical evidence indicates that real-world networked systems are often characterized by complex temporal patterns of activity, including a fat-tailed distribution of times9,10,11,12,13,14,15,16, correlations between events17,18 and non-stationarity19,20,21. This is in remarkable contrast to a vast majority of mathematical models, which assume homogeneous interaction dynamics on networks1,2,22,23. In this work, we focus on the impact of structure and the waiting-time distribution on dynamics, and set aside any other temporal properties. This subject has triggered intense theoretical work in recent years, for example, in relation to anomalous diffusion, but predominantly on lattice-like, annealed24 and random structures20,23,25,26,27,28. These limitations leave a fundamental question open, with important applications for the modelling of temporal networks: what are the effects of complex patterns, simultaneously in time and in structure, on the approach to equilibrium of diffusion processes?

To comprehend the interplay between temporal and structural patterns, we focus on a broad class of linear multi-agent systems describing N interacting nodes, defined by

where xi, the ith component of x, represents the observed state of node i. The N × N real matrix L encodes the mutual influences in the network, with non-zero entries indicating the presence of a link. D is either d/dt, the delay Dxi(t)=xi(t+1), or any other causal operator acting linearly on the trajectory of each entry xi(t). This equation couples network structure (represented by L) and time evolution (represented by D) by describing a system where every node i has a state xi(t)=Fui(t), where ui(t)=ΣjLijxj(t) represents the input, or influence of the neighbouring states on node i. The operator F is the so-called transfer function29,30, defined as the inverse of D (see Methods).

A classic example is heat diffusion on networks, where every node has a temperature xi evolving according to the Fourier Law

where μ is the characteristic time of the dynamics and L is a Laplacian of the network. The same set of equations can represent, possibly up to a change of variables, a basic model for the evolution of people’s opinions31, robots’ positions in the physical space30,32, approach to synchronization33,34 or the dynamics of a continuous-time random walker35—our main example from now. In any case, the dynamical properties of the system are described by the spectral properties of the coupling matrix. The constraints imposed by the conservation of probability imply that the Laplacian dynamics is characterized by a stationary state, associated to the dominant eigenvector of L, which we will assume to be unique, as is the case in a large class of systems, for example, strongly connected networks. A key quantity is thus the second dominant eigenvalue, also called the spectral gap6, which provides us with the relaxation time to stationarity, usually called mixing time36 for stochastic diffusion processes. The spectral gap determines the speed of convergence to the stationary state and measures the effective size of the system in terms of dynamics. The spectral gap is also related to important structural and dynamical properties of the system, such as the existence of bottlenecks and communities in the underlying network7,30.

In this study, we generalize the concept of spectral gap and of mixing time to random processes with general causal operator D, and focus in detail on operators with long-term memory, naturally emerging in diffusion with bursty dynamics. After showing connections between the theory of random walks and that of multi-agents systems, we identify the temporal and structural mechanisms driving the asymptotic dynamics of the system and provide examples when each mechanism prevails. By doing so, we show that the form of the temporal operator D may either slow down or accelerate mixing as compared with the differential operator equation (2). The results are further exploited to assess the possibility of coarse-graining the dynamics based on the network community structure and tested using numerical simulations on synthetic temporal networks calibrated with empirical data.

Results

Random walks with arbitrary waiting times

The generalized dynamics of the random walker, illustrated in Fig. 1a, is defined as follows. A walker arriving at a node i jumps towards a neighbouring node within a time interval [Δt,Δt+δ] with probability ρ(Δt)δ (for small δ). In line with a standard discrete-time random walk process, the jump is directed towards a neighbour j with probability Pij. The probability density function ρ(Δt) is called the waiting- time distribution of the walker. At each hop, the waiting time Δt is reset to zero and consecutive waiting times are independent. The evolution of probability of the presence of a stochastic process in each state is ruled by the so-called master equation, or Kolmogorov forward equation, well known for this family of generalized walks24,37,38. We prefer to adopt here the equivalent, dual, viewpoint of Kolmogorov backward equation39, which belongs to the class of processes defined by equation (1) and can be analysed using the toolbox typical to multi-agent systems30,32, such as the transfer function formalism and eigenmode decomposition.

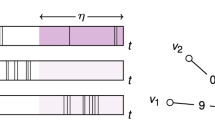

(a) A random walk process can be illustrated by a letter (or banknote and so on) being randomly passed from neighbour to neighbour on a social network. Temporal patterns of waiting times between arrival and departure of the letter may be homogeneous (for instance, discrete or exponentially distributed times) or heterogeneous (for instance, bursts). (b) The relaxation time measures the characteristic time to reach equilibrium from any starting condition. (c) The competition between structure and temporal patterns regulates the relaxation time, or mixing time, of stochastic processes.

We assign a fixed real-valued observable xobs,i to every node i and consider the value observed at time t by a walker starting initially from node j. This value is a random variable, with an expected value denoted by xj(t), taking initial value xj(0)=xobs,j. The random walk is ergodic and mixing on a strongly connected aperiodic network (aperiodicity is only required for the discrete time, when ρ(Δt) is a Dirac distribution). In this case, x(t) describes a consensus dynamics, meaning that the individual values asymptotically converge to one another, xi(∞)=xj(∞). If we choose the observable xobs,j=0 at all nodes j, except l at node k, then xi(t) is precisely the probability for the random walker starting at i to be found at k at time t. Therefore, the Kolmogorov backward equation, a consensus dynamics, embeds in particular the evolution of the probability of the presence on nodes.

The walker, assumed to have just hopped at time zero and finding itself at node i (another origin of time would not change the asymptotic decay times, of main interest in this work), hops again at time Δt with probability density ρ(Δt) and moves towards a neighbour j with probability Pij. The expected value observed by the walker at time t is xobs,i if it is still waiting for its first hop (t<Δt) and otherwise Σj Pijxj(t−Δt), by induction on the number of hops. Therefore, we obtain the vector equation

with the discrete transition matrix P of entries Pij. The convolution in time in the last term calls for a Laplace transform

For simplicity, we use the same notations for functions in the time and in the Laplace domain, only distinguished by their variable, namely t or Δt for time and s for Laplace. This is justified as time and Laplace domain representations encode the same single physical object, for example, a probability or an observable. The same holds for operator D, thought of as acting in the time domain (for instance D=d/dt) or the Laplace domain (D=s) according to the context.

The Laplace transform ρ(s) is the moment generating function of the waiting-time distribution ρ(Δt), as it encodes the moment in its Taylor series  , where μ is the expected waiting time (first moment), σ2 is the variance and μ2+σ2 is the second moment. Using the fact that convolution (respectively, integration from 0 to t) in the time domain corresponds to the usual product (respectively, division by s) in the Laplace domain40, equation (3) reduces to

, where μ is the expected waiting time (first moment), σ2 is the variance and μ2+σ2 is the second moment. Using the fact that convolution (respectively, integration from 0 to t) in the time domain corresponds to the usual product (respectively, division by s) in the Laplace domain40, equation (3) reduces to

or equivalently

where we have made the dependence on the initial condition explicit by using the relation xobs=x(t=0) and where L=P–I denotes the (normalized) Laplacian of the network. This is an instance of equation (1), which shows that an input–output relationship is often best expressed in the Laplace domain rather than in the temporal domain. In this case, the transfer function F(s) is defined by the algebraic relation F−1(s)=1/ρ(s)−1=D(s), up to the initial condition term, implicit in equation (1). See Methods for a derivation of equation (1) in a more general context.

From temporal networks to random walks

Diffusive processes often take place on temporal networks where individuals initiate from time-to-time short-lived contacts with their neighbours. A random walker can represent, for example, a letter or a banknote passing from hand to hand through first contact initiated by the current node. The formalism described above focuses on the statistical properties of the waiting times of a walker on a node15,25, and not of the inter-contact times (the times between two subsequent contacts from a given node to another), as often considered in the literature20,27,28,41,42,43. To illustrate this difference, let us consider an idealized scenario, where the network looks locally similar to a directed tree, to avoid indirect correlations due to cycles44, where the inter-contact time t between two contacts initiated by the same node is characterized by the same probability distribution ρcontact(t), and where activations on different edges are an independent random process. The corresponding waiting-time distribution ρ(Δt) for the random walker can be determined from ρcontact45,46.

For example, the classic inspection paradox, or bus paradox, observes that the waiting time has a mean

which can be much higher than the average inter-contact time μcontact in case of bursts. This fact has been used in the literature to deduce that burst contact statistics slowdown diffusion in a complex network43. Nevertheless, it should not be confounded with the results presented in this paper, which will focus on the statistical properties of the waiting-time distribution and identify, among others, that its variance plays a significant role on the asymptotic behaviour of the walker. In the above scenario, the variance of ρ(Δt) depends on the third moment of the inter-contact time distribution ρcontact(t) and is associated to a mechanism distinct from that of the bus paradox (equation (7)).

Eigenmodes for heterogeneous temporal operators

Equation (1) typically takes the form of an integro-differential equation. However, it simplifies into the differential equation (2) in the case of a memoryless random walker. Memoryless refers to the case when the probability of hopping between Δt and Δt+δ, knowing that the walker has waited at least Δt, is independent of Δt. This leads to an exponential waiting-time distribution45 ρ(Δt)=e−Δt/μ/μ, in other words an unconditional probability e−Δt/μδ/μ of jumping between Δt and Δt+δ, for small δ and for mean waiting time μ. In that case, we find D(s)=1/ρ(s)−1=μs in the Laplace domain, indeed recovering equation (2) in the time domain. The differential equation can then be analysed by changing the variables x to a linear combination of the eigenvectors vk of the Laplacian L, of eigenvalues λ0=0>λ1,λ2,...,λN−1≥−2 as follows30:

For simplicity we have supposed that the underlying network is undirected, connected and, in case of a discrete-time random walk, non-bipartite. Thus, the eigenvalues are real and the stationary state is uniquely defined6. Every zk(t), solution of Dzk(t)=λkzk(t), is called an eigenfunction of the operator D and here takes the form of a decaying exponential. The problem is thus solved by decoupling structural and temporal variables, first by identifying structural eigenvectors (vk), and then how they evolve in time (zk(t)). The resulting fundamental solutions zk(t)vk for equation (1) are called modes, or eigenmodes, of the system.

A similar analysis can be performed in the Laplace domain in the case of an arbitrary waiting-time distribution ρ(Δt), and thus when equation (1) has an arbitrary temporal operator D. In that case, the elementary solutions zk(t), associated to zk(s), need not be exponential functions and are obtained as solutions of equation (6), where the Laplacian is reduced to its eigenvalue λk

As before, any trajectory of the system can be expressed as a linear superposition of some or all the N modes.

Characteristic decay times of eigenmodes

Despite their non-exponential nature, a broad class of eigenfunctions defined by equation (9) still have a characteristic time τk describing the asymptotic decay of zk(t) to equilibrium as  (Fig. 1b). We find that the decay time can be accurately estimated by performing a small s expansion in the Laplace domain (see Methods for the derivation and range of validity):

(Fig. 1b). We find that the decay time can be accurately estimated by performing a small s expansion in the Laplace domain (see Methods for the derivation and range of validity):

where

is a measure of the burstiness of the temporal process based on the first and second moments of its distribution. Burstiness β equals to zero for a Poisson process (memoryless waiting times, ρ(Δt)=e−Δt/μ/μ) and ranges from−1/2 (if ρ(Δt) is a Dirac distribution) to arbitrarily large positive values (for highly bursty activity). This expression emerges naturally from the dynamical process and can be viewed as a measure of burstiness, equivalent to the commonly used burstiness measure47.

The estimate provided by equation (10) can be further tightened whenever the distribution ρ(Δt) of waiting times contains a fat tail, possibly softened by an exponential tail. The archetypical example is a power law with soft cutoff,  , a frequent model in human dynamics supported by empirical evidence9,14,41. More generally,

, a frequent model in human dynamics supported by empirical evidence9,14,41. More generally,  , where ρ0 is a fat tailed distribution, that is, decreasing subexponentially. The non-analytic point created in ρ(s) by the fat tail leads to an additional term in the characteristic time (see Methods)

, where ρ0 is a fat tailed distribution, that is, decreasing subexponentially. The non-analytic point created in ρ(s) by the fat tail leads to an additional term in the characteristic time (see Methods)

which can be approximated as follows, if |λk|−1 and β have different orders of magnitude

Mixing times and dominating mechanisms

Of particular importance is the slowest non-stationary mode (k=1), or mixing mode, the characteristic decay time of which represents the worst-case relaxation time of any initial condition to stationarity. We call this time the mixing time of the process, in generalization of the classic memoryless case, where it is given by  , determined by the so-called spectral gap

, determined by the so-called spectral gap  (refs 6, 36). This quantity is related to the presence of bottlenecks (that is, weak connections between groups of highly connected nodes, a.k.a. network communities) in the network (via Cheeger’s inequality48,49). It is approximated as

(refs 6, 36). This quantity is related to the presence of bottlenecks (that is, weak connections between groups of highly connected nodes, a.k.a. network communities) in the network (via Cheeger’s inequality48,49). It is approximated as

This expression shows that the asymptotic dynamics of each mode is determined by the competition between three factors: a structural factor  associated to the spectral properties of the Laplacian of the underlying network and two temporal factors associated to the shape of the waiting-time distribution, namely its burstiness (μβ) and its exponential tail (τtail). The slowest (largest) of these factors dominates the asymptotic dynamics (Fig. 1c).

associated to the spectral properties of the Laplacian of the underlying network and two temporal factors associated to the shape of the waiting-time distribution, namely its burstiness (μβ) and its exponential tail (τtail). The slowest (largest) of these factors dominates the asymptotic dynamics (Fig. 1c).

We emphasize that the burstiness and the fat tail effects are not necessarily related46. For instance, a power law ρ(Δt)∝(Δt+1)−3 restricted to times Δt≤T (sharp cutoff), is arbitrarily bursty but has no tail at all (τtail=0) for large but finite T. On the other hand, the delayed power law ρ(Δt)∝(Δt−T)−4 restricted to times Δt≥T+1 has a fat tail (it decreases subexponentially, τtail=∞) but low burstiness β for large T, as the mean time μ increases without bound and the variance remains constant. In the latter case, as for all pure power laws, ρ(Δt)∼Δt−γ for large Δt, the mixing time τmix, as τtail, is actually infinite, reflecting that mixing or relaxation to stationarity occurs only with subexponential convergence. In general, the properties of the tail of the distribution depend on the high-order moments of the distribution and not only on the first two moments as captured in the coefficient of burstiness.

In Fig. 2, we study the mechanism dominating the mixing time in toy synthetic temporal networks with different waiting-time distributions. Not surprisingly, structure is the driving mechanism when waiting times are narrowly distributed around a mean value, as in the case of Dirac (discrete time, Fig. 2c) and Erlang distributions (resembling a discrete-time distribution with small fluctuations, Fig. 2d). For those, the slowdown factor  , comparing the exact mixing time (computed with equation (18) in Methods), with what it would be with memoryless waiting times

, comparing the exact mixing time (computed with equation (18) in Methods), with what it would be with memoryless waiting times  , takes value in [0.6,1]. This indicates a limited speed up of the mixing due to negative burstiness, β<0, whereas the structure plays a major role through

, takes value in [0.6,1]. This indicates a limited speed up of the mixing due to negative burstiness, β<0, whereas the structure plays a major role through  traversing orders of magnitude.

traversing orders of magnitude.

We illustrate the findings with a simple network composed of two communities, where the community structure is modulated by rewiring edges starting from two separated random graphs, as shown in a. Each panel (b–f) shows (on the top bar) for each value of the rewiring probability the slowdown factor Θ, ratio of the exact mixing time for a given waiting-time distribution and for the exponential case (Poisson process). Each panel corresponds to a different waiting-time distribution and the colourbar scale on the right represents the values of Θ for the respective colourmap. Θ close to one indicates that non-trivial waiting-time patterns can be neglected in the determination of the mixing time. On the other hand, a high Θ indicates that the details of the network structure are overshadowed by the temporal dynamics. (b) The reference case, that is, the exponential waiting times. We observe a speedup (Θ<1) for (c) Dirac (discrete) and (d) Erlang distributions, and a slowdown (Θ>1) for (e) a power law with sharp cutoff and for (f) a power law with soft cutoff. From left to right, we show how this factor depends on the network structure by progressively removing the community structure (increasing  ). The bottom bar of each panel shows, according to equation (14), which is the dominant factor, either structure, burstiness or tail, regulating the relaxation time for each model of waiting times. See Methods for details on the synthetic networks and waiting-time distributions.

). The bottom bar of each panel shows, according to equation (14), which is the dominant factor, either structure, burstiness or tail, regulating the relaxation time for each model of waiting times. See Methods for details on the synthetic networks and waiting-time distributions.

On the other hand, competition between structure and time appears in scenarios of high temporal heterogeneity. For example, only strong communities are able to dominate power-law waiting times (Fig. 2e,f). The effect of structure on the mixing times is otherwise removed as burstiness (Fig. 2e) or tail (Fig. 2f) becomes the leading mechanism, scaling the mixing times up to 14-fold in the shown configurations. The transitions between the different mechanisms for a range of power-law configurations are presented in Supplementary Fig. 1 and Supplementary Note 1.

Model reduction

The use of coarse-graining through time-scale separation, which is the separate treatment of fast and slow dynamics that coexist inside a system, is crucial to reduce the complexity of systems made of a large number of interacting entities50,51,52. This procedure is well known for differential equations such as equation (2). In this case, it consists in neglecting fast decaying modes, for example, with decay time less than a certain threshold τtresh—an approximation invalid for early times but acceptable for times significantly larger than τtresh. Only the dominant modes, thus fewer variables, are left in the reduced model. Decreasing the threshold time, one produces a full hierarchy of increasingly more accurate models, but also with increasingly more variables. Reduced models have a clear interpretation from the structural point of view, as fast modes typically correspond to the fast convergence of the probabilities of the presence on nodes to a quasi-equilibrium within a network community. This process is followed by a slow equilibration of the population of random walkers trapped in each community to a global equilibrium52,53,54. Each new reduced variable can therefore be interpreted as the slow-varying probability of the presence in each community, as if it had been collapsed into a single node. The hierarchy of increasingly more accurate and complex reduced models corresponds in this structural picture to a hierarchy of increasingly finer partitions into communities. Given that the decay times of the different modes in equation (2) correspond to the Laplacian eigenvalues, the k-community partition is unsurprisingly found to be encoded into the k dominant Laplacian eigenvectors, in a way that is decoded by spectral clustering algorithms55,56.

This methodology can be extended to a more general temporal operators D, where the successive decay times now depend on the Laplacian eigenvalues and the temporal characteristics following equation (13). However, the effect of temporality may have non-trivial consequences, as it may limit the number of reduced models. As an extreme scenario, let us consider a complex network in which the transient dynamics is dominated by the fat tail of the waiting-time distribution, such that all transient modes decay for large times as  . Following equation (8), the approach to stationarity is described by the superposition of modes, all of the form

. Following equation (8), the approach to stationarity is described by the superposition of modes, all of the form  , for various functions fk(t) that decay more slowly than exponentially, as for example

, for various functions fk(t) that decay more slowly than exponentially, as for example  . Among those, any mode can only become negligible with respect to another after a very long time. For all practical purposes, the system exhibits an inherently complex dynamics with no timescale separation, as the fine-scale structure (the finest being at the level of a single node) and the large-scale network communities have equal or similar impact on the random walk dynamics. As a consequence, only two reduced models are available, one in which all N modes are considered and another described solely by the stationary state.

. Among those, any mode can only become negligible with respect to another after a very long time. For all practical purposes, the system exhibits an inherently complex dynamics with no timescale separation, as the fine-scale structure (the finest being at the level of a single node) and the large-scale network communities have equal or similar impact on the random walk dynamics. As a consequence, only two reduced models are available, one in which all N modes are considered and another described solely by the stationary state.

In general, only the Laplacian eigenvalues smaller in magnitude than μ/τtail determine corresponding decay times. Just similar to that in classical memoryless case, they generate a hierarchy of timescales and reduced models corresponding to multiple levels of community structures. For instance, if five eigenvalues are smaller than μ/τtail, thus 0=λ0>λ1>⋯>λ4>−μ/τtail, then there is a reduced diffusion model capturing the movement of the random walker between five aggregated nodes. The mixing, internal to each community and decaying as  , is considered instantaneous at large-enough timescales. Coarser aggregation, for example, based on two communities and two eigenvalues λ0, λ1, may be relevant, although valid only at even larger times. However, aggregation based on any finer partitioning (other than into one-node communities) has the same accuracy as the five-community model and thus little practical value as a reduced model. The degeneracy of characteristic decay times therefore limits the number of useful reduced models. This implies that a decomposition into communities is not necessarily associated to a timescale separation, or a reduced model, of the dynamics. For this reason, only models incorporating 1–5 or N-dominating modes are adequate for this particular example. This smaller choice of reduced models has ambivalent consequences. It limits the set of resolutions at which to describe the dynamics, but also provides a natural level for community structure (an open problem in multi-scale community detection57,58,59,60,61), defined as the finest partitioning yielding a reduced model for the system.

, is considered instantaneous at large-enough timescales. Coarser aggregation, for example, based on two communities and two eigenvalues λ0, λ1, may be relevant, although valid only at even larger times. However, aggregation based on any finer partitioning (other than into one-node communities) has the same accuracy as the five-community model and thus little practical value as a reduced model. The degeneracy of characteristic decay times therefore limits the number of useful reduced models. This implies that a decomposition into communities is not necessarily associated to a timescale separation, or a reduced model, of the dynamics. For this reason, only models incorporating 1–5 or N-dominating modes are adequate for this particular example. This smaller choice of reduced models has ambivalent consequences. It limits the set of resolutions at which to describe the dynamics, but also provides a natural level for community structure (an open problem in multi-scale community detection57,58,59,60,61), defined as the finest partitioning yielding a reduced model for the system.

Numerical analysis

Equation (14) shows that the mixing time depends in first approximation only on the mean, variance and tail of the waiting-time distribution, whereas other properties of the distribution are irrelevant. With numerical simulations, we validate this approximation and provide some quantitative intuition on the competition between the three factors regulating the mixing. We construct synthetic temporal networks respecting our assumptions, for example, stationarity or the absence of correlations and calibrate them with the static structure and the inter-contact time distribution observed on a number of empirical data sets. The empirical networks used for calibration correspond to face-to-face interactions between visitors in a museum (SPM), between conference attendees (SPC)13 and between hospital staff (SPH)16; email communication within a university (EMA)10; sexual contacts between sex workers and their clients (SEX)14; and communication between members of a dating site (POK)12 (see Methods). On these networks, we observe the waiting-time distribution and the spectral gap, which allows to compute both the exact mixing time, with equation (18) in Methods, and its approximation in equation (14). The results, reported in Table 1, show a good agreement, except for SPC, as analysed later in this section.

Except for SEX42 and POK data sets, in the other cases the temporal heterogeneity substantially increases the mixing times (see slowdown factor Θ in Table 1). Table 2 shows that in these networks the dominant factor regulating the mixing time depends on the characteristics of the system. Fat tails of the waiting-time distribution drive the relaxation for the cases of face-to-face contacts (SPM, SPC and SPH). However, the structure is the leading mechanism behind the networks corresponding to other situations of human communication (EMA, SEX and POK).

If communities are completely removed by randomizing the network structure, the link sparsity of SEX and POK networks guarantees that structure remains dominating, as the sparsity results in the inevitable creation of bottlenecks for diffusion even in a random network. On the other hand, in the EMA network, which has a relatively dense connected structure, the absence of communities leads to temporal dominance (Table 2). Finally, when the contact times are uniformly distributed, we recover the well-known result that network structure, with or without communities (Table 2), is the main factor regulating the convergence to stationarity.

As the raw empirical temporal networks do not necessarily abide by our simplifying assumptions, one cannot validate our theoretical derivations, for example, by estimating the mixing time directly from simulations on empirical data. Indeed the periodic rythms or correlations between successive jumps or other temporal patterns may induce effects not captured by our formula, as discussed in Supplementary Table 1 and Supplementary Note 3.

Empirical data are collected during a finite time span T, leaving the choice between a model for ρ(Δt) that is limited to T (sharp cutoff) or extrapolated to infinite times with a tail decay (soft cutoff). The latter may occur, for instance, if we have theoretical or practical reasons to prefer a fat-tail-based model (for instance power law) with exponential cutoff for ρ(Δt). This choice is of little impact on the results, provided a sufficient observation time T (Supplementary Note 2). When temporal patterns trump structure, we have a self consistency condition  (Table 2) for SPM and SPH, expressing that both models lead to similar mixing times, albeit through the different mechanisms of either burstiness (sharp) or fat tail (soft). For SPC, self-consistency is not attained. This happens possibly because the observation time of ∼2 days is not sufficiently large to dilute the irregularity on the observed inter-contact time distribution (thus, in the simulated waiting times) induced by the inactivity during night periods. Consequently, this makes the tail difficult to measure, as it has not clearly emerged from a still transient behaviour, and a significant mismatch is observed between exact and approximate mixing (Table 2).

(Table 2) for SPM and SPH, expressing that both models lead to similar mixing times, albeit through the different mechanisms of either burstiness (sharp) or fat tail (soft). For SPC, self-consistency is not attained. This happens possibly because the observation time of ∼2 days is not sufficiently large to dilute the irregularity on the observed inter-contact time distribution (thus, in the simulated waiting times) induced by the inactivity during night periods. Consequently, this makes the tail difficult to measure, as it has not clearly emerged from a still transient behaviour, and a significant mismatch is observed between exact and approximate mixing (Table 2).

We evaluate the possible sizes of reduced models for the data as follows. As the eigenvalues λk of the Laplacian range between 0 and −2, the number of modes with structurally determined decay times (−μ/τtail<λk≤0) can be roughly evaluated to Nμ/2τtail on an N-node network, if eigenvalues are evenly spaced. A similar reasoning holds whenever burstiness dominates the tail effect. This analysis reveals three different scenarios in the structure-dominated data sets considered above: (i) SEX benefits from a full hierarchy of reduced models, as μ/2τtail>1. (ii) The dynamics on EMA can be approximated with just three modes, associated to three network communities, as 0=λ0>λ1>λ2>−μ/τtail>λ3>…, while a more detailed description, unless at the node level, would not gain any significant accuracy as further modes are all degenerate with the same decay time μ/τtail. (iii) POK exhibits a practically full hierarchy of reduced models, as around one third of its modes are determined by structure, μ/2τtail=0.33; therefore, the finest reduced model is at a few-node community level. This spectral analysis is comforted by applying a multiscale community detection algorithm to the empirical networks, which finds a partition into three communities dominated by fat-tail effects for EMA, but not for POK or SEX (Fig. 3).

(a) Although the mixing time of diffusion on a network may be defined by the community structure, diffusion in the communities themselves may be regulated by structural or temporal patterns. (b) The original network constructed from an empirical data set is divided into communities with a multi-resolution community detection algorithm, for different values of the resolution parameter (see Methods). For each partition, we identify the fraction of communities with more than 10 nodes (y axis), where either structure or tail dominates (according to equation (14)), and plot against the number of communities detected at each partition (x axis). This confirms our spectral analysis, which predicts a compact description of the diffusion dynamics on EMA into three modes describing a probability flow between three communities, each dominated by fat-tailed waiting times. Also in agreement with the spectral viewpoint, this is not the case for SEX or POK, unless at the level of a few-node communities.

Discussion

We have presented a unified mathematical framework to calculate the relaxation time to equilibrium in a wide variety of stochastic processes on networked temporal systems. Our formalism is able to refer to arbitrary linear multi-agent complex systems, including linearizations of non-linear dynamical models such as Kuramoto oscillators or non-Laplacian diffusion dynamics such as Susceptible-Infected-Recovered epidemics, as detailed in Methods. It is also possible to accommodate non-uniformity of parameters as nodes are not identical in real systems. Our results are particularly relevant to improve the understanding of temporal networks, by highlighting the important interplay between structure and the temporal statistics of the network. Our formalism is different from previous studies of random walks on temporal networks that focus on homogeneous temporal patterns23 or do not account for the competition between structure and time15. We emphasize that questions related to ordering, not timing of events, such as the number of hops before stationarity or the succession of nodes most probably traversed by the walker, depend on the structure alone and not of hops timing statistics. Moreover, key mechanisms have been left aside in our modelling approach, such as the non-stationarity or periodicity of most empirical networks19,20,21 and the existence of correlations between edge activations, and therefore preferred pathways of diffusion17,18,41. Important future work includes their incorporation in our mathematical framework and the identification of dominant mechanisms in empirical data.

We have shown that in the absence of some temporal correlations, the characteristic times of the dynamics are dominated either by temporal or by structural heterogeneities, as those observed in real-life systems. The competing factors are not only observed in different classes of networks modelled from empirical data but also at different hierarchical levels of the same network represented by its different scales of community structure. We have also identified two contrasting metrics of the statistics of waiting times, burstiness and fat tails. We have shown that they regulate the dynamics on the network in different ways. In systems where temporal patterns are the dominating factor, the reduced models obtained by aggregation of communities as commonly used in practice are not necessarily relevant, as small-scale details are impenetrably intertwined with large-scale structure to form a complex global dynamics. In general, the temporal characteristics impose the natural description levels of the dynamics. Altogether, our results suggest the need for a critical assessment of a complexity/accuracy trade-off when modelling network dynamics. In some classes of real-world systems, the burden of increased model complexity may not compensate the incremental gain in knowledge, whereas other systems require the fine network structure as a key ingredient to any realistic modelling.

Methods

Overview

We provide a description of multi-agent linear dynamics, detail the derivation of approximation (12) and its range of validity, illustrate the generality of the framework beyond random walks and describe the empirical data and numerical implementations. In the Supplementary Information, we discuss on power laws and cut offs (Supplementary Note 1 and Supplementary Fig. 1), the evaluation of waiting-time distributions in empirical data (Supplementary Note 2) and the adequacy of our theoretical framework in empirical networks with correlations and non-stationarity (Supplementary Note 3).

Linear multi-agent systems

We derive equation (1) for general linear-networked systems, or multi-agent systems30, with an illustration on consensus dynamics. Every node i is modelled by a linear agent whose internal state is initially zero, and that converts an input signal ui with an operator Fi—the so-called transfer function—into a state signal xi=Fiui. Here, ui represents a time trajectory ui(t) or its Laplace transform ui(s), and similarly for the state trajectory xi. The transfer function Fi is an operator mapping input trajectories ui to state trajectories xi, which is requested to be linear, causal (if ui(t)=0 for all t<T, then the same holds for xi(t)) and time invariant (shifting ui(t) in time results in shifting xi(t)). Under those conditions, this operator takes the form xi(s)=Fi(s)ui(s), a simple product of functions, in the Laplace domain.

When using Laplace transforms, it is customary to use time domain trajectories that are zero for negative times. We account for the state initial condition xi(0)=xi,0 with another input term vi that can be, for example, an impulse exciting the rest state to any desired initial condition at time zero. The agent dynamics therefore writes xi(s)=Fi(s)(ui(s)+vi(s)). For instance if Fi is the integration operator in the time domain,  from the initial value at time 0, or in other terms

from the initial value at time 0, or in other terms  (for the Dirac impulse δ(t)), then in the Laplace domain we find xi(s)=1/s(ui(s)+xi,0); in this case, the Laplace domain operator is the multiplication by 1/s and vi is a Dirac impulse in the time domain, a constant in the Laplace domain that encodes the initial condition. One can as well invert the transfer operator,

(for the Dirac impulse δ(t)), then in the Laplace domain we find xi(s)=1/s(ui(s)+xi,0); in this case, the Laplace domain operator is the multiplication by 1/s and vi is a Dirac impulse in the time domain, a constant in the Laplace domain that encodes the initial condition. One can as well invert the transfer operator,  , and write dxi/dt=ui(t)+xi,0δ(t) or sxi(s)=ui(s)+xi,0; here, the Dirac impulse is seen as arising from differentiating the discontinuous step of the state from rest to initial condition xi,0.

, and write dxi/dt=ui(t)+xi,0δ(t) or sxi(s)=ui(s)+xi,0; here, the Dirac impulse is seen as arising from differentiating the discontinuous step of the state from rest to initial condition xi,0.

Connecting agents such that the input ui(t) of agent i is a weighted sum of other agents’ states, Σj Lijxj(t) leads to a global dynamics dx/dt=L x+x0δ(t), or equivalently s x(s)=L x(s)+x0, where L is the matrix of entries Lij, x is the vector of states xi and x0 is the vector of initial conditions xi,0. When L is a Laplacian (rows summing to zero, non-negative off-diagonal weights), this is a simple consensus system where agents (for instance, robots or individuals) change their state (for instance, position, opinion modelled as a real number) towards an average of their neighbours’ states. With arbitrary interconnection weights and arbitrary transfer functions, one similarly obtains

where D is the diagonal matrix of transfer functions  (D reduces to a scalar in case of identical agents Fi=Fj) and v the vector of initial condition input vi. The case when L remains Laplacian, but D is arbitrary, describes general, higher-order consensus systems where the agents converge to equal values on a strongly connected network through arbitrarily complicated internal dynamics, for example, modelling realistic robots or vehicles. For example, vehicles of mass m driven by a force and friction will obey

(D reduces to a scalar in case of identical agents Fi=Fj) and v the vector of initial condition input vi. The case when L remains Laplacian, but D is arbitrary, describes general, higher-order consensus systems where the agents converge to equal values on a strongly connected network through arbitrarily complicated internal dynamics, for example, modelling realistic robots or vehicles. For example, vehicles of mass m driven by a force and friction will obey  . An abuse of notation allows to drop implicitly the initial condition and write simply dx/dt=Lx and more generally equation (1), Dx=Lx, instead of equation (15) above. Discrete-time systems are recovered with a discrete-derivative operator Dx(t)=x(t+1)−x(t).

. An abuse of notation allows to drop implicitly the initial condition and write simply dx/dt=Lx and more generally equation (1), Dx=Lx, instead of equation (15) above. Discrete-time systems are recovered with a discrete-derivative operator Dx(t)=x(t+1)−x(t).

Exact and approximate characteristic times for random walkers

We derive the approximate characteristic times (equation (12)) of decaying modes associated to a general random walk. The dominant mode associated to λ0=0 is the stationary distribution, unique for an ergodic random walk. The relaxation time to the stationary distribution, generalizing the well-known mixing time of Markov chain theory36, is the characteristic time of the slowest decaying mode after the stationary mode, usually associated to λ1, as we suppose here. An example of a case when λ1 is not associated to the mixing mode, but rather λN, is the discrete random walk, where the distribution is a Dirac distribution at μ, with zero variance, and the network is bipartite or close to bipartite.

The characteristic decay time of a time-domain function  can be found in the Laplace domain, as f(s) is defined and analytic over all s of real part larger than −1/τdecay, but undefined or non-analytic in at least one point s of real part−1/τdecay. For example, the Laplace transform of

can be found in the Laplace domain, as f(s) is defined and analytic over all s of real part larger than −1/τdecay, but undefined or non-analytic in at least one point s of real part−1/τdecay. For example, the Laplace transform of  is 1/(1+τdecays), with pole at s=−1/τdecay, and the Laplace transform of

is 1/(1+τdecays), with pole at s=−1/τdecay, and the Laplace transform of  for γ>1 is defined, but is non-analytic at s=−1/τdecay.

for γ>1 is defined, but is non-analytic at s=−1/τdecay.

The eigenfunction zk(t) associated to λk is the solution of D(s)zk(s)=λkzk(s)+D(s)zk(t=0)/s, derived in the text as equation (6), with D(s)=1/ρ(s)−1 and L replaced with λk. The decay time of zk(t) is found if we find the right-most non-analytic point of

derived in the main text as equation (9). Note that for λk≠0, the singularity in s=0 is only apparent as D(s)=μs−(σ2−μ2)s2/2+… This expression is non-analytic in s in exactly two circumstances: (i) D(s) is non-meromorphic in s, meaning that it cannot be defined analytically in a punctured neighbourhood of s (a neighbourhood of s−s) or (ii) D(s)=λk.

Case (i) happens when ρ(s) is non-meromorphic in s. A typical example is when  , where ρ0(t) is a fat-tailed distribution, in other words decreases more slowly than any exponential, leading to a non-meromorphic transfer function—a rare occurrence in standard systems theory. The non-analytic point is precisely s=−1/τtail.

, where ρ0(t) is a fat-tailed distribution, in other words decreases more slowly than any exponential, leading to a non-meromorphic transfer function—a rare occurrence in standard systems theory. The non-analytic point is precisely s=−1/τtail.

Case (ii) commands to solve the equation

whose solution sk enters the expression for the exact characteristic decay time, combining the two cases:

which can be approximated from an expansion of ρ(s). It is noteworthy that ρ(s)=1−μs+(μ2+σ2)s2/2−⋯ is also called the moment-generating function, whose successive derivatives around s=0 are the moments of the distribution, up to sign. We consider the Padé (that is, rational) approximation:

equivalent for small s to a second-order Taylor approximation in terms of accuracy. This approximates the transfer function D−1(s)=F(s)=ρ(s)/(1−ρ(s)) as

where the adimensional term  takes its minimum value at −1/2 for the discrete-time random walk, zero for a memoryless process and arbitrary large values for highly heterogeneous distributions. It is therefore a suitable measure of the burstiness of the process41,47. Such a transfer function is called Proportional-Integral, a common class in systems theory29. The equation D(s)=λk is approximately solved by 1/sk≈μ(|λk|−1+β). A possible non-analytic point at s=−1/τtail caps the characteristic decay time of eigenfunction k to

takes its minimum value at −1/2 for the discrete-time random walk, zero for a memoryless process and arbitrary large values for highly heterogeneous distributions. It is therefore a suitable measure of the burstiness of the process41,47. Such a transfer function is called Proportional-Integral, a common class in systems theory29. The equation D(s)=λk is approximately solved by 1/sk≈μ(|λk|−1+β). A possible non-analytic point at s=−1/τtail caps the characteristic decay time of eigenfunction k to

If λk−1 and β are of different orders of magnitude, one may further approximate as

in which the influence of the fat tail, the structure and the burstiness are decoupled. The mixing time is associated with λ1. Figure 4 shows that typically half of the modes have structurally determined decay times, namely those with positive 1+λk, if eigenvalues are uniformly spaced between 0 and −2, in apparent contradiction with the back of the envelope calculation Nμ/2τtail proposed in the main text, which can reach N. This is a consequence of the progressive degradation of the Padé approximation for larger s (larger eigenvalues).

Computation of the characteristic time of the distribution  (blue curve) with a fat-tailed ρ0 and a non-analytic point at s=−1/τtail. The exact characteristic decay times τk are found from the eigenvalues and the blue curve, following equation (18). In this example, only three modes, including the stationary, are influenced by the structure of the network, whereas the other modes collapse to a single decay time τtail. The dynamics can thus be approximated by three modes, typically describing aggregate probability flows between three network communities. The approximate solution equation (12) follows the red curve, constructed from the Padé approximant ρapprox, see equation (19). As eigenvalues λk change from 0 to −2, the values 1/(1+λk) can take any real value >1 or <−1.

(blue curve) with a fat-tailed ρ0 and a non-analytic point at s=−1/τtail. The exact characteristic decay times τk are found from the eigenvalues and the blue curve, following equation (18). In this example, only three modes, including the stationary, are influenced by the structure of the network, whereas the other modes collapse to a single decay time τtail. The dynamics can thus be approximated by three modes, typically describing aggregate probability flows between three network communities. The approximate solution equation (12) follows the red curve, constructed from the Padé approximant ρapprox, see equation (19). As eigenvalues λk change from 0 to −2, the values 1/(1+λk) can take any real value >1 or <−1.

Even for small eigenvalues, for example, for  , the approximation (equation (12)), thus also equation (14), has a limited range of validity. The underlying Padé approximation (equation (19)) is valid for small-enough s, while for large-enough s the exact solution is τtail. The small s behaviour is captured by the first and second moments, and the large s behaviour, in other terms the tail τtail, determines the growth of high-order moments. This double approximation based on high and low moments may explain why the approximation is successful on diverse data sets (see Fig. 3a). However, it is likely to fail precisely when the intermediate moments (third, fourth and so on), not covered by the approximation, dominate the behaviour of the moment generating function ρ(s). This occurs for instance in the case of a power law of exponent >3 with sharp cutoff at a large time: although the first and second moment remain bounded and the tail is absent, the intermediate moments grow without bound with the cutoff time. However, such distributions are rarely used as models for real-life data. On the other hand, numerical experiments showed excellent accuracy for a wide range of power laws with soft cutoff.

, the approximation (equation (12)), thus also equation (14), has a limited range of validity. The underlying Padé approximation (equation (19)) is valid for small-enough s, while for large-enough s the exact solution is τtail. The small s behaviour is captured by the first and second moments, and the large s behaviour, in other terms the tail τtail, determines the growth of high-order moments. This double approximation based on high and low moments may explain why the approximation is successful on diverse data sets (see Fig. 3a). However, it is likely to fail precisely when the intermediate moments (third, fourth and so on), not covered by the approximation, dominate the behaviour of the moment generating function ρ(s). This occurs for instance in the case of a power law of exponent >3 with sharp cutoff at a large time: although the first and second moment remain bounded and the tail is absent, the intermediate moments grow without bound with the cutoff time. However, such distributions are rarely used as models for real-life data. On the other hand, numerical experiments showed excellent accuracy for a wide range of power laws with soft cutoff.

Beyond random walks

Random walks are many times formally identical to the asymptotic behaviour of non-linear dynamical models and also serve as a prototype for a wider class of dynamics that includes epidemic spreading. For example, power networks may be modelled as a network of second-order Kuramoto oscillators33 whose state in every node is a phase (an angle) θi influenced by its neighbours

where I is the inertia, α the friction and ω describes the natural frequency of the oscillation. In this construction, we assume identical parameters for each node. After the change of variables φi=θi−ωt/α, the linearization around the synchronized equilibrium (φi=φj is constant for all times) is written

which is formally identical to a consensus equation structured by the Laplacian L33. Hence, it supports the same formalism as random walks, after the operator D is changed accordingly. The linear dynamics associated to the asymptotic behaviour is often a crucial first step in understanding the non-linear dynamics of the network at some time scale.

Another class of dynamical systems where our results apply are linear dynamics given by equation (1), but where the interaction matrix L does not have a Laplacian structure and where the dominant eigenmode is not necessarily stationary. This is the case, for instance, for epidemic processes where infected individuals diffuse on a meta-population network, where nodes are large populations (cities and so on), and the individuals may either reproduce (by contamination of a healthy individual) or die (or recover). One classic model for this process is a multi-type branching process. This is akin to a random walk whose number of walkers is not preserved in time, as random events are not limited to hops but also death or split. This kind of model also emerges as the linearization of classic compartmental epidemic models such as Susceptible-Infected or Susceptible-Infected-Recovered62. The waiting time between two consecutive events may also be described by an arbitrary distribution ρ(Δt). The expected number of walkers in time may again be described by an equation Dx=Lx, where D depends on ρ in the same way as before, but now L is no more a Laplacian and in particular may have negative and positive eigenvalues. If the epidemics is supercritical, then the system is unstable. This implies the existence of unstable eigenmodes (λk>0) where the number of walkers grow exponentially as  , with τk now approximated by

, with τk now approximated by  . In this case, the fat tail of the waiting-time distribution does not play any role for the unstable eigenmodes. From this formula, we see that although the decay of stable eigenmodes tends to slow down due to burstiness and possibly fat tail, the unstable eigenmodes are boosted by burstiness. As a consequence, it leads in all eigenmodes to a long-term increase of the infected population due to the temporal heterogeneity.

. In this case, the fat tail of the waiting-time distribution does not play any role for the unstable eigenmodes. From this formula, we see that although the decay of stable eigenmodes tends to slow down due to burstiness and possibly fat tail, the unstable eigenmodes are boosted by burstiness. As a consequence, it leads in all eigenmodes to a long-term increase of the infected population due to the temporal heterogeneity.

Numerical analysis

We use six data sets of empirical networks: SPM, SPC13 and SPH16; EMA10; SEX14; and POK12 (see Table 3).

The synthetic networks used in Fig. 2 are formed by 1,000 nodes and 10,000 links equally divided into two groups, initially disconnected (rewiring probability equal to zero). Within each group, pairs of nodes are formed between uniformly chosen nodes. A fraction of links are rewired to weaken communities. Rewiring consists of uniformly choosing two pairs of nodes and swapping the pairs. Rewiring probability equal to 1 removes any significant community structure from the network. Communities refer to groups of nodes with more connections between themselves than with nodes at other groups. The spectral gap is calculated for each network configuration. The exemplified waiting-time distribution are ρ(Δt)=exp(−Δt/μ)/μ with μ=1 (exponential); =1.0 (discrete); ∝Δtk−1 exp(−kΔt/μ) with k=3 and μ=3 (Erlang law); ∝Δt−γ with γ=2 and cutoff at T=1,000 (power law with sharp cutoff); ∝Δt−γexp(−Δt/τtail) with γ=3 and τtail=20 (power law with soft cutoff).

The synthetic temporal networks used in Table 2 and Fig. 3 are constructed by using the unweighted version of the empirical networks as underlying fixed structures. The randomized networks used in Table 2 are obtained by uniformly selecting two pairs of nodes of the original network and then swapping the respective contacts. In the dynamic network, a node and its links alternate between inactive and active states. To fit this synthetic temporal network to our theoretical assumptions, we assume that all links of a node are activated simultaneously, the system is time invariant (no daily or weekly cycles), exhibits no temporal correlations between successive jumps and node-activation times are sampled from the same distribution of interactivation times (node homogeneity). The active state of a node and its links lasts for one time step δt, and then the node and links return to the inactive state. The distribution of interactivation times (a.k.a. inter-contact times) corresponding to the original times is obtained by pooling the times between two subsequent activations of the same node, in other words two consecutive contacts established between the node and its neighbourhood, as observed in the empirical networks20,42. The randomized times in Table 2 correspond to activation times sampled from exponential distributions with the same mean as the corresponding empirical cases. To obtain the waiting-time estimates presented in Table 2, we simulate a random walk in these synthetic networks. A random walker starts in a randomly chosen node. As time goes by, a walker remains in the node until its new activation and then hops to a uniformly chosen neighbour. The waiting time is thus the time elapsed between the arrival and departure of the walker in a node. We simulate a single random walker and let it hop 300,000 times, starting at 10 uniformly chosen nodes; hence, we collect a total of 3 million points for the statistics of waiting times. Besides that, for each starting configuration we discard the initial 5,000 hops to remove the stochastic transient. The waiting-time distribution observed in the simulations provides us with estimates of τmix, μ, σ2 and τtail. The mixing time τmix is estimated from the distribution expressed in the Laplace domain through equation (18) (for k=1, the mixing mode). This equation is exact, as the synthetic data has been constructed so as to satisfy the conditions of validity under which it is proved. One could also estimate the mixing time from the convergence of random trajectories starting in a definite node towards stationarity; however, this exercise is computationally demanding. The exponential tail decay time τtail is estimated using least-square fitting by a line in a lin-log plot of the second half of the waiting-time distribution data, thus between Tmax/2 and Tmax, where Tmax is the largest observed waiting time. Indeed, an exponential decay translates into a straight line in a lin-log plot, the slope of which determines the decay time. The choice of the interval [Tmax/2, Tmax] to perform a linear fit is steered by the want for a simple criterion uniform across data sets. This may be inappropriate for data sets where the observation time forces a cutoff on the data sets before the tail decay has had time to emerge, for instance in SPC, where a more careful definition of tail, for example, on a smaller interval, may be more adequate.

Let us interpret some elementary observations that can be made on Table 2. From (a) to (b), τtail is only approximately preserved, because it is recomputed from the simulated waiting-time distribution on a different network, thus a slightly different distribution, even though the inter-contact times are sampled from the same distribution in both panels. In (c) and (d), the inter-contact time distribution is memoryless (exponential), thus identical to the waiting-time distribution regardless of the network, and τtail coincides with μ. From (a) to (c), the quantity  drops sharply, although

drops sharply, although  is clearly preserved as the network is unaltered. The reason is that although the mean inter-contact time is preserved by construction, the mean waiting time also depends on the variance of the inter-contact times, in virtue of the bus paradox, and this variance is clearly strongly affected by the time randomization. The drop in

is clearly preserved as the network is unaltered. The reason is that although the mean inter-contact time is preserved by construction, the mean waiting time also depends on the variance of the inter-contact times, in virtue of the bus paradox, and this variance is clearly strongly affected by the time randomization. The drop in  from (b) to (d) is associated to the same mechanism.

from (b) to (d) is associated to the same mechanism.

To identify the community structure of the empirical networks in Fig. 3, we use the partition stability method54 using a freely available implementation63. The method uses a simple diffusion process such that different communities are detected at different time scales according to the potential of the communities to trap the diffusion at the given time scale. Optimal communities have been derived for values of the resolution parameter decreasing from 102,101.95,101.9,101.85,…, with increasingly fine partitions. After identifying the relevant communities, we discard the inter-community links and calculate the spectral gap of each individual community of at least ten nodes.

Additional information

How to cite this article: Delvenne, J.-C. et al. Diffusion on networked systems is a question of time or structure. Nat. Commun. 6:7366 doi: 10.1038/ncomms8366 (2015).

References

Bansal, S., Read, J., Pourbohloul, B. & Meyers, L. A. The dynamic nature of contact networks in infectious disease epidemiology. J. Biol. Dyn. 4, 478–489 (2010).

Barrat, A., Barthélemy, M. & Vespignani, A. Dynamical Processes on Complex Networks Cambridge University Press (2012).

Moody, J. The Oxford Handbook of Analytical Sociology pages 447–474Oxford University Press (2009).

Newman, M. E. J. Networks: An Introduction Oxford University Press (2010).

Vespignani, A. Modelling dynamical processes in complex socio-technical systems. Nat. Phys. 8, 32–39 (2012).

Chung, F. R. K. Spectral Graph Theory American Mathematical Society (1996).

Lovász, L. Random walks on graphs: a survey. Boy. Soc. Math. Stud. 2, 1–46 (1993).

Holme, P. & Saramäki, J. Temporal networks. Phys. Rep. 519, 97–125 (2012).

Barabási, A. -L. The origin of bursts and heavy tails in human dynamics. Nature 435, 207–211 (2005).

Eckmann, J. -P., Moses, E. & Sergi, D. Entropy of dialogues creates coherent structures in e-mail traffic. Proc. Natl Acad. Sci. 101, 14333–14337 (2004).

Haerter, J. O., Jamtveit, B. & Mathiesen, J. Communication dynamics in finite capacity social networks. Phys. Rev. Lett. 109, 168701 (2012).

Holme, P., Edling, C. R. & Liljeros, F. Structure and time-evolution of an Internet dating community. Soc. Net. 26, 155–174 (2004).

Isella, L. et al. What’s in a crowd? Analysis of face-to-face behavioral networks. J. Theor. Biol 271, 166–180 (2011).

Rocha, L. E. C., Liljeros, F. & Holme, P. Information dynamics shape the sexual networks of Internet-mediated prostitution. Proc. Natl Acad. Sci. 107, 5706–5711 (2010).

Starnini, M., Baronchelli, A., Barrat, A. & Pastor-Satorras, R. Random walks on temporal networks. Phys. Rev. E 85, 056115 (2012).

Vanhems, P. et al. Estimating potential infection transmission routes in hospital wards using wearable proximity sensors. PLOS One 8, e73970 (2013).

Rosvall, M., Esquivel, A. V., Lancichinetti, A., West, J. D. & Lambiotte, R. Memory in network flows and its effects on spreading dynamics and community detection. Nat. Commun. 5, 4630 (2014).

Scholtes, I. et al. Causality-driven slow-down and speed-up of diffusion in non-Markovian temporal networks. Nat. Commun. 5, 5024 (2014).

Holme, P. & Liljeros, F. Birth and death of links control disease spreading in empirical contact networks. Sci. Rep. 4, 4999 (2014).

Rocha, L. E. C. & Blondel, V. D. Bursts of vertex activation and epidemics in evolving networks. PLoS Comput. Biol. 9, e1002974 (2013).

Horváth, D. X. & Kertész, J. Spreading dynamics on networks: the role of burstiness, topology and non-stationarity. N. J. Phys. 16, 073037 (2014).

Malmgren, R. D., Stouffer, D. B., Motter, A. E. & Amaral, L. A. N. A Poissonian explanation for heavy tails in e-mail communication. Proc. Natl Acad. Sci. 105, 18153–18158 (2008).

Perra, N. et al. Random walks and search in time-varying networks. Phys. Rev. Lett. 109, 238701 (2012).

Klafter, J. & Sokolov, I. M. First Steps in Random Walks: From Tools to Applications Oxford University Press (2011).

Iribarren, J. L. & Moro, E. Impact of human activity patterns on the dynamics of information diffusion. Phys. Rev. Lett. 103, 038702 (2009).

Jo, H. -H., Perotti, J. I., Kaski, K. & Kertész, J. Analytically solvable model of spreading dynamics with non-Poissonian. Phys. Rev. X 4, 011041 (2014).

Min, B., Goh, K. -I. & Vazquez, A. Spreading dynamics following bursty human activity patterns. Phys. Rev. E 83, 036102 (2011).

Vazquez, A., Rácz, B., Lukács, A. & Barabási, A. -L. Impact of non-Poissonian activity patterns on spreading processes. Phys. Rev. Lett. 98, 158702 (2007).

Aström, K. J. & Murray, R. M. Feedback Systems: An Introduction for Scientists and Engineers Princeton University Press (2010).

Fax, J. A. & Murray, R. M. Information flow and cooperative control of vehicle formations. Autom. Cont. 49, 1465–1476 (2004).

Blondel, V. D., Hendrickx, J. M., Olshevsky, A. & Tsitsiklis, J. N. Convergence in multiagent coordination, consensus, and flocking. Proc. 44th IEEE Conf. Decision Control, 2996-3000 (2005).

Jadbabaie, A., Lin, J. & Morse, A. S. Coordination of groups of mobile autonomous agents using nearest neighbor rules. Autom. Control IEEE Trans. 48, 988–1001 (2003).

Dörfler, F. & Bullo, F. Synchronization and transient stability in power networks and nonuniform Kuramoto oscillators. SIAM J. Cont. Opt. 50, 1616–1642 (2012).

Strogatz, S. H. From Kuramoto to Crawford: exploring the onset of synchronization of in populations of coupled oscillators. Phys. D 143, 1–20 (2000).

Yin, G. G. & Zhang, Q. Continuous-Time Markov Chains and Applications: A Two-time-scale Approach volume 37, Springer (2012).

Levin, D. A., Peres, Y. & Wilmer, E. L. Markov Chains and Mixing Times American Mathematical Society (2008).

Hoffmann, T., Porter, M. A. & Lambiotte, R. Generalized master equations for non-Poisson dynamics on networks. Phys. Rev. E 86, 046102 (2012).

Montroll, E. W. & Weiss, G. H. Random walks on lattices ii. J. Math. Phys. 6, 167–181 (1965).

Kolmogoroff, A. Uber die analytischen Methoden in der Wahrscheinlichkeitsrechnung. Math. Ann. 104, 415–458 (1931).

Dyke, P. An Introduction to Laplace Transforms and Fourier Series Springer (2014).

Kivelä, M. et al. Multiscale analysis of spreading in a large communication network. J. Stat. Mech. 2012, P03005 (2012).

Rocha, L. E. C., Liljeros, F. & Holme, P. Simulated epidemics in an empirical spatiotemporal network of 50,185 sexual contacts. PLoS Comput. Biol. 7, e1001109 (2011).

Karsai, M. et al. Small but slow world: how network topology and burstiness slow down spreading. Phys. Rev. E 83, 025102 (2011).

Speidel, L., Lambiotte, R., Aihara, K. & Masuda, N. Steady state and mean recurrence time for random walks on stochastic temporal networks. Phys. Rev. E 91, 012806 (2015).

Kleinrock, L. Queueing Systems: Theory. I Wiley-Interscience (1975).

Lambiotte, R., Tabourier, L. & Delvenne, J. -C. Burstiness and spreading on temporal networks. Eur. Phys. J. B 86, 7 (2013).

Goh, K. I. & Barabási, A. -L. Burstiness and memory in complex systems. Europhys. Lett. 81, 4 (2008).

Cheeger, J. Problems in Analysis pages 195–199Princeton University Press (1970).

Diaconis, P. & Stroock, D. Geometric bounds for eigenvalues of Markov chains. Ann. Appl. Probab. 1, 36–61 (1991).

Gfeller, D. & De Los Rios, P. Spectral coarse graining and synchronization in oscillator networks. Phys. Rev. Lett. 100, 174104 (2008).

Kokotovic, P., Khalil, H. K. & O’reilly, J. Singular Perturbation Methods in Control: Analysis and Design volume 25, Society for Industrial and Applied Mathematics (1987).

Simon, H. A. & Ando, A. Aggregation of variables in dynamic systems. Econometrica 29, 111–138 (1961).

Simonsen, I. Diffusion and networks: a powerful combination!. Phys. A 357, 317–330 (2005).

Delvenne, J. -C., Schaub, M. T., Yaliraki, S. N. & Barahona, M. Dynamics On and Of Complex Networks volume 2, pages 221–242Springer (2013).

Shen, H. -W. & Cheng, X. -Q. Spectral methods for the detection of network community structure: A comparative analysis. J. Stat. Mech. 2010, P10020 (2010).

Von Luxburg, U. A tutorial on spectral clustering. Stat. Comput. 17, 395–416 (2007).

Reichardt, J. & Bornholdt, S. Statistical mechanics of community detection. Phys. Rev. E 74, 016110 (2006).

Ronhovde, P. & Nussinov, Z. Multiresolution community detection for megascale networks by information-based replica correlations. Phys. Rev. E 80, 016109 (2009).

Fortunato, S. Community detection in graphs. Phys. Rep. 486, 75–174 (2010).

Karrer, B., Levina, E. & Newman, M. E. J. Robustness of community structure in networks. Phys. Rev. E 77, 046119 (2008).

Delmotte, A., Tate, E. W., Yaliraki, S. N. & Barahona, M. Protein multi-scale organization through graph partitioning and robustness analysis: Application to the myosin light chain interaction. Phys. Biol. 8, 055010 (2011).

Keeling, M. J. & Ross, J. V. On methods for studying stochastic disease dynamics. J. R. Soc. Interface 5, 171–181 (2008).

Delmotte, A., Schaub, M. T., Yaliraki, S. N. & Barahona, M. Community Detection using the stability of a graph partition https://github.com/michaelschaub/PartitionStability (2012).

Acknowledgements

We thank Michael Schaub for carefully reading the manuscript. J.C.D. and R.L. thank financial support of IAP DYSCO and ARC ‘Mining and Optimization of Big Data Models’. R.L. acknowledges support from FNRS and from FP7 project ‘Optimizr’. L.E.C.R. is an FNRS chargé de recherches and thanks VR for financial support.

Author information

Authors and Affiliations

Contributions

J.C.D., L.E.C.R. and R.L. conceived the project and wrote the manuscript. J.C.D. derived the analytical results. L.E.C.R. performed the numerical simulations and analysed the data.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Supplementary information

Supplementary Information

Supplementary Figure 1, Supplementary Table 1, Supplementary Notes 1-3 and Supplementary References (PDF 551 kb)

Rights and permissions

About this article

Cite this article

Delvenne, JC., Lambiotte, R. & Rocha, L. Diffusion on networked systems is a question of time or structure. Nat Commun 6, 7366 (2015). https://doi.org/10.1038/ncomms8366

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/ncomms8366

This article is cited by

-

Bursts of communication increase opinion diversity in the temporal Deffuant model

Scientific Reports (2024)

-

Temporal-topological properties of higher-order evolving networks

Scientific Reports (2023)

-

The shape of memory in temporal networks

Nature Communications (2022)

-

Analysis of the competition among viral strains using a temporal interaction-driven contagion model

Scientific Reports (2022)

-

Transition from simple to complex contagion in collective decision-making

Nature Communications (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.