Abstract

Mathematical models are an essential component of quantitative science. They generate predictions about the future, based on information available in the present. In the spirit of 'simpler is better', should two models make identical predictions, the one that requires less input is preferred. Yet, for almost all stochastic processes, even the provably optimal classical models waste information. The amount of input information they demand exceeds the amount of predictive information they output. Here we show how to systematically construct quantum models that break this classical bound, and that the system of minimal entropy that simulates such processes must necessarily feature quantum dynamics. This indicates that many observed phenomena could be significantly simpler than classically possible should quantum effects be involved.


Introduction

A mathematical model of a system of interest is an algorithmic abstraction of its observable output. Envision that the given system is encased within a black box, such that we observe only its output. Within a second box resides a computer that executes a model of this system with appropriate input. For the model to be accurate, we expect these boxes to be operationally indistinguishable; their output is statistically equivalent, such that no external observer can differentiate which box contains the original system.

There are numerous distinct models for any given system. Consider a system of interest consisting of two binary switches. At each time-step, the system emits a 0 or 1 depending on whether the states of the two switches coincide, and one of the two switches is chosen at random and flipped. The obvious model that simulates this system keeps track of both switches, and thus requires an input of entropy 2. Yet, the output is simply a sequence of alternating 0s and 1s, and can thus be modelled knowing only the value of the previous emission. Intuition suggests that this alternative is more efficient; it demands only an input of entropy 1 (that is, a single bit), when the original model required two. This intuition can be formalized by defining the optimal model of a particular behaviour as the one whose input is of minimal entropy. Indeed, this definition has already been adopted as a principle of computational mechanics1,2.
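The two-switch example can be checked with a short simulation. This is a minimal sketch, assuming the system emits 1 when the switches coincide and 0 otherwise (the text leaves this convention open); the names `two_switch_outputs` and `one_bit_model` are hypothetical. It confirms that once the first emission is known, a model that stores only the previous bit reproduces the two-switch output exactly.

```python
import random

def two_switch_outputs(steps, seed=0):
    """Simulate the two-switch system: emit 1 if the switches coincide
    (assumed convention), then flip one switch chosen uniformly at random."""
    rng = random.Random(seed)
    switches = [rng.randint(0, 1), rng.randint(0, 1)]  # two bits of internal state
    out = []
    for _ in range(steps):
        out.append(1 if switches[0] == switches[1] else 0)
        switches[rng.randint(0, 1)] ^= 1  # flipping either switch toggles coincidence
    return out

def one_bit_model(first, steps):
    """One-bit model: remember only the previous emission and alternate it."""
    out, last = [], first
    for _ in range(steps):
        out.append(last)
        last ^= 1
    return out

seq = two_switch_outputs(20, seed=42)
print(seq == one_bit_model(seq[0], len(seq)))
```

The two-bit simulator and the one-bit model are indistinguishable from their outputs, which is the sense in which the one-bit model is "more efficient".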

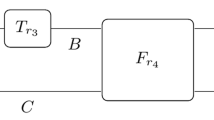

Efficient mathematical models carry operational consequences. The practical application of a model necessitates its physical realization within a corresponding simulator (Fig. 1). Therefore, should a model demand an input of entropy C, its physical realization must contain the capacity to store that information. Simpler mathematical models for a given process allow the construction of simulators with reduced information-storage requirements. Thus, we can directly infer the minimal complexity of an observed process once we know its simplest model. If a process exhibits observed statistics that require an input of entropy C to model, then whatever the underlying mechanics of the observed process, we require a system of entropy C to simulate its future statistics.

A mathematical model is defined by a stochastic function f that maps relevant data from the present, x, to output statistics that coincide with those of the process it seeks to model. To implement this model, we must realize it within some physical simulator. To do this, we (a) encode x within a suitable physical system, (b) evolve the system according to a physical implementation of f and (c) retrieve the predictions of the model by appropriate measurement. On the other hand, given a simulator with entropy C that outputs statistically identical predictions, we can always construct a corresponding mathematical model that takes the initial state of this system as input. Thus the input entropy of a model and the initial entropy of its corresponding simulator coincide (this is also a lower bound on the amount of information the simulator must store). In this article, we regard both models and simulators as algorithms that map input states to desired output statistics, with the implicit understanding that the two terms are interchangeable. The former emphasizes the mathematical nature of these algorithms, whereas the latter emphasizes their physical realization.

These observations motivate maximally efficient models: models that generate desired statistical behaviour while requiring minimal input information. In this article, we show that even when such behaviour aligns with simple stochastic processes, such models are almost always quantum. For any given stochastic process, we outline its provably simplest classical model. We show that unless improvement over this optimal classical model violates the second law of thermodynamics, our construction yields a superior quantum model, and a corresponding simulator can always be constructed.

Results

Framework and tools

We can characterize the observable behaviour of any dynamical process by a joint probability distribution $P(\overleftarrow{X}, \overrightarrow{X})$, where $\overleftarrow{X}$ and $\overrightarrow{X}$ are random variables that govern the system's observed behaviour, respectively, in the past and the future. Each particular realization of the process has a particular past $\overleftarrow{x}$, with probability $P(\overleftarrow{X} = \overleftarrow{x})$. Should there exist a model for this behaviour with an input of entropy C, then we may compress $\overleftarrow{X}$ within a system S of entropy C, such that systematic actions on S generate random variables whose statistics obey $P(\overrightarrow{X} \mid \overleftarrow{X} = \overleftarrow{x})$.

We seek the maximally efficient model, such that C is minimized. As the past contains exactly $E = I(\overleftarrow{X} : \overrightarrow{X})$ (the mutual information between past and future) about the future, the model must require an input of entropy at least E (this remains true for quantum systems5). On the other hand, there appears no obvious reason a model should require anything more. We say that the resulting model, where $C = E$, is ideal. It turns out that for many systems such models do not exist.

Consider a dynamical system observed at discrete times $t \in \mathbb{Z}$, with possible discrete outcomes $x_t \in \Sigma$ dictated by random variables $X_t$. Such a system can be modelled by a stochastic process6, where each realization is specified by a sequence of past outcomes $\overleftarrow{x} = \ldots x_{-2} x_{-1}$, and exhibits a particular future $\overrightarrow{x} = x_0 x_1 \ldots$ with probability $P(\overrightarrow{X} = \overrightarrow{x} \mid \overleftarrow{X} = \overleftarrow{x})$. Here, $E = I(\overleftarrow{X} : \overrightarrow{X})$, referred to as excess entropy7,8, is a quantity of relevance in diverse disciplines ranging from spin systems9 to measures of brain complexity10. How can we construct the simplest simulator of such behaviour, preferably with input entropy of no more than E?
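For the special case of a stationary first-order Markov chain (an assumption made here; E is defined above for general processes), the most recent symbol screens off the rest of the past, so the mutual information between past and future collapses to $E = H(X_0) - H(X_1 \mid X_0)$. The minimal sketch below computes E this way, with hypothetical helper names and a transition matrix `P[i][j]` giving the probability of symbol j following symbol i.

```python
from math import log2

def shannon(probs):
    """Shannon entropy in bits, ignoring zero-probability entries."""
    return -sum(q * log2(q) for q in probs if q > 0)

def stationary(P, iters=1000):
    """Stationary distribution by power iteration (assumes convergence, e.g. an
    aperiodic chain, or a doubly stochastic P started from the uniform vector)."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi

def excess_entropy_markov1(P):
    """E = H(X_0) - H(X_1 | X_0) for a stationary order-1 Markov chain."""
    pi = stationary(P)
    return shannon(pi) - sum(pi[i] * shannon(P[i]) for i in range(len(P)))

print(excess_entropy_markov1([[0.5, 0.5], [0.5, 0.5]]))  # independent fair coin flips
print(excess_entropy_markov1([[0.0, 1.0], [1.0, 0.0]]))  # strictly alternating 0101...
```

Independent fair coin flips give E = 0, while the alternating output of the two-switch example gives E = 1, matching the single bit that example needs.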

The brute force approach is to create an algorithm that samples from $P(\overrightarrow{X} \mid \overleftarrow{X} = \overleftarrow{x})$ given complete knowledge of $\overleftarrow{x}$. Such a construction accepts $\overleftarrow{x}$ directly as input, resulting in the required entropy of $C = H(\overleftarrow{X})$, where $H(\overleftarrow{X})$ denotes the Shannon entropy of the complete past. This is wasteful. Consider the output statistics resulting from a sequence of coin flips, such that $P(\overleftarrow{X}, \overrightarrow{X})$ is the uniform distribution over all binary strings. E equals 0 and yet C is infinite. It should not require infinite memory to mimic a single coin; better approaches exist.

Simplest classical models

ɛ-machines are the provably optimal classical solution3,4. They rest on the rationale that to exhibit desired future statistics, a system need not distinguish differing pasts, $\overleftarrow{x}$ and $\overleftarrow{x}'$, if their future statistics coincide. This motivates the equivalence relation, ∼, on the set of all past output histories, such that $\overleftarrow{x} \sim \overleftarrow{x}' \iff P(\overrightarrow{X} \mid \overleftarrow{X} = \overleftarrow{x}) = P(\overrightarrow{X} \mid \overleftarrow{X} = \overleftarrow{x}')$. To sample from $P(\overrightarrow{X} \mid \overleftarrow{X} = \overleftarrow{x})$ for a particular $\overleftarrow{x}$, an ɛ-machine need not store $\overleftarrow{x}$, only which equivalence class $\overleftarrow{x}$ belongs to. Each equivalence class is referred to as a causal state.
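The equivalence relation ∼ can be illustrated numerically by truncating pasts and futures to finite length, which only approximates the definition above (that definition uses semi-infinite pasts and futures). In this sketch the word probabilities come from the perturbed coin discussed later, modelled as a stationary two-state Markov chain with flip probability p, an assumption made purely for concreteness; `word_prob` and `causal_classes` are hypothetical names. Pasts are grouped whenever their conditional future distributions agree, using exact rational arithmetic so the equality test is reliable.

```python
from fractions import Fraction
from itertools import product

def word_prob(word, p):
    """Stationary probability of a binary word for a coin that flips with probability p."""
    prob = Fraction(1, 2)  # the stationary distribution over coin faces is uniform
    for a, b in zip(word, word[1:]):
        prob *= p if a != b else 1 - p
    return prob

def causal_classes(p, past_len=3, future_len=2):
    """Group length-past_len pasts whose conditional distributions over
    length-future_len futures coincide (a finite-length stand-in for ~)."""
    futures = list(product((0, 1), repeat=future_len))
    classes = {}
    for past in product((0, 1), repeat=past_len):
        key = tuple(word_prob(past + f, p) / word_prob(past, p) for f in futures)
        classes.setdefault(key, []).append(past)
    return list(classes.values())

for cls in causal_classes(Fraction(1, 4)):
    print(cls)
```

For p = 1/4 the pasts split into two classes, those ending in 0 and those ending in 1; for p = 1/2 every past falls into a single class.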

For any stochastic process $P(\overleftarrow{X}, \overrightarrow{X})$ with emission alphabet Σ, we may deduce its causal states $\mathcal{S} = \{S_1, \ldots, S_N\}$ that form the state space of its corresponding ɛ-machine. At each time step t, the machine operates according to a set of transition probabilities $T_{jk}^{(r)}$: the probability that the machine will output $x_t = r \in \Sigma$ and transition to Sk, given that it is in state Sj. The resulting ɛ-machine, when initially set to state $S(\overleftarrow{x})$ (the causal state to which $\overleftarrow{x}$ belongs), generates a sequence $\overrightarrow{x}$ according to probability distribution $P(\overrightarrow{X} \mid \overleftarrow{X} = \overleftarrow{x})$ as it iterates through these transitions. The resulting ɛ-machine thus has internal entropy

$$C_\mu = H(S) = -\sum_{j} p_j \log_2 p_j,$$

where S is the random variable that governs $S(\overleftarrow{X})$ and pj is the probability that $S(\overleftarrow{X}) = S_j$.
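Given the transition probabilities, Cμ follows from the stationary distribution over causal states, which satisfies $p_k = \sum_j \sum_r p_j T_{jk}^{(r)}$. This sketch stores $T_{jk}^{(r)}$ as `T[r][j][k]` (a layout chosen here, not taken from the text) and finds $p_j$ by power iteration, assuming it converges. Besides the perturbed coin discussed later, it uses a hypothetical two-state machine in which state A emits 1 and stays in A, or emits 0 and moves to B, with probability 1/2 each, while B always emits 1 and returns to A (commonly called the golden mean process); both are illustrations only.

```python
from math import log2

def stationary_states(T, iters=2000):
    """Stationary distribution p_j of the causal states, by power iteration."""
    n = len(next(iter(T.values())))
    p = [1.0 / n] * n
    for _ in range(iters):
        p = [sum(p[j] * M[j][k] for M in T.values() for j in range(n)) for k in range(n)]
    return p

def statistical_complexity(T):
    """C_mu = -sum_j p_j log2 p_j."""
    return -sum(q * log2(q) for q in stationary_states(T) if q > 0)

def perturbed_coin(p):
    # State j is the current coin face; emitting r always moves the machine to state r.
    return {0: [[1 - p, 0.0], [p, 0.0]], 1: [[0.0, p], [0.0, 1 - p]]}

GOLDEN_MEAN = {1: [[0.5, 0.0], [1.0, 0.0]], 0: [[0.0, 0.5], [0.0, 0.0]]}

print(statistical_complexity(perturbed_coin(0.1)))  # both faces equally likely
print(statistical_complexity(GOLDEN_MEAN))          # p_A = 2/3, p_B = 1/3
```

The coin gives Cμ = 1 for any 0 < p < 1, and the golden mean machine gives Cμ = log2 3 − 2/3 ≈ 0.918.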

The provable optimality of ɛ-machines among all classical models motivates Cμ as an intrinsic property of a given stochastic process, rather than just a property of ɛ-machines. Referred to in the literature as the statistical complexity4,11, its interpretation as the minimal amount of information storage required to simulate a given process has been applied to quantify self-organization12, the onset of chaos3 and the complexity of protein configuration space13. Such interpretations, however, implicitly assume that classical models are optimal. Should a quantum simulator be capable of exhibiting the same output statistics with reduced entropy, this fundamental interpretation of Cμ may require review.

Classical models are not ideal

There is certainly room for improvement. For many stochastic processes, Cμ is strictly greater than E (ref. 11); the ɛ-machine that models such processes is fundamentally irreversible. Even if the entire future output of such an ɛ-machine were observed, we would still remain uncertain which causal state the machine was initialized in. Some of that information has been erased, and thus, in principle, need never be stored. In this paper, we show that for all such processes, quantum processing helps: for any ɛ-machine such that Cμ>E, there exists a quantum system, a quantum ɛ-machine with entropy Cq, such that Cμ>Cq≥E. Therefore, the corresponding model demands an input with entropy no greater than Cq.

The key intuition for our construction lies in identifying the cause of irreversibility within classical ɛ-machines, and addressing it within quantum dynamics. An ɛ-machine distinguishes two different causal states provided they have differing future statistics, but makes no distinction based on how much these futures differ. Consider two causal states, Sj and Sk, that both have the potential to emit output r at the next time step and transition to some coinciding causal state Sl. Should this occur, some of the information required to completely distinguish Sj and Sk has been irreversibly lost. We say that Sj and Sk share non-distinct futures. In fact, this is both a necessary and a sufficient condition for Cμ>E (see Methods for proof).

The irreversibility condition

Given a stochastic process $P(\overleftarrow{X}, \overrightarrow{X})$ with excess entropy E and statistical complexity Cμ, let its corresponding ɛ-machine have transition probabilities $T_{jk}^{(r)}$. Then Cμ>E iff there exists a non-zero probability that two different causal states, Sj and Sk, will both make a transition to a coinciding causal state Sl on emission of a coinciding output r∈Σ, that is, $T_{jl}^{(r)} \neq 0$ and $T_{kl}^{(r)} \neq 0$. We refer to this as the irreversibility condition.
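Because the condition only asks whether some column of some matrix $T^{(r)}$ has two non-zero entries, it is easy to test mechanically. A minimal sketch, using a hypothetical `T[r][j][k]` layout for $T_{jk}^{(r)}$: the perturbed coin and the golden mean machine (state A emits 1 and stays, or emits 0 and moves to B; B emits 1 and returns to A) satisfy the condition, whereas a period-2 machine that deterministically alternates between emitting 0 and 1 does not.

```python
def irreversibility_condition(T):
    """True iff distinct states S_j, S_k can emit the same r and both land in S_l,
    i.e. T[r][j][l] > 0 and T[r][k][l] > 0 for some j != k."""
    for M in T.values():
        n = len(M)
        for l in range(n):
            sources = [j for j in range(n) if M[j][l] > 0]
            if len(sources) > 1:
                return True
    return False

def perturbed_coin(p):
    return {0: [[1 - p, 0.0], [p, 0.0]], 1: [[0.0, p], [0.0, 1 - p]]}

GOLDEN_MEAN = {1: [[0.5, 0.0], [1.0, 0.0]], 0: [[0.0, 0.5], [0.0, 0.0]]}
PERIOD_TWO = {0: [[0.0, 1.0], [0.0, 0.0]], 1: [[0.0, 0.0], [1.0, 0.0]]}

for name, T in [("coin", perturbed_coin(0.2)), ("golden mean", GOLDEN_MEAN), ("period 2", PERIOD_TWO)]:
    print(name, irreversibility_condition(T))
```

For the period-2 machine the output reveals the causal state exactly, consistent with Cμ = E = 1 for that process.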

This condition highlights the fundamental limitation of any classical model. To generate desired statistics, any classical model must record each binary property A such that $P(\overrightarrow{X} \mid A = 0) \neq P(\overrightarrow{X} \mid A = 1)$, regardless of how much these distributions overlap. In contrast, quantum models are free of such restriction. A quantum system can store causal states as quantum states that are not mutually orthogonal. The resulting quantum ɛ-machine differentiates causal states sufficiently to generate correct statistical behaviour. Essentially, they save memory by 'partially discarding' A, and yet retain enough information to recover statistical differences between $P(\overrightarrow{X} \mid A = 0)$ and $P(\overrightarrow{X} \mid A = 1)$.

Improved quantum models

Given an ɛ-machine with causal states Sj and transition probabilities $T_{jk}^{(r)}$, we define quantum causal states

$$|S_j\rangle = \sum_{k} \sum_{r \in \Sigma} \sqrt{T_{jk}^{(r)}}\, |r\rangle |k\rangle,$$

where |r〉 and |k〉 form orthonormal bases on Hilbert spaces of dimension |Σ| and |S|, respectively. A quantum ɛ-machine accepts a quantum state |Sj〉 as input in place of Sj. Thus, such a system has an internal entropy of

$$C_q = -\mathrm{Tr}\, \rho \log_2 \rho,$$

where $\rho = \sum_j p_j |S_j\rangle\langle S_j|$. Cq is strictly less than Cμ provided the |Sj〉 are not all mutually orthogonal14.
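For a two-state ɛ-machine, Cq can be computed without a linear-algebra library. This sketch relies on the standard fact that $\rho = \sum_j p_j |S_j\rangle\langle S_j|$ has the same non-zero eigenvalues as the Gram matrix $G_{ij} = \sqrt{p_i p_j}\,\langle S_i|S_j\rangle$, together with the overlap $\langle S_i|S_j\rangle = \sum_{r,k}\sqrt{T_{ik}^{(r)} T_{jk}^{(r)}}$ implied by the definition above. The restriction to two states (so the eigenvalues have a closed form), the `T[r][j][k]` layout and all function names are simplifications introduced here. The second example is the golden mean machine (state A emits 1 and stays, or emits 0 and moves to B, each with probability 1/2; B emits 1 and returns to A).

```python
from math import log2, sqrt

def stationary_states(T, iters=2000):
    """Stationary distribution over causal states, by power iteration."""
    n = len(next(iter(T.values())))
    p = [1.0 / n] * n
    for _ in range(iters):
        p = [sum(p[j] * M[j][k] for M in T.values() for j in range(n)) for k in range(n)]
    return p

def overlap(T, i, j):
    """<S_i|S_j> = sum_{r,k} sqrt(T_ik^(r) T_jk^(r)); all amplitudes are real."""
    return sum(sqrt(M[i][k] * M[j][k]) for M in T.values() for k in range(len(M)))

def entropy_bits(probs):
    return -sum(q * log2(q) for q in probs if q > 1e-15)

def quantum_complexity_two_state(T):
    """C_q = S(rho), via the closed-form eigenvalues of the 2x2 Gram matrix."""
    p = stationary_states(T)
    g = sqrt(p[0] * p[1]) * overlap(T, 0, 1)  # off-diagonal Gram entry
    mean = 0.5 * (p[0] + p[1])
    half_gap = sqrt(0.25 * (p[0] - p[1]) ** 2 + g * g)
    return entropy_bits([mean + half_gap, mean - half_gap])

def classical_complexity(T):
    return entropy_bits(stationary_states(T))

coin = {0: [[0.8, 0.0], [0.2, 0.0]], 1: [[0.0, 0.2], [0.0, 0.8]]}  # flip probability 0.2
golden_mean = {1: [[0.5, 0.0], [1.0, 0.0]], 0: [[0.0, 0.5], [0.0, 0.0]]}
for T in (coin, golden_mean):
    print(classical_complexity(T), quantum_complexity_two_state(T))
```

In both cases the overlap is non-zero and the quantum entropy comes out strictly below the classical one.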

This is guaranteed whenever Cμ>E. The irreversibility condition implies that there exist two causal states, Sj and Sk, which will both make a transition to a coinciding causal state Sl on emission of a coinciding output r∈Σ, that is, $T_{jl}^{(r)} \neq 0$ and $T_{kl}^{(r)} \neq 0$. Consequently $\langle S_j | S_k \rangle \geq \sqrt{T_{jl}^{(r)} T_{kl}^{(r)}} > 0$, and thus |Sj〉 is not orthogonal to |Sk〉.
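Spelled out, the step from the irreversibility condition to non-orthogonality uses only the definition of the quantum causal states and the orthonormality of the |r〉|k〉 basis. Since every amplitude $\sqrt{T_{jk}^{(r)}}$ is real and non-negative, no two terms of the overlap can cancel, so a single shared transition already forces a strictly positive overlap:

$$
\langle S_j | S_k \rangle
  = \sum_{r' \in \Sigma} \sum_{m} \sqrt{T_{jm}^{(r')}\, T_{km}^{(r')}}
  \;\geq\; \sqrt{T_{jl}^{(r)}\, T_{kl}^{(r)}} \;>\; 0 .
$$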

A quantum ɛ-machine initialized in state |Sj〉 can synthesise black-box behaviour that is statistically identical to a classical ɛ-machine initialized in state Sj. A simple method is to first measure |Sj〉 in the basis |r〉|k〉, resulting in measurement values r, k, and then to set r as output x0 and prepare the quantum state |Sk〉. Repetition of this process generates a sequence of outputs x1, x2, ... according to the same probability distribution as the original ɛ-machine, and hence according to $P(\overrightarrow{X} \mid \overleftarrow{X} = \overleftarrow{x})$. (We note that while the simplicity of the above method makes it easy to understand and amenable to experimental realization, there is room for improvement. The decoding process prepares |Sk〉 based on the value of k, and thus still requires Cμ bits of memory. However, there exist more sophisticated protocols without such limitation, such that the entropy of the quantum ɛ-machine remains at Cq at all times. One is detailed in Methods.) These observations lead to the central result of our paper.

Theorem: Consider any stochastic process $P(\overleftarrow{X}, \overrightarrow{X})$ with excess entropy E, whose optimal classical model has input entropy Cμ>E. Then, we may construct a quantum system that generates identical statistics, with input entropy Cq<Cμ. In addition, the entropy of this system never exceeds Cq while generating these statistics.

There always exist quantum models of greater efficiency than the optimal classical model, unless the optimal classical model is already ideal.
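The output statistics of the simple measure-and-prepare protocol can be emulated classically: by the Born rule, measuring |Sj〉 in the |r〉|k〉 basis yields the pair (r, k) with probability $|\sqrt{T_{jk}^{(r)}}|^2 = T_{jk}^{(r)}$. In this sketch a pseudo-random generator stands in for the quantum measurement, and the function name and `T[r][j][k]` layout are hypothetical. It stores k classically between steps, which is exactly the Cμ-bit bottleneck noted above; it does not implement the constant-entropy protocol from Methods.

```python
import random

def measure_and_prepare(T, start, steps, seed=0):
    """Emulate: measure |S_j> in the |r>|k> basis, emit r, then prepare |S_k>."""
    rng = random.Random(seed)
    outcomes = [(r, k) for r, M in T.items() for k in range(len(M))]
    state, emitted = start, []
    for _ in range(steps):
        # Born rule: Pr(r, k) = |sqrt(T_jk^(r))|^2 = T_jk^(r)
        weights = [T[r][state][k] for r, k in outcomes]
        r, k = rng.choices(outcomes, weights=weights)[0]
        emitted.append(r)
        state = k  # the next step starts from |S_k> (k is held classically here)
    return emitted

p = 0.1
coin = {0: [[1 - p, 0.0], [p, 0.0]], 1: [[0.0, p], [0.0, 1 - p]]}
xs = measure_and_prepare(coin, start=0, steps=20_000, seed=1)
flip_rate = sum(a != b for a, b in zip(xs, xs[1:])) / (len(xs) - 1)
print(f"empirical flip rate {flip_rate:.3f} vs p = {p}")
```

For the perturbed coin the empirical flip rate of the emitted sequence should approach p, as it does for the classical ɛ-machine.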

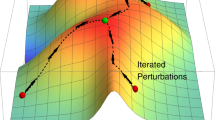

A concrete example of simulating perturbed coins

We briefly highlight these ideas with a concrete example of a perturbed coin. Consider a process $P(\overleftarrow{X}, \overrightarrow{X})$ realized by a box that contains a single coin. At each time step, the box is perturbed such that the coin flips with probability 0<p<1, and the state of the coin is then observed. This results in a stochastic process, where each $x_t \in \{0, 1\}$, governed by random variable Xt, represents the result of the observation at time t.

For any p≠0.5, this system has two causal states, corresponding to the two possible states of the coin; the set of pasts ending in 0, and the set of pasts ending in 1. We call these S0 and S1. The perturbed coin is its own best classical model, requiring exactly a system of entropy Cμ=1, namely the coin itself, to generate correct future statistics.

As p→0.5, the future statistics of S0 and S1 become increasingly similar. The stronger the perturbation, the less it matters what state the coin was in before perturbation. This is reflected by the observation that E→0 (in fact $E = 1 - H(p)$ (ref. 9), where $H(p) = -p \log_2 p - (1 - p) \log_2 (1 - p)$ is the Shannon entropy of a biased coin that outputs heads with probability p (ref. 15)). Thus only a fraction $E / C_\mu = 1 - H(p)$ of the information stored is useful, which tends to 0 as p→0.5.
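The closed forms above are easy to tabulate. A short sketch with hypothetical function names evaluates H(p) and E = 1 − H(p) for a few flip probabilities; since Cμ = 1, E is also the useful fraction of the stored bit.

```python
from math import log2

def binary_entropy(p):
    """H(p) = -p log2 p - (1 - p) log2 (1 - p), with H(0) = H(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)

def coin_excess_entropy(p):
    """E = 1 - H(p) for the perturbed coin; with C_mu = 1 this is also E / C_mu."""
    return 1.0 - binary_entropy(p)

for p in (0.05, 0.1, 0.2, 0.4, 0.5):
    print(f"p = {p:.2f}   E = {coin_excess_entropy(p):.3f} bits")
```

The predictive fraction shrinks monotonically toward zero as p approaches 0.5.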

Quantum ɛ-machines offer dramatic improvement. Because the next causal state of the perturbed coin always equals the emitted symbol, the register |r〉|k〉 reduces to a single qubit, and we encode the quantum causal states $|S_0\rangle = \sqrt{1-p}\,|0\rangle + \sqrt{p}\,|1\rangle$ and $|S_1\rangle = \sqrt{p}\,|0\rangle + \sqrt{1-p}\,|1\rangle$ within it. This results in entropy $C_q = -\mathrm{Tr}\,\rho \log_2 \rho = H\left(\tfrac{1}{2} + \sqrt{p(1-p)}\right)$, where $\rho = \tfrac{1}{2}\left(|S_0\rangle\langle S_0| + |S_1\rangle\langle S_1|\right)$. The non-orthogonality of |S0〉 and |S1〉 ensures that this will always be less than Cμ (ref. 16). As p→0.5, the memory a quantum ɛ-machine needs to generate the same statistics becomes negligible compared with its classical counterpart (Fig. 2).
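The qubit encoding can be checked against the closed form. In this sketch (hypothetical names), the density matrix $\rho = \tfrac{1}{2}(|S_0\rangle\langle S_0| + |S_1\rangle\langle S_1|)$ is built explicitly from the amplitudes, its eigenvalues come from the 2×2 closed form, and the result is compared with $H(\tfrac{1}{2} + \sqrt{p(1-p)})$ and with E = 1 − H(p).

```python
from math import log2, sqrt

def binary_entropy(x):
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * log2(x) - (1 - x) * log2(1 - x)

def coin_quantum_complexity(p):
    """Von Neumann entropy of rho = (|S0><S0| + |S1><S1|)/2 for the perturbed coin."""
    s0 = (sqrt(1 - p), sqrt(p))  # |S_0> = sqrt(1-p)|0> + sqrt(p)|1>
    s1 = (sqrt(p), sqrt(1 - p))  # |S_1> = sqrt(p)|0> + sqrt(1-p)|1>
    rho = [[0.5 * (s0[a] * s0[b] + s1[a] * s1[b]) for b in range(2)] for a in range(2)]
    mean = 0.5 * (rho[0][0] + rho[1][1])
    half_gap = sqrt(0.25 * (rho[0][0] - rho[1][1]) ** 2 + rho[0][1] ** 2)
    lam = mean + half_gap  # larger eigenvalue; the other one is 1 - lam
    return binary_entropy(lam)

for p in (0.1, 0.2, 0.4):
    cq = coin_quantum_complexity(p)
    closed_form = binary_entropy(0.5 + sqrt(p * (1 - p)))
    excess = 1 - binary_entropy(p)
    print(f"p = {p}: E = {excess:.3f}, Cq = {cq:.3f} (closed form {closed_form:.3f}), Cmu = 1")
```

At the sample points the ordering E < Cq < Cμ = 1 holds, in line with the observation that the quantum protocol still needs more than E.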

While the excess entropy of the perturbed coin approaches zero as p→0.5 (red line), generating such statistics classically generally requires an entropy of Cμ=1 (green line). Encoding the past within a quantum system leads to significant improvement (purple line). (Here $C_q = H\left(\tfrac{1}{2} + \sqrt{p(1-p)}\right)$.) However, even the quantum protocol still requires an input entropy greater than the excess entropy.

This improvement is readily apparent when we model a lattice of K independent perturbed coins, which output a number $x_t \in \{0, 1, \ldots, 2^K - 1\}$ that represents the state of the lattice after each perturbation. Any classical model must necessarily differentiate between $2^K$ equally likely causal states, and thus requires an input of entropy K. A quantum ɛ-machine reduces this to $K C_q$. For p>0.2, Cq<0.5, so the initial condition of two perturbed coins may be encoded within a system of entropy 1. For p>0.4, Cq<0.1, so a system of entropy 1 can simulate 10 such coins. This indicates that quantum systems can potentially simulate N such coins on receipt of K≪N qubits, provided appropriate compression (through lossless encodings17) of the relevant past.
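The resource count for K independent coins is simple arithmetic: classically K bits, quantum mechanically K·Cq, so one unit of entropy covers roughly 1/Cq coins. The sketch below (hypothetical names) evaluates this for the two flip probabilities quoted above. It counts entropy only; realizing K·Cq qubits in practice relies on the lossless compression mentioned in the text, which is not modelled here.

```python
from math import floor, log2, sqrt

def binary_entropy(x):
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * log2(x) - (1 - x) * log2(1 - x)

def coin_cq(p):
    """C_q = H(1/2 + sqrt(p(1-p))) for a single perturbed coin."""
    return binary_entropy(0.5 + sqrt(p * (1 - p)))

def lattice_entropies(K, p):
    """(classical, quantum) input entropy for K independent perturbed coins."""
    return K, K * coin_cq(p)

def coins_per_unit_entropy(p):
    """Number of coins whose quantum causal states fit in entropy 1 (no compression overhead)."""
    return floor(1 / coin_cq(p))

for p in (0.2, 0.4):
    print(p, lattice_entropies(10, p), coins_per_unit_entropy(p))
```

This gives at least two coins per unit of entropy at p = 0.2 and at least ten at p = 0.4, matching the figures quoted above.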

Discussion

In this article, we have demonstrated that any stochastic process with no reversible classical model can be further simplified by quantum processing. Such stochastic processes are almost ubiquitous. Even the statistics of perturbed coins can be simulated by a quantum system of reduced entropy. In addition, the quantum reconstruction can be remarkably simple. Quantum operations on a single qubit, for example, allow construction of a quantum ɛ-machine that simulates such perturbed coins. This opens the possibility of experimental validation with present-day technology.

This result has significant implications. Stochastic processes have a ubiquitous role in the modelling of dynamical systems that permeate quantitative science, from climate fluctuations to chemical reaction processes. Classically, the statistical complexity Cμ is employed as a measure of how much structure a given process exhibits. The rationale is that the optimal simulator of such a process requires at least this much memory. The fact that this memory can be reduced quantum mechanically implies the counterintuitive conclusion that quantizing such simulators can reduce their complexity beyond this classical bound, even if the process they are simulating is purely classical. Many organisms and devices operate based on the ability to predict and thus react to the environment around them. The possibility of exploiting quantum dynamics to make identical predictions with less memory implies that such systems need not be as complex as one had originally thought.

This leads to the open question: is it always possible to find an ideal simulator? Certainly, Fig. 2 shows that our construction, while superior to any classical alternative, is still not wholly reversible. Although this irreversibility may indicate that more efficient quantum models exist, it is also possible that ideal models remain forbidden within quantum theory. Both cases are interesting. The former would indicate that the notion of stochastic processes 'hiding' information from the present11 is merely a construct of inefficient classical probabilistic models, whereas the latter hints at a source of temporal asymmetry within the framework of quantum mechanics, namely that it is fundamentally impossible to simulate certain observable statistics reversibly.

Methods

Proof of the irreversibility condition

Let the aforementioned ɛ-machine have causal states $\mathcal{S} = \{S_j\}$ and emission alphabet Σ. Consider an instance of the ɛ-machine at a particular time step t. Let St and Xt be the random variables that respectively govern its causal state and observed output at time t, such that the transition probabilities that define the ɛ-machine can be expressed as

$$T_{jk}^{(r)} = P(S_{t+1} = S_k, X_t = r \mid S_t = S_j).$$

We say an ordered pair $(S_j, r) \in \mathcal{S} \times \Sigma$ is a valid emission configuration iff $T_{jk}^{(r)} \neq 0$ for some $S_k \in \mathcal{S}$. That is, it is possible for an ɛ-machine in state Sj to emit r and transit to some Sk. Denote the set of all valid emission configurations by ΩE. Similarly, we say an ordered pair $(S_k, r) \in \mathcal{S} \times \Sigma$ is a valid reception configuration iff $T_{jk}^{(r)} \neq 0$ for some $S_j \in \mathcal{S}$, and denote the set of all valid reception configurations by ΩR.

We define the transition function f: ΩE→ΩR, such that $f(S_j, r) = (S_k, r)$ if the ɛ-machine set to state Sj will transition to state Sk on emission of r. We also introduce the shorthand $X_a^b$ to denote the list of random variables $X_a, X_{a+1}, \ldots, X_{b-1}$, with $X_a^\infty$ denoting the entire future from time a. We now make use of (I) f is one-to-one iff there exist no distinct causal states, Sj and Sk, such that $T_{jl}^{(r)} \neq 0$ and $T_{kl}^{(r)} \neq 0$ for some Sl, (II) $H(S_t \mid X_t, S_{t+1}) = 0$ iff f is one-to-one, (III) $H(S_t \mid X_a^b) = H(S_{t+1} \mid X_a^b) + H(S_t \mid S_{t+1}, X_a^b)$ for all $a \le t < b$, (IV) $H(S_t \mid X_t, S_{t+1}) = 0$ implies Cμ=E, (V) Cμ=E implies $H(S_t \mid X_t, S_{t+1}) = 0$. Each of these five statements will be proved in the subsequent sections.

Combining (I), (II) and (V), we see that there exists a non-zero probability that two distinct causal states, Sj and Sk, satisfy $T_{jl}^{(r)} \neq 0$ and $T_{kl}^{(r)} \neq 0$ for some Sl only if $C_\mu \neq E$, that is, only if $C_\mu > E$ (since $C_\mu \geq E$ always). Meanwhile (I), (II), (III) and (IV) imply that there exist no two distinct causal states, Sj and Sk, such that $T_{jl}^{(r)} \neq 0$ and $T_{kl}^{(r)} \neq 0$ for some Sl only if Cμ=E. The conditions for classical non-optimality follow.

Proof of statement I

Suppose f is one-to-one; then $f(S_j, r) = f(S_k, r)$ iff Sj=Sk. Thus, there do not exist two distinct causal states, Sj and Sk, such that $T_{jl}^{(r)} \neq 0$ and $T_{kl}^{(r)} \neq 0$ for some Sl. Conversely, suppose f is not one-to-one. Because f preserves the emitted symbol, two colliding arguments must share the same r, so $f(S_j, r) = f(S_k, r)$ for some distinct Sj and Sk. Let Sl be the state such that $f(S_j, r) = f(S_k, r) = (S_l, r)$; then $T_{jl}^{(r)} \neq 0$ and $T_{kl}^{(r)} \neq 0$.

Proof of statement II

Suppose f is one-to-one. Then for each $(S_j, r) \in \Omega_R$, there exists a unique (Sk, r) such that f(Sk, r)=(Sj, r). Thus, given $(S_j, r)$, we may uniquely deduce Sk; that is, $S_t$ is a function of $(X_t, S_{t+1})$. Therefore $H(S_t \mid X_t, S_{t+1}) = 0$. Conversely, should $H(S_t \mid X_t, S_{t+1}) = 0$, then every valid reception configuration $(S_{t+1}, X_t)$ determines $S_t$ uniquely, and thus f is one-to-one.
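Statement II can be checked numerically from the joint distribution $P(S_t = S_j, X_t = r, S_{t+1} = S_k) = p_j T_{jk}^{(r)}$, using $H(S_t \mid X_t, S_{t+1}) = H(S_t, X_t, S_{t+1}) - H(X_t, S_{t+1})$. In this sketch (hypothetical names, the `T[r][j][k]` layout, and a stationary distribution found by power iteration that is assumed to converge), the quantity vanishes for a period-2 machine that deterministically alternates between emitting 0 and 1, whose f is one-to-one, and equals H(p) > 0 for the perturbed coin, where both states emit r and land in S_r.

```python
from collections import defaultdict
from math import log2

def stationary_states(T, iters=2000):
    n = len(next(iter(T.values())))
    p = [1.0 / n] * n
    for _ in range(iters):
        p = [sum(p[j] * M[j][k] for M in T.values() for j in range(n)) for k in range(n)]
    return p

def entropy_bits(weights):
    return -sum(w * log2(w) for w in weights if w > 0)

def retrodiction_uncertainty(T):
    """H(S_t | X_t, S_{t+1}) for a stationary epsilon-machine with T[r][j][k] = T_jk^(r)."""
    p = stationary_states(T)
    joint, marginal = {}, defaultdict(float)
    for r, M in T.items():
        for j, row in enumerate(M):
            for k, t in enumerate(row):
                if t > 0:
                    joint[(j, r, k)] = p[j] * t   # P(S_t = j, X_t = r, S_{t+1} = k)
                    marginal[(r, k)] += p[j] * t  # P(X_t = r, S_{t+1} = k)
    return entropy_bits(joint.values()) - entropy_bits(marginal.values())

PERIOD_TWO = {0: [[0.0, 1.0], [0.0, 0.0]], 1: [[0.0, 0.0], [1.0, 0.0]]}
coin = {0: [[0.75, 0.0], [0.25, 0.0]], 1: [[0.0, 0.25], [0.0, 0.75]]}  # flip probability 0.25
print(retrodiction_uncertainty(PERIOD_TWO), retrodiction_uncertainty(coin))
```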

Proof of statement III

Note that (i) by the chain rule, $H(S_t \mid X_a^b) + H(S_{t+1} \mid S_t, X_a^b) = H(S_t, S_{t+1} \mid X_a^b) = H(S_{t+1} \mid X_a^b) + H(S_t \mid S_{t+1}, X_a^b)$, and (ii) that, since the output of f is unique for a given $(S_t, X_t)$, $H(S_{t+1} \mid S_t, X_t) = 0$. (ii) implies that $H(S_{t+1} \mid S_t, X_a^b) = 0$ for $a \le t < b$, since uncertainty can only decrease with additional knowledge and is bounded below by 0. Substituting this into (i) results in the relation $H(S_t \mid X_a^b) = H(S_{t+1} \mid X_a^b) + H(S_t \mid S_{t+1}, X_a^b)$. Thus statement III holds for all $a \le t < b$.

Proof of statement IV

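Now assume that $H(S_t \mid X_t, S_{t+1}) = 0$. Then $H(S_t \mid S_{t+1}, X_a^b) = 0$ for $a \le t < b$, so statement III reduces to $H(S_t \mid X_a^b) = H(S_{t+1} \mid X_a^b)$, and recursive substitutions imply that $H(S_0 \mid X_0^t) = H(S_t \mid X_0^t)$. In the limit where t→∞, the left-hand side tends to $H(S_0 \mid X_0^\infty) = C_\mu - E$ (ref. 11), while the right-hand side, the uncertainty in the current causal state after observing t outputs, tends to 0. The above equality thus implies Cμ−E=0.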

Proof of statement V

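Since (i) $C_\mu - E = H(S_t \mid X_t^\infty)$, and (ii) $H(S_t \mid X_t, S_{t+1}) \geq 0$, it suffices to show that $H(S_t \mid X_t, S_{t+1}) \leq H(S_t \mid X_t^\infty)$. Now $H(S_t \mid X_t^\infty) \geq H(S_t \mid X_t, S_{t+1}, X_{t+1}^\infty)$. But, by the Markov property of causal states, $H(S_t \mid X_t, S_{t+1}, X_{t+1}^\infty) = H(S_t \mid X_t, S_{t+1})$, thus $H(S_t \mid X_t, S_{t+1}) \leq C_\mu - E = 0$, as required.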

Constant entropy prediction protocol

Recall that in the simple prediction protocol, the preparation of the next quantum causal state was based on the result of a measurement in basis |k〉. Thus, although we can encode the initial conditions of a stochastic process within a system of entropy Cq, the decoding process requires an interim system of entropy Cμ. Although this protocol establishes that quantum models require less knowledge of the past, quantum systems implementing this specific prediction protocol still need Cμ bits of memory at some stage during their evolution.

This limitation is unnecessary. In this section, we present a more sophisticated protocol whose implementation has entropy Cq at all points of operation. Consider a quantum ɛ-machine initialized in state $|S_j\rangle = \sum_{k} \sum_{r \in \Sigma} \sqrt{T_{jk}^{(r)}}\, |r\rangle |k\rangle$. We refer to the subsystem spanned by |r〉 as $\mathcal{H}_\Sigma$, and the subsystem spanned by |k〉 as $\mathcal{H}_S$.

To generate correct predictive statistics during each iteration, we first perform a general quantum operation on $\mathcal{H}_S$ that maps any given |Sj〉 to $|S'_j\rangle = \sum_{k} \sum_{r \in \Sigma} \sqrt{T_{jk}^{(r)}}\, |r\rangle |S_k\rangle$, where each $|S_k\rangle$ now resides on $\mathcal{H}_{\Sigma'} \otimes \mathcal{H}_S$ and $\mathcal{H}_{\Sigma'}$ is a second Hilbert space of dimension |Σ|. This operation always exists, because it is defined by Kraus operators $\{K_m\}$ that satisfy the completeness relation $\sum_m K_m^\dagger K_m = \mathbb{1}$. The subsystem $\mathcal{H}_\Sigma$ is then emitted as output. Measurement of $\mathcal{H}_\Sigma$ in the |r〉 basis leads to a classical output r whose statistics coincide with those of its classical counterpart, x1. Finally, the remaining subsystem $\mathcal{H}_{\Sigma'} \otimes \mathcal{H}_S$ is retained as the initial condition of the quantum ɛ-machine at the next time step.

A circuit representation of the protocol is documented in Fig. 3. The first step does not increase system entropy because entropy is conserved under addition of pure ancilla, while $\langle S_j | S_k \rangle = \langle S'_j | S'_k \rangle$ for all j,k. The emission of $\mathcal{H}_\Sigma$ leaves the ɛ-machine in state $\rho = \sum_k p_k |S_k\rangle\langle S_k|$, which has entropy Cq. Finally, the execution of the protocol does not require knowledge of the measurement result r (in fact, the quantum ɛ-machine executes correctly even if all outputs remain unmeasured, in which case it remains truly ignorant of which causal state it is in). Thus, the physical application of the above protocol generates correct prediction statistics without requiring more than Cq of memory.
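For the perturbed coin, the two claims about the first step can be checked numerically. This sketch is restricted to that single example and uses hypothetical names: it writes |S'j〉 = Σr √P(r|j) |r〉|Sr〉 (for the coin, emitting r always leads to Sr), confirms that the overlap of |S'0〉 and |S'1〉 equals that of |S0〉 and |S1〉, and confirms that tracing out the emitted register leaves the memory in $\sum_k p_k |S_k\rangle\langle S_k|$ with $p_0 = p_1 = 1/2$. It is a consistency check on one process, not a general implementation of the Kraus operation.

```python
from math import sqrt

def coin_states(p):
    """|S_j> in the |r>|k> basis ordered |00>,|01>,|10>,|11> (for the coin, k equals r)."""
    a, b = sqrt(1 - p), sqrt(p)
    return [[a, 0.0, 0.0, b], [b, 0.0, 0.0, a]]

def first_step_states(p):
    """|S'_j> = sum_r sqrt(P(r|j)) |r> (x) |S_r>, as 8-component vectors (index 4r + m)."""
    S = coin_states(p)
    prob = [[1 - p, p], [p, 1 - p]]  # prob[j][r] = P(emit r | state j)
    return [[sqrt(prob[j][r]) * amp for r in range(2) for amp in S[r]] for j in range(2)]

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def memory_after_emission(p):
    """Trace out the emitted register from (|S'_0><S'_0| + |S'_1><S'_1|) / 2."""
    rho = [[0.0] * 4 for _ in range(4)]
    for vec in first_step_states(p):
        for r in range(2):
            block = vec[4 * r: 4 * r + 4]  # components with emitted symbol r
            for m in range(4):
                for n in range(4):
                    rho[m][n] += 0.5 * block[m] * block[n]
    return rho

def target_memory(p):
    """sum_k p_k |S_k><S_k| with p_k = 1/2."""
    S = coin_states(p)
    return [[0.5 * (S[0][m] * S[0][n] + S[1][m] * S[1][n]) for n in range(4)] for m in range(4)]

p = 0.3
S, Sp = coin_states(p), first_step_states(p)
print(dot(S[0], S[1]), dot(Sp[0], Sp[1]))
```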

Additional information

How to cite this article: Gu, M. et al. Quantum mechanics can reduce the complexity of classical models. Nat. Commun. 3:762 doi: 10.1038/ncomms1761 (2012).

References

Crutchfield, J. P. The calculi of emergence: computation, dynamics and induction. Physica D 75, 11–54 (1994).

Ray, A. Symbolic dynamic analysis of complex systems for anomaly detection. Signal Process. 84, 1115–1130 (2004).

Crutchfield, J. P. & Young, K. Inferring statistical complexity. Phys. Rev. Lett. 63, 105–108 (1989).

Shalizi, C. R. & Crutchfield, J. P. Computational mechanics: pattern and prediction, structure and simplicity. J. Stat. Phys. 104, 817–879 (2001).

Holevo, A. S. Statistical problems in quantum physics. In Proceedings of the Second Japan–USSR Symposium on Probability Theory (eds Maruyama, G. & Prokhorov, J. V.) 104–119 (Springer-Verlag, Berlin, 1973) Lecture Notes in Mathematics, vol. 330.

Doob, J. L. Stochastic Processes (Wiley, New York, 1953).

Crutchfield, J. P. & Feldman, D. P. Regularities unseen, randomness observed: levels of entropy convergence. Chaos 13, 25–54 (2003).

Grassberger, P. Toward a quantitative theory of self-generated complexity. Int. J. Theor. Phys. 25, 907–938 (1986).

Crutchfield, J. P. & Feldman, D. P. Statistical complexity of simple one-dimensional spin systems. Phys. Rev. E 55, R1239–R1242 (1997).

Tononi, G., Sporns, O. & Edelman, G. M. A measure for brain complexity: relating functional segregation and integration in the nervous system. Proc. Natl Acad. Sci. 91, 5033–5037 (1994).

Crutchfield, J. P., Ellison, C. J. & Mahoney, J. R. Time's barbed arrow: irreversibility, crypticity, and stored information. Phys. Rev. Lett. 103, 094101 (2009).

Shalizi, C. R., Shalizi, K. L. & Haslinger, R. Quantifying self-organization with optimal predictors. Phys. Rev. Lett. 93, 118701 (2004).

Li, C. B., Yang, H. & Komatsuzaki, T. Multiscale complex network of protein conformational fluctuations in single-molecule time series. Proc. Natl Acad. Sci. 105, 536–541 (2008).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information (Cambridge University Press, Cambridge, 2000).

Shannon, C. E. Prediction and entropy of printed English. Bell Syst. Tech. J. 30, 50–64 (1951).

Benenti, G., Casati, G. & Strini, G. Principles of Quantum Computation and Information, Vol. II (World Scientific, 2007).

Bostroem, K. & Felbinger, T. Lossless quantum data compression and variable-length coding. Phys. Rev. A 65, 032313 (2002).

Acknowledgements

M.G. thanks C. Weedbrook, H. Wiseman, M. Hayashi, W. Son, T. Fritz, J. Thompson and K. Modi for helpful discussions. M.G. and E.R. are supported by the National Research Foundation and Ministry of Education, in Singapore. K.W. is funded through EPSRC grant EP/E501214/1. V.V. thanks EPSRC, QIP IRC, Royal Society and the Wolfson Foundation, National Research Foundation (Singapore) and the Ministry of Education (Singapore) for financial support.

Author information

Contributions

M.G., K.W., E.R. and V.V. conceived the idea. M.G. and K.W. derived the technical results. M.G. prepared the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

About this article

Cite this article

Gu, M., Wiesner, K., Rieper, E. et al. Quantum mechanics can reduce the complexity of classical models. Nat Commun 3, 762 (2012). https://doi.org/10.1038/ncomms1761
