Abstract

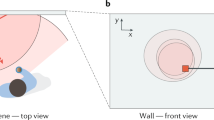

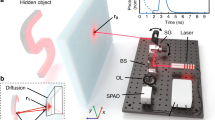

How to image objects that are hidden from a camera’s view is a problem of fundamental importance to many fields of research[1–20], with applications in robotic vision, defence, remote sensing, medical imaging and autonomous vehicles. Non-line-of-sight (NLOS) imaging at macroscopic scales has been demonstrated by scanning a visible surface with a pulsed laser and a time-resolved detector[14–19]. Whereas light detection and ranging (LIDAR) systems use such measurements to recover the shape of visible objects from direct reflections[21–24], NLOS imaging reconstructs the shape and albedo of hidden objects from multiply scattered light. Despite recent advances, NLOS imaging has remained impractical owing to the prohibitive memory and processing requirements of existing reconstruction algorithms and the extremely weak signal of multiply scattered light. Here we show that a confocal scanning procedure can address these challenges by facilitating the derivation of the light-cone transform to solve the NLOS reconstruction problem. This method requires far less computation and memory than previous reconstruction methods and images hidden objects at unprecedented resolution. Confocal scanning also provides a sizeable increase in signal and range when imaging retroreflective objects. We quantify the resolution bounds of NLOS imaging, demonstrate its potential for real-time tracking and derive efficient algorithms that incorporate image priors and a physically accurate noise model. Additionally, we describe successful outdoor experiments of NLOS imaging under indirect sunlight.
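
In a confocal scan the laser and the detector are aimed at the same wall point (x', y'), so, once the laser-to-wall and wall-to-detector legs are removed, light returning from a hidden point (x, y, z) at distance z from the wall arrives at a time t satisfying (ct/2)² = (x' - x)² + (y' - y)² + z². Substituting v = (ct/2)² and u = z² gives v - u = (x' - x)² + (y' - y)²: every hidden point traces the same paraboloid, merely shifted, and this shift-invariance is what allows the forward model to be written as a convolution. The minimal numerical check below illustrates only this change of variables, assuming idealized point scatterers on a 1D scan line (y' = y = 0); it ignores radiometric falloff and resampling onto a regular grid, and the helper names are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)


def confocal_arrival_time(xp, yp, point):
    """Round-trip time wall point (xp, yp, 0) -> hidden point -> same wall point.

    Assumes the laser-to-wall and wall-to-detector legs are already removed.
    """
    x, y, z = point
    r = np.sqrt((xp - x) ** 2 + (yp - y) ** 2 + z ** 2)
    return 2.0 * r / C


def to_light_cone_coords(t, z):
    """Hypothetical helper: change of variables v = (c t / 2)^2, u = z^2."""
    return (C * t / 2.0) ** 2, z ** 2


def locus(point, xp):
    """Measurement locus of one hidden point along the scan, expressed as v - u."""
    t = confocal_arrival_time(xp, 0.0, point)
    v, u = to_light_cone_coords(t, point[2])
    return v - u


xp = np.linspace(-1.0, 1.0, 201)  # 1D confocal scan line on the wall (m), y' = 0

# In raw time, the shape of the curve depends on the depth z ...
t_near = confocal_arrival_time(xp, 0.0, (0.0, 0.0, 0.5))
t_far = confocal_arrival_time(xp, 0.0, (0.0, 0.0, 1.5))
shape_near = C * (t_near - t_near.min())  # excess round-trip path length (m)
shape_far = C * (t_far - t_far.min())
print(np.allclose(shape_near, shape_far))  # False: hyperbolas of different width

# ... but in (v, u) every point traces the same parabola, only shifted.
print(np.allclose(locus((0.0, 0.0, 0.5), xp), xp ** 2))          # True
print(np.allclose(locus((0.0, 0.0, 1.5), xp), xp ** 2))          # True
print(np.allclose(locus((0.3, 0.0, 0.8), xp), (xp - 0.3) ** 2))  # True
```

Because the locus no longer depends on depth except through a shift, a single kernel describes every hidden point, which is the property a convolution-based reconstruction needs.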
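
The abstract attributes the method's reduced computational and memory cost to the light-cone transform. Once a forward model is a shift-invariant convolution, inverting it becomes a deconvolution that FFTs handle in O(N log N) time, and a Wiener filter (Wiener's 1949 monograph appears in the reference list) is a standard closed-form choice for that step. The sketch below is a generic 1D circular Wiener deconvolution, not the authors' 3D implementation (their MATLAB code is distributed with the Supplementary Information); the kernel, the sparse test signal and the scalar noise-to-signal ratio `nsr` are illustrative assumptions.

```python
import numpy as np


def circular_convolve(x, h):
    """Forward model y = h (*) x with circular boundary conditions, via the FFT."""
    return np.real(np.fft.ifft(np.fft.fft(x) * np.fft.fft(h, n=len(x))))


def wiener_deconvolve(y, h, nsr):
    """Closed-form Wiener estimate of x from y = h (*) x (circular convolution).

    nsr is an assumed scalar noise-to-signal power ratio; larger values damp
    the frequencies where the kernel's response |H| is weak.
    """
    H = np.fft.fft(h, n=len(y))  # zero-pads the kernel to the signal length
    X = np.conj(H) * np.fft.fft(y) / (np.abs(H) ** 2 + nsr)
    return np.real(np.fft.ifft(X))


x_true = np.zeros(128)
x_true[[20, 50, 51, 90]] = [1.0, 0.5, 0.8, 0.3]  # sparse stand-in for a hidden scene
h = np.array([0.6, 0.3, 0.1])                   # hypothetical blur kernel (|H| >= 0.2)

y = circular_convolve(x_true, h)
x_hat = wiener_deconvolve(y, h, nsr=1e-6)
print(np.max(np.abs(x_hat - x_true)) < 1e-3)  # True: near-exact recovery without noise
```

With noisy measurements, raising `nsr` trades resolution for noise suppression; the iterative algorithms with image priors and a physically accurate noise model mentioned in the abstract go beyond this single closed-form step.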
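
The abstract also reports a sizeable gain in signal and range for retroreflective objects. A simplified way to see why, stated here as an assumption rather than the paper's full radiometric model: light sent from the wall to a diffuse hidden object spreads out on both the outgoing and the return leg, so the return falls off roughly as 1/r⁴, whereas a retroreflector sends light back toward the illuminated wall point, which is exactly where a confocal detector is looking, so the return falls off roughly as 1/r². The arithmetic below compares the two assumed falloffs and the resulting range for a fixed detection threshold.

```python
# Assumed falloff exponents (simplified radiometry, not the paper's full model)
DIFFUSE_EXPONENT = 4  # ~1/r**4 for a diffuse hidden object
RETRO_EXPONENT = 2    # ~1/r**2 for a retroreflector under confocal scanning


def relative_signal(r, r_ref, exponent):
    """Signal at distance r relative to distance r_ref for a 1/r**exponent falloff."""
    return (r_ref / r) ** exponent


def range_gain(signal_gain, exponent):
    """Factor by which the detectable range grows when the signal budget grows by signal_gain.

    With signal ~ P / r**n and a fixed detection threshold, r_max ~ P**(1/n).
    """
    return signal_gain ** (1.0 / exponent)


for r in (1.0, 2.0, 4.0):
    diffuse = relative_signal(r, 1.0, DIFFUSE_EXPONENT)
    retro = relative_signal(r, 1.0, RETRO_EXPONENT)
    print(f"r = {r:.0f} x r_ref: diffuse {diffuse:.4f}, retroreflective {retro:.4f}")

print(f"100x more signal -> diffuse range x{range_gain(100.0, DIFFUSE_EXPONENT):.2f}, "
      f"retroreflective range x{range_gain(100.0, RETRO_EXPONENT):.2f}")
```

Under these assumed exponents, doubling the distance costs a factor of 16 in signal for a diffuse object but only a factor of 4 for a retroreflector, and a 100-fold larger signal budget extends the diffuse range by about 3.2× versus 10× for a retroreflector.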

References

1. Freund, I. Looking through walls and around corners. Physica A 168, 49–65 (1990)

2. Wang, L., Ho, P. P., Liu, C., Zhang, G. & Alfano, R. R. Ballistic 2-D imaging through scattering walls using an ultrafast optical Kerr gate. Science 253, 769–771 (1991)

3. Huang, D. et al. Optical coherence tomography. Science 254, 1178–1181 (1991)

4. Bertolotti, J. et al. Non-invasive imaging through opaque scattering layers. Nature 491, 232–234 (2012)

5. Katz, O., Heidmann, P., Fink, M. & Gigan, S. Non-invasive single-shot imaging through scattering layers and around corners via speckle correlations. Nat. Photon. 8, 784–790 (2014)

6. Katz, O., Small, E. & Silberberg, Y. Looking around corners and through thin turbid layers in real time with scattered incoherent light. Nat. Photon. 6, 549–553 (2012)

7. Strekalov, D. V., Sergienko, A. V., Klyshko, D. N. & Shih, Y. H. Observation of two-photon “ghost” interference and diffraction. Phys. Rev. Lett. 74, 3600–3603 (1995)

8. Bennink, R. S., Bentley, S. J. & Boyd, R. W. “Two-photon” coincidence imaging with a classical source. Phys. Rev. Lett. 89, 113601 (2002)

9. Sen, P. et al. Dual photography. ACM Trans. Graph. 24, 745–755 (2005)

10. Bouman, K. L. et al. In IEEE 16th Int. Conference on Computer Vision 2270–2278 (IEEE, 2017); http://openaccess.thecvf.com/content_iccv_2017/html/Bouman_Turning_Corners_Into_ICCV_2017_paper.html

11. Klein, J., Peters, C., Martin, J., Laurenzis, M. & Hullin, M. B. Tracking objects outside the line of sight using 2D intensity images. Sci. Rep. 6, 32491 (2016)

12. Chan, S., Warburton, R. E., Gariepy, G., Leach, J. & Faccio, D. Non-line-of-sight tracking of people at long range. Opt. Express 25, 10109–10117 (2017)

13. Gariepy, G., Tonolini, F., Henderson, R., Leach, J. & Faccio, D. Detection and tracking of moving objects hidden from view. Nat. Photon. 10, 23–26 (2016)

14. Kirmani, A., Hutchison, T., Davis, J. & Raskar, R. In IEEE 12th Int. Conference on Computer Vision 159–166 (IEEE, 2009); http://ieeexplore.ieee.org/document/5459160/

15. Velten, A. et al. Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat. Commun. 3, 745 (2012)

16. Buttafava, M., Zeman, J., Tosi, A., Eliceiri, K. & Velten, A. Non-line-of-sight imaging using a time-gated single photon avalanche diode. Opt. Express 23, 20997–21011 (2015)

17. Gupta, O., Willwacher, T., Velten, A., Veeraraghavan, A. & Raskar, R. Reconstruction of hidden 3D shapes using diffuse reflections. Opt. Express 20, 19096–19108 (2012)

18. Wu, D. et al. In Computer Vision – ECCV 2012 (eds Fitzgibbon, A. et al.) 542–555 (Springer, 2012); https://link.springer.com/chapter/10.1007/978-3-642-33718-5_39

19. Tsai, C.-Y., Kutulakos, K. N., Narasimhan, S. G. & Sankaranarayanan, A. C. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 7216–7224 (IEEE, 2017); http://openaccess.thecvf.com/content_cvpr_2017/html/Tsai_The_Geometry_of_CVPR_2017_paper.html

20. Heide, F., Xiao, L., Heidrich, W. & Hullin, M. B. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 3222–3229 (IEEE, 2014); http://openaccess.thecvf.com/content_cvpr_2014/html/Heide_Diffuse_Mirrors_3D_2014_CVPR_paper.html

21. Schwarz, B. LIDAR: mapping the world in 3D. Nat. Photon. 4, 429–430 (2010)

22. McCarthy, A. et al. Kilometer-range, high resolution depth imaging via 1560 nm wavelength single-photon detection. Opt. Express 21, 8904–8915 (2013)

23. Kirmani, A. et al. First-photon imaging. Science 343, 58–61 (2014)

24. Shin, D. et al. Photon-efficient imaging with a single-photon camera. Nat. Commun. 7, 12046 (2016)

25. Abramson, N. Light-in-flight recording by holography. Opt. Lett. 3, 121–123 (1978)

26. Velten, A. et al. Femto-photography: capturing and visualizing the propagation of light. ACM Trans. Graph. 32, 44 (2013)

27. O’Toole, M. et al. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 2289–2297 (IEEE, 2017); http://openaccess.thecvf.com/content_cvpr_2017/html/OToole_Reconstructing_Transient_Images_CVPR_2017_paper.html

28. Minkowski, H. Raum und Zeit. Phys. Z. 10, 104–111 (1909)

29. Wiener, N. Extrapolation, Interpolation, and Smoothing of Stationary Time Series Vol. 7 (MIT Press, 1949)

30. Pharr, M., Jakob, W. & Humphreys, G. Physically Based Rendering: From Theory to Implementation 3rd edn (Morgan Kaufmann, 2017)

Acknowledgements

We thank K. Zang for his expertise and advice on the SPAD sensor. We also thank B. A. Wandell, J. Chang, I. Kauvar and N. Padmanaban for reviewing the manuscript. M.O’T. is supported by the Government of Canada through the Banting Postdoctoral Fellowships programme. D.B.L. is supported by a Stanford Graduate Fellowship in Science and Engineering. G.W. is supported by a National Science Foundation CAREER award (IIS 1553333), a Terman Faculty Fellowship and by the KAUST Office of Sponsored Research through the Visual Computing Center CCF grant.

Author information

Contributions

M.O’T. conceived the method, developed the experimental setup, performed the indoor measurements and implemented the LCT reconstruction procedure. M.O’T. and D.B.L. performed the outdoor measurements. D.B.L. applied the iterative LCT reconstruction procedures shown in Supplementary Information. G.W. supervised all aspects of the project. All authors took part in designing the experiments and writing the paper and Supplementary Information.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Additional information

Reviewer Information: Nature thanks D. Faccio, V. Goyal and M. Laurenzis for their contribution to the peer review of this work.

Supplementary information

Supplementary Information

This file contains Supplementary Methods, a Supplementary Discussion, Supplementary Results and Derivations supporting the main manuscript. (PDF 45348 kb)

Supplementary Information

This file contains source code and data for confocal NLOS imaging: the MATLAB code and data for reproducing the LCT and back-projection results that appear in the manuscript and Supplementary Information. (ZIP 30960 kb)

Confocal NLOS Imaging Based on the Light Cone Transform

High-level overview of confocal NLOS imaging. (MP4 28214 kb)

Confocal NLOS Imaging Based on the Light Cone Transform

A compilation of results from the manuscript and Supplementary Information. (MP4 24394 kb)

About this article

Cite this article

O’Toole, M., Lindell, D. & Wetzstein, G. Confocal non-line-of-sight imaging based on the light-cone transform. Nature 555, 338–341 (2018). https://doi.org/10.1038/nature25489

This article is cited by

- Two-edge-resolved three-dimensional non-line-of-sight imaging with an ordinary camera. Nature Communications (2024)
- Research Advances on Non-Line-of-Sight Imaging Technology. Journal of Shanghai Jiaotong University (Science) (2024)
- Ultrasonic barrier-through imaging by Fabry-Perot resonance-tailoring panel. Nature Communications (2023)
- Non-line-of-sight imaging with arbitrary illumination and detection pattern. Nature Communications (2023)
- Tracing multiple scattering trajectories for deep optical imaging in scattering media. Nature Communications (2023)