Abstract

Undersampling and pixelation affect a number of imaging systems, limiting the resolution of the acquired images, which becomes particularly significant for wide-field microscopy applications. Various super-resolution techniques have been implemented to mitigate this resolution loss by utilizing sub-pixel displacements in the imaging system, achieved, for example, by shifting the illumination source, the sensor array and/or the sample, followed by digital synthesis of a smaller effective pixel by merging these sub-pixel-shifted low-resolution images. Herein, we introduce a new pixel super-resolution method that is based on wavelength scanning and demonstrate that as an alternative to physical shifting/displacements, wavelength diversity can be used to boost the resolution of a wide-field imaging system and significantly increase its space-bandwidth product. We confirmed the effectiveness of this new technique by improving the resolution of lens-free as well as lens-based microscopy systems and developed an iterative algorithm to generate high-resolution reconstructions of a specimen using undersampled diffraction patterns recorded at a few wavelengths covering a narrow spectrum (10–30 nm). When combined with a synthetic-aperture-based diffraction imaging technique, this wavelength-scanning super-resolution approach can achieve a half-pitch resolution of 250 nm, corresponding to a numerical aperture of ~1.0, across a large field of view (>20 mm²). We also demonstrated the effectiveness of this approach by imaging various biological samples, including blood and Papanicolaou smears. Compared with displacement-based super-resolution techniques, wavelength scanning brings uniform resolution improvement in all directions across a sensor array and requires significantly fewer measurements. This technique would broadly benefit wide-field imaging applications that demand larger space-bandwidth products.

Introduction

High-resolution imaging across a wide field of view (FOV) is essential for various applications in different fields and requires imaging systems to have large space-bandwidth products. Ever since the widespread adoption of CCDs (charge-coupled devices) and complementary metal-oxide semiconductor (CMOS)-based image sensors to capture digital images, a tremendous amount of research and development effort has been devoted to optics, semiconductor technologies and signal processing to create high-resolution and wide-field imaging and microscopy systems. In conventional lens-based optical imaging designs, a large space-bandwidth product can be achieved by using higher magnification and larger lenses, and the image sensors are accordingly made larger in area with higher pixel counts. Another approach is to make image sensors with a smaller pixel pitch while still maintaining a relatively large active area. However, both of these approaches have drawbacks: Larger optical components make the imaging system bulky and significantly more expensive; in contrast, physically reducing the pixel size sacrifices the signal-to-noise ratio because a smaller light sensing area is made available for each pixel, reducing the external quantum efficiency of the imager chip1.

As an alternative, the optical signal processing community has provided a powerful framework, termed pixel super-resolution2, 3, 4, 5, 6, to obtain a high-resolution image from a series of low-resolution (that is, undersampled) images. Pixel super-resolution was originally developed in lens-based, point-to-point projection imaging systems2, 3, 4, 5, 6 and was later applied to lens-free and holographic imaging techniques7, 8, 9, 10, 11, 12 to significantly enhance the space-bandwidth product of the reconstructed images using both CCD and CMOS imager chips. In either implementation, lens-based or lens-free, this super-resolution framework requires low-resolution undersampled measurements to have sub-pixel shifts with respect to each other so that new and independent information can be exploited at each raw measurement (even though pixelated) to digitally synthesize a much smaller effective pixel size for the reconstructed image.

Herein, we introduce a fundamentally new pixel super-resolution method that utilizes wavelength scanning to significantly improve the resolution of an undersampled or pixelated imaging system, without the use of any lateral shifts or displacements. In this technique, the specimen is sequentially illuminated at a few wavelengths that are sampled from a rather narrow spectral range of 10–30 nm. Compared with sub-pixel displacement or lateral shift-based super-resolution techniques, wavelength scanning introduces a uniform resolution improvement across all the directions on the sample plane and requires significantly fewer raw measurements to be made. Making fewer measurements without sacrificing performance could greatly benefit high-speed wide-field imaging, field-portable microscopy and telemedicine applications, which are all sensitive to data transmission and storage.

We demonstrated the effectiveness of this new wavelength-scanning-based pixel super-resolution approach using lens-free holographic microscopy (Figure 1) to improve the resolution and the effective numerical aperture (NA) of a unit magnification imaging system by a factor of ~4 in all directions. Using 12 different illumination wavelengths between 480 and 513 nm, we achieved a half-pitch resolution of ~250 nm and an effective NA of ~1.0 across a large FOV of >20 mm², which constitutes a space-bandwidth product of >1 billion. At the heart of these results is an iterative pixel super-resolution algorithm that was developed to obtain high-resolution complex (that is, phase and amplitude) reconstructions from undersampled (that is, pixelated) lens-free digital holograms acquired at different wavelengths.

Figure 1. Optical setup of wavelength-scanning pixel super-resolution. (a) A lens-free holographic on-chip microscope using wavelength-scanning pixel super-resolution. A fiber-coupled tunable light source is placed above the object. When performing pixel super-resolution, the wavelength is scanned within a spectral range of 10–30 nm. Multi-height and synthetic aperture imaging configurations are also integrated into this setup to enable phase retrieval. (b) Lens-free holograms at different wavelengths before and after digital sampling at the image sensor plane.

In previous work, wavelength diversity in illumination has been mainly utilized for two general purposes. The first has been to obtain color or spectral information of a sample13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25; wavelength-dependent transmission, absorption or scattering features of the specimen enhance the contrast of the image and might reveal chemical and/or physical properties of biological samples. The second purpose has been to recover the phase of the optical wavefront in digital holography. Previous studies26, 27, 28, 29 have demonstrated a phase retrieval technique by tuning the wavelength of the illumination for non-dispersive objects. This wavelength-diversity-based phase retrieval approach requires the illumination to be tuned over a rather large spectral range26, 27, 28, 29 (that is, >60 nm) and assumes that the specimen maintains similar transmission properties across such a large bandwidth. Recently, a means of using wavelength diversity to provide a modest (for example, ~16%) improvement in resolution has also been reported30. However, this method also assumes that the transmission properties of the specimen remain unchanged across an even larger spectral range of >400 nm (that is, 460–870 nm), a condition that would not be satisfied for realistic samples, including, for example, pathology slides and most biological specimens.

In addition to significantly improving the resolution and the space-bandwidth product of the imaging system, our wavelength-scanning pixel super-resolution approach over a narrow band also helps in robust phase unwrapping to determine the optical path length differences between the sample and surrounding medium accurately31, 32, 33. For samples in which the optical path length is longer than the wavelength, the obtained phase map will be wrapped. In particular, when the object has sharp boundaries, such errors may be difficult to correct using state-of-the-art phase unwrapping techniques based on a single wavelength reconstruction34, 35, 36, 37. By making use of all the illumination wavelengths in our super-resolution approach, we also demonstrated robust phase unwrapping in our high-resolution microscopic phase images, correctly revealing the optical path length information of the samples.

In addition to lens-free holographic imaging techniques, we experimentally demonstrated that the same wavelength-scanning-based super-resolution framework can also be applied to improve the resolution of lens-based imaging systems with the introduction of a slight defocus, making this work broadly applicable to various coherent or partially coherent wide-field imaging modalities that are limited by pixelation or undersampling. Therefore, we believe that this new wavelength-scanning-based super-resolution technique would largely benefit wide-field and high-throughput microscopy applications that require enhanced space-bandwidth products.

Materials and methods

Optical setup

As depicted in Figure 1, the optical setup of the lens-free microscope consists of three major components: a light source, an image sensor array, and a specimen. A fiber-coupled, wavelength-tunable light source (WhiteLase-Micro, model VIS, Fianium Ltd, Southampton, UK) is used to perform the wavelength scanning. During the imaging process, the central wavelength of the source is scanned within a spectral range of 10–30 nm (for example, from 498 to 510 nm) with a step size of ~3 nm. The spectral linewidth of illumination at each wavelength is ~2 nm, and the power of the light source is adjusted to ~20 μW. The image sensor chip is a color CMOS sensor chip with a pixel size of 1.12 μm manufactured for cellphone camera modules (IU081, Sony Corporation, Tokyo, Japan). During the imaging process, the specimen is mounted on a transparent substrate and placed 100–500 μm above the image sensor chip. We merged our wavelength-scanning-based pixel super-resolution approach with both multi-height11 and synthetic aperture imaging12 configurations to obtain phase-retrieved, high-resolution reconstructions of the specimen. For synthetic-aperture-based imaging12, the fiber outlet of the light source is mounted on a rotational arm (PRM1Z8, Thorlabs, Newton, NJ, USA), and the image sensor is placed on a stage that can rotate over a horizontal plane. Therefore, the incident light can be set to arbitrary illumination angles, which is required for the synthetic aperture approach. For multi-height-based phase retrieval9, 11, the incremental height change between the image sensor and the specimen is enabled by a mechanical positioning stage (MAX606, Thorlabs). The image sensor is mounted on this mechanical stage, whereas the specimen is held by a three-dimensional-printed sample holder. After completing image capture for each height, the stage lowers the image sensor by 10–15 μm on average before the image capture for the next height starts. 
During the imaging process, all the necessary steps, including the wavelength scanning of the light source, multi-height and synthetic-aperture-related scans and data acquisition using the image sensor chip are automated by a custom-written LabVIEW code (Version 2011, National Instruments, Austin, TX, USA).

Wavelength calibration and dispersion compensation

Wavelength calibration of our light source is achieved using an optical spectrum analyzer (HR2000+, Ocean Optics, Amersham, UK). The intensity-weighted average wavelength of each measured spectrum is considered as our illumination wavelength. To achieve optimal resolution, the refractive index of the glass substrate (100 μm, N-BK7, Schott AG, Mainz, Germany) at each wavelength is also corrected using the dispersion formula for borosilicate glass.
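The two calibration steps above can be sketched in a few lines. This is only an illustration: the function names are hypothetical, and the text does not say which dispersion formula was used, so the Sellmeier form with the commonly tabulated N-BK7 coefficients is assumed here.

```python
import numpy as np

def weighted_mean_wavelength(wavelengths_nm, intensities):
    """Intensity-weighted average wavelength of a measured spectrum."""
    w = np.asarray(wavelengths_nm, dtype=float)
    s = np.asarray(intensities, dtype=float)
    return float(np.sum(w * s) / np.sum(s))

# Assumed: Sellmeier coefficients commonly tabulated for N-BK7
# (B dimensionless, C in square micrometers).
SELLMEIER_B = (1.03961212, 0.231792344, 1.01046945)
SELLMEIER_C = (0.00600069867, 0.0200179144, 103.560653)

def n_bk7(wavelength_nm):
    """Refractive index of N-BK7 from the (assumed) Sellmeier equation."""
    lam2 = (wavelength_nm * 1e-3) ** 2  # wavelength squared, in um^2
    n_squared = 1.0 + sum(b * lam2 / (lam2 - c)
                          for b, c in zip(SELLMEIER_B, SELLMEIER_C))
    return float(np.sqrt(n_squared))

# Example: a symmetric, ~2 nm wide spectrum centered at 504 nm
grid = np.linspace(500.0, 508.0, 81)
spectrum = np.exp(-((grid - 504.0) / 1.0) ** 2)
center_nm = weighted_mean_wavelength(grid, spectrum)
index_at_center = n_bk7(center_nm)
```

Over a 498–510 nm scan the index changes only in the fourth decimal place, but that small change still alters the optical path through a ~100 μm substrate, which is presumably why the correction is applied at each wavelength.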

Sample preparation

The grating lines used for resolution quantification are fabricated on a ~100 μm glass slide (N-BK7, Schott AG) using focused ion beam milling. Unstained Papanicolaou (Pap) smear slides are prepared through the ThinPrep method (Hologic, Inc., Marlborough, MA, USA). The blood smear samples are prepared using EDTA (ethylenediaminetetraacetic acid) anticoagulated human blood and stained with Wright’s stain38.

Mathematical formalism of wavelength-scanning pixel super-resolution

We assume that the specimen is a thin object mounted on a plane parallel to the image sensor chip and that the specimen is sequentially illuminated by multiple wavelengths {λk}. At a given wavelength λk, the object wave can be written as ok(x, y)=1+sk(x, y), where sk(x, y) represents the scattered object wave, immediately at the exit of the object plane (z=0, in Figure 1a). The two-dimensional Fourier transform of ok(x, y) can be written as Ok(fx, fy)=δ(fx, fy)+Sk(fx, fy). At the image sensor plane (z=z0 in Figure 1a), the Fourier transform of the intensity distribution, ik(x, y), can be written as (see Supplementary Information for details):

To simplify our notation, we hide the expression of the variables for spatial frequencies (fx, fy), and the superscript ‘−’ represents (−fx, −fy). On the right-hand side of Equation (1), the first item, δ, represents the background intensity; the second and third items are conjugate holographic terms, which represent the interference of the scattered object wave with the background wave at the sensor plane. The fourth item is the self-interference term, which can be considered negligible for weakly scattering objects. The expression for Tk can be written as follows:

where Hk(fx, fy) is the free space transfer function, and the frequency shifts fx,k and fy,k are determined by the illumination wavelength and the incident angle (refer to the Supplementary Information for details). After the object intensity is sampled by an image sensor array with a pixel pitch of Δx and Δy, the discrete Fourier transform of the sensor’s output can be expressed as follows39:

where u and v are integers and fx and fy are discrete spatial frequency values. Note that Ipix,k(fx, fy)=Ik(fx, fy)·Pk(fx, fy), where Pk(fx, fy) represents the Fourier transform of the pixel function, that is, the two-dimensional responsivity distribution40 within each pixel: pk(x, y). Variables u and v represent the order of spatial aliasing due to pixelation, and (u, v)=(0,0) corresponds to the non-aliased real (that is, target) signal. The periodic nature of the discrete Fourier transform enables us to extend the expression of Isampled, k to a broader frequency space by upsampling (Figure 2). Based on these definitions, we can express the undersampled or pixelated lens-free hologram at a given wavelength λk as follows:

Figure 2. Reconstruction algorithm for wavelength-scanning pixel super-resolution, integrated with multi-height or synthetic-aperture-based lens-free phase retrieval. Refer to the Materials and Methods section for further details. The fourth column shows super-resolved and phase-retrieved reconstruction of the specimen. FFT, fast Fourier transform; IFFT, inverse fast Fourier transform.

The non-aliased target signal ok(x, y) or its spatial Fourier spectrum can be obtained under (u, v)=(0,0), that is, δ00+S00, k, which can be written as follows:

On the left side of Equation (5), we retain the pixel function P00, k, which can be removed later in the last step of the image reconstruction, using, for example, spatial deconvolution with a Wiener filter41, as illustrated in ref. 40. Equation (5) also shows that to obtain the non-aliased object at (u, v)=(0,0), one needs to eliminate or subtract four terms from the upsampled and back-propagated holographic term. To this end, the first item to eliminate is the twin image noise, a characteristic artifact of in-line holography. The second term in Equation (5), whose summation runs over the aliased orders (u≠0, v≠0), represents the effects of spatial aliasing and undersampling for both the real and twin image terms. The third item, which contains the background terms δuv in the summation, is the periodic background artifact generated during the upsampling process, and the last item is the self-interference term and its upsampling-related artifacts. Starting with the next sub-section, we will discuss a two-stage reconstruction algorithm for eliminating all four of these items listed on the right side of Equation (5) using wavelength scanning to enable super-resolved reconstructions of complex (that is, phase and amplitude) object functions.

Reconstruction Stage 1: generation of a high-resolution initial guess of the specimen using wavelength diversity

As depicted in Figure 2, the reconstruction of the specimen image involves two stages. First, it should be noted that in Equation (5) the transfer functions that modulate the aliased terms (u≠0, v≠0) have complex values with unit amplitude, and their phases are highly sensitive to changes in wavelength (see the Supplementary Information for details). Therefore, when the illumination wavelength (λk) is scanned over K different wavelengths that are uniformly spread across a narrow bandwidth, this set of functions can be considered rotating unit vectors, and by summing all these rotating vectors as a function of wavelength, we obtain the following:

This expression indicates that by summing all the back propagations at different wavelengths (for example, over a narrow spectral range of 10–30 nm), the reconstructed image, that is, the non-aliased (u, v)=(0,0) signal, can be significantly enhanced, whereas the spatial aliasing and undersampling-related terms with (u≠0, v≠0) will be considerably suppressed. Therefore, in this first stage of our reconstruction process, we generate a high-resolution initial guess of the specimen by summing all the upsampled and back-propagated raw measurements, that is, low-resolution diffraction patterns. We subtract the artifact items {δuv (u≠0, v≠0)} before the back-propagation step to create a cleaner image.

It should be noted that modifying Equation (6) into a weighted average at each spatial frequency point could achieve better suppression of spatial aliasing and undersampling-related artifacts. However, using our current computation platform, which is based on a central processing unit, the search for optimal weighting factors at each frequency point will significantly increase the total computation time. Therefore, in this proof-of-concept implementation, we choose a simpler summation approach to minimize the computation time for the generation of the initial object guess. The spatial aliasing and undersampling-related artifacts of this initial guess will be further eliminated and cleaned up during the second stage of our algorithm, as will be detailed next.
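The rotating-unit-vector argument behind Stage 1 can be illustrated with a toy calculation. The phases below are idealized assumptions chosen to make the effect obvious, not values taken from the actual transfer functions: the target term is given the same phase at every wavelength, and the aliased term is given a phase that steps evenly around the unit circle.

```python
import numpy as np

K = 12                      # number of illumination wavelengths
k = np.arange(K)

# Target (non-aliased) term: phasors stay aligned after back-propagation.
aligned = np.exp(1j * np.zeros(K))

# Aliased term: phase rotates with wavelength (idealized as K even steps
# around the full circle; real phases would only approximate this).
rotating = np.exp(1j * 2 * np.pi * k / K)

target_gain = abs(aligned.sum()) / K    # stays at 1: coherent sum
alias_gain = abs(rotating.sum()) / K    # collapses toward 0: vectors cancel
```

In practice the phases of the aliased terms do not cover the circle this evenly, so the cancellation is only partial. This is consistent with the text treating the Stage 1 sum as an initial guess that Stage 2 then refines.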

Reconstruction Stage 2: multi-wavelength-based iterative pixel super-resolution and phase retrieval

The second stage of our numerical reconstruction involves an iterative algorithm, which contains four sub-steps in each iteration:

(1) Knowing each raw measurement’s corresponding wavelength and incidence angle, we apply the corresponding plane wave illumination on the initial guess of the specimen (from Stage 1, discussed above) and propagate the optical field from the object plane to the image sensor plane using the angular spectrum approach42.

(2) The amplitude of the high-resolution field on the image sensor plane is updated using the low-resolution measurement at the corresponding wavelength. To this end, the intensity of the high-resolution field is convolved with the image sensor’s pixel function and downsampled to the same grid size as the pixelated raw measurement. The difference between the raw measurement and the downsampled intensity map is considered a low-resolution ‘correction’ map for each illumination wavelength. A high-resolution correction map can then be generated by taking the Kronecker product of this low-resolution map and the pixel function. To perform a smooth update, this high-resolution correction map is added to the high-resolution intensity distribution with a relaxation parameter, typically set to ~0.5 (see the Supplementary Information for details). After the smoothed update, a Wiener deconvolution filter that incorporates the image sensor’s noise level is applied to this updated intensity distribution. The square root of this filtered high-resolution intensity distribution is then applied to the amplitude of the field on the sensor plane while the phase map is kept unaltered.

(3) This updated field is then back-propagated to the object plane.

(4) The back-propagated field is used to update the transmission field on the object plane. This update is performed in the frequency domain (Figure 2) within a circular area whose center is determined by the corresponding illumination wavelength and angle. The radius of this circle is defined by the boundary within which all the spatial frequencies experience an attenuation of less than 3 dB after propagation in the spatial domain. This update on the object plane is also smoothed using a relaxation factor of ~0.5. After the update, the phase of the field on the object plane is converted to an optical path length map, and its amplitude is directly used as the transmission of the object.
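The four sub-steps above can be sketched for a single raw measurement as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: normal-incidence illumination, a uniform (box) pixel function, no Wiener deconvolution, and an object update over the whole spectrum instead of the 3 dB circular region. All function names are hypothetical.

```python
import numpy as np

def angular_spectrum(field, wavelength, dx, z):
    """Propagate a complex field by z (negative z back-propagates)."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=dx)
    fy = np.fft.fftfreq(ny, d=dx)
    FX, FY = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Evanescent components (arg <= 0) are simply discarded.
    H = np.where(arg > 0, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * H)

def downsample(intensity, factor):
    """Assumed box pixel function: average each factor x factor block."""
    ny, nx = intensity.shape
    blocks = intensity.reshape(ny // factor, factor, nx // factor, factor)
    return blocks.mean(axis=(1, 3))

def stage2_update(obj, raw, wavelength, dx, z, factor, relax=0.5):
    # (1) Propagate the current object guess to the sensor plane.
    field = angular_spectrum(obj, wavelength, dx, z)
    intensity = np.abs(field) ** 2
    # (2) Low-resolution correction map, upsampled with a Kronecker
    #     product against the (box) pixel function, then a relaxed update.
    correction = raw - downsample(intensity, factor)
    correction_hr = np.kron(correction, np.ones((factor, factor)))
    updated = np.maximum(intensity + relax * correction_hr, 0.0)
    field = np.sqrt(updated) * np.exp(1j * np.angle(field))  # keep phase
    # (3) Back-propagate the updated field to the object plane.
    back = angular_spectrum(field, wavelength, dx, -z)
    # (4) Relaxed update of the object estimate.
    return obj + relax * (back - obj)
```

A full cycle would call `stage2_update` once per raw measurement, passing that measurement's wavelength (and, in a fuller version, its illumination angle and height).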

The four steps described above are performed for every raw (that is, undersampled) measurement captured by the image sensor array. It is considered one iteration cycle when each one of the raw measurements has been used for amplitude update. Typically after 5–10 iteration cycles, the reconstruction converges. The convergence condition for the iteration is defined as follows43:

Here, the monitored quantity is the sum-squared error between the raw measurement and the downsampled intensity map43, ‘itr’ is the iteration number, and ɛ is a convergence constant determined by the noise level of the raw (that is, undersampled) measurements.
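Since the criterion involves the sum-squared error, the iteration number and a noise-dependent constant ɛ, one plausible reading is a threshold on the change in error between consecutive iteration cycles. The sketch below assumes that form; the function name and the exact comparison are illustrative and are not taken from the original equation.

```python
def has_converged(sse_history, eps):
    """Assumed criterion: the error changed by less than eps in the last cycle."""
    if len(sse_history) < 2:
        return False  # need at least two cycles to compare
    return abs(sse_history[-1] - sse_history[-2]) < eps

# Example: error per iteration cycle, flattening out after the third cycle
history = [10.0, 4.0, 3.9995]
done = has_converged(history, eps=1e-3)
```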

Phase retrieval using multi-height and synthetic aperture techniques

Multi-height9, 11, 44, 45, 46 and synthetic aperture12 techniques have been proven to be robust phase retrieval methods for lens-free on-chip imaging. In previously reported lens-free reconstructions9, 11, 12, pixel super-resolution and phase retrieval are carried out sequentially: at each height or illumination angle, lateral shift-based pixel super-resolution is first performed to obtain high-resolution diffraction patterns on the image sensor plane. These super-resolved diffraction patterns are then used by an iterative phase retrieval algorithm, in which wave propagations between the object plane and the image sensor plane are executed repeatedly9, 11, 12. However, in wavelength-scanning-based pixel super-resolution, raw measurements are essentially undersampled versions of different holograms. Therefore, we choose to use the same iterative algorithm detailed in the previous sub-section (that is, Reconstruction Stage 2) to realize resolution enhancement and phase retrieval altogether. More specifically, in the multi-height configuration, the specimen is illuminated sequentially at each wavelength, and the corresponding lens-free holograms are captured before the vertical scanning stage moves the sample or the image sensor to the next height. Each height will be labeled with index l; therefore, all the measurements {Isampled, k} and the corresponding transfer functions {Hk} and {Tuv, k} that are used in the previous derivations can be relabeled as {Isampled, kl}, {Hkl} and {Tuv, kl}, respectively. During the numerical reconstruction process, all the raw holograms are upsampled, back-propagated, and then summed together to generate the high-resolution initial guess at a given height. In Stage 2 of our reconstruction algorithm, the aforementioned four-step process is applied to each raw measurement. 
The same set of operations and processing also apply to the synthetic aperture technique12, except that index l now refers to each illumination angle instead of sample height.

In general, for pathology slides such as blood smears and Pap smears, the optical path length difference between the specimen (that is, biological tissue) and the medium (that is, air or the sealing glue) is rather small. Under these circumstances, phase unwrapping is not a concern; therefore, in the phase recovery process, we can use a scrambled order of {Isampled, kl} in each iteration cycle. However, when working with samples with larger optical path length differences, such as grating lines carved into a glass substrate, one extra step, that is, phase unwrapping, must be added after the reconstruction, and the order of iterations must be modified accordingly, which will be detailed in the next sub-section.

Multi-wavelength phase unwrapping

A robust phase unwrapping algorithm requires high-resolution and phase-retrieved reconstructions at multiple wavelengths; therefore, we divide the raw measurements into subsets, in which the wavelengths are identical or very similar (for example, Δλ≤5 nm), and perform the four-step reconstruction process previously discussed (as part of the Reconstruction Stage 2) on each subset separately. For example, reconstruction number 1 uses subset {Isampled, kl | k=1, l=1,…L}, No. 2 uses {Isampled, kl | k=2, l=1,…L} and so on. When the iterations for all these subsets are completed, we obtain high-resolution (that is, super-resolved) phase-retrieved reconstructions at multiple wavelengths, that is, {Ok}, whose phase maps {ϕk,wrapped} need unwrapping. Using these wrapped phase maps {ϕk,wrapped} at multiple wavelengths, we perform phase unwrapping to accurately reveal the optical path length differences between the specimen and the surrounding medium. Assuming that the optical path length difference is ΔL(x, y), the phase distribution at the object plane at each wavelength can be written as ϕk(x, y)=2π·ΔL(x, y)/λk. The wrapped phase can thus be expressed as ϕk,wrapped (x, y)=ϕk(x, y)±2Nπ, where −π<ϕk,wrapped≤π and N is an integer. These resulting wrapped phase maps {ϕk,wrapped} that are generated through super-resolved and phase-retrieved reconstructions at multiple wavelengths are then fed into an optimization algorithm47 that finds the optimum path length ΔLopt(x,y) at each spatial point (x,y) by minimizing a cost function defined as follows:

To avoid convergence to a local minimum and reduce the computation cost/time, we define a search range of [ΔL0−min{λk}/2, ΔL0+min{λk}/2], where ΔL0 is the initial guess of the optical path length:

where the total number of wavelengths (K) is typically 5–10. Within this search interval, we scan the values to find the optical path length ΔLopt(x, y) that minimizes the cost function, resulting in an unwrapped object phase image.
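The scan over the search interval can be sketched for a single spatial point as follows. Two things are assumptions here: the cost function (a sum of squared distances between measured and predicted unit phasors, which may differ from the one used in the original work) and the initial guess ΔL0, which is simply passed in as `dl0` instead of being computed.

```python
import numpy as np

def unwrap_opl(phi_wrapped, wavelengths, dl0, n_steps=2001):
    """Scan [dl0 - min(lambda)/2, dl0 + min(lambda)/2] for the best path length."""
    lam = np.asarray(wavelengths, dtype=float)
    phi = np.asarray(phi_wrapped, dtype=float)
    half = lam.min() / 2
    candidates = np.linspace(dl0 - half, dl0 + half, n_steps)
    # Predicted phasor at each wavelength for every candidate path length.
    predicted = np.exp(1j * 2 * np.pi * candidates[:, None] / lam[None, :])
    measured = np.exp(1j * phi)[None, :]
    # Assumed cost: comparing phasors makes the 2*pi wrapping irrelevant.
    cost = np.sum(np.abs(measured - predicted) ** 2, axis=1)
    return float(candidates[np.argmin(cost)])

# Example: six wavelengths (in um) and a 1.3 um path length difference
lam = np.linspace(0.480, 0.513, 6)
true_opl = 1.3
phi = np.angle(np.exp(1j * 2 * np.pi * true_opl / lam))  # wrapped to (-pi, pi]
estimate = unwrap_opl(phi, lam, dl0=1.25)
```

Restricting the scan to one shortest wavelength around ΔL0 keeps neighboring candidates (offset by roughly one wavelength) out of the interval, which is how the bounded search avoids the local minima mentioned above.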

Computation platform used for super-resolved image reconstructions

Our reconstructions are performed using MATLAB (Version R2012a, MathWorks, Natick, MA, USA) on a desktop computer equipped with a 3.60-GHz central processing unit (Intel Xeon E5-1620) and 16 GB of random-access memory. For a 1 × 1 mm² sub-region with an upsampling factor of seven, one iteration of our wavelength-scanning super-resolution routine takes ~1.2 s. For example, one cycle of our algorithm, which processes all the undersampled measurements (for example, seven wavelengths for each angle/height, and 22 angles/heights in total), takes ~3 min. In our proof-of-concept implementation, the iterations used neither GPU (graphics processing unit) nor parallel computing, either of which could significantly reduce our overall computation time10. The total image reconstruction time could be further improved by implementing the algorithm in the C language rather than MATLAB.
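The quoted cycle time follows directly from the per-measurement cost, assuming that "one iteration" refers to the four-step update applied to a single raw measurement:

```python
wavelengths_per_position = 7   # wavelengths per angle/height
positions = 22                 # angles/heights in total
seconds_per_update = 1.2       # one update on a 1 x 1 mm^2 sub-region

measurements = wavelengths_per_position * positions        # 154 raw frames
cycle_minutes = measurements * seconds_per_update / 60.0   # about 3.08 min
```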

Results and discussion

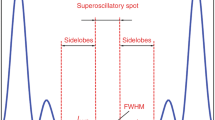

The physical basis for wavelength-scanning pixel super-resolution is the strong wavelength dependence of the undersampled interference patterns in coherent or partially coherent diffraction imaging systems such as lens-free, holographic microscopy (Figure 1) or defocused lens-based imaging systems. When illuminated at slightly different wavelengths, the high-frequency interference fringes caused by object scattering will change, causing the undersampled output of the image sensor chip to change as well (Figure 1b). Our derivations (Materials and Methods section) show that in the spatial frequency domain, the aliasing signal caused by pixel-induced undersampling is modulated by a complex transfer function whose phase is rather sensitive to even small wavelength changes, which makes it possible to use wavelength diversity within a narrow spectral range (that is, 10–30 nm) to cancel out the spatial aliasing term and enhance the resolution of the reconstructions beyond the pixel pitch.

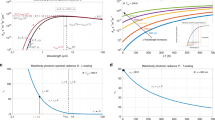

This spatial resolution improvement brought by our wavelength-scanning pixel super-resolution technique for different lens-free imaging configurations is clearly demonstrated in Figures 3, 4 and 5. Without pixel super-resolution, lens-free microscopy with a unit magnification on-chip imaging geometry (in which the sample FOV equals the active area of the sensor array) can achieve a half-pitch resolution close to the image sensor’s pixel pitch (that is, ~1 μm in Figure 3a, using a CMOS sensor that has a 1.12-μm pixel pitch). For the same configuration depicted in Figure 3, utilizing the wavelength diversity in illumination boosts the half-pitch resolution to ~0.6 μm by using five different wavelengths between 498 and 510 nm (Figure 3c and 3d). When integrated with the multi-height phase retrieval technique9, 11, the resolution can be further improved to ~0.5 μm, corresponding to an effective NA of ~0.5 (Figure 4). Using the synthetic aperture technique12, however, can provide not only twin image elimination but also a significant increase in spatial bandwidth of the reconstructed images; these benefits enable us to take full advantage of the wavelength-scanning-based pixel super-resolution technique and achieve a half-pitch resolution of ~250 nm with an effective NA of ~1.0 under a unit magnification geometry, where the FOV is >20 mm² (Figure 5). These results clearly demonstrate the pixel super-resolution capabilities of our wavelength-scanning approach.

Resolution improvement introduced by wavelength-scanning pixel super-resolution. (a) Reconstruction from a single raw measurement captured by an image sensor chip with a pixel pitch of 1.12 μm. (b, c) Lens-free reconstructions using lateral shift-based pixel super-resolution. b and c are reconstructed using super-resolved holograms synthesized from five and nine sub-pixel-shifted raw measurements, respectively. The corresponding sub-pixel shifts of the raw measurements are marked within the hologram shift tables. (d) Reconstruction using wavelength-scanning pixel super-resolution; five wavelengths are used within a scanning range of 498–510 nm and a scan step size of 3 nm.

Lens-free imaging using multi-height phase retrieval and wavelength-scanning pixel super-resolution. Five heights are used in each case shown in a–c. (a, b) are lens-free reconstructions using lateral shift-based pixel super-resolution and multi-height phase retrieval. a uses five and b uses nine sub-pixel-shifted raw measurements at each height. (c) Lens-free reconstruction using wavelength-scanning pixel super-resolution and multi-height phase retrieval; five wavelengths are used with a scanning range of 498–510 nm and a scan step size of 3 nm.

(a) Lens-free imaging using synthetic aperture and lateral shift-based pixel super-resolution. At each illumination angle, 6 × 6=36 sub-pixel lateral shifts, which are spread evenly over the pixel area, are used for super-resolution. (b) Lens-free imaging using synthetic aperture and wavelength-scanning pixel super-resolution. Twelve wavelengths are used within a scanning range of 480–513 nm and a scan step of 3 nm. The illumination angle scanning directions, ranges and scanning steps are the same in both a and b. (c) Image of the specimen obtained using a conventional lens-based microscope with a 60 × water-immersion objective lens (NA=1.0).

In addition to delivering a competitive resolution and NA, the wavelength-scanning-based super-resolution approach also offers better data efficiency compared with lateral shift-based pixel super-resolution techniques, that is, fewer raw measurements are needed for the same resolution improvement. In lateral shift-based pixel super-resolution, the sub-pixel shifts between the raw measurements are obtained by moving the light source, image sensor and/or the specimen with respect to each other7, 8, 11, 48, and the resolution improvement is direction dependent. Therefore, sub-pixel shifts that spread uniformly within a two-dimensional pixel area are preferred in lateral shift-based pixel super-resolution techniques to achieve optimal resolution enhancement. As a result, the number of raw measurements generally increases as a quadratic function of the pixel super-resolution factor. However, in the wavelength-scanning super-resolution approach, the resolution improvement caused by wavelength diversity is uniform in all lateral directions across the sensor array, which enables us to achieve competitive resolution with far fewer raw measurements compared with lateral shift-based super-resolution. To exemplify this important advantage of our approach: in a normal-illumination configuration, the lateral shift technique needs nine measurements to achieve a half-pitch resolution of ~0.6 μm (Figures 3b and 3c), whereas the wavelength-scanning technique requires only five raw measurements (Figure 3d) to reach the same imaging performance. Similarly, when combined with multi-height phase retrieval9, 11, wavelength-scanning super-resolution requires 25 raw measurements in total (five wavelengths at each of five heights), which is significantly fewer than the number required using lateral shifts (that is, 45 raw measurements as shown in Figure 4).
When integrated with synthetic-aperture-based lens-free imaging12, an even higher spatial resolution can be achieved using wavelength diversity, and the advantages of wavelength scanning over lateral shifts become more significant. As shown in Figure 5, to achieve a half-pitch resolution of ~250 nm in all directions, the lateral shift-based super-resolution technique requires a total of 612 images (6 × 6 raw images at each one of the 17 illumination angles), whereas the presented wavelength-scanning technique only needs 204 images (12 wavelengths at each one of the 17 angles), corresponding to a 3-fold reduction in the number of images. Taking into account the wait time for the mechanical components in our bench-top system, the lateral shift-based technique at multiple illumination angles takes ~21.5 min in total, whereas our wavelength-scanning technique takes only ~4.8 min, showing a significant improvement in the imaging time. This important advantage in terms of the reduced number of measurements can also be translated into smaller data storage space, which is critical for increasing the speed and utility of high-resolution wide-field imaging techniques.
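The measurement counts quoted above follow from simple products, which the short sketch below reproduces. All numbers come from the text; the function and variable names are illustrative only.

```python
def total_measurements(per_position, positions=1):
    """Raw frames needed: measurements per height/angle times the number of heights/angles."""
    return per_position * positions

# Multi-height phase retrieval (Figure 4): five heights.
shift_multi_height = total_measurements(9, positions=5)       # nine lateral shifts per height
wavelength_multi_height = total_measurements(5, positions=5)  # five wavelengths per height

# Synthetic aperture (Figure 5): 17 illumination angles.
shift_synthetic_aperture = total_measurements(6 * 6, positions=17)   # 6 x 6 shifts per angle
wavelength_synthetic_aperture = total_measurements(12, positions=17) # 12 wavelengths per angle

image_reduction = shift_synthetic_aperture / wavelength_synthetic_aperture
time_reduction = 21.5 / 4.8  # reported bench-top acquisition times, in minutes

print(shift_multi_height, wavelength_multi_height)
print(shift_synthetic_aperture, wavelength_synthetic_aperture)
print(f"image count reduced {image_reduction:.0f}-fold, acquisition time {time_reduction:.1f}-fold")
```

The acquisition time improves by more than the image count alone would suggest, consistent with the wait time for mechanical components mentioned above.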

We should re-emphasize that wavelength-scanning super-resolution requires only a few wavelengths taken from a narrow spectral range (for example, 10–30 nm). With this new super-resolution approach, we can obtain high-resolution amplitude reconstructions of not only colorless but also colored (that is, stained/dyed) samples without further modifications in our reconstruction algorithm. We demonstrated this capability by imaging various biological samples, including unstained Pap smears (Figure 6) as well as stained blood smears (Figure 7). Because our lens-free reconstructions provide both amplitude and phase channels, we can also visualize the reconstructed images using different methods to create, for example, a digital phase contrast image12, 49 as illustrated in Figure 6.

Lens-free imaging of a Papanicolaou (Pap) smear using wavelength-scanning pixel super resolution and multi-height phase retrieval. (a) Super-resolved lens-free phase image of the Pap smear. Wavelength-scanning range: 510–531 nm, scanning step: 3 nm. (b, e) Regions of interest (ROI) No. 1 and 2, respectively. (c, f) Digital phase contrast images generated from lens-free reconstructions (refer to the Materials and Methods section for details). (d, g) Conventional lens-based phase contrast images obtained using a 40 × objective lens (NA=0.6).

Lens-free imaging of a blood smear using wavelength-scanning pixel super-resolution and synthetic aperture phase retrieval. Wavelength-scanning range: 520–541 nm, scanning step: 3 nm. Angle scanning (two axes) range: −35° to 35° with 5° steps. (a) Lens-free raw hologram captured using a CMOS image sensor with a pixel size of 1.12 μm and a FOV of ~20.5 mm2. (b) Lens-free reconstruction of a sub-region, marked with a yellow square within the full FOV. (c) Conventional lens-based microscope image using a 20 × objective lens (NA=0.45).

In addition to significantly improving resolution and mitigating undersampling, wavelength diversity also enables us to perform robust phase unwrapping and reveal the optical path length differences between a given specimen and surrounding medium. The retrieved phase reconstruction from a single wavelength is constrained to its principal value (−π, π], and therefore large optical path length differences can cause polarity errors that may not be corrected even by using state-of-the-art phase unwrapping algorithms34, 35, 36, 37 (see, for example, Figure 8a and 8b). Such polarity errors in phase reconstructions can also be mitigated by detecting the phase differences between the reconstructions at two different wavelengths33, 50. However, this two-wavelength phase unwrapping approach still faces the challenge of ambiguity or uniqueness50. In addition to achieving pixel super-resolution, we further utilized wavelength scanning to significantly improve the robustness of phase unwrapping by incorporating all the wavelengths of illumination that are used in pixel super-resolution to unwrap the reconstructed phase (Materials and Methods section). The phase unwrapping results shown in Figure 8c and 8f clearly illustrate that through optimization we can entirely rule out incorrect optical path length differences within our reconstructed images and achieve robust phase unwrapping in a super-resolved image.

Phase unwrapping using multiple wavelengths. The sample consists of four grating lines carved into a glass substrate using focused ion beam milling. (a) Lens-free phase image obtained using a single wavelength; phase map is wrapped. (b) Unwrapped lens-free phase image obtained from a single wavelength reconstruction using a two-dimensional phase unwrapping technique based on minimum network flow36. (c) Unwrapped lens-free phase image obtained using reconstructions from multiple wavelengths. Eleven wavelengths are used within a scanning range of 501–531 nm and a scan step size of 3 nm. Refer to the Materials and Methods section for further details on multi-wavelength phase unwrapping. (d–f) Depth profiles of a–c, respectively. The blue dashed line in f is the depth profile of the sample gratings measured using an atomic force microscope (AFM).
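The idea behind multi-wavelength phase unwrapping can be sketched for a single pixel as follows. This is a minimal illustration, not the optimization described in the Materials and Methods section: it assumes a noise-free, dispersion-free phase of 2π·OPD/λ, a hypothetical optical path length difference of 1300 nm, and a brute-force search over a hypothetical ±3-μm range. The wavelengths match the 501–531 nm scan with 3-nm steps used for Figure 8.

```python
import cmath
import math

def wrapped_phase(opd_nm, wavelength_nm):
    """Phase of exp(i 2 pi OPD / lambda), wrapped to its principal value."""
    return cmath.phase(cmath.exp(2j * math.pi * opd_nm / wavelength_nm))

def unwrap_opd(phases, wavelengths_nm, candidates_nm):
    """Pick the candidate OPD whose predicted phasors best match all measured wrapped phases."""
    best_opd, best_cost = None, float("inf")
    for opd in candidates_nm:
        cost = sum(abs(cmath.exp(2j * math.pi * opd / w) - cmath.exp(1j * p)) ** 2
                   for p, w in zip(phases, wavelengths_nm))
        if cost < best_cost:
            best_opd, best_cost = opd, cost
    return best_opd

WAVELENGTHS_NM = list(range(501, 532, 3))  # 11 wavelengths, 501-531 nm
TRUE_OPD_NM = 1300.0                       # assumed ground truth (hypothetical)
CANDIDATES_NM = range(-3000, 3001)         # assumed search range, 1-nm grid

measured = [wrapped_phase(TRUE_OPD_NM, w) for w in WAVELENGTHS_NM]

# A single wavelength only recovers the OPD modulo that wavelength.
single_wavelength_opd = wrapped_phase(TRUE_OPD_NM, 516) * 516 / (2 * math.pi)

# Using all wavelengths jointly removes the ambiguity within the search range.
multi_wavelength_opd = unwrap_opd(measured, WAVELENGTHS_NM, CANDIDATES_NM)

print(f"single wavelength: {single_wavelength_opd:.0f} nm")
print(f"all wavelengths:   {multi_wavelength_opd} nm")
```

In this sketch the single-wavelength estimate is off by an integer number of wavelengths, while the joint fit lands on the assumed value because no other candidate in the search range matches the wrapped phases at all eleven wavelengths simultaneously.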

The wavelength-scanning pixel super-resolution approach, together with phase retrieval methods, including multi-height9, 11, synthetic aperture12 and object-support-based techniques51, 52, could constitute high-resolution imaging systems with greatly improved imaging speed. For a bench-top system, high-speed wavelength scanning can be realized using a fast tunable source (employing, for example, an acousto-optic tunable filter with a supercontinuum source) synchronized with the image sensor chip. Compared with lateral shift-based super-resolution setups, such a combination avoids motion blur and could increase the data acquisition speed to the maximum frame rate of the image sensor. Furthermore, the lateral shifts generated by the source-shifting approach7, 8, 9, 12 are determined by both the sample-to-sensor and sample-to-aperture distances, which can make it challenging to generate optimized lateral shifts for samples at different vertical heights. The wavelength-scanning approach, however, is performed with evenly distributed wavelengths regardless of sample height. Therefore, we believe that wavelength-scanning pixel super-resolution is more favorable than lateral shifting techniques for building high-resolution wide-field microscopes with high imaging speeds. In addition, the better data efficiency of our wavelength-scanning super-resolution approach can reduce the cost of data storage and transmission, benefiting telemedicine implementations and server-based remote reconstructions.

In addition to delivering competitive results on a bench-top system, the presented wavelength-scanning pixel super-resolution approach also holds great potential for field-portable microscopy applications. Compared with lateral shift-based pixel super-resolution, the wavelength-scanning approach does not require any mechanical motion or fiber bundle8, 52, which could make the mobile imaging platform more robust. Because the wavelength scanning range is narrow (that is, 10–30 nm), the combination of a few light-emitting diodes, each with a standard spectral bandwidth of 15–30 nm, and a variable optical thin-film filter to narrow the light-emitting diode spectra will be sufficient to implement wavelength-scanning super-resolution in a field-portable and cost-effective design.

Finally, we should emphasize that the presented wavelength-scanning pixel super-resolution approach, in addition to lens-free or holographic diffraction-based imaging systems, can also be applied to lens-based point-to-point imaging modalities. By introducing a simple defocus into a lens-based imaging system (by, for example, a relative axial shift of the sensor array, object and/or the objective lens), the entire wavelength diversity framework described in this manuscript would be able to achieve pixel super-resolution. For proof-of-concept, we experimentally demonstrated our wavelength-scanning pixel super-resolution approach using a conventional lens-based microscope with a 10 × objective lens (NA=0.3) and a CMOS sensor chip with a pixel size of 3.75 μm (Figure 9). To significantly expand the FOV of the microscope from ~0.17 to 1.20 mm2, we used a 0.38 × camera adaptor, which created an effective pixel size of ~0.99 μm, limiting the resolution of the microscope because of pixelation (Figure 9c). To considerably improve the resolution, back to the resolving capability of the objective lens (dictated by its NA), we defocused the target object by small increments of ~20 μm between 160 and 300 μm. We also scanned the illumination wavelength between 500 and 521 nm with a step size of 3 nm. Using these defocused microscope images in our wavelength-scanning super-resolution algorithm, we significantly improved the resolution of the reconstructed image (Figure 9d), matching the resolution determined by the NA of the objective lens while also expanding the image FOV by ~sevenfold (that is, 1.20 vs 0.17 mm2). These results confirm that our wavelength-scanning pixel super-resolution technique is broadly applicable to various lens-based and lens-free coherent and partially coherent wide-field imaging modalities.

Implementation of wavelength-scanning pixel super-resolution using a lens-based microscope. (a) Schematic of the optical setup. In this proof-of-concept experiment, a 10 × objective lens (NA=0.3) is used. The sample plane is scanned from 160 to 300 μm, defocused from the focal plane of the objective lens in 20-μm increments. The illumination wavelength is tuned between 500 and 521 nm with a step size of 3 nm. (b) Full FOV of a CMOS image sensor (pixel size: 3.75 μm) using the 10 × objective lens with a 0.38 × camera adaptor. (c) Lens-based image of a resolution test chart without wavelength-scanning pixel super-resolution. The half-pitch of the smallest resolved feature is 1.95 μm. (d) Lens-based image of the same resolution test chart with wavelength-scanning pixel super-resolution. The half-pitch of the smallest resolved feature is 0.78 μm.
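The numbers quoted for the lens-based experiment can be cross-checked with a few lines of arithmetic. The sketch below uses only values stated in the text. The geometric FOV estimate assumes the same sensor area is imaged with and without the camera adaptor, and the variable names are illustrative.

```python
OBJECTIVE_MAG = 10.0     # 10x objective lens
ADAPTOR_MAG = 0.38       # 0.38x camera adaptor
SENSOR_PIXEL_UM = 3.75   # physical pixel size of the CMOS sensor

# Pixel size referred back to the sample plane.
effective_pixel_um = SENSOR_PIXEL_UM / (OBJECTIVE_MAG * ADAPTOR_MAG)

# FOV expansion: reported areas versus the purely geometric estimate (assumption).
fov_gain_reported = 1.20 / 0.17
fov_gain_from_adaptor = 1.0 / ADAPTOR_MAG ** 2

# Half-pitch of the smallest resolved feature without and with super-resolution (Figure 9c, 9d).
resolution_gain = 1.95 / 0.78

# Scan grids described in the text.
wavelengths_nm = list(range(500, 522, 3))  # 500-521 nm in 3-nm steps
defocus_um = list(range(160, 301, 20))     # 160-300 um in 20-um increments

print(f"effective pixel size ~ {effective_pixel_um:.2f} um")
print(f"FOV gain: reported ~ {fov_gain_reported:.1f}x, geometric ~ {fov_gain_from_adaptor:.1f}x")
print(f"resolution gain ~ {resolution_gain:.1f}x")
print(f"{len(wavelengths_nm)} wavelengths, {len(defocus_um)} defocus planes")
```

The effective pixel size of about 0.99 μm and the roughly sevenfold FOV expansion both agree with the values given above.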

Conclusions

We report a new wavelength-scanning-based pixel super-resolution technique that generates high-resolution reconstructions from undersampled raw measurements captured at multiple wavelengths within a narrow spectral range (that is, 10–30 nm). Compared with lateral shift-based super-resolution, this wavelength-scanning method avoids the need for shifting mechanical components and, more importantly, provides uniform resolution improvement along all the directions across the image sensor or sample plane. This framework enabled us to improve the resolution and effective NA of a wide-field lens-free microscope by a factor of ~4, achieving a half-pitch resolution of 250 nm with an NA of ~1.0 using significantly fewer measurements compared with those required by lateral shift-based super-resolution methods. Because this wavelength-scanning super-resolution technique utilizes a narrow spectral range, it allows for super-resolved imaging of both colorless (for example, unstained) and stained/dyed biological samples. Reconstructions at multiple wavelengths also enable robust phase unwrapping to reveal the optical path length differences between specimens and surrounding media. This new wavelength-scanning super-resolution approach would broadly benefit various lens-free as well as lens-based wide-field and high-throughput microscopy applications that require large space-bandwidth products.

References

Chen T, Catrysse PB, El Gamal A, Wandell BA . How small should pixel size be? Proc SPIE 2000; 3965: 451–459.

Tsai RY, Huang TS . Multiframe Image Restoration and Registration. Adv Comput Vis Image Process 1984; 1: 317–339.

Hardie RC, Barnard KJ, Bognar JG, Armstrong EE, Watson EA . High-resolution image reconstruction from a sequence of rotated and translated frames and its application to an infrared imaging system. Opt Eng 1998; 37: 247–260.

Elad M, Hel-Or Y . A fast super-resolution reconstruction algorithm for pure translational motion and common space-invariant blur. IEEE Trans Image Process 2001; 10: 1187–1193.

Park SC, Park MK, Kang MG . Super-resolution image reconstruction: a technical overview. IEEE Signal Process Mag 2003; 20: 21–36.

Farsiu S, Elad M, Milanfar P . Multiframe demosaicing and super-resolution of color images. IEEE Trans Image Process 2006; 15: 141–159.

Bishara W, Su T-W, Coskun AF, Ozcan A . Lensfree on-chip microscopy over a wide field-of-view using pixel super-resolution. Opt Express 2010; 18: 11181–11191.

Bishara W, Sikora U, Mudanyali O, Su T-W, Yaglidere O et al. Holographic pixel super-resolution in portable lensless on-chip microscopy using a fiber-optic array. Lab Chip 2011; 11: 1276–1279.

Greenbaum A, Ozcan A . Maskless imaging of dense samples using pixel super-resolution based multi-height lensfree on-chip microscopy. Opt Express 2012; 20: 3129–3143.

Isikman SO, Bishara W, Mavandadi S, Yu FW, Feng S et al. Lens-free optical tomographic microscope with a large imaging volume on a chip. Proc Natl Acad Sci USA 2011; 108: 7296–7301.

Greenbaum A, Zhang YB, Feizi A, Chung P-L, Luo W et al. Wide-field computational imaging of pathology slides using lens-free on-chip microscopy. Sci Transl Med 2014; 6: 267ra175.

Luo W, Greenbaum A, Zhang YB, Ozcan A . Synthetic aperture-based on-chip microscopy. Light: Sci Applic 2015; 4: e261.

Celebi ME, Schaefer G . Color Medical Image Analysis. Netherlands: Springer. 2013.

Yamaguchi I, Matsumura T, Kato J . Phase-shifting color digital holography. Opt Lett 2002; 27: 1108–1110.

Kato J, Yamaguchi I, Matsumura T . Multicolor digital holography with an achromatic phase shifter. Opt Lett 2002; 27: 1403–1405.

Ferraro P, Grilli S, Miccio L, Alfieri D, De Nicola S et al. Full color 3-D Imaging by digital holography and removal of chromatic aberrations. J Display Technol 2008; 4: 97–100.

Picart P, Tankam P, Mounier D, Peng ZJ, Li JC . Spatial bandwidth extended reconstruction for digital color Fresnel holograms. Opt Express 2009; 17: 9145–9156.

Kakue T, Tahara T, Ito K, Shimozato Y, Awatsuji Y et al. Parallel phase-shifting color digital holography using two phase shifts. Appl Opt 2009; 48: H244–H250.

Isikman SO, Sencan I, Mudanyali O, Bishara W, Oztoprak C et al. Color and monochrome lensless on-chip imaging of Caenorhabditis elegans over a wide field-of-view. Lab Chip 2010; 10: 1109–1112.

Garcia-Sucerquia J . Color lensless digital holographic microscopy with micrometer resolution. Opt Lett 2012; 37: 1724–1726.

Ito Y, Shimozato Y, Xia P, Tahara T, Kakue T et al. Four-wavelength color digital holography. J Display Technol 2012; 8: 570–576.

Greenbaum A, Feizi A, Akbari N, Ozcan A . Wide-field computational color imaging using pixel super-resolved on-chip microscopy. Opt Express 2013; 21: 12469–12483.

Mendoza-Yero O, Tajahuerce E, Lancis J, Garcia-Sucerquia J . Diffractive digital lensless holographic microscopy with fine spectral tuning. Opt Lett 2013; 38: 2107–2109.

Kiss MZ, Nagy BJ, Lakatos P, Göröcs Z, Tőkés S et al. Special multicolor illumination and numerical tilt correction in volumetric digital holographic microscopy. Opt Express 2014; 22: 7559–7573.

Dohet-Eraly J, Yourassowsky C, Dubois F . Color imaging-in-flow by digital holographic microscopy with permanent defect and aberration corrections. Opt Lett 2014; 39: 6070–6073.

Bao P, Zhang FC, Pedrini G, Osten W . Phase retrieval using multiple illumination wavelengths. Opt Lett 2008; 33: 309–311.

Bao P, Situ G, Pedrini G, Osten W . Lensless phase microscopy using phase retrieval with multiple illumination wavelengths. Appl Opt 2012; 51: 5486–5494.

Noom DWE, Boonzajer Flaes DE, Labordus E, Eikema KSE, Witte S . High-speed multi-wavelength Fresnel diffraction imaging. Opt Express 2014; 22: 30504–30511.

Sanz M, Picazo-Bueno JA, García J, Micó V . Improved quantitative phase imaging in lensless microscopy by single-shot multi-wavelength illumination using a fast convergence algorithm. Opt Express 2015; 23: 21352–21365.

Kazemzadeh F, Jin C, Molladavoodi S, Mei Y, Emelko MB et al. Lens-free spectral light-field fusion microscopy for contrast- and resolution-enhanced imaging of biological specimens. Opt Lett 2015; 40: 3862–3865.

Kühn J, Colomb T, Montfort F, Charrière F, Emery Y et al. Real-time dual-wavelength digital holographic microscopy with a single hologram acquisition. Opt Express 2007; 15: 7231–7242.

Desse J-M, Picart P, Tankam P . Digital three-color holographic interferometry for flow analysis. Opt Express 2008; 16: 5471–5480.

Khmaladze A, Kim M, Lo C-M . Phase imaging of cells by simultaneous dual-wavelength reflection digital holography. Opt Express 2008; 16: 10900–10911.

Itoh K . Analysis of the phase unwrapping algorithm. Appl Opt 1982; 21: 2470.

Takeda M, Gu Q, Kinoshita M, Takai H, Takahashi Y . Frequency-multiplex Fourier-transform profilometry: a single-shot three-dimensional shape measurement of objects with large height discontinuities and/or surface isolations. Appl Opt 1997; 36: 5347–5354.

Costantini M . A novel phase unwrapping method based on network programming. IEEE Trans Geosci Remote Sens 1998; 36: 813–821.

Volkov VV, Zhu YM . Deterministic phase unwrapping in the presence of noise. Opt Lett 2003; 28: 2156–2158.

Shen PF, Patterson LT . A simplified Wright’s stain technique for routine avian blood smear staining. Poultry Sci 1983; 62: 923–924.

Oppenheim AV, Willsky AS, Nawab SH . Signals and Systems, 2nd edn. New York: Prentice Hall. 1996.

Greenbaum A, Luo W, Khademhosseinieh B, Su TW, Coskun AF et al. Increased space-bandwidth product in pixel super-resolved lensfree on-chip microscopy. Sci Rep 2013; 3: 1717.

Gonzalez RC, Woods RE . Digital Image Processing, 3rd edn. New York: Prentice Hall. 2007.

Goodman J . Introduction to Fourier Optics, 3rd edn. California: Roberts and Company Publishers. 2004.

Allen LJ, McBride W, O’Leary NL, Oxley MP . Exit wave reconstruction at atomic resolution. Ultramicroscopy 2004; 100: 91–104.

Fienup JR . Reconstruction of an object from the modulus of its Fourier transform. Opt Lett 1978; 3: 27–29.

Fienup JR . Phase retrieval algorithms: a comparison. Appl Opt 1982; 21: 2758–2769.

Zhang Y, Pedrini G, Osten W, Tiziani H . Whole optical wave field reconstruction from double or multi in-line holograms by phase retrieval algorithm. Opt Express 2003; 11: 3234–3241.

Boyd S, Vandenberghe L . Convex Optimization. Cambridge, UK: Cambridge University Press. 2004.

Greenbaum A, Luo W, Su T-W, Göröcs Z, Xue L et al. Imaging without lenses: achievements and remaining challenges of wide-field on-chip microscopy. Nat Methods 2012; 9: 889–895.

Zernike F . Phase contrast, a new method for the microscopic observation of transparent objects. Physica 1942; 9: 686–698.

Gass J, Dakoff A, Kim MK . Phase imaging without 2π ambiguity by multiwavelength digital holography. Opt Lett 2003; 28: 1141–1143.

Mudanyali O, Tseng D, Oh C, Isikman SO, Sencan I et al. Compact, light-weight and cost-effective microscope based on lensless incoherent holography for telemedicine applications. Lab Chip 2010; 10: 1417–1428.

Greenbaum A, Sikora U, Ozcan A . Field-portable wide-field microscopy of dense samples using multi-height pixel super-resolution based lensfree imaging. Lab Chip 2012; 12: 1242–1245.

Acknowledgements

The Ozcan Research Group at UCLA gratefully acknowledges the support of the Presidential Early Career Award for Scientists and Engineers (PECASE), the Army Research Office (ARO; W911NF-13-1-0419 and W911NF-13-1-0197), the ARO Life Sciences Division, the ARO Young Investigator Award, the National Science Foundation (NSF) CAREER Award, the NSF CBET Division Biophotonics Program, the NSF Emerging Frontiers in Research and Innovation (EFRI) Award, the NSF EAGER Award, NSF INSPIRE Award, NSF PFI (Partnerships for Innovation) Award, the Office of Naval Research (ONR), and the Howard Hughes Medical Institute (HHMI). This work is based on research performed in a laboratory renovated by the National Science Foundation under Grant No. 0963183, which is an award funded under the American Recovery and Reinvestment Act of 2009 (ARRA). We acknowledge Adam Stieg of California NanoSystems Institute (CNSI) for his kind assistance with atomic force microscopy experiments.

Ethics declarations

Competing interests

The authors declare no conflict of interest.

Additional information

Note: Supplementary Information for this article can be found on the Light: Science & Applications' website.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/

About this article

Cite this article

Luo, W., Zhang, Y., Feizi, A. et al. Pixel super-resolution using wavelength scanning. Light Sci Appl 5, e16060 (2016). https://doi.org/10.1038/lsa.2016.60

DOI: https://doi.org/10.1038/lsa.2016.60
