Abstract

Scattering media, such as diffused glass and biological tissue, are usually treated as obstacles in imaging. To cope with the random phase introduced by a turbid medium, most existing imaging techniques resort to either phase compensation by optical means or phase recovery using iterative algorithms, and their applications are often limited to two-dimensional imaging. In contrast, we utilize the scattering medium as an unconventional imaging lens and exploit its lens-like properties for lensless three-dimensional (3D) imaging with diffraction-limited resolution. Our spatially incoherent lensless imaging technique is simple and capable of variable focusing with adjustable depths of focus, which enables depth sensing of 3D objects that are concealed by the diffusing medium. Wide-field imaging with diffraction-limited resolution is verified experimentally by a single-shot recording of the 1951 USAF resolution test chart, and 3D imaging and depth sensing are demonstrated by shifting focus over axially separated objects.

Introduction

Since the early work in the 1960s by Goodman et al.1, Leith and Upatnieks2 and Kogelnik and Pennington3, many methods have been proposed for imaging through diffusing media. These methods have a potentially wide range of applications from biomedical to astronomical imaging. Thus, imaging through an opaque diffusing medium with diffraction-limited resolution has become an important technical challenge because of these widely recognized needs. One straightforward strategy is imaging with ballistic photons selected either by coherence gating as in optical coherence tomography4, time gating as in femto-photography5, or holographic gating6, 7, 8. Because only a limited number of ballistic photons are used (with scattered photons being wasted) and also because the sequential scanning of the gate window is required, these methods are used mostly for imaging static objects through a weakly scattering medium. An alternative solution is to ‘descramble’ the phase of the scattered light2, 3, 9, 10 by means of phase compensation with a spatial light modulator11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21 or a hologram22. The spatial light modulator-based phase compensation11, 12, 13, 14 involves an iterative search for the unknown phase for compensation, and the holographic phase conjugation requires strict positional alignment of the hologram and the read-out beam2, 3, 10.

Another way to make use of the diffused light is to implement unconventional imaging techniques that are insensitive to phase perturbation. Among these are photon correlation holography23, remote imaging digital holography24, 25, coherence holography26, 27 and Doppler-shift digital holography28, 29, which can image through dynamic diffusing media, but they are not lensless systems30. Freund proposed a lensless imaging technique based on speckle intensity correlation, in which he regarded a diffuser as a useful imaging device and made use of its memory effect31, 32. This idea was further developed by other researchers. Bertolotti et al.33 reported an angular correlation technique that dispenses with the prior calibration with a reference source (which was necessary in Freund’s scheme), although the sequential scanning of the illumination angle may prevent imaging dynamic objects. Katz et al. proposed another reference-free method34 and showed that the intensity autocorrelation of the scattered light is identical to the autocorrelation of the object image itself and that the object can be reconstructed using a phase retrieval algorithm35. The intensity correlation techniques were demonstrated only for two-dimensional (2D) images because of their intrinsic property of infinite depth of focus31. Recently, Takasaki et al.36 and Liu et al.37 presented phase–space analysis methods for three-dimensional (3D) imaging behind the diffuser. However, these techniques require sequential data acquisition to create a Wigner function and are limited to objects made of a sparse set of point sources.

As described above, a majority of techniques regard a turbid medium as a nuisance in imaging and aim at coping with the random phase introduced by the turbid medium. An exception is the idea behind the speckle intensity correlation technique proposed by Freund for 2D imaging31, 32. In his seminal papers, Freund proposed a lensless imaging technique in which he regarded a diffuser as a useful imaging device and named it a wall lens31, 32. Extending this idea, we make use of the turbid medium as a virtual imaging lens. We exploit the potential of the virtual imaging lens for 3D imaging so that it can form an image of 3D objects with variable focusing and controllable depth of focus. To avoid the computational burden of a 3D phase retrieval algorithm and the restricted field of view incidental to image recovery from the autocorrelation, we modify the intensity correlation technique and introduce a reference point source near the object. The reference point source, which is incoherent with the object illumination, has the role of a guide star in a manner similar to Goodman’s interferometric imaging38. The use of a reference source may set a certain restriction in some applications but provides a much simpler practical solution than that based on the autocorrelation combined with an iterative phase retrieval algorithm. Our solution is free from iterative phase retrieval, gives a wider field of view, and permits direct 3D image reconstruction from the cross-correlation between the intensity distributions on a pair of planes axially separated in the scattered fields. 
By virtue of the variable plane of focus (which is selectable by the distance between the cross-correlation planes) and the finite depth of focus (which is controllable by the size of the aperture defined by the illuminated area on the diffuser), this technique can perform direct depth sensing of 3D objects hidden behind the diffusing medium without recourse to phase–space analysis and has no restriction on the sparsity of objects. Experimental demonstrations of imaging through a polycarbonate diffuser and a thick chicken breast tissue are presented along with 3D imaging and looking around corners. Wide-field imaging with diffraction-limited resolution is verified by experiment for the 1951 USAF resolution test target recorded by a single shot, and 3D imaging and depth sensing are demonstrated by shifting focus over axially separated objects.

Materials and methods

Principle

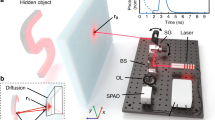

The sketch of the setup for imaging using a diffusing medium is shown in Figure 1. An object is illuminated by a spatially incoherent narrowband source. A reference point source is placed near the object. The scattering characteristic of the diffusing medium is represented by the point spread function (PSF) S between the reference and recording planes located at distances ẑ and z from the diffuser, with r̂ and r being lateral position vectors (see Supplementary Information). As shown by Freund32, the PSF for a thin diffuser has a memory effect, which means that the PSF has angular shift invariance over the range of angles Δθ≈λ/t, with λ and t being the wavelength of light and the thickness of the diffusing medium, respectively. Angular shift invariance can be converted to lateral shift invariance: the PSF is unchanged under paired lateral shifts Δr̂ and Δr in the reference and recording planes, with the two shifts related through m = z/ẑ, where m is the lateral magnification. This lateral shift invariance holds within the range |Δr̂|<ẑΔθ≈λẑ/t and |Δr|<zΔθ≈λz/t. The lateral (or angular) memory effect has played a crucial role in correlation-based 2D image reconstructions31, 33, 34.

Imaging using a diffusing medium: sketch of the experimental setup. A diffusing object is placed at a distance ẑ + Δẑ from a diffuser and is illuminated by a spatially incoherent narrowband light source. A reference point source, which is incoherent to the object beam and is laterally shifted by r̂0 from the object, is placed in the reference plane at a distance ẑ from the diffuser. Speckle intensities are detected by an image sensor at distances z and z + Δz downstream from the diffuser.

Here, for 3D imaging, we further note and exploit the longitudinal memory effect, in which the PSF is likewise unchanged under paired axial shifts Δẑ and Δz of the reference and observation planes. This axial shift invariance holds true for small axial shifts of the reference and observation planes that satisfy the imaging condition of the virtual lens called a 'wall lens'31 such that 1/ẑ + 1/z = 1/f, with f being the focal length of the wall lens. Unlike a conventional lens that has a fixed focal length, the wall lens has an adaptive focal length that adjusts itself automatically to satisfy this imaging condition between the arbitrarily chosen planes. Strictly speaking, the 3D PSF is not shift invariant because of the anisotropy in the axial and lateral magnifications. However, we can make it perfectly shift invariant by choosing ẑ = z with m=1 or by appropriately rescaling the coordinates for unit magnification, so that the shift-invariant 3D PSF takes the form of a convolution kernel S(r;Δz). Let the intensity distribution of the object and the off-axis reference point source be Iob(r;Δz)=O(r;Δz)+δ(r−r0;Δz). The speckle intensity distribution detected on the observation plane at z + Δz is given by I(r;Δz)=S(r;Δz)*Iob(r;Δz), where * denotes the 3D convolution operation. Whereas the existing 2D imaging techniques33, 34, 39 compute 2D autocorrelation of a speckle pattern detected on a single plane, we compute the 3D auto-covariance of the speckle intensity distribution for 3D imaging:

$$[\Delta I \otimes \Delta I](\mathbf{r};\Delta z) = [\Delta S \otimes \Delta S] * [I_{ob} \otimes I_{ob}] \approx [I_{ob} \otimes I_{ob}](\mathbf{r};\Delta z) \qquad (1)$$

where ΔI(r;Δz) and ΔS(r;Δz) are the speckle intensity and the PSF, respectively, with their mean values being subtracted, and ⊗ denotes 3D correlation. For simplicity, we have made an approximation that [ΔS(r;Δz)⊗ΔS(r;Δz)]≈δ(r;Δz) in Equation (1), assuming that the speckles have a negligibly small correlation length compared with the object structures. Substituting Iob(r;Δz)=O(r;Δz)+δ(r−r0;Δz) into Equation (1), we obtain

$$[\Delta I \otimes \Delta I](\mathbf{r};\Delta z) \approx O(\mathbf{r}+\mathbf{r}_0;\Delta z) + O(-(\mathbf{r}-\mathbf{r}_0);-\Delta z) + [O \otimes O](\mathbf{r};\Delta z) + \delta(\mathbf{r};\Delta z) \qquad (2)$$

In Equation (2), the first term O(r+r0;Δz) gives a laterally shifted 3D image of the 3D object, whereas the second term O(−(r−r0);−Δz) gives a symmetrically shifted 3D image. We regard the remaining terms as noise appearing in the center. Thus, we can reconstruct the 3D image from the 3D auto-covariance. This approach, however, requires not only the acquisition of a huge amount of speckle data in full 3D space but also a large computational effort for the calculation of the 3D auto-covariance. We have a practical solution to this problem. In most applications, such as in microscopy, we are interested in observing an object by focusing on a particular plane in 3D space. In such a case, we can replace the 3D auto-covariance [ΔI(r;Δz)]⊗[ΔI(r;Δz)] with the 2D cross-covariance [ΔI(r;Δz)]⊗r[ΔI(r;0)] between the speckle intensities detected on two planes at z + Δz and z, where ⊗r denotes the 2D correlation with respect to r only. This significantly reduces the amount of data acquisition and computational burden. A 3D object can be explored by moving the plane of focus, which can be accomplished by changing the Δz of the 2D cross-correlation plane. Thus, the 3D imaging requires at least two axially shifted speckle intensity distributions for cross-correlation. However, a 2D object can be reconstructed from a single-shot speckle pattern by autocorrelation if the object is placed on the reference plane (Δẑ = 0) and the speckle pattern is recorded at Δz = 0. This permits instantaneous imaging of a dynamic object through a turbid medium.
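The single-shot 2D case can be illustrated numerically. The following is a minimal sketch under stated assumptions, not the processing code used in the experiments: the PSF is an idealized, delta-correlated random pattern drawn from an exponential distribution, the convolution is circular (FFT-based), and the L-shaped object, the reference position and all function names are hypothetical choices made for illustration only.

```python
import numpy as np

def autocovariance(img):
    """Circular 2D auto-covariance of img (mean removed); zero lag at index [0, 0]."""
    d = img - img.mean()
    power = np.abs(np.fft.fft2(d)) ** 2          # Wiener-Khinchin: |FFT|^2 <-> autocorrelation
    return np.fft.ifft2(power).real

def simulate(n=256, ref_pos=(128, 180), ref_strength=20.0, seed=0):
    """Return a hypothetical object and the speckle pattern I = S * I_ob."""
    rng = np.random.default_rng(seed)
    obj = np.zeros((n, n))
    obj[120:136, 120:124] = 1.0                  # vertical bar of an "L"
    obj[132:136, 120:132] = 1.0                  # horizontal bar of the "L"
    i_ob = obj.copy()
    i_ob[ref_pos] += ref_strength                # off-axis reference point source
    psf = rng.exponential(1.0, size=(n, n))      # assumed delta-correlated speckle PSF
    speckle = np.fft.ifft2(np.fft.fft2(psf) * np.fft.fft2(i_ob)).real
    return obj, speckle

def reconstruct(speckle, ref_pos):
    """Shift the object-reference cross term back onto the object coordinates."""
    acov = autocovariance(speckle)
    # The O(r + r0) term sits at lag (object position - reference position),
    # so rolling by the reference position re-centres it on the object.
    return np.roll(acov, shift=ref_pos, axis=(0, 1))

ref_pos = (128, 180)
obj, speckle = simulate(ref_pos=ref_pos)
recon = reconstruct(speckle, ref_pos)

# Compare reconstruction and object inside a window around the object only;
# the central terms and the twin image fall outside this window.
window = (slice(112, 144), slice(112, 144))
similarity = np.corrcoef(recon[window].ravel(), obj[window].ravel())[0, 1]
print(f"correlation between reconstruction and object: {similarity:.2f}")
```

In this sketch the reference is placed several object widths away from the object, so the shifted object copy does not overlap the central autocorrelation terms. No phase retrieval step is involved; the object appears directly in the auto-covariance.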

What are the resolution limits? Although we have made the approximation [ΔS(r;Δz)]⊗[ΔS(r;Δz)]≈δ(r;Δz), actual speckles have finite lateral and axial correlation lengths given by δrcorr.≈1.4λ(z/D) and δzcorr.≈6.7λ(z/D)² (refs 40, 41), with D being the diameter of a circular aperture stop attached to the diffuser. Through the convolution in Equation (1), this finite spread of the 3D speckle correlation sets the theoretical limit of the lateral and axial resolutions attainable by the proposed scheme (Supplementary Information). One may also note that the attainable resolutions correspond to the lateral resolution and the depth of focus (DOF) of the diffraction-limited lens. To satisfy the Shannon sampling theorem, at least two pixels of the image sensor must be included within the lateral correlation length of the speckle pattern. Therefore, for the symmetric geometry (ẑ = z) with unit magnification m=1, the sensor pixel size may sometimes set a practical limit of resolution. By reducing the object distance ẑ while increasing the sensor distance z to satisfy the imaging condition of the wall lens, 1/ẑ + 1/z = 1/f, we can increase the magnification m = z/ẑ beyond unity. Now, the virtual wall lens plays the role of a microscope objective, and the resolution in the object space can be much higher than in the sensor space; therefore, we can avoid the sensor pixel size setting the practical limit of resolution. Remember that to maintain longitudinal shift invariance, an appropriate numerical rescaling of the coordinates is required in the calculation of the cross-covariance.

In principle, the maximum field of view (FOV) is limited by the loss of the lateral memory effect, namely, the decorrelation of the scattered intensity field indicated by the speckle contrast C(p)=(p/sinh p)² with p=2πΔr t/(λz) (ref. 32), where Δr is the lateral shift of the speckle pattern, and t is the thickness of a transmissive scattering medium or the mean free path t* for a reflective scattering medium (see Supplementary Information). Thus, one may define a nominal FOV as Δr=λz/(πt), for which p=2 and C(p) > 0.3. This nominal FOV is unlimited for an infinitely thin medium and decreases in inverse proportion to the thickness of the scattering layer. In practice, however, the FOV is limited not only by the decorrelation but more significantly by the loss of speckle contrast caused by the large size, low sparsity and high complexity of the object37, which severely restricts the size and complexity of the object that can be reconstructed from the autocorrelation by using an algorithm for phase retrieval. We circumvent this difficulty and realize a wider FOV by introducing a reference point source that has the highest sparsity and permits direct reconstruction of the object without using the autocorrelation and the phase retrieval algorithm.
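As a quick numerical check of the quantities above, the following sketch evaluates the memory-effect contrast at the nominal FOV and the sampling condition for the parameters quoted in this paper (λ=532 nm, z=600 mm, D=20 mm, pixel size 7.45 μm). The function names are illustrative and not taken from the original work.

```python
import math

def memory_effect_contrast(p):
    """Speckle contrast C(p) = (p / sinh p)^2, with C(0) = 1 by continuity."""
    return 1.0 if p == 0 else (p / math.sinh(p)) ** 2

def lateral_speckle_size(wavelength, z, aperture):
    """Lateral speckle correlation length, approximately 1.4 * lambda * z / D."""
    return 1.4 * wavelength * z / aperture

wavelength = 532e-9   # m
z = 0.600             # m, sensor distance
D = 0.020             # m, aperture stop diameter
pixel = 7.45e-6       # m, sensor pixel size

# At the nominal FOV, dr = lambda*z/(pi*t), so p = 2*pi*dr*t/(lambda*z) = 2
# regardless of the medium thickness t.
contrast_at_nominal_fov = memory_effect_contrast(2.0)

speckle_size = lateral_speckle_size(wavelength, z, D)
pixels_per_speckle = speckle_size / pixel

print(f"C(p=2) = {contrast_at_nominal_fov:.3f}")
print(f"speckle size = {speckle_size * 1e6:.1f} um")
print(f"pixels per speckle = {pixels_per_speckle:.2f}")
```

With these numbers the contrast at the nominal FOV is about 0.30, the speckle correlation length is about 22 μm, and roughly three pixels fall within one speckle, so the two-pixel sampling requirement is met at unit magnification.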

Experimental Setup

The schematic diagrams of the experimental setups are shown in Supplementary Fig. S1. For imaging using a diffusing medium, the object, a USAF test target, was placed 600 mm from the polycarbonate diffuser. The object was back-illuminated with quasi-monochromatic spatially incoherent light of wavelength λ=532 nm, which was created by destroying the spatial coherence of the diverging beam from a Nd:YAG laser with a rotating diffuser. A calibrating point source, which was created with an optical fiber coupled to the same laser source (without passing through the rotating diffuser), was placed at an off-axial position close to the object with the help of a beam splitter (BS). To avoid overlap between the autocorrelation term and the translated object term in Equation (2), the off-axial distance of the reference point source was set 1.5 times greater than the size of the object. At the same time, the point source was made to remain close to the object so that the same PSF applied to the object and the reference. To ensure efficient use of the restricted FOV, the object was made coplanar with the reference point source. The light from the object and the light from the reference source were scattered by the diffusing medium and produced speckle patterns of their own, which were superimposed incoherently on an intensity basis in the observation plane. An SVS-Vistek CCD camera (Seefeld, Germany) with 3280 × 4896 pixels and pixel size 7.45 × 7.45 μm was used to record the speckle distribution. For the experiments shown in Figure 2, both the object and the CCD were kept at the same distance of 600 mm from the scattering medium to form a system with unit magnification that guarantees shift invariance of the PSF. The diameter of the aperture stop on the diffuser was 20 mm. Because the amount of light reaching the CCD was very small, the exposure time was increased to 1 s (maximum for the CCD). 
This long exposure time guarantees incoherent detection of the lights passing through the rotating diffuser. The same optical geometry was used for imaging using a thick biological tissue, except that the aperture size was reduced to 13 mm. In this case, the object was made by cutting a black paper into a 2D pattern 'H' and pasting it onto a diffuser. A 1.5-mm-thick chicken breast tissue was sandwiched between two glass slides and was mounted on a holder for use as the diffusive medium.

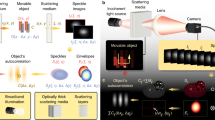

Lens-like imaging using scattering medium. (a) Microscope image of USAF 1951 resolution target. (b) The auto-covariance of the speckle intensity pattern with its central correlation peak blocked, and (c) the reconstructed object. The theoretical resolution limit for the given aperture size D=20 mm and λ=532 nm is ~19.4 μm. The experimental resolution limit is 19.7 μm, which is very close to the theoretical limit. In the present case, the imaging was performed with unit magnification. The magnification of the system can be increased further by bringing the diffuser close to the object. The USAF target was used as a diffusively transmissive 2D object for the experiments. The object and an image sensor (CCD) were kept at the same distance ẑ = z = 600 mm from the diffuser to implement 3D shift invariance with unit magnification m=1. The speckle intensity distribution on the CCD sensor was recorded with an exposure time of 1 s. The scale bars in a, c are 300 μm.

To demonstrate the variable focusing on desired planes in 3D space, the object was prepared by cutting triangular and rectangular holes in two pieces of paper and pasting them on opposite sides of a glass slide. In this way, an axial separation was provided between the two objects. The speckle patterns were detected at different observation planes by moving the CCD in small steps with a translation stage. Focusing was achieved by computing the cross-covariance between the two speckle patterns detected at the observation planes separated by the same distance as the axial separation between the two objects (Supplementary Information). For the experiment involving looking around a corner, the transmissive diffusing sample was replaced by a rough aluminum plate, which behaves as a strong scatterer in reflection.

Results and discussion

Wide-field imaging using scattering medium

Experiments were performed with different objects to demonstrate the lens-like characteristics (wide-field, sharp, diffraction-limited imaging) of the diffusing medium. One such result is shown in Figure 2. A transmissive object, a USAF resolution target (Figure 2a), was placed close to a calibrating point source in the same plane (reference plane) (Supplementary Fig. S1a).

The light from the object and the light from the reference were scattered by the diffuser to produce a superimposed speckle distribution on the CCD. Due to the memory effect, the auto-covariance of the recorded intensity distribution reconstructs the object, which is shown in Figure 2b. The diffraction-limited reconstruction of the object is shown in Figure 2c. The size of the object is 1 mm, but the FOV of our system is more than 2 mm. In general, correlation-based imaging systems without a calibrating point source are restricted by the complexity of the objects. An object with a complex structure, such as ours, may reduce the contrast of the autocorrelation peak substantially, and reconstruction may become impossible. Although the theoretical FOV of our scheme is nearly half the memory effect region, our scheme can reconstruct complex objects effectively over a wider FOV than other existing schemes. This is because, for complex objects, the practical FOV is limited by the contrast reduction of the correlation function rather than by the region of the memory effect. Irrespective of the object’s complexity, the proposed technique permits diffraction-limited image reconstruction directly from an instantaneous single-shot specklegram without recourse to an iterative phase retrieval algorithm. This advantage can be utilized for the imaging of a biological sample using fluorescent beads and also in imaging of dynamic objects through dynamic diffusing (turbulent) media. The magnification of our system can be varied by moving the scattering layer closer to or farther from the object.

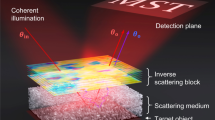

Imaging using a thick biological tissue

We performed the imaging using a ‘thick’ biological tissue, which challenges the validity of the proposed technique for a wider class of diffusive media. A 1.5-mm-thick chicken breast tissue sandwiched between two glass slides was used as an imaging system. The light scattered by this thick biological tissue was recorded as a speckle pattern. To optimize the speckle size on CCD, an aperture stop was attached to the diffuser. The amount of light passing through the thick diffuser is small, and the recorded speckle patterns are relatively dark (Figure 3b).

Chicken breast tissue as an imaging system: a 1.5-mm-thick chicken breast tissue sandwiched between two glass slides was used as a diffusive medium. Non-invasive imaging of the ‘letter H’ using a spatially incoherent light source was performed through this biological tissue. (a) The object, ‘letter H’ (500 × 330 μm), (b) part of the recorded speckle pattern, (c) the autocorrelation, and (d) the reconstructed object. The scale bars in a, b are 150 μm (20 pixels) and 447 μm (60 pixels), respectively.

However, our calibration-based imaging technique allows enhanced detection of the weak object light. This effect of signal enhancement, which originates from the cross-correlation term between the object and the reference in Equation (2), is similar to the heterodyne gain in coherent imaging systems. The reconstructed images through freshly sliced tissue are shown in Figure 3c and 3d.

The number of object points resolvable within the FOV is estimated to be (FOV/δrcorr.)²≈(D/t)², where t is the thickness of the tissue. The number of resolvable points decreases with increasing thickness of the tissue t but is independent of the wavelength of light, indicating the future possibility of using infrared light with higher penetration into a biological tissue.

3D imaging using the variable focusing of a diffusing medium

The lens-like behavior of the scattering medium as a wall lens31 permits imaging of an object in 3D space by variable focusing. Experiments were performed to demonstrate imaging and depth sensing of an object axially shifted in 3D space. We noted the ‘3D memory effect’ and exploited the axial shift invariance as a means of variable-depth focusing. Both reference and observation planes were set at ẑ = z = 600 mm from the diffuser (to which an aperture stop of diameter D=37 mm was attached) to allow the diffuser to function as a lens with the focal length f=300 mm and the nominal DOF δzcorr.≈6.7λ(z/D)² = 0.94 mm.
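The focal length and DOF quoted above follow from the wall-lens imaging condition and the axial speckle correlation length. A minimal sketch of that arithmetic, with illustrative function names and all lengths in millimetres:

```python
def wall_lens_focal_length(z_obj_mm, z_img_mm):
    """Focal length from the imaging condition 1/z_obj + 1/z_img = 1/f."""
    return 1.0 / (1.0 / z_obj_mm + 1.0 / z_img_mm)

def nominal_depth_of_focus(wavelength_mm, z_mm, aperture_mm):
    """Axial speckle correlation length, approximately 6.7 * lambda * (z / D)^2."""
    return 6.7 * wavelength_mm * (z_mm / aperture_mm) ** 2

focal_length = wall_lens_focal_length(600.0, 600.0)      # symmetric geometry
dof = nominal_depth_of_focus(532e-6, 600.0, 37.0)        # 532 nm expressed in mm

print(f"f = {focal_length:.1f} mm, DOF = {dof:.2f} mm")
```

Because the DOF scales as (z/D)², changing the aperture stop on the diffuser is what makes the depth of focus controllable in this scheme.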

To implement the principle described in Equation (1), a 3D object (combination of a rectangle and a triangle, laterally and axially separated by 6.3 and 2 mm, respectively) was chosen. The plane containing the triangle was kept in the reference plane (Δẑ = 0), in which the reference point source was placed; therefore, the rectangle is axially separated by Δẑ = −2 mm. A sequence of 15 speckle patterns I(r,0), I(r,δz), …, I(r,14δz) was then recorded for different shifts Δz = (n−1)δz (n = 1, 2, …, 15) by axially moving the image sensor in the forward direction in small steps of δz = 0.4 mm. The first speckle pattern I(r,0) was used as a reference speckle pattern for covariance calculations. At first, the image was reconstructed from the auto-covariance of I(r,0) as in the previous experiments. Because the axial separation of the rectangle from the reference plane (Δẑ = −2 mm) was more than two times the DOF (δzcorr. = 0.94 mm), the rectangle in the reconstructed image was defocused as shown in Figure 4a. To demonstrate variable focusing, the cross-covariance was computed between the reference speckle I(r,0) and each of I(r,δz), …, I(r,14δz); thus, different object planes were sequentially brought into focus. The refocusing of the rectangle is shown in Figure 4b. The refocused image of the rectangle was obtained from the covariance between the first and 6th speckle patterns. This means that the rectangle, which was axially separated from the triangle by Δẑ = −2 mm, was brought into focus by giving the relative shift of Δz = (6−1)δz = 2 mm to the recording plane. Given the DOF of 0.94 mm, the observed Δz = 2 mm is in good agreement with the theoretical prediction given by Δz = −Δẑ = 2 mm. The images between these two extremes were focused on the object planes in the range of −2 mm<Δẑ<0 mm, which came into focus when the CCD positions satisfied the imaging condition of the wall lens. It is important to note from Equation (2) that the direction of movement of the image sensor determines which of the two conjugate images will come into focus.
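The refocusing procedure can be sketched in a few lines. This is an illustrative outline rather than the code used for Figure 4: it assumes unit magnification (so that the in-focus sensor shift is Δz = −Δẑ), uses a circular FFT-based cross-covariance, and the function names and the synthetic self-check data are hypothetical.

```python
import numpy as np

def cross_covariance(ref_frame, frame):
    """Circular 2D cross-covariance of two frames; zero lag at index [0, 0]."""
    a = ref_frame - ref_frame.mean()
    b = frame - frame.mean()
    return np.fft.ifft2(np.conj(np.fft.fft2(a)) * np.fft.fft2(b)).real

def frame_index_for_depth(dz_obj_mm, step_mm):
    """1-based frame index n with (n - 1) * step = -dz_obj (unit magnification only)."""
    return int(round(-dz_obj_mm / step_mm)) + 1

def refocus_stack(frames):
    """Cross-covariance of the first (reference) frame with every frame in the stack."""
    return [cross_covariance(frames[0], f) for f in frames]

# Frame that should bring the rectangle (dz_obj = -2 mm) into focus for 0.4 mm steps.
n_focus = frame_index_for_depth(-2.0, 0.4)

# Self-check on synthetic data: a shifted copy of a random pattern gives a
# cross-covariance peak at the applied shift.
rng = np.random.default_rng(1)
pattern = rng.random((64, 64))
shifted = np.roll(pattern, shift=(3, 5), axis=(0, 1))
peak = np.unravel_index(np.argmax(cross_covariance(pattern, shifted)), pattern.shape)
print(n_focus, peak)
```

For the step size and object separation reported here, the index returned is the 6th frame, matching the frame at which the rectangle was observed to come into focus.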

3D imaging using a diffusing medium via spatial speckle intensity cross-covariance: (a) the object consisted of triangular and rectangular windows (enclosed in dashed square windows in both images), which are separated by 6.3 and 2 mm laterally and axially, respectively. (b) 3D imaging by the intensity cross-covariance method. The out-of-focus rectangular window in a (square 1) comes into focus (square 2) as the CCD is moved toward the in-focus plane. Multiple speckle patterns are recorded by moving the CCD in the forward direction with a step size of 0.4 mm. The images at different focus planes were reconstructed by computing the cross-covariance for these multiple speckle patterns. The scale bar is 1.5 mm.

Looking around a corner

Looking at an object out of the line of sight, such as behind a wall or around the corner, was achieved by implementing the principle of looking through a diffusing medium in a reflection mode. The speckled backscattered light from a diffused surface was used to image the hidden object.

As shown in Figure 5a, a rough aluminum plate was illuminated with reference and object lights. The object was kept out of the line of sight of a CCD sensor, which was placed in the far field with its center on the line normal to the rough aluminum plate so as to avoid specularly reflected light. The image was reconstructed from the auto-covariance of the speckle pattern created by the light scattered from the aluminum plate (Supplementary Fig. S2). The same resolution limit applies to this reflection mode of operation as to the transmissive diffuser mode because both are based on the same principle. The results are shown in Figure 5, in which (b) is the object, (c) is the recorded speckle pattern, (d) is the auto-covariance and (e) is the reconstructed object.
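A toy simulation can illustrate why the auto-covariance of a single speckle pattern contains an image of the hidden object. The sketch below rests on assumptions that are not stated in this section: the scattering surface is modeled as shift invariant (memory effect), so that the recorded intensity is a circular convolution of the source intensity with a speckle response, and that response is drawn as independent negative-exponential values. The scene, the array size and the names `auto_covariance` and `simulate` are hypothetical.

```python
import numpy as np

def auto_covariance(intensity):
    """Circular spatial auto-covariance via FFT, zero lag at the array centre."""
    d = intensity - intensity.mean()
    power = np.abs(np.fft.fft2(d)) ** 2
    return np.fft.fftshift(np.fft.ifft2(power).real) / d.size

def simulate(n=256, ref_amp=6.0, seed=1):
    rng = np.random.default_rng(seed)
    # Hypothetical hidden scene: a 4 x 8 pixel rectangle.
    obj = np.zeros((n, n))
    obj[120:124, 100:108] = 1.0
    # Off-axis reference point source, well separated from the object.
    ref_pos = (128, 160)
    src = obj.copy()
    src[ref_pos] = ref_amp
    # Assumed speckle response: independent negative-exponential intensities.
    psf = rng.exponential(1.0, size=(n, n))
    # Assumed shift-invariant model: incoherent sum of shifted speckle copies,
    # written as a circular convolution of source and speckle response.
    speckle = np.fft.ifft2(np.fft.fft2(src) * np.fft.fft2(psf)).real
    cov = auto_covariance(speckle)
    # One of the two twin images sits at lag (object position - reference
    # position); roll it back onto the object's own coordinates.
    c = n // 2
    recon = np.roll(cov, (ref_pos[0] - c, ref_pos[1] - c), axis=(0, 1))
    return obj, speckle, recon

obj, speckle, recon = simulate()
window = (slice(112, 132), slice(92, 116))   # region around the object
similarity = np.corrcoef(recon[window].ravel(), obj[window].ravel())[0, 1]
print(f"correlation between reconstruction and object: {similarity:.2f}")
```

In this model the auto-covariance holds a central term at zero lag and two point-inverted copies of the object displaced by the object-to-reference offset, which is why the reference must sit far enough off-axis for the copies to clear the central term.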

Imaging an object out of the line of sight: (a) the sketch of the ‘looking around a corner’ setup. A rough aluminum plate was illuminated with reference and object lights. The speckle pattern created by the light scattered from the aluminum plate was detected by a CCD image sensor at a position that avoided specularly reflected light. (b) The object hidden around the corner, (c) a part of the recorded speckle pattern, (d) the auto-covariance of the speckle intensity and (e) the reconstructed object image. The scale bars in b, c are 150 and 447 μm (60 pixels), respectively.

Conclusion

We proposed a lensless, spatially incoherent imaging technique that uses any diffusing surface as an optical element to perform lens-like imaging. Although the experimental results were presented only for the case of unit magnification, the technique can be applied to other magnifications by properly rescaling the lateral and axial coordinates. Its prominent features, e.g., variable focusing and magnification, flexible working distances, and the ability to work in reflection and transmission modes and in low-light conditions, make this technique unique. Its ability to image complex objects with a large FOV is another unique feature. Variable focusing permits depth sensing of 3D objects hidden behind scattering media by selectively focusing on a desired plane in a volume. While 3D imaging requires a minimum of two speckle patterns to be recorded, a 2D object can be reconstructed from a single-shot speckle pattern. This permits instantaneous imaging of a dynamic object through a moving diffuser or a turbulent medium. These advantages come with limitations; in particular, the need for a reference source may pose a constraint in certain applications. We have presented the results of preliminary experiments as a proof of principle. The results of the single-shot imaging through a polycarbonate diffuser and a ‘thick’ chicken breast tissue verify our claim of diffraction-limited wide-field imaging using a diffusing medium. The variable-depth focusing was demonstrated with a 3D object. To our knowledge, this is the first experimental demonstration of 3D imaging through a diffusing medium by noting and exploiting the 3D imaging potential of Freund’s wall lens for scattering media.

Author contributions

The conception of this work arose from joint discussions among all authors. The theoretical development of the principle was accomplished mainly by AS and DN and led by MT. Experiments were performed mainly by AS and led by GP and MT. WO conducted the overall research as the team leader. All authors discussed the principle and results of the experiments. The manuscript was written mainly by AS and MT with contributions from all authors.

References

Goodman JW, Huntley WH Jr, Jackson DW, Lehmann M . Wavefront reconstruction imaging through random media. Appl Phys Lett 1966; 8: 311–313.

Leith EN, Upatnieks J . Holographic imagery through diffusing media. J Opt Soc Am 1966; 56: 523.

Kogelnik H, Pennington KS . Holographic imaging through a random medium. J Opt Soc Am 1968; 58: 273–274.

Huang D, Swanson EA, Lin CP, Schuman JS, Stinson WG et al. Optical coherence tomography. Science 1991; 254: 1178–1181.

Velten A, Willwacher T, Gupta O, Veeraraghavan A, Bawendi MG et al. Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat Commun 2012; 3: 745.

Leith E, Chen C, Chen H, Chen Y, Dilworth D et al. Imaging through scattering media with holography. J Opt Soc Am A 1992; 9: 1148–1153.

Indebetouw G, Klysubun P . Imaging through scattering media with depth resolution by use of low-coherence gating in spatiotemporal digital holography. Opt Lett 2000; 25: 212–214.

Li SP, Zhong JG . Dynamic imaging through turbid media based on digital holography. J Opt Soc Am A 2014; 31: 480–486.

Hsieh CL, Pu Y, Grange R, Laporte G, Psaltis D . Imaging through turbid layers by scanning the phase conjugated second harmonic radiation from a nanoparticle. Opt Express 2010; 18: 20723–20731.

Yaqoob Z, Psaltis D, Feld MS, Yang C . Optical phase conjugation for turbidity suppression in biological samples. Nat Photonics 2008; 2: 110–115.

Vellekoop IM, Mosk AP . Focusing coherent light through opaque strongly scattering media. Opt Lett 2007; 32: 2309–2311.

Aulbach J, Gjonaj B, Johnson PM, Mosk AP, Lagendijk A . Control of light transmission through opaque scattering media in space and time. Phys Rev Lett 2011; 106: 103901.

Mosk AP, Lagendijk A, Lerosey G, Fink M . Controlling waves in space and time for imaging and focusing in complex media. Nat Photonics 2012; 6: 283–292.

Park J, Park JH, Yu H, Park YK . Focusing through turbid media by polarization modulation. Opt Lett 2015; 40: 1667–1670.

Ghielmetti G, Aegerter CM . Scattered light fluorescence microscopy in three dimensions. Opt Express 2012; 20: 3744–3752.

Ghielmetti G, Aegerter CM . Direct imaging of fluorescent structures behind turbid layers. Opt Express 2014; 22: 1981–1989.

Yang X, Hsieh CL, Pu Y, Psaltis D . Three-dimensional scanning microscopy through thin turbid media. Opt Express 2012; 20: 2500–2506.

Katz O, Small E, Silberberg Y . Looking around corners and through thin turbid layers in real time with scattered incoherent light. Nat Photonics 2012; 6: 549–553.

Katz O, Small E, Guan YF, Silberberg Y . Noninvasive nonlinear focusing and imaging through strongly scattering turbid layers. Optica 2014; 1: 170–174.

Popoff S, Lerosey G, Fink M, Boccara AC, Gigan S . Image transmission through an opaque material. Nat Commun 2010; 1: 81.

Zhou EH, Ruan HW, Yang CH, Judkewitz B . Focusing on moving targets through scattering samples. Optica 2014; 1: 227–232.

Harm W, Roider C, Jesacher A, Bernet S, Ritsch-Marte M . Lensless imaging through thin diffusive media. Opt Express 2014; 22: 22146–22156.

Naik DN, Singh RK, Ezawa T, Miyamoto Y, Takeda M . Photon correlation holography. Opt Express 2011; 19: 1408–1421.

Singh AK, Naik DN, Pedrini G, Takeda M, Osten W . Looking through a diffuser and around an opaque surface: a holographic approach. Opt Express 2014; 22: 7694–7701.

Osten W, Faridian A, Gao P, Körner K, Naik D et al. Recent advances in digital holography [Invited]. Appl Opt 2014; 53: G44–G63.

Takeda M, Wang W, Duan ZH, Miyamoto Y . Coherence holography. Opt Express 2005; 13: 9629–9635.

Naik DN, Ezawa T, Miyamoto Y, Takeda M . Real-time coherence holography. Opt Express 2010; 18: 13782–13787.

Paturzo M, Finizio A, Memmolo P, Puglisi R, Balduzzi D et al. Microscopy imaging and quantitative phase contrast mapping in turbid microfluidic channels by digital holography. Lab Chip 2012; 12: 3073–3076.

Bianco V, Paturzo M, Gennari O, Finizio A, Ferraro P . Imaging through scattering microfluidic channels by digital holography for information recovery in lab on chip. Opt Express 2013; 21: 23985–23996.

Takeda M, Singh A, Naik D, Pedrini G, Osten W . Holographic correloscopy - unconventional holographic techniques for imaging a three-dimensional object through an opaque diffuser or via a scattering wall: a review. IEEE Trans Industr Inform 2016; 12: 1631–1640.

Freund I . Looking through walls and around corners. Physica A 1990; 168: 49–65.

Freund I, Rosenbluh M, Feng SC . Memory effects in propagation of optical waves through disordered media. Phys Rev Lett 1988; 61: 2328–2331.

Bertolotti J, van Putten EG, Blum C, Lagendijk A, Vos WL et al. Non-invasive imaging through opaque scattering layers. Nature 2012; 491: 232–234.

Katz O, Heidmann P, Fink M, Gigan S . Non-invasive single-shot imaging through scattering layers and around corners via speckle correlations. Nat Photonics 2014; 8: 784–790.

Fienup JR . Phase retrieval algorithms: a comparison. Appl Opt 1982; 21: 2758–2769.

Takasaki KT, Fleischer JW . Phase-space measurement for depth-resolved memory-effect imaging. Opt Express 2014; 22: 31426–31433.

Liu HY, Jonas E, Tian L, Zhong JS, Recht B et al. 3D imaging in volumetric scattering media using phase-space measurements. Opt Express 2015; 23: 14461–14471.

Goodman JW . Analogy between holography and interferometric image formation. J Opt Soc Am 1970; 60: 506–509.

Dainty JC . Speckle interferometry in astronomy. In: Johnson H, Allen C, editors. Symposium on Recent Advances in Observational Astronomy. 1981 p95–111.

Goodman JW . Speckle Phenomena in Optics: Theory and Applications. Englewood: Roberts & Company; 2007, p84.

Dainty JC . Laser Speckle and Related Phenomena. Berlin: Springer; 1975.

Acknowledgements

Mitsuo Takeda and Dinesh N Naik are thankful to the Alexander von Humboldt Foundation for the opportunity of their research stay at ITO, Universität Stuttgart.

Ethics declarations

Competing interests

The authors declare no conflict of interest.

Additional information

Note: Supplementary Information for this article can be found on the Light: Science & Applications’ website.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/4.0/

About this article

Cite this article

Singh, A., Naik, D., Pedrini, G. et al. Exploiting scattering media for exploring 3D objects. Light Sci Appl 6, e16219 (2017). https://doi.org/10.1038/lsa.2016.219

DOI: https://doi.org/10.1038/lsa.2016.219
