Abstract

Although the effects of deleterious alleles often are predicted to be greater in stressful environments, there is no theoretical basis for this prediction and the empirical evidence is mixed. Here we characterized the effects of three types of abiotic stress (thermal, oxidative and hyperosmotic) on two sets of nematode (Caenorhabditis elegans) mutation accumulation (MA) lines that differ by threefold in fitness. We compared the survival and egg-to-adult viability between environments (benign and stressful) and between fitness categories (high-fitness MA, low-fitness MA). If the environment and mutation load have synergistic effects on trait means, then the difference between the high and low-fitness MA lines should be larger in stressful environments. Although the stress treatments consistently decreased survival and/or viability, we did not detect significant interactions between fitness categories and environment types. In contrast, we did find consistent evidence for synergistic effects on (micro)environmental variation. The lack of signal in trait means likely reflects the very low starting fitness of some low-fitness MA lines, the potential for cross-stress responses and the context dependence of mutational effects. In addition, the large increases in the environmental variance in the stressful environments may have masked small changes in trait means. These results do not provide evidence for synergism between mutation and stress.

Introduction

It is commonly believed by evolutionary biologists that the effects of deleterious alleles are magnified in stressful environments, where ‘stress’ is defined as ‘circumstances in which absolute fitness is reduced below the maximum possible’ (Armbruster and Reed, 2005; critiqued by Martin and Lenormand, 2006 and Agrawal and Whitlock, 2010). This supposed relationship between environmental stress and mutation has wide-ranging implications, from conservation biology to agriculture and public health. However, as pointed out by Agrawal and Whitlock (2010), there is no theoretical basis for supposing that deleterious effects should be greater in stressful environments, and the empirical evidence is decidedly mixed (for example, Kondrashov and Houle, 1994; Shabalina et al., 1997; Vassilieva et al., 2000; Jasnos et al., 2008; Rutter et al., 2010; Matsuba et al., 2013). The relationship between environmental stress and deleterious mutation is conceptually analogous to the relationship between different deleterious mutations: biological intuition suggests that, on average, epistasis should be synergistic (Kondrashov, 1995; Gillespie, 2004, Ch. 3). However, the empirical evidence for epistasis being generally synergistic is weak (for example, Sanjuán and Elena, 2006) and theoretical investigations have shown that the kind of epistasis that evolves sometimes depends on the particulars (for example, Azevedo et al., 2006).

An additional consideration is the degree to which the specific properties of deleterious mutations interact with the stressfulness of the environment. Most studies that have investigated this question have focused on inbreeding depression (for recent review, Reed et al., 2012). Although inbreeding depression is obviously important in its own right, the mutations responsible for inbreeding depression are a biased sample of all deleterious mutations because mutations with additive effects do not contribute to inbreeding depression and mutations with even moderately large effects that are not mostly recessive will have been removed by selection.

The most straightforward way to investigate the cumulative effects of an unbiased sample of deleterious mutations is by means of a mutation accumulation (MA) experiment, in which spontaneous mutations are allowed to accumulate in a population for which selection has been rendered minimally efficient (Mukai, 1964; reviewed by Halligan and Keightley, 2009). A number of researchers have investigated the effects of environmental context (including stressful environments) on the cumulative effects of spontaneous mutations. Overall, there is no consistent relationship between environmental stress and the mean effects of mutations, but the among-line variance (mutational variance, VM) tends to be greater in stressful environments (for review, Martin and Lenormand, 2006; Agrawal and Whitlock, 2010). Moreover, it has long been known that the (micro)environmental component of variance (VE, that is, the variance within a genetically uniform population raised under uniform conditions) is consistently greater under conditions of both genetic stress (for example, under inbreeding or in MA lines) and environmental stress relative to a wild-type genotype in a benign environment. That is, deleterious mutations and environmental stress both consistently ‘decanalize’ the phenotype (Baer, 2008; Gibson, 2009).

The lack of consistent signal in trait means combined with the consistently larger trait variances under stressful conditions is informative. Clearly, there is a class of mutant alleles that can have different effects under stressful conditions than under benign conditions. One possible explanation is that the stress-dependence (or not) of a deleterious mutation depends on the effect size of the mutation, similar to the well-documented relationship between effect size and dominance, wherein highly deleterious alleles are mostly recessive, whereas alleles of small effect are close to additive (Crow and Simmons, 1983).

To explore whether the lack of consistent signal in trait means is a consequence of comparing across MA lines that differ only slightly in fitness, we tested the effects of stressful environments on two sets of MA lines that were chosen specifically on the basis of large differences in fitness. Five lines were identified from each tail of the fitness distribution of a set of ~70 MA lines that accumulated mutations for 250 generations; on average, the five ‘high fitness’ MA lines have threefold higher fitness than the five ‘low fitness’ lines (Matsuba et al., 2012). Various lines of evidence suggest that the per-genome mutation rate in the base population is unlikely to be much less than one-half nor more than about two per generation (Denver et al., 2004; Phillips et al., 2009; Lipinski et al., 2011; Denver et al., 2012). Provided that the distribution of mutations among lines is Poisson (the standard assumption), it is very unlikely that the different sets of lines differ by nearly as much as threefold in the number of mutations unless the mutation rate itself has evolved. Preliminary evidence from whole-genome sequencing (~90% of the genome covered ⩾10 ×) suggests that the average rates of point mutations and small indels in MA lines derived from the high-fitness and low-fitness lines do not differ significantly (C F B, unpublished results). Thus, the difference in fitness between the high-fitness and low-fitness lines is probably not due to different average numbers of mutations between high-fitness and low-fitness lines.
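The Poisson argument above can be made concrete with a short calculation. The sketch below is illustrative only: it takes the two bounding mutation rates quoted in the text (0.5 and 2 per genome per generation) and the 250 generations of MA, and assumes that the number of mutations per line is Poisson distributed. The function names are ours, not part of the original analysis.

```python
import math


def expected_mutations(rate_per_generation: float, generations: int) -> float:
    """Expected number of accumulated mutations per line (the Poisson mean)."""
    return rate_per_generation * generations


def poisson_cv(mean_count: float) -> float:
    """Coefficient of variation of a Poisson count: sd / mean = 1 / sqrt(mean)."""
    return 1.0 / math.sqrt(mean_count)


GENERATIONS = 250  # generations of mutation accumulation, from the text

for rate in (0.5, 2.0):  # bounding per-genome rates quoted in the text
    lam = expected_mutations(rate, GENERATIONS)
    sd = math.sqrt(lam)  # Poisson standard deviation
    print(f"U={rate}: mean={lam:.0f}, sd={sd:.1f}, CV={poisson_cv(lam):.3f}")
```

Under these assumptions the among-line coefficient of variation in mutation number is roughly 9% at the lower rate and 4.5% at the higher rate, so a threefold difference in mutation number between sets of five lines would be far outside the expected sampling variation.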

We exposed the 10 MA lines and their common ancestor (G0) to three types of abiotic stress. As the influence of chronic thermal stress on mutational effects has been previously characterized (Vassilieva et al., 2000; Baer et al., 2006; Matsuba et al., 2013), we looked at acute thermal stress, imposing a treatment that was lethal by 30 h. We also exposed the nematodes to chronic oxidative stress (potential relationship with mutation is somewhat unclear, Joyner-Matos et al., 2011, 2013) and chronic hyperosmotic stress. We compared the trait mean for survival and egg-to-adult viability between environments (benign, stressful) and between fitness categories (high fitness, low fitness). If fitness effects are magnified under stressful conditions, then the reduction in survival and viability of the low-fitness MA lines relative to the high-fitness lines should be greater in the stressful environments than in the benign environments (as in inbreeding-stress interactions, Reed et al., 2012).
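The prediction can be written as a simple difference of differences. The sketch below uses hypothetical cell means (they are not data from this study) to show what a synergistic pattern would look like on the proportion scale; the formal test is the Fitness × Environment term of the mixed model described in the Statistical analyses section, not this contrast.

```python
def interaction_contrast(high_benign: float, low_benign: float,
                         high_stress: float, low_stress: float) -> float:
    """Return (high - low gap under stress) minus (high - low gap in benign).

    A positive value means the low-fitness lines fall further behind the
    high-fitness lines under stress, which is the synergistic prediction.
    """
    gap_benign = high_benign - low_benign
    gap_stress = high_stress - low_stress
    return gap_stress - gap_benign


# Hypothetical mean survival proportions, for illustration only.
synergy = interaction_contrast(0.90, 0.80, 0.50, 0.20)     # gap grows under stress
no_synergy = interaction_contrast(0.90, 0.80, 0.50, 0.40)  # gap unchanged
antagonism = interaction_contrast(0.90, 0.70, 0.40, 0.35)  # gap shrinks under stress

print(synergy > 0, abs(no_synergy) < 1e-9, antagonism < 0)
```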

Materials and methods

Stocks and assay preparation

The 10 starting stocks (generation 250) from the Matsuba et al. (2012) second order MA experiment and the canonical Baer lab N2 ancestor (Baer et al., 2005) were acquired as live worms at the time that Matsuba et al. conducted their fitness assay. All lines were cryopreserved using standard methods. Unless otherwise noted, all nematodes were maintained at 20 °C, cultured on nematode growth medium (NGM) agar plates (60 mm diameter) that were inoculated with 50 μl of the OP50 strain of Escherichia coli.

Assays were conducted in two blocks, with each block containing the ancestral control (generation 0, G0) and a randomly selected set of high-fitness MA lines and low-fitness MA lines (MA generation 250). As noted in the Statistical Analyses section, the G0 data were removed from the analysis; further methodological details about G0 are presented in Supplementary Information. Immediately after the MA lines were thawed, five of the individuals that had been frozen and then thawed were randomly selected to start five replicates. The replicated MA lines were carried through three generations of single-individual descent at 4-day intervals. The MA line replicates then were expanded to large population size for one generation and age-synchronized with hypochlorite bleaching. Eggs isolated by the bleaching were allowed to develop to adulthood in standard conditions; the resulting gravid adults were used in the thermal stress assay and the eggs from the gravid adults (gravid adults and eggs picked on the same day) were used in the oxidative and hyperosmotic stress assays. In each assay we started twice as many ‘stress’ plates/wells as ‘benign’ (control) plates/wells to account for failure of the stress treatment; all ‘stress’ plates/wells in which the nematodes were alive by the first check (thermal stress) or at least one egg hatched (oxidative and osmotic stresses) were used.

Stress assays

The acute thermal stress assay was modified from Lithgow et al. (1995) and compared the survival of gravid adult nematodes at 35 °C with those in benign conditions (20 °C). Adult nematodes were placed in groups of 10 on pre-warmed (35 °C) or control (20 °C) NGM agar plates that were inoculated with E. coli. There were two ‘stress’ (35 °C) plates and one ‘benign’ (20 °C) plate for each MA line replicate. Plates were assigned random numbers and handled in random numerical order; plates were maintained in separate incubators. We analyzed the proportion of nematodes on each plate that survived to 20 h.

The chronic oxidative stress assay was modified from Yanase et al. (2002) and measured the ability of nematodes to develop from egg to adulthood in the presence of a chemical that induces superoxide production. Paraquat (N,N’-dimethyl-4,4’-bipyridinium dichloride, from Sigma Aldrich, St Louis, MO, USA) was added to the NGM agar to a final concentration of 0.5 mM after the agar was autoclaved but before it was poured into the plates. Plates were inoculated with E. coli; because bacterial growth was somewhat slowed by the paraquat, we added substantial amounts of bacteria to the agar surface when nematodes were added to ensure that nematodes had sufficient food. Eggs were placed in groups of 10 on paraquat-containing plates or on control plates maintained at 20 °C. There were two ‘stress’ (paraquat) plates and one ‘benign’ (NGM) plate for each MA line replicate. Plates were assigned random numbers and handled in random numerical order. Plates were monitored daily for up to 7 days and the number of surviving nematodes and the developmental stage of each nematode were recorded. The assay for a given plate was stopped when individuals on that plate reached the gravid adult stage. After nematodes were visibly gravid (but before they laid eggs) they were transferred individually to fresh, seeded NGM plates and incubated at 20 °C for 24 h. After the 24 h, the adult nematodes were removed, and plates were incubated another 24 h at 20 °C to allow hatching. Plates were stored at 4 °C until stained and counted (Baer et al., 2005) to confirm fitness category and to confirm that the assay successfully imposed stress (by definition, ‘stressful’ environments decrease fitness, Martin and Lenormand, 2006). We analyzed the proportion of nematodes on a plate that survived to Day 3, the first day on which gravid adult nematodes were found on any plate. To assess developmental success, we analyzed egg-to-adult viability as a proportion of the 10 eggs placed on each plate.

The chronic hyperosmotic stress assay was modified from Solomon et al. (2004) and measured the ability of nematodes to develop from egg to adulthood in the presence of elevated salt. Sodium chloride was added to standard NGM (~50 mM NaCl) to a final concentration of 290 mM NaCl. Because the ‘high salt’ 60 × 15 mm agar plates dried and cracked, we conducted this assay in 24-well microwell plates. Each microwell received 3 ml of agar and was inoculated with E. coli; we did not note any effect of salt on bacterial growth. Eggs were placed in groups of five into each high salt well and in groups of 10 into each NGM well. There were four ‘stress’ (high salt) wells and one ‘benign’ (NGM) well per MA line replicate. The organization was randomized across each 24-well microwell plate. Wells were monitored daily, blind to ID, for up to 9 days and the number of surviving nematodes and developmental stage of each nematode was recorded. The assay for a given well was stopped when individuals in that well reached the gravid adult stage. Gravid adults were transferred to benign conditions for the reproduction assay (as above). We analyzed the proportion of nematodes in a well that survived to Day 3 and egg-to-adult viability as a proportion of the 5 or 10 eggs placed in each well.

We confirmed fitness categorization and efficacy of the chronic stress treatments using the first day’s reproduction of nematodes that were maintained in control conditions (NGM agar). We analyzed survival (pooled across lines) in the thermal stress assay to confirm the efficacy of the thermal stress treatment.

Statistical analyses

The typical approach used in nematode MA experiments is to assay the MA lines and their cryopreserved ancestor at the same time (for example, Keightley and Caballero, 1997). The ancestral (G0) control was initially included in these experiments but its performance was atypically bad in one block (atypical with respect to numerous fitness and performance assays of this stock over almost a decade). Rather than scale results relative to an unreliable control, we omit the ancestor altogether and simply compare the high-fitness and low-fitness lines to each other. Because we are interested in the relative performance of the two groups under different conditions and not in parameterizing mutational properties (for example, the mutational variance), the absence of the ancestral control is not a problem. We present G0 data in the Supplementary Tables.

Effects of fitness category and environment on trait mean

We analyzed five traits: survival in the acute thermal stress assay and survival and egg-to-adult viability in the chronic oxidative and hyperosmotic stress assays. To characterize the degree to which each of the five traits differed across fitness categories (high-fitness MA and low-fitness MA) and environment type (benign, stressful), we used restricted maximum likelihood as implemented in the MIXED procedure of SAS (code for all analyses is in the Supplementary Text). The independent variables were Environment (Benign, Stress), Fitness (High, Low), Block (1 or 2), Line (MA line nested within Fitness × Environment) and Replicate (nested within Line and serving as the residual variance). Environment, Fitness and Block were fixed effects; the other effects were random. Degrees of freedom were determined by the Kenward–Roger method. We tested the model Trait = Environment + Fitness + Block + Environment × Fitness + Block × Environment + Block × Fitness + Block × Environment × Fitness + Line(Environment × Fitness × Block) + Replicate(Line[Environment × Fitness × Block]). When the Block term was not significant, we repeated the analysis with the term removed.

We used χ2-tests in SigmaPlot (v. 11.0) to determine whether the distributions of ‘zeros’ (replicates in which no nematodes survived or achieved egg-to-adult viability) differed between environment types or fitness categories.

Change in variance

To assess the relationship between mutation load, environmental stress and the microenvironmental component of variance (VE), we calculated the squared within-line coefficient of variation (CV) for each MA line. Each data point was divided by its line mean; the variance among replicates of a line then provides a mean-standardized estimate of VE. Following Crow (1958), we designate the squared within-line CV as Iw; I is dimensionless and permits meaningful comparison of traits that are measured on different scales. We used a Wilcoxon signed-rank test to determine whether Iw (pooled across lines and traits) differs between environments. We used a Mann–Whitney rank sum test (a nonparametric analogue of the t-test) to determine whether Iw differs between fitness categories (pooled across traits) within each environment. We also used a Mann–Whitney rank sum test to compare the change in Iw between environments in the high-fitness and low-fitness MA lines (pooled across traits). These tests were conducted in SigmaPlot.
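As a minimal sketch of the Iw calculation described above, the function below divides each replicate value by its line mean and takes the variance of the standardized values. The use of the sample (n − 1) variance and the replicate values in the example are assumptions made for illustration; the original analysis was run in other software.

```python
from statistics import mean, variance


def squared_within_line_cv(replicate_values):
    """Return Iw, the squared within-line CV, for one line in one environment.

    Each value is divided by the line mean, and the sample variance of the
    standardized values is returned (equivalent to variance / mean**2).
    """
    line_mean = mean(replicate_values)
    if line_mean == 0:
        raise ValueError("Iw is undefined when the line mean is zero")
    standardized = [value / line_mean for value in replicate_values]
    return variance(standardized)  # sample variance; needs >= 2 replicates


# Hypothetical survival proportions for five replicates of one MA line.
benign = [0.9, 0.8, 1.0, 0.9, 0.9]
stress = [0.1, 0.6, 0.0, 0.4, 0.4]

print(squared_within_line_cv(benign) < squared_within_line_cv(stress))
```

Because Iw is standardized by the mean, a line whose replicates are mostly zeros in the stressful environment can show a very large Iw, and Iw cannot be computed at all when every replicate of a line is zero.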

Results

Confirmation of fitness categorization and efficacy of stress treatments

Nematodes from the low-fitness MA lines have, on average, 75% lower first day’s reproductive output in benign conditions (NGM agar) than do nematodes from the high-fitness MA lines (fitness, P=0.035; Supplementary Table S1). The efficacy of the stress treatments is illustrated by the significant Environment terms for all five traits (Table 1) and is apparent from the ~56% decrease in nematode survival in the thermal stress treatment (P-values are in Table 1, line means in Supplementary Table S2) and the ~72% and ~55% decreases in first day’s reproductive output of nematodes in the oxidative and hyperosmotic stress treatments, respectively (fitness, P=0.003; environment, P=0.019; fitness × environment, P=0.133; Supplementary Table S1). Summed across the five traits, the number of MA replicates in which none of the nematodes survive or achieve egg-to-adult viability (that is, the number of ‘zeros’ out of 941 data points) is nearly 10-fold higher in the stressful environments than in the benign (stress, 250 ‘zeros’; benign, 28 ‘zeros’; P<0.0001).

Differences in stress tolerance between fitness categories

Although exposure to thermal stress for 20 h decreases mean survival in all lines (Supplementary Table S2), survival does not differ significantly between fitness categories (Table 1).

In the oxidative stress assay we analyzed two traits: (1) the proportion of nematodes that survive to Day 3, which was the first day that gravid adults were found on any of the plates and (2) the egg-to-adult viability over the 7-day assay (MA line means and variances and assay block means are in Supplementary Table S3). The proportion of nematodes that survive 3 days is significantly lower in the stressful environment than in the benign and lower in the low-fitness MA lines than in the high-fitness MA lines (Table 1). This is the only trait for which we detect a significant Fitness × Environment term. However, the interaction is in the opposite direction of that predicted by the stress-dependent mutation effect hypothesis; compared with the high-fitness lines, four of the five low-fitness lines do relatively better under stressful conditions than under benign conditions (Supplementary Table S3). Although we detect a significant Block term for this trait (Supplementary Table S3), none of the interaction terms containing Block is significant (all P⩾0.182). Nematodes maintained in benign conditions reach the gravid adult stage in 3 or 4 days; nematodes in the oxidative stress environment require an average of 6 days (Supplementary Table S3). The proportion of nematodes that achieves egg-to-adult viability is significantly lower in the stressful environment than in the benign and significantly lower in the low-fitness lines than in high-fitness lines.

We analyzed the same two traits in the hyperosmotic stress assay, except that egg-to-adult viability was measured for 9 days (Supplementary Table S4). The ability of nematodes to survive to 3 days differs by environment but not by fitness category (Table 1). Nematodes in benign conditions develop to the gravid adult stage in 3 days; nematodes maintained on hyperosmotic agar require an average of 6 days. Although significantly fewer nematodes achieve egg-to-adult viability in the stressful environment than in the benign, viability does not differ between fitness categories (Table 1). The Block and the Block × Environment terms are significant for this trait (P⩽0.015; Supplementary Table S4).

Summed across traits and environments, the low-fitness MA lines have significantly more ‘zeros’ (190 ‘zeros’; 16 in benign, 174 in stress) than do the high-fitness MA lines (88 ‘zeros’; 12 in benign, 76 in stress) (P<0.001). Although ‘zeros’ are more prevalent in the stressful environments, there is no significant relationship between the effects of fitness category and environment type on the number of ‘zero’ plates (P=0.259).
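The test of association between fitness category and environment type can be sketched from the ‘zero’ counts reported above. The code below computes a 2 × 2 χ2 statistic with Yates’ continuity correction; applying that correction is our assumption about how the test was configured, and the function name is illustrative.

```python
import math


def yates_chi_square_2x2(a: int, b: int, c: int, d: int):
    """Chi-square test (1 d.f.) with Yates' correction for [[a, b], [c, d]].

    Returns (chi2, p). For 1 d.f., P(X > x) = erfc(sqrt(x / 2)).
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    corrected = max(abs(a * d - b * c) - n / 2, 0.0)
    chi2 = n * corrected ** 2 / (row1 * row2 * col1 * col2)
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# 'Zero' counts from the text: rows are low- and high-fitness MA lines,
# columns are benign and stressful environments.
chi2, p_value = yates_chi_square_2x2(16, 174, 12, 76)
print(f"chi2 = {chi2:.2f}, P = {p_value:.3f}")
```

With the correction applied, the P-value from these counts comes out close to the reported 0.259; without the correction it is somewhat smaller, although still not significant.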

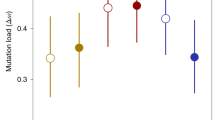

Changes in within-line variance

Pooled across traits and fitness categories, Iw is significantly greater in the stressful environments than in the benign (benign, 0.43±0.09; stress, 1.24±0.3; P=0.005; Table 2). Iw is significantly higher in the low-fitness lines than in the high-fitness lines in both environments (benign, P=0.044; stress, P=0.045). Pooled across the five traits, the change in Iw between stressful and benign environments does not differ significantly between fitness categories (high fitness, 0.19±0.1; low fitness, 1.42±0.5; P=0.44).

Discussion

Biological intuition leads to the supposition that deleterious effects should be magnified in stressful environments, but the evidence is decidedly inconsistent. One possible source of variation is that the stress-sensitivity of deleterious effects may depend on the magnitude of the effect under non-stressful conditions, analogous to the well-known relationship between dominance and effect size (Crow and Simmons, 1983). Here, we took advantage of two sets of MA lines previously identified to differ by about threefold in absolute fitness under benign conditions (Matsuba et al., 2012). This pattern in fitness is almost certainly due primarily to differences in the mutational effects rather than in the average number of mutations carried by the individual lines. Because the type of stress imposed potentially influences the strength of selection against mutations (for example, Wang et al., 2013), we exposed the MA lines to three classes of abiotic stress: acute thermal stress, chronic oxidative stress and chronic hyperosmotic stress.

The hypothesis of synergism between mutation load and environmental stress predicts that differences in mean survival and viability between the low-fitness and high-fitness MA lines should be greater in the stressful environments than in the benign. Our results do not provide any evidence to support this hypothesis. In fact, it is only in the benign environment that trait means of the low-fitness MA lines are consistently lower than those of the high-fitness lines. This pattern is not without precedent (Kishony and Leibler, 2003; Jasnos et al., 2008) and typically is attributed to context-dependent mutational effects (for example, Rutter et al., 2010). This lack of consistent synergism between fitness and environment also could occur when the lines reach a lower ‘plateau’ beyond which the only possible trait value is zero. Zeros are more prevalent in the low-fitness MA lines than in the high-fitness MA lines and more prevalent in the stressful environments than in the benign (although the fitness × environment term was not significant). Finally, the lack of statistical support for synergy (the fitness × environment terms) may partly reflect those cases in which low-fitness MA lines have higher survival or viability than do the high-fitness MA lines. A pattern emerges from the comparison of low-fitness MA lines with block-specific high-fitness MA means. Across the three benign environments, only two low-fitness MA lines have higher survival/viability than the high-fitness MA lines (MA Line 508 in the thermal stress assay, MA Line 550 in both traits in the hyperosmotic stress assay; see Supplementary Tables). In the stressful environments, low-fitness MA lines frequently outperform high-fitness MA lines. Three of the five low-fitness MA lines have higher survival in thermal stress than do the high-fitness MA lines and (a different set of) three low-fitness MA lines have higher survival in oxidative stress. Interestingly, none of the low-fitness lines outperforms the high-fitness MA mean in the hyperosmotic stress assay. These patterns may reflect a form of cross-stress response (for example, Dragosits et al., 2013) as there is potential for overlap between physiological responses to genomic and abiotic stresses (Reed et al., 2012), and may reflect common features that underlie cellular responses to thermal and oxidative stresses (for example, Morano et al., 2012; Choe, 2013).

The current study joins a long list of works in which exposure to environmental stress does not necessarily alter trait means but does alter variances. Across taxa, stress tends to increase mutational (among-line) variance in MA lines and in inbred lines (for review, Martin and Lenormand, 2006; Agrawal and Whitlock, 2010; Reed et al., 2012). The relationship between stress and within-line, or microenvironmental, variance is not as clear, in part because studies tend to report within-line variance but do not test hypotheses about it (for example, Fry and Heinsohn, 2002). Several studies have documented increases in microenvironmental variance with exposure to a new, and generally stressful, environment (for example, Rutter et al., 2010); this increased variance could reflect variance in expression of individual genes (for example, Kristensen et al., 2006). As expected, given previous work with Caenorhabditis MA lines (Baer, 2008; Matsuba et al., 2012), we detected higher within-line variance in the low-fitness MA lines than in the high-fitness MA lines. In addition, within-line variance was higher when measured in the stressful environment, particularly when pooled across the five traits. Although synergistic effects of genomic and abiotic stresses are apparent in a visual inspection of the data, the small number of MA lines limits the power to detect a significant interaction.

In summary, the lack of significant differences in trait means between fitness categories likely reflects multiple factors, including the context dependence of mutational effects, the potential for cross-stress responses and the very low starting fitness of some low-fitness MA lines. In addition, large increases in the environmental variance in the stressful environments may have masked small changes in trait means. These results provide no support for synergism between mutation and stress. It is possible that we could detect a synergistic effect between environmental stress and mutation load if the abiotic stress also imposed a density-dependent effect (Agrawal and Whitlock, 2010).

Data archiving

Data available from the Dryad Digital Repository: http://dx.doi.org/10.5061/dryad.vq452.

References

Agrawal AF, Whitlock MC . (2010). Environmental duress and epistasis: how does stress affect the strength of selection on new mutations? Trends Ecol Evol 25: 450–458.

Armbruster P, Reed DH . (2005). Inbreeding depression in benign and stressful environments. Heredity 95: 235–242.

Azevedo RBR, Lohaus R, Srinivasan S, Dang KK, Burch CL . (2006). Sexual reproduction selects for robustness and negative epistasis in artificial gene networks. Nature 440: 87–90.

Baer CF . (2008). Quantifying the decanalizing effects of spontaneous mutations in rhabditid nematodes. Am Nat 172: 272–281.

Baer CF, Phillips N, Ostrow D, Avalos A, Blanton D, Boggs A et al. (2006). Cumulative effects of spontaneous mutations for fitness in Caenorhabditis: role of genotype, environment, and stress. Genetics 174: 1387–1395.

Baer CF, Shaw F, Steding C, Baumgartner M, Hawkins A, Houppert A et al. (2005). Comparative evolutionary genetics of spontaneous mutations affecting fitness in Rhabditid nematodes. Proc Natl Acad Sci USA 102: 5785–5790.

Choe KP . (2013). Physiological and molecular mechanisms of salt and water homeostasis in the nematode Caenorhabditis elegans. Am J Physiol Regul Integr Comp Physiol 305: R175–R186.

Crow JF . (1958). Some possibilities for measuring selection intensities in man. Hum Biol 30: 1–13.

Crow JF, Simmons MJ . (1983). The mutation load in Drosophila. In: Ashburner M, Carson HL, Thompson JN (eds). The Genetics and Biology of Drosophila. Academic Press: London, UK. pp 1–35.

Denver DR, Morris K, Kewalramani A, Harris KE, Chow A, Estes S et al. (2004). Abundance, distribution, and mutation rates of homopolymeric nucleotide runs in the genome of Caenorhabditis elegans. J Mol Evol 58: 587–595.

Denver DR, Wilhelm LJ, Howe DK, Gafner K, Dolan PC, Baer CF . (2012). Variation in base-substitution mutation in experimental and natural lineages of Caenorhabditis nematodes. Genome Biol Evol 4: 513–522.

Dragosits M, Mozhayskiy V, Quinones-Soto S, Park J, Tagkopoulos I . (2013). Evolutionary potential, cross-stress behavior and the genetic basis of acquired stress resistance in Escherichia coli. Mol Syst Biol 9: 643.

Fry JD, Heinsohn SL . (2002). Environment dependence of mutational parameters for viability in Drosophila melanogaster. Genetics 161: 1155–1167.

Gibson G . (2009). Decanalization and the origin of complex disease. Nat Rev Genet 10: 134–140.

Gillespie JH . (2004). Population Genetics: A Concise Guide. Johns Hopkins University Press: Baltimore, MD, USA.

Halligan DL, Keightley PD . (2009). Spontaneous mutation accumulation studies in evolutionary genetics. Annu Rev Ecol Evol Syst 40: 151–172.

Jasnos L, Tomala K, Paczesniak D, Korona R . (2008). Interactions between stressful environment and gene deletions alleviate the expected average loss of fitness in yeast. Genetics 178: 2105–2111.

Joyner-Matos J, Bean LC, Richardson H, Sammeli T, Baer CF . (2011). No evidence of elevated germline mutation accumulation under oxidative stress in Caenorhabditis elegans. Genetics 189: 1439–1447.

Joyner-Matos J, Hicks KA, Cousins D, Keller M, Denver DR, Baer CF et al. (2013). Evolution of a higher intracellular oxidizing environment in Caenorhabditis elegans under relaxed selection. PLoS One 8: e65604.

Keightley PD, Caballero A . (1997). Genomic mutation rates for lifetime reproductive output and lifespan in Caenorhabditis elegans. Proc Natl Acad Sci USA 94: 3823–3827.

Kishony R, Leibler S . (2003). Environmental stresses can alleviate the average deleterious effect of mutations. J Biol 2: 14.

Kondrashov AS . (1995). Contamination of the genome by very slightly deleterious mutations: why have we not died 100 times over? J Theor Biol 175: 583–594.

Kondrashov AS, Houle D . (1994). Genotype-environment interactions and the estimation of the genomic mutation rate in Drosophila melanogaster. Proc R Soc Lond B 258: 221–227.

Kristensen TN, Sorensen P, Pedersen KS, Kruhoffer M, Loeschcke V . (2006). Inbreeding by environmental interactions affect gene expression in Drosophila melanogaster. Genetics 173: 1329–1336.

Lipinski KJ, Farslow JC, Fitzpatrick KA, Lynch M, Katju V, Bergthorsson U . (2011). High spontaneous rate of gene duplication in Caenorhabditis elegans. Curr Biol 21: 1–5.

Lithgow GJ, White TM, Melov S, Johnson TE . (1995). Thermotolerance and extended life-span conferred by single-gene mutations and induced by thermal stress. Proc Natl Acad Sci USA 92: 7540–7544.

Martin G, Lenormand T . (2006). The fitness effect of mutations across environments: a survey in light of fitness landscape models. Evolution 60: 2413–2428.

Matsuba C, Lewis S, Ostrow DG, Salomon MP, Sylvestre L, Tabman B et al. (2012). Invariance (?) of mutational parameters for relative fitness over 400 generations of mutation accumulation in Caenorhabditis elegans. Genes Genom Genet 2: 1497–1503.

Matsuba C, Ostrow DG, Salomon MP, Tolani A, Baer CF . (2013). Temperature, stress and spontaneous mutation in Caenorhabditis briggsae and Caenorhabditis elegans. Biol Lett 9: 20120334.

Morano KA, Grant CM, Moye-Rowley WS . (2012). The response to heat shock and oxidative stress in Saccharomyces cerevisiae. Genetics 190: 1157–1195.

Mukai T . (1964). The genetic structure of natural populations of Drosophila melanogaster. I. Spontaneous mutation rate of polygenes controlling viability. Genetics 50: 1–19.

Phillips N, Salomon M, Custer A, Ostrow D, Baer CF . (2009). Spontaneous mutational and standing genetic (co)variation at dinucleotide microsatellites in Caenorhabditis briggsae and Caenorhabditis elegans. Mol Biol Evol 26: 659–669.

Reed DH, Fox CW, Enders LS, Kristensen TN . (2012). Inbreeding-stress interactions: evolutionary and conservation consequences. Ann NY Acad Sci 1256: 33–48.

Rutter MT, Shaw FH, Fenster CB . (2010). Spontaneous mutation parameters for Arabidopsis thaliana measured in the wild. Evolution 64: 1825–1835.

Sanjuán R, Elena SF . (2006). Epistasis correlates to genomic complexity. Proc Natl Acad Sci USA 103: 14402–14405.

Shabalina SA, Yapolsky LY, Kondrashov AS . (1997). Rapid decline of fitness in panmictic populations of Drosophila melanogaster maintained under relaxed natural selection. Proc Natl Acad Sci USA 94: 13034–13039.

Solomon A, Bandhakavi S, Jabbar S, Shah R, Beitel GJ, Morimoto RI . (2004). Caenorhabditis elegans OSR-1 regulates behavioral and physiological responses to hyperosmotic environments. Genetics 167: 161–170.

Vassilieva LL, Hook AM, Lynch M . (2000). The fitness effects of spontaneous mutations in Caenorhabditis elegans. Evolution 54: 1234–1246.

Wang AD, Sharp NP, Agrawal AF . (2013). Sensitivity of the distribution of mutational fitness effects to environment, genetic background, and adaptedness: a case study with Drosophila. Evolution 68: 840–853.

Yanase S, Yasuda K, Ishii N . (2002). Adaptive responses to oxidative damage in three mutants of Caenorhabditis elegans (age-1, mev-1 and daf-16) that affect life span. Mech Ageing Dev 123: 1579–1587.

Acknowledgements

This work was supported by Eastern Washington University (EWU) start-up funds to JJ-M, by an EWU Biology Department Minigrant to JRA and by NSF DEB-0717167 to CFB.

Ethics declarations

Competing interests

The authors declare no conflict of interest.

Additional information

Supplementary Information accompanies this paper on Heredity website

Cite this article

Andrew, J., Dossey, M., Garza, V. et al. Abiotic stress does not magnify the deleterious effects of spontaneous mutations. Heredity 115, 503–508 (2015). https://doi.org/10.1038/hdy.2015.51

DOI: https://doi.org/10.1038/hdy.2015.51