Abstract

Psychophysical studies have frequently found that adults with normal hearing exhibit systematic errors (biases) in their auditory localisation judgments. Here we tested (i) whether systematic localisation errors could reflect reliance on prior knowledge, as has been proposed for other systematic perceptual biases, and (ii) whether auditory localisation biases can be reduced following training with accurate visual feedback. Twenty-four normal hearing participants were asked to localise the position of a noise burst along the azimuth before, during, and after training with visual feedback. Consistent with reliance on prior knowledge to reduce sensory uncertainty, we found that auditory localisation biases increased when auditory localisation uncertainty increased. Specifically, participants mis-localised auditory stimuli as being more eccentric than they were, and did so more when auditory uncertainty was greater. However, biases also increased with eccentricity, despite no corresponding increase in uncertainty, which is not readily explained by use of a simple prior favouring peripheral locations. Localisation biases decreased (improved) following training with visual feedback, but the reliability of the visual feedback stimulus did not change the effects of training. We suggest that further research is needed to identify alternative mechanisms, besides use of prior knowledge, that could account for increased perceptual biases under sensory uncertainty.

Similar content being viewed by others

Introduction

In daily life we are constantly surrounded by sounds originating from different locations within our environment, and we are usually able to estimate the source of the sound without any noticeable bias. However, psychophysical studies have found that, when asked to align a visual stimulus with a sound source, human adults show systematic errors in their judgments. Many studies report overestimations of the azimuth of a sound source1,2, though underestimations have been documented too3. Here we tested whether (1) such biases might indicate reliance on a “prior” for sound location, in line with Bayesian Decision Theory4 (detailed below), and whether (2) subjects can learn to reduce such biases after training with accurate visual feedback.

Lewald and Ehrenstein1 asked healthy participants (with normal sight and hearing) to adjust the position of a laser spot toward the perceived location of a band-pass filtered noise. Results indicated a general tendency to overestimate auditory eccentricity, with greater overestimations for more eccentric sound sources (reaching up to 10.4 degrees overestimation at 22 degrees eccentricity). In addition to eccentricity1,5, auditory localisation errors have been found to vary in magnitude according to the elevation6, frequency1, or bandwidth7 of the auditory stimulus, and according to the participant’s age2, eye8,9 or head10 position, and method of response10.

Differences in patterns of systematic error across response methods, or different eye and head positions, suggest that biases in somatosensory or visual modalities may contribute to the auditory localisation bias. For example, participants asked to point toward a transient visual stimulus have been found to overestimate the target’s position when fixating straight ahead, but to underestimate it when eye movements toward the target are allowed11 (but note biases independent of eye movements have also been reported12). However, regardless of the direction, size, or cause of the visual-auditory misalignments measured, the question arises as to why any perceived systematic discrepancy exists? Visual and auditory space have different frames of reference: visual space is initially eye-centred, based on direct projections to the retina, whereas auditory space is initially head-centred, computed from binaural differences and spectral cues. Consequently, one proposed explanation is that auditory-visual spatial mismatches arise due to shortcomings in accounting for the position of the eyes relative to the head when relating auditory to visual representations of location13,14. However, systematic errors have been documented even when the eyes remain stationary at straight ahead with respect to the head1,3,14. Moreover, humans continuously receive visual and auditory feedback from their environment, which should enable them to detect and correct for misalignments in cross-modal spatial representations. For example, wearing prism glasses causes the visual field to shift, altering the visual-motor mapping, but after a brief period of exposure, human adults quickly adapt to the visual displacement15. Hence, it is unclear why humans would not learn to similarly adapt or ‘recalibrate’ their visual-auditory mapping, so as to reduce any spatial inconsistencies.

Human perception and action are increasingly understood in terms of Bayesian Decision Theory (BDT16,17,18;). There is evidence that the nervous system reduces the overall uncertainty of estimates by combining multiple sensory estimates both with each other and also with prior knowledge18,19,20. The ideal (Bayesian) observer would change their relative reliance on each estimate (e.g. on vision vs. audition19), and on prior knowledge, as the relative reliabilities (i.e. signal-to-noise ratios) of each changes. This theoretical prediction is frequently met by behavioural data18,19,20, and also, more recently, by neuronal representations in animal models21.

The BDT framework has provided novel explanations of several important perceptual phenomena. For example, the classic effect of “visual capture” on non-visual perception in multisensory tasks has been shown to reverse when the relative cue reliabilities change19,20; and this behaviour is also observed in a BDT idea-observer, for whom perception is driven by the reliability-weighted average of each sensory estimate. Additionally – and of direct relevance to the present study – previously unexplained systematic biases in perception have been proposed to reflect Bayesian reliance on prior knowledge. For example, humans’ systematic underestimation of the speed of moving objects has been explained by use of a “slow motion prior”, reflecting the real-world statistics that objects are most likely to be static in natural visual scenes22,23. More recently, an equivalent slow-motion prior, leading to underestimations in the speed of sound motion has been reported24.

Evidence that systematic biases reflect the use of Bayesian priors come from findings that biases increase as sensory uncertainty increases. For example, the speed of a lower-contrast visual motion stimulus is more difficult to judge precisely and is also perceived as slower25, consistent with increased reliance on a prior for slow speeds22. In the present study we asked whether a similar approach might account for long-documented but, as yet, unexplained biases in human auditory localisation. Specifically, we tested, to our knowledge for the first time, whether auditory localisation biases increase as sensory uncertainty increases (i.e. signal-to-noise ratio decreases). Such a result would be consistent with use of a Bayesian prior for the eccentricity of sound sources.

A second aim of our study was to ask whether auditory localisation biases could be reduced after training with accurate visual feedback, and if so, whether feedback reliability impacted learning. Previous studies have found that sound localisation biases introduced experimentally by manipulating auditory cues are reduced following training26,27,28. To account for a bias, the perceptual system needs to identify the cause of the error as systematic as opposed to random (reflecting uncertainty in the sensory representation or prior). In line with this, adults have been found to more quickly reduce systematic errors in their motor reaching responses when the position of visual feedback was more certain29. Here we tested whether the effect of training on reductions in auditory localisation errors would similarly depend on the reliability of visual feedback. If participants were using visual feedback to adjust systematic errors (in the prior or sensory representation) we would expect participants trained with more reliable visual feedback to show greater improvements in accuracy. Alternatively, improvements in auditory localisation could reflect reduced reliance on prior knowledge due to changes in the uncertainty of the prior or sensory representation.

Results

First we considered whether biases in auditory localisation might be explained by the existence of a Bayesian prior. According to BDT, the influence of prior knowledge should increase when sensory information is less reliable. To test this, we measured participants’ auditory and visual localisation biases and variability, before any training, and assessed whether biases in auditory localisation increased as the signal-to-noise ratio (reliability) of the auditory stimulus decreased.

Variability and bias before training

Participants localised “more reliable” (A1, V1) and “less reliable” (A2, V2) auditory and visual stimuli. To manipulate the reliability of visual and auditory localisation, the background noise level was increased and the number of visible LEDs was reduced (see Method for details). Figure 1 shows mean auditory and visual localisation variability for each of the locations at which stimuli were presented (A) and across all locations (B). As intended, increasing the background noise level significantly increased the variability (i.e. reduced the reliability) of auditory localisation (σA2 > σA1, t[23] = 5.88, p < 0.001). Similarly, reducing the number of visible LEDs significantly increased the variability of visual localisation (σV2 > σV1, t[23] = 7.94, p < 0.001). Hence, our cue manipulations succeeded in varying sensory uncertainty.

(A) Mean variability at each location for each stimulus. (B) Mean variability across locations for each stimulus. *95% CI excludes 0; **99% CI excludes 0. (C) Mean bias at each location for each stimulus. **Means differ significantly on paired t-test with p < 0.01. (D) Mean bias for each stimulus across all locations tested. Grey dotted line in C indicates the line predicted by responding according to the mean of the target stimulus set. Black dotted line in C indicates the line predicted by responding according to the mean of the speakers presenting background noise. Error bars represent the standard error of the mean.

Figure 1C shows mean auditory and visual localisation biases at each of the locations at which stimuli were presented. Participants tended to overestimate the eccentricity of auditory stimuli at each of the locations tested, especially the “less reliable” auditory stimulus A2. Estimates of visual stimuli were more accurate. Biases are not explained by participants’ responding towards the mean location of the target stimulus set (depicted in Fig. 1C by the grey dashed line) or the mean location of the background speaker set (depicted in Fig. 1C by the black dashed line).

If participants combined sensory evidence with prior knowledge in the manner of an ideal (Bayesian) observer, they would weight prior knowledge more as sensory evidence becomes less reliable (more uncertain). Hence, if biases in localisation reflect (at least partly) the use of a prior, biases would be expected to increase as the reliability of sensory information decreases. As Fig. 1D shows, auditory localisation biases were significantly greater for the less reliable auditory cue than more reliable auditory cue (μA2 > μA1, t[23] = 7.56, p < 0.001). Mean biases (across locations) were also significantly greater for the less reliable visual cue than more reliable visual cue (μV2 > μV1, t[23] = 2.83, p = 0.009), however, participants did not consistently overestimate the azimuth of less/more reliable visual stimuli across all locations tested (see Fig. 1C).

Effects of eccentricity on variability and bias

In addition to the overall effects described in Fig. 1B and 1D, we also looked at the patterns of variability and bias across different locations (azimuths); Fig. 1A and 1C. Repeated-measures ANOVAs with stimulus reliability (A1, A2 or V1, V2) and azimuth (0, 2, 6, 9, 13 degrees) as within-subject factors were used to assess whether there was any effect of azimuth, or any interaction between reliability and azimuth, on localisation variability or bias. Where the assumption of sphericity was not met, Greenhouse-Geisser corrected values are reported.

For localisation variability with the auditory stimuli (A1, A2; Fig. 1A), a repeated-measures ANOVA indicated that there was a significant effect of stimulus reliability (F[1, 22] = 31.09, p < 0.001), but no significant effect of azimuth (F[2.78, 61.24] = 1.02, p = 0.385) and no significant interaction between reliability and azimuth (F[2.61, 57.43] = 1.52, p = 0.224). For localisation variability with the visual stimuli (V1, V2; Fig. 1A), a repeated-measures ANOVA indicated that there was a significant effect of both stimulus reliability (F[1, 23] = 63.00, p < 0.001) and azimuth (F[4, 92] = 17.27, p < 0.001), but no significant interaction between reliability and azimuth (F[4, 92] = 0.53, p = 0.715). Besides the main effects of our reliability manipulations (also seen in Fig. 1B), this shows (i) for audition, no further effect or interaction related to azimuth, i.e. similar variability across azimuths, whereas (ii) for vision, a main effect related to azimuth, with reliability decreasing away from central vision, as might be expected.

For localisation bias with the auditory stimuli (A1, A2; Fig. 1C), a repeated-measures ANOVA showed a significant effect of stimulus reliability (F[1, 22] = 54.24, p < 0.001) and azimuth (F[1.79, 39.28] = 5.08, p = 0.013), but no significant interaction between stimulus and azimuth (F[2.70, 59.32] = 2.55, p = 0.070). For localisation bias with the visual stimuli (A1, A2; Fig. 1C), there was a significant effect of stimulus reliability (F[1, 23] = 8.03, p = 0.009) and azimuth (F[1.89, 43.35] = 14.05, p < 0.001), but no significant interaction between reliability and azimuth (F[2.63, 60.37] = 2.33, p = 0.091). Besides the main effects of our reliability manipulations (also seen in Fig. 1D), this shows that for both audition and vision the degree of bias changed with eccentricity (main effect of azimuth), but in a similar manner for both reliability levels (no interaction).

Accounting for patterns of bias

With the exception of the 0 degrees location – which may be atypical, as it corresponds with the location of the fixation point – biases tended to increase with azimuth (Fig. 1C). According to Bayesian principles, this could be explained by a non-uniform prior (i.e., one that favoured more eccentric responses), combined with a situation in which sensory uncertainty increased as one progressed further from the azimuth (i.e., resulting in increasing reliance on the prior). However, in the case of auditory stimuli, uncertainty (variability) was quite stable (Fig. 1A), yet bias increased with azimuth (Fig. 1C). Specifically, post hoc comparisons with a Bonferonni adjustment showed that auditory localisation bias was significantly greater for stimuli presented at 13 degrees, than stimuli at 2 (p = 0.034), 6 (p = 0.022) and 9 (p = 0.040) degrees. Another explanation for non-uniform biases is that bias should be lower at locations favoured by the prior. However, the pattern of auditory biases in Fig. 1C also seems to run counter to this possibility: biases indicate overestimations in eccentricity (suggesting a prior shifted away from the centre), yet biases were greatest at the highest eccentricity – and so did not decrease, but increased, away from the centre.

A simple weighted linear combination of an unbiased Gaussian sensory likelihood and prior would predict that bias b varies with azimuth A by

where p is the azimuth of the prior and w is the weight given to the prior – this simplifies to

It is clear that this linear equation cannot produce positive gradients of the kind seen in Fig. 1C, since the weight term w has to be positive. The gradient is set by –wA, so when w is positive, the gradient –wA is negative.

We considered whether more complex interactions with, or forms of, the prior might explain these data, but were unable to formulate any that were convincing. For example, in “robust cue integration”30, estimates (here, the likelihood and the prior) are combined less as the conflict between them increases. However, for an effect of this kind to reverse the expected gradient so that priors increase (rather than decrease) towards the location of the prior (Fig. 1C) there would have to be extremely drastic changes in weighting for the prior over a very short span of azimuths. Our conclusion, therefore, is that although localisation biases increased with uncertainty, use of a prior in the BDT framework does not readily explain the entire pattern of results. For auditory localisation, bias appears to be a function both of the strength of the likelihood, and the absolute spatial location of the estimate.

Effects of training with feedback

To test whether sound localisation biases can be reduced with experience, we compared sound localisation during and after completion of auditory localisation with visual feedback, and compared biases and variability to before-training results.

Before-, during-, and after- training, all participants were asked to localise the less reliable auditory stimulus (A2); see Table 1. During training, half the participants received more reliable visual feedback (VF1), and half received less reliable feedback (VF2). The reliability of the visual feedback was manipulated to assess whether this affected the degree of any improvement in sound localisation accuracy. Errors in localisation could be systematic (bias), random (sensory uncertainty), or both. An ideal system would adapt more quickly to feedback that is more likely to reflect systematic than random errors29. If visual feedback were used to adjust inaccuracies, we might expect a more reliable visual feedback cue to lead to a greater reduction in bias. Repeated-measures ANOVAs with experimental phase (before-, during-, after- training) as the within-subjects factor and visual feedback reliability (VF1, VF2) as the between-subjects factor were run to assess whether phase or visual feedback reliability had an impact on A2-localisation bias or variability.

Bias with the trained auditory stimulus

As bias data did not meet the assumption of sphericity (χ2[2] = 12.72, p = 0.002), Greenhouse-Geisser corrections are reported. Experimental phase had a significant effect on bias (F[1.38,30.26] = 12.44, p < 0.001), while neither feedback reliability (F[1,22] = 0.02, p = 0.896) nor the interaction (F[1.38,30.26] = 0.97, p = 0.360) did. Figure 2A shows the mean bias for each of the experimental phases. Post hoc comparisons with a Bonferonni adjustment showed that bias was significantly reduced in training (t[23] = 4.02, Bonferonni-corrected p = 0.002) and after-training (t[23] = 3.26, Bonferonni-corrected p = 0.011) phases, compared to the before-training phase. There was no significant difference in bias between the after-training and during-training phase (t[23] = 1.89, Bonferonni-corrected p = 0.229).

Variability with the trained auditory stimulus

Experimental phase also had a significant effect on variability (F[2,44] = 17.06, p < 0.001). Feedback reliability did not have a significant effect (F[1,22] = 0.26, p = 0.614), although there was a significant interaction between phase and feedback reliability (F[2,44] = 5.65, p = 0.007): Participants trained with less reliable visual feedback showed significantly reduced variability during-training than before-training, whereas participants trained with more reliable visual feedback did not (see Supplementary Information S1). Figure 2B shows the mean variability for each of the experimental phases. Post hoc comparisons showed that variability was significantly reduced in training (t[23] = 4.46, Bonferonni-corrected p < 0.001) and after-training (t[23] = 4.17, Bonferonni-corrected p = 0.001) phases, compared to the pre-training phase. There was no significant change in variability between during-training and after-training phases (t[23] = 0.74, Bonferonni-corrected p > 0.999).

Bias and variability with the untrained auditory stimulus

Before- and after- training, all participants were also asked to localise the “more reliable” auditory stimulus (A1). Bias and variability of these judgments were analysed using repeated-measures ANOVAs with experimental phase (before- and after-training) as the within-subjects factor and visual feedback reliability (VF1, VF2) as the between-subject factor. Variability (F[1,22] = 16.91, p < 0.001) but not bias (F[1,22] = 3.89, p = 0.061) was significantly reduced in the after-training compared to before-training phase. There was no effect of visual feedback reliability on either bias (F[1,22] = 0.04, p = 0.836) or variability (F[1,22] = 0.09, p = 0.762), and no significant interactions (bias, F[1,22] = 0.09, p = 0.769; variability, F[1,22] = 0.54, p = 0.471). A1 bias and variability before- and after-training are plotted in Fig. 2.

We also asked whether the degree of change in bias or variability was different for the two auditory stimuli. Repeated-measures ANOVAs with experimental phase (before- and after-training) and stimulus (A1, A2) as the within-subjects factors, and visual feedback reliability (VF1, VF2) as the between-subject factor, indicated a significant interaction between experimental phase and stimulus for bias (F[1,22] = 7.14, p = 0.014), but not variability (F[1,22] = 0.63, p = 0.436). This reflected the finding reported above that bias was significantly reduced post-training for the “less reliable” (A2) stimulus, but not for the “more reliable” (A1) stimulus. There was no three-way interaction between experimental phase, stimulus and visual feedback, either in terms of bias (F[1,22] = 0.87, p = 0.362) or variability (F[1,22] = 0.75, p = 0.397). Note that measured A1 and A2 localisation biases were significantly greater than zero post-training (A1: t[23] = 3.42, p = 0.002, A2: t[23] = 5.26, p < 0.001), indicating that there was still scope for improvement.

The difference in change in bias across A1/A2 stimuli indicates that the results were not simply explained by participants changing their response strategy. The shift for the A2 stimulus was greater, in line with this stimulus being more noisy (and being the one that was trained). The different changes in bias are not explained by a “floor effect” (both biases reaching near-zero from different starting points), as for both A1 and A2 post-learning biases were significantly greater than zero (A1: t[23] = 3.42, p = 0.002, A2: t[23] = 5.26, p < 0.001; see Fig. 3A).

During the experiment, the speakers (outlined in blue) were hidden using an acoustically transparent curtain to ensure that participants were unaware of their locations. Participants maintained their head position fixed at straight ahead, using a chin rest (outlined in red), and entered responses using a dial (not visible) and keyboard (outlined in green).

Discussion

This study aimed to assess (i) whether systematic biases in auditory localisation can be explained by reliance on a prior and (ii) whether auditory localisation biases can be reduced following training with accurate visual feedback. We found that when the variability (uncertainty) of auditory localisation estimates increased, biases also increased. This is consistent with observers relying on prior knowledge to reduce the uncertainty in their auditory location estimates. However, inconsistent with reliance on prior knowledge, we found that biases towards the periphery also increased with eccentricity, despite no corresponding increase in uncertainty. We also saw significant effects of training: Both biases and variability declined in during- and after- training phases compared to before- training.

Participants showed a tendency to overestimate the eccentricity of a sound source that could not be explained by their simply responding toward the mean of the stimulus set (as has been reported for other judgments31,32). This systematic overestimation of sound location eccentricity is consistent with many previously reported findings1,13,14,33, although underestimations have also been reported3. Previous studies have explained biases in terms of factors such as distorted spatial working memory, leading to biases for transient as opposed to on-going auditory targets34,35, or errors in accounting for the position of the eyes relative to the head, causing mismatches between eye-centred visual space and head-centred auditory space13. Differences in the persistence and magnitude of biases have also been documented across age groups14 and different auditory frequencies or bandwidths1,7. We found that participants made systematic transient sound localisation errors, even though we controlled and monitored eye position (as did Refs 1, 3). We also found that biases increased with increased auditory localisation uncertainty. This overall pattern is consistent with the BDT prediction that participants combining sensory information with prior knowledge will show greater biases under greater sensory uncertainty. However, the full pattern of results across locations was not well explained by a prior, because a prior biasing estimates to be more eccentric should become less strong (not more) as eccentricity increases. This suggests that biases in auditory localisation are both a function of uncertainty and some other, as yet unknown, parameter.

Interestingly, as with auditory localisation, we also found that reducing the reliability of visual localisation led to an overall overestimation in the eccentricity of the visual target. However, relative to auditory biases, visual biases were small (<2°). Previous studies have found evidence of the use of a common prior for specific visual and auditory judgments (although as noted there are problems with interpreting our own results solely in terms of priors). For example, humans systematically underestimate the speed of moving objects and moving sounds, in line with their reliance on a common prior that objects in the environment are more likely to be static22,23,24. Here, however, unlike for auditory localisation, overestimations of visual target eccentricity were not observed at all azimuths tested, and for the more reliable visual stimulus, there was actually a tendency to underestimate the eccentricity of targets presented at central positions. Previous studies have also reported biases in visual target localisation, and these have been shown to vary according to the position of eye gaze11, the presence of other visual targets36,37, spatial attention38 and retention interval12. When, as in the present study, participants had to maintain fixation and head position at straight ahead, both consistent (2–4°) overestimations across eccentricities11,39 and underestimations that increase (up to 4°) with eccentricity have been found12. There is a cortical magnification of central visual space, since the fovea is represented by a higher number of neurons than the periphery. It is possible that visual biases reflect combined effects of (i) sensory likelihoods biased towards central space because of incomplete accounting for this magnification and (ii) a prior biased towards the periphery. The influences of these may vary by task, stimulus, and location. We found a visual bias whose direction and magnitude differed at different eccentricities and for the different visual cues. The two visual stimuli chosen to manipulate visual localisation reliability also differed in the extent of the visual field that they covered: the “more reliable” stimulus had a mean width of 24 degrees, whereas the “less reliable” stimulus had a mean width of 13 degrees. Since factors including the spatial distance between elements on a display37 have been found to influence visual biases, it may be that differences in the width and spacing of visual stimuli may account for the differences in the visual bias we observed.

To perceive objects and events in the environment accurately, and to adapt to bodily and sensory changes during development and ageing, humans must keep sensory estimates calibrated. Errors or perceived mis-matches (e.g. in prism adaptation15) provide the feedback for this kind of learning. One question raised in the Introduction was why auditory localisation biases should be so prevalent, given lifelong opportunities to correct errors. A BDT explanation, which we investigated, proposes that such “errors” might reflect use of a prior. In the second part of our study we also conducted an initial test to assess whether the reliability of visual feedback during training influences any reduction in auditory localisation biases. Auditory localisation variability, for the trained (less reliable) and untrained (more reliable) auditory stimulus, was reduced in after-training compared to before-training phases, irrespective of the reliability of visual feedback. Reductions in localisation bias were also seen for both auditory stimuli, but the reduction was greater for the less reliable (trained) auditory stimulus. One possibility for this reduction in bias following training is that participants simply adjusted their response strategy, so that their localisation estimates were closer to the feedback that had been provided. However, had this been the case, we would have expected participants to have adjusted their responses similarly for both the more and less reliable auditory stimuli, which they did not. This could, however, also reflect a failure to generalise any learning with the trained (A2) stimulus to the untrained (A1) stimulus.

During training, participants could have attributed localisation errors signalled by visual feedback to systematic error (bias) – due to a mismatch in auditory and visual spatial representations – or random – due to visual or auditory sensory uncertainty. Consequently, we had expected that observers provided with more reliable visual feedback would be more likely to attribute errors to a mismatch in the auditory and visual mapping, as opposed to random sensory noise29, and would therefore show greater learning (faster improvements in accuracy). However, visual feedback reliability did not influence learning.

A recent study Odegaard, et al.33 used a different approach to examine whether biased sensory likelihoods and/or priors account for visual and auditory localisation biases. They asked participants to localise auditory (noise burst) and visual (Gaussian disk) stimuli presented separately or together at various azimuthal locations (−13, −6.5, 0, 6.5, or 13 degrees), and then determined which of six quantitative models, that varied in terms of sensory likelihood and/or prior parameters, best fitted their data. Results indicated that participants tended to underestimate the eccentricity of visual-only stimuli and, consistent with our findings, overestimate the eccentricity of auditory-stimuli. Auditory and visual biases under bimodal stimulus presentation were dependent on whether the observer inferred common or independent causes for the simultaneously presented auditory and visual stimuli. Unimodal (auditory-only, visual-only) and bimodal (auditory and visual) data were best accounted for by a model that incorporated eight parameters, including a centrally biased visual-only likelihood, a peripherally biased auditory-only likelihood and a general prior for centre. This model was superior to others that assumed non-biased sensory likelihoods and unimodal priors. In the present study, we used an experimental manipulation, instead of a modelling approach, to investigate whether a prior could account for biases in auditory localisation. We found that auditory-only biases increased as auditory-only localisation uncertainty increased, which is consistent with increased reliance on an auditory (or general) peripheral spatial prior. However, it is not clear how this prior could account for biases that increase with eccentricity, despite stable uncertainty. It may be that a model incorporating both biased sensory likelihoods and unimodal (visual-central, auditory-peripheral) priors may be necessary to fully account for auditory and visual localisation biases. Further research that combines behavioural data with modelling approaches is needed to address whether and why such priors and sensory representation biases exist and, as discussed above, investigate mechanisms other than Bayesian use of priors that might explain perceptual biases that increase with uncertainty.

In summary, previous research has found that humans show biases in auditory localisation of varying magnitude and direction1,2,33. Here, we found that participants showed a tendency to overestimate the eccentricity of a sound source, and that these overestimations increased as a function of localisation uncertainty. This is consistent with the Bayesian Decision Theory principle that as sensory uncertainty increased participants increasingly relied on prior information. However auditory localisation overestimations also increased with eccentricity and it is not clear how a simple prior favouring peripheral locations could account for this pattern. Additionally, we found that auditory localisation biases decreased across experimental phases, providing evidence that accuracy can be improved with experience (as well as precision). Further research is needed to evaluate which alternative mechanisms could explain increases of bias under sensory uncertainty.

Methods

Participants

24 adults aged 18 to 24 years (6 male, M = 20.5yrs, SD = 1.9yrs) with normal vision and normal hearing participated. Participants were recruited through the UCL psychology online subject pool. The study received approval from the London Hampstead research ethics committee and was conducted in accordance to the Declaration of Helsinki. Informed written consent was obtained from all participants prior to participation.

Apparatus & Stimuli

Stimuli were presented using nine speakers (50 mm × 90 mm Visaton speakers SC 5.9) and up to 122 light-emitting diode pixels (Adafruit 12 mm diffused flat digital RGB LED pixels), mounted on a 2.5 m semi-circular ring (circle radius: 2.87 m), spanning −15 to +30 degrees (see Fig. 3). A further speaker was mounted on the wall, 20 degrees left of the ring. Stimulus presentation was controlled using Matlab (Version R2014a, The MathWorks Inc., Natick, Massachusetts, United States) and the Psychophysics toolbox extensions40,41,42, on a Windows 7 computer. The Matlab PsychPortAudio ASIO interface controlled audio presentation via a Focusrite Saffire PRO 40 (Focusrite plc, UK) sound card and audio signals were amplified using Lvpin Hi-Fi 2.1 (Lvpin Technology Co. Ltd, China) stereo amps. The sampling rate was 44.1 kHz and speakers were equalized for intensity using a sound level meter. An Arduino Uno microcontroller (SmartProjects, Strambino, Italy) was used to interface between the control computer and the LED pixels see ref. 43. Responses were made by rotating a dial (Griffin Technology PowerMate NA USB Controller) to control, (via Matlab), which LED pixel was illuminated. Eye position was monitored using a Tobii X120 (Tobii AB) eye tracker. An acoustically transparent curtain was arranged in front of the speakers.

Auditory stimuli were 100 msec (including 25 ms rise/fall time) band-pass-filtered noise bursts (tenth octave centred on 1000 Hz) presented at 50 dB SPL, (from speakers positioned at 0, 2, 6, 9, or 13 degrees, right of straight-ahead). These were hidden in background pink noise presented at 10 dB SPL (“more reliable” stimulus, which we denote A1) or 30 dB SPL (“less reliable”, stimulus, A2), (−35, 0, 1, 2, 3, 4, 6, 9, 13 and 18 degrees; mean position = 2°; mean position excluding speaker at −35° = 6°). Visual and visual feedback stimuli were 25 msec flashes of white light (4620 cd/m2) from either 45 (“more reliable”, V1, VF1) or 5 (“less reliable”, V2, VF2) LEDs, randomly sampled (on each trial, without replacement) based on a truncated normal distribution ranging from ±25 LEDs (with mean = 0, corresponding to the centre of the 50 LEDs, and standard deviation of 12 LEDs).

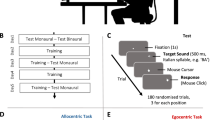

Procedure

The experiment was divided into four tasks, split over two days (see Table 1). During each task, participants were asked to maintain their eye gaze at a fixation cue, consisting of two LEDs emitting red light (1300 cd/m2) presented at 0 degrees. A chin-rest was used to fix participants’ head position, and an eye-tracker was used to monitor eye position (a quick eye-tracking calibration task was completed before commencing the experiment). Participants initialized experimental tasks by pressing a keyboard key, and, provided that the eye-tracker detected that participants were fixating in the correct position, the trial would commence.

Auditory and visual localisation before training

On the first day, participants completed an auditory localisation task and a visual localisation task. On each trial of the auditory localisation task, a brief noise burst was played at one of five speaker positions (0, 2, 6, 9, or 13 degrees relative to straight-ahead), while on each trial of the visual localisation task, a brief flash of light was presented from a sample of LEDs, the mean of which was centred at one of these same five positions (0, 2, 6, 9, 13, degrees). On each trial, following the stimulus presentation, two randomly selected adjacent LEDs (width spanning 1 degree) lit up. Participants were asked to move these two lights, by rotating the dial, toward the perceived source of the noise burst or light flash, maintaining their eyes fixed on the central fixation cue. Once participants were satisfied that the LEDs were aligned with the sound or flash location, they pressed a keyboard key to store their response and this immediately commenced the next trial. Each task consisted of four blocks of fifty trials (10 trials per location tested): two blocks with less reliable stimuli and two blocks with more reliable stimuli. Block order and stimulus location presentation was random. Prior to commencing the test blocks, participants completed a short practice block comprised of five trials (1 per location tested) with the more reliable stimulus.

Training

On the second day, participants completed an auditory localisation task with visual feedback. As in the initial auditory localisation task, on each trial, a brief noise burst was played at one of the five speaker positions, following which participants adjusted the position of two white LEDs until they were aligned with the perceived sound source. However, on pressing a keyboard key to store their response, participants were presented a brief flash of light from a sample of LEDs whose mean position was centred at the veridical sound source location. Again, the task consisted of four blocks of fifty trials each. The auditory stimulus was the same across all blocks, corresponding to the “less reliable” (A2) stimulus in the previous task. Visual feedback stimuli were also the same across all blocks for each participant, but varied across participants (see Table 1): twelve participants were presented with a more reliable visual cue as feedback (VF1), and twelve were presented with a less reliable visual cue as feedback (VF2). The properties of these were the same as of V1 and V2 respectively during the visual localisation task.

Auditory localisation after training

After training, the initial auditory localisation task was repeated exactly as before, with two auditory reliability levels (A1, A2) and without any feedback.

Data Analysis

Trials during which the mean and/or standard deviation of eye coordinate position exceeded 2 degrees from the fixation target were excluded (<2% trials for any participant). Due to the size of the experimental set-up, participants’ localisation estimates were restricted to a maximum of 30 degrees. To account for this, truncated normal distributions (truncation point at 31 degrees) were fitted to each participant’s localisation estimates, at each location (0, 2, 6, 9, 13 degrees), for each stimulus (A1, A2, V1, V2). The mean and standard deviation of these distributions provided measures of each participant’s localisation bias (participant mean estimate – correct location) and variability, respectively. Biases at each position were then averaged across locations for a measure of mean bias. The standard deviation of deviances, at each position, were also averaged for a measure of mean variability (uncertainty).

Additional Information

How to cite this article: Garcia, S. E. et al. Auditory Localisation Biases Increase with Sensory Uncertainty. Sci. Rep. 7, 40567; doi: 10.1038/srep40567 (2017).

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

Lewald, J. & Ehrenstein, W. H. Auditory-visual spatial integration: a new psychophysical approach using laser pointing to acoustic targets. The Journal of the Acoustical Society of America 104, 1586–1597 (1998).

Dobreva, M. S., O’Neill, W. E. & Paige, G. D. Influence of aging on human sound localization. Journal of neurophysiology 105, 2471–2486, doi: 10.1152/jn.00951.2010 (2011).

Miyauchi, R., Kang, D. G., Iwaya, Y. & Suzuki, Y. Relative localization of auditory and visual events presented in peripheral visual field. Multisensory research 27, 1–16 (2014).

Yuille, A. & Bulthoff, H. H. In Perception as Bayesian Inference (eds D. C. Knill & W. Richards ) 123–161 (Cambridge, MA: MIT Press, 1996).

Makous, J. C. & Middlebrooks, J. C. Two-dimensional sound localization by human listeners. The Journal of the Acoustical Society of America 87, 2188–2200 (1990).

Carlile, S., Leong, P. & Hyams, S. The nature and distribution of errors in sound localization by human listeners. Hearing research 114, 179–196 (1997).

Butler, R. A. The bandwidth effect on monaural and binaural localization. Hearing research 21, 67–73 (1986).

Lewald, J. & Getzmann, S. Horizontal and vertical effects of eye-position on sound localization. Hearing research 213, 99–106, doi: 10.1016/j.heares.2006.01.001 (2006).

Lewald, J. The effect of gaze eccentricity on perceived sound direction and its relation to visual localization. Hearing research 115, 206–216 (1998).

Lewald, J., Dorrscheidt, G. J. & Ehrenstein, W. H. Sound localization with eccentric head position. Behavioural brain research 108, 105–125 (2000).

Morgan, C. L. Constancy of egocentric visual direction. Perception & psychophysics 23, 61–68 (1978).

Sheth, B. R. & Shimojo, S. Compression of space in visual memory. Vision research 41, 329–341 (2001).

Razavi, B., O’Neill, W. E. & Paige, G. D. Auditory spatial perception dynamically realigns with changing eye position. The Journal of neuroscience: the official journal of the Society for Neuroscience 27, 10249–10258, doi: 10.1523/jneurosci.0938-07.2007 (2007).

Cui, Q. N., O’Neill, W. E. & Paige, G. D. Advancing age alters the influence of eye position on sound localization. Experimental brain research. Experimentelle Hirnforschung. Experimentation cerebrale 206, 371–379, doi: 10.1007/s00221-010-2413-1 (2010).

von Helmholtz, H. Treatise on Physiological Optics: Translated from the 3rd German edition by Southall JPC. Vol. 3 1924–5 (George Banta Publishing, 1993).

Ernst, M. O. In Human Body Perception From The Inside Out (eds G. Knoblich, M. Thornton, M. Grosjean & M. Shiffrar ) 105–131 (Oxford University Press, 2006).

Knill, D. C. & Richards, W. Perception as Bayesian Inference., (Cambridge University Press, 1996).

Kording, K. P. & Wolpert, D. M. Bayesian decision theory in sensorimotor control. Trends in cognitive sciences 10, 319–326, doi: 10.1016/j.tics.2006.05.003 (2006).

Alais, D. & Burr, D. The ventriloquist effect results from near-optimal bimodal integration. Current biology: CB 14, 257–262, doi: 10.1016/j.cub.2004.01.029 (2004).

Ernst, M. O. & Banks, M. S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 429–433, doi: 10.1038/415429a (2002).

Gu, Y., Angelaki, D. E. & Deangelis, G. C. Neural correlates of multisensory cue integration in macaque MSTd. Nature neuroscience 11, 1201–1210, doi: 10.1038/nn.2191 (2008).

Stocker, A. A. & Simoncelli, E. P. Noise characteristics and prior expectations in human visual speed perception. Nature neuroscience 9, 578–585, doi: 10.1038/nn1669 (2006).

Welchman, A. E., Lam, J. M. & Bulthoff, H. H. Bayesian motion estimation accounts for a surprising bias in 3D vision. Proceedings of the National Academy of Sciences of the United States of America 105, 12087–12092, doi: 10.1073/pnas.0804378105 (2008).

Senna, I., Parise, C. V. & Ernst, M. O. Hearing in slow-motion: Humans underestimate the speed of moving sounds. Scientific reports 5, 14054, doi: 10.1038/srep14054 (2015).

Thompson, P. Perceived rate of movement depends on contrast. Vision research 22, 377–380 (1982).

Hofman, P. M., Van Riswick, J. G. & Van Opstal, A. J. Relearning sound localization with new ears. Nature neuroscience 1, 417–421, doi: 10.1038/1633 (1998).

Van Wanrooij, M. M. & Van Opstal, A. J. Relearning sound localization with a new ear. The Journal of neuroscience: the official journal of the Society for Neuroscience 25, 5413–5424, doi: 10.1523/jneurosci.0850-05.2005 (2005).

Shinn-Cunningham, B. Adapting to remapped auditory localization cues: a decision-theory model. Perception & psychophysics 62, 33–47 (2000).

Burge, J., Ernst, M. O. & Banks, M. S. The statistical determinants of adaptation rate in human reaching. Journal of vision 8, 20 21–19, doi: 10.1167/8.4.20 (2008).

Knill, D. C. Robust cue integration: A Bayesian model and evidence from cue-conflict studies with stereoscopic and figure cues to slant. Journal of vision 7, 5–5, doi: 10.1167/7.7.5 (2007).

Jazayeri, M. & Shadlen, M. N. Temporal context calibrates interval timing. Nature neuroscience 13, 1020–1026, doi: 10.1038/nn.2590 (2010).

Sciutti, A., Burr, D., Saracco, A., Sandini, G. & Gori, M. Development of context dependency in human space perception. Experimental brain research. Experimentelle Hirnforschung. Experimentation cerebrale 232, 3965–3976, doi: 10.1007/s00221-014-4021-y (2014).

Odegaard, B., Wozny, D. R. & Shams, L. Biases in Visual, Auditory, and Audiovisual Perception of Space. PLoS computational biology 11, e1004649, doi: 10.1371/journal.pcbi.1004649 (2015).

Dobreva, M. S., O’Neill, W. E. & Paige, G. D. Influence of age, spatial memory, and ocular fixation on localization of auditory, visual, and bimodal targets by human subjects. Experimental brain research. Experimentelle Hirnforschung. Experimentation cerebrale 223, 441–455, doi: 10.1007/s00221-012-3270-x (2012).

Lewald, J. & Ehrenstein, W. H. Spatial coordinates of human auditory working memory. Brain research. Cognitive brain research 12, 153–159 (2001).

Kerzel, D. Memory for the position of stationary objects: disentangling foveal bias and memory averaging. Vision research 42, 159–167, doi: 10.1016/S0042-6989(01)00274-7 (2002).

Musseler, J., van der Heijden, A. H., Mahmud, S. H., Deubel, H. & Ertsey, S. Relative mislocalization of briefly presented stimuli in the retinal periphery. Perception & psychophysics 61, 1646–1661 (1999).

Fortenbaugh, F. C. & Robertson, L. C. When here becomes there: attentional distribution modulates foveal bias in peripheral localization. Attention, perception & psychophysics 73, 809–828, doi: 10.3758/s13414-010-0075-5 (2011).

Bock, O. Contribution of retinal versus extraretinal signals towards visual localization in goal-directed movements. Experimental brain research. Experimentelle Hirnforschung. Experimentation cerebrale 64, 476–482 (1986).

Brainard, D. H. The Psychophysics Toolbox. Spatial vision 10, 433–436 (1997).

Pelli, D. G. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spatial vision 10, 437–442 (1997).

Kleiner, M., Brainard, D. H. & Pelli, D. G. “What’s new in Psychtoolbox-3?”. Perception 36, 14, doi: 10.1177/03010066070360s101 (2007).

Jones, P. R., Garcia, S. E. & Nardini, M. Digital LED Pixels: Instructions for use and a characterization of their properties. Behavior research methods, doi: 10.3758/s13428-015-0653-5 (2015).

Acknowledgements

Supported by grants from the National Institute for Health Research Biomedical Research Centre at Moorfields Eye Hospital National Health Service Foundation Trust and UCL Institute of Ophthalmology (UK).

Author information

Authors and Affiliations

Contributions

S.E.G. collected and analysed the data, and wrote the paper. S.E.G. and M.N. designed the study. P.R.J. provided technical support and conceptual advice. S.E.G., P.R.J., G.S.R. and M.N. all discussed the results and implications, and commented on the manuscript at all stages.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Supplementary information

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Garcia, S., Jones, P., Rubin, G. et al. Auditory Localisation Biases Increase with Sensory Uncertainty. Sci Rep 7, 40567 (2017). https://doi.org/10.1038/srep40567

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/srep40567

This article is cited by

-

Reaching measures and feedback effects in auditory peripersonal space

Scientific Reports (2019)

-

Where did that noise come from? Memory for sound locations is exceedingly eccentric both in front and in rear space

Cognitive Processing (2019)

-

A Novel Communication Value Task Demonstrates Evidence of Response Bias in Cases with Presbyacusis

Scientific Reports (2017)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.