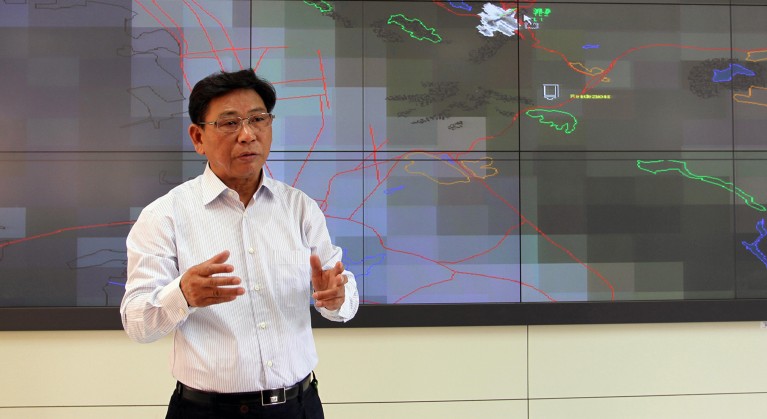

Shanlin Yang of Hefei University of Technology explains intelligent decision-making for robots. Yang’s team is developing robots for hospitals that may one day be able to determine when patients are in pain.

Patients in critical condition often can’t directly communicate their level of pain, anxiety or delirium. Clinicians assess these conditions from facial expressions and posture, but in busy intensive care units (ICUs) they are often pressed for time, which makes continuous monitoring difficult.

To assist ICU doctors, researchers at Hefei University of Technology (HFUT) in Anhui, China, are developing robots that may one day help determine a patient’s level of pain, anxiety and delirium by assessing their facial expression and posture. The same team is refining precise, agile robots to assist surgeons, and is developing the concept of an ‘Internet of Healthcare Systems’ (IHS).

Body language

“The core goal of our medical robotics research is to strengthen the capabilities of doctors’ hands and brains,” says Shanlin Yang, a professor of information management at HFUT, who leads the team.

Yang, along with Ouyang Bo, a biotech researcher at HFUT, has trained an AI model called HopFIR¹ on images of human postures, as a step towards robots that can measure pain, anxiety and delirium.

HopFIR has relatively few parameters. That compactness makes it well suited to deployment on robots, the researchers say, because it needs less processing power and its performance is easier to fine-tune.

An understanding of human body language would have other applications too, the researchers argue. In a hectic ICU, reading body language could stop free-roving robotic assistants from getting in the way of staff, a problem that would need to be ironed out before such robots could be set loose in an ICU, says Ouyang.

Robotic surgery

Robots are already in use around the world to assist surgical teams during operations. As healthcare trends further towards minimally invasive procedures, they will become increasingly important for laparoscopic surgery, predicts Yang.

In these procedures, a doctor inserts a laparoscope, a thin instrument carrying a camera, together with surgical tools through small incisions in the patient’s body to perform operations such as removing an appendix. The doctor must keep track of the positions of the surgical tools while evaluating the camera images to assess how the operation is progressing. To assist surgeons, Yang and his colleagues are developing an automatic robotic control system² powered by AI models that can track the positions of surgical tools.

Seamless network

Outside the hospital, Yang believes that patients can also benefit from the IHS. Supported by high-speed internet, the IHS could bring together data from patients, their homes, health insurers and hospitals, and process that data with AI to support services such as intelligent healthcare or a rapid response to infectious disease outbreaks, says Yang.

But developing the robots and healthcare systems of the future isn’t everything, says Yang. He believes that cultivating talent, particularly creative researchers, will remain key to a bright future for healthcare.

“We actively encourage young research students to undertake independent, creative and innovative research,” he says. “Educating humans is still our most important mission.”