Bavarian science minister Markus Blume views part of a quantum computer with Dieter Kranzlmüller (left) at the Leibniz Supercomputing Center. Credit: Sven Hoppe/dpa/Alamy

Most researchers have never seen a quantum computer. Winfried Hensinger has five. “They’re all terrible,” he says. “They can’t do anything useful.”

In fact, all quantum computers could be described as terrible. Decades of research have yet to yield a machine that can kick off the promised revolution in computing. But enthusiasts aren’t concerned, and development is proceeding better than expected, researchers say.

“I’m not trying to take away from how much work there is to do, but we’re surprising ourselves about how much we’ve done,” says Jeannette Garcia, senior research manager for quantum applications and software at technology giant IBM in San Jose, California.

Hensinger, a physicist at the University of Sussex in Brighton, UK, published a proof of principle in February for a large-scale, modular quantum computer1. His start-up company, Universal Quantum in Haywards Heath, UK, is now working with engineering firm Rolls-Royce in London and others to begin the long and arduous process of building it.

If you believe the hype, computers that exploit the strange behaviours of the atomic realm could accelerate drug discovery, crack encryption, speed up decision-making in financial transactions, improve machine learning, develop revolutionary materials and even address climate change. The surprise is that those claims are now starting to seem a lot more plausible — and perhaps even too conservative.

According to computational mathematician Steve Brierley, whatever the quantum sweet spot turns out to be, it could be more spectacular than anything we can imagine today — if the field is given the time it needs. “The short-term hype is a bit high,” says Brierley, who is founder and chief executive of quantum-computing firm Riverlane in Cambridge, UK. “But the long-term hype is nowhere near enough.”

Justified scepticism

Until now, there has been good reason to be sceptical. Researchers have mathematical proofs that quantum computers will offer large gains over current, classical computers in only two areas: simulating quantum physics and chemistry, and breaking the public-key cryptosystems used to protect sensitive communications such as online financial transactions. “All of the other use cases that people talk about are either more marginal, more speculative, or both,” says Scott Aaronson, a computer scientist at the University of Texas at Austin. Quantum specialists have yet to achieve anything truly useful that could not be done using classical computers.

The problem is compounded by the difficulty of building the hardware itself. Quantum computers store data in quantum binary digits called quantum bits, or qubits, that can be made using various technologies, including superconducting rings, optical traps and photons of light. Some technologies require cooling to near absolute zero; others operate at room temperature. Hensinger’s blueprint is for a machine the size of a football pitch, but others could end up installed in cars. Researchers cannot even agree on how the performance of quantum computers should be measured.

Whatever the design, the clever stuff happens when qubits are carefully coaxed into ‘superposition’ states of indefinite character — essentially a mix of digital ones and zeroes, rather than definitely being one or the other. Running algorithms on a quantum computer involves directing the evolution of these superposition states. The quantum rules of this evolution allow the qubits to interact to perform computations that are, in practical terms, impossible using classical computers.
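
As a rough illustration of what a superposition is, the sketch below tracks a single qubit as a pair of amplitudes and applies a Hadamard gate, a standard operation that turns a definite zero into an equal mix of zero and one. The function names are invented for this example, and a real machine manipulates physical qubits rather than a pair of numbers.

```python
import math

def apply_hadamard(state):
    """Apply a Hadamard gate to a single-qubit state (amp_zero, amp_one)."""
    amp_zero, amp_one = state
    scale = 1 / math.sqrt(2)
    return (scale * (amp_zero + amp_one), scale * (amp_zero - amp_one))

def measurement_probabilities(state):
    """Chance of reading out 0 or 1: the squared magnitude of each amplitude."""
    return tuple(abs(amplitude) ** 2 for amplitude in state)

# Start in a definite zero, like a classical bit.
zero = (1.0, 0.0)

# One Hadamard gate puts the qubit in an equal superposition of 0 and 1.
superposed = apply_hadamard(zero)
probs = measurement_probabilities(superposed)

# A second Hadamard steers the state back to a definite zero: this is the
# 'directed evolution' that quantum algorithms rely on.
back = apply_hadamard(superposed)

print("superposition probabilities:", probs)
print("after second gate:", measurement_probabilities(back))
```

Simulating qubits this way on a classical computer becomes impractical quickly, because the list of amplitudes doubles in length with every qubit added.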

That said, useful computations are possible only on quantum machines with a huge number of qubits, and those do not yet exist. What’s more, qubits and their interactions must be robust against errors introduced through the effects of thermal vibrations, cosmic rays, electromagnetic interference and other sources of noise. These disturbances can cause some of the information necessary for the computation to leak out of the processor, a situation known as decoherence. That can mean dedicating a large proportion of the qubits to error-correction routines that keep a computation on track.
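
To see why error correction eats up so many qubits, consider a classical analogy, the repetition code. It is not one of the quantum codes real machines use, and the function names and the 1% error rate below are assumptions chosen for illustration. Each logical bit is stored as several copies, and a majority vote recovers the value even if a minority of copies are corrupted.

```python
from math import comb

def encode(bit, copies=3):
    """Store one logical bit as several identical physical copies."""
    return [bit] * copies

def decode(bits):
    """Recover the logical bit by majority vote (use an odd number of copies)."""
    return 1 if sum(bits) > len(bits) / 2 else 0

def logical_error_rate(p, copies=3):
    """Probability that more than half of the copies flip, defeating the vote.

    Assumes each copy flips independently with probability p.
    """
    threshold = copies // 2 + 1
    return sum(
        comb(copies, k) * p**k * (1 - p) ** (copies - k)
        for k in range(threshold, copies + 1)
    )

# A single flipped copy is outvoted by the other two.
assert decode([1, 0, 1]) == 1

# Hypothetical 1% physical error rate: more copies mean fewer logical errors,
# but every logical bit now costs three or five physical ones.
for copies in (1, 3, 5):
    print(copies, "copies ->", logical_error_rate(0.01, copies))
```

The trade-off is the same in spirit for quantum machines: reliability is bought with extra hardware, which is why estimates of the qubits needed for useful work run so high.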

A circuit design for IBM’s five-qubit superconducting quantum computer. Credit: IBM Research/SPL

This is where the scepticism about quantum computing begins. The world’s largest quantum computer in terms of qubits is IBM’s Osprey, which has 433. But even with 2 million qubits, some quantum chemistry calculations might take a century, according to a 2022 preprint2 by researchers at Microsoft Quantum in Redmond, Washington, and ETH Zurich in Switzerland. Research published in 2021 by scientists Craig Gidney at Google in Santa Barbara, California, and Martin Ekerå at the KTH Royal Institute of Technology in Stockholm estimates that breaking state-of-the-art cryptography in 8 hours would require 20 million qubits3.

Yet, such calculations also offer a source of optimism. Although 20 million qubits looks out of reach, it’s a lot less than the one billion qubits of previous estimates4. And researcher Michael Beverland at Microsoft Quantum, who was first author of the 2022 preprint2, thinks that some of the obstacles facing quantum chemistry calculations can be overcome through hardware breakthroughs.

For instance, Nicole Holzmann, who leads the applications and algorithms team at Riverlane, and her colleagues have shown that quantum algorithms to calculate the ground-state energies of around 50 orbital electrons can be made radically more efficient5. Previous estimates of the runtime of such algorithms had come in at more than 1,000 years. But Holzmann and her colleagues found that tweaks to the routines — altering how the algorithmic tasks are distributed around the various quantum logic gates, for example — cut the theoretical runtime to just a few days. That’s a gain in speed of around five orders of magnitude. “Different options give you different results,” Holzmann says, “and we haven’t thought about many of these options yet.”
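
The 'five orders of magnitude' figure can be checked with a quick calculation. The sketch below takes the two runtimes quoted above and assumes 'a few days' means three, which is an assumption made for this example rather than a number from the study.

```python
import math

def orders_of_magnitude(old, new):
    """How many powers of ten separate two quantities."""
    return math.log10(old / new)

DAYS_PER_YEAR = 365.25

old_runtime_days = 1_000 * DAYS_PER_YEAR  # "more than 1,000 years"
new_runtime_days = 3                      # assumption: "a few days" taken as 3

speedup_orders = orders_of_magnitude(old_runtime_days, new_runtime_days)
print(f"speed-up: roughly 10^{speedup_orders:.1f}")
```

Taking 'a few days' as anything from two to ten still gives a speed-up of between four and a half and five and a half orders of magnitude, so the rounded figure holds up.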

Quantum hop

At IBM, Garcia is starting to exploit these gains. In many ways, it’s easy pickings: the potential quantum advantage isn’t limited to calculations involving vast arrays of molecules.

One example of a small-scale but classically intractable computation that might be possible on a quantum machine is finding the energies of ground and excited states of small photoactive molecules, which could improve lithography techniques for semiconductor manufacturing and revolutionize drug design. Another is simulating the singlet and triplet states of a single oxygen molecule, which is of interest to battery researchers.

In February, Garcia’s team published6 quantum simulations of the sulfonium ion (H3S+). That molecule is related to triphenyl sulfonium (C18H15S), a photo-acid generator used in lithography that reacts to light of certain wavelengths. Understanding its molecular and photochemical properties could make the manufacturing technique more efficient, for instance. When the team began the work, the computations looked impossible, but advances in quantum computing over the past three years have allowed the researchers to perform the simulations using relatively modest resources: the H3S+ computation ran on IBM’s Falcon processor, which has just 27 qubits.

Part of the IBM team’s gains is the result of measures that reduce errors in the quantum computers. These include error mitigation, in which noise is cancelled out using algorithms similar to those in noise-cancelling headphones, and entanglement forging, which identifies parts of the quantum circuit that can be separated out and simulated on a classical computer without losing quantum information. The latter technique, which effectively doubles the available quantum resources, was invented only last year7.
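
Error mitigation comes in several flavours, and the article does not say which ones the IBM team used. One published approach, zero-noise extrapolation, is simple enough to sketch: run the same computation with the noise deliberately amplified by known factors, then extrapolate the results back to what a noise-free machine would have given. The numbers below are invented for illustration and are not measurements from any real device.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Noise amplification factors: 1.0 is the machine as it is, 2.0 is twice as noisy.
noise_scales = [1.0, 2.0, 3.0]

# Made-up results of the same computation at each noise level (illustrative only).
noisy_values = [0.90, 0.80, 0.70]

# The intercept of the fitted line is the estimate at zero noise.
slope, zero_noise_estimate = fit_line(noise_scales, noisy_values)
print("estimated noise-free value:", round(zero_noise_estimate, 3))
```

A straight line is the simplest possible choice here. In practice the relationship between noise and error need not be linear, and the extrapolation is only as good as that assumption.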

Michael Biercuk, a quantum physicist at the University of Sydney in Australia, who is chief executive and founder of Sydney-based start-up firm Q-CTRL, says such operational tweaks are ripe for exploration. Biercuk’s work aims to dig deeper into the interfaces between the quantum circuits and the classical computers used to control them, as well as understand the details of other components that make up a quantum computer. There is a “lot of space left on the table”, he says; early reports of errors and limitations have been naive and simplistic. “We are seeing that we can unlock extra performance in the hardware, and make it do things that people didn’t expect.”

Similarly, Riverlane is making the daunting requirements for a useful quantum computer more manageable. Brierley notes that drug discovery and materials-science applications might require quantum computers that can perform a trillion decoherence-free operations by current estimates — and that’s good news. “Five years ago, that was a million trillion,” he says.

Some firms are so optimistic that they are even promising useful commercial applications in the near future. Helsinki-based start-up Algorithmiq, for instance, says it will be able to demonstrate practical quantum advances in drug development and discovery in five years’ time. “We’re confident about that,” says Sabrina Maniscalco, Algorithmiq’s co-founder and chief executive, and a physicist at the University of Helsinki.

The long game

Maniscalco is just one of many who think that the first commercial applications of quantum computing will be in speeding up or gaining better control over molecular reactions. “If anything is going to give something useful in the next five years, it will be chemistry calculations,” says Ronald de Wolf, senior researcher at CWI, a research institute for mathematics and computer science in Amsterdam. That’s because of the relatively low resource requirements, adds Shintaro Sato, head of the Quantum Laboratory at Fujitsu Research in Tokyo. “This would be possible using quantum computers with a relatively small number of qubits,” he says.

Financial applications, such as risk management, as well as materials science and logistics optimization also have a high chance of benefiting from quantum computation in the near term, says Biercuk. Still, no one is taking their eyes off the longer-term, more speculative applications — including quantum versions of machine learning.

Machine-learning algorithms perform tasks such as image recognition by finding hidden structures and patterns in data, then creating mathematical models that allow the algorithm to recognize the same patterns in other data sets. Success typically involves vast numbers of parameters and voluminous amounts of training data. But with quantum versions of machine learning, the huge range of different states open to quantum particles means that the routines could require fewer parameters and much less training data.

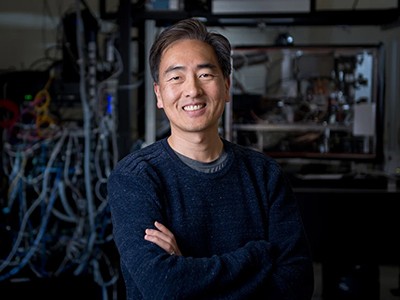

In exploratory work with South Korean car manufacturer Hyundai, Jungsang Kim at Duke University in Durham, North Carolina, and researchers at the firm IonQ in College Park, Maryland, developed quantum machine-learning algorithms that can tell the difference between ten road signs in laboratory tests (see go.nature.com/42tt7nr). Their quantum-based model used just 60 parameters to achieve the same accuracy as a classical neural network using 59,000 parameters. “We also need far fewer training iterations,” Kim says. “A model with 59,000 parameters requires at least 100,000 training data sets to train it. With quantum, your number of parameters is very small, so your training becomes extremely efficient as well.”

Quantum machine learning is nowhere near being able to outperform classical algorithms, but there is room to explore, Kim says.

In the meantime, this era of quantum inferiority represents an opportunity to validate the performance of quantum algorithms and machines against classical computers, so that researchers can be sure about what they are delivering in the future, Garcia says. “That is what will give us confidence when we start pushing past what is classically possible.”

For most applications, that won’t be any time soon. Silicon Quantum Computing, a Sydney-based start-up, has been working closely with finance and communications firms and anticipates many years to go before payday, says director Michelle Simmons, who is also a physicist at the University of New South Wales in Sydney.

That’s not a problem, Simmons adds: Silicon Quantum Computing has patient investors. So, too, does Riverlane, says Brierley. “People do understand that this is a long-term play.”

And despite all the hype, it’s a slow-moving one as well, Hensinger adds. “There’s not going to be this one point when suddenly we have a rainbow coming out of our lab and all problems can be solved,” he says. Instead, it will be a slow process of improvement, spurred on by fresh ideas for what to do with the machines — and by clever coders developing new algorithms. “What’s really important right now is to build a quantum-skilled workforce,” he says.
