The Perseus cluster contains thousands of galaxies in a cloud of superheated gas. A tweet from NASA about the sound of the black hole at its centre has been played millions of times. Credit: NASA/CXC/SAO/E. Bulbul et al.

For astronomers who are sighted, the Universe is full of visual wonders. From shimmering planets to sparkling galaxies, the cosmos is spectacularly beautiful. But those who are blind or visually impaired cannot share that experience. So astronomers have been developing alternative ways to convey scientific information, such as using 3D printing to represent exploding stars, and sound to describe the collision of neutron stars.

On Friday, the journal Nature Astronomy will publish the latest in a series of articles on the use of sonification in astronomy1–3. Sonification is the conversion of data (including research data) into digital audio files, which allows the data to be heard as well as read and seen. The researchers featured in Nature Astronomy show that sound representations can help scientists to better identify patterns or signals in large astronomical data sets1.
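To make the idea of turning data into an audio file concrete, here is a minimal sketch of the simplest approach, often called parameter mapping: each data value becomes a short tone whose pitch rises with the value. It is written in Python using only the standard library, and every choice in it (the function names `value_to_frequency`, `sonify_series` and `write_wav`, the 220 to 880 Hz pitch range, the 0.2-second note length and the sample rate) is a hypothetical illustration, not the method used by any of the projects described here.

```python
import math
import struct
import wave

# Hypothetical settings chosen for illustration only.
SAMPLE_RATE = 44_100  # samples per second


def value_to_frequency(value, vmin, vmax, f_low=220.0, f_high=880.0):
    """Linearly map a data value onto a pitch range in hertz."""
    if vmax == vmin:
        # A flat data series has no variation to encode; use the mid-range pitch.
        return (f_low + f_high) / 2
    fraction = (value - vmin) / (vmax - vmin)
    return f_low + fraction * (f_high - f_low)


def sonify_series(data, note_seconds=0.2):
    """Turn each data point into a short sine tone; return 16-bit sample values."""
    vmin, vmax = min(data), max(data)
    samples_per_note = int(SAMPLE_RATE * note_seconds)
    samples = []
    for value in data:
        freq = value_to_frequency(value, vmin, vmax)
        for n in range(samples_per_note):
            t = n / SAMPLE_RATE
            # Half of full scale leaves headroom and avoids clipping.
            samples.append(int(0.5 * 32767 * math.sin(2 * math.pi * freq * t)))
    return samples


def write_wav(path, samples):
    """Write mono 16-bit PCM audio with the standard-library wave module."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16 bits per sample
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))
```

For example, `write_wav("light_curve.wav", sonify_series([1.0, 1.2, 0.9, 3.5, 1.1]))` would produce a file in which the one unusually high value (a made-up brightness spike) stands out as a sudden jump in pitch. That kind of outlier is what the researchers suggest listeners can pick out in large data sets.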

The work demonstrates that efforts to boost inclusivity and accessibility can have wider benefits. This is true not only in astronomy; sonification has also yielded discoveries in other fields that might otherwise not have been made. Research funders and publishers need to take note and support interdisciplinary efforts that are both more innovative and more inclusive.

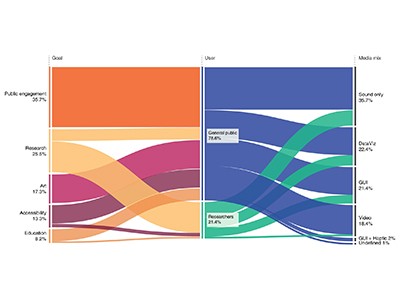

Sonification and sound design for astronomy research, education and public engagement

For decades, astronomers have been making fundamental discoveries by listening to data as well as looking at it. In the early 1930s, Karl Jansky, a physicist at Bell Telephone Laboratories in New Jersey, traced static in radio communications to a source in the direction of the centre of the Milky Way. That finding led to the birth of radio astronomy and, eventually, to the discovery of the Galaxy's supermassive black hole. More recently, Wanda Díaz-Merced, an astronomer at the European Gravitational Observatory in Cascina, Italy, who is blind, has used sonification in many pioneering projects, including the study of plasma patterns in Earth's uppermost atmosphere4.

The number of sonification projects began to grow around a decade ago, drawing in researchers from a range of backgrounds. Take Kimberly Arcand, a data-visualization expert and science communicator at the Center for Astrophysics, Harvard & Smithsonian in Cambridge, Massachusetts. Arcand began by writing and speaking about astronomy, particularly discoveries coming from NASA's orbiting Chandra X-ray Observatory. She then moved on to work centred on the sense of touch, including 3D-printed models of the 'leftovers' of exploded stars that conveyed details of the physics of these stellar explosions. When the pandemic cut off her access to a 3D printer in early 2020, she turned to sonification.

In August, NASA tweeted about the sound of the black hole at the centre of the Perseus galaxy cluster; the attached file has since been played more than 17 million times. In the same month, Arcand and others converted some of the first images from the James Webb Space Telescope into sound. They worked under the guidance of people who are blind and visually impaired to map the intensity and colours of light in the headline-grabbing pictures into audio.

Wanda Díaz-Merced has used sonification in many projects. Credit: NG Images/Alamy

These mappings are designed to be technically accurate. The sonification of an image of gas and dust in a distant nebula, for instance, represents bright light near the top of the image with loud, high-frequency sounds, and bright light near the image's centre with loud, lower-frequency sounds. The black hole sonification takes pressure waves that the black hole sends rippling through the hot gas surrounding it, and shifts them up into the range of human hearing.
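The nebula mapping, with height in the image setting pitch and brightness setting loudness, can be sketched as a simple image scan. This is a minimal Python sketch under assumptions that the text does not state: the image is a grid of brightness values between 0 and 1, it is scanned column by column from left to right, each row is assigned a pitch on a geometric scale running from 2,000 Hz at the top to 200 Hz at the bottom, and the tones of all rows are summed (additive synthesis). The names `row_frequencies` and `sonify_image` and all numerical values are hypothetical, not taken from the published sonifications.

```python
import math

# Hypothetical settings chosen for illustration only.
SAMPLE_RATE = 22_050  # samples per second


def row_frequencies(n_rows, f_low=200.0, f_high=2000.0):
    """Assign each image row a pitch: row 0 (the top) gets the highest frequency."""
    if n_rows == 1:
        return [f_high]
    # Geometric spacing, so equal steps in height sound like equal musical intervals.
    ratio = (f_low / f_high) ** (1 / (n_rows - 1))
    return [f_high * ratio ** r for r in range(n_rows)]


def sonify_image(image, column_seconds=0.05):
    """Scan a 2D brightness grid from left to right and return float samples.

    image[r][c] is brightness in [0, 1], with r = 0 the top row. Each column
    becomes a short time slice in which every row contributes a sine tone:
    the row's height sets the pitch and its brightness sets the loudness.
    """
    n_rows, n_cols = len(image), len(image[0])
    freqs = row_frequencies(n_rows)
    samples_per_col = int(SAMPLE_RATE * column_seconds)
    out = []
    for c in range(n_cols):
        for n in range(samples_per_col):
            # Continuous time across columns keeps each tone's phase smooth.
            t = (c * samples_per_col + n) / SAMPLE_RATE
            mix = sum(image[r][c] * math.sin(2 * math.pi * freqs[r] * t)
                      for r in range(n_rows))
            out.append(mix / n_rows)  # normalise so samples stay within [-1, 1]
    return out
```

Because each pixel contributes in proportion to its brightness, dark regions stay silent, a bright patch near the top of the frame produces a loud, high tone, and a bright patch near the middle produces an equally loud but lower one.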

Scientists in other fields have also experimented with data sonification. Biophysicists have used it to help students understand protein folding5. Properties of a protein are mapped to sound parameters such as loudness and pitch, which are then combined into an audio representation of the complex folding process. Neuroscientists have explored whether it can help with the diagnosis of Alzheimer's disease from brain scans6. Sound has even been used to describe ecological shifts caused by climate change in an Alaskan forest, with researchers assigning different musical instruments to different tree species7.


In the long run, such approaches need to be rigorously evaluated to determine what they can offer that other techniques cannot. For all the technical accuracy displayed in individual projects, the Nature Astronomy series points out that there are no universally accepted standards for sonifying scientific data, and little published work that evaluates its effectiveness.

More funding would help. Many scientists who work on alternative data representations cobble together support from various sources, often while collaborating with musicians or sound engineers. The interdisciplinary nature of such work makes sustained funding hard to secure.

On 17 November, the United Nations Office for Outer Space Affairs will highlight the use of sonification in the space sciences in a panel discussion that includes Díaz-Merced and Arcand. The panel aims to raise awareness of sonification both as a research tool and as a way to reduce barriers to participation in astronomy. It's time to wholeheartedly support these efforts in every possible way.